Abstract

Code smells are code structures that indicate a potential issue in code design or implementation. These issues can affect code testing and maintenance, as well as overall software quality. Therefore, it is important to detect code smells in the early stages of software development to enhance system quality. Most studies have focused on detecting code smells in a single programming language. This article explores transfer learning (TL) for cross-language code smell detection, where Java is the source dataset and both C# and Python are the target datasets, focusing on the Large Class, Long Method, and Long Parameter List code smells. We conducted a comparative study of two TL approaches, instance-based (Importance Weighting Classifier, Nearest Neighbors Weighting, and Transfer AdaBoost) and parameter-based (Transfer Tree and Transfer Forest), with various base models. The results showed that the instance-based approach outperformed the parameter-based approach, particularly Transfer AdaBoost with ensemble learning base models. Transfer AdaBoost with Gradient Boosting and Extra Trees achieved consistent and robust results on both C# and Python, with an 83% winning rate according to the Wilcoxon signed-rank test. These findings underscore the effectiveness of TL for cross-language code smell detection and support its generalizability across programming languages.

1. Introduction

Code smells are characteristics in the code that indicate potential flaws in the software architecture, design, or implementation. There are many types of code smells, including Large Class (LC), Long Method (LM), Long Parameter List (LPL), and Feature Envy (FE). Code smells often arise when developers are under pressure to meet deadlines and deliver software requirements. These flaws can cause serious problems for non-functional aspects such as maintainability and testability [1]. Software engineers are therefore strongly encouraged to refactor routinely to remove code smells, improving the software’s maintainability and overall quality. Identifying these smells early in the development process is crucial, as it can significantly reduce the effort, time, and cost of later refactoring [2].

Recent studies have employed various supervised machine learning (ML) techniques for code smell detection, such as God Class (GC) and LM detection. However, most studies have focused on detecting code smells in a specific programming language. In this paper, we propose using Transfer Learning (TL) and examine its ability to detect code smells across different programming languages with a single ML model. TL can enable code smell detection across several programming languages without developing a language-specific detection model from scratch. TL uses ML models to transfer knowledge between similar domains: knowledge gained in a related domain, known as the Source Domain, is leveraged to improve learning performance in another domain, known as the Target Domain [3]. TL includes several approaches: instance-based, parameter-based, and feature-based. Instance-based TL leverages the similarity of data instances between two tasks. Parameter-based TL shares parameters between the models. Feature-based TL identifies the features shared between the two tasks [4].

TL has recently been recognized as a promising approach for software engineering tasks in which labeled data is scarce or expensive to obtain. A recent systematic literature review (SLR) by R. Malhotra and S. Meena [5] examined 39 studies applying TL across diverse software engineering domains and reported its advantages for defect prediction, testing, and other quality assurance tasks. According to this SLR, TL can be leveraged for tasks such as code smell detection, which can contribute to improving software maintainability and reusability. The review also emphasized that TL can reduce resource costs, shorten project completion times, and improve the likelihood of successful project outcomes, making it a valuable strategy for enhancing software quality in practice. This aligns with the objective of our study, which applies TL to cross-language code smell detection.

In this study, we have two main objectives. First, to propose the application of TL to enable code smell detection across different programming languages with a single model. Second, to conduct a comparative analysis of instance-based and parameter-based TL approaches by examining various methods within each to determine the most effective method for cross-language code smell detection. To the best of our knowledge, this work is one of the earliest to conduct a comparative study of TL approaches in the code smell detection field. We will use instance-based and parameter-based methods to evaluate their performance in detecting LC, LM, and LPL code smells across different programming languages, specifically, Java as a source dataset with C# and Python as target datasets. For instance-based TL, we will investigate the Importance Weighting Classifier (IWC), Nearest Neighbors Weighting (NNW), and Transfer AdaBoost (TAB) methods. Each method will be evaluated using nine ML base models from different classification families. For parameter-based TL, we will examine the Transfer Tree (TT) and Transfer Forest (TF) methods.

The rest of this paper is organized as follows: Section 2 states the definition of TL methods. Section 3 summarizes the related studies on code smell detection. Section 4 outlines the research methodology. Section 5 presents the details of the conducted empirical study. Section 6 analyzes the study results. Section 7 discusses the potential threats to the validity of the results. Finally, Section 8 concludes the study and states avenues for future work.

2. Transfer Learning

TL is a technique that transfers knowledge gained by a source model on a source task to another model for a related target task. The goal of TL is to improve performance on the target task, particularly when both tasks are within similar domains. A successful transfer improves the target model’s performance; if the transferred knowledge instead degrades it, negative transfer occurs [3].

In the context of code smell detection, TL can enhance the efficiency of detecting common code smells across various programming languages. It increases the opportunities for detecting code smells without creating a language-based smell detection tool from scratch. TL has three main approaches: feature-based, instance-based, and parameter-based. In this study, we will focus on the instance-based and parameter-based approaches, which will be further explained in the following sections.

2.1. Instance-Based Transfer Learning

Instance-based TL refers to an approach that reuses labeled instances from a source domain, selecting and re-weighting them so that they better match the data distribution of a target domain. In this way, it corrects for the differences between the source and target distributions. Instance-based TL is performed through the following steps [6]:

- Filtering out the instances that are not similar to the target domain.

- Re-weighting instances in the source domain to obtain a similar distribution to the target domain.

- Training an ML model on the collection of re-weighted source instances and the labeled instances in the target domain.
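To make the three steps concrete, the following is a minimal sketch using NumPy and scikit-learn; it is not the procedure of [6] or of any specific method studied here. It assumes that similarity to the target domain is measured as the mean Gaussian-kernel similarity of a source instance to all target instances. The function names, the `bandwidth` and `keep_quantile` parameters, and the choice of logistic regression are illustrative assumptions.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def similarity_to_target(Xs, Xt, bandwidth=1.0):
    """Mean Gaussian-kernel similarity of each source row to all target rows (assumed measure)."""
    # Pairwise squared Euclidean distances, shape (n_source, n_target).
    d2 = ((Xs[:, None, :] - Xt[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2.0 * bandwidth ** 2)).mean(axis=1)


def instance_transfer(Xs, ys, Xt, yt, keep_quantile=0.25):
    sim = similarity_to_target(Xs, Xt)
    # Step 1: filter out the least target-like source instances.
    keep = sim >= np.quantile(sim, keep_quantile)
    # Step 2: re-weight the remaining source instances (rescaled to mean weight 1).
    w_src = sim[keep] / sim[keep].mean()
    # Step 3: train on the re-weighted source instances plus the labeled target instances.
    X = np.vstack([Xs[keep], Xt])
    y = np.concatenate([ys[keep], yt])
    w = np.concatenate([w_src, np.ones(len(yt))])
    clf = LogisticRegression(max_iter=1000).fit(X, y, sample_weight=w)
    return clf, keep, w_src


# Synthetic example: the target domain is shifted relative to the source domain.
rng = np.random.default_rng(0)
Xs = rng.normal(0.0, 1.0, size=(200, 3))
ys = (Xs[:, 0] > 0).astype(int)
Xt = rng.normal(0.5, 1.0, size=(40, 3))
yt = (Xt[:, 0] > 0.5).astype(int)
clf, keep, w_src = instance_transfer(Xs, ys, Xt, yt)
```

Labeled target instances keep weight 1 here, so the source data only supplements them; other weighting schemes are equally possible.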

The instance-based TL approach includes several methods. Next, we will briefly overview the methods that will be examined in this study.

2.1.1. Importance Weighting Classifier (IWC)

IWC is a technique used in ML classification tasks to enhance model performance by weighting the training data according to its importance: the most important samples receive higher values in the training weight vector [7]. IWC can be employed to enhance the effectiveness of TL. It can transfer knowledge from a pre-trained classifier to a new classifier by re-weighting the training instances to match the target task instances. IWC consists of two main components: the feature extractor and the classifier. The feature extractor converts the input data into a feature vector, which is then passed to the classifier to make the final prediction. IWC assigns higher weights to training instances that are more similar to the target task, and lower weights to dissimilar ones. This allows IWC to focus on the most relevant instances and improve performance on the target task [8].
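One common way to estimate such instance weights, shown below as a minimal sketch rather than the exact formulation of [7,8] or of any library, is to train a domain classifier that separates source from target samples and to set each source weight to p(target | x) / p(source | x). The function name, the logistic-regression domain classifier, and the synthetic data are assumptions for illustration.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def domain_classifier_weights(Xs, Xt, eps=1e-6):
    """Importance weights w(x) = p(target | x) / p(source | x) for each source row."""
    X = np.vstack([Xs, Xt])
    d = np.concatenate([np.zeros(len(Xs)), np.ones(len(Xt))])  # 0 = source, 1 = target
    domain_clf = LogisticRegression(max_iter=1000).fit(X, d)
    p_target = domain_clf.predict_proba(Xs)[:, 1]
    w = p_target / np.clip(1.0 - p_target, eps, None)
    return w / w.mean()  # rescale so that the average weight is 1


rng = np.random.default_rng(1)
Xs = rng.normal(0.0, 1.0, size=(300, 2))
ys = (Xs.sum(axis=1) > 0).astype(int)
Xt = rng.normal(1.0, 1.0, size=(100, 2))  # target domain shifted toward larger values

w = domain_classifier_weights(Xs, Xt)
# The task classifier sees only source data, but target-like instances count more.
task_clf = LogisticRegression().fit(Xs, ys, sample_weight=w)
```

Source instances lying in the region where target data is dense receive the largest weights, which is the intended effect of importance weighting.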

2.1.2. Transfer AdaBoost Classifier (TAB)

AdaBoost (Adaptive Boosting) is an ML ensemble algorithm for both binary and multi-class classification tasks that belongs to the family of boosting algorithms [9]. It combines multiple weak learners into a strong learner that makes more accurate predictions. By default, the weak learners are one-level Decision Trees (DT), also known as decision stumps. Each weak learner is trained on a re-weighted version of the training data, in which instances misclassified by earlier learners receive higher weights, and each learner is itself weighted according to its accuracy. The algorithm then combines the weighted predictions of the weak learners to make the final prediction. In the context of TL, TAB transfers knowledge from a source domain to a target domain by re-weighting instances according to their relevance: at each iteration, misclassified source instances lose weight because they are likely to differ from the target distribution, whereas misclassified target instances gain weight, as in standard AdaBoost. In this way, the weak learners are iteratively adjusted to the target data despite the differing data distributions [10].
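The weight-update rule can be illustrated with a compact re-implementation of the original TrAdaBoost scheme for binary 0/1 labels, written with NumPy and scikit-learn. It is a sketch for exposition, not the ADAPT implementation used in this study; the function names, the decision-stump default, and the error clipping bounds are our assumptions. Passing, for example, a gradient boosting classifier as `base` would mirror the ensemble base models paired with TAB, provided that the estimator's `fit` accepts `sample_weight`.

```python
import math

import numpy as np
from sklearn.base import clone
from sklearn.tree import DecisionTreeClassifier


def tradaboost(Xs, ys, Xt, yt, n_estimators=10, base=None):
    base = base if base is not None else DecisionTreeClassifier(max_depth=1)
    X, y = np.vstack([Xs, Xt]), np.concatenate([ys, yt])
    n = len(ys)  # source instances come first, target instances after them
    w = np.ones(len(y))
    beta_src = 1.0 / (1.0 + math.sqrt(2.0 * math.log(n) / n_estimators))
    learners, betas = [], []
    for _ in range(n_estimators):
        clf = clone(base).fit(X, y, sample_weight=w / w.sum())
        miss = (clf.predict(X) != y).astype(float)
        # The weighted error is measured on the target instances only.
        err = np.sum(w[n:] * miss[n:]) / np.sum(w[n:])
        err = min(max(err, 1e-10), 0.499)
        beta_t = err / (1.0 - err)
        # Misclassified source instances lose weight (likely unlike the target domain);
        # misclassified target instances gain weight, as in standard AdaBoost.
        w[:n] *= beta_src ** miss[:n]
        w[n:] *= beta_t ** -miss[n:]
        learners.append(clf)
        betas.append(beta_t)
    return learners, betas


def tradaboost_predict(learners, betas, X):
    # Only the second half of the learners votes, each weighted by log(1 / beta_t).
    start = math.ceil(len(learners) / 2) - 1
    alphas = np.log(1.0 / np.array(betas[start:]))
    votes = sum(a * clf.predict(X) for a, clf in zip(alphas, learners[start:]))
    return (votes >= 0.5 * alphas.sum()).astype(int)


rng = np.random.default_rng(2)
Xs = rng.normal(0.0, 1.0, size=(300, 2))
ys = (Xs[:, 0] > 0).astype(int)
Xt = rng.normal(0.0, 1.0, size=(30, 2))
yt = (Xt[:, 0] > 0.5).astype(int)  # the decision boundary is shifted in the target domain
learners, betas = tradaboost(Xs, ys, Xt, yt, n_estimators=10)
pred = tradaboost_predict(learners, betas, Xt)
```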

2.1.3. Nearest Neighbors Weighting (NNW)

NNW is an instance-based TL method in which each target sample is compared with the source samples, and its closest source neighbors are identified using a distance metric such as the Euclidean distance or cosine similarity. Source instances that frequently appear among the nearest neighbors of target samples receive higher importance weights, so that the weighted source data better reflect the target task [11].
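The following minimal sketch, in plain Python, shows one common form of nearest-neighbors weighting: each source instance is weighted by how many target instances count it among their k nearest source neighbors, with additive smoothing so that no source instance receives zero weight. The function name, the Euclidean distance, and the `k` and `smoothing` parameters are assumptions for illustration, not the configuration used in this study.

```python
import math


def nearest_neighbors_weights(X_source, X_target, k=1, smoothing=1.0):
    """Weight each source instance by how often it is a nearest neighbor of a target instance."""
    counts = [0.0] * len(X_source)
    for xt in X_target:
        # Indices of the k source instances closest to this target instance (Euclidean distance).
        nearest = sorted(range(len(X_source)), key=lambda i: math.dist(xt, X_source[i]))[:k]
        for i in nearest:
            counts[i] += 1.0
    weights = [c + smoothing for c in counts]
    total = sum(weights)
    return [w * len(weights) / total for w in weights]  # rescale to mean weight 1


X_source = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
X_target = [(0.9, 1.1), (1.2, 0.8), (0.1, -0.1)]
print(nearest_neighbors_weights(X_source, X_target))  # [1.0, 1.5, 0.5]
```

The far-away source point (5.0, 5.0) is never a neighbor of a target point and therefore ends up with the smallest weight; the weights can then be passed as `sample_weight` to any base model that supports it.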

2.2. Parameter-Based Transfer Learning

Parameter-based TL transfers knowledge at the model level. This approach shares the parameters of the source model with the target model, assuming that the source task parameters are also relevant to the target task. After sharing, the parameters can be adjusted through fine-tuning to fit the related target task [12]. In the following sections, we briefly overview the methods used in this study.
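As a minimal sketch of the pre-train-then-fine-tune idea (not of TT or TF specifically), the example below uses scikit-learn’s `SGDClassifier`, whose `partial_fit` method continues updating the existing linear coefficients instead of starting from scratch. The coefficients learned on synthetic source data therefore play the role of the shared parameters, and a few passes over a small target set fine-tune them. The data and the numbers of passes are illustrative assumptions.

```python
import numpy as np
from sklearn.linear_model import SGDClassifier

rng = np.random.default_rng(3)
Xs = rng.normal(0.0, 1.0, size=(500, 4))
ys = (Xs[:, 0] + Xs[:, 1] > 0).astype(int)
Xt = rng.normal(0.3, 1.0, size=(40, 4))
yt = (Xt[:, 0] + Xt[:, 1] > 0.6).astype(int)  # related but shifted target task
classes = np.array([0, 1])

# Pre-training: learn the linear coefficients on the larger source dataset.
model = SGDClassifier(random_state=0)
for _ in range(5):
    model.partial_fit(Xs, ys, classes=classes)
source_coef = model.coef_.copy()

# Fine-tuning: keep updating the same coefficients with the small target dataset.
for _ in range(3):
    model.partial_fit(Xt, yt, classes=classes)
```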

2.2.1. Transfer Tree Classifier (TT)

TT is a tree-based classifier that uses a pre-trained DT on a source dataset to classify instances in a target dataset. The idea behind this approach is to enhance the classification performance of the DT when applied to the target dataset. During adaptation, the TT Classifier transfers the DT structure from the source domain to the target domain. The transfer process involves mapping the features of the source domain to those of the target domain to generalize the same DT for both domains [13].
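One simple way to adapt a transferred tree, shown here as a sketch rather than the algorithm of [13], keeps the splits learned on the source data and re-estimates each leaf’s label from the target instances that reach it; leaves that no target instance reaches keep their source prediction. The function names and the synthetic data are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def relabel_leaves(tree, Xt, yt):
    """Majority target label for every leaf of the source tree that target data reaches."""
    leaves = tree.apply(Xt)
    leaf_label = {}
    for leaf in np.unique(leaves):
        labels, counts = np.unique(yt[leaves == leaf], return_counts=True)
        leaf_label[leaf] = labels[np.argmax(counts)]
    return leaf_label


def predict_transferred(tree, leaf_label, X):
    source_pred = tree.predict(X)
    # Leaves without target data fall back to the source tree's prediction.
    return np.array([leaf_label.get(leaf, p) for leaf, p in zip(tree.apply(X), source_pred)])


rng = np.random.default_rng(4)
Xs = rng.uniform(-1, 1, size=(400, 2))
ys = (Xs[:, 0] > 0).astype(int)
Xt = rng.uniform(-1, 1, size=(60, 2))
yt = (Xt[:, 0] > 0.25).astype(int)  # the target boundary is shifted
tree = DecisionTreeClassifier(max_depth=4, random_state=0).fit(Xs, ys)
leaf_label = relabel_leaves(tree, Xt, yt)
pred = predict_transferred(tree, leaf_label, Xt)
```

Because each relabeled leaf uses the majority target label, accuracy on the labeled target data can never drop below that of the unmodified source tree, although the tree structure itself is left unchanged.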

2.2.2. Transfer Forest Classifier (TF)

TF is similar to TT; however, it uses a pre-trained Random Forest (RF) instead of DT. In this approach, the RF is modified to be suitable for the target dataset by transferring the knowledge learned from the source domain. The classifier adapts the RF to improve classification performance on the target dataset. The process involves adjusting the RF structure to be adapted to the feature differences between the source and target domains [13].
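The same leaf-relabeling idea can be extended to a forest by adapting every tree and taking a majority vote. The sketch below is a hypothetical illustration for binary 0/1 labels built on scikit-learn’s `RandomForestClassifier.estimators_`; it is not the TF algorithm of [13] or of ADAPT.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def transfer_forest_predict(forest, Xt, yt, X):
    """Relabel the leaves of every tree with target data, then take a majority vote (binary 0/1)."""
    votes = np.zeros(len(X))
    for tree in forest.estimators_:
        leaves_t = tree.apply(Xt)
        # Majority target label per reached leaf (a 0.5 tie rounds to 0 under NumPy rounding).
        leaf_label = {leaf: float(np.round(yt[leaves_t == leaf].mean())) for leaf in np.unique(leaves_t)}
        source_pred = tree.predict(X)
        # Leaves without target data keep the source tree's prediction.
        votes += [leaf_label.get(leaf, p) for leaf, p in zip(tree.apply(X), source_pred)]
    return (votes / len(forest.estimators_) >= 0.5).astype(int)


rng = np.random.default_rng(5)
Xs = rng.uniform(-1, 1, size=(400, 2))
ys = (Xs[:, 0] > 0).astype(int)
Xt = rng.uniform(-1, 1, size=(60, 2))
yt = (Xt[:, 0] > 0.25).astype(int)
forest = RandomForestClassifier(n_estimators=25, max_depth=4, random_state=0).fit(Xs, ys)
pred = transfer_forest_predict(forest, Xt, yt, Xt)
```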

2.3. Summary of Transfer Learning Approaches

In the context of code smell detection, both instance-based and parameter-based TL approaches can enhance code smell detection performance and enable code smell detection across different programming languages. The main difference between the two approaches is how knowledge is transferred from the source to the target domain. Instance-based TL transfers knowledge at the instance level, for example, assigning weights to code smell dataset instances based on their similarity to another code smell dataset, ensuring that relevant instances contribute more to the learning process. On the other hand, parameter-based TL transfers knowledge at the model level, for instance, using the parameters of a pre-trained model on a large dataset of code smells as a starting point to train a new model on a smaller target code smell dataset.

Table 1 compares the main aspects of instance-based and parameter-based transfer learning (TL). Instance-based TL directly utilizes source instances during the transfer process, thus requiring ongoing access to the source data and typically assuming a higher degree of task similarity. In contrast, parameter-based TL transfers knowledge through pre-trained model parameters and does not require continuous access to the source data after pre-training. Instead, it adapts to the target task via fine-tuning. Although parameter-based TL generally incurs higher computational costs, it often achieves better generalization, especially when the source and target tasks are less similar.

Table 1.

Instance-based vs. parameter-based TL.

3. Related Work

Different approaches have been proposed in the literature for code smell detection, the three main ones being metrics-based, rule-based, and ML-based. The metrics-based approach relies on code metrics (e.g., lines of code) to identify code smells. In this approach, a threshold value must be defined for each metric to distinguish smelly from non-smelly code. Consequently, the accuracy of code smell detection depends on selecting appropriate threshold values. However, this approach is considered unreliable because determining proper thresholds is challenging [14,15].
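This threshold sensitivity can be seen in a minimal, hypothetical metrics-based rule for LC: the same class is flagged or not depending solely on the chosen thresholds. The metric names (`LOC` for lines of code, `NOM` for number of methods) and threshold values are invented for illustration and are not taken from [14,15].

```python
# Hypothetical metric names and thresholds, chosen only for illustration.
DEFAULT_THRESHOLDS = {"LOC": 500, "NOM": 20}  # lines of code, number of methods


def is_large_class(metrics, thresholds=DEFAULT_THRESHOLDS):
    """Flag a class as smelly only if every metric exceeds its threshold."""
    return all(metrics[name] > limit for name, limit in thresholds.items())


example = {"LOC": 620, "NOM": 18}
print(is_large_class(example))                           # False: NOM is below 20
print(is_large_class(example, {"LOC": 500, "NOM": 15}))  # True
```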

The rule-based approach relies on software engineering experts to manually define and optimize rules to identify code smells. However, this approach faces challenges due to the lack of standardization in rule definition [16]. In contrast, ML and deep learning (DL) algorithms can detect more complex code smells by learning sophisticated correlations between metrics and smell labels. As a result, this approach has recently gained attention for automating the detection of a wider variety of code smell types [17].

3.1. ML-Based Code Smell Detection

ML algorithms have been used in numerous studies for code smell detection and have shown effective performance [17]. In an early study, Kreimer [18] proposed the DT algorithm for Big Class and LM code smell detection and achieved high accuracy. L. Amorim et al. [19] then validated the effectiveness of the DT technique on 12 different code smells in four medium-sized open-source projects. Y. Tian et al. [20] instead used Support Vector Machines (SVMs) to detect the GC, Spaghetti Code, Functional Decomposition, and Swiss Army Knife code smells.

F. Arcelli Fontana et al. [21] applied 16 ML algorithms to 1986 code smell samples to detect the Data Class (DC), LC, FE, and LM code smells. Their findings revealed that ML algorithms performed well, with J48 achieving the highest accuracy, exceeding 99%. Subsequently, D. K. Kim [22] employed a Multi-Layer Perceptron (MLP) to detect six code smells, including GC, FE, DC, Lazy Class, and Parallel Inheritance, achieving an accuracy of 99.25%.

F. Khomh et al. [23] and S. Vaucher et al. [24] introduced Bayesian Belief Networks (BBN) for GC detection, while X. Wang et al. [25] applied the same technique to detect duplicated code. In another study, S. Dewangan and R. S. Rao [26] employed a variety of ML models for code smell detection and found that the RF model yielded the highest performance, achieving 99.12% accuracy in detecting the FE code smell.

Most of the studies mentioned above have focused on detecting code smells in the Java programming language. In contrast, two recent studies [27,28] have explored ML approaches for Python code smell detection. Vatanapakorn et al. [27] and Sandouka and Aljamaan [28] expanded the PySmell dataset [29] by adding code metrics as extra features to create a Python code smell dataset suitable for ML classification. They assessed the effectiveness of several ML algorithms in detecting code smells at both the class and method levels in Python.

Moreover, ensemble learning techniques have been utilized in a number of studies on code smell detection. Voting ensemble learning was employed by H. Aljamaan [30] to explore the effectiveness of detecting various code smells at the class level and method level. The results demonstrated that voting ensemble learning delivered consistent and high performance across all code smells.

Then, A. Alazba and H. Aljamaan [31] investigated stacking ensemble learning. They detected Java code smells using three distinct stacking ensemble learning algorithms and achieved consistently high detection performance for both class-level and method-level smells when the stacking ensemble was built with SVM and linear regression as meta-classifiers. Further, S. Jain and A. Saha [32] conducted a series of experiments and concluded that stacking ensemble learning consistently outperforms individual classifiers. Additionally, H. Aljamaan [33] proposed a dynamic stacking ensemble for Java and Python code smell detection; this approach achieved more stable detection performance than all base ML models, with lower complexity than traditional full stacking ensembles.

Ensemble learning techniques have also been applied for Python code smell detection. R. S. Rao et al. [34] utilized the Bagging, Gradient Boost, Max Voting, AdaBoost, and XGBoost methods to detect LC and LM smells in a Python dataset. The results showed that Max Voting achieved the highest performance for LC, while Gradient Boost performed best for LM.

Although ML has demonstrated high performance in code smell detection, it has some limitations. ML models must be trained on a dataset that contains code metrics as independent variables and labels (smelly or non-smelly) as the dependent variable. This leads to two main drawbacks. First, an external tool is required to extract the code metrics from the source code before the ML algorithms can be applied. Second, the performance of ML models depends solely on the provided set of metrics: any pattern not captured by these features cannot be detected, which limits the classification capability of ML models to the provided metrics.

Because of these limitations, DL techniques have recently been adopted in the code smell detection field. Unlike conventional ML models, DL models can detect code smells directly from raw source code without a separate feature extraction tool. The next section summarizes recent studies on DL-based code smell detection.

3.2. DL-Based Code Smell Detection

M. Hadj-Kacem and N. Bouassida [35] proposed a hybrid detection approach to detect four code smells: GC, DC, FE, and LM. The proposed approach is based on a deep autoencoder to reduce the dimensionality of data, and Artificial Neural Network (ANN) algorithms to classify the samples into smelly and non-smelly categories. They achieved the highest F-measure of 98.93% with GC.

H. Liu et al. [36] were the first to propose a DL technique for detecting FE, in addition to three other code smells: LM, LC, and Misplaced Classes. They used a Convolutional Neural Network (CNN) with two layers. A. Das [37] then used a 1D-CNN to detect the Brain Class and Brain Method code smells and demonstrated high performance for both. Later, T. Lin et al. [38] used a two-layer CNN model to detect eight different code smells: LM, Lazy Class, Speculative Generality, Refused Bequest, Duplicated Code, Contrived Complexity, Shotgun Surgery, and Uncontrolled Side Effects; their experiments demonstrated high F1-scores.

H. Gupta et al. [39] developed eight CNN models, varying the number of hidden layers from one to eight, to detect eight different code smells and compared the results. They found that increasing the number of hidden layers did not consistently enhance model performance, although the model with eight hidden layers outperformed the other models. T. Sharma et al. [40] were the first to apply TL to the detection of Java and C# code smells. They used CNN, recurrent neural network, and autoencoder models on source code datasets and found that the models achieved promising performance.

Sandouka and Aljamaan [41] investigated models including CNN, LSTM, and GRU, as well as heterogeneous ensembles including stacking, Hard Voting, and Soft Voting, to detect multiple Python code smells (LC, LM, Long Scope Chaining, LPL, and Long Base Class List). Their results showed that stacking achieved the highest overall stability and detection performance, while CNN demonstrated strong results for certain smells but had limitations with complex nested structures, where ensemble methods provided greater robustness.

Recently, transformer-based pre-trained models such as CodeBERT [42], GraphCodeBERT [43], and PLBART [44] have been developed for code-understanding tasks. These models can capture both semantic and structural relationships in code. Therefore, they have the potential to improve code smell detection in cross-language tasks or when labeled data is limited. Leveraging such models may enhance both the accuracy and generalizability of code smell detection.

3.3. Gap Analysis

Table 2 presents a summary of recent deep learning-based studies on code smell detection, detailing the types of detected code smells, the employed DL approaches, the dataset formats, the target programming languages, and whether cross-language smell detection was addressed. From the summarized studies, numerous limitations can be observed. Most existing studies focus on code smell detection within a single programming language, which restricts the generalizability of their findings across different programming languages. This limitation reduces the model’s capability to detect code smells across multiple programming languages. In addition, few studies have explored TL for code smell detection. Even when TL is considered, the range of approaches evaluated is often limited, and comparisons between different TL methods are rare. These limitations highlight the need for approaches capable of detecting code smells across multiple programming languages, supported by an investigation of different TL techniques.

Table 2.

Recent DL studies on code smell detection.


| Paper | Detected Code Smells | Detection Approach | Dataset Type | Programming Language | Cross-Language Smell Detection |
|---|---|---|---|---|---|
| [35] | God Class, Data Class, Feature Envy, Long Method | ANN | Tabular data | Java | ✗ |
| [36] | Feature Envy, Long Method, Large Class, Misplaced Classes | CNN | Tabular data | Java | ✗ |
| [37] | Brain Class, Brain Method | 1D-CNN | Tabular data | Java | ✗ |
| [38] | Long Method, Lazy Class, Speculative Generality, Refused Bequest, Duplicated Code, Uncontrolled Side Effect | CNN | XML-based dataset | Java | ✗ |
| [39] | Blob Class, Complex Class, Internal Getter/Setter, Leaking Inner Class, Long Method, No Low Memory Resolver, Member Ignoring Method, Swiss Army Knife | CNN | Tabular data | Java | ✗ |
| [40] | Complex Conditional, Complex Method, Feature Envy, Multifaceted Abstraction | Autoencoder, CNN, RNN | Tokenized source code | Java and C# | ✓ |
| [41] | Large Class, Long Method, Long Scope Chaining, Long Parameter List, Long Base Class List | CNN, LSTM, GRU | Tabular data | Python | ✗ |
| This study | Large Class, Long Method, Long Parameter List | Instance-based TL, Parameter-based TL | Tabular data | Java, Python, and C# | ✓ |

Based on our summary, we identified the following gaps in the research on code smell detection:

- Gap 1: Most studies focus on developing models to detect code smells in a single programming language. An exception is the work by T. Sharma et al. [40], which leveraged TL to build a cross-language code smell detection model. However, their study did not investigate different TL approaches and did not achieve superior detection results. Therefore, further research is needed to evaluate the effectiveness of the TL approach in code smell detection.

- Gap 2: Although T. Sharma et al. [40] utilized TL for code smell detection, no study has investigated different TL approaches. In particular, there is a lack of empirical comparative studies evaluating instance-based and parameter-based TL approaches. Therefore, more studies are needed to evaluate different TL methods for code smell detection.

In this research, we aim to address these identified gaps by conducting a comparative study between instance-based and parameter-based TL approaches in the context of code smell detection. We will empirically investigate the performance of these two approaches to evaluate the effectiveness of detecting code smells across multiple programming languages using a single TL model.

4. Research Methodology

The objective of this research is to investigate the capability of TL to detect cross-language code smells by examining various TL approaches and comparing their effectiveness. To achieve this objective, we formulated the following research questions:

- RQ1: Which instance-based TL method achieves the highest performance in code smell detection? Rationale: We will investigate several instance-based TL methods, including IWC, NNW, and TAB, in order to determine which method achieves the highest performance in code smell detection.

- RQ2: Which parameter-based TL method achieves the highest performance in code smell detection? Rationale: We will investigate several parameter-based TL methods, including TT and TF classifiers, in order to determine the most effective parameter-based approach in code smell detection.

- RQ3: Which TL approach achieves higher performance when comparing instance-based and parameter-based transfer learning? Rationale: This RQ aims to identify the TL approach that provides consistent and robust performance in code smell detection. Addressing this RQ will fill the gap in the literature, since no prior studies have compared these TL methods in the context of code smell detection.

- RQ4: Is the detection performance of TL approaches consistent across target datasets from different programming languages? Rationale: This research question aims to investigate the effectiveness of TL approaches in consistently detecting code smells across datasets written in different programming languages. Addressing this RQ will evaluate the generalizability of TL methods in cross-language code smell detection.

4.1. Methodology Steps

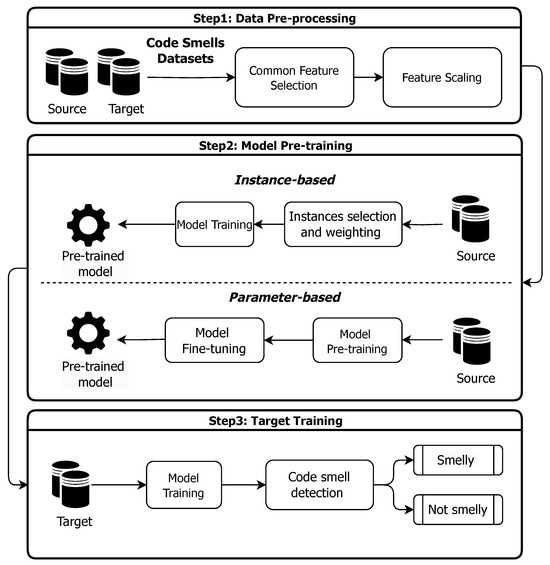

To achieve the research objective, we conducted a comprehensive study comparing instance-based and parameter-based TL approaches for code smell detection, following the methodology steps illustrated in Figure 1. The process begins with collecting the source and target datasets. In this study, we used Java code smells as the source dataset and Python and C# code smells as the target datasets. We then conducted the following main steps:

Figure 1.

Research methodology.

1. Data Pre-processing: First, we performed common-feature selection to identify the attributes shared between the source and target datasets, aligning them with the TL criteria explained in Section 4.2. Then, we applied min-max normalization to scale the features, enhancing dataset quality and improving model performance.

2. Model Pre-training: We investigated two TL approaches: instance-based and parameter-based. Instance-based: Various instance-based TL methods were applied, including IWC, NNW, and TAB. In this approach, source dataset instances are weighted and selected based on their relevance to the target dataset, and these selected, weighted instances are then used to train an ML model. Parameter-based: Two parameter-based methods were applied: TT and TF. This process involves pre-training a model on the source dataset and then fine-tuning it to improve its generalization to the target dataset. Both approaches produce a pre-trained model that can be leveraged to improve detection performance on the target dataset.

3. Target Dataset Training: The pre-trained models from both TL approaches were used for training on the target dataset, and the trained models were then used for code smell detection on the target dataset.
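The pre-processing step can be sketched in plain Python as common-feature selection followed by min-max normalization. The metric names (`LOC`, `NOM`, `WMC`, `DIT`), the row layout, and the values are hypothetical; the text does not state whether each dataset is scaled with its own minimum and maximum or with a scaler fitted on the source data, so this sketch assumes the former.

```python
def common_features(*feature_lists):
    """Feature names present in every dataset, in the order of the first list."""
    shared = set(feature_lists[0]).intersection(*feature_lists[1:])
    return [f for f in feature_lists[0] if f in shared]


def min_max_scale(rows, features):
    """Scale each selected feature to [0, 1] using this dataset's own min and max (assumption)."""
    lo = {f: min(r[f] for r in rows) for f in features}
    hi = {f: max(r[f] for r in rows) for f in features}
    return [
        # A constant feature carries no information, so it is mapped to 0.0.
        [(r[f] - lo[f]) / (hi[f] - lo[f]) if hi[f] > lo[f] else 0.0 for f in features]
        for r in rows
    ]


java_rows = [{"LOC": 100, "NOM": 4, "WMC": 9}, {"LOC": 300, "NOM": 12, "WMC": 30}]
python_rows = [{"LOC": 40, "NOM": 2, "DIT": 1}, {"LOC": 140, "NOM": 6, "DIT": 3}]
features = common_features(list(java_rows[0]), list(python_rows[0]))
print(features)                             # ['LOC', 'NOM']
print(min_max_scale(java_rows, features))   # [[0.0, 0.0], [1.0, 1.0]]
```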

In the following sub-sections, we will present the TL dataset criteria and an exploration of the methods investigated within each TL approach.

4.2. Transfer Learning Dataset

To ensure the effectiveness of the TL process, the source and target datasets must meet specific criteria to achieve accurate results. These criteria are essential to align the datasets and improve the reliability of the TL approach. The criteria considered for preparing the source and target datasets in this study are as follows [45]:

- Consistent Feature Set: The source and target datasets must have the same feature set to ensure the transfer of knowledge between the datasets is reliable.

- Different Marginal Distributions: The distribution of feature values must differ between the two datasets to ensure the TL model can be generalized across different data patterns.

- Domain Relevance: The source and target datasets must be relevant to the same task.
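The first two criteria can be checked mechanically, as in the minimal plain-Python sketch below; domain relevance is a judgment about the task and is not checked. The sketch measures the shift in a feature’s marginal distribution with the two-sample Kolmogorov-Smirnov statistic; the function names, the dictionary layout, and the `min_shift` threshold are hypothetical choices, not part of the criteria in [45].

```python
from bisect import bisect_right


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    return max(abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b)) for x in a + b)


def meets_tl_criteria(source, target, min_shift=0.1):
    """source/target map feature name -> list of values (hypothetical layout)."""
    # Consistent feature set: same feature names in the same order.
    if list(source) != list(target):
        return False
    # Different marginal distributions: at least one feature shifts noticeably.
    return any(ks_statistic(source[f], target[f]) >= min_shift for f in source)


print(ks_statistic([1, 2, 3, 4], [3, 4, 5, 6]))  # 0.5
```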

4.3. Transfer Learning Approaches

In this research, we will empirically investigate instance-based and parameter-based TL approaches for code smell detection, as detailed below.

4.3.1. Instance-Based

In the instance-based TL approach, we investigated three distinct methods: IWC, NNW, and TAB. These methods were used to assess their effectiveness in code smell detection across various base models. In the instance-based TL framework, the base model serves as the underlying classifier, which is then adapted using TL techniques to enhance its performance on the target dataset. We selected a diverse set of nine ML models from different classification families. Four of them are standalone ML models: DT, Logistic Regression (LR), MLP, and K-Nearest Neighbors (KNN). The remaining five are ensemble-based ML models: RF, Extremely Randomized Trees (ET), Histogram-based Gradient Boosting (HGB), Ada, and Gradient Boosting (GB).

4.3.2. Parameter-Based TL

In the parameter-based TL approach, we investigated two models: the TT and TF models. The TT model utilizes a DT classifier as a base model, while the TF model employs an RF classifier.

5. Empirical Study

In this section, we describe the details of our conducted empirical study. All TL experiments in this study were implemented using the ADAPT Python library. ADAPT is a Python package that provides well-known domain adaptation methods, including both instance-based and parameter-based TL approaches. This library facilitated the application of various TL methods in our study, ensuring an efficient implementation process [46].

5.1. Code Smell Datasets

In this empirical study, we investigate the effectiveness of TL in detecting code smells in the C# and Python programming languages, using Java code smell datasets as the source domain. These languages are widely used and actively evolving, as indicated by their ranking among the ten most-used programming languages in the 2024 Stack Overflow Developer Survey [47]. Java and C# have similar structures, as both are object-oriented, statically typed programming languages [48]. In contrast, Python presents a greater challenge due to its dynamic typing and more flexible syntax. Nonetheless, Python remains a fundamental language in data science and machine learning, which underscores the need to investigate code smell detection for Python-based systems [49].

Java code smell datasets are the most widely studied and contain more instances than the available C# and Python code smell datasets. Therefore, in this study, Java is employed as the source dataset, and both C# and Python as the target datasets. Code smells can be categorized by the granularity of the code element they affect, such as the class, method, or statement level. In this research, we selected code smells that are common across most programming languages and cover two granularities (class level and method level), as follows [2]:

- Large Class (LC): This is a class-level code smell characterized by a large and complex class containing numerous lines of code.

- Long Method (LM): This is a method-level code smell that refers to a large and complex method with many lines of code, often one that centralizes much of a class’s operations.

- Long Parameter List (LPL): This is a method-level code smell that refers to a method that has too many arguments or parameters.

5.1.1. Source Datasets

Based on the literature, most publicly available Java code smell datasets primarily focus on the GC smell. GC is characterized by a complex class with many lines of code, methods, and attributes, which closely aligns with the defining features of the broader LC smell. Lanza et al. [50] explicitly stated that GC is comparable to Fowler’s LC code smell, where LC is defined as a class that has grown too large. Additionally, Alkharabsheh et al. [51] noted that GC is referred to by interchangeable terms such as Large Class, Blob, and God Class Disharmony. Considering these findings, GC can reasonably be regarded as a specific type or subset of the LC code smell, sharing similar structural and behavioral characteristics. Therefore, to address the scarcity of dedicated Java datasets for LC, this study uses the available GC dataset and treats it as a valid representative of the LC smell. Specifically, we employed the GC code smell dataset published by Cruz et al. [52], which contains around 35,000 labeled samples with 17 features. For the LM code smell, we used the dataset published by Reis et al. [53], which contains 1327 labeled samples with 82 features. For the LPL code smell, we employed the dataset published by Arcelli Fontana et al. [21], which contains 420 labeled instances with 55 features.

5.1.2. Target Datasets

For C# code smells we utilized the dataset published by Reis et al. [8] for both LC and LM code smells. The dataset contains 921 labeled samples with 25 and 18 features for LC and LM, respectively. For Python code smells we utilized the dataset published by Vatanapakorn et al. [27] for LC, LM, and LPL code smells. The dataset contains 360 labeled samples with 42 and 21 features for class-level and method-level code smells, respectively.

5.2. Dataset Preparation for Transfer Learning

After collecting each dataset separately, we aligned the datasets with the TL dataset criteria highlighted in Section 4 to ensure the effectiveness of the TL process. To satisfy the feature set consistency criterion, we removed the features that were not shared between the source and target datasets. Table 3 summarizes the characteristics of the datasets used, including the number of selected features shared with the Java code smell datasets.
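A minimal sketch of the feature-consistency step is shown below. It assumes that each dataset is held as a list of rows mapping metric names to values, that the class label is stored separately, and that equivalent metrics have already been given the same name in both datasets (e.g., NOP for the number of parameters). The function name, the placeholder metric names SRC_ONLY and TGT_ONLY, and all values in the example are hypothetical.

```python
def align_features(source_rows, target_rows):
    """Keep only the metrics present in both datasets, in the source's column order.

    Each row is a dict {metric_name: value}; all rows of a dataset share the same keys.
    Returns (common_metric_names, source_matrix, target_matrix).
    """
    target_metrics = set(target_rows[0])
    common = [m for m in source_rows[0] if m in target_metrics]

    def project(rows):
        # Drop every metric that is not shared, keeping a consistent column order.
        return [[row[m] for m in common] for row in rows]

    return common, project(source_rows), project(target_rows)


# Hypothetical example: one Java (source) row and one C# (target) row.
java = [{"LOC": 120, "CYCLO": 9, "NOP": 3, "SRC_ONLY": 7}]
csharp = [{"LOC": 80, "CYCLO": 4, "NOP": 2, "TGT_ONLY": 5}]
common, X_src, X_tgt = align_features(java, csharp)
print(common)  # ['LOC', 'CYCLO', 'NOP']
```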

Table 3.

Dataset description and the number of common features with Java.

The common features selected for C# code smells are as follows:

- LC Code Smell Metrics: Lines of Code (LOC), Coupling Between Objects (CBO), Weighted Methods per Class (WMC), Lack of Cohesion of Methods (LCOM), Tight Class Cohesion (TCC), Total Number of Comparison operators from all class members (NOC), Response For a Class (RFC), and Depth Inheritance Hierarchy (DIT).

- LM Code Smell Metrics: LOC, Cyclomatic Complexity (CYCLO), Number of Parameters (NOP), and Number of Local Variables (NOLV).

The common features selected for Python code smells are as follows:

- LC Code Smell Metrics: CBO, CYCLO, LOC, NOC, WMC, and DIT.

- LM and LPL Code Smell Metrics: NOP, LOC, CYCLO, and Max Nesting.

To limit the impact of feature elimination on detection performance, we ensured that the selected common features included the essential metrics for detecting LC, LM, and LPL code smells.

For LC code smells, the common features were derived from the top-ranked features employed in prior code smell detection studies, as highlighted in a recent SLR [54]. Furthermore, the selected metrics have been repeatedly validated in the literature as reliable indicators of this smell. Lanza et al. [50] emphasized that GC often exhibits a large size in terms of LOC and CBO, and that the detection strategies for this code smell include the Access To Foreign Data (ATFD), WMC, and TCC metrics.

For LM, Abdou et al. [55] identified LOC, CYCLO, NOP, NOLV, and Max Nesting as effective indicators of this smell. They validated these metrics in a large-scale empirical study, demonstrating that they can accurately classify the severity of LM code smells using ML techniques. This provides strong evidence that these metrics are essential for LM detection and justifies their inclusion in the preparation of our TL datasets.

For LPL, NOP has been empirically validated as an effective indicator of this smell [56]. Methods with too many parameters reduce readability and increase coupling, which are the core characteristics of LPL.

The data distribution criterion is satisfied, as the source and target datasets have different distributions. The domain similarity criterion is also met, as all datasets belong to the same domain: code smells, albeit in different programming languages. Finally, the existing literature mainly focuses on Java code smell detection, and most published datasets target Java [17]. Therefore, we employed Java code smells as the source dataset and C# and Python code smells as the target datasets.

5.3. Feature Scaling

Feature scaling is a recommended pre-processing practice for ML classification problems that normalizes the ranges of independent features. This technique aims to enhance dataset quality and model performance [57]. In this study, we employed the max-min normalization (MMN) technique to rescale feature values to the range from zero to one [58]. The MMN equation is as follows:

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)}$$

where x is an original feature value, min(x) and max(x) are the minimum and maximum values of that feature, and x' is the rescaled value.
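Max-min normalization maps each value x of a feature to (x - min) / (max - min), so the smallest value becomes 0 and the largest becomes 1. The sketch below applies it to a single feature column; mapping a constant column to 0 (to avoid division by zero) is an assumption, since the text does not say how such features were treated.

```python
def min_max_normalize(column):
    """Rescale one feature column to [0, 1] using max-min normalization."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # Assumption: a constant feature carries no information and is mapped to 0.
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]


print(min_max_normalize([10, 20, 40]))  # [0.0, 0.3333333333333333, 1.0]
```

In a cross-validation setting, the minimum and maximum would typically be computed on the training portion and reused for the test fold; the text does not specify this detail.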

5.4. Hyperparameter Optimization

In this study, hyperparameter optimization was conducted for the NNW and TAB methods, as they contain critical hyperparameters that significantly affect model performance and can be tuned efficiently: the number of neighbors (n_neighbors) for NNW and the number of estimators (n_estimators) for TAB. For both methods, the tested values were 3, 5, 7, 10, and 15. The other methods showed limited sensitivity to parameter changes and therefore did not require further optimization; default parameter settings were used for them. The grid search method was applied for parameter optimization. Grid search is a traditional approach that systematically evaluates all combinations from a predefined set of hyperparameter values and selects the combination that produces the best performance according to a chosen metric [59]. In this study, the best parameters were selected based on the MCC metric.
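With a single hyperparameter per method, the grid search described here reduces to evaluating every candidate value and keeping the one with the best score. In the sketch below, `evaluate` is a hypothetical callback that would train NNW (varying n_neighbors) or TAB (varying n_estimators) and return the resulting MCC; the scores in the example are made up for illustration.

```python
def grid_search(candidates, evaluate):
    """Evaluate every candidate hyperparameter value and return the best one.

    evaluate(value) -> score, where a higher score is better (here, MCC).
    Returns (best_value, {value: score}).
    """
    scores = {value: evaluate(value) for value in candidates}
    # max() keeps the first candidate in case of equal scores.
    best = max(candidates, key=scores.__getitem__)
    return best, scores


GRID = [3, 5, 7, 10, 15]  # values tested for n_neighbors (NNW) and n_estimators (TAB)

# Made-up MCC values standing in for real cross-validated results.
toy_mcc = {3: 0.61, 5: 0.70, 7: 0.74, 10: 0.72, 15: 0.71}
best, scores = grid_search(GRID, toy_mcc.get)
print(best)  # 7
```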

5.5. Model Validation

In this study, we followed the stratified 10-fold cross-validation technique, repeated 10 times, to validate the TL models [60]. In this approach, the dataset is randomly divided into ten partitions of approximately equal size that preserve the original class proportions; nine folds are used for training and the remaining fold for testing. This is repeated so that each fold serves as the test set exactly once, and the entire procedure is then repeated ten times with different random partitions, yielding 100 train/test evaluations. The average performance across these evaluations is reported as the final evaluation metric. This technique ensures that every instance in the dataset is used for both training and testing, reducing bias and providing more reliable evaluation results [61]. Furthermore, cross-validation ensures that the test set remains unseen during training, which guards against overly optimistic estimates caused by overfitting and assesses the generalization performance of the model more precisely [62].
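The splitting scheme can be sketched in plain Python as below: within each repetition, the indices of each class are shuffled and dealt round-robin into ten folds, so every fold keeps roughly the original class ratio and every instance is tested exactly once. This is an illustrative sketch with a hypothetical function name; in practice, scikit-learn's `RepeatedStratifiedKFold` provides the same kind of split.

```python
import random
from collections import defaultdict


def repeated_stratified_kfold(labels, n_splits=10, n_repeats=10, seed=0):
    """Yield (train_indices, test_indices) for n_repeats x n_splits evaluations."""
    rng = random.Random(seed)
    for _ in range(n_repeats):
        by_class = defaultdict(list)
        for i, y in enumerate(labels):
            by_class[y].append(i)

        folds = [[] for _ in range(n_splits)]
        k = 0
        for y in sorted(by_class):
            idx = by_class[y]
            rng.shuffle(idx)  # a new random partition in every repetition
            for i in idx:
                # Deal indices round-robin so each fold receives its share of every class.
                folds[k % n_splits].append(i)
                k += 1

        for f in range(n_splits):
            test = sorted(folds[f])
            train = sorted(i for g, fold in enumerate(folds) if g != f for i in fold)
            yield train, test


labels = [0] * 80 + [1] * 20  # hypothetical imbalanced dataset: 20% smelly instances
splits = list(repeated_stratified_kfold(labels))
print(len(splits))  # 100 train/test evaluations
```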

5.6. Detection Performance Measures

In this empirical study, we employed Accuracy (Acc), F1-score, and the Matthews Correlation Coefficient (MCC) evaluation measures to assess the TL methods’ performance in cross-language code smell detection. Acc is a widely utilized evaluation metric for binary classification problems, including code smell detection [17,63]. It represents the proportion of correctly predicted instances to the total instances.

Both F1-score and MCC are particularly suitable for imbalanced datasets. The F1-score, the harmonic mean of precision and recall, provides a balanced assessment of the model’s performance across both classes by accounting for trade-offs between precision and recall.

MCC is particularly recommended for imbalanced datasets because it yields a high score only when the majority of instances in both classes are correctly classified. It is a statistical measure that accounts for all four confusion matrix categories: True Positives (TPs), False Negatives (FNs), True Negatives (TNs), and False Positives (FPs). Its values range from −1 to 1, where 1 denotes a perfect model, −1 denotes complete disagreement between predictions and actual labels, and 0 denotes performance no better than random prediction [64].
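The three measures can be computed directly from the confusion matrix counts, as in the sketch below. Returning 0 when a ratio is undefined (for example, MCC when a row or column of the confusion matrix is empty) is a common convention and an assumption here. The example uses hypothetical counts for a model that always predicts the majority (non-smelly) class, showing how accuracy can look high on an imbalanced dataset while the F1-score and MCC expose the failure.

```python
import math


def detection_scores(tp, fp, tn, fn):
    """Return (accuracy, F1-score, MCC) for a binary confusion matrix."""
    acc = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # MCC ranges from -1 to 1; it is set to 0 when the denominator is zero.
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return acc, f1, mcc


# Hypothetical: 90 non-smelly and 10 smelly instances, every instance predicted non-smelly.
print(detection_scores(tp=0, fp=0, tn=90, fn=10))  # (0.9, 0.0, 0.0)
```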

5.7. Statistical Test

In this study, we employed the Wilcoxon signed-rank test to evaluate the statistical significance of differences in detection performance between TL techniques. It is a non-parametric test and therefore does not require the data to be normally distributed, which makes it more robust and flexible. The test assesses whether one model significantly outperforms another. Its null hypothesis is “there is no significant difference between the TL techniques”. A significance level of α = 0.05 was chosen: if the p-value is less than 0.05, the null hypothesis is rejected; otherwise, it is not rejected. When the null hypothesis is rejected for a pair of models, the better-performing model of the pair is identified as the winner.
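The decision procedure can be sketched as follows. The test statistic is computed in the standard way (zero differences dropped, tied absolute differences given average ranks), but the p-value uses the large-sample normal approximation without tie or continuity correction, which is an assumption of this sketch; a library routine such as `scipy.stats.wilcoxon` may compute exact p-values for small samples and give slightly different results. Deciding the winner by the sign of the mean paired difference is also an assumption, and the function names are hypothetical.

```python
import math


def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (W, p_value), where W = min(W+, W-).
    """
    d = [x - y for x, y in zip(a, b) if x != y]  # zero differences are discarded
    n = len(d)
    if n == 0:
        return 0.0, 1.0

    # Rank |d| in ascending order, giving tied values the average of their ranks.
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of the 1-based ranks i+1..j+1
        i = j + 1

    w_plus = sum(r for r, x in zip(ranks, d) if x > 0)
    w_minus = sum(r for r, x in zip(ranks, d) if x < 0)
    w = min(w_plus, w_minus)

    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd  # z <= 0 because W is the smaller rank sum
    phi = 0.5 * (1 + math.erf(z / math.sqrt(2)))  # standard normal CDF at z
    return w, min(1.0, 2 * phi)


def compare(scores_a, scores_b, alpha=0.05):
    """Classify model A against model B as 'win', 'loss', or 'tie' on paired MCC scores."""
    _, p = wilcoxon_signed_rank(scores_a, scores_b)
    if p >= alpha:
        return "tie"  # the null hypothesis is not rejected
    mean_diff = sum(x - y for x, y in zip(scores_a, scores_b)) / len(scores_a)
    return "win" if mean_diff > 0 else "loss"
```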

6. Results and Discussion

In this section, we analyze the results of our empirical study to evaluate the TL methods’ performance in LC, LM, and LPL code smell detection, where Java is the source dataset and both C# and Python are the target datasets, to address the formulated research questions.

6.1. RQ1 Discussion

To address the first RQ, “Which instance-based TL method achieves the highest performance in code smell detection?”, we analyzed the performance of the instance-based TL models. Table 4 reports their accuracy, F1-score, and MCC values for both C# and Python code smell detection. Most instance-based models achieved high scores. However, the TAB technique consistently outperformed IWC in detecting LC, LM, and LPL code smells across both languages. The performance gaps were generally marginal, often below 0.08 MCC, but in some cases, particularly for LC detection in Python, the differences were larger, exceeding 0.10 MCC and reaching up to 0.40. These substantial margins emphasize the robustness and effectiveness of the TAB method in code smell detection.

Table 4.

Instance-based TL detection performance.

Since each instance-based method was investigated with different base models, we found that ensemble-based models, including RF, ET, GB, and HGB, consistently demonstrated strong performance in code smell detection across both the C# and Python datasets, particularly with the TAB method. For LM code smell detection in C#, some standalone models also performed well, namely MLP with the NNW method and LR with both the IWC and NNW methods. Nevertheless, ensemble models consistently demonstrated high performance, especially with the TAB method. These findings emphasize the robustness of ensemble-based models, particularly with TAB, across both languages and different types of code smells.

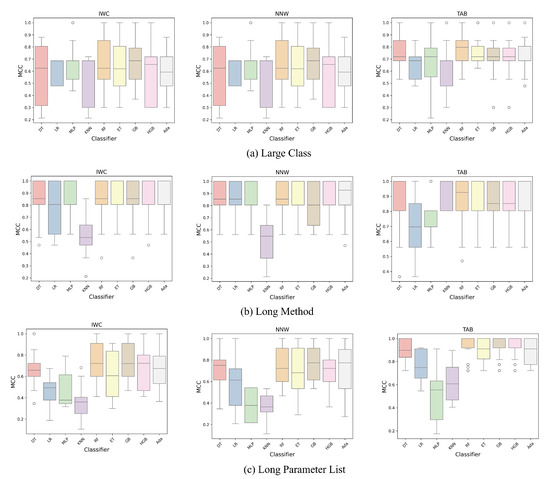

Further, we used boxplots to visualize the distribution of MCC scores for each classifier across the three instance-based TL methods, as illustrated in Figure 2 and Figure 3 for the C# and Python datasets, respectively. The plots show that all instance-based TL methods achieved high MCC scores across most base models for both languages, indicating the effectiveness of this TL approach. Additionally, the TAB method achieved more consistent results, especially with ensemble-based models, as indicated by the small boxes and shorter whiskers. In contrast, the NNW method shows wider variability, especially on the Python dataset, indicating that its performance can vary with dataset characteristics or classifier type.

Figure 2.

Boxplots of instance-based TL models’ detection performance for C# dataset.

Figure 3.

Boxplots of instance-based TL models’ detection performance for Python dataset.

Box 1. RQ1 Answer

All instance-based TL methods exhibited strong performance in detecting code smells across both C# and Python. However, the TAB approach delivered more consistent and reliable results, particularly when paired with ensemble-based base models.

6.2. RQ2 Discussion

To address the second RQ, “Which parameter-based TL method achieves the highest performance in code smell detection?”, we analyzed the MCC scores presented in Table 5 for both the C# and Python programming languages. The results show that the TT method outperformed the TF method in detecting the LC code smell in both languages, whereas the TF method excelled in detecting the LM code smell in both C# and Python. For the LPL code smell in Python, TT achieved a slightly higher MCC score than TF, and both methods showed competitive performance. A possible explanation is the larger number of LC instances in the source dataset, which supports better generalization when the model is applied to the target dataset. Conversely, for the LM and LPL datasets, which have fewer source instances, TF performed better than or comparably to TT, as combining multiple trees increases its robustness. This suggests that TF handles smaller source datasets more effectively by leveraging ensemble learning.

Table 5.

Parameter-based TL’s detection performance.

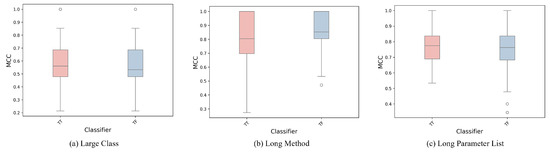

Further, the boxplots in Figure 4 and Figure 5 for the C# and Python datasets, respectively, support these findings. For the C# dataset, TT showed a higher median MCC and less spread than TF when detecting LC code smells, indicating its stability, while TF achieved a higher median in LM detection. In contrast, the Python dataset displayed more comparable distributions between TT and TF across LC, LM, and LPL code smells, with overlapping medians and similar spreads. These results suggest that dataset characteristics strongly influence the effectiveness of classifiers in parameter-based approaches.

Figure 4.

Boxplots of parameter-based TL models’ detection performance for C# dataset.

Figure 5.

Boxplots of parameter-based TL models’ detection performance for Python dataset.

Box 2. RQ2 Answer

The performance of parameter-based methods varied with the size of the source dataset. In both C# and Python, the TT method was more effective in detecting LC code smells, for which the source dataset was larger, whereas the TF method performed better for LM code smells. Both methods showed comparable results for LPL, likely due to the characteristics of that dataset.

6.3. RQ3 Discussion

To address the third RQ, “Which TL approach achieves higher performance when comparing instance-based and parameter-based transfer learning?”, we conducted the non-parametric Wilcoxon signed-rank test to evaluate differences in model performance, based on MCC scores, across both the C# and Python programming languages for the LC, LM, and LPL datasets. The test compares paired data by analyzing the differences between the pairs. Each comparison is classified as a win, loss, or tie. A model is considered the winner if it significantly outperforms the other model, which is then labeled as the loser. A tie occurs when no statistically significant difference is detected between the two models, that is, when the null hypothesis cannot be rejected at a significance level of α = 0.05.

To evaluate the performance of each TL model, 28 pairwise comparisons (one against each of the other TL models) were conducted per model for each code smell dataset in each language. This resulted in 56 comparisons per model for C# (LC and LM) and 84 comparisons per model for Python (LC, LM, and LPL). This approach provides a comprehensive analysis of model effectiveness in detecting code smells across different programming languages. Table 6 summarizes the number of wins and losses for each model in C#, in Python, and in total, sorted in descending order of the overall number of wins.
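The comparison counts follow from simple arithmetic: with 29 TL configurations (3 instance-based methods × 9 base models, plus TT and TF), each model meets 28 opponents per dataset, and C# contributes two datasets (LC, LM) while Python contributes three (LC, LM, LPL). The sketch below reproduces these counts; expressing a win rate as wins divided by the number of comparisons is an assumption here about how the percentages in Table 6 were computed, and the function names are hypothetical.

```python
def comparisons_per_model(n_models, n_datasets):
    """Each model is compared once with every other model on every dataset."""
    return (n_models - 1) * n_datasets


def win_rate(wins, n_comparisons):
    """Share of comparisons won, as a percentage (assumed definition)."""
    return 100.0 * wins / n_comparisons


N_MODELS = 29  # 27 instance-based (3 methods x 9 base models) + 2 parameter-based
csharp = comparisons_per_model(N_MODELS, n_datasets=2)  # LC, LM
python = comparisons_per_model(N_MODELS, n_datasets=3)  # LC, LM, LPL
print(csharp, python, csharp + python)  # 56 84 140
```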

Table 6.

Wilcoxon test results.

The results show that instance-based TL outperformed the parameter-based approach, with the top-performing models belonging to the instance-based category, particularly the TAB models. I_TAB_GB and I_TAB_ET are the best models overall, both achieving the highest win rate of 83%, with only 4% and 5% losses, respectively, demonstrating superior stability and reliability. Additionally, I_TAB_RF and I_TAB_HGB achieved high numbers of wins and few losses, indicating the effectiveness of ensemble-based models with the TAB method. The IWC and NNW methods showed moderate performance, with less consistency than the top-ranked models. In contrast, parameter-based models performed weakly, with P_TT_DT achieving 25% wins and 66% losses, and P_TF_RF achieving 24% wins and 59% losses.

Box 3. RQ3 Answer

The instance-based TL approach delivered more consistent and reliable detection performance than the parameter-based approach in code smell detection when using Java code smells as the source dataset and both C# and Python code smells as the target datasets.

6.4. RQ4 Discussion

We address the fourth research question, “Is the detection performance of TL approaches consistent across target datasets from different programming languages?”, based on our overall findings. First, the instance-based approach consistently outperformed the parameter-based approach in both C# and Python. Second, among instance-based methods, TAB consistently demonstrated the highest performance, particularly with ensemble-based models. Finally, the parameter-based approach showed variable performance across code smells and languages, depending on the source dataset size and the nature of the data.

To examine these observations further, we compared the C# and Python results using the Wilcoxon test. TAB achieved the highest win percentages in both languages, though the rankings of the base models varied. For instance, I_TAB_ET achieved the top win rate in Python and ranked fourth in C#, yet its winning percentages were similar: 83% in Python and 82% in C#. These results highlight the potential of TL methods to generalize across programming languages when detecting the same code smells. Although Python differs from Java in its object-oriented structure, the Python target still yielded performance comparable to C#, which shares more structural similarities with Java. However, the results may still vary depending on other factors, such as the size of the source dataset, the number of features, and the nature of the data itself.

Box 4. RQ4 Answer

The detection performance of TL approaches is generally consistent across target datasets from different programming languages. Instance-based methods, particularly TAB with ensemble models, consistently outperformed parameter-based methods in both C# and Python. While minor variations exist due to differences in source dataset size, feature composition, and code structure, these results confirm the potential of TL methods to generalize effectively across languages when detecting similar code smells.

6.5. Comparison with Prior Work

For comparison with prior work, we compared our results with the study by Sharma et al. [40], which used TL for cross-language code smell detection. They applied TL on tokenized Java code as the source and C# as the target. In contrast, our approach uses tabular code representations with Java as the source and both C# and Python as targets. Based on the F1-scores, our methodology outperforms that of Sharma et al., demonstrating higher predictive performance.

7. Threats to Validity of Our Results

One potential internal threat to the validity of our results is that the Java, C#, and Python datasets used in this study are imbalanced, which could introduce bias into the model’s performance evaluation. To mitigate this threat, we employed stratified 10-fold cross-validation and repeated it ten times. This approach ensured that all instances were utilized in both the training and testing phases, thus minimizing bias. Additionally, we used the MCC metric to assess detection performance. MCC is particularly suitable for imbalanced binary classification tasks because it provides reliable evaluation by considering all aspects of the confusion matrix.

Another potential threat is overfitting, where the model performs well on training data but fails to generalize to new data. To reduce this risk, repeated cross-validation was used so that the model was always tested on data it had not seen during training. Additionally, since our transfer learning methods were evaluated on a target dataset separate from the source, the models were assessed on data from a different domain, which helps reduce the risk of overfitting to the source domain and supports generalization.

A further internal threat to the validity of our results is the reduction in the number of features required to meet the TL criteria. This step was required to ensure consistency between the source and target datasets, but it is a trade-off that may have limited the diversity of features available for detecting code smells and affected detection performance. To mitigate this threat, we made sure the common features were core attributes consistently associated with code smells based on previous studies [2,54]. Despite this mitigation, a possible decrease in model effectiveness due to fewer features could not be completely avoided.

An external threat to the validity of our results is the ability to generalize the findings of this study. To mitigate this threat, we investigated TL’s detection performance on code smells of two programming languages with different writing structures (C# and Python) to generalize our findings. However, more experiments are required on code smells of other programming languages such as JavaScript. Also, our results are limited to the detection of LC, LM, and LPL smells, and further research is required to generalize these findings across other types of code smells.

A potential overall threat to the validity of our results is that the observed performance differences between the TL methods might not be statistically significant, which would affect the reliability of the comparative results. To address this threat, we used the Wilcoxon signed-rank test to examine the differences between each pair of TL methods and to determine which of these differences were statistically significant. This approach allowed us to obtain more robust and reliable results.

8. Conclusions

In this study, we conducted a comparative analysis of two TL approaches, instance-based and parameter-based TL, to detect LC, LM, and LPL code smells. Java was used as the source dataset, while C# and Python were used as target datasets. The instance-based approach comprised the IWC, NNW, and TAB methods, while the parameter-based approach comprised the TT and TF methods. Additionally, we examined various base models for each instance-based method, including standalone and ensemble-based ML models. TL models were evaluated using stratified 10-fold cross-validation repeated ten times. Further, the Wilcoxon signed-rank test was used to examine the statistical differences between the models.

The findings of our empirical study can be summarized as follows: (1) Instance-based TL demonstrated superior results in code smell detection across several methods. TAB achieved more consistent performance, specifically when paired with ensemble-based base models, in both the C# and Python programming languages. (2) The performance of parameter-based methods varied with the source dataset size. The TT method was more effective in LC code smell detection, whereas the TF method performed better in LM code smell detection across both languages, which is consistent with the source LC dataset being larger than the LM dataset. Both models achieved good results in detecting the LPL code smell in the Python dataset. (3) The instance-based TL approach outperformed the parameter-based approach in code smell detection, with more consistent and reliable results. (4) The investigated TL approaches achieved consistent results across different target programming languages (C# and Python).

This study has a few limitations that should be noted. First, the experiments covered code smells in only three programming languages (Java, C#, and Python), which may limit the applicability of the results to other languages. Second, the feature set was limited to the attributes common across datasets to satisfy TL requirements, which may have reduced the diversity of features available for code smell detection. Third, the evaluation focused on a selected set of TL approaches, so the performance of other potential methods was not investigated. These factors define the scope of our findings and should be taken into account when interpreting the results.

In terms of future work, this research can be extended to a more in-depth investigation of the capabilities of TL in code smell detection. Future research directions may include the following: First, investigate the model’s performance across different datasets with varying feature sets and distributions to assess its generalizability and robustness. Second, examine TL’s performance in detecting other code smells. Third, investigate code smell detection performance on additional target programming languages, such as JavaScript.

Author Contributions

Conceptualization, R.S. and H.A.; methodology, R.S. and H.A.; software, R.S.; validation, R.S. and H.A.; formal analysis, R.S. and H.A.; investigation, R.S. and H.A.; data curation, R.S. and H.A.; writing—original draft preparation, R.S.; writing—review and editing, H.A.; visualization, R.S. and H.A.; supervision, H.A. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge the funding provided by the Interdisciplinary Research Center for Finance and Digital Economy (IRC-FDE) at King Fahd University of Petroleum and Minerals (KFUPM) under Grant No. INFE2410.

Data Availability Statement

The data presented in this study are openly available in: Java-GC: http://dvscross.github.io/BadSmellsDetectionStudy, Java-LM: https://github.com/dataset-cs-surveys/Crowdsmelling, Java-LPL: https://essere.disco.unimib.it/machine-learning-for-code-smell-detection/, and Python: https://github.com/NatthidaW/pythoncodesmell.

Acknowledgments

The authors would like to acknowledge the support of King Fahd University of Petroleum and Minerals (KFUPM) in the development of this work.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

References

- Güzel, A.; Aktas, Ö. A survey on bad smells in codes and usage of algorithm analysis. Int. J. Comput. Sci. Softw. Eng. 2016, 5, 114.

- Fowler, M. Refactoring: Improving the Design of Existing Code. Addison-Wesley Professional: Boston, MA, USA, 2018.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

- Pan, S.J. Transfer learning. Learning 2020, 21, 1–2.

- Malhotra, R.; Meena, S. A systematic review of transfer learning in software engineering. Multimed. Tools Appl. 2024, 83, 87237–87298.

- Chen, Q.; Xue, B.; Zhang, M. Instance based transfer learning for genetic programming for symbolic regression. In Proceedings of the 2019 IEEE Congress on Evolutionary Computation (CEC), Wellington, New Zealand, 10–13 June 2019; pp. 3006–3013.

- Kolcz, A.; Teo, C.H. Feature Weighting for Improved Classifier Robustness. In Proceedings of the 6th Conference on Email and Anti-Spam (CEAS 2009), Mountain View, CA, USA, 16–17 July 2009.

- Lu, N.; Zhang, T.; Fang, T.; Teshima, T.; Sugiyama, M. Rethinking importance weighting for transfer learning. In Federated and Transfer Learning; Springer: Berlin/Heidelberg, Germany, 2022; pp. 185–231.

- Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Al-Stouhi, S.; Reddy, C.K. Adaptive Boosting for Transfer Learning Using Dynamic Updates. In Machine Learning and Knowledge Discovery in Databases ECML/PKDD (1); Springer: Berlin/Heidelberg, Germany, 2011; Volume 6.

- Loog, M. Nearest neighbor-based importance weighting. In Proceedings of the 2012 IEEE International Workshop on Machine Learning for Signal Processing, Santander, Spain, 23–26 September 2012; pp. 1–6.

- Pinto, G.; Messina, R.; Li, H.; Hong, T.; Piscitelli, M.S.; Capozzoli, A. Sharing is caring: An extensive analysis of parameter-based transfer learning for the prediction of building thermal dynamics. Energy Build. 2022, 276, 112530.

- Minvielle, L.; Atiq, M.; Peignier, S.; Mougeot, M. Transfer learning on decision tree with class imbalance. In Proceedings of the 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 4–6 November 2019; pp. 1003–1010.

- Lacerda, G.; Petrillo, F.; Pimenta, M.; Guéhéneuc, Y.G. Code smells and refactoring: A tertiary systematic review of challenges and observations. J. Syst. Softw. 2020, 167, 110610.

- Menshawy, R.S.; Yousef, A.H.; Salem, A. Code Smells and Detection Techniques: A Survey. In Proceedings of the 2021 International Mobile, Intelligent, and Ubiquitous Computing Conference (MIUCC), Cairo, Egypt, 26–27 May 2021; pp. 78–83.

- Moha, N.; Guéhéneuc, Y.G.; Duchien, L.; Le Meur, A.F. Decor: A method for the specification and detection of code and design smells. IEEE Trans. Softw. Eng. 2009, 36, 20–36.

- Al-Shaaby, A.; Aljamaan, H.; Alshayeb, M. Bad smell detection using machine learning techniques: A systematic literature review. Arab. J. Sci. Eng. 2020, 45, 2341–2369.

- Kreimer, J. Adaptive detection of design flaws. Electron. Notes Theor. Comput. Sci. 2005, 141, 117–136.

- Amorim, L.; Costa, E.; Antunes, N.; Fonseca, B.; Ribeiro, M. Experience report: Evaluating the effectiveness of decision trees for detecting code smells. In Proceedings of the 2015 IEEE 26th International Symposium on Software Reliability Engineering (ISSRE), Gaithersburg, MD, USA, 2–5 November 2015; pp. 261–269.

- Tian, Y.; Lo, D.; Sun, C. Information retrieval based nearest neighbor classification for fine-grained bug severity prediction. In Proceedings of the 2012 19th Working Conference on Reverse Engineering, Kingston, ON, Canada, 15–18 October 2012; pp. 215–224.

- Arcelli Fontana, F.; Mäntylä, M.V.; Zanoni, M.; Marino, A. Comparing and experimenting machine learning techniques for code smell detection. Empir. Softw. Eng. 2016, 21, 1143–1191.

- Kim, D.K. Finding bad code smells with neural network models. Int. J. Electr. Comput. Eng. 2017, 7, 3613.

- Khomh, F.; Vaucher, S.; Guéhéneuc, Y.G.; Sahraoui, H. BDTEX: A GQM-based Bayesian approach for the detection of antipatterns. J. Syst. Softw. 2011, 84, 559–572.

- Vaucher, S.; Khomh, F.; Moha, N.; Guéhéneuc, Y.G. Tracking design smells: Lessons from a study of god classes. In Proceedings of the 2009 16th Working Conference on Reverse Engineering, Lille, France, 13–16 October 2009; pp. 145–154.

- Wang, X.; Dang, Y.; Zhang, L.; Zhang, D.; Lan, E.; Mei, H. Can I clone this piece of code here? In Proceedings of the 27th IEEE/ACM International Conference on Automated Software Engineering, Essen, Germany, 3–7 September 2012; pp. 170–179.

- Dewangan, S.; Rao, R.S. Code Smell Detection Using Classification Approaches. In Intelligent Systems; Springer: Singapore, 2022; pp. 257–266.

- Vatanapakorn, N.; Soomlek, C.; Seresangtakul, P. Python code smell detection using machine learning. In Proceedings of the 2022 26th International Computer Science and Engineering Conference (ICSEC), Sakon Nakhon, Thailand, 21–23 December 2022; pp. 128–133.

- Sandouka, R.; Aljamaan, H. Python code smells detection using conventional machine learning models. PeerJ Comput. Sci. 2023, 9, e1370.

- Chen, Z.; Chen, L.; Ma, W.; Xu, B. Detecting code smells in Python programs. In Proceedings of the 2016 International Conference on Software Analysis, Testing and Evolution (SATE), Kunming, China, 3–4 November 2016; pp. 18–23.

- Aljamaan, H. Voting Heterogeneous Ensemble for Code Smell Detection. In Proceedings of the 2021 20th IEEE International Conference on Machine Learning and Applications (ICMLA), Pasadena, CA, USA, 13–16 December 2021; pp. 897–902.

- Alazba, A.; Aljamaan, H. Code smell detection using feature selection and stacking ensemble: An empirical investigation. Inf. Softw. Technol. 2021, 138, 106648.

- Jain, S.; Saha, A. Improving performance with hybrid feature selection and ensemble machine learning techniques for code smell detection. Sci. Comput. Program. 2021, 212, 102713.

- Aljamaan, H. Dynamic stacking ensemble for cross-language code smell detection. PeerJ Comput. Sci. 2024, 10, e2254.

- Rao, R.S.; Dewangan, S.; Mishra, A. An Empirical Evaluation of Ensemble Models for Python Code Smell Detection. Appl. Sci. 2025, 15, 7472.

- Hadj-Kacem, M.; Bouassida, N. A Hybrid Approach To Detect Code Smells using Deep Learning. In Proceedings of the ENASE, Setubal, Portugal, 23–24 March 2018; pp. 137–146.

- Liu, H.; Jin, J.; Xu, Z.; Zou, Y.; Bu, Y.; Zhang, L. Deep learning based code smell detection. IEEE Trans. Softw. Eng. 2019, 47, 1811–1837.

- Das, A.K.; Yadav, S.; Dhal, S. Detecting code smells using deep learning. In Proceedings of the TENCON 2019—2019 IEEE Region 10 Conference (TENCON), Kochi, India, 17–20 October 2019; pp. 2081–2086.

- Lin, T.; Fu, X.; Chen, F.; Li, L. A Novel Approach for Code Smells Detection Based on Deep Leaning. In EAI International Conference on Applied Cryptography in Computer and Communications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 171–174.

- Gupta, H.; Kulkarni, T.G.; Kumar, L.; Neti, L.B.M.; Krishna, A. An empirical study on predictability of software code smell using deep learning models. In International Conference on Advanced Information Networking and Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 120–132.

- Sharma, T.; Efstathiou, V.; Louridas, P.; Spinellis, D. Code smell detection by deep direct-learning and transfer-learning. J. Syst. Softw. 2021, 176, 110936.

- Sandouka, R.; Aljamaan, H. Enhancing Python Code Smell Detection with Heterogeneous Ensembles. Int. J. Softw. Eng. Knowl. Eng. 2025, 35, 963–986.

- Feng, Z.; Guo, D.; Tang, D.; Duan, N.; Feng, X.; Gong, M.; Shou, L.; Qin, B.; Liu, T.; Jiang, D.; et al. CodeBERT: A pre-trained model for programming and natural languages. arXiv 2020, arXiv:2002.08155.

- Guo, D.; Ren, S.; Lu, S.; Feng, Z.; Tang, D.; Liu, S.; Zhou, L.; Duan, N.; Svyatkovskiy, A.; Fu, S.; et al. GraphCodeBERT: Pre-training code representations with data flow. arXiv 2020, arXiv:2009.08366.

- Ahmad, W.U.; Chakraborty, S.; Ray, B.; Chang, K.W. Unified pre-training for program understanding and generation. arXiv 2021, arXiv:2103.06333.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Cariucci, F.M. ADAPT: Python Toolbox for Domain Adaptation. Version 0.4.4. 2021. Available online: https://adapt-python.github.io/adapt/index.html (accessed on 18 November 2024).

- Stack Overflow. Stack Overflow Developer Survey 2024. Available online: https://survey.stackoverflow.co/2024 (accessed on 20 August 2025).

- Dmytrenko, T.; Derkach, T.; Dmytrenko, A. Using Java and C# programming languages for server platforms and workstations. Navig. Commun. Control Syst. Collect. Sci. Pap. 2023, 3, 93–94.

- Srinath, K. Python–the fastest growing programming language. Int. Res. J. Eng. Technol. 2017, 4, 354–357.

- Lanza, M.; Marinescu, R. Object-Oriented Metrics in Practice: Using Software Metrics to Characterize, Evaluate, and Improve the Design of Object-Oriented Systems; Springer: Berlin/Heidelberg, Germany, 2006.

- Alkharabsheh, K.; Crespo, Y.; Fernández-Delgado, M.; Cotos, J.M.; Taboada, J.A. Assessing the influence of size category of the project in god class detection, an experimental approach based on machine learning (MLA). In Proceedings of the International Conference on Software Engineering & Knowledge Engineering, Lisbon, Portugal, 10–12 July 2019; pp. 361–366.

- Cruz, D.; Santana, A.; Figueiredo, E. Detecting bad smells with machine learning algorithms: An empirical study. In Proceedings of the 3rd International Conference on Technical Debt, Seoul, Republic of Korea, 25–26 May 2020; pp. 31–40.

- Reis, J.P.d.; Abreu, F.B.e.; Carneiro, G.d.F. Crowdsmelling: A preliminary study on using collective knowledge in code smells detection. Empir. Softw. Eng. 2022, 27, 69.

- Yadav, P.S.; Rao, R.S.; Mishra, A.; Gupta, M. Machine learning-based methods for code smell detection: A survey. Appl. Sci. 2024, 14, 6149.

- Abdou, A.; Darwish, N. Severity classification of software code smells using machine learning techniques: A comparative study. J. Softw. Evol. Process 2024, 36, e2454.

- Moinuddin, S.; Iqbal, F.; Hamza, H.A.; Fayyaz, S. Empirical study of Long Parameter List code smell and refactoring tool comparison. Int. J. Multidiscip. Sci. Eng. 2017, 8, 11–16.

- Chicco, D.; Warrens, M.J.; Jurman, G. The Matthews correlation coefficient (MCC) is more informative than Cohen’s Kappa and Brier score in binary classification assessment. IEEE Access 2021, 9, 78368–78381.

- Singh, D.; Singh, B. Investigating the impact of data normalization on classification performance. Appl. Soft Comput. 2020, 97, 105524.

- Liashchynskyi, P.; Liashchynskyi, P. Grid search, random search, genetic algorithm: A big comparison for NAS. arXiv 2019, arXiv:1912.06059.

- Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 20–25 August 1995; Volume 14, pp. 1137–1145.

- Tantithamthavorn, C.; McIntosh, S.; Hassan, A.E.; Matsumoto, K. An empirical comparison of model validation techniques for defect prediction models. IEEE Trans. Softw. Eng. 2016, 43, 1–18.

- Montesinos López, O.A.; Montesinos López, A.; Crossa, J. Overfitting, model tuning, and evaluation of prediction performance. In Multivariate Statistical Machine Learning Methods for Genomic Prediction; Springer: Berlin/Heidelberg, Germany, 2022; pp. 109–139.

- Azeem, M.I.; Palomba, F.; Shi, L.; Wang, Q. Machine learning techniques for code smell detection: A systematic literature review and meta-analysis. Inf. Softw. Technol. 2019, 108, 115–138.

- Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).