Real-Time Monitoring of 3D Printing Process by Endoscopic Vision System Integrated in Printer Head

Abstract

1. Introduction

Current Research in Online Monitoring

2. FDM Technology

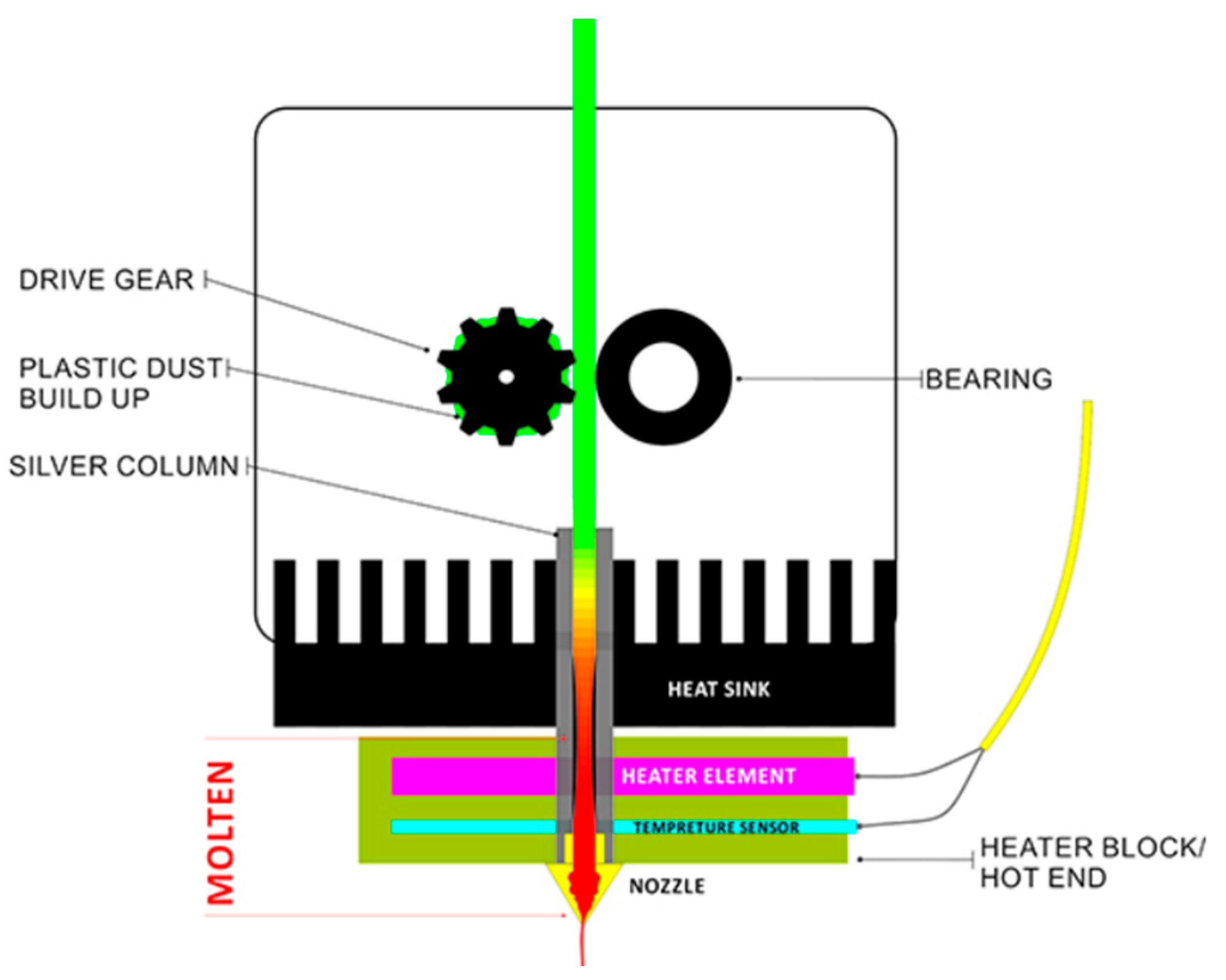

2.1. FDM Print Head

2.1.1. Motors and Drives

2.1.2. Extruder

2.1.3. Hot End

- Heater block—heats the hot end of the print head to the desired temperature.

- Thermistor or temperature sensor—monitors the temperature of the heating block to maintain temperature stability.
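The interplay of the two components above can be sketched in a few lines of Python. This is only an illustrative sketch, not the printer's firmware. The beta-model constants (`r0_ohm`, `t0_c`, `beta`), the hysteresis width, and both function names are assumptions chosen for the example. Real firmware often uses PID control rather than the simple threshold logic shown here.

```python
import math


def thermistor_temp_c(resistance_ohm, r0_ohm=100_000.0, t0_c=25.0, beta=3950.0):
    """Convert an NTC thermistor resistance to degrees Celsius (beta model).

    r0_ohm, t0_c and beta are assumed example values, not taken from the article.
    """
    t0_k = t0_c + 273.15
    inv_t = 1.0 / t0_k + math.log(resistance_ohm / r0_ohm) / beta
    return 1.0 / inv_t - 273.15


def heater_on(temp_c, target_c, currently_on, hysteresis_c=2.0):
    """Decide the heater state with simple on/off control and hysteresis."""
    if temp_c < target_c - hysteresis_c:
        return True           # too cold: switch the heater block on
    if temp_c > target_c + hysteresis_c:
        return False          # too hot: switch it off
    return currently_on       # inside the band: keep the current state


if __name__ == "__main__":
    temp = thermistor_temp_c(1_000.0)  # low resistance means a hot NTC sensor
    print(f"measured: {temp:.1f} C, heater on: {heater_on(temp, 210.0, False)}")
```

The hysteresis band keeps the heater from switching rapidly when the measured temperature hovers around the target.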

2.1.4. Cooling

2.1.5. Filament Guidance

3. Methodology

3.1. Mechanical Errors and Their Impact on Print Quality

3.2. Errors Caused by Incorrect Print Settings

3.3. Selection of Critical Errors That We Can Detect

3.3.1. Layer Shifting

3.3.2. Adhesion to the Surface

3.3.3. Stringing

4. Material and Methods

4.1. Types of Endoscopic Cameras

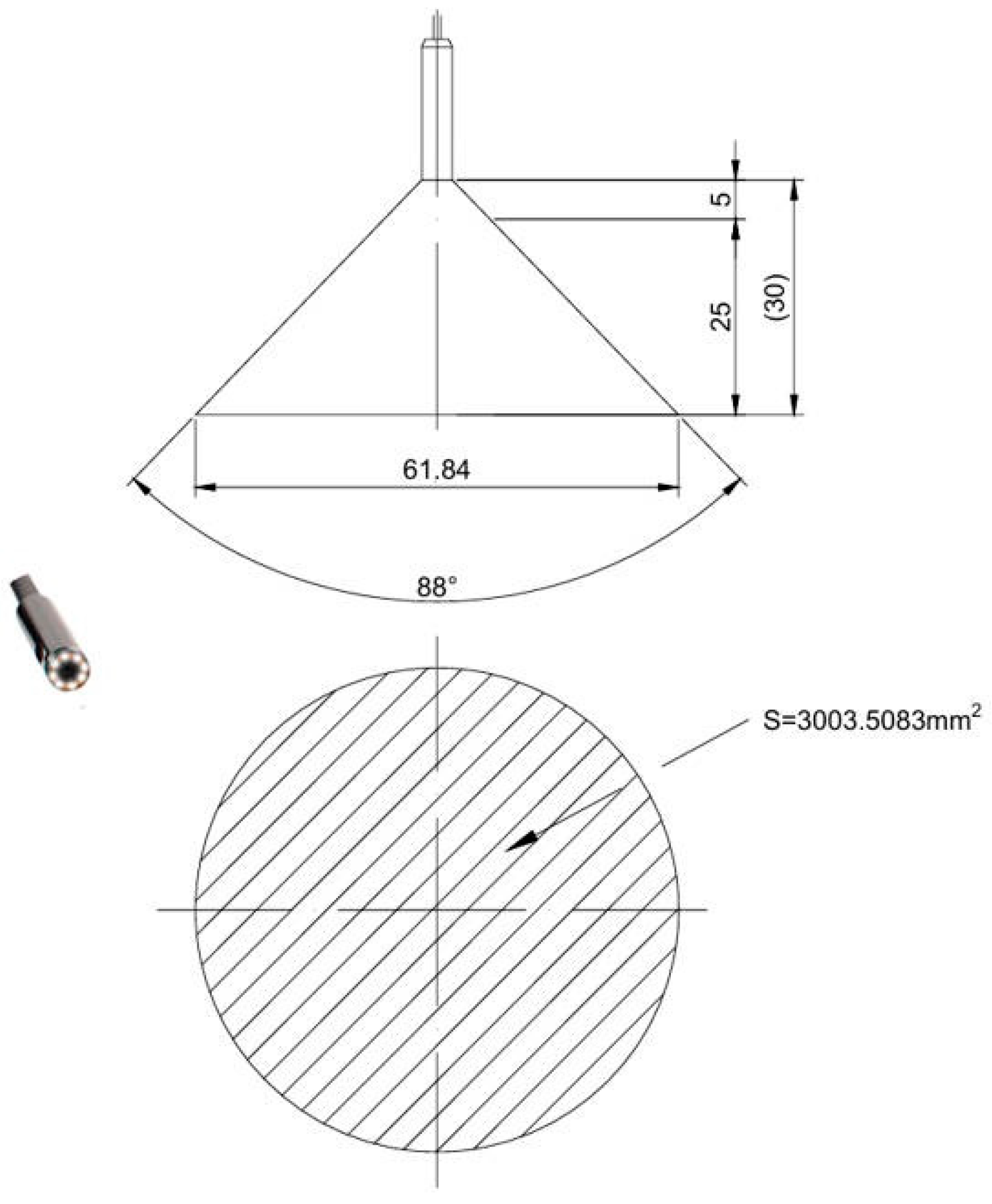

Endoscopic Camera Used in Experiment

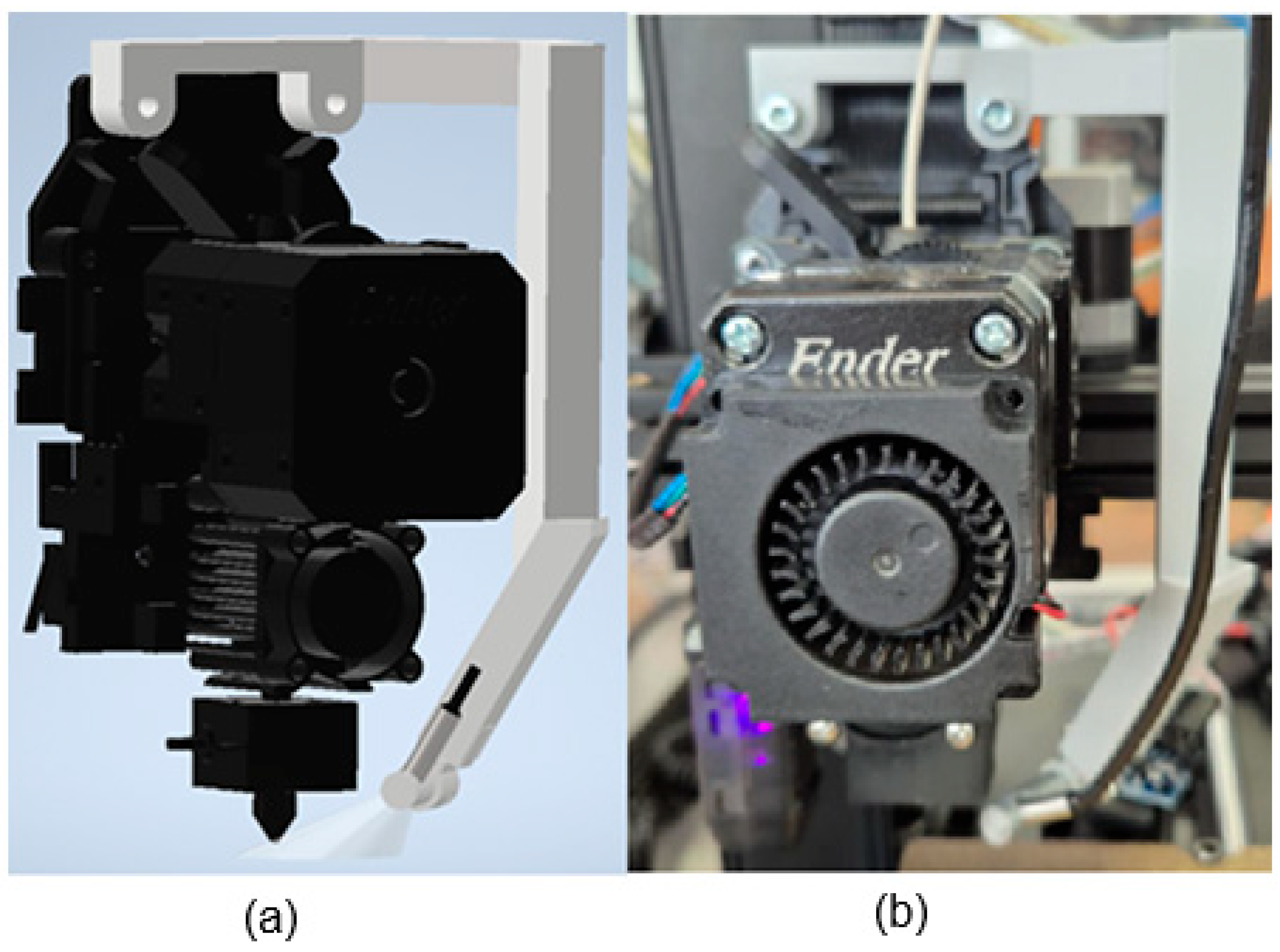

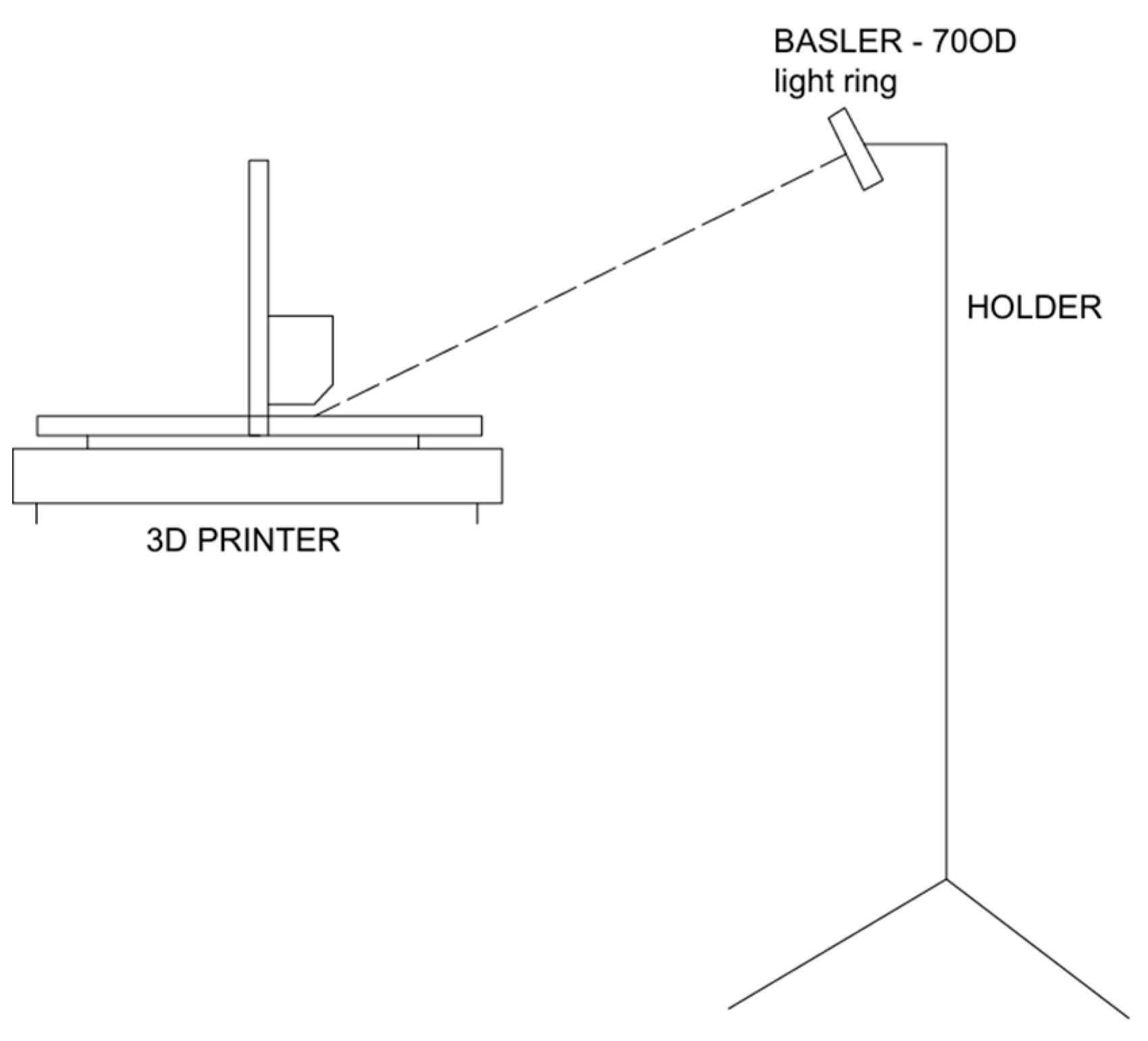

4.2. Endoscopic Camera Placement Design

4.3. External Light Source

5. Training Process

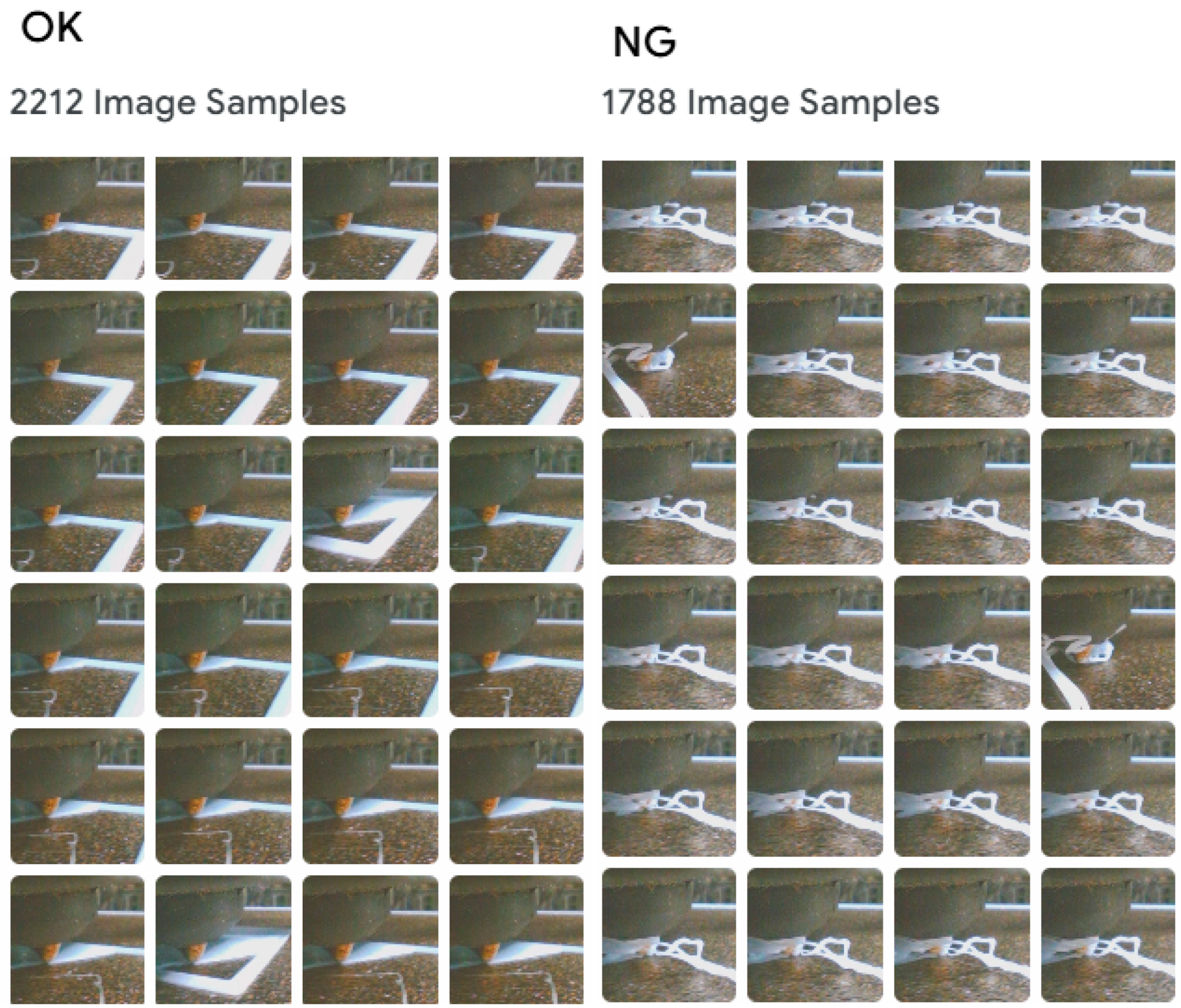

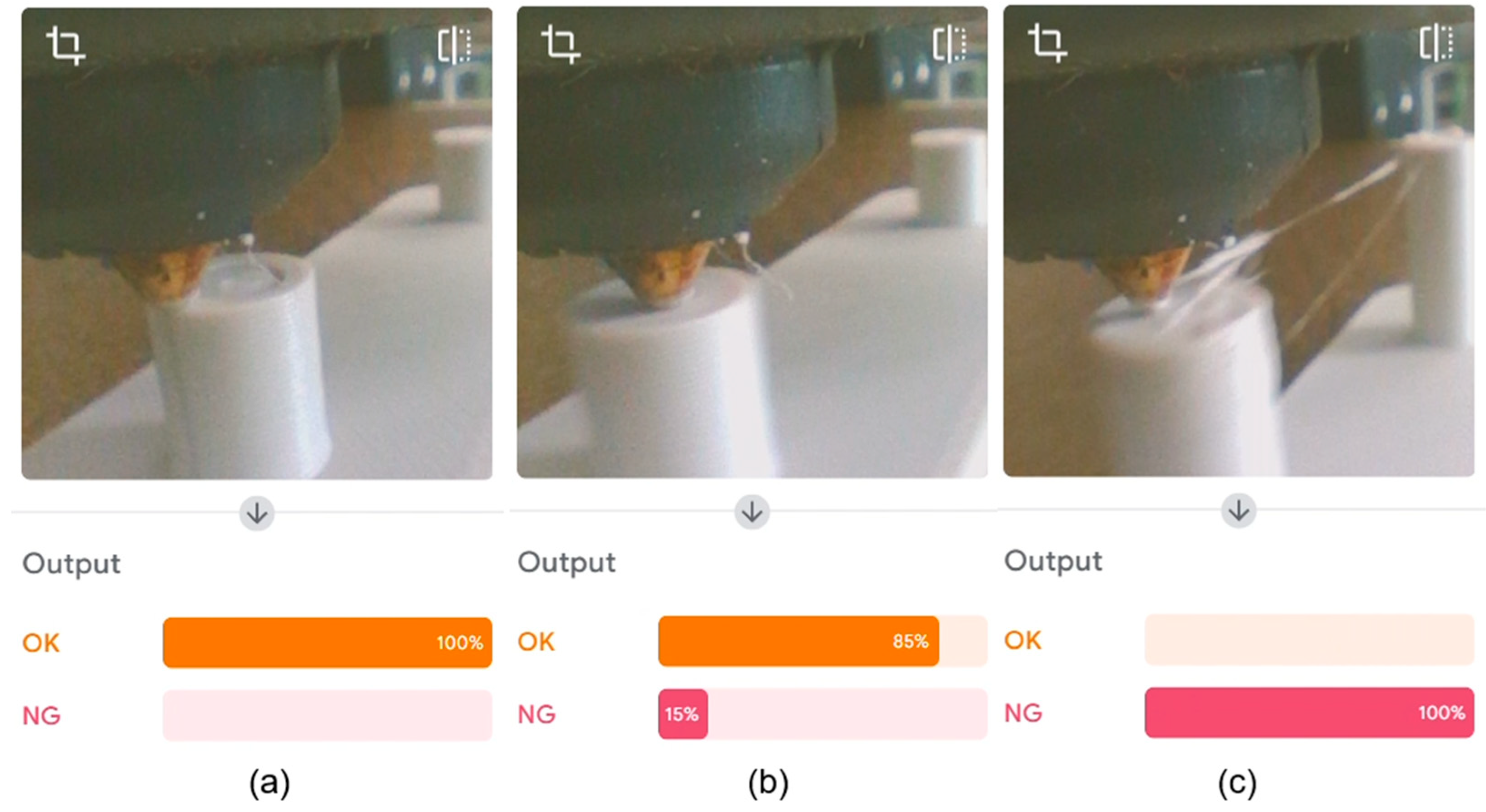

5.1. Stringing

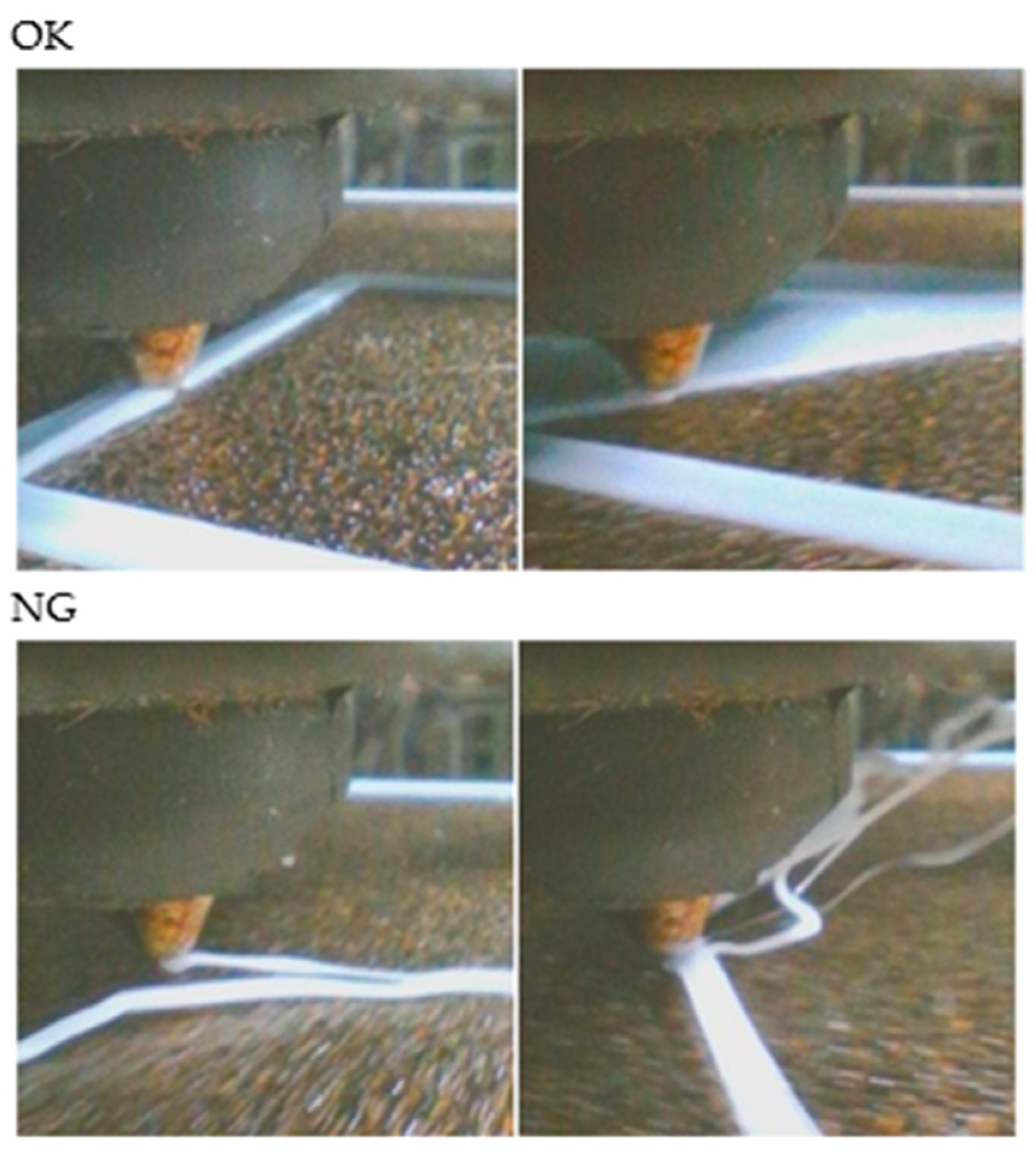

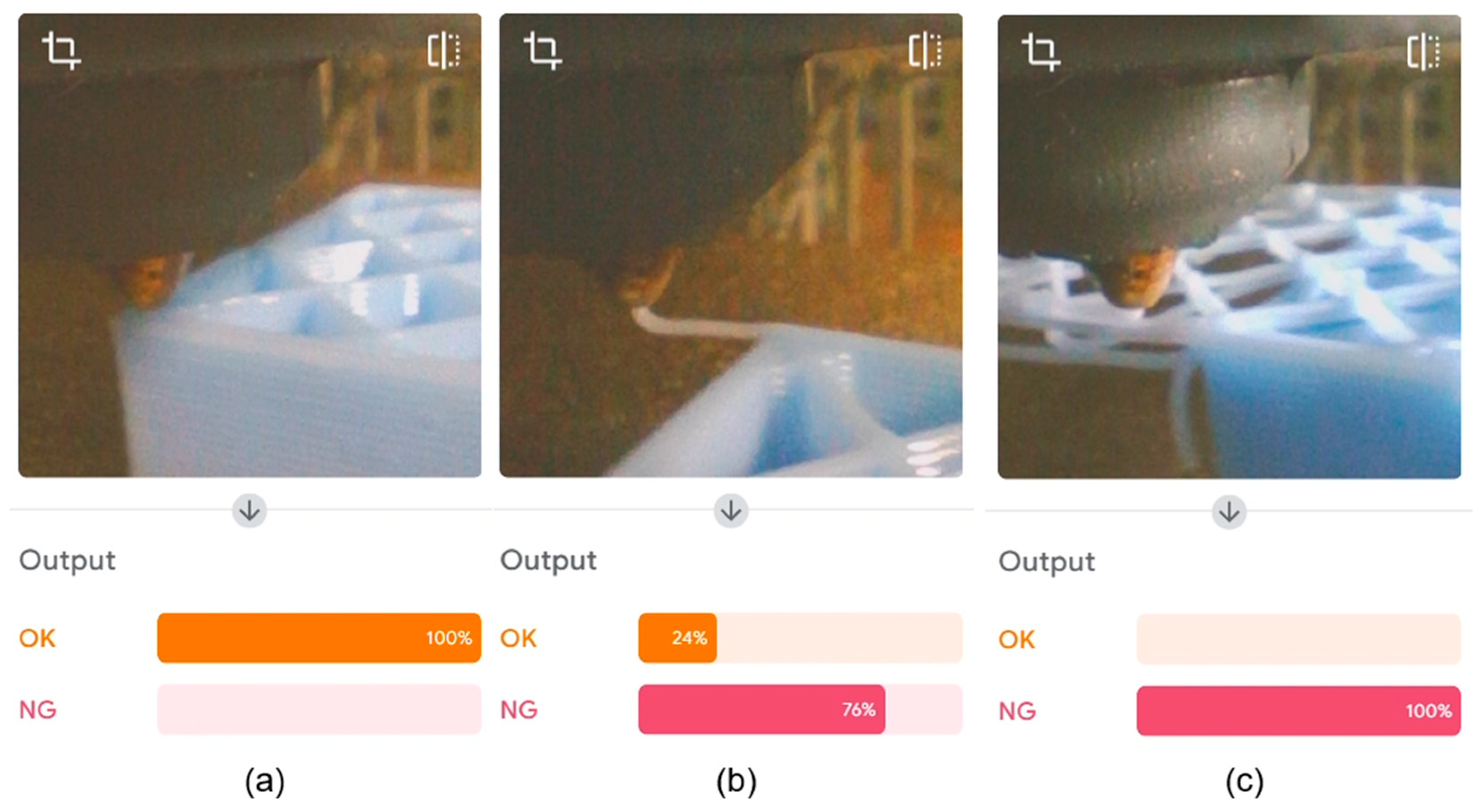

5.2. Layer Shifting

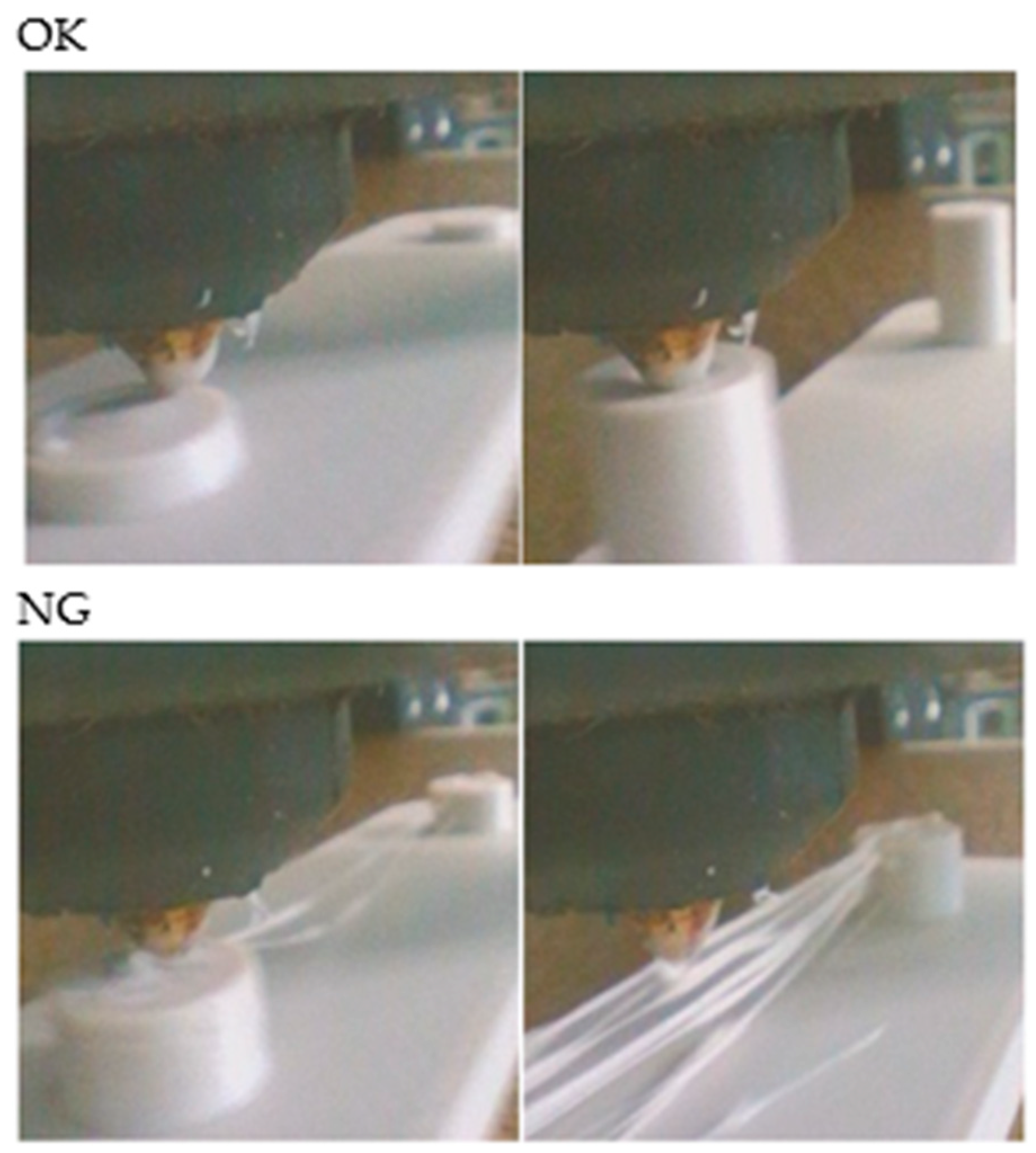

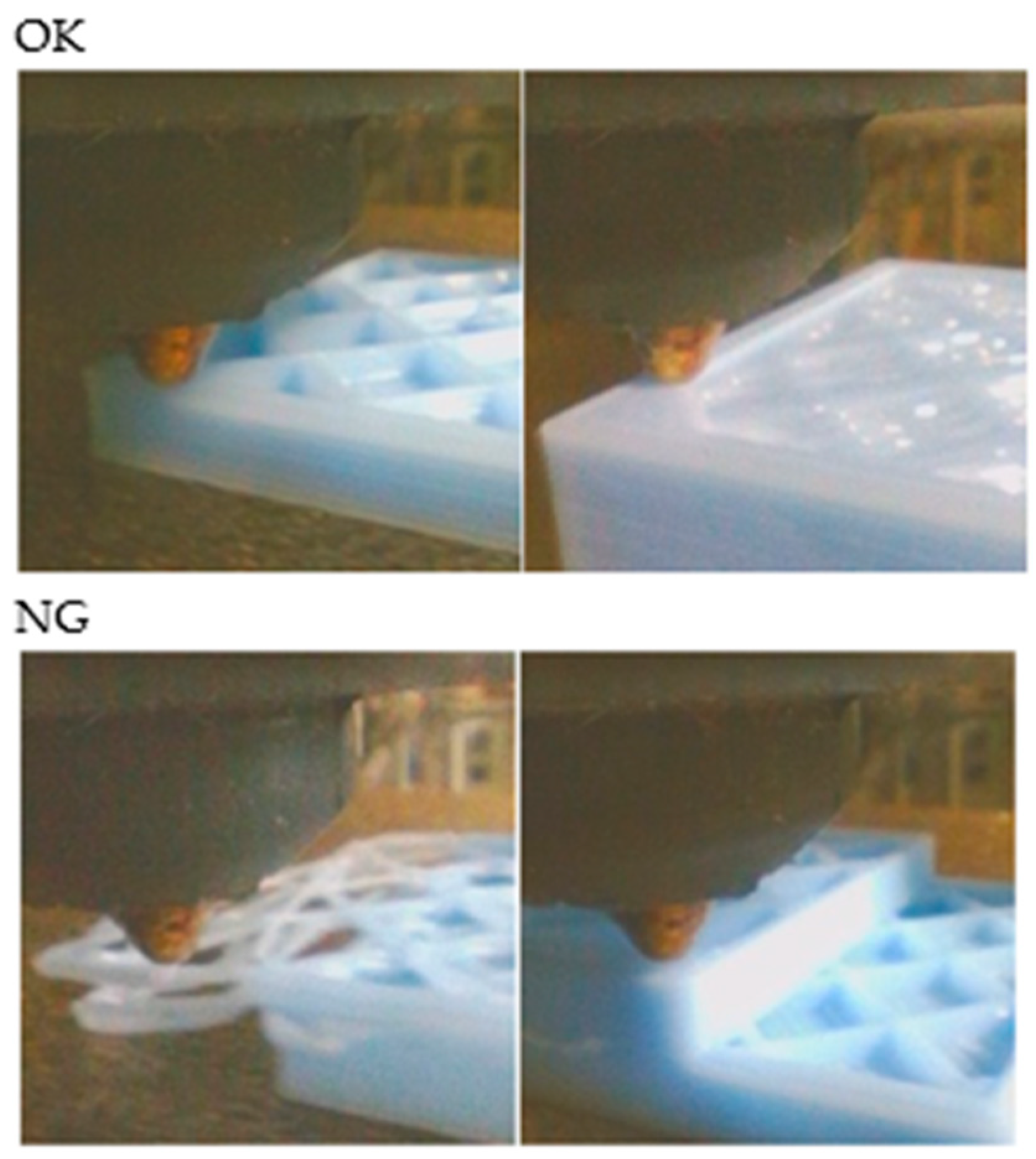

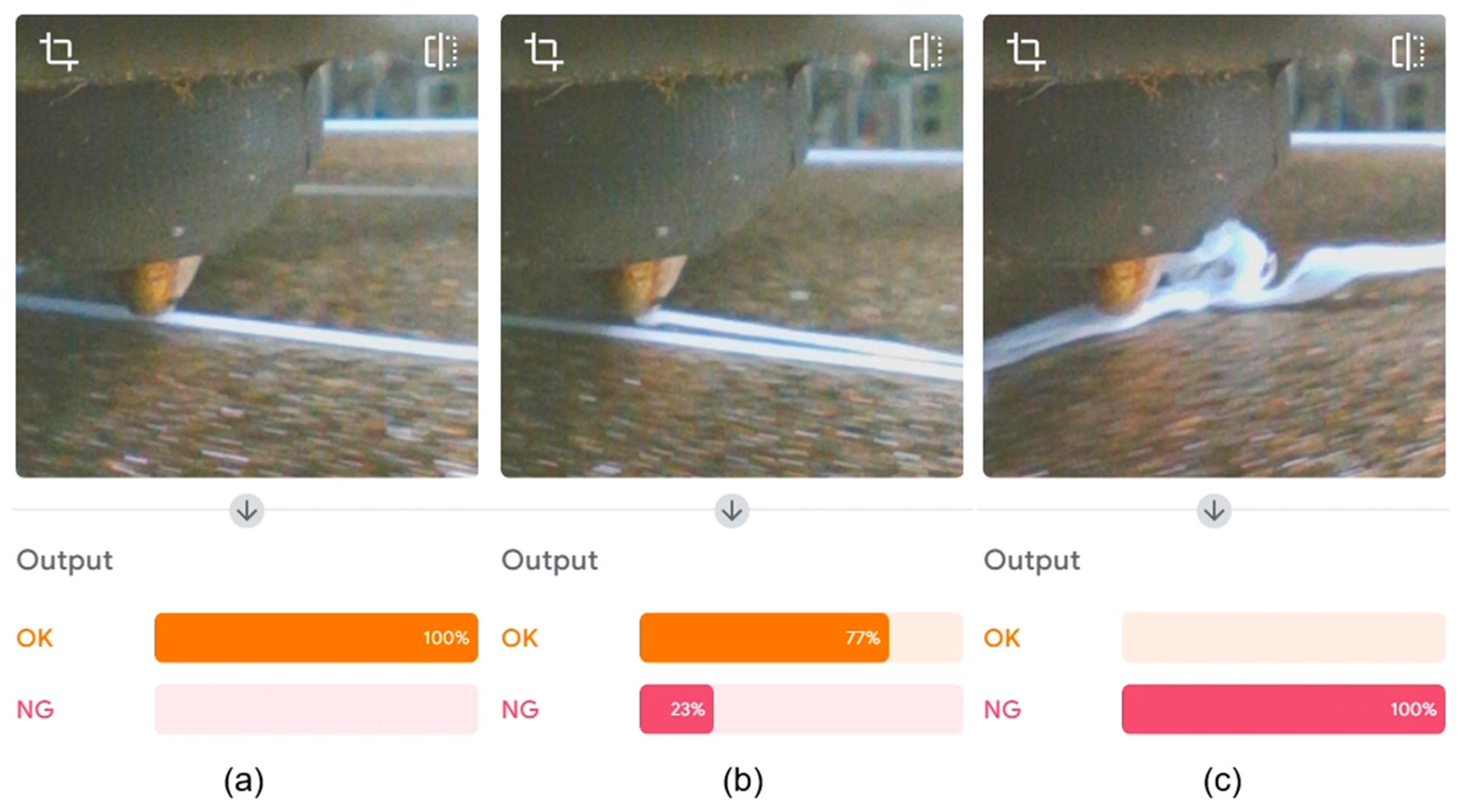

5.3. Adhesion to the Surface

5.4. CNN

5.5. Teachable Machine

6. Results

7. Discussion

8. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Rating | Layer Shifting | Non-Adhesion to the Surface | Stringing |
|---|---|---|---|
| Detection rate | Excellent | Very good | Good |
| Detection quality | Excellent | Good | Good |
| Position of error detection in the print | All layers | First layer | Middle layers |
| Effect of the error on stopping the 3D printer | Depends on the shift size | Immediate stop | Change printing parameters only |
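The last row of the table above (how each error affects stopping the printer) can be read as a small decision rule. The sketch below is one possible encoding of that rule, not the authors' implementation. The class labels, the `Action` enum, the `max_shift_mm` threshold, and the choice to continue printing after a small shift are all hypothetical and serve only as illustration.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    ADJUST_PARAMETERS = "adjust_parameters"
    STOP = "stop"


def choose_action(defect, shift_mm=0.0, max_shift_mm=0.5):
    """Map a detected defect class to a printer reaction.

    max_shift_mm is an assumed tolerance; the table only states that the
    reaction to layer shifting depends on the size of the shift.
    """
    if defect == "non_adhesion":
        return Action.STOP                  # first-layer failure: stop at once
    if defect == "stringing":
        return Action.ADJUST_PARAMETERS     # change printing parameters only
    if defect == "layer_shifting":
        # Assumption: small shifts are tolerated, large ones stop the print.
        return Action.STOP if shift_mm > max_shift_mm else Action.CONTINUE
    return Action.CONTINUE                  # no defect or unknown label


if __name__ == "__main__":
    print(choose_action("layer_shifting", shift_mm=1.2))
    print(choose_action("stringing"))
```

Keeping the rule in a single function makes the tolerance easy to tune once measured shift sizes are available.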

| Defects | Total Printing Time [s] | Time of Low and Incorrect Classification [s] | Percentage Success Rate [%] |
|---|---|---|---|
| Layer shifting | 9060 | 128 | 98.59 |
| Non-adhesion to surface | 180 | 7 | 96.20 |
| Stringing | 2477 | 44 | 98.22 |
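The success rates in the table above appear to follow from the two time columns. The sketch below assumes the rate is the share of printing time without low or incorrect classification, that is, 100 · (1 − t_bad / t_total). The formula and the function name are assumptions, not taken from the article. Under this assumption the layer shifting and stringing rows are reproduced exactly (98.59 and 98.22), while the non-adhesion row comes out at 96.11 rather than the reported 96.20, so the article may have used unrounded times or a slightly different definition for that row.

```python
def success_rate_percent(total_s, misclassified_s):
    """Share of printing time with correct classification, in percent.

    Assumed formula: 100 * (1 - misclassified_s / total_s).
    """
    if total_s <= 0:
        raise ValueError("total_s must be positive")
    return 100.0 * (1.0 - misclassified_s / total_s)


# (total printing time [s], time of low and incorrect classification [s])
rows = {
    "Layer shifting": (9060, 128),
    "Non-adhesion to surface": (180, 7),
    "Stringing": (2477, 44),
}

if __name__ == "__main__":
    for name, (total_s, bad_s) in rows.items():
        print(f"{name}: {success_rate_percent(total_s, bad_s):.2f} %")
```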

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kondrat, M.; Nazim, A.; Zidek, K.; Pitel, J.; Lazorík, P.; Duhancik, M. Real-Time Monitoring of 3D Printing Process by Endoscopic Vision System Integrated in Printer Head. Appl. Sci. 2025, 15, 9286. https://doi.org/10.3390/app15179286
