1. Introduction

Learning English as a second language remains a persistent challenge, particularly in the areas of speaking and listening, where many non-native learners struggle with pronouncing phonemes absent from their native language [1, 2, 3]. These phonological limitations adversely affect communicative fluency, hinder effective verbal interaction, and may diminish learners’ motivation throughout the learning process [4].

Information and Communication Technologies (ICT) have significantly transformed educational environments by enabling more dynamic, accessible, and personalized learning experiences. Their integration into the classroom has facilitated the development of interactive materials, on-demand content delivery, and adaptive platforms tailored to individual student needs. Several studies agree that the use of ICT increases motivation, autonomy, and academic performance, positioning it as a cornerstone of contemporary education [5, 6, 7].

In particular, Virtual Reality (VR) and Artificial Intelligence (AI) have emerged as two of the most promising technologies for education. VR provides immersive environments that safely simulate real-life scenarios, thereby supporting conceptual understanding and skill development in authentic, context-rich settings [8, 9, 10]. Meanwhile, AI enables the creation of intelligent systems capable of assessing student performance, personalizing content, and providing immediate feedback. The integration of both technologies facilitates the creation of more effective, inclusive, and self-directed learning experiences, with notable applications in language learning, science education, and technical training [11, 12]. Furthermore, accessible platforms such as Teachable Machine have enabled the integration of custom-trained audio recognition models capable of identifying phonetic patterns and evaluating user pronunciation in real time. These models, when connected to databases such as Firebase, allow for the storage and analysis of information to deliver automated, objective, and scalable assessments [13, 14, 15]. Recent studies support the effectiveness of these solutions in educational settings by reducing the teacher’s evaluative burden and promoting learner autonomy [16, 17].

This paper presents the design, implementation, and validation of an educational virtual reality application developed in Unity, aimed at improving English pronunciation. The virtual environment, designed as a library-inspired immersive space, allows users to interact with a virtual book and practice six selected words through two distinct modes: practice and assessment. The system utilizes voice recognition models trained with Teachable Machine, divided into two groups, and connects to Firebase to compute a final score based on the successful recognition of the target words. This proposal is grounded in the principles of constructivist learning and situated cognition, where learners build knowledge through active interaction with a meaningful, contextualized, and autonomous environment [18, 19].

2. State of the Art

2.1. Virtual Reality Applications in Education

The use of Virtual Reality in educational contexts has demonstrated its effectiveness in enhancing conceptual understanding and student engagement. Rahman and Islam [8] developed VREd, a virtual classroom platform built using WebGL 2.0 and Unity3D 2022.3.7f1, which allowed students to attend simulated classes during the pandemic. They employed 3D modeling and interactive animations, resulting in increased levels of student engagement and satisfaction. Similarly, Tytarenko [9] analyzed graphical and performance-related factors in the development of VR environments using Unity. The study proposed texture and load optimization strategies to improve the immersive experience, which led to more fluid simulations and reduced latency in educational applications.

On another front, Lu et al. [11] designed a VR application for teaching architecture to children. Using Unity and OpenXR, they created a modular learning system in which students constructed virtual structures that were later fabricated using laser cutting. The results showed a 20% improvement in spatial understanding and a notable increase in student motivation. Complementarily, Logothetis et al. [12] developed EduARdo, an augmented reality educational environment integrating Unity components to enhance classroom interaction. The implementation emphasized collaborative activities and achieved a 25% increase in content retention, as evidenced by comparative testing.

These studies highlight the potential of VR to simulate real-world experiences that promote learner engagement and enhance knowledge comprehension and retention, particularly when combined with development platforms such as Unity.

2.2. Automated Pronunciation Assessment and Teachable Machine

The work of Rahman and Islam [8] presents a virtual classroom platform developed with Unity and WebGL, focused on synchronous classes, but without automated student performance assessment capabilities. Wang [16] developed a system based on linear predictive coding (LPC) to analyze acoustic signals and evaluate pronunciation, achieving 91.3% accuracy, although without providing an immersive environment or adaptive feedback. Zhang et al. [17], on the other hand, applied natural language processing (NLP) techniques to offer phonetic, spelling, and grammatical corrections using neural networks, achieving improvements over traditional methods, but with dependence on external computing infrastructure and without real-time interactive components. Likewise, Al-Shallakh [20] proposed an AI-based educational application to improve pronunciation, with an 87% match with human evaluators, but focused on repetitive exercises, without contextualized learning or an immersive virtual environment. In contrast, the solution presented in this work offers a comprehensive educational ecosystem that combines automatic pronunciation assessment, a 3D immersive environment, local processing using lightweight in-browser models, and asynchronous real-time interaction through Firebase. This architecture eliminates the need for high-performance servers, allowing for a portable and efficient implementation in real-world educational environments. Furthermore, immediate visual feedback based on user performance strengthens autonomous and contextualized learning, which represents a substantial advantage over traditional or partially integrated systems.

In terms of accessible tools, Farid et al. [13] implemented Teachable Machine in a school attendance application. They trained custom audio models to detect student names during roll call, achieving a recognition accuracy of 95%. Along the same lines, Malahina [14] applied Teachable Machine for dialect recognition in the Sumba Timur region. The system, trained on localized speech samples, achieved an average performance of 90%, demonstrating its efficacy in cost-effective and rapidly deployable educational settings.

Additionally, Colombo et al. [3] explored the limitations of automated language evaluation metrics. They analyzed various algorithms such as BLEU, ROUGE, and BERTScore across tasks including translation, summarization, and dialogue, finding that these metrics exhibited greater consistency among themselves than with human evaluators. This discrepancy highlights the need to develop complementary evaluation frameworks specifically tailored to educational applications.

Taken together, these studies underscore the feasibility and reliability of automated assessment systems, particularly those based on lightweight machine learning models like Teachable Machine, which are also compatible with tools such as Firebase for real-time monitoring and feedback delivery.

2.3. Pronunciation in EFL and Pedagogical Needs

Numerous studies have addressed the specific difficulties faced by English as a Foreign Language (EFL) learners, especially in producing sounds that do not exist in their native phonological systems. Yulianti et al. [1] conducted a qualitative study with Indonesian university students, focusing on pronunciation errors involving English vowels. Through recordings and phonetic analysis, they found that half of the identified errors were concentrated in long vowels such as /iː/ and /uː/, concluding that these require repeated practice supplemented by targeted feedback. Similarly, Kulsum et al. [2] assessed secondary school students and reported that the main barriers to speaking were both psychological (e.g., anxiety) and technical (e.g., lack of precise corrective feedback). They recommended implementing interactive learning environments to support traditional instruction.

Muklas et al. [4] implemented an intervention with seventh-grade students in Indonesia using audio recordings, oral corrections, and read-aloud exercises. They observed a 40% improvement in the correct articulation of consonants by the end of the intervention. Meanwhile, Marzuki [7] reviewed technological strategies for English language learning, concluding that those integrating immediate feedback, such as AI- or VR-based applications, yielded significant improvements in both comprehension and oral production.

3. Methodology

This research adopted a descriptive approach with both qualitative and quantitative components. Its objective was to design, implement, and validate a virtual reality educational application aimed at enhancing English pronunciation for second language learners. The application was developed in Unity and designed for use with HTC Vive Pro 2 headsets, haptic controllers, a microphone, and integrated audio output, enabling user interaction within an immersive environment.

To evaluate the system, analytical-synthetic, technical-computational, and experimental methodologies were applied. The analytical method was used to structure the theoretical and technological underpinnings of the proposal. The technical-computational method facilitated the system’s implementation through C# scripting and the integration of machine learning models developed with Teachable Machine. Finally, the experimental method validated the system’s functionality through functional testing, simulation activities, and analysis of data extracted from the real-time database.

3.1. Architecture and Technological Tools

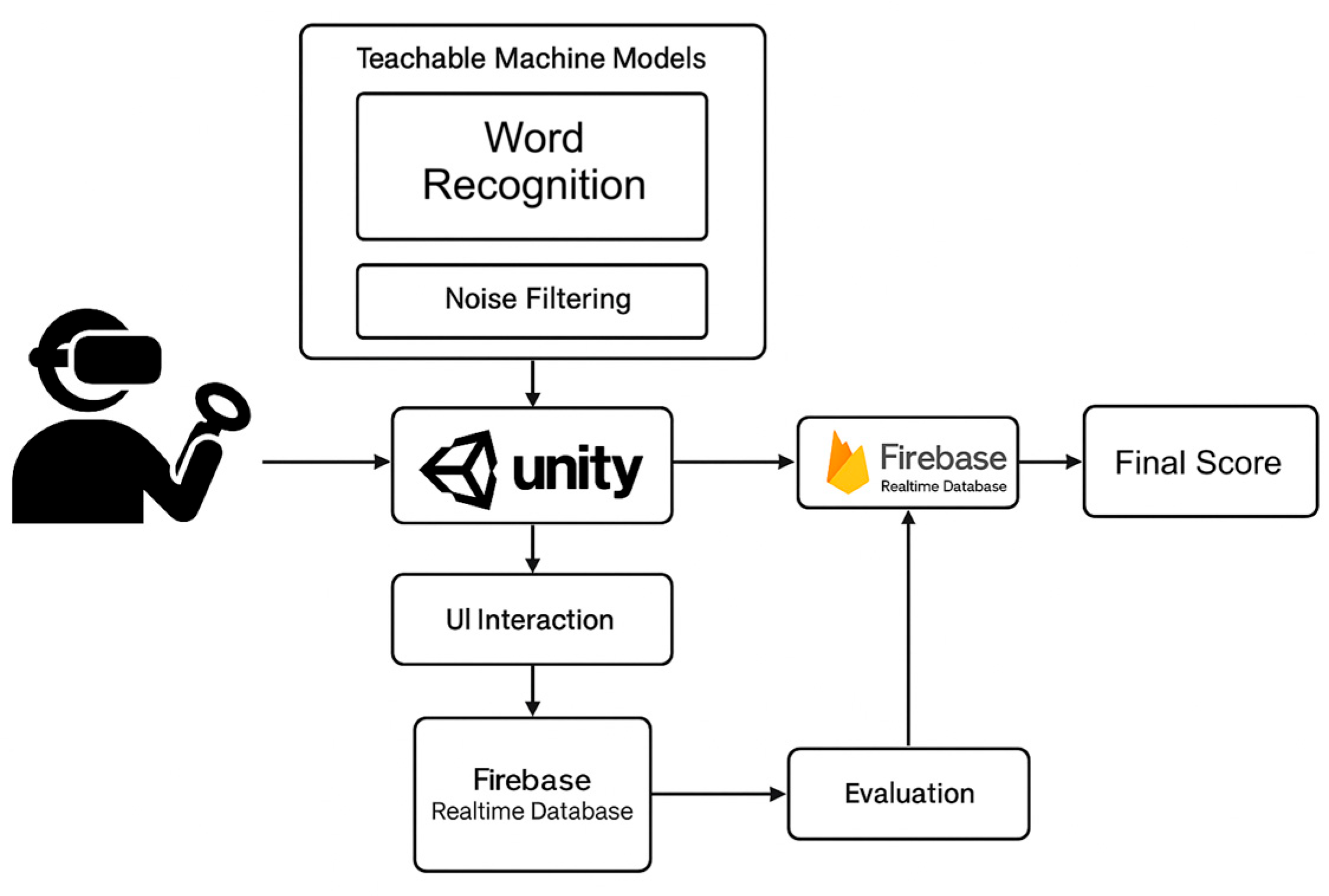

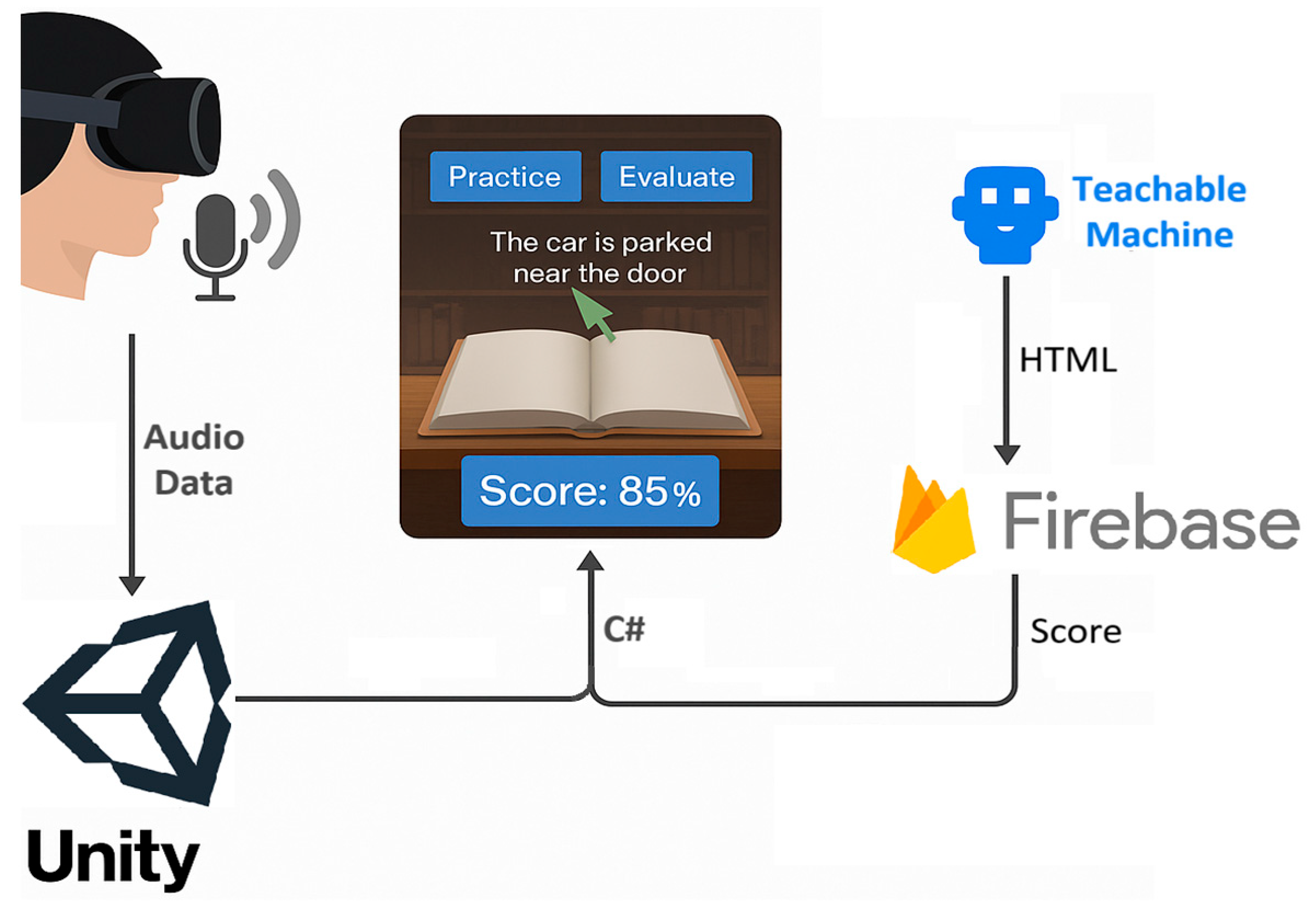

The application was developed using Unity 2022.3.7f1 within the Universal Render Pipeline (URP) and designed to operate in a virtual reality environment with HTC Vive Pro 2. Custom voice classification models created with Teachable Machine (divided into two separate models, each containing three target words and background noise) were trained with high-quality, curated voice samples. During the evaluation phase, user-generated data is transmitted and stored in the Firebase Realtime Database, from which it is retrieved by Unity to calculate the phonetic accuracy score. The Firebase SDK was utilized for seamless integration with Unity, and JSON structures were employed for efficient data handling and exchange (Figure 1).

The application’s functional architecture consists of three main interconnected modules: the immersive interface developed in Unity, a machine learning-based speech recognition model hosted in the cloud through Teachable Machine, and a real-time database (Firebase Realtime Database) that acts as an intermediary between the two. The system operates under an asynchronous, event-based approach, optimizing synchronization between voice capture, processing, and real-time display of results.

During evaluation mode, the data flow begins when the user pronounces a specific word through the interface. This acoustic signal is captured by the built-in microphone of the HTC Vive Pro 2 headset and transmitted to a locally hosted HTML application, which implements the model exported from Teachable Machine using the TensorFlow.js library. This pre-trained model has been split into two submodels to optimize loading and processing: each contains three classes corresponding to keywords, plus an additional class for detecting background noise.

The input signal is processed by the neural network model directly in the browser, using real-time spectrograms generated through the Web Audio API. The resulting classification is expressed as a JSON object containing the detection probabilities by class. If the class with the highest score exceeds the established confidence threshold, it is considered a valid detection. This validation significantly reduces false positives and improves reliability in noisy environments. Once validated, the result is encoded in JSON format and sent via HTTP REST methods (PUT/POST) to the Firebase Realtime Database, where it is stored in a hierarchical structure with keys corresponding to the detected word, its timestamp, and the confidence value. The database acts as a messaging channel between the HTML module and the Unity application.
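The threshold check and payload construction described above can be sketched as follows. This is a minimal illustration in Python rather than the TensorFlow.js code used in the application; the function names, the `background_noise` label, and the exact record layout are assumptions made for the example.

```python
import json
import time
from typing import Optional

# Assumed label for the noise class; the paper only states that each
# submodel includes one background-noise class.
BACKGROUND_LABEL = "background_noise"


def validate_detection(probabilities: dict[str, float],
                       threshold: float) -> Optional[dict]:
    """Return a detection record if the top class passes the threshold, else None."""
    if not probabilities:
        return None
    # Pick the class with the highest probability.
    word, confidence = max(probabilities.items(), key=lambda item: item[1])
    # Reject background noise and low-confidence predictions.
    if word == BACKGROUND_LABEL or confidence < threshold:
        return None
    return {
        "word": word,
        "confidence": confidence,
        "timestamp": int(time.time() * 1000),  # milliseconds since epoch
    }


def to_json_payload(record: dict) -> str:
    """Serialize the record as the JSON body of a PUT/POST request."""
    return json.dumps(record, sort_keys=True)
```

With a threshold of 0.95, a prediction of 0.97 for a keyword would be accepted, while the same prediction would be discarded under a 0.9999 threshold.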

In parallel, the main Unity module runs a C# listener that maintains a persistent connection to Firebase using the Firebase SDK for Unity platforms. This listener monitors changes in the database nodes by subscribing to ValueChanged events. When a newly registered word is detected, the system checks that the detection corresponds to the word expected by the interface (according to the evaluation order), and if the match is verified, it is counted as a correct guess.

Once the evaluation is complete, Unity calculates the percentage of correct guesses out of the total valid guesses. This information is visually represented using UI elements with TextMeshPro, showing the final score and adaptive textual feedback based on performance.
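The score calculation can be illustrated with a short sketch. It is written in Python for brevity (the application itself uses C#), and it assumes that detections arrive in the same order as the prompts and that the denominator is the number of valid detections received; both points are interpretations of the description above, not confirmed implementation details.

```python
def score_session(expected_words: list[str], detections: list[str]) -> float:
    """Percentage of valid detections that match the prompted word, in order."""
    if not detections:
        return 0.0
    # Pair each prompted word with the detection received for that prompt.
    # Detections beyond the number of prompts are unmatched and count as misses.
    correct = sum(
        1
        for expected, detected in zip(expected_words, detections)
        if expected.lower() == detected.lower()
    )
    return 100.0 * correct / len(detections)
```

For example, if three words are prompted and two of the three detections match, the sketch returns approximately 66.7.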

3.2. Target Population and Experience Design

The system was designed for students currently engaged in learning English as a second language, particularly those who need to reinforce their pronunciation and listening comprehension. The application simulates a virtual library environment where users interact with a digital book displaying generic English-language content. From there, users can access two modes: Practice and Evaluation. In Practice mode, the user listens to the correct pronunciation of six selected words by interacting with floating interface buttons. In Evaluation mode, a green arrow sequentially indicates which word the user should pronounce, and the system assesses the spoken input based on data recorded in Firebase.

3.3. Implementation and Validation Procedure

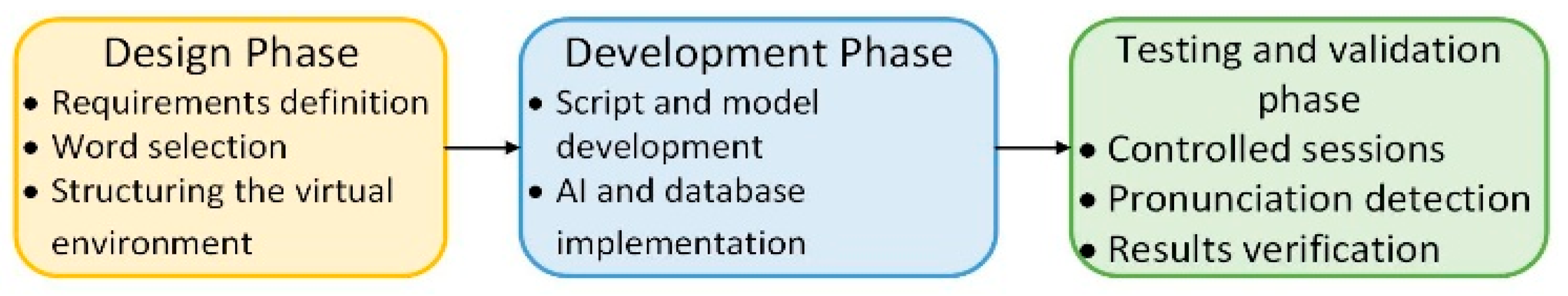

The development process was organized into three main phases: design, development, and testing/validation. Each phase included specific tasks executed sequentially and in coordination, as illustrated in Figure 2.

3.3.1. Design Phase

In this phase, the technical specifications and functional requirements of the system were defined, including the characteristics of the VR environment, user interaction mechanisms, and pedagogical objectives. The keywords used in both the Practice and Evaluation modes were selected based on their phonetic complexity and frequency of occurrence in basic English-language contexts. The initial layout of the Unity environment was designed, including the positioning of interactive elements, navigation flow logic, and user interface structure.

3.3.2. Development Phase

This phase involved implementing the application’s full functionality using C# scripts. Modules were developed to manage scene transitions, interactive buttons, the virtual book interface, and the score display system. Simultaneously, two separate models were trained in Teachable Machine, each consisting of three target words and background noise samples, using a balanced voice sample dataset. These models were integrated into Unity via the Teachable Machine HTTP client and connected to Firebase Realtime Database for real-time data storage and analysis.

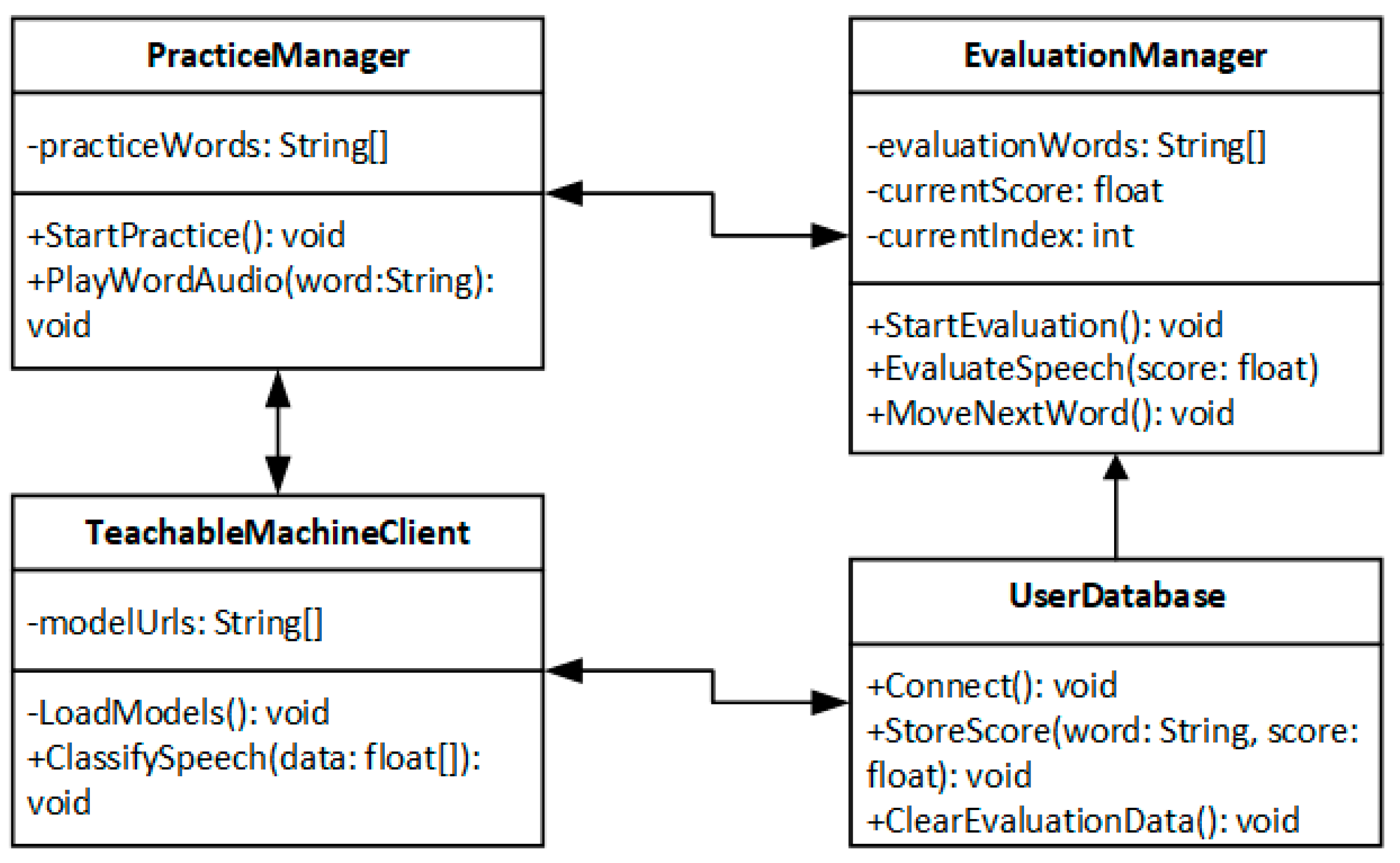

At the structural level, specific classes were designed and coded to ensure clear separation of responsibilities: one module for practice management, another for evaluation, a client for communication with Teachable Machine, and a database handler. This object-oriented architecture is illustrated in Figure 3.

3.3.3. Testing and Validation Phase

In the final phase, both functional and user acceptance testing were conducted under controlled experimental conditions. These tests aimed to verify the correct classification of the pronounced words by the models, proper data management in Firebase (including automatic clearing before each evaluation), and the display of results only after the completion of the evaluation process. User experience was also assessed, focusing on response accuracy, clarity of instructions, synchronization with audio input/output devices, and the fluidity of user interaction within the virtual environment.

The system’s functional architecture, including communication between the VR application, AI module, audio peripherals, and visualization subsystem, is summarized in Figure 4. This diagram clearly illustrates the communication framework and the integration of diverse components to deliver an immersive and adaptive learning experience.

The results obtained will be analyzed in the following section to evaluate the impact of the application on automated pronunciation assessment and its potential application in real educational settings.

4. Results

For the results stage, the focus was on analyzing the application’s performance based on both technical metrics and user feedback. AI was employed to detect and correct pronunciation errors and to analyze the system’s performance with respect to the phonetic configurations and characteristics of the target words. Audio evaluation and phonetic error detection were performed because the application was designed to identify incorrectly pronounced words. Each user’s pronunciation must be considered, taking into account vowel and consonant sounds characteristic of Spanish that are non-native to English. The learning time was also analyzed based on the presented models to improve system performance.

The system validation was approached comprehensively, considering both the accuracy of the voice recognition models and the performance of the VR application’s functional architecture. Word-level accuracy, false positive incidence, dataset size impact, phonetic characteristics of the words, and technical flow between modules were all evaluated. Each result is analyzed in terms of its probable cause to gain a complete understanding of the system’s behavior.

4.1. Usage Environment and Immersive Interaction

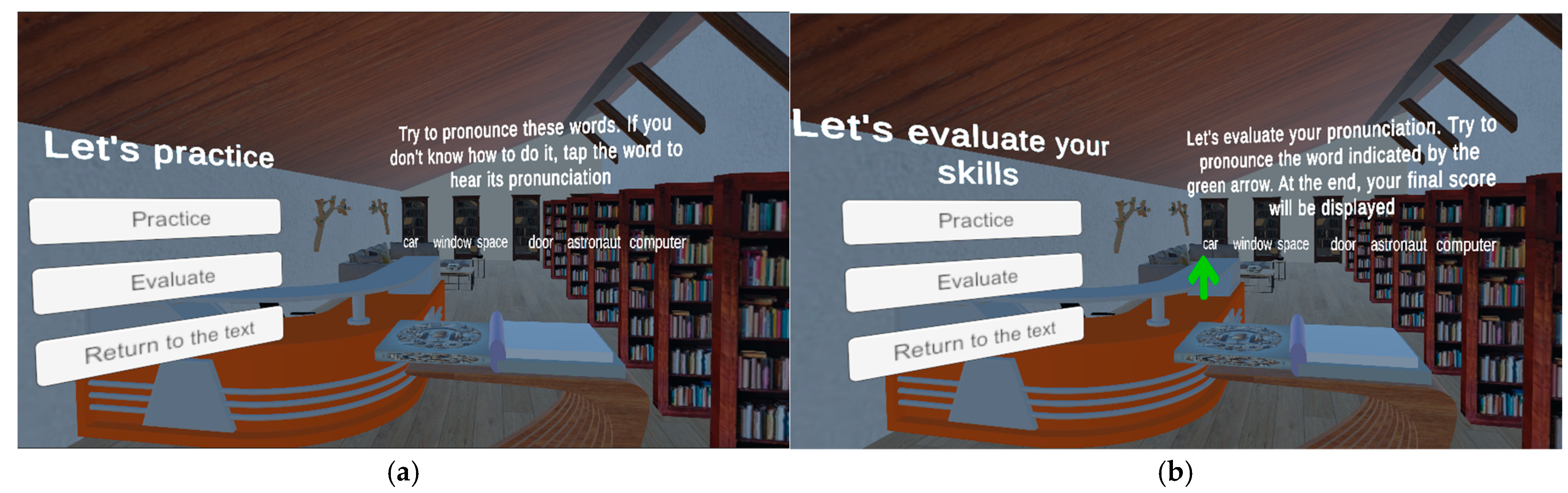

The application was designed as a VR-based English practice and assessment environment resembling a library setting, offering users a calm and academic context. This setting aims to reduce anxiety and foster focus, both key factors in second language acquisition. Using HTC Vive Pro 2 controllers, users interact with a virtual book that opens upon trigger pull activation, as illustrated in Figure 5.

Once the text is displayed, users can choose between two modes: Practice and Evaluation. In Practice mode, they can click on each of the six selected words to hear their correct pronunciation, reinforcing audio–visual association. In Evaluation mode, a green arrow sequentially indicates which word should be pronounced, simulating a short assessment quiz. Upon completion, the system displays a percentage score and a personalized message, providing immediate feedback.

This workflow is designed to be indefinitely repeatable, allowing users to return to the initial text or redo practice sessions. This cyclical structure of exploration and feedback is fundamental to gamifying the learning process, ensuring users do not feel discouraged by mistakes and can improve intuitively.

4.2. Technical Structure: Data Flow and Synchronization

The application’s architecture includes three interconnected subsystems:

Unity (VR and interaction logic);

Firebase Realtime Database (manages event synchronization and storage);

HTML interface (executes Teachable Machine models in real time).

When a user pronounces a word, the HTML-based interface uses TensorFlow.js to process the microphone input and classify the spoken word into the most probable category. If the prediction exceeds the confidence threshold, an update is sent to Firebase. Unity, which continuously monitors the database for updates, compares the prediction with the currently prompted word (as indicated by the directional arrow) and records a correct attempt if there is a match, as illustrated in Figure 6.

This modular design offers advantages, such as separating voice processing from visualization. However, it also requires precise synchronization mechanisms to avoid errors like premature evaluations or out-of-order detections. To prevent data from previous sessions affecting the results, the system performs automatic data clearing in Firebase before each evaluation, thereby ensuring that all data inputs are fresh, isolated, and valid.
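One way to reason about the clearing and ordering safeguards is the following in-memory sketch in Python. The `EvaluationSession` class is hypothetical and stands in for the Firebase node; the paper confirms only the clearing step, so the timestamp guard against stale or out-of-order records is an assumption added for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EvaluationSession:
    """In-memory stand-in for the database node used during one evaluation."""
    started_at_ms: int = 0
    detections: list = field(default_factory=list)

    def start(self, now_ms: int) -> None:
        # Clear leftovers from earlier sessions before accepting new data.
        self.detections.clear()
        self.started_at_ms = now_ms

    def accept(self, word: str, timestamp_ms: int) -> bool:
        # Drop records stamped before this session began or before the most
        # recent accepted record (out-of-order arrival). Assumed safeguard.
        last_ts = self.detections[-1][1] if self.detections else self.started_at_ms
        if timestamp_ms < last_ts:
            return False
        self.detections.append((word, timestamp_ms))
        return True
```

Calling `start` at the beginning of every evaluation mirrors the automatic clearing described above, so a score is always computed from records produced in the current session.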

4.3. Model Training: Accuracy and Loss Behavior

Two models were trained, each with three words and one background noise class:

Model 1: 192 samples per class, confidence threshold ≥ 95%;

Model 2: 400 samples per class (maximum recommended), confidence threshold ≥ 99.99%.

The accuracy curves shown in Figure 7a reveal consistent improvement over the first 50 epochs, after which performance gains plateaued. In parallel, the loss curves displayed in Figure 7b show a smooth and gradual decline, suggesting that the models achieved appropriate generalization without overfitting.

In Teachable Machine, the model progressively learns to separate classes within the latent feature space. With a small number of samples, the model adjusts quickly but tends to overfit the training data. Increasing the sample size—as in Model 2—requires more epochs to converge but results in a more robust and generalizable decision boundary, capable of handling greater phonetic variability.

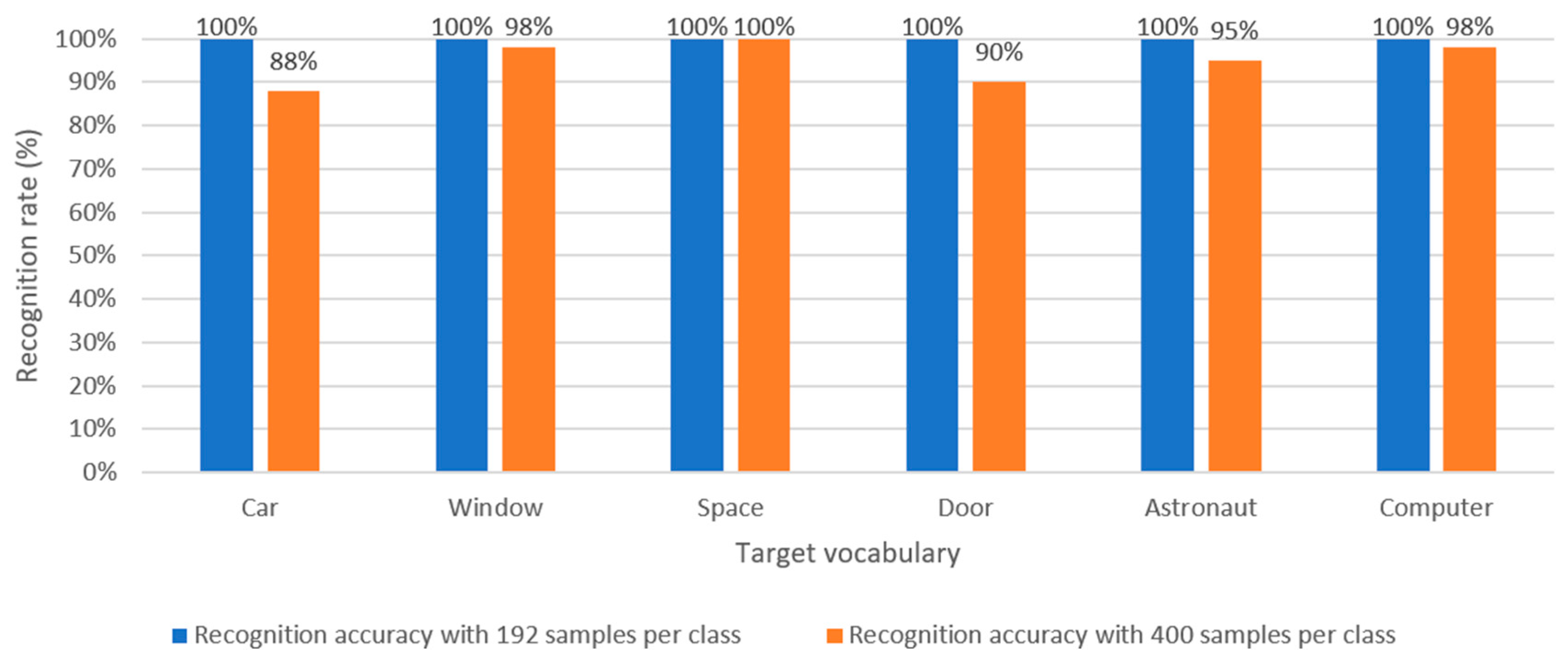

4.4. Word-Level Accuracy and Phonetic Sensitivity

Figure 8 shows word-level classification accuracy across both attempts. With 192 samples, accuracy was often ≥95%, but this was accompanied by a high incidence of false positives. In the second attempt, accuracy slightly decreased for words like Car (88%) and Door (90%).

Short words such as “Car” and “Door” have brief phonetic duration and lower melodic complexity, making them harder to detect for machine learning models. Longer words such as “Computer” and “Window” contain richer acoustic information and more complex temporal patterns, which support more reliable classification. Additionally, shorter lexical items are more susceptible to overlapping with background noise, increasing the risk of misclassification unless confidence thresholds are strictly managed.

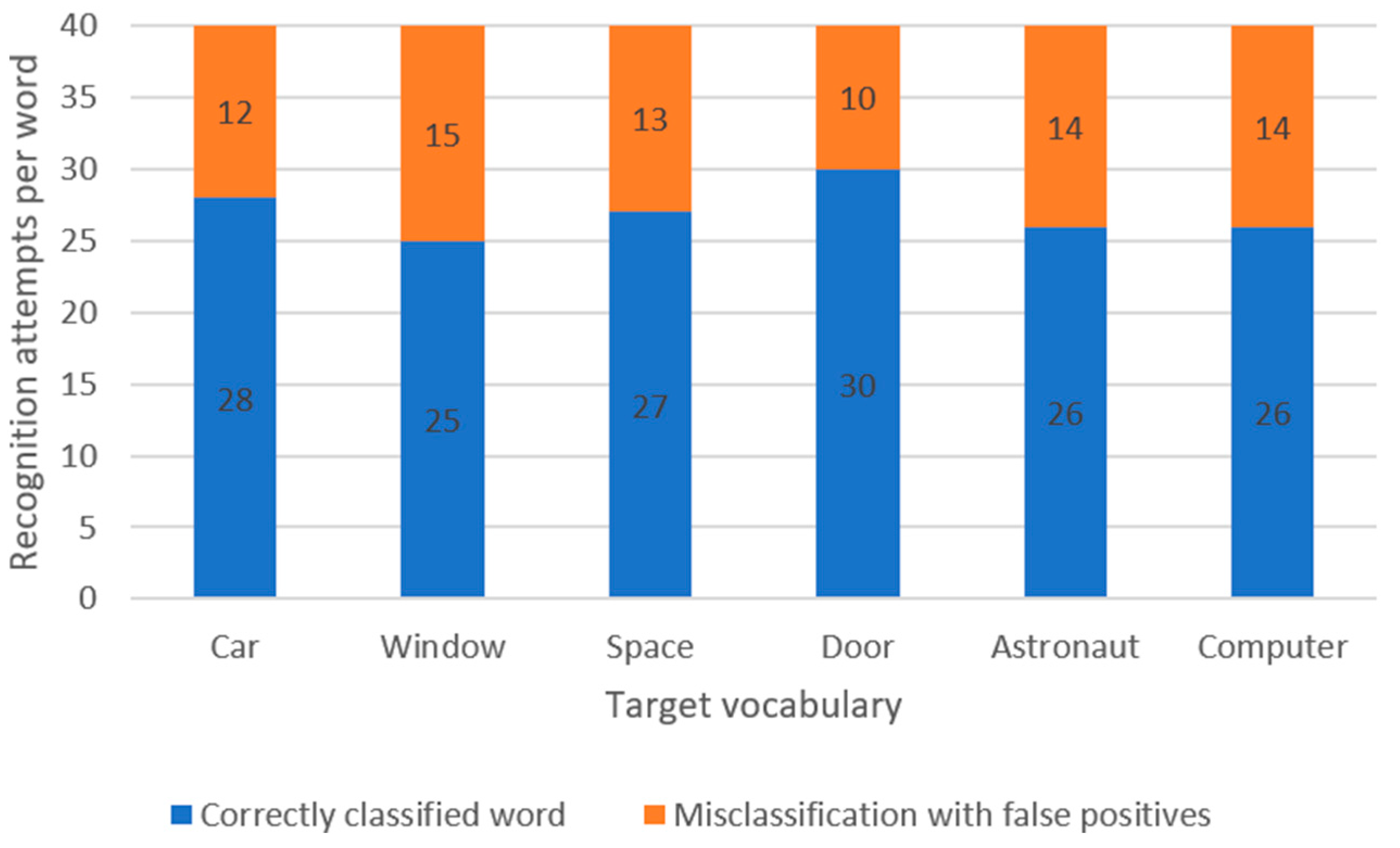

4.5. False Positives: Impact and Correction

In Model 1 (Figure 9), between 10 and 15 false positives per word were recorded. This was due to the low activation threshold (≥95%), which allowed predictions even when users were silent or said unrelated words.

In Model 2 (Figure 10), the higher threshold (≥99.99%) and enhanced training (400 samples) eliminated false positives entirely.

Lower thresholds make the model more sensitive but less stable—it reacts to vague acoustic similarities. Raising the threshold forces the model to predict only when high confidence is achieved, which reduces false activations but may increase the rate of false negatives. Empirical testing demonstrated that increasing the threshold was beneficial: while it led to a slight reduction in raw accuracy, it significantly enhanced the overall reliability and stability of the system.

To quantitatively characterize the performance of the phonetic recognition models implemented in the application, the classic binary classification metrics were calculated: precision, sensitivity or recall, and F1-score. These metrics were calculated individually for each keyword evaluated, both in the model trained with 192 samples per class and in the model with 400 samples per class, allowing for a precise comparative evaluation of the classifiers’ performance.
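For reference, the three metrics follow directly from per-word counts of true positives, false positives, and false negatives. The sketch below uses the standard definitions; the function name is illustrative, and the counts passed to it would come from the test logs, which are not reproduced here.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Compute precision, recall, and F1 from per-word detection counts."""
    # Precision: share of detections of the word that were actually correct.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall (sensitivity): share of true utterances that were detected.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1
```

Under these definitions, eliminating false positives drives precision to 1.0 regardless of recall, which is why a stricter threshold can raise precision while lowering the detection rate.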

Table 1 shows that the model based on 192 samples per class exhibits variable and limited sensitivity in all cases, with F1-score values ranging from 0.6 to 0.75, reflecting a high proportion of false positives and inconsistent detections in noisy environments. The words “Car” and “Door” show the greatest accuracy degradation, owing to their short phonetic duration or high acoustic similarity with ambient sounds.

Based on this diagnosis, the training process was optimized by increasing the number of samples to 400 per class, and the detection threshold was tightened to 99.99% confidence. The results of the new model are presented in Table 2.

In this second scenario, a substantial improvement is observed in all the metrics evaluated. Precision increases significantly, as false positives are eliminated in all classes, reaching the maximum value (1.0) in several cases. Furthermore, the average F1-score exceeds 0.97, reflecting a strong balance between precision and sensitivity and confirming the model’s stability under more demanding conditions.

This analysis supports the hypothesis that the performance of models based on lightweight machine learning (such as Teachable Machine with TensorFlow.js) can scale favorably by improving the quality and volume of the training dataset, even within the technical constraints of the platform. The calculated metrics provide key quantitative support for validating the system as a reliable tool for automatic phonetic feedback in immersive learning environments.

4.6. Overall Performance and User Perception

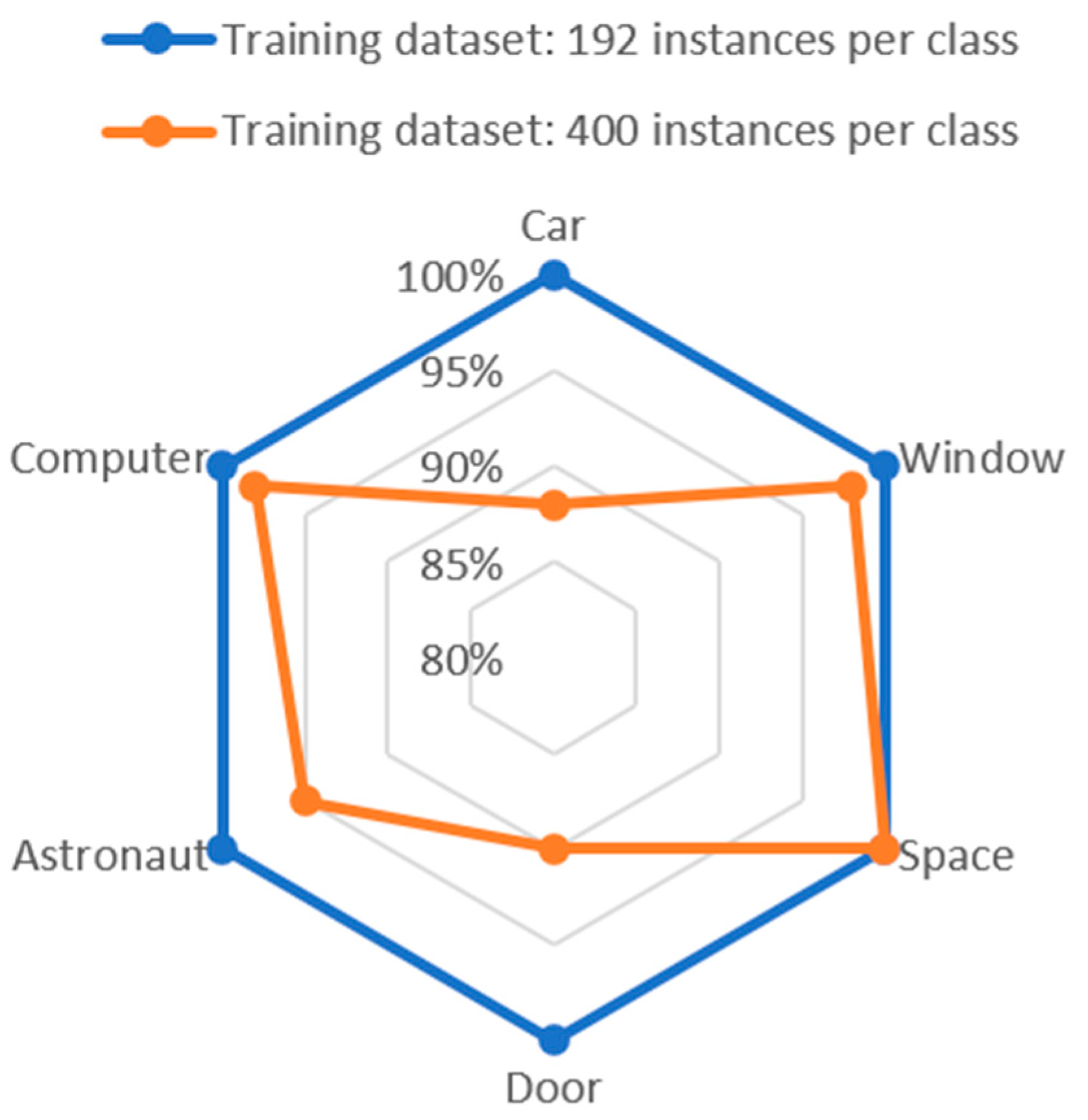

The analysis revealed the average classification accuracy per test attempt. The 192-sample model achieved a mean accuracy of 100%, but this was accompanied by a high number of false positives. In contrast, the 400-sample model achieved a lower mean accuracy of 94.83%, yet delivered a cleaner execution with no critical errors.

Figure 11 presents a radar-style visualization of word-level performance. Words such as “Computer” and “Window” maintained stable recognition rates across both test conditions, while “Door” and “Car” showed greater variability in detection accuracy.

Words with greater length and syllable counts produce richer feature vectors, enabling the model to generate more stable decisions. Shorter words lack sufficient phonetic material and are more susceptible to small variations in tone, speech rate, or microphone sensitivity—explaining their variability.

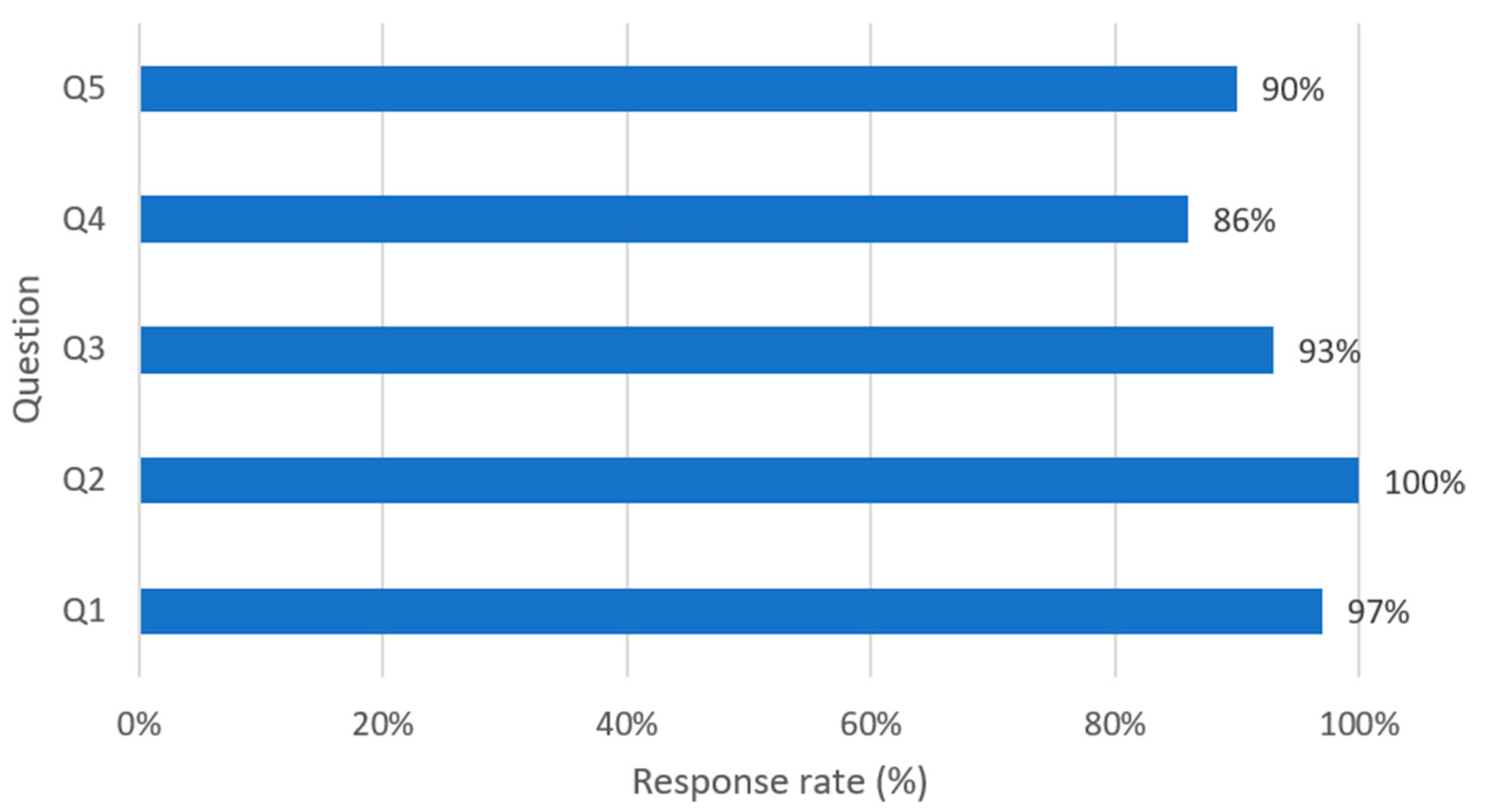

To evaluate user perception regarding the system’s functionality, pedagogical effectiveness, and immersive experience, a survey was conducted with 30 student participants. The questionnaire included five key questions addressing usability, motivation, the effectiveness of the immersive environment, and the impact of AI-assisted feedback on learning outcomes.

Table 3 presents the questions, while Figure 12 shows the percentage-based responses, reflecting high levels of user acceptance and overall positive evaluations.

5. Discussion

The results obtained demonstrate that the proposed application successfully integrates an immersive virtual reality environment with an automated pronunciation evaluation system based on machine learning. Throughout the design, training, and testing process, both functional advantages and technical challenges were identified as factors that should be considered for future iterations or similar applications.

First, the system proved functional in terms of facilitating natural user interaction. The choice of a library-like setting, combined with an intuitive interface using an interactive book, floating buttons, and immediate feedback, generated a user experience aligned with the principles of immersive and autonomous learning. This approach is consistent with Rahman and Islam [8], who affirm that well-designed virtual environments enhance motivation and content retention in language learning contexts.

From a technical standpoint, the distributed architecture across Unity, Firebase, and Teachable Machine demonstrated effectiveness, though it was demanding regarding data synchronization and network stability. Unlike centralized approaches such as that of Farid et al. [13], where the AI is embedded directly into a mobile app, the separation between recognition (HTML + TM), storage (Firebase), and visualization (Unity) enabled modularity but introduced a critical dependency on the response time between services. Nevertheless, data cleanup before each evaluation mitigated inconsistencies, ensuring valid results per session.

Regarding model performance, the use of Teachable Machine facilitated an accessible and effective training process. However, findings indicate that the platform’s default configuration is sensitive to the phonetic length of words. Short words like “Car” or “Door”, due to their limited acoustic content, were more difficult to detect consistently. This phenomenon was also reported by Al-Shallakh [20], who highlighted that short phonetic units are more prone to confusion with background noise unless reinforced through more specific audio preprocessing.

The comparison between the two trained models (192 vs. 400 samples per class) shows that increasing the number of samples and tightening the confidence threshold improve reliability but slightly reduce the detection rate. This trade-off is consistent with Wang [16], who notes that more robust models tend to prioritize specificity over sensitivity. In this case, eliminating false positives at the cost of a slight drop in raw accuracy was a justifiable decision, as the application’s goal is to assess users fairly without overestimating their performance.

One of the critical points identified in this development is the structural limitation of Teachable Machine, which prevents training with more than 400 samples per class without compromising the project’s stability and functionality. This restriction limits the system’s scalability in more complex educational contexts, such as the incorporation of regional accents, dialectal variations, or customized adaptations for different user profiles. Additionally, the platform’s model portability is limited, restricting its execution to web environments dependent on TensorFlow.js and hindering integration with other platforms.

Therefore, migration to more robust architectures based on TensorFlow 2.13, PyTorch 2.1, or the interoperable ONNX format is recommended. These alternatives not only offer greater control over model engineering and training, but also allow for the implementation of a more sophisticated audio preprocessing pipeline, including techniques such as Mel-Frequency Cepstral Coefficients (MFCC). This transformation converts the acoustic signal into a set of coefficients that represent the spectral envelope of the voice, more accurately capturing the phonetic characteristics of speech even in the presence of noise. Its incorporation would improve the system’s robustness to short words, such as “car” or “door,” whose short phonetic duration increases their susceptibility to classification errors. Furthermore, more advanced environments would allow the integration of spectral smoothing filters, energy normalization, or data augmentation with controlled background noise, optimizing performance in real-world scenarios. Finally, this migration would also facilitate execution on offline or mobile devices, without relying exclusively on browsers or permanent connectivity.
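To make the MFCC idea more concrete, the sketch below shows only the mel-scale step that underlies the filterbank, using one widely used form of the mel formula. It is not a full MFCC pipeline (framing, FFT, log energies, and the discrete cosine transform are omitted), and the function names and parameter choices are illustrative assumptions.

```python
import math


def hz_to_mel(freq_hz: float) -> float:
    """Convert a frequency in Hz to the mel scale (common 2595*log10 variant)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(n_filters: int, f_min: float, f_max: float) -> list[float]:
    """Center frequencies (Hz) of n_filters triangular filters evenly spaced in mel."""
    mel_min, mel_max = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (mel_max - mel_min) / (n_filters + 1)
    return [mel_to_hz(mel_min + step * (i + 1)) for i in range(n_filters)]
```

Because the filters are evenly spaced in mel rather than in Hz, they are denser at low frequencies, where much of the phonetic information in speech is concentrated.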

Pedagogically, the system functions as an effective tool for active English practice, combining auditory recognition, oral repetition, and visual feedback in a setting that simulates real reading. Unlike conventional mobile applications, the user is not limited to flat screens or touch interactions but engages with spoken evaluation in an immersive context. This strategy aligns with the work of Logothetis et al. [12], who advocate for combining AR/VR with personalized feedback systems to enhance learner autonomy significantly.

The use of immersive technologies in education is still developing, and users need proper familiarity with these technologies to fully leverage their benefits. The learning process also depends on motivation and is influenced by the novelty effect, which increases cognitive load. Therefore, the application must be intuitive, efficient, and user-friendly in order to reduce the initial cognitive load for students. Furthermore, the use of realistic scenarios increases motivation to communicate and helps maintain student attention.

The survey results show strong acceptance of immersive environments combined with AI: together, these technologies provide more engaging and motivating experiences and foster more positive attitudes toward language learning than traditional methods. Furthermore, the immediate feedback offered by the proposed application supports the continuous improvement of oral skills and significantly enhances learning outcomes.

Finally, it is important to acknowledge that the system evaluation was conducted under controlled conditions. Although the results were positive, deployment in real-world settings (classrooms, language labs, or even home environments) would require further testing, particularly regarding tolerance to ambient noise, accent variability, and hardware robustness.

6. Conclusions

This research presented the design and implementation of an educational virtual reality application aimed at facilitating the practice and assessment of English pronunciation as a second language. The solution combines accessible technologies and services such as Unity, Firebase, and Teachable Machine, resulting in a modular, distributed architecture capable of delivering an immersive, interactive, and real-time evaluation experience. Through a library-like setting and an interface centered around the metaphor of an interactive book, the system fosters autonomous exploration and continuous self-assessment, in line with key principles of meaningful learning and educational gamification. The results of using the application show that combining VR and AI contributes significantly to the learning process by creating motivating, student-friendly environments. The system is capable of evaluating, detecting, and correcting phonetic errors with high precision and, by providing feedback, helps English learners improve their pronunciation through practice and evaluation.

Results from both functional and quantitative tests demonstrate that the application effectively fulfills its pedagogical and technical objectives. The accuracy of the voice recognition models improved significantly by increasing the number of samples per class and applying stricter confidence thresholds, ultimately eliminating false positives in the second test iteration. This finding highlights the importance of proper dataset curation and classifier parameter tuning to ensure evaluation reliability. Additionally, it was observed that words with lower phonetic complexity, such as “Car” or “Door”, posed greater detection challenges, underscoring the need to account for phonetic variability when designing automated speech recognition tasks.

From an architectural perspective, the integration of the system’s components proved effective but depended on specific conditions. The reliance on a stable internet connection, the need for precise synchronization between the HTML environment executing the Teachable Machine models and the Firebase real-time database, and the structural limitations of the training platform (which crashes when exceeding 400 samples per class) represent constraints that should be addressed in future developments. Nonetheless, the system demonstrated stability, modularity, and extensibility within the defined operational framework.

From an educational perspective, the development of this application is based on modern instructional approaches that promote active, contextual, and self-regulated learning experiences, integrating technological components aligned with established theoretical frameworks. The interactive structure of the immersive environment, set up as a library, responds to principles of situated cognition by providing a symbolic and familiar setting that facilitates the anchoring of new knowledge in functional and meaningful contexts, optimizing phonological retention and semantic recognition [11, 18].

The free-flowing navigation, the ability to repeat exercises, and the immediate visualization of assessment performance encourage self-regulated learning, framed within metacognitive strategies supported by Information and Communication Technologies (ICTs), which have been shown to improve student autonomy, motivation, and performance [5, 6]. Through this structure, the user assumes an active role in constructing their learning, strengthening transfer processes to real-world contexts of language use. Furthermore, the implementation of an asynchronous assessment system with real-time visual feedback responds to technology-mediated meaningful learning models, where students accurately identify their strengths and weaknesses without relying exclusively on a human instructor [13, 16]. This process is operationalized through an automatic detection and analysis architecture that uses lightweight, browser-based, self-executing speech classifiers connected to a dynamic database for results traceability.

Overall, the proposal not only delivers instructional content but also structures a training experience that functionally integrates elements of immersion, adaptability, and automatic analysis, contributing to the progressive development of phonological competence in English as a second language, from a pedagogical perspective supported by meaningful interaction between the user and the virtual environment.