4.1. Dataset Preprocessing

The dataset was constructed using spoken utterance data in five languages: Korean, Japanese, Chinese, Spanish, and French. The number of samples per language varies significantly, with 12,854 samples for Korean [25], 9013 for Japanese [26], 3914 for Chinese [27], 14,713 for Spanish [28], and 12,061 for French [29]. All datasets were sourced from publicly available speech corpora on Kaggle, consisting of speaker utterances in response to specific prompts, provided in WAV format.

Notably, the Chinese subset is considerably smaller than the others, raising concerns about data imbalance. To address this issue and improve model generalization, we applied several data augmentation techniques. Specifically, speed perturbation (varying playback speed), volume perturbation (modifying amplitude), and reverberation (simulating real-world acoustic environments) [30] were employed to diversify the acoustic characteristics of the training data. As a result, an additional 7000 augmented training samples were generated, increasing the total number of Chinese speech samples to 10,914 in the final dataset.
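The three augmentations named above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' pipeline: the function names, the speed factor, the gain, and the synthetic impulse response are all assumptions chosen for the example, and the test tone stands in for a real utterance.

```python
import numpy as np

def speed_perturb(wave, factor):
    """Resample by linear interpolation so playback is `factor` times faster."""
    n_out = int(round(len(wave) / factor))
    src_positions = np.linspace(0, len(wave) - 1, n_out)
    return np.interp(src_positions, np.arange(len(wave)), wave)

def volume_perturb(wave, gain_db):
    """Scale the amplitude by a gain given in decibels."""
    return wave * 10.0 ** (gain_db / 20.0)

def add_reverb(wave, sample_rate, rng, decay_s=0.3):
    """Convolve with a synthetic, exponentially decaying noise impulse response."""
    n_ir = int(decay_s * sample_rate)
    t = np.arange(n_ir) / sample_rate
    # Envelope falls by about 60 dB in amplitude over decay_s (exp(-6.9) ~ 0.001).
    ir = rng.standard_normal(n_ir) * np.exp(-6.9 * t / decay_s)
    ir[0] = 1.0  # direct (unreflected) path
    wet = np.convolve(wave, ir)[: len(wave)]
    peak = np.max(np.abs(wet))
    # Rescale so the reverberant signal has the same peak as the input.
    return wet / peak * np.max(np.abs(wave)) if peak > 0 else wet

# Hypothetical usage on a one-second test tone (stand-in for a real utterance).
rng = np.random.default_rng(0)
sample_rate = 16000
wave = np.sin(2 * np.pi * 220 * np.arange(sample_rate) / sample_rate)
augmented = [
    speed_perturb(wave, 1.1),       # assumed speed factor
    volume_perturb(wave, 6.0),      # assumed gain in dB
    add_reverb(wave, sample_rate, rng),
]
```

In practice a measured room impulse response would normally replace the synthetic one used here.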

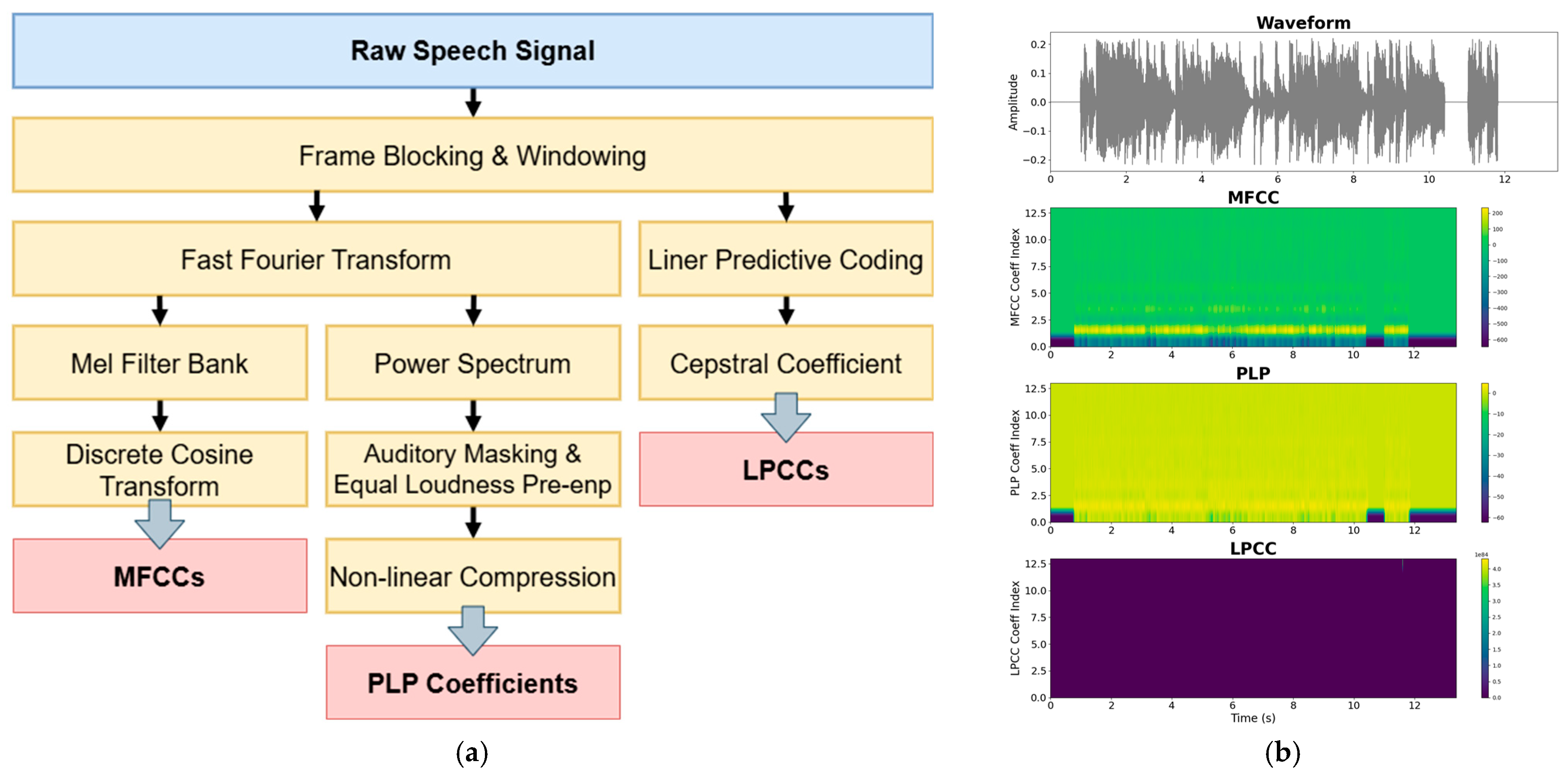

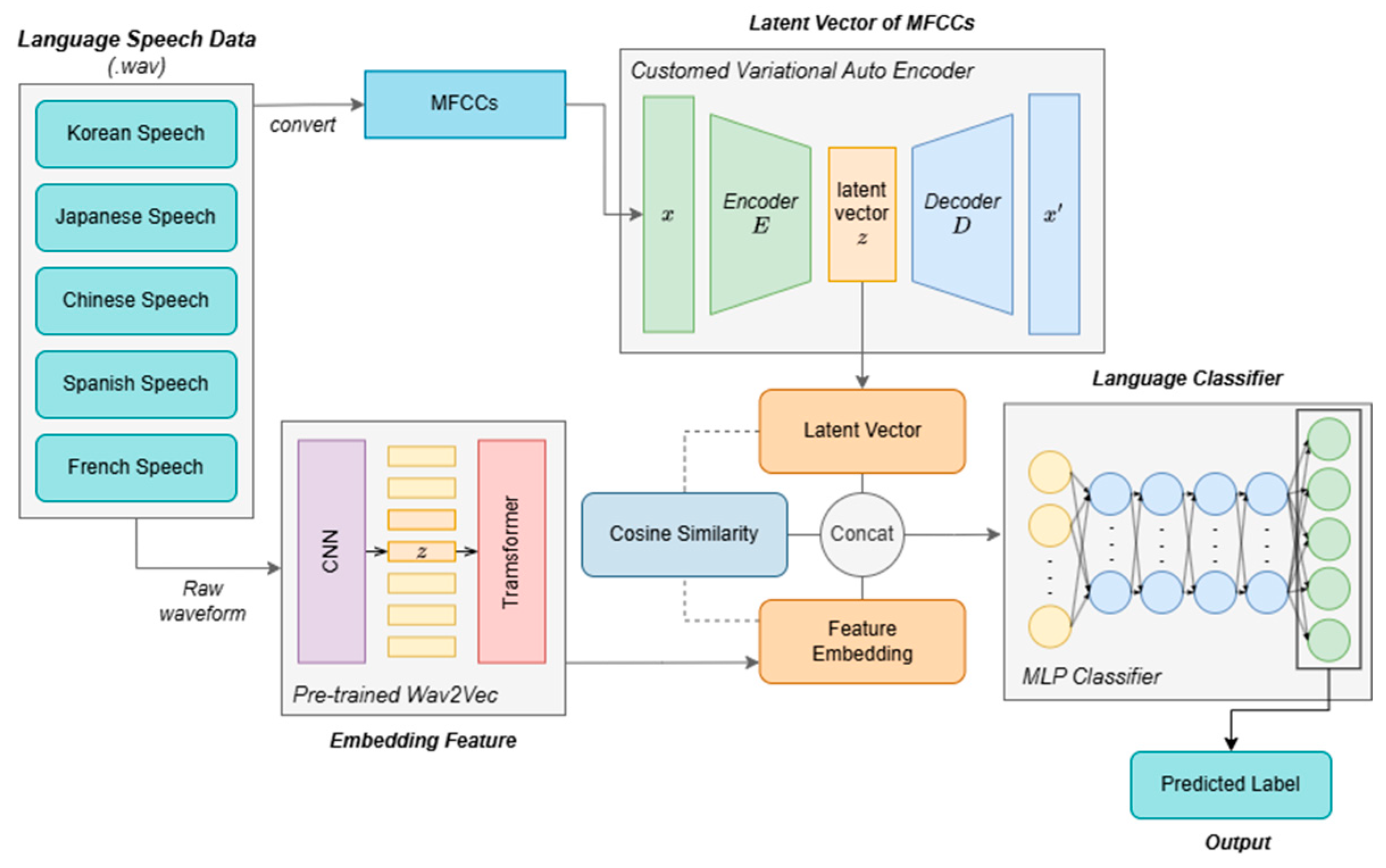

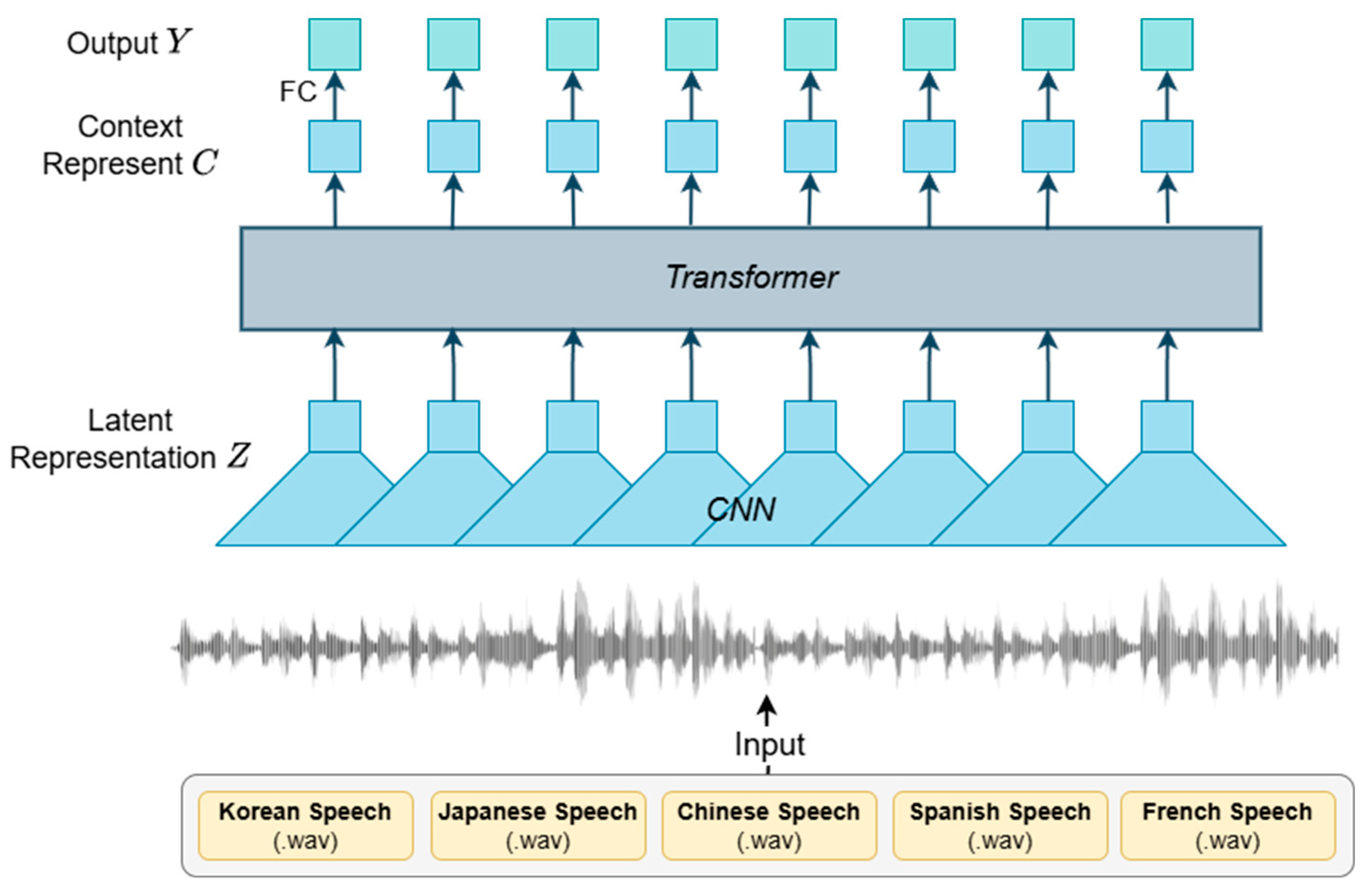

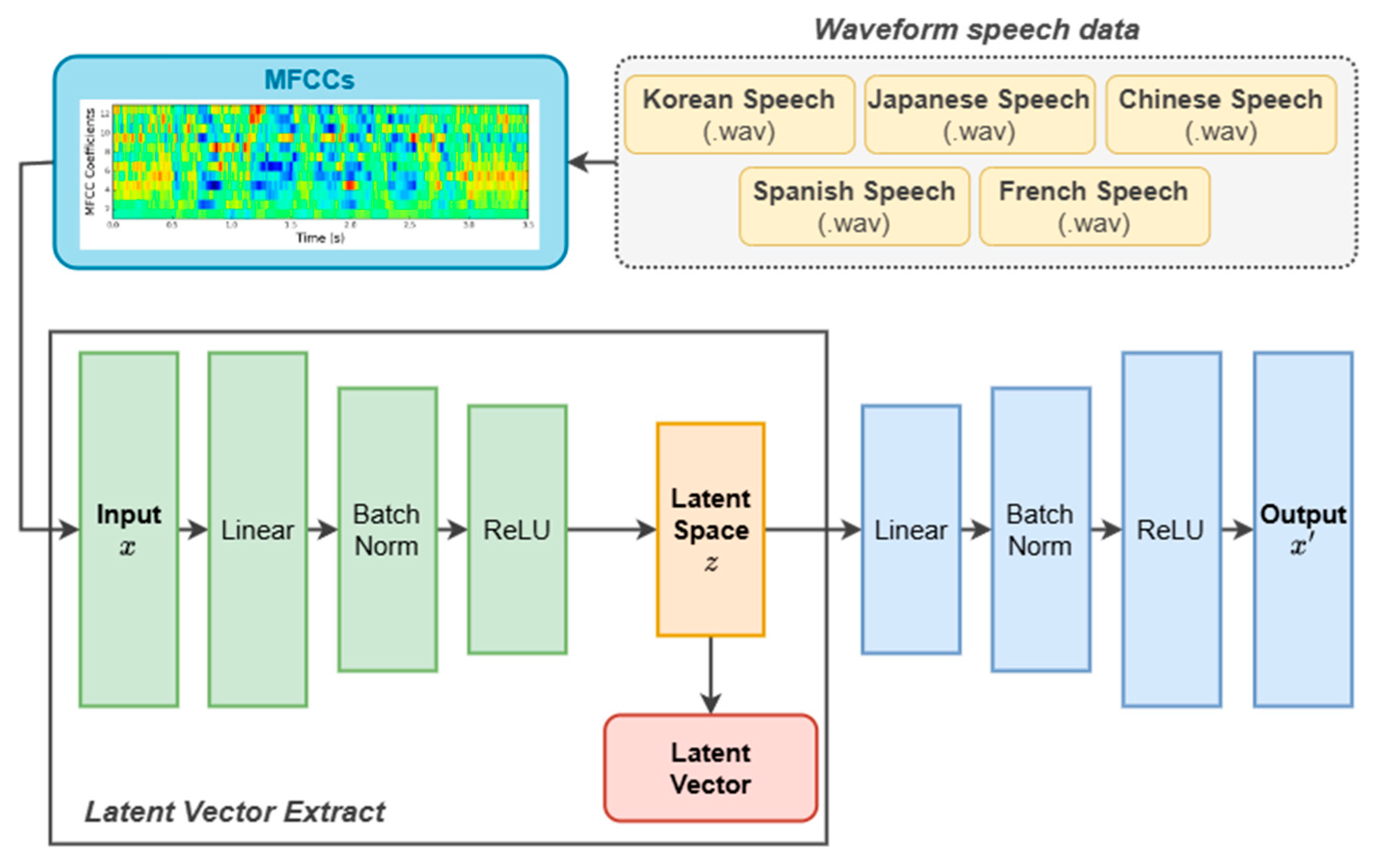

To extract meaningful acoustic features for model training, all audio samples were converted to Mel-frequency cepstral coefficients (MFCCs). MFCCs capture the spectral characteristics of speech signals in a manner that aligns with human auditory perception. Each waveform was transformed into a sequence of MFCC feature vectors through standard signal processing steps including framing, windowing, short-time Fourier transform, and Mel-scale filtering. These MFCC features served as the input representations for the VAE model.
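The processing chain described above (framing, windowing, short-time Fourier transform, Mel-scale filtering) can be sketched with NumPy alone, followed by the log and discrete cosine transform that conventionally complete an MFCC computation. The frame length, hop size, FFT size, and the numbers of Mel bands and coefficients below are assumed values for illustration; the source does not state the settings actually used.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular filters spaced evenly on the Mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):          # rising edge
            fbank[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):         # falling edge
            fbank[m - 1, k] = (right - k) / max(right - center, 1)
    return fbank

def mfcc(wave, sample_rate=16000, frame_len=400, hop=160,
         n_fft=512, n_mels=26, n_mfcc=13):
    """Return an array of shape (n_frames, n_mfcc); needs len(wave) >= frame_len."""
    # 1. Framing: overlapping frames of frame_len samples, hop samples apart.
    n_frames = 1 + (len(wave) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    # 2. Windowing: taper each frame with a Hamming window.
    frames = wave[idx] * np.hamming(frame_len)
    # 3. Short-time Fourier transform: power spectrum of each frame.
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    # 4. Mel-scale filtering, then log compression.
    log_mel = np.log(power @ mel_filterbank(n_mels, n_fft, sample_rate).T + 1e-10)
    # 5. DCT-II across the Mel bands, keeping the first n_mfcc coefficients.
    n = np.arange(n_mels)
    basis = np.cos(np.pi * np.arange(n_mfcc)[:, None] * (2 * n[None, :] + 1) / (2 * n_mels))
    return log_mel @ basis.T

# Hypothetical usage on a one-second test tone.
sample_rate = 16000
tone = np.sin(2 * np.pi * 440 * np.arange(sample_rate) / sample_rate)
features = mfcc(tone, sample_rate=sample_rate)
```

Each row of `features` is one MFCC vector, so a whole utterance becomes a sequence of vectors of the kind described as input to the VAE.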

The language-specific speech dataset consisted of a total of 52,555 utterances, which were divided into training, validation, and test sets: an 80:20 split separated training from test data, and 20% of the training portion was then held out for validation, giving roughly 64% training, 16% validation, and 20% test overall. Additionally, 100 noise samples were incorporated into each language set to enhance the model’s robustness against background noise. The final distribution is shown in Table 2.
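The nested split can be checked with a short calculation. The sketch below applies the stated proportions to the 52,555-utterance total; the rounding rule is an assumption, and it ignores augmentation, added noise samples, and any per-language stratification, so the counts it produces are illustrative and may differ from those in Table 2.

```python
def split_counts(total, test_frac=0.20, val_frac_of_train=0.20):
    """Split `total` into (train, val, test) using a nested hold-out scheme."""
    n_test = round(total * test_frac)              # 20% held out for testing
    n_train_full = total - n_test                  # remaining 80%
    n_val = round(n_train_full * val_frac_of_train)  # 20% of that for validation
    n_train = n_train_full - n_val
    return n_train, n_val, n_test

n_train, n_val, n_test = split_counts(52_555)
fractions = [count / 52_555 for count in (n_train, n_val, n_test)]
```

The resulting fractions are close to 0.64, 0.16, and 0.20, which is the effective ratio implied by the two-stage split.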

The noise dataset was sourced from the AI Hub [31] Living Environment Noise AI Learning Data [32], which includes 38 types of noise categorized into four groups: interfloor noise, construction noise, business noise, and traffic noise. From this dataset, 100 samples were selected, specifically consisting of traffic and engine noise. These noise samples were then randomly mixed into the speech datasets.
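One common way to mix a noise clip into a speech clip is to scale the noise to a target signal-to-noise ratio (SNR) before adding it. The source states only that the noise was mixed in randomly, so the SNR-based scaling, the random offset, and the function name below are assumptions made for illustration.

```python
import numpy as np

def mix_at_snr(speech, noise, snr_db, rng):
    """Add a randomly positioned noise segment to `speech` at the given SNR (dB)."""
    # Repeat the noise if it is shorter than the speech clip.
    if len(noise) < len(speech):
        reps = int(np.ceil(len(speech) / len(noise)))
        noise = np.tile(noise, reps)
    # Pick a random segment with the same length as the speech clip.
    start = rng.integers(0, len(noise) - len(speech) + 1)
    segment = noise[start:start + len(speech)]
    # Scale the noise so that speech power / noise power matches the target SNR.
    speech_power = np.mean(speech ** 2)
    noise_power = np.mean(segment ** 2) + 1e-12
    scale = np.sqrt(speech_power / (noise_power * 10.0 ** (snr_db / 10.0)))
    return speech + scale * segment

# Hypothetical usage with synthetic stand-ins for a speech clip and a noise clip.
rng = np.random.default_rng(0)
speech = rng.standard_normal(16000)
noise = rng.standard_normal(5000)
mixed = mix_at_snr(speech, noise, snr_db=10.0, rng=rng)
```

Drawing `snr_db` from a range per utterance would vary the noise level across the training set.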

4.3. Performance Evaluation

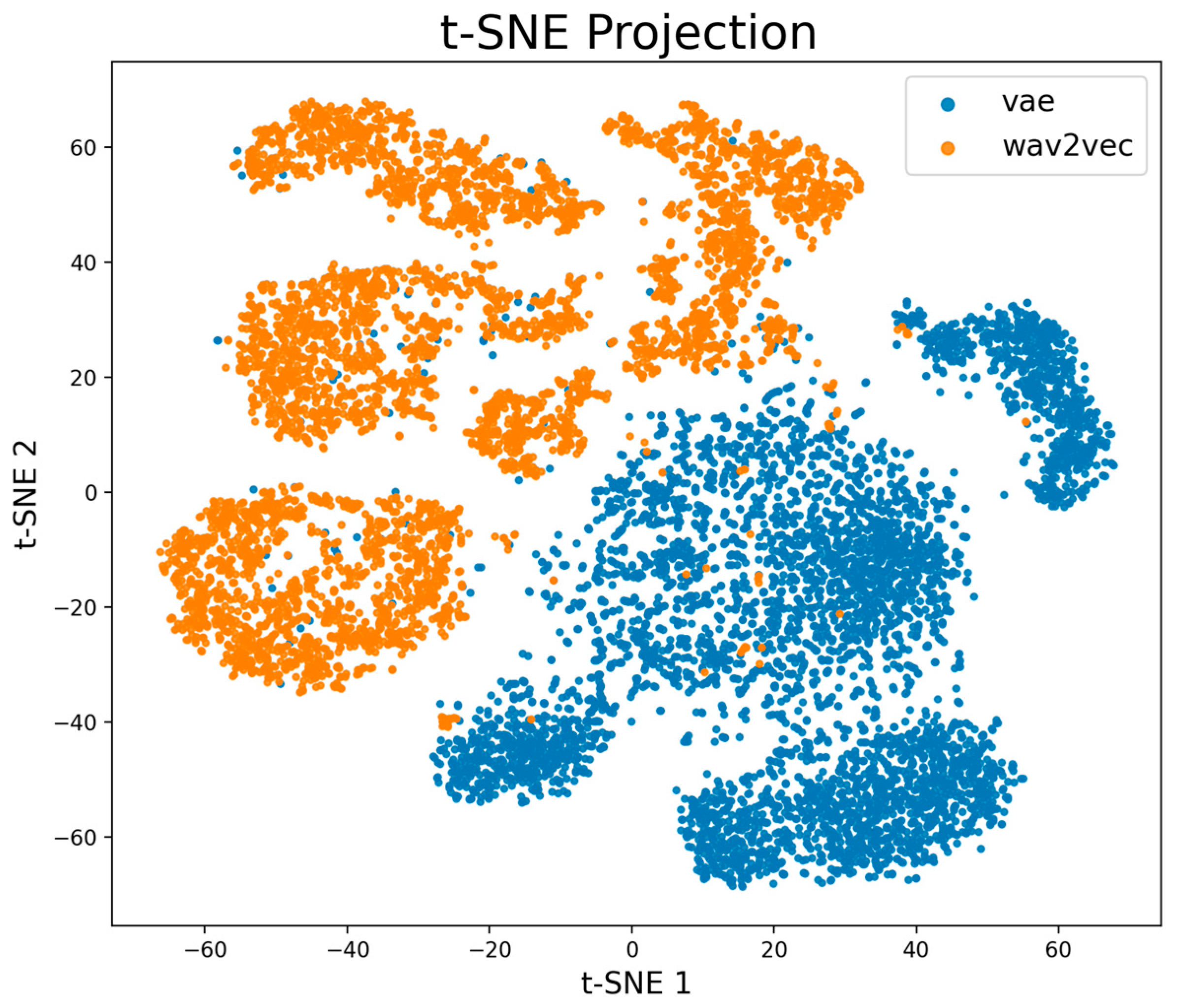

Figure 8 presents the t-SNE projection results for VAE and Wav2Vec embeddings, each reduced from their original 128-dimensional space to two dimensions for visualization. The projection was computed with a mean σ of 0.033, yielding a KL divergence of 84.64 after 250 iterations with early exaggeration and converging to 1.93 after 1000 iterations. As shown, the two embedding types form distinct, well-separated clusters, with minimal overlap between VAE and Wav2Vec representations. This separation suggests that each embedding space captures unique feature distributions, indicating complementary representational properties.

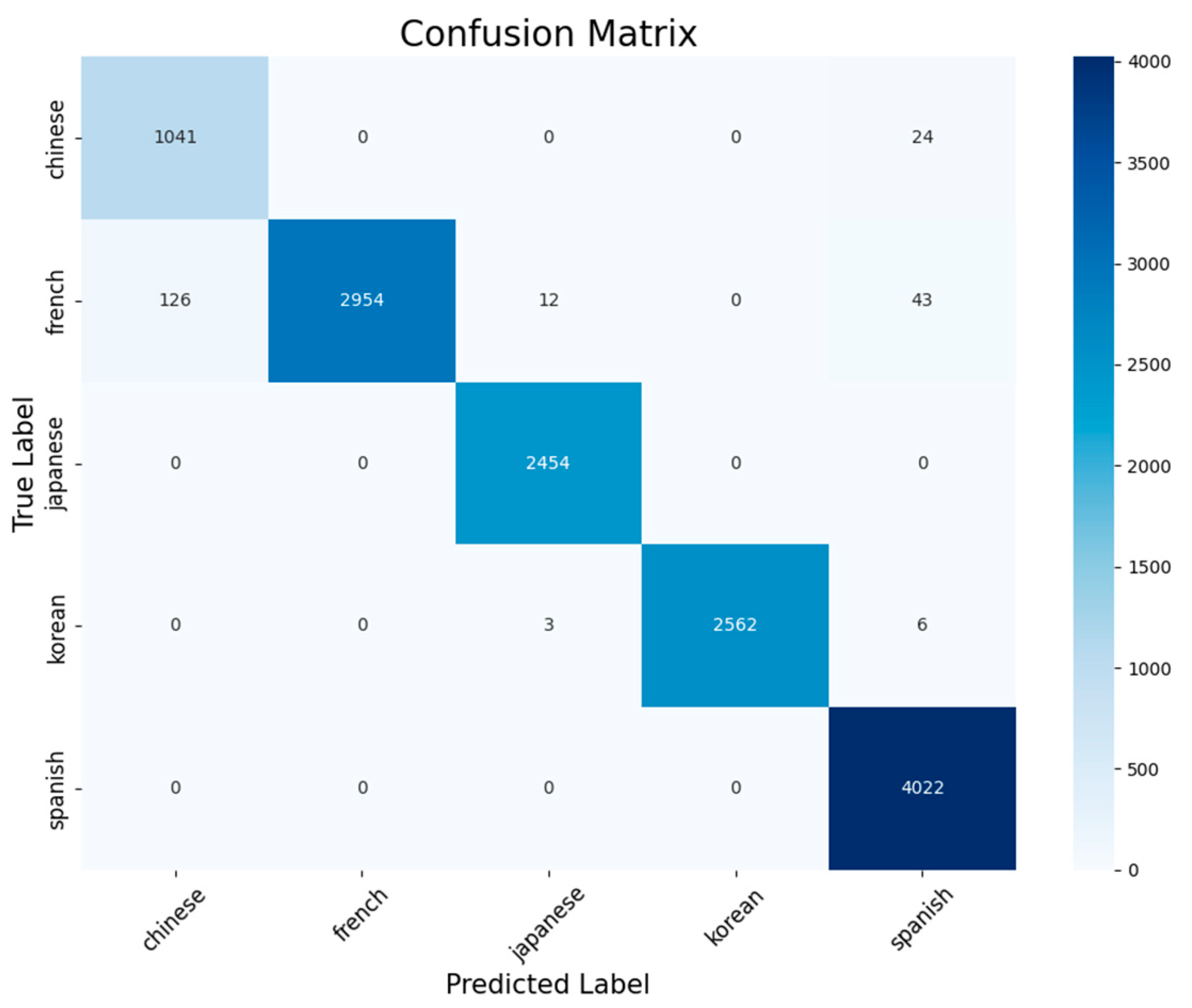

The final model achieved a loss of 0.1063 and an accuracy of 98.38% on the test set, demonstrating strong overall performance in language classification. The confusion matrix shows high accuracy across most language classes, particularly Japanese, Korean, and Spanish, each of which shows minimal or no confusion with other classes.

Figure 9 presents the confusion matrix of the model, illustrating its performance across different classes. Complementing this,

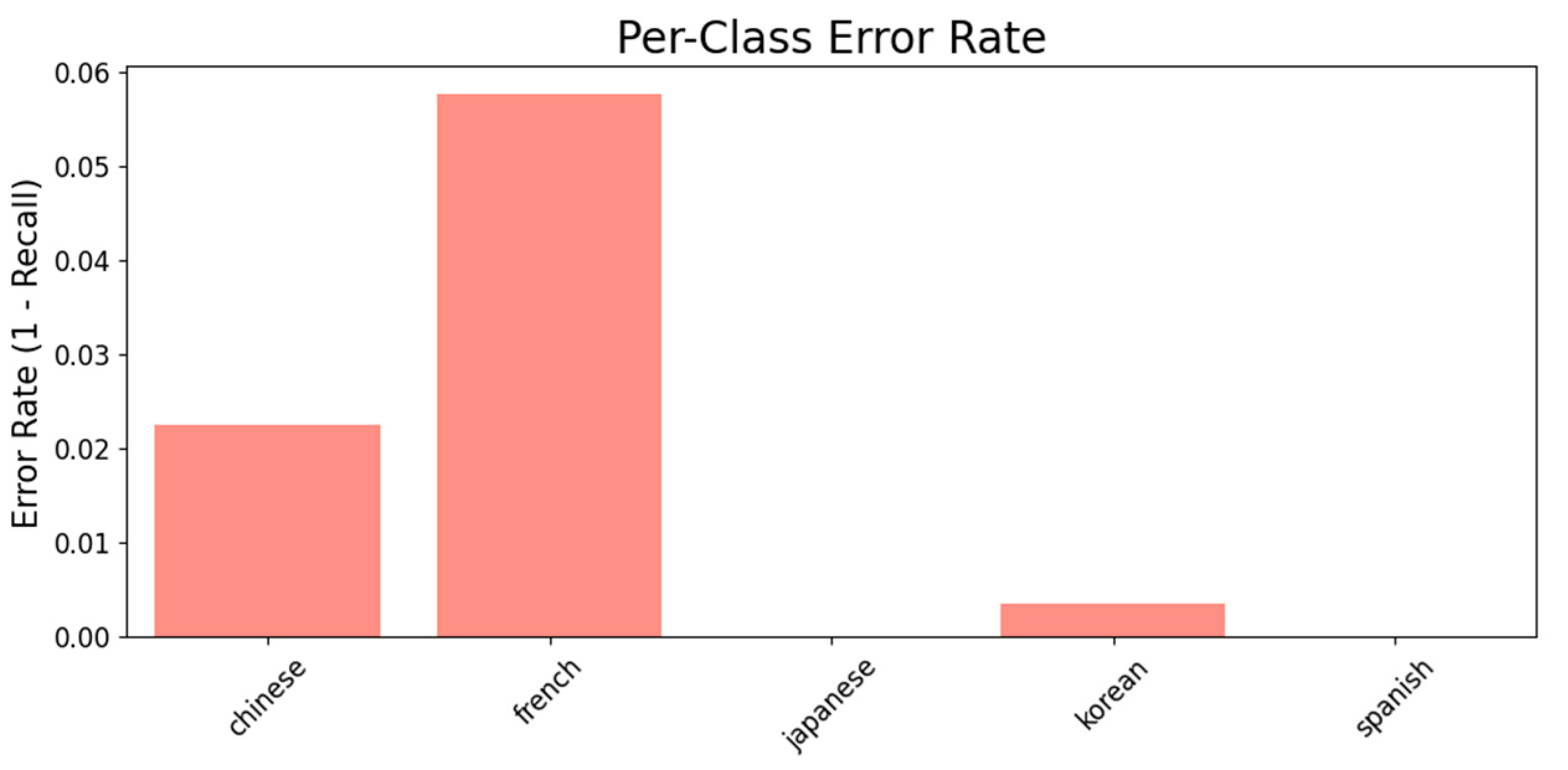

Figure 10 displays the per-class error rate, providing a detailed breakdown of misclassifications for each individual class.

However, the French class exhibited the most frequent misclassifications. Out of a total of 3135 French samples, 126 were incorrectly classified as Chinese, 12 as Japanese, and 43 as Spanish, suggesting that the model occasionally confuses French with phonetically or acoustically similar languages. This may be due to shared phonetic patterns between French and these languages in certain acoustic contexts.

Meanwhile, Chinese had a minor confusion with Spanish (24 instances), and Korean showed only 9 misclassifications in total (3 as Japanese, 6 as Spanish), further supporting the robustness of the classifier for these languages.

Overall, the confusion matrix highlights strong class separability, with the few misclassifications largely concentrated in language pairs that may share overlapping acoustic features. These insights could guide future work on enhancing the model’s discriminative power, especially for closely related or phonetically ambiguous language pairs.

The per-class error rate plot further supports the findings from the confusion matrix by quantifying the misclassification rate for each language class. Notably, the French class exhibits the highest error rate, exceeding 5.5%, which aligns with the previously observed confusion with Chinese and Spanish samples. The Chinese class also shows a moderate error rate of approximately 2.2%, primarily due to misclassifications as Spanish. In contrast, Japanese and Spanish classes demonstrate perfect or near-perfect recall, indicating zero or negligible misclassifications. The Korean class shows a minimal error rate below 0.5%, affirming the model’s strong ability to distinguish Korean from other languages. These results highlight the model’s robustness across most classes, while also identifying French as a challenging category.
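The French error rate quoted above can be reproduced from the misclassification counts reported for that class (3135 French samples, of which 126, 12, and 43 were assigned to Chinese, Japanese, and Spanish). The variable names are illustrative; only the counts come from the text.

```python
# Counts reported in the text for the French class.
french_total = 3135
french_misclassified_as = {"Chinese": 126, "Japanese": 12, "Spanish": 43}

n_wrong = sum(french_misclassified_as.values())
error_rate = n_wrong / french_total   # share of French samples labelled as another language
recall = 1.0 - error_rate             # share of French samples labelled correctly

print(f"misclassified: {n_wrong}")
print(f"error rate:    {error_rate:.2%}")
print(f"recall:        {recall:.2f}")
```

The same row-wise calculation applies to every class: the per-class error rate is the off-diagonal sum of a confusion-matrix row divided by the row total.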

The classification report in Table 6 provides detailed per-class evaluation metrics that reinforce the observations from the confusion matrix and the per-class error rate plot. To assess the statistical reliability of these results, the evaluation was conducted over 50 independent test runs, each initialized with a different random seed. For each run, precision, recall, and F1-score were computed per class, and their mean values and standard deviations were reported. Across all metrics and classes, the standard deviations were found to be negligible (less than 0.001), indicating highly stable and reproducible classification performance across different initializations.

The model achieves perfect precision and recall (1.00) for both Korean and Japanese, resulting in flawless F1-scores of 1.00, which demonstrates exceptional discriminative capability for these languages. Spanish also shows consistently high performance, with a precision of 0.98, recall of 1.00, and F1-score of 0.99, suggesting minimal false negatives and strong overall classification.

The Chinese class yields a slightly lower precision of 0.89 while maintaining a high recall of 0.98, which indicates that while most Chinese samples are correctly identified, some samples from other languages are mistakenly classified as Chinese, leading to a higher false positive rate.

The French class presents the most noticeable drop in performance, with a recall of 0.94, despite a perfect precision of 1.00. This suggests that while all samples predicted as French are indeed correct, the model fails to identify approximately 6% of actual French samples, often misclassifying them as Chinese or Spanish. This aligns precisely with the error distribution observed earlier.
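The F1-score is the harmonic mean of precision and recall, so the reported per-class figures can be cross-checked against each other. The sketch below uses the rounded precision and recall values quoted in the text; because the inputs are rounded to two decimals, the resulting F1 values are approximate and may differ slightly from those in Table 6.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision, recall) pairs as quoted in the text.
reported = {
    "Korean": (1.00, 1.00),
    "Japanese": (1.00, 1.00),
    "Spanish": (0.98, 1.00),
    "Chinese": (0.89, 0.98),
    "French": (1.00, 0.94),
}

f1_by_class = {lang: f1_score(p, r) for lang, (p, r) in reported.items()}
for lang, f1 in f1_by_class.items():
    print(f"{lang:<9} F1 = {f1:.2f}")
```

For Spanish, this gives about 0.99, which matches the F1-score stated in the text.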

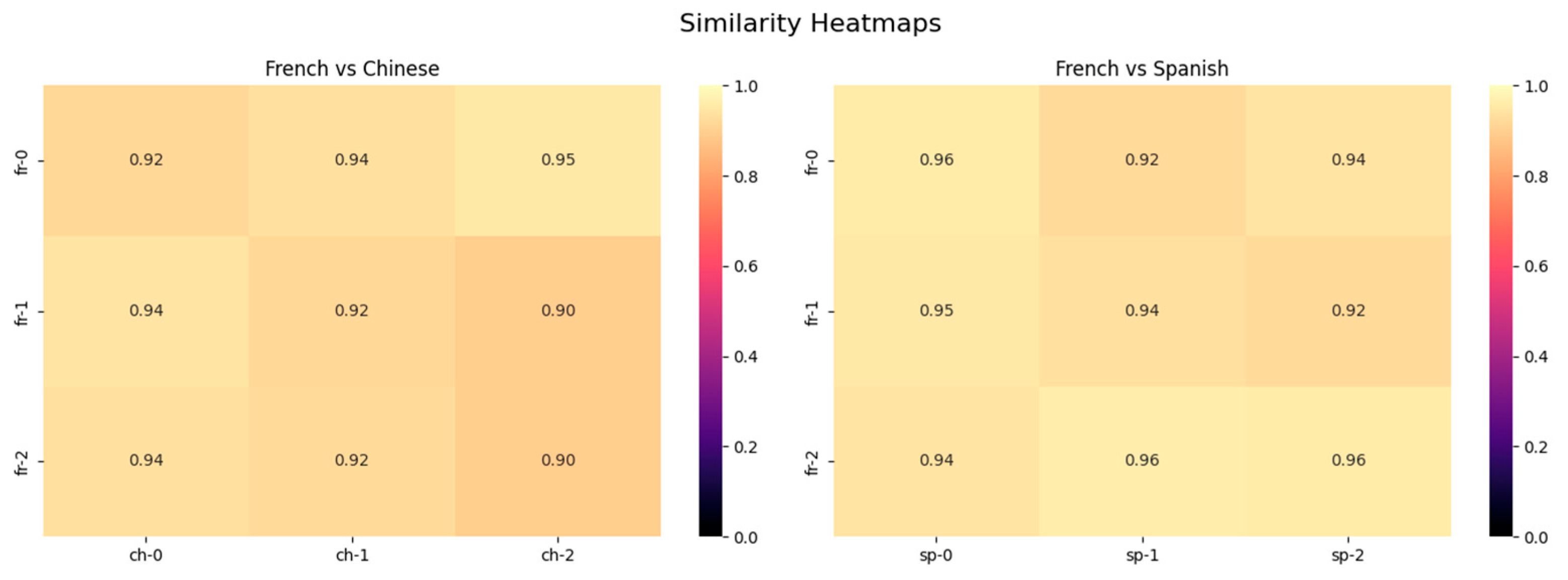

These misclassification patterns can be further understood by examining the acoustic similarities between the languages. French and Spanish both belong to the Romance language family and share similar phonetic structures, including consonant-vowel ratios, phoneme durations, and prosodic characteristics [42]. French is particularly notable for its nasalization and fluid phoneme transitions, while Spanish tends to exhibit clearer syllable boundaries. Mandarin Chinese, although distinct as a tonal language, demonstrates phonetic overlap with French in non-tonal regions [43]. MFCC similarity heatmaps shown in Figure 11 further confirm these overlaps, with French–Chinese and French–Spanish pairs exhibiting high similarity scores ranging from 0.90 to 0.96. These findings indicate that the model perceives both language pairs as acoustically similar in the MFCC domain, contributing to the observed classification confusions.
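A pairwise similarity heatmap of this kind can be built by summarizing each language with an average MFCC vector and comparing the vectors. The source does not state which similarity measure underlies Figure 11, so the use of cosine similarity and of time-averaged MFCC profiles here is an assumption, and the random arrays are placeholders rather than real features.

```python
import numpy as np

def mean_mfcc_profile(mfcc_frames):
    """Average an (n_frames, n_mfcc) array over time to get one vector."""
    return mfcc_frames.mean(axis=0)

def cosine_similarity_matrix(profiles):
    """Pairwise cosine similarity between the rows of `profiles`."""
    unit = profiles / np.linalg.norm(profiles, axis=1, keepdims=True)
    return unit @ unit.T

# Placeholder data: random "MFCC frames" for three hypothetical languages.
rng = np.random.default_rng(0)
languages = ["French", "Chinese", "Spanish"]
profiles = np.stack([
    mean_mfcc_profile(rng.standard_normal((50, 13)) + 1.0) for _ in languages
])
similarity = cosine_similarity_matrix(profiles)
```

The matrix is symmetric with ones on the diagonal, and each off-diagonal entry is the score for one language pair.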

The model’s overall accuracy of 98.38% confirms strong global performance, with high consistency across most language classes. However, the imbalance in precision and recall for certain classes (particularly French and Chinese) indicates specific areas for further refinement, possibly by incorporating more robust feature representations or addressing phonetic overlap between confusing pairs.

To evaluate the feasibility of deploying the proposed lightweight classifier in real-world embedded scenarios, we conducted a profiling analysis measuring key performance metrics including computational cost, inference latency, and memory consumption. The classifier requires approximately 0.27 MFLOPs per input and achieves an average inference time of 0.344 ms per sample on an NVIDIA RTX 8000 GPU. The peak memory usage is limited to 11.47 MB, indicating that the model is highly efficient in terms of both computation and memory footprint.
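An average per-sample inference time such as the one reported above is typically obtained by timing many repeated calls after a few warm-up runs. The sketch below shows that pattern on a stand-in NumPy function; the `predict` function, its layer sizes, and the run counts are hypothetical and unrelated to the actual classifier. On a GPU, the timed call would also need to wait for the device to finish, which is omitted here.

```python
import time
import numpy as np

def mean_latency_ms(predict, sample, n_warmup=10, n_runs=100):
    """Average wall-clock time per call to `predict`, in milliseconds."""
    for _ in range(n_warmup):          # warm-up calls are not timed
        predict(sample)
    start = time.perf_counter()
    for _ in range(n_runs):
        predict(sample)
    elapsed_s = time.perf_counter() - start
    return elapsed_s * 1000.0 / n_runs

# Hypothetical stand-in model: one dense layer over a 128-dimensional input.
rng = np.random.default_rng(0)
weights = rng.standard_normal((128, 5))

def predict(x):
    return int(np.argmax(x @ weights))

latency_ms = mean_latency_ms(predict, rng.standard_normal(128))

# Converting a per-sample latency to throughput, using the reported 0.344 ms.
reported_throughput = 1000.0 / 0.344   # samples per second
```

At the reported 0.344 ms per sample, the conversion gives roughly 2,900 samples per second on the RTX 8000.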

To assess the practical deployment potential, we compared expected inference performance across two widely used edge devices: Raspberry Pi 5 and Jetson Nano. Based on known hardware capabilities and extrapolated benchmarking data, the Jetson Nano is estimated to perform inference at approximately 2–3 FPS with moderate latency, whereas the Raspberry Pi 5 would likely achieve 1–2 FPS, subject to thermal constraints and CPU-only execution. A detailed comparison is provided in Table 7.

These results demonstrate that the proposed model is suitable for resource-constrained environments where real-time or near real-time performance is required. In contrast to heavyweight transformer-based or multi-stream architectures, our model offers a highly deployable alternative for practical LID applications without sacrificing classification accuracy.

We implemented a prototype on the NVIDIA Jetson Nano, a compact embedded computing platform that is widely adopted in mobility-oriented hardware systems. The Jetson Nano was selected because hardware configurations in mobility devices are often highly heterogeneous, making it difficult to standardize deployment targets. The model was first trained on an RTX 8000 GPU, after which the trained model files were converted to TensorRT format and deployed to the Jetson Nano. This conversion not only ensured compatibility but also typically yielded improved inference performance, as shown in Table 7.

To validate the deployment, we conducted real-time tests on the Jetson Nano with and without TensorRT optimization, observing that the TensorRT version generally achieved better performance. The evaluation involved a test group of 20 participants (10 male and 10 female), ranging in age from their teens to their fifties, all of whom were capable of speaking at least two languages. Each participant was tested in multiple languages, and the results were analyzed on a per-language basis. The prototype achieved an overall accuracy of approximately 96% in this embedded setting, while maintaining real-time responsiveness.

Table 8 presents a comparative analysis between the proposed model, a traditional CNN baseline [44], and two representative state-of-the-art language identification systems, Whisper [45] and SpeechBrain [46], in terms of F1-score, memory usage, and inference speed. The CNN baseline records the lowest overall performance, with marked drops for Korean (0.50) and Spanish (0.67). Whisper attains the highest or near-highest F1-scores for all five languages, but its memory footprint approaches 3 GB, which limits applicability in embedded or mobile environments. SpeechBrain performs consistently well for most languages but shows a pronounced weakness in Spanish (0.26), reflecting reduced robustness for that category.

In contrast, the proposed model sustains high F1-scores across all languages, achieving 1.00 for Korean and Japanese, 0.99 for Spanish, and competitive scores for Chinese and French, while requiring only 11.47 MB of memory and delivering an inference time of 0.344 ms. This combination of strong accuracy, low resource usage, and fast processing makes it an effective choice for real-time multilingual speech recognition, especially where both high performance and computational efficiency are essential.