Employing AI for Better Access to Justice: An Automatic Text-to-Video Linking Tool for UK Supreme Court Hearings

Abstract

1. Introduction

1. Customised ASR System for Legal Domain: We developed and fine-tuned an ASR system specifically for UK SC hearings. This involved training a custom language model using legal documents and human-edited transcripts and integrating domain-specific vocabulary to enhance transcription accuracy and efficiency.

- 2

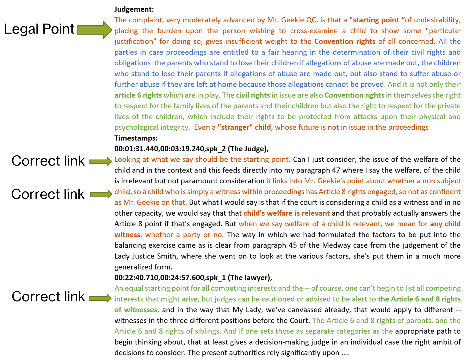

- Semantic Linking Between Judgement Text and Hearing VideoWe designed an automated information retrieval system that links paragraphs in written judgements to semantically relevant segments in hearing videos, enabling precise navigation and contextual understanding of legal arguments.

3. Integrated User Interface for Legal Professionals and Public Use: We created a user-friendly interface that synchronises textual and audiovisual data and allows users to select judgement paragraphs and view corresponding video segments with playback and transcript functionality.

4. General Framework for Audio-Text Alignment in Specialised Domains: We proposed a scalable and adaptable methodology for linking audiovisual content with textual information, applicable to other specialised domains such as education, healthcare, and policy analysis.

2. Related Work

2.1. Automatic Speech Recognition

2.2. Information Retrieval for Legal Domain

3. Models and Methods

3.1. Stage One: A Customised ASR Model

3.1.1. Dataset Compilation

- Overlapping speech and background noise caused by the logistics of the court hearing setting, as barristers frequently ask the court to turn to specific pages in the case file.

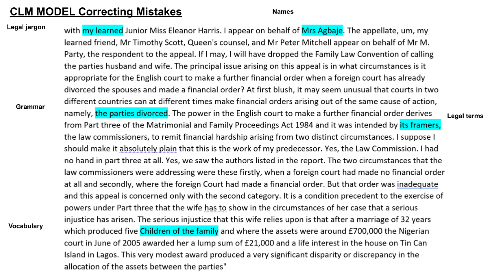

- UK legal jargon was consistently mistranscribed because some phrases have a distinctive pronunciation in English courts, as in the first example in Table 1. Recurrent forms of address with a distinctive pronunciation also led to transcription errors. For example, when a barrister addresses a colleague as “My learned friend”, the phrase is pronounced “my learn’id friend”, with stress on the second syllable of “learned”.

- Legal entities, such as case names containing non-English names (e.g., Agbaje (Respondent) v Akinnoye-Agbaje (FC) (Appellant)), provisions (e.g., Section 84(1)), and cardinal numbers crucial to the case under discussion, were frequently mistranscribed.

- Legal terms specific to the case under deliberation were often mistaken by the ASR system for phrases with a similar pronunciation. For example, the legal term “inherent vice” was consistently mistranscribed as “in your advice”. This most likely happens because the ASR system opts for the most acoustically similar phrase offered by its language model, which is trained on non-domain data.

3.1.2. Customising the ASR System

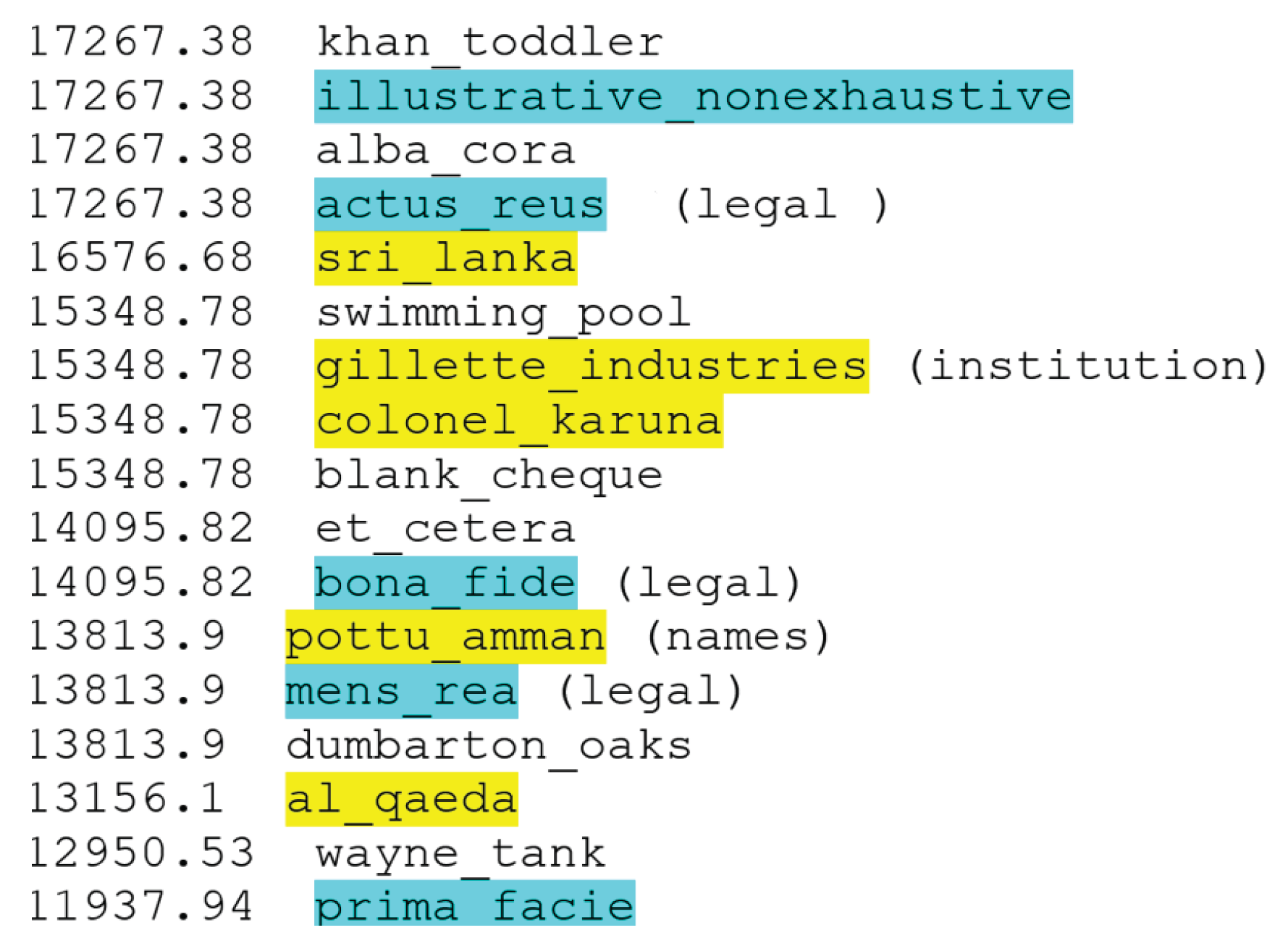

3.1.3. Phrase Extraction Model

3.2. Stage Two: Text-to-Video IR System

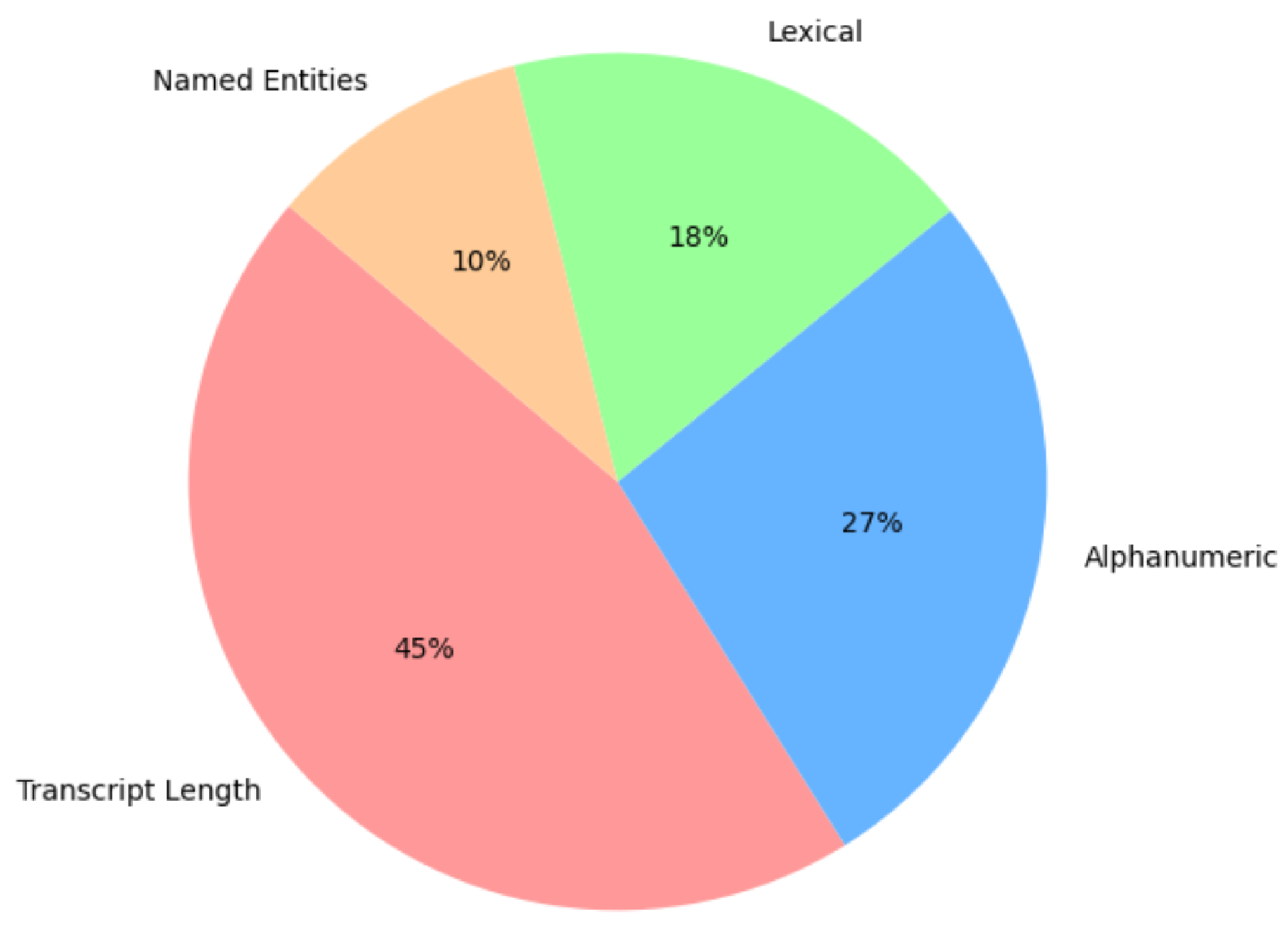

3.2.1. Data Processing and Preparation

3.2.2. Zero-Shot Information Retrieval

- A. Frequency-based Methods (keyword search)

- B. Embedding-based Methods
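The two families of zero-shot methods listed above can be contrasted with a minimal sketch: a BM25 scorer for the frequency-based family and a cosine-similarity scorer for the embedding-based family. The whitespace tokeniser, the `k1` and `b` defaults, and all function names are illustrative assumptions rather than the configuration used in this work, and the embedding vectors are assumed to come from whichever encoder is being compared.

```python
import math
from collections import Counter


def tokenize(text):
    """Very simple lowercase whitespace tokeniser (an assumption for this sketch)."""
    return text.lower().split()


def bm25_scores(query, documents, k1=1.5, b=0.75):
    """Score every document against the query with the BM25 ranking function."""
    docs = [tokenize(d) for d in documents]
    if not docs:
        return []
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs

    # Document frequency: in how many documents each term occurs.
    doc_freq = Counter()
    for d in docs:
        doc_freq.update(set(d))

    query_terms = tokenize(query)
    scores = []
    for d in docs:
        term_freq = Counter(d)
        score = 0.0
        for term in query_terms:
            if term not in term_freq:
                continue
            n_t = doc_freq[term]
            idf = math.log((n_docs - n_t + 0.5) / (n_t + 0.5) + 1.0)
            length_norm = k1 * (1 - b + b * len(d) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / (term_freq[term] + length_norm)
        scores.append(score)
    return scores


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    segments = [
        "the possession order ended the tenancy",
        "the appeal concerns the costs of the hearing",
    ]
    print(bm25_scores("possession order", segments))
```

In both cases the hearing segments would be ranked by descending score and the top k kept as candidate links for a judgement paragraph.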

3.2.3. Results of Pre-Fetching

- The numerator of Recall@k is the number of relevant links retrieved among the top k candidates.

- The denominator of Recall@k is the total number of relevant links that exist for a given query.
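As an illustration of how the two ranking metrics reported below can be computed, the following sketch implements Recall@k and one common variant of average precision at k, which is then averaged over queries to give MAP@k. Normalising average precision by min(k, number of relevant links) is an assumption of this sketch, since several variants are in use, and the function names and toy identifiers are hypothetical.

```python
def recall_at_k(ranked, relevant, k):
    """Fraction of all relevant links that appear in the top k candidates."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)


def average_precision_at_k(ranked, relevant, k):
    """Average of precision values at each rank where a relevant link occurs.

    Assumes `ranked` contains no duplicates. Normalised by min(k, |relevant|),
    which is one common convention (an assumption, not taken from the source).
    """
    relevant = set(relevant)
    if not relevant or k <= 0:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / min(k, len(relevant))


def mean_average_precision_at_k(runs, k):
    """Mean of AP@k over (ranked, relevant) pairs, one pair per query."""
    if not runs:
        return 0.0
    return sum(average_precision_at_k(r, rel, k) for r, rel in runs) / len(runs)


if __name__ == "__main__":
    ranked_segments = ["s1", "s7", "s3", "s9"]   # hypothetical retrieval output
    gold_links = {"s1", "s3", "s5"}              # hypothetical annotations
    print(recall_at_k(ranked_segments, gold_links, 4))
    print(average_precision_at_k(ranked_segments, gold_links, 4))
```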

| Model | MAP@5 | Recall@5 | MAP@10 | Recall@10 | MAP@15 | Recall@15 |
|---|---|---|---|---|---|---|
| GPT | 0.96 | 0.33 | 0.89 | 0.57 | 0.85 | 0.77 |
| Entailment | 0.87 | 0.32 | 0.85 | 0.55 | 0.82 | 0.79 |
| Glove | 0.81 | 0.27 | 0.77 | 0.53 | 0.61 | 0.78 |
| BM25 | 0.87 | 0.29 | 0.81 | 0.53 | 0.78 | 0.77 |
| Asymmetric | 0.94 | 0.32 | 0.88 | 0.54 | 0.83 | 0.77 |

3.2.4. Data Augmentation

I want you to act like a British lawyer. Paraphrase the following text:

{original text}
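The prompt above can be filled programmatically for each transcript segment before it is sent to the paraphrasing model. The sketch below only builds the prompt string; the function name and the `original_text` placeholder name are assumptions made for illustration, and the call to the language model itself is deliberately left out.

```python
PROMPT_TEMPLATE = (
    "I want you to act like a British lawyer. "
    "Paraphrase the following text:\n\n{original_text}"
)


def build_paraphrase_prompt(original_text: str) -> str:
    """Insert one transcript segment into the paraphrasing prompt."""
    text = original_text.strip()
    if not text:
        raise ValueError("original_text must not be empty")
    return PROMPT_TEMPLATE.format(original_text=text)


if __name__ == "__main__":
    print(build_paraphrase_prompt("My Lords. My Lady, In this matter, I appear ..."))
```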

3.2.5. Paragraph-Timestamp Link Classifiers

- Baseline Model: For our baseline, we train a logistic regression model on the GPT-3 embedding representations of the original data, with and without the augmentation. We run both experiments in two settings: (1) we use the concatenated vectors of each judgement–segment pair as the input features, and (2) we add the cosine-similarity score between each judgement–segment pair as an additional scalar feature.

- Cross-encoder: Cross-encoding is currently one of the most accurate methods of sentence comparison in IR tasks. In a cross-encoder, two sequences are concatenated and sent in one pass to the sentence-pair model, which is built on top of a Transformer-based language model. The attention heads of the Transformer can directly model which elements of one sequence correlate with which elements of the other, enabling the computation of an accurate relevance score [53]. We trained a cross-encoder built on top of the distilled version of the RoBERTa-base model [54] from the Huggingface library (https://huggingface.co/distilroberta-base, accessed on 19 June 2024). The hyperparameters we used for training are: batch size 16, num_epochs 4, warm-up steps equal to 10% of the training data, and a binary classification evaluator run every 1000 steps. We trained the cross-encoder on both the augmented and non-augmented datasets.

- Contrastive Tension with In-batch Negative Sampling: To minimise the effect of random negative sampling in the augmented dataset, we experiment with an unsupervised learning approach with in-batch negative sampling. Adopting contrastive tension (CT) from Carlsson et al. [55], we train two independent encoders, initialised with identical weights, on judgement–hearing segment pairs. For each randomly selected segment s, K irrelevant segments are sampled along with one relevant segment to create a batch as a training sample. The CT objective of the two independent encoders is to maximise the dot product between the sentence representations of relevant segments and minimise the dot product between irrelevant ones. We hypothesise that using in-batch negative sampling gives a stronger training signal than the random shuffling of judgement–hearing segments in creating semantic representations. We initialise our two encoder models with distil-bert-base-uncased pretrained embeddings [54] from the Huggingface library (https://huggingface.co/distilroberta-base, accessed on 19 June 2024). We train the encoders for four epochs with a batch size of 16 segments, a maximum length of 300 tokens, and a learning rate of $5 \times 10^{-5}$.

- GPT-3 Embedding Customisation: To optimise the performance of our best-performing IR model, we customised GPT embeddings to better reflect the semantic characteristics of our legal dataset. The base GPT embedding model used (text-embedding-ada-002) is trained on diverse corpora including text search, text similarity, and code search tasks. To adapt it to the legal domain, we followed the embedding customisation approach proposed by OpenAI [56] and extended it with a transparent workflow tailored to our annotated legal data. Workflow overview:

1. We start with a set of human-annotated transcript–judgement pairs, labelled as either relevant (positive) or non-relevant (negative).

2. For each pair, we compute the cosine similarity between their original GPT embeddings.

3. We perform a threshold sweep over cosine similarity values in increments of 0.01.

4. At each threshold $x$, we compute the standard error of the mean (SE) for the similarity scores of the positive and negative classes.

5. We identify the threshold that minimises the standard error: $x^{*} = \arg\min_{x} \mathrm{SE}(x)$.

6. Using the optimal threshold $x^{*}$, we train a linear transformation matrix $M$ that maximises the separation between positive and negative pairs in the embedding space.

7. The customised embedding for each segment is computed as $v' = vM$, where $v$ is the original GPT embedding and $M$ is the learned transformation matrix.
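The second and last steps of this workflow, computing cosine similarity and applying a learned matrix to an embedding, can be sketched as follows. This is a toy illustration under stated assumptions: the embeddings are short hand-written vectors rather than real GPT embeddings, the matrices are hypothetical stand-ins for a trained transformation, a row-vector convention (embedding times matrix) is assumed, and the threshold sweep and matrix training are not shown.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def customise(embedding, matrix):
    """Apply a linear transformation to an embedding (row vector times matrix).

    `matrix` is a list of rows with as many rows as `embedding` has entries.
    """
    if len(matrix) != len(embedding):
        raise ValueError("matrix must have one row per embedding dimension")
    n_out = len(matrix[0])
    return [
        sum(embedding[i] * matrix[i][j] for i in range(len(embedding)))
        for j in range(n_out)
    ]


if __name__ == "__main__":
    judgement_vec = [1.0, 0.0]        # toy embedding of a judgement paragraph
    segment_vec = [1.0, 1.0]          # toy embedding of a hearing segment
    M = [[1.0, 0.0], [0.0, 0.0]]      # hypothetical matrix that drops dimension 2

    before = cosine_similarity(judgement_vec, segment_vec)
    after = cosine_similarity(customise(judgement_vec, M), customise(segment_vec, M))
    print(before, after)
```

With the identity matrix the similarity is unchanged; a trained matrix is meant to move relevant pairs closer together and non-relevant pairs further apart.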

To assess generalisability, we applied the customised embeddings to our training and held-out datasets of UK SC cases. The customised embeddings were used to train a logistic regression model on both the augmented and non-augmented datasets. Additionally, we experimented with incorporating the transformed cosine similarity scores as scalar features. The results of the experiments with customised embeddings are reported in the following section.
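The two input settings used for the classifiers, concatenated pair vectors with or without a cosine-similarity scalar, can be illustrated with a small feature-construction sketch. The function names and the toy vectors are assumptions made for illustration; in practice the resulting rows would be passed to a logistic regression implementation together with the relevant/non-relevant labels.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def pair_features(judgement_vec, segment_vec, add_cosine=False):
    """Build one feature row for a judgement-segment pair.

    Setting (1): concatenation of the two embeddings.
    Setting (2): the same concatenation plus the cosine similarity as a scalar.
    """
    features = list(judgement_vec) + list(segment_vec)
    if add_cosine:
        features.append(cosine_similarity(judgement_vec, segment_vec))
    return features


if __name__ == "__main__":
    judgement_vec = [0.2, 0.4, 0.1]   # toy embeddings, not real model output
    segment_vec = [0.3, 0.5, 0.0]
    print(pair_features(judgement_vec, segment_vec))
    print(pair_features(judgement_vec, segment_vec, add_cosine=True))
```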

4. Results

4.1. Results for Stage One

- S = Number of substitutions (incorrect words);

- D = Number of deletions (missing words);

- I = Number of insertions (extra words);

- N = Total number of words in the reference transcript.
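These four quantities combine in the standard word error rate definition, $\mathrm{WER} = (S + D + I) / N$. A minimal sketch of the computation is given below, using a word-level edit distance in which the minimum number of edits equals S + D + I; the function name, the whitespace tokenisation, and the lowercasing are assumptions of this sketch, and production evaluations typically apply further text normalisation first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Example pair taken from the mistranscription examples in this paper.
    print(word_error_rate("it makes further financial order",
                          "it makes further five natural"))
```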

1. CLM1 is trained only on the texts of the Supreme Court judgements.

2. CLM2 is trained on both the judgements and the gold-standard transcripts.

3. CLM2 + Vocab uses CLM2 for transcription plus the global vocabulary list extracted by our phrase detection model.

4. CLM2 + Vocab2 uses CLM2 for transcription plus the legal entities vocabulary list extracted with the Blackstone and spaCy v3.4 libraries.

| Model | WER Case 1 (%) | WER Case 2 (%) | WER Average (%) | Transcription Time |
|---|---|---|---|---|
| AWS base | 8.7 | 16.2 | 12.3 | 85 min |
| CLM1 | 8.5 | 16.5 | 12.4 | 77 min |
| CLM2 | 7.9 | 15.5 | 11.6 | 77 min |
| CLM2 + Vocab | 7.9 | 15.6 | 11.6 | 132 min |
| CLM2 + Vocab2 | 8.0 | 15.6 | 11.7 | 112 min |
| Whisper | 9.6 | 15.3 | 12.4 | 191 min |

4.2. Results for Stage Two

5. Error Analysis and User Feedback

6. Discussion

7. Patents

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ASR | Automatic Speech Recognition |
| STT | Speech-to-Text |
| WER | Word Error Rate |
| IR | Information Retrieval |
| PMI | Pointwise Mutual Information |
| CLM | Customised Language Model |
| SC | Supreme Court |
| LLM | Large Language Model |
| NLP | Natural Language Processing |

Appendix A


Appendix B


Appendix C

“My Lords. My Lady, In this matter, I appear with Mr Desmond Rutledge for the Appellant Mr Barry Austin, the Respondent, Southwark London Borough Council is represented by my learned friends Mr Drabble, Queen’s Counsel, and Mr Shaw Kelly. My Lords and and My Lady will have seen that we have identified five issues on which we would invite the attention of this court and I propose to deal with them in the sequence set out in the agreed statement of facts and issues starting with issue one, which might probably be described as the tolerated trespass question. The question posed for this court by issue one is whether the possession order made against him ended the late Mr Allen Austin’s tenancy or whether, in the alternative, he was at the date of his death, still the tenant of the council. That’s the issue to which the first of the matters on this appeal goes.”

AI-Generated Paraphrasing

“My Lords and My Lady, I appear before you with Mr Desmond Rutledge to represent the Appellant, Mr Barry Austin. The Respondent, Southwark London Borough Council, is represented by my learned friends Mr Drabble, Queen’s Counsel, and Mr Shaw Kelly. We have identified five issues that we would like the court to address, and we will deal with them in the sequence set out in the agreed statement of facts and issues. The first issue is whether the possession order made against the late Mr Allen Austin ended his tenancy or whether, in the alternative, he was still the tenant of the council at the time of his death. This is the first matter on the appeal.”

References

1. UK Supreme Court. Annual Report and Accounts 2024–25. 2025. Available online: https://supremecourt.uk/uploads/The_Supreme_Court_Annual_Report_and_Accounts_2024_25_ec4ecbe2e5.pdf (accessed on 30 July 2025).

2. Saadany, H.; Breslin, C.; Orăsan, C.; Walker, S. Better Transcription of UK Supreme Court Hearings. In Proceedings of the Workshop on Artificial Intelligence for Access to Justice (AI4AJ 2023), Braga, Portugal, 19 June 2023; Volume 3435.

3. Saadany, H.; Orăsan, C. Automatic Linking of Judgements to UK Supreme Court Hearings. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing: Industry Track, Singapore, 6–10 December 2023; pp. 492–500.

4. Saadany, H.; Orăsan, C.; Walker, S.; Breslin, C. Linking Judgement Text to Court Hearing Videos: UK Supreme Court as a Case Study. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; pp. 10598–10609.

5. Elwany, E.; Moore, D.; Oberoi, G. Bert goes to law school: Quantifying the competitive advantage of access to large legal corpora in contract understanding. arXiv 2019, arXiv:1911.00473.

6. Nay, J.J. Natural Language Processing for Legal Texts. In Legal Informatics; Cambridge University Press: Cambridge, UK, 2021; pp. 99–113.

7. Mumcuoğlu, E.; Öztürk, C.E.; Ozaktas, H.M.; Koç, A. Natural language processing in law: Prediction of outcomes in the higher courts of Turkey. Inf. Process. Manag. 2021, 58, 102684.

8. Frankenreiter, J.; Nyarko, J. Natural Language Processing in Legal Tech. In Legal Tech and the Future of Civil Justice; Engstrom, D., Ed.; Cambridge University Press: Cambridge, UK, 2022.

9. Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust Speech Recognition via Large-Scale Weak Supervision. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 28492–28518.

10. Watanabe, S.; Mandel, M.; Barker, J.; Vincent, E.; Arora, A.; Chang, X.; Khudanpur, S.; Manohar, V.; Povey, D.; Raj, D.; et al. CHiME-6 Challenge: Tackling multispeaker speech recognition for unsegmented recordings. In Proceedings of the CHiME 2020-6th International Workshop on Speech Processing in Everyday Environments, Online, 4 May 2020.

11. Feng, S.; Kudina, O.; Halpern, B.M.; Scharenborg, O. Quantifying bias in Automatic Speech Recognition. arXiv 2021, arXiv:2103.15122.

12. Zhang, Y. Mitigating bias against non-native accents. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, Incheon, Republic of Korea, 18–22 September 2022; Delft University of Technology: Delft, The Netherlands, 2022.

13. Mai, L.; Carson-Berndsen, J. Unsupervised domain adaptation for speech recognition with unsupervised error correction. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, Incheon, Republic of Korea, 18–22 September 2022; pp. 5120–5124.

14. Huo, Z.; Hwang, D.; Sim, K.C.; Garg, S.; Misra, A.; Siddhartha, N.; Strohman, T.; Beaufays, F. Incremental layer-wise self-supervised learning for efficient speech domain adaptation on device. arXiv 2021, arXiv:2110.00155.

15. Sato, H.; Komori, T.; Mishima, T.; Kawai, Y.; Mochizuki, T.; Sato, S.; Ogawa, T. Text-Only Domain Adaptation Based on Intermediate CTC. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, Incheon, Republic of Korea, 18–22 September 2022; pp. 2208–2212.

16. Dingliwal, S.; Shenoy, A.; Bodapati, S.; Gandhe, A.; Gadde, R.T.; Kirchhoff, K. Domain prompts: Towards memory and compute efficient domain adaptation of ASR systems. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, Incheon, Republic of Korea, 18–22 September 2022.

17. Mani, A.; Palaskar, S.; Meripo, N.V.; Konam, S.; Metze, F. Asr error correction and domain adaptation using machine translation. In Proceedings of the ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6344–6348.

18. Del Rio, M.; Delworth, N.; Westerman, R.; Huang, M.; Bhandari, N.; Palakapilly, J.; McNamara, Q.; Dong, J.; Zelasko, P.; Jetté, M. Earnings-21: A practical benchmark for ASR in the wild. arXiv 2021, arXiv:2104.11348.

19. Wang, H.; Dong, S.; Liu, Y.; Logan, J.; Agrawal, A.K.; Liu, Y. ASR Error Correction with Augmented Transformer for Entity Retrieval. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, Incheon, Republic of Korea, 18–22 September 2022; pp. 1550–1554.

20. Das, N.; Chau, D.H.; Sunkara, M.; Bodapati, S.; Bekal, D.; Kirchhoff, K. Listen, Know and Spell: Knowledge-Infused Subword Modeling for Improving ASR Performance of OOV Named Entities. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 7887–7891.

21. Shukla, A.; Bhattacharya, P.; Poddar, S.; Mukherjee, R.; Ghosh, K.; Goyal, P.; Ghosh, S. Legal case document summarization: Extractive and abstractive methods and their evaluation. arXiv 2022, arXiv:2210.07544.

22. Hellesoe, L.J. Automatic Domain-Specific Text Summarisation with Deep Learning Approaches. Ph.D. Thesis, Auckland University of Technology, Auckland, New Zealand, 2022.

23. Aletras, N.; Tsarapatsanis, D.; Preoţiuc-Pietro, D.; Lampos, V. Predicting judicial decisions of the European Court of Human Rights: A natural language processing perspective. PeerJ Comput. Sci. 2016, 2, e93.

24. Trautmann, D.; Petrova, A.; Schilder, F. Legal Prompt Engineering for Multilingual Legal Judgement Prediction. arXiv 2022, arXiv:2212.02199.

25. Hendrycks, D.; Burns, C.; Chen, A.; Ball, S. Cuad: An expert-annotated nlp dataset for legal contract review. arXiv 2021, arXiv:2103.06268.

26. Dixit, A.; Deval, V.; Dwivedi, V.; Norta, A.; Draheim, D. Towards user-centered and legally relevant smart-contract development: A systematic literature review. J. Ind. Inf. Integr. 2022, 26, 100314.

27. Zheng, L.; Guha, N.; Anderson, B.R.; Henderson, P.; Ho, D.E. When does pretraining help? assessing self-supervised learning for law and the casehold dataset of 53,000+ legal holdings. In Proceedings of the Eighteenth International Conference on Artificial Intelligence and Law, São Paulo, Brazil, 21–25 June 2021; pp. 159–168.

28. Rabelo, J.; Kim, M.; Goebel, R.; Yoshioka, M.; Kano, Y.; Satoh, K. COLIEE 2020: Methods for Legal Document Retrieval and Entailment. 2020. Available online: https://sites.ualberta.ca/~rabelo/COLIEE2021/COLIEE_2020_summary.pdf (accessed on 19 June 2024).

29. Chalkidis, I.; Fergadiotis, M.; Manginas, N.; Katakalou, E.; Malakasiotis, P. Regulatory compliance through Doc2Doc information retrieval: A case study in EU/UK legislation where text similarity has limitations. arXiv 2021, arXiv:2101.10726.

30. Kiyavitskaya, N.; Zeni, N.; Breaux, T.D.; Antón, A.I.; Cordy, J.R.; Mich, L.; Mylopoulos, J. Automating the extraction of rights and obligations for regulatory compliance. In Proceedings of the Conceptual Modeling-ER 2008: 27th International Conference on Conceptual Modeling, Barcelona, Spain, 20–24 October 2008; Proceedings 27. Springer: Berlin/Heidelberg, Germany, 2008; pp. 154–168.

31. Yujian, L.; Bo, L. A normalized Levenshtein distance metric. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1091–1095.

32. Graves, A. Sequence transduction with recurrent neural networks. arXiv 2012, arXiv:1211.3711.

33. Guo, J.; Tiwari, G.; Droppo, J.; Van Segbroeck, M.; Huang, C.W.; Stolcke, A.; Maas, R. Efficient minimum word error rate training of RNN-Transducer for end-to-end speech recognition. arXiv 2020, arXiv:2007.13802.

34. Rao, K.; Sak, H.; Prabhavalkar, R. Exploring architectures, data and units for streaming end-to-end speech recognition with rnn-transducer. In Proceedings of the 2017 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Okinawa, Japan, 16–20 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 193–199.

35. Bouma, G. Normalized (pointwise) mutual information in collocation extraction. Proc. GSCL 2009, 30, 31–40.

36. Řehůřek, R.; Sojka, P. Software Framework for Topic Modelling with Large Corpora. In Proceedings of the LREC 2010 Workshop on New Challenges for NLP Frameworks, Valletta, Malta, 22 May 2010; pp. 45–50. Available online: http://is.muni.cz/publication/884893/en (accessed on 7 August 2025).

37. Agirre, E.; Banea, C.; Cardie, C.; Cer, D.; Diab, M.; Gonzalez-Agirre, A.; Guo, W.; Mihalcea, R.; Rigau, G.; Wiebe, J. Semeval-2014 task 10: Multilingual semantic textual similarity. In Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014), Dublin, Ireland, 23–24 August 2014; pp. 81–91.

38. Boteva, V.; Gholipour, D.; Sokolov, A.; Riezler, S. A Full-Text Learning to Rank Dataset for Medical Information Retrieval. In Proceedings of the 38th European Conference on Information Retrieval, Padua, Italy, 20–23 March 2016; Available online: http://www.cl.uni-heidelberg.de/~riezler/publications/papers/ECIR2016.pdf (accessed on 7 August 2025).

39. Thakur, N.; Reimers, N.; Rücklé, A.; Srivastava, A.; Gurevych, I. BEIR: A Heterogeneous Benchmark for Zero-shot Evaluation of Information Retrieval Models. In Proceedings of the Thirty-Fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 2), Online, 6–14 December 2021; Available online: https://openreview.net/forum?id=wCu6T5xFjeJ (accessed on 7 August 2025).

40. Robertson, S.; Zaragoza, H. The probabilistic relevance framework: BM25 and beyond. Found. Trends® Inf. Retr. 2009, 3, 333–389.

41. Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

42. Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global Vectors for Word Representation. 2014. Available online: https://nlp.stanford.edu/projects/glove/ (accessed on 7 August 2025).

43. Wang, W.; Wei, F.; Dong, L.; Bao, H.; Yang, N.; Zhou, M. MiniLM: Deep Self-Attention Distillation for Task-Agnostic Compression of Pre-Trained Transformers. arXiv 2020, arXiv:2002.10957.

44. Williams, C. Tradition and Change in Legal English: Verbal Constructions in Prescriptive Texts; Peter Lang: Lausanne, Switzerland, 2007; Volume 20.

45. Haigh, R. Legal English; Routledge: London, UK, 2018.

46. Chalkidis, I.; Fergadiotis, M.; Malakasiotis, P.; Aletras, N.; Androutsopoulos, I. LEGAL-BERT: The Muppets straight out of Law School. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; pp. 2898–2904.

47. Hofstätter, S.; Lin, S.C.; Yang, J.H.; Lin, J.; Hanbury, A. Efficiently Teaching an Effective Dense Retriever with Balanced Topic Aware Sampling. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 11–15 July 2021.

48. Qin, C.; Zhang, A.; Zhang, Z.; Chen, J.; Yasunaga, M.; Yang, D. Is ChatGPT a general-purpose natural language processing task solver? arXiv 2023, arXiv:2302.06476.

49. Wang, J.; Liang, Y.; Meng, F.; Shi, H.; Li, Z.; Xu, J.; Qu, J.; Zhou, J. Is chatgpt a good nlg evaluator? a preliminary study. arXiv 2023, arXiv:2303.04048.

50. Törnberg, P. Chatgpt-4 outperforms experts and crowd workers in annotating political twitter messages with zero-shot learning. arXiv 2023, arXiv:2304.06588.

51. Cegin, J.; Simko, J.; Brusilovsky, P. ChatGPT to Replace Crowdsourcing of Paraphrases for Intent Classification: Higher Diversity and Comparable Model Robustness. arXiv 2023, arXiv:2305.12947.

52. Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744.

53. Liu, F.; Jiao, Y.; Massiah, J.; Yilmaz, E.; Havrylov, S. Trans-encoder: Unsupervised sentence-pair modelling through self-and mutual-distillations. arXiv 2022, arXiv:2109.13059.

54. Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108.

55. Carlsson, F.; Gyllensten, A.C.; Gogoulou, E.; Hellqvist, E.Y.; Sahlgren, M. Semantic re-tuning with contrastive tension. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

56. Sanders, T. Customizing Embeddings, 2023. OpenAI. Available online: https://github.com/openai/openai-cookbook/blob/main/examples/Customizing_embeddings.ipynb (accessed on 7 August 2025).

| Model | Transcript |
|---|---|
| Reference | So my lady um it is difficult to… |
| AWS ASR | So melody um it is difficult to… |
| Reference | All rise … |
| AWS ASR | All right … |
| Reference | it makes further financial order |
| AWS ASR | it makes further five natural |

| Model | MAP@5 | Recall@5 | MAP@10 | Recall@10 | MAP@15 | Recall@15 |
|---|---|---|---|---|---|---|
| GPT | 0.691 | 0.391 | 0.622 | 0.657 | 0.711 | 0.914 |
| Entailment | 0.615 | 0.348 | 0.568 | 0.611 | 0.66 | 0.885 |
| Glove | 0.526 | 0.316 | 0.506 | 0.602 | 0.607 | 0.884 |
| BM25 | 0.655 | 0.377 | 0.612 | 0.659 | 0.698 | 0.902 |
| Asymmetric | 0.602 | 0.347 | 0.553 | 0.619 | 0.664 | 0.908 |
| LegalBert | 0.557 | 0.326 | 0.531 | 0.613 | 0.632 | 0.896 |

| Entity | AWS base | Whisper | CLM2 + Vocab |
|---|---|---|---|
| Judge | 0.66 | 0.77 | 0.84 |
| Case name | 0.69 | 0.85 | 0.71 |
| Court | 0.98 | 1 | 0.93 |
| Provision | 0.88 | 0.95 | 0.97 |
| Cardinal | 1 | 0.97 | 1 |

| Model | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| GPT-3(-) | 0.69 | 0.84 | 0.64 | 0.73 |
| GPT-3(+) | 0.78 | 0.85 | 0.75 | 0.80 |
| GPT-3(+) + cos_sim | 0.83 | 0.91 | 0.79 | 0.85 |
| GPT-3 Customised(+) | 0.83 | 0.84 | 0.83 | 0.83 |
| GPT-3 Customised(+) + cos_sim | 0.85 | 0.85 | 0.84 | 0.85 |
| Cross-encoder(-) | 0.69 | 0.61 | 0.93 | 0.74 |
| Cross-encoder(+) | 0.81 | 0.79 | 0.84 | 0.81 |
| CT with in-batch negatives | 0.69 | 0.63 | 0.90 | 0.74 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Saadany, H.; Orăsan, C.; Breslin, C.; Barczentewicz, M.; Walker, S. Employing AI for Better Access to Justice: An Automatic Text-to-Video Linking Tool for UK Supreme Court Hearings. Appl. Sci. 2025, 15, 9205. https://doi.org/10.3390/app15169205
