Predicting Student Dropout from Day One: XGBoost-Based Early Warning System Using Pre-Enrollment Data

Abstract

Featured Application

1. Introduction

2. Theoretical Foundations and Literature Review

3. Materials and Methods

3.1. Business Understanding

3.2. Data Understanding

3.3. Data Preparation

3.4. Modeling

3.4.1. Model Selection

- Logistic Regression: 0.6752 ± 0.0124

- Random Forest: 0.6842 ± 0.0131

- LightGBM: 0.6882 ± 0.0090

- XGBoost: 0.6892 ± 0.0100
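The four scores above are reported as a mean plus or minus a spread, and the gaps between models are small relative to that spread. The following minimal sketch uses only the values listed above to make the comparison explicit. This section does not state the metric name or the number of repetitions, so the code assumes nothing about them and treats the second number simply as a "spread".

```python
# Reported scores from the list above: model -> (mean, spread).
reported = {
    "Logistic Regression": (0.6752, 0.0124),
    "Random Forest": (0.6842, 0.0131),
    "LightGBM": (0.6882, 0.0090),
    "XGBoost": (0.6892, 0.0100),
}

# Rank models by mean score, best first.
ranking = sorted(reported, key=lambda name: reported[name][0], reverse=True)
best = ranking[0]
best_mean, _ = reported[best]

# For each other model, check whether its gap to the best model is smaller
# than its own reported spread (a rough heuristic, not a significance test).
within_spread = {}
for name in ranking[1:]:
    mean, spread = reported[name]
    gap = best_mean - mean
    within_spread[name] = gap < spread
    print(f"{best} vs {name}: gap = {gap:.4f}, within spread = {within_spread[name]}")
```

On these numbers, only Logistic Regression trails the top model by more than its own spread. The three tree-based ensembles are not clearly separated by this heuristic.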

- Random Forest: AUC-ROC = 0.7055, Accuracy = 64%, MCC = 0.27. Dropout class: Precision = 0.42, Recall = 0.65, F1-score = 0.51, with 1276 true positives.

- LightGBM: AUC-ROC = 0.7023, Accuracy = 64%, MCC = 0.26. Dropout class: Precision = 0.42, Recall = 0.67, F1-score = 0.51, with 1312 true positives.

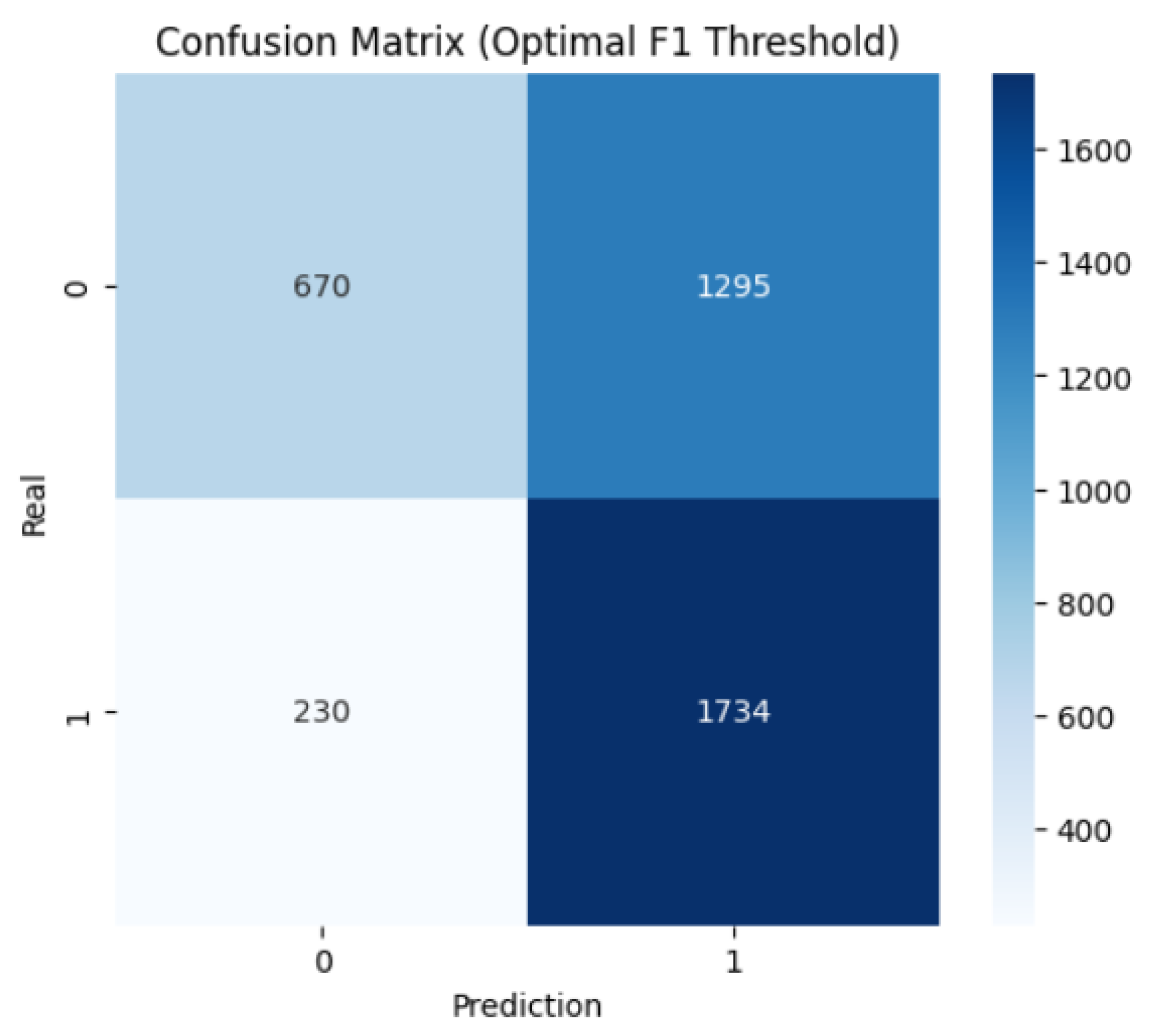

- XGBoost: AUC-ROC = 0.7018, Accuracy = 60%, MCC = 0.22. Dropout class: Precision = 0.34, Recall = 0.91, F1-score = 0.50, with 1788 true positives.
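Apart from AUC-ROC, the figures quoted for each model all derive from the four cells of a confusion matrix. As a reminder of how they relate, here is a minimal sketch using the standard definitions. The counts in the example are hypothetical, chosen to keep the arithmetic easy to follow, and are not taken from the study.

```python
from math import sqrt


def dropout_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard metrics for the positive (dropout) class.

    Assumes every denominator is non-zero, i.e. both classes occur and
    the model predicts both classes at least once.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # Matthews correlation coefficient: uses all four cells.
    mcc = (tp * tn - fp * fn) / sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
        "mcc": mcc,
    }


# Hypothetical counts, not from the study.
example = dropout_metrics(tp=8, fp=2, fn=2, tn=8)
print(example)
```

High recall paired with low precision, as in the XGBoost entry, corresponds to few false negatives and many false positives in this table of counts.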

3.4.2. Model Training and Tuning

- 200 trees;

- maximum depth of 7;

- learning rate of 0.05;

- 80% subsampling for both rows and features per tree.
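The settings listed above can be written down as a configuration dictionary. The sketch below uses the parameter names of XGBoost's scikit-learn-style interface. Mapping "80% subsampling for both rows and features per tree" to `subsample` and `colsample_bytree` is an assumption, as is the use of that interface at all; the section does not say how the model was configured in code.

```python
# Hyperparameters from the list above, keyed by XGBoost's
# scikit-learn-style parameter names (the mapping is an assumption).
xgb_params = {
    "n_estimators": 200,      # 200 trees
    "max_depth": 7,           # maximum depth of 7
    "learning_rate": 0.05,    # learning rate of 0.05
    "subsample": 0.8,         # 80% of rows sampled per tree
    "colsample_bytree": 0.8,  # 80% of features sampled per tree
}

for name, value in xgb_params.items():
    print(f"{name} = {value}")
```

If the `xgboost` package is installed, a dictionary like this could be passed as `XGBClassifier(**xgb_params)`. Any class-weighting or decision-threshold settings would need to be added separately, since none are listed here.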

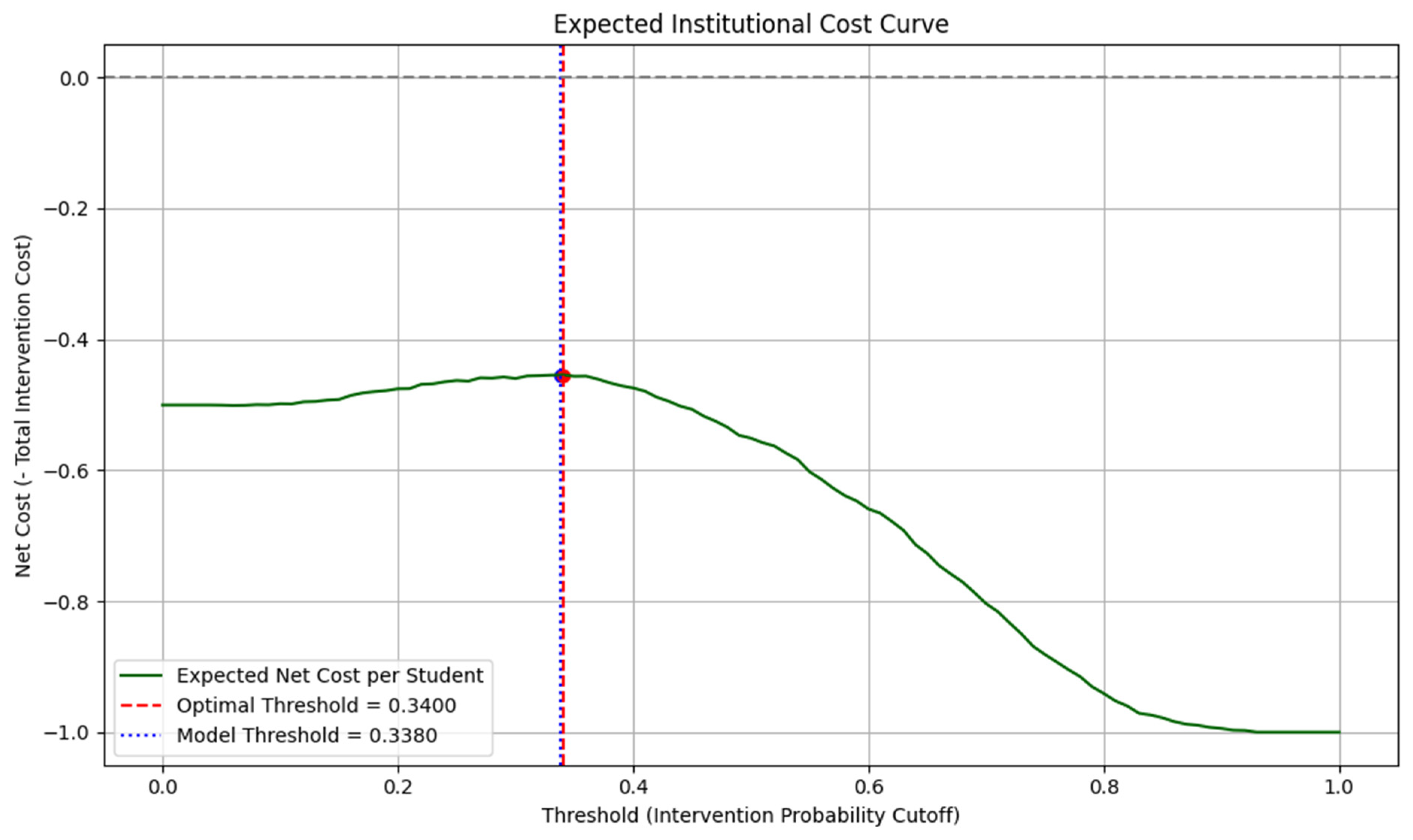

3.5. Evaluation

3.6. Model Deployment

3.7. Ethical Considerations and Availability

4. Results

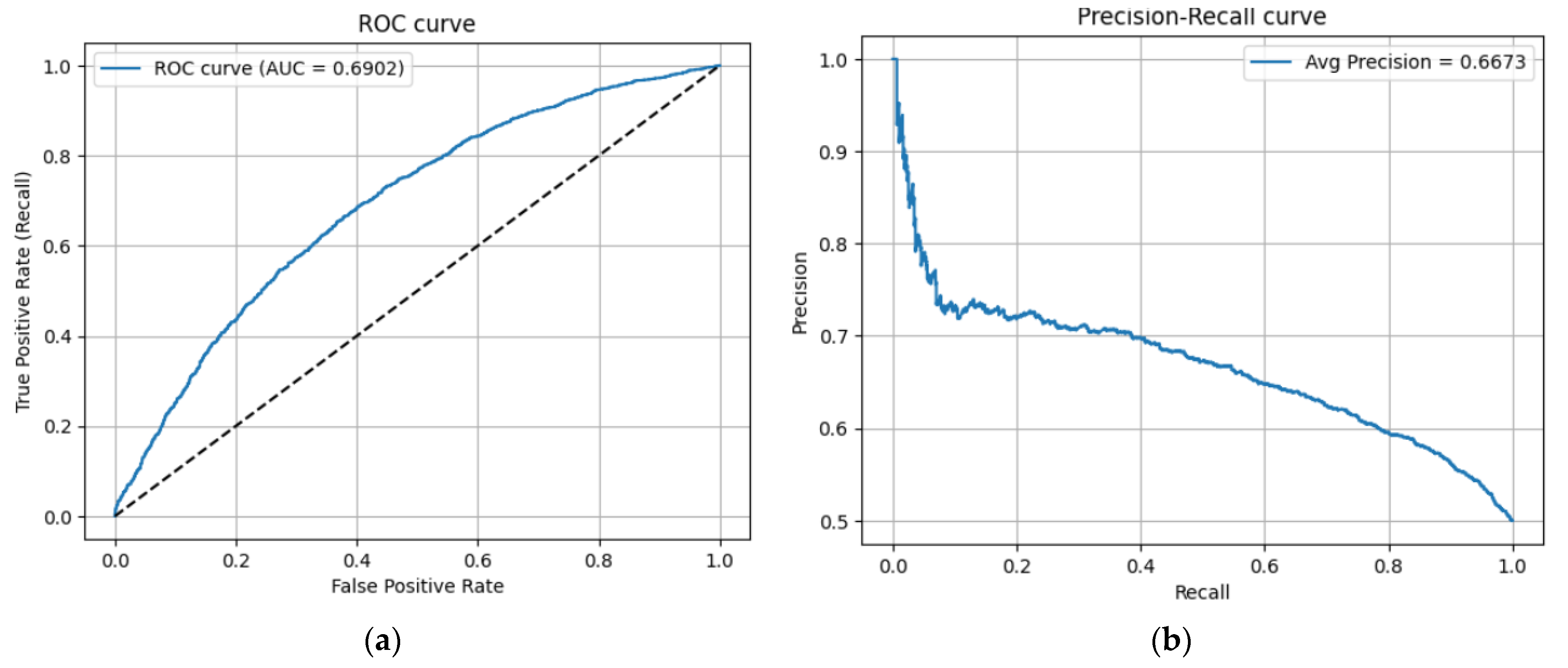

4.1. Model Performance

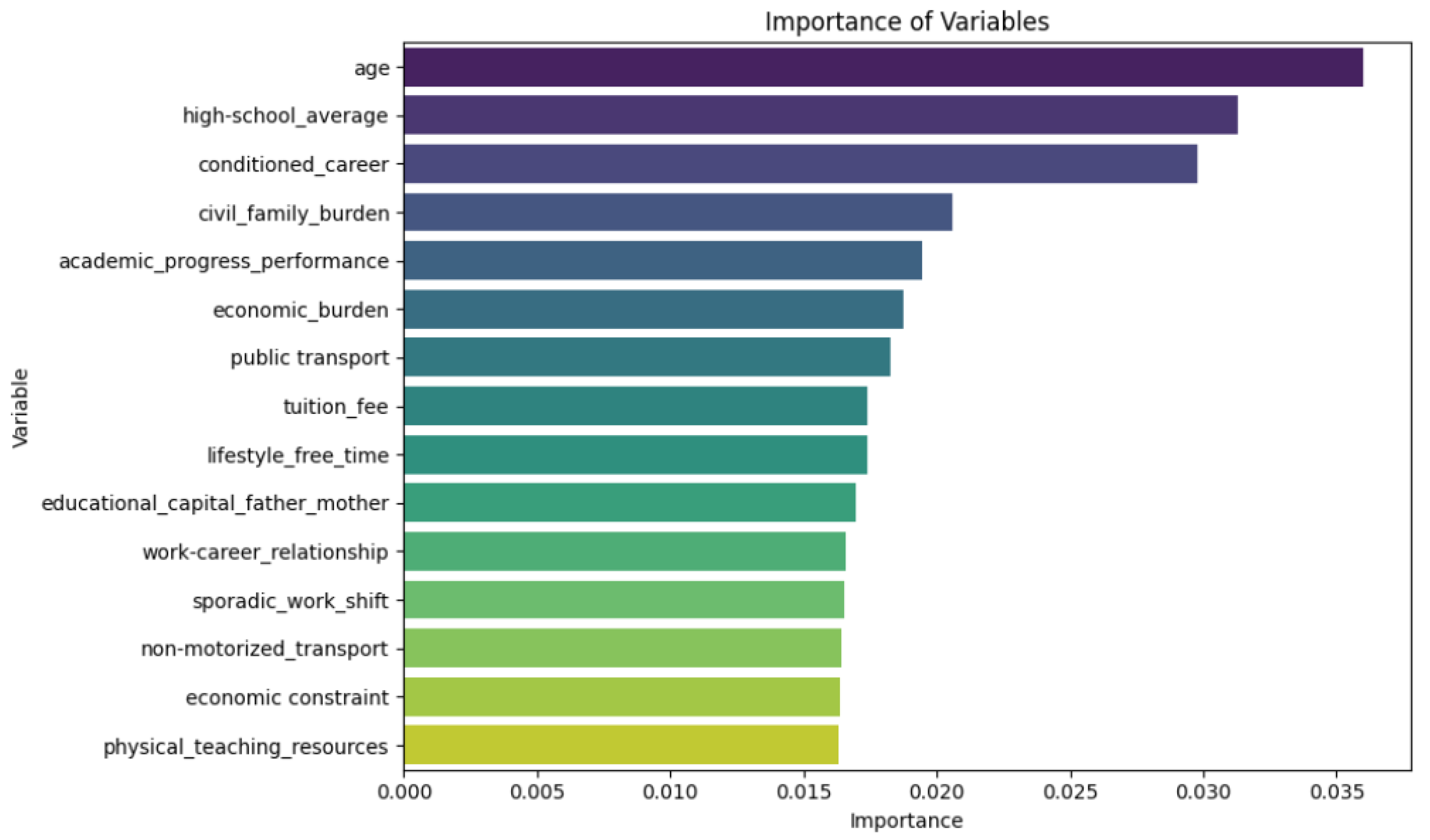

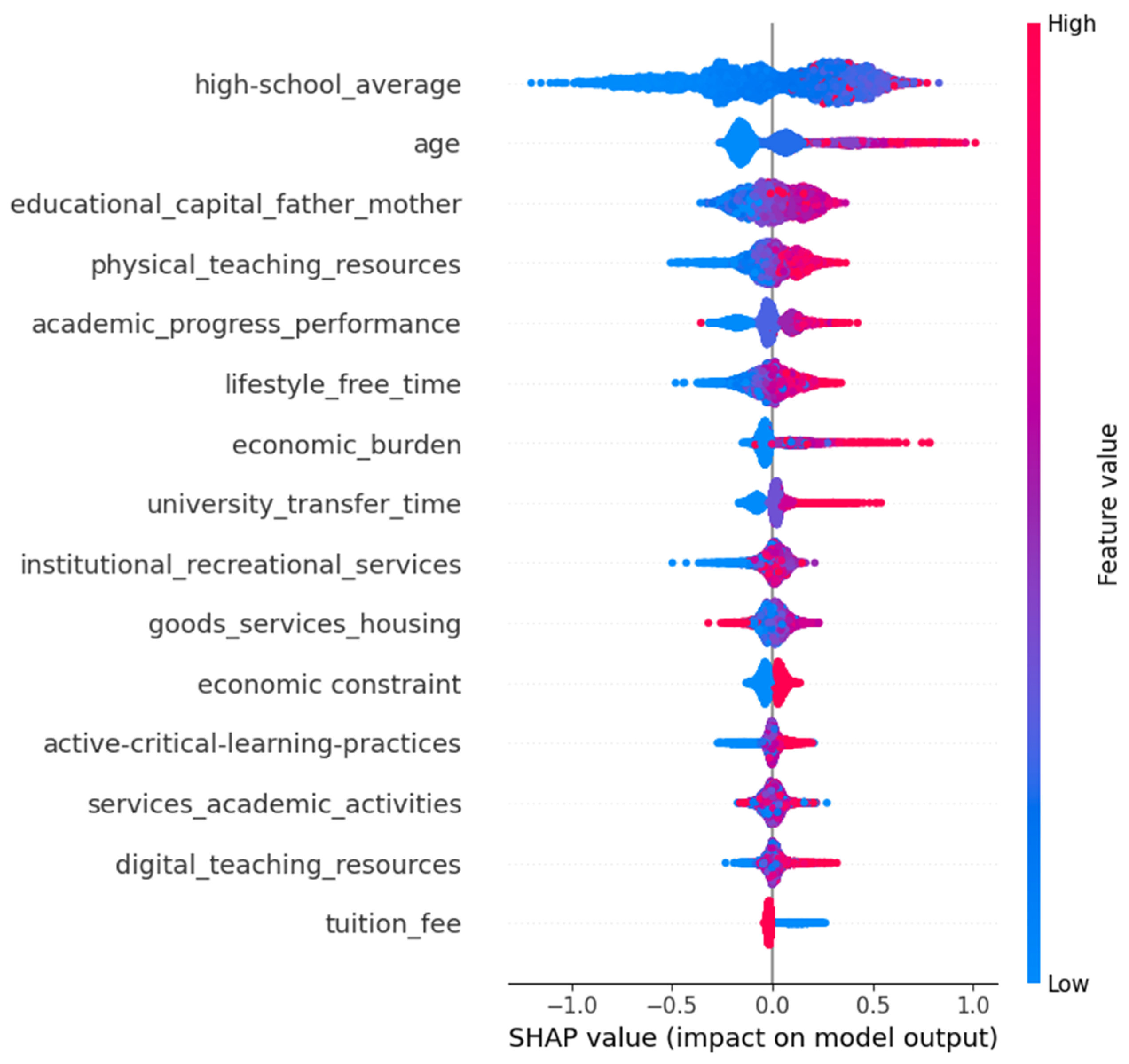

4.2. Predictor Importance

4.3. Prospective Application to New Cohorts

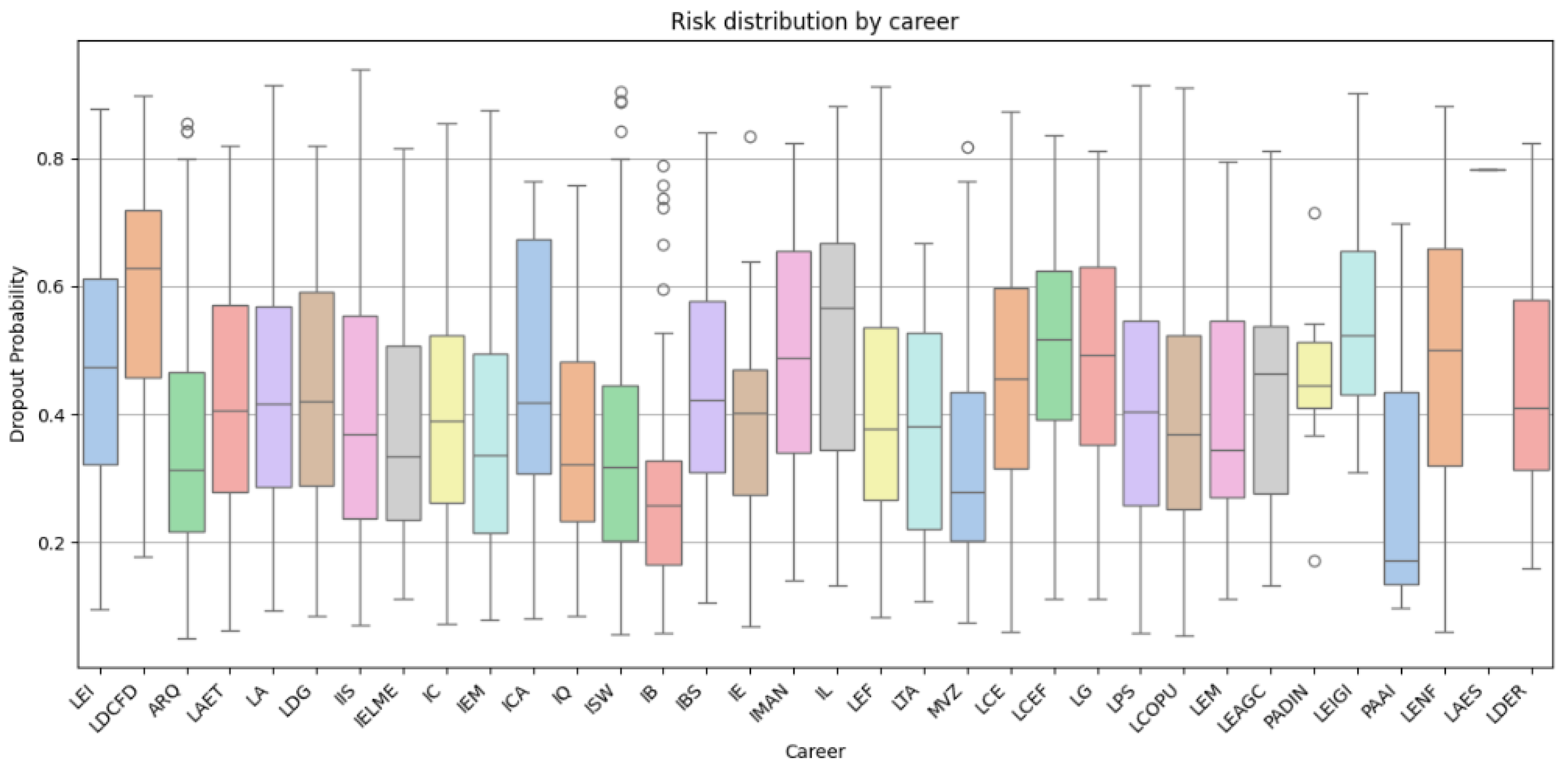

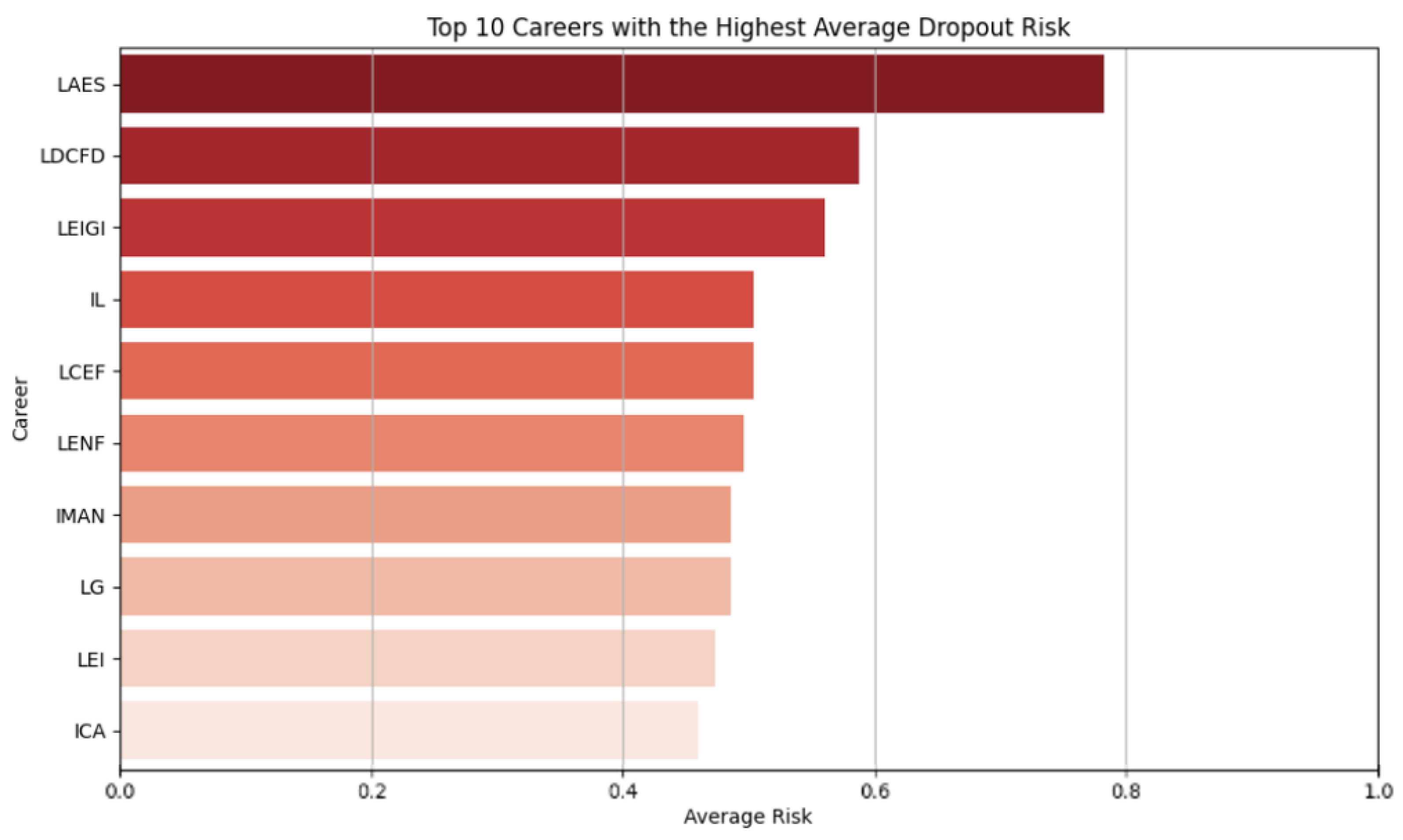

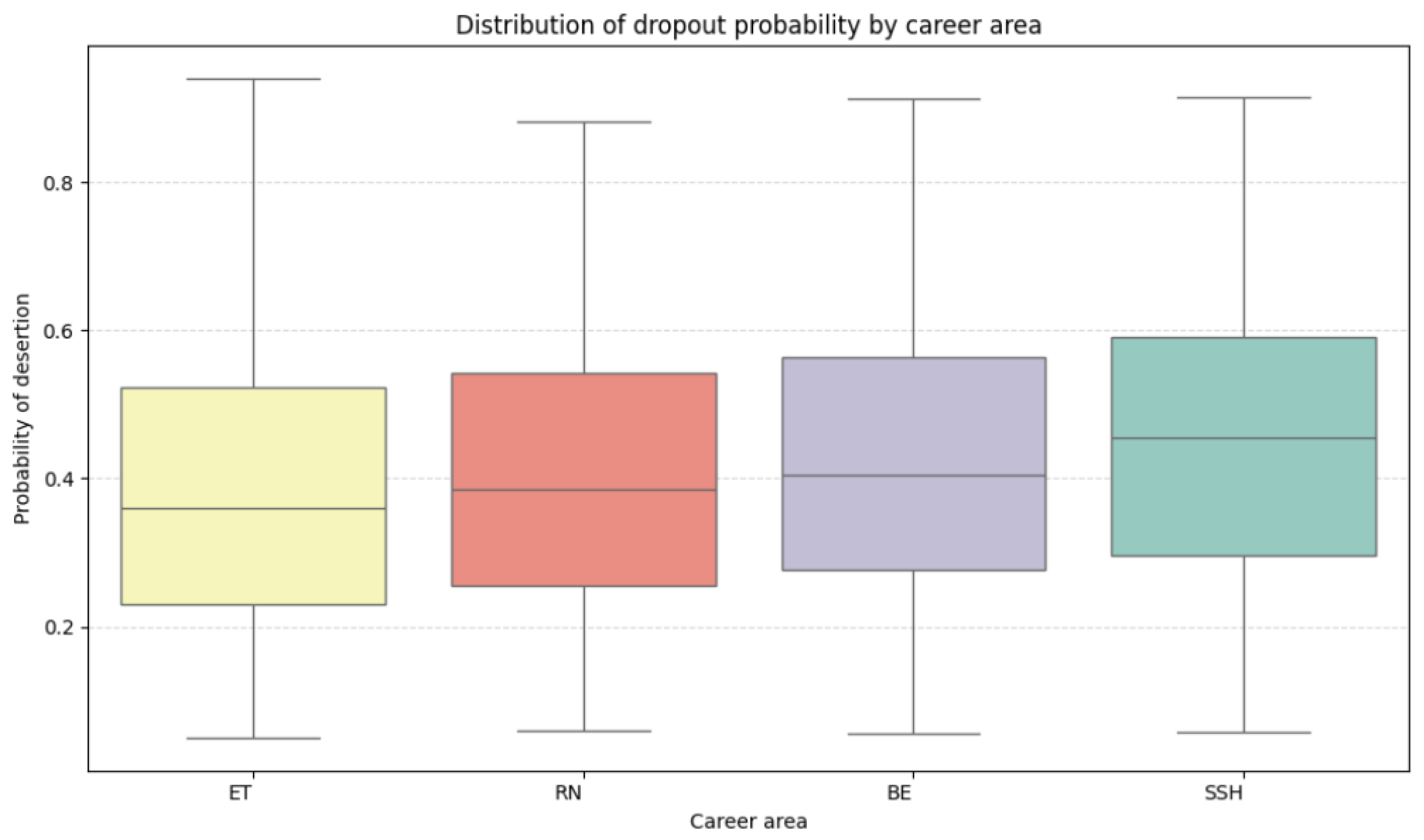

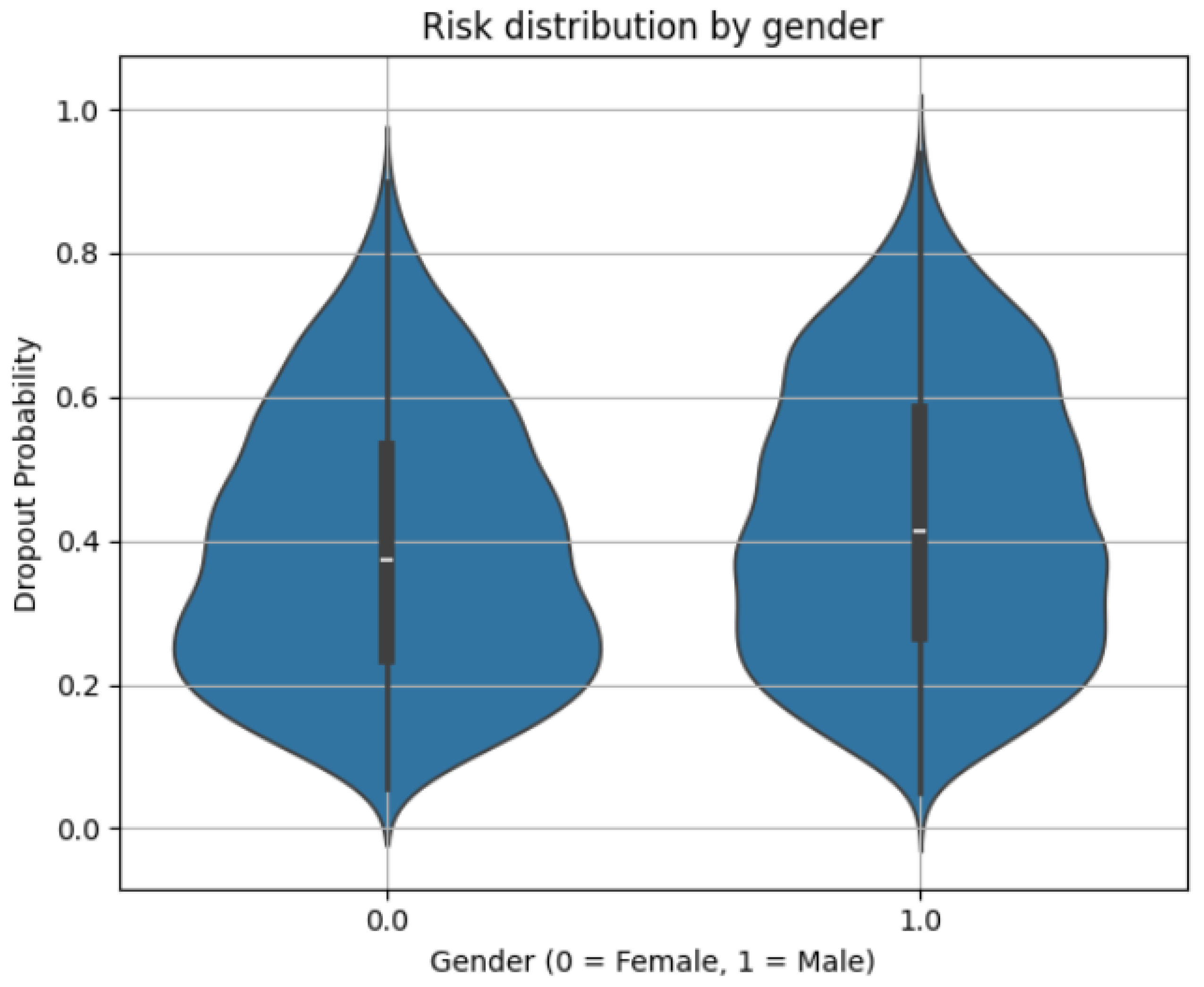

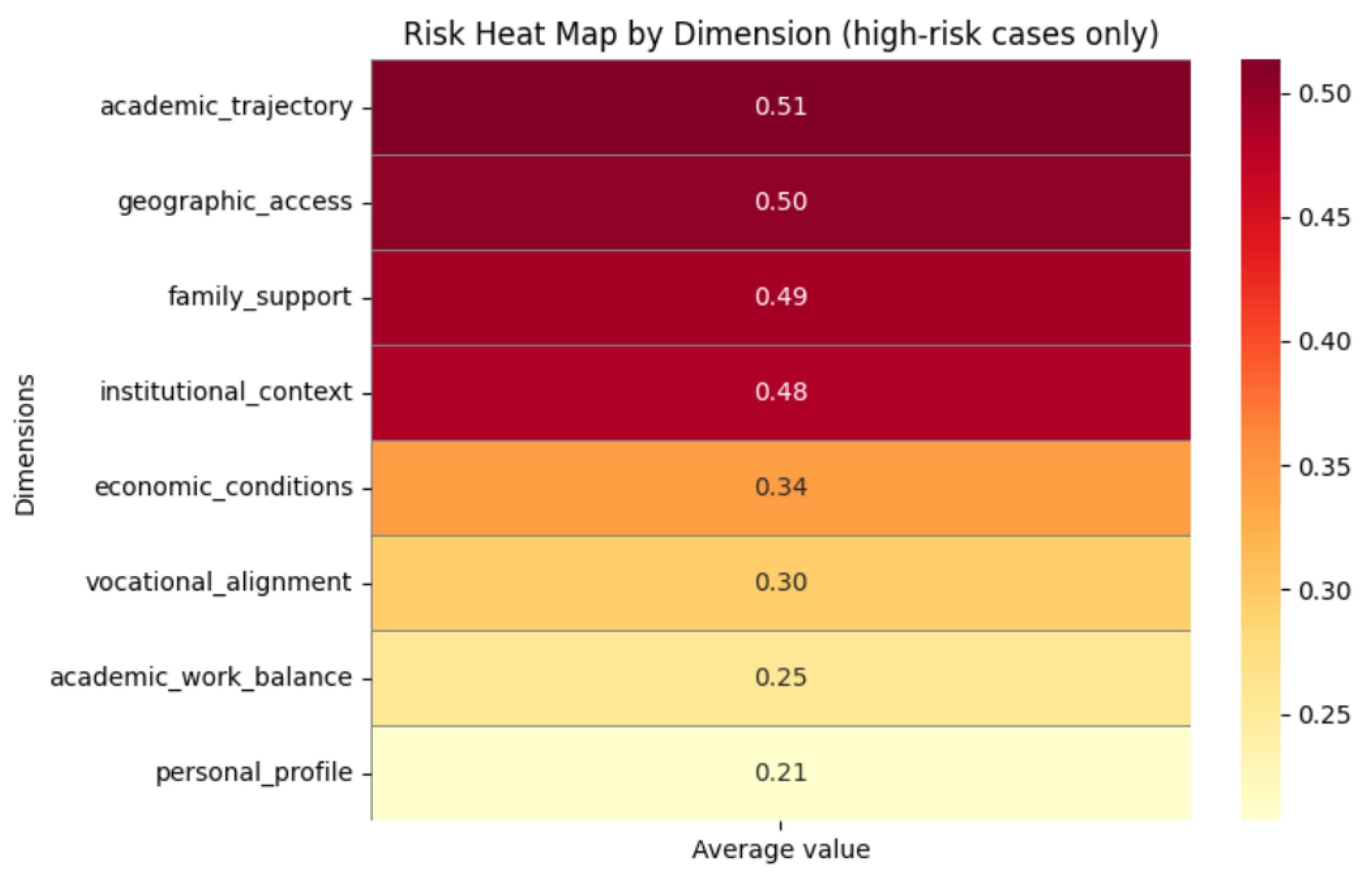

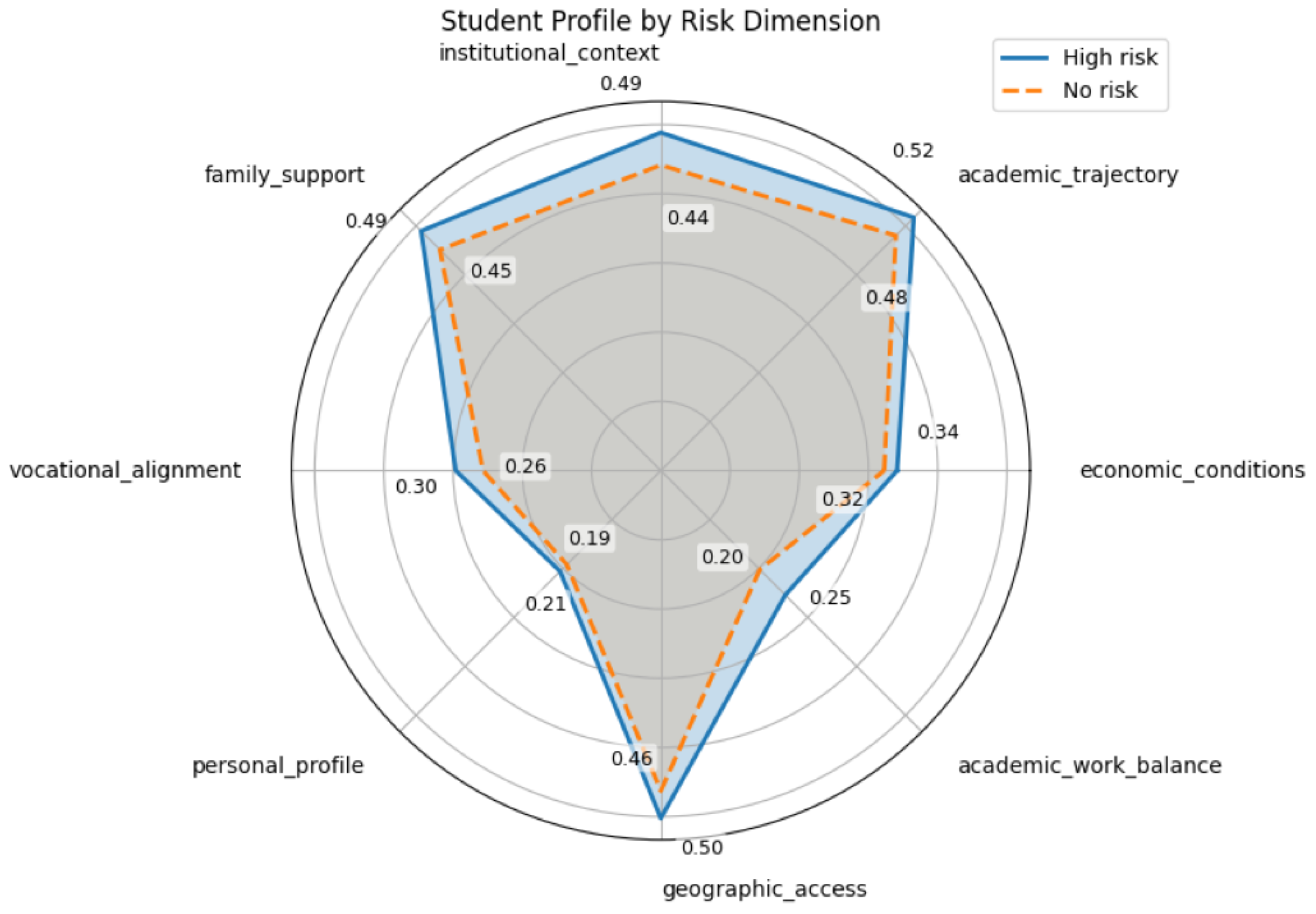

4.4. Risk Dimension Analysis

5. Discussion

5.1. Interpretation of Findings

5.2. Research Limitations

5.3. Practical Implications and Future Research

5.4. Ethical Considerations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Program |

|---|---|

| ARQ | Bachelor’s in Architecture |

| IB | Biotechnology Engineering |

| IBS | Biosystems Engineering |

| IC | Civil Engineering |

| ICA | Environmental Science Engineering |

| IELME | Electrical and Electronics Engineering |

| IEM | Electromechanical Engineering |

| IEM | Electronics Engineering |

| IIS | Industrial and Systems Engineering |

| IL | Logistics Engineering |

| IMAN | Manufacturing Engineering |

| IQ | Chemical Engineering |

| ISW | Software Engineering |

| LA | Bachelor’s in Business Administration |

| LAES | Bachelor’s in Strategic Management |

| LAET | Bachelor’s in Tourism Business Administration |

| LCE | Bachelor’s in Educational Sciences |

| LCEF | Bachelor’s in Exercise and Physical Sciences |

| LCP | Bachelor’s in Public Accounting |

| LDCFD | Bachelor’s in Physical Activity and Sport Management |

| LDER | Bachelor’s in Law |

| LDG | Bachelor’s in Graphic Design |

| LEAGC | Bachelor’s in Arts Education and Cultural Management |

| LEF | Bachelor’s in Economics and Finance |

| LEGI | Bachelor’s in Early Education and Institutional Management |

| LEI | Bachelor’s in Early Childhood Education |

| LEM | Bachelor’s in Marketing |

| LENF | Bachelor’s in Nursing |

| LG | Bachelor’s in Gastronomy |

| LGDA | Bachelor’s in Arts Management and Development |

| LPS | Bachelor’s in Psychology |

| LTA | Bachelor’s in Food Technology |

| MVZ | Veterinary Medicine and Animal Science |

| PAAI | Associate Degree in Industrial Automation |

| PADIN | Associate Degree in Child Development |

Appendix A

| Dimension | Description | Theoretical Foundation | Variables |

|---|---|---|---|

| Economic Conditions | Assesses financial burden, economic independence, and perceived resource sufficiency. | Bean & Metzner (external factors), Bourdieu (economic capital), Latin American dropout studies | 01–12 |

| Academic Trajectory | Captures prior academic performance, perceived academic limitations, and admission conditions. | Tinto (pre-entry attributes), EDM literature on academic history | 13–19 |

| Academic Experience and Teaching Quality | Measures classroom dynamics, teaching quality, and access to academic support resources. | Tinto (academic integration), Kerby (institutional experience) | 20–26 |

| Institutional Context and Perception | Includes prior educational background, modality, and perception of institutional prestige and support. | Tinto (institutional fit), Bourdieu (symbolic capital), EDM on student satisfaction | 27–33 |

| Family Support and Sociocultural Capital | Reflects family stability, parental education, involvement, and household environment. | Bourdieu (social/cultural capital), Bean & Metzner (external environment) | 34–41 |

| Vocational Alignment and Decision Clarity | Captures motivations, expectations, and emotional alignment with the chosen career. | Kerby (adaptation), Bean & Metzner (goal commitment), Latin American research on vocational fit | 42–47 |

| Personal Profile and Vulnerability | Includes health status, personal limitations, habits, age, and gender as potential risk factors. | Kerby (adaptive level), equity and risk literature, health and dropout links | 48–55 |

| Geographic Access | Analyzes physical barriers to attending university, including transport and distance. | Bean & Metzner (environmental variables), access literature | 56–60 |

| Academic–Work Balance | Examines the student’s work schedules and their alignment with academic responsibilities. | Bean & Metzner (working students), research on time conflict and fatigue | 61–69 |
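The variable ranges in the table above define a simple lookup from a variable number to its risk dimension. A minimal sketch of that lookup follows, with names and ranges copied from the table. The function name `dimension_of` is illustrative and not part of the study.

```python
# (dimension name, first variable number, last variable number),
# copied from the table above.
DIMENSIONS = [
    ("Economic Conditions", 1, 12),
    ("Academic Trajectory", 13, 19),
    ("Academic Experience and Teaching Quality", 20, 26),
    ("Institutional Context and Perception", 27, 33),
    ("Family Support and Sociocultural Capital", 34, 41),
    ("Vocational Alignment and Decision Clarity", 42, 47),
    ("Personal Profile and Vulnerability", 48, 55),
    ("Geographic Access", 56, 60),
    ("Academic–Work Balance", 61, 69),
]


def dimension_of(variable_number: int) -> str:
    """Return the dimension whose range contains the given variable number."""
    for name, first, last in DIMENSIONS:
        if first <= variable_number <= last:
            return name
    raise ValueError(f"variable number {variable_number} is outside 1-69")


# The ranges are contiguous and cover 69 variables in total.
total_variables = sum(last - first + 1 for _, first, last in DIMENSIONS)
print(total_variables, dimension_of(13), dimension_of(60))
```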

| # | Variable | Description |

|---|---|---|

| 1 | property_type_housing | Structural indicator of socioeconomic status (type of housing ownership). |

| 2 | goods_services_housing | Access to basic services in the household (material infrastructure). |

| 3 | economic_burden | Direct financial pressure on the student or their family. |

| 4 | economic_restriction | Economic difficulties that limit access or persistence. |

| 5 | tuition_fee | Sources of tuition funding. |

| 6 | financial_independence | Degree of personal financial autonomy. |

| 7 | resources_academic_activities | Availability of academic resources at home. |

| 8 | accessibility | General financial accessibility to the university (transport, services, etc.). |

| 9 | job_opportunity | Employment expectations as a financial motivator. |

| 10 | economic_perception_itson_parents | Family opinion on the institutional financial situation. |

| 11 | perception_economic_terms | Personal perception of the economic benefits of studying. |

| 12 | economic constraint | Self-perception of finances as a constraint for studying. |

| 13 | high-school_average | Objective indicator of prior academic performance. |

| 14 | academic_progress_performance | History of academic lag (failed or delayed subjects). |

| 15 | habits_study_participation | Daily study behaviors and practices. |

| 16 | techniques_study_organization | Personal study organization strategies. |

| 17 | areas_reinforcement | Self-diagnosis of specific academic weaknesses. |

| 18 | academic_limitation | Explicit recognition of academic limitations. |

| 19 | conditioned_career | Admission status conditioned by academic risk. |

| 20 | passive teaching | Traditional teaching style centered on the instructor. |

| 21 | active-critical-learning-practices | Alternative teaching style based on active participation and critical thinking. |

| 22 | teaching_performance | Perceived quality of teaching staff. |

| 23 | academic_information_received | Clarity and usefulness of academic information provided. |

| 24 | services_academic_activities | Access to and quality of services complementing instruction. |

| 25 | physical_teaching_resources | Availability of physical resources (classrooms, equipment, materials). |

| 26 | digital_teaching_resources | Access to learning technologies and digital resources. |

| 27 | schooled_institution | Previous study modality (in-person). |

| 28 | previous_studies_open-line | Previous study modality (remote/online). |

| 29 | public_institution | Type of prior institution (public). |

| 30 | private_institution | Type of prior institution (private). |

| 31 | cultural_activities | Participation in institutional cultural integration activities. |

| 32 | prestige_quality | General perception of institutional prestige and quality. |

| 33 | institutional_recreational_services | Access to and use of university recreational services. |

| 34 | lifestyle_free_time | Personal use of free time and study–recreation balance. |

| 35 | educational_capital_father_mother | Parents’ educational level: structural cultural capital. |

| 36 | housing_company | Living arrangement (with parents/guardians): indicator of structure and family support. |

| 37 | academic_parental_involvement | Parental academic involvement (monitoring, help, supervision). |

| 38 | educational_parental_assessment | Parents’ valuation of education: expectations and educational beliefs. |

| 39 | civil_family_burden | Civil family burden (marriage, children, direct family responsibilities). |

| 40 | social_environment_community | Perception of the social environment in the university community: openness and integration. |

| 41 | influence_outsiders | Influence of external people (friends, acquaintances) on educational decisions. |

| 42 | vocational_influence | Influence of genuine vocational motives in career choice. |

| 44 | expectation_professional_prestige | Career choice influenced by status or job prestige expectations. |

| 45 | single_option | Perception of not having had real options in choosing a career. |

| 46 | uncertainty_election | Regret, confusion, or lack of clarity about career choice. |

| 47 | career_satisfaction | Level of satisfaction with chosen career at entry. |

| 48 | gender | Basic sociodemographic variable with potential differential effects. |

| 49 | age | May reflect maturity, lag, or non-traditional status. |

| 50 | health_condition_special_needs | Health limitations or special needs. |

| 51 | personal_limitation | Non-medical personal barriers (family, psychological, etc.). |

| 52 | intensity_tobacco_alcohol_consumption | Consumption habits as a risk factor. |

| 53 | non_drinker | Possible indicator of self-care or healthy habits. |

| 54 | practice_sport | Physical activity as a wellness indicator. |

| 55 | desire_work_experience | Willingness toward independence and personal development. |

| 56 | distance_campus | Physical distance between home and campus; direct impact on accessibility. |

| 57 | university_transfer_time | Actual commuting time; reflects daily logistical barriers. |

| 58 | public transport | Access or reliance on collective transport; common among low-resource students. |

| 59 | private_motorized_transport | Availability of car/motorbike; associated with greater autonomy. |

| 60 | non-motorized_transport | Walking or biking; reflects proximity or lack of other means. |

| 61 | work-career_relationship | Evaluates match between current job and career; key to analyzing conflict or synergy. |

| 62 | public_institutional_labor_scope | Intention to work in the public/institutional sector; may align better with academic contexts. |

| 63 | work_field_private_entrepreneur | Intention to work in the private/informal sector; usually less compatible with academic life. |

| 64 | morning_work_shift | Morning shift; may interfere with classes. |

| 65 | evening_work_shift | Evening shift; may impact extracurriculars or study. |

| 66 | night_shift_work | Night shift; associated with fatigue and schedule disruption. |

| 67 | mixed_work_shift | Irregular or mixed shifts; reflects instability. |

| 68 | weekend_work_shift | Weekend work; reduces rest and study time. |

| 69 | sporadic_work_shift | Sporadic or irregular work; signals precariousness or flexibility. |

Appendix B. Sensitivity Analysis of Self-Reported Variables

| Variable | AUC Drop | F1 Drop |

|---|---|---|

| tuition_fee | 0.0021 | 0.0054 |

| financial_independence | 0.0019 | 0.0032 |

| gender | 0.0017 | 0.0007 |

| high-school_average | 0.0014 | 0.0015 |

| university_transfer_time | 0.0014 | −0.0004 |

| economic_burden | 0.0013 | 0.0009 |

| resources_academic_activities | 0.0013 | 0.0006 |

| age | 0.0009 | 0.0017 |

| conditioned_career | 0.0008 | 0.0001 |

| distance_campus | 0.0008 | −0.0006 |

| lifestyle_free_time | 0.0007 | 0.0031 |

| goods_services_housing | 0.0007 | −0.0001 |

| institutional_recreational_services | 0.0006 | 0.0026 |

| passive teaching | 0.0005 | 0.0029 |

| educational_capital_father_mother | 0.0005 | 0.0024 |

| techniques_study_organization | 0.0004 | 0 |

| economic constraint | 0.0004 | 0.0013 |

| expectation_professional_prestige | 0.0004 | 0.0004 |

| accessibility | 0.0004 | 0.0003 |

| health_condition_special_needs | 0.0003 | 0.0008 |

| private_motorized_transport | 0.0003 | 0.0009 |

| civil_family_burden | 0.0003 | −0.0002 |

| mixed_work_shift | 0.0003 | 0 |

| public_institutional_labor_scope | 0.0002 | 0.0014 |

| evening_work_shift | 0.0002 | −0.0001 |

| non-motorized_transport | 0.0002 | 0.0004 |

| previous_studies_open-line | 0.0002 | 0.0001 |

| private_institution | 0.0002 | 0 |

| practice_sport | 0.0001 | 0.0017 |

| social_environment_community | 0.0001 | 0.0007 |

| vocational_influence | 0.0001 | 0.0003 |

| academic_parental_involvement | 0.0001 | 0.0015 |

| night_shift_work | 0.0001 | 0 |

| academic_progress_performance | 0.0001 | 0.0011 |

| job_opportunity | 0 | −0.0003 |

| prestige_quality | 0 | 0.0021 |

| public_institution | 0 | −0.0003 |

| economic_perception_itson_parents | 0 | −0.0001 |

| perception_economic_terms | 0 | 0.001 |

| property_type_housing | 0 | 0 |

| non_drinker | 0 | 0 |

| personal_limitation | −0.0001 | −0.0003 |

| sporadic_work_shift | −0.0001 | 0 |

| desire_work_experience | −0.0001 | 0.0003 |

| single_option | −0.0001 | −0.0001 |

| services_academic_activities | −0.0001 | −0.0002 |

| schooled_institution | −0.0001 | −0.0004 |

| intensity_tobacco_alcohol_consumption | −0.0001 | −0.0001 |

| morning_work_shift | −0.0001 | 0.0002 |

| habits_study_participation | −0.0001 | −0.0008 |

| weekend_work_shift | −0.0001 | −0.0004 |

| economic_restriction | −0.0002 | −0.0002 |

| influence_outsiders | −0.0002 | 0.0017 |

| academic_limitation | −0.0002 | 0 |

| academic_information_received | −0.0002 | 0.0006 |

| physical_teaching_resources | −0.0002 | 0.0013 |

| career_satisfaction | −0.0003 | −0.0017 |

| cultural_activities | −0.0003 | 0.0006 |

| teaching_performance | −0.0003 | 0.0001 |

| housing_company | −0.0003 | 0 |

| educational_parental_assessment | −0.0003 | 0.0019 |

| work-career_relationship | −0.0004 | −0.0005 |

| work_field_private_entrepreneur | −0.0005 | 0.0007 |

| areas_reinforcement | −0.0005 | 0.0011 |

| active-critical-learning-practices | −0.0005 | 0.0001 |

| public transport | −0.0006 | −0.0002 |

| digital_teaching_resources | −0.0006 | 0.0011 |

| uncertainty_election | −0.0007 | 0.0008 |
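The table above reports, for each variable, how much AUC and F1 fall when that variable is perturbed. A negative value means the metric rose slightly. The appendix as shown here does not state how each variable was perturbed, so the sketch below is only one plausible reading: it neutralises a feature by replacing it with its mean and measures the change in AUC. The toy data and the "model" (which simply uses the feature as its score) are hypothetical.

```python
def auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: the feature itself acts as the model score.
labels = [0, 0, 1, 1]
feature = [0.1, 0.4, 0.35, 0.8]

baseline_auc = auc(labels, feature)

# Neutralise the feature: every student gets the column mean (an assumption
# about the perturbation; shuffling or dropping the column are alternatives).
mean_value = sum(feature) / len(feature)
neutralised = [mean_value] * len(feature)

auc_drop = baseline_auc - auc(labels, neutralised)
print(f"baseline AUC = {baseline_auc}, AUC drop = {auc_drop}")
```

In this toy case the single feature carries all the signal, so the drop is large. The drops in the table are orders of magnitude smaller, with the largest AUC drop being 0.0021.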

References

1. Heredia, R.; Carcausto-Calla, W. Factors Associated with Student Dropout in Latin American Universities: Scoping Review. J. Educ. Soc. Res. 2024, 14, 62.

2. Sandoval-Palis, I.; Naranjo, D.; Vidal, J.; Gilar-Corbí, R. Early Dropout Prediction Model: A Case Study of University Leveling Course Students. Sustainability 2020, 12, 9314.

3. Nurmalitasari; Long, Z.A.; Noor, M.F.M.; Xie, H. Factors Influencing Dropout Students in Higher Education. Educ. Res. Int. 2023, 2023, 7704142.

4. Aina, C.; Baici, E.; Casalone, G.; Pastore, F. The Determinants of University Dropout: A Review of the Socio-Economic Literature. Socio-Econ. Plan. Sci. 2022, 79, 101102.

5. Ranasinghe, K.; Fernando, T.; Vineeshiya, N.; Bozkurt, A. Identifying Reasons That Contribute to Dropout Rates in Open and Distance Learning. Int. Rev. Res. Open Distrib. Learn. 2025, 26, 162–183.

6. Orozco-Rodríguez, C.; Viegas, C.; Costa, A.; Lima, N.; Alves, G. Dropout Rate Model Analysis at an Engineering School. Educ. Sci. 2025, 15, 287.

7. Kemper, L.; Vorhoff, G.; Wigger, B. Predicting Student Dropout: A Machine Learning Approach. Eur. J. High. Educ. 2020, 10, 28–47.

8. Lakshmi, S.; Maheswaran, C. Effective Deep Learning Based Grade Prediction System Using Gated Recurrent Unit (GRU) with Feature Optimization Using Analysis of Variance (ANOVA). Automatika 2024, 65, 425–440.

9. Zapata-Giraldo, P.; Acevedo-Osorio, G. Desafíos y Perspectivas de los Sistemas Educativos en América Latina: Un Análisis Comparativo. Pedagog. Constellations 2024, 3, 89–101.

10. Gonzalez-Nucamendi, A.; Noguez, J.; Neri, L.; Robledo-Rella, V.; García-Castelán, R.M.G. Predictive Analytics Study to Determine Undergraduate Students at Risk of Dropout. Front. Educ. 2023, 8, 1244686.

11. Nimy, E.; Mosia, M.; Chibaya, C. Identifying At-Risk Students for Early Intervention—A Probabilistic Machine Learning Approach. Appl. Sci. 2023, 13, 3869.

12. Martins, M.; Baptista, L.; Machado, J.; Realinho, V. Multi-class phased prediction of academic performance and dropout in higher education. Appl. Sci. 2023, 13, 4702.

13. Vaarma, M.; Li, H. Predicting student dropouts with machine learning: An empirical study in Finnish higher education. Technol. Soc. 2024, 78, 102474.

14. Song, Z.; Sung, S.; Park, D.; Park, B. All-year dropout prediction modeling and analysis for university students. Appl. Sci. 2023, 13, 1143.

15. Ridwan, A.; Priyatno, A. Predict students’ dropout and academic success with XGBoost. J. Educ. Comput. Appl. 2024, 1, 1–8.

16. Glandorf, D.; Lee, H.; Orona, G.; Pumptow, M.; Yu, R.; Fischer, C. Temporal and between-group variability in college dropout prediction. In Proceedings of the 14th Learning Analytics and Knowledge Conference, Kyoto, Japan, 18–24 March 2024.

17. Berens, J.; Schneider, K.; Görtz, S.; Oster, S.; Burghoff, J. Early detection of students at risk—Predicting student dropouts using administrative student data and machine learning methods. SSRN Electron. J. 2018, 11, 7259.

18. Shynarbek, N.; Saparzhanov, Y.; Saduakassova, A.; Orynbassar, A.; Sagyndyk, N. Forecasting dropout in university based on students’ background profile data through automated machine learning approach. In Proceedings of the 2022 International Conference on Smart Information Systems and Technologies (SIST), Nur-Sultan, Kazakhstan, 28–30 April 2022.

19. Baranyi, M.; Nagy, M.; Molontay, R. Interpretable deep learning for university dropout prediction. In Proceedings of the 21st Annual Conference on Information Technology Education, New York, NY, USA, 7–9 October 2020.

20. Del Bonifro, F.; Gabbrielli, M.; Lisanti, G.; Zingaro, S. Student dropout prediction. In Artificial Intelligence in Education; Springer: Cham, Switzerland, 2020; Volume 12163, pp. 109–121.

21. Kabathova, J.; Drlík, M. Towards predicting student’s dropout in university courses using different machine learning techniques. Appl. Sci. 2021, 11, 3130.

22. Villar, A.; De Andrade, C. Supervised machine learning algorithms for predicting student dropout and academic success: A comparative study. Discov. Artif. Intell. 2024, 4, 2.

23. Putra, L.; Prasetya, D.; Mayadi, M. Student Dropout Prediction Using Random Forest and XGBoost Method. INTENSIF J. Ilm. Penelit. Penerapan Teknol. Sist. Inf. 2025, 9, 147–157.

24. Romsaiyud, W.; Nurarak, P.; Phiasai, T.; Chadakaew, M.; Chuenarom, N.; Aksorn, P.; Thammakij, A. Predictive Modeling of Student Dropout Using Intuitionistic Fuzzy Sets and XGBoost in Open University. In Proceedings of the 2024 7th International Conference on Machine Learning and Machine Intelligence (MLMI), Osaka, Japan, 2–4 August 2024.

25. Segura, M.; Mello, J.; Hernández, A. Machine Learning Prediction of University Student Dropout: Does Preference Play a Key Role? Mathematics 2022, 10, 3359.

26. Tinto, V. Dropout from Higher Education: A Theoretical Synthesis of Recent Research. Rev. Educ. Res. 1975, 45, 125–189.

27. Tinto, V. Limits of Theory and Practice in Student Attrition. J. High. Educ. 1982, 53, 687–700.

28. Tinto, V. Research and Practice of Student Retention: What Next? J. Coll. Stud. Retent. 2006, 8, 1–19.

29. Tinto, V. Through the Eyes of Students. J. Coll. Stud. Retent. 2017, 19, 254–269.

30. Bean, J.P.; Metzner, B.S. A Conceptual Model of Nontraditional Undergraduate Student Attrition. Rev. Educ. Res. 1985, 55, 485–540.

31. Bourdieu, P. Poder, Derecho y Clases Sociales, 2nd ed.; Desclée de Brouwer: Bilbao, Spain, 2001; Available online: https://erikafontanez.com/wp-content/uploads/2015/08/pierre-bourdieu-poder-derecho-y-clases-sociales.pdf (accessed on 23 May 2025).

32. Kerby, M.B. Toward a New Predictive Model of Student Retention in Higher Education: An Application of Classical Sociological Theory. J. Coll. Stud. Retent. 2015, 17, 138–161.

33. Elder, A.C. Holistic Factors Related to Student Persistence at a Large, Public University. J. Furth. High. Educ. 2020, 45, 65–78.

34. Mitra, S.; Zhang, Y. Factors Related to First-Year Retention Rate in a Public Minority Serving Institution with Nontraditional Students. J. Educ. Bus. 2021, 97, 312–319.

35. Franco, E.; Martínez, R.; Domínguez, V. Modelos Predictivos de Riesgo Académico en Carreras de Computación con Minería de Datos Educativos. RED Rev. Educ. Distancia. 2021, 21, 1–36.

36. Matheu-Pérez, A.; Ruff-Escobar, C.; Ruiz-Toledo, M.; Benites-Gutierrez, L.; Morong-Reyes, G. Modelo de Predicción de la Deserción Estudiantil de Primer Año en la Universidad Bernardo O’Higgins. Educ. Pesqui. 2018, 44, e172094.

37. Campbell, C.M.; Mislevy, J.L. Student Perceptions Matter: Early Signs of Undergraduate Student Retention/Attrition. J. Coll. Stud. Retent. 2013, 14, 467–493.

38. Hung, J.; Wang, M.; Wang, S.; Abdelrasoul, M.; Li, Y.; He, W. Identifying At-Risk Students for Early Interventions—A Time-Series Clustering Approach. IEEE Trans. Emerg. Top. Comput. 2017, 5, 45–55.

39. Hoffait, A.S.; Schyns, M. Early Detection of University Students with Potential Difficulties. Decis. Support Syst. 2017, 101, 1–11.

40. Marbouti, F.; Diefes-Dux, H.; Madhavan, K. Models for Early Prediction of At-Risk Students in a Course Using Standards-Based Grading. Comput. Educ. 2016, 103, 1–15.

41. Andrade-Girón, D.; Sandivar-Rosas, J.; Marín-Rodriguez, W.; Susanibar-Ramirez, E.; Toro-Dextre, E.; Ausejo-Sanchez, J.; Villarreal-Torres, H.; Angeles-Morales, J. Predicting student dropout based on machine learning and deep learning: A systematic review. EAI Endorsed Trans. Scalable Inf. Syst. 2023, 10, 1–10.

42. Bertola, G. University dropout problems and solutions. J. Econ. 2022, 138, 221–248.

43. Carruthers, C.; Dougherty, S.; Goldring, T.; Kreisman, D.; Theobald, R.; Urban, C.; Villero, J. Career and Technical Education Alignment Across Five States. AERA Open 2024, 10, n1.

44. Goulart, V.; Liboni, L.; Cezarino, L. Balancing skills in the digital transformation era: The future of jobs and the role of higher education. Ind. High. Educ. 2021, 36, 118–127.

45. Armstrong-Carter, E.; Panter, A.; Hutson, B.; Olson, E. A university-wide survey of caregiving students in the US: Individual differences and associations with emotional and academic adjustment. Humanit. Soc. Sci. Commun. 2022, 9, 300.

46. Buizza, C.; Bornatici, S.; Ferrari, C.; Sbravati, G.; Rainieri, G.; Cela, H.; Ghilardi, A. Who Are the Freshmen at Highest Risk of Dropping Out of University? Psychological and Educational Implications. Educ. Sci. 2024, 14, 1201.

| Study | Main Algorithm | Sample and Country | Academic Data | Model Performance | Key Predictors |

|---|---|---|---|---|---|

| [7] | Logistic Regression (LR), Decision Tree (DT) | 3176 (Germany) | Exam results from the first 3 semesters | Accuracy: 87–95%, Recall: 62–80% | Failed exams and average exam grades |

| [10] | Random Forest (RF) | 14,495 (Mexico) | Early grades, prior GPA and math scores | Recall > 50%, Acc > 78% | First-semester performance, entrance data |

| [11] | Probabilistic Logistic Regression (PLR) | 517 (South Africa) | Moodle grades, assessments | Acc: 63–93%, F1: 61–93% | Moodle activity, test scores, gender |

| [12] | RF, RusBoost, Easy Ensemble | 4433 (Portugal) | Admission and 1st–2nd sem. GPA | F1: 0.65–0.74 | Sem. GPA, age |

| [13] | CatBoost, Neural Networks (NNs), LR | 8813 (Finland) | Grades, Moodle activity | AUC: 0.84–0.85, F1: 56–59% | Credits, fails, LMS use |

| [14] | Light Gradient Boosting Machine (LightGBM) | 60,010 (South Korea) | GPA, attendance, aid data | Acc: 94%, F1: 0.79 | Fees, scholarships, year of entry |

| [15] | Extreme Gradient Boosting (XGBoost) | 4423 (Indonesia) | Sem. GPA, credits | Acc: 87%, F1: 0.81, Recall: 74% | Grades, credits, SES |

| [16] | RF | 33,133 (USA) | HS and college GPA, course data | AUC: 0.83–0.91, Acc: 90–94% | GPA, course completions |

| [17] | LR + NN + DT (AdaBoost) | 19,396 (Germany) | Sem. GPA, failed exams | Acc: 79–95% (sem. 1 to 4+) | GPA, credits, exam success |

| [18] | ANN, NB, DT, RF, SVM, kNN | 2066 (Kazakhstan) | Entrance exam and demographics | Acc: 77–84% | Test scores, gender, school type |

| [19] | Deep FCNN | 8319 (Hungary) | Enrollment attributes only | Acc: 72–77% | Years since HS, funding, math, gender |

| [20] | LR, ANN, RF | 15,000 (Italy) | HS academic records | Acc: 56–62%, Recall: 58–65% | HS GPA, school ID |

| [21] | ANN, NB, DT, SVM, RF, kNN | 261 (Slovakia) | Course views, assignments, tests | Acc: 77–93% | LMS usage, test and assignment scores |

| [22] | DT, SVM, RF, GB, XGB, CB, LB | 4424 (Portugal) | HS GPA, early credits | Acc/Recall: 85–90% | Approved units, scholarships |

| [23] | RF, XGBoost | 4424 (Indonesia) | Admission and academic history | Acc: ~79–81%, Recall: ~72% | Not specified |

| [24] | XGBoost | N/A (Thailand, open uni) | Degree structure and credits | Acc: 91%, AUC: 0.913 | Major code, credit needs, faculty ID |

| [25] | SVM, DT, ANN, LR, kNN | 428 (Spain) | Admission and first-semester academic results | Acc: 77–93%, F1 (kNN): 0.86 | Pass rate 1st sem, 1st sem GPA, admission preference |

| Cohort | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | 2024 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Students | 3726 | 4335 | 4004 | 3949 | 4069 | 4983 | 4433 | 787 | 3964 | 3938 | 744 | 38,932 |

| Model | AUC-ROC | Accuracy | Recall (1) | Precision (1) | F1-Score (1) | MCC | Balanced Accuracy |

|---|---|---|---|---|---|---|---|

| Logistic Regression | 0.690 | 0.65 | 0.62 | 0.43 | 0.51 | — | — |

| Random Forest | 0.703 | 0.65 | 0.65 | 0.43 | 0.52 | — | — |

| Random Forest (Optim.) | 0.705 | 0.65 | 0.66 | 0.42 | 0.51 | 0.269 | 0.648 |

| XGBoost (Optim.) | 0.701 | 0.60 | 0.91 | 0.34 | 0.50 | 0.215 | 0.601 |
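For the two rows that report balanced accuracy, the specificity (recall on the non-dropout class) can be recovered from the standard definition, balanced accuracy = (recall + specificity) / 2. A minimal sketch, using the rounded values from the table, so the results are approximate:

```python
def implied_specificity(balanced_accuracy: float, recall: float) -> float:
    """Solve balanced_accuracy = (recall + specificity) / 2 for specificity."""
    return 2 * balanced_accuracy - recall


# Values read from the table above (already rounded there).
rows = {
    "Random Forest (Optim.)": {"balanced_accuracy": 0.648, "recall": 0.66},
    "XGBoost (Optim.)": {"balanced_accuracy": 0.601, "recall": 0.91},
}

specificity = {
    name: round(implied_specificity(**values), 3)
    for name, values in rows.items()
}
print(specificity)
```

On these figures, the XGBoost model's high dropout recall goes with an implied specificity of roughly 0.29, against roughly 0.64 for the optimized Random Forest. This is the trade-off that the lower precision and accuracy in the XGBoost row reflect.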

| N | Year | Age | Gender | Program | Dropout Prob. (%) | Credits Passed | GPA | Failed Courses | Enrolled JM25 |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 2023 | 24 | M | IIS | 94 | 0 | 0.0 | 6 | 0 |

| 2 | 2023 | 21 | M | LA | 92 | 5 | 8.4 | 0 | 0 |

| 3 | 2023 | 31 | F | LPS | 92 | 16 | 8.5 | 3 | 1 |

| 4 | 2023 | 24 | M | ISW | 90 | 1 | 7.0 | 2 | 0 |

| 5 | 2024 | 23 | M | LPS | 90 | 0 | 0.0 | 0 | 0 |

| 6 | 2023 | 22 | F | LENF | 89 | 0 | 0.0 | 7 | 0 |

| 7 | 2024 | 31 | F | LCE | 89 | 12 | 9.5 | 0 | 1 |

| 8 | 2023 | 24 | M | ISW | 88 | 11 | 8.3 | 13 | 1 |

| 9 | 2024 | 39 | M | LCOPU | 88 | 6 | 9.1 | 0 | 0 |

| 10 | 2023 | 22 | F | LCOPU | 88 | 14 | 8.5 | 6 | 0 |

| 11 | 2023 | 23 | F | LPS | 88 | 2 | 7.0 | 3 | 0 |

| 12 | 2023 | 24 | M | ISW | 88 | 12 | 9.0 | 1 | 0 |

| 13 | 2024 | 34 | M | LCOPU | 87 | 11 | 8.9 | 1 | 1 |

| 14 | 2023 | 20 | F | LAET | 87 | 19 | 8.7 | 1 | 0 |

| 15 | 2023 | 20 | M | LEF | 87 | 24 | 7.8 | 3 | 1 |

| 16 | 2023 | 29 | F | IL | 87 | 22 | 9.1 | 0 | 1 |

| 17 | 2023 | 23 | F | LDG | 87 | 0 | 0.0 | 0 | 0 |

| 18 | 2023 | 20 | F | LCE | 87 | 15 | 8.9 | 0 | 1 |

| 19 | 2023 | 39 | F | LDCFD | 87 | 21 | 7.8 | 4 | 1 |

| 20 | 2023 | 26 | F | ARQ | 86 | 16 | 9.2 | 1 | 1 |
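The last column of the table above allows a quick check of how many of the listed high-risk students were still enrolled. The sketch below copies the predicted probability and the "Enrolled JM25" flag from each row. Reading the flag as 1 = enrolled and 0 = not enrolled in that term is an assumption, since the column is not defined in this section.

```python
# (dropout probability in %, "Enrolled JM25" flag) for rows 1-20 of the table.
rows = [
    (94, 0), (92, 0), (92, 1), (90, 0), (90, 0),
    (89, 0), (89, 1), (88, 1), (88, 0), (88, 0),
    (88, 0), (88, 0), (87, 1), (87, 0), (87, 1),
    (87, 1), (87, 0), (87, 1), (87, 1), (86, 1),
]

enrolled = sum(flag for _, flag in rows)
not_enrolled = len(rows) - enrolled
share_not_enrolled = not_enrolled / len(rows)

print(f"{not_enrolled} of {len(rows)} not enrolled ({share_not_enrolled:.0%})")
```

On this reading, 11 of the 20 listed students (55%) were no longer enrolled, while 9 remained enrolled despite a predicted dropout probability of 86% or higher.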

| Area | Key Recommendation |

|---|---|

| Technology | Make the model part of the university’s everyday tools, integrating it into platforms like SITE, so that identifying and supporting students at risk becomes a natural, ongoing part of how the institution cares for its community. |

| Tutoring | Use the model’s predictions to identify and prioritize students who might benefit from extra guidance so that support can reach them early, personally, and with purpose. |

| Financial Aid | Use the risk profiles to better align scholarship and support programs, ensuring that limited resources reach the students who need them most, when they need them most. |

| Communication | Provide training and guidance to academic coordinators so that they feel confident interpreting the reports and using them to support their students with empathy, clarity, and purpose. |

| Evaluation | Regularly monitor how well the interventions guided by the model are working, not just in numbers but in real student outcomes, so that the system can continue to learn and improve alongside the people it is meant to support. |

| Ethics and Fair Use | Establish clear and compassionate guidelines to ensure that the model is used as a tool for support and encouragement, not for judgment or punishment. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Carballo-Mendívil, B.; Arellano-González, A.; Ríos-Vázquez, N.J.; Lizardi-Duarte, M.d.P. Predicting Student Dropout from Day One: XGBoost-Based Early Warning System Using Pre-Enrollment Data. Appl. Sci. 2025, 15, 9202. https://doi.org/10.3390/app15169202
