4.1. Experimental Settings

The hardware environment consists of a single NVIDIA GeForce RTX 5080 Laptop GPU, an AMD Ryzen CPU, and 64 GB of RAM. The software stack includes Python 3.9 and PyTorch 2.8. During training, GHMCLoss is employed as the loss function; it builds upon FocalLoss to address class imbalance. The core idea of FocalLoss is to adjust loss weights dynamically, reducing the contribution of easy-to-classify (high-confidence) samples so that the model focuses on hard-to-classify (low-confidence) samples. Mathematically, it is defined as:

$$\mathrm{FL}(p_t) = -(1 - p_t)^{\gamma} \log(p_t)$$

where $p_t$ denotes the predicted probability, and increasing $\gamma$ amplifies the weight decay for easy samples. This makes FocalLoss suitable for long-tailed data distributions or scenarios with imbalanced easy/hard samples, such as marine radar observations, where most echoes are sea clutter and only a fraction represent objects. GHMCLoss further optimises FocalLoss by dynamically adjusting sample weights based on gradient density, suppressing both easy samples and outliers (extremely hard samples). Its formulation is:

$$L_{\mathrm{GHM\text{-}C}} = \sum_{i=1}^{N} \frac{L_{\mathrm{CE}}(p_i, p_i^{*})}{GD(g_i)}$$

where $L_{\mathrm{CE}}$ is the cross-entropy loss and $GD(g_i)$ is the gradient density of sample $i$ within its gradient bin; a higher density reduces the sample weight. For tasks with noisy data or annotation errors, such as object detection and tracking in sea-clutter-rich radar echoes, GHMCLoss is therefore better suited than FocalLoss to complex real-world scenarios.
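As a rough illustration of the two weighting schemes, the sketch below implements binary FocalLoss and a simplified GHM-C weighting in plain Python. It assumes the standard published forms (focal weight `(1 - p_t) ** gamma`, gradient norm `g = |p - y|`, and gradient density equal to the bin count divided by the bin width); the function names, the default `gamma`, and the number of bins are illustrative choices, not the settings used in this paper.

```python
import math


def cross_entropy(p, y):
    """Binary cross-entropy for one sample; p is the predicted object probability."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(max(p_t, 1e-12))


def focal_loss(p, y, gamma=2.0):
    """Focal loss: cross-entropy scaled by (1 - p_t) ** gamma (gamma is an assumed default)."""
    p_t = p if y == 1 else 1.0 - p
    return (1.0 - p_t) ** gamma * cross_entropy(p, y)


def ghmc_weights(probs, labels, bins=10):
    """Per-sample weights 1 / GD(g_i), with g_i = |p_i - y_i| binned into equal-width bins."""
    grad_norms = [abs(p - y) for p, y in zip(probs, labels)]
    bin_index = [min(int(g * bins), bins - 1) for g in grad_norms]
    counts = [0] * bins
    for k in bin_index:
        counts[k] += 1
    # Gradient density = samples in the bin / bin width, where bin width = 1 / bins.
    return [1.0 / (counts[k] * bins) for k in bin_index]


def ghmc_loss(probs, labels, bins=10):
    """Sum of cross-entropy terms, each divided by the gradient density of its bin."""
    weights = ghmc_weights(probs, labels, bins)
    return sum(w * cross_entropy(p, y) for w, p, y in zip(weights, probs, labels))
```

In this sketch, eight easy clutter samples that share one gradient bin each receive a smaller weight than a single hard sample that sits alone in its bin, which is the density-based suppression described above.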

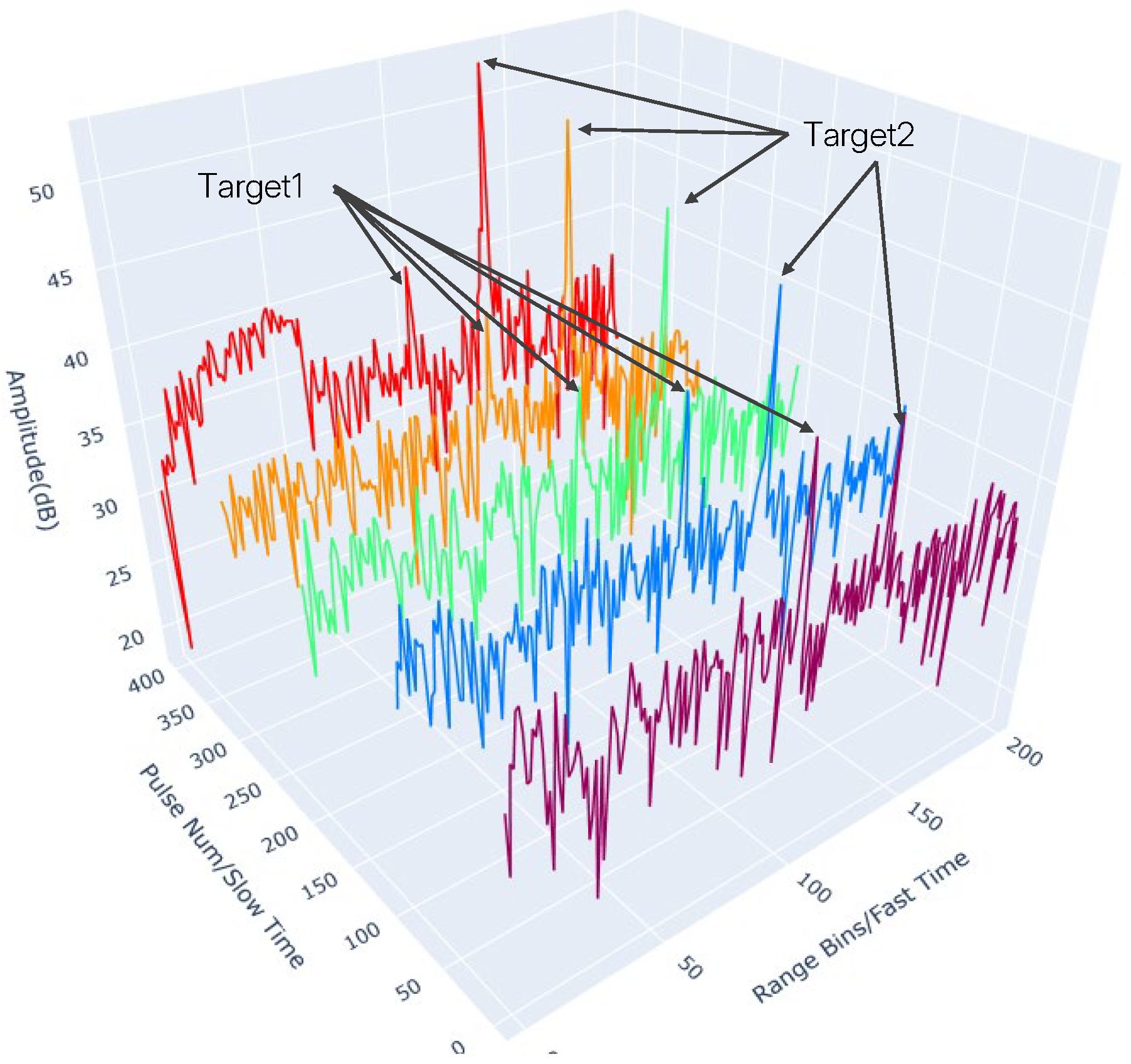

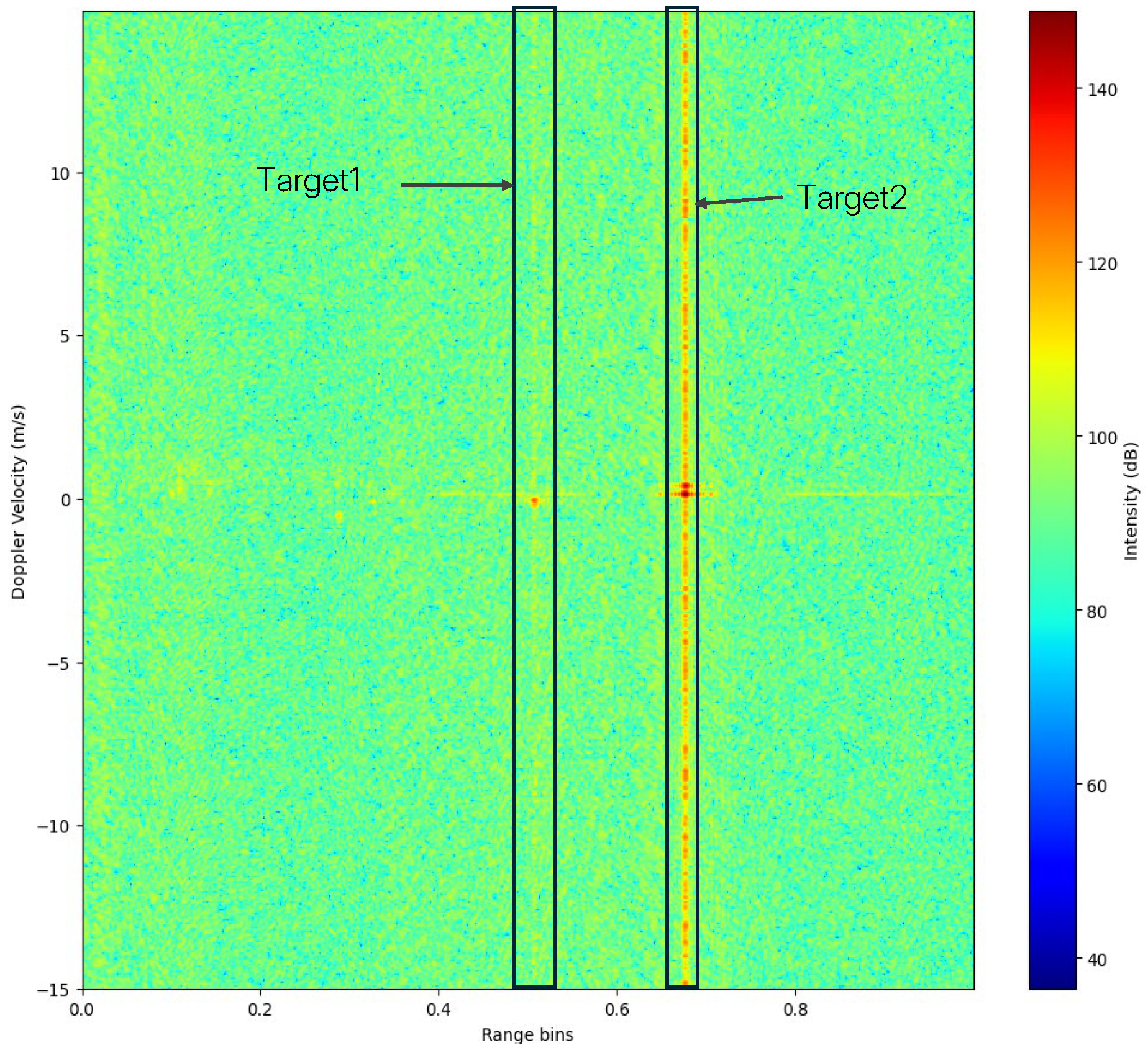

The proposed DFF detector is evaluated on two public datasets: the SDRDSP dataset [39] and the IPIX radar dataset. The radar parameters for the two datasets are detailed in Table 1. The former was collected in Yantai, Shandong Province, China. Given the large number of echo files and high intra-file redundancy, training and test sets are constructed based on “single-target” and “dual-target” configurations, as described in Table 2. The IPIX dataset, collected off the east coast of Canada, comprises 14 sub-datasets, each containing HH, HV, VV, and VH polarisation echoes (sub-dataset details in Table 3). Preprocessing follows the official automated pipeline for each sub-dataset. The first 60% of echoes in each sub-dataset form the training set, with the remaining 40% reserved for testing. Samples are constructed using sliding windows of 32 pulses with a step size of 10 pulses. The numbers of training and test samples for both datasets are summarised in Table 4.
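The split and windowing described above can be sketched as follows. This is a minimal illustration that assumes the echoes of a sub-dataset are held as an ordered sequence of pulses and that windows are extracted separately within each split; the function names are hypothetical and the official preprocessing pipeline is not reproduced here.

```python
def split_train_test(pulses, train_percent=60):
    """Keep the first train_percent % of pulses for training and the rest for testing."""
    cut = len(pulses) * train_percent // 100
    return pulses[:cut], pulses[cut:]


def sliding_windows(pulses, length=32, step=10):
    """Return every full window of `length` pulses, advancing `step` pulses each time."""
    last_start = len(pulses) - length
    return [pulses[s:s + length] for s in range(0, last_start + 1, step)]


# Example with 100 dummy pulses identified by their index.
pulses = list(range(100))
train, test = split_train_test(pulses)
train_windows = sliding_windows(train)   # windows start at pulses 0, 10, 20
test_windows = sliding_windows(test)     # only one full window fits in 40 pulses
```

Because the step (10) is smaller than the window length (32), consecutive samples overlap by 22 pulses, which increases the number of samples drawn from each echo file.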

The AdamW optimiser is used with a learning rate of , which is used in training phases. Batch sizes are set to 256 and 2 for the two datasets, with 100 training epochs.

To standardise and quantify the model’s capabilities, we use several common deep learning metrics, including accuracy, precision, recall, and the F-score. Because all of these metrics are derived from the confusion matrix, it is introduced first. For clarity, we focus on reporting accuracy, the F1-score, and the false alarm rate (FAR), which together reflect the model’s classification capability for different radar echo types and its robustness to sample imbalance.

The confusion matrix is a common visualisation tool in machine learning and deep learning. In image accuracy evaluation, it is primarily used to compare classification results with the ground truth, displaying the outcome in matrix form. Each column represents a predicted class, and the column total is the number of data points predicted to belong to that class. Each row represents a true class, and the row total is the number of data instances in that class. In this paper, each row gives the number of range cells labelled as pure clutter or object echoes, while each column gives the number of range cells predicted by the model as pure clutter or object echoes. An example is shown in Figure 8.

True Positive (TP): Range cells that belong to object echoes and are correctly predicted as object echoes by the model.

False Negative (FN): Range cells that belong to object echoes but are incorrectly predicted as pure clutter by the model.

False Positive (FP): Range cells that belong to pure clutter but are incorrectly predicted as object echoes by the model.

True Negative (TN): Range cells that belong to pure clutter and are correctly predicted as pure clutter by the model.

Based on the confusion matrix, metrics such as precision, accuracy, recall, and the F-score can be used to quantify the model’s capabilities:

Accuracy represents the overall correctness of the model, defined as the ratio of correctly identified range cells to the total number of range cells:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Precision indicates the proportion of correctly identified object echo range cells among all range cells predicted as object echoes:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Generally, a higher value signifies better model performance.

Recall represents the proportion of object echo range cells correctly identified by the model among all actual object echo range cells:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

A higher value typically indicates better model performance.

Precision and recall often exhibit a trade-off. Therefore, the F-score, a weighted harmonic mean of precision and recall, is used as a combined evaluation metric. For example, the F1-score, which weights the two equally, is defined as:

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

A higher F1-score signifies stronger capability in handling imbalanced datasets, making it a critical indicator for assessing the model’s effectiveness in real-world radar echo classification tasks.

The false alarm rate (FAR) is the proportion of pure clutter range cells misclassified as object echoes:

$$\mathrm{FAR} = \frac{FP}{FP + TN}$$

A lower FAR means that fewer clutter cells are mistaken for objects.
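The metric definitions above can be checked with a few lines of Python. The sketch assumes binary labels per range cell (1 for object echo, 0 for pure clutter); the helper names are illustrative, and ratios with a zero denominator are returned as 0.0 by convention.

```python
def confusion_counts(labels, preds):
    """Count TP, FN, FP, TN over range cells (1 = object echo, 0 = pure clutter)."""
    tp = fn = fp = tn = 0
    for y, y_hat in zip(labels, preds):
        if y == 1 and y_hat == 1:
            tp += 1
        elif y == 1:
            fn += 1
        elif y_hat == 1:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def ratio(numerator, denominator):
    """Safe division: an undefined ratio is reported as 0.0."""
    return numerator / denominator if denominator else 0.0


def detection_metrics(tp, fn, fp, tn):
    """Accuracy, precision, recall, F1-score, and FAR from the confusion matrix."""
    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
        "far": ratio(fp, fp + tn),
    }


labels = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
preds = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
print(detection_metrics(*confusion_counts(labels, preds)))
```

In this toy example only 3 of 10 cells contain objects, so accuracy (0.8) is noticeably higher than the F1-score (about 0.67), which illustrates why the F1-score and FAR are reported alongside accuracy on imbalanced radar data.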

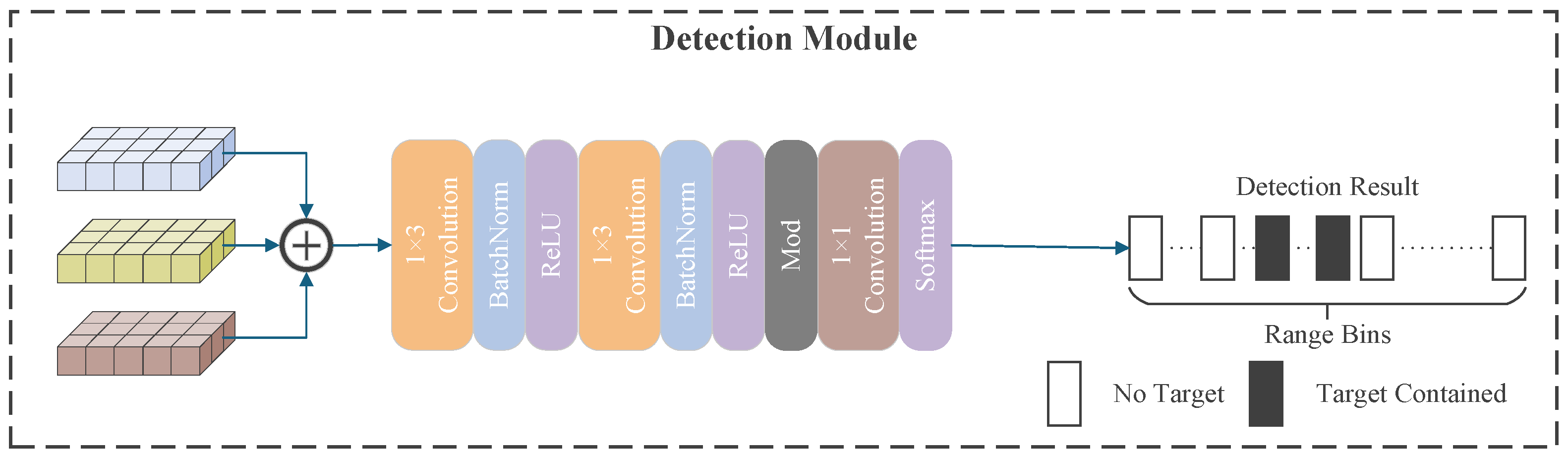

4.2. Comparison with State-of-the-Art Methods

The experiments compare the proposed DFF detector against classical CFAR detectors (CA-CFAR [1], GO-CFAR [2], SO-CFAR [46]) and four machine/deep learning-based methods (support vector machine (SVM) [47], Bi-LSTM [48], MDCCNN [22], MFF [19]) using the IPIX dataset (Table 3) and the SDRDSP dataset (Table 2). Detection performance in terms of average FAR, accuracy, and F1-score is evaluated at preset FAR levels of

and

. Results on both datasets are summarised in Table 5.
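For readers unfamiliar with the classical baselines, the following sketch shows a generic one-dimensional CFAR detector with the three noise-estimation rules named above: cell averaging (CA), greatest-of (GO), and smallest-of (SO). It is a textbook-style illustration, not the configuration used in these experiments; the window sizes and the `scale` factor are assumed values, and in practice the scale would be derived from the preset FAR and the clutter model.

```python
def cfar_detect(power, num_ref=8, num_guard=2, scale=5.0, mode="CA"):
    """Return 0/1 decisions per cell; cells too close to the edges are left at 0."""
    half = num_ref // 2
    detections = [0] * len(power)
    for i in range(half + num_guard, len(power) - half - num_guard):
        # Reference cells on each side, separated from the cell under test by guard cells.
        lead = power[i - num_guard - half:i - num_guard]
        lag = power[i + num_guard + 1:i + num_guard + 1 + half]
        lead_mean = sum(lead) / half
        lag_mean = sum(lag) / half
        if mode == "CA":      # average of both reference windows
            noise = (lead_mean + lag_mean) / 2
        elif mode == "GO":    # greater of the two window means
            noise = max(lead_mean, lag_mean)
        elif mode == "SO":    # smaller of the two window means
            noise = min(lead_mean, lag_mean)
        else:
            raise ValueError("mode must be 'CA', 'GO', or 'SO'")
        detections[i] = int(power[i] > scale * noise)
    return detections


# A single strong cell in flat clutter is detected by all three rules.
flat = [1.0] * 30
flat[15] = 20.0
print([sum(cfar_detect(flat, mode=m)) for m in ("CA", "GO", "SO")])
```

All three rules estimate the local clutter level from neighbouring range cells of a single profile, so they have no access to the cross-pulse temporal context that the learning-based detectors exploit.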

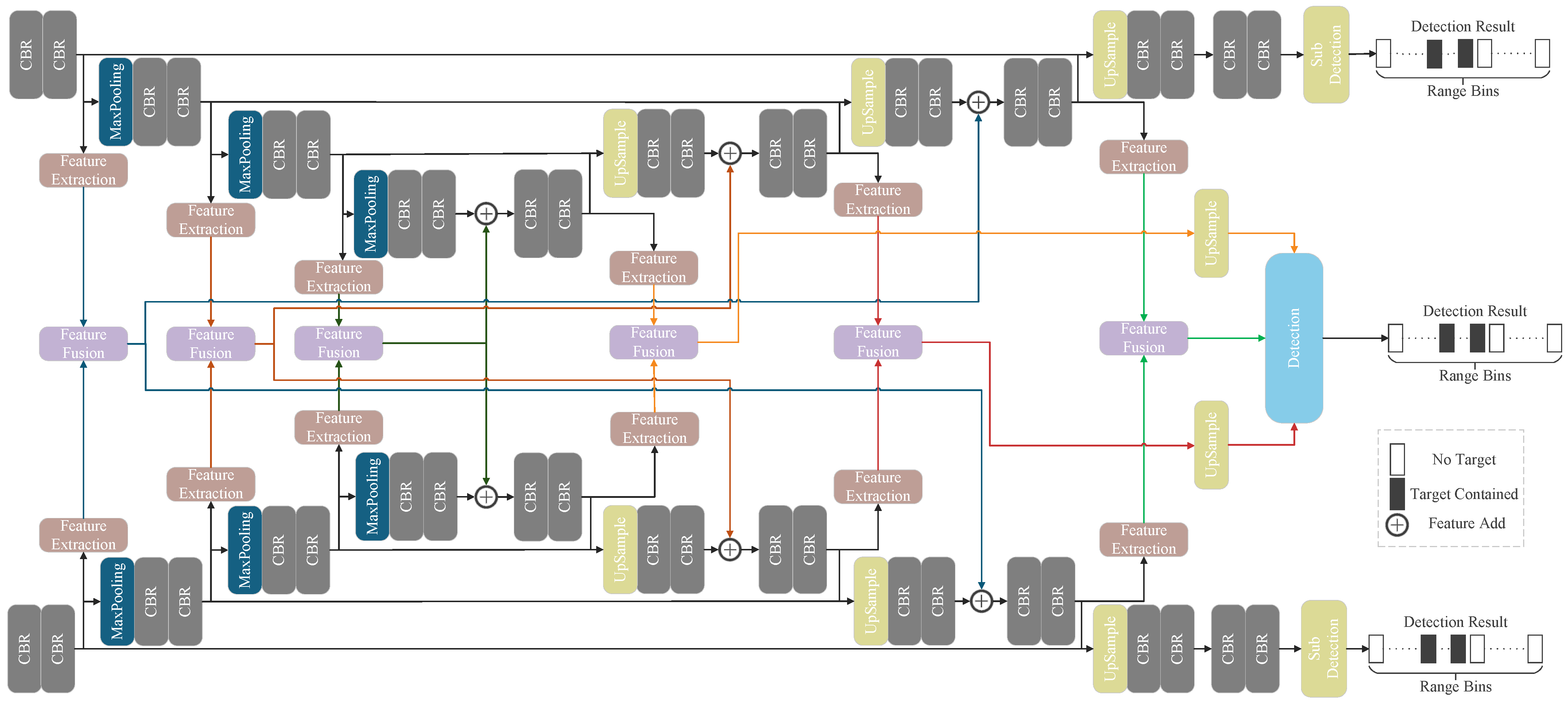

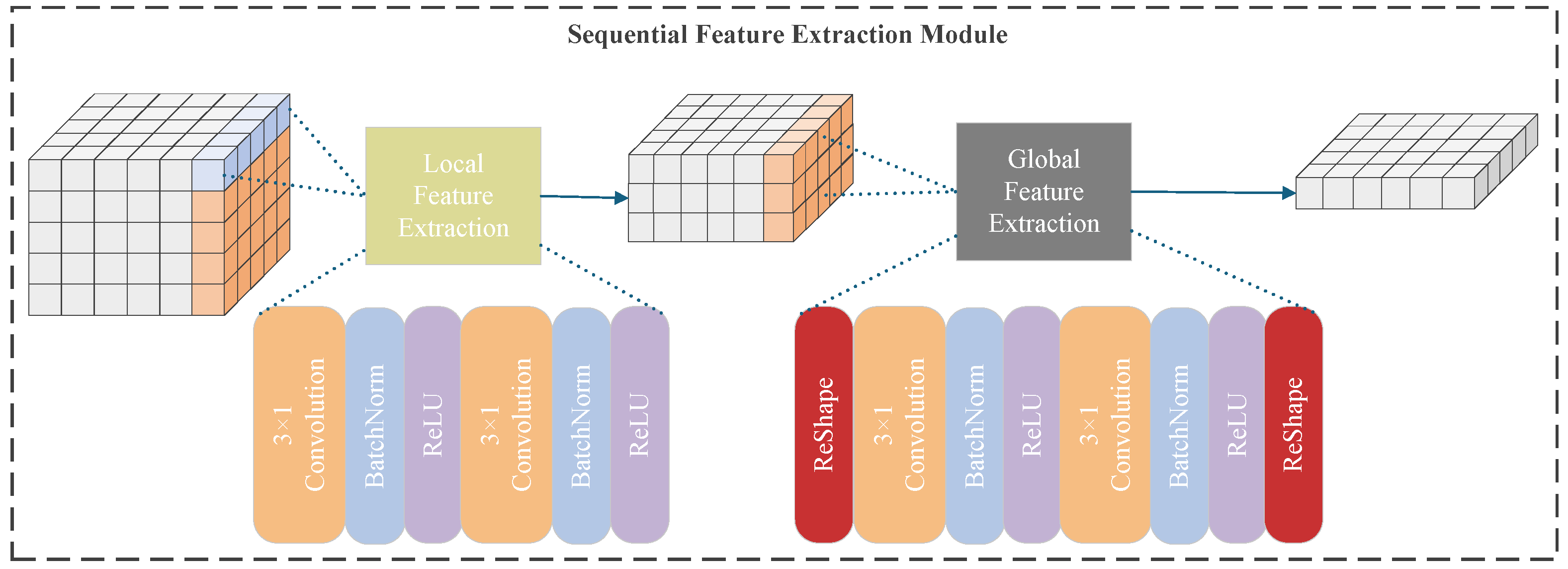

As shown, DFF achieves superior detection performance across both datasets. On IPIX and SDRDSP datasets, DFF’s actual FARs are and , respectively, lower than the next-best MFF, demonstrating the robustness and validating our video-inspired fusion against non-stationary distortions. The superior performance originates from the model’s synergistic design: (1) spatial feature learning within individual pulses via complex-valued U-Nets to suppress clutter spikes and (2) cross-pulse temporal consistency modelling through SFEM to distinguish persistent objects from transient clutter. Additionally, DFF’s accuracy and F1-score exceed MFF by at least 93%. The observed higher actual FARs compared to preset values are attributed to strong clutter nonstationarity, where training/testing clutter distributions may differ.
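The gap between preset and actual FAR can be illustrated with a small calibration sketch. It assumes that the decision threshold is chosen from detector scores on clutter-only training cells so that the preset FAR is met there, and then applied unchanged to test data; the helper names and the synthetic scores are hypothetical and are not taken from the experiments.

```python
def threshold_for_preset_far(clutter_scores, preset_far):
    """Pick a threshold so that at most preset_far of the clutter scores exceed it."""
    ranked = sorted(clutter_scores, reverse=True)
    allowed = round(preset_far * len(ranked))  # clutter cells allowed above the threshold
    return ranked[min(allowed, len(ranked) - 1)]


def measured_far(clutter_scores, threshold):
    """Fraction of clutter cells whose score exceeds the threshold."""
    return sum(s > threshold for s in clutter_scores) / len(clutter_scores)


# Synthetic clutter scores: the test clutter is shifted slightly upwards,
# standing in for a non-stationary clutter distribution.
train_clutter = [i / 1000 for i in range(1000)]
test_clutter = [s + 0.005 for s in train_clutter]

threshold = threshold_for_preset_far(train_clutter, preset_far=0.01)
print(measured_far(train_clutter, threshold))  # matches the preset level on training data
print(measured_far(test_clutter, threshold))   # exceeds the preset level after the shift
```

Even a small shift in the clutter score distribution pushes additional clutter cells over the fixed threshold, which is consistent with the explanation given above for the higher actual FARs.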

At a preset FAR of

, the detection performance of seven detectors (GO-CFAR [2], SO-CFAR [46], SVM [47], Bi-LSTM [48], MDCCNN [22], MFF [19], and DFF) on the SDRDSP dataset is illustrated in Figure 9. The results show that the proposed DFF detector outperforms the other detectors across the single-target and dual-target subsets. For single-target detection, DFF maintains an accuracy above 97% and an F1-score above 70%; for dual-target detection, these metrics remain above 97% and 66%, respectively. In contrast, the other detectors show degraded accuracy and F1-score on certain SDRDSP subsets, particularly the classical CFAR-based statistical methods (GO-CFAR, SO-CFAR) and traditional machine learning approaches such as SVM. These experimental results demonstrate that the DFF detector outperforms the other methods in detecting sea-surface objects in radar echoes.

To assess the efficiency of our model, we report the number of parameters and the training and inference times of the different models at a preset FAR of

on the SDRDSP dataset. As shown in Table 6, our model has a relatively large number of parameters, while the additional time required for training and inference remains modest: compared with MFF, it has 12.3 M more parameters, takes 14.9 h longer to train, and adds 7.9 ms of inference latency.

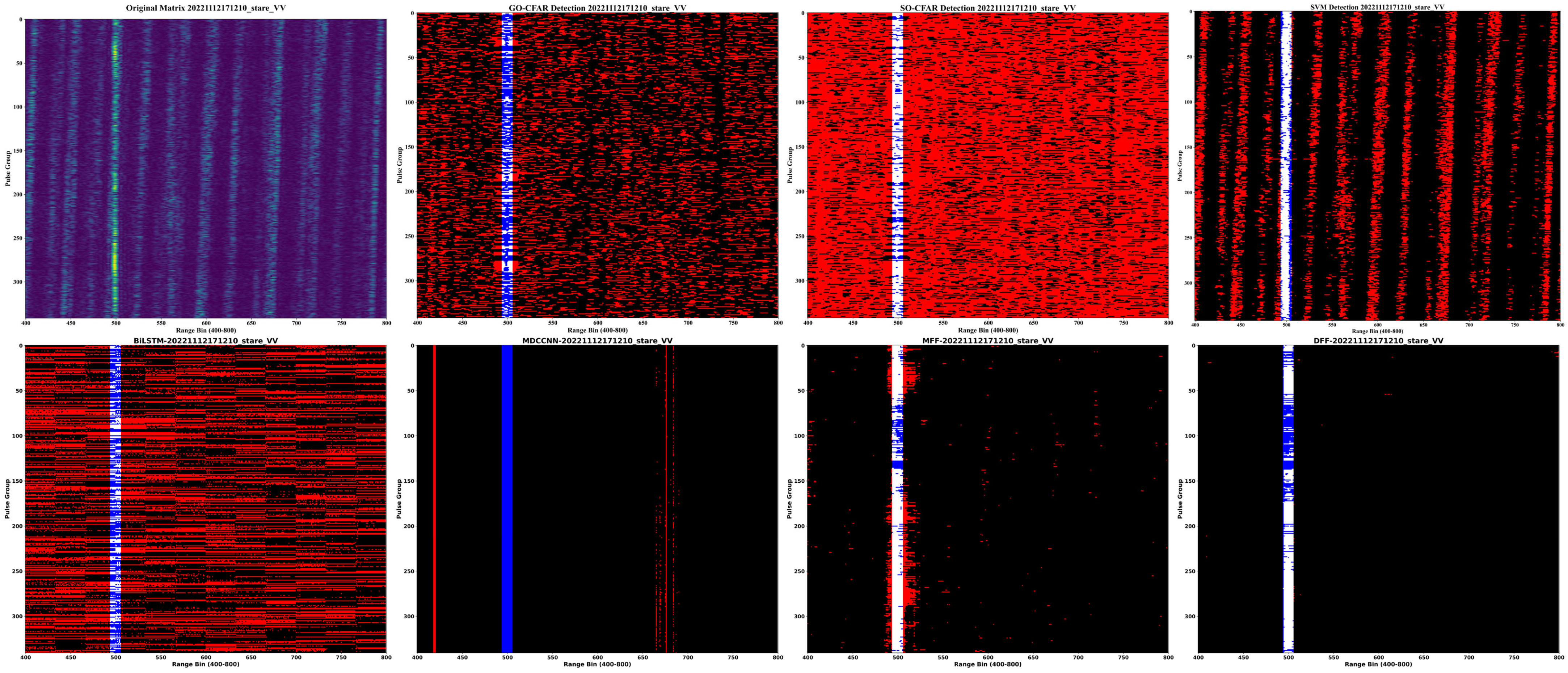

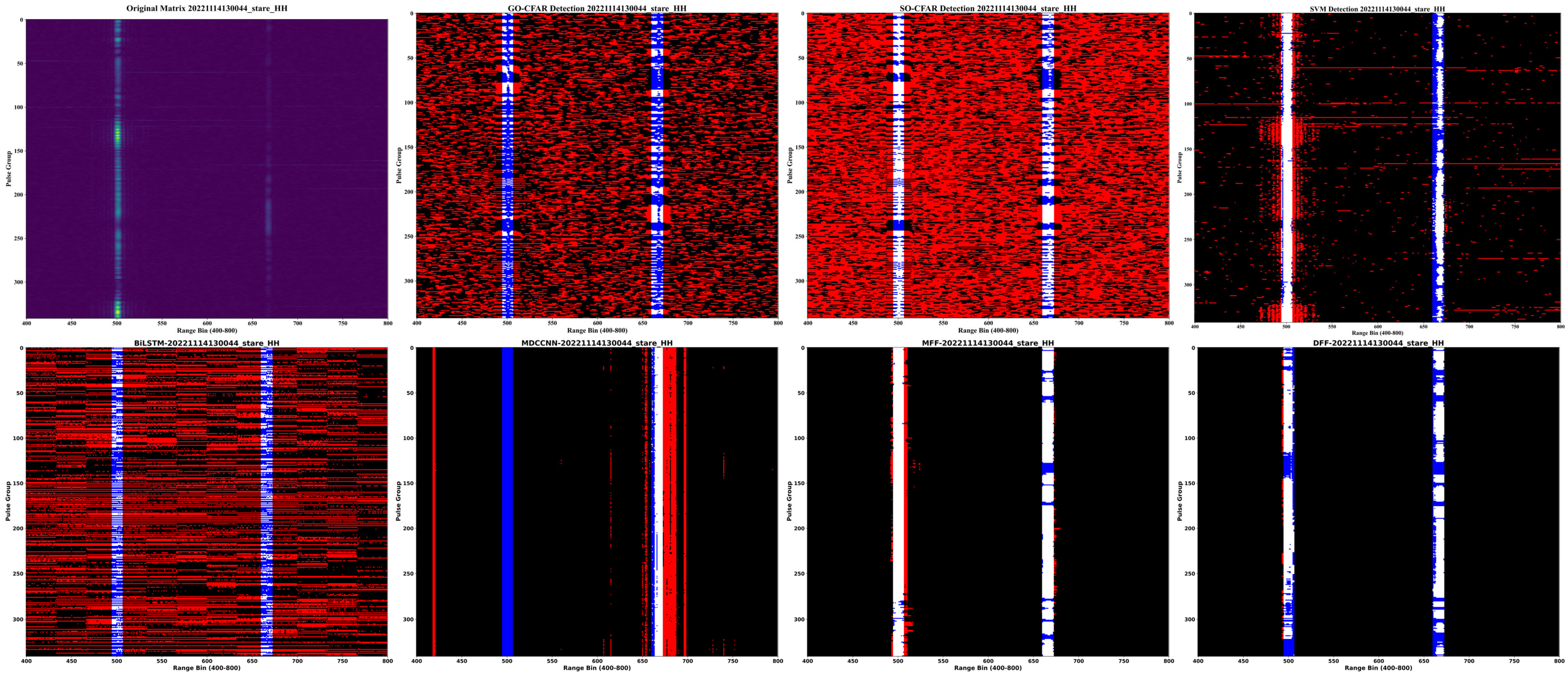

To visually characterise the detection performance of the proposed DFF detector, we further visualise the results of the seven detectors on the SDRDSP dataset. As depicted in Figure 10 and Figure 11, each visualisation classifies detection outcomes into four categories:

Black: Regions correctly labelled as sea clutter by all methods (true negatives).

White: Regions correctly identified as objects (true positives).

Red: False positives (clutter regions misclassified as objects).

Blue: False negatives (objects missed by the detectors).
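The colour coding above can be reproduced with a short mapping from label/prediction pairs to RGB values. The sketch assumes binary maps stored as nested lists (1 for object, 0 for sea clutter) and uses illustrative helper names; it is not the plotting code used to produce the figures.

```python
# RGB colours for the four outcome categories listed above.
COLOURS = {
    "TN": (0, 0, 0),        # black: clutter correctly labelled as clutter
    "TP": (255, 255, 255),  # white: object correctly detected
    "FP": (255, 0, 0),      # red: clutter misclassified as object
    "FN": (0, 0, 255),      # blue: object missed by the detector
}


def outcome(label, pred):
    """Classify one cell as TP, FN, FP, or TN."""
    if label == 1:
        return "TP" if pred == 1 else "FN"
    return "FP" if pred == 1 else "TN"


def colour_map(labels, preds):
    """Convert 2-D label and prediction maps into a 2-D map of RGB tuples."""
    return [
        [COLOURS[outcome(y, p)] for y, p in zip(label_row, pred_row)]
        for label_row, pred_row in zip(labels, preds)
    ]


labels = [[1, 1], [0, 0]]
preds = [[1, 0], [1, 0]]
image = colour_map(labels, preds)  # [[white, blue], [red, black]]
```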

The visualisations show that DFF achieves the most accurate detection, with minimal false-positive and false-negative regions. In contrast, traditional CFAR-based methods exhibit significant misclassifications owing to the lack of temporal context, particularly in high-clutter areas, as highlighted by the extensive red and blue pixels. DFF’s superior ability to suppress false alarms and retain true objects is evident from its sparse red/blue distributions, reinforcing the quantitative findings. These visual results provide intuitive evidence of DFF’s robustness in distinguishing objects from complex sea clutter, complementing the statistical analysis in Table 5.

4.3. Ablation Study

To validate the necessity of range profiles and range–Doppler maps for radar echo object detection, we designed two variant detectors of DFF: Variant model 1 employs only range–Doppler features for detection, while Variant model 2 relies solely on fast/slow time features. At a preset FAR of

, the experimental results of DFF and the two variants on the test set of the SDRDSP dataset are presented in Table 7.

The table shows that Variant 1’s FAR indicates that removing range–Doppler features reduces clutter suppression capability. Besides, DFF achieves an average FAR of , which is lower than the single-source feature variants. Moreover, DFF’s average accuracy and F1-score reach 98.76% and 68.75%, respectively, outperforming the single-branch detectors in detection capability. These findings underscore the critical role of multi-modality feature fusion in enhancing object-clutter discrimination and overall detection robustness.

To demonstrate the effectiveness of our proposed multi-feature fusion method with adaptive convolutional weight learning, we compared two common feature fusion techniques in deep learning—channel-wise concatenation and element-wise summation—against our adaptive fusion module under a preset FAR of

. The experimental results of the three methods on the test set of the SDRDSP dataset are shown in Table 8. Unlike our approach, both baseline methods ignore element-wise importance in the feature maps, implicitly assigning a uniform weight (i.e., weight = 1) to all channels and cross-source features.
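The difference between the three fusion strategies can be sketched on toy feature maps. The code below is only a conceptual stand-in: it treats each feature map as a flat list of values and replaces the learned convolutional weights with given per-element scores normalised by a softmax. The function names and the scoring scheme are assumptions and do not reproduce the adaptive fusion module of DFF.

```python
import math


def fuse_concat(a, b):
    """Channel-wise concatenation: both maps are kept as separate channels."""
    return [list(a), list(b)]


def fuse_sum(a, b):
    """Element-wise summation: every element of both maps has weight 1."""
    return [x + y for x, y in zip(a, b)]


def fuse_adaptive(a, b, score_a, score_b):
    """Element-wise weighted fusion with softmax-normalised per-element weights.

    score_a and score_b stand in for the outputs of a learned weighting layer.
    """
    fused = []
    for x, y, sa, sb in zip(a, b, score_a, score_b):
        peak = max(sa, sb)  # subtract the maximum for numerical stability
        ea, eb = math.exp(sa - peak), math.exp(sb - peak)
        w_a = ea / (ea + eb)
        fused.append(w_a * x + (1.0 - w_a) * y)
    return fused


range_doppler = [0.2, 0.9, 0.1]   # toy features from one source
range_profile = [0.4, 0.1, 0.8]   # toy features from the other source
scores_rd = [0.0, 3.0, -3.0]      # hypothetical importance scores
scores_rp = [0.0, -3.0, 3.0]
fused = fuse_adaptive(range_doppler, range_profile, scores_rd, scores_rp)
```

With equal scores the adaptive rule reduces to a plain average, whereas strongly unequal scores let one source dominate at that element, which is the element-wise flexibility that concatenation and summation lack.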

As indicated in the table, while our method exhibits a slightly higher average FAR than the baselines, it significantly outperforms them in average accuracy and F1-score. This supports the effectiveness of our feature fusion method: adaptive weight learning (AWL) enhances feature discriminability, balancing detection accuracy and clutter robustness.