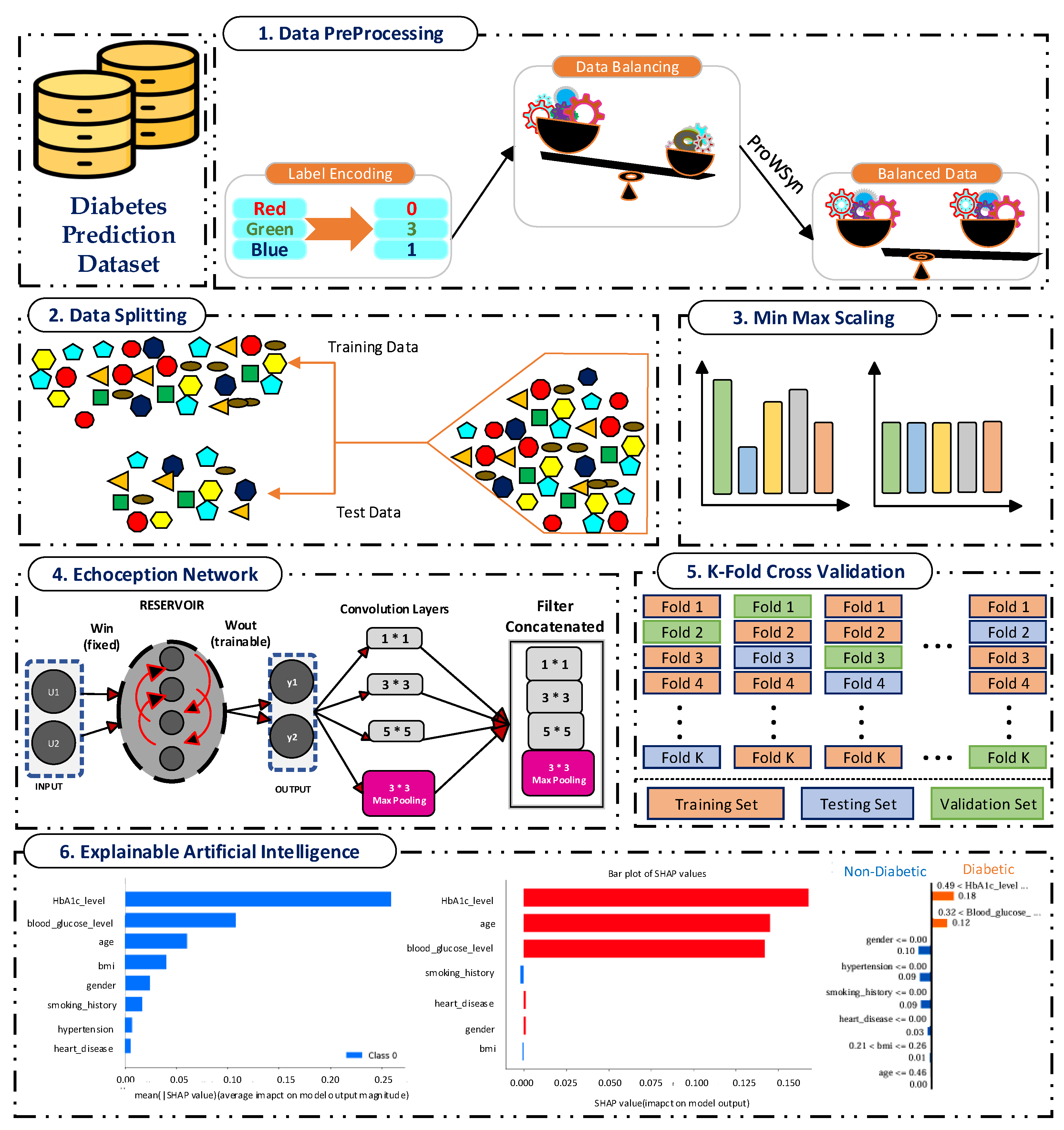

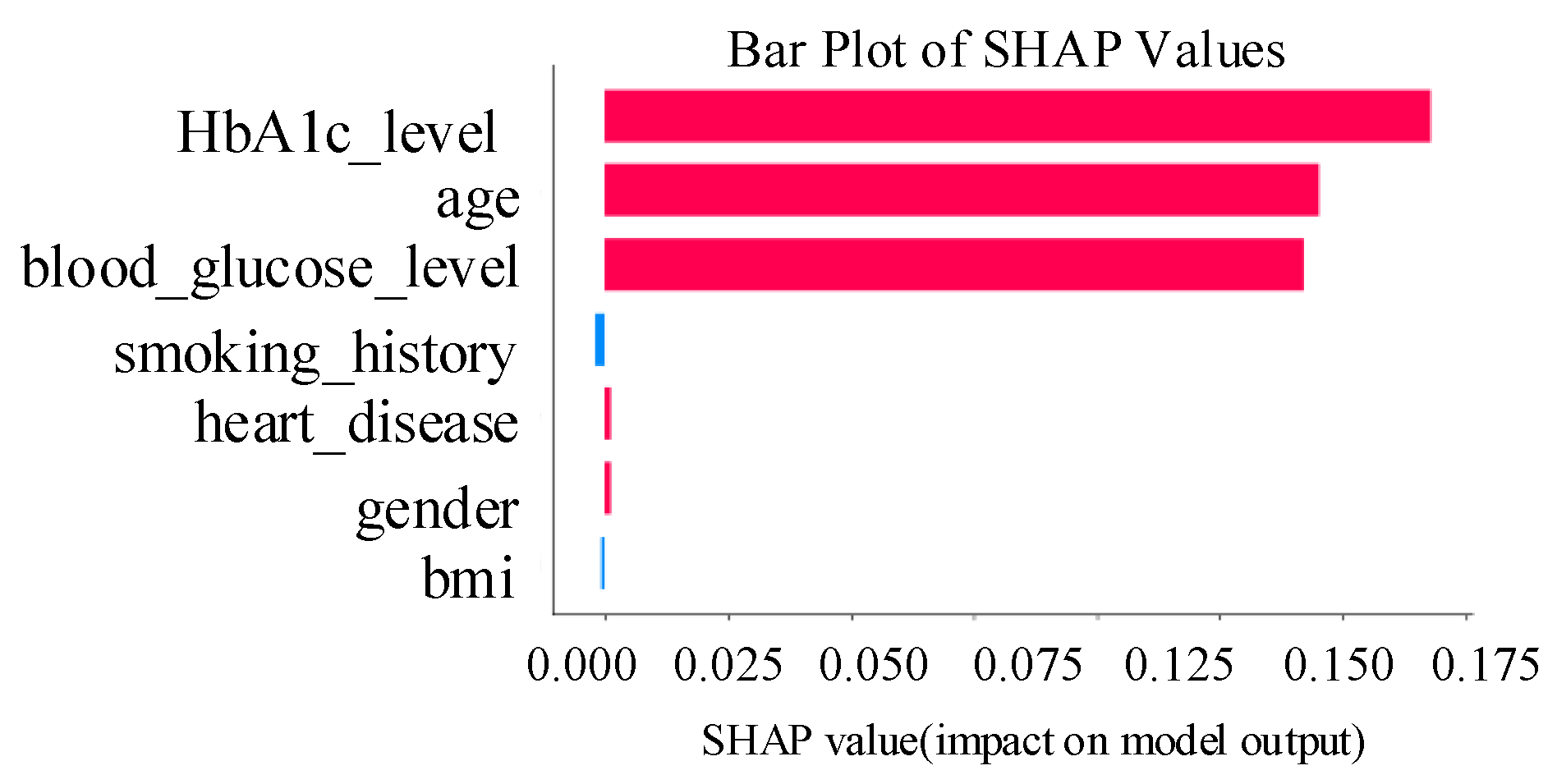

4.1. Discussion of Newly Proposed Echoception Network Results

The proposed EchoceptionNet was compared with existing and baseline models, including the temporal convolutional network (TCN), vanilla recurrent neural network (VRNN), residual network (ResNet), LeNet, DNN, LSTM, gated recurrent unit (GRU) [

27], inception network (InceptionNet), and echo state network (ESN). EchoceptionNet outperformed all these models in diabetes prediction based on all metrics, as shown in

Table 9. EchoceptionNet achieved superior performance, demonstrating its ability to handle the intricate patterns present in the dataset, with the highest accuracy of 0.95. The key strengths of EchoceptionNet lie in its ability to combine temporal feature extraction and spatial pattern detection through multi-scale convolutional operations, as shown in

Table 7, Lines 2 and 3. This dual capability enables the EchoceptionNet to efficiently process complex relationships between features such as

blood sugar levels, BMI, HbA1c levels, and other patient attributes. Consequently, EchoceptionNet excels at learning the intricate temporal dynamics and spatial interactions necessary for precise classification. The comparison of EchoceptionNet with existing DL models in all evaluation metrics is visualized in

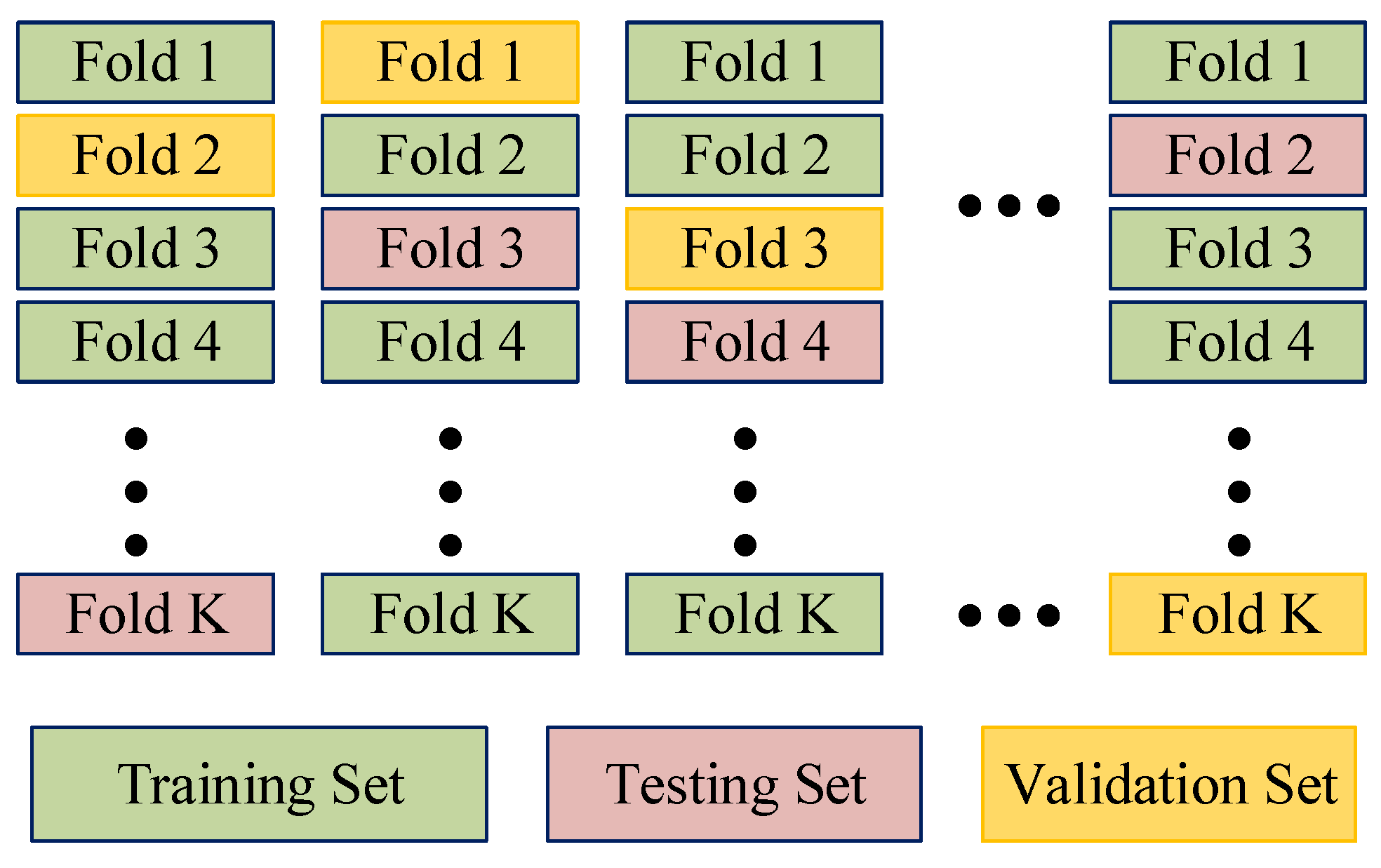

Figure 2. The insights behind the accuracy achieved by the baseline and proposed EchoceptionNet models are described in

Table 10.
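To make the idea of multi-scale convolutional operations concrete, the following minimal Python sketch applies parallel kernels of widths 1, 3, and 5 to a single feature vector and stacks the ReLU outputs as channels, in the spirit of an Inception block. It assumes the tabular features are treated as a 1-D signal in a fixed order; the function names, kernel weights, and feature values are hypothetical (a trained network would learn its kernels), and EchoceptionNet's actual layer configuration is the one given in Table 7.

```python
from typing import List

def conv1d_same(x: List[float], kernel: List[float]) -> List[float]:
    """1-D cross-correlation (as in most DL libraries) with zero 'same' padding."""
    k = len(kernel)
    pad = k // 2  # odd kernel widths keep the output length equal to the input length
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(kernel[j] * padded[i + j] for j in range(k)) for i in range(len(x))]

def multi_scale_block(x: List[float], kernels: List[List[float]]) -> List[List[float]]:
    """Apply kernels of different widths in parallel and stack the ReLU outputs as channels."""
    return [[max(0.0, v) for v in conv1d_same(x, k)] for k in kernels]

# Hypothetical standardized patient features (e.g., glucose, BMI, HbA1c, age, ...).
features = [0.5, -1.0, 2.0, 0.0, 1.5]
kernels = [
    [1.0],                      # width 1: each feature on its own
    [0.25, 0.5, 0.25],          # width 3: neighbouring features
    [0.2, 0.2, 0.2, 0.2, 0.2],  # width 5: broader context
]
channels = multi_scale_block(features, kernels)
for width, ch in zip((1, 3, 5), channels):
    print(width, [round(v, 3) for v in ch])
```

Each channel sees the same input at a different receptive-field width, which is what lets a multi-scale block respond to both single-feature effects and interactions among several features at once.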

Our proposed EchoceptionNet performs exceptionally well, especially in addressing the class imbalance problem in diabetes detection. Its improved sensitivity is reflected in the false-negative (FN) count, which decreased from 557 to 360 after applying ProWSyn. This implies that ProWSyn helps EchoceptionNet learn more accurate representations of the minority class, so that it detects diabetic cases with fewer false negatives. The key to EchoceptionNet's success is its capacity to combine temporal and spatial feature extraction, enabling it to identify intricate patterns in medical data. Together with the synthetic minority samples that address class imbalance, this gives EchoceptionNet a performance that beats the baseline models in terms of both sensitivity and specificity.
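The sketch below illustrates the general idea behind proximity-weighted oversampling: minority samples are grouped into proximity levels, levels closer to the majority class receive exponentially more synthetic samples (weight exp(-θ·l)), and new points are interpolated between minority samples of the same level. It is a simplified, hypothetical implementation, not the reference ProWSyn algorithm: the original builds its levels iteratively from the nearest minority neighbours of majority samples, whereas here a plain ranking by distance to the nearest majority sample stands in for that step. The function name, parameters, and toy data are illustrative assumptions.

```python
import math
import random
from typing import List, Sequence

Point = List[float]

def dist(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def proximity_weighted_oversample(minority: List[Point], majority: List[Point],
                                  n_new: int, levels: int = 3, theta: float = 1.0,
                                  seed: int = 0) -> List[Point]:
    """Simplified ProWSyn-style oversampling (illustrative only)."""
    rng = random.Random(seed)
    # Distance of each minority sample to its nearest majority sample.
    d = [min(dist(m, q) for q in majority) for m in minority]
    order = sorted(range(len(minority)), key=lambda i: d[i])
    # Contiguous proximity levels; level 0 lies closest to the class boundary.
    size = math.ceil(len(order) / levels)
    groups = [order[k:k + size] for k in range(0, len(order), size)]
    weights = [math.exp(-theta * l) for l in range(len(groups))]
    total = sum(weights)

    synthetic: List[Point] = []
    for group, w in zip(groups, weights):
        for _ in range(round(n_new * w / total)):
            a = minority[rng.choice(group)]
            b = minority[rng.choice(group)]
            gap = rng.random()  # position along the segment from a to b
            synthetic.append([x + gap * (y - x) for x, y in zip(a, b)])
    return synthetic

# Toy 2-D data: three minority clusters at increasing distance from the majority class.
minority = [[1.0, 1.0], [1.2, 0.9], [3.0, 3.0], [3.1, 2.8], [5.0, 5.0], [5.2, 4.9]]
majority = [[0.0, 0.0], [0.2, 0.1], [0.5, 0.3]]
synthetic_points = proximity_weighted_oversample(minority, majority, n_new=12)
print(len(synthetic_points))
```

With θ = 1, the level nearest the majority class receives most of the new samples, which is the mechanism by which this family of methods concentrates synthetic data near the decision boundary.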

Baseline models such as InceptionNet and DNN show improvements but still have notable drawbacks. Although InceptionNet's FNs decreased from 1708 to 816, it still lacks a dedicated temporal extraction mechanism (Table 11). This makes it less capable of capturing the sequential nature of medical data, which is essential for predicting diabetes. DNN, with its fully connected architecture, recorded 4127 FNs after ProWSyn (compared with 752 before balancing) and performed worse than EchoceptionNet because it cannot capture intricate feature interactions. This inability to model deep feature interactions makes DNN less appropriate for diabetes prediction, where temporal and spatial dependencies are crucial.

Although ProWSyn improved the performance of several models, including GRU, TCN, VRNN, LSTM, and ResNet, these architectures still exhibited notable limitations. GRU's true positives (TPs) increased from 713 to 15,226; however, it struggled with spatial feature learning because it is fundamentally designed for temporal dependencies only. GRU simplifies the LSTM by combining the forget and input gates into a single update gate, which makes it computationally efficient but limits its capacity to model complex spatial patterns in tabular medical data. As a result, it yielded a relatively low F1-score of 0.77 and a recall of 0.72 despite the balanced data. Similarly, LSTM improved its TPs from 467 to 15,574 after ProWSyn but exhibited a high false-positive (FP) count of 15,226 and a low true-negative (TN) count of 1739, indicating poor specificity and frequent misclassification of non-diabetic patients. LSTM's separate input, forget, and output gates allow it to retain long-term dependencies effectively; in this case, however, it failed to generalize well, possibly due to overfitting or difficulty in modeling the diverse spatial–temporal feature interactions.
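The gate difference between LSTM and GRU described above can be seen in a single recurrent step. The minimal scalar sketch below (one hidden unit, hypothetical parameter names and values) shows LSTM's separate input, forget, and output gates with a cell state, next to GRU's update and reset gates acting directly on the hidden state. It uses the convention h = (1 - z)·h_prev + z·n, under which (1 - z) plays the role of LSTM's forget gate and z that of its input gate; some libraries, such as PyTorch's `nn.GRU`, use the mirrored form.

```python
import math

LSTM_KEYS = ["wi", "ui", "bi", "wf", "uf", "bf", "wo", "uo", "bo", "wg", "ug", "bg"]
GRU_KEYS = ["wz", "uz", "bz", "wr", "ur", "br", "wn", "un", "bn"]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x: float, h: float, c: float, p: dict):
    """One scalar LSTM step: separate input (i), forget (f) and output (o) gates plus a cell state c."""
    i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])
    f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])
    o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])
    g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate cell content
    c_new = f * c + i * g
    return o * math.tanh(c_new), c_new

def gru_step(x: float, h: float, p: dict) -> float:
    """One scalar GRU step: update gate z and reset gate r, no separate cell state."""
    z = sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])
    r = sigmoid(p["wr"] * x + p["ur"] * h + p["br"])
    n = math.tanh(p["wn"] * x + p["un"] * (r * h) + p["bn"])  # candidate state
    return (1.0 - z) * h + z * n

# Hypothetical parameters: 12 scalars for the LSTM cell versus 9 for the GRU cell.
lstm_params = {k: 0.5 for k in LSTM_KEYS}
gru_params = {k: 0.5 for k in GRU_KEYS}
h_l, c_l, h_g = 0.0, 0.0, 0.0
for x in [0.2, -0.4, 1.0]:  # toy input sequence
    h_l, c_l = lstm_step(x, h_l, c_l, lstm_params)
    h_g = gru_step(x, h_g, gru_params)
print(h_l, h_g)
```

Both cells only mix information along the time axis; neither step contains an operation that relates neighbouring features within one input vector, which is the spatial capability the text finds lacking.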

Even though TCN's TPs increased substantially from 913 to 15,863, it continued to suffer from a high FP count of 1974, highlighting its limited specificity. VRNN improved its TPs to 14,415 but also saw its FPs rise to 2419, showing poor discrimination between diabetic and non-diabetic samples. ResNet, although powerful in spatial feature extraction, recorded a high FN count of 6158 after balancing, suggesting that it cannot capture temporal relationships. Likewise, LeNet only marginally increased its TPs and had a high FN count of 3034, indicating that its shallow architecture struggles with complex medical data and class imbalance. Although ProWSyn enhances the performance of all these models, EchoceptionNet remains the best option for diabetes prediction because of its integrated treatment of temporal and spatial information.
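Because the comparison above moves between raw confusion-matrix counts and derived metrics, the following minimal sketch shows how sensitivity, specificity, precision, and F1-score follow from TP, FP, FN, and TN. The example counts are hypothetical and not taken from the paper's tables; the last line checks that the F1-scores quoted for GRU and LSTM are consistent with their reported precision and recall.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Recall / true-positive rate: share of diabetic cases that are detected."""
    return tp / (tp + fn)

def specificity(tn: int, fp: int) -> float:
    """True-negative rate: share of non-diabetic cases correctly rejected."""
    return tn / (tn + fp)

def precision(tp: int, fp: int) -> float:
    """Share of positive predictions that are actually diabetic."""
    return tp / (tp + fp)

def f1_score(prec: float, rec: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * prec * rec / (prec + rec)

# Hypothetical counts for illustration (not taken from the paper's tables).
tp, fp, fn, tn = 90, 10, 20, 80
p, r = precision(tp, fp), sensitivity(tp, fn)
print(f"precision={p:.3f} recall={r:.3f} specificity={specificity(tn, fp):.3f} F1={f1_score(p, r):.3f}")

# Consistency check against the GRU (P=0.83, R=0.72) and LSTM (P=0.53, R=0.70) figures in the text.
print(round(f1_score(0.83, 0.72), 2), round(f1_score(0.53, 0.70), 2))  # 0.77 0.6
```

A large FP count lowers specificity and precision without affecting sensitivity, which is why TCN and VRNN can gain TPs after balancing yet still separate the two classes poorly.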

The temporal pattern detection component of the proposed EchoceptionNet is critical for preserving the temporal dependencies in the data. Temporal features such as periodic changes in insulin level, which are among the most decisive signs of diabetes progression, cannot be handled effectively by conventional DL models such as DNN or GRU. To address this challenge, EchoceptionNet uses sparse, randomly connected neurons within this module to identify temporal patterns without the vanishing gradients that usually affect recurrent architectures (Table 7, Lines 1, 2, and 12). Meanwhile, the spatial component employs multi-scale convolutional filters to capture feature interactions at various scales. By addressing both local dependencies and global trends within the dataset, EchoceptionNet achieved the highest F1-score of 0.95 and a precision of 0.97, as shown in Figure 2.
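The following minimal sketch illustrates a sparse, randomly connected reservoir of the kind used in echo state networks. The recurrent weights `w` and input weights `w_in` are drawn once and never trained (in an ESN only a readout on the reservoir states is fitted), so no gradients are propagated through the recurrence. As simplifying assumptions, the reservoir is rescaled by its maximum absolute row sum rather than by its spectral radius, because keeping that norm below 1 makes the tanh update a contraction (a sufficient condition for the echo state property), and the input is a hypothetical sequence of standardized feature values fed one per step. All names and sizes are illustrative, not EchoceptionNet's configuration.

```python
import math
import random
from typing import List, Optional

def make_reservoir(n: int, density: float, target_norm: float, seed: int = 0) -> List[List[float]]:
    """Sparse random recurrent weights, rescaled so the max absolute row sum equals target_norm."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1.0, 1.0) if rng.random() < density else 0.0 for _ in range(n)]
         for _ in range(n)]
    norm = max(sum(abs(v) for v in row) for row in w) or 1.0
    return [[v * target_norm / norm for v in row] for row in w]

def run_reservoir(inputs: List[float], w_in: List[float], w: List[List[float]],
                  leak: float = 1.0, x0: Optional[List[float]] = None) -> List[List[float]]:
    """Leaky-integrator update x <- (1 - leak) * x + leak * tanh(w_in * u + W x) for each input u."""
    n = len(w)
    x = list(x0) if x0 is not None else [0.0] * n
    states = []
    for u in inputs:
        pre = [w_in[i] * u + sum(w[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [(1.0 - leak) * x[i] + leak * math.tanh(pre[i]) for i in range(n)]
        states.append(x)
    return states

n = 20
w = make_reservoir(n, density=0.2, target_norm=0.9)
rng = random.Random(1)
w_in = [rng.uniform(-0.5, 0.5) for _ in range(n)]
seq = [0.3, 1.2, -0.5, 0.8] * 10  # hypothetical standardized feature values, one per step

# Echo state property: two runs from different initial states forget their start.
states_a = run_reservoir(seq, w_in, w)
states_b = run_reservoir(seq, w_in, w, x0=[1.0] * n)
gap = max(abs(p - q) for p, q in zip(states_a[-1], states_b[-1]))
print(f"final state gap: {gap:.2e}")
```

Since each step shrinks the difference between the two runs by at least a factor of 0.9, the final states depend on the input history rather than on the initial state, which is what allows a trained readout to use them as temporal features.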

There is a noticeable difference between EchoceptionNet and InceptionNet, the model with the second-best performance. Despite achieving a commendable accuracy of 0.91, InceptionNet was unable to fully capture the sequential nature of the dataset due to its lack of temporal feature extraction, as shown in

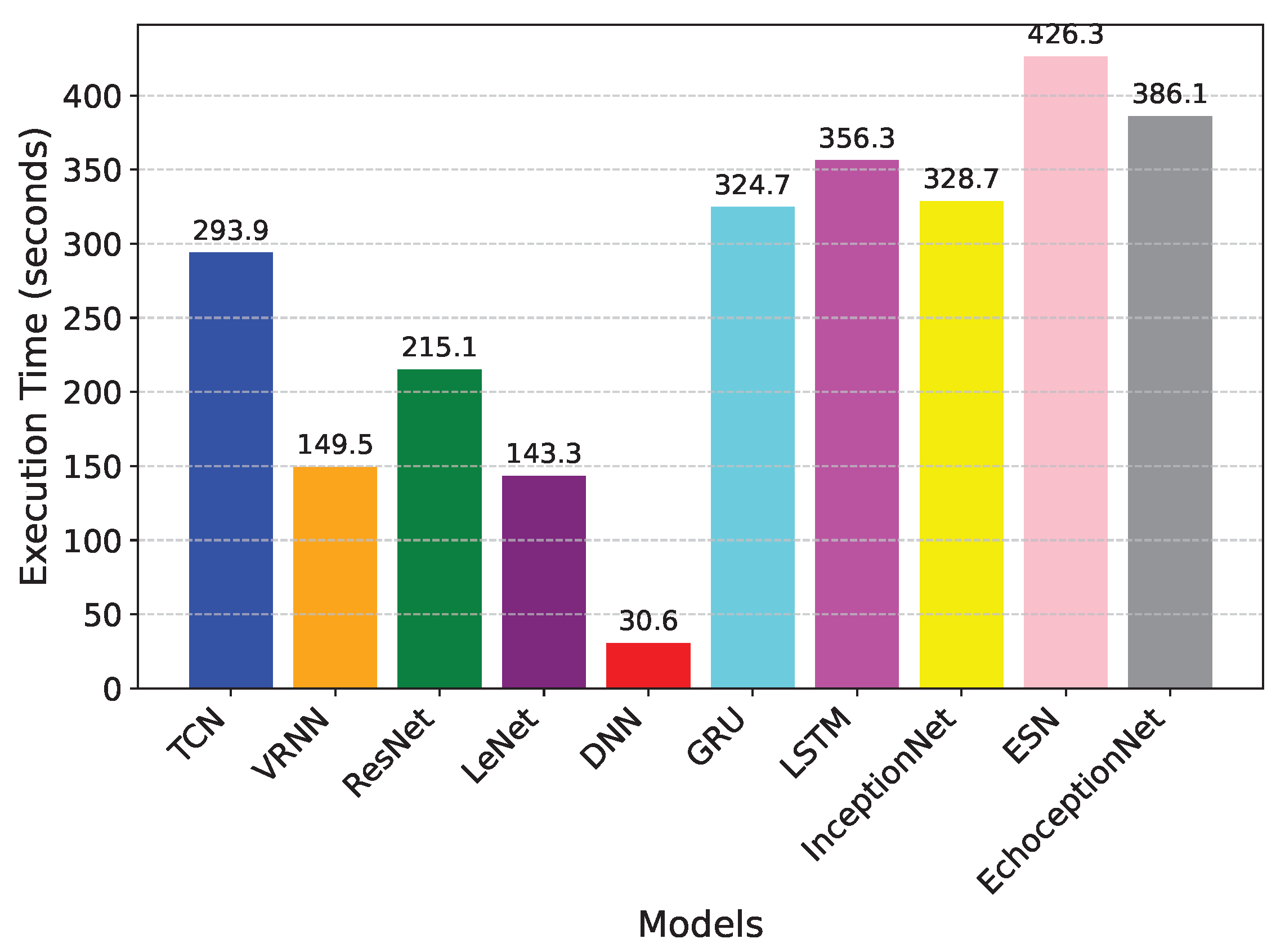

Figure 2. Its lower recall of 0.91, which indicates that it missed a larger percentage of TP cases as compared to EchoceptionNet’s recall of 0.93, reflects this weakness. The EchoceptionNet’s computational efficiency is further demonstrated by InceptionNet’s longer execution time of 328.7 s, although it has a simpler architecture than does the two-module EchoceptionNet. The execution time comparison is visualized in

Figure 3.

DNN, which is employed in many classification tasks, was considerably less accurate than EchoceptionNet, with an accuracy of 0.81. For healthcare datasets with intricate interdependencies, DNN's generalization is constrained by its reliance on fully connected layers and its inability to capture temporal dependencies. Its recall of 0.64 reveals a high rate of FNs (missed diabetic cases), which is especially harmful in medical applications where accurate detection is crucial. DNN's core strength is its computational speed. This efficiency is useful in low-latency applications, but it comes at the expense of predictive power, making DNN unsuitable for applications that require high accuracy and dependability, such as disease prediction.

GRU also showed moderate performance, with an accuracy of 0.78 and a recall of 0.72. Although GRU is designed for modeling sequential data, it lacks the architectural complexity required to capture intricate spatial feature relationships, which are crucial in medical datasets such as those used for diabetes prediction. GRU simplifies the internal mechanisms of the LSTM by combining the forget and input gates into a single update gate and merging the cell and hidden states, leading to faster training and lower memory usage. However, this simplification compromises its capacity to learn deep spatial representations, resulting in a lower F1-score of 0.77 and a precision of 0.83. Compared with LSTM, which achieved a similar recall (0.70) but a much lower precision (0.53) and F1-score (0.60), GRU generalizes better. Nevertheless, both models fall short of the performance required for accurate diabetes classification. Additionally, GRU's execution time of 324.7 s is comparable to the 386.1 s of the proposed EchoceptionNet, yet EchoceptionNet significantly outperformed GRU on all performance metrics, reaching an accuracy of 0.95 and an F1-score of 0.95. This highlights the importance of integrating both spatial and temporal learning, as EchoceptionNet does, for reliable disease prediction.
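The memory saving from GRU's simplification can be quantified with a short parameter count. In the textbook formulation, each gate block has an input weight matrix, a recurrent weight matrix, and one bias vector; an LSTM layer has four such blocks (input, forget, and output gates plus the candidate) and a GRU layer three (update and reset gates plus the candidate), so a GRU layer has 25% fewer parameters for the same sizes. The sketch below assumes that formulation and a hypothetical input size of 8 features with 64 hidden units; frameworks such as PyTorch keep two bias vectors per block, so their reported counts are slightly higher.

```python
def rnn_params(input_dim: int, hidden: int, gate_blocks: int) -> int:
    """Input weights (hidden x input), recurrent weights (hidden x hidden), and one bias per gate block."""
    return gate_blocks * (hidden * input_dim + hidden * hidden + hidden)

def lstm_params(input_dim: int, hidden: int) -> int:
    return rnn_params(input_dim, hidden, 4)  # input, forget, output gates + candidate

def gru_params(input_dim: int, hidden: int) -> int:
    return rnn_params(input_dim, hidden, 3)  # update, reset gates + candidate

d, h = 8, 64  # hypothetical feature count and hidden size
print(lstm_params(d, h), gru_params(d, h), gru_params(d, h) / lstm_params(d, h))
```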

With accuracies of 0.68 and 0.73, respectively, ResNet and LeNet were among the worst-performing models evaluated. ResNet works well with image data but struggles with tabular datasets, because its residual connections are of limited use for capturing the non-linear dependencies in this setting. Its poor F1-score of 0.55 further shows that it fails to balance recall and precision, making it unreliable for medical prediction. LeNet, which was originally created for image classification, has similar difficulties. Its recall of 0.47, attributable to its fixed kernel sizes and lack of temporal pattern recognition mechanisms, indicates a high rate of missed diabetic cases. Although its execution time of 143 s is shorter than that of most models, its predictive capability is inadequate for practical healthcare applications.

The performance of VRNN and TCN also suggests that healthcare data are complex and require more refined DL models. VRNN reached an accuracy of 0.70 but a recall of only 0.56, meaning that it missed a large share of diabetic cases. Conversely, TCN achieved a higher recall of 0.90 but a low precision of 0.72, resulting in an overall accuracy of 0.78. Both models therefore trade one type of error for the other: VRNN sacrifices sensitivity, whereas TCN gains sensitivity at the cost of specificity, a balance that is crucial in medical prediction.
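The sensitivity–specificity trade-off can be made concrete by moving the decision threshold applied to a classifier's scores. The minimal sketch below uses hypothetical scores and labels (not model outputs from this study): lowering the threshold catches every diabetic case but admits more false positives, while raising it does the opposite.

```python
from typing import List, Tuple

def rates_at(scores: List[float], labels: List[int], threshold: float) -> Tuple[float, float]:
    """Sensitivity and specificity when predicting 'diabetic' (1) for score >= threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical predicted probabilities; label 1 = diabetic, 0 = non-diabetic.
scores = [0.95, 0.80, 0.65, 0.55, 0.45, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
for t in (0.3, 0.5, 0.7):
    sens, spec = rates_at(scores, labels, t)
    print(f"threshold={t}: sensitivity={sens:.2f} specificity={spec:.2f}")
```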

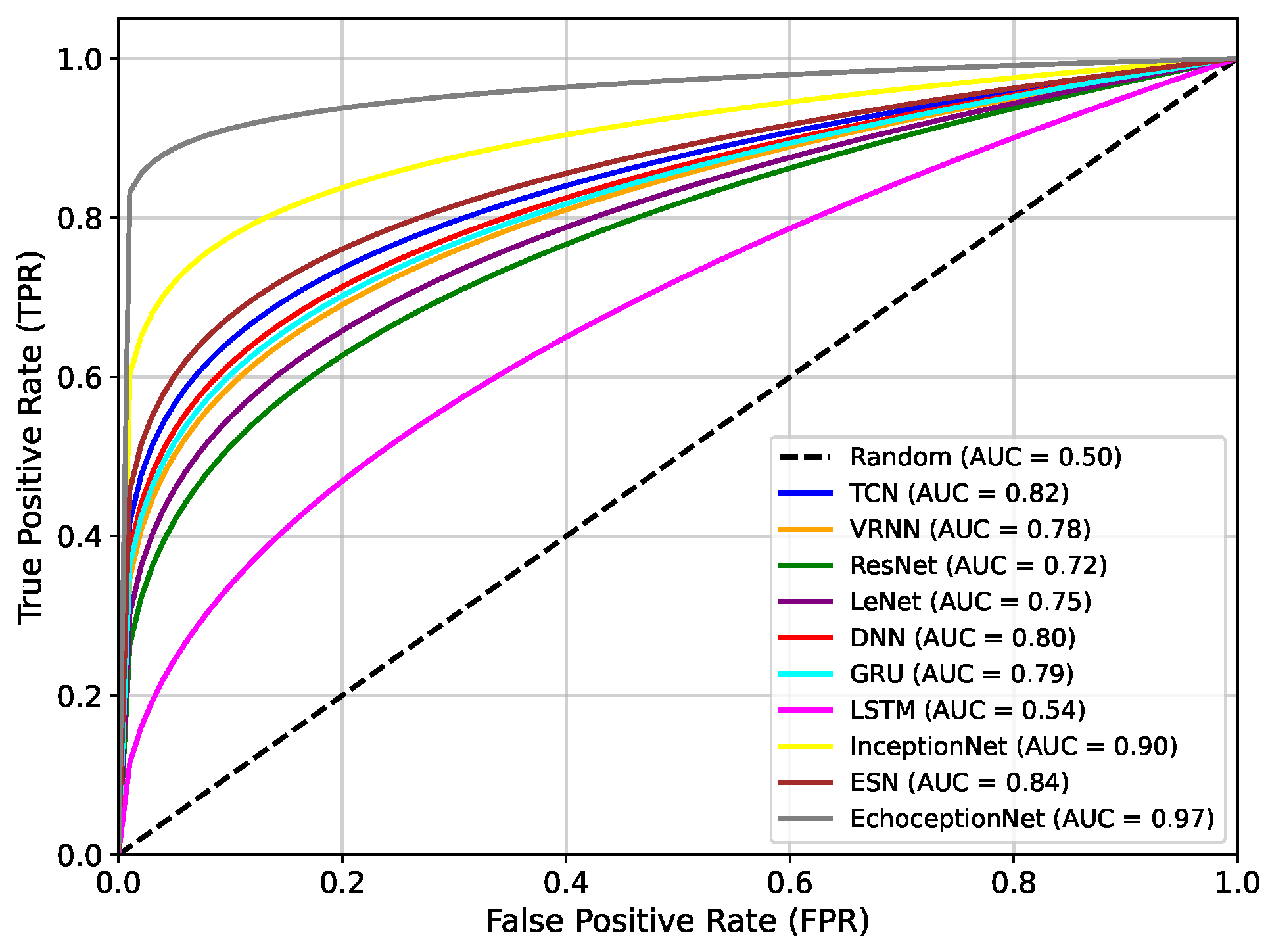

Figure 4 presents the area under the receiver operating characteristic curve (AUC-ROC) comparison of the proposed EchoceptionNet against various baseline DL models. AUC is a critical metric for evaluating a classifier’s ability to distinguish between positive (diabetic) and negative (non-diabetic) classes across all decision thresholds. Higher AUC values indicate better discrimination capabilities. The proposed EchoceptionNet achieved the highest AUC of 0.97, demonstrating superior predictive power and robustness in distinguishing diabetic patients from non-diabetic ones. This remarkable performance is attributed to its hybrid architecture that integrates both temporal and spatial feature extraction, enabling it to learn complex nonlinear patterns in the dataset effectively.
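The AUC has an equivalent probabilistic reading that is easy to compute directly: it is the probability that a randomly chosen diabetic case receives a higher score than a randomly chosen non-diabetic case, with ties counted as one half (the Mann–Whitney statistic). The minimal sketch below computes it by comparing all positive–negative pairs; the scores are hypothetical, and for large test sets a rank-based or library implementation would be preferable to this quadratic loop.

```python
from typing import List

def auc(pos_scores: List[float], neg_scores: List[float]) -> float:
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos_scores) * len(neg_scores))

# Hypothetical scores for diabetic (positive) and non-diabetic (negative) cases.
pos = [0.95, 0.80, 0.65, 0.55, 0.45]
neg = [0.60, 0.40, 0.30, 0.20, 0.10]
print(auc(pos, neg))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, which gives a scale for comparing the values reported for the different models.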

InceptionNet showed the second-best performance, with an AUC of 0.90. Although it performs well in spatial feature detection due to its multi-scale convolutional architecture, it lacks dedicated temporal modeling, which limits its sensitivity to sequential medical patterns such as blood glucose trends over time. ESN achieved an AUC of 0.84, benefiting from its reservoir-based temporal learning. However, its limited feature extraction capabilities and static internal weights hinder adaptability to complex patterns in the feature space. TCN and DNN registered AUC values of 0.82 and 0.80, respectively. TCN effectively models temporal dependencies using causal convolutions but struggles with spatial generalization. DNN, while good at static pattern recognition, lacks mechanisms for modeling temporal dynamics, limiting its ability to adapt to fluctuating patient profiles.
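The causal convolutions that TCN relies on can be sketched in a few lines. In the minimal example below, output step t depends only on inputs at steps t, t - d, t - 2d, and so on, so no future information leaks into a prediction; a dilation d greater than 1 widens the temporal context without adding weights. The function names are hypothetical, kernel[0] is assumed to weight the current step, and the receptive-field helper assumes one convolution per dilation level (TCN residual blocks often stack two, which doubles the (k - 1) term).

```python
from typing import List

def causal_dilated_conv(x: List[float], kernel: List[float], dilation: int = 1) -> List[float]:
    """y[t] = sum_j kernel[j] * x[t - j * dilation], treating steps before t = 0 as zeros."""
    out = []
    for t in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = t - j * dilation
            if idx >= 0:  # only current and past steps contribute (causality)
                acc += w * x[idx]
        out.append(acc)
    return out

def receptive_field(kernel_size: int, dilations: List[int]) -> int:
    """Receptive field of stacked causal convolutions, one per dilation level."""
    return 1 + (kernel_size - 1) * sum(dilations)

impulse = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
print(causal_dilated_conv(impulse, [1.0, 1.0], dilation=2))
print(receptive_field(3, [1, 2, 4]))
```

The impulse at step 3 reappears only at steps 3 and 5, never earlier, which is the causality property that lets TCN model temporal dependencies while leaving cross-feature (spatial) interactions largely unaddressed.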

GRU recorded an AUC of 0.79, slightly lower than that of TCN and DNN. Its recurrent structure captures sequential data moderately well, but the absence of spatial feature modeling causes it to underperform in complex multimodal datasets.

VRNN and LeNet achieved AUCs of 0.78 and 0.75, respectively. VRNN, a vanilla recurrent network without gating, relies on a single hidden state to model sequences and is prone to overfitting and vanishing gradients. LeNet, being a shallow CNN, lacks both the depth and the temporal modeling needed for feature extraction in high-dimensional structured data. ResNet performed relatively poorly, with an AUC of 0.72; although effective at deep feature extraction, its architecture is primarily suited to image tasks and does not adapt well to sequential tabular data. The lowest AUC, only 0.54, was observed for LSTM. Despite a memory structure well suited to long-term dependencies, LSTM performs poorly in this setting, likely because of overfitting, an excessive number of parameters, and ineffective spatial representation learning.

Overall, the steep and dominant ROC curve of EchoceptionNet validates its ability to make highly accurate predictions across different thresholds, significantly outperforming all other models in both sensitivity and specificity.

The main cause of this superiority is the dual-module architecture of EchoceptionNet, which integrates temporal and spatial feature extraction. The temporal module uses reservoir layers to capture sequential relationships, helping the model identify subtle temporal patterns that are crucial for diagnosing diabetes. The spatial module simultaneously employs multi-scale convolutional filters to detect complex feature interactions that standard DL models often miss, such as the compounding effects of age, hypertension, and BMI. Combined with ProWSyn to address class imbalance, this design gives EchoceptionNet a better-balanced performance than InceptionNet, which excels at spatial extraction but lacks mechanisms for temporal dynamics. The proximity-based oversampling strategy generates synthetic samples near the decision boundary, and the model's higher TP rate suggests that this helps it classify minority diabetic cases. To promote stable convergence while limiting overfitting, EchoceptionNet combines efficient convolutional layers and tuned hyperparameters with the Adam optimizer. These features make EchoceptionNet a robust and clinically reliable model.
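For reference, the Adam update keeps exponential moving averages of the gradient and of its square, corrects both for their zero initialization, and scales each step by the ratio of the two. The minimal sketch below applies the standard update to a one-dimensional quadratic toy problem; the β and ε values are the defaults proposed by Kingma and Ba, while the learning rate of 0.1 and the toy objective are assumptions chosen so the example converges quickly, not EchoceptionNet's training settings. On a convex toy problem Adam reaches the minimum; for non-convex networks it offers no guarantee of a global optimum.

```python
import math
from typing import Callable

def adam_minimize(grad: Callable[[float], float], x0: float, lr: float = 0.1,
                  beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
                  steps: int = 1000) -> float:
    """Minimize a 1-D function given its gradient, using the standard Adam update."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # first-moment (mean) estimate
        v = beta2 * v + (1 - beta2) * g * g  # second-moment (uncentered variance) estimate
        m_hat = m / (1 - beta1 ** t)         # bias corrections for zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Toy objective f(x) = (x - 3)^2 with gradient 2 * (x - 3); the minimum is at x = 3.
x_star = adam_minimize(lambda x: 2 * (x - 3.0), x0=0.0)
print(x_star)
```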