Automatic Detection of Cognitive Impairment Through Facial Emotion Analysis

Abstract

1. Introduction

- We address the problem of detecting cognitive impairment (CI) from its earliest stages, including mild cognitive impairment (MCI), using non-invasive and cost-effective techniques.

- We validate the hypothesis that facial expressions elicited by emotional stimuli differ between cognitively impaired and healthy subjects.

- To this end, we propose an AI framework based on facial emotion data that uses a dimensional model of affect (valence and arousal). We design and test an emotion elicitation protocol based on standardized stimuli.

- We test our system on video recordings of cognitively impaired and healthy control subjects, with ground-truth CI labels established by a comprehensive neurological and neurocognitive assessment.

2. Background

2.1. Deep Learning for CI Detection Using Facial Features

2.2. Automated Facial Emotion Recognition

3. Materials and Methods

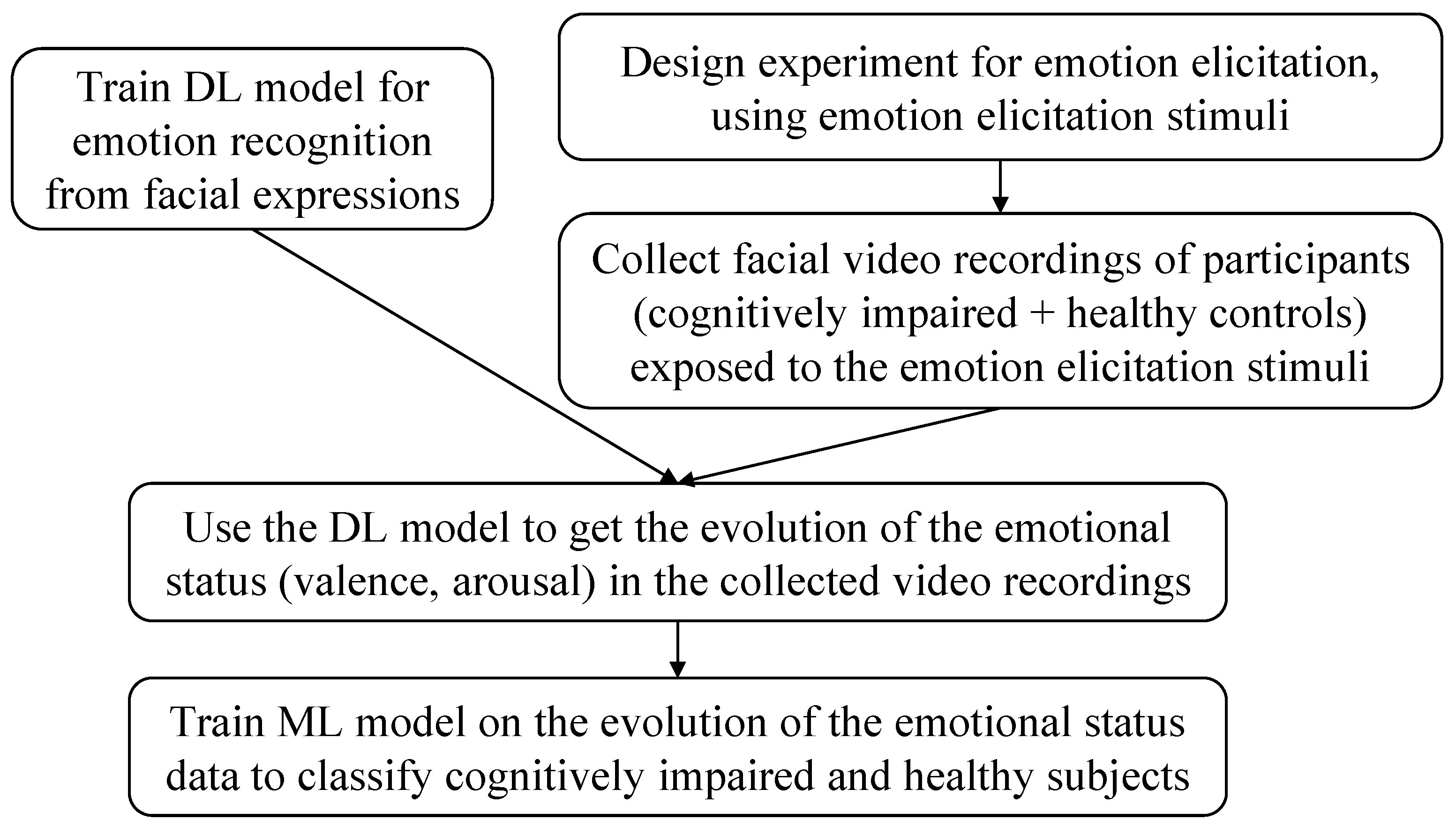

3.1. System Overview

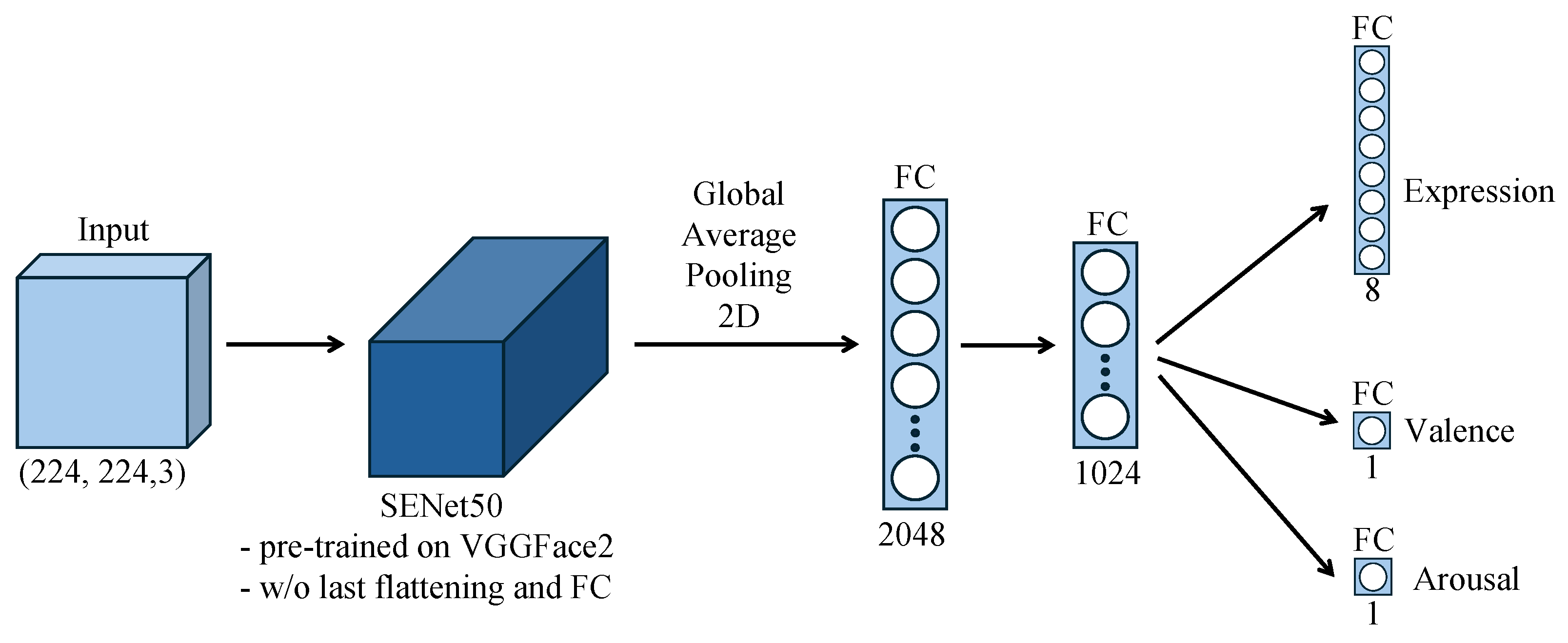

3.2. Facial Emotion Recognition Model

3.2.1. AffectNet Dataset

3.2.2. Model Architecture

3.2.3. Model Training

3.2.4. Model Evaluation

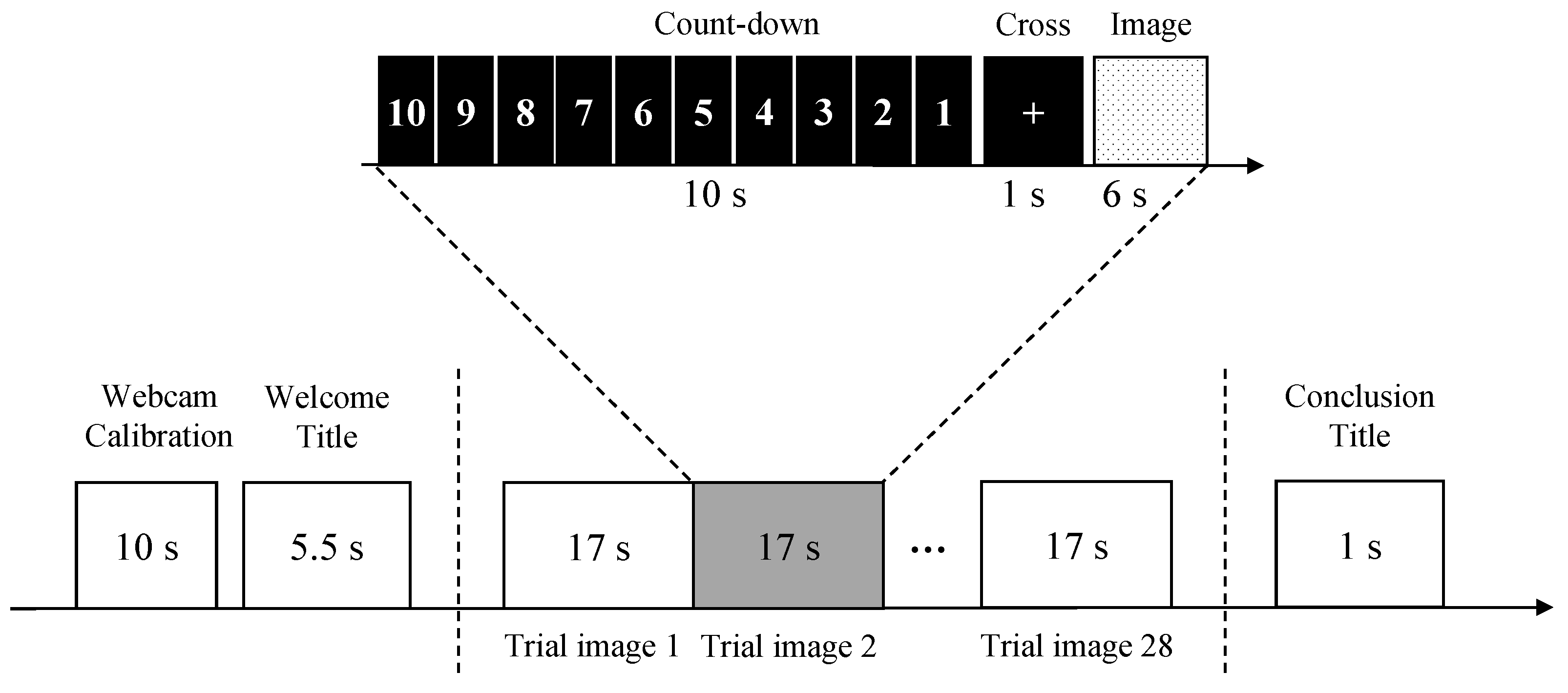

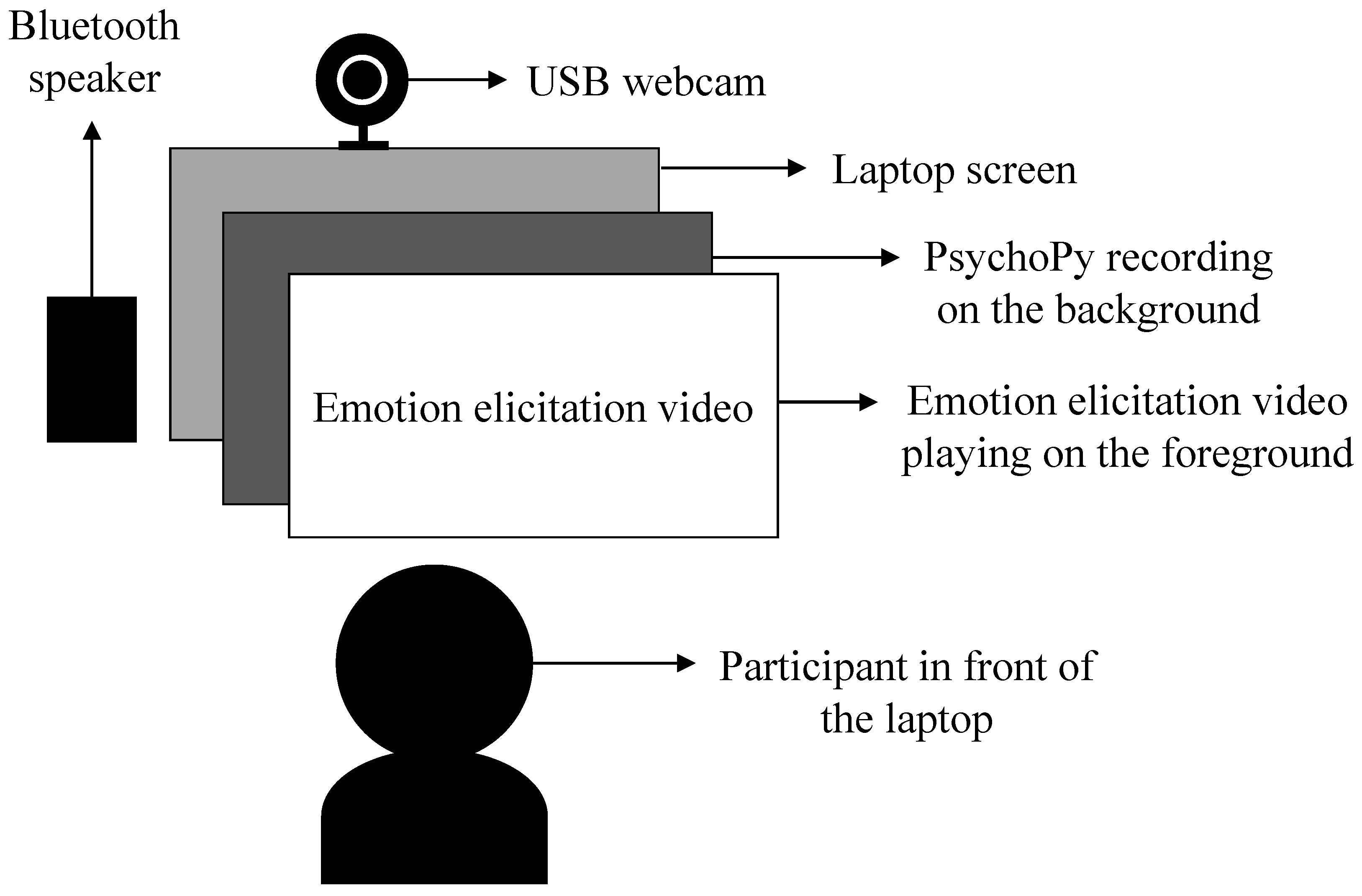

3.3. Data Collection Protocol

3.3.1. Emotion Elicitation Stimuli

3.3.2. Experimental Setup

3.3.3. Participants

3.4. Classification of Cognitively Impaired and Healthy Control Subjects

3.4.1. Emotional State Detection

3.4.2. Machine Learning Model Selection and Evaluation

4. Results

4.1. Facial Emotion Recognition

4.2. Cognitive Impairment Detection

| Model | Valence RMSE | Valence CCC | Arousal RMSE | Arousal CCC |
|---|---|---|---|---|
| AffectNet benchmark [20] | 0.394 | 0.541 | 0.402 | 0.450 |
| CNN, = 0.4, = 0.6 | 0.4709 | 0.5013 | 0.391 | 0.3679 |
| CNN, = 0.5, = 0.5 | 0.4539 | 0.5105 | 0.3757 | 0.4213 |
| CNN, = 0.6, = 0.4 | 0.4661 | 0.4953 | 0.379 | 0.4102 |
| CNN, = 0.4, = 0.5 | 0.4372 | 0.5292 | 0.379 | 0.4067 |
| CNN, = 0.5, = 0.4 | 0.4567 | 0.5139 | 0.3898 | 0.3755 |
| CNN, = 0.3, = 0.5 | 0.4415 | 0.5377 | 0.3742 | 0.4163 |
| CNN, = 0.4, = 0.4 | 0.4462 | 0.5286 | 0.3827 | 0.3940 |
| CNN, = 0.5, = 0.3 | 0.4545 | 0.5240 | 0.3780 | 0.3947 |
| CNN, = 0.3, = 0.4 | 0.4360 | 0.5386 | 0.3641 | 0.4451 |
| CNN, = 0.4, = 0.3 | 0.4332 | 0.5571 | 0.3685 | 0.4491 |
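The table reports root mean squared error (RMSE) and the concordance correlation coefficient (CCC) for valence and arousal regression. As a minimal sketch, assuming the usual definitions (Lin's CCC computed with population, i.e. biased, moments), both metrics can be computed with NumPy as below. The function names `rmse` and `ccc` are illustrative and not taken from the paper, and the exact estimator used by the authors is an assumption.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error between ground-truth and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def ccc(y_true, y_pred):
    """Lin's concordance correlation coefficient.

    CCC = 2 * cov(x, y) / (var(x) + var(y) + (mean(x) - mean(y)) ** 2),
    using population (biased) variance and covariance. Undefined (division
    by zero) if both inputs are the same constant.
    """
    x = np.asarray(y_true, dtype=float)
    y = np.asarray(y_pred, dtype=float)
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    return float(2.0 * cov / (x.var() + y.var() + (mx - my) ** 2))
```

For example, `ccc([1, 2, 3], [2, 3, 4])` returns 4/7 (about 0.571) even though the Pearson correlation of these two sequences is 1: CCC also penalizes the constant offset between prediction and ground truth, which is why it is preferred over plain correlation for valence/arousal agreement. `rmse` of the same pair is 1.0.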

| Classifier | Optimal Parameter Combination | Accuracy | F1 Score |
|---|---|---|---|
| KNN | 5 neighbors, Manhattan distance | 0.767 ± 0.062 | 0.754 ± 0.077 |
| LR | L2 penalty, tolerance = 0.0001, C = 100 | 0.583 ± 0.105 | 0.593 ± 0.102 |
| SVM | linear kernel, tolerance = 0.001, C = 0.01 | 0.633 ± 0.085 | 0.626 ± 0.089 |
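The three classifiers and their optimal hyperparameters map directly onto scikit-learn estimators (scikit-learn appears in the reference list). The sketch below is illustrative and not the authors' pipeline: only the classifiers and the hyperparameter values come from the table. The standardization step, the 5-fold stratified cross-validation, the helper names `build_models` and `evaluate`, and the random placeholder features are all assumptions. It reports mean ± standard deviation of accuracy and F1 across folds, in the same form as the table.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def build_models():
    """Classifiers configured with the hyperparameters reported in the table."""
    return {
        # 5 neighbors, Manhattan (L1) distance
        "KNN": KNeighborsClassifier(n_neighbors=5, metric="manhattan"),
        # L2 is scikit-learn's default penalty for LogisticRegression
        "LR": LogisticRegression(C=100, tol=1e-4, max_iter=1000),
        # Linear kernel with strong regularization (small C)
        "SVM": SVC(kernel="linear", C=0.01, tol=1e-3),
    }


def evaluate(X, y, n_splits=5, seed=0):
    """Mean and std of accuracy and F1 over stratified k-fold cross-validation.

    ASSUMPTION: the scaler and the number of folds are illustrative choices,
    not taken from the paper.
    """
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    results = {}
    for name, clf in build_models().items():
        pipe = make_pipeline(StandardScaler(), clf)
        scores = cross_validate(pipe, X, y, cv=cv, scoring=("accuracy", "f1"))
        acc, f1 = scores["test_accuracy"], scores["test_f1"]
        results[name] = (acc.mean(), acc.std(), f1.mean(), f1.std())
    return results


if __name__ == "__main__":
    # HYPOTHETICAL placeholder data: 32 "CI" (label 1) and 28 "HC" (label 0)
    # subjects, matching only the cohort sizes; the features are random.
    rng = np.random.default_rng(0)
    y = np.array([1] * 32 + [0] * 28)
    X = rng.normal(size=(60, 8))
    X[y == 1] += 0.5  # inject a weak class difference so the task is learnable
    for name, (am, asd, fm, fsd) in evaluate(X, y).items():
        print(f"{name}: accuracy {am:.3f} ± {asd:.3f}, F1 {fm:.3f} ± {fsd:.3f}")
```

Wrapping each estimator in a pipeline keeps the scaler fitted on the training folds only, so no information from the held-out fold leaks into preprocessing; this matters especially for KNN, whose Manhattan distances depend on feature scale.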

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. World Health Organization. Global Status Report on the Public Health Response to Dementia; World Health Organization: Geneva, Switzerland, 2021.

2. Koyama, A.; Okereke, O.I.; Yang, T.; Blacker, D.; Selkoe, D.J.; Grodstein, F. Plasma amyloid-β as a predictor of dementia and cognitive decline: A systematic review and meta-analysis. Arch. Neurol. 2012, 69, 824–831.

3. Alexander, G.C.; Emerson, S.; Kesselheim, A.S. Evaluation of aducanumab for Alzheimer disease: Scientific evidence and regulatory review involving efficacy, safety, and futility. JAMA 2021, 325, 1717–1718.

4. van Dyck, C.H.; Swanson, C.J.; Aisen, P.; Bateman, R.J.; Chen, C.; Gee, M.; Kanekiyo, M.; Li, D.; Reyderman, L.; Cohen, S.; et al. Lecanemab in Early Alzheimer’s Disease. N. Engl. J. Med. 2023, 388, 9–21.

5. Jack, C.R., Jr.; Bennett, D.A.; Blennow, K.; Carrillo, M.C.; Dunn, B.; Haeberlein, S.B.; Holtzman, D.M.; Jagust, W.; Jessen, F.; Karlawish, J.; et al. NIA-AA research framework: Toward a biological definition of Alzheimer’s disease. Alzheimer’s Dement. 2018, 14, 535–562.

6. Chen, K.H.; Lwi, S.J.; Hua, A.Y.; Haase, C.M.; Miller, B.L.; Levenson, R.W. Increased subjective experience of non-target emotions in patients with frontotemporal dementia and Alzheimer’s disease. Curr. Opin. Behav. Sci. 2017, 15, 77–84.

7. Pressman, P.S.; Chen, K.H.; Casey, J.; Sillau, S.; Chial, H.J.; Filley, C.M.; Miller, B.L.; Levenson, R.W. Incongruences between facial expression and self-reported emotional reactivity in frontotemporal dementia and related disorders. J. Neuropsychiatry Clin. Neurosci. 2023, 35, 192–201.

8. Sun, J.; Dodge, H.H.; Mahoor, M.H. MC-ViViT: Multi-branch Classifier-ViViT to detect Mild Cognitive Impairment in older adults using facial videos. Expert Syst. Appl. 2024, 238, 121929.

9. Umeda-Kameyama, Y.; Kameyama, M.; Tanaka, T.; Son, B.K.; Kojima, T.; Fukasawa, M.; Iizuka, T.; Ogawa, S.; Iijima, K.; Akishita, M. Screening of Alzheimer’s disease by facial complexion using artificial intelligence. Aging 2021, 13, 1765–1772.

10. Fei, Z.; Yang, E.; Yu, L.; Li, X.; Zhou, H.; Zhou, W. A Novel deep neural network-based emotion analysis system for automatic detection of mild cognitive impairment in the elderly. Neurocomputing 2022, 468, 306–316.

11. Alsuhaibani, M.; Fard, A.P.; Sun, J.; Poor, F.F.; Pressman, P.S.; Mahoor, M.H. A Review of Deep Learning Approaches for Non-Invasive Cognitive Impairment Detection. arXiv 2024. Available online: http://arxiv.org/abs/2410.19898 (accessed on 29 January 2025).

12. Dodge, H.H.; Yu, K.; Wu, C.Y.; Pruitt, P.J.; Asgari, M.; Kaye, J.A.; Hampstead, B.M.; Struble, L.; Potempa, K.; Lichtenberg, P.; et al. Internet-Based Conversational Engagement Randomized Controlled Clinical Trial (I-CONECT) Among Socially Isolated Adults 75+ Years Old With Normal Cognition or Mild Cognitive Impairment: Topline Results. Gerontol. 2023, 64, gnad147.

13. Zheng, C.; Bouazizi, M.; Ohtsuki, T.; Kitazawa, M.; Horigome, T.; Kishimoto, T. Detecting Dementia from Face-Related Features with Automated Computational Methods. Bioengineering 2023, 10, 862.

14. Kishimoto, T.; Takamiya, A.; Liang, K.; Funaki, K.; Fujita, T.; Kitazawa, M.; Yoshimura, M.; Tazawa, Y.; Horigome, T.; Eguchi, Y.; et al. The project for objective measures using computational psychiatry technology (PROMPT): Rationale, design, and methodology. Contemp. Clin. Trials Commun. 2020, 19, 100649.

15. Jiang, Z.; Seyedi, S.; Haque, R.U.; Pongos, A.L.; Vickers, K.L.; Manzanares, C.M.; Lah, J.J.; Levey, A.I.; Clifford, G.D. Automated analysis of facial emotions in subjects with cognitive impairment. PLoS ONE 2022, 17, e0262527.

16. Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Personal. Soc. Psychol. 1971, 17, 124–129.

17. Plutchik, R. A psychoevolutionary theory of emotions. Soc. Sci. Inf. 1982, 21, 529–553.

18. Ekman, P.; Friesen, W.V. Facial action coding system. Environ. Psychol. Nonverbal Behav. 1978.

19. Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

20. Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2017, 10, 18–31.

21. Schoneveld, L.; Othmani, A. Towards a General Deep Feature Extractor for Facial Expression Recognition. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2339–2342.

22. Singh, S.; Nasoz, F. Facial Expression Recognition with Convolutional Neural Networks. In Proceedings of the 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2020; pp. 0324–0328.

23. Handrich, S.; Dinges, L.; Al-Hamadi, A.; Werner, P.; Al Aghbari, Z. Simultaneous prediction of valence/arousal and emotions on AffectNet, Aff-Wild and AFEW-VA. Procedia Comput. Sci. 2020, 170, 634–641.

24. Teixeira, T.; Granger, E.; Lameiras Koerich, A. Continuous Emotion Recognition with Spatiotemporal Convolutional Neural Networks. Appl. Sci. 2021, 11, 11738.

25. Li, J.; Jin, K.; Zhou, D.; Kubota, N.; Ju, Z. Attention mechanism-based CNN for facial expression recognition. Neurocomputing 2020, 411, 340–350.

26. Huang, Q.; Huang, C.; Wang, X.; Jiang, F. Facial expression recognition with grid-wise attention and visual transformer. Inf. Sci. 2021, 580, 35–54.

27. Xiaohua, W.; Muzi, P.; Lijuan, P.; Min, H.; Chunhua, J.; Fuji, R. Two-level attention with two-stage multi-task learning for facial emotion recognition. J. Vis. Commun. Image Represent. 2019, 62, 217–225.

28. Karnati, M.; Seal, A.; Bhattacharjee, D.; Yazidi, A.; Krejcar, O. Understanding Deep Learning Techniques for Recognition of Human Emotions Using Facial Expressions: A Comprehensive Survey. IEEE Trans. Instrum. Meas. 2023, 72, 1–31.

29. Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 29 January 2025).

30. Peirce, J.; Gray, J.R.; Simpson, S.; MacAskill, M.; Höchenberger, R.; Sogo, H.; Kastman, E.; Lindeløv, J.K. PsychoPy2: Experiments in behavior made easy. Behav. Res. Methods 2019, 51, 195–203.

31. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

32. Ngo, Q.; Yoon, S. Facial Expression Recognition Based on Weighted-Cluster Loss and Deep Transfer Learning Using a Highly Imbalanced Dataset. Sensors 2020, 20, 2639.

33. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

34. Cao, Q.; Shen, L.; Xie, W.; Parkhi, O.M.; Zisserman, A. VGGFace2: A Dataset for Recognising Faces across Pose and Age. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 67–74.

35. Keras VGGFace. VGGFace Implementation with Keras Framework. Available online: https://github.com/rcmalli/keras-vggface (accessed on 29 January 2025).

36. Yik, M.; Mues, C.; Sze, I.N.; Kuppens, P.; Tuerlinckx, F.; De Roover, K.; Kwok, F.H.; Schwartz, S.H.; Abu-Hilal, M.; Adebayo, D.F.; et al. On the relationship between valence and arousal in samples across the globe. Emotion 2023, 23, 332–344.

37. Nandy, R.; Nandy, K.; Walters, S.T. Relationship between valence and arousal for subjective experience in a real-life setting for supportive housing residents: Results from an ecological momentary assessment study. JMIR Form. Res. 2023, 7, e34989.

38. Parthasarathy, S.; Busso, C. Jointly Predicting Arousal, Valence and Dominance with Multi-Task Learning. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 1103–1107.

39. Horvat, M.; Kukolja, D.; Ivanec, D. Comparing affective responses to standardized pictures and videos: A study report. In Proceedings of the 38th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 25–29 May 2015; pp. 1394–1398.

40. Lang, P.J.; Bradley, M.M.; Cuthbert, B.N. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual; Technical Report A-8; University of Florida, NIMH Center for the Study of Emotion and Attention: Gainesville, FL, USA, 2008.

41. Bradley, M.M.; Lang, P.J. The International Affective Digitized Sounds (IADS-2): Affective Ratings of Sounds and Instruction Manual; Technical Report B-3; University of Florida, NIMH Center for the Study of Emotion and Attention: Gainesville, FL, USA, 2007.

42. Yuen, K.; Johnston, S.; Martino, F.; Sorger, B.; Formisano, E.; Linden, D.; Goebel, R. Pattern classification predicts individuals’ responses to affective stimuli. Transl. Neurosci. 2012, 3, 278–287.

43. Petrantonakis, P.C.; Hadjileontiadis, L.J. Emotion recognition from brain signals using hybrid adaptive filtering and higher order crossings analysis. IEEE Trans. Affect. Comput. 2010, 1, 81–97.

44. Prajapati, V.; Guha, R.; Routray, A. Multimodal prediction of trait emotional intelligence-Through affective changes measured using non-contact based physiological measures. PLoS ONE 2021, 16, e0254335.

45. Merghani, W.; Davison, A.K.; Yap, M.H. A Review on Facial Micro-Expressions Analysis: Datasets, Features and Metrics. arXiv 2018. Available online: http://arxiv.org/abs/1805.02397 (accessed on 29 January 2025).

46. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. MediaPipe: A Framework for Building Perception Pipelines. arXiv 2019. Available online: http://arxiv.org/abs/1906.08172 (accessed on 29 January 2025).

47. Wen, Z.; Lin, W.; Wang, T.; Xu, G. Distract Your Attention: Multi-Head Cross Attention Network for Facial Expression Recognition. Biomimetics 2023, 8, 199.

48. Ma, F.; Sun, B.; Li, S. Facial Expression Recognition With Visual Transformers and Attentional Selective Fusion. IEEE Trans. Affect. Comput. 2023, 14, 1236–1248.

49. Okunishi, T.; Zheng, C.; Bouazizi, M.; Ohtsuki, T.; Kitazawa, M.; Horigome, T.; Kishimoto, T. Dementia and MCI Detection Based on Comprehensive Facial Expression Analysis From Videos During Conversation. IEEE J. Biomed. Health Inform. 2025, 29, 3537–3548.

| | Cognitively Impaired | Healthy Controls |
|---|---|---|
| Number of subjects | 32 | 28 |
| Age (mean ± standard deviation) | 69.3 ± 8.9 | 58.8 ± 6.9 |
| Sex (number of females, %) | 14 (43.8%) | 14 (50%) |
| Ethnicity | Caucasian | Caucasian |
| Years of education (mean ± standard deviation) | 12.7 ± 5.0 | 15.6 ± 4.8 |
| MMSE score (mean ± standard deviation) | 23.9 ± 5.3 | 29.2 ± 1.2 |
| MoCA score (mean ± standard deviation) | 18.7 ± 5.1 | 25.4 ± 2.2 |
| Severity of cognitive impairment | 23 MCI, 9 overt dementia | No cognitive impairment |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bergamasco, L.; Lorenzo, F.; Coletta, A.; Olmo, G.; Cermelli, A.; Rubino, E.; Rainero, I. Automatic Detection of Cognitive Impairment Through Facial Emotion Analysis. Appl. Sci. 2025, 15, 9103. https://doi.org/10.3390/app15169103

Bergamasco L, Lorenzo F, Coletta A, Olmo G, Cermelli A, Rubino E, Rainero I. Automatic Detection of Cognitive Impairment Through Facial Emotion Analysis. Applied Sciences. 2025; 15(16):9103. https://doi.org/10.3390/app15169103

Chicago/Turabian Style: Bergamasco, Letizia, Federica Lorenzo, Anita Coletta, Gabriella Olmo, Aurora Cermelli, Elisa Rubino, and Innocenzo Rainero. 2025. "Automatic Detection of Cognitive Impairment Through Facial Emotion Analysis" Applied Sciences 15, no. 16: 9103. https://doi.org/10.3390/app15169103

APA Style: Bergamasco, L., Lorenzo, F., Coletta, A., Olmo, G., Cermelli, A., Rubino, E., & Rainero, I. (2025). Automatic Detection of Cognitive Impairment Through Facial Emotion Analysis. Applied Sciences, 15(16), 9103. https://doi.org/10.3390/app15169103