Single-Objective Surrogate Models for Continuous Metaheuristics: An Overview

Abstract

1. Introduction

2. Roles and Benefits of Surrogate Models

2.1. Methods to Express Quality of Points

2.2. Quality-Based Decisions Within the Metaheuristics Framework

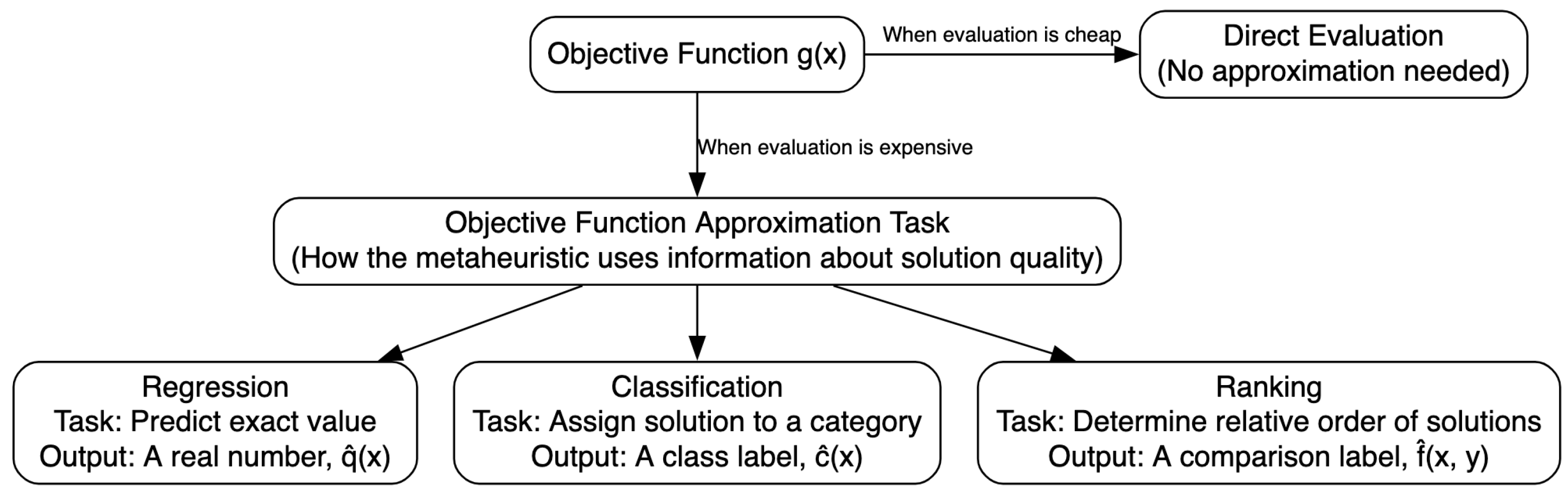

3. Types of Approximation Tasks for Surrogate Models

3.1. Regression Models

3.2. Classification Models

3.3. Ranking Models

4. Surrogate Model Types

4.1. Linear Models

4.2. Quadratic Models

4.3. Polynomial Regression

4.4. Neural Networks

4.5. Decision Trees

4.6. k Nearest Neighbors

4.7. Support Vector Machines

4.8. Radial Basis Functions

4.9. Kriging

4.10. Interpolation and Extrapolation Behavior

5. Structures of Surrogate Models

5.1. Global Surrogate Models

5.2. Local Surrogate Models

5.3. Combined Approaches

5.4. Hierarchical Approaches

6. Strategies of Using and Learning Surrogate Models

- Sampling strategy: which points should be evaluated with the surrogate model and which with the true quality criterion;

- Selection and forgetting strategy: to what degree the surrogate model should adapt to newly acquired samples and forget the older ones.
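The two strategies above can be illustrated with a minimal sketch. It is not taken from any specific algorithm: it assumes a minimization problem, a toy `sphere` function standing in for the expensive true quality criterion, a hand-written k-nearest-neighbors surrogate, and a fixed-size archive that forgets the oldest samples first. All names (`sphere`, `knn_predict`, `prescreen_step`) and parameter values are hypothetical.

```python
import random
from collections import deque


def sphere(x):
    """Toy stand-in for an expensive true quality criterion (an assumption)."""
    return sum(v * v for v in x)


def knn_predict(archive, x, k=3):
    """Surrogate: mean quality of the k archived samples closest to x."""
    nearest = sorted(
        archive,
        key=lambda s: sum((a - b) ** 2 for a, b in zip(s[0], x)),
    )[:k]
    return sum(y for _, y in nearest) / len(nearest)


def prescreen_step(archive, candidates, true_f, n_true):
    """Sampling strategy: rank all candidates with the surrogate and evaluate
    only the n_true most promising ones with the true criterion."""
    ranked = sorted(candidates, key=lambda x: knn_predict(archive, x))
    evaluated = [(x, true_f(x)) for x in ranked[:n_true]]
    # Forgetting strategy: the archive is a deque with a fixed maxlen, so
    # appending new samples discards the oldest ones once it is full.
    archive.extend(evaluated)
    return evaluated


rng = random.Random(0)
dim, archive_size = 2, 20
archive = deque(maxlen=archive_size)

# Initial design: a few truly evaluated random points.
for _ in range(10):
    x = [rng.uniform(-5, 5) for _ in range(dim)]
    archive.append((x, sphere(x)))

true_evals = 10
for _ in range(15):
    # Random candidates stand in for the offspring of a metaheuristic.
    candidates = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(30)]
    evaluated = prescreen_step(archive, candidates, sphere, n_true=3)
    true_evals += len(evaluated)

print("true evaluations used:", true_evals)
print("archive size:", len(archive))
print("best archived quality:", min(y for _, y in archive))
```

In this sketch only 3 of every 30 candidates reach the true criterion, and the archive never exceeds 20 samples. Other choices, such as evaluating the most uncertain points or forgetting the samples farthest from the current population, would slot into the same two places.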

6.1. Sampling Strategy

6.2. Forgetting Strategy

7. Concluding Remarks

7.1. Summary

7.2. A Proposed Decision Framework

- Identify the Approximation Task: The first and most critical step is to determine the fundamental task the surrogate must perform. This depends entirely on the needs of the chosen metaheuristic: does it require regression (predicting exact values), classification (assigning categories), or ranking (determining relative order)?

- Select the Model Type: Once the task is defined, select a specific model (e.g., RBF, Random Forest, or Kriging) by evaluating trade-offs such as the model’s complexity, its training cost, and its ability to handle the problem’s specific characteristics, such as high dimensionality or discontinuities.

- Determine the Model Structure: Consider if the problem requires a more advanced architecture than a single global model. Choices include using multiple local models for specific regions, or more complex ensemble or hierarchical frameworks to improve robustness and manage the fidelity–cost trade-off.

- Define the Management Strategy: Finally, define the dynamic strategies for using the model. This includes the sampling strategy (which points to evaluate with the expensive true function) and the forgetting strategy (which data to keep in the model’s training archive) to align with the computational budget and effectively balance exploration and exploitation.
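To make the first step of the framework concrete, the sketch below shows how the same set of truly evaluated points yields different training targets depending on the approximation task. It assumes minimization, and it assumes a median threshold for the classification labels; both are illustrative choices, and the function names are hypothetical.

```python
from statistics import median


def regression_targets(values):
    """Regression: the surrogate learns the exact quality values."""
    return list(values)


def classification_targets(values, threshold):
    """Classification: label 1 for points at least as good as the threshold
    (lower is better), and 0 otherwise."""
    return [1 if v <= threshold else 0 for v in values]


def ranking_targets(values):
    """Ranking: the surrogate learns only the relative order (0 = best)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, idx in enumerate(order):
        ranks[idx] = rank
    return ranks


values = [3.2, 0.5, 7.1, 1.8]  # hypothetical true quality values

print(regression_targets(values))                      # [3.2, 0.5, 7.1, 1.8]
print(classification_targets(values, median(values)))  # [0, 1, 0, 1]
print(ranking_targets(values))                         # [2, 0, 3, 1]
```

The ranking targets are unchanged by any strictly increasing transformation of the quality values, which is one reason rank-based surrogates pair naturally with comparison-based metaheuristics.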

7.3. Outlook and Future Research

Author Contributions

Funding

Conflicts of Interest

| Model Type | Key Characteristics | Ideal Use Case | Main Limitations |
|---|---|---|---|
| Linear Models | Simple and interpretable, with a linear functional form; the required number of samples grows linearly with dimension. | Baseline models; problems that are approximately linear; early-generation guidance. | Cannot capture complex nonlinear relationships. |
| Quadratic Models | Capture local curvature and are foundational to classical Response Surface Methodology; the required number of samples grows quadratically with dimension. | Local refinement during later search stages due to their mathematical properties. | Struggle to represent highly nonlinear or multimodal landscapes globally. |
| Polynomial Regression | Approximates the objective function using low-order polynomials. | Lower-dimensional problems or scenarios where computational resources are constrained. | Prone to unstable behavior with extreme values and poor accuracy in high-dimensional or nonlinear scenarios. |
| Neural Networks | A broad class of models with diverse architectures. In the surrogate modeling literature, the term most often refers to Multi-Layer Perceptrons, which act as universal approximators capable of learning complex nonlinear relationships. | Problems with highly nonlinear and non-smooth response surfaces. | Require substantial training data and careful tuning; outputs can saturate, creating uninformative “plateau” regions. |
| Decision Trees | Partition-based models that recursively divide the search space; often used in ensembles. | Problems with discontinuous or piecewise continuous functions, or those with mixed (continuous and categorical) variables. | The performance of a single tree can be unstable; ensembles require careful configuration. |
| k Nearest Neighbors | An instance-based “lazy learner” that interpolates predictions from the k closest training samples. | Problems where few assumptions can be made about the underlying function; provides exact interpolation at training points (when using distance weighting). | Prediction can be computationally intensive, as the main processing occurs at query time, not during a separate training phase. |
| Support Vector Machines | Employ the “kernel trick” to efficiently model nonlinear relationships in a high-dimensional feature space. | Noisy or irregular quality landscapes where good generalization is critical to avoid overfitting. | Performance is highly sensitive to the choice of the kernel function and its parameters, which require careful tuning. |
| Radial Basis Functions | A combination of radially symmetric basis functions, each centered on a training point. | Accurately modeling highly nonlinear problems; widely used in real-world optimization challenges. | Performance can depend on careful parameter tuning; tend to extrapolate poorly by flattening to zero far from training data. |
| Kriging (Gaussian Process) | A statistical model that uniquely provides an intrinsic estimate of its own prediction uncertainty along with the prediction itself. | Scenarios where the model’s uncertainty is needed to actively guide the sampling strategy, balancing exploration and exploitation. | The computational cost scales cubically with the number of training samples, making it difficult to apply to large datasets. |
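Two of the behaviors listed in the table can be checked numerically: distance-weighted k nearest neighbors reproduces the training values exactly, and a radial basis function model flattens toward zero far from the data. The following is a minimal NumPy sketch under stated assumptions, not an implementation taken from any of the surveyed works: the sphere objective, the unit-spaced training grid, the Gaussian kernel with `gamma=1.0` and no polynomial tail, and all helper names (`knn_predict`, `rbf_fit`, `rbf_predict`) are illustrative choices.

```python
import numpy as np

def sphere(x):
    """Toy objective, assumed only for illustration: sum of squares."""
    return np.sum(x**2, axis=-1)

def knn_predict(X_train, y_train, x, k=3, eps=1e-12):
    """Inverse-distance-weighted kNN prediction at a single query point x."""
    d = np.linalg.norm(X_train - x, axis=1)
    idx = np.argsort(d)[:k]
    if d[idx[0]] < eps:
        # The query coincides with a training point: return its stored value.
        return float(y_train[idx[0]])
    w = 1.0 / d[idx]
    return float(np.dot(w, y_train[idx]) / np.sum(w))

def rbf_fit(X_train, y_train, gamma=1.0):
    """Solve for the weights of a Gaussian RBF interpolant (no polynomial tail)."""
    sq = np.sum((X_train[:, None, :] - X_train[None, :, :])**2, axis=-1)
    return np.linalg.solve(np.exp(-gamma * sq), y_train)

def rbf_predict(X_train, weights, x, gamma=1.0):
    """Evaluate the Gaussian RBF interpolant at a single query point x."""
    sq = np.sum((X_train - x)**2, axis=1)
    return float(np.dot(np.exp(-gamma * sq), weights))

# Training set: a 5 x 5 unit-spaced grid on [-2, 2]^2 (keeps the kernel
# matrix well conditioned for gamma = 1).
axis = np.linspace(-2.0, 2.0, 5)
X = np.array([[a, b] for a in axis for b in axis])
y = sphere(X)
w = rbf_fit(X, y)

x_far = np.array([50.0, 50.0])  # far outside the sampled region

print("kNN at a training point:", knn_predict(X, y, X[7]), "true:", y[7])
print("RBF at a training point:", rbf_predict(X, w, X[7]), "true:", y[7])
print("RBF far from the data:  ", rbf_predict(X, w, x_far), "true:", sphere(x_far))
```

In this setup the far-away RBF prediction collapses toward zero while the true objective keeps growing, which is the extrapolation limitation the table refers to. Kernels that do not decay, or an added polynomial tail, would behave differently.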

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Krawczyk, K.; Arabas, J. Single-Objective Surrogate Models for Continuous Metaheuristics: An Overview. Appl. Sci. 2025, 15, 9068. https://doi.org/10.3390/app15169068