Explainable AI Methods for Identification of Glue Volume Deficiencies in Printed Circuit Boards

Abstract

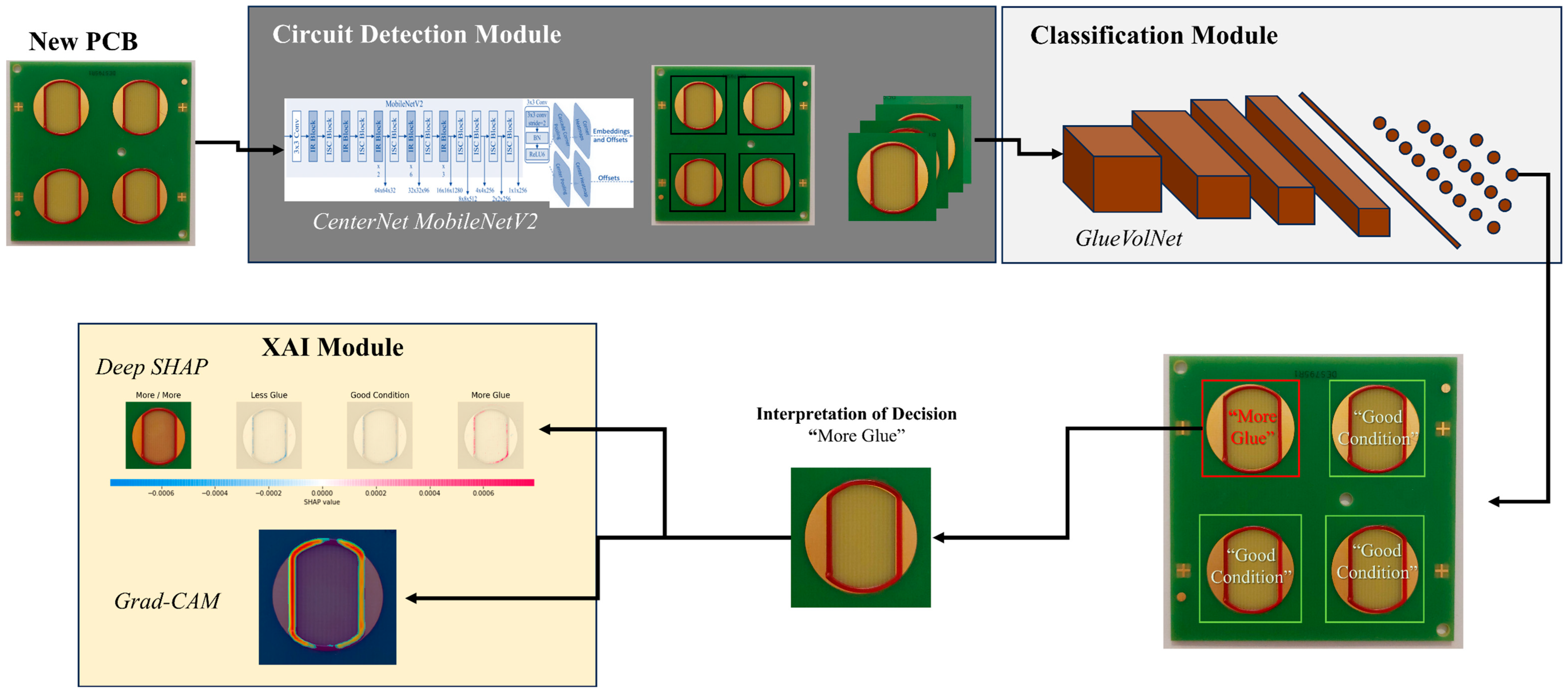

1. Introduction

- The development and evaluation of a holistic, computationally efficient deep learning (DL)-based automatic optical inspection (AOI) method for quality control in a real-world industrial process in the electronics industry;

- The incorporation of high-accuracy, interpretable decision-making into the proposed CNN, contributing to more human-centered and understandable AI in smart manufacturing.

2. Related Work

2.1. Convolutional Neural Networks and Post-Hoc Explainable Methods

2.2. Machine Vision for Glue Dispensing

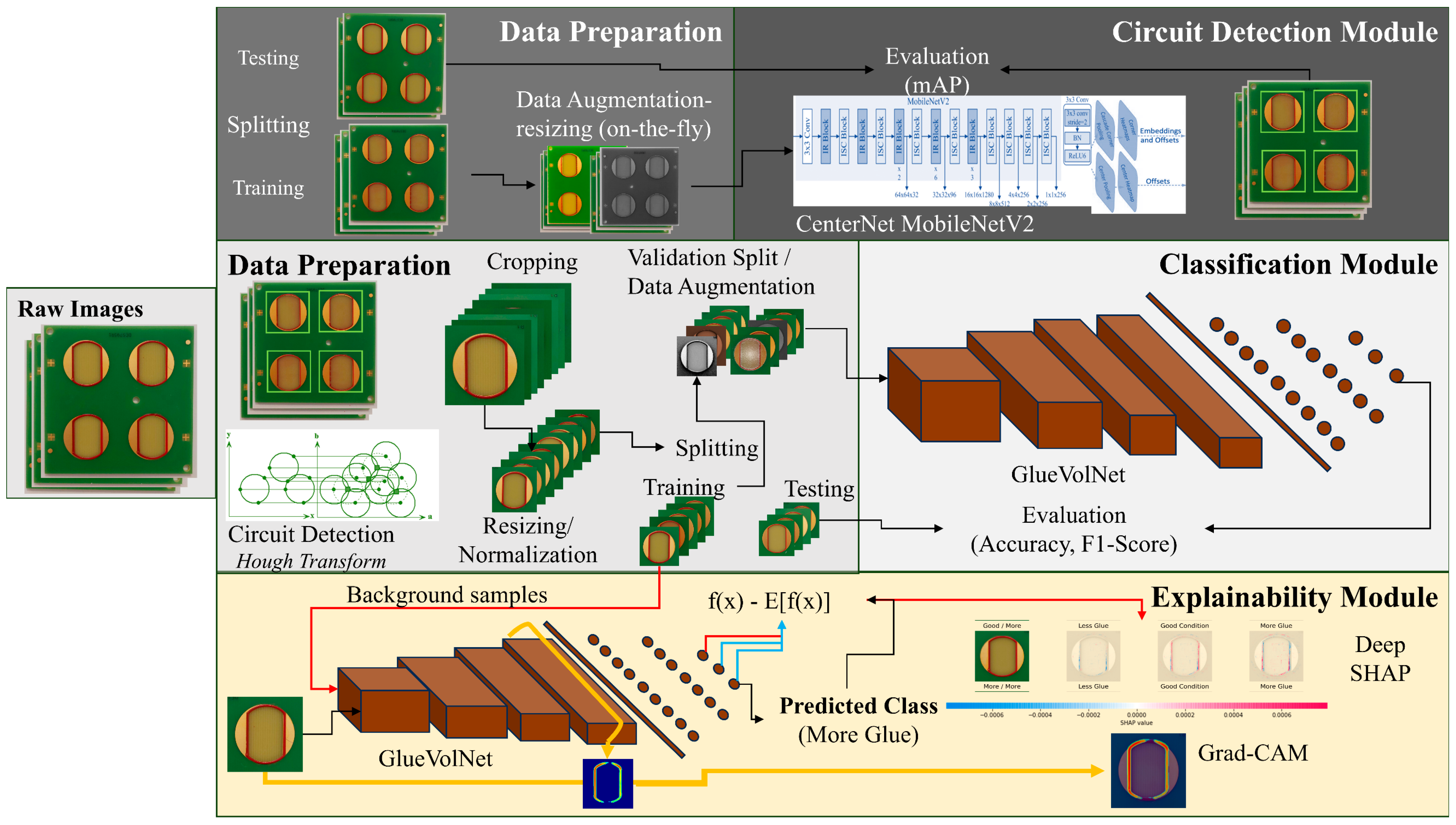

3. Materials and Methods

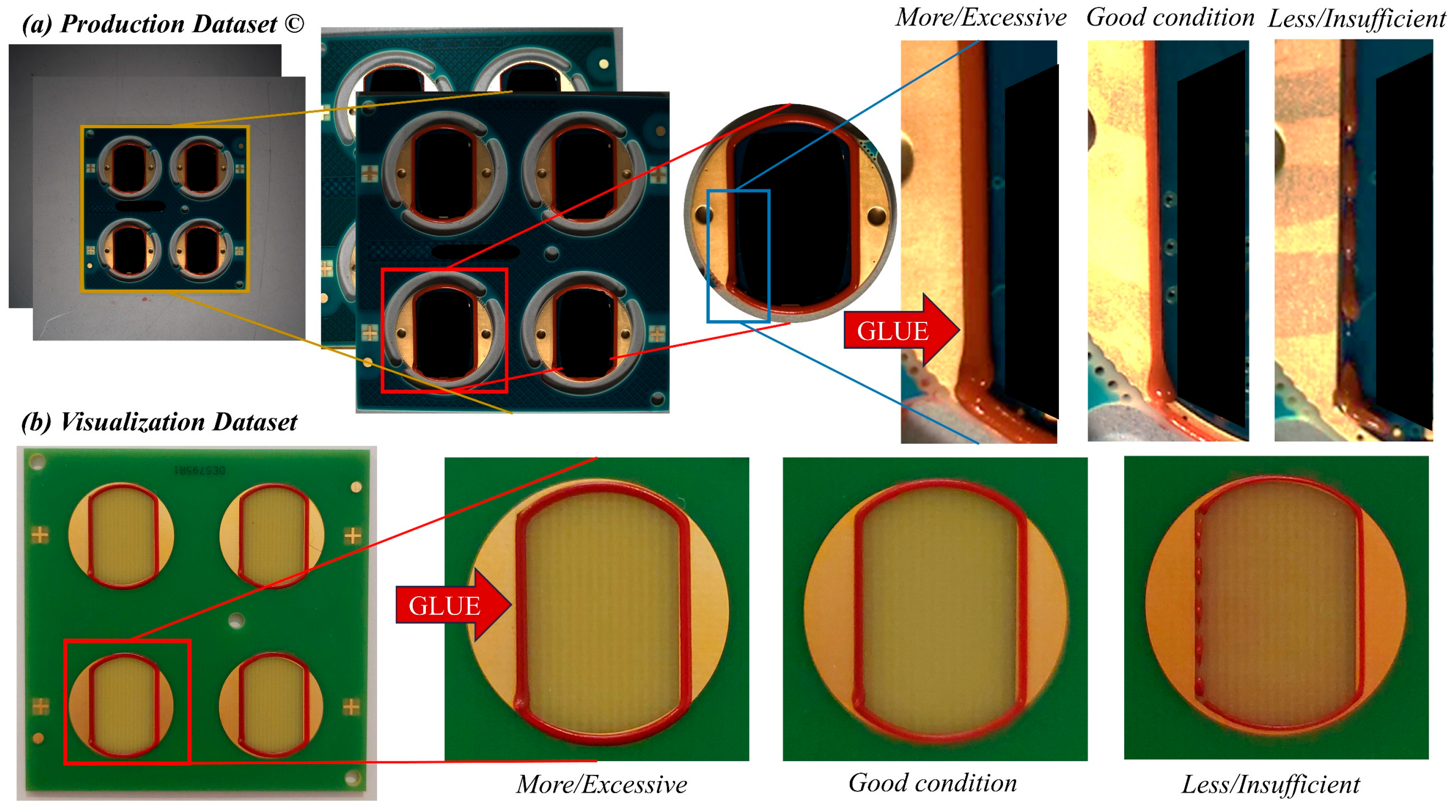

3.1. Dataset

3.2. Circuit Detection Module

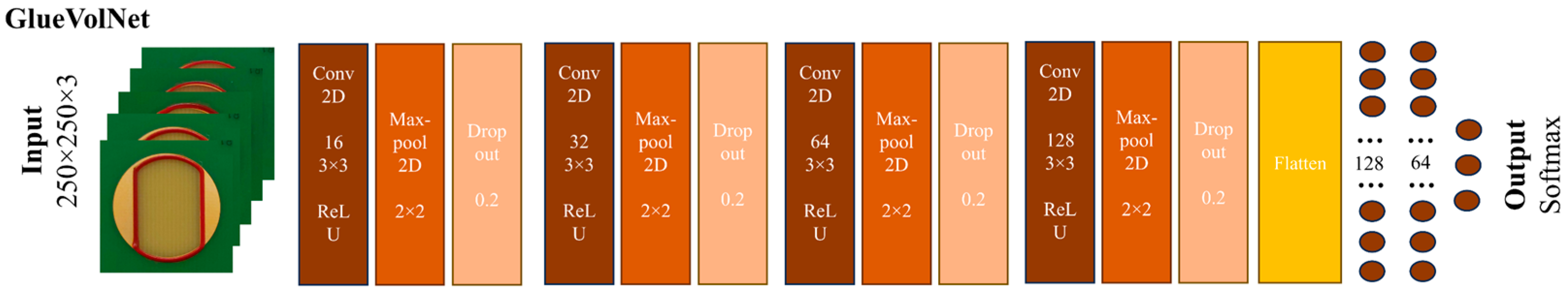

3.3. Classification Module

3.4. XAI Module

3.5. Data Preparation

3.5.1. Cropping

3.5.2. Resizing and Normalization

3.5.3. Splitting

3.5.4. Data Augmentation

4. Results and Discussion

4.1. Circuit Detection

4.2. Classification

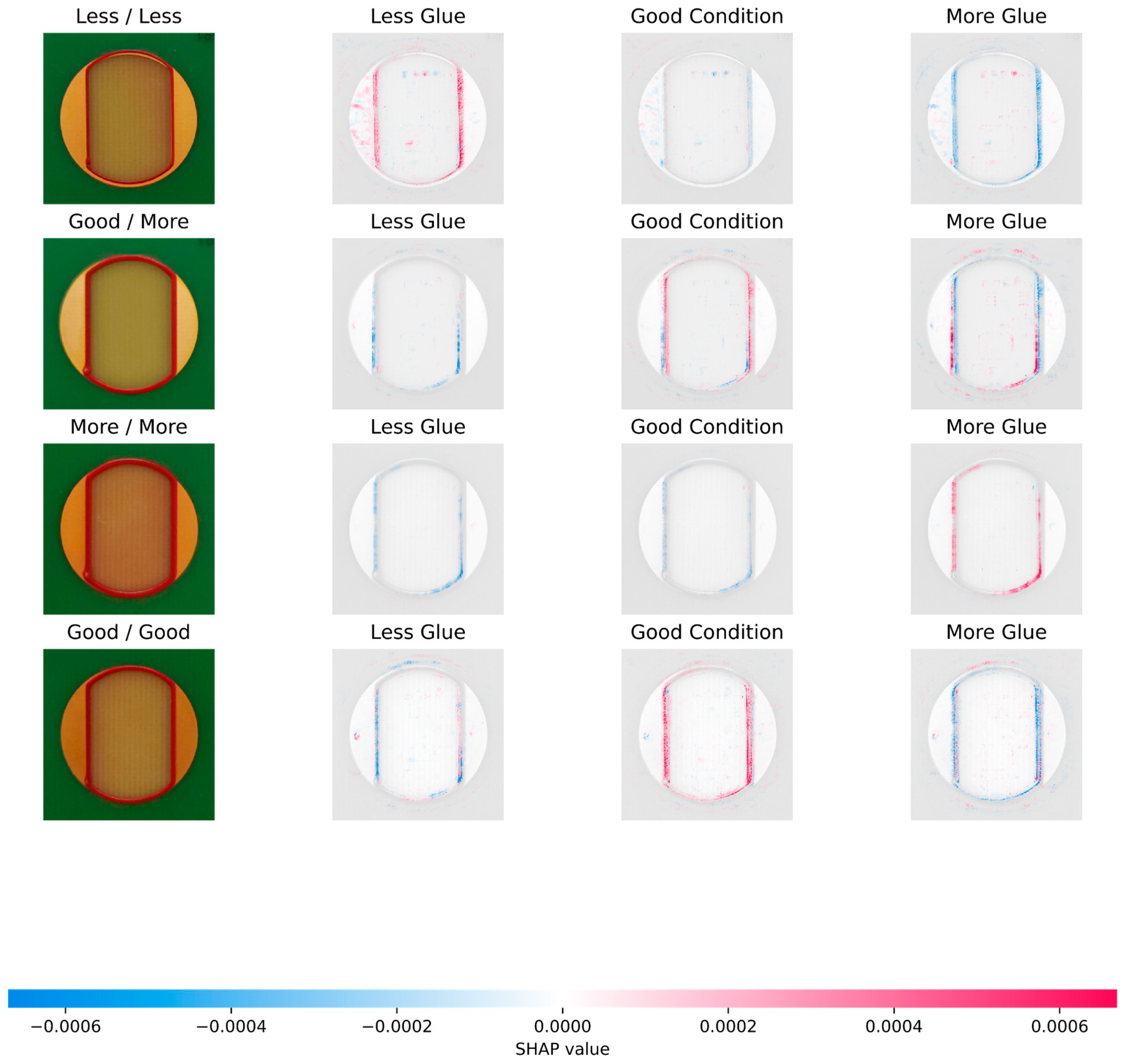

4.3. XAI Visualizations

4.4. Time Efficiency and Edge Processing

5. Summary

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Method | Accuracy | mAP@IoU[0.5:0.95] | mAP@IoU 0.5 | mAP@IoU 0.75 | Detection Time, CPU (s) | Detection Time, GPU (s) |
|---|---|---|---|---|---|---|
| Circle Hough Transform | 100% | - | - | - | 1 | - |
| CenterNet MobileNetV2 | - | 90% | 100% | 100% | 0.17 | 0.10 |

| Model | Accuracy | F1-Score | Precision | Recall |
|---|---|---|---|---|
| MobileNetV2 | 0.697 ± 0.100 | 0.492 ± 0.066 | 0.608 ± 0.104 | 0.490 ± 0.066 |
| VGG16 | 0.886 ± 0.041 | 0.819 ± 0.114 | 0.824 ± 0.122 | 0.823 ± 0.108 |
| VGG19 | 0.904 ± 0.091 | 0.876 ± 0.092 | 0.890 ± 0.074 | 0.879 ± 0.100 |
| ResNet50 | 0.892 ± 0.038 | 0.843 ± 0.041 | 0.901 ± 0.033 | 0.826 ± 0.059 |
| Branch-ResNet CNN | 0.881 ± 0.048 | 0.733 ± 0.130 | 0.767 ± 0.161 | 0.742 ± 0.113 |
| GlueVolNet | 0.922 ± 0.028 | 0.887 ± 0.068 | 0.894 ± 0.062 | 0.877 ± 0.066 |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Less glue | 0.87 | 1.00 | 0.93 | 13 |
| Good condition | 1.00 | 0.60 | 0.75 | 15 |
| More glue | 0.89 | 1.00 | 0.94 | 32 |
| Micro average | 0.90 | 0.90 | 0.90 | 60 |
| Macro average | 0.92 | 0.87 | 0.87 | 60 |

| Actual class | Predicted: Less glue | Predicted: Good condition | Predicted: More glue |
|---|---|---|---|
| Less glue | 13 | 0 | 0 |
| Good condition | 2 | 9 | 4 |
| More glue | 0 | 0 | 32 |
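The per-class scores reported for the 60-image evaluation can be re-derived from the 3 × 3 count matrix above. The following is a minimal sketch rather than the authors' evaluation code. It assumes that rows are the true class and columns the predicted class, a reading that agrees with the reported supports (13, 15, 32). The function names `per_class_metrics`, `macro_average`, and `accuracy` are hypothetical helpers introduced for illustration.

```python
# Assumption: rows = true class, columns = predicted class.
LABELS = ["Less glue", "Good condition", "More glue"]
CONFUSION = [
    [13, 0, 0],   # true "Less glue"
    [2, 9, 4],    # true "Good condition"
    [0, 0, 32],   # true "More glue"
]


def per_class_metrics(cm):
    """Return one (precision, recall, f1, support) tuple per class."""
    n = len(cm)
    results = []
    for k in range(n):
        true_positives = cm[k][k]
        predicted_as_k = sum(cm[i][k] for i in range(n))  # column sum
        support = sum(cm[k])                              # row sum
        precision = true_positives / predicted_as_k if predicted_as_k else 0.0
        recall = true_positives / support if support else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((precision, recall, f1, support))
    return results


def macro_average(metrics):
    """Unweighted mean of precision, recall, and F1 over the classes."""
    n = len(metrics)
    return tuple(sum(m[j] for m in metrics) / n for j in range(3))


def accuracy(cm):
    """Fraction of samples on the diagonal. For single-label multi-class
    data this equals micro-averaged precision, recall, and F1."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / total


if __name__ == "__main__":
    metrics = per_class_metrics(CONFUSION)
    for label, (p, r, f1, support) in zip(LABELS, metrics):
        print(f"{label:15s} P={p:.2f} R={r:.2f} F1={f1:.2f} n={support}")
    print("macro (P, R, F1):", tuple(round(v, 2) for v in macro_average(metrics)))
    print("micro / accuracy:", round(accuracy(CONFUSION), 2))
```

Under this reading, the only errors are six "Good condition" samples predicted as "Less glue" (2) or "More glue" (4), which is why that class has perfect precision but a recall of 0.60.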

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Less glue | 0.99 | 0.92 | 0.96 | 1024 |
| Good condition | 0.88 | 0.69 | 0.77 | 1100 |
| More glue | 0.86 | 0.98 | 0.92 | 2112 |
| Micro average | 0.89 | 0.89 | 0.89 | 4236 |
| Macro average | 0.91 | 0.87 | 0.88 | 4236 |

| Actual class | Predicted: Less glue | Predicted: Good condition | Predicted: More glue |
|---|---|---|---|
| Less glue | 947 | 77 | 0 |
| Good condition | 12 | 748 | 340 |
| More glue | 2 | 33 | 2077 |

| Platform | Circuit Detection | Classification | Grad-CAM Heatmaps | Total |
|---|---|---|---|---|
| Desktop CPU | 0.14 | 0.09 | 0.51 | 2.36 |
| Desktop GPU | 0.10 | 0.06 | 0.37 | 1.88 |
| Jetson Nano CPU | 0.86 | 0.98 | 2.68 | 11.35 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tziolas, T.; Papageorgiou, K.; Theodosiou, T.; Ioannidis, D.; Dimitriou, N.; Tinker, G.; Papageorgiou, E. Explainable AI Methods for Identification of Glue Volume Deficiencies in Printed Circuit Boards. Appl. Sci. 2025, 15, 9061. https://doi.org/10.3390/app15169061