Self-Supervised Cloud Classification with Patch Rotation Tasks (SSCC-PR)

Abstract

1. Introduction

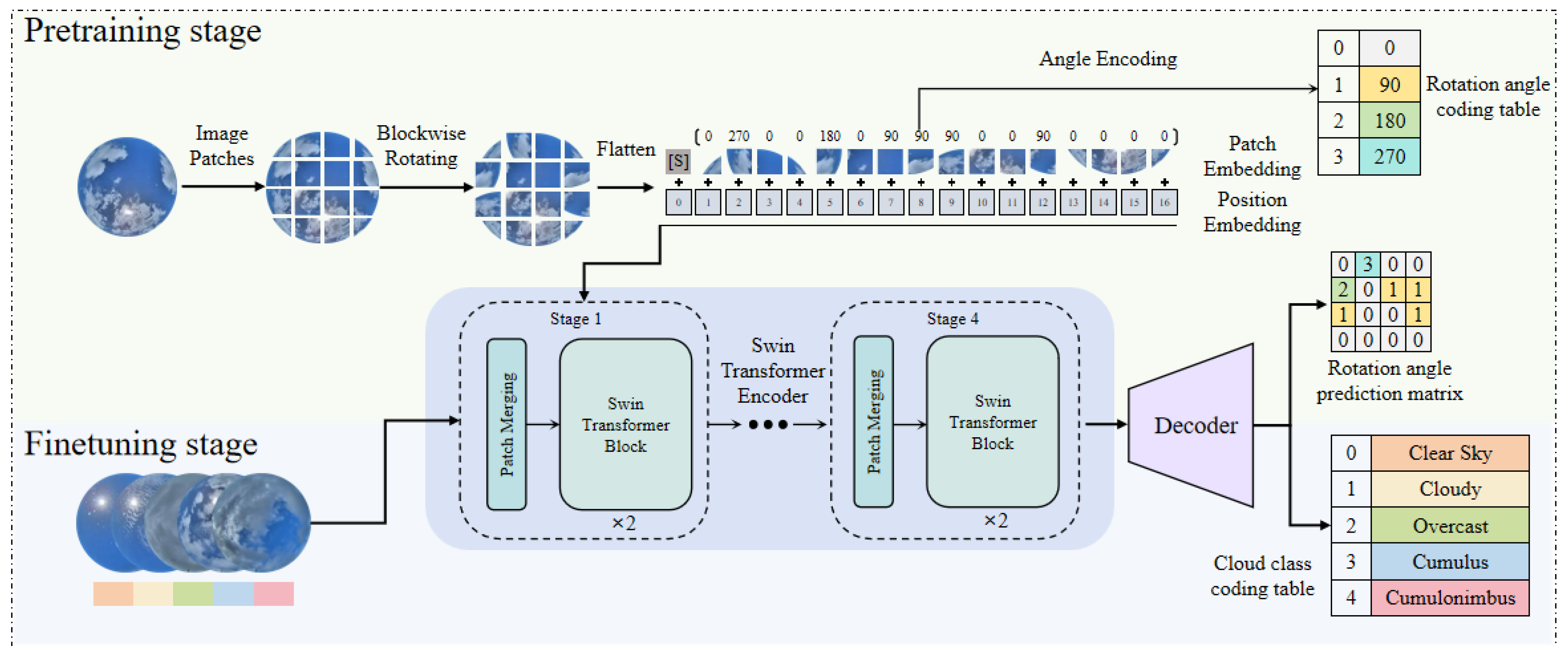

- A self-supervised learning approach is adopted for cloud classification, and a patch rotation auxiliary task is designed for pre-training.

- Most existing cloud datasets are built around image appearance alone, with little consideration of how clouds affect photovoltaic power generation. This paper therefore develops a new cloud classification dataset organized around the influence that different cloud types have on irradiance.

- The Swin Transformer serves as the backbone network, and the resulting model substantially outperforms existing supervised and unsupervised models.

2. Related Works

2.1. Self-Supervised Learning

2.2. Swin Transformer

2.3. Cloud Classification

3. Approach

3.1. Pre-Training Stage

| Algorithm 1: The algorithm of patch rotation prediction |

| Input: , |

| Output: |

| Step1: , |

| Step2: |

| Step3: |

| Step4: |

| Step5: |
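As a rough illustration of what a patch rotation pretext task can look like in general, the sketch below splits a square image into patches, rotates each patch by a random multiple of 90 degrees, and records the rotation index as the prediction target. It is not a reconstruction of Algorithm 1, whose inputs, outputs, and steps are not given here. The function names `rotate90` and `make_patch_rotation_sample`, the four-way rotation set, and the list-of-rows image format are all assumptions made for illustration.

```python
import random


def rotate90(patch, k):
    """Rotate a square patch (list of rows) counter-clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        # Transposing and then reversing the row order is one 90-degree CCW turn.
        patch = [list(row) for row in zip(*patch)][::-1]
    return [list(row) for row in patch]


def make_patch_rotation_sample(image, patch_size, rng):
    """Build one pretext sample (hypothetical helper, not the paper's Algorithm 1).

    Returns the image with every patch independently rotated, plus the list of
    rotation labels (0..3) in row-major patch order.
    """
    n = len(image)
    assert n % patch_size == 0 and all(len(row) == n for row in image)
    out = [list(row) for row in image]
    labels = []
    for top in range(0, n, patch_size):
        for left in range(0, n, patch_size):
            k = rng.randrange(4)  # assumed label set: 0, 90, 180, 270 degrees
            patch = [row[left:left + patch_size] for row in image[top:top + patch_size]]
            rotated = rotate90(patch, k)
            for i, row in enumerate(rotated):
                out[top + i][left:left + patch_size] = row
            labels.append(k)
    return out, labels


# Toy 4x4 "image" with 2x2 patches; a real pipeline would use image tensors.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
transformed, labels = make_patch_rotation_sample(image, 2, random.Random(0))
```

In a setup like this, the backbone would receive `transformed` and be trained to predict `labels`, so no manual annotation is required during pre-training.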

3.2. Finetuning Stage

4. Data

5. Experiment

5.1. Experimental Configuration

5.2. Evaluation Indicators

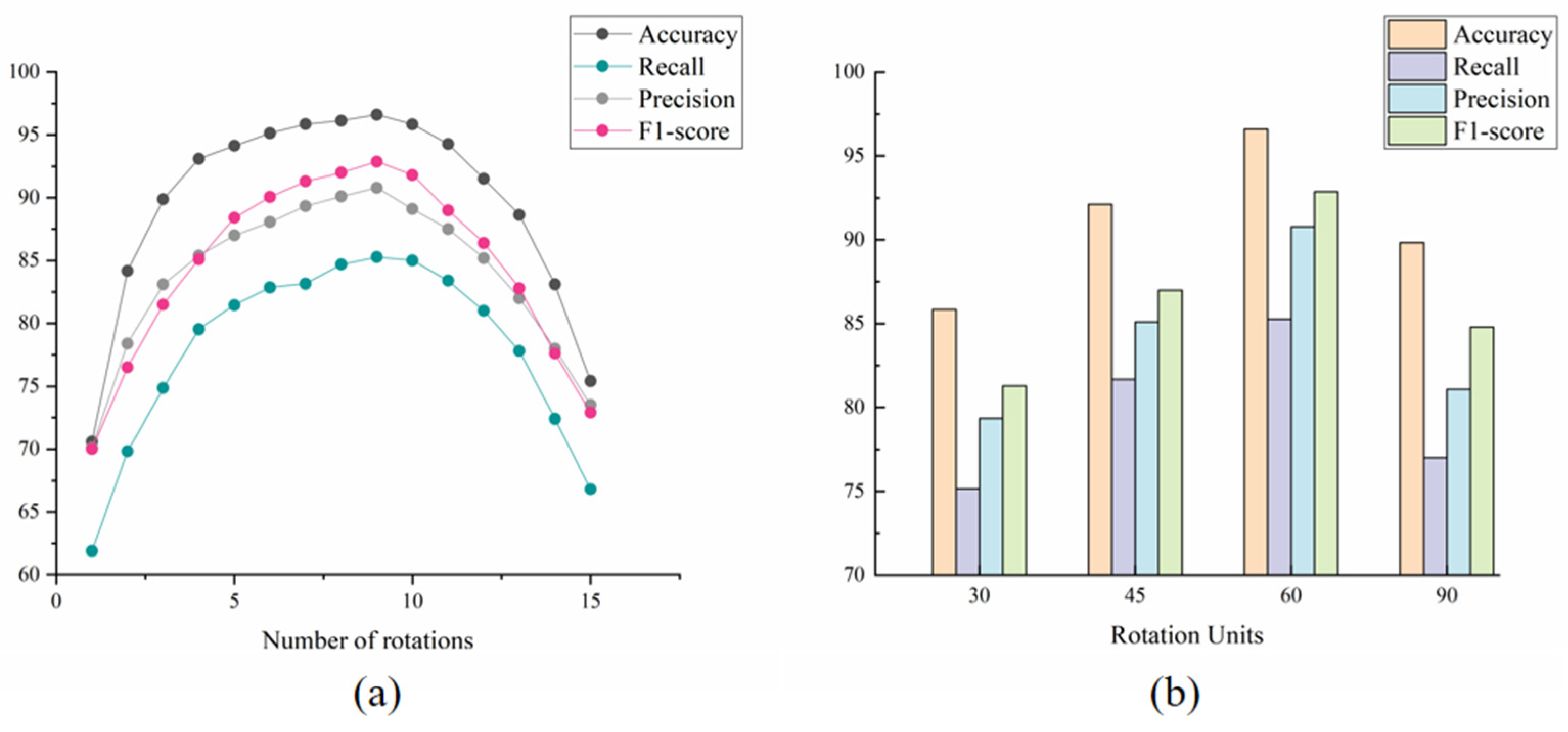

5.3. Parameter Analysis

5.4. Comparison Experiment

5.5. Performance Evaluation

6. Conclusions

7. Prospect

Author Contributions

Funding

Conflicts of Interest

References

- Rossow, W.B.; Garder, L.C.; Lacis, A.A. Global, seasonal cloud variations from satellite radiance measurements. Part I: Sensitivity of analysis. J. Clim. 1989, 2, 419–458.

- Rossow, W.B.; Lacis, A.A. Global, seasonal cloud variations from satellite radiance measurements. Part II. Cloud properties and radiative effects. J. Clim. 1990, 3, 1204–1253.

- Chiu, J.C.; Marshak, A.; Knyazikhin, Y.; Wiscombe, W.J.; Barker, H.W.; Barnard, J.C.; Luo, Y. Remote sensing of cloud properties using ground-based measurements of zenith radiance. J. Geophys. Res. Atmos. 2006, 111, D16201.

- Zinner, T.; Mayer, B.; Schröder, M. Determination of three-dimensional cloud structures from high-resolution radiance data. J. Geophys. Res. Atmos. 2006, 111, D08204.

- Singh, M.; Glennen, M. Automated ground-based cloud recognition. Pattern Anal. Appl. 2005, 8, 258–271.

- Calbo, J.; Sabburg, J. Feature extraction from whole-sky ground-based images for cloud-type recognition. J. Atmos. Ocean. Technol. 2008, 25, 3–14.

- Liu, L.; Sun, X.; Chen, F.; Zhao, S.; Gao, T. Cloud classification based on structure features of infrared images. J. Atmos. Ocean. Technol. 2011, 28, 410–417.

- Zhang, J.; Liu, P.; Zhang, F.; Song, Q. CloudNet: Ground-based cloud classification with deep convolutional neural network. Geophys. Res. Lett. 2018, 45, 8665–8672.

- Fang, C.; Jia, K.; Liu, P.; Zhang, L. Research on cloud recognition technology based on transfer learning. In Proceedings of the 2019 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Lanzhou, China, 18–21 November 2019; pp. 791–796.

- Shi, C.; Wang, C.; Wang, Y.; Xiao, B. Deep convolutional activations-based features for ground-based cloud classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 816–820.

- Liu, S.; Li, M.; Zhang, Z.; Cao, X.; Durrani, T.S. Ground-based cloud classification using task-based graph convolutional network. Geophys. Res. Lett. 2020, 47, e2020GL087338.

- Jaiswal, A.; Babu, A.R.; Zadeh, M.Z.; Banerjee, D.; Makedon, F. A survey on contrastive self-supervised learning. Technologies 2020, 9, 2.

- Zhai, X.; Oliver, A.; Kolesnikov, A.; Beyer, L. S4l: Self-supervised semi-supervised learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 1476–1485.

- Gui, J.; Chen, T.; Zhang, J.; Cao, Q.; Sun, Z.; Luo, H.; Tao, D. A survey on self-supervised learning: Algorithms, applications, and future trends. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9052–9071.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11 October 2021; pp. 10012–10022.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Krizhevsky, A.; Hinton, G. Convolutional deep belief networks on cifar-10. Unpubl. Manuscr. 2010, 40, 1–9.

- Feng, L.; Hu, C. Cloud adjacency effects on top-of-atmosphere radiance and ocean color data products: A statistical assessment. Remote Sens. Environ. 2016, 174, 301–313.

- Wang, T.; Shi, J.; Letu, H.; Ma, Y.; Li, X.; Zheng, Y. Detection and removal of clouds and associated shadows in satellite imagery based on simulated radiance fields. J. Geophys. Res. Atmos. 2019, 124, 7207–7225.

- Smith, G.; Priestley, K.; Loeb, N.; Wielicki, B.; Charlock, T.; Minnis, P.; Doelling, D.; Rutan, D. Clouds and Earth Radiant Energy System (CERES), a review: Past, present and future. Adv. Space Res. 2011, 48, 254–263.

- Dev, S.; Lee, Y.H.; Winkler, S. Categorization of cloud image patches using an improved texton-based approach. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 422–426.

- Simonyan, K. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR 2019, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Gidaris, S.; Singh, P.; Komodakis, N. Unsupervised representation learning by predicting image rotations. arXiv 2018, arXiv:1803.07728.

- Grill, J.-B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.H.; Buchatskaya, E.; Doersch, C.; Pires, B.A.; Guo, Z.D.; Azar, M.G.; et al. Bootstrap your own latent-a new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21271–21284.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual Event, 13–18 July 2020; pp. 1597–1607.

- Misra, I.; Maaten, L. Self-supervised learning of pretext-invariant representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6707–6717.

- Amari, S. Backpropagation and stochastic gradient descent method. Neurocomputing 1993, 5, 185–196.

- Cazenave, T.; Sentuc, J.; Videau, M. Cosine annealing, mixnet and swish activation for computer Go. In Advances in Computer Games; Springer International Publishing: Cham, Switzerland, 2021; pp. 53–60.

- Liu, Z.; Zhou, S.; Wang, M.; Peng, S.; Shen, A.; Zhou, S. Ground-based visible-light cloud image classification based on a convolutional neural network. In Proceedings of the 2019 4th International Conference on Information Systems and Computer Networks (ISCON), Mathura, India, 21–22 November 2019; pp. 108–112.

- Fang, H.; Han, B.; Zhang, S.; Zhou, S.; Hu, C.; Ye, W.-M. Data Augmentation for Object Detection via Controllable Diffusion Models. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; pp. 1246–1255.

- Tang, Y.; Yang, P.; Zhou, Z.; Pan, D.; Chen, J.; Zhao, X. Improving cloud type classification of ground-based images using region covariance descriptors. Atmos. Meas. Tech. 2021, 14, 737–747.

- Sinaga, K.P.; Yang, M.S. A Globally Collaborative Multi-View k-Means Clustering. Electronics 2025, 14, 2129.

- Salazar, A.; Vergara, L.; Vidal, E. A proxy learning curve for the Bayes classifier. Pattern Recognit. 2022, 136, 109240.

| Varieties of Clouds | Description | Impact on Irradiance | Quantity |
|---|---|---|---|
| Clear Sky | The sky has no significant cloud layers or only a few clouds, with ample sunlight and high visibility. | Irradiance is high, with sunlight directly reaching the ground, typically representing the brightest moments. | 805 |
| Cloudy | The sky is partially covered by clouds, with cloud cover typically ranging from 40% to 70%. Clear skies alternate with cloud layers, and sunlight may appear intermittently. This condition may include various cloud types such as cumulus, stratus, and cirrostratus. | Irradiance is lower than on a clear day because the clouds partially block the sunlight, reducing the intensity of the sun. | 1200 |
| Overcast | The sky is completely covered by clouds, which are thick and dense, preventing sunlight from penetrating. | Irradiance is very low, with sunlight completely blocked, creating a gray and overcast feeling. | 845 |
| Cumulus | It has a distinct white, fluffy appearance with a flat base and a convex-shaped top. | Cumulus clouds block sunlight over a relatively small area, causing significant variations in irradiance. In some regions, irradiance may decrease due to the shading effect of cumulus clouds. | 1000 |
| Cumulonimbus | A highly developed cumulus cloud, typically characterized by intense convective activity, with the cloud top potentially reaching the tropopause. It is the most powerful type of convective cloud. | It has a significant impact on irradiance, as it can completely block sunlight, resulting in extremely low irradiance. It is usually associated with precipitation, thunderstorms, and other meteorological disasters. | 850 |

| Hyperparameter | Pre-Training | Fine-Tuning (RCCD) | Fine-Tuning (SWIMCAT) |
|---|---|---|---|
| Batch Size | 256 | 32 | 32 |
| Epochs | 300 | 100 | 50 |
| Optimizer | AdamW | SGD | AdamW |
| LR/Weight Decay | 0.05 | 5 × 10⁻⁵ | 1 × 10⁻⁴ |
| LR Schedule | Cosine Annealing | Cosine Annealing | Cosine Annealing |
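Cosine annealing, listed as the learning-rate schedule for all three stages, is commonly written as a half-cosine decay from a starting rate to a minimum rate. The sketch below assumes that standard form, with no warm-up and no restarts, which the table does not confirm. The 300-epoch horizon is the pre-training value from the table; `base_lr` and `min_lr` are hypothetical placeholders, because the table reports learning rate and weight decay in a single combined row.

```python
import math


def cosine_annealing_lr(epoch, total_epochs, base_lr, min_lr=0.0):
    """Learning rate at a given epoch under plain cosine annealing.

    Decays smoothly from base_lr (epoch 0) to min_lr (epoch == total_epochs).
    """
    cos_term = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return min_lr + (base_lr - min_lr) * cos_term


# Placeholder values: base_lr is NOT taken from the paper.
total_epochs = 300   # pre-training epochs from the hyperparameter table
base_lr = 1e-3       # hypothetical starting learning rate
schedule = [cosine_annealing_lr(e, total_epochs, base_lr) for e in range(total_epochs + 1)]
```

The rate changes slowly at the start and end of training and fastest around the midpoint, where it equals the average of `base_lr` and `min_lr`.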

| Models | | RCCD | | | | SWIMCAT | | | |
|---|---|---|---|---|---|---|---|---|---|
| Supervised | VGG16 | 81.08 | 70.55 | 79.14 | 80.68 | 80.29 | 64.59 | 79.41 | 79.38 |
| | ResNet34 | 83.71 | 71.94 | 81.29 | 82.43 | 82.46 | 71.97 | 80.41 | 81.86 |
| | UNet | 77.58 | 65.83 | 76.97 | 77.29 | 76.85 | 65.33 | 76.29 | 77.91 |
| | EfficientNet-B4 | 91.85 | 79.54 | 88.64 | 91.57 | 88.57 | 76.52 | 86.98 | 87.54 |
| | SENet | 81.82 | 69.82 | 81.25 | 82.93 | 80.74 | 67.85 | 75.98 | 79.52 |
| | DenseNet-264 | 88.53 | 77.69 | 85.94 | 97.85 | 86.45 | 75.85 | 84.54 | 84.22 |
| | Inception-V3 | 85.84 | 74.56 | 84.18 | 85.49 | 82.57 | 71.76 | 82.05 | 79.84 |
| | Xception | 86.88 | 74.93 | 85.09 | 85.49 | 85.97 | 69.85 | 81.25 | 81.99 |
| | Vision Transformer | 92.67 | 80.73 | 89.31 | 90.19 | 82.95 | 69.86 | 80.54 | 81.46 |
| | Swin Transformer | 94.74 | 83.22 | 89.87 | 91.97 | 83.46 | 69.59 | 81.64 | 82.28 |
| Unsupervised | Rotation | 74.57 | 62.98 | 73.46 | 71.94 | 71.38 | 60.35 | 71.58 | 70.29 |
| | BYOL | 85.61 | 76.51 | 83.85 | 83.87 | 81.62 | 70.66 | 81.88 | 80.25 |
| | MoCo | 85.93 | 76.87 | 84.33 | 84.16 | 82.71 | 70.98 | 81.45 | 80.59 |
| | SimCLR | 91.54 | 80.26 | 89.00 | 88.64 | 86.45 | 73.52 | 86.54 | 85.15 |
| | PIRL | 82.44 | 71.59 | 81.61 | 80.58 | 82.26 | 71.14 | 82.64 | 81.57 |
| Ours | SSCC-PR | 96.61 | 85.27 | 90.79 | 92.87 | 90.18 | 79.57 | 88.93 | 89.75 |

| Method | Accuracy |
|---|---|
| Fang et al. [41] | 88.1 |
| Tang et al. [42] | 88.75 |
| Liu et al. [40] | 85.81 |
| Ours | 90.18 |

| Baseline Model | Params (M) | FLOPs (G) | Inference Time (ms) | GPU Memory (GB) | |
|---|---|---|---|---|---|
| ViT-Base | 86 | 17.6 | 86 | 23.5 | 82.34 |
| DeiT-Small | 22 | 4.6 | 23 | 6.1 | 87.91 |
| PVTv2-B3 | 45 | 6.9 | 34 | 9.2 | 85.61 |
| Twins-SVT-B | 57 | 8.9 | 44 | 11.9 | 87.56 |
| Swin-Tiny | 28 | 4.5 | 22 | 6.0 | 90.79 |

| Auxiliary Tasks | | | | |
|---|---|---|---|---|
| Rotation | 89.46 | 78.63 | 84.67 | 85.37 |
| Colorization | 90.45 | 79.88 | 85.53 | 85.74 |
| Inpainting | 94.75 | 83.54 | 87.41 | 88.96 |
| SSCC-PR (Ours) | 96.61 | 85.27 | 90.79 | 92.87 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yan, W.; Xiong, X.; Xia, X.; Zhang, Y.; Guo, X. Self-Supervised Cloud Classification with Patch Rotation Tasks (SSCC-PR). Appl. Sci. 2025, 15, 9051. https://doi.org/10.3390/app15169051
