1. Introduction

References to the authentication of cultural goods, most often paintings on canvas or wood panel, are usually limited to the validation of an attribution (by which we understand that a certain artist is the author of a known and possibly historical work, or of a work discovered after a long period and without a previous associated authorship); this understanding is correct but very narrow. Several situations require physico-chemical and imaging analyses, especially non-invasive and non-destructive ones.

To this, we add the fact that the craquelure network represents an “ID” of a work: by comparing the network at two points in time, we can ensure that we have in front of us the same work and not a particularly well-executed replica. We recall that the craquelure network is inimitable, in the sense that it confirms the presence of the same object at two different points in time. Considering that works in museums, galleries, or private collections are generally well documented, including through detailed photographic documentation, the data for the analysis should be readily available.

In addition, let us not forget that the craquelure network is sensitive even to small accidents (shocks, blows, local pressures, environmental variations, etc.). Events (accidents), in turn, induce new cracks and can “enrich” the original network but will not eliminate elements from the original network. From this, it is understood that the proposed method not only helps confirm the “identity” of a work but can also be a good tool for evaluators or insurers. They will easily notice if the works have been transported, stored, or exhibited correctly, according to agreed-upon terms and conservation standards.

Previous research has explored different approaches to craquelure analysis. Simonyan and Zisserman [1] demonstrated the power of deep learning for art attribution by achieving 98% accuracy in identifying Raphael’s works using ResNet50 and an SVM classifier. This study highlights the ability of deep learning models to capture intricate features within paintings. However, this approach, while effective for general attribution, was not specifically designed to analyze craquelure patterns, a crucial aspect for authenticating artworks.

Recent advancements in combining image processing techniques with neural networks have demonstrated promising results in analyzing craquelure patterns. Traditional edge detection and morphology-based enhancements were integrated with neural networks to classify and interpret craquelure features in historical paintings. These studies highlighted the potential of hybrid methods but faced challenges in generalizing across diverse datasets [2].

Microscopic cracks in the varnish layer of a work of art are a complex and often frustrating phenomenon for conservators. These cracks can significantly impact the artwork’s aesthetic value and structural integrity. Manually identifying and analyzing these cracks can be time-consuming and prone to human error. Understanding the causes and mechanisms that lead to these cracks’ appearance is essential to developing effective conservation and restoration strategies. The most significant factors contributing to varnish degradation include natural aging, the expansion and contraction of the support material (canvas and wood), using incompatible materials, mechanical damage, and biological factors such as insect infestation, fungal growth, and bacterial activity. These factors can have various consequences, from aesthetic deterioration to the loss of valuable pictorial material and the acceleration of overall degradation. Cultural heritage structures are primarily assessed and evaluated using nondestructive and non-contact methods [3].

Cracks undeniably stand out as the most obvious indication of a painting’s age. They arrange themselves into interconnected networks, forming distinct patterns. The morphology of these patterns is linked to the material’s mechanical properties, allowing crack patterns to be seen as a painting’s unique “fingerprint,” bearing witness to its history. Consequently, how a painting is perceived is influenced by its existing cracks. Viewing a craquelure network in a painting is a subjective experience, varying based on the observer, the time, and the viewing conditions. Cracks can also be seen as undesirable, forming a web of lines with varying contrast that fragments the subject.

Preserving and managing cultural heritage (CH) necessitates consideration of the impact of climate variability. Climate change can accelerate or sometimes decelerate the deterioration of buildings and their interiors. Therefore, a climate-smart approach to CH management is essential. The Urban Heat Island (UHI) intensifies the thermal stress on building materials and all objects in buildings, such as paintings, furniture, parquet, etc., accelerating their deterioration. Elevated temperatures cause materials to repeatedly expand and contract, leading to cracks, structural instability, and chemical damage [4,5,6,7,8].

In recent years, machine learning applications have been developed for the analysis of cracks in materials [9] and craquelure in paintings [2], employing techniques such as digital image analysis [10], convolutional neural networks, and deep learning [11]. The research presented in this paper likewise applies machine learning models based on convolutional neural networks. Since craquelure provides valuable information about the history and authenticity of a work of art, the application of machine learning models in this area of art offers new perspectives on the conservation and authentication of artistic heritage [12,13].

While macroscopic crack analysis is commonly addressed with machine learning and computer vision [14], such work focuses on the macroscopic features of structural concrete, as opposed to the microscopic network detection model developed in this work. Although macroscopic models (like the standard U-Net architecture [15], GAMSNet [16], or CFRNet [17]) provide interesting approaches, they focus on road extraction and navigation usage or classification problems.

From a typological standpoint, the craquelure network can be classified based on its shape, size, and depth. There are surface cracks, which affect only the color layer, and deep cracks, which can penetrate the support. Reticular cracks, in a net-like form, and linear cracks, which extend in a single direction, can also be distinguished.

Analyzing craquelure in a painting can provide valuable insights into the artwork’s history and conservation status. By studying the morphology and distribution of the craquelure network, specialists can reconstruct the history of previous treatments, identify the most vulnerable areas, and assess the risk of future degradation.

This research examines and enhances a damage detection technique that relies on local geometric characteristics. A machine learning model, the autoencoder model, improves image clarity by identifying the craquelure network and other imperfections. Deep learning and traditional image processing techniques have been successfully applied to computer vision-based craquelure network detection. This enables researchers to extract and analyze the craquelure network features more accurately and efficiently [18,19]. Deep learning has become a dominant force in craquelure network detection, but its demands for computational resources, limited transparency, and vulnerability to data quality issues present challenges. While traditional image processing techniques retain their value in certain contexts, combining them with deep learning offers a promising avenue for enhancing craquelure network detection performance.

Convolutional neural networks (CNNs) have proven effective in enhancing feature extraction for craquelure network detection and classification. By leveraging multiple layers of neural networks, CNNs can extract relevant information from input data, overcoming the limitations of traditional image processing and machine learning techniques [20].

While craquelure networks on their own are a proven tool for authentication, it is often difficult to identify the same patch correctly every time. This calls for a similarity search engine and an explainable AI approach; scalability is achieved by integrating a robust vector database, which enables application in real-world scenarios [21], together with a portable runtime [22]. The system processes grayscale images of paintings through a deep learning pipeline, extracting embeddings from the bottleneck layer of a modified VGG19 model [23]. These embeddings are stored in an open-source embedding database (ChromaDB), tagged with metadata for efficient retrieval and reconstruction.

Other work, using a different image acquisition methodology [24], conducted a 3D morphological analysis of craquelure patterns in oil paintings utilizing optical coherence tomography (OCT). This research, focusing on characterizing craquelure shape, width, and depth, demonstrated the ability to differentiate between authentic and artificially induced craquelure. However, the widespread adoption of this method is severely limited by the high cost and specialized equipment required for 3D imaging using OCT.

2. Materials and Methods

The analysis of craquelure patterns and the use of machine learning for art authentication have garnered increasing attention in recent years. Various approaches have been proposed to address challenges in feature extraction, structural analysis, and the scalability of systems for artwork authentication.

This paper proposes a novel approach that leverages a modified VGGNet-19 (VGG19) model to extract craquelure patterns and use them directly as embeddings. The key contributions of this work are outlined in the paragraphs that follow.

Craquelure patterns, the intricate network of craquelure that appears on paintings, offer valuable insights into the history and provenance of artworks. This study explores a novel approach to analyzing craquelure patterns using deep learning techniques. The core of our methodology lies in the development of a customized model specifically designed to extract features crucial for craquelure pattern recognition.

This modified VGGNet-19 architecture is fine-tuned to emphasize features relevant to craquelure patterns, such as edges, textures, and the spatial relationships between cracks within the network. These extracted features are then used to generate high-dimensional embeddings, unique numerical representations that encapsulate the distinctive characteristics of each craquelure pattern. The generated embeddings serve as a foundation for efficient querying and similarity retrieval. By comparing the embeddings of different craquelure patterns, we can identify those with the highest degree of similarity, facilitating comprehensive analyses and comparisons across a vast dataset of artworks. Furthermore, the embeddings enable the reconstruction of craquelure patterns, providing a valuable tool for visualization and further investigation.

To ensure scalability and efficiency in handling large datasets, we employ ChromaDB, a robust vector database. It provides optimized storage and retrieval mechanisms for high-dimensional embeddings, enabling the efficient application of our proposed method in real-world scenarios with a substantial volume of craquelure pattern data.

Craquelure networks are important for various tasks, including artwork source identification and restoration processes. This work presents our efforts to find appropriate automated tooling for these tasks. Our approach is divided into a deterministic part, using computer vision software, and a statistical part, using machine learning applications. The latter enables the development of hardware devices that can be integrated within the analysis devices. With a vast ecosystem of modules and packages, the Python 3.10 language [25] provides us with the necessary tools for algorithm implementation while working with optimized binary linear algebra systems. A large number of craquelure analysis techniques are available to researchers in the field.

2.1. Dataset Description and Preparation

The original dataset consists of close-up images of craquelure networks recorded during the preparation phase, in which 19 artworks were analyzed. The artworks were previously assessed by the team of artwork specialists, and 19 high-resolution images were chosen to construct this model. The original images measure 5500 pixels (width) by 6500 pixels (height), with 3 channels (BGR) and a resolution of 600 dpi. These images were segmented into tiles of 992 × 1984 pixels (overlapping is allowed), leading to 96 unaltered images. Of these, 10 were set aside for testing and validation.
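The segmentation into overlapping tiles can be illustrated with a short sketch. It assumes that the 992 px side of a tile runs along the 5500 px image width and the 1984 px side along the 6500 px height, and it lays out a full, evenly spaced grid; the function name `tile_origins` is hypothetical. The 96 tiles reported above are fewer than a full grid over 19 images would produce, so the authors presumably selected tiles, and this grid is not their exact procedure.

```python
import math


def tile_origins(length: int, tile: int) -> list[int]:
    """Evenly spaced start offsets so that tiles of size `tile` cover `length`."""
    if tile > length:
        raise ValueError("tile is larger than the image side")
    count = math.ceil(length / tile)  # fewest tiles that cover the side
    if count == 1:
        return [0]
    span = length - tile  # last valid start offset
    return [round(i * span / (count - 1)) for i in range(count)]


# Assumed orientation: 992 px along the width, 1984 px along the height.
xs = tile_origins(5500, 992)
ys = tile_origins(6500, 1984)

# Each tile is (left, top, right, bottom); neighbouring tiles overlap slightly.
tiles = [(x, y, x + 992, y + 1984) for y in ys for x in xs]
```

Spreading the start offsets evenly keeps every tile inside the image and distributes the overlap uniformly instead of concentrating it in the last row or column.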

This part of our analysis runs on the CPU and relies on OpenCV (Open Computer Vision) [18] together with a set of specialized models; its main purpose is to provide training data for our autoencoder. The workflow converts the optical microscope image to grayscale and uses edge detection algorithms to extract the craquelure network. During this stage, expert help was required for craquelure network identification, thus enabling accurate results for each given image.

The edge detection algorithm first operates on the numerical array associated with each image (using NumPy [26] version 1.21.1) and converts it to a logarithmic scale. It then applies a bilateral filter to reduce the image noise (often introduced by graphical elements) and performs Canny edge detection [27]. Feature extraction is performed using ORB (Oriented FAST and Rotated BRIEF) [28].
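As an illustration of the first step only, the sketch below applies a logarithmic intensity mapping to a small grayscale patch in plain Python. The exact mapping used in the pipeline is not stated in the text; the form c · log(1 + v), with c chosen so that the maximum gray level maps to itself, is an assumption, and the function name `log_scale` is hypothetical. The bilateral filter, Canny, and ORB steps come from OpenCV and are not reproduced here.

```python
import math


def log_scale(gray: list[list[int]], max_value: int = 255) -> list[list[int]]:
    """Map each intensity v to c * log(1 + v), keeping 0 and max_value fixed."""
    c = max_value / math.log1p(max_value)  # scale so that max_value stays max_value
    return [[round(c * math.log1p(v)) for v in row] for row in gray]


# Toy 2 x 3 grayscale patch (values 0..255).
patch = [[0, 10, 50], [100, 200, 255]]
scaled = log_scale(patch)
```

A mapping of this kind stretches dark values and compresses bright ones, so faint dark cracks gain contrast before filtering and edge detection.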

2.2. Machine Learning Model Development

The main purpose of this model (tool) is to provide a computer vision enhancement technique focusing on the craquelure network from the analyzed artwork. In order to implement this, we use PyTorch version 2.1, an extremely popular and versatile open-source machine-learning library built on the Torch library [29]. It is widely used in applications such as computer vision, natural language processing, and many others. PyTorch offers exceptional flexibility in defining and implementing machine learning models. The high degree of control over the computational process makes PyTorch a great choice for research and prototyping, and the intuitive Python interface and comprehensive documentation make PyTorch accessible to beginners and experienced researchers.

Using a traditional approach, PyTorch works on tensors and graphs, which are essential for machine learning and other AI operations. While other frameworks focus on the monolithic approach, PyTorch provides a rich tooling ecosystem with several extensions for metrics, similarity, accountability, and optimized algorithms.

In this paper, we focus on enhancing the craquelure network using a deep autoencoder topology, which follows the same Encoder-Decoder pattern as transformers [30], with some additions, such as extracting vector embeddings from the output of the encoder component. These embeddings allow for vector (cosine) similarity search [31]. This enables the user to search an existing database for similar craquelure networks in cases where no distinct match exists between the analyzed work and the existing vector database.
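A cosine similarity search over stored embeddings can be sketched in plain Python as follows. The class `EmbeddingIndex`, the identifiers, and the 4-dimensional toy vectors are hypothetical and serve only as an in-memory stand-in for a vector database; this is not the ChromaDB API, and real embeddings would have the dimensionality of the model bottleneck.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class EmbeddingIndex:
    """Minimal in-memory store: (id, embedding, metadata) with brute-force search."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float], dict]] = []

    def add(self, item_id: str, embedding: list[float], metadata: dict) -> None:
        self._items.append((item_id, embedding, metadata))

    def query(self, embedding: list[float], top_k: int = 3) -> list[tuple[float, str, dict]]:
        """Return the top_k stored items ranked by cosine similarity."""
        scored = [(cosine_similarity(embedding, e), i, m) for i, e, m in self._items]
        scored.sort(key=lambda t: t[0], reverse=True)
        return scored[:top_k]


index = EmbeddingIndex()
index.add("patch-a", [1.0, 0.0, 0.0, 0.0], {"artwork": "hypothetical A"})
index.add("patch-b", [0.0, 1.0, 0.0, 0.0], {"artwork": "hypothetical B"})
index.add("patch-c", [0.9, 0.1, 0.0, 0.0], {"artwork": "hypothetical C"})

results = index.query([1.0, 0.0, 0.1, 0.0], top_k=2)
```

Because the ranking always returns the nearest stored networks, a query still yields candidates when no exact match exists, which is the use case described above.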

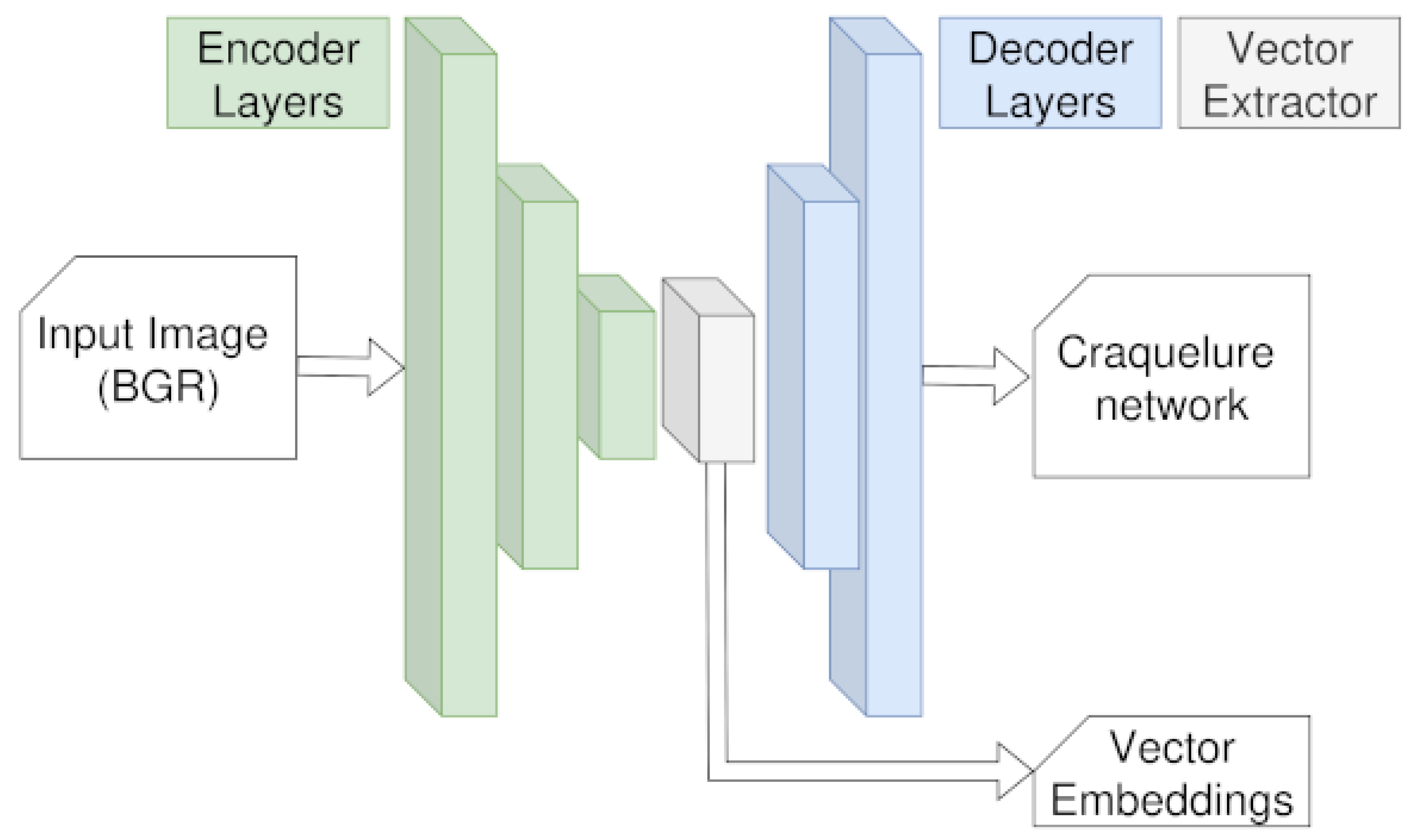

The modified architecture, presented in Figure 1, shows the extractor, encoder, and decoder blocks and presents how the vector embeddings are extracted from the model. The extractor block (green line in Figure 1).

Each of the blocks in Figure 1 ends with a MaxPool2D layer and contains two convolution layers. The bottleneck for the architecture consists of 64 neurons. It also provides the embeddings (output) containing a vector embedding summarizing the image. The Decoder side consists of three ConvTranspose2d layers. The activation function for both the encoder and the decoder is the Rectified Linear Unit (ReLU).
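The effect of the pooling and transposed-convolution layers on the spatial size can be checked with simple arithmetic. The sketch below rests on several assumptions that the text does not state: three pooling stages (mirroring the three ConvTranspose2d layers), 2 × 2 pooling with stride 2, size-preserving convolutions, and transposed convolutions with kernel 2 and stride 2. The function names are hypothetical.

```python
def pooled_size(size: tuple[int, int], stages: int, pool: int = 2) -> tuple[int, int]:
    """Spatial size after `stages` max-pool layers (kernel = stride = pool, no padding)."""
    h, w = size
    for _ in range(stages):
        h, w = h // pool, w // pool
    return h, w


def conv_transpose_size(n: int, kernel: int = 2, stride: int = 2,
                        padding: int = 0, output_padding: int = 0) -> int:
    """Output length of a transposed convolution along one axis (dilation 1)."""
    return (n - 1) * stride - 2 * padding + kernel + output_padding


def decoded_size(size: tuple[int, int], stages: int) -> tuple[int, int]:
    """Spatial size after `stages` transposed convolutions with the defaults above."""
    h, w = size
    for _ in range(stages):
        h, w = conv_transpose_size(h), conv_transpose_size(w)
    return h, w


# Assumed: three halving stages on a 992 x 1984 input, then three doubling stages.
latent = pooled_size((992, 1984), stages=3)
restored = decoded_size(latent, stages=3)
```

Under these assumptions, both 992 and 1984 divide by 8 without remainder, which is consistent with the output image having the same size as the input.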

One important aspect of our approach is to reduce the network size so that it can be applied on constrained targets (like websites, IoT devices, mobile phones, field-programmable gate arrays, network processing units, or tensor processing units) in order to extend the model applicability. The model details resulting from the architecture tuning are presented in Table 1. Such an approach requires the model to be exported to a portable format like ONNX [22,32].

The input image has a resolution of 600 dpi and a fixed size of 992 × 1984 pixels with BGR (blue–green–red) encoding. After the encoder stage, the embeddings are extracted and stored, along with other information, like the original image and proven authentication data, allowing for later queries to be performed. The reason for storing these data is to provide investigators with a similarity search tool. The output image is the same size as the input image, but this time, it contains only the craquelure network.

In order to obtain the output of the model, a combined loss function based on the MSE was applied, measuring both the pixel-wise accuracy and the overall difference between the generated image and the ORB-proposed output. For the optimizer, we rely on Adam, and to improve the training performance, we employ mixed-precision training (GPUs process reduced-precision floating-point values considerably faster than full-precision ones). During the development stage, a large amount of time is spent on input-output operations, because for each epoch we save the testing images (both target and produced) on disk for debugging purposes. Once the architecture is established, all debugging information is discarded. The learning rate is set to and is automatically adjusted using a callback when there is no decrease in the combined loss for 5 epochs (patience). The training termination callback is triggered after only 98 epochs for a stable loss of .
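The patience-based learning rate adjustment can be sketched as a small state machine. This is an illustration of the rule only, not the PyTorch or Lightning callback used in the work: the class name `PlateauSchedule`, the reduction factor of 0.5, and the starting value of 1.0 are placeholders, since the actual learning rate and reduction factor are not given in the text.

```python
class PlateauSchedule:
    """Reduce the learning rate after `patience` epochs without a lower loss."""

    def __init__(self, lr: float, patience: int = 5, factor: float = 0.5) -> None:
        self.lr = lr
        self.patience = patience
        self.factor = factor  # assumed value, not taken from the paper
        self.best = float("inf")
        self.stale = 0  # consecutive epochs without improvement

    def step(self, loss: float) -> float:
        """Record one epoch's loss and return the learning rate for the next epoch."""
        if loss < self.best:
            self.best = loss
            self.stale = 0
        else:
            self.stale += 1
            if self.stale >= self.patience:
                self.lr *= self.factor
                self.stale = 0
        return self.lr


# Placeholder starting value; two improving epochs, then five stagnant ones.
schedule = PlateauSchedule(lr=1.0, patience=5)
losses = [0.9, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8]
rates = [schedule.step(loss) for loss in losses]
```

In this sketch the rate is halved only at the fifth consecutive epoch without improvement, which matches the patience of 5 described above.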

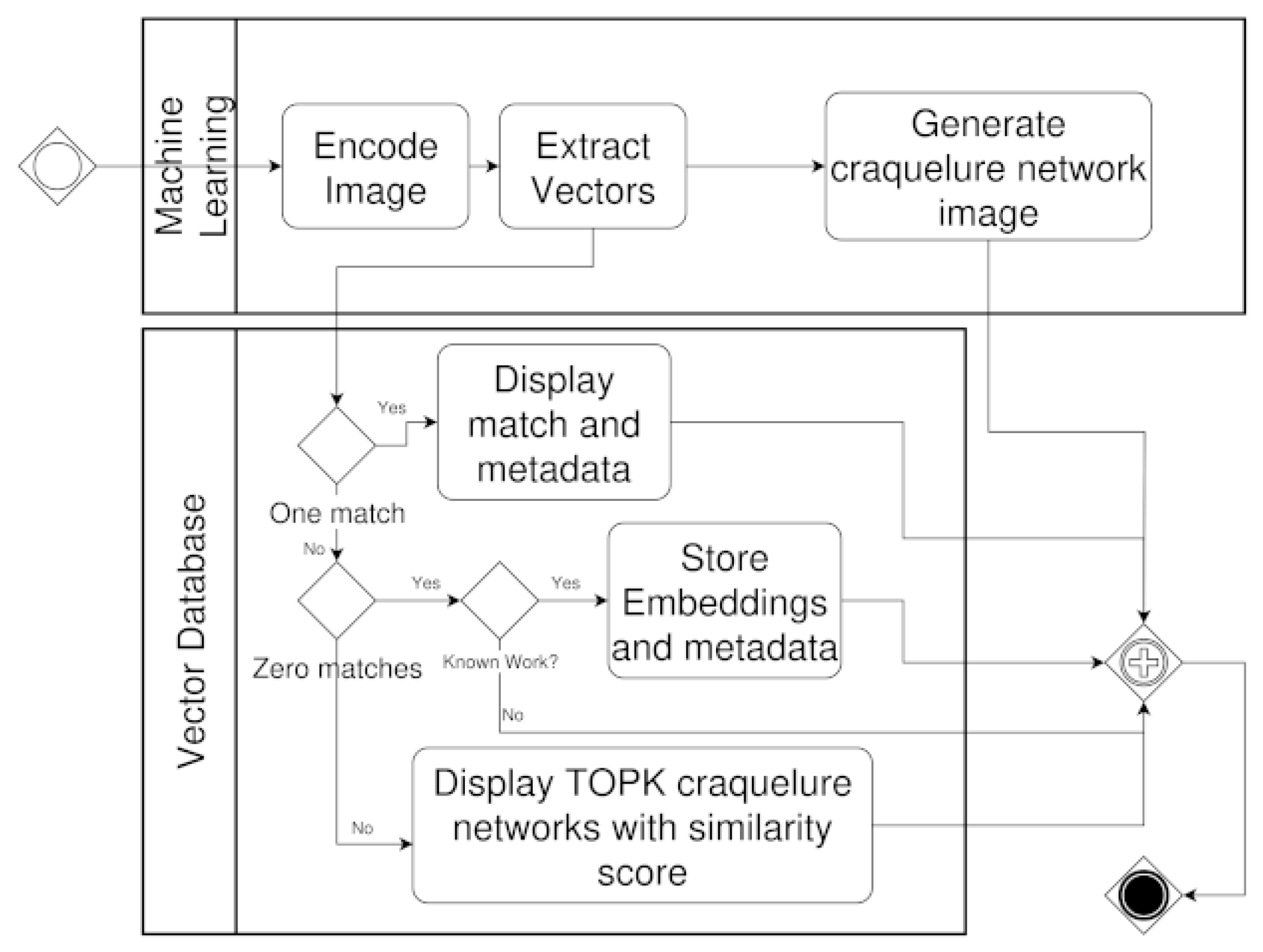

The output contains a single image of 992 × 1984 pixels, showing the craquelure network for the input image alongside the identified vector embeddings. One application of such a network consists of a craquelure network search tool for known data where a vector embeddings database (like ChromaDB [21]) can be employed, thus enabling such search using a similar approach to the one presented in Figure 2, where the blocking gateway at the right of the vector database lane waits for at least 2 inputs.

The overall training flow is implemented in an optimized fashion using a PyTorch Lightning [33] version 2.3.3 pipeline, together with the vision package [34], version 0.22. This allows us to define a custom DataLoader module using concurrent IO for batch generation.

3. Results

Starting from the traditional approach, in which common algorithms like ORB [

28] and other feature-focused algorithms like Scalar-Invariant Feature Transform (SIFT) [

35] and Histogram of Oriented Gradients (HOG) [

36] or Speeded-Up Robust Features (SURF) [

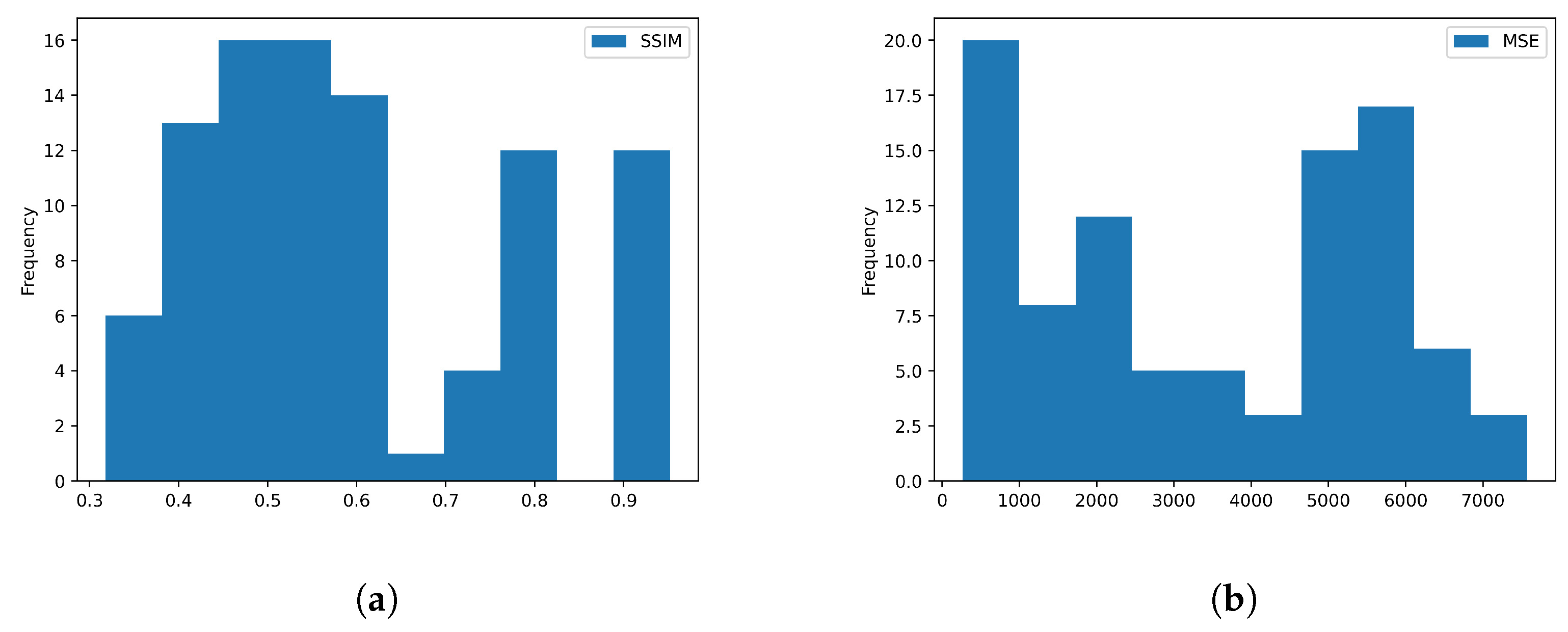

37] each have their own strengths and weaknesses, there is no one-size-fits-all approach. In order to overcome this issue, the first step in our approach generated target images for both training and validation. For the training part (we are using supervised training), we feed the model with both the input and the target image, while for the validation part, also from the MSE metric, we also employ the structural similarity index, allowing us to have better insight into the model outputs. In our case, the best results are obtained using the ORB algorithm.
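The two validation metrics can be sketched in plain Python on flattened pixel lists. The SSIM shown here is a simplified single-window (global) variant with the usual constants C1 = (0.01 L)² and C2 = (0.03 L)²; the standard index averages the same expression over local sliding windows, and the text does not say which implementation was used. The function names are hypothetical.

```python
def mse(a: list[float], b: list[float]) -> float:
    """Mean squared error between two equally sized pixel lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def global_ssim(a: list[float], b: list[float], data_range: float = 255.0) -> float:
    """Single-window SSIM: luminance, contrast, and structure over the whole image."""
    n = len(a)
    mu_a = sum(a) / n
    mu_b = sum(b) / n
    var_a = sum((x - mu_a) * (x - mu_a) for x in a) / n
    var_b = sum((y - mu_b) * (y - mu_b) for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a * mu_a + mu_b * mu_b + c1) * (var_a + var_b + c2)
    return numerator / denominator


# Toy target and two candidate outputs (flattened 8-bit gray values).
target = [0, 0, 255, 255, 0, 255]
identical = list(target)
inverted = [255 - v for v in target]

same_score = global_ssim(target, identical)
inverted_score = global_ssim(target, inverted)
```

MSE only measures per-pixel differences, whereas SSIM also responds to whether the structure of the network is preserved, which is why the two are reported together.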

On the other hand, using only one deterministic algorithm often resulted in the wrong craquelure attribution within the image. In order to address such cases, we created three main scenarios: the worst cases ( between the deterministic algorithm and the ML-generated craquelure network), the average cases ( ), and the best cases ( ). This separation was defined based on the Structural Similarity Index Measure (SSIM) and separated into three quartiles. The overall distribution of the evaluation functions (SSIM and MSE) is presented in Figure 3a,b.
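One possible reading of this separation is sketched below: samples with an SSIM below the first quartile are treated as worst cases, those above the third quartile as best cases, and the rest as average cases. The thresholds used in the work are not given in the text, so the cut points, the function name `split_by_quartiles`, and the toy scores are assumptions.

```python
import statistics


def split_by_quartiles(scores: list[float]) -> dict[str, list[int]]:
    """Group sample indices by SSIM: below Q1, between Q1 and Q3, above Q3."""
    q1, _, q3 = statistics.quantiles(scores, n=4)  # three cut points
    groups: dict[str, list[int]] = {"worst": [], "average": [], "best": []}
    for index, score in enumerate(scores):
        if score < q1:
            groups["worst"].append(index)
        elif score > q3:
            groups["best"].append(index)
        else:
            groups["average"].append(index)
    return groups


# Toy SSIM scores for nine samples (not measured values).
toy_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
groups = split_by_quartiles(toy_scores)
```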

The samples are pieces of secco mural painting with different pigments as the last pictorial layer and thus simulate very common and widespread situations. These are useful not only for detecting counterfeits, interventions, and retouches but also for determining any possible subtle degradation or damage on valuable or fragile surfaces. This helps verify possible damage induced by a poor conservation strategy or provides an improved understanding of the mechanisms and rate of natural degradation.

Tests were also carried out for the technique of oil painting on canvas.

As previously shown, monitoring the craquelure network helps in the case of paintings on canvas not only to ensure that the work has not been replaced but also that it has not been attacked, which would induce accelerated degradation. All the situations described are very important not only for restorers and conservators but also for appraisers and insurers.

All samples were subjected to accelerated aging tests by sudden and repeated variation of microclimatic factors (relative air humidity and temperature). In order to simulate the possible causes of the generation of new cracks on the surface, fine mechanical shocks were applied to the surface (such as those that would occur in the case of unwanted blows to some works during transport or custody of some works).

Deterministic approaches are usually more sensitive to the existing noise within the input image. The results are shown in Figure 4, Figure 5 and Figure 6, where we show the input image, the deterministic output, and the proposed machine learning output.

While all the images are recorded using visible light, not all features for the craquelure network are equally probable, and using controlled illumination techniques with only parts of the visible spectrum or photons from outside the visible spectrum might provide better results.

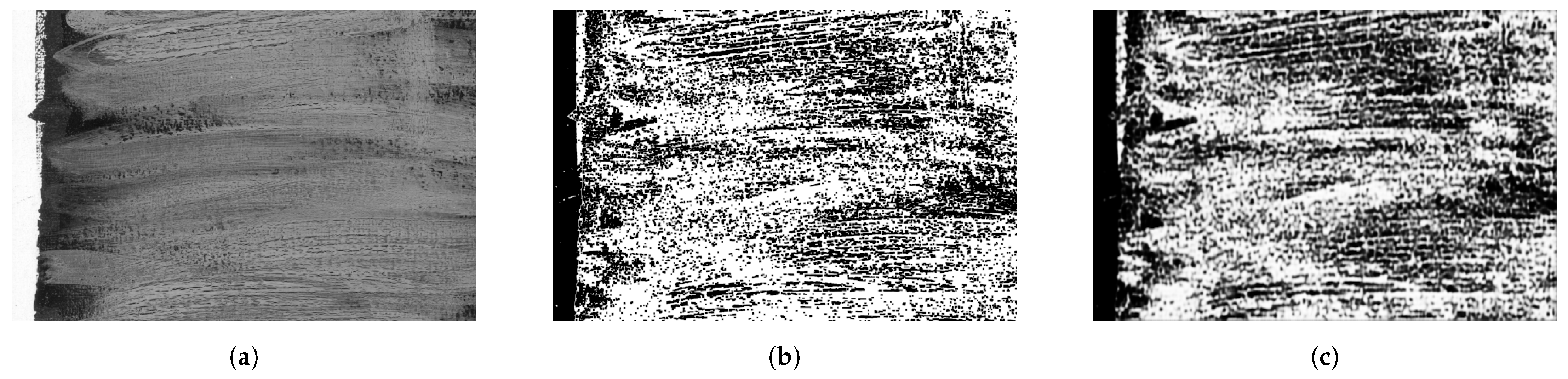

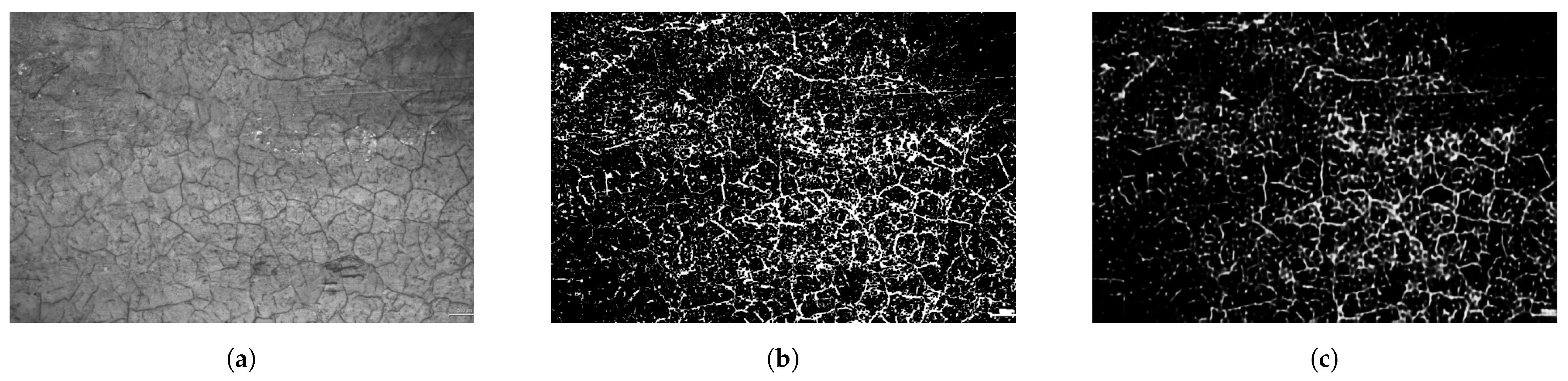

3.1. The Worst Case

The sample evaluated in this case consists of thick brush strokes that often mislead craquelure detection algorithms due to the structural composition of the artwork. In cases where there is no varnish protection, physical features like sample texture are often misinterpreted by standard algorithms, including the deterministic ORB-based algorithm that we use for generating the training target.

Figure 4b and the machine learning-produced craquelure network (Figure 4c) show similar features, like the diagonal stripes (lower right toward the top) that are not clear in the original image (Figure 4a). The additional strokes on the upper right of the images are not interpreted as craquelure but as edges, while the thick brush strokes contribute to the detection as high-gradient edges.

The best-detected edge is the one on the left of the original sample (while not clear due to the white edge). The higher intensity of the white line shows an increased probability for the craquelure detection, while the figure background shows lower white intensities.

The output images are converted to 8-bit integer grayscale for visualization; if floats are used, some of the features of the target and the predicted images are not visible to the naked eye.
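The conversion to 8-bit grayscale can be sketched as a clip-and-scale step. The sketch assumes that the model output is a floating-point image with values nominally in [0, 1], which the text does not state; the function name `to_uint8` is hypothetical.

```python
def to_uint8(image: list[list[float]]) -> list[list[int]]:
    """Clip values to [0, 1] and scale them to integer gray levels 0..255."""
    return [
        [int(min(max(value, 0.0), 1.0) * 255 + 0.5) for value in row]
        for row in image
    ]


# Toy float output, including values slightly outside the nominal range.
float_image = [[0.0, 0.5, 1.0], [1.3, -0.2, 0.25]]
gray_image = to_uint8(float_image)
```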

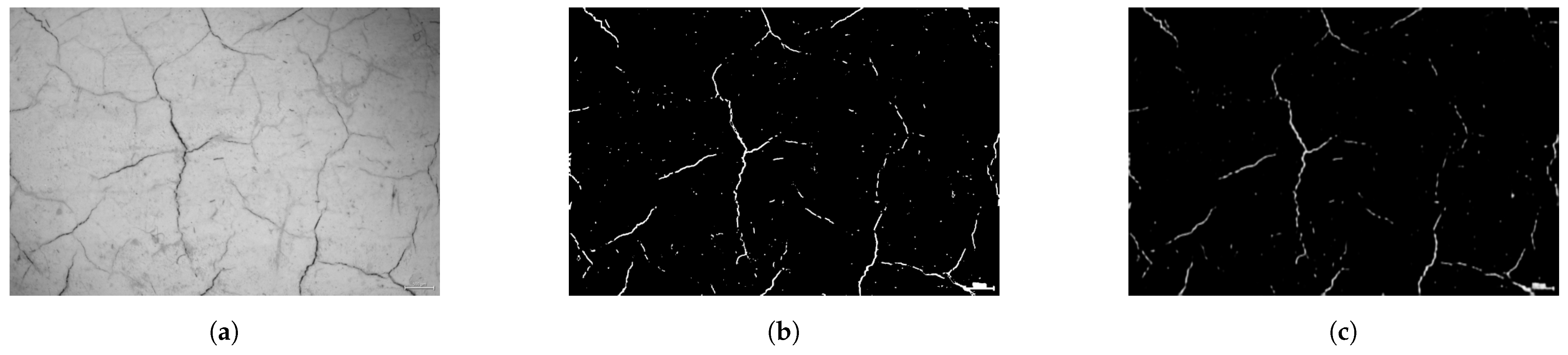

3.2. A Sample from the Average Case

This is one of the most common types of networks to be investigated, obtained from a picture with specific degradation, like missing segments (the right side of Figure 5a). These macroscopic degradations usually mislead craquelure network detection. Besides these degradations, macroscopic cracks are present on the substrate.

This is a typical case of incorrect detection for the ORB model, where the high density of the craquelure network leads to false positives. These false positives are shown in Figure 5b, where there are many detected points that are not related to the craquelure network. In this case, the predicted network (see Figure 5c) shows mainly the major networks. The model also detects less probable network positions (shown in darker gray), thus demonstrating that the machine learning algorithm provides more flexibility in terms of detection than the deterministic ones.

In order to make the lower probability prediction more visible, it is possible to adjust the image gray levels toward the maximum (thus increasing the intensity of the gray components for numbers closer to zero in the same way as histogram manipulation algorithms).
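One simple way to lift low gray levels is a gamma adjustment with an exponent below 1, sketched here in plain Python. This is a stand-in chosen for illustration; the text does not name the adjustment that was used, and the function name and the value 0.5 are assumptions.

```python
def brighten_low_levels(image: list[list[int]], gamma: float = 0.5,
                        max_value: int = 255) -> list[list[int]]:
    """Apply v -> max_value * (v / max_value) ** gamma; gamma < 1 lifts values near zero."""
    return [
        [round(max_value * (value / max_value) ** gamma) for value in row]
        for row in image
    ]


# Toy row: black, two faint (low-probability) levels, and full white.
faint = [[0, 16, 64, 255]]
adjusted = brighten_low_levels(faint)
```

Black and white stay fixed while the faint levels move toward the maximum, so low-probability craquelure becomes easier to see without changing the ordering of the gray levels.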

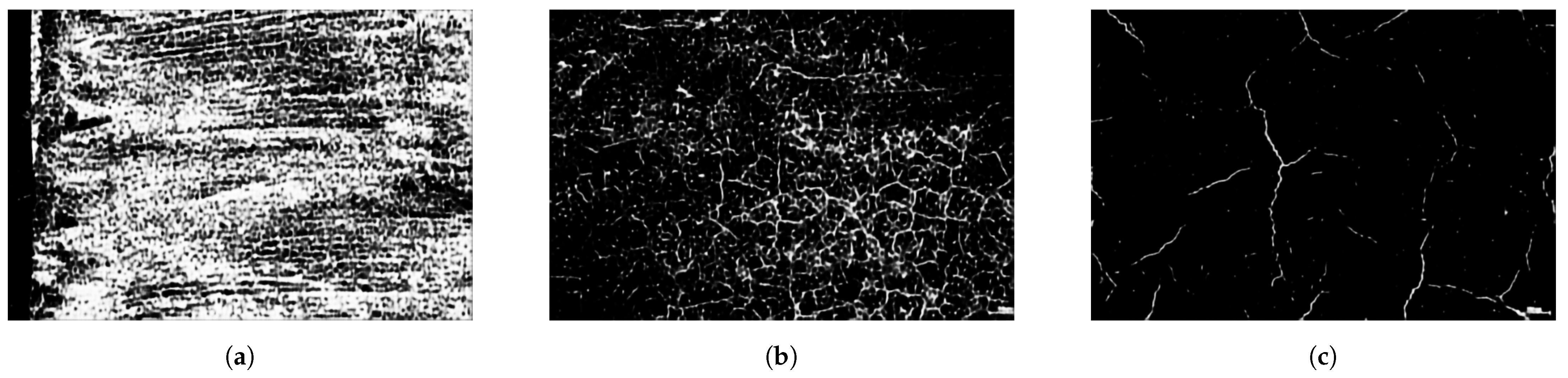

3.3. The Best Case

This sample contains a common picture with varnish, where the craquelure network developed over time. It shows both prominent and faded networks (see Figure 6a). At least for this case, the deterministic algorithm provided better results with accurate network detection, and this brought the ML detection much closer to the target.

This sample provides the best SSIM score between the target (Figure 6b) and the machine learning-produced output (Figure 6c). If an adjustment in the gray histogram is performed on the ML output, all features from the target are clearly visible. In this picture, the faded craquelure is interpreted with a lower probability (and is thus a darker gray).

We can also conclude that the machine learning approach is less sensitive to noise induced by the substrate deterioration than the deterministic methods. In conjunction with the embeddings search system (machines are less limited than humans in distinguishing gray levels), it provides a valuable craquelure network search tool for evaluators.

3.4. Portable Model Output

While it is known that any model conversion comes with an increase in loss with respect to the original model, this work focuses on model portability, and we chose a standard way to make the model portable using the ONNX [32] runtime. In our case, the export is less affected by the conversion of the number representation because the model already uses (for training and inference in the original PyTorch implementation) a lower-precision floating-point format, known as bfloat16. This approach speeds up the training process and reduces the gap between floating-point numbers and integers in the case of quantized inference.

In our model, we chose not to perform quantization and kept the bfloat16 when changing to the ONNX format.

In Figure 7, we present the output of the portable model for the samples presented above. Figure 7a was generated using the same image as shown in Figure 4, Figure 7b was generated from the same original image as in Figure 5, and Figure 7c has the same input as the one presented in Figure 6.

The exported model consists of only a 6.5 Mb file, which can be called for inference from any of the supported ONNX backends using the associated runtime [22].

4. Discussion

We started from the known premise that artwork items can be authenticated using the craquelure network with respect to the known craquelure network features at the time the item was identified. This approach focuses on small artworks that can travel to many places and be easily replaced by forgeries in such places. The main idea is to record a known, good (authenticated) craquelure network. The network of the returned artwork can then be compared by authenticators with the known good one in order to confirm the returned artwork.

With this in mind, we developed a tool that encodes, using a vector database, the known good craquelure network for later reference. This database is coupled with a machine learning algorithm (using a common architecture) that is embedded in devices (using micro-controllers, FPGAs, or even mobile phones or web apps/web APIs) in such a way that authenticators can easily verify the authenticity of an artwork. This choice oriented us toward a sparser network architecture, leading to the deep autoencoder architecture we implemented.

While the tool provided accurate results, it is still susceptible to errors due to image acquisition angles and the choice of patch; thus, its main purpose is not to replace specialized authenticators but rather to help them.

The results obtained in this research provide tooling support based upon the hypothesis that the craquelure network of a painting can serve as a unique digital fingerprint. Such a fingerprint is suitable for authenticating and monitoring degradation in artworks, providing a key point in their traceability. The integration of a vector database for both vector embeddings and the artwork metadata provides consistent storage with a query application programming interface, and these vectors provide consistent similarity results, even for small rotation angles. The decoder component can then be applied to reconstruct the original craquelure network and compare it to the current form, thus enabling efficient feature extraction and image reconstruction for human validation.

Some interesting results were obtained by swapping the encoder and the decoder parts, but such an approach makes it impossible to use the vector embeddings for similarity search (they are larger than the image itself). The attention mechanisms within the transformer architecture [30] were initially promising, but they quickly exhausted the resources of our graphical processing units (GPUs).

As this research deepens, we propose a more extensive study focused on paintings in acrylic paint on canvas, with and without a varnish layer. We anticipate that this technique will raise some specific problems due to the flexibility of acrylic materials and their high stability under microclimatic variations over time (with less pronounced dehydration).

Current limitations include the requirement for high-resolution images to achieve optimal performance and the potential for the model to overfit certain types of paintings. Future directions may include generalization to more artistic styles and historical periods, the integration of multi-modal data (e.g., spectral), and the development of mobile applications for on-site authentication.