1. Introduction

Scientific visualization is a sub-discipline of data visualization concerned with the representation, interpretation, and interactive exploration of data derived from computational simulations, empirical measurements, or theoretical models. It plays a key role in the qualitative and quantitative analysis of high-dimensional, spatiotemporal, and multivariate datasets [1,2,3,4,5]. Visualizing complex fluid dynamics, especially supersonic spatially developing turbulent boundary layers (SDTBLs), is challenging due to the high spatial and temporal resolutions required. Direct Numerical Simulation (DNS) offers detailed insights into turbulent flows but generates massive, complex datasets that hinder visualization and analysis. Computational fluid dynamics (CFD) aims to accurately model fluid behavior under specific conditions, often involving varied geometries and scales, which introduces significant computational demands. Traditional CFD methods are computationally intensive, requiring long runtimes and large amounts of storage to achieve convergence. However, the emergence of high-performance GPUs with massively parallel, multi-threaded architectures has substantially lowered this barrier, allowing even simple CFD algorithms to provide results almost instantly, as in Bednarz et al. [6].

One of the primary objectives of this research is to develop an adaptive scientific visualization framework that can adjust to the specific needs of datasets produced by numerical simulations with high spatial and temporal resolution. The proposed approach improves the user’s capacity to highlight spatial data that shows fluid dynamics, convert it to several visual formats (iso-surfaces, points, and vectors), and scale it to various sizes to fulfill specific analytical demands.

Recent developments have brought more attention to the possibilities of incorporating virtual reality (VR), augmented reality (AR), and mixed reality (MR) into CFD workflows [7,8,9,10,11,12,13]. One notable study uses CFD in conjunction with four-dimensional flow-sensitive magnetic resonance imaging (4D-MRI) to examine the flow dynamics in a multi-staged femoral venous cannula. The study’s 7% disparity between the experimental data and the CFD models shows the need for accurate validation methodologies [14]. The results also showed that the cannula’s proximal holes had the maximum inflow and velocity, producing a Y-shaped inflow profile. This information is useful for improving cannula designs for extracorporeal therapy.

Additionally, a notable development in the field has been the fusion of Building Information Modeling (BIM) with CFD and VR, made possible by programs like Autodesk Revit, CFD Simulation, and 3D Max [15]. A state-of-the-art method known as Mixed-Reality Particle Tracking Velocimetry (MR-PTV) has also emerged, offering validated, real-time visualizations of the particle velocities inside flow fields. According to [16], MR-PTV provides accurate flow visualizations through the use of synchronized front-facing cameras in MR headsets, which has significant implications for industrial and educational applications. This manuscript expands on these advances by studying the remote visualization of a Direct Numerical Simulation (DNS) with the Unity game engine [17], specifically by employing different iso-surface values and vortex core identification techniques, such as the Q-criterion proposed by [18]. The proposed methodology uses Microsoft Azure to address large-scale data storage challenges and, combined with mixed-reality environments, allows complicated fluid dynamics datasets derived from previously conducted CFD simulations to be visualized.

Augmented reality (AR) proves effective in enhancing planning, maintenance, collaboration, and simulation in CFD visualization, offering intuitive interaction and a deeper understanding of design decisions. Accurate tracking of the device and user positions, accomplished using vision-based, sensor-based, and hybrid tracking techniques, is crucial to the success of augmented reality systems. The combination of these technologies with portable devices and head-mounted displays (HMDs) has made it easier to create AR applications that are not only easy to use but also effective at reducing the discomfort typical of extended use [19,20]. Challenges remain in visualizing high-resolution, time-dependent DNS data on mobile devices due to large frame sizes and the complexity of VTK files, which demand significant processing. This work addresses these issues by optimizing data formats and visualization methods to present unsteady 3D CFD results effectively in AR environments.

Related research, like the creation of a virtual Gerotor pump prototype [21], highlights the potential of sophisticated visualization methods in CFD. The prototype enables the creation of a full Digital Twin by integrating CFD and experimental data, streamlining performance evaluation and enhancing design accuracy. This case study demonstrates the wider range of applications for visualization technologies in engineering and how they can improve the precision and effectiveness of intricate design procedures [22]. The role of extended reality (XR), comprising virtual reality (VR), augmented reality (AR), and mixed reality (MR), in computational fluid dynamics (CFD) has been expanding rapidly, particularly as simulation complexity and data visualization demands continue to grow. XR technologies offer significant advantages for data interpretation, interactive simulation, collaboration, and design iteration in the context of CFD.

The core objective of this study is to develop an immersive, interactive platform that improves the interpretation of these intricate datasets by integrating DNS data with extended reality (XR) settings, notably virtual reality (VR), augmented reality (AR), and remote visualization. With the use of XR, this work seeks to transform the visualization of fluid dynamics, offering a novel approach to understanding these intricate processes [23]. A key focus of this research is applying data compression, both server-side and local, to reduce file sizes while preserving visual fidelity for efficient AR data handling. The compressed GLB files, each representing a time frame of the fluid simulation, are then imported into the Unity game engine. Within Unity, a custom animation script sequences the frames to give the user the impression of an animated flow, creating a dynamic visualization of the fluid behavior in the virtual wind tunnel (VWT). This animated sequence is then integrated with the Azure Remote Rendering framework, leveraging cloud computing capabilities for enhanced performance. The final stage involves connecting the rendered output to Microsoft HoloLens 2, enabling users to interact with the fluid simulations in an augmented reality environment. This connection facilitates high-quality rendering of complex fluid dynamics in the user’s field of view, allowing for intuitive analysis of flow patterns and turbulent events. The emphasis of the Unity profiling analysis is on optimizing the GPU and CPU performance for an overall enhancement of the VWT’s efficiency. Consequently, the framework can be readily adapted to a wide array of domains and environments (such as pedagogical simulation, biomedical visualization, education, architectural modeling, molecular dynamics, advanced manufacturing processes, sports, and immersive virtual tourism) to facilitate high-fidelity, fully immersive visualization experiences [24,25,26,27,28,29,30,31,32,33].
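The frame-sequencing idea described above can be illustrated with a short sketch. This is not the authors' Unity script (which would be written for the Unity runtime); it is a language-neutral illustration in Python, and the names `FrameSequencer`, `frame_count`, `fps`, and `loop` are hypothetical. It only shows one way to map elapsed playback time to the single time-step frame that should be visible.

```python
class FrameSequencer:
    """Maps elapsed playback time to the index of the one visible frame."""

    def __init__(self, frame_count: int, fps: float, loop: bool = True):
        if frame_count <= 0 or fps <= 0:
            raise ValueError("frame_count and fps must be positive")
        self.frame_count = frame_count
        self.fps = fps
        self.loop = loop

    def active_frame(self, elapsed_seconds: float) -> int:
        # Number of whole frame periods that have elapsed so far.
        index = int(elapsed_seconds * self.fps)
        if self.loop:
            return index % self.frame_count       # wrap around for looping playback
        return min(index, self.frame_count - 1)   # otherwise hold the last frame

    def visibility_mask(self, elapsed_seconds: float) -> list:
        # Exactly one frame is shown at a time; all others are hidden.
        active = self.active_frame(elapsed_seconds)
        return [i == active for i in range(self.frame_count)]
```

Showing one mesh at a time and hiding the rest is only one possible design; the trade-off is that all frames stay resident in memory while only one is drawn.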

2. Methods and Materials

The proposed methodology establishes an end-to-end extended reality (XR) visualization framework tailored for the Direct Numerical Simulation (DNS) data of compressible turbulent flows. This framework integrates scientific post-processing, 3D data compression, profiling-based optimization, and real-time cloud rendering. Each stage is designed to ensure performance scalability and the accessibility of the high-fidelity visual content in augmented reality environments such as that provided by the Microsoft HoloLens 2.

Section 3 provides a concise overview of Direct Numerical Simulation (DNS) as applied to spatially developing turbulent boundary layers (SDTBLs). DNS constitutes a high-fidelity computational methodology wherein the Navier–Stokes equations are integrated directly, without employing any turbulence modeling, thereby fully resolving the entire spectrum of dynamically significant spatial and temporal scales inherent in the turbulent flow field.

The DNS datasets used in this study corresponded to supersonic turbulent boundary layers. Raw simulation outputs, typically stored in structured volumetric formats, were processed using ParaView 5.10 and Python 3.13 scripting. The post-processing pipeline extracted iso-surfaces representing significant scalar fields such as the velocity fluctuations, temperature, or vorticity (Section 4). These surfaces were exported in a polygonal geometry format (GLTF) while preserving the mesh resolution and spatial scaling. To enable rendering in Unity, only the relevant frames or time steps were selected, and scalar field values were mapped to vertex colors or shaders, providing visual insight into the flow structures. This step was critical in transitioning from numerical data to 3D assets suitable for XR visualization.

Once extracted, the high-resolution meshes underwent compression to optimize them for rendering on limited-resource devices. The GLB format, an efficient binary encoding of the GLTF standard, was used for mesh storage and transmission (Section 5). This format reduced the file size and eliminated external dependencies, enabling rapid loading and streaming within Unity. Mesh simplification techniques were employed to lower the polygon counts by 60–80% without significantly compromising the visual fidelity. The result was a set of optimized 3D assets that retained sufficient detail for scientific interpretation while being lightweight enough for mobile or head-mounted rendering.

To ensure stable and responsive user interaction, performance profiling was conducted using Unity’s built-in tools. The Unity Profiler and Profile Analyzer were used to analyze the CPU and GPU frame times, identify rendering limitations, and monitor memory usage patterns (Section 6). Key metrics such as the garbage collection frequency, draw calls per frame, and shader compilation time were tracked. Informed by this analysis, the application was refactored to incorporate techniques like level-of-detail (LOD) switching, asynchronous asset loading, and efficient animation state management. These changes improved the frame rate (FPS) and reduced memory fragmentation by 30%, making the application stable for prolonged XR sessions. The profiling stage was essential for transitioning from functional visualization to a smooth and high-performance immersive experience.

To further offload the processing demands and enable large-scale rendering, a remote visualization architecture was implemented using Microsoft Azure. A high-performance Azure Virtual Machine (VM), equipped with NVIDIA GPU resources and running Windows Server, was used to handle DNS model rendering. The Unity application was deployed on this VM and integrated with Azure Remote Rendering (ARR) services. The rendered output was streamed to the HoloLens 2 via a secure 5 GHz wireless network (Section 7). Device deployment was performed using Visual Studio with settings tailored for ARM64 builds using the Universal Windows Platform (UWP). The HoloLens Developer Mode and Device Portal facilitated USB-based application sideloading and IP-based debugging. This configuration overcame the hardware limitations of the HoloLens 2 by leveraging scalable cloud resources and enabled complex models with over 1 million polygons to be visualized interactively in AR.

The complete pipeline from DNS post-processing to remote rendering demonstrates a scalable and immersive approach to scientific visualization. The system supports real-time exploration of fluid dynamic phenomena in AR, offering intuitive spatial awareness and interaction capabilities that surpass those of traditional 2D screen-based methods. Additionally, the use of a cloud-based architecture permits simultaneous access by multiple users, promoting collaborative analysis and remote education. The combination of data fidelity, performance optimization, and interactive delivery establishes a robust framework for XR-based research in computational fluid dynamics and other scientific domains involving high-volume, time-resolved simulation data.

The present manuscript introduces significant technical and methodological advancements over previous work [22], which we summarize below:

Remote Virtual Object Visualization: The current work introduces remote visualization capabilities for virtual flow datasets. This feature was not available in [22], where all visualizations were conducted locally on a single workstation.

GLB Optimization and Multi-Time Encapsulation: In [22], each time step of the CFD simulation had to be exported as a separate GLTF file, leading to inefficiencies in data handling and visualization. In contrast, the present work develops a novel methodology that encapsulates multiple time steps within a single GLTF file, which is then converted to the GLB format, significantly improving the loading times, asset management, and memory efficiency.

Quantitative Performance Profiling: We provide a detailed quantitative analysis of the system performance using Unity’s Profile Analyzer and Memory Profiler tools. This level of profiling, including metrics such as the total and tracked memory usage, the Garbage Collector’s impact, and the asset import overhead, was not performed in [22].

Real-Time Optimization for XR Applications: The current work explores runtime optimizations for large-scale XR applications, including the effects of disabling Unity’s incremental Garbage Collector and the impact of GLB formatting on memory fragmentation and performance stability, areas not covered in earlier work.

In summary, this manuscript builds upon the foundational XR visualization methodology introduced in [22] but introduces substantial improvements in terms of the data structure efficiency, performance optimization, and interactivity for the visualization of complex and large-scale CFD datasets in immersive environments.

3. Direct Simulation of Turbulent Wall-Bounded Flows

It is well known that in fluid dynamics and wall-bounded flows most of the transport of mass, momentum, and heat takes place inside the boundary layers. Numerically speaking, accurately characterizing this thin shear layer is arduous, especially if the purpose is to capture the turbulence, compressibility, and wall curvature effects, particularly at large Reynolds numbers and with complex geometries. Furthermore, three-dimensionality and unsteadiness are inherent aspects of fluid dynamics, not outliers, making the boundary layer problem formidable and resource-consuming. Direct Numerical Simulation (DNS) is a numerical tool that resolves all the turbulence length and time scales in computational fluid dynamics (CFD). As a consequence, it supplies data on the fluid flow within the boundary or shear layer with the finest spatial and temporal resolution available. The downside is the tremendous computational resources needed not only in the stage of running the flow solver but also during the storage and post-processing phases [34,35]. This study focuses on turbulent boundary layers that develop spatially along the flow direction, showing non-homogeneous conditions along the streamwise direction due to turbulent entrainment (i.e., spatially developing turbulent boundary layers, SDTBLs). DNS of SDTBLs requires the prescription of the time-dependent inflow turbulence at the computational domain inlet. A method for prescribing realistic turbulent velocity inflow boundary conditions was employed [36]. The methodology was extended to high-speed turbulent boundary layers in [34]. Furthermore, accounting for surface curvature effects introduces non-trivial difficulties to the problem, since the boundary layer is subject to critical distortion due to the combined streamwise–streamline (Adverse and Favorable) pressure gradients.

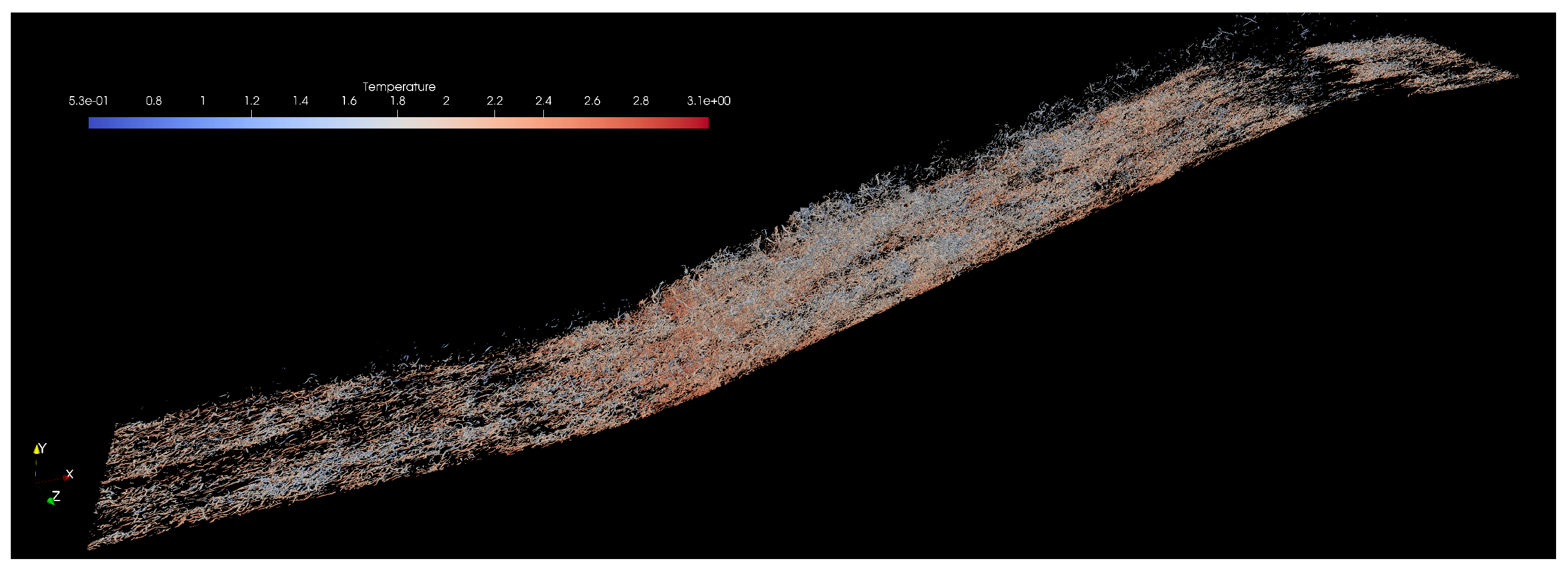

Figure 1 shows a lateral view of the complex geometry of the current case (concave and convex wall curvatures) in supersonic turbulent boundary layers with 385 × … cells. The Reynolds number ($\delta^+ = \delta u_\tau/\nu_w$) range is 900–2600, which represents a real challenge for the DNS approach. Here, $\delta$ is the boundary layer thickness, $u_\tau$ denotes the friction velocity, and $\nu_w$ is the wall’s kinematic viscosity. The preliminary results in Figure 1 (the numerical simulation is close to reaching the statistically steady flow stage) exhibit instantaneous fluid density contours for Adiabatic walls, with an evident oblique shock wave (the inclined line at the gray–white zone interface) generated by the concave surface, which slows down the incoming supersonic flow and imposes an Adverse Pressure Gradient (APG).

Figure 2 shows vortex cores that were extracted and visualized using the Q-criterion methodology [18]. Clearly, at such high Reynolds numbers, the turbulence structures look finer and more isotropic. The presence of an APG at the concave curvature enhances turbulence production and velocity fluctuations, as demonstrated by the high concentration of vortex cores. On the other hand, the convex curvature results in very strong flow acceleration, i.e., a very strong Favorable Pressure Gradient (FPG), in the supersonic expansion (note the presence of expansion waves in the density contour plot). This very strong FPG induces quasi-laminarization of the incoming flow [37]. It can be observed that a very low density of vortex cores exists downstream of the convex curvature.
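For readers unfamiliar with the Q-criterion, the following sketch evaluates it at a single point from a 3×3 velocity gradient tensor, using the common definition $Q = \tfrac{1}{2}\left(\lVert\Omega\rVert^2 - \lVert S\rVert^2\right)$, where $S$ and $\Omega$ are the symmetric and antisymmetric parts of the gradient. It is only an illustration under that assumed definition: in the study, Q is computed with ParaView filters, and the function name `q_criterion` and the index convention `grad_u[i][j]` are hypothetical choices made here.

```python
def q_criterion(grad_u):
    """Return Q = 0.5 * (||Omega||^2 - ||S||^2) for a 3x3 velocity gradient.

    grad_u[i][j] is assumed to hold d(u_i)/d(x_j) at one grid point.
    """
    s_norm_sq = 0.0
    omega_norm_sq = 0.0
    for i in range(3):
        for j in range(3):
            s_ij = 0.5 * (grad_u[i][j] + grad_u[j][i])      # strain-rate tensor
            omega_ij = 0.5 * (grad_u[i][j] - grad_u[j][i])  # rotation-rate tensor
            s_norm_sq += s_ij * s_ij
            omega_norm_sq += omega_ij * omega_ij
    return 0.5 * (omega_norm_sq - s_norm_sq)


# Three canonical flows, useful as sanity checks.
solid_rotation = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
pure_strain = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, 0.0]]
simple_shear = [[0.0, 1.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
```

Positive Q marks regions where rotation dominates strain, which is why iso-surfaces of positive Q are used to visualize vortex cores; solid-body rotation gives Q > 0, pure strain gives Q < 0, and simple shear gives Q = 0.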

In this study we employed a DNS database [38] of supersonic concave–convex geometries at lower Reynolds numbers for mixed-reality (MR) purposes. The computational domain possessed 10 × … cells. Three wall thermal conditions were prescribed: Cold, Adiabatic, and Hot conditions. The Reynolds number range was 214–684. Readers are referred to [22] for additional scientific visualization details.

4. DNS Data Post-Processing for XR Visualization

Processing large-scale simulations is a non-trivial task that demands a scalable and efficient solution. This study predominantly used the in-house Aquila library [35]. Aquila is a modular library for post-processing large-scale simulations. It enables the processing of large datasets by operating out of core and providing the illusion of an in-memory dataset through asynchronous data pre-fetching [35]. The library uses an MPI backend for distributed memory communication and HDF5 [39] to ensure efficient and compressed files at the storage backend. High-performance calculations requiring instantaneous flow parameters are handled by an asynchronous dispatch engine that takes care of scheduling without input from the domain expert. Currently, the focus is on the use of Threading Building Blocks (TBB) as the threading layer [40,41]. TBB incorporates a non-centralized, highly scalable work-stealing scheduler amenable to unbalanced workloads. This implementation also addresses issues such as conditional masking of flow variables via Boolean algebra, which avoids excessive memory allocations in favor of additional integer calculations. Optimizing compilers are capable of generating machine instructions accounting for the wide backends in modern CPUs and concurrent floating point/integer units in modern GPUs, taking advantage of the added integer computations. All in all, the described post-processing infrastructure is scalable from platforms with constrained performance to large-scale supercomputers with thousands of CPU/GPU cores.
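The two ideas in this paragraph, out-of-core processing with asynchronous pre-fetching and masking through arithmetic rather than filtered copies, can be sketched as follows. This is not Aquila's implementation (which relies on MPI, HDF5, and TBB); it is a minimal single-process illustration using Python's standard library, and `process_out_of_core`, `fake_load`, and `masked_sum` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor


def process_out_of_core(chunk_ids, load_chunk, reduce_chunk):
    """Process chunks one at a time while the next chunk loads in the background."""
    results = []
    if not chunk_ids:
        return results
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(load_chunk, chunk_ids[0])
        for next_id in chunk_ids[1:]:
            data = pending.result()                     # wait for the current chunk
            pending = pool.submit(load_chunk, next_id)  # start pre-fetching the next
            results.append(reduce_chunk(data))          # compute overlaps with the load
        results.append(reduce_chunk(pending.result()))  # last chunk has no successor
    return results


def fake_load(chunk_id):
    # Hypothetical stand-in for reading one flow field from disk.
    return [float(chunk_id + k) for k in range(4)]


def masked_sum(values, threshold=2.0):
    # The Boolean condition is converted to 0/1 and multiplied in, so no
    # filtered copy of the data is allocated.
    return sum(v * int(v > threshold) for v in values)
```

Only two chunks are alive at any moment (the one being reduced and the one being loaded), which is what keeps the memory footprint bounded regardless of the dataset size.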

The DNS dataset size utilized in the present study at low Reynolds numbers was 736 GB (approximately 4000 three-dimensional flow fields). The DNS dataset at much higher Reynolds numbers currently being assembled is estimated to reach around 28 TB, comprising 6200 three-dimensional flow fields. Visualization of the 3D database was performed by means of the modular interface VTK library [42] (.vts, .vtu, or .vtk formats, including the .pht format for data from the PHASTA flow solver reader), which enabled visualization in ParaView [43].
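A quick back-of-the-envelope calculation shows what these totals imply per flow field. The sketch below assumes decimal units (1 TB = 1000 GB) and treats the quoted field counts as exact, although the first is given only approximately; the helper name `gb_per_field` is hypothetical.

```python
def gb_per_field(total_gb: float, n_fields: int) -> float:
    """Average storage per three-dimensional flow field, in GB."""
    return total_gb / n_fields


low_re = gb_per_field(736.0, 4000)          # low-Reynolds-number database
high_re = gb_per_field(28.0 * 1000, 6200)   # high-Reynolds-number database (estimate)
growth = high_re / low_re                   # per-field growth factor
```

Under these assumptions a single field grows from roughly 0.18 GB to roughly 4.5 GB, about a 25-fold increase, which illustrates why scalable post-processing is needed before any data reach the visualization stage.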

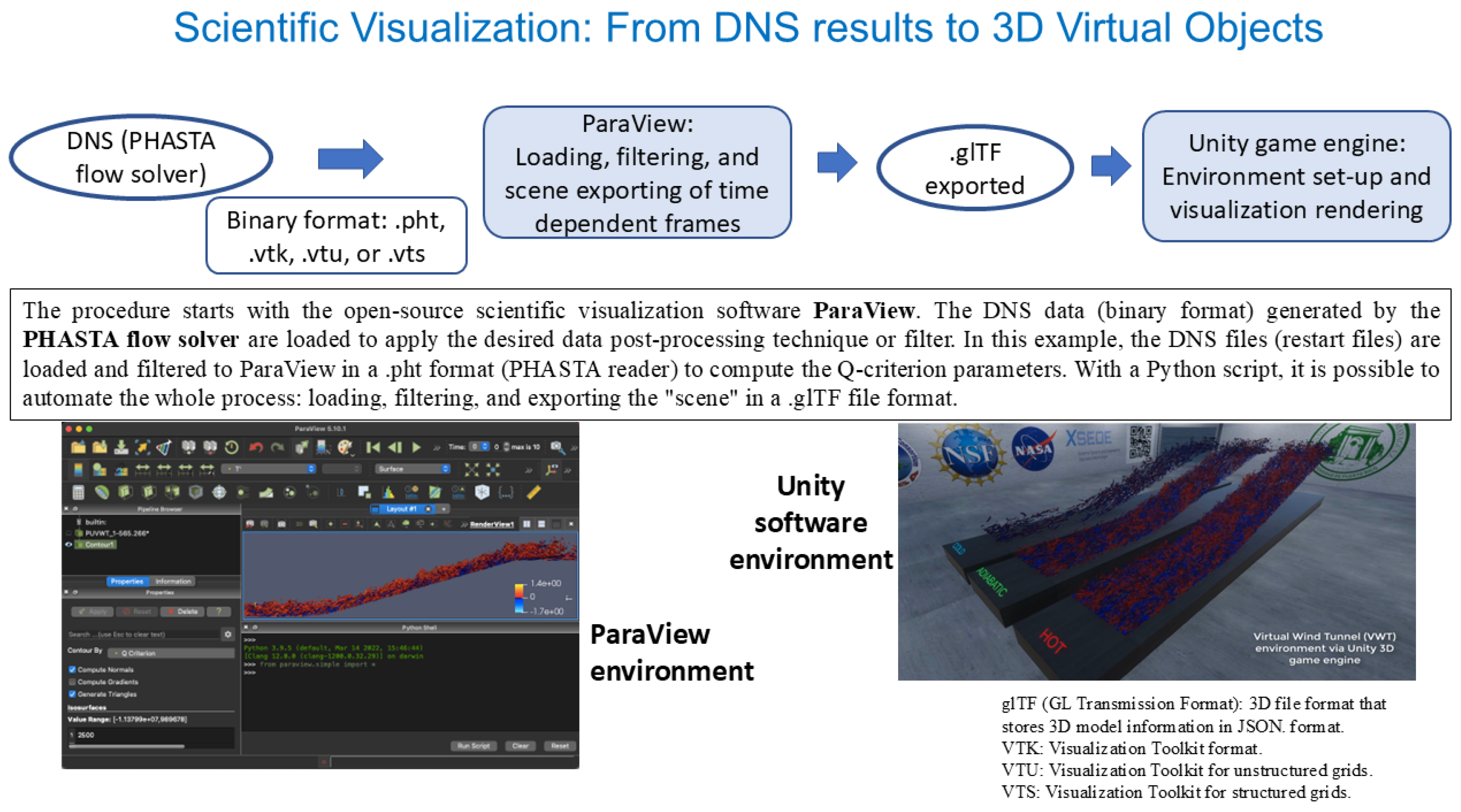

Figure 3 depicts the pipeline from high-spatial/temporal-resolution data to Unity game engine rendering in the virtual wind tunnel (VWT). The procedure started with the open-source scientific visualization software ParaView. The DNS data (binary format) generated by the PHASTA flow solver were loaded to apply the desired data post-processing technique or filter. In the left image in Figure 3, the DNS files (restart.* files obtained from the PHASTA flow solver) were loaded into ParaView through the PHASTA reader (.pht format) and filtered to compute the Q-criterion. With a Python script, it was possible to automate the whole process: loading, filtering, and exporting the “scene” in GLTF format [22]. Once prepared, the data were imported into Unity to develop a virtual wind tunnel (VWT), a virtual environment where users could visualize and interact with DNS results on demand, offering a truly immersive experience.

This study focuses on visualizing turbulent fluid flows, specifically supersonic spatially developing turbulent boundary layers (SDTBLs), using DNS data with high spatial and temporal resolutions. We explore the use of a Direct Numerical Simulation (DNS) database for visualization using augmented reality (AR) technologies, such as the HoloLens Gen. 2, and Microsoft Azure as a backend to reduce the local computational usage and rendering resources required.

5. Data Optimization and Compression via GLB Encoding

Efficient asset preparation is essential for performance-sensitive scientific visualization, particularly when handling high-frequency real-time rendering of a dense, frame-based simulation output. To facilitate smooth rendering of temporally resolved flow fields in Unity, a streamlined pipeline was devised to consolidate multiple GLTF files, each representing a distinct time step, into a single binary GLTF (GLB) file suited to runtime execution. To enable this frame consolidation, a script was implemented and run with Node.js tooling, using a GLTF library to merge multiple GLTF outputs into one.

Experiments were conducted to evaluate the performance and memory efficiency of the GLTF and GLB formats using 40-frame simulations for the Hot, Cold, and Adiabatic wall conditions. In the GLTF cases, the three wall conditions were simulated concurrently, resulting in average memory usage accompanied by noticeable performance fluctuations due to the separate handling of meshes, textures, and animations inherent to the GLTF. In contrast, the GLB format, which consolidates all assets into a single binary file, exhibited superior memory management and reduced the performance variance. In particular, GLB-based simulations demonstrated a significant reduction in garbage collection (GC)-related spikes in the profile analysis, leading to smoother and more stable system performance. Statistical analysis revealed that the GLB format achieved memory savings of approximately 23–25% in all the tested cases. Specifically, the Cold wall saw a reduction in memory usage of 22.84%, the Hot wall 25.23%, and the Adiabatic wall 25.00%, as shown in Table 1. These results highlight that the binary nature of GLB files not only reduces the file sizes but also minimizes the memory overhead and optimizes the asset loading efficiency. Consequently, the GLB format proved to be more effective than the GLTF format in terms of its memory usage, performance stability, and garbage collection management for large-scale simulations and real-time applications.

Optimizing Scientific Visualization in Unity: Strategies for Compressing GLTF Files to GLB Files

For this analysis, two separate projects were created in Unity (version 2022). The first project used 40 individual GLTF frames from the Cold, Hot, and Adiabatic simulation situations. In this configuration, each frame was loaded and handled as an individual resource. In the second project, all 40 frames were combined into a single GLB file and converted to a binary format for better asset management. The aim of this approach was to compare the performance and memory efficiency of the handling of separate GLTF files and a single GLB file during long-duration simulations. The subsequent section presents a comprehensive overview of the data compression optimization process and its impact on enhancing the real-time simulation performance.

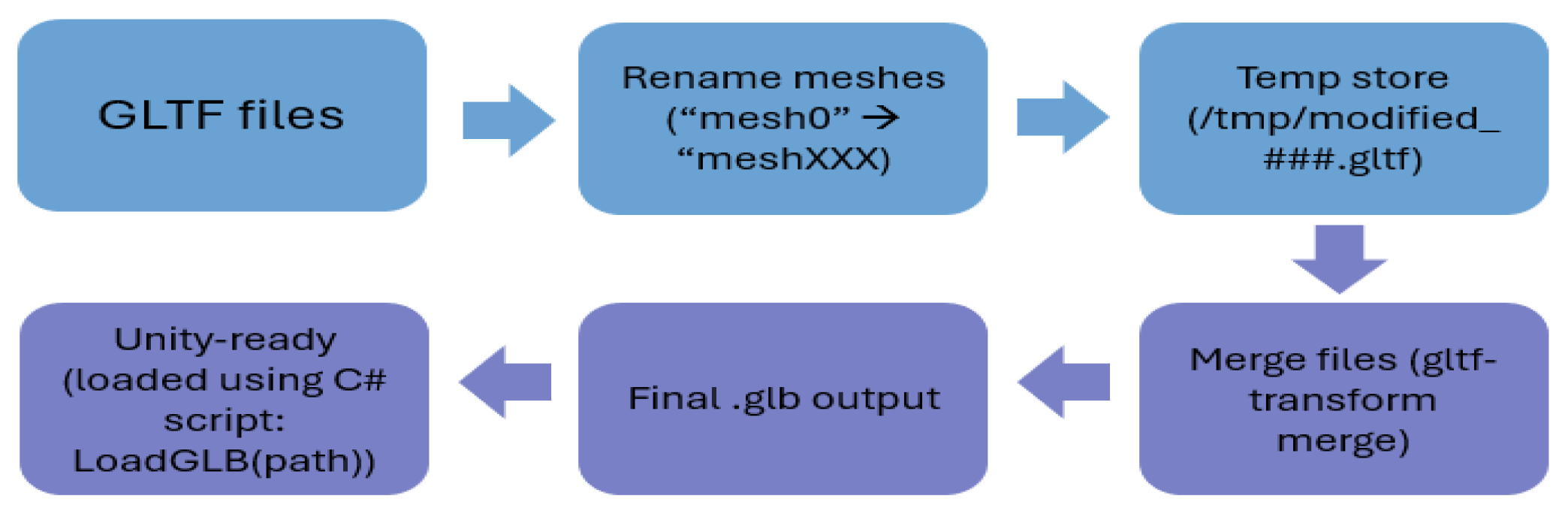

This process was automated using a Bash script, as shown in Figure 4, executed within a Linux shell environment using the Windows Subsystem for Linux (WSL2), specifically Ubuntu 20.04. The script performed three critical operations: (a) the input GLTF files, each corresponding to a unique simulation frame, were deterministically sorted using lexicographic ordering based on filename indices to preserve temporal continuity; (b) duplicate mesh identifiers were automatically disambiguated, in that mesh objects originally labeled “mesh0” across all the files were uniquely renamed (e.g., “mesh000”, “mesh001”, etc.) using stream editing (sed) during pre-processing; and (c) the modified assets were programmatically merged using the gltf-transform merge tool from the @gltf-transform/cli toolkit (v3.0+), installed using Node.js, which is built on top of the GLTF 2.0 specification and offers high-performance conversion and restructuring of GLTF assets. The script leveraged mktemp to manage temporary processing directories, ensuring non-destructive input handling. Briefly, mktemp is a command-line utility available on Unix-like operating systems such as Linux and BSD that securely creates temporary files and directories with unique names, which makes it particularly useful in shell scripts that need temporary storage.
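Steps (a) and (b) above can be sketched in Python on already-parsed glTF JSON. This is not the authors' Bash script: the function names `natural_key` and `prepare_frames` are hypothetical, the merge step (c) is left to the external gltf-transform tool, and deep copies play the role that the temporary directory plays in the shell script. One deliberate deviation is labeled in the code: the sketch sorts with a numeric-aware key, because plain lexicographic ordering preserves temporal order only when the filename indices are zero-padded.

```python
import copy
import re


def natural_key(filename: str):
    """Sort key that compares embedded integers numerically (assumption:
    a defensive variant of the lexicographic sort described in the text)."""
    return [int(tok) if tok.isdigit() else tok for tok in re.split(r"(\d+)", filename)]


def prepare_frames(frames: dict) -> list:
    """Return (filename, gltf) pairs in temporal order with unique mesh names.

    `frames` maps a filename to its parsed glTF JSON (a dict). Inputs are
    deep-copied so the originals are never modified.
    """
    prepared = []
    for index, name in enumerate(sorted(frames, key=natural_key)):
        gltf = copy.deepcopy(frames[name])
        for mesh in gltf.get("meshes", []):
            if mesh.get("name") == "mesh0":
                mesh["name"] = f"mesh{index:03d}"  # mesh000, mesh001, ...
        prepared.append((name, gltf))
    return prepared


# Hypothetical, non-zero-padded filenames to show the ordering pitfall.
example = {
    "frame_10.gltf": {"meshes": [{"name": "mesh0"}]},
    "frame_2.gltf": {"meshes": [{"name": "mesh0"}]},
    "frame_1.gltf": {"meshes": [{"name": "mesh0"}]},
}
ordered = prepare_frames(example)
```

With these example names, a plain `sorted(example)` would place `frame_10.gltf` before `frame_2.gltf`; zero-padding the indices at export time avoids the issue without any special sort key.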

This transformation into the GLB format yielded a binary representation that embedded the geometry, material, animation, and metadata in a single, compact file. Compared to loading individual GLTF assets, this consolidation reduced the filesystem’s I/O latency, improved Unity’s scene instantiation time and the CPU-GPU transfer latency, and minimized memory fragmentation during rendering. It also mitigated common issues such as duplicated object names (identifier collisions) and inconsistent asset paths, which are prevalent when files are loaded independently in Unity’s runtime environment. However, Unity’s import limit of 2 GB per asset posed a constraint. Consequently, the merged GLB files were restricted to 40 frames to stay within this boundary. The resulting GLB asset encapsulated all 40 individual time step frames, structurally arranged to support animated rendering workflows within Unity’s Scriptable Render Pipeline (SRP).
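To make the "single binary file" concrete, the sketch below writes and reads the GLB container layout defined by the glTF 2.0 specification: a 12-byte header (magic, version, total length) followed by a JSON chunk and an optional binary chunk, each aligned to 4 bytes. It is a minimal illustration, not the conversion tool used in the study, and the helper names `pack_glb` and `read_glb_header` are hypothetical.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # ASCII "glTF", little-endian
CHUNK_JSON = 0x4E4F534A  # ASCII "JSON"
CHUNK_BIN = 0x004E4942   # ASCII "BIN" followed by a zero byte


def _pad(data: bytes, fill: bytes) -> bytes:
    """Pad data to a multiple of 4 bytes, as the container requires."""
    return data + fill * (-len(data) % 4)


def pack_glb(gltf: dict, binary: bytes = b"") -> bytes:
    """Pack glTF JSON and an optional binary buffer into one GLB blob."""
    json_chunk = _pad(json.dumps(gltf, separators=(",", ":")).encode("utf-8"), b" ")
    body = struct.pack("<II", len(json_chunk), CHUNK_JSON) + json_chunk
    if binary:
        bin_chunk = _pad(binary, b"\x00")
        body += struct.pack("<II", len(bin_chunk), CHUNK_BIN) + bin_chunk
    header = struct.pack("<III", GLB_MAGIC, 2, 12 + len(body))
    return header + body


def read_glb_header(blob: bytes):
    """Return (version, total_length) after validating the magic number."""
    magic, version, length = struct.unpack_from("<III", blob, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file")
    return version, length


blob = pack_glb({"asset": {"version": "2.0"}}, b"\x01\x02\x03")
```

Because the total length is stored as an unsigned 32-bit integer, the container itself caps a file at about 4 GB; the 2 GB figure quoted above is the tighter, Unity-side constraint.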

Future research will focus on enhancing performance through dynamic streaming and modular asset loading techniques to further mitigate the effect of Unity’s 2 GB asset import limit. Dynamic streaming will allow assets to be loaded on demand, optimizing memory usage and reducing the load times, especially for larger datasets. Additionally, modular asset loading will enable selective asset loading, ensuring that only the necessary assets are loaded into memory at any given time, further improving the performance and scalability. These approaches aim to address the current limitations and maximize the potential of GLB for use in high-fidelity, resource-intensive applications. Ultimately, GLB will continue to offer substantial benefits in terms of asset management and runtime efficiency, solidifying its position as the optimal format for complex scientific visualization tasks.
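One simple form of modular asset loading is to split a long frame sequence into several assets that each respect a size budget. The sketch below groups consecutive frames greedily; it is an illustration of the idea only, with the hypothetical name `batch_frames`, and it ignores container overhead and any engine-specific accounting of asset size.

```python
def batch_frames(frame_sizes, limit_bytes):
    """Greedily group consecutive frames so each batch stays within limit_bytes.

    frame_sizes: per-frame sizes in bytes, in temporal order.
    Returns a list of batches, each a list of frame indices.
    """
    batches, current, current_size = [], [], 0
    for index, size in enumerate(frame_sizes):
        if size > limit_bytes:
            raise ValueError(f"frame {index} alone exceeds the limit")
        if current and current_size + size > limit_bytes:
            batches.append(current)          # close the batch that is full
            current, current_size = [], 0
        current.append(index)
        current_size += size
    if current:
        batches.append(current)
    return batches


# Hypothetical sizes: five equal frames against a budget of 120 units.
groups = batch_frames([50, 50, 50, 50, 50], 120)
```

Keeping frames consecutive within each batch preserves temporal order, so batches can be loaded and released one after another as playback advances.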

6. Unity Profiling Tools for Performance and Memory Optimization

This section presents an in-depth analysis and optimization procedure for improving Unity’s performance and memory usage during scientific visualization. The study employed a multi-faceted evaluation framework comprising (i) a performance assessment based on the wall thermal condition over fixed intervals, (ii) in-depth profiling with Unity’s Profiler, including runs with the incremental Garbage Collector (GC) disabled, and (iii) inspection of memory consumption trends across execution timelines in Unity’s Memory Profiler. Unity offers a comprehensive profiling ecosystem to analyze and optimize the performance of real-time applications. Two critical components in this suite are the Profile Analyzer and the Memory Profiler, which enable systematic evaluation of runtime execution behavior and memory utilization.

The Profile Analyzer operates on data captured from Unity’s native profiler and facilitates statistical analysis across multiple frames. It supports detailed inspection of CPU-intensive operations, including markers such as PlayerLoop, EditorLoop, and render pipeline stages like ExecuteRenderGraph and DoRenderLoop_Internal. By aggregating the data per frame, it computes aggregate metrics such as the mean, median, min, max, and standard deviation, allowing developers to identify execution hotspots, temporal anomalies, and performance regressions across the test conditions. This tool is highly effective for comparative analysis between build variants, platform targets, or rendering configurations.
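The per-marker statistics listed above are straightforward to reproduce on exported frame times. The sketch below is not the Profile Analyzer's implementation: the function names are hypothetical, the sample values are made up for illustration, and the use of the population standard deviation is an assumption. It also adds a crude spike test of the kind that helps locate GC-related outliers.

```python
import statistics


def summarize_marker(frame_times_ms):
    """Summary statistics for one profiler marker across captured frames."""
    return {
        "mean": statistics.fmean(frame_times_ms),
        "median": statistics.median(frame_times_ms),
        "min": min(frame_times_ms),
        "max": max(frame_times_ms),
        "stdev": statistics.pstdev(frame_times_ms),  # population form (assumption)
    }


def spike_frames(frame_times_ms, factor=2.0):
    """Indices of frames slower than factor x the median frame time."""
    threshold = factor * statistics.median(frame_times_ms)
    return [i for i, t in enumerate(frame_times_ms) if t > threshold]


# Hypothetical frame times in milliseconds, with one obvious spike.
sample = [16.0, 17.0, 16.0, 48.0, 15.0]
summary = summarize_marker(sample)
spikes = spike_frames(sample)
```

Comparing the mean with the median is a quick way to see whether a few slow frames, rather than a uniformly slow pipeline, dominate a marker's cost.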

The Memory Profiler provides a snapshot-based segmented breakdown of memory allocations within Unity’s execution environment. It differentiates between tracked memory (memory explicitly managed by Unity, including the resource files, materials, and scripts) and untracked memory (memory that is not directly visible to the engine, typically from native or external sources). The profiler also reports managed heap statistics—specifically, in-use (actively referenced memory) and reserved (pre-allocated but unused heap space) memory—as well as fragmentation and object lifecycle data from the graphics drivers, executables, and DLLs. These metrics prove to be essential for evaluating the garbage collection behavior of the incremental GC (Garbage Collector), the asset loading overhead, and memory retention issues.

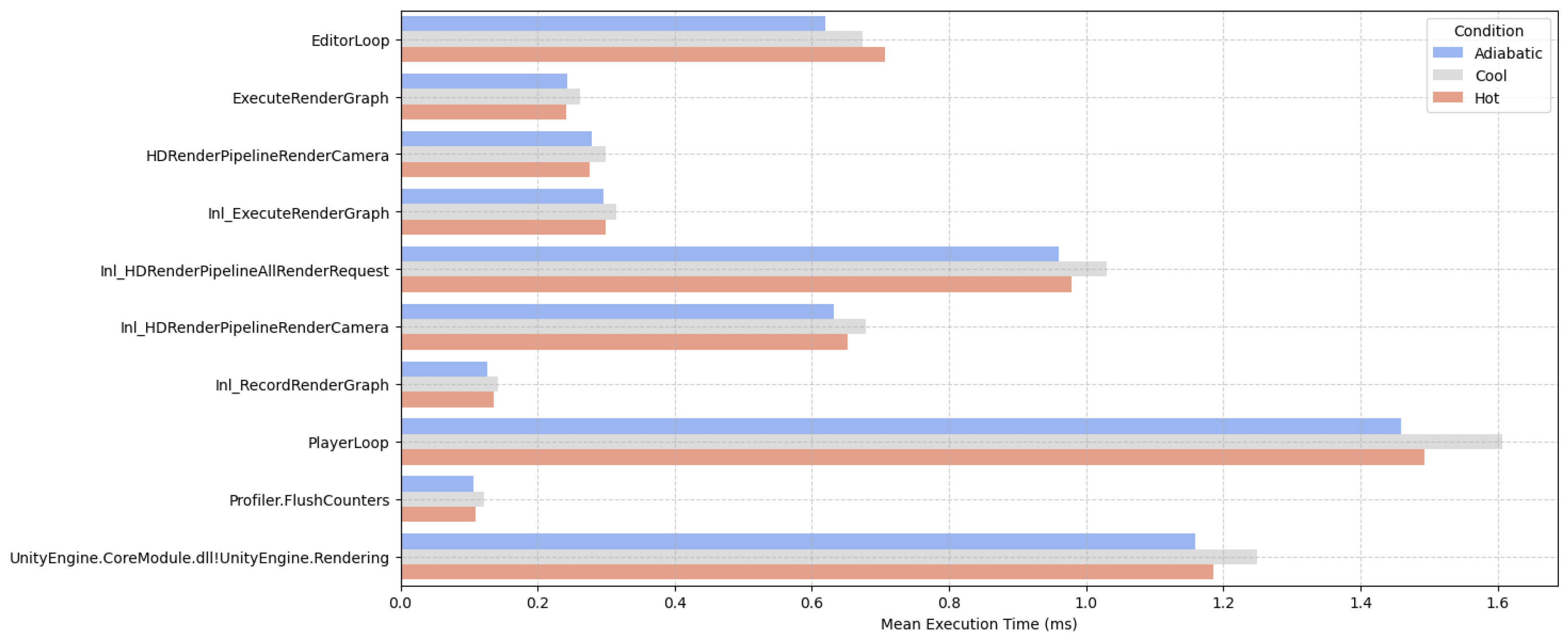

Profiling analysis conducted across multiple configurations of the proposed Unity-based scientific visualization system revealed ten markers that consistently contributed the highest computational load. Notably, PlayerLoop and EditorLoop—which represented the core execution cycles of the runtime and editor environments, respectively—demonstrated substantial resource consumption, indicating that both play a critical role in driving the animation and scene updates in the flow visualizations. Markers associated with Unity’s High Definition Render Pipeline (HDRP), including HDRenderPipelineRenderCamera, Inl_HDRenderPipelineRenderCamera, and Inl_HDRenderPipelineAllRenderRequest, reflect the rendering intensity inherent in this project, which involves high-resolution contour animations and shader-heavy visuals. Additionally, the ExecuteRenderGraph and Inl_ExecuteRenderGraph markers, responsible for coordinating render pass execution through HDRP’s Render Graph architecture, were significant contributors to the frame time, highlighting the complexity of rendering layered flow fields across multiple podiums. The presence of Inl_RecordRenderGraph further emphasizes the cost of preparing and recording these passes, particularly when rendering large GLTF/GLB assets in real time. The marker UnityEngine.CoreModule.dll!UnityEngine.Rendering underscores the low-level rendering tasks initiated by Unity’s core systems, and its high resource usage suggests that shader compilation and draw call management are non-trivial in the corresponding pipeline. Finally, Profiler.FlushCounters, though not directly part of the gameplay loop, consumed measurable resources due to the profiling process itself, particularly when capturing the data per frame. Together, these markers provide critical insights into optimization targets, especially under the memory and computational constraints imposed by the use of GLTF/GLB formats and real-time streaming of scientific data.

Secondly, enabling or disabling incremental garbage collection wherever appropriate and monitoring memory allocation using Unity’s Memory Profiler can help minimize GC-related spikes. Key optimization techniques involve minimizing memory fragmentation through proper asset loading/unloading management and leveraging object pooling to reduce the allocation frequency. Compression of textures, shaders and meshes, along with efficient handling of native code (including graphics drivers and DLLs), can significantly reduce the memory overhead. Additionally, minimizing the memory footprint of untracked allocations, such as those from external libraries or plugins, is crucial for overall optimization. To further optimize the memory usage, it is critical to monitor the memory spikes during scene transitions or asset loading and to ensure that unused objects and unmanaged memory are disposed of appropriately to prevent memory leaks. Continuous monitoring of both tracked and untracked memory using Unity’s Memory Profiler enables a reduction in GC-related performance issues. The process and outcomes of these attempts to optimize simulation execution for real-time applications are described in the following sections.
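The object pooling idea mentioned above can be illustrated with a minimal, language-neutral sketch. It is written in Python for brevity, whereas an actual Unity implementation would be in C#; the `FramePool` class and its methods are hypothetical names introduced only for illustration and are not part of the system described here. The sketch shows how reusing a small set of holder objects across 40 animation frames avoids one allocation per frame.

```python
from collections import deque


class FramePool:
    """Hypothetical pool that reuses holder objects instead of allocating per frame."""

    def __init__(self, factory, size):
        self._factory = factory
        self._free = deque(factory() for _ in range(size))
        self.created = size  # total number of objects ever allocated

    def acquire(self):
        # Reuse an idle object when one exists; allocate only if the pool is empty.
        if self._free:
            return self._free.popleft()
        self.created += 1
        return self._factory()

    def release(self, obj):
        # Return the object so that a later frame can reuse it.
        self._free.append(obj)


pool = FramePool(factory=dict, size=2)
for frame_index in range(40):      # 40 frames, as in the flow animations
    holder = pool.acquire()
    holder["frame"] = frame_index  # overwrite state rather than reallocating
    pool.release(holder)

print(pool.created)  # number of allocations made for all 40 frames
```

Because every acquired object is released before the next frame, the pool never grows beyond its initial size, which is the property that reduces allocation frequency and, in a managed runtime, garbage collection pressure.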

6.1. Thermal Wall Condition-Based Stability Evaluation: Hot, Cold, and Adiabatic

To evaluate the effect of the runtime period on the performance and resource usage, the flow simulations were run for durations of 5, 10, and 15 min. Performance metrics were recorded throughout the experiment to determine how an increase in the length of the simulation affected the execution time (in milliseconds) and system stability for both formats. The results demonstrate that the performance stabilized with an increase in the runtime, indicating the potential for long-duration simulations with predictable resource consumption when optimized correctly. This procedure highlighted the variations in memory handling and performance stability between the GLTF and GLB formats, particularly in extended simulation scenarios.

Preliminary profiling data derived from the Unity-based implementation reveal that the Hot wall flow scenario consistently showed longer execution times across the majority of key computational markers when compared to the Cold and Adiabatic boundary conditions. This empirical observation aligns with established theoretical expectations in both fluid dynamics and computational visualization. Specifically, Hot wall boundary conditions promoted increased flow isotropy and the development of finer-scale turbulent structures, which in turn generated more complex iso-contour topologies and elevated particle interaction densities (heavier frame files). Within the Unity environment, such flow characteristics manifested as an increased computational overhead, primarily due to intensified mesh deformation, a greater number of vertex manipulations, and more frequent shader evaluations executed per frame. Furthermore, the presence of thermally induced vortical structures necessitated the instantiation of denser particle fields, thereby amplifying the draw call frequency and real-time physics computation demands during animation rendering.

Such elevated complexity in the simulation and visualization directly affected performance markers such as PlayerLoop, EditorLoop, HDRenderPipelineRenderCamera, and ExecuteRenderGraph, leading to a measurable increase in the frame time and resource usage for the Hot case. The render pipeline, especially in the HDRP framework, experienced an additional computational overhead when processing complex materials and lighting conditions related to thermal plumes and gradients.

Notably, during extended runtime evaluations (e.g., 15 min continuous execution), the Cold flow case began to demonstrate computational resource utilization that was comparable to, or marginally exceeded, that of the Hot case for specific performance markers, as seen in Figure 5. This observed shift was principally attributable to the cumulative memory allocation patterns and asset management behaviors inherent to the Unity engine. Specifically, the increasing complexity of the mesh geometries and the rendering of high-resolution contour plots in the Cold scenario progressively imposed greater demands on both the memory and processing subsystems. Despite this transition, the initial observation that the Hot flow configuration incurred a higher computational load remains significant, as it provides critical insight into the influence of thermofluidic properties (e.g., elevated temperatures and intensified turbulence) on Unity’s real-time visualization efficiency.

6.2. Profiler-Based Performance Comparison: GLTF vs. GLB

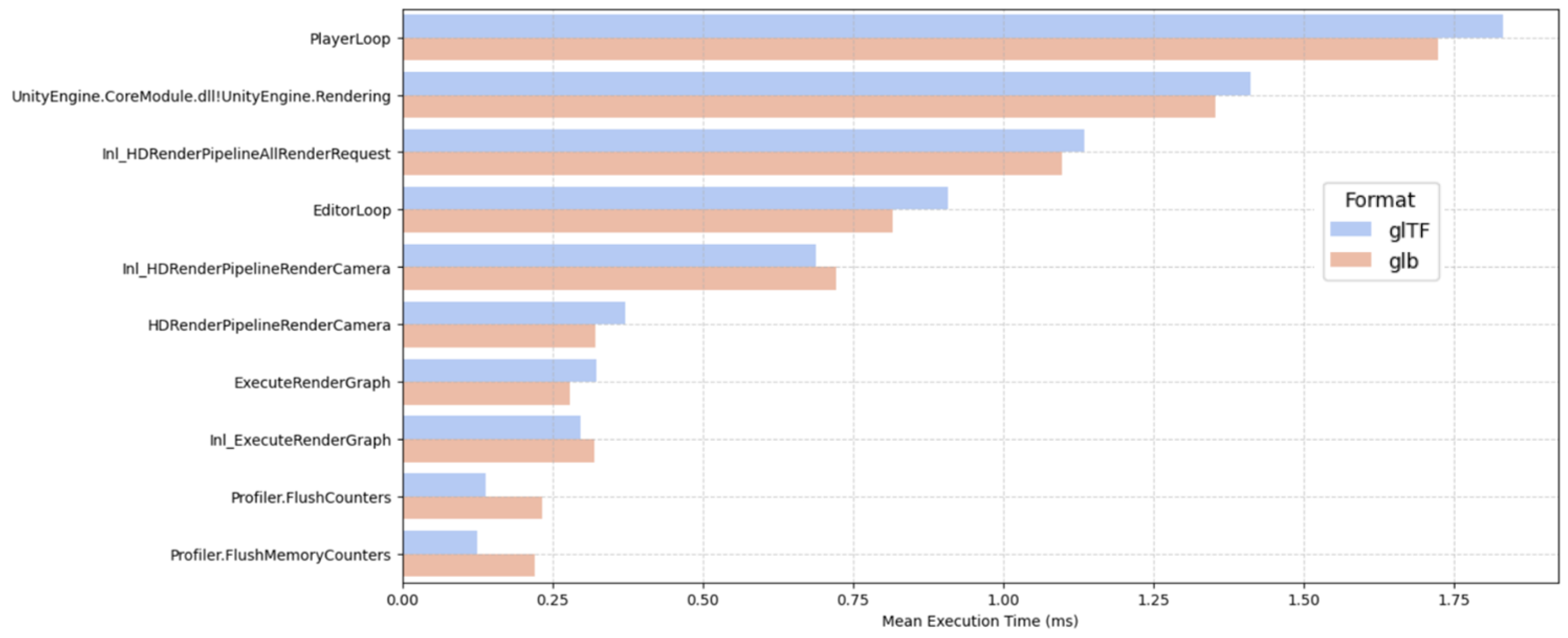

A profiler-based comparative analysis was conducted using Unity’s Profile Analyzer to evaluate the differences in the execution performance between Unity projects utilizing individual GLTF files and a merged GLB file. Each project contained 40 frames across three thermal flow cases (Cold, Hot, and Adiabatic), with the simulations executed over a 15 min interval to ensure a consistent performance environment. Profiling focused on the top 10 most resource-intensive markers, including PlayerLoop, EditorLoop, HDRenderPipelineRenderCamera, Inl_ExecuteRenderGraph, and DoRenderLoop_Internal.

Figure 6 presents the mean execution time comparison, showing that the GLTF-based project (blue) consistently demonstrated higher execution times compared to the GLB-based project (red) across critical render and update loops. The results highlight that the GLTF format incurs an additional runtime overhead, likely due to its distributed structure, which requires multiple discrete resource loading operations, along with an increase in the shader/material update costs during real-time data rendering. On the other hand, the monolithic binary structure of the GLB format allows for more streamlined memory access and faster resource decoding, resulting in lower execution times across complex rendering and simulation stages.
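To make the notion of a monolithic binary structure concrete, the following minimal sketch packs a glTF JSON document and its binary buffer into a single GLB container, following the layout defined in the glTF 2.0 specification (a 12-byte header followed by a JSON chunk and a BIN chunk, each padded to a 4-byte boundary). It is an illustration only and not the conversion pipeline used in this work; the `pack_glb` function and the tiny example asset are hypothetical.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # ASCII "glTF" in little-endian byte order
CHUNK_JSON = 0x4E4F534A  # ASCII "JSON"
CHUNK_BIN = 0x004E4942   # ASCII "BIN\0"


def _pad(data: bytes, fill: bytes) -> bytes:
    """Pad a chunk to the 4-byte boundary required by the GLB container."""
    return data + fill * (-len(data) % 4)


def pack_glb(gltf: dict, binary: bytes) -> bytes:
    """Combine a glTF JSON document and one binary buffer into GLB bytes."""
    # JSON chunks are padded with spaces, binary chunks with zero bytes.
    json_chunk = _pad(json.dumps(gltf, separators=(",", ":")).encode("utf-8"), b" ")
    bin_chunk = _pad(binary, b"\x00")
    total = 12 + 8 + len(json_chunk) + 8 + len(bin_chunk)
    return b"".join([
        struct.pack("<III", GLB_MAGIC, 2, total),         # magic, version, length
        struct.pack("<II", len(json_chunk), CHUNK_JSON),  # JSON chunk header
        json_chunk,
        struct.pack("<II", len(bin_chunk), CHUNK_BIN),    # BIN chunk header
        bin_chunk,
    ])


# Hypothetical minimal asset: a single 5-byte buffer and no meshes.
example = pack_glb(
    {"asset": {"version": "2.0"}, "buffers": [{"byteLength": 5}]},
    b"\x01\x02\x03\x04\x05",
)
print(example[:4], len(example))
```

Since the geometry payload travels in the same file as the scene description, a loader can obtain everything with one read rather than resolving several external resources, which is consistent with the lower loading overhead discussed above.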

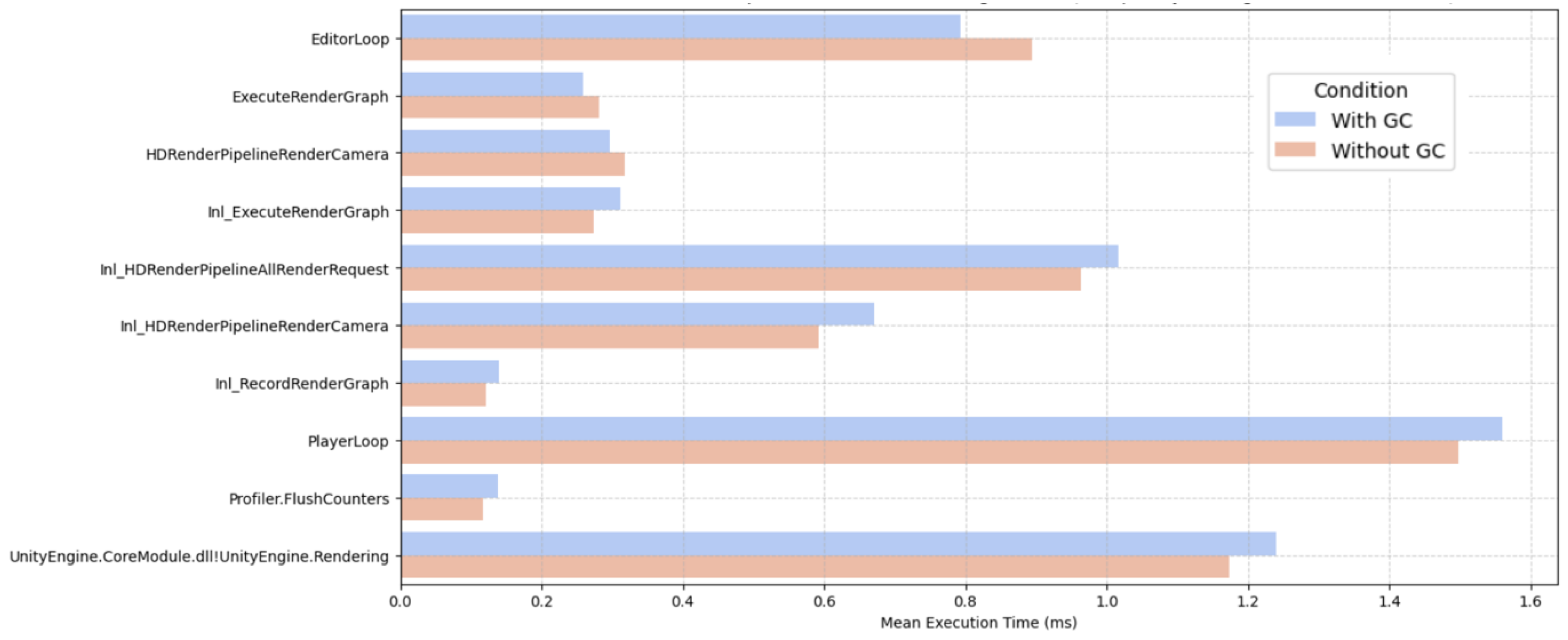

Furthermore, targeted optimizations involving Unity’s Garbage Collector (GC) configuration were evaluated to improve the memory stability during high-load simulations. Disabling the incremental GC yielded a significant decrease in the runtime memory spikes and improved the overall simulation efficiency (Figure 7), particularly during intensive flow animations. This evaluation aimed to assess the computational efficiency and determine the optimal format for large-scale scientific visualization workflows in Unity. These findings suggest that, although the GLTF format remains advantageous for modular resource management, the GLB format, combined with a disabled incremental GC, provides better real-time computational performance and runtime efficiency in scientific visualization workflows in Unity. To maximize the performance in expansive simulations, pre-processing strategies like merging individual GLTF frames into optimized GLB assets are essential, as they improve rendering stability and decrease the loading overhead without compromising any scientific detail.

6.3. Analysis of Memory Utilization with GLTF vs. GLB in Scientific Visualization

In high-fidelity scientific visualization applications such as Direct Numerical Simulation (DNS) flow animations, efficient memory management plays a pivotal role in ensuring smooth runtime performance and scalability, particularly in immersive environments like virtual and mixed reality. To evaluate the runtime performance and optimize the resource loading along with the animation, two approaches were investigated: one utilizing 40 individually loaded and animated GLTF files and the other employing 40 merged GLB files. Both configurations were evaluated using Unity’s Memory Profiler tool during the execution of animated flow visualizations, which provided quantitative insight into the distribution of memory usage between managed, tracked, and untracked segments. The goal of this experiment was to analyze the memory utilization patterns associated with each method and to determine which approach offered more optimized memory consumption for high-volume simulation data.

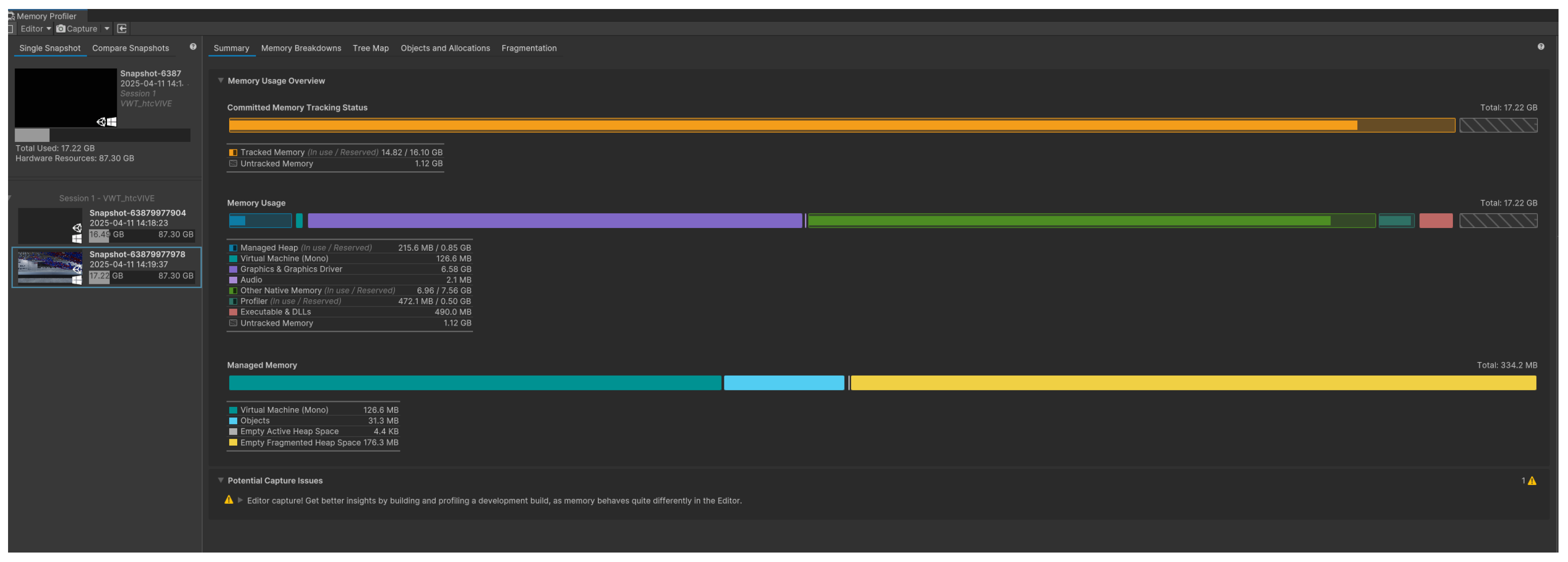

For the GLTF-based project, we recorded a total memory usage of 17.22 GB (Figure 8), with a tracked memory in use of 14.82 GB (out of 16.10 GB reserved) and untracked memory totaling 1.12 GB. In contrast, the GLB-based setup demonstrated a lower total memory usage of 15.44 GB (Figure 9), with a tracked memory in use of 14.01 GB (reserved: 14.48 GB) and an untracked memory of 0.98 GB. This notable reduction of approximately 10.3% in the total usage illustrates how binary asset consolidation in the GLB format led to more efficient memory handling, as summarized in Table 2. Additionally, the smaller gap between the reserved and in-use memory in the GLB project indicates tighter memory control, reducing fragmentation and improving predictability.
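The percentages quoted above follow directly from the reported Memory Profiler values. The short sketch below reproduces the arithmetic for the total reduction and for the gap between reserved and in-use tracked memory; the dictionary layout and function names are illustrative choices, while the numbers are taken from the text.

```python
# Values in GB, copied from the Memory Profiler results reported above.
profiles = {
    "GLTF": {"total": 17.22, "tracked_in_use": 14.82, "tracked_reserved": 16.10, "untracked": 1.12},
    "GLB": {"total": 15.44, "tracked_in_use": 14.01, "tracked_reserved": 14.48, "untracked": 0.98},
}


def relative_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100.0


def reserved_gap(profile: dict) -> float:
    """Tracked memory that is reserved but not currently in use, in GB."""
    return profile["tracked_reserved"] - profile["tracked_in_use"]


total_reduction = relative_reduction(profiles["GLTF"]["total"], profiles["GLB"]["total"])
gaps = {name: round(reserved_gap(p), 2) for name, p in profiles.items()}

print(f"Total memory reduction: {total_reduction:.1f}%")  # 10.3%
print(gaps)  # {'GLTF': 1.28, 'GLB': 0.47}
```

The reserved-but-unused tracked memory therefore drops from about 1.28 GB in the GLTF project to about 0.47 GB in the GLB project, which quantifies the tighter memory control noted above.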

A significant difference was also observed in the managed heap usage, which directly related to the .NET runtime’s memory allocation. The GLTF version used 215.6 MB of the heap (reserved: 850 MB), while the GLB version used only 93.7 MB (reserved: 124.8 MB). This stark contrast implies a higher garbage collection overhead and frequent allocations in the GLTF approach, likely due to the repetitive instantiation of metadata such as GameObjects, materials, and meshes. In contrast, the GLB strategy minimized these allocations by embedding all the assets into a single reusable structure, reducing the load on the Garbage Collector, and improving the runtime consistency.
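As a worked check on the managed heap figures, the sketch below computes how much of the reserved heap was actually in use for each format and the relative reduction achieved by the GLB version. The variable names are illustrative; the input values are those reported above.

```python
# Managed heap values in MB as reported above: (in use, reserved).
heaps_mb = {"GLTF": (215.6, 850.0), "GLB": (93.7, 124.8)}

# Share of the reserved managed heap that is actively referenced.
utilization = {name: round(used / reserved * 100, 1) for name, (used, reserved) in heaps_mb.items()}

gltf_used, gltf_reserved = heaps_mb["GLTF"]
glb_used, glb_reserved = heaps_mb["GLB"]
in_use_reduction = round((gltf_used - glb_used) / gltf_used * 100, 1)
reserved_reduction = round((gltf_reserved - glb_reserved) / gltf_reserved * 100, 1)

print(utilization)         # {'GLTF': 25.4, 'GLB': 75.1}
print(in_use_reduction)    # 56.5
print(reserved_reduction)  # 85.3
```

Under these numbers, the GLTF project keeps roughly three quarters of its reserved managed heap idle, whereas the GLB project uses about three quarters of a much smaller reservation, which is consistent with the lower garbage collection pressure described above.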

Ultimately, the memory profiling results clearly establish the advantages of using merged GLB files over individually loaded GLTF assets in Unity-based DNS visualization projects as a more memory-efficient solution for large-scale, frame-by-frame scientific animations. The GLB approach offers a more compact and controlled memory profile, with lower total memory consumption, reduced untracked allocations, and a significantly smaller managed heap footprint. These characteristics are particularly beneficial in extended reality (XR) applications, where the performance constraints are tighter and the memory efficiency directly impacts the user experience. Consequently, adopting a GLB-based asset pipeline is recommended for producing scalable and immersive scientific visualizations in Unity.

In the performance comparison between the GLTF and GLB formats, targeted memory optimization played a critical role in reducing the runtime variability and enhancing stability. Unity’s Memory Profiler revealed that GLB files, by encapsulating the geometry, textures, and animations in a compact binary format, significantly reduced managed heap fragmentation compared to individually loaded GLTF assets. This consolidation minimized the per-frame memory allocations and improved the asset instantiation efficiency during the runtime. A key technique applicable to GLB-based workflows is aggressive texture and mesh compression, which further reduces the memory footprint without sacrificing visual fidelity. The unified nature of GLB also reduces the memory overhead associated with redundant asset references and inconsistent loading paths, commonly observed in GLTF workflows. Overall, these improvements aim to reduce the frame time variability and support the measured validation of runtime performance, memory usage, and rendering efficiency in complex immersive environments, ensuring scalable performance for large-scale scientific visualizations in Unity.

7. Remote Visualization Using Microsoft Azure

A major breakthrough in the remote visualization of Direct Numerical Simulation (DNS) data, especially in fluid dynamics research, has been made using a combination of Microsoft Azure’s cloud computing capabilities with the HoloLens Gen. 2 augmented reality (AR) platform. Using Azure’s powerful computing capacity, this system processes and renders high-resolution DNSs in real time. The HoloLens 2 receives the streamed images for immersive visualization. The outcomes of using this configuration have shown a number of important benefits. First, supersonic spatially developing turbulent boundary layers (SDTBLs) can be seen in previously unheard-of detail because of the Virtual Machine (VM) powered by Azure, which effectively manages the processing needs of DNSs. Users have the opportunity to interact with the data in a completely immersive augmented reality environment using the HoloLens 2, where the simulations, produced using Unity, are smoothly delivered. This facilitates the intuitive investigation of intricate fluid flow patterns, leading to a more profound comprehension of the fundamental physical phenomena. By offloading the computationally intensive tasks to Azure, a level of detail and interactivity in the DNSs that would be impossible on standalone devices is achieved. The HoloLens 2 provides an intuitive interface for exploring the simulation data, allowing users to walk through and interact with complex fluid structures in a way that traditional 2D monitors or VR headsets cannot match. This capability opens up new avenues for research and analysis in CFD. Researchers can now visualize and manipulate large-scale simulations in real time, gaining insights that were previously hidden in dense numerical data. The AR environment provided by HoloLens 2 offers a more natural and engaging way to interact with these simulations, leading to faster and more informed decision-making processes.

Connecting Azure to HoloLens 2

The process of connecting Azure to HoloLens 2 involved several critical steps, beginning with setting up a Virtual Machine (VM) on Azure. This VM was configured with the necessary computational resources to handle the rendering of DNSs. Following the configuration of the Virtual Machine and installation of Unity, Azure’s remote visualization capabilities were employed to stream the rendered output to the HoloLens 2 device. This connection was established using a combination of Remote Desktop Protocol (RDP) and Unity’s Universal Windows Platform (UWP) build settings, which allowed us to deploy the Unity project directly on HoloLens 2. Enabling Developer Mode and configuring the Device Portal on the HoloLens 2 facilitated seamless communication between the Azure Virtual Machine and the augmented reality headset. The result was a highly responsive and immersive visualization of fluid dynamics, viewed directly through the HoloLens 2.

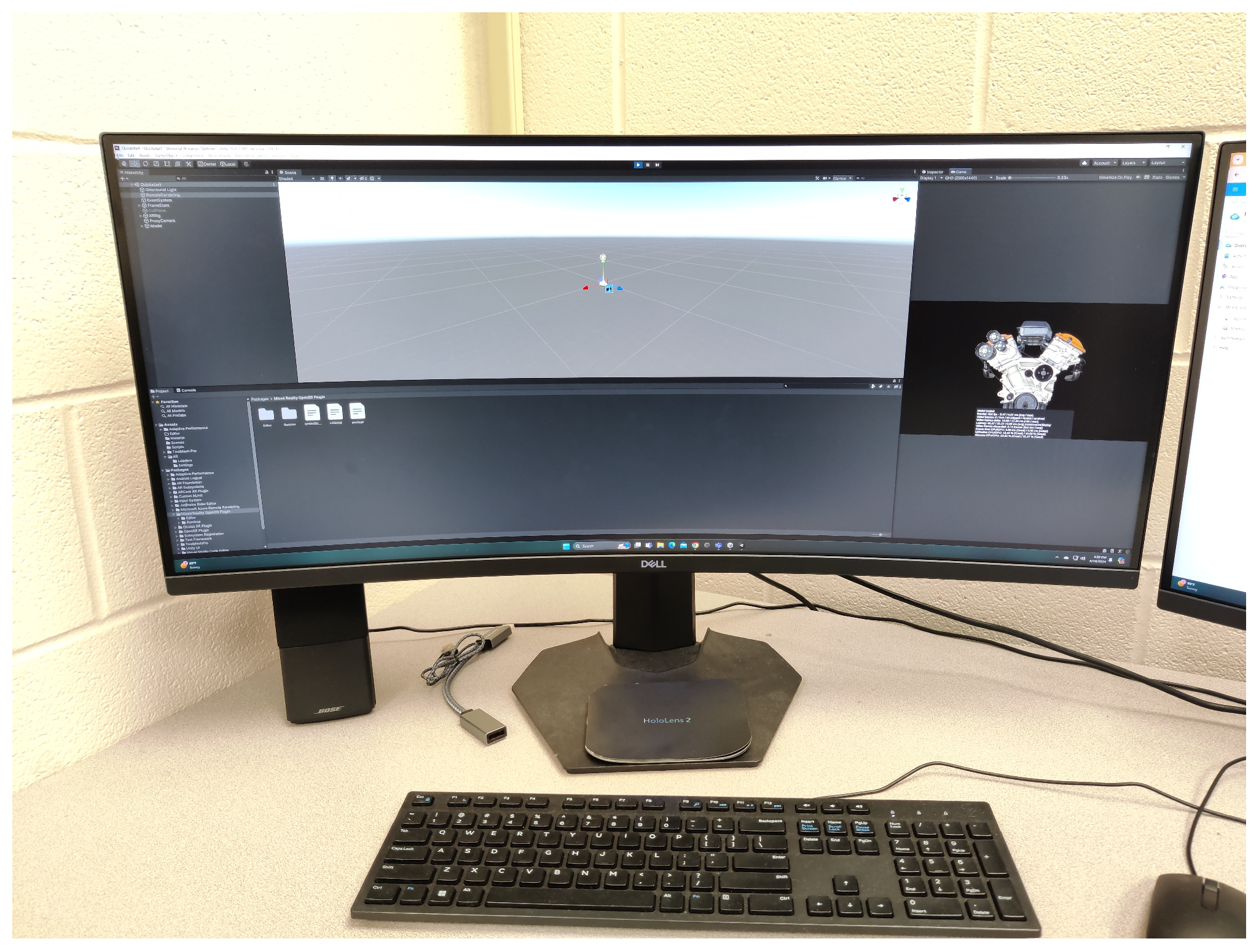

To illustrate the application of AR using Azure Remote Rendering, we connected it to the HoloLens 2 through a series of steps that involved specific software and hardware configurations. The workflow was performed on a high-performance Dell (TX, USA) Alienware PC with a 13th Gen Intel® Core™ i9 processor, 64 GB of RAM, and an NVIDIA GeForce RTX 4090 GPU, running Windows 11 Home, as seen in Figure 10. This PC served as the primary development environment, ensuring sufficient computational power for the rendering and processing tasks.

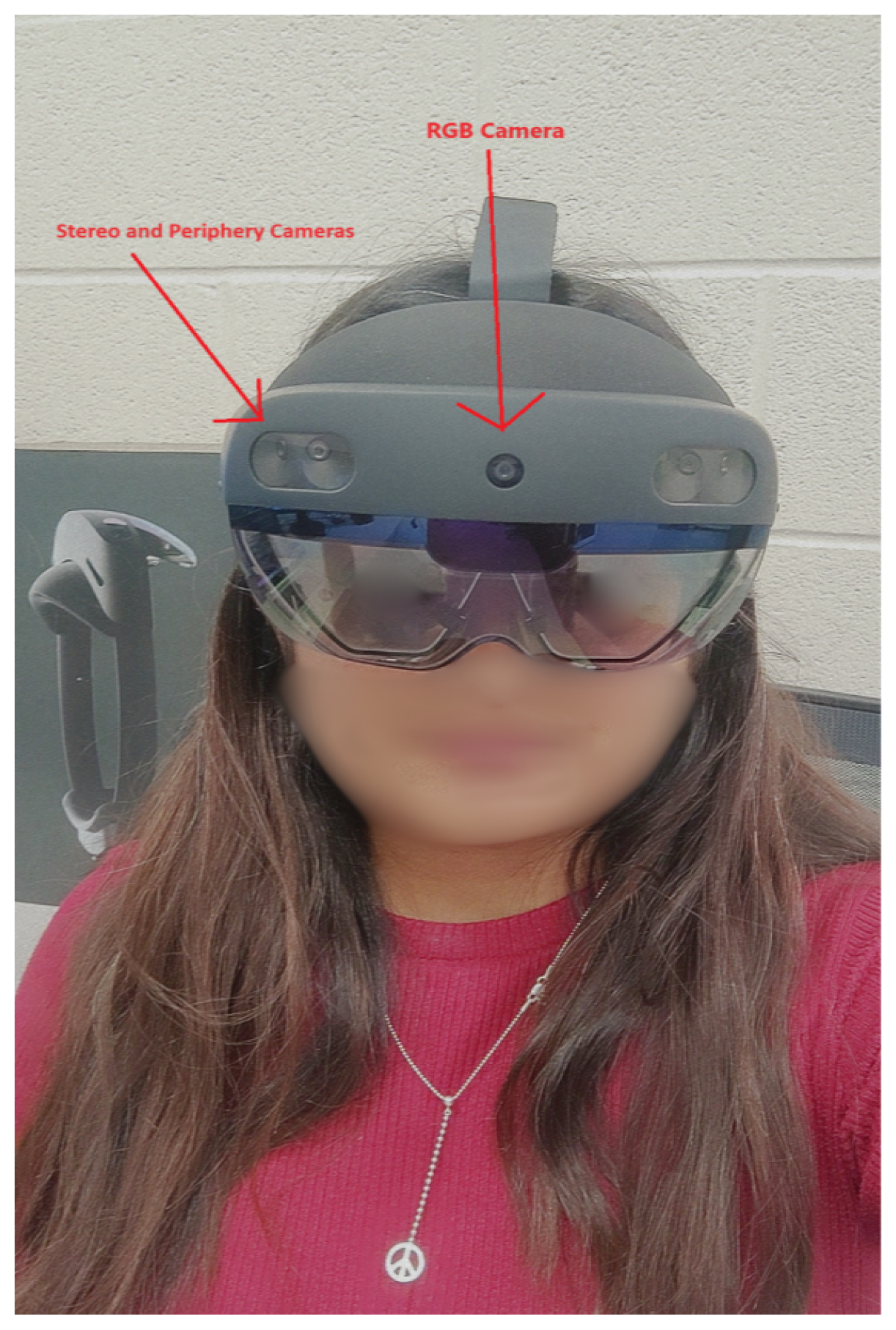

The Azure Remote Rendering service was initially configured using the Azure portal on the development workstation. A new rendering account was created, and key credentials, such as the account key and domain, were generated for secure communication between the client and Azure’s cloud services. The HoloLens 2 headset (Figure 11), equipped with a Qualcomm Snapdragon 850 processor, 4 GB of RAM, a second-generation custom-built holographic processing unit, and 64 GB of storage, was connected to a stable 5 GHz Wi-Fi network to facilitate seamless data streaming.

We employed Unity 2021.3 LTS to develop the remote rendering application, incorporating the Azure Remote Rendering SDK and the Mixed Reality Toolkit (MRTK). The Unity project was specifically configured to support an ARM64 architecture, crucial for optimal performance on the HoloLens 2. Complex 3D models, which were optimized in formats like the GLTF, were prepared for remote rendering, ensuring high-fidelity visualization.

For code optimization and project management, Visual Studio was used extensively. After configuring Unity, the project was opened in Visual Studio by navigating to Build Settings under the File menu in Unity and switching the platform to Universal Windows Platform, which generated a solution file. In Visual Studio, the configuration was set to Release and ARM64, and the debugger mode was set to Remote Machine. Specific settings were fine-tuned, including entering the IP address or device name of the HoloLens as the Machine Name in the debugger settings and ensuring the HoloLens was connected via USB to the PC. Finally, the project was built and deployed directly from Visual Studio to the HoloLens 2 using the debugger (F5), enabling real-time testing and validation of the application (Figure 12).

This meticulous setup provided a robust and responsive mixed-reality experience, with Azure’s cloud resources effectively handling the rendering load, thereby overcoming the hardware limitations of the HoloLens 2. The use of Visual Studio for optimization and deployment ensured that the application was both well structured and stable, providing a high-quality, immersive experience (Figure 13), which is crucial for complex data visualization tasks.

Another achievement is the scalability of the system. By leveraging Azure’s scalable cloud architecture, the simulations’ complexity can be increased without requiring the user to change their hardware. This feature not only improves the accessibility of high-level simulations but also ensures that the system can adapt to future research demands, allowing for the development of increasingly complex and computationally demanding models. In addition, the integration with the HoloLens 2 opens up new options for collaboration, research, and teaching. Thanks to the AR interface, several people can view and work with the same simulation data in real time, no matter where in the world they are located. This feature is especially helpful in collaborative academic environments, where team members can engage with and discuss intricate simulations as though they were in the same room. To sum up, the deployment of the HoloLens 2 and Azure for remote DNS visualization represents a major advancement in computational fluid dynamics research. This method not only increases the efficacy and efficiency of data visualization but also paves the way for further advancements in cloud-based augmented reality applications. Such systems are projected to play a critical role in advancing the study of fluid dynamics and other complex scientific domains.