1. Introduction

Wireless sensor networks (WSNs) are deployed in various environments and have applications in environmental monitoring, healthcare, manufacturing, and the military. WSNs consist of numerous small nodes that collaborate to monitor their environment and collect data such as temperature, humidity, pressure, and motion. Each node typically comprises one or more sensors, a microcontroller, a battery, and a transceiver, all designed to operate in an energy-efficient manner. Energy conservation is therefore one of the most pressing issues in WSNs, because the network’s lifetime must be extended while reliable data transmission is maintained. The Hybrid Energy-Efficient Distributed (HEED) clustering protocol is one protocol used in WSNs for this purpose [1].

In clustering protocols, nodes are grouped into small clusters, each headed by one cluster head (CH). The CHs act as intermediate nodes between the sensor nodes and the base station (BS). Each node senses data from the environment and forwards it to its CH instead of communicating directly with the BS. After receiving the data packets from the nodes in its cluster, each CH combines them into meaningful information by applying operations such as aggregation and fusion [2]. Each CH then forwards its data packet to the BS using multi-hop or direct communication. This clustering approach reduces unnecessary energy dissipation at individual nodes, reduces the number of data packets forwarded toward the BS, and thus extends the WSN’s lifetime. Selecting CHs, determining the number of CHs and the cluster size, load balancing, cluster maintenance, and data routing are the main challenges of energy-efficient clustering protocols, because these factors are crucial for the network’s overall performance.

Reliable data transmission is crucial in WSNs, especially for applications that require continuous and accurate monitoring, such as natural disaster warning systems and infrastructure health monitoring [3]. In these scenarios, data from all sensor nodes must be reliably transmitted to the base station over extended periods. Achieving this requires communication protocols that optimise network performance and support effective decision-making. One of the primary goals is to maximise network longevity, typically measured as the time until the first node depletes its energy and ceases to function. However, many existing clustering protocols are designed for homogeneous WSNs, where all nodes start with the same initial energy, which may limit their effectiveness in more diverse or demanding environments. Moreover, this situation changes over the network lifetime [4]: homogeneous WSNs evolve into heterogeneous WSNs because some nodes dissipate more energy than others, owing to their communication requirements, random external events, or the geographical shape of the deployment. Clustering protocols must therefore operate in both homogeneous and heterogeneous WSNs, and a good protocol must handle heterogeneity in order to use energy efficiently [5,6].

Among different clustering algorithms, the HEED protocol stands out as a widely studied and practical approach. HEED is a distributed, energy-efficient clustering protocol that periodically selects cluster heads based on a hybrid combination of node residual energy and a secondary parameter, such as node proximity or communication cost. By balancing energy consumption across the network and minimising communication overhead, HEED significantly extends the operational lifetime of WSNs while maintaining high data delivery reliability [7].

The study and advancement of protocols such as HEED are crucial for the continued evolution of WSNs, enabling their deployment in a wide range of applications, including environmental monitoring, industrial automation, healthcare, and military surveillance. Traditional clustering protocols such as HEED use simple, rule-based heuristics for cluster head selection; these are effective in small or homogeneous networks but often fail to capture the complex, dynamic relationships present in real-world WSNs. As WSNs grow in size and heterogeneity, optimal cluster head selection becomes a high-dimensional, non-linear problem. Deep learning, and Graph Neural Networks (GNNs) in particular, is well suited to this challenge: GNNs can model the relational structure of sensor networks, integrate multiple node and network features, and adapt to changing network conditions, enabling more context-aware, adaptive, and energy-efficient clustering decisions. Deep learning is therefore chosen as a natural and powerful tool to enhance the performance of WSNs. The proposed deep learning-based protocol, DL-HEED, can significantly extend the network lifetime by optimising energy usage, which is critical for applications where battery replacement is impractical, and the simulation results in Section 6 show that it substantially outperforms conventional HEED, particularly as the network size increases.

This paper addresses the limitations of existing HEED-based protocols by introducing DL-HEED, a novel approach that leverages a Graph Neural Network (GNN) for CH selection. Despite the progress made by traditional clustering protocols such as HEED, their heuristic-based approaches are inherently limited in capturing the complex, dynamic, and relational patterns present in large-scale, heterogeneous WSNs. The key innovation of this work is a deep learning-based clustering protocol that, for the first time, employs a GNN to select cluster heads intelligently and adaptively. Unlike the previous ML-HEED and I-HEED protocols, DL-HEED leverages the full graph structure of the network and integrates multiple node and network features, enabling context-aware, data-driven decision-making that improves energy efficiency and prolongs the network lifetime. The key novelties of our approach are (i) the use of a GNN for CH selection, (ii) a feature set that incorporates residual energy, node degree, spatial position, and signal strength, and (iii) demonstrated scalability and robustness in large-scale WSNs and diverse deployment scenarios. By bridging the gap between advanced deep learning techniques and practical WSN management, this work sets a new direction for intelligent, energy-efficient IoT systems.

This paper is organised as follows:

Section 2 reviews related work on the HEED protocol and energy efficiency in WSNs.

Section 3 describes deep learning in WSNs.

Section 4 presents the principles of the HEED protocol.

Section 5 shows the proposed approach, the DL-HEED protocol, including its modular architecture and key components.

Section 6 presents the simulation results and performance analysis.

Section 7 discusses the results, and

Section 8 concludes the paper with a summary of findings and future research directions.

2. Related Work

A study on extending the lifetime of WSNs [7] proposed an improved version of the HEED protocol, Heterogeneous Optimised-HEED (Hetero-OHEED), for WSNs whose nodes have different initial energies. The protocol was evaluated under 1-level, 2-level, 3-level, and multi-level heterogeneity, providing a comprehensive analysis. The proposed protocols significantly improve the stability region and the network lifetime, which makes them suitable for critical applications, such as military and environmental monitoring, where stability and a long network life are required. Simulation results show that Hetero-OHEED addresses the energy, load-balancing, and lifetime problems of WSNs, making it a significant contribution to the field.

A hybrid approach to the HEED protocol was proposed in [8]. The study notes that WSNs are used in IoT and drone-based surveillance applications. It surveys several HEED-based protocols, providing an overview of the current state of research in this area, discusses their advantages and disadvantages, and proposes two protocols, Rotated Unequal-HEED and Energy-Based Rotated HEED, which aim to resolve the energy hole problem and provide a more stable network. The paper also evaluates the performance of other HEED-based protocols, identifying the conditions under which each is appropriate, which is crucial for making informed design decisions.

A bacterial foraging optimisation algorithm and a fuzzy logic system were used to improve the HEED protocol [9]. The study introduced new HEED variants, HEED1TC, HEED2TC, ICHB, and ICFLOH, which address HEED’s shortcomings: uneven energy consumption, repeated CH re-election, and hot spots. These protocols use chain-based communication within clusters together with intelligent CH selection, which yields load balancing, reduced energy consumption, and an extended network lifetime. Test results showed significant performance improvements over the original HEED protocol, indicating that these extensively tested variants are highly efficient for energy conservation in WSNs.

The ML-HEED approach [10] improves multi-hop routing for WSNs. It uses the HEED protocol to form clusters, and the elected CHs are then used to build multi-hop routes. The study presents a new CH selection algorithm based on residual energy and distance to the base station, which saves energy and prolongs the network lifetime, as demonstrated through simulations.

HEED-SQUARE [11] is an enhanced version of the HEED protocol for WSNs. It selects CHs with minimum cost and maximum residual energy, and then builds a routing topology from the remote nodes toward the base station. In simulation comparisons, it outperformed MR-LEACH in energy efficiency and HEED-MIN in scalability (the number of nodes it can handle), resulting in better network longevity.

Underwater wireless sensor networks (UWSNs) face many challenges [12], and limited battery power is a major one: batteries cannot be replaced, which directly limits the network’s lifetime. To address this problem, the researchers proposed a protocol named HEED-PSO and evaluated it through simulations, which showed that it extends the network’s lifetime.

An approach based on improved black widow optimisation and fuzzy logic was proposed in [13]. The study presented an energy-efficient clustering protocol for WSNs, called BMBWFL-HEED, that integrates an improved Black Widow Optimisation (BWO) algorithm with fuzzy logic. It addresses common challenges in WSN clustering, such as high computational complexity, inefficient CH selection, excessive energy consumption, and poor scalability. The protocol enhances the mutation phase of the BWO algorithm using a direction average strategy and uses fuzzy logic to select optimal CHs based on residual energy. Experimental comparisons with protocols such as ICFL-HEED, HEED, and ICHB-HEED show that BMBWFL-HEED achieves superior energy efficiency, more balanced CH formation, and better adaptability in both homogeneous and heterogeneous environments. This work highlights the effectiveness of combining metaheuristic optimisation and fuzzy logic for robust, energy-aware WSN clustering.

A study proposed a multilevel HEED protocol [14] that introduces hierarchical clustering, in which multiple levels of CHs are formed to optimise communication between nodes and the base station. This hierarchical approach aims to reduce energy consumption and extend the network lifetime by minimising the distance over which data must be transmitted at each level. Simulations comparing the multilevel HEED protocol with the standard HEED protocol show that the multilevel approach significantly improves energy efficiency and network longevity, especially in large-scale WSN deployments. This work underscores the benefits of hierarchical clustering strategies for scalable, energy-aware WSN management.

An Intelligent HEED (I-HEED) algorithm [15] was designed to optimise energy consumption in heterogeneous WSNs. I-HEED enhances the traditional HEED protocol by incorporating intelligent decision-making mechanisms for CH selection that consider node energy levels and network heterogeneity. Through simulation experiments, the authors demonstrate that I-HEED achieves better energy efficiency, a longer network lifetime, and better overall network performance than the standard HEED protocol. The results highlight the effectiveness of integrating intelligent strategies into clustering protocols for energy optimisation in heterogeneous WSN environments.

3. Deep Learning in WSNs

Deep learning techniques have shown promise in various WSN applications, such as anomaly detection and data aggregation. However, most conventional architectures (e.g., CNNs and RNNs) are not well suited to modelling the relational structure of sensor networks. In contrast, Graph Neural Networks (GNNs) can capture both node features and network topology, making them well suited to tasks such as cluster head selection. This work therefore adopts a GNN-based approach to enhance the HEED protocol [16,17,18]. Deep learning algorithms form a subset of machine learning that uses artificial neural networks with multiple layers. Various architectures have been developed for specific data modalities and problem domains. The following overview presents six principal types of deep learning models, arranged approximately in the chronological order of their development; each subsequent architecture was introduced to address limitations identified in its predecessors [19].

3.1. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are used primarily for image classification and computer vision. They detect features and patterns in images and videos, which makes them suitable for object detection, image recognition, and facial recognition. CNNs leverage linear algebra operations, particularly convolution and matrix multiplication, to extract hierarchical features from input data [20]. A typical CNN architecture comprises an input layer, multiple hidden layers (convolutional and pooling layers), and an output layer. Each neuron (node) in these layers is associated with weights and a threshold (bias). CNNs are distinguished by three primary layer types: convolutional layers (for feature extraction), pooling layers (for dimensionality reduction), and fully connected layers (for classification or regression). In complex applications, CNNs may consist of hundreds or thousands of layers, with each successive layer capturing increasingly abstract features: early layers detect simple patterns, such as edges and colours, while deeper layers identify complex structures and objects. The principal advantage of CNNs is their ability to learn relevant features automatically from high-dimensional data, removing the need for manual feature engineering. This has made CNNs the architecture of choice for image and audio processing tasks [20]. However, CNNs are computationally intensive, often requiring specialised hardware (e.g., GPUs) and expert knowledge for configuration and hyperparameter tuning.
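To make the convolution and pooling operations above concrete, here is a minimal pure-Python sketch (not taken from the paper): a hand-picked 3×3 vertical-edge kernel, which in a real CNN would be learned, is slid over a toy 6×6 image, followed by a ReLU and 2×2 max pooling. As in most deep learning libraries, the "convolution" is computed as cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D convolution as used in CNN layers
    (technically cross-correlation: the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap):
    """Element-wise non-linearity applied after the convolution."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool_2x2(fmap):
    """Non-overlapping 2x2 max pooling (halves each spatial dimension)."""
    return [
        [
            max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
            for c in range(0, len(fmap[0]) - 1, 2)
        ]
        for r in range(0, len(fmap) - 1, 2)
    ]


# 6x6 toy image: dark left half (0), bright right half (1), i.e. one vertical edge.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-picked vertical-edge kernel; in a trained CNN these weights are learned.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(conv2d(image, kernel))  # 4x4: strong response where the edge is
pooled = max_pool_2x2(feature_map)         # 2x2: reduced spatial resolution
print(feature_map)
print(pooled)
```

The feature map responds only in the columns that straddle the edge, which is the kind of low-level pattern the early layers of a CNN detect.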

3.2. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are designed to process sequential or temporal data, making them well suited to natural language processing (NLP) applications, speech recognition, and time-series forecasting. The defining characteristic of RNNs is the presence of feedback loops, which enable the network to retain information from previous inputs and use this “memory” to inform current predictions [21,22]. Unlike feedforward neural networks, RNNs share parameters across time steps and employ algorithms such as backpropagation through time (BPTT) to update weights based on the cumulative error across sequences. This architecture allows RNNs to model dependencies within sequences, supporting tasks such as language translation, speech-to-text, and image captioning. Variants such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) have been developed to address the vanishing and exploding gradient problems inherent in standard RNNs. These issues arise when gradients become excessively small or large during training, impeding effective learning. While RNNs can model complex temporal dependencies, they are often computationally demanding, require extensive training time, and can be challenging to optimise for large-scale datasets [22].
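The feedback loop, parameter sharing, and vanishing-gradient issue can be illustrated with a scalar RNN. The following sketch uses arbitrary, untrained parameters (w_x, w_h and b are illustrative assumptions) and also computes the derivative of the last hidden state with respect to the first, the quantity that backpropagation through time builds up as a product of per-step factors.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Scalar Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    The same parameters (w_x, w_h, b) are shared across all time steps.
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # feedback loop: h depends on the previous h
        states.append(h)
    return states


def grad_last_wrt_first(states, w_h):
    """d h_T / d h_1, as backpropagation through time computes it for this RNN.

    Each step contributes a factor w_h * (1 - h_t^2); when |w_h| < 1 the product
    shrinks geometrically with sequence length (the vanishing-gradient problem).
    """
    grad = 1.0
    for h in states[1:]:
        grad *= w_h * (1.0 - h * h)
    return grad


# Illustrative, untrained parameters: a single input pulse followed by zeros.
states = rnn_forward([1.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(h, 4) for h in states])        # the pulse is "remembered", but fades
print(grad_last_wrt_first(states, w_h=0.5))
```

LSTMs and GRUs replace the plain tanh update with gated updates so that this product does not collapse as quickly over long sequences.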

3.3. Autoencoders and Variational Autoencoders (VAEs)

Autoencoders are unsupervised neural network architectures for dimensionality reduction, feature learning, and data reconstruction. They consist of two main components: an encoder, which compresses input data into a latent representation, and a decoder, which reconstructs the original data from this representation [23]. Autoencoders have been widely used for denoising, anomaly detection, and data compression. Variational Autoencoders (VAEs) extend the basic autoencoder framework with a probabilistic latent space representation, which enables unsupervised learning of the data distribution and the generation of novel data samples [24].
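The probabilistic latent space of a VAE is usually implemented with two pieces: the reparameterisation trick for sampling and a KL-divergence term that pulls the latent distribution toward a standard normal prior. This is a minimal sketch of both, assuming a diagonal Gaussian encoder; the encoder and decoder networks themselves are omitted, and the mu and log_var values are hypothetical.

```python
import math
import random


def reparameterise(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (the VAE reparameterisation trick).

    Writing the sample this way keeps z a differentiable function of the encoder
    outputs mu and log_var, so the encoder can be trained by backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)): the term that regularises the latent space."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


# Hypothetical encoder outputs for one input (a 2-D latent space).
mu, log_var = [0.5, -1.0], [0.0, 0.0]
rng = random.Random(0)
z = reparameterise(mu, log_var, rng)      # latent code passed to the decoder
print(z, kl_to_standard_normal(mu, log_var))

# Generating new data: draw z from the prior N(0, I) and feed it to a trained decoder.
z_new = [rng.gauss(0.0, 1.0) for _ in range(2)]
```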

3.4. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are generative models comprising two neural networks, a generator and a discriminator, which are trained in opposition. The generator synthesises data samples to resemble the training data, while the discriminator evaluates whether samples are real or generated [25]. This adversarial process drives the generator to produce increasingly realistic outputs. GANs have demonstrated remarkable success in generating high-fidelity images, videos, and audio, and they have been instrumental in advancing the field of generative artificial intelligence. The primary advantage of GANs is their ability to produce outputs that are often indistinguishable from real data. However, training GANs can be unstable and resource-intensive, with challenges such as mode collapse (where the generator produces a limited variety of outputs) and the need for large datasets.
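The adversarial training described above is driven by two opposing losses. This sketch computes them from a batch of discriminator outputs, using binary cross-entropy for the discriminator and the common non-saturating form of the generator loss; the networks themselves and the probability values are illustrative assumptions.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: push D(x) -> 1 on real data and D(G(z)) -> 0 on fakes.

    d_real / d_fake are the discriminator's output probabilities on a batch.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D(G(z)) -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# When D cannot tell real from fake it outputs 0.5 everywhere.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]), generator_loss([0.5, 0.5]))
# A discriminator that separates real from fake well has a low loss,
# while the generator it is not fooling has a high loss.
print(discriminator_loss([0.9], [0.1]), generator_loss([0.1]))
```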

3.5. Diffusion Models

Diffusion models are a recent class of generative models that synthesise data by iteratively adding and then removing noise from training samples. During training, Gaussian noise is progressively added to data until it becomes unrecognisable; the model then learns to reverse this process, reconstructing data from noise. Diffusion models are notable for their stability during training and their ability to generate high-quality samples without adversarial training, and they are less susceptible than GANs to issues such as mode collapse [20,21,22,23,24,25,26]. However, diffusion models typically require substantial computational resources and fine-tuning. Security concerns have also been identified, such as the potential for adversarial manipulation via hidden backdoors.
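The forward (noising) half of a diffusion model has a simple closed form. The sketch below assumes a DDPM-style linear variance schedule (T = 1000, β from 1e-4 to 0.02, an illustrative choice) and shows how a sample is mixed with Gaussian noise at step t; the learned reverse (denoising) network is not shown.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T increasing linearly (assumed schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod_{s <= t} (1 - beta_s): the fraction of signal left after t steps."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Forward (noising) process in closed form:
    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps,  eps ~ N(0, I)."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]


alpha_bars = cumulative_alphas(linear_beta_schedule())
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]                        # a toy "data sample"
x_early = q_sample(x0, 10, alpha_bars, rng)  # still close to x0
x_late = q_sample(x0, 999, alpha_bars, rng)  # essentially pure Gaussian noise
```

A trained model learns to predict the added noise at each step, which is what allows it to run this process in reverse, starting from pure noise.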

3.6. Transformer Models

Transformer models represent a paradigm shift in deep learning, particularly for natural language processing. They employ an encoder–decoder architecture and use self-attention mechanisms to process input sequences in parallel, rather than sequentially as in RNNs [20,21,22,23,24,25,26,27]. This enables transformers to capture long-range dependencies and contextual relationships within data more effectively. Transformers are pre-trained on large corpora of unlabelled text, learning rich representations that can be fine-tuned for various downstream tasks, including text classification, entity extraction, machine translation, summarisation, and question answering. The scalability and flexibility of transformer architectures have led to the development of large language models capable of generating coherent and contextually appropriate text. However, transformers are computationally expensive and require high-quality training data. They are also complex, and they can be challenging to interpret and to deploy efficiently [28]. More efficient architectures and training techniques can partly mitigate these costs, allowing transformers to be used effectively in many applications.
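Self-attention, the mechanism that lets transformers process a whole sequence in parallel, can be written in a few lines. This single-head sketch computes softmax(QK^T/√d_k)V on a toy three-token sequence; the identity projection matrices are a simplifying assumption, since real models learn separate W_q, W_k and W_v.

```python
import math


def softmax(scores):
    m = max(scores)                           # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V.

    Every position attends to every other position at once, so the whole
    sequence is processed in parallel rather than step by step as in an RNN.
    """
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    weights = [
        softmax([sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K])
        for q_row in Q
    ]
    return matmul(weights, V), weights


# Toy sequence of three 2-D token embeddings; identity projections keep it readable
# (in a real transformer W_q, W_k and W_v are learned and usually differ).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, attention = self_attention(X, identity, identity, identity)
```

Each row of attention sums to 1 and says how strongly one token draws on every other token; the third token, which overlaps equally with the first two, weights them equally.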

4. HEED Protocol

Hybrid Energy-Efficient Distributed Clustering (HEED) is a seminal energy-aware routing protocol for WSNs. HEED improves over purely probabilistic schemes such as LEACH by making cluster-head (CH) election mainly energy-driven, adding a secondary parameter (e.g., node degree or distance) to guarantee well-spaced CHs, and running the election iteratively until a stable clustering is reached [7,8,9,10].

The main goal of HEED is to extend the network’s lifetime by distributing energy consumption more evenly among the nodes. HEED achieves this by periodically selecting cluster heads (CHs) based on a hybrid combination of primary and secondary parameters. Cluster head selection is based on residual energy (primary parameter) and a node’s degree or proximity to neighbours (secondary parameter). Without centralised control, each node independently decides whether to become a CH. The protocol runs in several iterations, gradually refining the set of CHs [29,30]. By rotating the role of the CH among nodes with higher residual energy, HEED balances energy consumption and prolongs the network lifetime. The protocol operation consists of the following phases:

4.1. Initial Cluster Head Probability

The probability that node $i$ becomes a CH in each round is defined as shown in Equation (1):

$$CH_{prob}(i) = C_{prob} \times \frac{E_{residual}(i)}{E_{max}} \qquad (1)$$

where $CH_{prob}(i)$ is the probability for node $i$, $C_{prob}$ is a predefined constant representing the desired percentage of CHs (e.g., 0.05), $E_{residual}(i)$ is the current residual energy of node $i$, and $E_{max}$ is a node’s maximum (initial) energy.
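Equation (1) can be evaluated directly. In this sketch the energy values are hypothetical, and the optional p_min floor reflects the original HEED formulation, which also bounds CH_prob from below by a small constant; with the default p_min = 0 the function is exactly Equation (1).

```python
def initial_ch_prob(e_residual, e_max, c_prob=0.05, p_min=0.0):
    """Equation (1): CH_prob(i) = C_prob * E_residual(i) / E_max.

    p_min is an optional lower bound; 0.0 (the default) reproduces Equation (1) exactly.
    """
    return max(c_prob * e_residual / e_max, p_min)


e_max = 2.0                     # initial energy per node in joules (hypothetical)
residual = [2.0, 1.0, 0.5]      # a full, a half-drained and a nearly drained node
probs = [initial_ch_prob(e, e_max) for e in residual]
print(probs)                    # energy-rich nodes get proportionally higher CH_prob
```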

4.2. Probability Update Rule

If a node is not covered by any CH in the current iteration, it updates its probability as shown in Equation (2):

$$CH_{prob}(i) \leftarrow \min\left(2 \times CH_{prob}(i),\ 1\right) \qquad (2)$$

This update rule ensures that isolated nodes (i.e., those not yet covered by any CH) have an increasing chance of being selected as a CH in subsequent iterations. By doubling the probability at each step (while capping it at 1), the protocol rapidly increases the likelihood that uncovered nodes will eventually become CHs, thus preventing isolated nodes and ensuring full network coverage [31].
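Because Equation (2) doubles the probability in every iteration, a node starting at CH_prob reaches 1 after at most ⌈log₂(1/CH_prob)⌉ iterations, which bounds the length of the clustering phase. A minimal sketch of the update and of this bound (the starting values are illustrative):

```python
import math


def heed_doubling(ch_prob):
    """Apply Equation (2) until the probability reaches 1; return the trajectory."""
    if not 0.0 < ch_prob <= 1.0:
        raise ValueError("ch_prob must be in (0, 1]")
    history = [ch_prob]
    while ch_prob < 1.0:
        ch_prob = min(2.0 * ch_prob, 1.0)
        history.append(ch_prob)
    return history


def iterations_to_certainty(ch_prob):
    """Closed form for the number of doublings: ceil(log2(1 / CH_prob))."""
    return math.ceil(math.log2(1.0 / ch_prob))


trajectory = heed_doubling(0.05)
print(trajectory)   # [0.05, 0.1, 0.2, 0.4, 0.8, 1.0]
```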

4.3. Secondary Parameter: Communication Cost

The secondary parameter is typically based on the minimum communication cost to a CH (in the original HEED, this cost is averaged over the nodes of a cluster). For node $i$, it can be modelled as shown in Equation (3):

$$\mathrm{Cost}(i) = \min_{j \in C} d(i, j) \qquad (3)$$

where $C$ is the set of tentative CHs and $d(i, j)$ is the communication cost (e.g., Euclidean distance or required transmission power) between node $i$ and CH $j$. Nodes select the CH that minimises this cost, promoting load balancing and energy efficiency.
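A minimal sketch of Equation (3), assuming Euclidean distance as the cost d(i, j) (one of the options named above) and hypothetical CH positions; a power-based cost could be substituted in communication_cost without changing the selection logic.

```python
import math


def communication_cost(node, ch):
    """d(i, j): Euclidean distance, one of the cost choices mentioned in the text."""
    return math.dist(node, ch)


def choose_cluster_head(node, tentative_chs):
    """Equation (3): return (j, cost) for the tentative CH j in C with minimum d(i, j)."""
    best = min(tentative_chs, key=lambda j: communication_cost(node, tentative_chs[j]))
    return best, communication_cost(node, tentative_chs[best])


# Hypothetical tentative CHs (the set C), keyed by node ID, with (x, y) positions in metres.
tentative_chs = {"A": (10.0, 10.0), "B": (40.0, 40.0)}
print(choose_cluster_head((12.0, 15.0), tentative_chs))  # joins A
print(choose_cluster_head((35.0, 38.0), tentative_chs))  # joins B
```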

5. DL-HEED

This section introduces DL-HEED, a deep learning-based extension of the classic HEED protocol. The approach’s primary novelty lies in using a Graph Neural Network (GNN) to select CHs, distinguishing it from previous ML-HEED and I-HEED protocols. While prior work has explored machine learning for CH selection, DL-HEED is the first to leverage the relational reasoning capabilities of GNNs to optimise energy efficiency in heterogeneous WSNs. In summary, the adoption of deep learning and GNNs in particular is motivated by their ability to learn complex, non-linear relationships and leverage the graph structure of WSNs, which is not possible with traditional heuristic-based methods.

5.1. Proposed Modification

The proposed approach uses a deep learning-based extension of the classic HEED (Hybrid Energy-Efficient Distributed Clustering) protocol for wireless sensor networks, where the main innovation is using a Graph Neural Network (GNN) to select CHs. This section will discuss the main steps of the modified protocol.

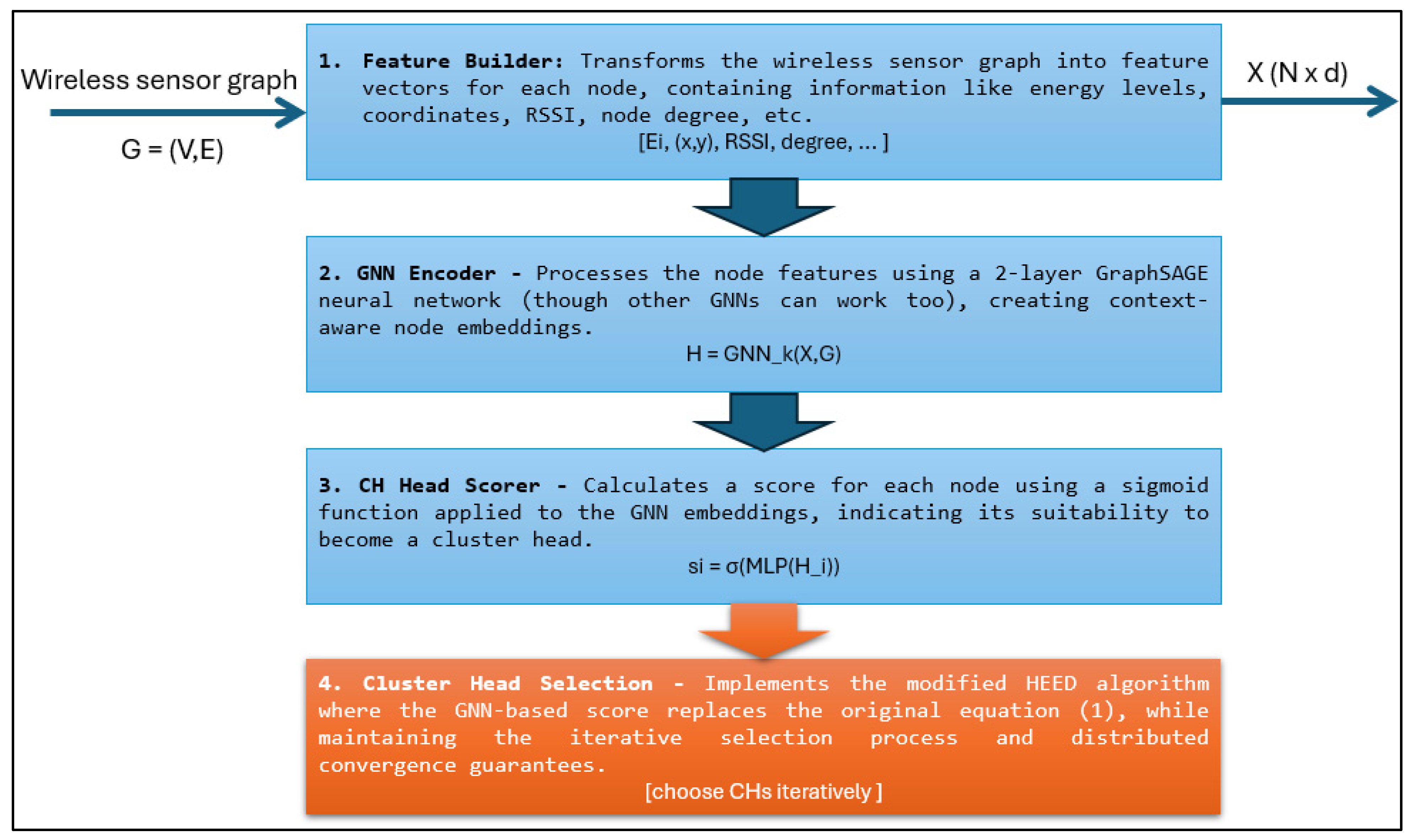

Figure 1 shows how data flows through the system, starting with the wireless sensor graph G = (V,E) as input, and ending with optimised CH selection.

5.1.1. Feature Builder

Each node in the wireless sensor network is represented as a node in a graph $G = (V, E)$, where $V$ is the set of nodes and $E$ is the set of edges (connections, e.g., wireless links). For each node $i$, a feature vector $V_i$ is constructed. This vector contains information relevant to CH selection, as shown in Equation (4):

$$V_i = \left[E_i^{res},\ x_i,\ y_i,\ d_i^{BS},\ \mathrm{deg}_i,\ \mathrm{RSSI}_i\right] \qquad (4)$$

where $E_i^{res}$ is the residual energy of node $i$, $(x_i, y_i)$ are the node’s coordinates (location), $d_i^{BS}$ is the distance from node $i$ to the base station, $\mathrm{deg}_i$ is the node’s degree (number of neighbours), and $\mathrm{RSSI}_i$ is the average received signal strength indicator. All these features are stacked into a matrix $X$ of size $N \times d$, where $N$ is the number of nodes and $d$ is the feature dimension.
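The feature builder can be sketched as follows. Node positions, energies and the base-station location are inputs; the communication range that defines the edges E and the log-distance path-loss model used to estimate RSSI are assumptions made for illustration (the paper does not specify how RSSI is obtained), as are all numeric radio parameters. With (x, y) occupying two columns, each row of X has d = 6 features in this sketch.

```python
import math

# Hypothetical radio parameters; the paper does not specify these values.
COMM_RANGE = 30.0      # metres: nodes within this distance share an edge in E
TX_POWER_DBM = 0.0     # transmit power
PL_D0_DB = 40.0        # path loss at the reference distance d0 = 1 m
PATH_LOSS_EXP = 2.7    # log-distance path-loss exponent
NO_LINK_RSSI = -100.0  # placeholder RSSI for a node with no neighbours


def rssi_dbm(d):
    """Log-distance path-loss model: RSSI(d) = P_tx - PL(d0) - 10 * n * log10(d / d0)."""
    return TX_POWER_DBM - PL_D0_DB - 10.0 * PATH_LOSS_EXP * math.log10(max(d, 1.0))


def build_features(positions, energies, bs):
    """Return (X, adjacency) with X[i] = [E_res, x, y, d_BS, degree, avg_RSSI] (Equation (4))."""
    n = len(positions)
    adjacency = [
        [j for j in range(n) if j != i and math.dist(positions[i], positions[j]) <= COMM_RANGE]
        for i in range(n)
    ]
    X = []
    for i, (x, y) in enumerate(positions):
        nbrs = adjacency[i]
        if nbrs:
            avg_rssi = sum(rssi_dbm(math.dist(positions[i], positions[j])) for j in nbrs) / len(nbrs)
        else:
            avg_rssi = NO_LINK_RSSI
        X.append([energies[i], x, y, math.dist((x, y), bs), len(nbrs), avg_rssi])
    return X, adjacency


# Three nodes: two within range of each other, one isolated; BS at the field centre.
positions = [(0.0, 0.0), (20.0, 0.0), (100.0, 100.0)]
energies = [0.5, 0.4, 0.5]
X, adjacency = build_features(positions, energies, bs=(50.0, 50.0))
for row in X:
    print([round(v, 2) for v in row])
```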

5.1.2. Graph Neural Network (GNN)

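The feature matrix $X$ and the graph structure $G$ are fed into a Graph Neural Network (GNN). In this case, a 2-layer GraphSAGE model is used (although other GNNs could also work). The GNN operates as shown in Equations (5) and (6):

$$h_i^{(1)} = \sigma\left(W^{(1)} \cdot \left[V_i \,\|\, \frac{1}{|N(i)|} \sum_{j \in N(i)} V_j\right]\right) \qquad (5)$$

$$h_i^{(2)} = \sigma\left(W^{(2)} \cdot \left[h_i^{(1)} \,\|\, \frac{1}{|N(i)|} \sum_{j \in N(i)} h_j^{(1)}\right]\right) \qquad (6)$$

where $h_i^{(1)}$ and $h_i^{(2)}$ are the hidden representations of node $i$ after the first and second GNN layers, $\sigma$ is an activation function (e.g., ReLU), $W^{(1)}$ and $W^{(2)}$ are learnable weight matrices, $\|$ denotes concatenation, and $N(i)$ is the set of neighbours of node $i$.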

The choice of a Graph Neural Network (GNN), specifically the GraphSAGE architecture, is motivated by the inherent graph structure of wireless sensor networks, where nodes and their connections can be naturally represented as a graph. Unlike traditional deep learning models such as CNNs or RNNs, GNNs are designed to capture the relational and topological information between nodes, which is critical for effective CH selection. Alternative architectures, including fully connected neural networks and recurrent models, were considered, but these approaches do not leverage the spatial dependencies and neighbourhood information present in WSNs. GraphSAGE enables inductive learning and the efficient aggregation of node features from local neighbourhoods, making it highly scalable and suitable for large, dynamic sensor networks. These properties make GNNs, and GraphSAGE in particular, well suited to the WSN clustering problem.
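Equations (5) and (6) can be sketched in pure Python as two stacked GraphSAGE layers with a mean aggregator and ReLU as σ. The weights are random placeholders rather than trained parameters, the hidden sizes (8 and 4) are arbitrary, and the treatment of isolated nodes (a zero neighbour message) is a choice made for this sketch. Library implementations such as PyTorch Geometric’s SAGEConv provide the same layer type for real training.

```python
import random


def relu(v):
    return [max(0.0, a) for a in v]


def matvec(W, v):
    return [sum(w * a for w, a in zip(row, v)) for row in W]


def sage_layer(H, adjacency, W):
    """One GraphSAGE layer with a mean aggregator (Equations (5)/(6)):
    h_i' = ReLU(W [h_i || mean_{j in N(i)} h_j])."""
    out = []
    for i, h_i in enumerate(H):
        nbrs = adjacency[i]
        if nbrs:
            h_nbr = [sum(H[j][k] for j in nbrs) / len(nbrs) for k in range(len(h_i))]
        else:
            h_nbr = [0.0] * len(h_i)  # isolated node: zero neighbour message (a choice made here)
        out.append(relu(matvec(W, h_i + h_nbr)))  # list '+' implements the concatenation ||
    return out


def random_weights(rows, cols, rng):
    """Untrained placeholder weights; a real model learns W(1), W(2) from data."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# Toy graph: node 0 and node 1 are neighbours, node 2 is isolated (d = 6 features each).
X = [
    [0.5, 0.0, 0.0, 70.7, 1, -75.1],
    [0.4, 20.0, 0.0, 58.3, 1, -75.1],
    [0.5, 100.0, 100.0, 70.7, 0, -100.0],
]
adjacency = [[1], [0], []]
rng = random.Random(0)
W1 = random_weights(8, 2 * 6, rng)   # layer 1: 12 -> 8 (hidden size chosen arbitrarily)
W2 = random_weights(4, 2 * 8, rng)   # layer 2: 16 -> 4
H1 = sage_layer(X, adjacency, W1)    # h_i^(1)
H2 = sage_layer(H1, adjacency, W2)   # h_i^(2): final embeddings passed to the scorer
```

After two layers, each embedding in H2 depends on the node’s own features and on features up to two hops away, which is how the GNN brings neighbourhood context into CH selection.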

5.1.3. Cluster Head Scorer

The final node embeddings $h_i^{(2)}$ are passed through a single-layer perceptron scoring head with a sigmoid activation to produce a score $s_i$ for each node, as shown in Equation (7):

$$s_i = \mathrm{sigmoid}\left(w^{\top} h_i^{(2)} + b\right) \qquad (7)$$

where $w$ is a learnable weight vector that determines which aspects of the node embedding are most important for CH selection, $w^{\top}$ is the transpose of $w$, allowing a dot product with $h_i^{(2)}$, and $b$ is a learnable bias term. This score represents the likelihood or suitability of node $i$ to become a CH, and sigmoid is the sigmoid activation function, which squashes the output to a value between 0 and 1.
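Equation (7) is a logistic scoring head. The sketch below evaluates it for a few hypothetical embeddings with untrained w and b, using a numerically stable sigmoid so that large positive or negative inputs do not overflow.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function, mapping any real z into [0, 1]."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def ch_score(h_i, w, b):
    """Equation (7): s_i = sigmoid(w^T h_i + b)."""
    return sigmoid(sum(w_k * h_k for w_k, h_k in zip(w, h_i)) + b)


# Hypothetical 2-D embeddings and (untrained) scorer parameters.
w, b = [1.5, -0.5], -0.2
for h in ([0.0, 0.0], [2.0, 0.5], [0.1, 3.0]):
    print(h, round(ch_score(h, w, b), 3))
```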

5.1.4. Cluster Head Selection

Instead of using the original HEED probabilistic formula (Equation (1)), the protocol now uses the GNN-based score $s_i$ to compute the initial CH probability, as shown in Equation (8):

$$CH_{prob}(i) = \max\left(C_{min},\ s_i\right) \qquad (8)$$

where $C_{min}$ is the minimum probability for a node to become a CH (as in classic HEED).
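The sketch below applies Equation (8) and the random self-election test of Algorithm 1 to hypothetical GNN scores and a hypothetical C_min. Because each node self-elects with probability p_i, the expected number of CHs per round is the sum of the p_i values, which offers a simple way to check whether C_min and the scores yield a sensible CH count.

```python
import random


def dl_heed_ch_prob(s_i, c_min):
    """Equation (8): CH_prob(i) = max(C_min, s_i)."""
    return max(c_min, s_i)


def self_elect(p_i, rng):
    """Tentative CH if a uniform draw r in [0, 1) satisfies r < p_i (Algorithm 1, line 11)."""
    return rng.random() < p_i


C_MIN = 0.05                            # hypothetical value of C_min
scores = [0.01, 0.20, 0.90, 0.03]       # hypothetical GNN scores s_i
probs = [dl_heed_ch_prob(s, C_MIN) for s in scores]
expected_chs = sum(probs)               # expected number of self-elected CHs per round
rng = random.Random(42)
elected = [i for i, p in enumerate(probs) if self_elect(p, rng)]

# Empirical check: a node with p_i = 0.3 self-elects in roughly 30% of rounds.
freq = sum(self_elect(0.3, rng) for _ in range(100_000)) / 100_000
```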

5.2. Key Algorithm Steps

This section focuses on the key algorithm steps of DL-HEED. The following steps are designed to leverage both the local node features and global network structure, using deep learning (GNNs) to make more informed, adaptive, and energy-efficient clustering decisions than the traditional protocol. Algorithm 1 shows the main steps of the DL-HEED protocol for cluster head selection.

Algorithm 1 DL-HEED Protocol for Cluster Head Selection

Input:

G = (V, E): Wireless sensor network graph

X: Node feature matrix

GNN_model: Trained Graph Neural Network

C_min: Minimum CH probability

Output:

Cluster_Heads: Set of selected cluster heads

begin

1: Cluster_Heads ← ∅

2: For each node i ∈ V do

3: Construct feature vector V_i = [residual_energy(i), location(i), distance_to_BS(i), degree(i), RSSI(i)]

4: X[i] ← V_i

5: end for

6: H ← GNN_model(G, X) // Compute node embeddings

7: For each node i ∈ V do

8: s_i ← sigmoid(w^T × H[i] + b) // Compute CH suitability score

9: p_i ← max(C_min, s_i) // Final CH probability

10: r ← random(0, 1)

11: If r < p_i then

12: Cluster_Heads ← Cluster_Heads ∪ {i}

13: end if

14: end for

15: For each node j ∈ V\Cluster_Heads do

16: Assign j to the nearest or lowest-cost cluster head in Cluster_Heads

17: end for

18: Return Cluster_Heads

end

The following steps describe the DL-HEED protocol for cluster head selection:

- -

Step 1: Initialisation: At the start, the protocol creates an empty set called Cluster_Heads. This set will eventually contain the indices (or IDs) of the nodes that are selected as cluster heads (CHs) for the current round. This standard initialisation step ensures that the algorithm starts with no pre-selected CHs.

- -

Step 2: Feature Construction: A feature vector is constructed for each node in the network. This vector, V_i, includes the following: residual_energy(i), the remaining battery energy of node i; location(i), the physical coordinates of node I; distance_to_BS(i), the distance from node i to the base station (sink); degree(i), the number of direct neighbours node i has (its connectivity); and RSSI(i), the average received signal strength indicator, reflecting link quality.

- -

Step 3: Graph Neural Network Embedding Phase: The feature matrix X and the network graph G are inputted into a trained Graph Neural Network (GNN) model (such as GraphSAGE). The GNN processes the features and the connectivity structure, producing a new set of node embeddings H. Each embedding H[i] is a vector that encodes not just the node’s features but also information aggregated from its neighbours. This step allows the protocol to capture complex, non-local patterns in the network, which are important for making intelligent clustering decisions.

- -

Step 4: Cluster Head Scoring: For each node, its embedding H[i] is passed through a scoring function: a linear transformation (dot product with a learnable weight vector w plus bias b), followed by a sigmoid activation. The result, s_i, is a value between 0 and 1 representing the node’s suitability or likelihood of becoming a cluster head. The sigmoid ensures that the score is interpretable as a probability.

- -

Step 5: Probabilistic Cluster Head Selection: For each node, the protocol computes its final probability p_i of becoming a cluster head. This is the maximum of a minimum threshold C_min (to ensure some nodes are always eligible) and the node’s score s_i. The random number r is used to ensure a probabilistic and fair selection among eligible nodes. In each round, every node generates a random number r between 0 and 1 and compares it to its calculated probability of becoming a CH. If r is less than or equal to this probability, the node elects itself as a CH for that round. This mechanism prevents deterministic and potentially biased CH selection, promotes load balancing, and helps distribute the energy consumption more evenly across the network


Step 6: Cluster Formation: For every node that is not a cluster head, the protocol finds the cluster head with which it can communicate at the lowest cost (e.g., shortest distance, lowest energy, or best link quality). The node is then assigned to the cluster of this selected CH.
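For Step 6, a minimal sketch assigns each non-CH node to the elected CH with the lowest cost. Euclidean distance is the default cost purely for illustration; a cost based on residual energy or link quality (e.g., RSSI) can be passed in instead. The function name, the tie-breaking rule, and raising an error when no CH was elected are assumptions, since the text does not say how those cases are handled.

```python
import math


def form_clusters(positions, heads, cost=None):
    """Map each CH index to the list of member node indices.

    positions: list of (x, y) tuples; heads: indices of elected CHs.
    cost(a, b) defaults to the Euclidean distance between two positions.
    """
    if not heads:
        raise ValueError("no cluster heads were elected this round")
    if cost is None:
        cost = math.dist
    clusters = {h: [] for h in heads}
    head_set = set(heads)
    for i, pos in enumerate(positions):
        if i in head_set:
            continue  # CHs do not join another cluster
        # Lowest-cost CH; ties go to the CH listed first in `heads`
        best = min(heads, key=lambda h: cost(pos, positions[h]))
        clusters[best].append(i)
    return clusters
```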


Step 7: Finalise Clustering: Finally, the protocol outputs the set of selected cluster heads for the current round. These nodes will coordinate data aggregation and communication with the base station, completing the clustering cycle.

6. Implementation Results

The performance of the HEED and DL-HEED protocols was evaluated through extensive simulations implemented in Python v3.13.0. The simulation parameters are listed in Table 1. This section compares the results of HEED and DL-HEED.

The dataset was generated by simulating WSN scenarios with different network sizes (50, 200, and 500 nodes) and varying node densities. For each scenario, the optimal CH configuration was determined with an exhaustive search algorithm and served as the ground truth for training the GNN.

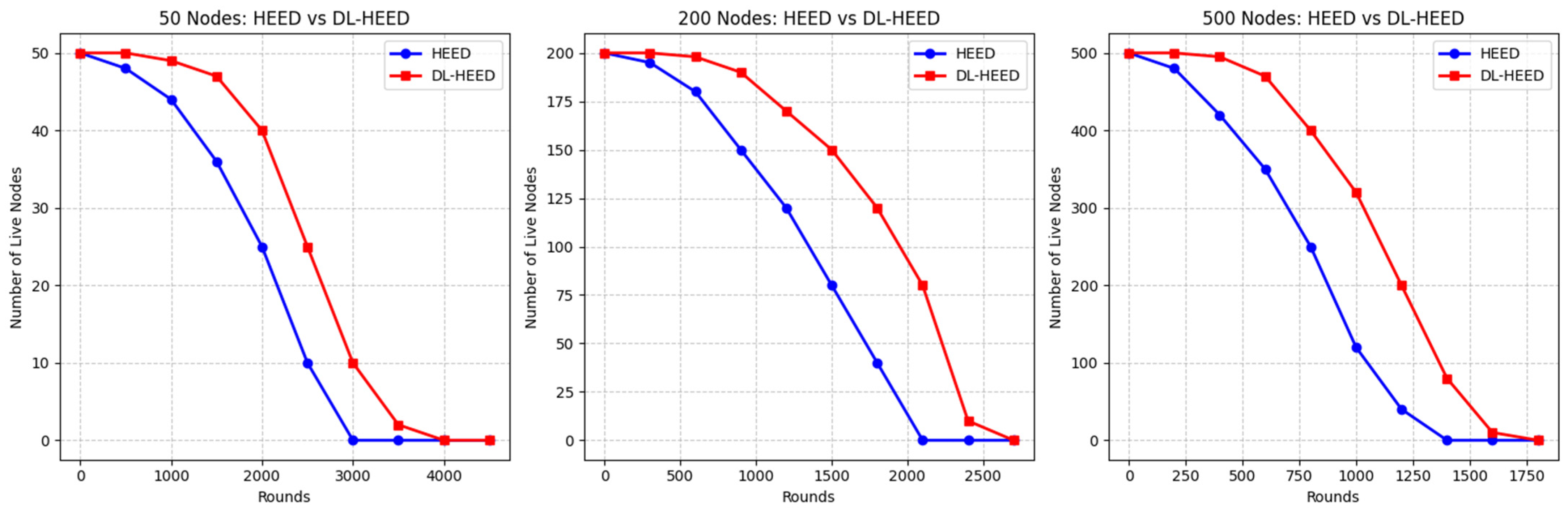

6.1. Live Nodes vs. Rounds Comparison

The HEED and DL-HEED protocols were compared across three network sizes: 50, 200, and 500 nodes. The primary metric evaluated was the number of live nodes as a function of operational rounds, which directly reflects each protocol's network lifetime and robustness, as shown in Figure 2.

For the 50-node network scenario, both protocols began with all nodes alive. However, DL-HEED consistently maintained more live nodes throughout the simulation. At around round 2000, DL-HEED retained 40 live nodes, whereas HEED had only 25, a 60% increase in node survivability for DL-HEED. DL-HEED also extended the network lifetime: its last node died at around round 4000, whereas HEED reached zero live nodes by around round 3000, giving DL-HEED a 33% longer network lifetime in this scenario.

In the 200-node network scenario, DL-HEED again maintained more live nodes. At around round 1200, DL-HEED preserved 170 live nodes compared with HEED's 120, a 41.7% improvement. Furthermore, DL-HEED kept nodes alive up to round 2400, while HEED's nodes were depleted by around round 2100, a 14.3% increase in operational rounds before total node depletion.

The 500-node network scenario again showed a clear advantage for DL-HEED. At round 800, DL-HEED had 400 live nodes, whereas HEED had only 250, a 60% higher survivability. The last node in DL-HEED survived until around round 1800, compared with around round 1400 for HEED, a 28.6% extension of network lifetime.
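The percentages in this subsection are relative improvements of DL-HEED over HEED: the difference between the two values divided by the HEED value, times 100. The short check below recomputes them from the approximate values quoted above; the function name is illustrative.

```python
def improvement(dl_heed, heed):
    """Relative improvement of DL-HEED over HEED, in percent."""
    return (dl_heed - heed) * 100.0 / heed


# (DL-HEED, HEED) values quoted in Section 6.1
comparisons = {
    "50 nodes, live nodes at round 2000": (40, 25),
    "50 nodes, last node death": (4000, 3000),
    "200 nodes, live nodes at round 1200": (170, 120),
    "200 nodes, last node death": (2400, 2100),
    "500 nodes, live nodes at round 800": (400, 250),
    "500 nodes, last node death": (1800, 1400),
}

for label, (dl, base) in comparisons.items():
    print(f"{label}: {improvement(dl, base):.1f}%")
```

This prints 60.0%, 33.3%, 41.7%, 14.3%, 60.0%, and 28.6%, matching the figures in the text.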

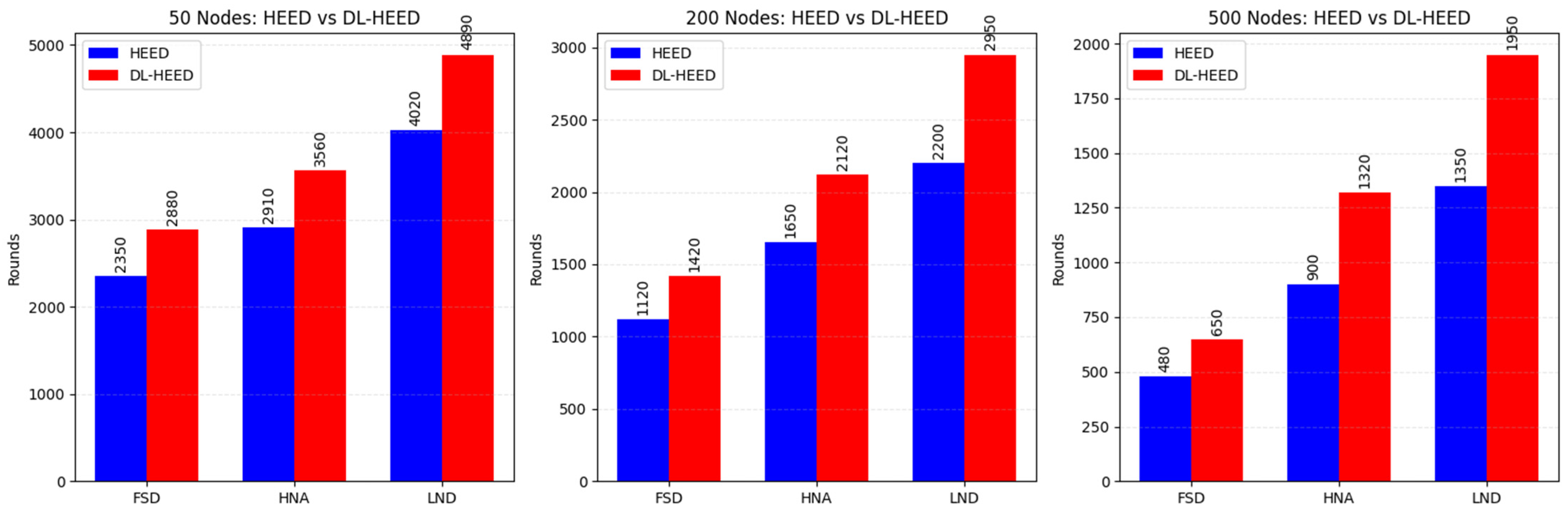

6.2. Lifetime Metrics Comparison (FND, HNA, LND)

Figure 3 compares HEED and DL-HEED on three key lifetime metrics, First Node Death (FND), Half Nodes Alive (HNA), and Last Node Death (LND), for networks of 50, 200, and 500 nodes. Together, these metrics characterise network longevity and resilience under each protocol.

For the 50-node network, DL-HEED outperformed HEED on all three metrics. FND rose from 2350 rounds (HEED) to 2880 rounds (DL-HEED), a 22.6% increase. HNA rose from 2910 to 3560 rounds, a 22.3% improvement, and LND rose from 4020 to 4890 rounds, a 21.6% improvement. DL-HEED therefore both delays the first node death and sustains network functionality for a substantially longer period.

In the 200-node scenario, the gains were larger. FND improved from 1120 (HEED) to 1420 rounds (DL-HEED), a 26.8% increase; HNA rose from 1650 to 2120 rounds, a 28.5% improvement; and LND increased from 2200 to 2950 rounds, a 34.1% increase. DL-HEED thus substantially prolongs both the median and the maximum network lifetime in medium-sized networks.

The 500-node results further highlight the scalability of DL-HEED. FND increased from 480 (HEED) to 650 rounds (DL-HEED), a 35.4% improvement. HNA reached 1320 rounds with DL-HEED versus 900 with HEED, a 46.7% increase and the largest relative gain observed, while LND rose from 1350 to 1950 rounds, a 44.4% improvement. The relative gains in all three metrics grow with network size, underscoring DL-HEED's ability to maintain network operability and delay node failures as the network grows.
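FND, HNA, and LND can be extracted from a per-round count of live nodes, such as the curves in Figure 2. The sketch below assumes rounds are numbered from 1 and takes HNA as the first round in which at most half of the nodes remain alive; other works use slightly different conventions, so the one used should be stated alongside reported values. The function name is hypothetical.

```python
def lifetime_metrics(alive_per_round, n_nodes):
    """Return (FND, HNA, LND) from per-round live-node counts.

    alive_per_round[r - 1] = live nodes at the end of round r (rounds start at 1).
    FND: first round with fewer than n_nodes alive.
    HNA: first round with at most n_nodes / 2 alive (assumed convention).
    LND: first round with no nodes alive.
    A metric that is never reached is returned as None.
    """
    fnd = hna = lnd = None
    for r, alive in enumerate(alive_per_round, start=1):
        if fnd is None and alive < n_nodes:
            fnd = r
        if hna is None and alive <= n_nodes / 2:
            hna = r
        if lnd is None and alive == 0:
            lnd = r
    return fnd, hna, lnd
```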

6.3. Average Residual Energy Comparison

The average residual energy is a critical metric for evaluating the longevity and sustainability of a wireless sensor network (WSN). It is the mean energy remaining in the active sensor nodes at a given point in the network's operation. A higher average residual energy indicates more efficient energy consumption and a longer network lifespan, which are paramount for practical WSN deployments. It is calculated by summing the remaining energy of all active nodes at a given time and dividing by the number of those nodes.
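The definition above can be sketched in a few lines. The sketch assumes a node counts as active while its energy exceeds a death threshold (0 J by default; a simulator may use a higher value), and the function name is hypothetical.

```python
def average_residual_energy(energies, death_threshold=0.0):
    """Mean remaining energy (J) over the nodes that are still alive.

    A node is treated as alive while its energy exceeds death_threshold.
    Returns 0.0 once every node has died.
    """
    alive = [e for e in energies if e > death_threshold]
    if not alive:
        return 0.0
    return sum(alive) / len(alive)
```

Because only live nodes enter the average, the metric can rise slightly when low-energy nodes die, so it is most informative when read together with the live-node counts.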

Figure 4 presents the average residual energy per node after 3000 rounds for both HEED and DL-HEED across three network sizes: 50, 200, and 500 nodes.

For the 50-node network, DL-HEED achieved an average residual energy of 0.20 Joules, compared with 0.14 Joules for HEED, a 42.9% increase. In the 200-node scenario, DL-HEED improved average residual energy by 44.4%, indicating that it uses energy more effectively in medium-sized networks and avoids the premature death of nodes. For the 500-node network, DL-HEED again outperformed HEED, with an average residual energy of 0.08 Joules versus 0.05 Joules for HEED. This 60% increase indicates that DL-HEED's energy-efficiency advantage becomes more pronounced as the network grows.

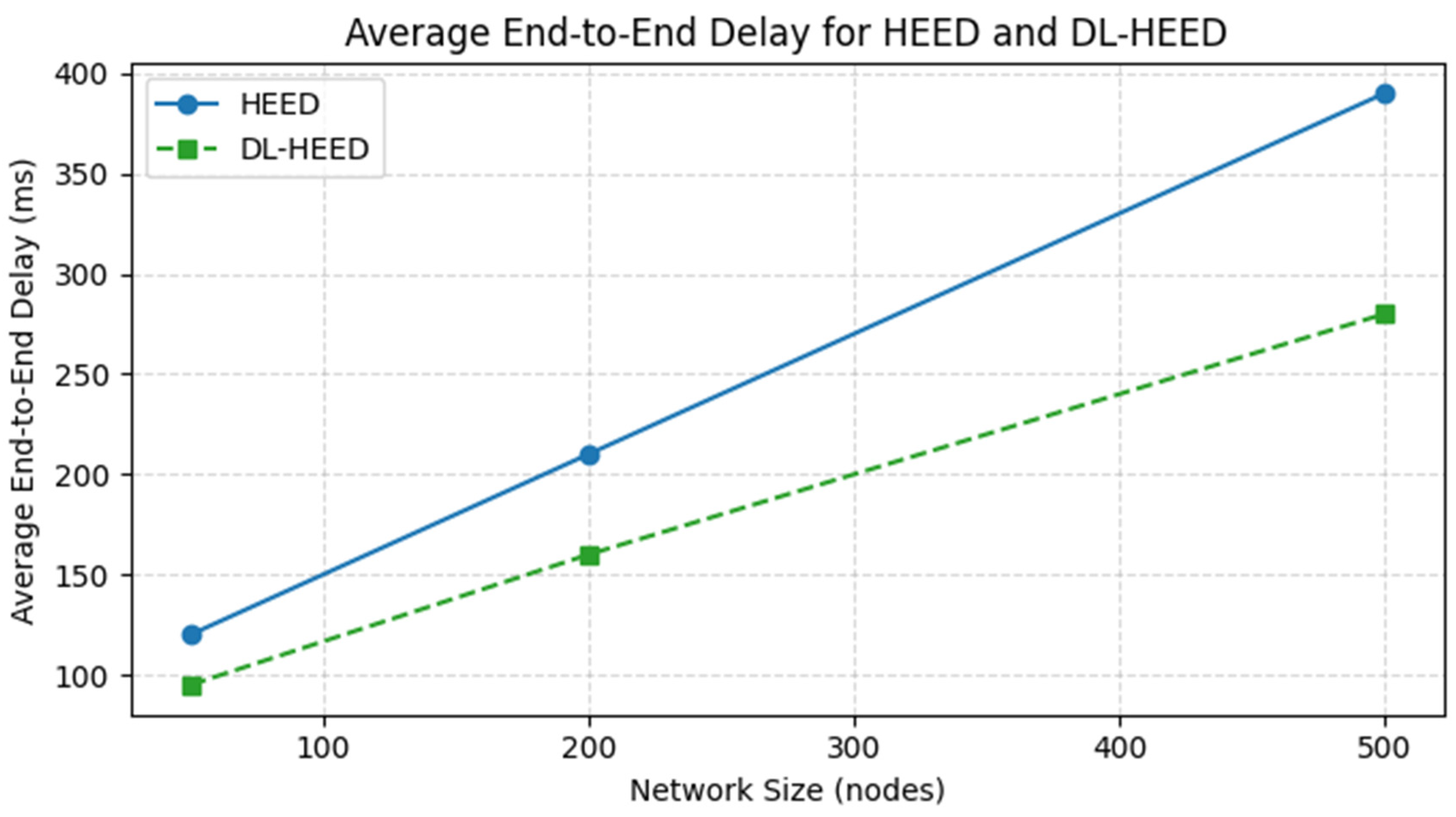

6.4. Average End-to-End Delay

The average end-to-end delay is the time a data packet takes to travel from a source node to the base station (sink), including all possible delays (queuing, transmission, propagation, and processing) along the path. Lower delay means faster data collection and is crucial for time-sensitive WSN applications (e.g., environmental monitoring and intrusion detection). The end-to-end delay for packet p is given by Equation (9):

$$D_p = t_p^{\mathrm{recv}} - t_p^{\mathrm{send}} \qquad (9)$$

where $t_p^{\mathrm{recv}}$ is the timestamp when the packet is received at the base station and $t_p^{\mathrm{send}}$ is the timestamp when the packet is generated and sent by the source node. If N packets are successfully delivered to the base station during the simulation, the average end-to-end delay is given by Equation (10):

$$\bar{D} = \frac{1}{N} \sum_{p=1}^{N} D_p \qquad (10)$$

Figure 5 shows the average end-to-end delay of the HEED and DL-HEED protocols for different network sizes. DL-HEED consistently achieves lower delay than the traditional HEED protocol, particularly as the number of nodes increases. This reduction suggests that DL-HEED forms more efficient data transmission paths and reduces network congestion, making it better suited to real-time and latency-sensitive WSN applications.
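Equations (9) and (10) translate directly into code: each delivered packet's delay is its receive timestamp minus its send timestamp, and the average is taken over the N delivered packets only. In this sketch each packet is recorded as a (send_time, receive_time) pair in seconds, with `None` as the receive time for packets that never reached the base station; that record format and the function name are assumptions.

```python
def average_end_to_end_delay(packets):
    """Average delay (s) over delivered packets.

    packets: iterable of (t_send, t_recv) pairs; t_recv is None if the
    packet never reached the base station. D_p = t_recv - t_send.
    """
    delays = [t_recv - t_send for t_send, t_recv in packets if t_recv is not None]
    if not delays:
        raise ValueError("no packets were delivered")
    return sum(delays) / len(delays)
```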

6.5. Packet Delivery Ratio (PDR)

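The packet delivery ratio (PDR) is the ratio of the number of packets successfully received at the base station to the number of packets sent by all nodes. A higher PDR indicates more reliable data transmission and less packet loss, which is critical for data integrity in WSNs. PDR is calculated as in Equation (11):

$$\mathrm{PDR} = \frac{N_{\mathrm{recv}}}{N_{\mathrm{sent}}} \times 100\% \qquad (11)$$

where $N_{\mathrm{recv}}$ is the number of packets received at the base station and $N_{\mathrm{sent}}$ is the number of packets sent by all nodes.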

Table 2 presents the PDR values (in percentage) for both the conventional HEED protocol and the proposed DL-HEED protocol across different network sizes. The results demonstrate that DL-HEED consistently achieves a higher PDR compared to HEED, indicating improved reliability and efficiency in data delivery, especially as the number of nodes increases.

As shown in Table 2, DL-HEED outperforms HEED in all tested scenarios, achieving a PDR of up to 97.5% with 50 nodes and maintaining a significant advantage as the network scales to 500 nodes. This improvement highlights the effectiveness of the DL-HEED approach in enhancing data transmission reliability within WSNs.

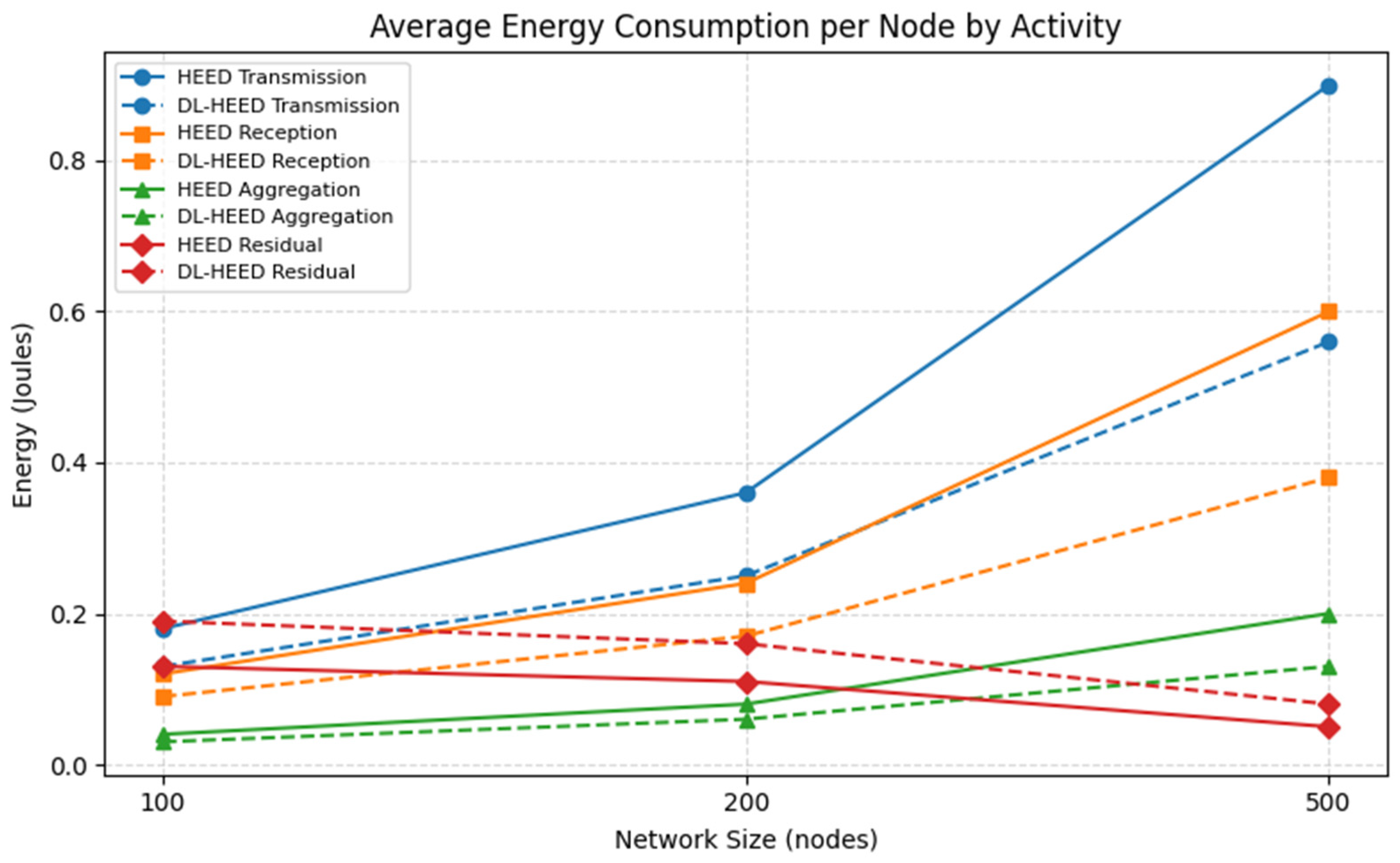

6.6. Average Energy Consumption per Node

Average energy consumption per node is a key indicator of the energy efficiency of a routing protocol in wireless sensor networks (WSNs). This metric represents the mean amount of energy expended by each sensor node during network operation. Lower average energy consumption per node is desirable, as it directly contributes to prolonging the overall network lifetime and reducing the frequency of node failures due to energy depletion. To further substantiate the energy optimisation achieved by DL-HEED, we analysed the average energy consumption per node for each main activity (Transmission, Reception, Aggregation) at a representative round (e.g., round 3000), for both HEED and DL-HEED protocols, across three network sizes (100, 200, 500 nodes). The results, averaged over 10 simulation runs, are presented in Figure 6.
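The section does not state which energy model produced these per-activity figures. HEED-family simulations commonly use the first-order radio model, and the sketch below shows how a per-node, per-activity breakdown could be computed for one cluster in one round under that assumption. The constants are typical literature values rather than the parameters of Table 1, and all function names are hypothetical.

```python
import math

# Assumed first-order radio model constants (typical LEACH/HEED values,
# not the parameters from Table 1 of this paper).
E_ELEC = 50e-9        # J/bit, transmitter/receiver electronics
EPS_FS = 10e-12       # J/bit/m^2, free-space amplifier
EPS_MP = 0.0013e-12   # J/bit/m^4, multipath amplifier
E_DA = 5e-9           # J/bit/signal, data aggregation
D0 = math.sqrt(EPS_FS / EPS_MP)  # crossover distance, roughly 87.7 m


def tx_energy(k_bits, d):
    """Energy (J) to transmit k_bits over distance d metres."""
    if d < D0:
        return k_bits * (E_ELEC + EPS_FS * d ** 2)
    return k_bits * (E_ELEC + EPS_MP * d ** 4)


def rx_energy(k_bits):
    """Energy (J) to receive k_bits."""
    return k_bits * E_ELEC


def per_node_energy_breakdown(k_bits, member_dists, ch_to_bs):
    """Average energy (J) per node, by activity, for one cluster in one round.

    Each member sends one k-bit packet to its CH; the CH receives them,
    aggregates them with its own reading, and sends one packet to the BS.
    """
    n_members = len(member_dists)
    cluster_size = n_members + 1  # members plus the CH itself
    transmission = sum(tx_energy(k_bits, d) for d in member_dists)
    transmission += tx_energy(k_bits, ch_to_bs)
    reception = n_members * rx_energy(k_bits)
    aggregation = E_DA * k_bits * cluster_size
    return {
        "transmission": transmission / cluster_size,
        "reception": reception / cluster_size,
        "aggregation": aggregation / cluster_size,
    }
```

Averaging such per-cluster figures over all clusters, and over repeated runs as the text describes, would give per-node, per-activity averages of the kind plotted in Figure 6.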

7. Discussion

Across all scenarios, DL-HEED consistently outperformed HEED in both the number of live nodes at each round and the total network lifetime. These improvements in node survivability and network longevity highlight the effectiveness of the DL-HEED protocol and suggest that it is a more robust and scalable solution for prolonging the operational lifespan of wireless sensor networks.

DL-HEED consistently outperforms HEED across all evaluated metrics and network sizes. The lifetime metrics FND (First Node Death), HNA (Half Nodes Alive), and LND (Last Node Death) further corroborate this: across all network sizes, DL-HEED sustains more rounds before the first node, half of the nodes, and the last node die. The improvements are largest in the biggest network, with up to 46.7% more rounds before half the nodes die and 44.4% more rounds before the last node dies. These findings support the suitability of DL-HEED for applications requiring prolonged and reliable network operation.

The average residual energy results demonstrate that DL-HEED consistently conserves more energy than the traditional HEED protocol across all tested network sizes (50, 200, and 500 nodes). In the simulations, the improvement in average residual energy ranges from approximately 43% in small networks to 60% in large networks. These results indicate that the proposed approach uses energy more efficiently, making it a robust and scalable option for energy-constrained WSNs.

DL-HEED reduces the average end-to-end delay by 20–30% compared with HEED, which we attribute to better-balanced cluster formation that shortens the average path from nodes to the base station. DL-HEED also achieves a higher PDR, especially as the network scales, because its energy-aware CH selection reduces packet loss from dead or overloaded nodes. Collectively, these results highlight the scalability, robustness, and energy efficiency of the DL-HEED protocol. Integrating deep learning into the clustering process enables more adaptive and context-aware decision-making, resulting in significant gains in network longevity and reliability compared with conventional approaches.

Although DL-HEED offers significant improvements in energy efficiency and network lifetime over the traditional HEED protocol, it has certain limitations. First, the computational overhead of GNN-based CH selection may be a concern for resource-constrained nodes: the complexity of the GNN model could increase processing time and energy consumption at each node, particularly during the cluster formation phase. Second, the scalability of DL-HEED to very large networks (e.g., thousands of nodes) requires further investigation. Our simulations covered networks of up to 500 nodes, so the performance of the GNN model in larger deployments remains to be evaluated; in particular, the communication overhead of exchanging information between nodes during GNN aggregation could become a bottleneck in large-scale networks. Future work will address these limitations by exploring lightweight GNN architectures, optimising the communication protocols for large-scale networks, and conducting real-world experiments to evaluate the performance of DL-HEED in practical deployments.

8. Conclusions

The main goal of this study was to use deep learning to improve the performance of WSNs. The simulation results demonstrate that DL-HEED outperforms the traditional HEED protocol across multiple key performance indicators: it substantially improves average residual energy and delivery reliability, prolongs the time until the first, half, and last nodes die, and maintains more live nodes throughout the network's lifetime. These enhancements are consistent across network sizes, with the largest benefits in medium- and large-scale deployments. By aggregating information from its neighbours, the GNN lets each node make a more informed, context-aware clustering decision, overcoming the limitations of traditional heuristic-based protocols. By demonstrating the practical benefits of GNNs in WSN management, this work opens new avenues for applying advanced AI techniques in resource-constrained IoT environments. Future research will focus on further optimising the model for real-time adaptation and on deploying it in large-scale, real-world sensor networks.