Research on World Models for Connected Automated Driving: Advances, Challenges, and Outlook

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Review Methodology and Guiding Questions

1.2.1. Literature Search and Selection Strategy

1.2.2. Inclusion and Exclusion Criteria

- Inclusion Criteria: (1) The paper must be written in English. (2) It must be a peer-reviewed journal article, a full paper from a top-tier conference (e.g., CVPR, NeurIPS, and ICRA), or a highly influential pre-print on arXiv. (3) The work must either explicitly identify as a “World Model” or contribute a foundational methodology highly relevant to the core components of the World Model framework (i.e., environmental perception, dynamic prediction, and agent decision-making). (4) The application context is primarily focused on autonomous driving or CAVs, or the proposed methodology is demonstrably transferable and highly influential to the CAV domain (e.g., seminal works in reinforcement learning or generative modeling from domains like game playing).

- Exclusion Criteria: (1) Editorials, commentaries, patents, books (unless a specific chapter is seminal), and non-peer-reviewed workshop papers. (2) Papers where the methods are only tangentially related and do not align with the core conceptual framework of World Models.

- Additional Criteria for Targeted Searches: For supplementary searches, more specific criteria were applied depending on the research question. For instance, studies focusing on scene generation were required to address complex scenarios and demonstrate clear relevance to vehicle–road collaboration.

1.2.3. Quality Assessment and Data Synthesis

- Clarity of Objectives: Are the research questions, objectives, or hypotheses clearly stated?

- Significance of Contribution: Is the contribution novel, or does it represent a significant advancement over existing work?

- Appropriateness of Methodology: Is the chosen methodology (e.g., theoretical, experimental, and system design) appropriate for the stated objectives?

- Transparency of Methodology: Is the methodology described in sufficient detail to allow for conceptual understanding and, in principle, reproducibility?

- Validity of Claims: Are the claims and conclusions adequately supported by the results, proof, or arguments presented?

- Community Impact: Has the work demonstrated a notable impact on the field (e.g., through citations or its role as a foundational reference)?

- Relevance to the Review: Does the paper’s contribution directly and substantially address one or more of our review’s guiding research questions?

1.2.4. Guiding Questions and Review Structure

- RQ1: What are the foundational architectures and core principles of modern World Models relevant to the autonomous driving domain?

- RQ2: How are these World Models specifically applied across the six core domains of CAVs, and what are the key methodologies in each domain?

- RQ3: What are the primary technical, ethical, and safety challenges that currently limit the practical deployment of World Models in CAV scenarios?

- RQ4: Based on the current advancements and limitations, what are the most promising future research directions?

2. World Model Research Progress

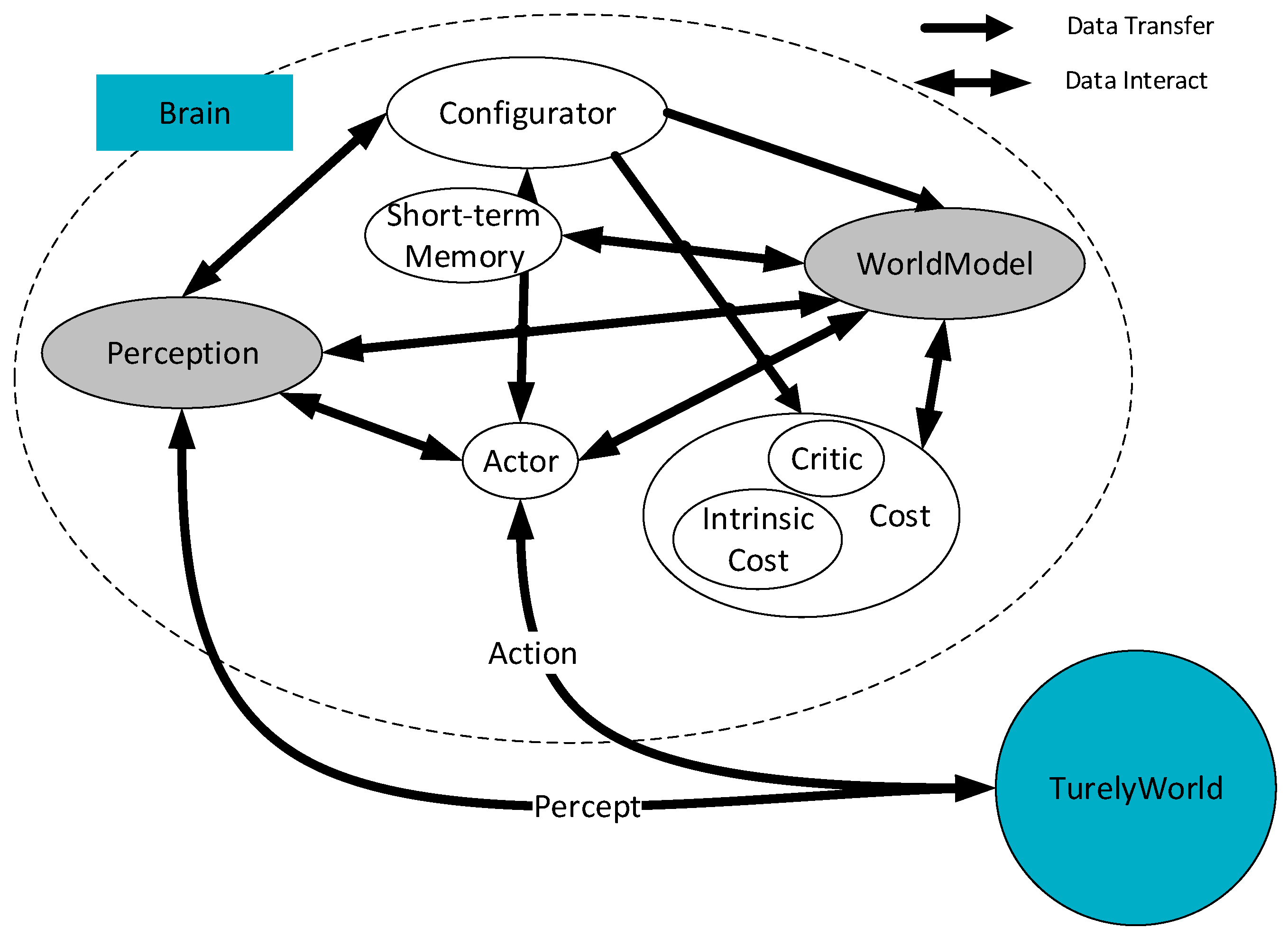

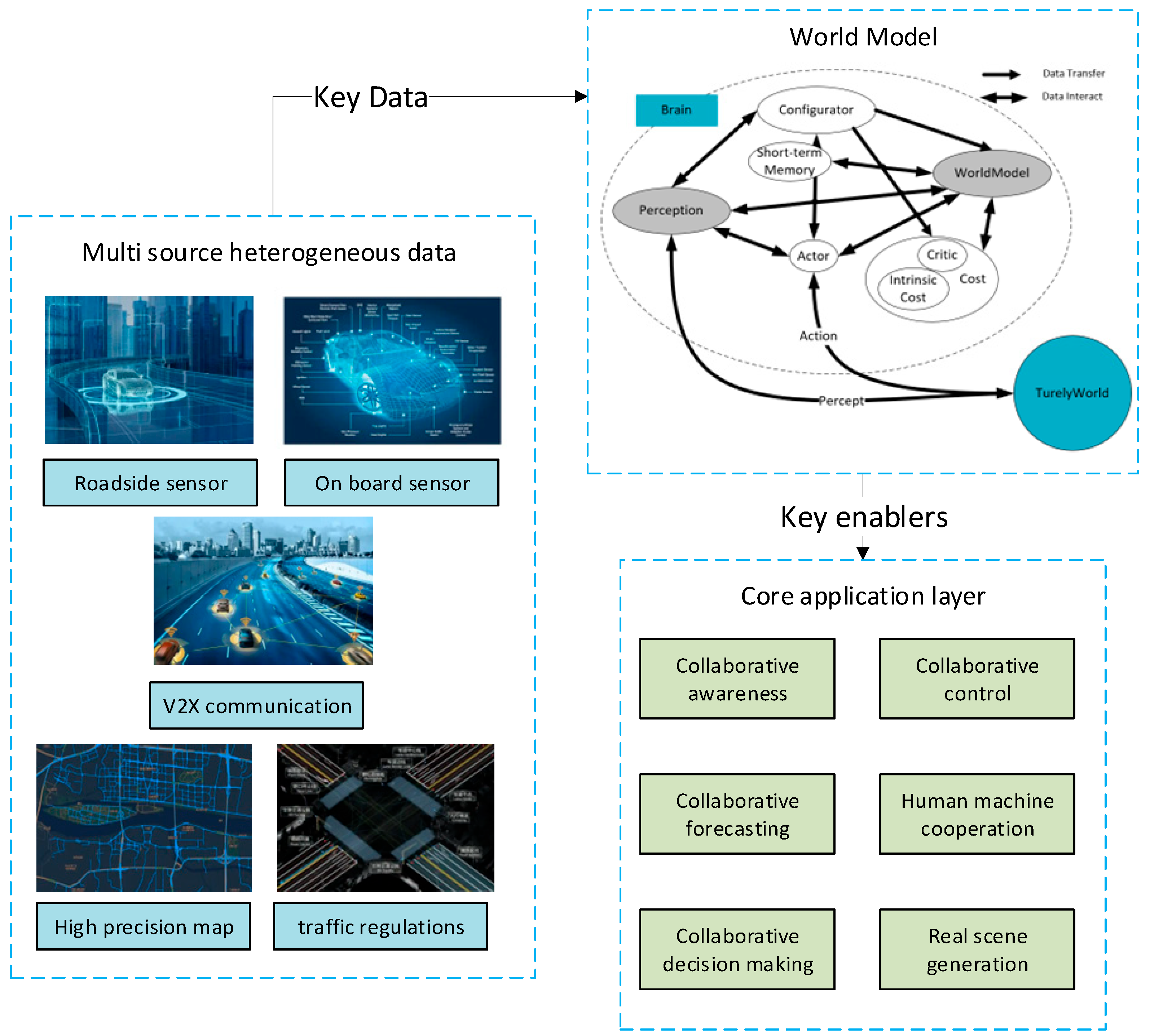

3. World Models in Connected Automated Driving Environments

3.1. Uniqueness and Research Needs of Connected Automated Driving Environments

- (A) Multi-source Heterogeneous Perception and Fusion: Unlike traditional single-vehicle intelligent systems that primarily rely on onboard sensors, CAV systems can integrate multi-modal data from roadside infrastructure (e.g., high-definition cameras, LiDAR, and millimeter-wave radar) and the vehicles’ own sensors. This provides global, multi-view environmental perception across space and time [8]. While these diverse and heterogeneous data sources offer the potential to construct more comprehensive and precise environmental models, they also present World Models with significant challenges: fusing the data efficiently, representing it in a unified way across differing data formats and coordinate systems, aligning timestamps, and exploiting the complementary strengths of the different modalities [35].

- (B) Cooperative Decision Making and Global Optimization: The core objective of CAVs is to shift from local optimality for individual vehicles towards the global optimization of traffic flow, for instance, by improving road throughput, reducing congestion, and mitigating accident risks [36]. World Models must therefore consider not only the driving behavior of the ego vehicle but also understand and simulate the complex interactions and behavioral intentions among the multiple agents in the traffic system [37], thereby providing a reliable predictive basis and decision support for cooperative decision making and control [38].

- (C) Deep Human–Machine Collaboration and Trust Building: As the level of autonomous driving advances, future CAV systems will become complex systems deeply integrating human, vehicle, and road elements [39], with human–machine collaborative driving becoming the norm [40]. This necessitates that World Models not only accurately perceive the environment but also comprehend human drivers’ intentions, driving styles, and behavioral patterns. This capability is essential for achieving natural, efficient, and safe interactions, and for gradually establishing user trust in the system [41].

- (D) High-Quality, Real-World Scene Generation and Validation: The development and testing of CAV technology urgently require high-quality, diverse, and controllable driving scenario simulation environments, particularly those covering rare (corner-case) and hazardous scenarios [42]. World Models, with their strong generative capabilities, are expected to provide realistic and controllable scenario data for CAV systems, thereby accelerating algorithm iteration, test validation, and standardization [37]. However, ensuring the physical realism and diversity of generated scenarios remains a challenge.
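The timestamp-alignment and coordinate-system issues raised in item (A) can be made concrete with a minimal sketch. It assumes a 2D setting in which the roadside unit and the vehicle each know their pose (x, y, yaw) in a shared world frame. The function names `nearest_frame` and `roadside_to_vehicle`, the pose convention, and the `max_gap` tolerance are illustrative assumptions and are not taken from any of the reviewed systems.

```python
import math
from bisect import bisect_left


def nearest_frame(timestamps, t_query, max_gap):
    """Return the index of the timestamp closest to t_query.

    timestamps must be sorted in ascending order (seconds).
    Returns None if the closest frame is further away than max_gap.
    """
    if not timestamps:
        return None
    i = bisect_left(timestamps, t_query)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    best = min(candidates, key=lambda j: abs(timestamps[j] - t_query))
    return best if abs(timestamps[best] - t_query) <= max_gap else None


def roadside_to_vehicle(point_xy, rsu_pose, vehicle_pose):
    """Map a 2D point from the roadside-unit frame into the vehicle frame.

    Both poses are (x, y, yaw) expressed in a shared world frame (assumed).
    """
    px, py = point_xy
    rx, ry, ryaw = rsu_pose
    # Roadside-unit frame -> world frame (rotate, then translate).
    wx = rx + px * math.cos(ryaw) - py * math.sin(ryaw)
    wy = ry + px * math.sin(ryaw) + py * math.cos(ryaw)
    # World frame -> vehicle frame (translate, then rotate by -yaw).
    vx, vy, vyaw = vehicle_pose
    dx, dy = wx - vx, wy - vy
    lx = dx * math.cos(vyaw) + dy * math.sin(vyaw)
    ly = -dx * math.sin(vyaw) + dy * math.cos(vyaw)
    return lx, ly
```

In practice the poses would come from calibration and localization, and a real pipeline would also compensate for object motion between the two timestamps; the sketch only shows the two alignment steps in isolation.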

3.2. Research and Applications of World Models in Connected Automated Driving

3.2.1. Cooperative Perception Based on World Models

3.2.2. Cooperative Prediction Based on World Models

3.2.3. Cooperative Decision-Making Based on World Models

3.2.4. Cooperative Control Based on World Models

3.2.5. Human-Machine Collaboration Based on World Models

3.2.6. Real-World Scene Generation Based on World Models

4. Challenges and Future Directions

4.1. Technical and Computational Challenges

- (A)

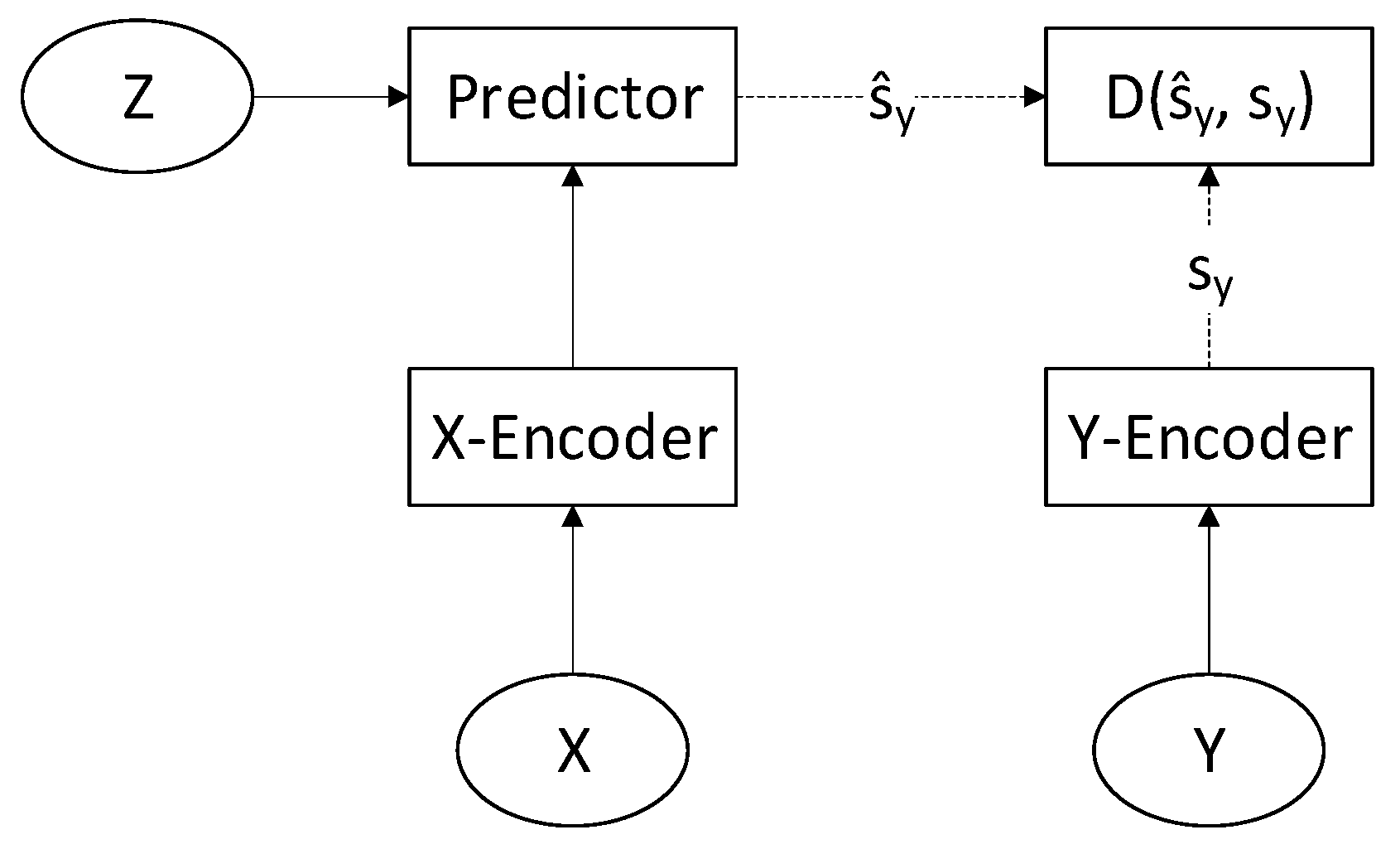

- Efficient Fusion and Unified Representation of Multi-source Heterogeneous Data: The multi-source heterogeneous nature of data in connected automated driving environments is a salient characteristic. Data collected from vehicle-borne [92] and roadside sensors [93] exhibit significant differences in modality types (e.g., images, point clouds, and millimeter-wave radars), data formats, sampling frequencies, and noise characteristics. Although existing research (e.g., Uniworld [76], Drive-WM [33]) has made preliminary explorations into multi-modal fusion, constructing efficient and versatile fusion frameworks remains a key bottleneck. Future research needs to explore more advanced cross-modal attention mechanisms (drawing inspiration from the JEPA concept in V-JEPA [44]) and multi-sensor association methods based on Graph Neural Networks (GNNs) to achieve deeper levels of data correlation and information propagation. Concurrently, to meet real-time requirements, it is necessary to investigate techniques such as lightweight feature extraction networks, knowledge distillation, model pruning, and quantization [94] to build efficient multi-modal unified representation models.

- (B) Understanding Physical Laws and Real-World Mapping: Current World Models rely primarily on data-driven statistical modeling; they neither deeply understand nor explicitly model the fundamental laws governing the physical world (e.g., Newton’s laws of motion, conservation of energy) [5]. However, the ultimate goal of CAV systems is effective control and optimization of real traffic flow, which demands that World Model predictions and decision outcomes map precisely back to the physical world and produce the expected, safe, and reliable physical effects. Future research needs to focus on how to explicitly integrate physical knowledge into World Model design [95], for instance, by embedding physical laws as constraints via Physics-Informed Neural Networks (PINNs) or by using symbolic regression and similar methods to automatically discover underlying physical laws [96]. Attention should also be paid to the model’s generalization across different physical scales and levels of complexity, ensuring its effectiveness and reliability in varied real-world environments.

- (C) Real-time Performance and Computational Efficiency on Edge Devices: Latency and processing capability are critical concerns, as CAVs demand decisions within milliseconds. A significant shortcoming of many current WM approaches is that they are developed in research settings with abundant computational resources, often overlooking the stringent real-time constraints of in-vehicle systems. The high computational complexity of large Transformer-based architectures [73,75] and iterative planning algorithms can lead to inference latencies that are prohibitive under practical driving conditions. Future research must therefore prioritize the development of lightweight and efficient WMs tailored for edge deployment [46]. This involves exploring model compression techniques such as quantization, pruning, and knowledge distillation to shrink large models without significant performance degradation; architectures like JEPA [44,79], which avoid expensive pixel-level reconstruction, represent a promising direction in this regard. Furthermore, hardware–software co-design, which optimizes WM algorithms for specific in-vehicle hardware accelerators (e.g., GPUs, TPUs, and FPGAs) [28], is crucial for maximizing throughput and minimizing latency. Investigating hybrid computing paradigms that divide tasks among in-vehicle, edge, and cloud computing [1] is another key prospect. For instance, latency-critical tasks could be handled by a lightweight WM on the vehicle, while more computationally intensive tasks are offloaded to the edge or cloud. Clarifying which of the reviewed models suit each paradigm remains an important follow-up task.

- (D) Memory Enhancement for Long-Term Tasks: In complex and dynamic real-world environments, constructing World Models that can effectively integrate and use long-term memory to maintain coherent reasoning and stable decision-making is a formidable challenge in the CAV domain [97]. Although Transformer architectures have made progress in processing long sequences, they remain limited in achieving truly scalable, human-like long-term memory integration, which often leads to “context forgetting” and “recurrent repetition” issues. Future research needs to explore more efficient and scalable memory mechanisms, such as hierarchical memory structures, external memory modules, and sparse attention mechanisms. Training methods also require continued refinement, including contrastive learning, memory-augmented learning, continual learning, and memory replay strategies, to improve the stability of the model’s long-term memory. Furthermore, effective context management strategies (e.g., sliding windows, memory compression, and information retrieval) are crucial for the fine-grained management and filtering of long-term information under limited computational resources.
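As a rough illustration of the physical-constraint idea in item (B), the sketch below adds a kinematic-consistency penalty to an ordinary prediction loss. It is a soft-constraint penalty in the spirit of physics-informed training, not a full PINN, and it assumes a one-dimensional trajectory with constant acceleration between uniformly spaced steps. The function names, the trajectory layout, and the `weight` parameter are hypothetical choices made only for this example.

```python
def kinematic_residual(traj, dt):
    """Mean squared violation of constant-acceleration kinematics.

    traj: list of (position, velocity, acceleration) tuples sampled every dt seconds.
    Position and velocity errors are summed without unit scaling, which is
    acceptable for an illustration but would need weighting in practice.
    """
    total = 0.0
    for (x0, v0, a0), (x1, v1, _) in zip(traj, traj[1:]):
        # State that Newtonian kinematics predicts for the next step.
        x_expected = x0 + v0 * dt + 0.5 * a0 * dt ** 2
        v_expected = v0 + a0 * dt
        total += (x1 - x_expected) ** 2 + (v1 - v_expected) ** 2
    return total / max(len(traj) - 1, 1)


def physics_regularized_loss(pred, target, dt, weight=1.0):
    """Data term (position MSE) plus a weighted physics-consistency penalty."""
    data_term = sum((p[0] - t[0]) ** 2 for p, t in zip(pred, target)) / len(pred)
    return data_term + weight * kinematic_residual(pred, dt)
```

A trajectory that obeys the kinematic equations incurs no penalty, so the extra term only pushes the model away from physically inconsistent predictions; how strongly it does so is controlled by the assumed `weight`.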

4.2. Ethical and Safety Challenges

- (A) Responsibility Attribution and Explainability of Cooperative Decision Making and Control: Connected Autonomous Vehicle (CAV) systems are complex systems characterized by deep multi-agent cooperation (involving vehicles, roadside infrastructure, cloud platforms, communication networks, and other traffic participants). The traditional framework for single-vehicle liability attribution is therefore difficult to apply [98]. In the event of an accident, responsibility may be spread across multiple parties and factors, making attribution exceptionally complex and ambiguous. Furthermore, the decision-making processes driven by World Models often lack transparency and interpretability, which makes accountability even harder to trace. Future research should integrate Explainable Artificial Intelligence (XAI) techniques into World Model design, using attention mechanisms, visualization techniques, and causal inference models to enhance the transparency and interpretability of the model’s decision-making process. It is also necessary to establish liability attribution mechanisms and legal regulations tailored to the characteristics of CAVs, clearly defining the responsibilities and obligations of all participating entities.

- (B) Data Privacy Protection and Information Security in Connected Automated Driving: The effective operation of CAVs relies heavily on large-scale data collection and real-time information exchange. This inevitably involves vast amounts of sensitive user information (e.g., vehicle location, driving trajectories, driving habits, and personal identifiers). Data breaches or misuse would pose severe threats, leading to significant ethical and social issues [99]. Future research must prioritize data privacy protection, exploring and applying advanced techniques such as federated learning, differential privacy, and homomorphic encryption to enable data sharing and value extraction within a secure and trustworthy environment. It is equally imperative to establish data security management norms that clearly define permissions, procedures, and responsible entities for data collection, storage, transmission, and usage, while strengthening cybersecurity defenses to counter increasingly severe cyber threats and ensure the stable and reliable operation of the system.
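To illustrate the federated learning idea mentioned in item (B), the sketch below shows sample-weighted parameter averaging, the aggregation step commonly known as federated averaging. It assumes each vehicle or roadside unit trains locally and shares only a flat list of model parameters plus its local sample count; the function name and data layout are assumptions made for this example. Averaging alone is not a privacy guarantee, since shared parameters can still leak information, which is why it is usually discussed alongside techniques such as differential privacy.

```python
def federated_average(client_weights, client_sizes):
    """Aggregate client parameter vectors, weighting each by its sample count.

    client_weights: list of equal-length lists of floats, one per client.
    client_sizes:   number of local training samples held by each client.
    Raw driving data never leaves the clients; only parameters are combined.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]
```

For example, `federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])` weights the second client three times as heavily as the first.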

4.3. Future Perspectives

- For Level 3 (Conditional Automation), WMs are crucial for robustly predicting scenarios that may require human takeover, quantifying uncertainty, and ensuring safe transitions of control. The primary challenge lies in the model’s reliability and its ability to accurately assess its own confidence.

- For Level 4 (High Automation), where the vehicle operates autonomously within a defined Operational Design Domain (ODD), WMs must achieve a profound and robust understanding of all possible scenarios within that domain. The challenges shift towards mastering long-tail edge cases and ensuring long-term operational safety without human oversight.

- For Level 5 (Full Automation), WMs face the ultimate challenge of generalization. They must possess common sense reasoning and a deep understanding of physical laws to handle entirely novel situations, making the challenges of physical law mapping and long-term memory discussed previously paramount.
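The Level 3 requirement that a model assess its own confidence can be illustrated with one simple and widely used proxy: disagreement among an ensemble of predictors. The sketch below assumes several independently trained world models each predict the same scalar quantity (for example, the gap to a lead vehicle a few seconds ahead) and requests a takeover when their spread exceeds a threshold. The function names and the threshold are hypothetical, and ensemble spread is only one of several possible uncertainty estimates.

```python
from statistics import pstdev


def ensemble_disagreement(predictions):
    """Population standard deviation of one predicted quantity across ensemble members."""
    return pstdev(predictions)


def should_request_takeover(predictions, threshold):
    """Flag a human takeover request when the ensemble members disagree too much.

    threshold is in the same units as the predictions and would need to be
    calibrated on validation data; it is an assumed tuning parameter here.
    """
    return ensemble_disagreement(predictions) > threshold
```

A deployed system would also need to issue the request early enough for the driver to respond, which this sketch does not model.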

- (A) Deep Integration of World Models with Novel Roadside Sensing Devices: With the deployment of new roadside sensing devices such as high-precision millimeter-wave radar, high-resolution LiDAR, and hyperspectral imagers, research should examine how World Models can be deeply integrated with these devices. This integration should span the data, feature, and decision layers, constructing more powerful and comprehensive cooperative perception systems [1]. WMs can leverage their multi-modal information fusion capabilities to achieve precise spatiotemporal alignment, efficient fusion, and deep semantic understanding of multi-source heterogeneous perceptual data, significantly enhancing the accuracy, range, and robustness of environmental perception. Furthermore, WMs are expected to enable intelligent control and optimized configuration of roadside sensing devices, leading to more efficient and intelligent cooperative perception. Crucially, this integration must also consider the computational load on roadside units, necessitating the deployment of latency-aware WMs optimized for edge processing.

- (B) World Model-Driven Globally Optimized Control for Connected Automated Driving: Future CAV systems will shift from single-vehicle intelligence towards globally optimized control, with the ultimate goal of significantly improving the operational efficiency and safety of the entire transportation system [100]. The strong prediction and decision-making capabilities of WMs make them central to achieving global optimization. Research should explore how to use WMs to predict the future evolution of traffic flow (e.g., traffic volume, congestion status, and bottleneck locations). Based on these predictions, global optimization strategies such as adaptive traffic signal timing, dynamic lane assignment, and fine-grained highway ramp metering can be realized. Additionally, further research can investigate World Model-based Distributed Model Predictive Control (DMPC) to enable vehicles to actively consider the overall efficiency of the transportation system while satisfying their own demands.

- (C) Construction of World Model-Based CAV Simulation Platforms and Standards: High-quality, standardized simulation platforms are the cornerstone of innovation in CAV technology research, development, and application. The capabilities of WMs in scenario generation, data simulation, and multi-agent interaction modeling are expected to drive the construction of a new generation of more realistic, diverse, and scalable CAV simulation platforms [101]. At the same time, active participation in the formulation and refinement of relevant international and national standards is crucial to promote the standardization of simulation platforms and test data, thereby accelerating technology maturity and deployment.

5. Conclusions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CAVs | Connected Autonomous Vehicles |
| V2X | Vehicle-to-Everything |
| WM | World Model |
| ITS | Intelligent Transportation System |
| JEPA | Joint-Embedding Predictive Architecture |
| LSTM | Long Short-Term Memory |
| MLLM | Multimodal Large Language Model |
| CNN | Convolutional Neural Network |
| GNN | Graph Neural Network |
| RNN | Recurrent Neural Network |
| RSSM | Recurrent State Space Model |
| RL | Reinforcement Learning |
| VAE | Variational Autoencoder |

References

- Yi, X.; Rui, Y.; Ran, B.; Luo, K.; Sun, H. Vehicle–Infrastructure Cooperative Sensing: Progress and Prospect. Strateg. Study Chin. Acad. Eng. 2024, 26, 178–189.

- Li, X. Comparative analysis of LTE-V2X and 5G-V2X (NR). Inf. Commun. Technol. Policy 2020, 46, 93.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access 2020, 8, 58443–58469.

- Wiseman, Y. Autonomous vehicles. In Encyclopedia of Information Science and Technology, 5th ed.; Khosrow-Pour, M., Ed.; IGI Global: Hershey, PA, USA, 2020; pp. 1–11.

- Wang, F.; Wang, Y. Digital scientists and parallel sciences: The origin and goal of AI for science and science for AI. Bull. Chin. Acad. Sci. 2024, 39, 27–33.

- Li, Y.; Li, M. Anomaly detection of wind turbines based on deep small-world neural network. Power Gener. Technol. 2021, 42, 313.

- Feng, T.; Wang, W.; Yang, Y. A survey of world models for autonomous driving. arXiv 2025, arXiv:2501.11260.

- Tu, S.; Zhou, X.; Liang, D.; Jiang, X.; Zhang, Y.; Li, X.; Bai, X. The role of world models in shaping autonomous driving: A comprehensive survey. arXiv 2025, arXiv:2502.10498.

- Gao, S.; Yang, J.; Chen, L.; Chitta, K.; Qiu, Y.; Geiger, A.; Zhang, J.; Li, H. Vista: A generalizable driving world model with high fidelity and versatile controllability. arXiv 2024, arXiv:2405.17398.

- Luo, J.; Zhang, T.; Hao, R.; Li, D.; Chen, C.; Na, Z.; Zhang, Q. Real-time cooperative vehicle coordination at unsignalized road intersections. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5390–5405.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. A brief survey of deep reinforcement learning. arXiv 2017, arXiv:1708.05866.

- Cimini, G.; Squartini, T.; Saracco, F.; Garlaschelli, D.; Gabrielli, A.; Caldarelli, G. The statistical physics of real-world networks. Nat. Rev. Phys. 2019, 1, 58–71.

- Bieker, L.; Krajzewicz, D.; Morra, A.; Michelacci, C.; Cartolano, F. Traffic simulation for all: A real world traffic scenario from the city of Bologna. In Modeling Mobility with Open Data: 2nd SUMO Conference 2014 Berlin, Germany, May 15–16, 2014; Springer International Publishing: Cham, Switzerland, 2015; pp. 47–60.

- Zhang, L.; Xie, Y.; Xidao, L.; Zhang, X. Multi-source heterogeneous data fusion. In Proceedings of the 2018 International Conference on Artificial Intelligence and Big Data (ICAIBD), Chengdu, China, 26–28 May 2018; IEEE: New York, NY, USA, 2018; pp. 47–51.

- Zhang, H.; Wang, Z.; Lyu, Q.; Zhang, Z.; Chen, S.; Shu, T.; Dariush, B.; Lee, K.; Du, Y.; Gan, C. COMBO: Compositional world models for embodied multi-agent cooperation. arXiv 2024, arXiv:2404.10775.

- Bergies, S.; Aljohani, T.M.; Su, S.F.; Elsisi, M. An IoT-based deep-learning architecture to secure automated electric vehicles against cyberattacks and data loss. IEEE Trans. Syst. Man Cybern. Syst. 2024, 54, 5717–5732.

- Samak, T.; Samak, C.; Kandhasamy, S.; Krovi, V.; Xie, M. AutoDRIVE: A comprehensive, flexible and integrated digital twin ecosystem for autonomous driving research & education. Robotics 2023, 12, 77.

- Munn, Z.; Barker, T.H.; Moola, S.; Tufanaru, C.; Stern, C.; McArthur, A.; Stephenson, M.; Aromataris, E. Methodological quality of case series studies: An introduction to the JBI critical appraisal tool. JBI Evid. Synth. 2020, 18, 2127–2133.

- Wang, F.; Miao, Q. Novel paradigm for AI-driven scientific research: From AI4S to intelligent science. Bull. Chin. Acad. Sci. 2023, 38, 536–540.

- Ding, J.; Zhang, Y.; Shang, Y.; Zhang, Y.H.; Zong, Z.; Feng, J.; Yuan, Y.; Su, H.; Li, N.; Sukiennik, N.; et al. Understanding World or Predicting Future? A Comprehensive Survey of World Models. arXiv 2024, arXiv:2411.14499.

- Zhu, Z.; Wang, X.; Zhao, W.; Min, C.; Deng, N.; Dou, M.; Wang, Y.; Shi, B.; Wang, K.; Zhang, C.; et al. Is Sora a world simulator? A comprehensive survey on general world models and beyond. arXiv 2024, arXiv:2405.03520.

- Wan, Y.; Zhong, Y.; Ma, A.; Wang, J.; Feng, R. RSSM-Net: Remote sensing image scene classification based on multi-objective neural architecture search. In Proceedings of the IGARSS 2020-2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; IEEE: New York, NY, USA, 2021; pp. 1369–1372.

- Littwin, E.; Saremi, O.; Advani, M.; Thilak, V.; Nakkiran, P.; Huang, C.; Susskind, J. How JEPA avoids noisy features: The implicit bias of deep linear self distillation networks. arXiv 2024, arXiv:2407.03475.

- Imran, A.; Gopalakrishnan, K. Reinforcement Learning and Control. In AI for Robotics: Toward Embodied and General Intelligence in the Physical World; Apress: Berkeley, CA, USA, 2025; pp. 311–352.

- Sze, V.; Chen, Y.H.; Yang, T.J.; Emer, J.S. Efficient processing of deep neural networks: A tutorial and survey. Proc. IEEE 2017, 105, 2295–2329.

- Hafner, D.; Lillicrap, T.; Norouzi, M.; Ba, J. Mastering Atari with discrete world models. arXiv 2020, arXiv:2010.02193.

- Hafner, D.; Pasukonis, J.; Ba, J.; Lillicrap, T. Mastering diverse domains through world models. arXiv 2023, arXiv:2301.04104.

- Li, K.; Wu, L.; Qi, Q.; Liu, W.; Gao, X.; Zhou, L.; Song, D. Beyond single reference for training: Underwater image enhancement via comparative learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 2561–2576.

- An, Y.; Yu, F.R.; He, Y.; Li, J.; Chen, J.; Leung, V.C. A Deep Learning System for Detecting IoT Web Attacks With a Joint Embedded Prediction Architecture (JEPA). IEEE Trans. Netw. Serv. Manag. 2024, 21, 6885–6898.

- Wang, Y.; He, J.; Fan, L.; Li, H.; Chen, Y.; Zhang, Z. Driving into the future: Multiview visual forecasting and planning with world model for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 14749–14759.

- Li, Q.; Jia, X.; Wang, S.; Yan, J. Think2Drive: Efficient Reinforcement Learning by Thinking with Latent World Model for Autonomous Driving (in CARLA-V2). In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 142–158.

- Bogdoll, D.; Yang, Y.; Zöllner, J.M. Muvo: A multimodal generative world model for autonomous driving with geometric representations. arXiv 2023, arXiv:2311.11762.

- Huang, W.; Ji, J.; Xia, C.; Zhang, B.; Yang, Y. Safedreamer: Safe reinforcement learning with world models. arXiv 2023, arXiv:2307.07176.

- Wang, X.; Zhu, Z.; Huang, G.; Chen, X.; Zhu, J.; Lu, J. DriveDreamer: Towards Real-World-Drive World Models for Autonomous Driving. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 55–72.

- Zhang, Z.; Liniger, A.; Dai, D.; Yu, F.; Van Gool, L. Trafficbots: Towards world models for autonomous driving simulation and motion prediction. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: New York, NY, USA, 2023; pp. 1522–1529.

- Hu, A.; Russell, L.; Yeo, H.; Murez, Z.; Fedoseev, G.; Kendall, A.; Shotton, J.; Corrado, G. Gaia-1: A generative world model for autonomous driving. arXiv 2023, arXiv:2309.17080.

- Jia, F.; Mao, W.; Liu, Y.; Zhao, Y.; Wen, Y.; Zhang, C.; Zhang, X.; Wang, T. Adriver-i: A general world model for autonomous driving. arXiv 2023, arXiv:2311.13549.

- Bruce, J.; Dennis, M.D.; Edwards, A.; Parker-Holder, J.; Shi, Y.; Hughes, E.; Lai, M.; Mavalankar, A.; Steigerwald, R.; Apps, C.; et al. Genie: Generative interactive environments. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 24–27 July 2024.

- Wang, X.; Zhu, Z.; Huang, G.; Wang, B.; Chen, X.; Lu, J. Worlddreamer: Towards general world models for video generation via predicting masked tokens. arXiv 2024, arXiv:2401.09985.

- Song, K.; Chen, B.; Simchowitz, M.; Du, Y.; Tedrake, R.; Sitzmann, V. History-Guided Video Diffusion. arXiv 2025, arXiv:2502.06764.

- Garrido, Q.; Ballas, N.; Assran, M.; Bardes, A.; Najman, L.; Rabbat, M.; Dupoux, E.; LeCun, Y. Intuitive physics understanding emerges from self-supervised pretraining on natural videos. arXiv 2025, arXiv:2502.11831.

- Ren, X.; Xu, L.; Xia, L.; Wang, S.; Yin, D.; Huang, C. VideoRAG: Retrieval-Augmented Generation with Extreme Long-Context Videos. arXiv 2025, arXiv:2502.01549.

- Zhang, X.; Weng, X.; Yue, Y.; Fan, Z.; Wu, W.; Huang, L. TinyLLaVA-Video: A Simple Framework of Small-scale Large Multimodal Models for Video Understanding. arXiv 2025, arXiv:2501.15513.

- Tanveer, M.; Zhou, Y.; Niklaus, S.; Amiri, A.M.; Zhang, H.; Singh, K.K.; Zhao, N. MotionBridge: Dynamic Video Inbetweening with Flexible Controls. arXiv 2024, arXiv:2412.13190.

- Xie, H.; Chen, Z.; Hong, F.; Liu, Z. Citydreamer: Compositional generative model of unbounded 3D cities. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 9666–9675.

- Huang, S.; Chen, L.; Zhou, P.; Chen, S.; Jiang, Z.; Hu, Y.; Liao, Y.; Gao, P.; Li, H.; Yao, M.; et al. Enerverse: Envisioning embodied future space for robotics manipulation. arXiv 2025, arXiv:2501.01895.

- Zhai, S.; Ye, Z.; Liu, J.; Xie, W.; Hu, J.; Peng, Z.; Xue, H.; Chen, D.P.; Wang, X.M.; Yang, L.; et al. StarGen: A Spatiotemporal Autoregression Framework with Video Diffusion Model for Scalable and Controllable Scene Generation. arXiv 2025, arXiv:2501.05763.

- Ge, J.; Chen, Z.; Lin, J.; Zhu, J.; Liu, X.; Dai, J.; Zhu, X. V2PE: Improving Multimodal Long-Context Capability of Vision-Language Models with Variable Visual Position Encoding. arXiv 2024, arXiv:2412.09616.

- Bahmani, S.; Skorokhodov, I.; Qian, G.; Siarohin, A.; Menapace, W.; Tagliasacchi, A.; Lindell, D.B.; Tulyakov, S. AC3D: Analyzing and Improving 3D Camera Control in Video Diffusion Transformers. arXiv 2024, arXiv:2411.18673. [Google Scholar] [CrossRef]

- Liang, H.; Cao, J.; Goel, V.; Qian, G.; Korolev, S.; Terzopoulos, D.; Plataniotis, K.N.; Tulyakov, S.; Ren, J. Wonderland: Navigating 3D Scenes from a Single Image. arXiv 2024, arXiv:2412.12091. [Google Scholar] [CrossRef]

- Xing, Y.; Fei, Y.; He, Y.; Chen, J.; Xie, J.; Chi, X.; Chen, Q. Large Motion Video Autoencoding with Cross-modal Video VAE. arXiv 2024, arXiv:2412.17805. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, Z.; Zhang, H.; Zhou, Y.; Kim, S.Y.; Liu, Q.; Li, Y.J.; Zhang, J.M.; Zhao, N.X.; Wang, Y.L.; et al. UniReal: Universal Image Generation and Editing via Learning Real-world Dynamics. arXiv 2024, arXiv:2412.07774. [Google Scholar]

- Li, L.; Zhang, H.; Zhang, X.; Zhu, S.; Yu, Y.; Zhao, J.; Heng, P.A. Towards an information theoretic framework of context-based offline meta-reinforcement learning. arXiv 2024, arXiv:2402.02429. [Google Scholar]

- Huang, Z.; Guo, Y.C.; Wang, H.; Yi, R.; Ma, L.; Cao, Y.P.; Sheng, L. Mv-adapter: Multi-view consistent image generation made easy. arXiv 2024, arXiv:2412.03632. [Google Scholar]

- Ni, C.; Zhao, G.; Wang, X.; Zhu, Z.; Qin, W.; Huang, G.; Liu, C.; Chen, Y.; Wang, W.; Zhang, X.; et al. ReconDreamer: Crafting World Models for Driving Scene Reconstruction via Online Restoration. arXiv 2024, arXiv:2411.19548. [Google Scholar] [CrossRef]

- Lin, Z.; Liu, W.; Chen, C.; Lu, J.; Hu, W.; Fu, T.J.; Allardice, J.; Lai, Z.F.; Song, L.C.; Zhang, B.W.; et al. STIV: Scalable Text and Image Conditioned Video Generation. arXiv 2024, arXiv:2412.07730. [Google Scholar] [CrossRef]

- Wang, Q.; Fan, L.; Wang, Y.; Chen, Y.; Zhang, Z. FreeVS: Generative view synthesis on free driving trajectory. arXiv 2024, arXiv:2410.18079.

- Cai, S.; Wang, Z.; Lian, K.; Mu, Z.; Ma, X.; Liu, A.; Liang, Y. ROCKET-1: Mastering open-world interaction with visual-temporal context prompting. arXiv 2024, arXiv:2410.17856.

- Fan, Y.; Ma, X.; Wu, R.; Du, Y.; Li, J.; Gao, Z.; Li, Q. VideoAgent: A memory-augmented multimodal agent for video understanding. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 75–92.

- Feng, J.; Ma, A.; Wang, J.; Cheng, B.; Liang, X.; Leng, D.; Yin, Y. FancyVideo: Towards dynamic and consistent video generation via cross-frame textual guidance. arXiv 2024, arXiv:2408.08189.

- Yuan, Z.; Liu, Y.; Cao, Y.; Sun, W.; Jia, H.; Chen, R.; Li, Z.; Lin, B.; Yuan, L.; He, L.; et al. Mora: Enabling generalist video generation via a multi-agent framework. arXiv 2024, arXiv:2403.13248.

- Song, Z.; Wang, C.; Sheng, J.; Zhang, C.; Yu, G.; Fan, J.; Chen, T. MovieLLM: Enhancing long video understanding with AI-generated movies. arXiv 2024, arXiv:2403.01422.

- Deng, F.; Park, J.; Ahn, S. Facing off world model backbones: RNNs, Transformers, and S4. Adv. Neural Inf. Process. Syst. 2023, 36, 72904–72930.

- Ma, Y.; Fan, Y.; Ji, J.; Wang, H.; Sun, X.; Jiang, G.; Shu, A.; Ji, R. X-Dreamer: Creating high-quality 3D content by bridging the domain gap between text-to-2D and text-to-3D generation. arXiv 2023, arXiv:2312.00085.

- Zheng, W.; Chen, W.; Huang, Y.; Zhang, B.; Duan, Y.; Lu, J. OccWorld: Learning a 3D occupancy world model for autonomous driving. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 55–72.

- Bardes, A.; Garrido, Q.; Ponce, J.; Chen, X.; Rabbat, M.; LeCun, Y.; Assran, M.; Ballas, N. Revisiting feature prediction for learning visual representations from video. arXiv 2024, arXiv:2404.08471.

- Cai, S.; Chan, E.R.; Peng, S.; Shahbazi, M.; Obukhov, A.; Van Gool, L.; Wetzstein, G. DiffDreamer: Towards consistent unsupervised single-view scene extrapolation with conditional diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 2139–2150.

- Lu, H.; Yang, G.; Fei, N.; Huo, Y.; Lu, Z.; Luo, P.; Ding, M. VDT: General-purpose video diffusion transformers via mask modeling. arXiv 2023, arXiv:2305.13311.

- Mendonca, R.; Bahl, S.; Pathak, D. Structured world models from human videos. arXiv 2023, arXiv:2308.10901.

- Robine, J.; Höftmann, M.; Uelwer, T.; Harmeling, S. Transformer-based world models are happy with 100k interactions. arXiv 2023, arXiv:2303.07109.

- Ma, H.; Wu, J.; Feng, N.; Xiao, C.; Li, D.; Hao, J.; Wang, J.; Long, M. HarmonyDream: Task harmonization inside world models. arXiv 2023, arXiv:2310.00344.

- Zhang, W.; Wang, G.; Sun, J.; Yuan, Y.; Huang, G. STORM: Efficient stochastic transformer based world models for reinforcement learning. Adv. Neural Inf. Process. Syst. 2023, 36, 27147–27166.

- Min, C.; Zhao, D.; Xiao, L.; Yazgan, M.; Zollner, J.M. UniWorld: Autonomous driving pre-training via world models. arXiv 2023, arXiv:2308.07234.

- Wu, P.; Escontrela, A.; Hafner, D.; Abbeel, P.; Goldberg, K. DayDreamer: World models for physical robot learning. In Proceedings of the Conference on Robot Learning, Atlanta, GA, USA, 6–9 November 2023; PMLR: Cambridge, UK, 2023; pp. 2226–2240.

- Gu, J.; Wang, S.; Zhao, H.; Lu, T.; Zhang, X.; Wu, Z.; Xu, S.; Zhang, W.; Jiang, W.G.; Xu, H. Reuse and diffuse: Iterative denoising for text-to-video generation. arXiv 2023, arXiv:2309.03549.

- Bardes, A.; Ponce, J.; LeCun, Y. MC-JEPA: A joint-embedding predictive architecture for self-supervised learning of motion and content features. arXiv 2023, arXiv:2307.12698.

- Fei, Z.; Fan, M.; Huang, J. A-JEPA: Joint-Embedding Predictive Architecture Can Listen. arXiv 2024, arXiv:2311.15830.

- Assran, M.; Duval, Q.; Misra, I.; Bojanowski, P.; Vincent, P.; Rabbat, M.; LeCun, Y.; Ballas, N. Self-supervised learning from images with a joint-embedding predictive architecture. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 15619–15629.

- Okada, M.; Taniguchi, T. DreamingV2: Reinforcement learning with discrete world models without reconstruction. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: New York, NY, USA, 2022; pp. 985–991.

- Deng, F.; Jang, I.; Ahn, S. DreamerPro: Reconstruction-free model-based reinforcement learning with prototypical representations. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; PMLR: Cambridge, UK, 2022; pp. 4956–4975.

- Micheli, V.; Alonso, E.; Fleuret, F. Transformers are sample-efficient world models. arXiv 2022, arXiv:2209.00588.

- Chen, C.; Wu, Y.F.; Yoon, J.; Ahn, S. TransDreamer: Reinforcement learning with transformer world models. arXiv 2022, arXiv:2202.09481.

- Hu, A.; Corrado, G.; Griffiths, N.; Murez, Z.; Gurau, C.; Yeo, H.; Kendall, A.; Cipolla, R.; Shotton, J. Model-based imitation learning for urban driving. Adv. Neural Inf. Process. Syst. 2022, 35, 20703–20716.

- Gao, Z.; Mu, Y.; Shen, R.; Duan, J.; Luo, P.; Lu, Y.; Li, S.E. Enhance sample efficiency and robustness of end-to-end urban autonomous driving via semantic masked world model. arXiv 2022, arXiv:2210.04017.

- Ye, W.; Liu, S.; Kurutach, T.; Abbeel, P.; Gao, Y. Mastering Atari games with limited data. Adv. Neural Inf. Process. Syst. 2021, 34, 25476–25488.

- Koh, J.Y.; Lee, H.; Yang, Y.; Baldridge, J.; Anderson, P. Pathdreamer: A world model for indoor navigation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 14738–14748.

- Kim, K.; Sano, M.; De Freitas, J.; Haber, N.; Yamins, D. Active world model learning with progress curiosity. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 13–18 July 2020; PMLR: Cambridge, UK, 2020; pp. 5306–5315.

- Hafner, D.; Lillicrap, T.; Fischer, I.; Villegas, R.; Ha, D.; Lee, H.; Davidson, J. Learning latent dynamics for planning from pixels. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; PMLR: Cambridge, UK, 2019; pp. 2555–2565.

- Wang, X.; Yang, X.; Jia, X.; Wang, S. Modeling and analysis of hybrid traffic flow considering actual behavior of platoon. J. Syst. Simul. 2024, 36, 929–940.

- Zhang, Y.; Zhang, L.; Liu, B.L.; Liang, Z.Z.; Zhang, X.F. Multi-spatial scale traffic prediction model based on spatio-temporal Transformer. Comput. Eng. Sci. 2024, 46, 1852.

- Huang, Y.; Zhang, S.; Lin, Y.; Zhen, F.; Zhao, S.S.; Li, L. Ideas and practices of city-level territorial spatial planning monitoring: A case study of Ningbo. J. Nat. Resour. 2024, 39, 823–841.

- Zhao, J.; Ni, Q.; Li, Y. Exploration on Construction of Intelligent Water Conservancy Teaching Platform in Colleges and Universities Based on Digital Twin. J. Yellow River Conserv. Tech. Inst. 2024, 36, 76–80.

- Li, G. AI4R: The fifth scientific research paradigm. Bull. Chin. Acad. Sci. 2024, 39, 1–9.

- Ye, Y.; Xu, Y.; Zhang, Z.; Hu, L.; Xia, S. Recent Advances on Motion Control Policy Learning for Humanoid Characters. J. Comput.-Aided Des. Comput. Graph. 2025, 37, 185–206.

- Wang, X.; Tan, G. Research on Decision-making of Autonomous Driving in Highway Environment Based on Knowledge and Large Language Model. J. Syst. Simul. 2025, 37, 1246.

- Yang, L.; Yuan, T.; Duan, R.; Li, Z. Research on the optimization strategy of the content agenda setting of artificial intelligence in the WeChat official account of scientific journals. Chin. J. Sci. Tech. Period. 2025, 36, 611.

- Yuan, Z.; Gu, J.; Wang, G. Exploration and practice of urban intelligent pan-info surveying and mapping in Shanghai. Bull. Surv. Mapp. 2024, 4, 168.

- Ren, A.; Li, S. Design of DRL timing simulation system for signal intersection integrating VISSIM-Python and Qt. Comput. Appl. Softw. 2024, 41, 53–59.

| Year | Model Name | Input Modalities | Core Structure | Key Contributions for V2X Scenarios |
|---|---|---|---|---|
| 2025 | DFoT [43] | Video | Diffusion Transformer | Generates high-quality, temporally consistent, and robust CAV simulation scenarios, including rare events. |
| 2025 | V-JEPA [44] | Natural Video, Pixel Data | JEPA | Provides a lightweight, data-driven paradigm for WM construction, enhancing representation learning and prediction efficiency. |
| 2025 | VideoRAG [45] | Video, Audio, and Text | RAG | Introduces retrieval-augmented generation for ultra-long videos, crucial for long-term CAV scene prediction. |
| 2025 | TinyLLaVA-Video [46] | Video, Text | Transformer | Enables deployment of high-performance video understanding models on resource-constrained in-vehicle edge platforms. |
| 2025 | MotionBridge [47] | Trajectory Stroke, Pixel, and Text | Diffusion Transformer | Offers a novel approach for high-fidelity traffic flow video simulation in CAVs. |
| 2025 | CityDreamer4D [48] | Image, Text | VQVAE, Transformer, GAN | Generates large-scale, high-fidelity 4D city scenes for complex urban CAV simulations. |
| 2025 | EnerVerse [49] | Image, Text, and Action | FAVs, Diffusion, and 4DGS | Proposes new insights for modeling complex, dynamic scenes and collaborative perception in multi-agent systems. |
| 2025 | StarGen [50] | Image, Text, and Pose Trajectory | Diffusion | Provides a spatiotemporal autoregressive generation framework with high-precision pose control for large-scale, high-fidelity traffic flow simulations. |
| 2024 | V2PE [51] | Image, Text | V2PE-enhanced | Enhances understanding of complex CAV scenarios and human–machine interaction through efficient multi-modal data processing. |
| 2024 | AC3D [52] | Text, Video | Diffusion Transformer | Significant for dynamic urban scene generation and sensor view manipulation in CAVs. |
| 2024 | Wonderland [53] | Image | Diffusion Transformer, Latent Transformer | Enables rapid construction of high-fidelity, large-scale 3D traffic simulation environments from single images. |
| 2024 | Cross-modal Video VAE [54] | Video, Text | VAE | Presents a novel cross-modal video VAE, supporting bandwidth-limited V2X communication and efficient collaborative perception. |
| 2024 | UniReal [55] | Images, Text | Diffusion Transformer | Learns and constructs real-world dynamics from video data, providing more realistic and diverse approaches for traffic scene simulations. |
| 2024 | UNICORN Framework [56] | Trajectory Segment | Information Theoretic Meta-RL Framework | Enhances V2X system adaptability and reliability in complex, uncertain environments through task representation learning. |
| 2024 | MV-Adapter [57] | Text, Image, and Camera Parameter | Multi-view Adapter Model | Generates multi-view consistent, high-quality images, supporting HMC and multi-sensor fusion perception. |
| 2024 | ReconDreamer [58] | Video | Diffusion | Reduces artifacts and maintains spatiotemporal consistency, improving accuracy and robustness of V2X environmental perception. |
| 2024 | STIV [59] | Text, Image | Diffusion Transformer, VAE | Enhances environmental understanding and prediction in complex traffic. |
| 2024 | FreeVS [60] | Pseudo-Image | Diffusion, U-Net | Supports the development of more realistic, generalizable CAV simulation platforms, enhancing in-vehicle perception robustness in complex scenarios. |
| 2024 | ROCKET-1 [61] | Image | TransformerXL | Demonstrates decision-making potential in complex, dynamic environments, offering technical reference for future CAV systems. |
| 2024 | VideoAgent [62] | Video | VQVAE, Transformer | Offers insights into building modular and scalable CAV systems through its versatile toolkit approach. |
| 2024 | FancyVideo [63] | Text | CTGM, Transformer, and Latent Diffusion Model | Significantly improves temporal consistency and motion coherence in text-to-video generation. |
| 2024 | Genie [41] | Videos, Text, and Image | VQ-VAE, Transformer, and MaskGIT | Significantly advances real-world scene creation and human–machine co-driving R&D. |
| 2024 | MORA [64] | Text, Image | Diffusion | Provides valuable technical examples for CAV applications requiring diverse scenario generation or operating with limited real-world data. |
| 2024 | Think2Drive [34] | Image, Text | RSSM | Offers reference for efficient reinforcement learning decisions in CAV environments. |
| 2024 | MovieLLM [65] | Video | Diffusion | Provides new technical references and data augmentation strategies for CAV traffic scene simulations and human–machine co-driving. |
| 2024 | S4WM [66] | Image | S4/PSSM, VAE | Offers significant advantages in long-sequence traffic flow prediction and complex dynamic scene modeling for CAVs. |
| 2024 | WorldDreamer [42] | Image, Video, and Text | VQGAN, STPT Transformer | Provides technical paradigms for high-fidelity scene generation and multi-modal data fusion via its STPT architecture and masked token prediction. |
| 2024 | X-Dreamer [67] | Text | LoRA, Diffusion | Stimulates research into more robust and accurate virtual simulations for V2X systems. |
| 2023 | OccWorld [68] | Semantic Data | VQVAE, Transformer | Provides predictive data support for safer, more efficient collaborative decision-making and control in V2X systems. |
| 2023 | V-JEPA [69] | Video | JEPA | Offers theoretical support for V2X system deployment and application in data-constrained scenarios. |
| 2023 | MUVO [35] | Image, Text | Transformer, GRU | Greatly enhances perception, prediction, and decision-making capabilities in complex traffic scenarios. |
| 2023 | DiffDreamer [70] | Image | Diffusion | Delivers richer and more realistic virtual environments for the development, testing, and validation of V2X algorithms. |
| 2023 | VDT [71] | Image | Diffusion Transformer | Reduces model complexity and computational cost, facilitating deployment on resource-constrained in-vehicle and roadside units. |
| 2023 | SWIM [72] | Video | RSSM, VAE, and CNN | Provides insights into developing flexible and safe control strategies for V2X systems. |
| 2023 | SafeDreamer [36] | Image | RSSM | Offers technical support for dynamically adjusting V2X control strategies based on varying safety requirements. |
| 2023 | TWM [73] | Image | Transformer | Provides theoretical references for risk assessment and safety decision-making in V2X systems. |
| 2023 | HarmonyDream [74] | Image | RSSM | Enhances V2X system adaptability and collaborative decision-making in complex traffic scenarios. |
| 2023 | STORM [75] | Image | Transformer | Reduces reliance on manual annotation through self-supervised learning, improving V2X system stability and reliability in data-scarce environments. |
| 2023 | UniWorld [76] | Image | BEV, Transformer | Offers technical insights into constructing multi-functional, integrated perception models for V2X systems. |
| 2023 | DayDreamer [77] | Image, Text | RSSM | Provides a reference architecture for building scalable and customizable World Models for V2X systems. |
| 2023 | TrafficBots [38] | Text, Image | Transformer, GRU | Delivers robust tools for the development, validation, and deployment of V2X algorithms. |
| 2023 | VidRD [78] | Text, Video | Diffusion | Accelerates R&D and validation of V2X collaborative perception, prediction, decision-making, and control algorithms. |
| 2023 | MC-JEPA [79] | Image | JEPA | Improves performance of V2X target detection, scene understanding, and behavior prediction tasks. |
| 2023 | DreamerV3 [30] | Image | RSSM | Enhances V2X system stability and reliability in complex environments. |
| 2023 | Drive-WM [33] | Images, Text, and Action | U-Net, Transformer | Boosts the generalization capability of V2X systems in complex environments. |
| 2023 | A-JEPA [80] | Audio Spectrogram | JEPA | Provides technical support for various human–machine collaboration solutions in CAVs, including voice interaction and driver state recognition. |
| 2023 | ADriver-I [40] | Action, Image | MLLM, Diffusion | Potentially reduces CAV system reliance on roadside infrastructure, enhancing in-vehicle unit intelligence. |
| 2023 | I-JEPA [81] | Image | JEPA | Enables lightweight, high-performance edge computing vision perception models for CAV systems. |
| 2023 | GAIA-1 [39] | Video, Text, and Action | Diffusion Transformer | Offers technical support for more flexible and intelligent scene interactions and human–machine co-driving in CAV systems. |
| 2023 | DriveDreamer [37] | Image, Text, and Actions | Diffusion Transformer | Provides powerful tools for CAV simulations, data augmentation, and scene understanding, accelerating algorithm R&D and validation. |
| 2022 | DreamingV2 [82] | Image, Actions | RSSM | Holds significant potential for CAV perception, prediction, and decision-making tasks. |
| 2022 | DreamerPro [83] | Image, Scalar Reward | RSSM | Enhances environmental perception robustness and decision reliability of CAV systems under adverse conditions. |
| 2022 | IRIS [84] | Image, Action | VAE, Transformer | Offers solutions for low-latency, high-reliability collaborative perception and prediction. |
| 2022 | TransDreamer [85] | Visual Observation | Transformer | Greatly enhances perception, prediction, and decision-making capabilities in complex traffic scenarios. |
| 2022 | MILE [86] | Images, Actions | RSSM | Provides robust solutions for multi-modal fusion perception and long-tail scenario handling in CAVs. |
| 2022 | SEM2 [87] | Images, LiDAR | RSSM | Paves new theoretical pathways for developing more practical CAV decision-making and control algorithms. |
| 2021 | EfficientZero [88] | Video, Image | MuZero | Provides theoretical basis for realistic scene simulation and scene-understanding-based human–machine co-driving. |
| 2021 | Pathdreamer [89] | Image, Semantic Segmentation, and Camera Poses | GAN | Holds potential for CAV systems to efficiently learn and predict intentions and trajectories of key agents in complex traffic flows. |
| 2020 | AWML [90] | Object-Oriented Features | LSTM | Offers technical references for complex decision-making and control in resource-constrained in-vehicle platforms. |
| 2020 | DreamerV2 [29] | Pixels | RSSM | Offers valuable insights for robust perception, multi-agent interaction prediction, and collaborative planning. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, N.; Liu, X. Research on World Models for Connected Automated Driving: Advances, Challenges, and Outlook. Appl. Sci. 2025, 15, 8986. https://doi.org/10.3390/app15168986
