PAGCN: Structural Semantic Relationship and Attention Mechanism for Rumor Detection

Abstract

1. Introduction

1.1. Background

1.2. Research Motivation

1.3. Main Contributions

- (1) An attention mechanism is incorporated to identify critical propagation nodes and paths, allowing the model to more effectively learn the underlying propagation patterns of rumors. This design addresses the performance degradation commonly observed in recurrent networks as input size increases.

- (2) Key structural information within propagation paths is captured through the use of a GCN. By enhancing the modeling of relationships between nodes, this approach improves both the interpretability and accuracy of rumor propagation analysis.

- (3) A novel framework, PAGCN, is introduced for rumor detection. By integrating attention mechanisms with structural semantic relationship modeling, the framework leverages deep feature fusion and context-aware representation learning to improve the accuracy and robustness of rumor detection, particularly in noisy and dynamic environments.

- (4) Extensive experiments are conducted, including comparative analyses, ablation studies, and evaluations of early rumor detection performance based on varying numbers of retweets and different detection deadlines. The framework is benchmarked against 21 state-of-the-art baseline methods, demonstrating the effectiveness of the proposed PAGCN framework.
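
To make contributions (1) and (2) concrete, the following is a minimal sketch of one graph-convolution step followed by attention pooling over the nodes of a propagation tree. It is not the PAGCN implementation: the undirected toy tree, the identity weight matrix, the dot-product scoring, and the names `gcn_layer`, `attention_pool`, and `query` are all assumptions made for illustration. The convolution follows the standard symmetric-normalization rule of Kipf and Welling.

```python
import math

def gcn_layer(adj, feats, weight):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization of the adjacency matrix.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    in_dim, out_dim = len(weight), len(weight[0])
    # Aggregate neighbor features, then apply the linear map and ReLU.
    agg = [[sum(norm[i][k] * feats[k][d] for k in range(n)) for d in range(in_dim)]
           for i in range(n)]
    return [[max(0.0, sum(agg[i][d] * weight[d][o] for d in range(in_dim)))
             for o in range(out_dim)] for i in range(n)]

def attention_pool(node_vecs, query):
    """Softmax attention over nodes; returns (weights, pooled vector)."""
    scores = [sum(h * q for h, q in zip(vec, query)) for vec in node_vecs]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(node_vecs[0])
    pooled = [sum(w * vec[d] for w, vec in zip(weights, node_vecs)) for d in range(dim)]
    return weights, pooled

# Hypothetical toy tree: source post 0 has replies 1 and 2; post 3 replies to 1.
adj = [[0, 1, 1, 0],
       [1, 0, 0, 1],
       [1, 0, 0, 0],
       [0, 1, 0, 0]]
feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
weight = [[1.0, 0.0], [0.0, 1.0]]  # identity map, chosen only for readability

hidden = gcn_layer(adj, feats, weight)
weights, pooled = attention_pool(hidden, query=[1.0, 1.0])
print(weights)  # one attention weight per post
print(pooled)   # tree-level representation
```

The attention weights sum to one, so the pooled vector is a convex combination of node representations; nodes with larger weights dominate the tree-level representation, which is the sense in which attention can highlight critical propagation nodes.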

2. Related Work

2.1. Self-Attention Mechanism in Rumor Detection Methods

2.2. GNN-Based Rumor Detection Methods

2.3. System Comparison

3. Proposed Method

3.1. Problem Description

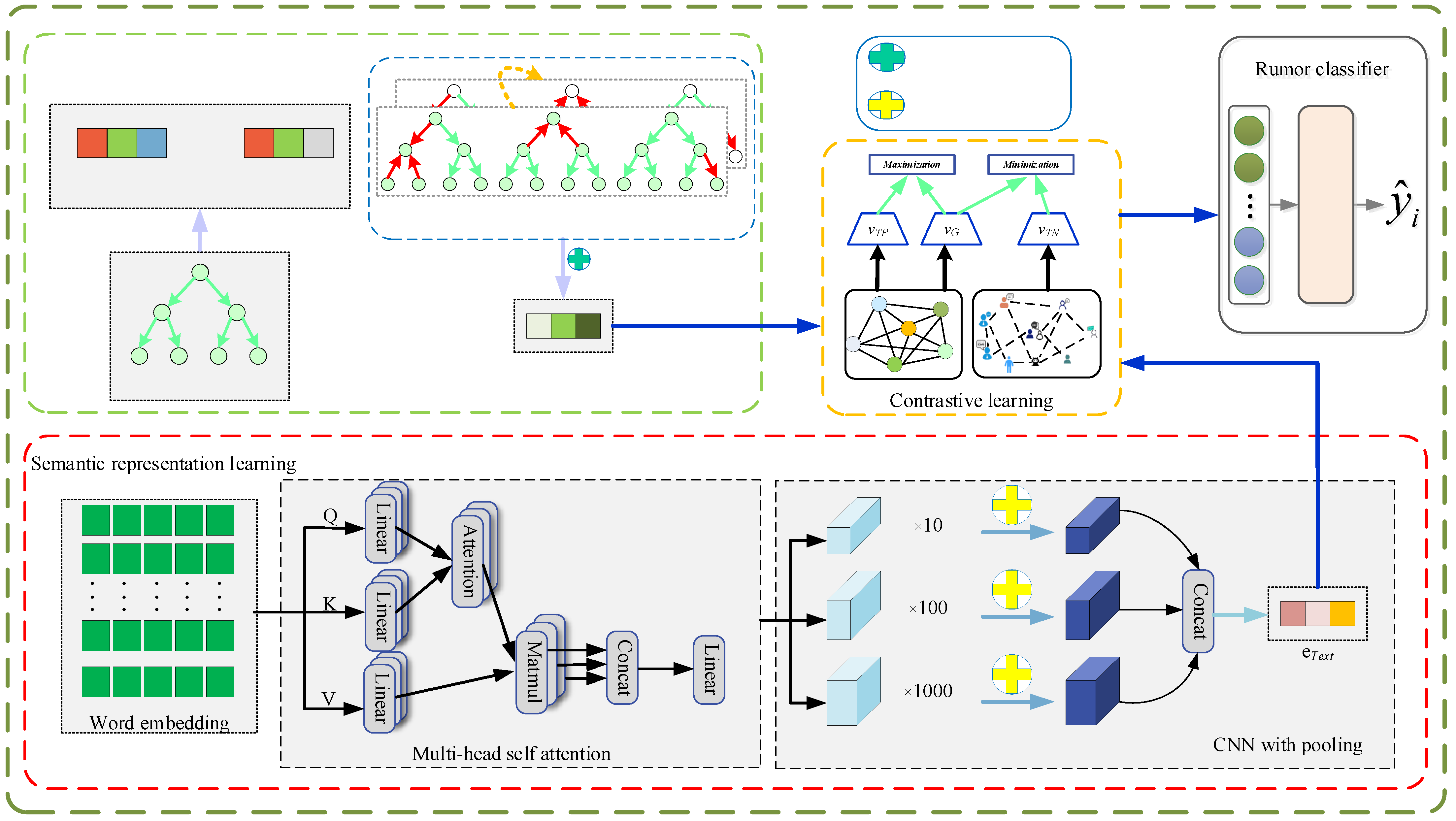

3.2. System Framework

3.2.1. Propagating Representation Learning

3.2.2. Sentence Semantic Learning

3.2.3. Incompatible Learning

Algorithm 1: Training procedure of PAGCN.

3.2.4. Classification of Rumors

4. Experiments and Analysis

- RQ1: How does PAGCN perform in comparison to individual baseline models in terms of overall effectiveness?

- RQ2: What are the contributions of components such as propagation nodes, the bidirectional Transformer model, and the path attention mechanism to PAGCN’s rumor detection capability?

- RQ3: How well does PAGCN perform in detecting rumors at early stages of propagation?

4.1. The Dataset

4.2. Baselines

- BERT [8]. BERT aims to pre-train a powerful language model through an unsupervised learning method, which can be transferred to various NLP tasks. Its key innovation lies in using bidirectional context information, and employing masked language modeling (MLM) and next sentence prediction (NSP) tasks during pre-training, significantly improving the performance across NLP tasks.

- STS-NN [24]. A spatial-temporal structure neural network that treats the spatial structure and the temporal structure of message propagation as a whole when learning rumor representations.

- EBGCN [20]. EBGCN is a graph neural network model that processes edge information in graphs. It introduces an edge bias mechanism to enhance the performance of graph convolutional networks, especially in tasks where edge information plays a crucial role.

- RvNN-GA [25]. This model extends the tree-structured recursive neural network (RvNN) with a global attention mechanism that weights the posts in a propagation tree, so that evidential posts contribute more to the rumor representation.

- Sta-PLAN [26]. A variant of the PLAN model, Sta-PLAN incorporates rumor spreading structure information to some extent, enhancing rumor detection capabilities.

- DTC [27]. DTC is a decision tree-based classification algorithm that divides the dataset’s features conditionally, gradually building a decision tree to make predictions.

- SVM-TS [28]. This method constructs a time series model using a linear SVM classifier and hand-crafted features for rumor detection.

- RvNN [21]. A rumor detection approach based on tree-structured recurrent neural networks, using GRU units to learn rumor representations via the propagation structure.

- GCAN [29]. GCAN focuses on enhancing graph data representation by flexibly attending to the most important parts of a graph, improving performance on large-scale or complex graph structures.

- Bi-GCN [22]. This model employs a GCN to learn rumor propagation tree representations, effectively capturing the structure of propagation trees.

- RvNN* [30]. A modified version of the RvNN model that uses the AdaGrad algorithm instead of momentum gradient descent in the training process.

- GACL [31]. This method uses node information in graph structure data for representation learning within a self-supervised learning framework, which improves the performance of downstream tasks such as node classification.

- DAN-Tree [32]. A deep learning model that combines tree structures with a dual attention mechanism (node-level and path-level attention), aimed at text classification tasks.

- BiGAT [33]. A neural topic model that detects rumors at the topic level, overcoming the cold start problem, and analyzes the dynamic characteristics of rumor topic propagation for authenticity detection.

- TG-GCN [34]. This model uses graph convolutional networks to transmit information between nodes and employs an attention mechanism to distinguish the influences of different nodes on rumor detection, providing accurate node representations.

- RECL [35]. This rumor detection model performs self-supervised contrastive learning at both the relation and event layers to enrich the self-supervised signals for rumor detection.

- FSRU [36]. A novel spectrum representation and fusion network based on double contrastive learning, which effectively converts spatial features into spectral representations, yielding highly discriminative multimodal features.

- (UMD)2 [37]. An unsupervised fake news detection framework that encodes multi-modal knowledge into low-dimensional vectors. This framework uses a teacher-student architecture to assess the truthfulness of news by aligning multiple modalities.

- ERGCN [38]. This model extracts valuable entity information from both visual objects and textual descriptions, leveraging external knowledge to construct a cross-modal graph for each image-text pair, which facilitates the detection of semantic inconsistencies between modalities.

- FND-CLIP [39]. A method that leverages the multimodal cognitive capabilities of CLIP, generating self-directed attentional weights to fuse features based on modal similarity computed by CLIP.

- BMR [40]. This model builds multi-view representations of news features and fuses them with a Mixture-of-Experts network.
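
As an illustration of the masked language modeling (MLM) objective mentioned for BERT above, the sketch below corrupts a token sequence in the way described in the BERT paper: roughly 15% of positions are selected, and a selected token is replaced by `[MASK]` 80% of the time, by a random token 10% of the time, and left unchanged 10% of the time. The function name `mask_tokens`, the toy sentence, and the use of the sentence itself as vocabulary are assumptions for illustration; real pipelines operate on subword IDs.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted inputs, labels); labels are None where no prediction is needed."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover the original token here
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)               # 80%: replace with the mask symbol
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                inputs.append(tok)                # 10%: keep the token unchanged
        else:
            labels.append(None)  # position is not part of the MLM loss
            inputs.append(tok)
    return inputs, labels

# Hypothetical example sentence.
tokens = "the rumor spread quickly across the social network last night".split()
inputs, labels = mask_tokens(tokens, vocab=tokens, mask_prob=0.15, seed=1)
print(inputs)
print(labels)
```

Only the selected positions carry a label, so the loss is computed on a small fraction of each sequence while the model still sees context on both sides of every masked token.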

4.3. Experimental Results and Analysis

4.4. Results and Analysis of Ablation Experiments

4.5. Experimental Results and Analysis of Early Rumor Detection Based on PAGCN Model

4.5.1. Early Rumor Detection with Limited Retweets

4.5.2. Early Rumor Detection in Finite Time

4.6. Effects of the Attention Mechanisms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Bian, T.; Xiao, X.; Xu, T.Y.; Zhao, P.L.; Huang, W.B.; Rong, Y.; Huang, J.Z. Rumor detection on social media with bi-directional graph convolutional networks. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, Menlo Park, CA, USA, 7–12 February 2020; pp. 549–556.

- [2] Liu, X.Y.; Wen, G.L.; Wang, A.J.; Liu, C.; Wei, W.; Meo, P. GGDHSCL: A Graph Generative Diffusion with Hard Negative Sampling Contrastive Learning Recommendation Method. IEEE Trans. Comput. Soc. Syst. 2025, 16, 1–16.

- [3] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- [4] Lu, M.; Huang, Z.; Li, B.; Zhao, Y.; Qin, Z.; Li, D. SIFTER: A Framework for Robust Rumor Detection. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 429–442.

- [5] Liu, X.Y.; Han, Q.; Wang, W.; Zhan, X.X.; Lu, L.; Ye, Z.J. GAWF: Influence Maximization Method Based on Graph Attention Weight Fusion. Int. J. Mod. Phys. C. 2025, 22, 2542002.

- [6] Zhang, K.Q.; Liu, X.Y.; Zhao, N.; Liu, S.; Li, C.R. Dual channel semantic enhancement-based convolutional neural networks model for text classification. Int. J. Mod. Phys. C. 2025, 36, 2442012.

- [7] Yu, J.L.; Yin, H.Z.; Xia, X.; Chen, T.; Cui, L.Z.; Nguyen, Q.V.H. Are graph augmentations necessary? Simple graph contrastive learning for recommendation. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 1294–1303.

- [8] Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 2171–2186.

- [9] Ma, J.; Gao, W.; Mitra, P. Detecting rumors from microblogs with recurrent neural networks. In Proceedings of the 25th International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; pp. 3818–3824.

- [10] Liu, X.; Zhao, Y.; Zhang, Y.; Liu, C.; Yang, F. Social Network Rumor Detection Method Combining Dual-Attention Mechanism with Graph Convolutional Network. IEEE Trans. Comput. Soc. Syst. 2023, 10, 2350–2361.

- [11] Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

- [12] Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph attention networks. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–12.

- [13] Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. In Proceedings of the 30th Conference on Neural Information Processing Systems (NeurIPS 2016), Barcelona, Spain, 5–10 December 2016; pp. 3844–3852.

- [14] Tschannen, M.; Djolonga, J.; Rubenstein, P.K.; Gelly, S.; Lucic, M. On Mutual Information Maximization for Representation Learning. arXiv 2019, arXiv:1907.13625.

- [15] Ma, J.; Gao, W. Debunking Rumors on Twitter with Tree Transformer. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 5455–5466.

- [16] Shu, K.; Wang, S.; Liu, H. Beyond news contents: The role of social context for fake news detection. In Proceedings of the 12th ACM International Conference on Web Search and Data Mining, Melbourne, Australia, 11–15 February 2019; pp. 312–323.

- [17] Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the 2017 International Conference on Learning Representations, Toulon, France, 24–26 April 2017; pp. 1202–1214.

- [18] Mehta, N.; Pacheco, M.L.; Goldwasser, D. Tackling fake news detection by continually improving social context representations using graph neural networks. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 1363–1380.

- [19] Gao, W.; Fang, Y.; Li, L.; Tao, X.H. Event detection in social media via graph neural network. In Proceedings of the 22nd International Conference on Web Information Systems Engineering, Melbourne, Australia, 26–29 October 2021; pp. 1370–1384.

- [20] Wei, L.W.; Hu, D.; Zhou, W.; Yue, Z.J.; Hu, S.L. Edge-enhanced Bayesian graph convolutional networks for rumor detection. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Bangkok, Thailand, 1–6 August 2021; pp. 3845–3854.

- [21] Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.; Ng, A.; Potts, C. Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing (EMNLP), Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642.

- [22] Sattarov, O.; Choi, J. Detection of Rumors and Their Sources in Social Networks: A Comprehensive Survey. IEEE Trans. Big Data. 2025, 11, 1528–1547.

- [23] Ma, G.; Hu, C.; Ge, L.; Zhang, H. DSMM: A dual stance-aware multi-task model for rumour veracity on social networks. Inf. Process. Manag. 2024, 6, 103528.

- [24] Huang, Q.; Zhou, C.; Wu, J.; Liu, L.C.; Wang, B. Deep spatial-temporal structure learning for rumor detection. Neural Comput. Appl. 2020, 35, 12995–13005.

- [25] Ma, J.; Gao, W. An attention-based rumor detection model with tree-structured recursive neural networks. ACM Trans. Intell. Syst. Technol. 2020, 11, 1124–1138.

- [26] Khoo, L.; Chieu, H.; Qian, Z.; Jiang, J. Interpretable rumor detection in microblogs by attending to user interactions. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, Menlo Park, CA, USA, 7–12 February 2020; pp. 2783–2792.

- [27] Castillo, C.; Mendoza, M.; Poblete, B. Information credibility on Twitter. In Proceedings of the 20th International Conference on World Wide Web, Hyderabad, India, 28 March–1 April 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 675–684.

- [28] Ma, J.; Gao, W.; Wei, Z.; Lu, Y.; Wong, K.F. Detect rumors using time series of social context information on microblogging websites. In Proceedings of the 24th ACM International Conference on Information and Knowledge Management, Melbourne, Australia, 19–23 October 2015; pp. 1741–1754.

- [29] Lu, Y.-J.; Li, C.-T. GCAN: Graph-aware co-attention networks for explainable fake news detection on social media. arXiv 2020, arXiv:2004.11648.

- [30] Wu, K.; Yang, S.; Zhu, K. False rumors detection on Sina Weibo by propagation structures. In Proceedings of the IEEE 31st International Conference on Data Engineering, Seoul, Republic of Korea, 13–17 April 2015; pp. 651–662.

- [31] Sun, T.; Qian, Z.; Dong, S.; Li, P.; Zhu, Q. Rumor detection on social media with graph adversarial contrastive learning. In Proceedings of the ACM Web Conference, Lyon, France, 25–29 April 2022; pp. 2789–2797.

- [32] Wu, J.; Xu, W.; Liu, Q.; Wu, S.; Wang, L. Adversarial contrastive learning for evidence-aware fake news detection with graph neural networks. IEEE Trans. Knowl. Data Eng. 2023, 36, 4589–4597.

- [33] Wu, L.; Lin, H.; Gao, Z.; Tan, C.; Li, S. Self-supervised learning on graphs: Contrastive, generative, or predictive. IEEE Trans. Knowl. Data Eng. 2021, 35, 659–668.

- [34] Wu, S.; Tang, Y.; Zhu, Y.; Wang, L.; Xie, X.; Tan, T. Session-based recommendation with graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 343–353.

- [35] Xu, Y.; Hu, J.; Ge, J.; Wu, Y.; Li, T.; Li, H. Contrastive Learning at the Relation and Event Level for Rumor Detection. In Proceedings of the ICASSP 2023 IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 4–10 June 2023; pp. 1531–1545.

- [36] Lao, A.; Zhang, Q.; Shi, C.; Cao, L.; Yi, K.; Hu, L.; Miao, D. Frequency Spectrum is More Effective for Multimodal Representation and Fusion: A Multimodal Spectrum Rumor Detector. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 2985–2997.

- [37] Silva, A.; Luo, L.; Karunasekera, S.; Leckie, C. Unsupervised Domain-agnostic Fake News Detection using Multi-modal Weak Signals. arXiv 2023, arXiv:2305.11349.

- [38] Li, L.; Jin, D.; Wang, X.; Guo, F.; Wang, L.; Dang, J. Multi-modal sarcasm detection based on cross-modal composition of inscribed entity relations. In Proceedings of the 2023 IEEE 35th International Conference on Tools with Artificial Intelligence, Atlanta, GA, USA, 6–8 November 2023; pp. 918–925.

- [39] Zhou, Y.; Yang, Y.; Ying, Q.; Qian, Z.; Zhang, X. Multimodal fake news detection via clip-guided learning. In Proceedings of the IEEE International Conference on Multimedia and Expo, Brisbane, Australia, 10–14 July 2023; pp. 2825–2830.

- [40] Ying, Q.; Hu, X.; Zhou, Y.; Qian, Z.; Zeng, D.; Ge, S. Bootstrapping multi-view representations for fake news detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 5384–5392.

| Comparison dimension | RvNN | Bi-GCN | EBGCN | PAGCN |
|---|---|---|---|---|
| Path encoding method | Recurrent neural networks | Implicit capture of path characteristics in bidirectional information dissemination | Bayesian probabilistic modeling of edge weights | Multi-scale path embedding |
| Attention mechanism | Single-layer attention | None | None | Hierarchical attention |
| Dynamic adaptability | Fixed architecture | Dynamic topology | Fixed architecture | Path-aware dynamic adjustment |
| Contrastive learning | None | None | None | Path-level contrastive loss |
| Key innovation points | Path modeling | Structural adaptation | Deep optimization | Path perception + dynamic adaptation + contrastive learning |

| Statistic | Twitter15 | Twitter16 | |
|---|---|---|---|
| # of source posts | 1490 | 818 | 4664 |
| # of posts | 42,914 | 20,295 | 2,011,057 |
| # of true rumors | 372 | 205 | 2351 |
| # of false rumors | 370 | 205 | 2313 |
| # of unverified rumors | 374 | 203 | 0 |
| # of non-rumors | 374 | 205 | 0 |
| Avg. # of depth/tree | 261 | 255 | 285 |
| Avg. # of paths/tree | 2616 | 2253 | 39,411 |
| Avg. # of posts/tree | 2880 | 2481 | 43,127 |
| Avg. # of length/root post | 1768 | 1704 | 5924 |
| Avg. # of length/post | 1413 | 1368 | 595 |
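
The class counts in the dataset table can be checked against the number of source posts with a few lines of arithmetic. The sketch below is only a consistency check on the reported figures; the dictionary layout and the key `column_3` (the table does not name its third dataset) are assumptions for illustration.

```python
# Counts copied from the dataset statistics table.
datasets = {
    "Twitter15": {"source_posts": 1490,
                  "classes": {"true": 372, "false": 370, "unverified": 374, "non_rumor": 374}},
    "Twitter16": {"source_posts": 818,
                  "classes": {"true": 205, "false": 205, "unverified": 203, "non_rumor": 205}},
    "column_3": {"source_posts": 4664,
                 "classes": {"true": 2351, "false": 2313, "unverified": 0, "non_rumor": 0}},
}

class_shares = {}
for name, stats in datasets.items():
    total = sum(stats["classes"].values())
    # Per-class share of all labeled source posts in this dataset.
    class_shares[name] = {label: count / total for label, count in stats["classes"].items()}
    print(name, "labels sum to", total, "of", stats["source_posts"], "source posts")
```

In all three columns the per-class counts add up exactly to the number of source posts, and the classes are close to balanced, which is why accuracy is a reasonable headline metric for these benchmarks.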

| Models | Acc. | F1 (NR) | F1 (FR) | F1 (TR) | F1 (UR) |
|---|---|---|---|---|---|
| DTC [27] | 0.454 | 0.733 | 0.355 | 0.317 | 0.415 |
| SVM-TS [28] | 0.544 | 0.796 | 0.472 | 0.404 | 0.483 |
| RvNN [21] | 0.723 | 0.682 | 0.758 | 0.821 | 0.654 |
| BERT [8] | 0.735 | 0.731 | 0.722 | 0.730 | 0.705 |
| STS-NN [24] | 0.810 | 0.753 | 0.766 | 0.890 | 0.838 |
| RvNN-GA [25] | 0.756 | 0.784 | 0.774 | 0.817 | 0.680 |
| Sta-PLAN [26] | 0.852 | 0.840 | 0.846 | 0.884 | 0.837 |
| Bi-GCN [22] | 0.886 | 0.891 | 0.860 | 0.930 | 0.864 |
| EBGCN [20] | 0.871 | 0.820 | 0.898 | 0.843 | 0.837 |
| DAN-Tree [32] | 0.902 | 0.891 | 0.900 | 0.930 | 0.886 |
| GCAN [29] | 0.842 | 0.844 | 0.846 | 0.884 | 0.837 |
| PAGCN | 0.909 ± 0.0175 | 0.947 ± 0.019 | 0.053 ± 0.017 | 0.918 ± 0.024 | 0.082 ± 0.021 |

| Models | Acc. | F1 (NR) | F1 (FR) | F1 (TR) | F1 (UR) |
|---|---|---|---|---|---|
| DTC [27] | 0.465 | 0.643 | 0.393 | 0.419 | 0.403 |
| SVM-TS [28] | 0.574 | 0.755 | 0.420 | 0.571 | 0.526 |
| RvNN [21] | 0.737 | 0.662 | 0.743 | 0.835 | 0.708 |
| RvNN-GA [25] | 0.764 | 0.708 | 0.753 | 0.840 | 0.738 |
| DAN-Tree [32] | 0.901 | 0.877 | 0.865 | 0.953 | 0.908 |
| Bi-GCN [22] | 0.880 | 0.847 | 0.869 | 0.937 | 0.865 |
| PAGCN | 0.901 ± 0.0185 | 0.907 ± 0.0125 | 0.093 ± 0.0098 | 0.906 ± 0.0160 | 0.054 ± 0.0087 |

| Models | Class | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| GACL [31] | FR | 91.2 | 92.5 | 90.8 | 91.6 |
| | TR | | 90.3 | 91.6 | 90.9 |
| TG-GCN [34] | FR | 89.7 | 90.2 | 88.5 | 89.3 |
| | TR | | 89.1 | 90.3 | 89.7 |
| RvNN* [30] | FR | 92.9 | 94.9 | 90.9 | 92.8 |
| | TR | | 91.1 | 95.0 | 93.0 |
| FSRU [36] | FR | 93.2 | 92.8 | 93.5 | 93.1 |
| | TR | | 93.6 | 92.7 | 93.1 |
| BiGAT [33] | FR | 89.7 | 90.2 | 88.9 | 89.5 |
| | TR | | 89.1 | 90.4 | 89.7 |
| PAGCN | FR | 93.9 ± 0.9 | 94.5 ± 0.5 | 93.1 ± 1.1 | 93.8 ± 0.8 |
| | TR | | 93.3 ± 0.8 | 94.7 ± 0.9 | 94.2 ± 0.7 |
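
The relationship between the Precision, Recall, and F1-Score columns can be illustrated with a short calculation. This is a sketch assuming the F1-Score column is the per-class harmonic mean of precision and recall; the helper name `f1_score` is introduced here for illustration, and only the mean values are used (the ± spreads are ignored).

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision %, recall %) pairs copied from the table above.
rows = {
    ("GACL", "FR"): (92.5, 90.8),
    ("GACL", "TR"): (90.3, 91.6),
    ("PAGCN", "FR"): (94.5, 93.1),
}

recomputed = {key: round(f1_score(p, r), 1) for key, (p, r) in rows.items()}
for (model, cls), value in recomputed.items():
    print(f"{model} {cls}: F1 = {value}")
```

For these three rows the recomputed values (91.6, 90.9, and 93.8) agree with the F1-Score column to one decimal place.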

| Model | Acc. (Twitter15) | Acc. (Twitter16) | Acc. |
|---|---|---|---|
| PAGCN | 0.909 | 0.901 | 0.939 |
| PAGCN w/o path | 0.824 | 0.829 | 0.852 |
| PAGCN w/o GRU | 0.778 | 0.726 | 0.843 |
| PAGCN w/o BERT | 0.801 | 0.786 | 0.841 |
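
To read the ablation table as component contributions, the accuracy of each ablated variant can be subtracted from that of the full model. The sketch below does this for the reported values; the variable names and the label `column_3` for the unnamed third column are assumptions for illustration.

```python
# Accuracies copied from the ablation table: (Twitter15, Twitter16, third column).
columns = ("Twitter15", "Twitter16", "column_3")
accuracy = {
    "PAGCN": (0.909, 0.901, 0.939),
    "PAGCN w/o path": (0.824, 0.829, 0.852),
    "PAGCN w/o GRU": (0.778, 0.726, 0.843),
    "PAGCN w/o BERT": (0.801, 0.786, 0.841),
}

full = accuracy["PAGCN"]
drops = {}
for variant, scores in accuracy.items():
    if variant == "PAGCN":
        continue
    # Accuracy lost when the component is removed, rounded to three decimals.
    drops[variant] = {col: round(f - s, 3) for col, f, s in zip(columns, full, scores)}

# Variant whose removal hurts most, per dataset column.
largest = {col: max(drops, key=lambda v: drops[v][col]) for col in columns}
print(drops)
print(largest)
```

On the reported numbers, removing the GRU costs the most accuracy on Twitter15 (0.131) and Twitter16 (0.175), while on the third column the three variants lose between 0.087 and 0.098, with the BERT ablation slightly ahead.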


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, X.; Wang, D. PAGCN: Structural Semantic Relationship and Attention Mechanism for Rumor Detection. Appl. Sci. 2025, 15, 8984. https://doi.org/10.3390/app15168984