Abstract

Traditional GCN-based methods capture the propagation structure between posts but do not fully model dynamic semantic information, such as the role of specific users on the propagation path and the context of post content that changes over time, which reduces the accuracy of rumor detection. We therefore propose a path attention graph convolution network (PAGCN) framework, which addresses this limitation by integrating propagation structure and semantic representation learning. PAGCN first uses a graph neural network (GNN) to model the information transmission path, focusing on the differences between rumors and factual information in propagation behavior, such as the difference between depth-first and breadth-first dissemination modes. Then, to strengthen semantic understanding, we design a multi-head attention mechanism based on a convolutional neural network (CNN), which extracts deep contextual relationships from text content. Furthermore, by introducing contrastive learning, PAGCN adaptively optimizes the representation of structural and semantic features, dynamically focuses on the most discriminative features, and improves sensitivity to subtle patterns in rumor propagation. Experiments on three benchmark datasets, Twitter15, Twitter16, and Weibo, show that PAGCN performs best among the 17 comparison models, with accuracies of 90.9% and 93.9% on the Twitter15 and Weibo datasets, respectively, which confirms the effectiveness of the framework in capturing propagation structure and semantic information simultaneously.

1. Introduction

1.1. Background

With the rapid proliferation of social media, users increasingly rely on online platforms to follow and discuss trending events. However, this practice has also contributed to the widespread dissemination of rumors, often driven by unverified posts with hidden agendas. These rumors are further amplified by users who share information without verifying its authenticity, leading to a decline in the credibility of social networks and negatively influencing public opinion. To address these challenges, developing an effective and robust rumor detection method has become essential. Traditional rumor detection approaches, primarily based on textual feature analysis, have shown promising results but face notable limitations. These include difficulties in capturing contextual nuances, handling multimodal data, and achieving high detection accuracy. Such limitations highlight the need for more holistic approaches that integrate both textual and structural information. In this study, we propose a method that combines text feature extraction with social network analysis to enhance the overall performance of rumor detection. Specifically, an improved BERT model is employed to extract high-level semantic representations from textual content. Simultaneously, a social network graph is constructed to capture information such as message propagation patterns, user relationships, and node centrality. Finally, a mathematical model is developed to integrate these features, providing a more comprehensive understanding of rumor dynamics.

Currently, most rumor detection methods focus on analyzing both textual semantics and social context. Textual semantic analysis typically leverages enhanced versions of the BERT model to extract meaningful features from content. To address the complex structure of information propagation, Graph Convolutional Networks (GCNs) are employed due to their strong capabilities in feature representation and their ability to handle irregular spatial data structures. Recognizing the effectiveness of GCNs, Bian et al. [1] utilized them to capture aggregated node representations from two opposite directions (propagation and diffusion), significantly advancing the performance of rumor detection systems. Building on this work, many researchers have proposed GCN-based models [2] to further capture the social context of rumors. These efforts have collectively contributed to the ongoing development and refinement of rumor detection technologies.

In recent years, a common approach in studying the social context of rumors has been to use GCNs to aggregate the neighborhood nodes of event-related posts. When encoding the structural information of rumor propagation, these methods often emphasize the directional characteristics of information flow. For instance, Bian et al. [1] argued that effective feature representations are difficult to obtain through a top-down deep propagation structure. As a result, they proposed a bottom-up, breadth-oriented diffusion structure to model information spread. While such direction-focused methods offer valuable insights, they may result in the loss of complete global structural information and are often insufficient for capturing the deeper contextual relationships among relevant nodes.

To address these challenges, this paper proposes a novel framework called PAGCN, which integrates textual semantic analysis with structural representation learning to enhance rumor detection. The proposed model captures the hierarchical propagation structure of events by stacking multiple graph convolutional layers, enabling the extraction of both shallow and deep feature representations. Additionally, by dynamically adjusting attention weights, PAGCN effectively focuses on critical neighboring nodes, ensuring that both local and global structural information are preserved. By combining high-level semantic features from textual content with propagation patterns derived from social network structures, the model provides a more comprehensive understanding of rumor dynamics. Experiments conducted on the Twitter15, Twitter16, and Weibo datasets demonstrate that the proposed method significantly improves the accuracy and robustness of rumor detection.

1.2. Research Motivation

The rise and widespread adoption of social media have significantly accelerated the dissemination of rumors, often resulting in the rapid spread of misinformation with harmful societal consequences. Traditional rumor detection methods, which primarily rely on isolated textual or metadata features, are increasingly inadequate in addressing the complex dynamics of modern online platforms. Consequently, there is a pressing need for more robust detection approaches that integrate both textual semantics and structural propagation characteristics.

Natural Language Processing (NLP) techniques, such as keyword extraction, syntactic structure analysis, and semantic content modeling, offer valuable insights into the linguistic traits of rumor-related text. These features can be leveraged to construct classification models capable of automatically identifying misleading content. Moreover, rumors often follow distinctive propagation patterns, including depth-oriented spread, rapid dissemination, and characteristic user interaction networks. Analyzing these structural patterns provides a deeper understanding of the underlying mechanisms driving rumor diffusion.

Recent advances in deep learning, particularly the introduction of attention mechanisms [3], have further enhanced the ability of models to selectively focus on salient features in both text and propagation pathways. By integrating these semantic and structural insights, it becomes possible to detect rumors more accurately and robustly, even in noisy, fast-evolving online environments, thus contributing to the mitigation of their societal impact.

1.3. Main Contributions

The proposed framework makes the following significant contributions to rumor detection:

- (1) An attention mechanism is incorporated to identify critical propagation nodes and paths, allowing the model to more effectively learn the underlying propagation patterns of rumors. This design addresses the performance degradation commonly observed in recurrent networks as input size increases.

- (2) Key structural information within propagation paths is captured through the use of a GCN. By enhancing the modeling of relationships between nodes, this approach improves both the interpretability and accuracy of rumor propagation analysis.

- (3) A novel framework, PAGCN, is introduced for rumor detection. By integrating attention mechanisms with structural semantic relationship modeling, the framework leverages deep feature fusion and context-aware representation learning to improve the accuracy and robustness of rumor detection, particularly in noisy and dynamic environments.

- (4) Extensive experiments are conducted, including comparative analyses, ablation studies, and evaluations of early rumor detection performance based on varying numbers of retweets and different detection deadlines. The framework is benchmarked against 21 state-of-the-art baseline methods, demonstrating the effectiveness of the proposed PAGCN framework.

Section 2 reviews related work, including self-attention mechanisms and GNN-based rumor detection methods. Section 3 details the proposed approach and its core components. Section 4 presents the experimental setup and provides comparative analyses against baseline methods. Finally, Section 5 summarizes the findings and concludes the paper.

2. Related Work

The proliferation of social media has spurred significant advancements in rumor detection, particularly through the adoption of deep learning techniques. Recurrent Neural Networks (RNNs) and their variants (such as LSTMs and GRUs) have been widely employed to model the temporal and sequential patterns of rumor propagation [4]. However, these approaches often face challenges in scalability and in effectively capturing the complex structural dependencies inherent in social propagation networks. To address these limitations, recent studies have explored the integration of GNNs for modeling the structural aspects of rumor dissemination. GNNs have demonstrated superior performance in leveraging the topological information of propagation graphs, thereby offering a more realistic and effective approach to understanding rumor dynamics [5].

In addition, to further enhance the robustness and generalization capabilities of rumor detection models, contrastive learning techniques have gained traction. Supervised graph contrastive learning enables models to learn discriminative and invariant features across different events [6]. Unsupervised contrastive learning methods have also been proposed to capture retweet relationships and structural patterns, enabling the generation of effective graph-based embeddings for rumor detection tasks [7].

2.1. Self-Attention Mechanism in Rumor Detection Methods

The self-attention mechanism proposed by Vaswani et al. [3] dynamically generates weights through query-key interactions, enabling global dependency modeling and parallelized learning of long-distance relationships among sequence elements. BERT [8] achieves context awareness and hierarchical semantic composition over the whole sentence through a bidirectional Transformer encoder. Ma et al. [9] applied sequential processing to rumor propagation sequences to capture the temporal characteristics of fake news dissemination. To further enhance performance, many researchers have employed Transformer-based models to extract long-range dependencies within text. The self-attention mechanism of the Transformer dynamically captures global dependencies by calculating correlation weights between all words in a sentence, thereby achieving a comprehensive understanding of the context. Among these models, BERT [10] has emerged as one of the most successful pre-trained language models for text classification tasks. BERT excels at understanding the context within a single text sequence, but it cannot directly model the semantic and structural features of the entire propagation structure that links multiple posts in a tree. Our GNN-based method fills this gap. Bahdanau et al. [11] first proposed an attention mechanism, which improved the traditional encoder-decoder architecture by jointly learning alignment and translation. This work laid an important foundation for subsequent sequence modeling research, and its attention mechanism has been widely applied in fields such as natural language processing and speech recognition. Veličković et al. [12] first validated the effectiveness of attention mechanisms in graph neural networks with the Graph Attention Network (GAT) model. GAT focuses on modeling relationships in non-Euclidean spaces, providing a new paradigm for tasks such as social network analysis and molecular property prediction [13,14].

2.2. GNN-Based Rumor Detection Methods

GNNs represent the global relationships between words as a graph, where words are treated as nodes and the relationships between them are represented by edges. This graph-based structure allows GNNs to capture the global information of words in a sentence more effectively [15]. As a result, several researchers have proposed various GNN-based models and successfully applied them to text classification tasks. For instance, Shu et al. [16] constructed a heterogeneous information network by incorporating the relationships between news releases, dissemination patterns, and user interactions. They employed matrix factorization to generate embedded representations for detecting false news.

Kipf et al. [17] introduced the Graph Convolutional Network (GCN), which constructs a symmetric adjacency matrix based on the relationship graph. This matrix is then processed with graph convolution operations, which generalize the convolutions of Convolutional Neural Networks (CNNs) to graphs, to extract features and aggregate the information from neighboring nodes, thereby obtaining feature representations for text. Mehta et al. [18] designed an iterative inference framework that applied various inference operations to uncover hidden relationships within the graph, improving social context representations and facilitating fake news detection.

The strength of GCNs and their variants lies in their use of convolutional sliding windows to extract global semantic features from the graph. Previous studies have shown the effectiveness of GCNs in detecting social media events, but they have not fully accounted for the impact of textual context information [19]. To address this gap, this paper proposes combining GCNs with models that capture local information, thereby enhancing the overall performance of rumor detection.

2.3. System Comparison

EBGCN [20] is a graph neural network model that processes edge information in graphs. It introduces an edge bias mechanism to enhance the performance of graph convolutional networks, especially in tasks where edge information plays a crucial role. RvNN [21] is a rumor detection approach based on tree-structured recurrent neural networks, using GRU units to learn rumor representations via the propagation structure. The Bi-GCN [22] model employs a GCN to learn rumor propagation tree representations, effectively capturing the structure of propagation trees. Table 1 systematically compares PAGCN with three representative methods, RvNN, Bi-GCN, and EBGCN, along four dimensions: path encoding, attention mechanism, dynamic adaptability, and contrastive learning. This comparison shows more clearly the advantages of PAGCN in path sensitivity and dynamic adaptability, in particular our combination of a hierarchical attention mechanism with path contrastive learning.

Table 1.

System Comparison.

3. Proposed Method

3.1. Problem Description

We first introduce the notation used in this paper: bold uppercase letters denote matrices, and bold lowercase letters denote vectors.

As the context within a single post is often limited, this study focuses on rumor detection at the event level, where an event is defined as a collection of related posts. Let C denote a set of source posts, each labeled as either a rumor or the truth. Each event c comprises two types of heterogeneous data. The first is the propagation tree <V, E>, where the node set V represents posts and the edge set E captures the reply or diffusion relationships between these posts. The source post c serves as the root node of the tree, while all other nodes correspond to replies. Directed edges indicate the direction of the reply from post $v_i$ to post $v_j$. The second is the semantic content, where each node $v_i$ is associated with a feature vector $\mathbf{x}_i \in \mathbb{R}^{d}$ representing its semantic representation, with $\mathbf{X} \in \mathbb{R}^{N \times d}$, where d is the feature dimension and N is the number of posts. Therefore, each event can be modeled as an attributed graph, characterized by an adjacency matrix $\mathbf{A} \in \{0, 1\}^{N \times N}$, where each element indicates the connection between nodes, as follows:

$$A_{ij} = \begin{cases} 1, & \text{if } (v_i, v_j) \in E \\ 0, & \text{otherwise} \end{cases}$$

The rumor detection problem can be formulated as the task of leveraging heterogeneous data sources to assess the credibility of information associated with an event.
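As a minimal sketch of this formulation, the snippet below builds the adjacency matrix of one event from a list of directed reply edges. The function name `build_adjacency`, the toy edge list, and the use of the transpose to obtain the reversed (bottom-up) graph are illustrative assumptions, not part of the original implementation.

```python
import numpy as np

def build_adjacency(num_posts, reply_edges):
    """Return the N x N adjacency matrix of a propagation tree.

    reply_edges holds (i, j) index pairs, one per directed edge v_i -> v_j.
    """
    A = np.zeros((num_posts, num_posts), dtype=np.float32)
    for i, j in reply_edges:
        A[i, j] = 1.0
    return A

# Hypothetical event: source post 0 has replies 1 and 2; post 3 replies to post 1.
edges = [(0, 1), (0, 2), (1, 3)]
A_top_down = build_adjacency(4, edges)
# Reversing every edge (assumed here to give the bottom-up view) is a transpose.
A_bottom_up = A_top_down.T
```

A tree with N posts has N - 1 edges, so the matrix is very sparse; a sparse format would be preferable for large events.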

3.2. System Framework

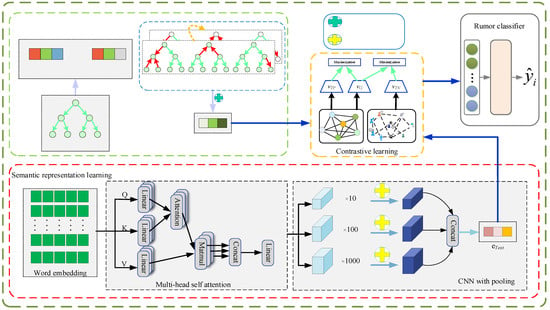

The proposed PAGCN framework, illustrated in Figure 1, consists of the following three key components: a text feature extractor, a structural feature extractor, and a structural semantic fusion module. The Text Feature Extractor utilizes a pre-trained transformer model to encode the content of news posts, extracting high-level semantic representations from a variable textual space. Meanwhile, the Structural Feature Extractor constructs an information propagation graph based on online interactions and employs GNNs to learn the structural patterns of rumor diffusion. This includes modeling bidirectional propagation paths (both top-down and bottom-up) to capture the hierarchical diffusion dynamics of rumors. An attention mechanism is incorporated into the GNN to assign varying importance to nodes, enabling the model to focus on influential nodes within the propagation network. Finally, the Structural Semantic Fusion component integrates temporal encoding with both textual and structural features to model the temporal evolution of rumors and enhance the understanding of how rumor characteristics change over time.

Figure 1.

Path-Attentive rumor detection (PAGCN) framework.

3.2.1. Propagating Representation Learning

Rumor propagation patterns usually differ from those of factual information. While factual content typically spreads in a breadth-first manner, rumors tend to propagate in a depth-first fashion [11]. Leveraging this observation, we utilize GNNs to capture these distinct diffusion behaviors and represent them as structural features of post propagation. The core methodology employs a proximity-based aggregation strategy within the diffusion tree, where node representations are iteratively updated by integrating vector information from their neighboring nodes. By stacking multiple layers, GCNs progressively aggregate information from higher-order neighbors, thereby expanding the receptive field of each node. The layer-wise propagation rule for GCNs can be mathematically expressed as follows:

$$\mathbf{H}^{(n+1)} = \sigma\left(\hat{\mathbf{A}}\,\mathbf{H}^{(n)}\,\mathbf{W}^{(n)}\right)$$

where $\hat{\mathbf{A}} = \mathbf{D}^{-1/2}(\mathbf{A} + \mathbf{I})\,\mathbf{D}^{-1/2}$ denotes the normalized adjacency matrix, with $\mathbf{A}$ representing the original adjacency matrix, $\mathbf{I}$ the identity matrix (to include self-loops), and $\mathbf{D}$ the corresponding degree matrix of $\mathbf{A} + \mathbf{I}$. $\mathbf{W}^{(n)}$ is a trainable weight matrix at the n-th layer, and $\sigma$ is a nonlinear activation function (e.g., ReLU). In this framework, the initial node embeddings $\mathbf{H}^{(0)}$ are set to $\mathbf{X}$, which is obtained via a multi-head attention mechanism based on the distribution of words within the post. Simultaneously, the adjacency matrix $\mathbf{A}$ is defined and initialized to model the structural connectivity between posts. The ReLU function is adopted to introduce nonlinearity into the learning process. After passing through n layers of graph convolution, the final node representation $\mathbf{H}^{(n)}$ captures n-hop structural dependencies, reflecting high-order correlations between nodes in the propagation graph. Once the node representations on the propagation tree have been computed, a readout function is used to obtain the representation $\mathbf{g}$ of the source post. Here, we use the mean pooling operator, as follows:

$$\mathbf{g} = \frac{1}{N}\sum_{i=1}^{N} \mathbf{h}_i^{(n)}$$

where $\mathbf{h}_i^{(n)}$ is the i-th row of $\mathbf{H}^{(n)}$.
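The layer-wise propagation and mean-pooling readout described above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions rather than the authors' code: the symmetric normalization with self-loops follows the standard GCN formulation, the toy graph is symmetrized so that the normalization is well defined, and the layer widths (8 and 16) and random weights are placeholders.

```python
import numpy as np

def normalize_adjacency(A):
    """Compute D^{-1/2} (A + I) D^{-1/2}, where D is the degree matrix of A + I."""
    A_tilde = A + np.eye(A.shape[0])
    deg = A_tilde.sum(axis=1)          # >= 1 because of the self-loops
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return A_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(A_hat, H, W):
    """One graph convolution: ReLU(A_hat @ H @ W)."""
    return np.maximum(A_hat @ H @ W, 0.0)

rng = np.random.default_rng(0)
# Toy propagation graph with 4 posts (symmetrized for this sketch).
A = np.array([[0, 1, 1, 0],
              [1, 0, 0, 1],
              [1, 0, 0, 0],
              [0, 1, 0, 0]], dtype=float)
X = rng.normal(size=(4, 8))            # placeholder node features H^(0)
W0 = rng.normal(size=(8, 16))          # placeholder layer weights
W1 = rng.normal(size=(16, 16))

A_hat = normalize_adjacency(A)
H1 = gcn_layer(A_hat, X, W0)           # aggregates 1-hop neighbors
H2 = gcn_layer(A_hat, H1, W1)          # aggregates 2-hop neighbors
g = H2.mean(axis=0)                    # mean-pooling readout over all nodes
```

Stacking two layers lets each node see its 2-hop neighborhood, which is the receptive-field growth the text refers to.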

3.2.2. Sentence Semantic Learning

To enhance the model’s sentence-level understanding, we incorporate a CNN into the overall framework, leveraging its capability in capturing local semantic patterns. A multi-head self-attention mechanism is combined with the CNN to learn sentence representations. The multi-head attention mechanism enables the model to capture word-level features from multiple sub-spaces, enhancing its contextual understanding. In a multi-head attention architecture, each attention head independently performs an attention operation. The model utilizes the following three common input matrices: the query matrix Q, the key matrix K, and the value matrix V. For each attention head, these matrices are linearly projected into different sub-spaces using trainable weight matrices. The self-attention mechanism allows each word in a sequence to attend to all other words, enabling it to dynamically adjust its representation based on relevant contextual information. Specifically, in each head, the query vectors $Q_i$ interact with the key vectors $K_i$ using scaled dot-product attention. This involves computing the dot product between $Q_i$ and $K_i$, scaling it by $\sqrt{d_k}$, where $d_k$ is the dimension of the key vectors, and applying the softmax function to generate attention weights, which are then used to compute a weighted sum over the value vectors $V_i$, i.e., $\mathrm{softmax}(Q_i K_i^{\top} / \sqrt{d_k})\,V_i$.

We adopt a three-layer convolutional structure, using 3 × 3 filters for each layer with a stride of 1, combined with the ReLU activation function and batch normalization (BatchNorm). The pooling strategy adopts 2 × 2 max pooling with a stride of 2. For the attention mechanism, the text encoder uses 8-head self-attention, with each head having a dimension of 64; all attention layers use the GELU activation function and a dropout rate of 0.1.

In the query-key-value calculation stage, a linear transformation (without an activation function) is used to generate the Q, K, and V matrices, and softmax ensures that the attention weights are normalized to a probability distribution. The final output of the multi-head attention mechanism is obtained through a structured process. Initially, the outputs from all attention heads are concatenated, where each head has processed the input in a distinct sub-space, capturing complementary aspects of the data. This multi-perspective processing allows the model to focus on different semantic features simultaneously.

To unify the representations from these diverse sub-spaces, a learnable linear transformation is subsequently applied. This transformation not only projects the concatenated output into a common dimensional space, but also learns to optimally combine information from each head. By adjusting the weights during training, the transformation effectively fuses the key information extracted from multiple heads, producing the final output of the multi-head attention module. In the multi-head attention output stage, the GELU (Gaussian Error Linear Unit) activation function is used because it can better preserve the gradient information of the attention layer than ReLU.
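The projection, scaled dot-product, concatenation, and output-projection steps described above can be sketched as follows. The 8 heads of dimension 64 follow the configuration stated in the text; the model width of 512 (8 × 64), the sequence length, and the randomly initialized weight matrices are assumptions for illustration, and dropout and the GELU activation are omitted to keep the sketch short.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, Wq, Wk, Wv, Wo, num_heads):
    """Multi-head self-attention over a sequence X of shape (l, d_model)."""
    l, d_model = X.shape
    d_k = d_model // num_heads

    def split_heads(M):
        # (l, d_model) -> (num_heads, l, d_k)
        return M.reshape(l, num_heads, d_k).transpose(1, 0, 2)

    # Linear projections without activation, then one sub-space per head.
    Q, K, V = split_heads(X @ Wq), split_heads(X @ Wk), split_heads(X @ Wv)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)    # (num_heads, l, l)
    weights = softmax(scores, axis=-1)                  # each row sums to 1
    heads = weights @ V                                 # (num_heads, l, d_k)
    concat = heads.transpose(1, 0, 2).reshape(l, d_model)
    return concat @ Wo, weights                         # learnable output projection

rng = np.random.default_rng(0)
l, d_model, num_heads = 5, 512, 8                       # 8 heads x 64 dimensions
X = rng.normal(size=(l, d_model))
Wq, Wk, Wv, Wo = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model)
                  for _ in range(4))
out, weights = multi_head_attention(X, Wq, Wk, Wv, Wo, num_heads)
```

Each head attends over the full sequence in its own 64-dimensional sub-space, and the final matrix `Wo` is what fuses the heads back into one representation.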

Consider the input $\mathbf{X} \in \mathbb{R}^{l \times d}$ as a sequence fed into the CNN, where l denotes the sequence length (which can be padded or truncated as necessary), and d represents the dimensionality of the word embeddings. The convolutional operation involves sliding a filter across consecutive word segments in the input to extract local feature patterns. This operation captures contextual dependencies within a window of h words, referred to as the filter’s receptive field. For each position i in the sequence, a feature $v_i$ is computed as follows:

$$v_i = \sigma\left(\mathbf{w} \cdot \mathbf{z}_i + b\right)$$

where $\mathbf{w}$ is the filter weight, $\mathbf{z}_i$ is the segment of input covered by the window at position i, b is a bias term, and $\sigma$ is a non-linear activation function (e.g., ReLU). The convolutional filter is applied to all possible windows in the sentence, yielding a sequence of features, as follows:

$$\mathbf{v} = \left[v_1, v_2, \ldots, v_{l-h+1}\right]$$

A max-pooling operation is then applied over the feature map to retain the most salient feature, as follows:

$$\hat{v} = \max(\mathbf{v})$$

To capture patterns at various granularities, multiple filters with different receptive field sizes k are applied. Their outputs are concatenated to form the final representation of the sentence, as follows:

$$\mathbf{t} = \bigoplus_{k} \hat{v}^{(k)}$$

where $\hat{v}^{(k)}$ is the pooled feature of the filter with receptive field size k and $\oplus$ denotes concatenation. This final vector $\mathbf{t}$ encodes rich local semantic information suitable for downstream tasks.
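The window-wise convolution, max pooling, and concatenation steps can be illustrated with a small NumPy sketch. It assumes one filter per receptive field size, ReLU as the activation, and window sizes 2, 3, and 4; these choices, the random inputs, and the helper name `conv_feature_map` are hypothetical and only serve to make the data flow concrete.

```python
import numpy as np

def conv_feature_map(X, w, b):
    """Slide a filter w of shape (h, d) over X of shape (l, d).

    Returns the l - h + 1 ReLU-activated features, one per window position.
    """
    h = w.shape[0]
    windows = [X[i:i + h] for i in range(X.shape[0] - h + 1)]
    return np.array([max(0.0, float(np.sum(w * z) + b)) for z in windows])

rng = np.random.default_rng(0)
l, d = 10, 300                                   # sequence length, embedding size
X = rng.normal(size=(l, d))                      # placeholder word embeddings

# One filter per assumed receptive field size.
filters = [(rng.normal(size=(h, d)) * 0.05, 0.0) for h in (2, 3, 4)]

feature_maps = [conv_feature_map(X, w, b) for w, b in filters]
# Max pooling keeps the most salient feature of each map; the pooled
# values are concatenated into the sentence representation t.
t = np.array([fm.max() for fm in feature_maps])
```

In practice many filters per window size would be used, so `t` would have one entry per filter rather than three in total.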

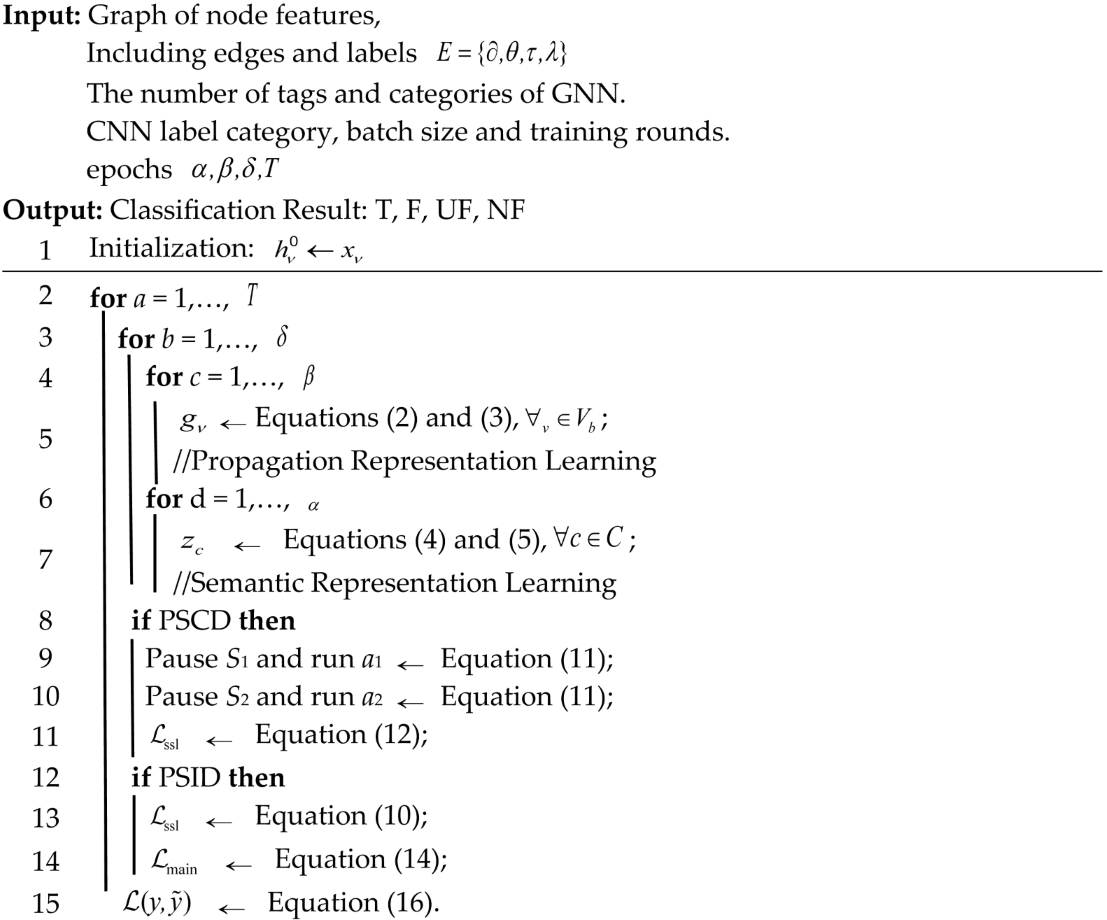

3.2.3. Contrastive Learning

In contrastive learning, the propagation representation from the GNN module and the semantic representation from the CNN module are extracted. The next step involves merging these two distinct representations to uncover their potential relationship, creating a more comprehensive understanding of the rumor propagation process. Empirical research has examined the link between content characteristics and propagation patterns. From a probabilistic perspective, it is assumed that the text content provides low-entropy priors, which are useful for identifying the structure of propagation. To exploit this relationship, a self-supervised task focused on instance and cluster discrimination is introduced, as outlined in Algorithm 1.

Algorithm 1. Training procedure of PAGCN.

The first task is called Propagation Semantic Instance Discrimination (PSID). We represent each social media post from the following two complementary perspectives: the propagation tree and the semantic content. To model the co-occurrence relationship between these two views, we adopt a self-supervised post-level discrimination task. Specifically, the task aims to predict whether a given pair of views originates from the same post instance. We define positive pairs as views derived from the same post, where $\mathbf{g}_i$ and $\mathbf{t}_i$ denote the propagation and text representations generated by the corresponding encoders $E_1$ and $E_2$, respectively. Conversely, negative pairs consist of views from different posts. This auxiliary supervision enforces consistency between the two views of the same post (positive pairs), encouraging the model to learn aligned cross-view representations. In contrast, negative pairs promote discriminability between representations of different posts, thus enhancing the model’s ability to differentiate between unrelated content, as follows:

$$\mathcal{L}_{\mathrm{PSID}} = -\log \frac{\exp\left(s(\mathbf{g}_i, \mathbf{t}_i)/\tau\right)}{\sum_{j} \exp\left(s(\mathbf{g}_i, \mathbf{t}_j)/\tau\right)}$$

where s denotes the similarity function, which is set as the inner product, and τ is a temperature hyper-parameter, which plays a crucial role in mining negative pairs. The similarity function is a key component in measuring the similarity between sample pairs. The choice of this function directly influences the model’s ability to differentiate between positive and negative sample pairs, and effectively recognizing negative sample pairs is critical for model training. Among various similarity measurement techniques, the inner product is a widely used and effective approach for evaluating the similarity between two vectors by calculating their dot product.
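A minimal sketch of such an instance-level contrastive objective is given below, assuming the common InfoNCE form in which, for each post, the text view of the same post is the positive and the text views of the other posts in the batch are negatives. The function name `psid_loss`, the batch construction, and the temperature value 0.5 are assumptions, not values reported in the paper.

```python
import numpy as np

def psid_loss(G, T, tau=0.5):
    """InfoNCE-style loss between propagation views G and text views T.

    G and T have shape (B, d); row i of each comes from the same post.
    Similarity is the inner product, scaled by the temperature tau.
    """
    sim = G @ T.T / tau                               # sim[i, j] = s(g_i, t_j) / tau
    sim = sim - sim.max(axis=1, keepdims=True)        # stabilize the exponentials
    log_prob = sim - np.log(np.exp(sim).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))         # positives lie on the diagonal

# Toy check: aligned views give a much lower loss than mismatched ones.
G = 3.0 * np.eye(4)
loss_aligned = psid_loss(G, G)
loss_shuffled = psid_loss(G, np.roll(G, 1, axis=0))
```

A smaller τ sharpens the softmax and puts more weight on the hardest negatives, which is one way to read the remark that τ matters for mining negative pairs.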

Building on instance recognition, the Propagation Semantic Cluster Discrimination (PSCD) method is inspired by prior work and introduces a clustering-based intelligent recognition task. The PSCD approach leverages contrastive learning, which relies on pairwise comparisons and necessitates an effective negative sampling strategy. Basic clustering techniques such as k-means group data points based on their similarity, ensuring that posts in different views, specifically the propagation and semantic views, are consistently assigned to the same cluster. Intuitively, posts with similar content are more likely to share similar cluster assignments, which leads to their grouping into the same clusters from which clustering features are derived.

The propagation-based cluster assignments consist of the following two components: the E1 and E2 encoders, which map the propagation information g and semantic representation t of posts to the d-dimensional clustering space, respectively. A discriminator is then used to assign these representations to one of the K clusters.

where $S_1$ and $S_2$ are linear transformation matrices used to project input features into a low-dimensional space, and $a_1$ and $a_2$ are sparse coefficient vectors used to reconstruct the original features. By alternately optimizing $S_i$ and $a_i$, the reconstruction error is minimized, thereby learning a more compact feature representation. The implementation adopts a dual-branch design, which processes the different feature sources separately and achieves feature fusion between modalities by minimizing reconstruction errors. For example, text features and propagation features complement each other, enhancing the model’s ability to capture complex rumors. We then adopt an alternating optimization strategy: $a_i$ is optimized while $S_i$ is fixed (e.g., by the least squares method), $S_i$ is optimized while $a_i$ is fixed (e.g., by gradient descent), and the two steps are iterated until convergence.
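The alternating strategy can be illustrated for a single branch. Because the exact objective is not recoverable from the text, the sketch below assumes a plain reconstruction error $\|X - AS\|_F^2$, where the rows of `A` play the role of the coefficient vectors and `S` plays the role of the transformation matrix; the sparsity constraint on the coefficients is omitted. The step size is set from the largest singular value of `A`, an assumption made here so that each gradient step cannot increase the error.

```python
import numpy as np

def alternating_minimization(X, k, steps=30, seed=0):
    """Alternately minimize ||X - A @ S||_F^2 over A (n, k) and S (k, d)."""
    rng = np.random.default_rng(seed)
    S = rng.normal(size=(k, X.shape[1]))
    errors = []
    for _ in range(steps):
        # S fixed: closed-form least-squares solution for the coefficients A.
        A = np.linalg.lstsq(S.T, X.T, rcond=None)[0].T
        # A fixed: one gradient-descent step on S with step size 1 / L,
        # where L = 2 * ||A||_2^2 is the Lipschitz constant of the gradient.
        lipschitz = 2.0 * np.linalg.norm(A, 2) ** 2 + 1e-12
        grad_S = 2.0 * A.T @ (A @ S - X)
        S = S - grad_S / lipschitz
        errors.append(float(np.sum((X - A @ S) ** 2)))
    return A, S, errors

rng = np.random.default_rng(1)
X = rng.normal(size=(20, 10))          # placeholder features of 20 posts
A, S, errors = alternating_minimization(X, k=4)
```

In the dual-branch setting described above, the same loop would be run once for the propagation features and once for the text features.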

3.2.4. Classification of Rumors

To classify rumors, a rumor detection model is designed based on the propagation representation g of a post c.

Here, l(·) is the loss function, which may be the cross-entropy loss or a contrastive loss that measures the consistency between the predicted results and the target, and f1 and f2 are two independent classifiers that make predictions on the data from different perspectives or after augmentation. By combining the predictions of f1 and f2, the model can explore the potential correlation between rumor propagation patterns (such as forwarding timing) and semantic features (such as keyword contradictions), and enhance its ability to recognize hidden rumors across platforms. The rumor detector is built on top of the propagation representation g of post c: p(c), the probability that post c is a rumor, is computed from g using the trainable matrix $\mathbf{w}_g$ and the bias term b. The classification task is optimized using the cross-entropy loss function.

The resulting rumor propagation tree representation, denoted as r, is fed into a feed-forward neural network layer to determine whether it corresponds to a rumor. Cross-entropy is employed as the classification loss to measure the discrepancy between the true labels and the predicted labels. A smaller cross-entropy value indicates more accurate predictions. Therefore, for each rumor event classifier, the objective is to minimize the cross-entropy loss function, as follows:

$$\mathcal{L} = -\sum_{c \in C} y_c \log p(c) + \lambda \lVert \theta \rVert_2^2$$

where $y_c$ represents the true label corresponding to event c, $\lVert \theta \rVert_2^2$ is the L2 regularization term applied to all parameters θ of the network model, and λ is the balance coefficient.
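The regularized objective can be sketched as follows. The sketch assumes a softmax over class logits and averages the cross-entropy over the batch; both are illustrative choices, and the function name `classification_loss` is hypothetical. The default λ = 0.01 matches the balance coefficient reported in the experimental settings.

```python
import numpy as np

def classification_loss(logits, labels, params, lam=0.01):
    """Cross-entropy over class logits plus an L2 penalty on the parameters.

    logits: (B, num_classes) scores; labels: (B,) integer class indices;
    params: list of parameter arrays; lam: the balance coefficient.
    """
    logits = logits - logits.max(axis=1, keepdims=True)    # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    cross_entropy = -log_probs[np.arange(len(labels)), labels].mean()
    l2_penalty = sum(float(np.sum(p ** 2)) for p in params)
    return float(cross_entropy + lam * l2_penalty)

# Uniform logits over the four Twitter classes give a cross-entropy of log(4).
logits = np.zeros((2, 4))
labels = np.array([0, 1])
params = [np.ones((2, 2))]              # squared L2 norm = 4
loss = classification_loss(logits, labels, params)
```

With uninformative predictions the loss equals log(4) plus the penalty, which gives a quick sanity check for any implementation of the objective.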

4. Experiments and Analysis

To evaluate the effectiveness of the proposed method, we conduct experiments using three publicly available datasets, with the aim of addressing the following key research questions:

- RQ1: How does PAGCN perform in comparison to individual baseline models in terms of overall effectiveness?

- RQ2: What are the contributions of components such as propagation nodes, the bidirectional Transformer model, and the integration of path attention mechanisms to PAGCN’s rumor detection capabilities?

- RQ3: How well does PAGCN perform in detecting rumors at early stages of propagation?

4.1. The Dataset

To evaluate our model, we used three classic rumor datasets to compare its performance with existing related models: Twitter15 [4], Twitter16 [4], and Weibo [23]. The Twitter15 and Twitter16 datasets were collected and organized by Ma et al. [4] from the widely used social media platform Twitter, and the data were labeled using veracity tags from debunking websites such as snopes.com and emergent.info. The Twitter15 and Twitter16 datasets contain 1490 and 818 rumor propagation trees, respectively, each consisting of forwarded posts and comment posts. Since the forwarded posts do not contain any textual information, we removed them and kept only the comment posts. Both datasets include the following four labels: Non-Rumor (NR), Verified False Rumor (FR), Verified True Rumor (TR), and Unverified Rumor (UR). The Weibo dataset was collected and organized by Ma et al. [23] from Weibo, a widely used social media platform in China. It contains a total of 4664 rumor instances with the following two labels: verified false rumors (FR) and verified true rumors (TR). The instances labeled FR were obtained through the reporting and processing hall of Weibo. The statistics of the three datasets are shown in Table 2.

Table 2.

Statistics of the dataset.

We used 5-fold cross-validation on the three datasets to train and test the model. The Twitter15 and Twitter16 datasets were collected from the English-language platform Twitter, so we used the WordNetLemmatizer from the NLTK (Natural Language Toolkit) library for lemmatization and the TweetTokenizer for tokenization. For the Weibo dataset, collected from the Chinese platform Weibo, we removed hyperlinks, which carry no semantic information, and then segmented the text with jieba. For all three datasets, we did not remove punctuation marks such as “!” and “?”, and we retained emojis and emoticons that may appear in the text during word segmentation, such as “:” and “(”. These symbols carry emotional information and help to obtain more suitable sentence-level semantic representations for rumor detection. Finally, we selected the 5000 most frequent words in the dataset for model training.
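The vocabulary selection step described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names `build_vocab` and `encode`, the special tokens `<pad>` and `<unk>`, and the toy posts are all assumptions introduced here. The input is expected to be already tokenized (for example by TweetTokenizer or jieba), with punctuation tokens retained as in the text.

```python
from collections import Counter

def build_vocab(tokenized_posts, max_size=5000, specials=("<pad>", "<unk>")):
    """Map the `max_size` most frequent tokens to integer ids.

    `specials` are hypothetical reserved tokens for padding and unknown words.
    """
    counts = Counter(tok for post in tokenized_posts for tok in post)
    vocab = {tok: i for i, tok in enumerate(specials)}
    for tok, _ in counts.most_common(max_size):
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def encode(post, vocab):
    """Replace each token by its id; out-of-vocabulary tokens map to <unk>."""
    unk = vocab["<unk>"]
    return [vocab.get(tok, unk) for tok in post]

# Toy example: punctuation such as "!" and "?" is kept as ordinary tokens.
posts = [["is", "this", "true", "?"], ["this", "is", "fake", "!", "!"]]
vocab = build_vocab(posts, max_size=3)
ids = encode(["this", "is", "new", "!"], vocab)
```

With `max_size=5000` the same function would reproduce the vocabulary size stated in the text; the small value here only keeps the example readable.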

To compare the experimental results of the various models fairly, Accuracy and F1 score were used as evaluation metrics on the Twitter15, Twitter16, and Weibo datasets. The higher the accuracy, the better the classification performance of the model, and the higher the F1 value, the better its overall performance. We also report the F1 value for each class of the three datasets. On the Weibo dataset, we additionally used Precision and Recall to evaluate performance on each class.

The experimental environment uses PyTorch 2.8. In the Adam algorithm, β1 and β2 are set to 0.9 and 0.999, respectively. The initial learning rate lr is set to 0.01, the dropout probability to 0.5, the balance coefficient λ to 0.01, and the hidden layer dimension to 300. The word embeddings are initialized as 300-dimensional word vectors and are kept trainable during training. For the Twitter15 and Twitter16 datasets, which share the same settings, the length N of the fixed-length propagation path sequence is set to 50, Nt is set to 1 layer, and the number of heads h in multi-head attention is set to 4. Because the average number of posts in the Weibo dataset is much higher than in the other two datasets and it has a larger average number of propagation paths, we set h to 6 and N to 90 for the Weibo dataset. The other parameter settings for the Weibo dataset are the same as for the other two datasets.
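For reference, the settings listed above can be collected in one configuration object. The sketch below is only an illustration: the class name `PAGCNConfig` and all field names are assumptions introduced here, and only the values are taken from the text.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class PAGCNConfig:
    # Field names are hypothetical; values follow the experimental settings.
    lr: float = 0.01              # initial learning rate
    betas: tuple = (0.9, 0.999)   # Adam beta1, beta2
    dropout: float = 0.5
    l2_lambda: float = 0.01       # balance coefficient lambda
    hidden_dim: int = 300
    embed_dim: int = 300          # trainable word vectors
    vocab_size: int = 5000
    path_len: int = 50            # N, fixed propagation path length
    num_layers: int = 1           # Nt in the text
    num_heads: int = 4            # h in multi-head attention

TWITTER = PAGCNConfig()
# Weibo only overrides h and N; everything else is shared.
WEIBO = replace(TWITTER, num_heads=6, path_len=90)

CONFIGS = {"twitter15": TWITTER, "twitter16": TWITTER, "weibo": WEIBO}
```

Both head counts (4 and 6) divide the hidden dimension of 300 evenly, which is convenient if each head is given an equal slice of the hidden vector.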

4.2. Baselines

The proposed rumor detection method PAGCN is compared with the following baselines:

- BERT [8]. BERT aims to pre-train a powerful language model through an unsupervised learning method, which can be transferred to various NLP tasks. Its key innovation lies in using bidirectional context information, and employing masked language modeling (MLM) and next sentence prediction (NSP) tasks during pre-training, significantly improving the performance across NLP tasks.

- STS-NN [24]. A spatial-temporal structure neural network that learns the spatial structure and the temporal structure of message propagation jointly for rumor detection.

- EBGCN [20]. EBGCN is a graph neural network model that processes edge information in graphs. It introduces an edge bias mechanism to enhance the performance of graph convolutional networks, especially in tasks where edge information plays a crucial role.

- RvNN-GA [25]. An attention-based rumor detection model built on tree-structured recursive neural networks (RvNN), where GA refers to the global attention mechanism that weights the nodes of the propagation tree.

- Sta-PLAN [26]. A variant of the PLAN model, Sta-PLAN incorporates rumor spreading structure information to some extent, enhancing rumor detection capabilities.

- DTC [27]. DTC is a decision tree-based classification algorithm that divides the dataset’s features conditionally, gradually building a decision tree to make predictions.

- SVM-TS [28]. This method constructs a time series model using a linear SVM classifier and hand-crafted features for rumor detection.

- RvNN [21]. A rumor detection approach based on tree-structured recurrent neural networks, using GRU units to learn rumor representations via the propagation structure.

- GCAN [29]. GCAN focuses on enhancing graph data representation by flexibly attending to the most important parts of a graph, improving performance on large-scale or complex graph structures.

- Bi-GCN [22]. This model employs a GCN to learn rumor propagation tree representations, effectively capturing the structure of propagation trees.

- RvNN* [30]. A modified version of the RvNN model that uses the AdaGrad algorithm instead of momentum gradient descent in the training process.

- GACL [31]. This method uses node information in graph structure data for representation learning within a self-supervised learning framework, which improves the performance of downstream tasks such as node classification.

- DAN-Tree [32]. A deep learning model that combines tree structures with a dual attention mechanism (node-level and path-level attention), aimed at text classification tasks.

- BiGAT [33]. A neural topic model that detects rumors at the topic level, overcoming the cold start problem, and analyzes the dynamic characteristics of rumor topic propagation for authenticity detection.

- TG-GCN [34]. This model uses graph convolutional networks to transmit information between nodes and employs an attention mechanism to distinguish the influences of different nodes on rumor detection, providing accurate node representations.

- RECL [35]. This rumor detection model performs self-supervised contrastive learning at both the relation and event layers to enrich the self-supervised signals for rumor detection.

- FSRU [36]. A novel spectrum representation and fusion network based on double contrastive learning, which effectively converts spatial features into spectral representations, yielding highly discriminative multimodal features.

- (UMD)² [37]. An unsupervised fake news detection framework that encodes multi-modal knowledge into low-dimensional vectors. This framework uses a teacher-student architecture to assess the truthfulness of news by aligning multiple modalities.

- ERGCN [38]. This model extracts valuable entity information from both visual objects and textual descriptions, leveraging external knowledge to construct cross-modal graphs for each image-text pair, facilitating the detection of semantic inconsistencies between modalities.

- FND-CLIP [39]. A method that leverages the multimodal cognitive capabilities of CLIP, generating self-directed attentional weights to fuse features based on modal similarity computed by CLIP.

- BMR [40]. This model utilizes multi-view representations of news features, employing the Mixture of Experts network for the fusion of multi-view features.

4.3. Experimental Results and Analysis

To verify the performance of the proposed method, experiments are carried out on three public rumor detection datasets, and the model is compared with the baseline models. Ablation experiments are then carried out for each module in the model, and the contribution of each module to the whole model is analyzed. The widely used Accuracy, Precision, Recall, and F1 score serve as the indicators for evaluating the overall performance of PAGCN.

TP (true positive) denotes positive samples that the model correctly predicts as positive. FP (false positive) denotes negative samples that the model incorrectly predicts as positive. FN (false negative) denotes positive samples that the model incorrectly predicts as negative. TN (true negative) denotes negative samples that the model correctly predicts as negative.
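The four indicators follow from these counts by their standard definitions. The sketch below computes them for one class; the function name `binary_metrics` and the example counts are hypothetical and are not results from this paper.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, Precision, Recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical counts for illustration only.
m = binary_metrics(tp=45, fp=5, fn=3, tn=47)
```

For the four-class Twitter datasets, the same function can be applied per class by treating that class as positive and all others as negative.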

To answer RQ1, the PAGCN model is compared with the algorithms introduced in Section 4.2. The experimental results on the three datasets, presented in Table 3, Table 4 and Table 5, compare PAGCN with the baseline methods. It can be observed that PAGCN significantly outperforms the baseline models across all indicators.

Table 3.

Performance comparison on the Twitter15 dataset.

Table 4.

Performance comparison on the Twitter16 dataset.

Table 5.

Performance comparison on the Weibo dataset.

The quantitative results in Table 3, Table 4 and Table 5 indicate that the proposed path attention graph convolutional network has clear performance advantages over the baseline models. The classification accuracy of PAGCN on the Weibo dataset is approximately 94% (0.939). This improvement is mainly attributed to the integration of the attention mechanism into the graph construction of PAGCN. Through dynamic weight assignment, the mechanism enhances the features of key propagation paths and thereby improves the feature extraction ability of the model. In contrast, the traditional baseline models lack such an adaptive feature aggregation design and often suffer from insufficient feature representation when handling network data with complex topology.

To quantify the uncertainty of the reported indicators, we use 95% confidence intervals (95% CI). All confidence intervals were calculated using the bias-corrected bootstrap method (1000 resamples) to reduce the impact of small-sample bias on the estimates. The Precision is 0.909 (95% CI [0.8915, 0.9265]) on the Twitter15 dataset, 0.901 (95% CI [0.8816, 0.9195]) on the Twitter16 dataset, and 0.939 (95% CI [0.930, 0.948]) on the Weibo dataset. The Weibo interval does not overlap with either Twitter interval, indicating statistically significant differences (p < 0.05). This interval estimation enhances the interpretability of the results and provides a statistical basis for cross-dataset performance comparison.
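A bias-corrected bootstrap interval of this kind can be sketched with the standard library alone. The code below is an assumption-laden illustration rather than the authors' procedure: it implements the bias-corrected percentile interval without an acceleration term, the function name `bc_bootstrap_ci` is introduced here, and the input is a hypothetical list of per-sample correctness flags.

```python
import random
from statistics import NormalDist

def bc_bootstrap_ci(outcomes, n_boot=1000, alpha=0.05, seed=0):
    """Bias-corrected bootstrap CI for the mean of 0/1 outcomes."""
    rng = random.Random(seed)
    n = len(outcomes)
    theta_hat = sum(outcomes) / n
    # Resample with replacement and recompute the statistic each time.
    boots = sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_boot))
    # Bias-correction constant z0 from the share of replicates below theta_hat
    # (clamped so the inverse normal CDF stays finite).
    share = sum(b < theta_hat for b in boots) / n_boot
    share = min(max(share, 1 / (n_boot + 1)), n_boot / (n_boot + 1))
    nd = NormalDist()
    z0 = nd.inv_cdf(share)
    # Shift the nominal percentiles by 2 * z0.
    p_lo = nd.cdf(2 * z0 + nd.inv_cdf(alpha / 2))
    p_hi = nd.cdf(2 * z0 + nd.inv_cdf(1 - alpha / 2))
    lo = boots[min(n_boot - 1, max(0, int(p_lo * n_boot)))]
    hi = boots[min(n_boot - 1, max(0, int(p_hi * n_boot)))]
    return theta_hat, lo, hi

# Hypothetical predictions: 90 correct and 10 incorrect out of 100.
outcomes = [1] * 90 + [0] * 10
estimate, lower, upper = bc_bootstrap_ci(outcomes)
```

The interval width shrinks as the number of evaluated samples grows, which is one reason intervals from datasets of different sizes should be compared with care.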

Traditional rumor detection methods based on feature engineering have significant limitations in information classification tasks, because their hand-crafted feature representations struggle to capture the complex patterns of rumor propagation. In contrast, the proposed path attention graph convolutional network integrates the path attention mechanism and extracts rumor features more accurately through multi-granularity topology modeling. The path attention mechanism quantifies the differing contributions of nodes along the propagation path, which gives it a clear advantage over traditional methods in feature representation ability. Experimental results show that the architecture performs well on key indicators such as accuracy and F1 value.

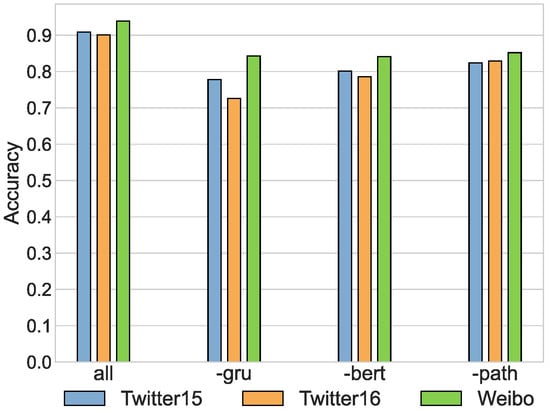

4.4. Results and Analysis of Ablation Experiments

To answer RQ2 and to evaluate the individual contribution of each module, ablation experiments are conducted by removing the GRU architecture in the PAGCN model, the pre-trained language model BERT, and the social context feature path, respectively. The experimental comparison results are shown in Table 6.

Table 6.

Results of ablation experiment.

In Table 6, key components of the model (the gated recurrent unit, the pre-trained language model BERT, and the social context features) are removed in turn while all other hyper-parameters are held fixed, so that the impact of each module on rumor detection performance can be quantified. The specific steps are as follows:

Baseline model: The accuracy of the complete model (including GRU, BERT, and social context features) on the Weibo dataset is 0.939.

Module removal: After removing the social context features, the accuracy dropped to 0.852, reflecting the role of these features in rumor detection. Although this feature contains some useful information (such as propagation path patterns), it also introduces noise (such as irrelevant user social behavior data). After removing the GRU, the model accuracy decreased to 0.843 (a decrease of 9.6 percentage points), indicating that the GRU plays a critical role in sequence modeling and long-range dependency capture [36]. After removing BERT, the accuracy decreased to 0.841 (a decrease of 9.8 percentage points), highlighting the contribution of pre-trained language models to semantic understanding. Figure 2 shows the specific contribution differences of each module on different datasets.
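The drops quoted above are absolute differences in accuracy, which is easy to confirm from the reported values. The short sketch below recomputes them and also derives the relative change with respect to the full model; the variant labels and the function name `accuracy_drops` are chosen here for illustration, and the mapping of 0.852 to the "-path" variant is our reading of the text.

```python
# Weibo accuracies as reported in the ablation discussion.
FULL = 0.939
ABLATED = {"-path": 0.852, "-GRU": 0.843, "-BERT": 0.841}

def accuracy_drops(full, ablated):
    """Return (absolute drop in percentage points, relative drop in %)."""
    out = {}
    for name, acc in ablated.items():
        absolute_points = (full - acc) * 100
        relative_percent = (full - acc) / full * 100
        out[name] = (round(absolute_points, 1), round(relative_percent, 1))
    return out

result = accuracy_drops(FULL, ABLATED)
```

Reporting both numbers avoids ambiguity, since a relative drop is always somewhat larger than the corresponding drop in percentage points when the baseline accuracy is below 1.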

Figure 2.

Results of ablation experiments.

In Figure 2, “-GRU” denotes the model with the gated recurrent unit (GRU) component removed, “-BERT” denotes the model without the pre-trained language model BERT, and “-path” denotes the model without propagation path information. The experimental results indicate that the accuracy decreases whenever any component is removed. For example, without the propagation structure features, the model’s accuracy drops by approximately 8.7 percentage points.

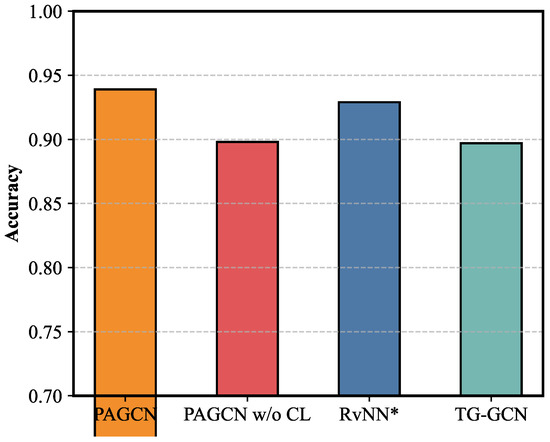

To verify the effectiveness of the introduced contrastive learning method, we removed it from the model and compared the result with the simple fusion baselines RvNN* and TG-GCN. CL denotes the removal of contrastive learning from the model, and the comparative results on Weibo are shown in Figure 3.

Figure 3.

The ablation experiment on Weibo.

In Figure 3, the results on Weibo show that PAGCN with the contrastive learning mechanism has a clear advantage over the compared variants (PAGCN without contrastive loss, and RvNN* and TG-GCN without contrastive loss). It is 1% and 4.2% higher than RvNN* (an improved version of RvNN from the literature) and TG-GCN without the contrastive mechanism, respectively. This improvement arises because the contrastive loss uses negative sampling to separate noise in the heterogeneous feature space, whereas a simple concatenation strategy causes feature confusion because it lacks explicit cross-modal constraints. The experimental data support the necessity of contrastive learning in latent space mapping: by maximizing mutual information, it achieves more robust concept alignment than traditional fusion strategies.
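To make the role of negative sampling concrete, the sketch below implements an InfoNCE-style contrastive loss, a common objective whose minimization maximizes a lower bound on mutual information. This section does not state the exact form of the loss used in PAGCN, so the objective, the cosine similarity, the temperature value, and the function names are all assumptions made for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def info_nce(anchor, positive, negatives, temperature=0.5):
    """-log softmax score of the positive among positive + negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Log-sum-exp with max subtraction for numerical stability.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum - logits[0]

# Hypothetical 2-d embeddings: e.g. structural view as anchor,
# semantic view of the same event as positive, other events as negatives.
anchor = [1.0, 0.0]
aligned = info_nce(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce(anchor, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
```

The loss is small when the positive pair is closer than every negative and grows when a negative is more similar to the anchor, which is the explicit constraint that plain concatenation of features does not provide.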

4.5. Experimental Results and Analysis of Early Rumor Detection Based on PAGCN Model

To answer RQ3, we evaluate early rumor detection. The early stage of a rumor usually refers to the first few retweets or the first few hours after the rumor is posted. This section evaluates the early rumor detection ability of the proposed method and the baseline methods by limiting, respectively, the number of retweets of the source post and the time elapsed since its publication.

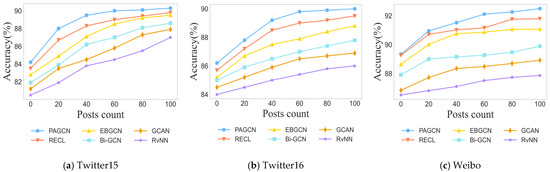

4.5.1. Early Rumor Detection with Limited Retweets

The performance of the model is evaluated by limiting the number of user retweets observed after the source post is published and calculating the rumor detection accuracy at each limit. The early rumor detection results for different numbers of retweets are shown in Figure 4. As the number of retweets increases, the detection accuracy of PAGCN improves. PAGCN and RECL achieve high accuracy on the different datasets soon after the initial propagation of rumors. This trend indicates that PAGCN efficiently integrates information from adjacent nodes and learns accurate node representations, which strengthens its ability to detect rumors in the early stages of dissemination. On the Weibo dataset, PAGCN outperforms RECL when fewer than 10 posts are involved. This advantage can be attributed to PAGCN’s ability to explore the intra-layer dependencies formed when multiple users repost the same post, which shows that the proposed method can model inter-layer and intra-layer dependencies simultaneously, leading to more accurate rumor detection.

Figure 4.

Early rumor detection results based on different number of retweets.

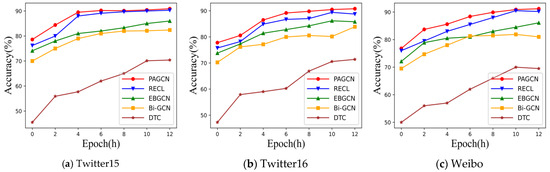

4.5.2. Early Rumor Detection in Finite Time

By restricting the observed propagation to posts published before a specified deadline after the source post, the rumor detection accuracy over different periods is calculated to evaluate the PAGCN model. The experimental results are shown in Figure 5. As time progresses, the detection methods improve to varying degrees, indicating that the structural information becomes increasingly rich as the information propagates.

Figure 5.

Early rumor detection results based on different deadlines.

Furthermore, in the early stages of information propagation, the graph-based structure captures more comprehensive characteristics of the propagation pattern, leading to a superior detection performance compared to other baselines. Notably, on the Weibo dataset, the rumor detection performance of PAGCN outperforms all the baseline models.
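The two early-detection settings of Sections 4.5.1 and 4.5.2 both amount to truncating each propagation tree before it is passed to the model. The sketch below shows one way to do this; the `Post` record, its field names, and the toy thread are hypothetical, and the connectivity check is an added safeguard rather than a step described in the paper.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class Post:
    post_id: str
    parent_id: Optional[str]  # None for the source post
    minutes: float            # time elapsed since the source post

def _connected(posts: List[Post]) -> List[Post]:
    """Keep only posts whose parent was kept, so the result is still a tree."""
    kept, seen = [], set()
    for post in sorted(posts, key=lambda p: p.minutes):
        if post.parent_id is None or post.parent_id in seen:
            kept.append(post)
            seen.add(post.post_id)
    return kept

def limit_by_retweets(posts: List[Post], max_retweets: int) -> List[Post]:
    """Source post plus the earliest `max_retweets` responses."""
    ordered = sorted(posts, key=lambda p: p.minutes)
    source = [p for p in ordered if p.parent_id is None]
    replies = [p for p in ordered if p.parent_id is not None]
    return _connected(source + replies[:max_retweets])

def limit_by_deadline(posts: List[Post], deadline_minutes: float) -> List[Post]:
    """All posts published no later than the deadline."""
    return _connected([p for p in posts if p.minutes <= deadline_minutes])

# Hypothetical thread for illustration.
thread = [Post("s", None, 0), Post("a", "s", 5), Post("b", "a", 12),
          Post("c", "s", 30), Post("d", "c", 90)]
first_two = [p.post_id for p in limit_by_retweets(thread, 2)]
within_30 = [p.post_id for p in limit_by_deadline(thread, 30)]
```

Sweeping `max_retweets` or `deadline_minutes` over a grid and re-evaluating accuracy at each value yields curves of the kind plotted in Figure 4 and Figure 5.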

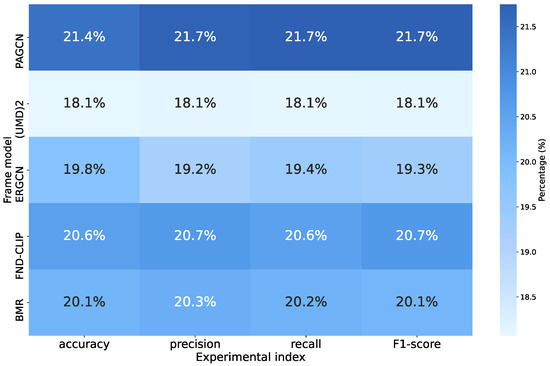

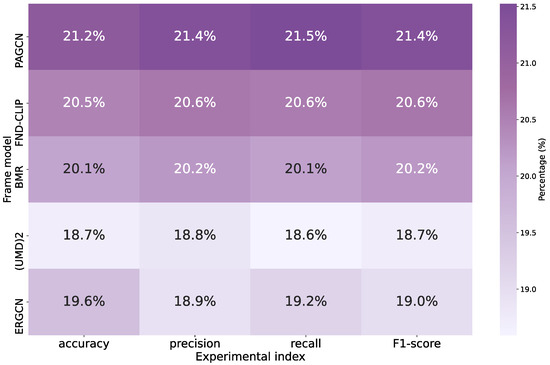

4.6. Effects of the Attention Mechanisms

In this study, the introduction of the attention mechanism significantly enhances the accuracy and robustness of rumor propagation analysis. By dynamically adjusting the weights of nodes in the propagation graph, the attention mechanism allows the model to focus on key propagation paths and features. For instance, in the PAGCN algorithm, the attention module directs the model’s focus toward nodes with a high propagation risk by calculating the semantic correlation between nodes.
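As a minimal sketch of how such node weights can be obtained, the code below scores each node on a path against a query vector and normalizes the scores with a softmax. The scaled dot-product scoring, the single head, and the function names are assumptions made here for illustration; the exact scoring function of PAGCN is defined in the method section, not in this sketch.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(query, node_vectors):
    """Weight each node by its scaled dot-product with the query, then pool."""
    dim = len(query)
    scores = [sum(q * k for q, k in zip(query, vec)) / math.sqrt(dim)
              for vec in node_vectors]
    weights = softmax(scores)
    pooled = [sum(w * vec[i] for w, vec in zip(weights, node_vectors))
              for i in range(dim)]
    return weights, pooled

# Hypothetical 2-d node embeddings along one propagation path.
query = [1.0, 0.0]
nodes = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, pooled = attention_pool(query, nodes)
```

Nodes that are semantically closer to the query receive larger weights, so they dominate the pooled path representation; averaging such weights over a dataset gives statistics of the kind reported in Figure 6 and Figure 7.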

The experiments presented in Figure 6 and Figure 7 demonstrate that, compared to (UMD)², the model incorporating the attention mechanism improves the accuracy of rumor classification by approximately 2.5–3.3% across different datasets. Additionally, the F1 score for early detection in dynamic propagation scenarios increases by about 2.7–3.6%. Furthermore, the layered attention mechanism uncovers the hierarchical structure of communication, effectively reducing noise interference by capturing both local interaction features and global communication patterns through a two-stage attention process. These improvements make the model more suitable for complex communication scenarios.

Figure 6.

Results of average attention weights on Twitter16.

Figure 7.

Results of average attention weights on Weibo.

5. Conclusions

This paper proposes PAGCN (Path Attention Graph Convolution Network), a rumor detection method that combines structural and semantic relationships with an attention mechanism. By integrating modern graph analysis with text semantic representation, it addresses the low accuracy of traditional rumor detection methods. The proposed PAGCN model achieves competitive results and offers potential for further research. Training is stable in the early stage, and the curve flattens by the fourth stage. Experiments show that the framework reaches accuracies of 90.9% and 93.9% on the Twitter15 and Weibo datasets, respectively, and an F1 score of 94% on the Weibo dataset, which confirms the effectiveness of the framework in capturing the propagation structure and semantic information at the same time. A limitation of the proposed PAGCN method is that it does not perform well on multi-modal rumors. In the future, we will focus on multimodal feature fusion for rumor detection.

Author Contributions

Conceptualization, X.L. and D.W.; methodology, software, validation, formal analysis, X.L.; investigation, writing—review and editing, D.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported in part by the Key Project of Chongqing Municipal Education Commission (23SKGH247) and the Graduate Innovation Fund of Chongqing University of Technology (gzlcx20242044).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Bian, T.; Xiao, X.; Xu, T.Y.; Zhao, P.L.; Huang, W.B.; Rong, Y.; Huang, J.Z. Rumor detection on social media with bi-directional graph convolutional networks. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, Menlo Park, CA, USA, 7–12 February 2020; pp. 549–556.

2. Liu, X.Y.; Wen, G.L.; Wang, A.J.; Liu, C.; Wei, W.; Meo, P. GGDHSCL: A Graph Generative Diffusion with Hard Negative Sampling Contrastive Learning Recommendation Method. IEEE Trans. Comput. Soc. Syst. 2025, 16, 1–16.

3. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

4. Lu, M.; Huang, Z.; Li, B.; Zhao, Y.; Qin, Z.; Li, D. SIFTER: A Framework for Robust Rumor Detection. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 429–442.

5. Liu, X.Y.; Han, Q.; Wang, W.; Zhan, X.X.; Lu, L.; Ye, Z.J. GAWF: Influence Maximization Method Based on Graph Attention Weight Fusion. Int. J. Mod. Phys. C. 2025, 22, 2542002.

6. Zhang, K.Q.; Liu, X.Y.; Zhao, N.; Liu, S.; Li, C.R. Dual channel semantic enhancement-based convolutional neural networks model for text classification. Int. J. Mod. Phys. C. 2025, 36, 2442012.

7. Yu, J.L.; Yin, H.Z.; Xia, X.; Chen, T.; Cui, L.Z.; Nguyen, Q.V.H. Are graph augmentations necessary? Simple graph contrastive learning for recommendation. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 1294–1303.

8. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 2171–2186.

9. Ma, J.; Gao, W.; Mitra, P. Detecting rumors from microblogs with recurrent neural networks. In Proceedings of the 25th International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; pp. 3818–3824.

10. Liu, X.; Zhao, Y.; Zhang, Y.; Liu, C.; Yang, F. Social Network Rumor Detection Method Combining Dual-Attention Mechanism with Graph Convolutional Network. IEEE Trans. Comput. Soc. Syst. 2023, 10, 2350–2361.

11. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

12. Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph attention networks. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–12.

13. Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. In Proceedings of the 30th Conference on Neural Information Processing Systems (NeurIPS 2016), Barcelona, Spain, 5–10 December 2016; pp. 3844–3852.

14. Tschannen, M.; Djolonga, J.; Rubenstein, P.K.; Gelly, S.; Lucic, M. On Mutual Information Maximization for Representation Learning. arXiv 2019, arXiv:1907.13625.

15. Ma, J.; Gao, W. Debunking Rumors on Twitter with Tree Transformer. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 5455–5466.

16. Shu, K.; Wang, S.; Liu, H. Beyond news contents: The role of social context for fake news detection. In Proceedings of the 12th ACM International Conference on Web Search and Data Mining, Melbourne, Australia, 11–15 February 2019; pp. 312–323.

17. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the 2017 International Conference on Learning Representations, Toulon, France, 24–26 April 2017; pp. 1202–1214.

18. Mehta, N.; Pacheco, M.L.; Goldwasser, D. Tackling fake news detection by continually improving social context representations using graph neural networks. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 1363–1380.

19. Gao, W.; Fang, Y.; Li, L.; Tao, X.H. Event detection in social media via graph neural network. In Proceedings of the 22nd International Conference on Web Information Systems Engineering, Melbourne, Australia, 26–29 October 2021; pp. 1370–1384.

20. Wei, L.W.; Hu, D.; Zhou, W.; Yue, Z.J.; Hu, S.L. Edge-enhanced Bayesian graph convolutional networks for rumor detection. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Bangkok, Thailand, 1–6 August 2021; pp. 3845–3854.

21. Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.; Ng, A.; Potts, C. Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing (EMNLP), Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642.

22. Sattarov, O.; Choi, J. Detection of Rumors and Their Sources in Social Networks: A Comprehensive Survey. IEEE Trans. Big Data. 2025, 11, 1528–1547.

23. Ma, G.; Hu, C.; Ge, L.; Zhang, H. DSMM: A dual stance-aware multi-task model for rumour veracity on social networks. Inf. Process. Manag. 2024, 6, 103528.

24. Huang, Q.; Zhou, C.; Wu, J.; Liu, L.C.; Wang, B. Deep spatial-temporal structure learning for rumor detection. Neural Comput. Appl. 2020, 35, 12995–13005.

25. Ma, J.; Gao, W. An attention-based rumor detection model with tree-structured recursive neural networks. ACM Trans. Intell. Syst. Technol. 2020, 11, 1124–1138.

26. Khoo, L.; Chieu, H.; Qian, Z.; Jiang, J. Interpretable rumor detection in microblogs by attending to user interactions. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, Menlo Park, CA, USA, 7–12 February 2020; pp. 2783–2792.

27. Castillo, C.; Mendoza, M.; Poblete, B. Information credibility on Twitter. In Proceedings of the 20th International Conference on World Wide Web, Hyderabad, India, 28 March–1 April 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 675–684.

28. Ma, J.; Gao, W.; Wei, Z.; Lu, Y.; Wong, K.F. Detect rumors using time series of social context information on microblogging websites. In Proceedings of the 24th ACM International Conference on Information and Knowledge Management, Melbourne, Australia, 19–23 October 2015; pp. 1741–1754.

29. Lu, Y.-J.; Li, C.-T. GCAN: Graph-aware co-attention networks for explainable fake news detection on social media. arXiv 2020, arXiv:2004.11648.

30. Wu, K.; Yang, S.; Zhu, K. False rumors detection on Sina Weibo by propagation structures. In Proceedings of the IEEE 31st International Conference on Data Engineering, Seoul, Republic of Korea, 13–17 April 2015; pp. 651–662.

31. Sun, T.; Qian, Z.; Dong, S.; Li, P.; Zhu, Q. Rumor detection on social media with graph adversarial contrastive learning. In Proceedings of the ACM Web Conference, Lyon, France, 25–29 April 2022; pp. 2789–2797.

32. Wu, J.; Xu, W.; Liu, Q.; Wu, S.; Wang, L. Adversarial contrastive learning for evidence-aware fake news detection with graph neural networks. IEEE Trans. Knowl. Data Eng. 2023, 36, 4589–4597.

33. Wu, L.; Lin, H.; Gao, Z.; Tan, C.; Li, S. Self-supervised learning on graphs: Contrastive, generative, or predictive. IEEE Trans. Knowl. Data Eng. 2021, 35, 659–668.

34. Wu, S.; Tang, Y.; Zhu, Y.; Wang, L.; Xie, X.; Tan, T. Session-based recommendation with graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 343–353.

35. Xu, Y.; Hu, J.; Ge, J.; Wu, Y.; Li, T.; Li, H. Contrastive Learning at the Relation and Event Level for Rumor Detection. In Proceedings of the ICASSP 2023 IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 4–10 June 2023; pp. 1531–1545.

36. Lao, A.; Zhang, Q.; Shi, C.; Cao, L.; Yi, K.; Hu, L.; Miao, D. Frequency Spectrum is More Effective for Multimodal Representation and Fusion: A Multimodal Spectrum Rumor Detector. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 2985–2997.

37. Silva, A.; Luo, L.; Karunasekera, S.; Leckie, C. Unsupervised Domain-agnostic Fake News Detection using Multi-modal Weak Signals. arXiv 2023, arXiv:2305.11349.

38. Li, L.; Jin, D.; Wang, X.; Guo, F.; Wang, L.; Dang, J. Multi-modal sarcasm detection based on cross-modal composition of inscribed entity relations. In Proceedings of the 2023 IEEE 35th International Conference on Tools with Artificial Intelligence, Atlanta, GA, USA, 6–8 November 2023; pp. 918–925.

39. Zhou, Y.; Yang, Y.; Ying, Q.; Qian, Z.; Zhang, X. Multimodal fake news detection via clip-guided learning. In Proceedings of the IEEE International Conference on Multimedia and Expo, Brisbane, Australia, 10–14 July 2023; pp. 2825–2830.

40. Ying, Q.; Hu, X.; Zhou, Y.; Qian, Z.; Zeng, D.; Ge, S. Bootstrapping multi-view representations for fake news detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 5384–5392.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).