Deep Reinforcement and Imitation Learning for Autonomous Driving: A Review in the CARLA Simulation Environment

Abstract

1. Introduction

2. Reinforcement Learning in Autonomous Driving

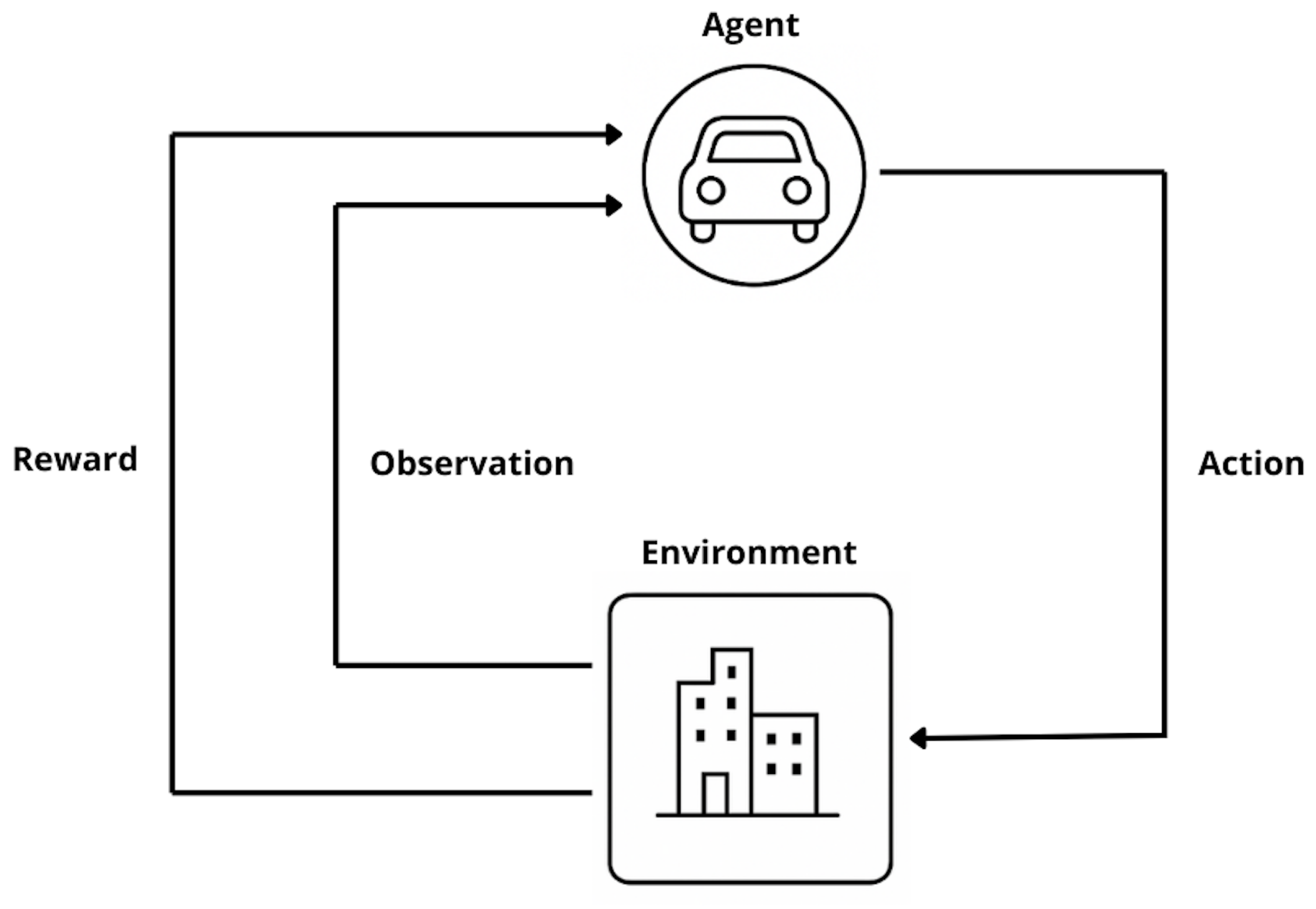

2.1. Overview of Reinforcement Learning

2.2. Related Works About Autonomous Driving

3. Review of Reinforcement Learning Approaches

4. Gaps and Limitations in RL-Based Methods

- Limited Generalization to Real-World Scenarios—Some RL-based models exhibit strong performance within narrowly defined simulation environments but fail to generalize to the complexities of real-world driving. For instance, the agent described in [8] was trained under constrained conditions using a binary action set (stop or proceed). Although suitable for intersection navigation in simulation, this minimalist approach lacks the flexibility and robustness required for dynamic urban environments characterized by unpredictable agent interactions, diverse traffic rules, and complex road layouts.

- Pipeline Complexity and Practical Deployment Issues—Advanced RL systems often involve highly layered and interdependent components, which, while enhancing learning performance in simulation, may hinder real-time applicability. The architecture proposed in [11] integrates multiple sub-models for driving and braking decisions. Despite its efficacy within the training context, the increased architectural complexity poses challenges in deployment scenarios, such as increased inference latency, reduced system interpretability, and difficulties in modular updates or extensions.

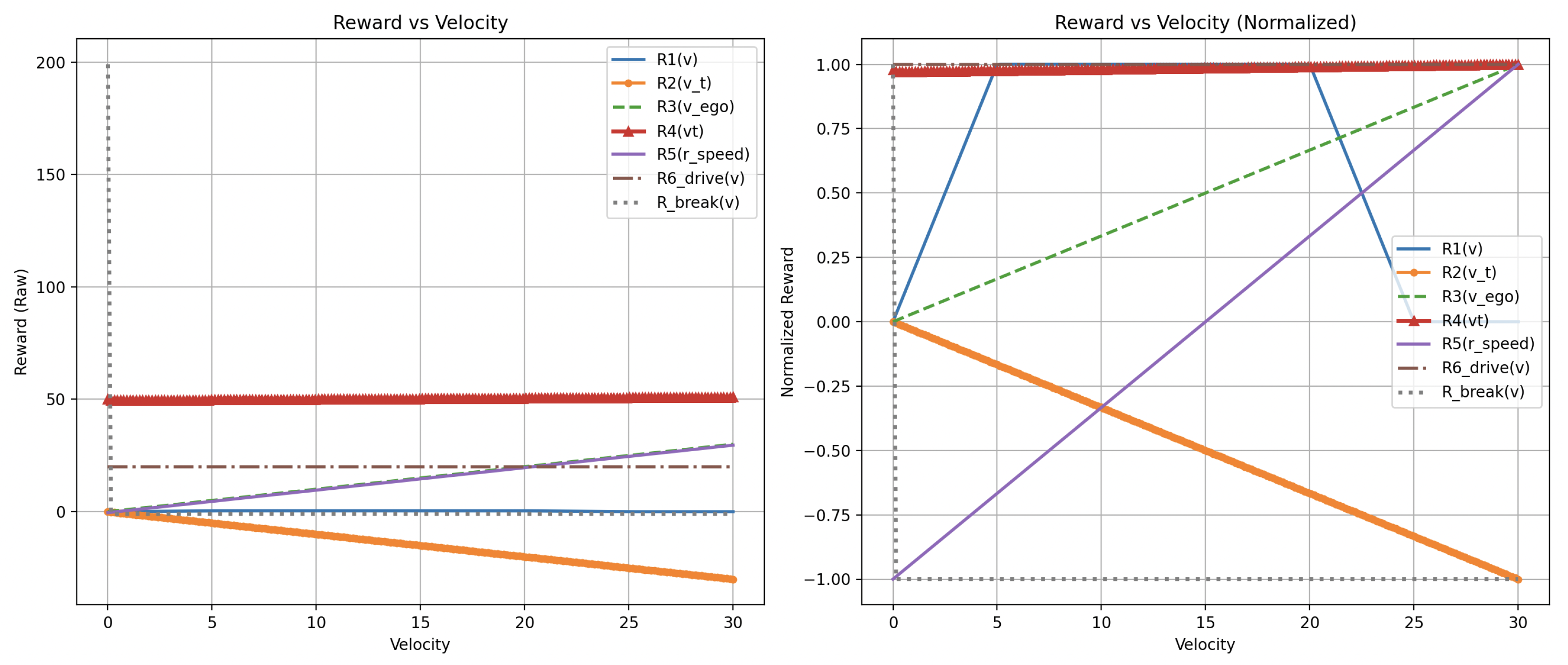

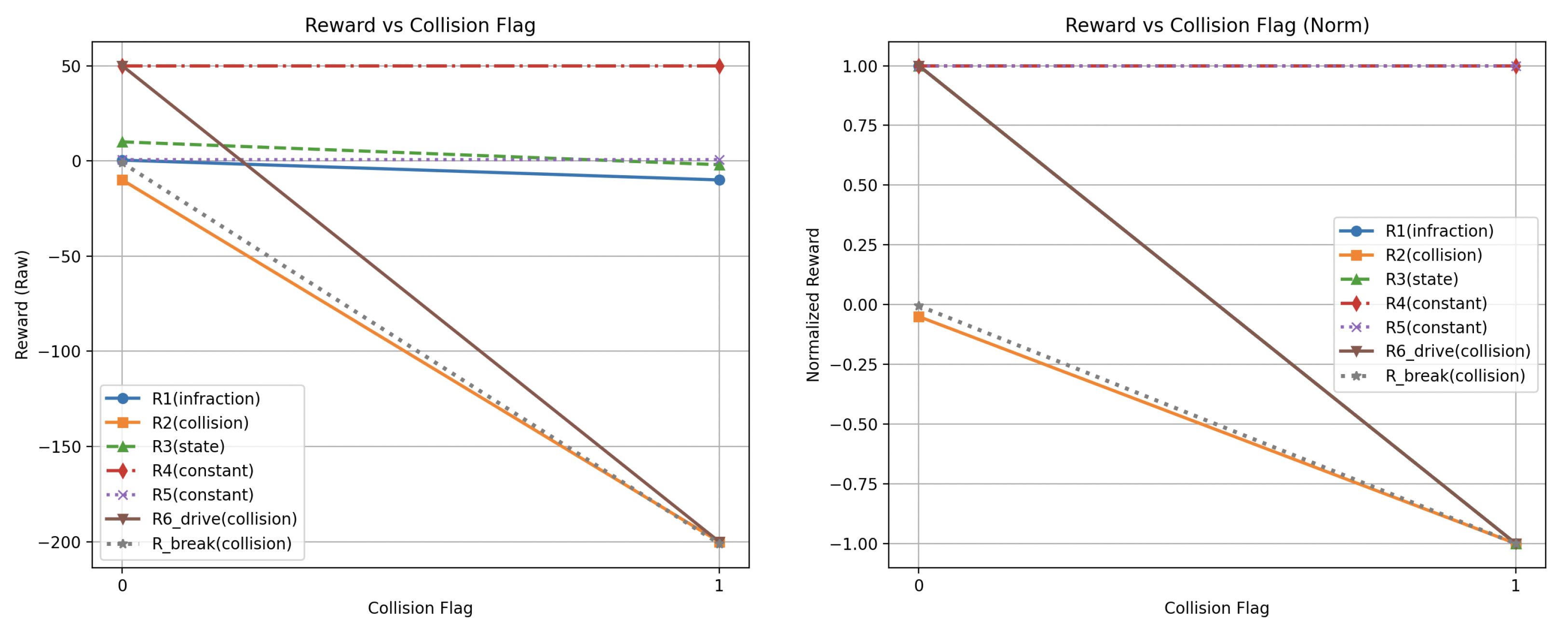

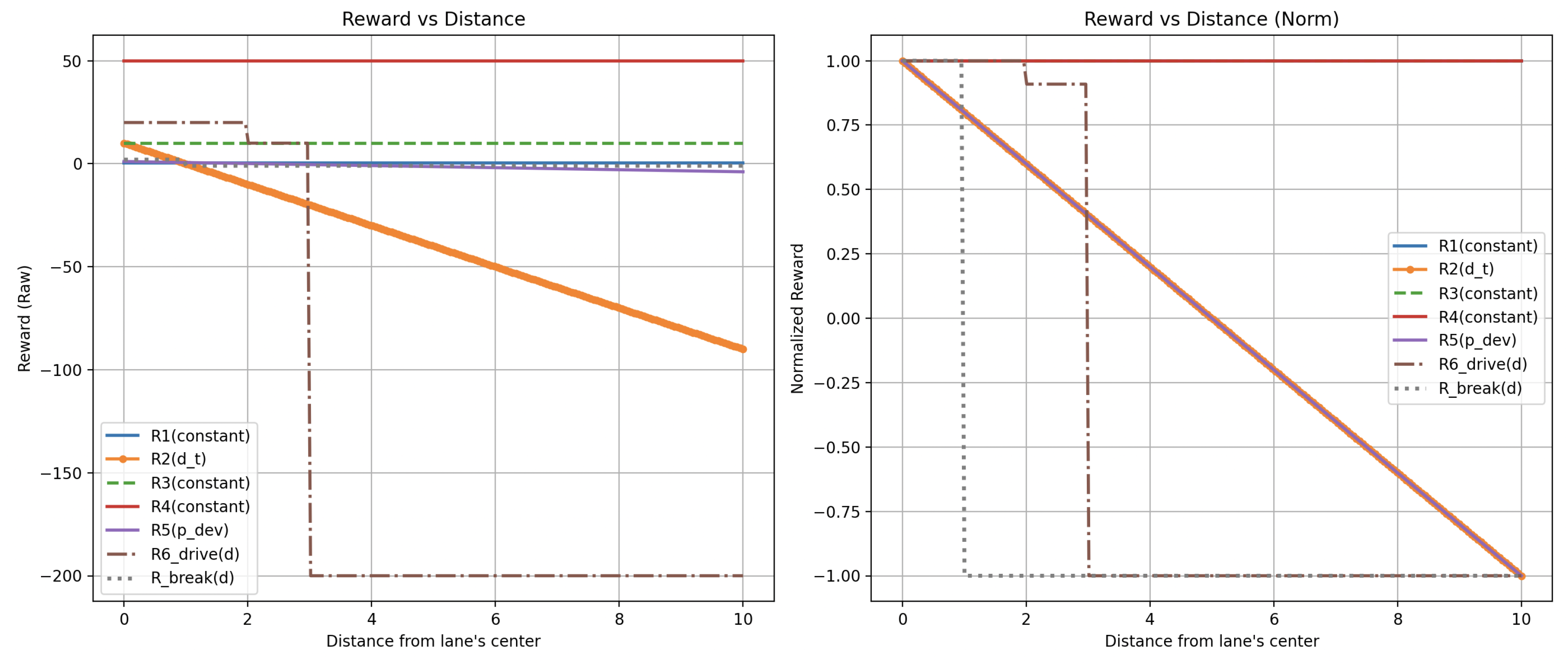

- Reward Function Design and Safety Trade-offs—Crafting a reward function that effectively balances task completion with safe, rule-compliant behavior remains an ongoing challenge. Poorly calibrated rewards may inadvertently incentivize agents to exploit loopholes or adopt high-risk behaviors that maximize rewards at the cost of safety. The reward formulation presented in [7] mitigates this risk by incorporating lane deviation, velocity alignment, and collision penalties. Nevertheless, even well-intentioned designs can result in unintended behaviors if the agent learns to over-prioritize specific features, highlighting the need for reward tuning and safety regularization.

Several works have specifically tackled these limitations by employing advanced RL strategies. Yang et al. (2021) developed uncertainty-aware collision avoidance techniques to enhance safety in autonomous vehicles [59]. Fang et al. (2022) proposed hierarchical reinforcement learning frameworks addressing the complexity of urban driving environments [60]. Cui et al. (2023) further advanced curriculum reinforcement learning methods to tackle complex and dynamic driving scenarios effectively [61]. Feng et al. (2025) utilized domain randomization strategies to enhance the generalization of RL policies, significantly improving performance across varying simulated environments [62].
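
To make the reward-design trade-off concrete, the following minimal Python sketch combines the three ingredients named for [7] (lane deviation, velocity alignment, and a collision penalty). The function name `shaped_reward`, the weights, and the target speed are illustrative assumptions and are not taken from [7] or any other cited work.

```python
import math


def shaped_reward(lateral_offset_m, heading_error_rad, speed_mps, collided,
                  target_speed_mps=8.0, w_lane=1.0, w_speed=1.0,
                  collision_penalty=100.0):
    """Toy shaped reward. All weights are hypothetical, not from a cited paper."""
    if collided:
        # A collision dominates every other term.
        return -collision_penalty
    # Velocity alignment: only the speed component along the lane counts,
    # and it is capped at the target speed so speeding is not rewarded.
    aligned_speed = speed_mps * math.cos(heading_error_rad)
    speed_term = min(aligned_speed, target_speed_mps) / target_speed_mps
    # Lane deviation: absolute lateral distance from the lane centre.
    lane_term = abs(lateral_offset_m)
    return w_speed * speed_term - w_lane * lane_term
```

In this sketch, raising `w_speed` relative to `w_lane` would make fast but poorly centred driving more attractive, which is one way the over-prioritization described above can arise.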

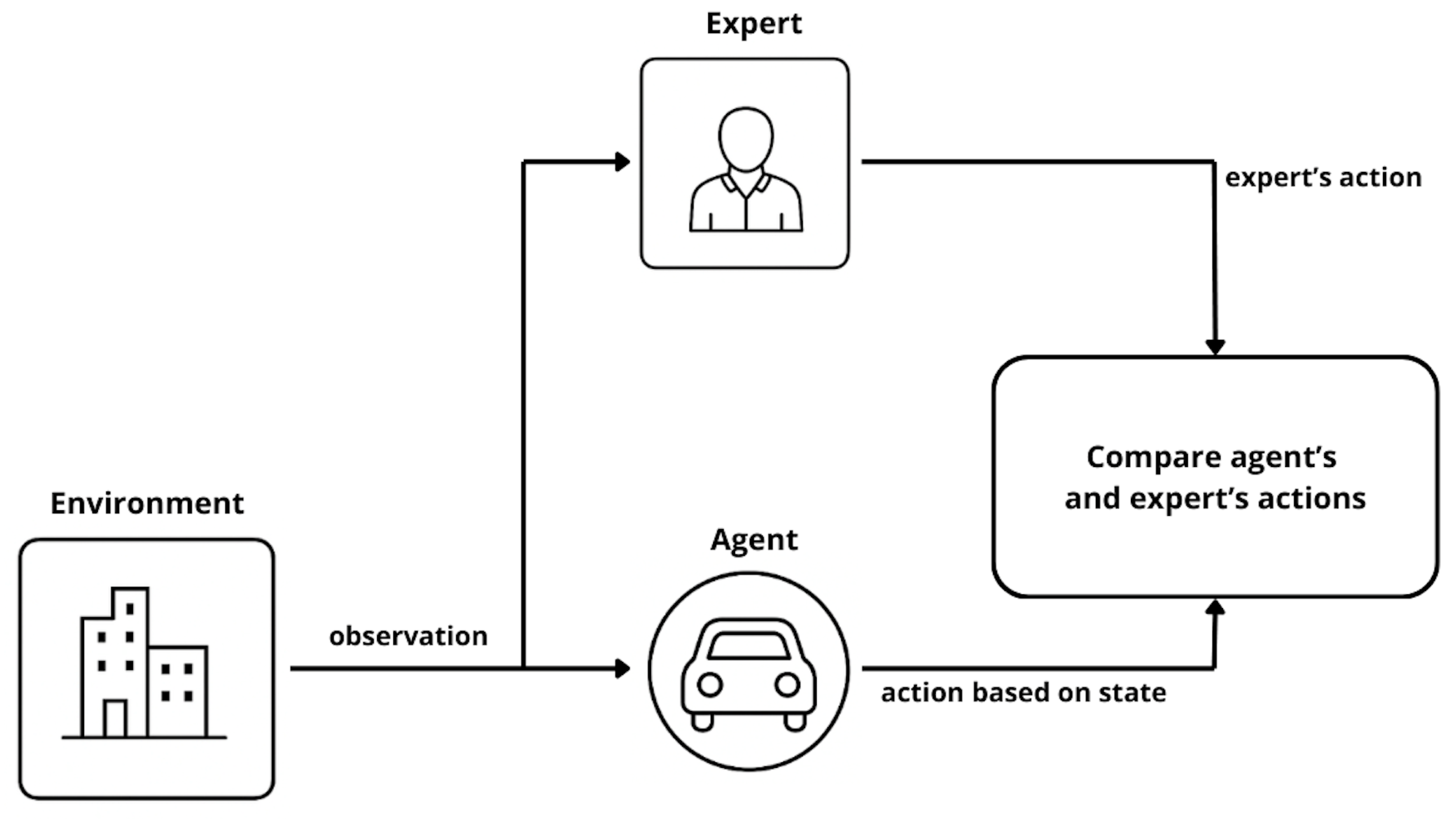

5. Imitation Learning (IL) for Autonomous Driving

- Agent—The learner that interacts with the environment. Unlike in reinforcement learning, the agent does not rely on scalar rewards but instead learns to imitate the expert’s demonstrated behavior.

- Expert—Typically, a human operator or a pretrained model that provides high-quality demonstration trajectories. These trajectories serve as the ground truth for training.

- Model-based vs. Model-free—Model-based approaches attempt to build a transition model of the environment and use it for planning. Model-free techniques rely solely on observed expert trajectories without reconstructing the environment’s internal dynamics.

- Policy-based vs. Reward-based—Policy-based methods directly learn a mapping from states to actions, while reward-based methods (such as inverse reinforcement learning) infer the expert’s reward function before deriving a policy.
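
As a minimal illustration of the policy-based, model-free case, the sketch below fits a linear policy to expert state-action pairs by least squares, which is behavioral cloning in its simplest form. The synthetic expert (a proportional steering law), the two-dimensional state, and all names are assumptions made for this example only.

```python
import numpy as np


def expert_policy(states):
    """Hypothetical expert: steer against lateral offset and heading error."""
    return -0.5 * states[:, 0] - 1.0 * states[:, 1]


def fit_bc_policy(states, actions):
    """Least-squares fit of a linear policy a = s @ w (no reward signal used)."""
    weights, *_ = np.linalg.lstsq(states, actions, rcond=None)
    return weights


rng = np.random.default_rng(0)
# Each demonstration state is [lateral offset, heading error].
demo_states = rng.uniform(-1.0, 1.0, size=(200, 2))
demo_actions = expert_policy(demo_states)
weights = fit_bc_policy(demo_states, demo_actions)
```

Because the demonstrations here are noise-free and the policy class contains the expert, the fitted weights match the expert's gains. A reward-based method would instead estimate a reward function first and then derive a policy from it.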

5.1. IL-Based Architectures

5.2. Hybrid Solutions

6. Comparative Analysis and Discussion

- Baseline approaches tend to be simple and narrowly scoped. Papers comparing DQN and DDPG in Town 1 or intersections under limited conditions fall here. They offer important groundwork but do little to stress-test agents’ generalization across environments.

- High-complexity, high-diversity methods push boundaries. State-of-the-art works like Think2Drive and Raw2Drive introduce latent world models or dual sensor-stream inputs, validated across dozens to hundreds of scenarios. These methods exemplify increased policy adaptability to rare and corner cases.

- Hybrid and multi-objective agents indicate a shifting paradigm. While still relatively underexplored, works that incorporate user preferences or multi-objective reward structures begin to bridge from pure RL toward human-centric driving goals.
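
One simple way to fold user preferences into a multi-objective reward is linear scalarization, sketched below. This is a generic technique shown for illustration under assumed objective names and weights. It is not the formulation of any specific work discussed here.

```python
def scalarize(objectives, preferences):
    """Weighted sum of per-objective rewards with normalized preference weights.

    `objectives` and `preferences` are dicts keyed by hypothetical objective names.
    """
    total = sum(preferences.values())
    if total <= 0:
        raise ValueError("preference weights must sum to a positive value")
    return sum(preferences[name] / total * objectives[name] for name in preferences)


# Example: a user who values comfort three times as much as speed.
step_reward = scalarize({"comfort": 1.0, "speed": 0.0}, {"comfort": 3.0, "speed": 1.0})
```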

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RL | Reinforcement Learning |
| IL | Imitation Learning |
| CARLA | Car Learning to Act (Autonomous Driving Simulator) |
| CNN | Convolutional Neural Network |
| BEV | Bird’s Eye View |
| PGV | Polar Grid View |
| IRL | Inverse Reinforcement Learning |
| GAIL | Generative Adversarial Imitation Learning |
| MDP | Markov Decision Process |
| PPO | Proximal Policy Optimization |
| DQN | Deep Q-Network |
| A3C | Asynchronous Advantage Actor–Critic |
| LiDAR | Light Detection and Ranging |
| RGB | Red–Green–Blue (Color Image Input) |
| BC | Behavioral Cloning |
| DAgger | Dataset Aggregation |
| PID | Proportional–Integral–Derivative (Controller) |
| PGM | Probabilistic Graphical Model |

Appendix A. Supplementary Results

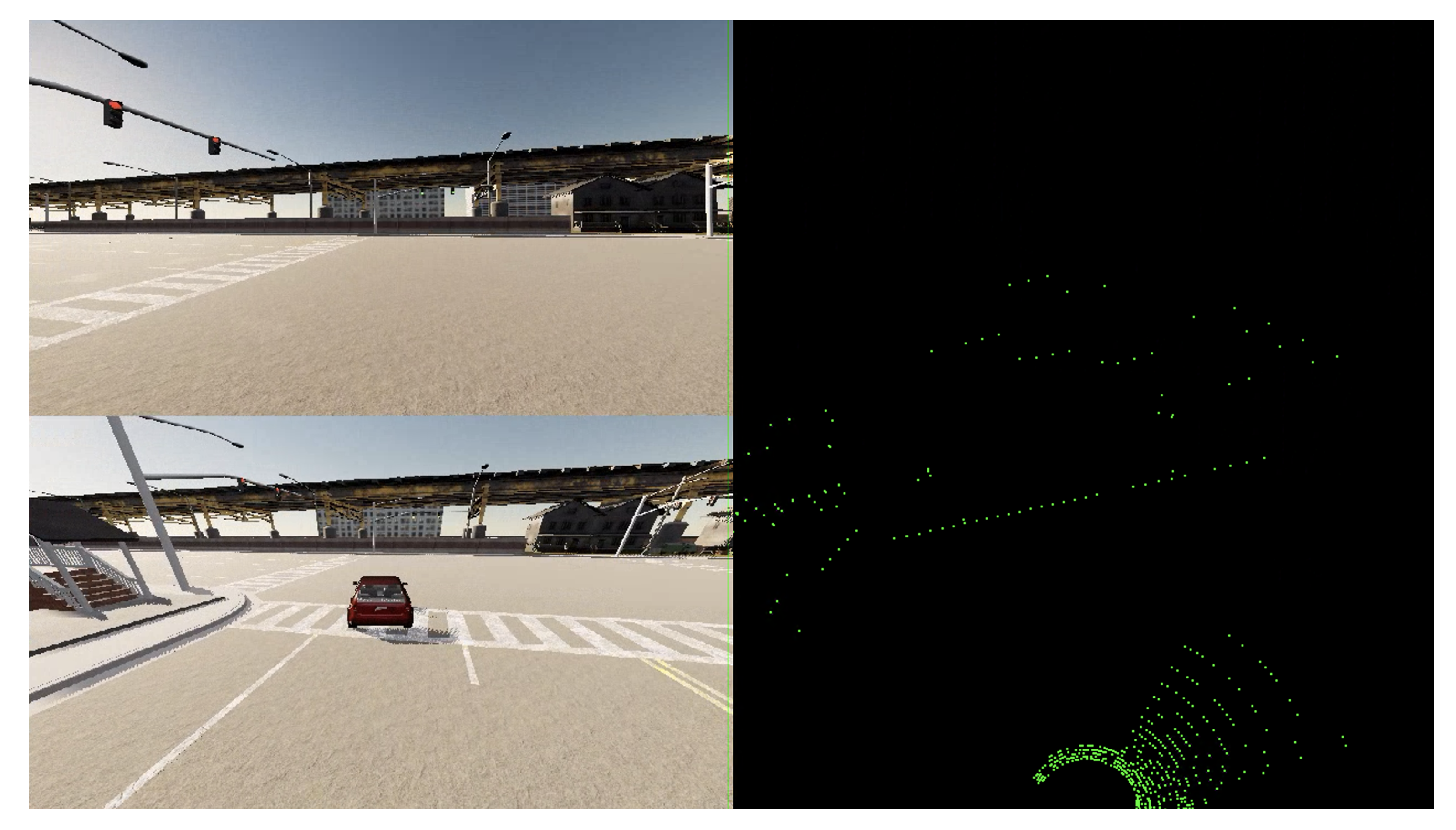

CARLA Simulator as an Evaluation Platform

- Management and control of both vehicles and pedestrians;

- Environment customization (e.g., weather, lighting, time of day);

- Integration of various sensors such as LiDAR, radar, GPS, and RGB cameras;

- Python/C++ APIs for interaction and simulation control.
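
The features listed above can be exercised through the CARLA Python API roughly as follows. This is a hedged sketch: it assumes the `carla` package is installed and a server is running on the default port, and the helper names `build_scenario` and `apply_scenario`, the sensor mount position, and the choice of the first blueprint and spawn point are illustrative assumptions. API details can differ between CARLA versions.

```python
def build_scenario(weather="ClearNoon",
                   sensors=("sensor.camera.rgb", "sensor.lidar.ray_cast")):
    """Plain-Python description of a scenario (hypothetical helper)."""
    return {"weather": weather, "sensors": list(sensors)}


def apply_scenario(config, host="localhost", port=2000):
    """Apply the config to a running CARLA server and return the spawned actors."""
    import carla  # imported lazily so the config helper works without CARLA

    client = carla.Client(host, port)
    client.set_timeout(10.0)
    world = client.get_world()

    # Environment customization: select one of the built-in weather presets.
    world.set_weather(getattr(carla.WeatherParameters, config["weather"]))

    # Vehicle management: spawn the first available vehicle blueprint.
    blueprints = world.get_blueprint_library()
    vehicle_bp = blueprints.filter("vehicle.*")[0]
    spawn_point = world.get_map().get_spawn_points()[0]
    vehicle = world.spawn_actor(vehicle_bp, spawn_point)

    # Sensor integration: attach each requested sensor to the vehicle.
    mount = carla.Transform(carla.Location(x=1.5, z=2.4))  # assumed mount point
    actors = [vehicle]
    for sensor_id in config["sensors"]:
        sensor_bp = blueprints.find(sensor_id)
        actors.append(world.spawn_actor(sensor_bp, mount, attach_to=vehicle))
    return actors


scenario = build_scenario()
```

Calling `apply_scenario(scenario)` requires a live simulator. The returned actors should be destroyed when the episode ends.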

References

- Dhinakaran, M.; Rajasekaran, R.T.; Balaji, V.; Aarthi, V.; Ambika, S. Advanced Deep Reinforcement Learning Strategies for Enhanced Autonomous Vehicle Navigation Systems. In Proceedings of the 2024 2nd International Conference on Computer, Communication and Control (IC4), Indore, India, 8–10 February 2024.

- Govinda, S.; Brik, B.; Harous, S. A Survey on Deep Reinforcement Learning Applications in Autonomous Systems: Applications, Open Challenges, and Future Directions. IEEE Trans. Intell. Transp. Syst. 2025, 26, 11088–11113.

- Kong, Q.; Zhang, L.; Xu, X. Constrained Policy Optimization Algorithm for Autonomous Driving via Reinforcement Learning. In Proceedings of the 2021 6th International Conference on Image, Vision and Computing (ICIVC), Qingdao, China, 23–25 July 2021.

- Kim, S.; Kim, G.; Kim, T.; Jeong, C.; Kang, C.M. Autonomous Vehicle Control Using CARLA Simulator, ROS, and EPS HILS. In Proceedings of the 2025 International Conference on Electronics, Information, and Communication (ICEIC), Osaka, Japan, 19–22 January 2025.

- Malik, S.; Khan, M.A.; El-Sayed, H. CARLA: Car Learning to Act—An Inside Out. Procedia Comput. Sci. 2022, 198, 742–749.

- Razak, A.I. Implementing a Deep Reinforcement Learning Model for Autonomous Driving. 2022. Available online: https://faculty.utrgv.edu/dongchul.kim/6379/t14.pdf (accessed on 30 July 2025).

- Pérez-Gil, Ó.; Barea, R.; López-Guillén, E.; Bergasa, L.M.; Gómez-Huélamo, C.; Gutiérrez, R.; Díaz-Díaz, A. Deep Reinforcement Learning Based Control for Autonomous Vehicles in CARLA. Electronics 2022, 11, 1035.

- Gutiérrez-Moreno, R.; Barea, R.; López-Guillén, E.; Araluce, J.; Bergasa, L.M. Reinforcement Learning-Based Autonomous Driving at Intersections in CARLA Simulator. Sensors 2022, 22, 8373.

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; López, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. arXiv 2017, arXiv:1711.03938.

- Li, Q.; Jia, X.; Wang, S.; Yan, J. Think2Drive: Efficient Reinforcement Learning by Thinking with Latent World Model for Autonomous Driving (in CARLA-V2). IEEE Trans. Intell. Veh. 2024. early access.

- Nehme, G.; Deo, T.Y. Safe Navigation: Training Autonomous Vehicles using Deep Reinforcement Learning in CARLA. Sensors 2023, 23, 7611.

- Codevilla, F.; Müller, M.; López, A.; Koltun, V.; Dosovitskiy, A. End-to-end Driving via Conditional Imitation Learning. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018.

- Chen, D.; Zhou, B.; Koltun, V.; Krähenbühl, P. Learning by Cheating. arXiv 2019, arXiv:1912.12294.

- Eraqi, H.M.; Moustafa, M.N.; Honer, J. Dynamic Conditional Imitation Learning for Autonomous Driving. arXiv 2022, arXiv:2211.11579.

- Abdou, M.; Kamai, H.; El-Tantawy, S.; Abdelkhalek, A.; Adei, O.; Hamdy, K.; Abaas, M. End-to-End Deep Conditional Imitation Learning for Autonomous Driving. In Proceedings of the 2019 31st International Conference on Microelectronics (ICM), Cairo, Egypt, 15–18 December 2019.

- Li, Z. A Hierarchical Autonomous Driving Framework Combining Reinforcement Learning and Imitation Learning. In Proceedings of the 2021 International Conference on Computer Engineering and Application (ICCEA), Kunming, China, 25–27 June 2021.

- Sakhai, M.; Wielgosz, M. Towards End-to-End Escape in Urban Autonomous Driving Using Reinforcement Learning. In Intelligent Systems and Applications. IntelliSys 2023; Arai, K., Ed.; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2024; Volume 823.

- Kołomański, M.; Sakhai, M.; Nowak, J.; Wielgosz, M. Towards End-to-End Chase in Urban Autonomous Driving Using Reinforcement Learning. In Intelligent Systems and Applications. IntelliSys 2022; Arai, K., Ed.; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2023; Volume 544.

- Sakhai, M.; Mazurek, S.; Caputa, J.; Argasiński, J.K.; Wielgosz, M. Spiking Neural Networks for Real-Time Pedestrian Street-Crossing Detection Using Dynamic Vision Sensors in Simulated Adverse Weather Conditions. Electronics 2024, 13, 4280.

- Sakhai, M.; Sithu, K.; Soe Oke, M.K.; Wielgosz, M. Cyberattack Resilience of Autonomous Vehicle Sensor Systems: Evaluating RGB vs. Dynamic Vision Sensors in CARLA. Appl. Sci. 2025, 15, 7493.

- Huang, Y.; Xu, X.; Yan, Y.; Liu, Z. Transfer Reinforcement Learning for Autonomous Driving under Diverse Weather Conditions. IEEE Trans. Intell. Veh. 2022, 7, 593–603.

- Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Al Sallab, A.A.; Yogamani, S.; Pérez, P. Deep reinforcement learning for autonomous driving: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 23, 4909–4926.

- Zhu, Z.; Zhao, H. A survey of deep RL and IL for autonomous driving policy learning. IEEE Trans. Intell. Transp. Syst. 2021, 23, 14043–14065.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. Deep Reinforcement Learning: A Brief Survey. IEEE Signal Process. Mag. 2017, 34, 26–38.

- Shrestha, A.; Mahmood, A. Review of Deep Learning Algorithms and Architectures. IEEE Access 2019, 7, 53040–53065.

- Elavarasan, D.; Vincent, P.M.D. Crop Yield Prediction Using Deep Reinforcement Learning Model for Sustainable Agrarian Applications. IEEE Access 2020, 8, 86886–86901.

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing. Proc. IEEE 2019, 107, 1738–1762.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

- Cui, J.; Liu, Y.; Nallanathan, A. Multi-Agent Reinforcement Learning-Based Resource Allocation for UAV Networks. IEEE Trans. Wirel. Commun. 2019, 19, 729–743.

- Shaukat, K.; Luo, S.; Varadharajan, V.; Hameed, I.; Xu, M. A Survey on Machine Learning Techniques for Cyber Security in the Last Decade. IEEE Access 2020, 8, 222310–222354.

- Ye, H.; Li, G.Y.; Juang, B.F. Deep Reinforcement Learning Based Resource Allocation for V2V Communications. IEEE Trans. Veh. Technol. 2019, 68, 3163–3173.

- Le, L.; Nguyen, T.N. DQRA: Deep Quantum Routing Agent for Entanglement Routing in Quantum Networks. IEEE Trans. Quantum Eng. 2022, 3, 4100212.

- Schölkopf, B.; Locatello, F.; Bauer, S.; Ke, N.R.; Kalchbrenner, N.; Goyal, A.; Bengio, Y. Toward Causal Representation Learning. Proc. IEEE 2021, 109, 612–634.

- Huang, C.; Zhang, H.; Wang, L.; Luo, X.; Song, Y. Mixed Deep Reinforcement Learning Considering Discrete-continuous Hybrid Action Space for Smart Home Energy Management. J. Mod. Power Syst. Clean Energy 2022, 10, 743–754.

- Sogabe, T.; Malla, D.B.; Takayama, S.; Shin, S.; Sakamoto, K.; Yamaguchi, K.; Singh, T.P.; Sogabe, M.; Hirata, T.; Okada, Y. Smart Grid Optimization by Deep Reinforcement Learning over Discrete and Continuous Action Space. In Proceedings of the 2018 IEEE 7th World Conference on Photovoltaic Energy Conversion (WCPEC), Waikoloa, HI, USA, 10–15 June 2018.

- Guériau, M.; Cardozo, N.; Dusparic, I. Constructivist Approach to State Space Adaptation in Reinforcement Learning. In Proceedings of the 2019 IEEE 13th International Conference on Self-Adaptive and Self-Organizing Systems (SASO), Umea, Sweden, 16–20 June 2019.

- Abdulazeez, D.H.; Askar, S.K. Offloading Mechanisms Based on Reinforcement Learning and Deep Learning Algorithms in the Fog Computing Environment. IEEE Access 2023, 11, 12555–12586.

- Lapan, M. Głębokie Uczenie Przez Wzmacnianie. Praca z Chatbotami Oraz Robotyka, Optymalizacja Dyskretna i Automatyzacja Sieciowa w Praktyce; Helion: Everett, WA, USA, 2022.

- Mahadevkar, S.V.; Khemani, B.; Patil, S.; Kotecha, K.; Vora, D.R.; Abraham, A.; Gabralla, L.A. A Review on Machine Learning Styles in Computer Vision—Techniques and Future Directions. IEEE Access 2022, 10, 107293–107329.

- Shukla, I.; Dozier, H.R.; Henslee, A.C. A Study of Model Based and Model Free Offline Reinforcement Learning. In Proceedings of the 2022 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 14–16 December 2022.

- Hyang, Q. Model-Based or Model-Free, a Review of Approaches in Reinforcement Learning. In Proceedings of the 2020 International Conference on Computing and Data Science (CDS), Stanford, CA, USA, 1–2 August 2020.

- Beyon, H. Advances in Value-based, Policy-based, and Deep Learning-based Reinforcement Learning. Int. J. Adv. Comput. Sci. Appl. 2023, 14.

- Liu, M.; Wan, Y.; Lewis, F.L.; Lopez, V.G. Adaptive Optimal Control for Stochastic Multiplayer Differential Games Using On-Policy and Off-Policy Reinforcement Learning. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5522–5533.

- Banerjee, C.; Chen, Z.; Noman, N.; Lopez, V.G. Improved Soft Actor-Critic: Mixing Prioritized Off-Policy Samples With On-Policy Experiences. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 3121–3129.

- Toromanoff, M.; Wirbel, E.; Moutarde, F. End-to-end Model-free Reinforcement Learning for Urban Driving Using Implicit Affordances. arXiv 2020, arXiv:2001.09445.

- Wang, Y.; Chitta, K.; Liu, H.; Chernova, S.; Schmid, C. InterFuser: Safety-Enhanced Autonomous Driving Using Interpretable Sensor Fusion Transformer. arXiv 2021, arXiv:2109.05499.

- Chen, Y.; Li, H.; Wang, Y.; Tomizuka, M. Learning Safe Multi-Vehicle Cooperation with Policy Optimization in CARLA. IEEE Robot. Autom. Lett. 2021, 6, 3568–3575.

- Liu, Y.; Zhang, Q.; Zhao, L. Multi-Agent Reinforcement Learning for Cooperative Autonomous Vehicles in CARLA. J. Intell. Transp. Syst. 2025, 29, 198–212.

- Nikpour, B.; Sinodinos, D.; Armanfard, N. Deep Reinforcement Learning in Human Activity Recognition: A Survey. TechRxiv 2022.

- Kim, J.; Kim, G.; Hong, S.; Cho, S. Advancing Multi-Agent Systems Integrating Federated Learning with Deep Reinforcement Learning: A Survey. In Proceedings of the 2024 Fifteenth International Conference on Ubiquitous and Future Networks (ICUFN), Budapest, Hungary, 2–5 July 2024.

- Bertsekas, D.P. Model Predictive Control and Reinforcement Learning: A Unified Framework Based on Dynamic Programming. IFAC-PapersOnLine 2024, 58, 363–383.

- Vu, T.M.; Moezzi, R.; Cyrus, J.; Hlava, J. Model Predictive Control for Autonomous Driving Vehicles. Electronics 2021, 10, 2593.

- Jia, Z.; Yang, Y.; Zhang, S. Towards Realistic End-to-End Autonomous Driving with Model-Based Reinforcement Learning. arXiv 2020, arXiv:2006.06713.

- Yang, Z.; Jia, X.; Li, Q.; Yang, X.; Yao, M.; Yan, J. Raw2Drive: Reinforcement Learning with Aligned World Models for End-to-End Autonomous Driving (in CARLA v2). arXiv 2025, arXiv:2505.16394.

- Uppuluri, B.; Patel, A.; Mehta, N.; Kamath, S.; Chakraborty, P. CuRLA: Curriculum Learning Based Deep Reinforcement Learning For Autonomous Driving. arXiv 2025, arXiv:2501.04982.

- Chen, J.; Peng, Y.; Wang, X. Reinforcement Learning-Based Motion Planning for Autonomous Vehicles at Unsignalized Intersections. Transp. Res. Part C 2023, 158, 104945.

- Surmann, H.; de Heuvel, J.; Bennewitz, M. Multi-Objective Reinforcement Learning for Adaptive Personalized Autonomous Driving. arXiv 2025, arXiv:2505.05223.

- Tan, Z.; Wang, K.; Hu, H. CarLLaVA: Embodied Autonomous Driving Agent via Vision-Language Reinforcement Learning. Stanford CS224R Project Report. 2024. Available online: https://cs224r.stanford.edu/projects/pdfs/CS224R_Project_Milestone__5_.pdf (accessed on 30 July 2025).

- Yang, Z.; Liu, J.; Wu, H. Safe Reinforcement Learning for Autonomous Vehicles with Uncertainty-Aware Collision Avoidance. IEEE Robot. Autom. Lett. 2021, 6, 6312–6319.

- Fang, Y.; Yan, J.; Luo, H. Hierarchical Reinforcement Learning Framework for Urban Autonomous Driving in CARLA. Robot. Auton. Syst. 2022, 158, 104212.

- Cui, X.; Yu, H.; Zhao, J. Adaptive Curriculum Reinforcement Learning for Autonomous Driving in Complex Scenarios. IEEE Trans. Veh. Technol. 2023, 72, 9874–9886.

- Feng, R.; Xu, L.; Luo, X. Generalization of Reinforcement Learning Policies in Autonomous Driving: A Domain Randomization Approach. IEEE Trans. Veh. Technol. 2025. early access.

- Luo, Y.; Wang, Z.; Zhang, X. Improving Imitation Learning for Autonomous Driving through Adaptive Data Augmentation. Sensors 2023, 23, 4981.

- Mohanty, A.; Lee, J.; Patel, R. Inverse Reinforcement Learning for Human-Like Autonomous Driving Behavior in CARLA. IEEE Trans. Hum.-Mach. Syst. 2024. early access.

- Liang, X.; Wang, T.; Yang, L.; Xing, E. CIRL: Controllable imitative reinforcement learning for vision-based self-driving. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 584–599.

- Phan-Minh, T.; Howington, F.; Chu, T.S.; Lee, S.U.; Tomov, M.S.; Li, N.; Dicle, C.; Findler, S.; Suarez-Ruiz, F.; Beaudoin, R.; et al. Driving in real life with inverse reinforcement learning. arXiv 2022, arXiv:2206.03004.

- Ho, J.; Ermon, S. Generative adversarial imitation learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29.

- Chekroun, R.; Toromanoff, M.; Hornauer, S.; Moutarde, F. GRI: General reinforced imitation and its application to vision-based autonomous driving. Robotics 2023, 12, 127.

- Han, Y.; Yilmaz, A. Learning to drive using sparse imitation reinforcement learning. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 3736–3742.

- Reddy, S.; Dragan, A.D.; Levine, S. SQIL: Imitation learning via reinforcement learning with sparse rewards. arXiv 2019, arXiv:1905.11108.

- Huang, X.; Chen, H.; Zhao, L. Hybrid Imitation and Reinforcement Learning for Safe Autonomous Driving in CARLA. IEEE Trans. Intell. Transp. Syst. 2024. early access.

- Kim, J.; Cho, S. Reinforcement and Imitation Learning Fusion for Autonomous Vehicle Safety Enhancement. IEEE Trans. Intell. Veh. 2025. early access.

- Li, Z.; Zhang, S.; Zhou, D. Behavioral Cloning and Reinforcement Learning for Autonomous Driving: A Comparative Study. IEEE Intell. Transp. Syst. Mag. 2022, 14, 27–41.

- Cheng, Y.; Wu, J.; Wang, Z. End-to-End Urban Autonomous Driving with Deep Reinforcement Learning and Curriculum Strategies. Appl. Sci. 2023, 13, 5432.

- Hofbauer, M.; Kuhn, C.; Petrovic, G.; Steinbach, E. TELECARLA: An Open Source Extension of the CARLA Simulator for Teleoperated Driving Research Using Off-the-Shelf Components. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020.

- Yue, X.; Zhang, Y.; Zhao, S.; Sangiovanni-Vincentelli, A.; Keutzer, K.; Gong, B. Domain Randomization and Pyramid Consistency: Simulation-to-Real Generalization without Accessing Target Domain Data. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2100–2110.

- Kaur, M.; Kumar, R.; Bhanot, R. A Survey on Simulators for Testing Self-Driving Cars. Comput. Sci. Rev. 2021, 39, 100337.

- Bu, T.; Zhang, X.; Mertz, C.; Dolan, J.M. CARLA Simulated Data for Rare Road Object Detection. In Proceedings of the IEEE Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 2794–2801.

- Zeng, R.; Luo, J.; Wang, J. Benchmarking Autonomous Driving Systems in Simulated Dynamic Traffic Environments. IEEE Trans. Intell. Transp. Syst. 2024, 25, 121–132.

- Jiang, X.; Zhao, H.; Zeng, Y. Benchmarking Reinforcement Learning Algorithms in CARLA: Performance, Stability, and Robustness Analysis. Transp. Res. Rec. 2025, 2025, 247–258.

| Method | Use Model | Based on | On-Policy/Off-Policy |
|---|---|---|---|
| Q-learning | Model-free | Values | Off-policy |
| REINFORCE | Model-free | Policy | On-policy |
| Actor–Critic | Model-free | Hybrid (Values + Policy) | On-policy |
| Proximal Policy Optimization (PPO) | Model-free | Policy | On-policy |
| Deep Q-Network | Model-free | Values (approx. using neural network) | Off-policy |
| Model Predictive Control (MPC) | Model-based | Dynamics model + Policy Optimization | Typically off-policy |
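
To illustrate what "value-based" and "off-policy" mean for the Q-learning row, here is a minimal tabular update in Python. The table size, learning rate, and discount factor are arbitrary values chosen for the example.

```python
import numpy as np


def q_learning_update(q, s, a, r, s_next, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step.

    The target bootstraps from the greedy action in s_next, regardless of
    which action the behavior policy will actually take there. That is what
    makes the update off-policy.
    """
    td_target = r + gamma * np.max(q[s_next])
    q[s, a] += alpha * (td_target - q[s, a])
    return q


q_table = np.zeros((3, 2))  # 3 states x 2 actions, all values start at zero
q_table = q_learning_update(q_table, s=0, a=1, r=1.0, s_next=1)
```

An on-policy method would instead evaluate the action actually selected by the current policy in `s_next`, so its estimates track the policy being executed.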

| Paper [Agent] | State Space | Action Space |
|---|---|---|
| Implementing a deep reinforcement learning model for autonomous driving [6] [PPO agent] | throttle [0, 1], steer [−1, 1], brake [0, 1], distance from waypoint, waypoint orientation [degrees] | throttle [0, 1], steer [−1, 1], brake [0, 1] |
| Deep reinforcement learning based control for Autonomous Vehicles in CARLA [7] [DRL-flatten-image agent] | 121 dimensional vector (processed RGB image), distance from lane, [degrees] | steer [−1, 1], throttle [0, 1] |
| Deep reinforcement learning based control for Autonomous Vehicles in CARLA [7] [DRL-Carla-Waypoints agent] | vector of waypoints [w0…14], distance from lane, [degrees] | steer [−1, 1], throttle [0, 1] |
| Deep reinforcement learning based control for Autonomous Vehicles in CARLA [7] [DRL-CNN agent] | , distance from lane, [degrees] | steer [−1, 1], throttle [0, 1] |
| Deep reinforcement learning based control for Autonomous Vehicles in CARLA [7] [DRL-Pre-CNN agent] | vector of waypoints [w0…14], distance from lane, [degrees] | steer [−1, 1], throttle [0, 1] |
| Reinforcement Learning-Based Autonomous Driving at Intersections in CARLA Simulator [8] [RL agent] | distance from intersection, speed | stop [speed = 0 m/s], drive [speed = 5 m/s] |
| CARLA: An Open Urban Driving Simulator [9] [A3C agent] | speed, distance to goal, damage from collisions | steer [−1, 1], throttle [0, 1], brake [0, 1] |
| Think2Drive: Efficient Reinforcement Learning by Thinking with Latent World Model for Autonomous Driving [10] [Think2Drive agent] | , speed | throttle [0, 1], brake [0, 1], steer [−1, 1] |
| Safe Navigation: Training Autonomous Vehicles using Deep Reinforcement Learning in CARLA [11] [Combined DQN agent] | distance, distance from obstacle, [degrees], speed, traffic light | brake [throttle = 0.0, brake = 1.0, steer = 0.0], drive straight [throttle = 0.3, brake = 0.0, steer = 0.0], turn left [throttle = 0.1, brake = 0.0, steer = −0.6], turn right [throttle = 0.1, brake = 0.0, steer = 0.6], turn slightly left [throttle = 0.4, brake = 0.0, steer = −0.1], turn slightly right [throttle = 0.4, brake = 0.0, steer = 0.1] |
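
A discrete action space such as the one listed for [11] is typically implemented as a lookup from an action index to a fixed control triple. The sketch below uses the throttle, brake, and steer values from the table. The `Control` type, the index order, and the function name are assumptions for illustration and are not taken from [11].

```python
from typing import NamedTuple


class Control(NamedTuple):
    throttle: float
    brake: float
    steer: float


# Values copied from the table row for [11]; the ordering is an assumption.
DISCRETE_ACTIONS = {
    "brake": Control(0.0, 1.0, 0.0),
    "drive_straight": Control(0.3, 0.0, 0.0),
    "turn_left": Control(0.1, 0.0, -0.6),
    "turn_right": Control(0.1, 0.0, 0.6),
    "turn_slightly_left": Control(0.4, 0.0, -0.1),
    "turn_slightly_right": Control(0.4, 0.0, 0.1),
}
ACTION_NAMES = list(DISCRETE_ACTIONS)


def decode_action(index):
    """Map a network output index (0..5) to a control command."""
    return DISCRETE_ACTIONS[ACTION_NAMES[index]]
```

A value-based agent such as a DQN would output one Q-value per index and pass the argmax through `decode_action`, whereas the continuous agents in the table emit the steer and throttle values directly.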

| Paper [Agent] | Reward |
|---|---|
| Implementing a deep reinforcement learning model for autonomous driving [6] [There was only one agent presented] | R = |
| Deep reinforcement learning based control for Autonomous Vehicles in CARLA [7] [DRL-flatten-image agent, DRL-Carla-Waypoints agent, DRL-CNN agent, DRL-Pre-CNN agent] | = |
| Reinforcement Learning-Based Autonomous Driving at Intersections in CARLA Simulator [8] [There was only one agent presented] | R = |
| CARLA: An Open Urban Driving Simulator [9] [There was only one agent presented] | |
| Think2Drive: Efficient RL by Thinking with Latent World Model [10] [There was only one agent presented] | |
| Safe Navigation: Training Autonomous Vehicles using DRL in CARLA [11] [One agent combined two models] | = |
| | = |

| Agent (Study) | Input Representation | Control Output | Key Performance Metrics | Architectural Highlights | Strengths and Limitations |
|---|---|---|---|---|---|
| Think2Drive [10] | BEV latent maps with semantic and temporal masks | Discrete set of 30 maneuvers | 100% route completion on CARLA v2 within 3 days on A6000 GPU | Latent world model + Dreamer-V3 architecture | High generalization; requires privileged inputs and tuned latent space |
| Gutiérrez-Moreno [8] | Speed + distance to intersection | Discrete stop/go | 78–98% success in intersection scenarios; 0.04 collisions per episode | Curriculum learning; actor–critic; 2D input space | Fast convergence; limited for urban complexity |
| Dosovitskiy [9] | Dual RGB frames + auxiliary features | Continuous control signals | 74% route success (baseline CARLA); low scalability | A3C baseline with dense reward | Generalizable benchmark; outdated by newer models |
| Pérez-Gil [7] | 4 architectures: RGB → vector, waypoints, raw CNN, preprocessed CNN | Continuous steering/throttle | 78% success; 18% collisions across setups | Compares flattened vs CNN vs waypoint inputs | Explores control-input trade-offs; lacks domain transfer tests |
| Razak [6] | Throttle, brake, steering, distance + orientation to waypoint | Continuous control | 83% success; 12% collision (simulation report) | Simple handcrafted reward with angular shaping | Minimal setup; lacks multi-scenario adaptation |
| Nehme [11] | Depth + traffic light + deviation metrics | Modular discrete driving and braking decisions | 86% success; 7% collision rate (simulation study) | Separate DQN for brake + maneuver control | Interpretability; modular; complex integration |

| Method | Use Model | Based on |
|---|---|---|
| Behavioral Cloning (BC) | Model-free | Policy |
| DAgger (Dataset Aggregation) | Model-free | Policy |
| Inverse Reinforcement Learning (IRL) | Indirect (Learns Reward) | Reward |
| Generative Adversarial Imitation Learning (GAIL) | Model-free | Policy |
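
The difference between behavioral cloning and DAgger is where the training states come from: DAgger rolls out the learner, asks the expert to label the states the learner actually visits, and refits on the aggregated dataset. The toy sketch below shows that loop on a one-dimensional system. The dynamics, the expert gain, and the linear policy are assumptions made for illustration, and the usual expert/learner mixing schedule is omitted for brevity.

```python
import numpy as np


def expert_action(states):
    """Hypothetical expert: proportional correction toward s = 0."""
    return -0.8 * states


def rollout_states(w, s0=1.0, steps=10):
    """States visited when the learner's policy a = w * s drives s' = s + a."""
    states, s = [], s0
    for _ in range(steps):
        states.append(s)
        s = s + w * s
    return np.array(states)


def dagger(iterations=5):
    w = 0.0  # initial learner policy (does nothing)
    all_states = np.empty(0)
    all_actions = np.empty(0)
    for _ in range(iterations):
        visited = rollout_states(w)                       # learner's own states
        all_states = np.concatenate([all_states, visited])
        all_actions = np.concatenate([all_actions, expert_action(visited)])
        # Refit the 1-D linear policy on the aggregated dataset (least squares).
        w = float(all_states @ all_actions / (all_states @ all_states))
    return w


learned_w = dagger()
```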

| Paper [Agent] | State Space | Action Space |
|---|---|---|
| End-to-end Driving via Conditional Imitation Learning [12] [Simulation and physical agent; identical state/action spaces] | | |
| Learning by Cheating [13] [Privileged agent] | | |
| Learning by Cheating [13] [Sensorimotor agent] | | |
| Dynamic Conditional Imitation Learning for Autonomous Driving [14] [Single-agent architecture] | | |

| Agent (Study) | Input Representation | Control Output | Key Performance Metrics | Architectural Highlights | Strengths and Limitations |
|---|---|---|---|---|---|
| Codevilla et al. [12] | RGB image (800 × 600), directional command | Continuous steering, acceleration | 96% success rate in simulation; real-world feasibility via scaled platform | Conditional branching policy; centralized end-to-end network | Simple design; low robustness to distributional shift; single camera limitation |
| Chen et al. [Privileged] [13] | Semantic BEV map (320 × 320 × 7), speed, command | Steering, throttle, brake (via controller on predicted waypoints) | Achieves near-expert imitation with minimal variance | Two-stage pipeline with expert heatmap output and PID controller | High accuracy, but requires privileged input unavailable at test time |
| Chen et al. [Sensorimotor] [13] | RGB image (384 × 160), speed, command | Steering, throttle, brake | Generalizes well when trained via privileged supervision | Learns to imitate expert via internal heatmap prediction | End-to-end trainable; suboptimal if privileged guidance is inaccurate |
| Eraqi et al. [14] | RGB image, LiDAR (polar grid), speed, directional command | Steering, throttle, brake | 92% success rate in navigation scenarios; improved safety margins | Multi-modal encoder; dynamic conditional policy branches | Robust multi-sensor fusion; higher computational complexity |

| Agent (Study) | Input Representation | Control Output | Key Performance Metrics | Architectural Highlights | Strengths and Limitations |
|---|---|---|---|---|---|
| Phan et al. [66] | RGB, speed, high-level commands | Steering, throttle, brake | Effective policy transfer to real-world intersections | Inverse RL followed by RL fine-tuning | Combines realism and safety; complex pretraining required |
| Ho and Ermon [67] | Trajectory sequences (expert vs. agent) | Action likelihood over states | Performs well in MuJoCo; widely adopted in AV variants | GAIL adversarial setup: discriminator + generator agent | No reward engineering; sensitive to discriminator instability |
| Liang et al. [CIRL] [65] | Camera image, vehicle speed, navigation command | Steering, throttle, brake | High success rate in intersection and obstacle-avoidance tasks | Behavior cloning + DDPG in staged learning | Improves generalization; requires balanced training phase timing |
| Chekroun et al. [GRI] [68] | Sensor data, expert trajectory actions | Steering, throttle, brake | Tested in CARLA and MuJoCo; fast convergence | Unified replay buffer; expert data has constant high reward | No IL pretraining needed; success depends on reward scaling |
| Han et al. [69] | Sensor data + sparse expert cues | Blended: agent and expert actions (probabilistic) | Rapid learning with low collision rates | Exploration guided by sparse expert rules | Safe learning; depends on sparse rule coverage |
| Reddy et al. [SQIL] [70] | Standard RL states + expert trajectories | RL actions from standard policy | Improves over baseline Soft Q-Learning in MuJoCo | Simple buffer reward logic (1 for expert, 0 for agent) | Very easy to integrate; lacks fine control on imitation priority |
| Huang et al. [71] | RGB image + LiDAR + GPS | Steering, throttle, brake | Enhanced safety and generalization in CARLA scenarios | Hierarchical fusion of IL + PPO RL agent | Flexible framework; limited outside simulation |
| Kim and Cho [72] | Camera + sensor state features | Steering, throttle, brake | Improved driving scores in complex scenarios | Combines attention-based IL with actor–critic RL | Effective in dense environments; lacks interpretability |
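
The "buffer reward logic (1 for expert, 0 for agent)" listed for SQIL [70] can be illustrated with a minimal sketch. The class name `SQILBuffer`, the `Transition` tuple, and the half-expert, half-agent batch split are assumptions made for illustration only; this is not the authors' implementation and it omits the Soft Q-Learning update itself.

```python
import random
from collections import namedtuple

# Hypothetical transition record; field names are illustrative.
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state"])


class SQILBuffer:
    """Illustrative replay buffer that overwrites rewards with constants:
    expert transitions get `expert_reward`, agent transitions get `agent_reward`."""

    def __init__(self, expert_reward=1.0, agent_reward=0.0, seed=0):
        self.expert = []
        self.agent = []
        self.expert_reward = expert_reward
        self.agent_reward = agent_reward
        self.rng = random.Random(seed)

    def add_expert(self, state, action, next_state):
        # Demonstration data: the environment reward is ignored, a constant is stored.
        self.expert.append(Transition(state, action, self.expert_reward, next_state))

    def add_agent(self, state, action, next_state):
        # Agent experience: likewise relabeled with a constant.
        self.agent.append(Transition(state, action, self.agent_reward, next_state))

    def sample(self, batch_size):
        # Assumed balanced split between expert and agent data.
        # Both lists must be non-empty, otherwise random.choices raises IndexError.
        half = batch_size // 2
        batch = self.rng.choices(self.expert, k=half)
        batch += self.rng.choices(self.agent, k=batch_size - half)
        self.rng.shuffle(batch)
        return batch


buf = SQILBuffer()
buf.add_expert(state=(0.0,), action=1, next_state=(1.0,))
buf.add_agent(state=(0.0,), action=0, next_state=(0.0,))
batch = buf.sample(4)
print([t.reward for t in batch])
```

Because the only signal is the constant label, any off-policy learner that consumes `batch` is pushed toward expert-like transitions without a hand-designed driving reward, which matches the "very easy to integrate" strength noted in the table. The relative weight of imitation is fixed by the two constants and the batch split, consistent with the listed limitation.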

| Article | Goal | Architecture Complexity | Environment Complexity | Scenarios | Advantages |
|---|---|---|---|---|---|
| Implementing a Deep Reinforcement Learning Model for Autonomous Driving | End-to-end RL agent with Variational Autoencoder (VAE) | 2 | 3 | One scenario in three towns (1, 2 and 7) | An extensive and complex approach to the subject |
| Deep Reinforcement Learning Based Control for Autonomous Vehicles in CARLA | Comparison of DQN and DDPG across a few models | 1 | 1 | Two scenarios in town 1 | Multiple and varied approaches to the agent’s architecture |
| Reinforcement Learning-Based Autonomous Driving at Intersections in CARLA Simulator | Complete agent capable of driving through intersections with traffic | 2 | 3 | Three scenarios, one for each type of intersection (traffic lights, stop sign, uncontrolled) | Complex approach to crossing intersections with traffic |
| CARLA: An Open Urban Driving Simulator | Comparison of performance of RL, IL and Modular Pipeline | 1 | 3 | Five scenarios under four conditions (training conditions, new town, new weather, new weather and town) | A good comparison of RL with other machine learning methods with a multi-sensor agent |
| Think2Drive: Efficient Reinforcement Learning by Thinking with Latent World Model for Autonomous Driving (in CARLA-V2) | RL agent capable of driving in multiple corner cases | 3 | 3 | 39 detailed scenarios | A highly complex approach to an agent capable of handling multiple corner-case scenarios |
| Safe Navigation: Training Autonomous Vehicles using Deep Reinforcement Learning in CARLA | RL agent capable of maintaining speed and braking when necessary to avoid collision | 2 | 2 | Four scenarios in town 2 | A complex and robust approach to an agent composed of two models (braking model and driving model) |
| Raw2Drive: Reinforcement Learning with Aligned World Models for End-to-End Autonomous Driving (in CARLA v2) | Model-based RL agent capable of learning effective driving from raw sensor data | 3 | 3 | 220 routes from Bench2Drive benchmark | Recent, highly complex and highly robust dual-stream model-based RL approach with one stream of privileged sensor data |
| CuRLA: Curriculum Learning Based Deep Reinforcement Learning For Autonomous Driving | PPO+VAE agent for driving in environment with increasing traffic | 2 | 2 | A few routes in town 7 with changing traffic | A modern approach combining Curriculum Learning with Deep Reinforcement Learning |
| Multi-Objective Reinforcement Learning for Adaptive Personalized Autonomous Driving | Multi-objective RL agent capable of driving according to preferences | 2 | 1 | One scenario | A modern approach to autonomous driving with a multi-objective (selected driving preferences) end-to-end agent |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Czechowski, P.; Kawa, B.; Sakhai, M.; Wielgosz, M. Deep Reinforcement and IL for Autonomous Driving: A Review in the CARLA Simulation Environment. Appl. Sci. 2025, 15, 8972. https://doi.org/10.3390/app15168972
