Abstract

Infrared-visible image fusion is critical for nighttime perception in autonomous driving and surveillance, but its quality degrades severely under extreme low-light conditions: visible images suffer irreversible texture loss, thermal boundaries diffuse, and dynamic non-uniform illumination causes overexposure. To address these challenges, a Decomposition–Disentanglement–Dynamic Compensation framework, D3Fusion, is proposed. First, a Retinex-inspired Decomposition Illumination Net (DIN) decomposes the inputs into enhanced images and degraded illumination maps for joint low-light recovery. Second, an illumination-guided encoder and a multi-scale differential compensation decoder dynamically balance cross-modal features. Finally, a progressive three-stage training paradigm, moving from illumination correction through feature disentanglement to adaptive fusion, resolves optimization conflicts. Compared with state-of-the-art methods on the LLVIP, TNO, MSRS, and RoadScene datasets, D3Fusion achieves average improvements of 1.59% in standard deviation (SD), 6.9% in spatial frequency (SF), 2.59% in edge intensity (EI), and 1.99% in visual information fidelity (VIF), demonstrating superior performance in extreme low-light scenarios. The framework suppresses thermal diffusion artifacts while mitigating exposure imbalance, adaptively brightening scenes and preserving texture details in shadowed regions. By enhancing salient information, it improves fusion quality for nighttime images and offers a robust solution for multimodal perception under illumination-critical conditions.

1. Introduction

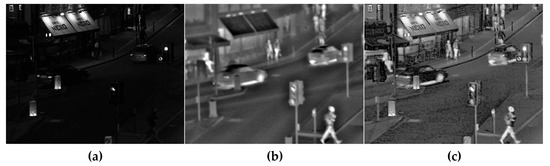

Infrared (IR) and visible (VIS) image fusion leverages their complementary characteristics to play a key role in complex scene perception, demonstrating significant application value in military reconnaissance, autonomous driving, nighttime surveillance, and other fields [1,2,3,4]. As illustrated in Figure 1, in autonomous driving perception systems, IR imaging captures thermal radiation features to detect heat-emitting targets (e.g., pedestrians, vehicles) under low-light or foggy conditions, while VIS imaging preserves reflectance-dependent texture details such as clothing patterns and road signs [3]. This physical complementarity makes their fusion a core solution for boosting visual system perception.

Figure 1.

Comparative visualization of nighttime street scenes: (a) Visible image (VIS); (b) Infrared image (IR); (c) Fused image (generated by the proposed D3Fusion). The fused image integrates texture details (from VIS) and thermal targets (from IR) for enhanced nighttime perception.

As applications such as autonomous driving expand to all-weather and all-terrain scenarios, ensuring stable fusion performance in extreme environments becomes critical. Extreme low-light conditions exacerbate three fundamental challenges. First, imaging degrades: insufficient photon capture and amplified sensor noise reduce the VIS signal-to-noise ratio (SNR) below 5 dB and submerge texture details in noise [1]. Second, dynamic non-uniform illumination (e.g., vehicle headlights, streetlights) creates coexisting overexposed and underexposed regions, which makes it hard for traditional fusion methods to balance dynamic range [5]. Third, the fundamental divergence between VIS (reflectance-based) and IR (thermal radiation-based) imaging causes systematic discrepancies in edge positions and contrast for the same object [6]; this leads to thermal edge diffusion, texture blurring, and misalignment in fused images [2], and makes cross-modal feature alignment harder. Current fusion methodologies address these challenges through three technical paradigms:

- (i) Retinex-based methods decompose an image into reflectance (R) and illumination (L) components to achieve low-light enhancement, establishing a physically interpretable framework for restoration [7,8,9]. Traditional Retinex-based methods laid the groundwork for low-light image enhancement, though with notable limitations. Early implementations such as Single-Scale Retinex (SSR) [10] and Multi-Scale Retinex with Color Restoration (MSRCR) [11] use Gaussian filtering to smooth illumination maps, but this often introduces color distortion and over-enhancement artifacts, and their reliance on manual parameter tuning restricts adaptability across diverse scenes. Deep learning has enabled data-driven Retinex implementations. Jin et al. [4] employ a deep neural network to estimate shading maps (analogous to illumination maps) and adjust lightness, while KinD [12] adds a reflectance restoration network to suppress noise and color distortion. Retinexformer [13] integrates Retinex decomposition with a Transformer architecture, using illumination-guided attention to model long-range dependencies. Because classic Retinex models do not account for noise in low-light images, a robust Retinex model adds a noise term and novel regularization terms for illumination and reflectance [14]. These single-modality frameworks enhance low-light images effectively but lack explicit cross-modal feature integration, which limits their direct applicability to fusion tasks. In multimodal fusion, Zhang et al. [15] pioneered the integration of Retinex decomposition into fusion workflows, using a UNet for illumination estimation. PIAFusion [3] does not explicitly employ Retinex theory, yet it introduces a progressive illumination-aware network that estimates lighting distributions and adapts fusion to the illumination conditions. This approach inspires our model design by demonstrating the effectiveness of illumination-aware mechanisms for dynamic feature fusion. DIVFusion [1] goes further by designing a Scene-Illumination Disentangled Network (SIDNet) to strip degraded illumination from visible images, improving texture preservation in low-light scenarios, though it still struggles with dynamic illumination adaptation.

- (ii) Early frequency-domain methods, such as FCLFusion [16], decompose images using wavelet transforms to separate high-frequency texture details from low-frequency structural components. U2Fusion [17] advances this by introducing a unified unsupervised network with dense blocks for multi-scale feature integration, though its performance diminishes in extremely low-light scenarios. WaveletFormerNet [5] integrates wavelet transforms with Transformer-based modules, leveraging attention mechanisms to mitigate texture loss during downsampling, and demonstrates superior detail preservation in non-homogeneous fog conditions. However, its fixed frequency-band division strategy makes it difficult to adapt to dynamic illumination in complex lighting environments.

- (iii) Transformer architectures and attention mechanisms have revolutionized infrared-visible image fusion by enabling explicit modeling of long-range dependencies and cross-modal feature interactions. CrossFuse [6] introduces a cross-attention mechanism (CAM) that uses reversed softmax activation to prioritize complementary (uncorrelated) features, reducing redundant information while preserving thermal targets from infrared images and texture details from visible inputs. This design demonstrates that attention can effectively enhance modality-specific feature integration. SwinFusion [18] adapts the Swin Transformer to model cross-domain long-range interactions, outperforming CNN-based methods in global feature aggregation. Its hierarchical architecture with shifted windows allows efficient computation while capturing contextual dependencies, though it may incur higher computational costs in real-time scenarios. CDDFuse [19] proposes a correlation-driven dual-branch feature decomposition framework, using cross-modal differential attention to disentangle shared structural features and modality-specific details. This approach explicitly enhances feature complementarity, but the absence of illumination-aware guidance leads to significant loss of visible-light information under low-light conditions, degrading fusion quality.

Despite progress, three critical limitations hinder existing methods in extreme low-light environments: (i) Traditional fusion frameworks exhibit insufficient texture recovery capability for severely degraded visible images. Under extreme low-light conditions, the signal-to-noise ratio (SNR) of visible images drops significantly [1], accompanied by issues such as low contrast and prominent noise. This renders traditional fusion methods unable to balance thermal target saliency and texture detail preservation in nighttime scenes, leading to severely constrained fusion performance [3]. (ii) Direct fusion of cross-modal features triggers spectral distortion. Infrared and visible modalities exhibit distinct physical properties: infrared gradients primarily reflect thermal boundaries, while visible gradients characterize material textures [20,21]. Fusing their features without explicit decomposition generates artifacts, fundamentally because traditional methods under low-light conditions tend to over-rely on infrared thermal features [19]. This leads to the misamplification of thermal radiation features and suppression of high-frequency texture expression, thereby causing abnormal diffusion of thermal boundaries and blurring degradation of visible-light textures in shadow regions, which produces obvious artifacts. (iii) Complex dynamic illumination distributions in nighttime scenes significantly interfere with static fusion strategies, resulting in blurred target edges. Static fusion rules fail to adaptively balance multimodal features under varying lighting conditions [3].

To address the above issues, an illumination-aware progressive infrared-visible fusion network, D3Fusion, tailored for nighttime scenes, is proposed in this paper. The core of this approach lies in a unified framework that couples a Decomposition Illumination Net (DIN) with attention-guided feature disentanglement, enabling joint optimization of low-light enhancement and cross-modal integration. DIN decomposes degradative illumination components from visible images to achieve low-light enhancement, while the Disentangled Encoder and Reconstruction Decoder collaborate to disentangle and fuse cross-modal features—thereby preserving texture details while integrating thermal saliency. This framework dynamically separates illumination effects from reflectance-dependent texture features, preventing thermal boundary diffusion and noise amplification typical of traditional methods. Additionally, the proposed illumination-guided feature disentanglement encoder adjusts attention weights based on real-time illumination maps, while the multi-scale differential compensation decoder enhances complementary feature integration through bidirectional feature refinement and hierarchical attention gating. By explicitly modeling the physical divergence between thermal radiation and reflectance-based features, these components mitigate spectral artifacts and enable adaptive fusion under extreme low-light. To resolve the objective conflicts in end-to-end training, a three-stage progressive training paradigm is introduced: Stage I optimizes low-light enhancement to normalize illumination variations, Stage II refines cross-modal feature disentanglement to separate thermal saliency and texture cues, and Stage III conducts adaptive fusion with illumination-guided attention. This staged strategy ensures that the model sequentially adapts to illumination dynamics, feature disparities, and fusion objectives, overcoming the static rule limitations of traditional methods. 
In summary, the main contributions of the proposed method are as follows:

- A unified illumination-feature optimization framework is proposed, integrating Retinex-based degradation separation with attention-guided feature disentanglement to achieve joint optimization of low-light enhancement and feature disentanglement, balancing thermal saliency and texture preservation.

- An illumination-guided disentanglement encoder and a multi-scale differential compensation decoder are designed, wherein attention weights are adaptively adjusted using real-time illumination maps, while complementary feature extraction is enhanced through multi-scale differential compensation.

- A progressive three-stage training paradigm is established to resolve end-to-end training conflicts and adapt to dynamic illumination:

  - Stage I: Illumination-aware enhancement

  - Stage II: Cross-modal feature disentanglement

  - Stage III: Dynamic complementary fusion

Experimental results demonstrate that our method balances thermal targets and texture details in low-light scenarios, adaptively amplifying scene brightness while fusing complementary information according to dynamic illumination conditions. The framework delivers superior visual quality and leads on most quantitative metrics. Our work establishes a systematic Decomposition–Disentanglement–Dynamic Compensation (D3Fusion) framework for robust multimodal perception under illumination-critical conditions.

2. Methodology

2.1. Overview

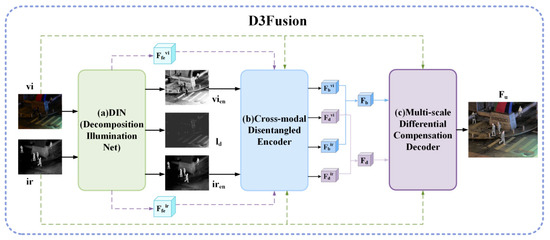

Figure 2 illustrates the overall architecture of the proposed illumination-aware progressive fusion framework. To address the challenges of nighttime image fusion under extreme low-light conditions, the framework employs a three-stage pipeline:

Figure 2.

Overall architecture of the proposed illumination-aware progressive fusion network. The framework comprises three core components: (a) Decomposition Illumination Net (DIN) for joint low-light enhancement, (b) Cross-Modal Disentangled Encoder for feature decomposition, and (c) Differential Compensation Decoder.

Illumination Decomposition. The Decomposition Illumination Net (DIN) processes the input visible and infrared image pair. Inspired by Retinex theory, DIN decomposes the pair into enhanced visible and infrared images, along with a degraded illumination map. Crucially, DIN also extracts illumination feature components that guide subsequent attention mechanisms. This stage explicitly mitigates degradative lighting effects in low-light visible imagery.

Feature Disentanglement. Enhanced images are processed by a Cross-Modal Disentangled Encoder (EN). The pipeline begins with a Shared Encoder that extracts preliminary cross-modal features. Significantly, this encoder is modulated by the illumination features from DIN, enabling adaptive feature extraction under varying illumination conditions. Modality-specific encoders then decompose the inputs into shared base features, which represent structural information, and modality-specific detail features, which capture visible textures and thermal signatures.

Differential-Aware Fusion. A Dynamic Compensation Decoder (DE), equipped with a multi-scale differential perception mechanism, dynamically fuses the disentangled base and detail features. It uses attention gating and differential feature compensation strategies to adaptively integrate the complementary information from both modalities, producing the final fused image.

Formally, given a visible image and an infrared image, the pipeline is governed by the following:
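One compact way to write these three steps is sketched here. The notation is assumed purely for illustration and is not taken from the original formula: $I_{vis}$ and $I_{ir}$ are the inputs, hats mark the enhanced images, $L_d$ is the degraded illumination map, $F_{ill}$ the illumination features, $B$ and $D$ the base and detail features, and $I_f$ the fused image.

```latex
\begin{aligned}
(\hat{I}_{vis},\ \hat{I}_{ir},\ L_d,\ F_{ill}) &= \mathrm{DIN}(I_{vis},\ I_{ir}), \\
(B_{vis},\ D_{vis},\ B_{ir},\ D_{ir}) &= \mathrm{EN}(\hat{I}_{vis},\ \hat{I}_{ir};\ F_{ill}), \\
I_f &= \mathrm{DE}(B_{vis},\ B_{ir},\ D_{vis},\ D_{ir}).
\end{aligned}
```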

2.2. DIN (Decomposition Illumination Net)

The Decomposition Illumination Net (DIN) is inspired by Retinex theory [7] and improves on the Scene-Illumination Disentangled Network (SIDNet) [1]. As formalized in Equation (2), Retinex theory decomposes a low-light image $I$ into a reflectance component $R$ and an illumination component $L$:

$$I = R \odot L \quad (2)$$

where $\odot$ denotes element-wise multiplication. The visual degradation caused by low-light conditions primarily originates from the illumination component $L$, while the reflectance component $R$ represents the intrinsic attributes of the scene. Thus, the enhanced image under normal illumination can be derived by estimating $L$ from the degraded low-light image $I$.
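The Retinex relation can be checked numerically on a toy example. The following is a minimal sketch in plain Python, not the DIN itself: `compose` and `recover_reflectance` are hypothetical helper names, images are small nested lists with values in [0, 1], and the illumination map is assumed to be known rather than estimated by a network.

```python
def compose(reflectance, illumination):
    """Element-wise product: low-light image = reflectance * illumination."""
    return [[r * l for r, l in zip(r_row, l_row)]
            for r_row, l_row in zip(reflectance, illumination)]


def recover_reflectance(image, illumination, eps=1e-6):
    """Invert the Retinex model by element-wise division.

    eps is an assumed small constant that guards against division by zero.
    """
    return [[i / max(l, eps) for i, l in zip(i_row, l_row)]
            for i_row, l_row in zip(image, illumination)]


reflectance = [[0.8, 0.4], [0.6, 0.2]]     # intrinsic scene content
illumination = [[0.1, 0.1], [0.05, 0.5]]   # dim, non-uniform lighting
low_light = compose(reflectance, illumination)
restored = recover_reflectance(low_light, illumination)
```

Dividing out the illumination restores the reflectance up to floating-point error, which is why an accurate illumination estimate is the crux of the enhancement step.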

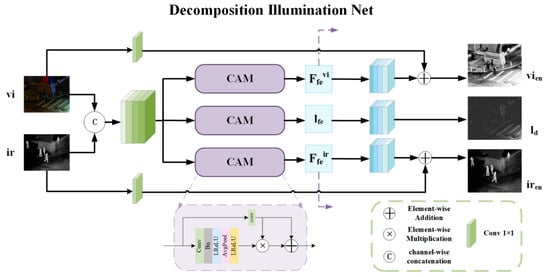

Architecture of DIN. As shown in Figure 3, the DIN module adopts a triple-branch architecture to achieve illumination dissociation. The visible and infrared images are concatenated as input and processed through stacked 3 × 3 convolutions for preliminary feature extraction. Each branch incorporates a Channel Attention Module (CAM) to adaptively select modality-specific features. The CAM generates channel attention vectors via global average pooling so that one branch enhances visible features, one isolates degraded-illumination features, and one preserves infrared thermal characteristics. Three symmetric decoders with residual skip connections then reconstruct the outputs: the enhanced visible image, the enhanced infrared image, and the degraded illumination image. The process is formulated as follows:

Here the input visible and infrared images are concatenated channel-wise. Notably, the visible and infrared illumination features extracted from the CAM blocks are reused for subsequent illumination-guided processing.

Figure 3.

Architecture of the Decomposition Illumination Net (DIN). The three-branch architecture processes concatenated visible-infrared inputs through parallel convolution streams with Channel Attention Modules (CAM).

The learning of decomposed components is supervised through a multi-objective loss function (detailed in Section 2.5), which enforces consistency between reconstructed images and their corresponding physical properties.

2.3. Disentanglement Encoder

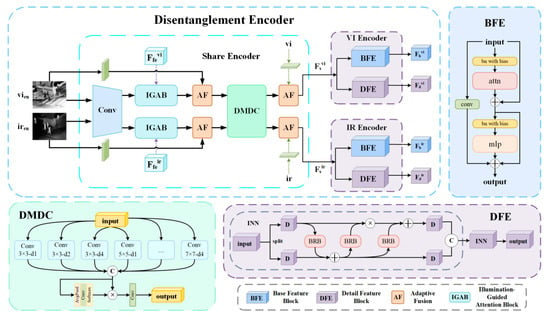

As illustrated in Figure 4, the encoder comprises a Shared Encoder for cross-modal feature extraction and Modality-Specific Encoders for visible and infrared feature disentanglement.

Figure 4.

Architecture of the Disentanglement Encoder. The dual-path network combines a shared encoder with illumination-guided attention (IGAB) and multi-scale dilated convolution (DMDC), and modality-specific branches for base/detail feature extraction. Base Feature Encoders (BFE) employ spatial self-attention, while Detail Feature Encoders (DFE) use inverted residual blocks for edge-preserving decomposition.

2.3.1. Shared Encoder

The shared encoder employs dual parallel branches to extract shallow features from visible and infrared inputs. Three critical components are integrated into this encoder: the Illumination-Guided Attention Block (IGAB), Dilated Multi-Scale Dense Convolution (DMDC) module, and Adaptive Fusion (AF) module. The process is formally expressed as follows:

where denotes the shared encoder, and represent shallow features of visible and infrared images.

Illumination-Guided Attention Block (IGAB). Conventional Transformers neglect illumination distribution when computing attention weights, thus limiting their robustness under complex and dynamic lighting conditions. To address this limitation, we introduce the Illumination-Guided Attention Block (IGAB) [13], which incorporates both decomposed visible and infrared illumination features from DIN as attention modulators. These features dynamically adjust cross-modal attention maps, facilitating physics-aware feature enhancement across varying illumination scenarios.

Dilated Multi-Scale Dense Convolution (DMDC). To capture multi-scale contextual features, DMDC combines three convolutional kernels (3 × 3, 5 × 5, 7 × 7) with three dilation rates (1, 2, 4), forming nine parallel branches. The outputs are concatenated and weighted via global average pooling and Softmax to generate attention maps, adaptively highlighting critical regions.
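To make the nine-branch layout concrete, the sketch below enumerates the kernel and dilation combinations and computes the spatial extent each branch covers. It is an illustration under stated assumptions, not the authors' module: the helper names are hypothetical, stride 1 is assumed, the `pooled` scores are made-up numbers, and applying Softmax across branches (rather than across channels) is one possible reading of the description.

```python
import math

KERNEL_SIZES = (3, 5, 7)      # from the text
DILATION_RATES = (1, 2, 4)    # from the text


def effective_kernel(kernel, dilation):
    """Spatial extent covered by a dilated kernel: d * (k - 1) + 1."""
    return dilation * (kernel - 1) + 1


def same_padding(kernel, dilation):
    """Padding that preserves spatial size at stride 1 (odd kernels)."""
    return dilation * (kernel - 1) // 2


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# One tuple per branch: (kernel, dilation, effective extent, padding).
branches = [(k, d, effective_kernel(k, d), same_padding(k, d))
            for k in KERNEL_SIZES for d in DILATION_RATES]

# Hypothetical globally pooled responses, one per branch.
pooled = [0.2, 0.5, 0.1, 0.9, 0.3, 0.4, 0.7, 0.6, 0.8]
weights = softmax(pooled)
```

Under these assumptions the largest branch (7 × 7 kernel, dilation 4) spans 25 pixels per side, so the nine branches together cover receptive fields from 3 to 25 pixels.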

Adaptive Fusion Module (AF). A learnable weighted fusion mechanism replaces naive concatenation or averaging. For inputs and , the fusion is expressed as follows:

where is a trainable parameter optimized via softmax constraints to balance multimodal feature contributions.
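A softmax-constrained weighted fusion of this kind can be sketched as follows. This is a minimal illustration rather than the paper's module: `adaptive_fusion` and the `logits` argument are hypothetical names, features are flattened to plain lists, and in a real network the logits would be trainable parameters updated by backpropagation.

```python
import math


def softmax(values):
    """Numerically stable softmax so the fusion weights sum to one."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_fusion(feat_a, feat_b, logits):
    """Blend two equally sized feature vectors with softmax-normalized weights."""
    w_a, w_b = softmax(logits)
    return [w_a * a + w_b * b for a, b in zip(feat_a, feat_b)]


# Equal logits reduce to plain averaging; unequal logits favor one input.
fused_equal = adaptive_fusion([1.0, 2.0], [3.0, 6.0], logits=[0.0, 0.0])
fused_biased = adaptive_fusion([1.0, 2.0], [3.0, 6.0], logits=[2.0, 0.0])
```

Because the weights are non-negative and sum to one, the fused feature always stays between the two inputs, which keeps its scale stable however the logits evolve during training.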

2.3.2. Modality-Specific Feature Disentanglement

The modality-specific encoders integrate parallel Basic Feature Extraction (BFE) and Detail Feature Extraction (DFE) branches, conceptually extended from CDDFuse’s dual-branch decomposition framework [19]. Visible and infrared modalities undergo independent processing using dedicated BFE/DFE streams to extract modality-specific features. BFE captures structural representations through spatial self-attention mechanisms. DFE preserves textural details via inverted residual blocks. This configuration enables specialized feature learning while maintaining inter-modal compatibility during fusion.

Basic Feature Extraction (BFE). BFE extracts global structural features from shallow shared features:

where denotes the BFE module. It integrates spatial multi-head self-attention and gated depth-wise convolutions to model long-range dependencies while maintaining computational efficiency.

Detail Feature Extraction (DFE). DFE focuses on local texture preservation:

where represents the DFE module. To achieve lossless feature extraction, an Inverted residual block (INN) with reflection padding and cross-channel interaction is employed. Each INN unit performs nonlinear feature coupling [22].

This hierarchical architecture explicitly disentangles modality-shared structural features and modality-specific detail features, providing a robust foundation for subsequent fusion.

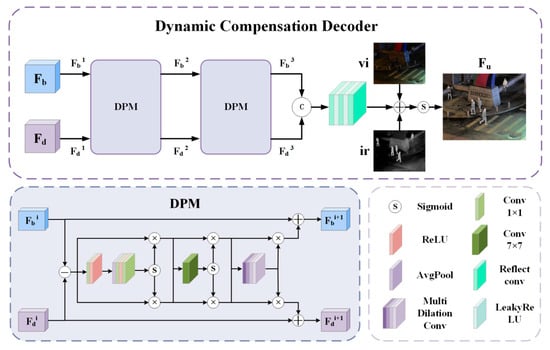

2.4. Dynamic Compensation Decoder

As illustrated in Figure 5, after the encoder disentangles features, the decoupled base and detail features are fused. To integrate cross-modal base (or detail) features, we reuse the BFE (or DFE) to obtain fused base and detail components:

where and represent the fused base and detail components, respectively.

Figure 5.

Architecture of the Dynamic Compensation Decoder. Multi-Scale Differential Perception Module (DPM) enabling bidirectional feature refinement and attention-gated fusion.

For the fusion of global base and detail features, a fusion decoder (Dynamic Compensation Decoder) is designed. Although infrared and visible features have been disentangled into base and detail components, simple fusion strategies (e.g., weighted averaging) lack sensitivity to feature discrepancies and fail to adequately exploit cross-modal complementarity. To address this, we propose a Differentiation-Driven Dynamic Perception Module (DPM) that achieves complementary feature fusion through differential feature disentanglement, multi-scale attention enhancement, and bidirectional feature compensation.

In DPM, firstly, we generate difference features through mutual differentiations:

where and are the input components of the i-th layer. and denote differential features, and represents element-wise subtraction, capturing modality-specific discrepancies.

Subsequently, multi-layer convolution and ReLU activation functions are used to enhance the sparsity and separability of differential features. Through Global Average Pooling (GAP) and dimensionality boosting and reducing convolution, channel attention maps are generated, enabling the network to focus on the complementary regions between modalities:

where and denote dimension reduction/expansion convolutions, and is Sigmoid. In order not to lose the global spatial correlation, a 7 × 7 large convolution kernel is then used:

where denote 7 × 7 convolution. After that, we adopted the cascaded dilation convolution (dilation rates = 1, 2, 3) to capture the multi-scale context:

where denote 3 × 3 convolution with an expansion rate of r.

Finally, the enhanced differential features are inversely fused into base/detail components:

where the denotes element-wise addition. Thus, the output components and of the i-th layer are obtained.
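The differencing, gating, and compensation steps can be illustrated with a heavily simplified sketch. It is not the DPM itself: the 7 × 7 and dilated convolutions are omitted, ReLU is applied directly to the raw difference, each channel is a flattened list of spatial values, and all function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu_diff(src, ref):
    """Per-channel ReLU(src - ref): what src contains that ref lacks."""
    return [[max(s - r, 0.0) for s, r in zip(s_ch, r_ch)]
            for s_ch, r_ch in zip(src, ref)]


def channel_attention(feat):
    """Global average pooling per channel followed by a sigmoid gate."""
    return [sigmoid(sum(ch) / len(ch)) for ch in feat]


def compensate(target, diff):
    """Add the attention-weighted difference back onto the target feature."""
    gates = channel_attention(diff)
    return [[t + g * d for t, d in zip(t_ch, d_ch)]
            for t_ch, d_ch, g in zip(target, diff, gates)]


# Two channels, three spatial positions each (flattened).
feat_vis = [[0.9, 0.1, 0.4], [0.2, 0.2, 0.2]]
feat_ir = [[0.1, 0.8, 0.4], [0.2, 0.6, 0.2]]

# Bidirectional compensation: each modality receives what only the other has.
vis_out = compensate(feat_vis, relu_diff(feat_ir, feat_vis))
ir_out = compensate(feat_ir, relu_diff(feat_vis, feat_ir))
```

In this toy case the visible feature is raised only where the infrared response is stronger, and positions where the two modalities already agree are left unchanged.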

In the final upsampling process, Reflection Padding is adopted instead of the traditional zero padding. The artifacts at the image edges are suppressed by mirroring symmetrical boundary pixels, and a controllable negative slope is introduced by using the LeakyReLU activation function to alleviate the problem of gradient sparsity in deep networks.

The decoder participates in Stage II and Stage III training:

where the denotes the fusion decoder. and represent the visible light image and the infrared image reconstructed using decoupled features, which are used to participate in the training of Stage II. denotes the final fused image, which is used to participate in the training of Stage III.

2.5. Progressive Three-Stage Training Strategy

Since the optimization directions of low-light enhancement (Stage I), feature decoupling (Stage II), and multimodal fusion (Stage III) are different, direct end-to-end training will cause the loss terms of different tasks to interfere with each other during gradient backpropagation, reducing the convergence stability. To solve the optimization conflicts among multiple tasks, we propose a progressive three-stage training framework with task-specific loss functions.

2.5.1. Stage I: Degradation-Aware Illumination Separation

In Stage I, we dissociated the degraded illumination features from paired visible and infrared images , obtaining the low-light-enhanced visible and infrared images . The total loss function is defined as follows:

where:

Reconstruction Loss (, ). Ensures fidelity of enhanced images:

where represents the calculation of structural similarity, . denotes the squared L2-norm calculation. Let denote the reconstructed visible-light image. In accordance with the previous Retinex theory, we define as the element-wise multiplication of and , which can be expressed by the formula:

Illumination Smoothness Loss (). Constrains the spatial continuity of :

where is an extremely small constant used to prevent the denominator from being zero (0.01 in this paper). represents the Sobel operator in both the and directions.

Mutual Consistency Loss (). Enforces physical consistency between illumination and reflectance:

introduces an illumination–reflection mutual-regularization term. At the prominent edges of objects (where is relatively large), it allows for abrupt illumination changes (where is relatively small) to better simulate the abrupt illumination changes at the edges of objects in the real world. Here, is a constant, and it is set to −10 in this paper.

Perceptual Loss (). Aligns enhanced images with histogram-equalized references using VGG-19 features:

where represents the L1-norm calculation, represents after histogram equalization, and represents the use of the pre-trained VGG-19 model. We extract the features of its conv3_3 and conv4_3 layers for similarity calculation.

2.5.2. Stage II: Feature Disentanglement

In the second stage, paired visible and infrared images are first enhanced via DIN. The enhanced visible and infrared images are then fed into a feature encoder to disentangle base features and detailed features . To generate supervisory images, the base and detail features of the visible image (or infrared image ) are combined and input into a fusion decoder to reconstruct the corresponding visible image (or infrared image ). This process aims to train the encoder’s capability in feature disentanglement. The total loss function in Stage II is formulated as follows:

where:

Reconstruction Loss (, ). Supervises the decoder to reconstruct visible/infrared images from disentangled features ():

Feature Disentanglement Loss (). During feature disentanglement, we posit that the base features extracted from visible and infrared images predominantly encode shared modality-invariant information (highly correlated), while the detail features capture modality-specific characteristics (weakly correlated). To explicitly enforce this decomposition, a feature disentanglement loss is introduced:

where computes Pearson correlation and is set to 1.01 to ensure that this loss function is always positive.
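A loss with this behavior can be sketched as a ratio in the style of CDDFuse [19]: the squared correlation of the detail features divided by the correlation of the base features plus the constant 1.01. That exact form is an assumption here, as are the function names and the use of flattened feature lists.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def decomposition_loss(base_vis, base_ir, detail_vis, detail_ir, eps=1.01):
    """Squared detail correlation divided by (base correlation + eps).

    Pearson correlation lies in [-1, 1], so eps = 1.01 keeps the
    denominator at or above 0.01 and the loss non-negative.
    """
    cc_detail = pearson(detail_vis, detail_ir)
    cc_base = pearson(base_vis, base_ir)
    return cc_detail ** 2 / (cc_base + eps)


# Desired case: correlated base features, uncorrelated detail features.
good = decomposition_loss([1, 2, 3, 4], [2, 4, 6, 8],
                          [1, -1, 1, -1], [1, 1, -1, -1])
# Undesired case: uncorrelated base features, identical detail features.
bad = decomposition_loss([1, -1, 1, -1], [1, 1, -1, -1],
                         [1, 2, 3, 4], [1, 2, 3, 4])
```

Minimizing this ratio pushes the base features of the two modalities toward high correlation and the detail features toward low correlation, matching the stated intent.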

Gradient Consistency Loss (). To further preserve high-frequency details, we introduce an additional gradient consistency loss:

2.5.3. Stage III: Cross-Modal Feature Fusion

The paired visible and infrared images are sequentially processed through DIN and the pre-trained encoder to obtain decomposed features . The cross-modal base features (or detail features ) are then fused via the BFE or DFE, producing fused features . Finally, these fused features are fed into the fusion decoder to generate the fused image .

where:

Feature Disentanglement Loss (). Remains consistent with Stage II.

Intensity Consistency Loss (). Ensures fused image preserves salient thermal and visible regions:

where and are the height and width of an image. By performing a maximum-value operation, within , we direct the fused image to retain both the high-frequency textures of the visible light image and the thermal radiation features of the infrared image.
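The effect of the maximum-value target can be seen in a small sketch. It assumes an L1 (mean absolute) distance between the fused image and the pixel-wise maximum of the two sources, normalized by the number of pixels; the choice of norm and the function name are assumptions.

```python
def intensity_loss(fused, visible, infrared):
    """Mean absolute difference between fused and max(visible, infrared)."""
    height, width = len(fused), len(fused[0])
    total = 0.0
    for y in range(height):
        for x in range(width):
            target = max(visible[y][x], infrared[y][x])
            total += abs(fused[y][x] - target)
    return total / (height * width)


visible = [[0.2, 0.7], [0.1, 0.3]]
infrared = [[0.9, 0.1], [0.1, 0.8]]
ideal = [[0.9, 0.7], [0.1, 0.8]]        # pixel-wise maximum of both sources
averaged = [[0.55, 0.4], [0.1, 0.55]]   # naive mean of both sources

loss_ideal = intensity_loss(ideal, visible, infrared)
loss_averaged = intensity_loss(averaged, visible, infrared)
```

A naive average is penalized wherever one modality is clearly brighter than the other, which is what steers the network toward keeping the salient source at each pixel.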

Multimodal Gradient Loss (). Aligns gradients with optimal edges from both modalities:

3. Experiments and Results

3.1. Datasets and Experimental Settings

Datasets. Four datasets covering different scenarios are selected: LLVIP [23], TNO [24], MSRS [3], and RoadScene [25]. The LLVIP dataset is a traffic surveillance dataset containing 1200 pairs of street scene images for validating dynamic illumination adaptation. The TNO dataset contains infrared-visible image pairs of nighttime military scenes with a resolution of 640 × 480, including extreme low-light conditions (<5 lux). The MSRS dataset is a multispectral road scene dataset containing 2000 image pairs, covering complex light interference scenarios such as vehicle headlights and street lamps. The RoadScene dataset is an autonomous driving scenario dataset (800 image pairs) that includes harsh conditions like fog and rainy nights. This experiment aims to verify the model’s enhancement capability under extremely low-light conditions. Therefore, we randomly selected images with illumination < 10 lux from each dataset to form the test set. The training set includes both normally lit and low-light images to enhance the model’s generalization ability, while we employed random rotation and gamma transformation to simulate different low-light intensities and added Gaussian noise to improve robustness. Our results are compared against seven State-of-the-Art (SOTA) fusion methods: PIAFusion [3], MUFusion [26], SeAFusion [27], CMTFusion [28], CrossFuse [6], DIVFusion [1], and CDDFuse [19].

Evaluation Metrics. Eight widely used metrics are adopted to quantify fusion performance from multiple perspectives: entropy (EN), standard deviation (SD), spatial frequency (SF), average gradient (AG), edge intensity (EI), visual information fidelity (VIF), mutual information (MI), and QAB/F [29,30,31].
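For orientation, three of the reference-free metrics can be computed as in the sketch below. These are the textbook definitions, assumed here rather than taken from the evaluation code used in the experiments; normalization conventions for SF in particular vary between toolboxes, and images are small nested lists of 8-bit integers.

```python
import math


def standard_deviation(img):
    """SD: population standard deviation of pixel intensities."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    h, w = len(img), len(img[0])
    rf = sum((img[y][x] - img[y][x - 1]) ** 2
             for y in range(h) for x in range(1, w))
    cf = sum((img[y][x] - img[y - 1][x]) ** 2
             for y in range(1, h) for x in range(w))
    return math.sqrt((rf + cf) / (h * w))


def entropy(img, levels=256):
    """EN: Shannon entropy in bits of an integer-valued grayscale image."""
    counts = [0] * levels
    for row in img:
        for p in row:
            counts[p] += 1
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


flat = [[128, 128], [128, 128]]     # no contrast, no texture
checker = [[0, 255], [255, 0]]      # maximal contrast and texture
```

A constant image scores zero on all three, while the checkerboard scores high, which reflects why brighter, more textured fused images tend to raise EN, SD, and SF.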

Implementation Details. The experiments were conducted on a machine equipped with an NVIDIA GeForce RTX 4090 GPU using the PyTorch 2.0 framework. During preprocessing, training samples were randomly cropped into 128 × 128 patches. Training ran for 260 epochs under the three-stage progressive scheme (Stage I: 100 epochs, Stage II: 40 epochs, Stage III: 120 epochs) with a batch size of 8. We employed the Adam optimizer (β1 = 0.9, β2 = 0.999) with an initial learning rate of 10⁻⁴ and a cosine decay strategy. For the network hyperparameters, λ1 to λ5 were set to 1000, 2000, 7, 9, and 40, respectively, and α1 to α6 to 1, 1, 2, 10, 1, and 10.
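The staged schedule can be sketched as a small bookkeeping helper. The epoch counts and base learning rate come from the text; everything else is assumed for illustration, including the function names, the decay to a minimum of zero, and the use of one cosine curve over all 260 epochs (the text does not say whether the schedule restarts at each stage).

```python
import math

STAGE_EPOCHS = (("I", 100), ("II", 40), ("III", 120))   # from the text
BASE_LR = 1e-4                                           # from the text


def stage_for_epoch(epoch):
    """Return the stage label for a 0-based global epoch index."""
    start = 0
    for name, length in STAGE_EPOCHS:
        if epoch < start + length:
            return name
        start += length
    raise ValueError("epoch is beyond the training schedule")


def cosine_lr(step, total_steps, base_lr=BASE_LR, min_lr=0.0):
    """Cosine decay from base_lr at step 0 to min_lr at total_steps."""
    progress = step / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


total_epochs = sum(length for _, length in STAGE_EPOCHS)
```

A training loop would call `stage_for_epoch` to decide which sub-networks and loss terms are active, so that each stage optimizes only its own objective.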

3.2. Comparative Studies

3.2.1. Qualitative Analysis

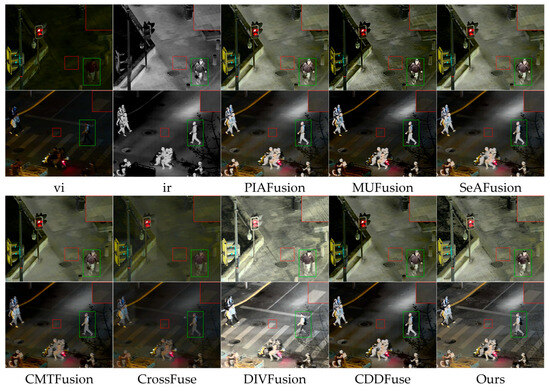

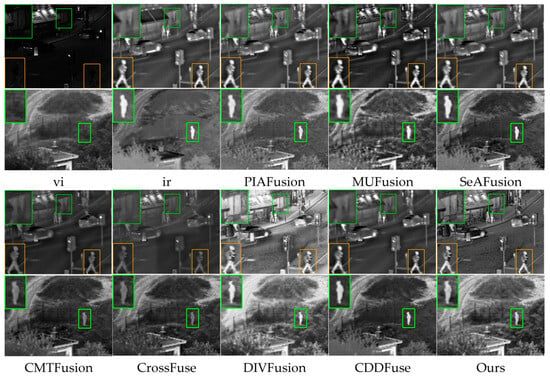

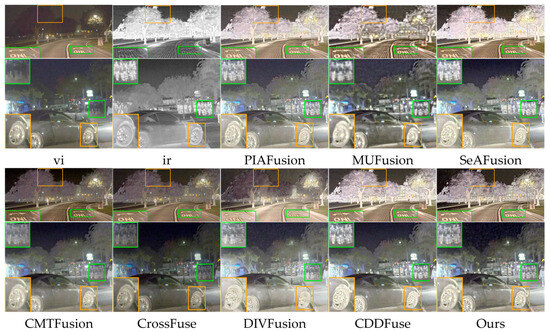

Visual comparisons across four benchmark datasets (Figure 6, Figure 7, Figure 8 and Figure 9) demonstrate our method’s superior fusion quality through effective integration of infrared thermal radiation signatures and visible-light textural details. As evidenced in Figure 6 (LLVIP #34, #13) and Figure 7 (TNO #25), conventional methods exhibit critical limitations in shadowed regions: PIAFusion, MUFusion, CMTFusion, SeAFusion, and CDDFuse suffer from textural (ground brick patterns) degradation due to unconstrained thermal feature dominance without illumination decomposition, resulting in over-smoothed low-light textures and significant detail loss.

Figure 6.

Visual comparison for “#34” and “#13” in the LLVIP dataset.

Figure 7.

Visual comparison for “#25” and “#19” in the TNO dataset.

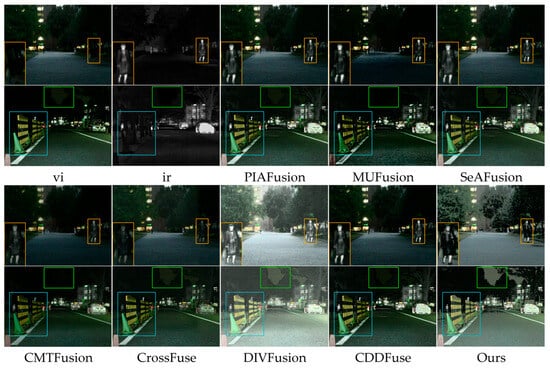

Figure 8.

Visual comparison for “#00004N” and “#00943N” in the MSRS dataset.

Figure 9.

Visual comparison for “#FLIR_07206” and “#FLIR_08284” in the RoadScene dataset.

In contrast, D3Fusion simultaneously enhances pedestrians in extremely low-light regions and preserves lane markings. It does so by combining degraded illumination separation (DIN decomposition) with dynamic feature weighting through illumination-guided attention (IGAB), further enhanced by differential feature compensation, which mitigates the inherent conflict between thermal saliency retention and texture preservation. Furthermore, our framework suppresses thermal boundary diffusion artifacts and illumination-induced halo effects in Figure 7 (TNO #19, #25: human contours in vegetation and near streetlights) through multimodal feature equilibrium.

The dynamic adaptation capability is exemplified in complex lighting environments such as Figure 8 (MSRS #00943N: sky-forest boundaries) and Figure 9 (RoadScene #FLIR_08284: vehicle-light interactions). Here, the Illumination-Guided Attention Block (IGAB) adaptively balances multi-source lighting influences, effectively mitigating unnatural artifacts near light sources (e.g., color distortion) and exposure imbalance prevalent in DIVFusion and PIAFusion, thereby maintaining edge sharpness and spatial consistency under varying illumination conditions.

3.2.2. Quantitative Analysis

Eight metrics across four benchmark datasets (LLVIP, MSRS, TNO, and RoadScene) are used to evaluate the proposed method. To ensure reproducibility, three independent replicate fusion experiments were performed on each dataset using identical training weights and hyperparameters. The standard deviation of key evaluation metrics (i.e., EN, SD, SF, and VIF) across these replicates was found to be ≤1%, validating the stability of outputs under consistent experimental conditions. As shown in Table 1, quantitative comparisons demonstrate superior performance of our approach under low-light conditions. Significant advantages in information entropy (EN) and standard deviation (SD) indicate strong detail preservation and contrast enhancement. Leading scores in the gradient-based metrics (SF, EI, AG) indicate enhanced low-light textures and effective extraction of high-frequency features. Although our method does not achieve the best results in the structural fidelity metrics (MI, QAB/F), it remains competitive. This outcome aligns with our fundamental insight: low-light enhancement constitutes information reconstruction rather than simple replication. By prioritizing visual discriminability, the approach may reduce correlation with the source images. At the same time, the proposed model actively suppresses thermal noise edges in infrared images to achieve clearer textures of dynamic targets. The fused images exhibit enhanced clarity and more appropriate brightness in low-light scenarios, yielding superior visual quality. Therefore, the slight reductions in mutual information (MI) and edge preservation (QAB/F) represent an acceptable compromise.

Table 1.

Quantitative results of the IVF task. Boldface and underline show the best and second-best values, respectively. ↑ indicates higher values are better.

3.3. Ablation Study

To validate the contribution of key components and training strategies within the proposed D3Fusion framework, comprehensive ablation experiments were conducted on the LLVIP test set, as detailed in Table 2.

Table 2.

Ablation experiment results in the test set of LLVIP. Bold indicates the best value.

3.3.1. Impact of Core Modules

Removing the Illumination-Guided Attention Block (IGAB) reduced performance in both entropy (EN) and standard deviation (SD). This degradation confirms IGAB’s critical role in dynamically modulating cross-modal attention weights through real-time illumination maps, which enhances thermal target contrast in severely underexposed areas while simultaneously suppressing overexposure artifacts.

Omitting the Dilated Multi-Scale Dense Convolution (DMDC) module significantly impacted feature representation capabilities, with observable reductions in EN and SD metrics. This performance drop stems from DMDC’s role in capturing multi-scale contextual features through parallel convolutional pathways with varying receptive fields.

Exclusion of the Differentiation-Driven Dynamic Perception Module (DPM) caused significant degradation in visual information fidelity (VIF). This verifies DPM’s critical function in enabling bidirectional gradient compensation for high-frequency detail preservation.

The simultaneous removal of all three core components (IGAB + DMDC + DPM) resulted in severe performance collapse across all quantitative measures, establishing their synergistic operation within the illumination guidance → multi-scale encoding → differential compensation pipeline.

3.3.2. Training Strategy Analysis

The three-stage training paradigm proved indispensable for stable optimization. End-to-end training without progressive stages caused measurable performance degradation across multiple metrics. This confirms that sequential decoupling of illumination correction (Stage I), feature disentanglement (Stage II), and dynamic fusion (Stage III) resolves fundamental optimization conflicts arising from competing objectives. A critical incompatibility exists between low-light enhancement (Stage I) and feature disentanglement (Stage II): the illumination smoothness loss constrains illumination continuity but simultaneously suppresses the detail separation capability of the feature disentanglement loss. This conflict manifests as compromised texture preservation when both losses are optimized concurrently.

Skipping the feature disentanglement stage (Stage II) significantly degraded multiple key metrics, indicating insufficient modality-specific feature separation by the encoder. This inadequacy substantially compromises model convergence stability during training and reduces overall robustness. These findings validate the necessity of explicit decomposition learning prior to fusion, confirming that staged optimization effectively prevents mutually inhibitory gradient updates.

3.3.3. Loss Function Contributions

Removal of the illumination smoothness loss reduced visual information fidelity (VIF) and introduced visible artifacts in high-illumination regions due to non-smooth illumination estimation. This degradation confirms the loss term’s critical role in enforcing illumination map continuity through curvature constraints, preventing overexposure artifacts while preserving natural luminance transitions.

Elimination of the feature disentanglement loss significantly impaired feature separation capability, evidenced by measurable degradation in mutual information (MI) metrics. The loss function’s design, enforcing high correlation between base features while minimizing detail feature correlation, proves essential for effective modality-specific feature extraction. This clean decoupling substantially enhances subsequent cross-modal fusion quality by reducing thermal-texture confusion in overlapping spectral regions.
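The principle of rewarding correlated base features and penalizing correlated detail features can be sketched with a simple ratio of Pearson correlations. This is an illustrative form only: the exact expression used in D3Fusion is not restated in this section, and the function names and the offset of 1.01 (which keeps the denominator positive) are assumptions.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length vectors."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def disentanglement_loss(base_ir, base_vis, detail_ir, detail_vis, offset=1.01):
    """Small when base features correlate and detail features do not."""
    cc_base = pearson(base_ir, base_vis)        # should be driven towards +1
    cc_detail = pearson(detail_ir, detail_vis)  # should be driven towards 0
    return cc_detail ** 2 / (cc_base + offset)


# Well-separated features: shared base structure, unrelated details.
good = disentanglement_loss([1, 2, 3, 4], [2, 4, 6, 8],
                            [1, -1, 1, -1], [1, 1, -1, -1])
# Poorly separated features: opposing bases, identical details.
bad = disentanglement_loss([1, 2, 3, 4], [8, 6, 4, 2],
                           [1, -1, 1, -1], [1, -1, 1, -1])
```

Here the flattened feature maps are represented as short vectors; the well-separated case yields a much smaller loss than the poorly separated one.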

These experiments conclusively demonstrate that each component synergistically contributes to robust fusion performance under extreme low-light conditions.

3.4. DIN Decomposition Visualization

Figure 10 illustrates the decomposition results of DIN. The illumination separation process effectively decouples degraded lighting from reflectance components, enhancing both visible and infrared modalities. Critical features obscured in raw inputs become discernible post-enhancement, particularly in 0–5 lux regions (highlighted areas). This explicit separation provides a robust foundation for subsequent feature disentanglement and fusion.

Figure 10.

DIN decomposition visualization.
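The Retinex idea behind this decomposition treats an observed image as the pixel-wise product of a reflectance-like component and an illumination map, so dividing out the illumination brightens dark regions. The sketch below assumes the illumination map is already given, whereas DIN predicts it with a network; the function name `remove_illumination` and the `eps` floor are hypothetical.

```python
def remove_illumination(image, illumination, eps=1e-4):
    """Divide an image by its illumination map, pixel by pixel.

    image, illumination: 2-D lists of floats in [0, 1] with the same shape.
    eps: lower bound on the illumination to avoid division by zero.
    """
    return [
        [min(1.0, pixel / max(light, eps)) for pixel, light in zip(img_row, ill_row)]
        for img_row, ill_row in zip(image, illumination)
    ]


# Two dark pixels with the same underlying reflectance under different lighting.
dark_image = [[0.125, 0.25]]
illumination = [[0.25, 0.5]]
enhanced = remove_illumination(dark_image, illumination)
```

Both pixels map to the same enhanced value, which illustrates how separating illumination exposes content that uneven lighting had hidden.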

These results collectively validate our framework’s superiority in addressing low-light degradation, modality conflicts, and dynamic illumination challenges inherent to nighttime fusion tasks.

3.5. Computational Efficiency

We tested the model on an NVIDIA RTX 4090D (24 GB) using 256 × 256 image samples from the datasets. The average inference time per image is 41.3 ms (corresponding to 24.2 frames per second), at a computational cost of 450.5 GFLOPs per frame. These metrics reflect the model’s computational demands while demonstrating real-time inference potential on high-performance hardware. The model is implemented in PyTorch, which supports deployment on various platforms. The lightweight design of key modules (e.g., the multi-scale differential compensation decoder) provides potential for compression and deployment on resource-constrained devices. In future work, we aim to further explore model compression techniques to reduce computational overhead, while conducting targeted research to adapt the model to specific application scenarios. This will involve hardware–software co-optimization and real-world environment testing to broaden its applicability across diverse deployment scenarios.
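The relationship between the reported latency, frame rate, and compute cost can be checked with a few lines of arithmetic. The two input figures come from the text; the sustained throughput is a derived estimate that assumes frames are processed back to back.

```python
latency_ms = 41.3          # average inference time per image, from the text
gflops_per_frame = 450.5   # compute cost per frame, from the text

# Frames per second is the reciprocal of the per-frame latency.
fps = 1000.0 / latency_ms

# Sustained throughput needed to hold that frame rate, in TFLOP/s.
sustained_tflops = gflops_per_frame * fps / 1000.0

print(f"{fps:.1f} FPS, about {sustained_tflops:.1f} TFLOP/s sustained")
```

A sustained requirement of this order helps explain why compression would be needed before the model could run on resource-constrained devices.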

4. Conclusions and Discussion

This paper proposes D3Fusion, an illumination-aware progressive fusion framework for infrared-visible images in extreme low-light scenarios. By establishing a collaborative optimization mechanism between illumination correction and feature disentanglement, our framework effectively addresses the core challenges of texture degradation, modality conflicts, and dynamic illumination adaptability in extreme low-light conditions. The core innovations include:

- A unified illumination-feature co-optimization architecture that integrates Retinex-based degradation separation with attention-guided feature fusion, enabling joint enhancement of low-light images and decoupling of cross-modal characteristics.

- An illumination-guided disentanglement encoder and multi-scale differential compensation decoder, which dynamically modulate attention weights using real-time illumination maps while enhancing complementary feature extraction via multi-scale differential perception.

- A three-stage progressive training paradigm (degradation recovery, feature disentanglement, and adaptive fusion) that resolves optimization conflicts inherent in end-to-end frameworks, achieving balanced enhancement in thermal saliency and texture preservation.

Extensive experiments on four benchmark datasets (TNO, LLVIP, MSRS, RoadScene) demonstrate that D3Fusion outperforms State-of-the-Art methods in both visual quality and objective metrics under low-light conditions. The proposed Decomposition–Disentanglement–Dynamic Compensation framework effectively suppresses spectral distortion artifacts while enhancing salient information, establishing a robust solution for multimodal perception in illumination-degraded environments with significant applicability for nighttime autonomous systems.

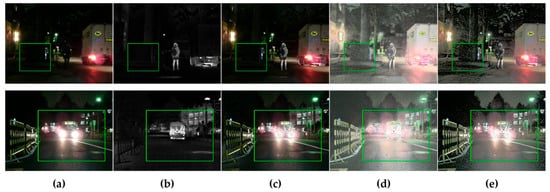

Despite its strong performance, D3Fusion exhibits limitations in certain challenging scenarios. One representative failure case occurs in environments with complex lighting and poor image quality: in extremely dark, shadowed regions, residual noise may be misidentified as object contours during the illumination recognition and correction process. This misinterpretation leads to inappropriate enhancement, where noise artifacts are erroneously amplified as structural edges. An example of this artifact is visualized in Figure 11, demonstrating the model’s sensitivity to severe noise in low-light shadows. Additionally, slight halo artifacts may emerge near intense glare sources due to overcompensation during illumination correction, accompanied by blurred object boundaries. Finally, the model’s computational efficiency (24 FPS on RTX 4090) restricts deployment on resource-constrained edge devices. These limitations will motivate future work on adaptive illumination adjustment, artifact suppression, and lightweight network optimization.

Figure 11.

Failure cases of “#00714N” and “#00947N” in the MSRS dataset. (a) VIS; (b) IR; (c) Fusion result from CDDFuse (without illumination correction); (d) Fusion result from DIVFusion (with illumination correction); (e) Ours (with illumination correction). During illumination correction, residual noise is misidentified as structural contours, resulting in erroneous enhancement in low-light regions.

Author Contributions

Conceptualization, W.Y. and X.C.; Methodology, W.Y.; Software, W.Y.; Validation, W.Y.; Formal analysis, W.Y., Y.L. and X.C.; Investigation, W.Y.; Resources, W.Y.; Data curation, W.Y.; Writing—original draft, W.Y.; Writing—review & editing, W.Y., Y.L. and X.C.; Visualization, W.Y.; Supervision, Y.L. and X.C.; Project administration, Y.L. and X.C.; Funding acquisition, X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (62203312) and the Natural Science Foundation of Sichuan Province of China (2024NSFSC1484).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. The datasets in this article are not readily available due to technical and time limitations. However, the data are available from the authors upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| IR | Infrared Images |
| VIS | Visible Images |
| DIN | Decomposition Illumination Network |
| SNR | Signal-to-Noise Ratio |
| SSR | Single-Scale Retinex |
| MSR | Multi-Scale Retinex |
| SIDNet | Scene-Illumination Disentangled Network |
| ir | Infrared Input Images |
| vi | Visible Input Images |
| CAM | Channel Attention Modules |
| CNN | Convolutional Neural Networks |
| EN | Encoder |
| DE | Decoder |
| IGAB | Illumination-Guided Attention Block |
| DMDC | Dilated Multi-Scale Dense Convolution |
| SE | Shared Encoder |
| BFE | Base Feature Encoders |
| DFE | Detail Feature Encoders |
| AF | Adaptive Fusion |
| INN | Inverted Residual Block |
| DPM | Differential Perception Module |
| ReLU | Rectified Linear Unit |
| GAP | Global Average Pooling |
| LeakyReLU | Leaky Rectified Linear Unit |
| VGG | Visual Geometry Group |

References

1. Tang, L.; Xiang, X.; Zhang, H.; Gong, M.; Ma, J. DIVFusion: Darkness-free infrared and visible image fusion. Inf. Fusion 2023, 91, 477–493.

2. Zhang, X.; Demiris, Y. Visible and Infrared Image Fusion Using Deep Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10535–10554.

3. Tang, L.; Yuan, J.; Zhang, H.; Jiang, X.; Ma, J. PIAFusion: A progressive infrared and visible image fusion network based on illumination aware. Inf. Fusion 2022, 83, 79–92.

4. Jin, Y.; Yang, W.; Tan, R.T. Unsupervised Night Image Enhancement: When Layer Decomposition Meets Light-Effects Suppression. In Proceedings of the 17th European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 404–421.

5. Zhang, S.; Tao, Z.; Lin, S. WaveletFormerNet: A Transformer-based wavelet network for real-world non-homogeneous and dense fog removal. Image Vis. Comput. 2024, 146, 105014.

6. Li, H.; Wu, X.-J. CrossFuse: A novel cross attention mechanism based infrared and visible image fusion approach. Inf. Fusion 2024, 103, 102147.

7. Land, E.H.; McCann, J.J. Lightness and Retinex Theory. J. Opt. Soc. Am. 1971, 61, 1–11.

8. Wang, S.H.; Zheng, J.; Hu, H.M.; Li, B. Naturalness Preserved Enhancement Algorithm for Non-Uniform Illumination Images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

9. Fu, X.Y.; Zeng, D.L.; Huang, Y.; Zhang, X.P.; Ding, X.H. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 2782–2790.

10. Jobson, D.J.; Rahman, Z.U.; Woodell, G.A. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

11. Jobson, D.J.; Rahman, Z.U.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

12. Zhang, Y.H.; Zhang, J.W.; Guo, X.J. Kindling the Darkness: A Practical Low-light Image Enhancer. In Proceedings of the 27th ACM International Conference on Multimedia (MM), Nice, France, 21–25 October 2019; ACM: New York, NY, USA, 2019; pp. 1632–1640.

13. Cai, Y.; Bian, H.; Lin, J.; Wang, H.; Timofte, R.; Zhang, Y. Retinexformer: One-stage Retinex-based Transformer for Low-light Image Enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 12470–12479.

14. Li, M.D.; Liu, J.Y.; Yang, W.H.; Sun, X.Y.; Guo, Z.M. Structure-Revealing Low-Light Image Enhancement Via Robust Retinex Model. IEEE Trans. Image Process. 2018, 27, 2828–2841.

15. Zhang, Y.; Guo, X.; Ma, J.; Liu, W.; Zhang, J. Beyond Brightening Low-light Images. Int. J. Comput. Vis. 2021, 129, 1013–1037.

16. Wang, C.; Pu, Y.; Zhao, Z.; Nie, R.; Cao, J.; Xu, D. FCLFusion: A frequency-aware and collaborative learning for infrared and visible image fusion. Eng. Appl. Artif. Intell. 2024, 137, 109192.

17. Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A Unified Unsupervised Image Fusion Network. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 502–518.

18. Ma, J.Y.; Tang, L.F.; Fan, F.; Huang, J.; Mei, X.G.; Ma, Y. SwinFusion: Cross-domain Long-range Learning for General Image Fusion via Swin Transformer. IEEE-CAA J. Autom. Sin. 2022, 9, 1200–1217.

19. Zhao, Z.; Bai, H.; Zhang, J.; Zhang, Y.; Xu, S.; Lin, Z.; Timofte, R.; Van Gool, L. CDDFuse: Correlation-Driven Dual-Branch Feature Decomposition for Multi-Modality Image Fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; IEEE: New York, NY, USA, 2023; pp. 5906–5916.

20. Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

21. Wang, J.; Xi, X.; Li, D.; Li, F. FusionGRAM: An Infrared and Visible Image Fusion Framework Based on Gradient Residual and Attention Mechanism. IEEE Trans. Instrum. Meas. 2023, 72, 5005412.

22. Zhou, M.; Fu, X.Y.; Huang, J.; Zhao, F.; Liu, A.P.; Wang, R.J. Effective Pan-Sharpening With Transformer and Invertible Neural Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5406815.

23. Jia, X.Y.; Zhu, C.; Li, M.Z.; Tang, W.Q.; Zhou, W.L. LLVIP: A Visible-infrared Paired Dataset for Low-light Vision. In Proceedings of the 18th IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 3489–3497.

24. Toet, A.; Hogervorst, M.A. Progress in color night vision. Opt. Eng. 2012, 51, 0109010.

25. Xu, H.; Ma, J.Y.; Le, Z.L.; Jiang, J.J.; Guo, X.J. FusionDN: A Unified Densely Connected Network for Image Fusion. In Proceedings of the 34th AAAI Conference on Artificial Intelligence/32nd Innovative Applications of Artificial Intelligence Conference/10th AAAI Symposium on Educational Advances in Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12484–12491.

26. Cheng, C.; Xu, T.; Wu, X.-J. MUFusion: A general unsupervised image fusion network based on memory unit. Inf. Fusion 2023, 92, 80–92.

27. Tang, L.; Yuan, J.; Ma, J. Image fusion in the loop of high-level vision tasks: A semantic-aware real-time infrared and visible image fusion network. Inf. Fusion 2022, 82, 28–42.

28. Park, S.; Vien, A.G.; Lee, C. Cross-Modal Transformers for Infrared and Visible Image Fusion. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 770–785.

29. Liu, J.Y.; Wu, G.Y.; Liu, Z.; Wang, D.; Jiang, Z.Y.; Ma, L.; Zhong, W.; Fan, X.; Liu, R.S. Infrared and Visible Image Fusion: From Data Compatibility to Task Adaption. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 2349–2369.

30. Ma, J.Y.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

31. Zhang, X. Deep Learning-Based Multi-Focus Image Fusion: A Survey and a Comparative Study. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4819–4838.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).