1. Introduction

Transfers in professional football (soccer) are a risky business because the average transfer fee has increased in recent years [1] and because these fees can be characterized as investments with high risks where large fees are involved [2]. Extensive knowledge about players is beneficial in making well-informed decisions about these complex transfer investments in football. Through a practitioner-oriented study on forecasting players’ future quality and monetary value, this paper provides football clubs with methods for gaining new insights and for improving their strategic transfer investments.

Models providing information about player quality and value have recently emerged with the evolution of data-driven player performance indicators. Improvements in data-capturing technologies resulted in large data sets containing in-game data about football players, which provide the opportunity to obtain more complex variables on player performance [3,4]. Numerous player performance indicators have been introduced since then. An example is the expected goals (xG) indicator, which values shot chances and shooting ability [5,6,7,8]. Next to such action-specific models, assessment methods exist for general player performance, which can be divided into bottom-up and top-down ratings [9]. Expected threat (xThreat) [10,11,12] and VAEP [13,14,15,16] are examples of bottom-up ratings that quantify action quality and use the quality of the actions to create general ratings. Top-down ratings such as plus–minus ratings [17,18,19,20,21], Elo ratings adjusted for team sports [22], and the SciSkill algorithm [23] distribute credit of player performance based on the result of a team as a whole. For the monetary value of players, many models about the estimation of transfer fees and market values have been introduced and provide indicators of the current value of football players [24]. These performance and financial models describe the quality and monetary value of a football player, and they can complement traditional scouting reports. This allows managers and technical directors of football clubs to make better-informed transfer decisions.

These models for player quality and transfer value describe the quality and financial value of football players up to the present moment, whereas a transfer decision concerns whether a football player should be part of a team in the future. To make better-informed transfer decisions, team managers and technical directors also need insights into the future development of the indicator values that describe the financial value and the player performance. This paper examines the training of supervised learning models that forecast the development in player quality and transfer value of players.

To this end, two prediction problems are studied: forecasting the quality of a football player and their transfer value one year ahead. In the first prediction problem, models are trained to predict the development of a top-down quality indicator, the SciSkill [23]. The second prediction problem concerns the prediction of the development of the player value, described by the Estimated Transfer Value (ETV) [25]. A forecasting horizon of one year is selected in this study as it aligns with the length of one full season in football and the success of a transfer is often measured by the performances in the subsequent season. The resulting models of these prediction problems offer insight into the question of whether a player will be worth the money in the future. These models thus provide critical insights for the managerial staff of a professional football club.

To further improve the usability of the research results for staff at football organizations, the findings of models should be presented such that they can be utilized by football clubs in practice, as stressed by Herold et al. [

4]. This means that models should not only be assessed on predictive accuracy but also on explainability and on methods for uncertainty quantification [

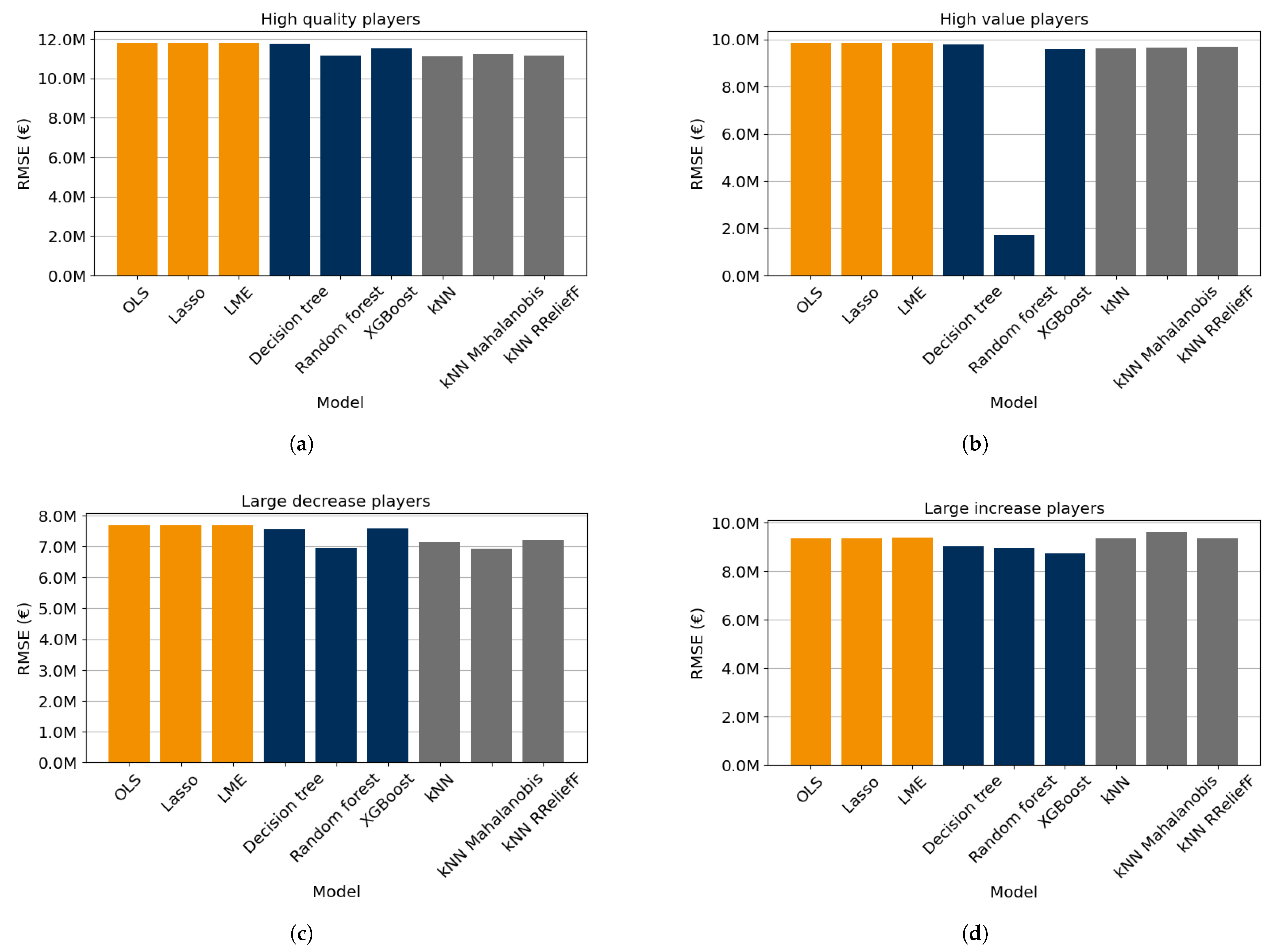

26]. To this end, only explainable supervised learning models are used in this research, and the models are assessed on their methods for uncertainty quantification. Although deep learning models are common in forecasting tasks, their lack of explainability makes them less suitable for application at football clubs, and they are thus excluded in this study. To focus even more on the applicability for practitioners, the accuracy of the models is partly determined by estimating the loss values on different groups of football players, because certain types of football players are more important from a practitioner’s perspective.

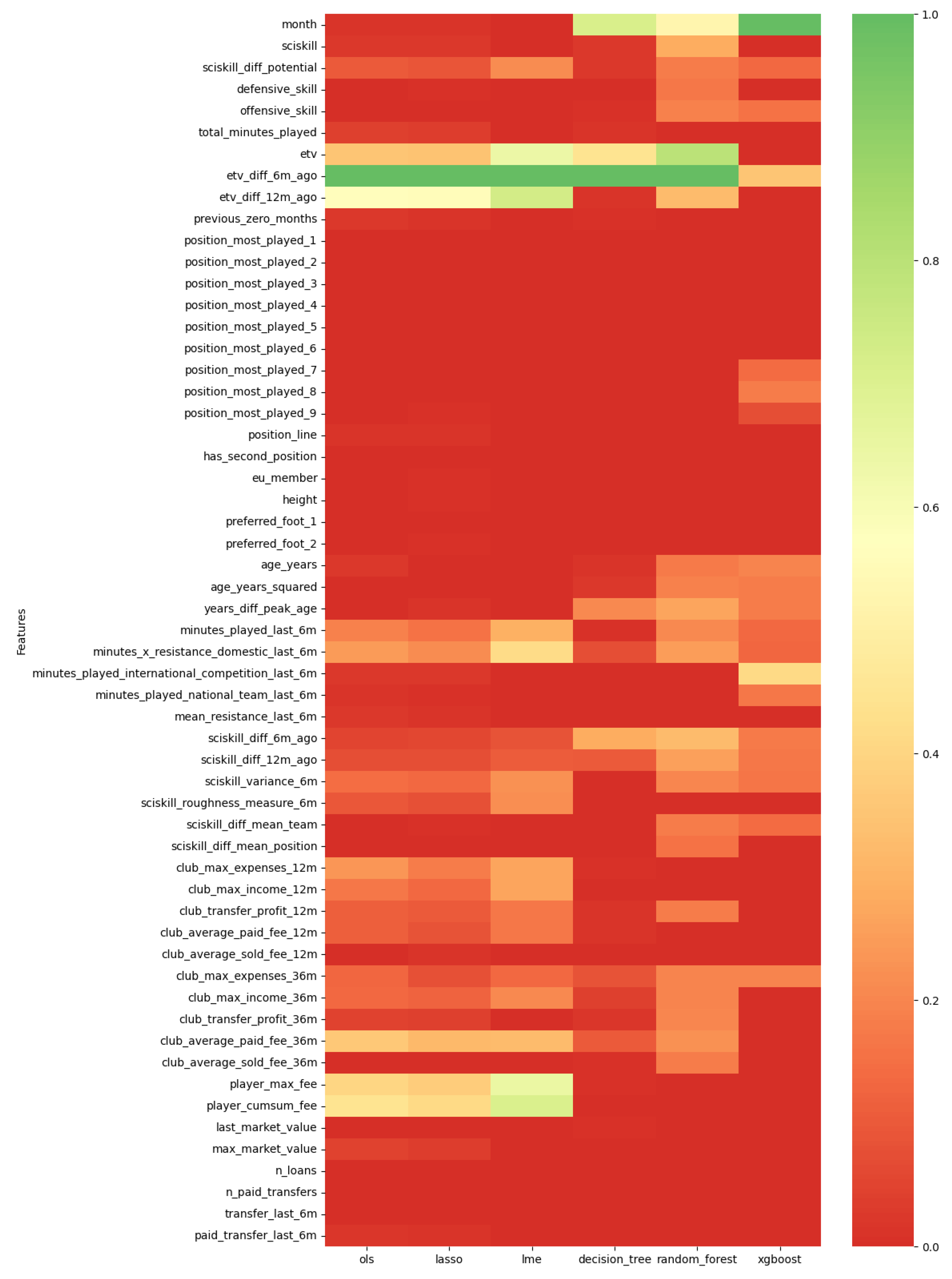

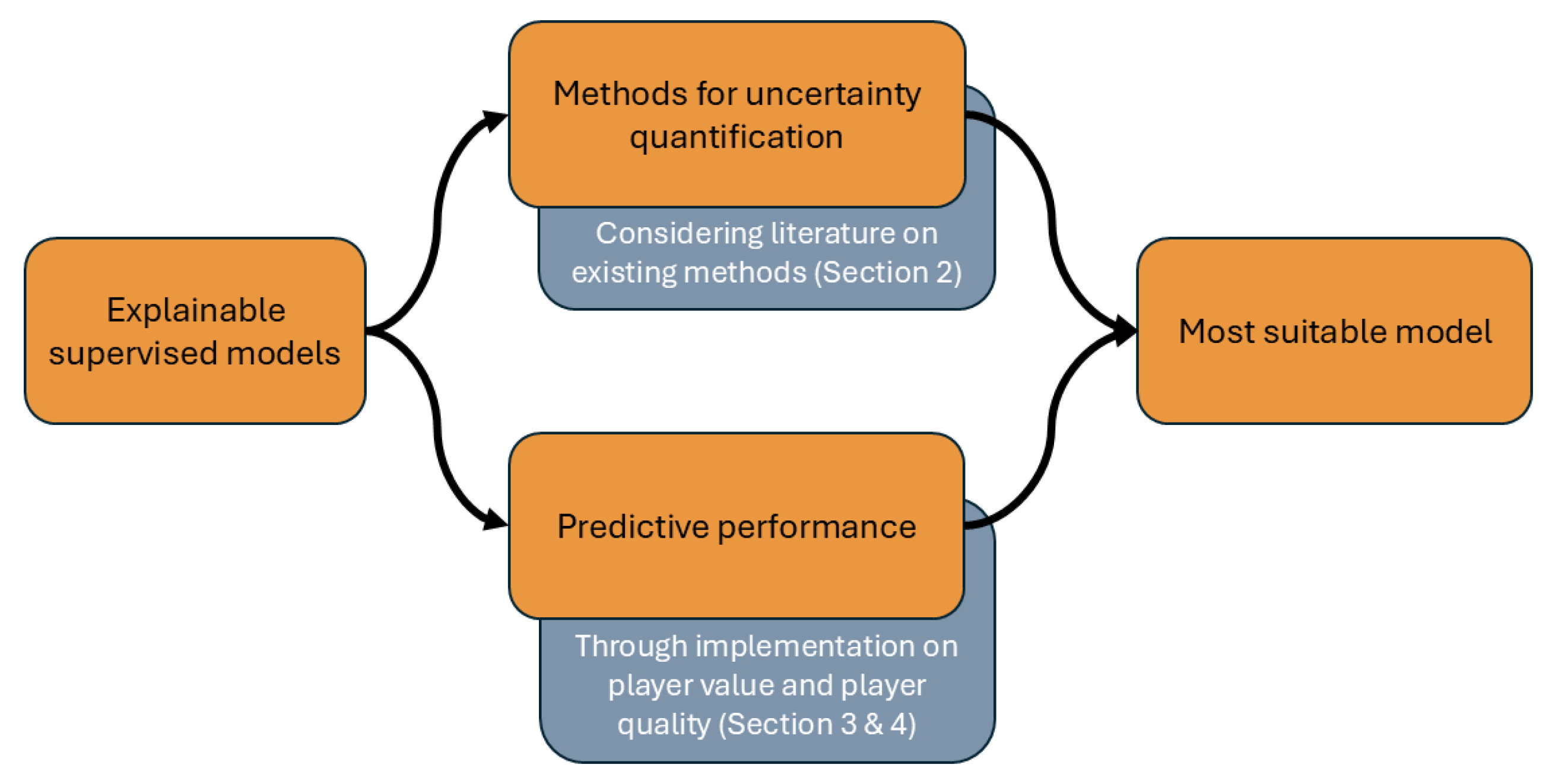

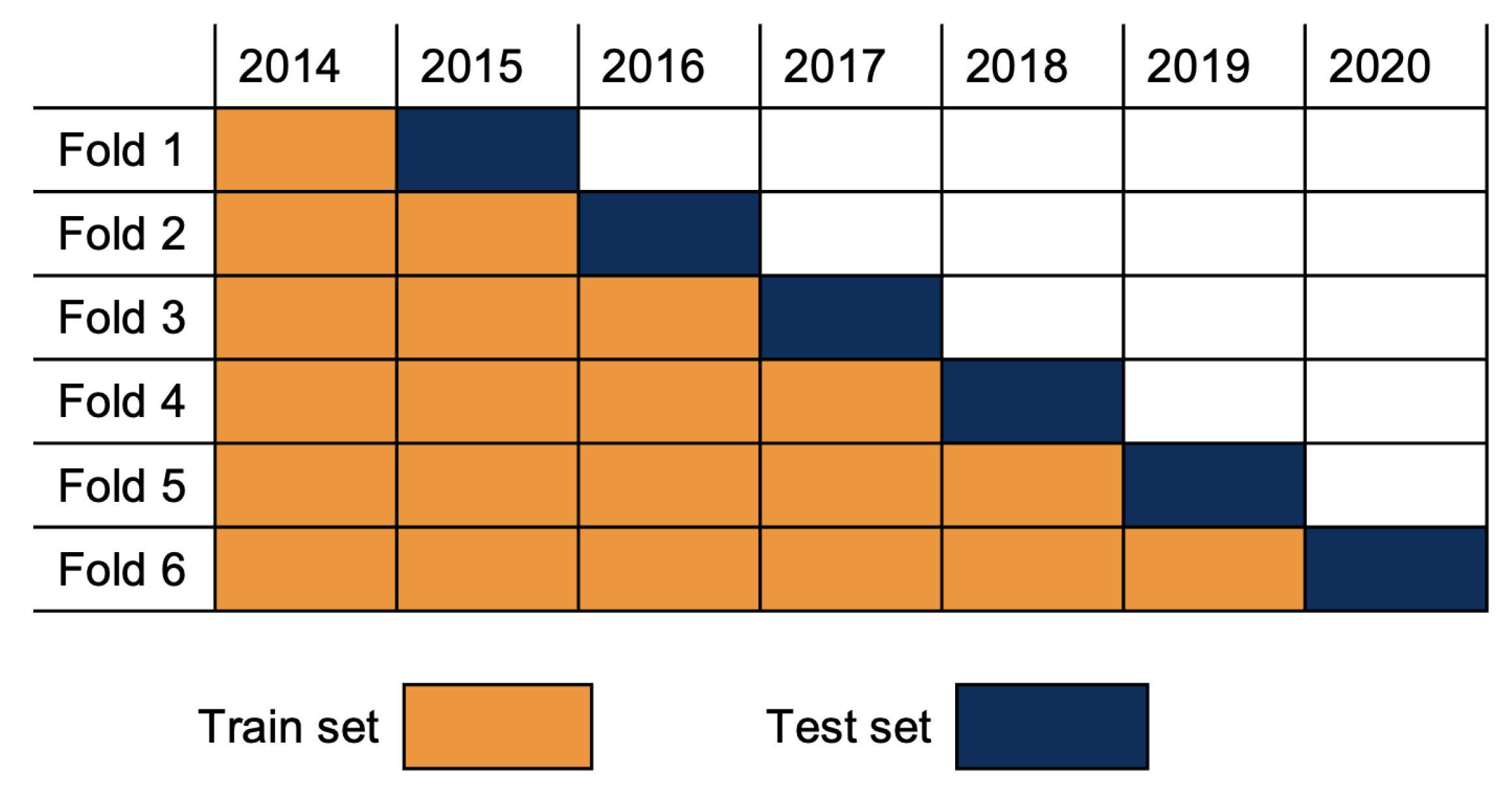

The aim of this research is to find the most suitable explainable machine learning model to forecast player performance with respect to predictive accuracy and methods for uncertainty quantification. To find this, several explainable supervised learning models are studied. By reviewing the literature, favorable models are identified based on their methods for uncertainty quantification. The predictive performance is assessed by implementing them to forecast the player quality (SciSkill) and the player value (Estimated Transfer Value) of football players one year ahead. The predictive quality is studied on both the general population of players and on subgroups of players that are more interesting for practitioners, such as young or high-value players. An overview of this process is visualized in Figure 1. These results will then be combined to find an answer to the research question.

The main contribution of this paper is the illustration of how extra value can be added to football player KPIs by forecasting future values in a practitioner-oriented way. The results of this study provide knowledge on what models are most suitable for application by practitioners, lowering the threshold for real-life implementation. By taking explainability, uncertainty quantification, and performance on important subpopulations of players into account, this research is presented such that the results can be used easily by practitioners. In this way, this paper also contributes to bridging the gap between academic research and practitioners.

This paper is organized as follows: The scientific background in the existing literature is reviewed in Section 2. After a discussion of the considered supervised models and their methods for uncertainty quantification, favorable models are identified based on their methods for uncertainty quantification. Then, the literature on data-driven player value and on quality quantification methods is covered, along with research on forecasting these values. The long-term forecasting of player development is then studied for the two prediction problems, for which the methods are described in Section 3. The results of the models in the prediction problems of the player quality and player value are presented in Section 4. The conclusions of this research are then summarized in Section 5, followed by a discussion of the implications and future directions in Section 6.

2. Background

First, the considered models are discussed along with an assessment of their methods for uncertainty quantification. Then, more background is given on existing models for football player quality and value.

2.1. Supervised Models

Supervised learning models provide a possibility to forecast the player performance indicators in the future. Based on input variables, they predict output variables, and they can be trained based on an existing data set for prediction problems. Such a data set consists of features describing characteristics about a data point and labels describing the actual values. In this research, the features are values that describe the situation of a player at a specific point in time, and the labels are the player’s performance indicator exactly one year later. By constructing these features and labels for multiple points through time and for different players, a data set can be created to train the models.
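As a minimal sketch of this construction, the following Python snippet pairs each observation of an indicator with the same player's value one year later. The record layout, the player identifiers, and the helper names `one_year_later` and `build_dataset` are illustrative assumptions and not the data pipeline used in this research.

```python
from datetime import date

# Hypothetical rating history: (player_id, observation date, indicator value).
history = [
    ("p1", date(2020, 7, 1), 50.0),
    ("p1", date(2021, 7, 1), 55.0),
    ("p1", date(2022, 7, 1), 58.0),
    ("p2", date(2021, 7, 1), 70.0),
    ("p2", date(2022, 7, 1), 68.0),
]

def one_year_later(d):
    """Return the same calendar day one year later (29 Feb maps to 28 Feb)."""
    try:
        return d.replace(year=d.year + 1)
    except ValueError:
        return d.replace(year=d.year + 1, day=28)

def build_dataset(records):
    """Pair each observation with the same player's value exactly one year later."""
    lookup = {(pid, day): value for pid, day, value in records}
    rows = []
    for pid, day, value in records:
        target = lookup.get((pid, one_year_later(day)))
        if target is not None:  # observations without a label are dropped
            rows.append({"player": pid, "date": day, "current": value, "label": target})
    return rows

dataset = build_dataset(history)
```

In a realistic setting, each row would carry many more features than the current indicator value; the single `current` field only keeps the sketch short.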

2.1.1. Considered Models

To align the research with practitioner needs, explainable models are studied in this paper. The considered models can be divided into linear models, tree-based models, and kNN-based models, and they are briefly described below.

The first linear model considered is ordinary least squares (OLS), sometimes called (multiple) linear regression, as described by Hastie et al. [27] (Section 3.2). This model assumes that the true relation is linear, $y = X\beta + \varepsilon$, where $\varepsilon$ is normally distributed noise. OLS minimizes the residual sum of squares $\lVert y - X\beta \rVert_2^2$ and has the lowest mean square error of all unbiased estimators. Lasso regression [28] is another linear model that introduces a bias in the model by minimizing the penalized residual sum of squares $\lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_1$. By introducing this bias, it reduces the variance, and it is possible to provide more accurate predictions. The third linear model is the linear mixed effects (LME) model, which assumes that $y_{ij} = x_{ij}^\top \beta + u_i + \varepsilon_{ij}$, where $u_i \sim \mathcal{N}(0, \sigma_u^2)$ [29]. The random variable $u_i$ is called the random effect of group $i$ and can be used to model the influence of a specific random attribute that should not influence the predictions, e.g., nationality. Because of their linearity, these models are explainable and suitable for applications.

The CART decision tree [27] (Section 9.2) is a model that recursively splits the feature space by taking splits of the form $x_j \le s$ such that it minimizes the sum of squares within each split. As described by Hastie et al. [27] (p. 312), decision trees generally suffer from a high variance. A random forest model solves this by repeatedly training decision trees on resampled data [27] (Chapter 15). The random forest model then predicts by taking the average prediction of these decision trees. Boosting is another way of improving decision trees. A boosting algorithm fits decision trees sequentially and reweights the data points for which the model performs poorly [27] (Chapter 10). XGBoost [30] combines several techniques, such as regularization and feature quantile estimates, to provide such a boosting algorithm. Generally, the splitting behavior in tree-based algorithms mimics the if–then reasoning of humans. Additionally, they can provide feature importances by considering how much a specific feature improves the predictions on the training set. This makes the tree-based models explainable and thus suitable models.
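The core step of a regression tree, choosing a threshold on one feature so that the sum of squares within the two resulting groups is minimal, can be sketched in a few lines of Python. The function names and the toy data (age against one-year rating growth) are made up for illustration; a full CART implementation would repeat this search over all features and recurse into both groups.

```python
def sum_of_squares(values):
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split(xs, ys):
    """Return (threshold, loss) for the split x <= s with the lowest within-split sum of squares."""
    best = (None, float("inf"))
    candidates = sorted(set(xs))
    # Candidate thresholds lie halfway between consecutive distinct feature values.
    for lo, hi in zip(candidates, candidates[1:]):
        s = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= s]
        right = [y for x, y in zip(xs, ys) if x > s]
        loss = sum_of_squares(left) + sum_of_squares(right)
        if loss < best[1]:
            best = (s, loss)
    return best

# Hypothetical data: young players tend to grow, older players tend to decline.
ages = [18, 19, 20, 28, 29, 30]
growth = [5.0, 4.0, 6.0, -1.0, 0.0, -2.0]
threshold, loss = best_split(ages, growth)
```

On this toy data, the chosen threshold separates the young from the older players, which is the kind of if–then rule a practitioner can read directly from the fitted tree.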

The last type of model considered in this paper is the k-nearest neighbor (kNN) model. Given the features, these models search for the most similar data points in the training data set and predict the associated $y$-value by taking the average over the k nearest neighbors [27] (Subsection 2.3.2). The closest neighbors can be weighted more heavily, as illustrated by Dudani [31]. The ReliefF algorithm [32] calculates feature importances by looking at the probability of having different values considering the neighbors of a data point. Additionally, the kNN models can provide examples of training data points to explain predictions. This gives an intuitive interpretation to practitioners, since they can see that a player’s forecast performance is based on previous players in similar situations, making the rationale behind the prediction clear and relatable. To interpret a prediction, practitioners can then investigate the players in similar situations, which can help them assess the individual prediction. However, similarity becomes an abstract concept for practitioners in higher dimensions [33] (pp. 108–109), and the kNN model suffers from the curse of dimensionality [27] (Section 2.5), which makes application with a large feature set infeasible. To mitigate this in the current paper, kNN models are applied to a feature set of limited dimensions.
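The kNN prediction described above can be illustrated with a short Python sketch. It assumes Euclidean distance on unscaled features and uses a linear distance weighting in the spirit of Dudani [31], where the closest neighbor receives weight 1 and the k-th neighbor weight 0; the function name, the features (age and current rating), and all numbers are hypothetical.

```python
import math

def knn_predict(train_x, train_y, query, k=3, weighted=True):
    """Predict by (optionally distance-weighted) averaging over the k nearest neighbors.

    Returns the prediction and the indices of the neighbors that were used.
    """
    dists = sorted((math.dist(x, query), i) for i, x in enumerate(train_x))[:k]
    d_first, d_last = dists[0][0], dists[-1][0]
    weights = []
    for d, _ in dists:
        if weighted and d_last > d_first:
            # Linear weights: 1 for the closest neighbor, 0 for the k-th neighbor.
            weights.append((d_last - d) / (d_last - d_first))
        else:
            weights.append(1.0)
    total = sum(weights)
    pred = sum(w * train_y[i] for w, (_, i) in zip(weights, dists)) / total
    return pred, [i for _, i in dists]

# Hypothetical training data: (age, current rating) -> rating one year later.
train_x = [(20, 50), (21, 52), (22, 51), (30, 70), (31, 72)]
train_y = [55.0, 56.0, 53.0, 68.0, 69.0]
prediction, neighbors = knn_predict(train_x, train_y, (21, 51), k=3)
```

The returned neighbor indices are what makes the model explainable: they point to the historical players on which the forecast is based. In practice, features would typically be scaled before computing distances.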

2.1.2. Uncertainty Quantification

To better align research with application in practice, the models in this study are also assessed on their methods for uncertainty quantification. Although quantile regression could be applied to obtain a form of uncertainty quantification, this requires an extra model. This makes quantile regression less useful for applications in practice. Therefore, models with uncertainty quantification methods that do not need to alter the model or results are favorable.

Linear regression has an underlying theory that gives prediction intervals as described by Neter et al. [34]. These prediction intervals are based on assumptions that are unlikely to hold for the prediction problems in this paper, such as the normality of errors. Nonetheless, they do give an indication of the uncertainty of the prediction. Similarly, the kNN models provide the neighbors, which are a group of similar data points. Uncertainty quantification can be obtained by taking the minimal and maximal values of the dependent variable within these neighbors if the number of neighbors k is of significant size. Lastly, the bagging procedure of the random forest model can be utilized to obtain uncertainty quantification for the predictions, as described by Wager et al. [35]. This method utilizes the different decision trees in the random forest to quantify the uncertainty in the prediction. From these properties, it is concluded that the linear regression, random forest, and kNN-based models are favorable with respect to uncertainty quantification.
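Two of these ideas can be sketched in Python under simplifying assumptions. The first function takes the minimum and maximum label among the neighbors of a kNN prediction. The second summarizes the spread of the individual tree predictions of a bagged ensemble; this is only a crude stand-in for illustration and is not the variance estimator of Wager et al. [35]. All names and numbers are hypothetical.

```python
import statistics

def knn_interval(neighbor_labels):
    """Range of the dependent variable among the k nearest neighbors."""
    return min(neighbor_labels), max(neighbor_labels)

def ensemble_spread(tree_predictions):
    """Mean and sample standard deviation of the per-tree predictions.

    A crude spread measure, not the estimator of Wager et al.
    """
    return statistics.mean(tree_predictions), statistics.stdev(tree_predictions)

# Labels of five hypothetical neighbors and predictions of five hypothetical trees.
low, high = knn_interval([56.0, 53.0, 55.0, 58.0, 54.0])
mean_pred, spread = ensemble_spread([54.0, 56.0, 55.0, 57.0, 53.0])
```

Neither quantity requires fitting an additional model, which is the property that makes these approaches attractive compared with quantile regression.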

2.2. Existing Indicators

In recent years, the increasing amount of available data in football has driven the introduction of methods to rate individual football players [36]. The performance of a professional football player has traditionally been determined via expert judgment based on video data and statistics describing the frequency of in-game events. Data-driven models have offered the possibility to reduce the bias in assessments and improve the consistency of the judgment of both player quality and player value, as discussed below. In this way, these models have provided new and consistent insights into the quality and monetary value of football players, which has aided in transfer decisions.

2.2.1. Player Performance

The creation and the comparison of models for player performance have revealed new challenges. Because teams can have different aims in football and can apply various tactics, there does not exist a ground truth for player performance [26]. A player can, for instance, be instructed to keep the ball in possession, which leads to the player performing fewer actions that might result in scoring a goal. Because of this lack of ground truth, various models exist that describe different aspects of the game.

Bottom-Up Ratings

Some models define the players’ quality by their actions, called bottom-up ratings [9]. Although action-specific models exist [5,6,7,8,37], methods to assess the quality of all types of actions have been introduced that generalize the action-specific models. These general models often define a ‘good’ action as one that increases the probability of scoring and decreases the probability of conceding a goal.

The VAEP model by Decroos et al. [13] calculates the probability of scoring given the last three actions, including in-game context. Because the involved machine learning techniques are considered a black-box model by practitioners, the authors in [14,15] introduced methods to make the VAEP model more accessible for practitioners. A comparable, more interpretable framework is the Expected Threat (xThreat) model by Rudd [10], which only considers the current situation, defined by the location of the ball-possessing player, to estimate the probabilities of the ball transitioning to somewhere else on the pitch. This is modeled by a Markov chain, which can be used to describe the probabilities of scoring before and after each action to find the quality of an action. Van Roy et al. [11] have compared the xThreat and VAEP models and have found that, although the xThreat model is more interpretable to practitioners, it can only take into account the position of an action and excludes contextual information such as the position of defenders from its model. This means that there is a trade-off between explainability and the inclusion of in-game context when choosing either VAEP or xThreat models. Van Arem and Bruinsma [12] have extended the xThreat model by including variables describing the defensive situation and height of the ball. This Extended xThreat model can take into account in-game context like the VAEP model, while maintaining explainability as an xThreat model.
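To make the Markov-chain idea concrete, the sketch below iterates a commonly used zone-based formulation, xT(z) = s_z g_z + (1 - s_z) Σ_z' T(z, z') xT(z'), on a toy pitch with three zones. This formulation, the function name, and all probabilities are assumptions chosen for illustration; they are not taken from the models in [10,11,12].

```python
def expected_threat(shoot_prob, goal_prob, move, n_iter=200):
    """Iterate xT(z) = s_z * g_z + (1 - s_z) * sum_z' T[z][z'] * xT(z').

    shoot_prob[z]: probability of shooting from zone z
    goal_prob[z]:  probability that a shot from zone z scores
    move[z][z']:   probability that a move from z ends in z' (rows may sum to
                   less than 1 to account for losing the ball)
    """
    n = len(shoot_prob)
    xt = [0.0] * n
    for _ in range(n_iter):
        xt = [
            shoot_prob[z] * goal_prob[z]
            + (1 - shoot_prob[z]) * sum(move[z][t] * xt[t] for t in range(n))
            for z in range(n)
        ]
    return xt

# Toy zones: 0 = defence, 1 = midfield, 2 = attack (all numbers are made up).
shoot = [0.0, 0.05, 0.30]
goal = [0.0, 0.02, 0.15]
move = [[0.5, 0.3, 0.0], [0.2, 0.4, 0.2], [0.0, 0.3, 0.4]]
xt = expected_threat(shoot, goal, move)

# The quality of an action is the change in scoring probability it causes,
# here for a pass from midfield into the attacking zone.
pass_value = xt[2] - xt[1]
```

Because the value of a zone depends only on the ball location, the result can be shown to practitioners as a simple heat map, which is the interpretability advantage discussed above.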

These bottom-up ratings describe the quality of a professional football player by assessing in-game on-the-ball actions. These on-the-ball actions are often offensive actions, and they are better at capturing the quality of attackers and attacking midfielders, which leads to a bias when these models are studied. This bias makes it harder to assess the quality of defensive players. Moreover, these models need more detailed event data describing in-game events, limiting the scale on which they can be applied. Consequently, the quality of football players in this study is not assessed with bottom-up ratings.

Top-Down Ratings

These problems of bottom-up ratings [9] can be reduced by using top-down models that describe player quality using the lineups and outcomes. Plus–minus ratings are an example of such ratings and were first used in ice hockey and basketball [18], and were later applied to football by Sæbø and Hvattum [17]. For plus–minus ratings, the game is partitioned into game segments that contain the same lineups, which correspond to the data points in the data set. In this data set, the result of the game segment, the goal difference, for example, is the dependent variable. Indicators describing whether a player was active in the segment are the independent variables. Linear regression is then applied to estimate the influence of players on the results. The coefficients of the regression describe the average impact of a player on the game result and give an indicator for player performance over the period of time covered by the data.
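The regression behind plus–minus ratings can be sketched as follows. Each segment is encoded with +1 for players of one team, -1 for players of the opposing team, and 0 for absent players, and the goal difference is regressed on these indicators. The small ridge penalty is an assumption added here to keep the normal equations solvable, since the indicator columns are collinear; the segment data, the encoding, and the function names are hypothetical.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def plus_minus(segments, players, ridge=0.1):
    """Regress segment goal difference on lineup indicators (+1 home, -1 away, 0 absent)."""
    rows = [[(p in home) - (p in away) for p in players] for home, away, _ in segments]
    y = [diff for _, _, diff in segments]
    n = len(players)
    # Normal equations (X^T X + ridge * I) beta = X^T y.
    xtx = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(n)]
    return dict(zip(players, solve(xtx, xty)))

# Hypothetical two-a-side segments: (home lineup, away lineup, home goal difference).
segments = [
    ({"A", "B"}, {"C", "D"}, 1),
    ({"A", "C"}, {"B", "D"}, 2),
    ({"A", "D"}, {"B", "C"}, 1),
    ({"B", "C"}, {"A", "D"}, -1),
]
ratings = plus_minus(segments, ["A", "B", "C", "D"])
```

In this toy data, every segment is won by the side that fields player A, so A receives the highest coefficient.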

Because substitutions are infrequent in football, a game does not have many game segments with different lineups. Moreover, football is a low-scoring sport. This creates a situation where a low number of segments with limited distinction in outcomes must be used to infer player quality. To deal with this, other quantities have been used as the dependent variable to describe the result of a segment, like the expected number of goals (xG), the expected number of points (xP), and the created VAEP values by Kharrat et al. [18] and Hvattum and Gelade [9]. Pantuso and Hvattum [19] additionally showed the potential of taking age, cards, and home advantage into account, and Hvattum [20] illustrated how separate defensive and offensive ratings can be obtained. Because putting a player in the lineup is an action that a coach performs, De Bacco et al. [21] adapted the plus–minus ratings using a causal model to better describe the influence of the selection of a player. These studies show how plus–minus ratings have been adapted to the application of rating players in football.

Whereas the plus–minus ratings describe the quality of a player using multiple historical games, there also exist models that determine the quality of a player after each game using the lineups and final score. Elo ratings are such ratings and were originally developed to evaluate performance in one-on-one sports. The concept was subsequently adapted to the game of football by Wolf et al. [22]. This adapted algorithm provides ratings for each individual football player and calculates the team rating via the average of players in a game weighted by the number of minutes played. The ratings are then used to predict the match outcome using a fixed logistic function, and after each game, the individual ratings are adjusted. If the outcome is better than predicted, the player rating is increased, and if the outcome is worse than expected, it is decreased. The authors also introduced an indicator for player impact to deal with the fact that this rating undervalues good players at below-average teams.
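The mechanics of such a team-adapted Elo rating can be sketched in Python. The logistic scale of 400, the update factor `k`, and the choice to scale each player's update by minutes played are conventions borrowed from classic Elo or assumed for illustration; they are not the parameters or the exact update rule of Wolf et al. [22].

```python
def team_rating(ratings, minutes):
    """Minutes-weighted average rating of the players used in a game."""
    total = sum(minutes.values())
    return sum(ratings[p] * m for p, m in minutes.items()) / total

def expected_score(team, opponent, scale=400.0):
    """Fixed logistic function mapping a rating difference to an expected result in [0, 1]."""
    return 1.0 / (1.0 + 10 ** ((opponent - team) / scale))

def update(ratings, minutes, opponent_rating, result, k=20.0):
    """Raise ratings if the result (1 win, 0.5 draw, 0 loss) beats the expectation, lower them otherwise."""
    surprise = result - expected_score(team_rating(ratings, minutes), opponent_rating)
    new = dict(ratings)
    for p, m in minutes.items():
        # Assumption: a player's update is proportional to the share of the game played.
        new[p] = ratings[p] + k * surprise * m / 90.0
    return new

# Hypothetical game: an evenly matched opponent is beaten.
ratings = {"A": 1500.0, "B": 1600.0}
minutes = {"A": 90, "B": 45}
opponent = team_rating(ratings, minutes)
updated = update(ratings, minutes, opponent, result=1.0)
```

Against an equally rated opponent the expected result is 0.5, so a win raises both ratings, with the larger increase for the player who was on the pitch for the full game.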

The SciSkill [23] is an industry-validated, more elaborate version of the Elo rating. Instead of only considering one player’s quality, it describes football players by combining a defensive rating and an offensive rating. These offensive and defensive scores are then combined to obtain one value, the SciSkill. For each game, the outcome is predicted using a model via an expectation-maximization algorithm. After the prediction, the SciSkill values are adjusted based on the difference with the actual game result, as with other Elo ratings. Compared to the Elo algorithm, which was implemented and validated by Wolf et al. [22], the SciSkill model extracts more detailed information from the matches and describes the player quality more elaborately.

2.2.2. Player Value

In contrast with player quality, there does exist a ground truth for the concept of player values. The value of a transfer fee is based on the value of the player for each of the involved clubs and historical transfers of similar players [38]. Thus, the historical values of these fees can be used to predict the transfer value of a player, albeit at the cost of some selection bias.

A well-known medium that describes the value of a player is Transfermarkt. This company uses crowd estimation to assign values to players [39]. These market values describe the general value of a player and do not take into account the temporary situation of a football player, like the current club and contract length. This means that they describe a different quantity than the expected value of a transfer fee. Nonetheless, these values are strongly correlated with the real transfer fees as shown by Herm et al. [39]. Therefore, both market values by Transfermarkt and transfer values are often used interchangeably when describing the monetary value of a football player.

The literature review by Franceschi et al. [24] showed that many studies have been performed to describe the monetary value of a football player based on data. The authors found that most of these studies used linear regression to find which variables have a significant linear dependence on the value of a football player. The review by Franceschi et al. [24] considered 111 trained models that were used in the scientific literature to investigate the transfer value of a football player. The vast majority (85%) of the models are based on ordinary least squares. The authors have also shown the importance of different variables in the considered models. For instance, they have found that age, the square of the age, and the number of matches played by a player are frequently studied variables. These variables are also most often found to be significant. Players frequently increase in value as they get better with experience that is gained over the years, but they also decrease in value as a player loses the potential to improve when getting older. Consequently, the influence of age is often measured with a quadratic term. Similarly, the number of games played can be expected to be an important variable because players gain experience by playing games, which makes them more valuable. Moreover, players who play a lot of games are often the better players on a team. This explains why these variables are important, as found by Franceschi et al. [24]. Their study also shows that almost no variables describing defensive behavior were considered when other researchers trained models to describe transfer values. As a consequence, the resulting models can be expected to describe the value of offensive players better than that of defensive players. In addition, the linear models in these studies are mostly trained to determine the influence of variables on the transfer value of a football player, and they are generally not trained and tested for out-of-sample prediction, which limits the application of predicting based on new, unseen data.

In contrast, Al-Asadi and Tasdemır [40] have trained multiple models to predict market values of football players with features from the video game FIFA. They have found that a random forest model gives improved predictions over linear methods. Steve Arrul et al. [41] have studied the application of artificial neural networks for the same problem, also considering features from the video game FIFA. They obtain loss values similar to those of the random forest model of Al-Asadi and Tasdemır [40]. The research by Behravan and Razavi [42] introduces a methodology to train a support vector regression model via particle swarm optimization for the prediction of market values. Although the data set is somewhat similar to the studies above, this model attains worse loss values. In the study of Yang et al. [1], random forests, GAMs, and QAMs are applied to predict the transfer fees of players based on variables describing the player. The authors have inferred from their random forest model that the expenditure of the buying club and the income of the selling club are important features in predicting the transfer fee, as well as the age and the remaining contract duration. This research additionally illustrates how GAMs and QAMs can be used to investigate the dependency between the player transfer value and the given features. The QAM models show that this relation varies for different quantiles, indicating the need to study the influence of models on different groups of players. On the other hand, the GAMs show that the relationship between the transfer values and the features is often nonlinear. A study with extensive types of player performance metrics as features has been performed by McHale and Holmes [2], in which linear regression, linear mixed effects, and XGBoost models have been trained. These models not only include statistics such as the number of minutes played, height, and position, but also plus–minus ratings based on xG, expert ratings from the video game FIFA, and GIM ratings, which are similar to VAEP ratings. The results show that the best predictive performance is attained by the XGBoost model, although the linear mixed effects model with the buying and selling clubs as random effects also provides good results. Their results indicate that their model outperforms Transfermarkt market values when predicting the transfer fees on average, although the market values are a better predictor for transfers of more than EUR 20 million. These studies show how supervised learning can be used to obtain models that predict the value of a football player. They show that nonlinear methods generally predict more accurately and that the patterns can differ for different groups of players.

2.3. Predicting Future Values

Although the studies discussed above are concerned with the quantification of the quality and monetary value of football players at the present moment, only limited work has been conducted on the future development of player performance. Apostolou and Tjortjis [43] have conducted a small-scale study with the aim of predicting the number of future goals of the two football players Lionel Messi and Luis Suárez using a random forest, logistic regression, a multi-layer perceptron classifier, and a linear support vector classifier. Pantzalis and Tjortjis [44] have predicted the expert ratings in the next season of 59 center-backs in the English Premier League based on one season of player attributes from a popular football manager simulation game. Their method uses a linear regression model to describe the in-sample patterns. Giannakoulas et al. [45] have trained linear regression, random forest, and multi-layer perceptron models to predict the number of goals of a football player in a season before the start of the corresponding season. Their data set entails around 800 football players. Similarly, the models by Markopoulou et al. [46] have been trained to predict the number of goals by looking at the creation of different models per competition. This study on 424 football players shows that the best results are often obtained using XGBoost models for this prediction problem and that making different models for different competitions might be beneficial.

Barron et al. [47] have tried to predict the tier within the English first three leagues in which a football player would play next season as an indicator of player quality. Three artificial neural networks are trained to predict in which league a player would play with the data of 966 football players. Their models are only able to recognize the differences between players in the lowest and highest tiers (League One and the English Premier League).

Little literature exists about the prediction of the future transfer values of football players. Baouan et al. [48] have applied lasso regression and a random forest model to identify important features for the development of around 22,000 football players. They have trained these supervised models for players of different positions to predict a player’s transfer value two years in the future based on performance statistics. The feature importances of the models show, for instance, that the average market value of a league is an important feature for the future values of players in that league. Although cross-validation is performed for the hyperparameter tuning, this study focuses on finding in-sample patterns.

Our research treats forecasting of both player quality and transfer value. The current paper builds on the existing literature on forecasting player performance by studying the long-term forecasting of a model-based player quality indicator on a larger data set. Additionally, this research avoids the bias in the current literature, which describes offensive players better than defensive players, by using a more general top-down rating. Our research contributes to the existing knowledge of forecasting the monetary value because the models are trained to perform out-of-sample prediction, which makes it possible to apply them to unseen situations. Moreover, the combination of forecasting the development of both player quality and transfer value gives a comprehensive summary of the most important aspects in transfer decisions. In this way, this paper builds upon the existing literature by forecasting the development of model-based indicators for player quality and value on a significant data set in a predictive setting.

5. Conclusions

This paper aims to find the most suitable supervised learning model for forecasting the development of player performance indicators one year ahead in a practitioner-oriented way. Through the literature, it was found that linear regression, random forest, and kNN-based models are favorable based on their uncertainty quantification methods.

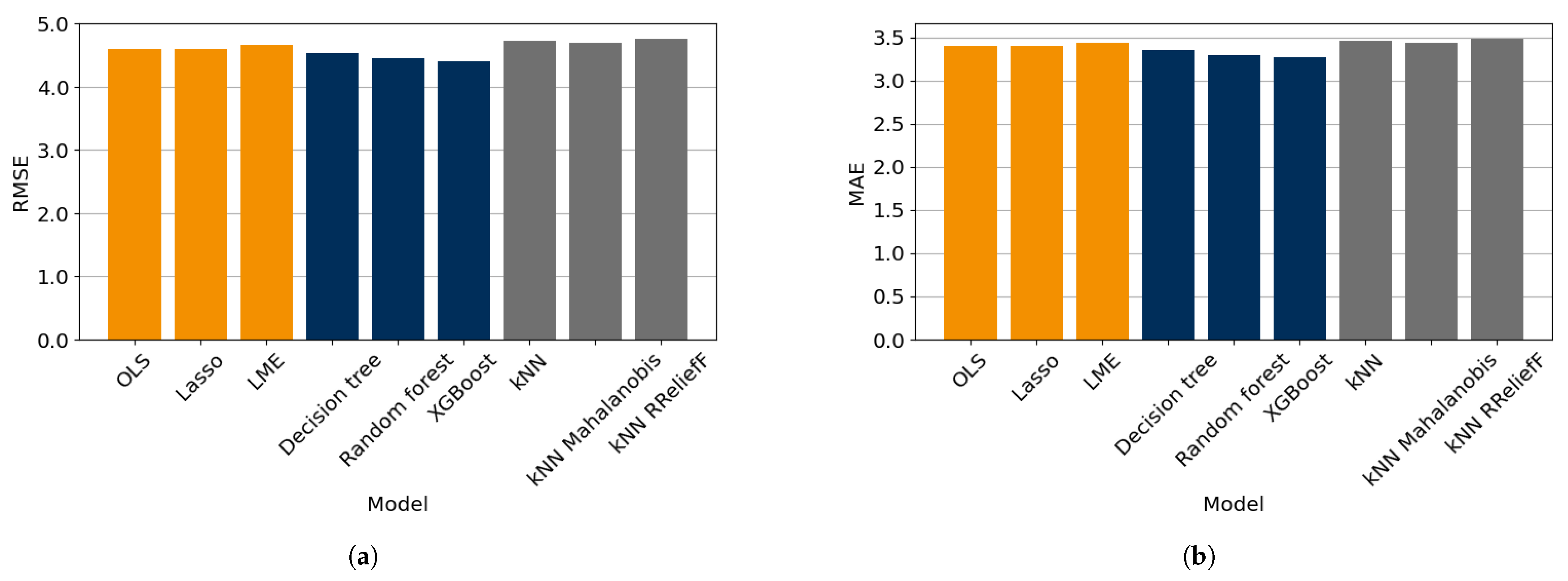

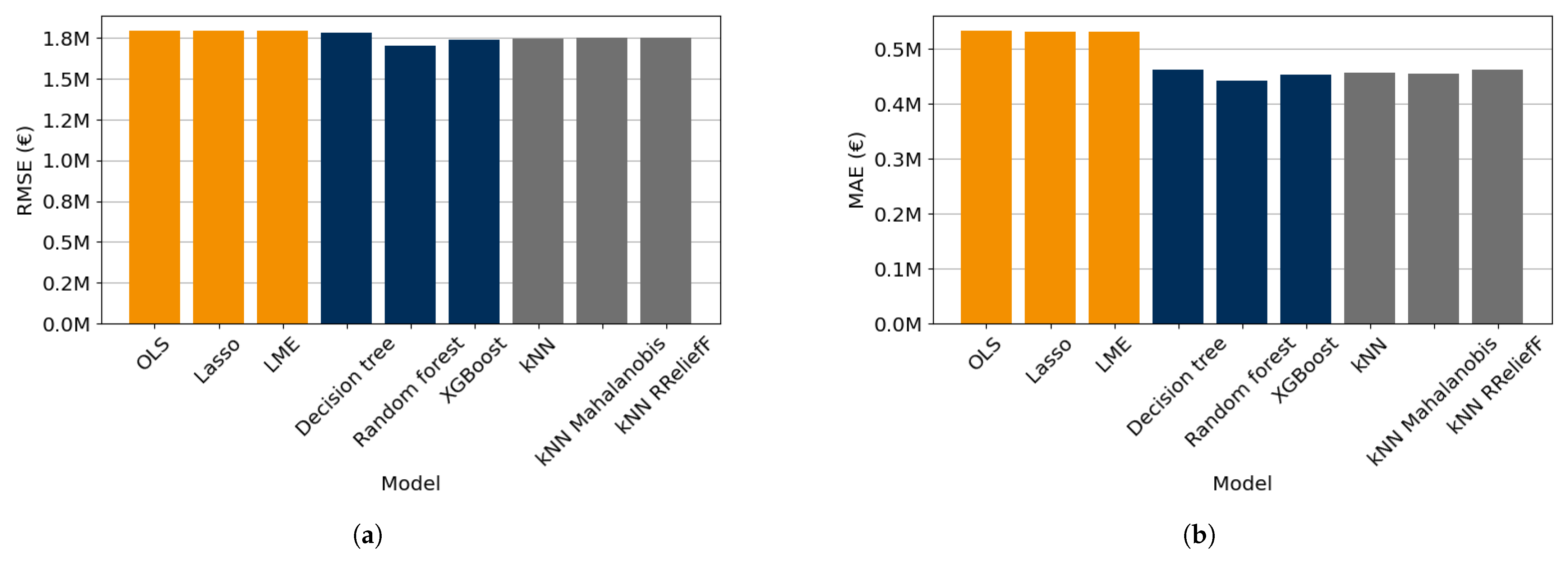

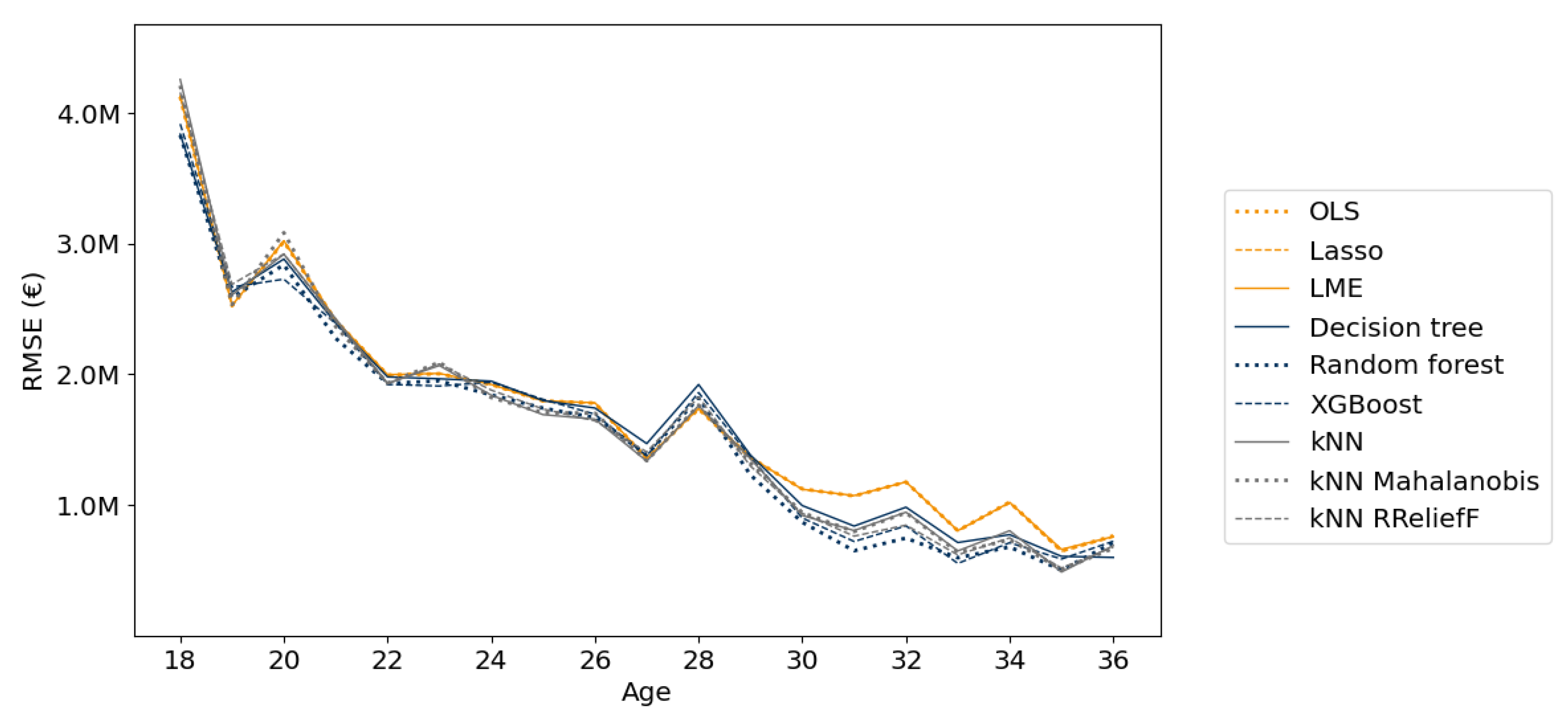

Two prediction problems were considered to study the predictive performance of linear, tree-based, and kNN-based models. The results show that the XGBoost model attained the lowest loss values for predicting the development of the player quality with this data set, while the random forest also had relatively low loss values. For the prediction of the player value, it was found that the random forest had the lowest loss values. The XGBoost and kNN-based models also had relatively accurate predictions according to some of the studied losses.

Because the random forest had a good predictive accuracy on these data sets and it provides methods for uncertainty quantification, it seems to be a suitable model for predicting the development of player quality and value in football. Nonetheless, it is important to note that random forests can be sensitive to data imbalance, which regularly occurs in elite football. Additionally, given the large number of features needed to predict the development of football players, random forests may risk overfitting if not properly tuned and validated. These limitations should be considered when applying the model in practice, and appropriate techniques, like resampling or feature selection, may be necessary to mitigate them.

Taking these considerations into account, it is concluded that the random forest is the most suitable explainable machine learning model to predict the development of player performance indicators one year ahead for the above prediction problems.
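The idea of obtaining uncertainty estimates from a bagged ensemble can be illustrated with a minimal sketch. It is not the implementation used in this paper: it replaces the random forest with bagged one-split regression stumps on a single feature, and the ages, indicator changes, and function names (`fit_stump`, `bagged_forecast`) are hypothetical and chosen only for illustration. Each bootstrap learner gives its own forecast, and the spread of these forecasts serves as a rough interval around the ensemble mean.

```python
import random
import statistics

# Toy data (made up for illustration): player age and the one-year change
# in a quality indicator.
AGES = [18, 19, 20, 21, 22, 24, 26, 28, 30, 32, 34]
DELTAS = [6.0, 5.5, 4.0, 3.5, 2.0, 1.0, 0.0, -0.5, -2.0, -3.0, -4.5]


def fit_stump(xs, ys):
    """Fit a one-split regression stump by least squares on one feature."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # drop the max so both sides are non-empty
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_mean, right_mean = statistics.fmean(left), statistics.fmean(right)
        sse = sum((y - left_mean) ** 2 for y in left)
        sse += sum((y - right_mean) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:  # all x identical in this bootstrap sample: predict the mean
        mean = statistics.fmean(ys)
        return (float("inf"), mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def bagged_forecast(xs, ys, x_new, n_learners=200, level=0.9, seed=0):
    """Return (mean, lower, upper) from the forecasts of bootstrap learners."""
    rng = random.Random(seed)
    n = len(xs)
    preds = []
    for _ in range(n_learners):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample with replacement
        stump = fit_stump([xs[i] for i in idx], [ys[i] for i in idx])
        preds.append(predict_stump(stump, x_new))
    preds.sort()
    lower = preds[int((1 - level) / 2 * (n_learners - 1))]
    upper = preds[int((1 + level) / 2 * (n_learners - 1))]
    return statistics.fmean(preds), lower, upper


if __name__ == "__main__":
    for age in (19, 33):
        mean, lower, upper = bagged_forecast(AGES, DELTAS, age)
        print(f"age {age}: forecast {mean:.2f}, interval [{lower:.2f}, {upper:.2f}]")
```

The interval here reflects only the variability between bootstrap learners, so it should be read as an indication of model uncertainty rather than as a calibrated prediction interval.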

6. Implications and Future Directions

By addressing the two prediction problems, two random forest models have been obtained that can predict the development of both the quality and the monetary value of football players. These random forest models can be used to aid in transfer decisions when combined with the critical view of a domain expert. Suppose the manager of a football club has a long-term interest in a football player. If the model predicting the development of player quality indicates that the player will grow in quality, the player becomes more interesting to the club in the long term. If the prediction of the transfer value indicates an increase in the transfer value, it might be better to buy the player sooner rather than later. The two models can also be combined to give extra insights. Suppose a manager currently has a veteran player who is predicted to start decreasing in quality and can buy a young player who is predicted to develop into a first-team player within the next year. Assume that the transfer value of the young player is predicted to increase only slightly. In this case, after a critical evaluation by the manager of the possible influences of noise and the expected transfer market development, it might be better to buy the young player one year later and then sell the veteran player. This would be beneficial because the young player will only have a slightly higher transfer fee, and the veteran player will still offer higher quality in the meantime. These examples illustrate the added value of our models for predicting player development and for improving data-informed decision-making.

The models from this paper can also be used to complement existing methods in the literature. Pantuso and Hvattum [19] introduced a method to optimize transfer decisions based on indicators that describe a player’s quality and transfer value and their future values. Their methodology requires the ‘future’ values of player quality and transfer value to be known. To solve this, they consider transfer situations from over a year prior so that the values of one year later are already known. Consequently, their model can only be applied to historical situations. By using our models to predict the future values of quality and transfer value, it is possible to obtain these inputs and optimize transfer decisions in a current-time application. This makes it possible to give data-driven advice on transfer decisions to optimize the squad in a real-life transfer period.

When applying the models from this research at football clubs, possible shortcomings should be taken into consideration. The models will most likely capture patterns of development that are typical for the population on which they are trained. They will perform worse on atypical players, which makes it harder to predict, e.g., late bloomers. Young or injury-prone players can be considered vulnerable players who are atypical for the general population, and the predictions of the models on such players should thus be interpreted with extra care. This highlights the value of explainable modeling, which can be complemented with interpretation techniques such as the Shapley values of Lundberg and Lee [54].

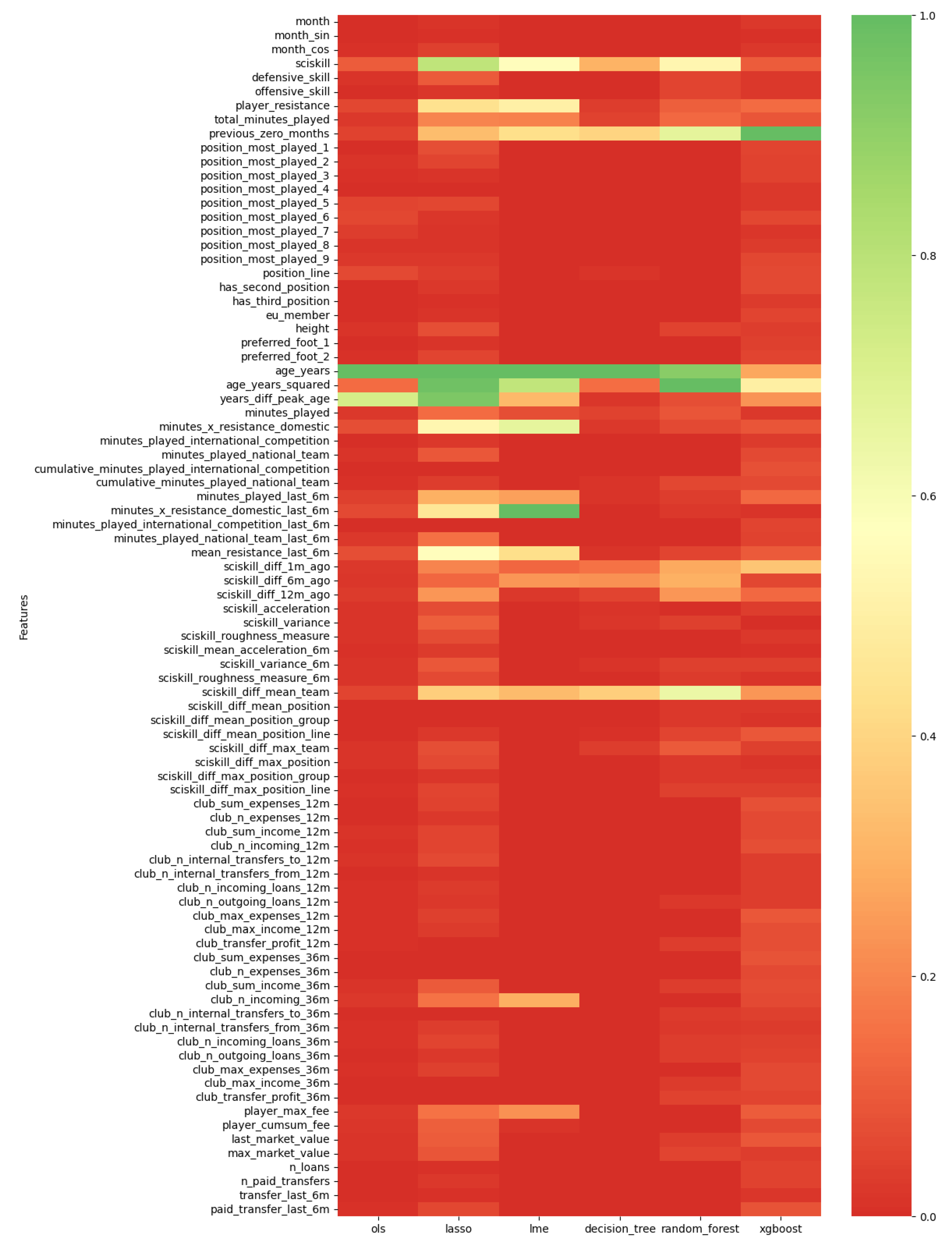

Another advantage of the explainable models in this research is that they provide methods to gain insight into important factors for prediction. The models for the prediction problems show that this can be achieved via feature importance, similar to the approach of Baouan et al. [48]. Because our models predict the difference between the current performance indicators and those of one year later, our research can also show the influence of the indicator itself. Our results show that the current value of the indicator and its historical values are the most important features, which adds to the knowledge obtained by Baouan et al. [48] about which features are important. Additionally, time-dependent variables such as the period in the year and the months without games have been found to be important features in forecasting player development. This indicates that the time series of the indicators themselves contains important information on the development of football players, although our findings also suggest that contextual information improves the predictive performance of the models.
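To make the structure of this prediction target concrete, the following minimal sketch builds training rows from a monthly indicator series of a single player. It is an illustration under assumptions, not the feature pipeline of this paper: the record layout (`MonthlyRecord`), the lag choices of 6 and 12 months, and the feature names are hypothetical, and the series is assumed to contain one record per consecutive month. The target is the indicator value one year later minus the current value, and the features include the current value, historical values, the month of the year, and the number of consecutive months without games.

```python
from typing import NamedTuple


class MonthlyRecord(NamedTuple):
    year: int
    month: int           # 1..12, used as the "period in the year" feature
    indicator: float     # quality or value indicator at the end of the month
    games_played: int


def months_without_games(records, t):
    """Count consecutive months without games, ending at index t."""
    count = 0
    while t - count >= 0 and records[t - count].games_played == 0:
        count += 1
    return count


def build_rows(records, horizon=12, lags=(6, 12)):
    """Return (features, target) pairs; target = indicator[t + horizon] - indicator[t]."""
    rows = []
    for t in range(max(lags), len(records) - horizon):
        now = records[t]
        features = {
            "indicator_now": now.indicator,
            "month_of_year": now.month,
            "months_without_games": months_without_games(records, t),
        }
        for lag in lags:
            features[f"indicator_lag_{lag}"] = records[t - lag].indicator
        target = records[t + horizon].indicator - now.indicator
        rows.append((features, target))
    return rows


# Toy series (made up): the indicator rises by 0.5 per month, and the player
# has no games in the months with index 13 and 14.
RECORDS = [
    MonthlyRecord(2020 + i // 12, i % 12 + 1, 50 + 0.5 * i, 0 if i in (13, 14) else 4)
    for i in range(30)
]

if __name__ == "__main__":
    for features, target in build_rows(RECORDS):
        print(features, "->", target)
```

Because the current indicator value enters both the features and the target, a feature importance analysis on rows of this form can also reveal how strongly the level of the indicator itself drives the predicted development.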

In short, this paper studied explainable supervised models to predict player development via performance indicators. Two prediction problems were studied in which explainable models were trained to predict the development of both player quality and player value. It was found that the random forest model is the most suitable model for forecasting player development, because of the accurate predictions for both performance indicators, combined with the method for uncertainty quantification arising from the bagging procedure.