A Counterfactual Fine-Grained Aircraft Classification Network for Remote Sensing Images Based on Normalized Coordinate Attention

Abstract

1. Introduction

- 1.

- A novel attention mechanism is proposed: this design performs spatial position encoding and integrates a coordinate normalization mechanism to enhance the weights of key local features in the image, suppress interference from non-essential subject features, and accurately locate discriminative regions within the image.

- 2.

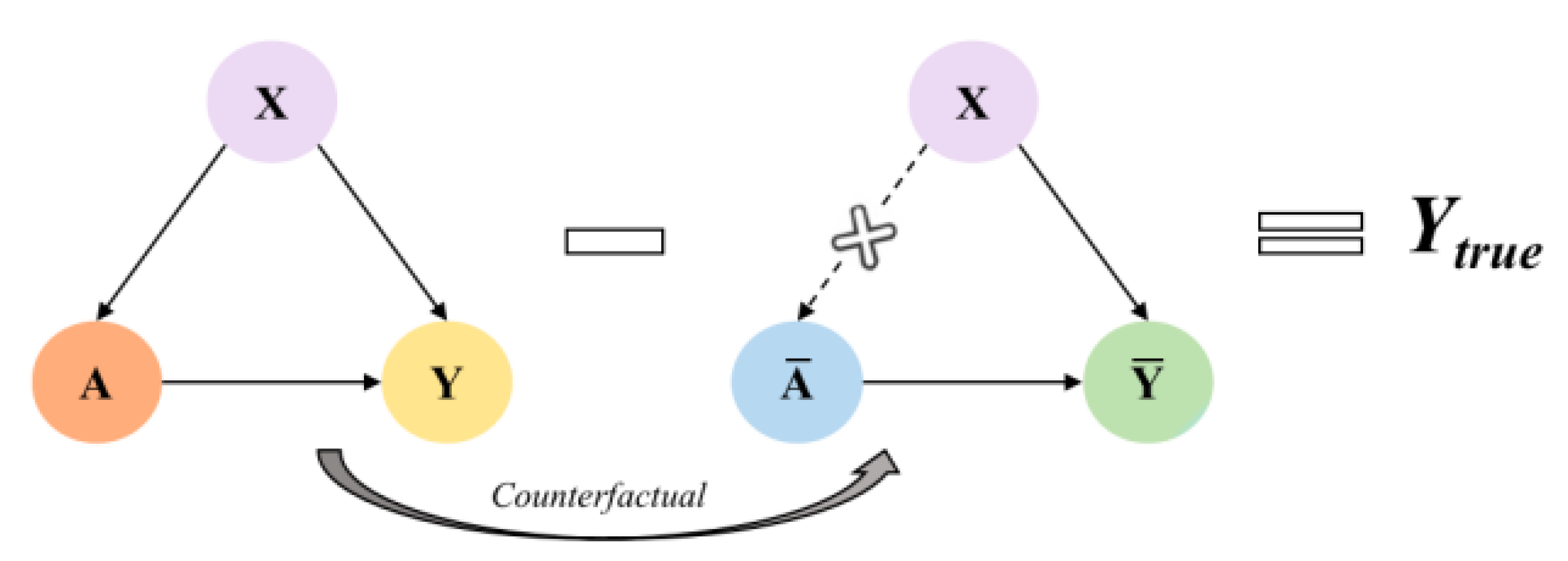

- A counterfactual intervention method based on causal inference is introduced to investigate the intrinsic relationships between the generated attention maps and the predicted classes, which not only enhanced the model’s interpretability but also significantly improved its robustness in complex scenarios such as annotation errors and domain changes.

- 3.

- The proposed network demonstrates strong classification performance and interpretability on three challenging fine-grained aircraft classification datasets, showing great potential for applications in both civil and military domains.

2. Related Works

2.1. Fine-Grained Image Classification

2.2. Attention Mechanism

2.3. Explainable Analysis

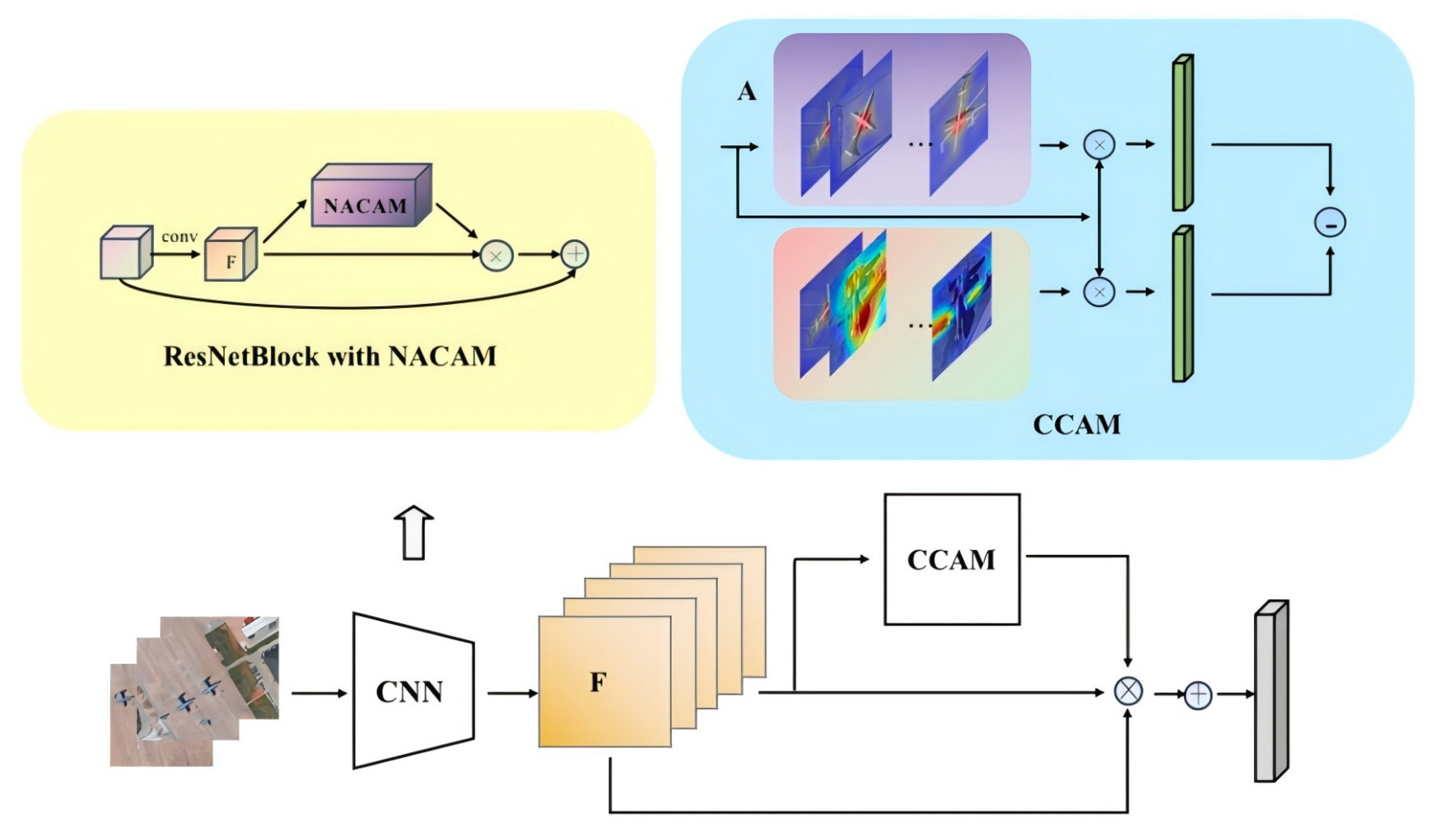

3. Materials and Methods

- 1.

- Feature Extraction Module: We employ ResNet and MobileNet as the backbone networks for feature extraction. The core idea of ResNet is to build deep networks using residual blocks, which effectively mitigates the vanishing gradient problem. MobileNet, on the other hand, is a lightweight convolutional neural network characterized by its efficient and compact design. Both networks can extract high-level feature representations from input images, capturing advanced semantic information.

- 2.

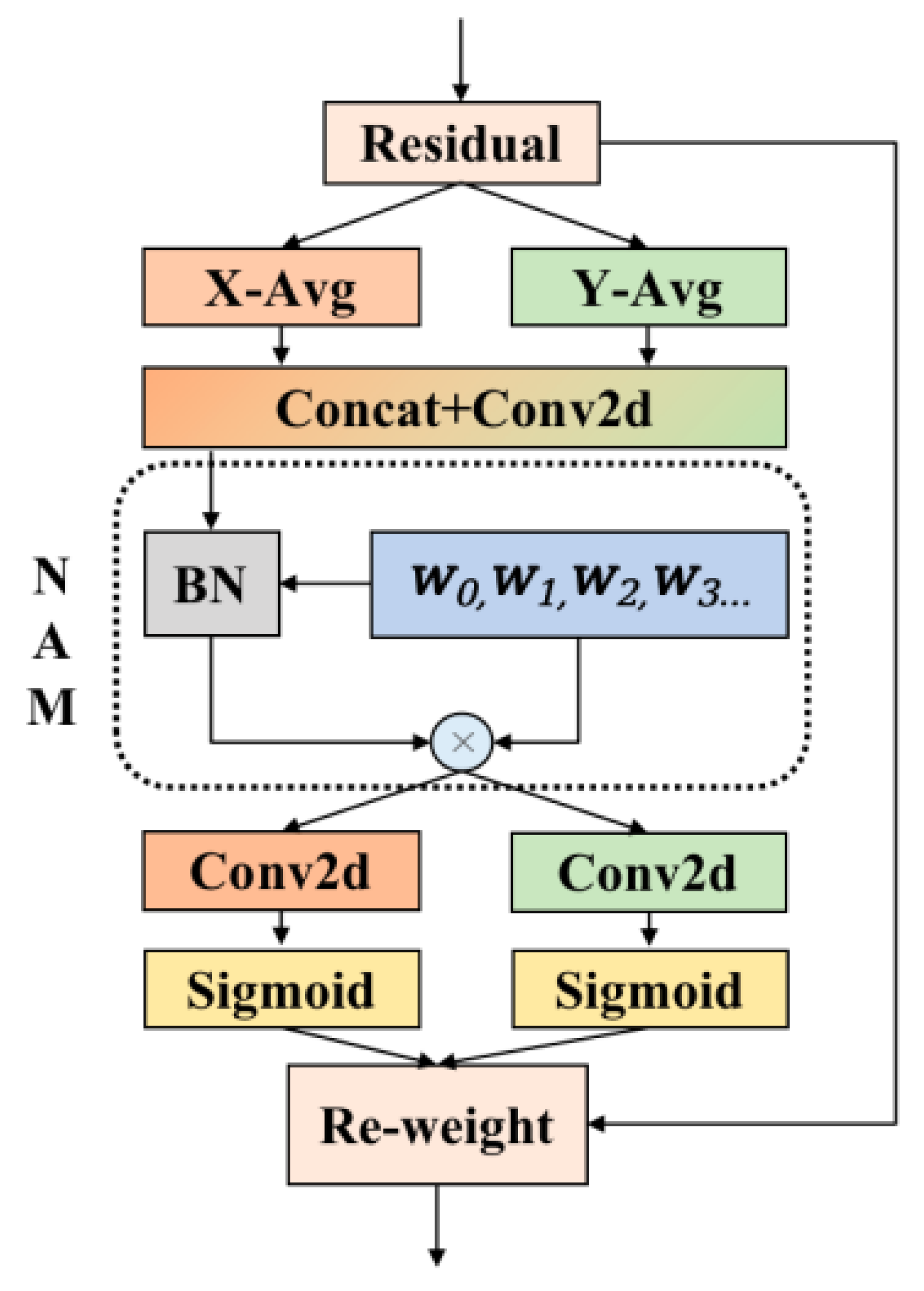

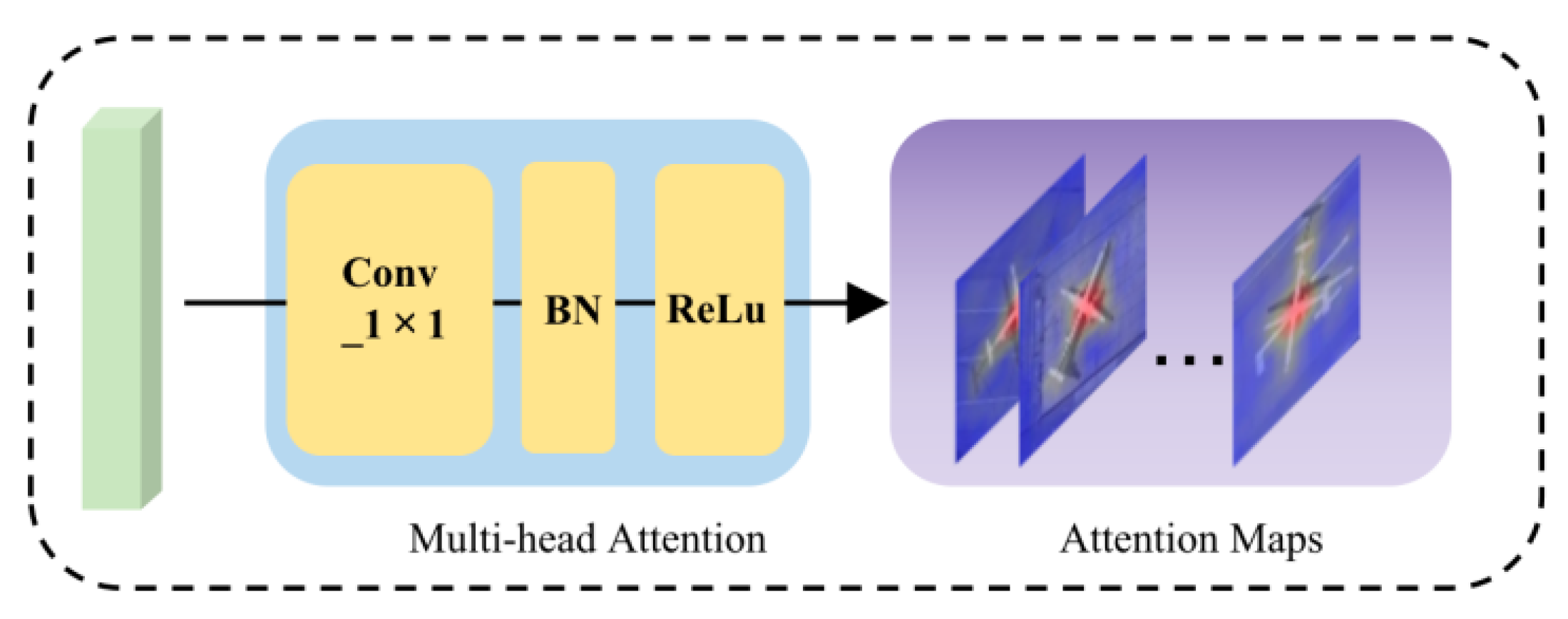

- Normalized Coordinate Attention Module: This module emphasizes positional information by incorporating it into feature judgment, allowing the model to recognize the relative positions of different regions within the input data. This helps the model better understand the structure of the image or sequence and models the global context through global similarity, thus accelerating convergence and enabling more accurate classification decisions.

- 3.

- Causal Discrepancy Learning Module: By comparing the regions the model focuses on with the actual classification results, the module learns which areas are critical for correct classification, helping the model identify truly useful features and reduce reliance on noisy ones. The causal discrepancy learning module improves both the performance and interpretability of the model, allowing it to better understand the reasons behind its decisions.
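The normalized coordinate attention module described in the list above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes the module combines coordinate attention (direction-wise pooling followed by height and width gates) with a normalization-based channel gate, and every weight matrix, the `gamma` scale factors, and the function name are hypothetical placeholders filled with random values.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def normalized_coordinate_attention(x, w_shared, w_h, w_w, gamma, eps=1e-5):
    """Re-weight a feature map with coordinate gates and a normalized channel gate.

    x        : (C, H, W) feature map from the backbone
    w_shared : (C_mid, C) weights of a shared 1x1 transform (hypothetical)
    w_h, w_w : (C, C_mid) weights producing the height / width gates (hypothetical)
    gamma    : (C,) per-channel normalization scale factors (hypothetical)
    """
    c, h, w = x.shape

    # Position encoding: pool along one spatial axis at a time so that each
    # descriptor keeps the coordinate along the other axis.
    pooled_h = x.mean(axis=2)  # (C, H)
    pooled_w = x.mean(axis=1)  # (C, W)

    # Shared 1x1 transform with ReLU over the concatenated descriptors.
    y = np.maximum(w_shared @ np.concatenate([pooled_h, pooled_w], axis=1), 0.0)
    a_h = sigmoid(w_h @ y[:, :h])  # (C, H) gate along height
    a_w = sigmoid(w_w @ y[:, h:])  # (C, W) gate along width

    # Assumed normalization step: standardize each channel, then weight it by
    # its share of the total scale factor.
    mu = x.mean(axis=(1, 2), keepdims=True)
    sd = x.std(axis=(1, 2), keepdims=True)
    x_norm = gamma[:, None, None] * (x - mu) / (sd + eps)
    share = np.abs(gamma) / np.abs(gamma).sum()
    a_c = sigmoid(share[:, None, None] * x_norm)  # (C, H, W) channel gate

    # Every gate lies between 0 and 1, so the output is a re-weighted copy of x.
    return x * a_h[:, :, None] * a_w[:, None, :] * a_c


rng = np.random.default_rng(0)
C, C_mid, H, W = 8, 4, 6, 5
x = rng.normal(size=(C, H, W))
out = normalized_coordinate_attention(
    x,
    w_shared=rng.normal(size=(C_mid, C)),
    w_h=rng.normal(size=(C, C_mid)),
    w_w=rng.normal(size=(C, C_mid)),
    gamma=rng.uniform(0.5, 1.5, size=C),
)
print(out.shape)  # (8, 6, 5)
```

Because the height and width gates are computed separately, a response can be strengthened at a specific row and column, which is what lets this family of attention modules localize discriminative parts rather than only re-weighting whole channels.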

3.1. Network Architecture

3.2. Normalized Coordinate Attention Module

3.3. Counterfactual Learning CCAM Module
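As a rough sketch of the counterfactual idea behind this module, the example below compares the prediction obtained with the learned attention maps against the prediction obtained after replacing them with a counterfactual attention, and supervises the difference. It follows the general recipe of counterfactual attention learning and is an assumption about how such a module can be built, not the authors' code; the attention pooling, the linear classifier `w_cls`, and all function names are hypothetical.

```python
import numpy as np


def cross_entropy(logits, label):
    # Numerically stable negative log-likelihood of the true class.
    z = logits - logits.max()
    log_probs = z - np.log(np.exp(z).sum())
    return -log_probs[label]


def attended_logits(features, attention, w_cls):
    """Pool features under each attention map, then classify.

    features  : (C, H, W) backbone features
    attention : (M, H, W) attention maps
    w_cls     : (K, M * C) linear classifier weights (hypothetical)
    """
    h, w = features.shape[1:]
    pooled = np.einsum("mhw,chw->mc", attention, features) / (h * w)
    return w_cls @ pooled.reshape(-1)


def counterfactual_loss(features, attention, w_cls, label, cf_attention):
    # Factual prediction: uses the learned attention maps.
    y_fact = attended_logits(features, attention, w_cls)
    # Counterfactual prediction: same features, attention replaced by an intervention.
    y_cf = attended_logits(features, cf_attention, w_cls)
    # Effect of the learned attention on the prediction.
    y_effect = y_fact - y_cf
    # Supervise both the factual prediction and the effect of the attention.
    loss = cross_entropy(y_fact, label) + cross_entropy(y_effect, label)
    return loss, y_effect


rng = np.random.default_rng(0)
C, M, K, H, W = 8, 4, 3, 6, 5
features = rng.normal(size=(C, H, W))
attention = rng.uniform(size=(M, H, W))
cf_attention = rng.uniform(size=(M, H, W))  # random attention as the intervention
w_cls = rng.normal(size=(K, M * C))
loss, effect = counterfactual_loss(features, attention, w_cls, 1, cf_attention)
print(effect.shape)  # (3,)
```

If the learned attention carries no more information than the counterfactual one, the effect vector is close to zero and the second loss term stays high, which pushes the attention toward regions that actually change the prediction.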

3.4. Loss Function

4. Results

4.1. Experimental Setup

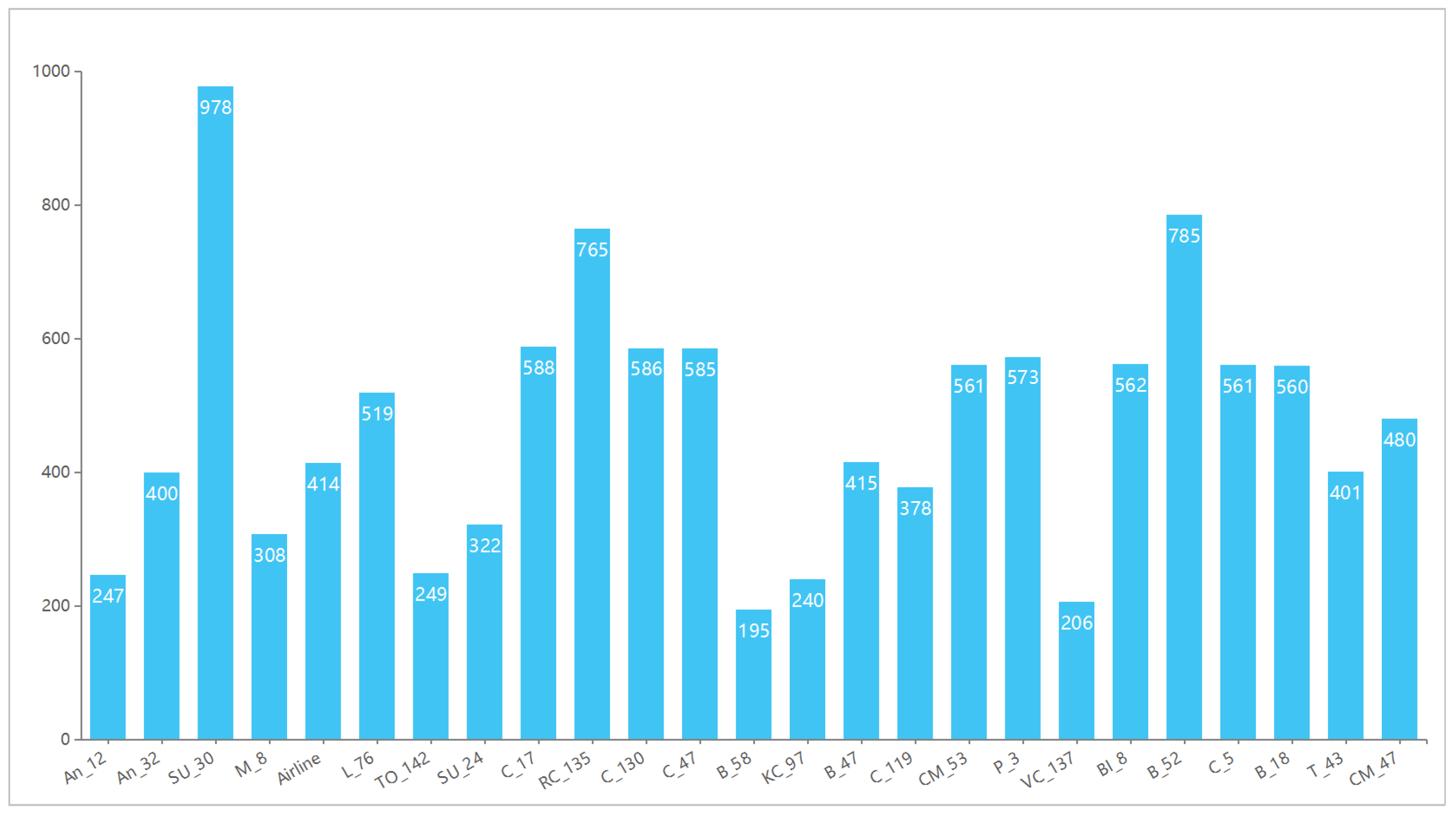

4.2. Dataset Descriptions

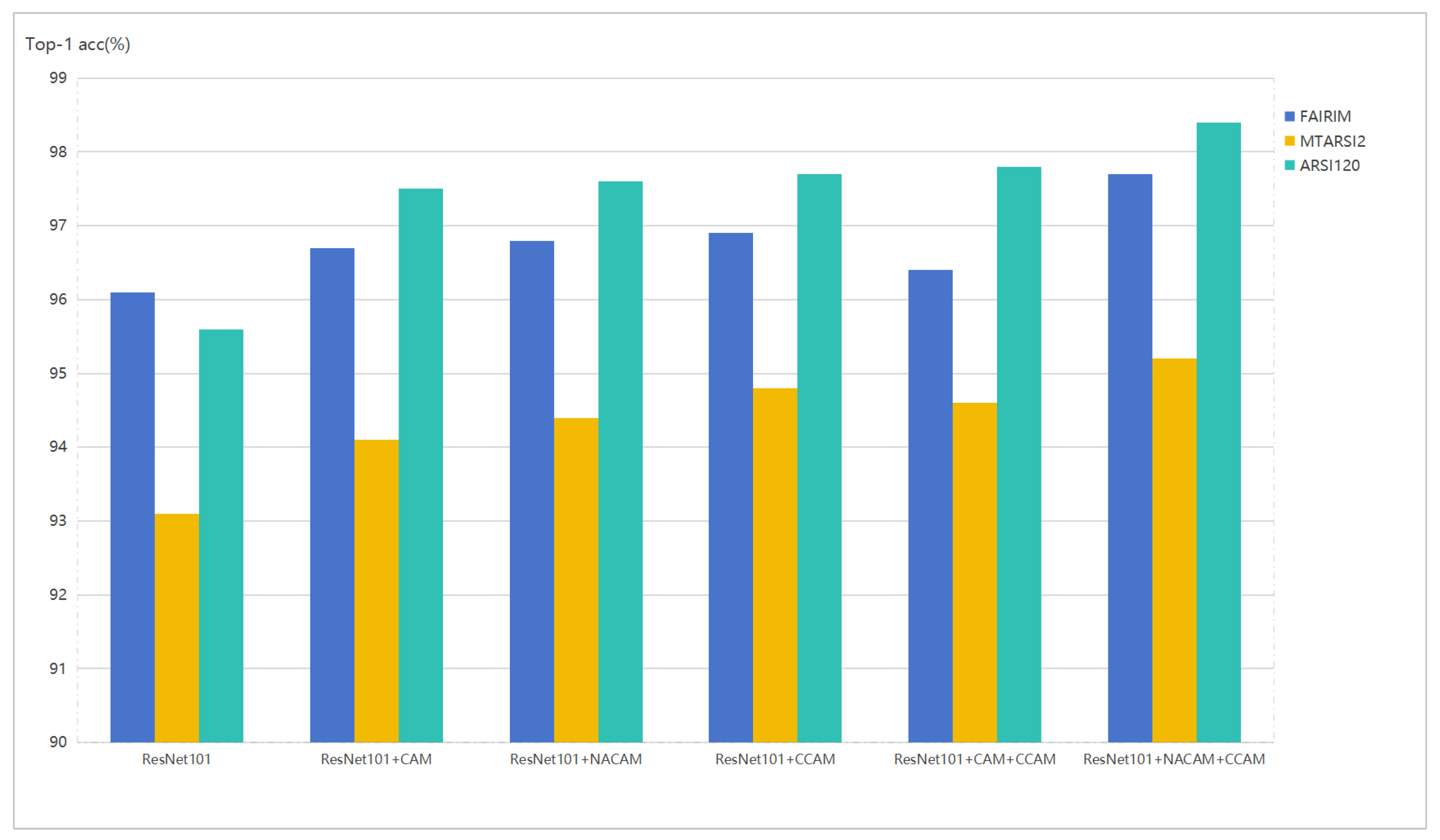

4.3. Ablation Study

4.4. Comparison with Other Methods

- ResNet101: This model introduces residual connections to mitigate the vanishing gradient problem, making the training of deep networks more stable.

- MobileNetV2: This lightweight convolutional neural network architecture employs strategies like depthwise separable convolutions to reduce model parameters and computational costs while maintaining good performance.

- Vision Transformer: As a currently popular backbone model, it utilizes self-attention to capture both global and local relationships.

- WS-DAN: This model adopts weakly supervised data augmentation guided by attention mechanisms for fine-grained classification.

- API-Net: This model is a simple yet effective attentive pairwise interaction network that progressively recognizes a pair of fine-grained images through mutual interaction, achieving 90% accuracy on the CUB200-2011 dataset.

- FFVT: Applying the newer Transformer framework for feature fusion, this visual transformer compensates for local, low-level, and intermediate information by aggregating important tokens from each transformer layer, reaching 91.6% accuracy on the CUB200-2011 dataset.

- HERBS: Comprising two modules—a high-temperature refinement module and a background suppression module—this model extracts discriminative features while suppressing background noise, achieving 93% accuracy on the CUB200-2011 dataset.
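To illustrate why the depthwise separable convolutions mentioned for MobileNetV2 reduce parameters, the short calculation below compares one standard convolution layer with its depthwise separable counterpart. The layer sizes are illustrative assumptions, biases are ignored, and the sketch covers only this building block rather than the full inverted-residual design.

```python
def standard_conv_params(k, c_in, c_out):
    # One k x k kernel per (input channel, output channel) pair.
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    depthwise = k * k * c_in   # one k x k kernel per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


k, c_in, c_out = 3, 32, 64  # illustrative layer sizes, not taken from the paper
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
print(std, sep, round(sep / std, 3))  # 18432 2336 0.127
```

The ratio equals 1/c_out + 1/k², so for 3 × 3 kernels the separable layer needs roughly one eighth to one ninth of the parameters of the standard layer.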

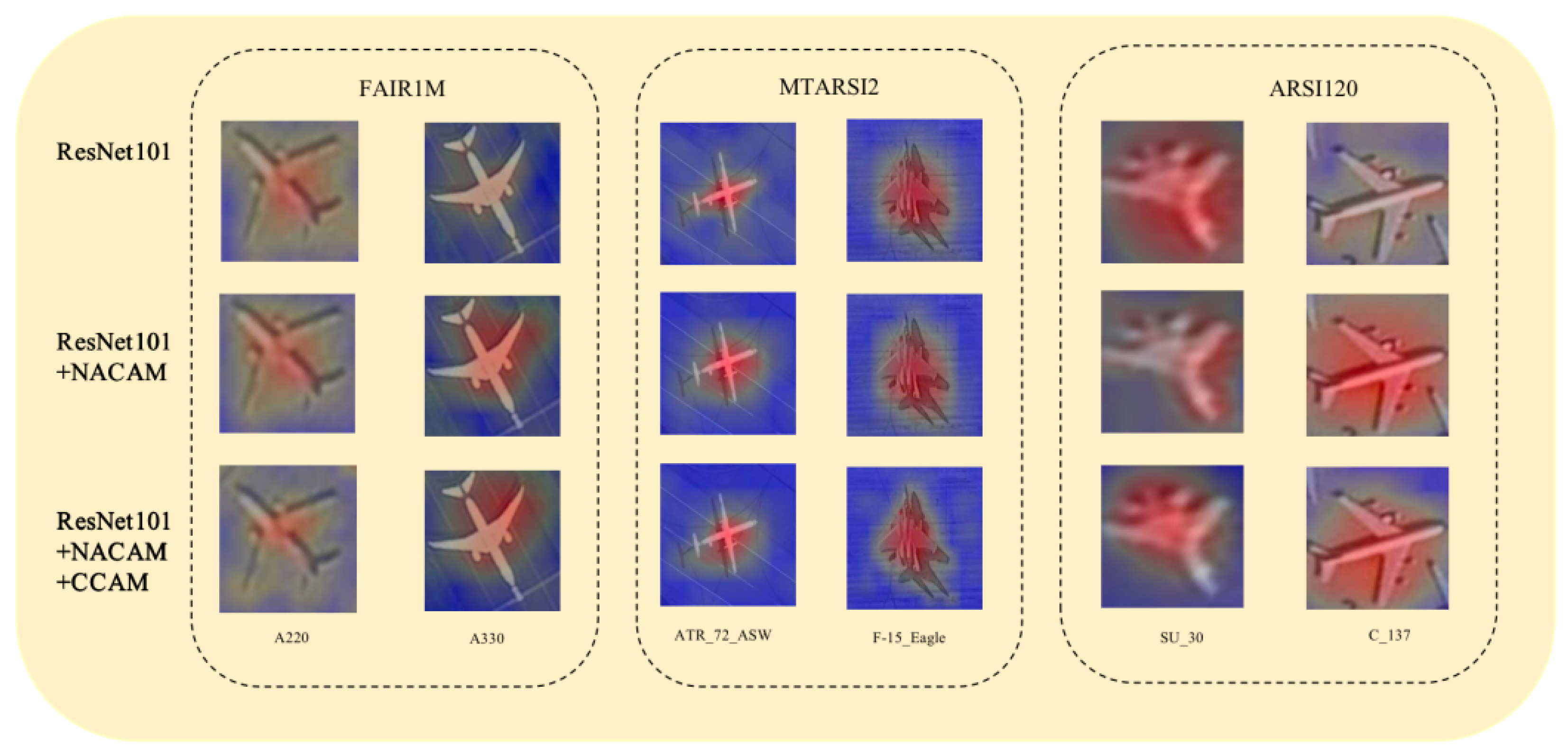

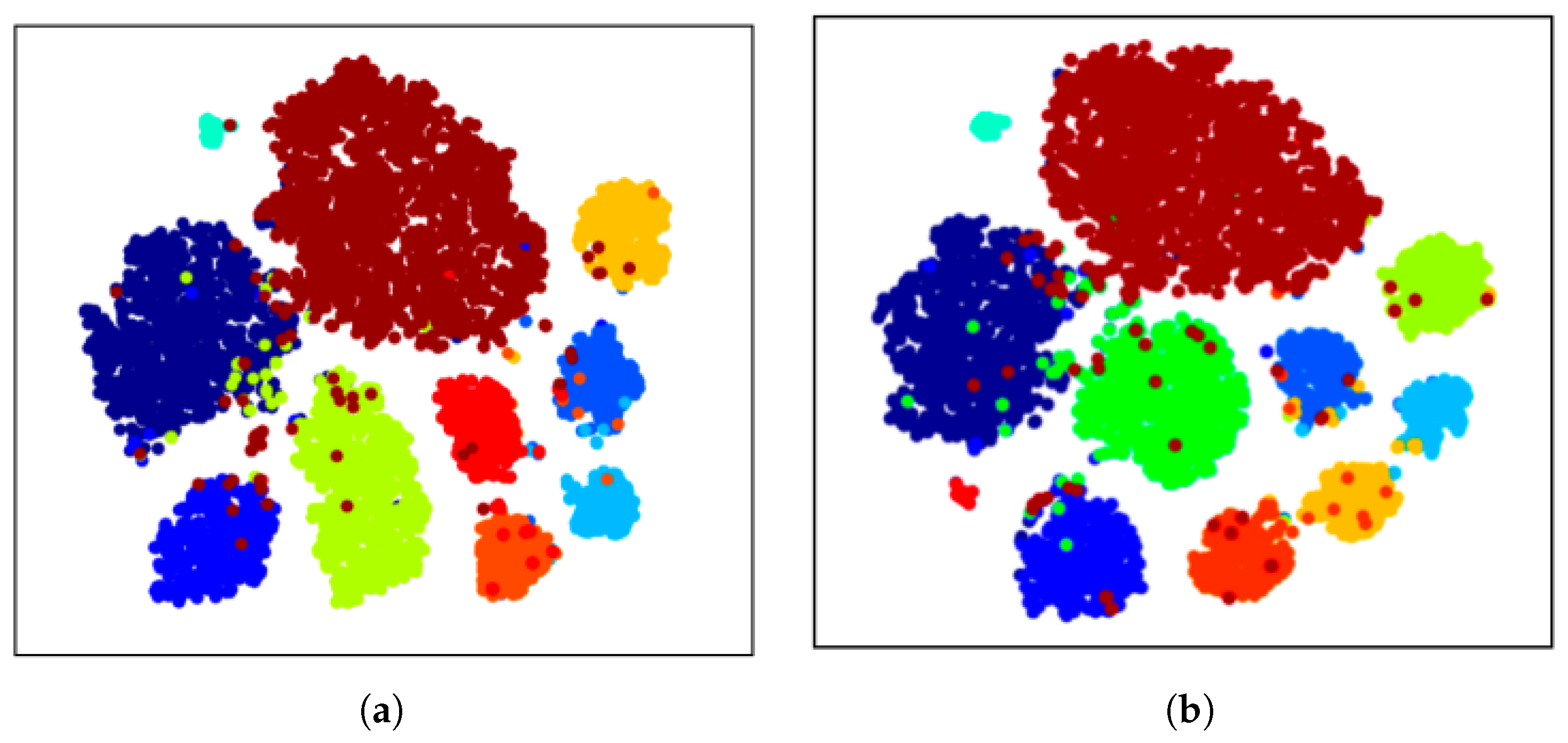

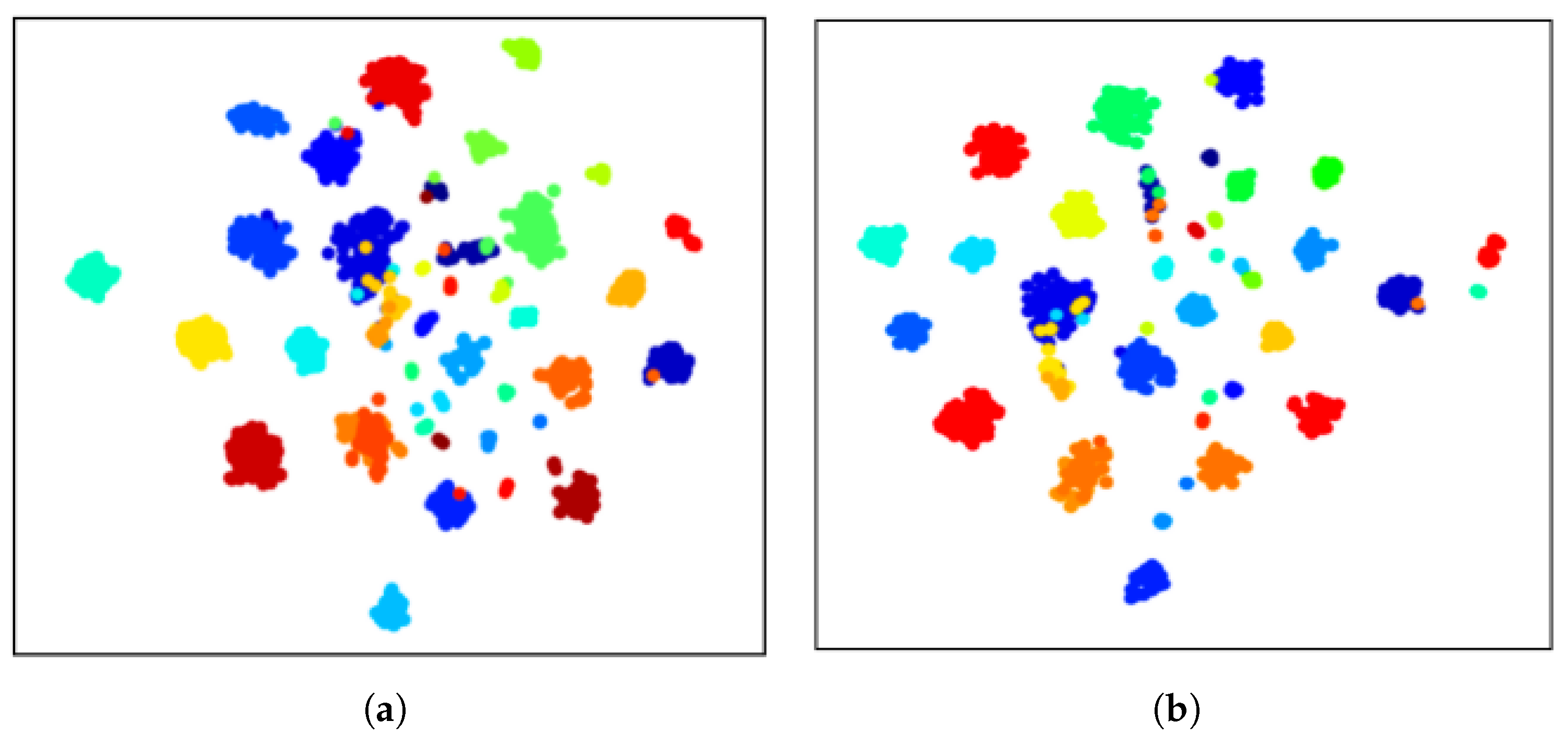

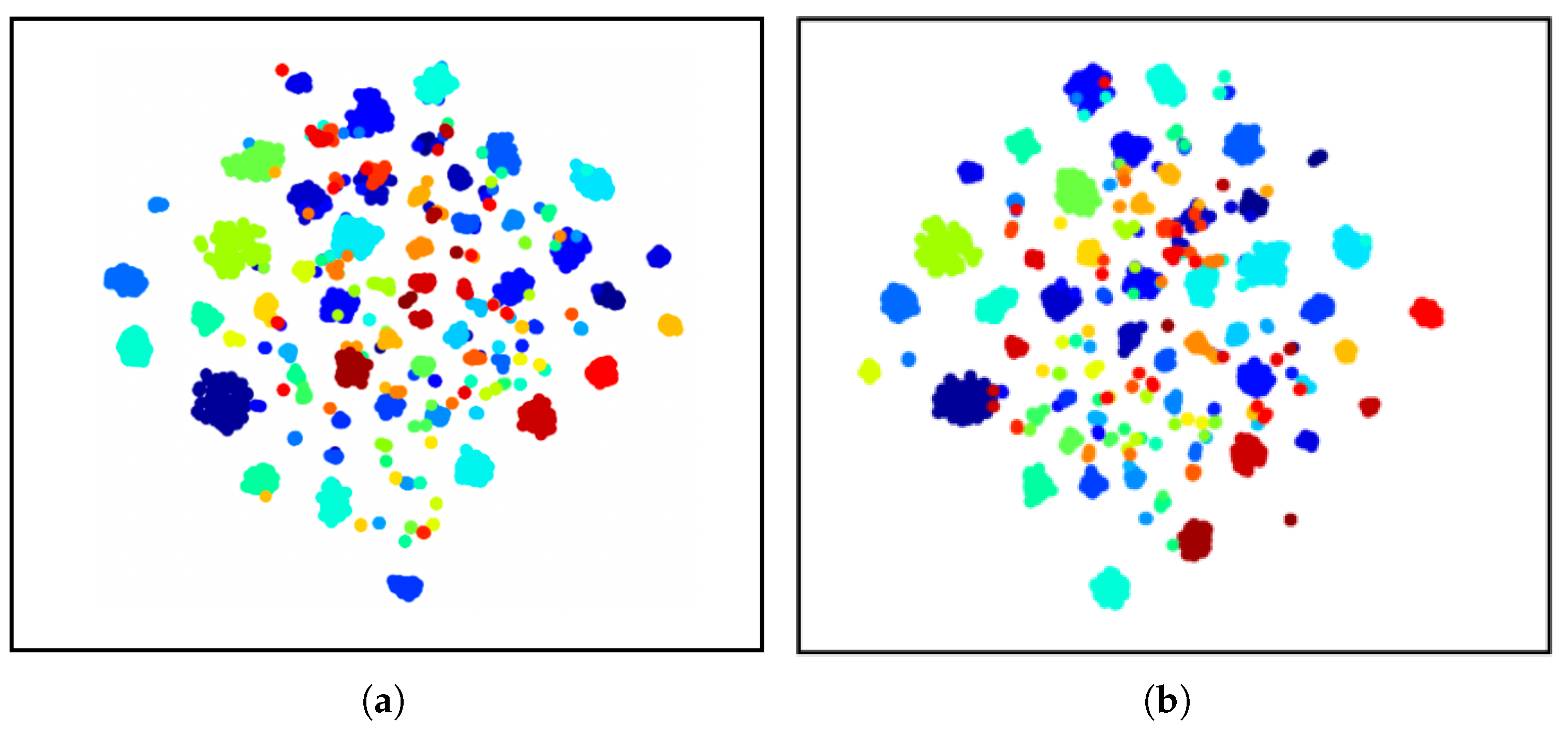

4.5. Interpretability Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hsieh, J.W.; Chen, J.M.; Chuang, C.H.; Fan, K.C. Aircraft type recognition in satellite images. IEE Proc. Vis. Image Signal Process. 2005, 152, 307–315.
2. Liu, G.; Sun, X.; Fu, K.; Wang, H. Aircraft recognition in high-resolution satellite images using coarse-to-fine shape prior. IEEE Geosci. Remote Sens. Lett. 2012, 10, 573–577.
3. Zhao, A.; Fu, K.; Wang, S.; Zuo, J.; Zhang, Y.; Hu, Y.; Wang, H. Aircraft recognition based on landmark detection in remote sensing images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1413–1417.
4. Goring, C.; Rodner, E.; Freytag, A.; Denzler, J. Nonparametric part transfer for fine-grained recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2489–2496.
5. Branson, S.; Van Horn, G.; Belongie, S.; Perona, P. Bird species categorization using pose normalized deep convolutional nets. arXiv 2014, arXiv:1406.2952.
6. Lin, D.; Shen, X.; Lu, C.; Jia, J. Deep LAC: Deep localization, alignment and classification for fine-grained recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1487–1500.
7. Xiao, T.; Xu, Y.; Yang, K.; Zhang, J.; Peng, Y.; Zhang, Z. The application of two-level attention models in deep convolutional neural network for fine-grained image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 842–850.
8. Lin, T.Y.; RoyChowdhury, A.; Maji, S. Bilinear Convolutional Neural Networks for Fine-grained Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1309–1322.
9. Sun, M.; Yuan, Y.; Zhou, F.; Ding, E. Multi-attention multi-class constraint for fine-grained image recognition. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 805–821.
10. Peng, Y.; He, X.; Zhao, J. Object-part attention model for fine-grained image classification. IEEE Trans. Image Process. 2017, 27, 1487–1500.
11. Rodriguez, P.; Velazquez, D.; Cucurull, G.; Gonfaus, J.M.; Roca, F.X.; Gonzalez, J. Pay attention to the activations: A modular attention mechanism for fine-grained image recognition. IEEE Trans. Multimed. 2019, 22, 502–514.
12. Zheng, H.; Fu, J.; Zha, Z.J.; Luo, J. Looking for the devil in the details: Learning trilinear attention sampling network for fine-grained image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5012–5021.
13. Bera, A.; Wharton, Z.; Liu, Y.; Bessis, N.; Behera, A. SR-GNN: Spatial relation-aware graph neural network for fine-grained image categorization. IEEE Trans. Image Process. 2022, 31, 6017–6031.
14. Touvron, H.; Sablayrolles, A.; Douze, M.; Cord, M.; Jégou, H. Grafit: Learning fine-grained image representations with coarse labels. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 874–884.
15. Sun, X.; Xv, H.; Dong, J.; Zhou, H.; Chen, C.; Li, Q. Few-shot learning for domain-specific fine-grained image classification. IEEE Trans. Ind. Electron. 2020, 68, 3588–3598.
16. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.
17. Van der Maaten, L. Accelerating t-SNE using tree-based algorithms. J. Mach. Learn. Res. 2014, 15, 3221–3245.
18. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
19. He, J.; Chen, J.N.; Liu, S.; Kortylewski, A.; Yang, C.; Bai, Y.; Wang, C. TransFG: A transformer architecture for fine-grained recognition. Proc. AAAI Conf. Artif. Intell. 2022, 36, 852–860.
20. Raistrick, A.; Lipson, L.; Ma, Z.; Mei, L.; Wang, M.; Zuo, Y.; Kayan, K.; Wen, H.; Han, B.; Wang, Y.; et al. Infinite Photorealistic Worlds Using Procedural Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 12630–12641.
21. Sermanet, P.; Frome, A.; Real, E. Attention for fine-grained categorization. arXiv 2014, arXiv:1412.7054.
22. Zheng, H.; Fu, J.; Mei, T.; Luo, J. Learning multi-attention convolutional neural network for fine-grained image recognition. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5209–5217.
23. Chen, J.; Gao, Z.; Wu, X.; Luo, J. Meta-Causal Learning for Single Domain Generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 7683–7692.
24. Ding, Y.; Ma, Z.; Wen, S.; Xie, J.; Chang, D.; Si, Z.; Wu, M.; Ling, H. AP-CNN: Weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans. Image Process. 2021, 30, 2826–2836.
25. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.
26. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.
27. Chen, Y.; Dai, X.; Chen, D.; Liu, M.; Dong, X.; Yuan, L.; Liu, Z. Mobile-Former: Bridging MobileNet and Transformer for Efficient Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–21 June 2024; pp. 6541–6550.
28. Price, I.; Tanner, J. Improved projection learning for lower dimensional feature maps. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.
29. Gao, Z.; Xie, J.; Wang, Q.; Li, P. Global second-order pooling convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3024–3033.
30. Liu, J.J.; Hou, Q.; Cheng, M.M.; Wang, C.; Feng, J. Improving convolutional networks with self-calibrated convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10096–10105.
31. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.
32. Tang, K.; Niu, Y.; Huang, J.; Shi, J.; Zhang, H. Unbiased scene graph generation from biased training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3716–3725.
33. Rao, Y.; Chen, G.; Lu, J.; Zhou, J. Counterfactual attention learning for fine-grained visual categorization and re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1025–1034.
34. Niu, Y.; Tang, K.; Zhang, H.; Lu, Z.; Hua, X.S.; Wen, J.R. Counterfactual VQA: A cause-effect look at language bias. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12700–12710.
35. Xiong, W.; Xiong, Z.; Cui, Y. An explainable attention network for fine-grained ship classification using remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5620314.
36. Pan, C.; Li, R.; Hu, Q.; Niu, C.; Liu, W.; Lu, W. Contrastive Learning Network Based on Causal Attention for Fine-Grained Ship Classification in Remote Sensing Scenarios. Remote Sens. 2023, 15, 3393.
37. Xu, K.; Wang, L.; Zhang, Q. Causal Domain Adaptation via Contrastive Conditional Transfer for Fine-Grained Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 17–21 June 2024; pp. 412–419.
38. Liu, Y.; Shao, Z.; Teng, Y.; Hoffmann, N. NAM: Normalization-based attention module. arXiv 2021, arXiv:2111.12419.
39. Rudd-Orthner, R.; Mihaylova, L. Multi-type Aircraft of Remote Sensing Images: MTARSI 2. Zenodo. 2021. Available online: https://oa.mg/work/10.5281/zenodo.5044950 (accessed on 28 July 2025).
40. Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T.; et al. FAIR1M: A benchmark dataset for fine-grained object recognition in high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 116–130.
41. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
42. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.
43. Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T.; et al. SWS-DAN: Subtler WS-DAN for fine-grained image classification. J. Vis. Commun. Image Represent. 2021, 79, 103245.
44. Dong, X.; Liu, H.; Ji, R.; Cao, L.; Ye, Q.; Liu, J.; Tian, Q. Api-net: Robust generative classifier via a single discriminator. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 379–394.
45. Wang, J.; Yu, X.; Gao, Y. Feature fusion vision transformer for fine-grained visual categorization. arXiv 2021, arXiv:2107.02341.
46. Chou, P.Y.; Kao, Y.Y.; Lin, C.H. Fine-grained visual classification with high-temperature refinement and background suppression. arXiv 2023, arXiv:2303.06442.

| Name | Classes | Train | Test |
|---|---|---|---|
| FAIR1M | 10 | 32,763 | 8191 |
| MTARSI2 | 40 | 8164 | 2041 |
| ARSI120 | 101 | 11,553 | 2889 |
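
As a quick arithmetic check on the dataset table above, the snippet below computes the total image count and the training fraction from the listed train and test counts. Only the counts come from the table; the dictionary layout and the helper name are illustrative.

```python
# (train, test) image counts copied from the dataset table.
splits = {
    "FAIR1M": (32763, 8191),
    "MTARSI2": (8164, 2041),
    "ARSI120": (11553, 2889),
}


def train_fraction(train, test):
    return train / (train + test)


for name, (train, test) in splits.items():
    print(f"{name}: {train + test} images, train fraction {train_fraction(train, test):.3f}")
# FAIR1M: 40954 images, train fraction 0.800
# MTARSI2: 10205 images, train fraction 0.800
# ARSI120: 14442 images, train fraction 0.800
```

All three datasets therefore work out to roughly an 80/20 division between training and test images.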

| Backbone | Model (CAM / NACAM / CCAM) | Params (M) | FLOPs (G) | FAIR1M Top-1 acc (%) | FAIR1M Top-5 acc (%) | MTARSI2 Top-1 acc (%) | MTARSI2 Top-5 acc (%) | ARSI120 Top-1 acc (%) | ARSI120 Top-5 acc (%) |
|---|---|---|---|---|---|---|---|---|---|
| ResNet101 | | 44.5 | 7.82 | 96.1 | 99.7 | 93.1 | 99.1 | 95.6 | 98.6 |
| ResNet101 | ✓ | 46.1 | 7.91 | 96.7 | 99.8 | 94.1 | 99.8 | 97.5 | 98.9 |
| ResNet101 | ✓ | 48.9 | 7.92 | 96.8 | 99.8 | 94.4 | 99.8 | 97.6 | 99.1 |
| ResNet101 | ✓ | 45.2 | 7.92 | 96.9 | 99.9 | 94.8 | 100 | 97.7 | 99.2 |
| ResNet101 | ✓ ✓ | 48.8 | 7.93 | 96.4 | 99.8 | 94.6 | 100 | 97.8 | 99.2 |
| ResNet101 | ✓ ✓ | 48.9 | 7.93 | 97.7 | 99.9 | 95.2 | 100 | 98.4 | 99.5 |
| MobileNetV2 | | 3.5 | 0.32 | 93.2 | 99.6 | 90.8 | 98.6 | 93.2 | 96.7 |
| MobileNetV2 | ✓ | 3.9 | 0.34 | 94.1 | 99.7 | 91.2 | 99.4 | 93.3 | 96.8 |
| MobileNetV2 | ✓ | 4.2 | 0.34 | 94.2 | 99.7 | 91.3 | 99.5 | 93.5 | 96.8 |
| MobileNetV2 | ✓ | 4.1 | 0.34 | 94.6 | 99.8 | 92.1 | 99.5 | 93.8 | 97.1 |
| MobileNetV2 | ✓ ✓ | 4.3 | 0.34 | 95.2 | 99.9 | 92.0 | 99.4 | 94.3 | 97.2 |
| MobileNetV2 | ✓ ✓ | 4.4 | 0.34 | 95.8 | 99.9 | 92.7 | 99.8 | 94.9 | 97.6 |
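
The Top-1 and Top-5 columns in the ablation table follow the usual top-k accuracy definition: a sample counts as correct when its true class is among the k highest-scoring classes. A minimal sketch of that metric is given below; the scores and labels are made-up toy values, not results from the paper.

```python
def top_k_accuracy(scores, labels, k):
    """Percentage of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return 100.0 * hits / len(labels)


# Toy class scores for three samples and three classes (illustrative only).
scores = [
    [0.1, 0.7, 0.2],
    [0.5, 0.3, 0.2],
    [0.2, 0.3, 0.5],
]
labels = [1, 1, 0]

print(round(top_k_accuracy(scores, labels, 1), 1))  # 33.3
print(round(top_k_accuracy(scores, labels, 2), 1))  # 66.7
```

Top-k accuracy can only stay the same or rise as k grows, which is why the Top-5 columns sit at or above the corresponding Top-1 columns.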

| Models | Backbone | FAIR1M Acc (%) | MTARSI2 Acc (%) | ARSI120 Acc (%) |
|---|---|---|---|---|
| ResNet101 | | 96.1 | 93.1 | 95.6 |
| MobileNetV2 | | 93.2 | 90.8 | 93.2 |
| Vision Transformer [18] | | 96.8 | 93.2 | 95.9 |
| WS-DAN [43] | ResNet50 | 96.9 | 93.4 | 96.1 |
| API-Net [44] | DenseNet161 | 96.4 | 94.7 | 97.3 |
| FFVT [45] | ViT | 97.1 | 95.1 | 98.2 |
| HERBS [46] | ViT | 97.1 | 95.1 | 98.1 |
| (Ours) | ResNet101 | 97.7 | 95.2 | 98.4 |
| (Ours) | MobileNetV2 | 95.8 | 92.7 | 94.9 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, Z.; Tuo, W.; Zhang, S.; Zhao, X. A Counterfactual Fine-Grained Aircraft Classification Network for Remote Sensing Images Based on Normalized Coordinate Attention. Appl. Sci. 2025, 15, 8903. https://doi.org/10.3390/app15168903
