1. Introduction

Cores are rock samples extracted from formations through drilling and can be used to study reservoir diagenesis and the state of oil and gas storage. Oil and gas are primarily stored in the pores of the core, and the microstructure of these pores directly determines their reserves and migration capacity. Therefore, studying the influence of the pore microstructure on the core’s macroscopic physical properties (such as permeability, electrical properties, and mechanical properties) is crucial for oil and gas exploration and development. Traditional physical property experiments focus primarily on the macroscale and are unable to investigate the physical properties of cores at the microscale of pores.

Digital cores, a field that has been proposed in recent years and stands at the intersection of digital image processing and petroleum geology, have become a current research hotspot. Using imaging equipment to image the microstructure within the core, computers can be used to study its connectivity and pore distribution, as well as to perform various simulations such as those for seepage, electrical properties, and mechanical properties. These calculations and simulations can provide fundamental research data and support subsequent petroleum exploration and development.

Current research in three-dimensional (3D) reconstruction of digital cores focuses on binary core images [1,2,3,4,5,6,7], wherein the minerals in rock samples are considered a single entity. The gray values of pixels in a 3D core CT image comprehensively reflect the differences in the X-ray absorption coefficients of different rock components. Real rock samples are composed of different components that exhibit different gray values under imaging equipment. Core parameters such as permeability, electrical conductivity, and elastic modulus also vary with the distribution of these components. Reconstructing gray-scale core images from a single two-dimensional (2D) image could therefore significantly benefit oil exploration and development.

Currently, gray-scale core image reconstruction is in the exploratory stage. In 2016, Tahmasebi et al. [

8,

9,

10] proposed the use of a cross-correlation function-based multi-point geostatistical algorithm to reconstruct gray-scale cores. In addition, recently developed texture synthesis algorithms [

11,

12,

13,

14,

15,

16,

17] can be used to reconstruct gray-scale 3D images; however, the reconstruction results ignore the statistical similarity between the reconstructed and target images. In general, relatively little research has been conducted in this area. Multi-phase (mostly three-phase) reconstruction using a simulated annealing algorithm has also been investigated. In this area, a multiphase two-point correlation function is used as the constraint condition. By randomly exchanging points and continuously iterating, the correlation function curve of the reconstructed image approaches the curve of the target. There are 256 possible gray levels for the gray-scale core image. The two-point correlation function [

18,

19] requires a large number of calculations, which cannot be achieved using the simulated annealing algorithm [

20,

21,

22,

23,

24,

25,

26,

27,

28,

29]. Li et al. [

30] proposed a pattern dictionary-based algorithm for the reconstruction of a 3D greyscale image in 2021, but the reconstruction results were somewhat blurred. In 2022, Li et al. [

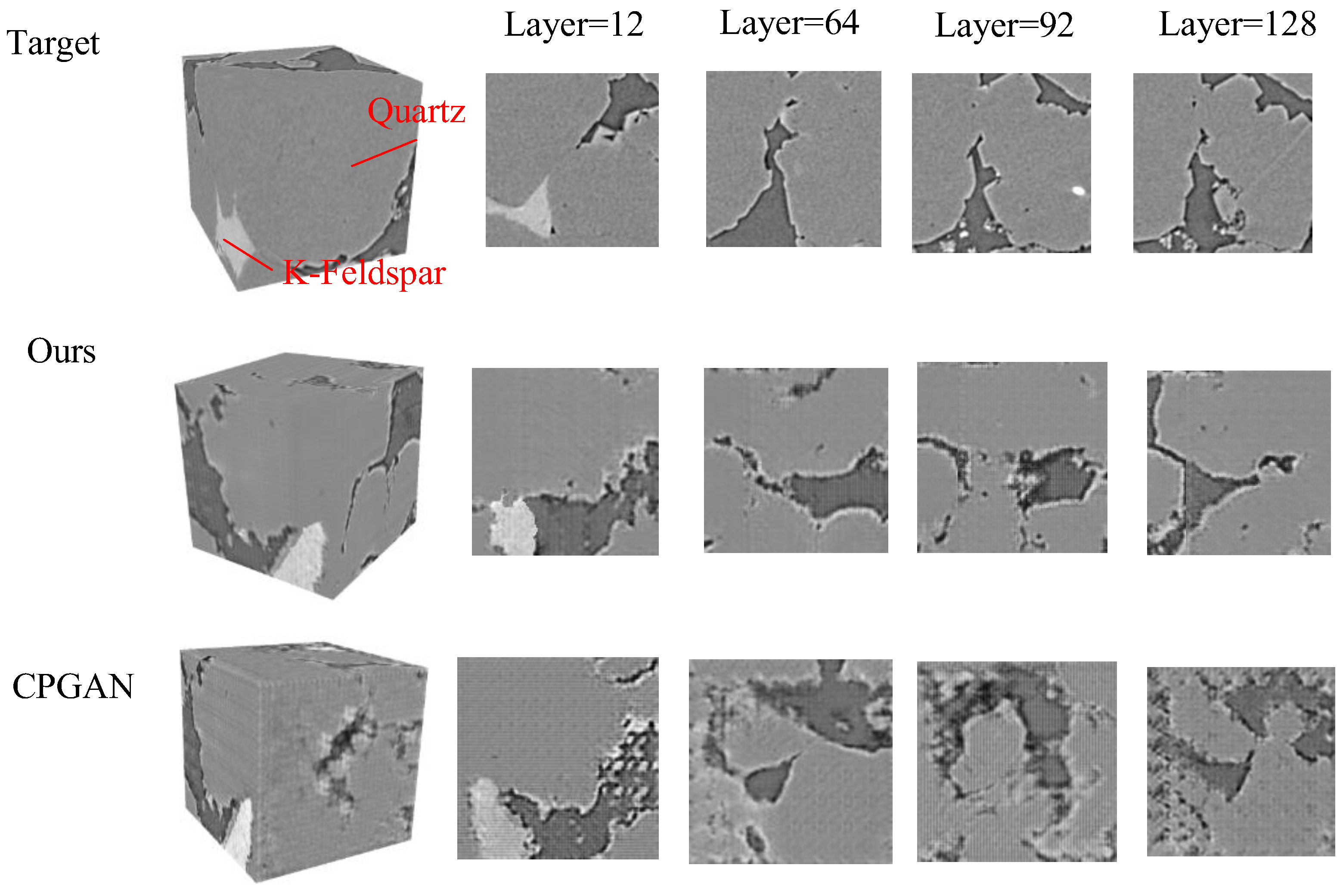

31] used deep learning technology to propose the cascaded progressive generative adversarial network (CPGAN) for the reconstruction of 3D gray-scale core images.

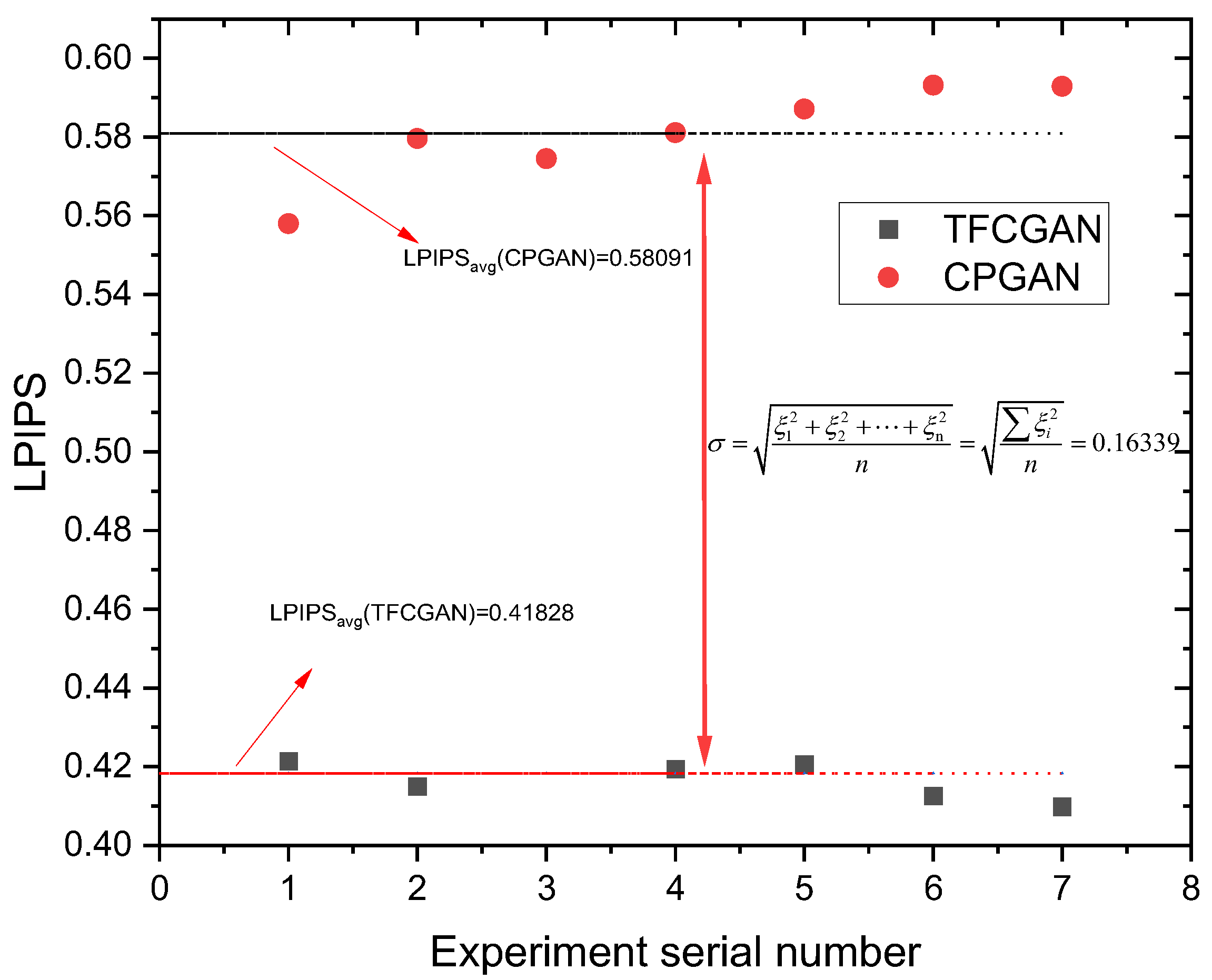

Deep learning algorithms can learn features independently [32,33,34,35], creating conditions for the reconstruction of gray-scale core images. However, existing deep learning-based reconstruction algorithms lack widely accepted evaluation criteria. Evaluating and comparing generative adversarial networks (GANs) or images produced by GANs is extremely challenging, partly because GANs lack the explicit likelihood measures commonly used in comparable probability models [36]. The structural similarity (SSIM) method can be used to evaluate aligned images; however, it is sensitive to differences in feature arrangement between core images. Moreover, core reconstruction aims at statistical or morphological similarity rather than pixel-wise reproduction, so an objective image evaluation criterion suited to reconstruction is needed.

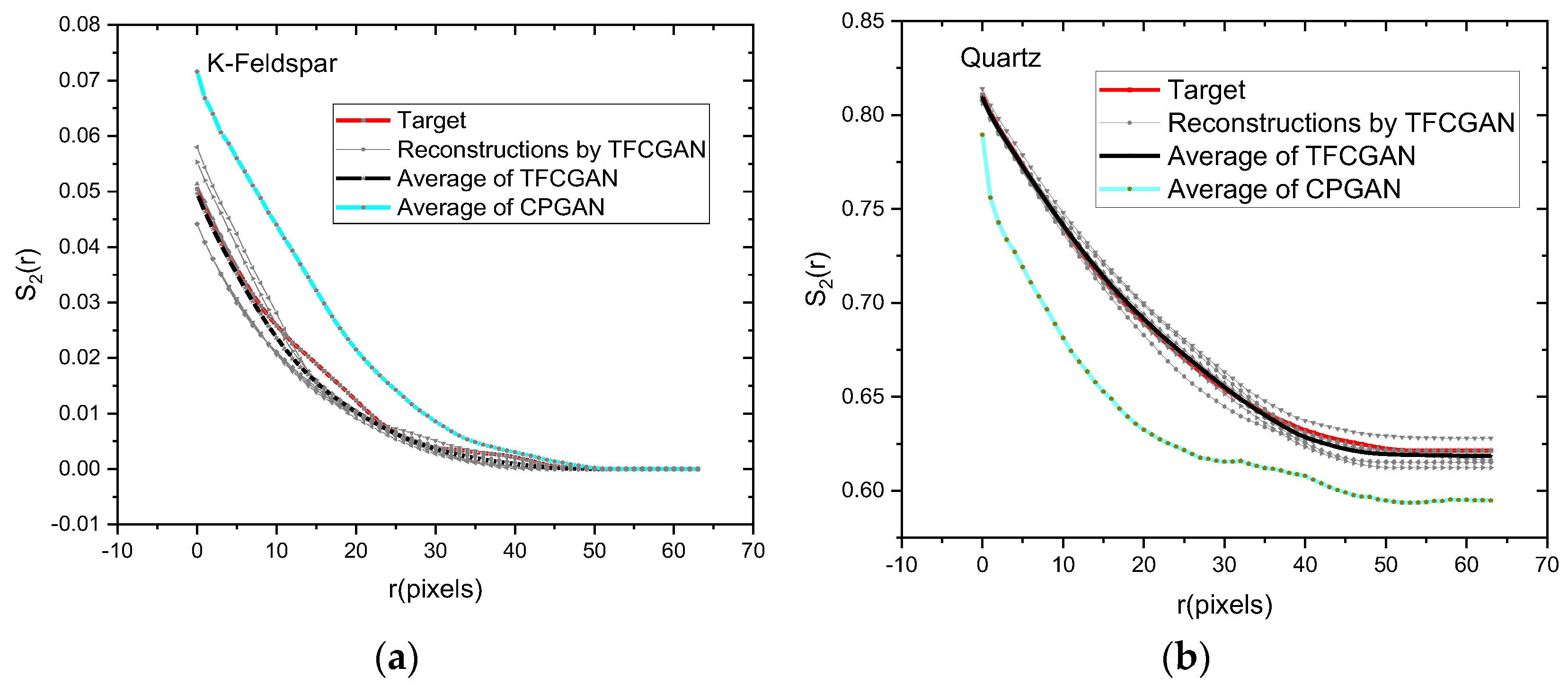

Furthermore, the evaluation criteria for gray-scale core reconstruction are extremely important. Common statistical measures for binary cores include the two-point correlation function and the linear path function. For a core with an n-phase structure, $n^2$ probability functions can be obtained. Among these, (1 + n) × n/2 are non-correlated, and n equations relating them can be written. Therefore, at least (n − 1) × n/2 non-correlated probability functions must be selected as constraints to reflect the relationship between the phases of the n-phase structure during reconstruction. A gray-scale core image has 256 gray levels, so n = 256 and at least 255 × 256/2 = 32,640 non-correlated probability functions would be required. Such a large set is difficult to relate to the overall structure and is computationally time-consuming, so these statistics are not practical for gray-scale cores. Therefore, a new set of evaluation criteria must be proposed for reconstructing gray-scale cores.
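
The counting argument above can be checked with a few lines of Python. This is only an illustrative sketch: the function name `probability_function_counts` and the dictionary keys are hypothetical and do not come from the paper; the formulas are the ones stated in the text.

```python
def probability_function_counts(n: int) -> dict:
    """Count two-point probability functions for an n-phase structure.

    Uses the expressions quoted in the text:
      total          = n^2
      non_correlated = (1 + n) * n / 2
      min_constraints = (n - 1) * n / 2
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    return {
        "total": n * n,
        "non_correlated": (1 + n) * n // 2,
        "min_constraints": (n - 1) * n // 2,
    }


# Binary, three-phase, and 256-level gray-scale cores.
for phases in (2, 3, 256):
    print(phases, probability_function_counts(phases))
```

For n = 256 the minimum number of constraints is 32,640, which matches the figure quoted above and illustrates why a per-gray-level correlation description quickly becomes unmanageable.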

In summary, existing methods are insufficient in terms of texture fidelity. In the field of gray-scale core reconstruction, existing approaches include cross-correlation function-based methods [8,9,10] and texture synthesis algorithms [11,12,13,14,15,16,17]. Structures reconstructed by these methods do not have high texture similarity with the target system and cannot simultaneously maintain the texture characteristics and physical statistical properties required for core reconstruction. Deep learning algorithms [32,33,34,35] lack widely accepted evaluation criteria, and the structural similarity (SSIM) method is sensitive to differences in feature arrangement between core images.

Deep image structure and texture similarity (DISTS) [37], a full-reference image quality assessment model, correlates well with the human perception of image quality. In addition, it can evaluate texture similarity (e.g., for images generated by GANs) and effectively handle geometric transformations: because the evaluation does not require strictly point-by-point-aligned image pairs, it can handle “visual texture,” which is loosely defined as spatially uniform areas with repeating elements. DISTS has significant advantages in preserving texture properties. If it could be combined with physical statistical descriptors, it could generate structures that simultaneously maintain good texture properties and physical statistical properties for core reconstruction.

In this work, we propose a physics statistical descriptor-informed deep image structure and texture similarity metric (PSDI-DISTS) as a generative adversarial network optimization criterion, together with a texture feature-constrained GAN (TFCGAN) model for the reconstruction of gray-scale core images. PSDI-DISTS combines DISTS with one specially designed physics statistical descriptor, the gray-scale pattern density function. While DISTS is advantageous in preserving texture properties in gray-scale core image reconstruction, this alone is not sufficient. Because the reconstructed structure is ultimately used for physical property analysis, such as seepage characteristics, the physical statistical properties of the generated structure must also be preserved. Therefore, the goal is to construct a new metric combining the physics statistical descriptor and DISTS, ensuring that both the texture and physical properties of the generated structure are similar to those of the target system.

The remainder of this paper is organized as follows. Section 2 describes the PSDI-DISTS metric; Section 3 describes the TFCGAN model developed for reconstructing 3D gray-scale core images; Section 4 presents the study findings and their discussion. Finally, Section 5 concludes the study.

2. Physics Statistical Descriptor-Informed Deep Image Structure and Texture Similarity Metric

The previously mentioned statistical measures can be used to reconstruct a three-phase core; however, the gray-scale core has 256 gray levels, which must be characterized by higher-order functions. Furthermore, traditional optimization methods such as simulated annealing algorithms are considerably time-consuming even for three-phase function optimization [38]. Therefore, new optimization reconstruction methods are considered for gray-scale cores.

2.1. Basic Theory of the Deep Image Structure and Texture Similarity Metric

Image quality assessment (IQA) in the digital age is considerably important. With the exponential growth in the application of digital images in various fields such as photography, medical imaging, remote sensing, and computer vision, ensuring high-quality images is crucial. In photography, a high-quality image can capture the essence of a moment, whereas in medical imaging, accurate image quality can lead to a precise diagnosis.

Traditional IQA methods have significant limitations. Many traditional metrics focus on pixel-level differences and fail to comprehensively consider the structural and perceptual aspects of images. As a result, they often produce results that do not align well with human visual perception and are oversensitive to texture resampling.

To address these issues, the DISTS assessment metric has emerged [37]. DISTS offers a more advanced and comprehensive approach to IQA by considering both the structural and textural similarities of images. It could revolutionize the IQA field by providing more accurate and reliable results that align well with human perception.

Being a full-reference metric, DISTS requires both reference and test images for the evaluation. It aims to comprehensively measure the similarity between the test and reference images. Structurally, it analyzes the overall layout and organization of an image. For example, it can detect how well the edges and contours in the test image align with those in the reference image. This is crucial because the structure of an image often conveys important semantic information.

For texture, DISTS examines the fine-grained patterns and details in the images. Textural features significantly affect the visual appearance and realism of an image. By combining these two aspects, DISTS can capture comprehensive image quality. DISTS demonstrates exceptional robustness and adaptability when addressing various image changes. For highly adversarial texture variations, such as when an image has been intentionally distorted to create complex patterns, DISTS can still accurately assess the image quality. Additionally, it is not easily misled by challenging texture changes because it focuses on the underlying structural and textural similarities rather than just surface-level features. Moreover, DISTS can handle nonstrictly point-to-point-aligned images. For instance, in cases where an image is slightly rotated or translated, DISTS can still provide a reliable evaluation. This adaptability makes it a versatile metric for real-world scenarios in which image variations are common.

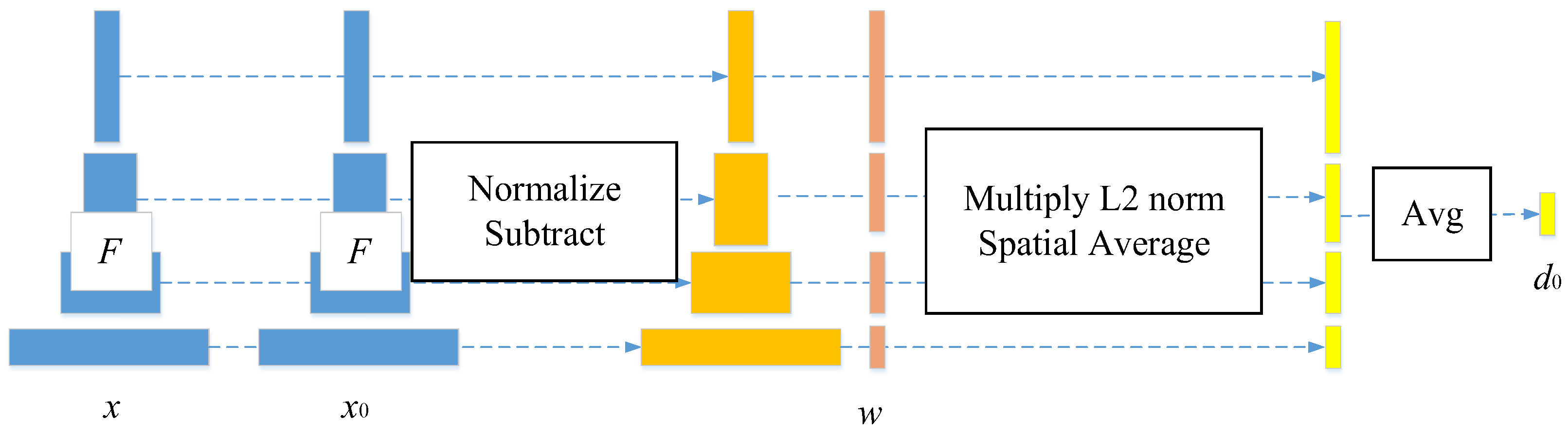

Deep learning plays a crucial role in this process. A pretrained deep neural network is used to extract high-level features from the images. These features are more abstract and representative than simple pixel values. The neural network is trained on a large dataset of images, enabling it to learn the complex relationships between different image elements. Thereafter, DISTS uses these features to calculate the similarity between the reference and test images, providing a more accurate and reliable assessment. Built upon a pretrained VGG-style network, DISTS operates as follows:

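Let $x$ and $y$ denote the reference and test images, respectively. Feature extractor $f$ (e.g., VGG-16 truncated at layer $L = 5$) generates multiscale representations as follows:

$$f(x) = \{\tilde{x}^{(l)}\}_{l=0}^{L}, \qquad f(y) = \{\tilde{y}^{(l)}\}_{l=0}^{L}, \tag{1}$$

where $\tilde{x}^{(l)}$ represents the features at layer $l$.

Structure similarity at layer $l$ is designed using the global covariance (inspired by SSIM), as shown in Equation (2).

$$s^{(l)}(x, y) = \frac{2\sigma_{xy}^{(l)} + \epsilon}{\left(\sigma_{x}^{(l)}\right)^{2} + \left(\sigma_{y}^{(l)}\right)^{2} + \epsilon}, \tag{2}$$

where

$$\sigma_{xy}^{(l)} = \frac{1}{N_l}\sum_{p=1}^{N_l}\left(\tilde{x}^{(l)}_{p} - \mu_{x}^{(l)}\right)\left(\tilde{y}^{(l)}_{p} - \mu_{y}^{(l)}\right). \tag{3}$$

Textural similarity is designed using the normalized spatial global means of the feature maps, as shown in Equation (4).

$$t^{(l)}(x, y) = \frac{2\mu_{x}^{(l)}\mu_{y}^{(l)} + \epsilon}{\left(\mu_{x}^{(l)}\right)^{2} + \left(\mu_{y}^{(l)}\right)^{2} + \epsilon}, \tag{4}$$

where $\mu_{x}^{(l)}$ and $\sigma_{x}^{(l)}$ ($\mu_{y}^{(l)}$, $\sigma_{y}^{(l)}$) represent the global means and standard deviation vectors of $\tilde{x}^{(l)}$ ($\tilde{y}^{(l)}$) across the spatial dimensions and $\sigma_{xy}^{(l)}$ represents the global covariance between $\tilde{x}^{(l)}$ and $\tilde{y}^{(l)}$. $N_l$ is the number of spatial positions $p$ at layer $l$, and all operations are applied element-wise over channels. $\epsilon > 0$ ensures numerical stability.

The final DISTS score combines layer-wise similarities with learned weights $\alpha_l$ and $\beta_l$, which can be expressed as follows:

$$D(x, y) = 1 - \sum_{l=0}^{L}\left(\alpha_{l}^{\top} t^{(l)}(x, y) + \beta_{l}^{\top} s^{(l)}(x, y)\right). \tag{5}$$

The weights $\{\alpha_l, \beta_l\}$, optimized during training, prioritize the VGG features that best align with human perception.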
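
The per-layer texture and structure terms described above can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the DISTS reference implementation: the feature maps are assumed to be already extracted (shape `(channels, height, width)` per layer), uniform weights stand in for the learned weights, and the names `layer_similarities` and `dists_like_score` are hypothetical.

```python
import numpy as np


def layer_similarities(fx, fy, eps=1e-6):
    """Per-channel texture and structure similarity for one layer.

    fx, fy: feature maps of shape (C, H, W) taken from the same layer
    for the reference and test images.
    """
    mu_x = fx.mean(axis=(1, 2))
    mu_y = fy.mean(axis=(1, 2))
    var_x = fx.var(axis=(1, 2))
    var_y = fy.var(axis=(1, 2))
    # Global covariance between the two feature maps, channel by channel.
    cov_xy = ((fx - mu_x[:, None, None]) * (fy - mu_y[:, None, None])).mean(axis=(1, 2))
    texture = (2 * mu_x * mu_y + eps) / (mu_x ** 2 + mu_y ** 2 + eps)
    structure = (2 * cov_xy + eps) / (var_x + var_y + eps)
    return texture, structure


def dists_like_score(feats_x, feats_y, eps=1e-6):
    """Combine layer-wise similarities into a distance in a DISTS-like way.

    ASSUMPTION: uniform weights replace the learned weights; they are chosen
    so that all texture and structure weights together sum to one.
    """
    n_channels = sum(f.shape[0] for f in feats_x)
    weight = 1.0 / (2 * n_channels)
    total = 0.0
    for fx, fy in zip(feats_x, feats_y):
        texture, structure = layer_similarities(fx, fy, eps)
        total += weight * (texture.sum() + structure.sum())
    return 1.0 - float(total)
```

With identical feature lists the score is zero (up to floating-point error), and it grows as the global means or covariances of the feature maps diverge. In practice the feature lists would come from a pretrained network, and the weights would be learned rather than uniform.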

The DISTS metric is robust to noise and distortion. The main reason is that DISTS evaluates image quality by comprehensively considering the similarity of structure and texture, rather than simply comparing images at the pixel level. DISTS adopts deep learning networks (such as VGG or ResNet) to extract multi-layered image features. These features capture structural and texture details and mimic the human visual system’s sensitivity to differences in image structure and texture. As a result, the evaluation is less sensitive to pixel-level noise and distortion and exhibits high robustness.

2.2. Physics Statistical Descriptor—Gray-Scale Pattern Density Function

2.2.1. Cross-Correlation Function

The cross-correlation function [39] can measure the similarity between different patterns and can be derived from template matching of gray-scale images. The basic principle is simple. First, the template image is moved across the image to be searched, the difference between the template and the corresponding subimage is measured at each position, and the position at which the similarity reaches its maximum is recorded. However, the actual situation is more complex. For practical applications, an appropriate distance measure and the total distance difference that counts as a highly similar match must be determined. As shown in Figure 1a, the search region is defined with the origin of the two images as the reference point, and the maximum search area is determined by the size of the image to be searched and the size of the template image.

Template matching in gray-scale images mainly involves finding the position at which the template image R is identical or most similar to a subimage of the searched image I. The following equation represents the template shifted by (r, s) units within the searched image. A schematic of this is shown in Figure 1b.

$$R_{r,s}(i, j) = R(i - r, j - s). \tag{6}$$

The most important aspect of template matching is the similarity measurement function, which should be robust to gray-scale and contrast changes. To measure the similarity between images, the distance d(r, s) between the reference template image and the corresponding subimage in the searched image is calculated after each translation (r, s). Several basic measurement functions exist for gray-scale images: the sum of absolute differences, the maximum difference, and the sum of squared differences (SSD), as shown in Equations (7)–(9), respectively.

$$d_{A}(r, s) = \sum_{(i,j) \in R} \left| I(r + i, s + j) - R(i, j) \right|, \tag{7}$$

$$d_{M}(r, s) = \max_{(i,j) \in R} \left| I(r + i, s + j) - R(i, j) \right|, \tag{8}$$

$$d_{SSD}(r, s) = \sum_{(i,j) \in R} \left( I(r + i, s + j) - R(i, j) \right)^{2}. \tag{9}$$

The SSD function is often used in statistics and optimization. To determine the best matching position for the reference image in the searched image, the SSD function must be minimized. That is, Equation (10) reaches a minimum value.

$$d_{SSD}(r, s) = \underbrace{\sum_{(i,j) \in R} I^{2}(r + i, s + j)}_{A(r,s)} + \underbrace{\sum_{(i,j) \in R} R^{2}(i, j)}_{B} - 2\underbrace{\sum_{(i,j) \in R} I(r + i, s + j)\, R(i, j)}_{C(r,s)}, \tag{10}$$

where B is the sum of the squares of the gray-scale values of all the pixels in the reference template image. It is a constant (independent of r and s) and can be ignored when calculating the minimum SSD value. A(r, s) represents the sum of the squares of the gray-scale values of all the pixels in the subimage of the searched image at the (r, s) coordinate position. C(r, s) denotes the linear cross-correlation function of the searched image and the reference template image, and it can be expressed as

$$C(r, s) = \sum_{(i,j) \in R} I(r + i, s + j)\, R(i, j). \tag{11}$$

Outside their boundaries, R and I are taken to be zero. Thus, the above formula can also be expressed as

$$C(r, s) = \sum_{i=-\infty}^{\infty} \sum_{j=-\infty}^{\infty} I(r + i, s + j)\, R(i, j). \tag{12}$$

Assuming that A(r, s) is constant over the searched image, it can be neglected when calculating the best match position in the SSD; the reference template image and the subimage in the searched image are then most similar when C(r, s) reaches its maximum value. Essentially, the minimum SSD value is obtained by finding the maximum value of C(r, s).
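
The relationship between the SSD and the cross-correlation term can be verified numerically. The sketch below is illustrative only: it computes the SSD map directly and through the decomposition into A(r, s), B, and C(r, s) described above. The function names are hypothetical, the first array axis is treated as the r direction, and only offsets where the template fits entirely inside the image are evaluated.

```python
import numpy as np


def ssd_map_direct(image, template):
    """SSD between the template and every fully overlapping subimage."""
    h, w = template.shape
    rows = image.shape[0] - h + 1
    cols = image.shape[1] - w + 1
    out = np.empty((rows, cols))
    for r in range(rows):
        for s in range(cols):
            diff = image[r:r + h, s:s + w] - template
            out[r, s] = np.sum(diff ** 2)
    return out


def ssd_map_decomposed(image, template):
    """Same SSD map computed as A(r, s) + B - 2 C(r, s).

    Returns the SSD map and the cross-correlation map C.
    """
    windows = np.lib.stride_tricks.sliding_window_view(image, template.shape)
    a_term = np.sum(windows ** 2, axis=(2, 3))      # A(r, s): subimage energy
    b_term = np.sum(template ** 2)                  # B: template energy (constant)
    c_term = np.sum(windows * template, axis=(2, 3))  # C(r, s): cross-correlation
    return a_term + b_term - 2.0 * c_term, c_term


def best_match(image, template):
    """Offset (r, s) that minimizes the SSD."""
    ssd, _ = ssd_map_decomposed(image, template)
    return np.unravel_index(np.argmin(ssd), ssd.shape)
```

Note that maximizing C(r, s) alone finds the SSD minimum only when A(r, s) is approximately constant, as the text assumes; on images with strong brightness variation, the full SSD or a normalized correlation is the safer choice.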

2.2.2. Pattern Distribution-Based Loss Function for Two-Phase Core Image Reconstruction

The pattern distribution of the porous microstructure in the two-phase core images reflects their morphological characteristics. The loss function based on the pattern distribution measures the difference between the predicted and true values of the pattern distribution in the two images and is defined as follows:

A pattern in an image is defined as data consisting of multiple points captured by a template. Taking a 3 × 3 template as an example, the calculation process for the pattern distribution of a binary image is shown in Figure 2. Specifically, the following steps are involved:

(1) Scan across the image (via convolution) with the template to collect all occurrences of patterns $Pat_i$;

(2) Flatten each pattern to obtain its corresponding binary code and convert it to a decimal number $PatNum_i$;

(3) Count the occurrences of each pattern $Pat_i$ to obtain the number $NUM(Pat_i)$, and normalize it to obtain the probability of each pattern, $P_i$, defined as

$$P_i = \frac{NUM(Pat_i)}{N_{total}}, \tag{14}$$

where $N_{total}$ represents the total number of patterns in an image. With a 3 × 3 template, $2^9 = 512$ possible patterns exist.

Figure 2. Schematic diagram for calculating the distribution of two-phase core image patterns.


As shown in Figure 2, a 3 × 3 template is used to traverse the image in a raster path. The total number of patterns is $N_{total}$. In this example, three patterns are taken. Flattening these patterns yields 000000000, 111111111, and 001110010. These patterns are converted to decimals. For example, the conversion process for the third pattern is $2^0 \times 0 + 2^1 \times 1 + 2^2 \times 0 + 2^3 \times 0 + 2^4 \times 1 + 2^5 \times 1 + 2^6 \times 1 + 2^7 \times 0 + 2^8 \times 0 = 114$. The count of patterns with a decimal value of 114 is then increased by 1. Finally, $NUM(Pat_i)/N_{total}$ is the pattern density of a specific decimal value.
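
The procedure for binary images can be sketched in a few lines of NumPy. This is an illustrative implementation under stated assumptions: the function name `pattern_distribution` is hypothetical, the template slides with a stride of one pixel, and the first pixel of the flattened pattern is treated as the most significant bit, which reproduces the value 114 for the pattern 001110010 in the worked example.

```python
import numpy as np


def pattern_distribution(binary_image, k=3):
    """Probability of each k x k binary pattern in a 2D binary image.

    Returns an array of length 2**(k*k); entry d is the fraction of
    template positions whose flattened pattern has decimal code d.
    """
    img = np.asarray(binary_image, dtype=np.int64)
    # All k x k windows visited in raster order, one row per pattern.
    windows = np.lib.stride_tricks.sliding_window_view(img, (k, k))
    flat = windows.reshape(-1, k * k)
    # Binary-to-decimal weights: first pixel is the most significant bit.
    weights = 2 ** np.arange(k * k - 1, -1, -1)
    codes = flat @ weights
    counts = np.bincount(codes, minlength=2 ** (k * k))
    return counts / counts.sum()
```

A loss between two binary images can then be formed by comparing the two resulting 512-element vectors; the specific distance used for that comparison is left open here.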

2.2.3. Construction of Physics Statistical Descriptor—Gray-Scale Pattern Density Function

The pattern distribution-based loss function for two-phase core images (Section 2.2.2) encodes different patterns and compares their distribution across two binary core images. However, for a gray-scale core image with, for example, a 5 × 5 template, each pixel takes a value in the range 0–255, so the total number of possible patterns ($256^{25}$) is far too large to encode, and a pattern density function cannot be constructed by direct encoding. Although the MSE can be used to measure the difference between two patterns, the cross-correlation function is a more suitable metric for gray-scale patterns. Therefore, a new physics statistical descriptor, the gray-scale pattern density function, is constructed through a cross-correlation function. Its schematic diagram is shown in Figure 3, and the formulas are shown in Equations (15)–(17).

Here, image $B$ is the target system image and $G(A, z)$ is the image generated by the generator. Images $B$ and $G(A, z)$ are scanned, the patterns are traversed, and the cross-correlation function between the patterns is calculated to construct the gray-scale pattern density functions $B_{pattern}(i_1, j_1)$ and $G(A, z)_{pattern}(i_1, j_1)$. On this basis, for each pattern (Pattern A, for example) in the gray-scale pattern density function $B_{pattern}(i_1, j_1)$, if the cross-correlation function between Pattern A and a Pattern x in image $B$ exceeds the threshold Θ, the difference between the probabilities of Pattern A and Pattern x is added to the gray-scale PDF loss for Pattern A.
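
One possible reading of this descriptor is sketched below. It is a loose illustration, not the formulation of Equations (15)–(17): it assumes a normalized cross-correlation between zero-mean patterns, defines the density of a pattern as the fraction of patterns in an image whose correlation with it exceeds the threshold, and compares the densities of the target patterns in the target and generated images. The function names, the stride, and the default threshold `theta=0.8` are all hypothetical choices.

```python
import numpy as np


def extract_patterns(image, k=5, stride=5):
    """Collect k x k gray-scale patterns as zero-mean, unit-norm row vectors."""
    img = np.asarray(image, dtype=np.float64)
    windows = np.lib.stride_tricks.sliding_window_view(img, (k, k))
    patterns = windows[::stride, ::stride].reshape(-1, k * k)
    patterns = patterns - patterns.mean(axis=1, keepdims=True)
    norms = np.linalg.norm(patterns, axis=1, keepdims=True)
    # Constant patterns have zero norm; the floor avoids division by zero.
    return patterns / np.maximum(norms, 1e-12)


def pattern_density(reference_patterns, image_patterns, theta=0.8):
    """Fraction of image patterns whose correlation with each reference exceeds theta."""
    ncc = reference_patterns @ image_patterns.T
    return (ncc > theta).mean(axis=1)


def grayscale_pattern_density_loss(target, generated, k=5, stride=5, theta=0.8):
    """Mean absolute difference between target-pattern densities in both images."""
    target_patterns = extract_patterns(target, k, stride)
    generated_patterns = extract_patterns(generated, k, stride)
    density_in_target = pattern_density(target_patterns, target_patterns, theta)
    density_in_generated = pattern_density(target_patterns, generated_patterns, theta)
    return float(np.mean(np.abs(density_in_target - density_in_generated)))
```

Thresholding the correlation groups similar gray-scale patterns together, which sidesteps the need to enumerate every possible pattern code as in the binary case.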

2.3. PSDI-DISTS Metric

DISTS unifies structure and texture similarities, is robust to mild geometric distortions, and performs well in texture-relevant tasks. However, the gray-scale core image reconstruction task requires not only that the generated image has texture similarity with the target image but also that it can subsequently be used to simulate physical properties such as seepage characteristics. Therefore, the addition of a physics statistical descriptor (PSD) is crucial. Moreover, because a 3D gray-scale core structure must be reconstructed, the similarity evaluation must be performed in the x, y, and z directions. Accordingly, this study proposes and constructs a PSDI-DISTS metric. Two-dimensional slices are extracted from $I_{3D}$ along the z/y/x-axis independently (i.e., $I_{2D}^{xy} \in \mathbb{R}^{h_H \times w_H}$, $I_{2D}^{xz} \in \mathbb{R}^{h_H \times d_H}$, and $I_{2D}^{yz} \in \mathbb{R}^{w_H \times d_H}$). The final metric is formed by combining the three directions, and its formula is shown in Equations (18)–(21). The schematic diagram of the PSDI-DISTS metric is shown in Figure 4.
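
The three-direction evaluation can be sketched as follows. This is a minimal illustration under stated assumptions: the volume is indexed as (z, y, x), the three directions are weighted equally (the actual combination is given by Equations (18)–(21) and is not reproduced here), and `metric_2d` is a placeholder for any 2D similarity or distance function, such as a DISTS-based loss. The function names are hypothetical.

```python
import numpy as np


def directional_slices(volume):
    """Split a 3D volume indexed as (z, y, x) into xy, xz, and yz slice stacks."""
    xy = [volume[k, :, :] for k in range(volume.shape[0])]
    xz = [volume[:, j, :] for j in range(volume.shape[1])]
    yz = [volume[:, :, i] for i in range(volume.shape[2])]
    return xy, xz, yz


def three_direction_score(volume_a, volume_b, metric_2d):
    """Average a 2D metric over corresponding slices in all three directions.

    ASSUMPTION: equal weighting of the xy, xz, and yz directions.
    """
    direction_means = []
    for slices_a, slices_b in zip(directional_slices(volume_a),
                                  directional_slices(volume_b)):
        values = [metric_2d(a, b) for a, b in zip(slices_a, slices_b)]
        direction_means.append(np.mean(values))
    return float(np.mean(direction_means))
```

For example, passing `lambda a, b: np.mean(np.abs(a - b))` as `metric_2d` yields a simple slice-wise mean absolute difference; substituting a texture-aware metric gives a direction-averaged texture score.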

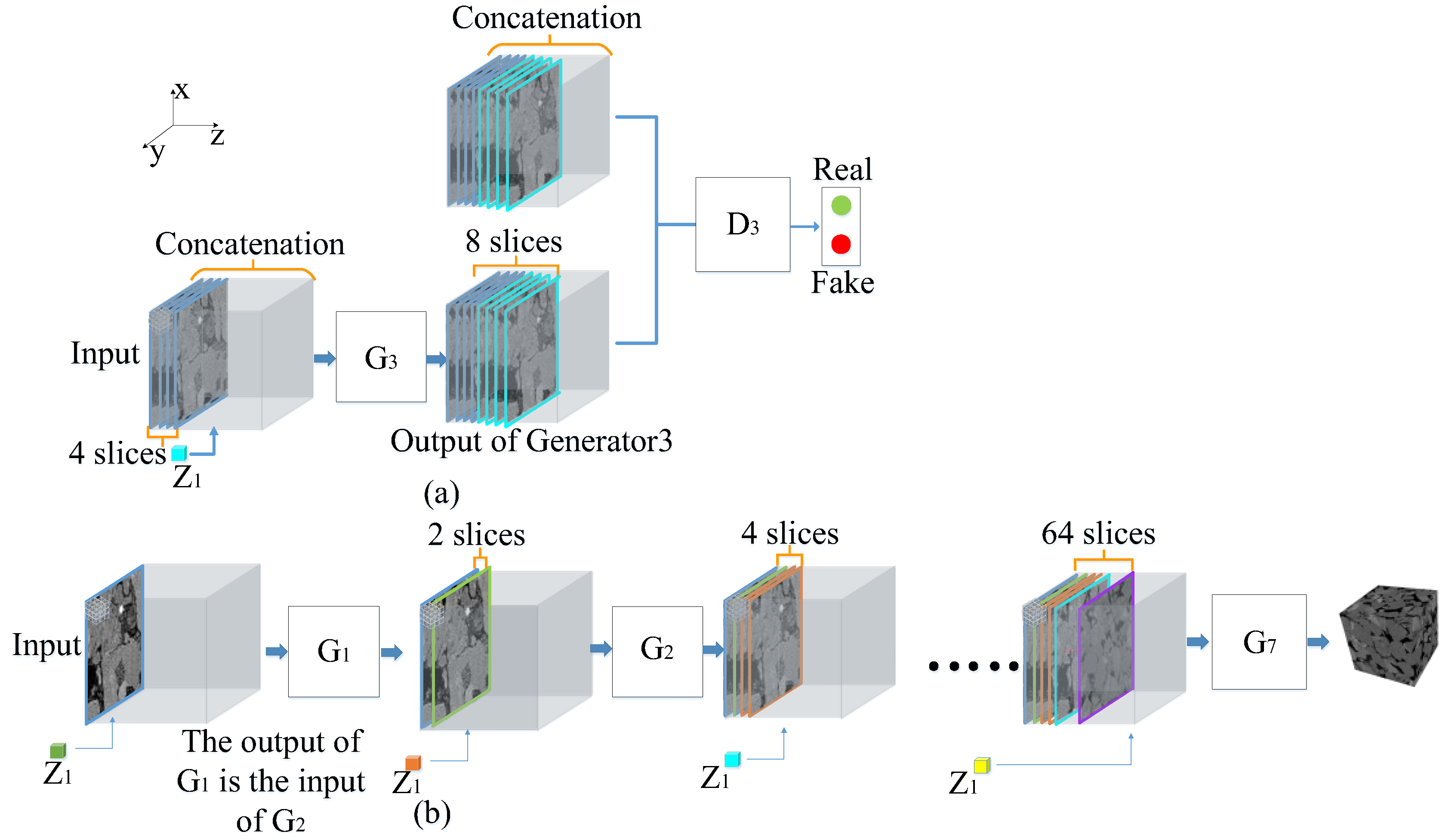

3. TFCGAN for Three-Dimensional Gray-Scale Core Image Reconstruction

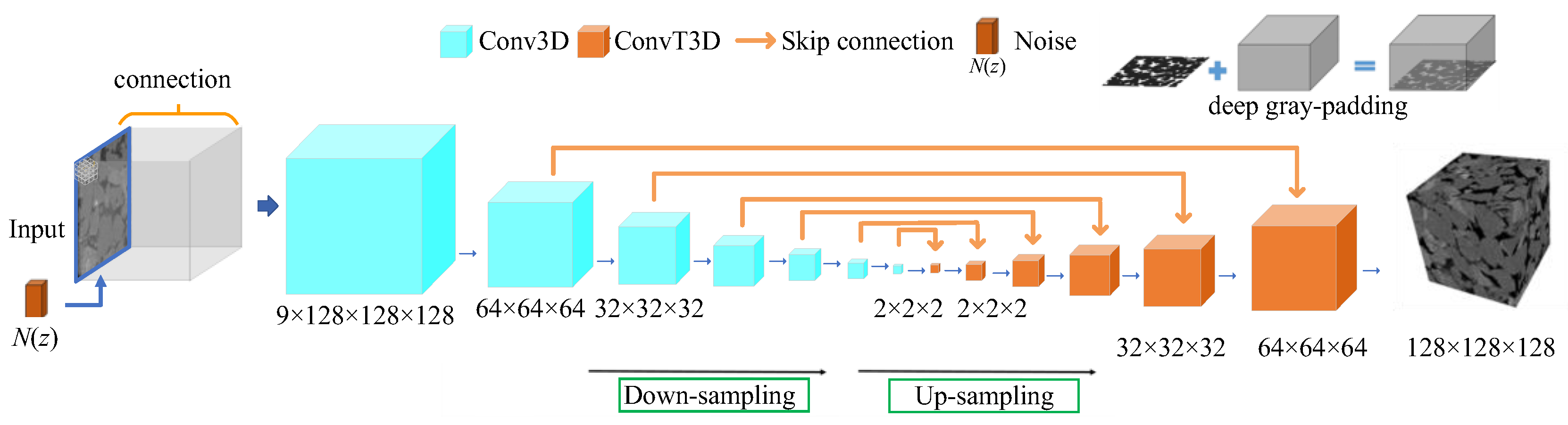

3.1. Main Architecture of the TFCGAN

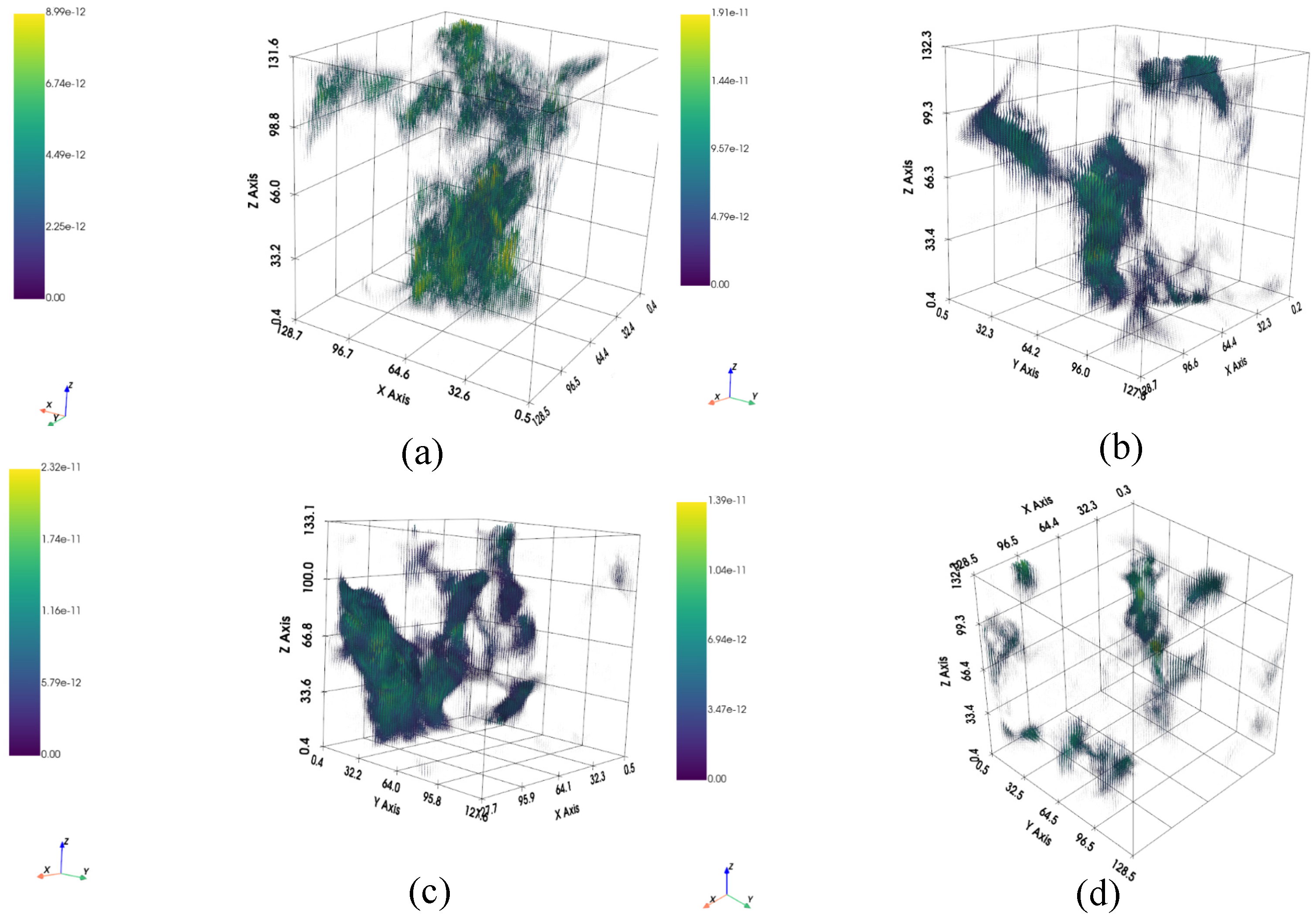

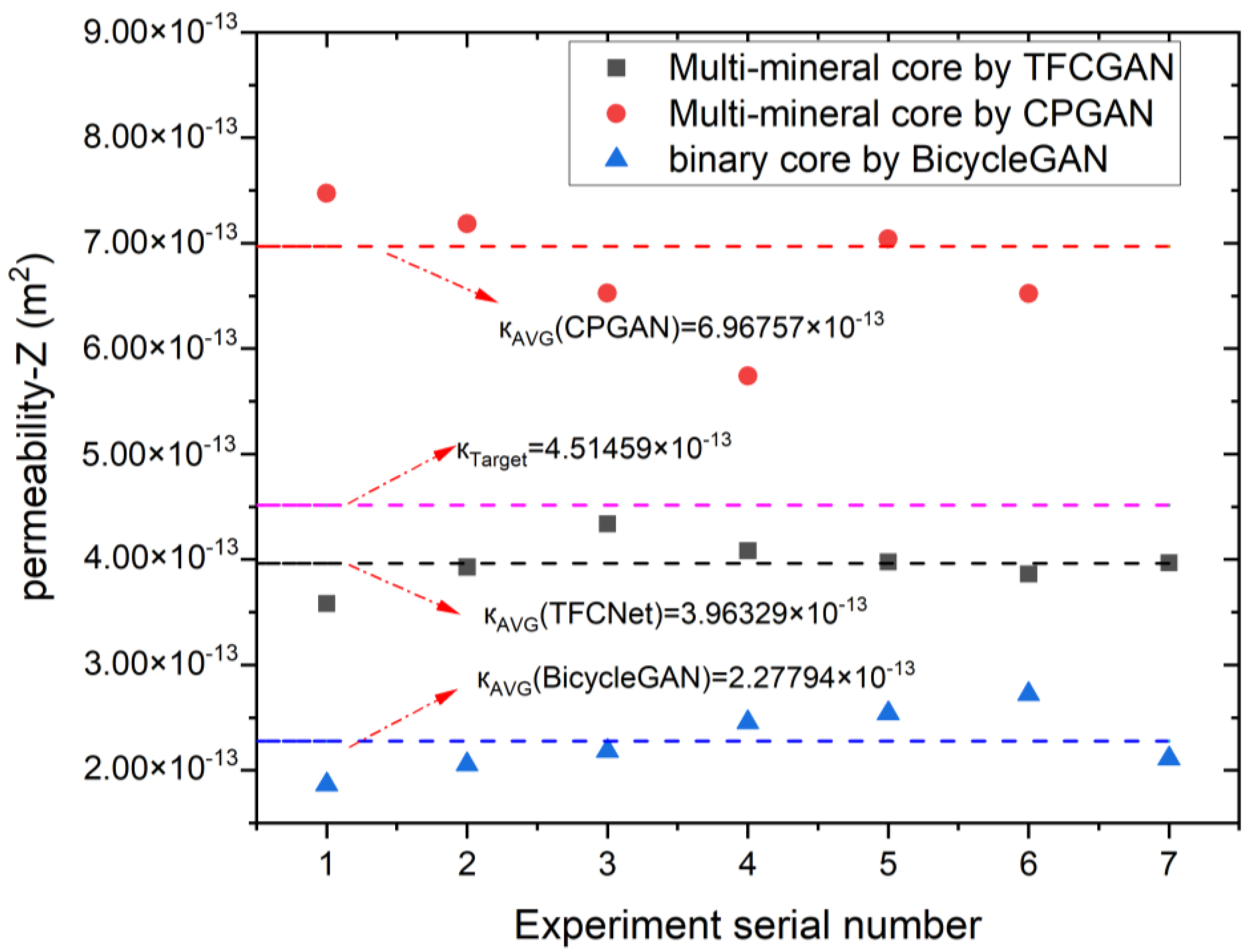

Figure 5 shows the network architecture of the generator G used to reconstruct a $128^3$-size image in this study. The input is Gaussian noise N(z) and a 2D image, and the output is a 3D structure. As shown in the figure, the network architecture is based on the classic U-Net. Notably, to obtain a faster inference speed, the generation network in the previous algorithm used 2D convolution and 2D transposed convolution, with a convolution layer that fuses channel information added as the last layer. In this study, 3D convolution (Conv3D) and 3D transposed convolution (ConvT3D) were used instead to better capture 3D spatial information. However, 3D operations make the network more difficult to train and easily exhaust GPU memory. To address this problem, this study omitted the final channel fusion process, because 3D (transposed) convolution operates in three dimensions and can already fuse the information between channels effectively. In addition, the image input into generator G was downsampled to a size of 2 × 2 to reduce the number of network parameters, save memory, and accelerate network convergence. This study used a GeForce GTX 1080 Ti graphics card (11 GB of GPU memory, NVIDIA Corporation, Santa Clara, CA, USA). In early testing, with a batch size of 1, training on 128 × 128 size samples required approximately 7 GB of GPU memory. Downsampling images to 2 × 2 in generator G had a minimal impact on accuracy while reducing the number of network parameters by approximately 30%.

This study used a deep-gray padding technique when processing the input image, padding it to the size of the target image. Thus, the network learned the mapping between a 3D input and a 3D output, which is easier than learning the original mapping from 2D to 3D images. This is because, after deep-gray padding, the input and target are two 3D bodies of equal size, which is more convenient when using 3D convolution for feature extraction. The principle of deep-gray padding is illustrated in Figure 5.

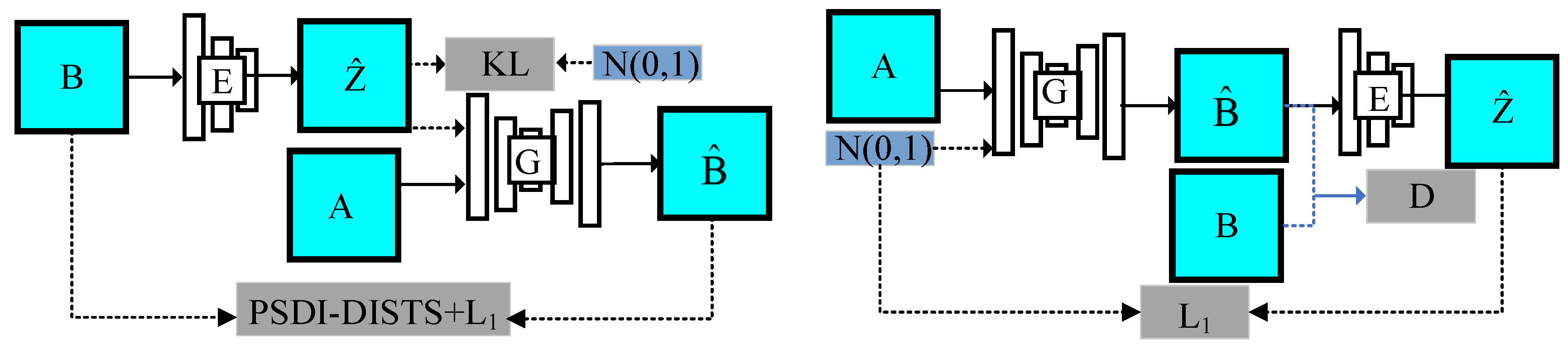

The proposed model is illustrated in Figure 6. As shown in the figure, the algorithm uses the classic BicycleGAN model [40] as its basic framework. The core idea is to explicitly establish a connection between the noise and the target. Compared to the Pix2Pix model [41], the BicycleGAN model introduces an additional encoder. Its two subnetworks, cVAE-GAN and cLR-GAN, serve different functions, as described below.

The cVAE-GAN model can be regarded as a reconstruction of target B. The entire network implements an autoencoder function; that is, target B is the input of cVAE-GAN, and the output is an approximate or equivalent reconstruction value ($\hat{B}$). The cLR-GAN model realizes the reconstruction of noise N(z). That is, noise N(z) is the input of cLR-GAN, and the approximate value ($\hat{z}$) is the output of the network. The network consists of a generator G and a discriminator D. During the training process, generator G receives input A and noise N(z) (usually Gaussian noise) and outputs a predicted value $\hat{B}$. The discriminator evaluates the quality of the predicted value; that is, the difference between $\hat{B}$ and B is calculated using the loss functions PSDI-DISTS and L1.

3.2. Balanced Training Strategy Integrating L1 and PSDI-DISTS Losses

By introducing the PSDI-DISTS loss, the textural similarity between the reconstructed 3D structure and target can be maintained. However, experiments showed that if only the PSDI-DISTS loss is used, the generator becomes lazy.

In this case, despite the 2D gray-scale core image chosen as the input, the generator tended to retrieve a known 3D structure from the training set as the reconstruction result. This is because a structure taken directly from the training set also satisfies the textural and physical property similarities required by the PSDI-DISTS loss. Therefore, a balanced training strategy integrating L1 and PSDI-DISTS losses was introduced. In the early stage of training, the L1 loss was given a larger weight so that results copied from the training set could not deceive the discriminator. Thereafter, the weight of the L1 loss was reduced to a level equivalent to that of PSDI-DISTS. For TFCGAN, every N epochs, the weight of the L1 loss was decayed according to a decay factor, so that the new L1 loss weight was obtained from the old L1 loss weight. In the initial state, the three associated parameters were set to 100, 10, and 10. The final loss and weight decay are given in Equations (22) and (23).

In summary, L1 loss describes the sum of the point-to-point pixel value differences between the generated structure and the target system. Initially, the L1 loss is heavily weighted. At this stage, if the generator directly takes samples from the training set, even though these samples have great texture and physical property similarity with the target system, the sum of the pixel value differences is large due to the different structures, making it difficult to deceive the discriminator. This forces the generator to learn realistic textural and physical statistical properties rather than directly taking samples from the training set. Later on in training, the L1 loss weight decays to a degree comparable to PSDI-DISTS, jointly constraining model learning.

3.3. Dataset Establishment and Parameter Settings

To test the reconstruction results, we used real core CT images for verification. The test objects were core samples from the supplementary materials of the paper “Segmentation of digital rock images using deep convolutional autoencoder networks” by Sadegh Karimpouli and Pejman Tahmasebi (a 3D μCT image of Berea sandstone with a size of 1024 × 1024 × 1024 voxels and a resolution of 0.74 μm). From this 3D μCT image, we randomly cropped 3D cubes of 128 × 128 × 128 voxels to build a dataset. A total of 600 samples were created, of which 70% were used as the training set and 30% as the test set. Each sample was composed of a 2D image (input) and a 3D structure (target).

The key algorithm parameters include the noise reconstruction loss weight $\lambda_{latent}$, the discriminator loss weight $\lambda_{dis}$, the learning rates for generator G and discriminator D, the batch size, and the number of iterations (epochs). Experiments showed that training on heterogeneous images is unstable: the network collapses easily in the later stage of the iterative process and does not converge. To address this problem, this study adopted a learning rate decay strategy; that is, the learning rate was decayed by the decay factor γ every N epochs (steps), so $lr_{new} = \gamma\, lr_{old}$. The parameter settings are listed in Table 1. To fully verify the stability and accuracy of the algorithm, we reconstructed the same image 20 times and analyzed the visual contrast and statistical average.
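
The step decay rule described above can be written as a small helper. This is a sketch only: the function name `step_decay` is hypothetical, and the numeric values in the example are placeholders rather than the settings in Table 1.

```python
def step_decay(initial_value, gamma, step_size, epoch):
    """Value after applying `new = gamma * old` once every `step_size` epochs.

    initial_value: value at epoch 0 (e.g., a learning rate)
    gamma:         decay factor applied at each step
    step_size:     number of epochs N between two decays
    epoch:         current epoch index, starting from 0
    """
    if step_size <= 0:
        raise ValueError("step_size must be positive")
    return initial_value * gamma ** (epoch // step_size)


# Placeholder values for illustration only (not taken from Table 1).
schedule = [step_decay(0.1, 0.5, 10, epoch) for epoch in (0, 9, 10, 25)]
print(schedule)
```

The same rule can in principle be applied to any scalar training parameter that is reduced in steps, which is how the text describes the decay of the L1 loss weight in Section 3.2.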

The experiments were run on an Intel i7-6700K system with 16 GB DDR3 RAM, an Nvidia GTX 1080 GPU, and the Ubuntu 16.04 operating system. A core image of size $128^3$ voxels was reconstructed in 0.5 s. The reconstruction time increased with the size of the network input data. For a core image of size $n^3$, the amount of data is $n^3 = 128^3 \times (n/128)^3$. Thus, assuming that the time scales with the amount of data, the reconstruction time would be approximately $(n/128)^3$ times that of the $128^3$-size core image, that is, $(n/128)^3 \times 0.5$ s.
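
The scaling estimate above amounts to a one-line calculation. The helper below is an illustrative sketch with a hypothetical name; it simply evaluates the cubic scaling stated in the text and should be read as an extrapolation, not a measurement.

```python
def estimated_reconstruction_time(n, base_size=128, base_time_s=0.5):
    """Estimated time in seconds to reconstruct an n^3 core image.

    Assumes the time grows in proportion to the number of voxels,
    relative to the reported 0.5 s for a 128^3 image.
    """
    return (n / base_size) ** 3 * base_time_s


for size in (128, 256, 512):
    print(size, estimated_reconstruction_time(size))
```

Doubling the edge length therefore multiplies the estimated time by eight, so a $256^3$ image would take about 4 s and a $512^3$ image about 32 s under this assumption.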

The Pix2Pix model suffers from a certain degree of mode collapse. Mode collapse refers to the network’s limited generative capabilities, often only generating a small number of modes (or even a single mode). Specifically, when the input remains unchanged and only the input noise is varied, the output remains almost unchanged. Essentially, this is because noise N(z) is only added to the input of the network, without explicitly establishing a connection between the noise N(z) and the output.

The TFCGAN proposed in this paper uses BicycleGAN as its basic architecture. Its main purpose is to explicitly establish a connection between noise and target values. In the cLR-GAN model of BicycleGAN, the input A and Gaussian noise N(z) are first fed into the generator to obtain the predicted value $\hat{B}$. Then, $\hat{B}$ is fed into the encoder E to obtain the noise distribution $\hat{z}$. The loss function L1 measures the difference between the noise N(z) and $\hat{z}$. This makes TFCGAN training more stable. In the experiments, the generator and discriminator losses of TFCGAN reached a stable state after 120 epochs.

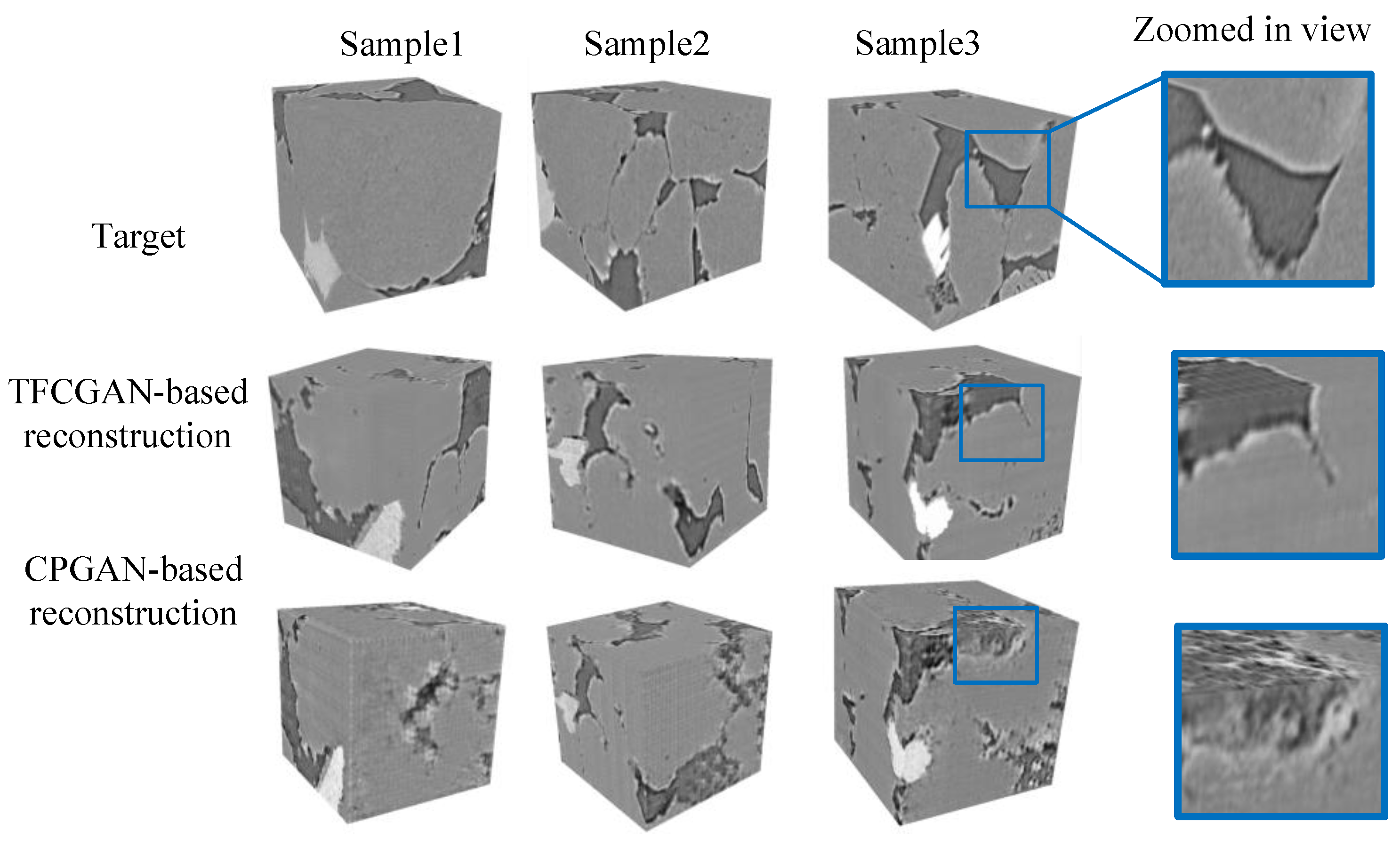

5. Conclusions

We designed the gray-scale pattern density function through the cross-correlation function and integrated it with the DISTS assessment metric to propose a new physics statistical descriptor-informed deep image structure and texture similarity (PSDI-DISTS) metric. In addition, this study employed the PSDI-DISTS metric as a loss function of the GAN and proposed the TFCGAN model for the reconstruction of gray-scale core images. Reconstruction with the TFCGAN model and seepage simulation showed that the reconstructed structures maintain the texture characteristics of the target system and exhibit similar seepage characteristics, demonstrating the effectiveness of the proposed algorithm.

The algorithm described in this paper focuses on digital core 3D reconstruction, that is, reconstructing a 3D structure from a single 2D gray-scale core slice image. The reconstructed 3D structure is statistically and morphologically similar to the target 3D structure and can be used to analyze physical properties such as seepage, thereby guiding real-world petroleum geology research. Note that the resolution of the input 2D image determines the resolution of the reconstructed 3D structure; 3D reconstruction itself does not improve resolution. Super-resolution reconstruction of 2D or 3D digital core images is a separate research area. For related research, readers can refer to [56].

Although the TFCGAN algorithm effectively reconstructed the gray-scale core images, some problems remain, for example, the combination of neural networks and conventional methods. Significant progress has been made in recent years in terms of the accuracy of the 3D image reconstruction of cores. However, progress in terms of reconstruction speed has been slow, which could be because traditional reconstruction algorithms achieve reconstructions based on iterative processes. Although 3D reconstruction methods based on GPU or multithreading exist, there has been no change to the iterative reconstruction mechanism. Deep learning technology has the advantages of automatic feature extraction and faster inference speed. Recently, it has played an important role in image processing, video classification, and other fields. Therefore, the potential of deep learning to replace the iterative reconstruction mechanism in traditional algorithms and improve the reconstruction speed is worth considering.

In addition, although neural network-based approaches play an important role in various fields, the development of traditional methods should not be neglected in favor of neural networks alone. Neural network methods are not omnipotent. If prior information such as core porosity, pattern, shape distribution, and interlayer constraint information is incorporated into deep learning models as constraints or loss functions, and if features described in traditional methods, such as a set of patterns in multipoint geostatistics, are utilized, the quality of reconstruction can be further improved. Therefore, for other specific problems, such as texture synthesis, image restoration, and super-resolution reconstruction, combining the different advantages of traditional and neural network methods is an important research direction.

Few-shot learning: The high cost of core scanning and the unique characteristics of some cores can result in a small number of collected samples. This poses challenges for learning-based methods, especially neural network methods, and further complicates subsequent computation and analysis. Transfer learning can be used to address this issue. The core idea behind transfer learning is to first train the neural network on a larger dataset, allowing it to learn a general way of extracting features. Then, based on the specific problem being solved, targeted fine-tuning can be performed using certain prior information. Therefore, how to pre-train a neural network so that it can perform few-shot learning on a specific problem while incorporating problem-specific prior information is a pressing issue.

On the other hand, neural networks offer significant advantages in terms of reconstruction time. However, these methods typically have a large number of parameters and high hardware requirements (especially GPU memory). Therefore, the study of suitable methods based on the characteristics of core images and the design of lightweight models are of vital importance for the application of neural networks. Block reconstruction and various model compression methods [57,58,59,60] are among the areas currently worth paying attention to.

Deep learning techniques such as the GAN can fit complex functions and high-dimensional spatial data distributions and therefore have been widely used in the field of 3D digital core reconstruction. The network designed in this paper can reconstruct cores and use the reconstruction results to simulate core flow characteristics. However, it should be recognized that the internal mechanisms of deep learning techniques such as the GAN are like a black box and their physical interpretability remains an issue worth exploring. The authors and other researchers in the field will continue to study related issues.