A Survey of Multi-Label Text Classification Under Few-Shot Scenarios

Abstract

1. Introduction

- (1) Conduct a comprehensive literature review to systematically organize and summarize recent advances in multi-label text classification under few-shot scenarios, providing valuable references for related research.

- (2) Propose a rigorous classification framework to systematically categorize and structure existing studies.

- (3) Identify the key challenges and methodological limitations faced by current approaches to multi-label text classification under few-shot scenarios.

- (4) Review representative tasks in three specific application scenarios and provide a comparative analysis of the corresponding algorithms.

- (5) Summarize the current research challenges in this field and discuss potential directions for future research.

2. Modeling and Current Research Status of Multi-Label Text Classification Under Few-Shot Scenarios

2.1. Mathematical Description

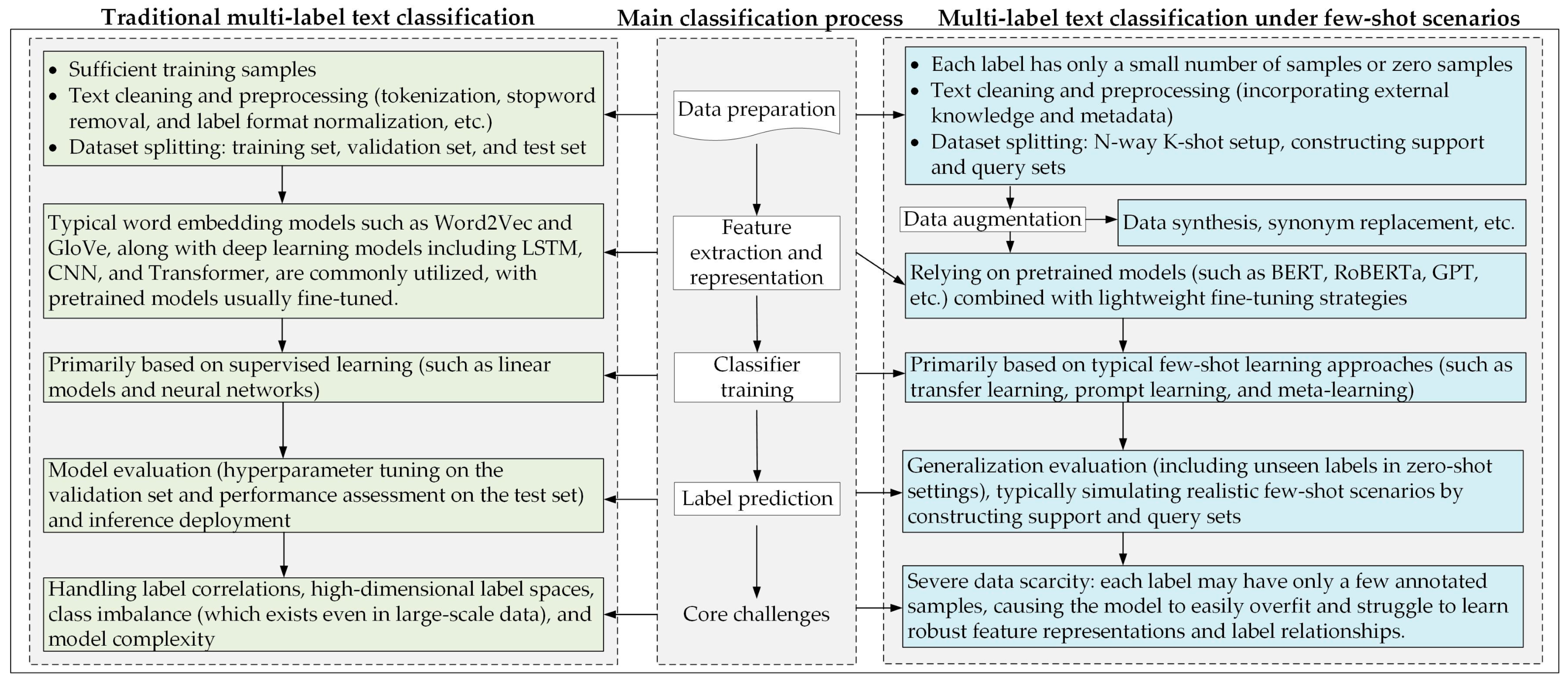

2.2. Differences Between Conventional Multi-Label Text Classification and Multi-Label Text Classification Under Few-Shot Scenarios

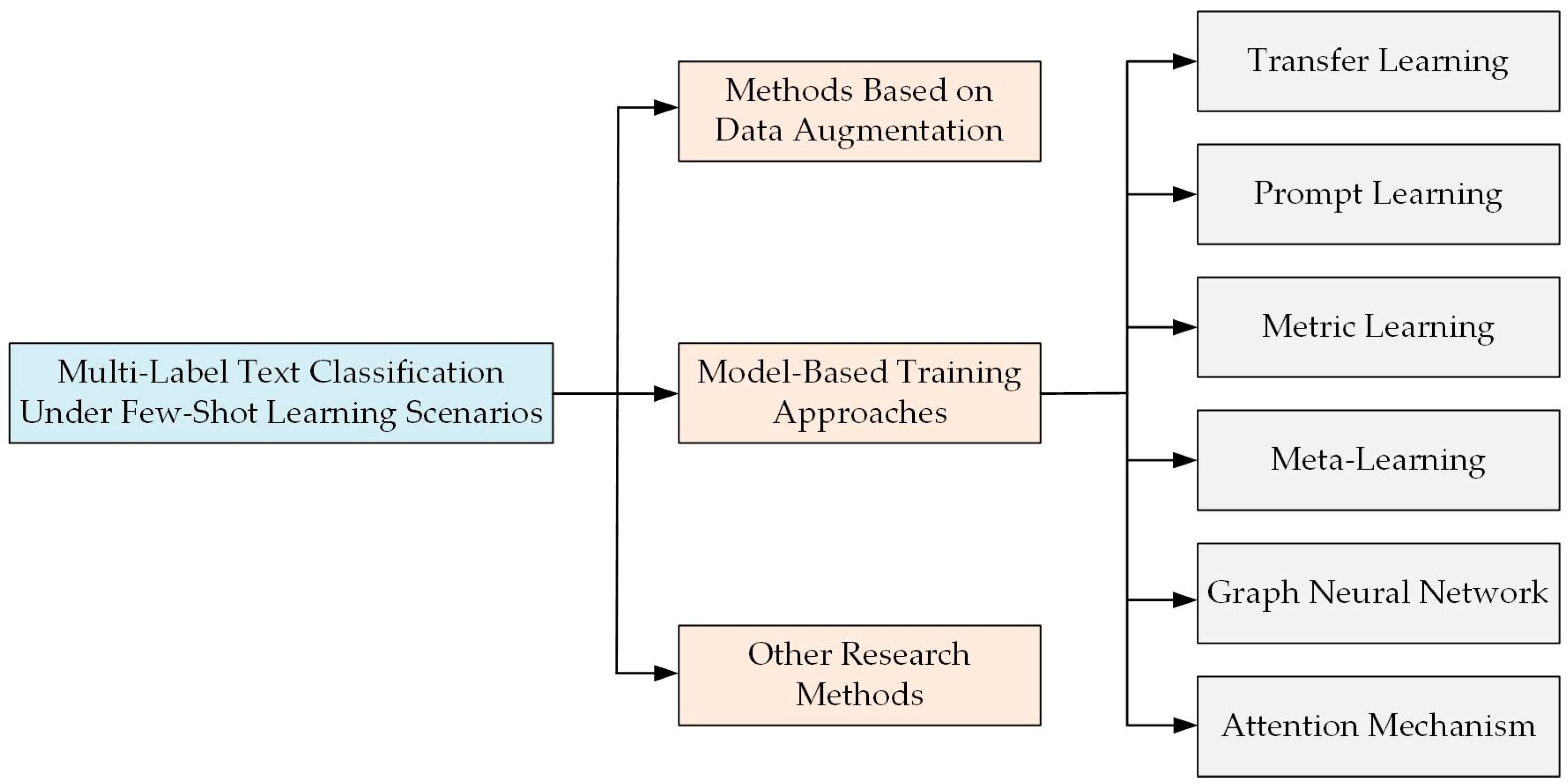

3. Technical Approaches

3.1. Methods Based on Data Augmentation

3.2. Model-Based Training Approaches

3.2.1. Transfer Learning-Based Approaches

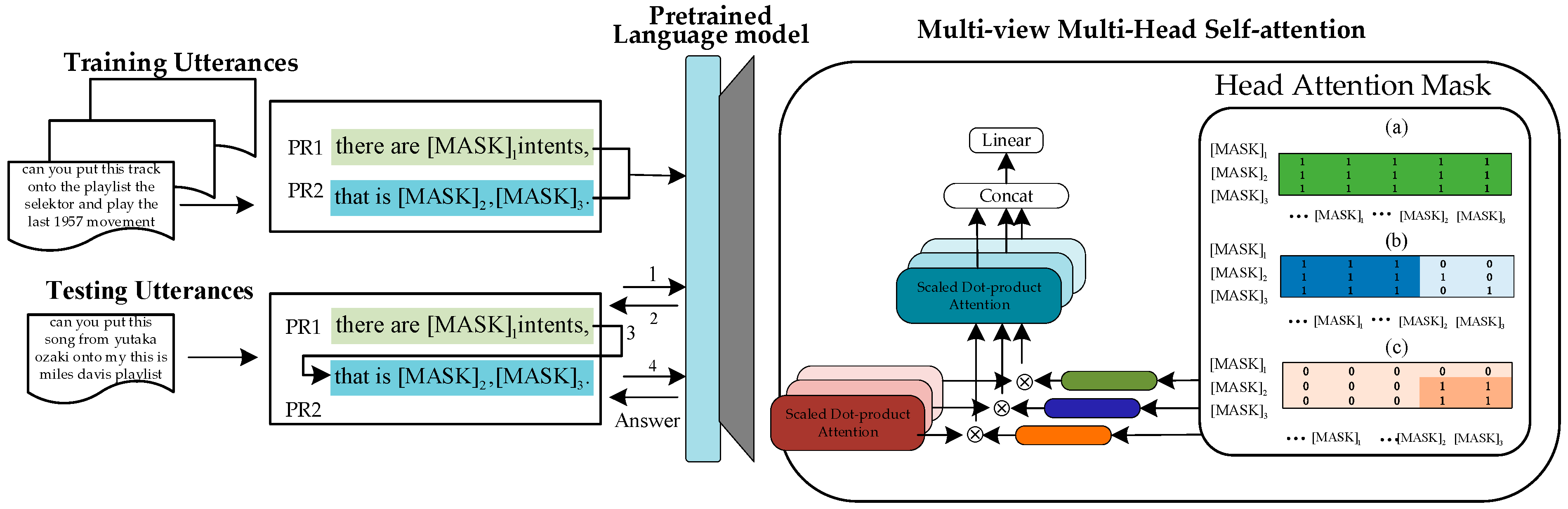

3.2.2. Prompt Learning-Based Approaches

3.2.3. Metric Learning-Based Approaches

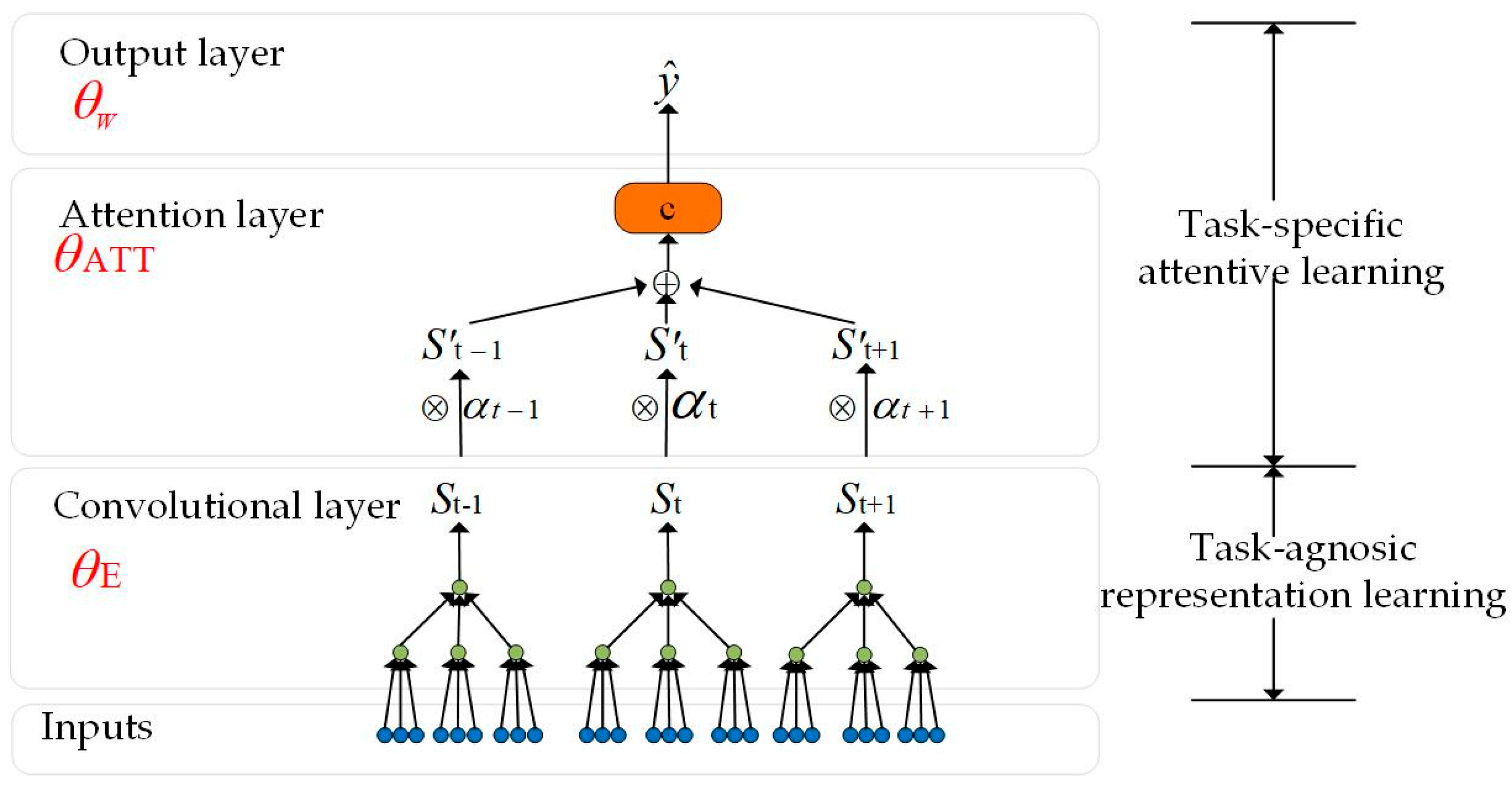

3.2.4. Meta-Learning-Based Approaches

3.2.5. Graph Neural Network-Based Approaches

3.2.6. Attention Mechanism-Based Approaches

3.3. Other Research Approaches

3.4. Multi-Model Performance Evaluation Under Similar Conditions

4. Scenario-Specific Studies

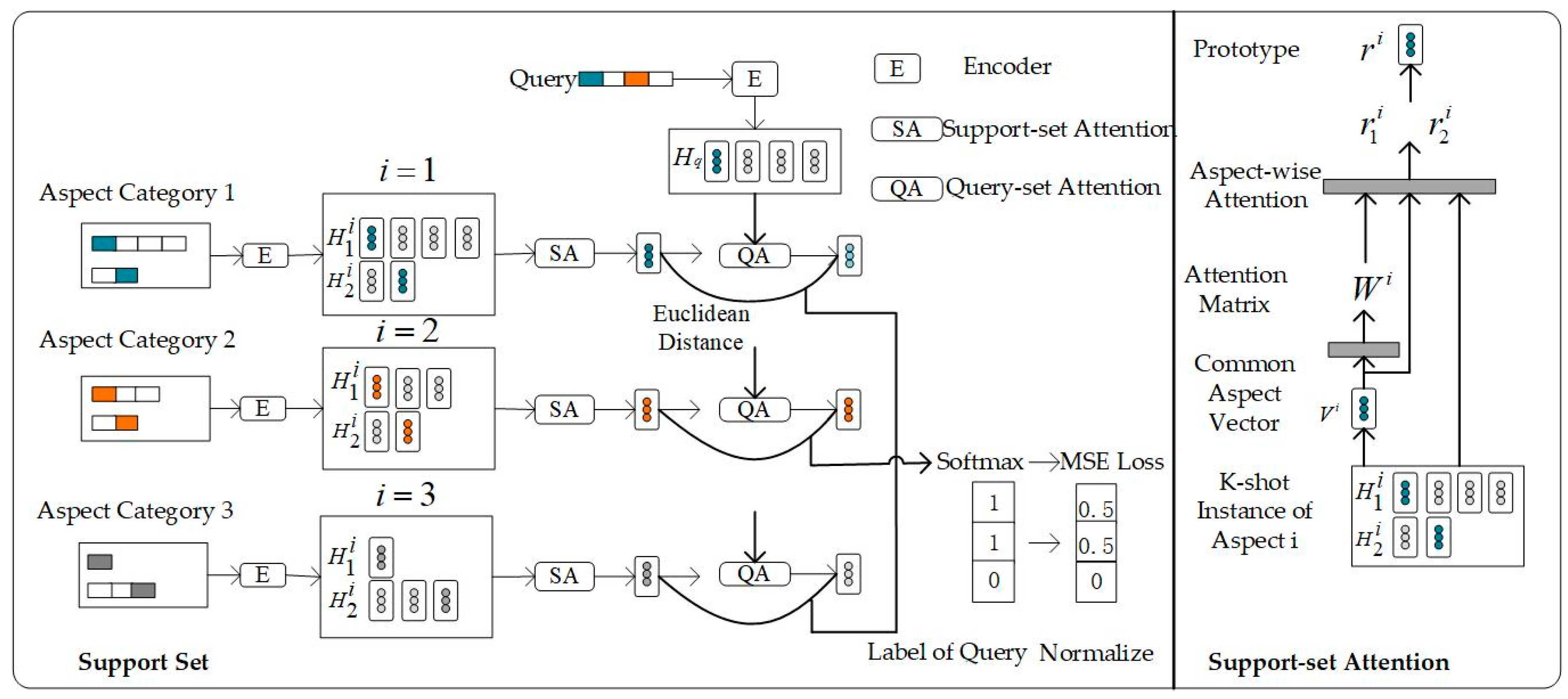

4.1. Few-Shot Multi-Label Aspect Category Detection

4.2. Few-Shot Multi-Label Intent Detection

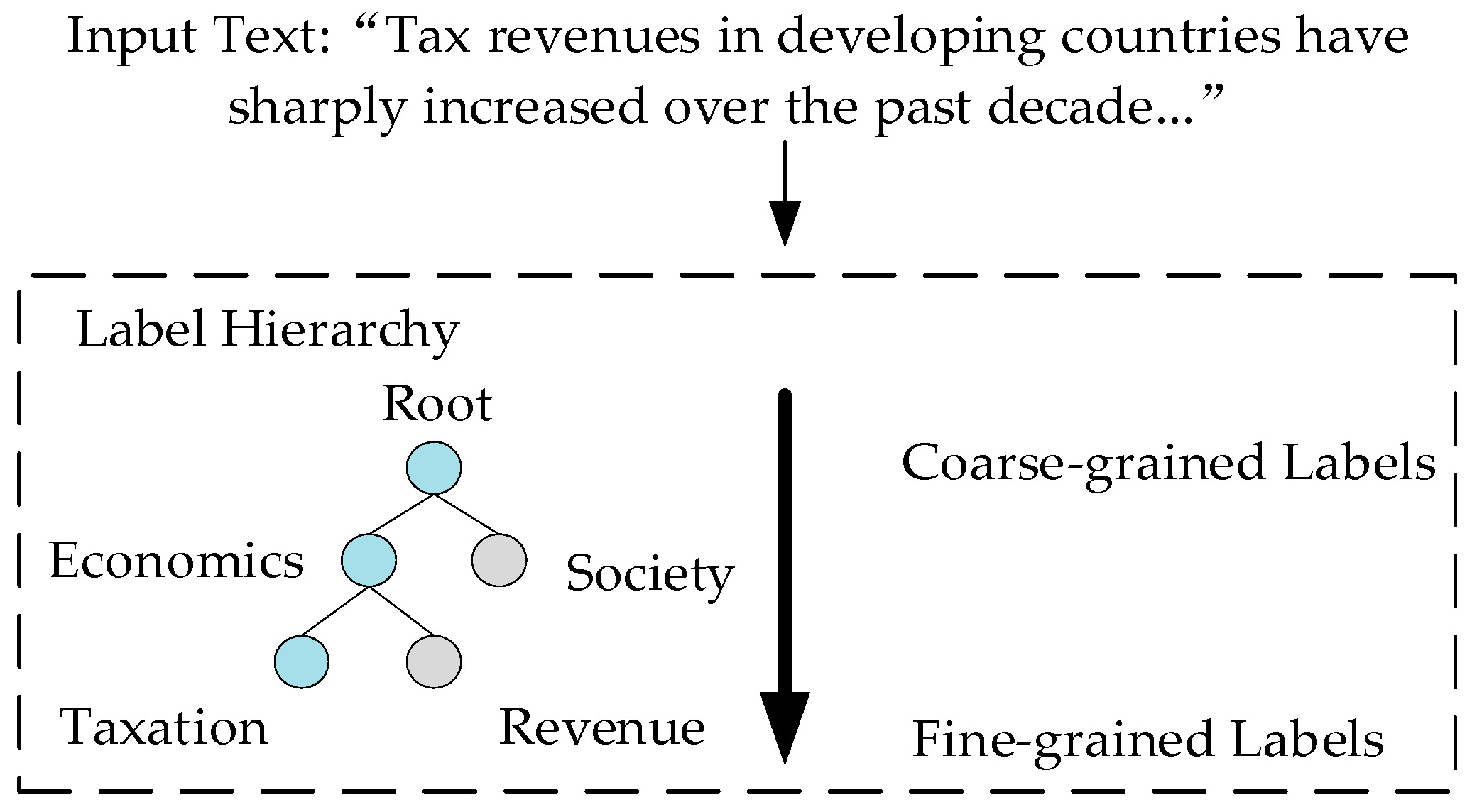

4.3. Few-Shot Multi-Label Hierarchical Text Classification

5. Commonly Used Datasets

6. Commonly Used Evaluation Metrics

6.1. Instance-Based Evaluation Metrics

- (1) Accuracy

- (2) Precision

- (3) Recall

- (4) F1-Score

- (5) Top-k Precision (P@k)

- (6) Top-k Normalized Discounted Cumulative Gain (nDCG@k)

- (7) Top-k Propensity-Scored Precision (PSP@k)

- (8) Top-k Recall (R@k)
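
The top-k metrics listed above can be sketched in a few lines of Python. This is a minimal illustration under the definitions commonly used in extreme multi-label classification, not the evaluation code of any surveyed paper. The function names, the toy labels and scores, and the `propensities` input are all hypothetical. For one instance with binary ground truth and predicted label scores, P@k is the fraction of the k highest-scoring labels that are relevant, R@k divides the same hit count by the number of relevant labels, nDCG@k discounts each hit by log2(rank + 1) and normalizes by the best achievable value, and PSP@k weights each hit by the inverse of a label propensity that is assumed to be given.

```python
import math


def top_k(scores, k):
    """Indices of the k highest-scoring labels, best first (ties keep index order)."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    return order[:k]


def precision_at_k(y_true, scores, k):
    """P@k: share of the top-k predicted labels that are relevant."""
    return sum(y_true[i] for i in top_k(scores, k)) / k


def recall_at_k(y_true, scores, k):
    """R@k: share of the relevant labels that appear in the top-k predictions."""
    n_relevant = sum(y_true)
    if n_relevant == 0:
        return 0.0  # assumption: an instance without relevant labels scores 0
    return sum(y_true[i] for i in top_k(scores, k)) / n_relevant


def ndcg_at_k(y_true, scores, k):
    """nDCG@k: discounted gain of the top-k ranking over the ideal ranking."""
    top = top_k(scores, k)
    dcg = sum(y_true[i] / math.log2(rank + 2) for rank, i in enumerate(top))
    ideal_hits = min(k, sum(y_true))
    if ideal_hits == 0:
        return 0.0  # assumption: same convention as recall_at_k
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg


def psp_at_k(y_true, scores, propensities, k):
    """PSP@k (unnormalized): hits on rare labels count more via 1 / propensity."""
    return sum(y_true[i] / propensities[i] for i in top_k(scores, k)) / k


if __name__ == "__main__":
    # Toy instance with five labels; labels 0, 2 and 4 are relevant.
    y = [1, 0, 1, 0, 1]
    s = [0.9, 0.8, 0.7, 0.1, 0.2]
    p = [0.9, 0.8, 0.5, 0.4, 0.2]  # hypothetical label propensities
    print(precision_at_k(y, s, 3), recall_at_k(y, s, 3))
    print(ndcg_at_k(y, s, 3), psp_at_k(y, s, p, 3))
```

Corpus-level scores are the means of these per-instance values over the test set.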

6.2. Label-Based Evaluation Metrics

- (1) Micro-F1

- (2) Macro-F1

- (3) AUC
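
The difference between Micro-F1 and Macro-F1 matters most under long-tailed label distributions, and a small sketch makes it concrete. The code below is an illustrative assumption rather than the evaluation script of any surveyed work: the helper names and the toy label matrices are hypothetical, and a label with no true and no predicted positives is assigned an F1 of 0 by convention. Micro-F1 pools true positives, false positives, and false negatives over all labels before computing F1, so frequent labels dominate; Macro-F1 computes F1 per label and averages, so every tail label weighs as much as a head label.

```python
def f1_from_counts(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN); defined as 0 when the denominator is 0."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def per_label_counts(Y_true, Y_pred):
    """Return one (tp, fp, fn) tuple per label column of the binary matrices."""
    n_labels = len(Y_true[0])
    counts = []
    for j in range(n_labels):
        tp = sum(1 for t, p in zip(Y_true, Y_pred) if t[j] == 1 and p[j] == 1)
        fp = sum(1 for t, p in zip(Y_true, Y_pred) if t[j] == 0 and p[j] == 1)
        fn = sum(1 for t, p in zip(Y_true, Y_pred) if t[j] == 1 and p[j] == 0)
        counts.append((tp, fp, fn))
    return counts


def micro_f1(Y_true, Y_pred):
    """Pool the counts over all labels, then compute a single F1."""
    counts = per_label_counts(Y_true, Y_pred)
    tp = sum(c[0] for c in counts)
    fp = sum(c[1] for c in counts)
    fn = sum(c[2] for c in counts)
    return f1_from_counts(tp, fp, fn)


def macro_f1(Y_true, Y_pred):
    """Compute F1 per label, then take the unweighted mean."""
    counts = per_label_counts(Y_true, Y_pred)
    return sum(f1_from_counts(*c) for c in counts) / len(counts)


if __name__ == "__main__":
    # Toy data: label 0 is a head label, label 2 is a tail label that is missed.
    Y_true = [[1, 0, 0], [1, 1, 0], [1, 0, 1], [1, 1, 0]]
    Y_pred = [[1, 0, 0], [1, 1, 0], [1, 0, 0], [1, 0, 0]]
    print("Micro-F1:", round(micro_f1(Y_true, Y_pred), 4))
    print("Macro-F1:", round(macro_f1(Y_true, Y_pred), 4))
```

On this toy data the missed tail label lowers Macro-F1 much more than Micro-F1, which is why Macro-F1 is the more sensitive indicator of few-shot label performance.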

6.3. Summary

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Song, Y.; Wang, T.; Cai, P.; Mondal, S.K.; Sahoo, J.P. A comprehensive survey of few-shot learning: Evolution, applications, challenges, and opportunities. ACM Comput. Surv. 2023, 55, 1–40.

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. 2020, 53, 1–34.

- Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-learning in neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169.

- Gao, T.; Fisch, A.; Chen, D. Making pre-trained language models better few-shot learners. arXiv 2020, arXiv:2012.15723.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 1126–1135.

- Falis, M.; Dong, H.; Birch, A.; Alex, B. Horses to zebras: Ontology-guided data augmentation and synthesis for ICD-9 coding. In Proceedings of the 21st Workshop on Biomedical Language Processing, Dublin, Ireland, 26 May 2022; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 389–401.

- Zhou, Y.; Qin, Y.; Huang, R.; Chen, Y.; Lin, C.; Zhou, Y. Self-training improves few-shot learning in legal artificial intelligence tasks. In Artificial Intelligence and Law; Springer Nature: Berlin, Germany, 2024; pp. 1–17.

- Zhang, D.; Li, T.; Zhang, H.; Yin, B. On data augmentation for extreme multi-label classification. arXiv 2020, arXiv:2009.10778.

- Xu, P.; Xiao, L.; Liu, B.; Lu, S.; Jing, L.; Yu, J. Label-specific feature augmentation for long-tailed multi-label text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 10602–10610.

- Xu, P.; Song, M.; Li, Z.; Lu, S.; Jing, L.; Yu, J. Taming Prompt-Based Data Augmentation for Long-Tailed Extreme Multi-Label Text Classification. In Proceedings of the ICASSP 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 9981–9985.

- Zhao, Z.; Alzubaidi, L.; Zhang, J.; Duan, Y.; Gu, Y. A comparison review of transfer learning and self-supervised learning: Definitions, applications, advantages and limitations. Expert Syst. Appl. 2024, 242, 122807.

- Rios, A.; Kavuluru, R. Neural transfer learning for assigning diagnosis codes to EMRs. Artif. Intell. Med. 2019, 96, 116–122.

- Li, K.; Jing, L. Long-tailed Multi-label Text Classification via Label Co-occurrence-Aware Knowledge Transfer. In Proceedings of the 2022 European Conference on Natural Language Processing and Information Retrieval (ECNLPIR), Hangzhou, China, 19–21 July 2022; pp. 62–68.

- Wang, H.; Xu, C.; McAuley, J. Automatic multi-label prompting: Simple and interpretable few-shot classification. arXiv 2022, arXiv:2204.06305.

- Livernoche, V.; Sujaya, V. A Reproduction of Automatic Multi-Label Prompting: Simple and Interpretable Few-Shot Classification. ML Reprod. Chall. 2022, 9, 33.

- Wei, L.; Li, Y.; Zhu, Y.; Li, B.; Zhang, L. Prompt tuning for multi-label text classification: How to link exercises to knowledge concepts? Appl. Sci. 2022, 12, 10363.

- Hu, S.; Ding, N.; Wang, H.; Liu, Z.; Wang, J.; Li, J.; Wu, W.; Sun, M. Knowledgeable prompt-tuning: Incorporating knowledge into prompt verbalizer for text classification. arXiv 2021, arXiv:2108.02035.

- Yang, Z.; Wang, S.; Rawat, B.P.S.; Mitra, A.; Yu, H. Knowledge injected prompt based fine-tuning for multi-label few-shot ICD coding. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Suzhou, China, 25 February 2023; p. 1767.

- Yang, Z.; Kwon, S.; Yao, Z.; Yu, H. Multi-label few-shot ICD coding as autoregressive generation with prompt. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 5366–5374.

- Zhou, X.; Yang, L.; Wang, X.; Zhan, H.; Sun, R. Two stages prompting for few-shot multi-intent detection. Neurocomputing 2024, 579, 127424.

- Zhuang, N.; Wei, X.; Li, J.; Wang, X.; Wang, C.; Wang, L.; Dang, J. A prompt learning framework with large language model augmentation for few-shot multi-label intent detection. In Proceedings of the ICASSP 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–5.

- Zhou, C.; Huang, B.; Ling, Y. A Chinese few-shot named-entity recognition model based on multi-label prompts and boundary information. Appl. Sci. 2025, 15, 5801.

- Yang, W.; Li, J.; Fukumoto, F.; Ye, Y. HSCNN: A hybrid-siamese convolutional neural network for extremely imbalanced multi-label text classification. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 6716–6722.

- Csányi, G.M.; Vági, R.; Megyeri, A.; Fülöp, A.; Nagy, D.; Vadász, J.P.; Uveges, I. Can triplet loss be used for multi-label few-shot classification? A case study. Information 2023, 14, 520.

- Hui, B.; Liu, L.; Chen, J.; Zhou, X.; Nian, Y. Few-shot relation classification by context attention-based prototypical networks with BERT. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 1–17.

- Wang, X.; Du, Y.; Chen, D.; Li, X.; Chen, X.; Lee, Y.L.; Liu, J. Constructing better prototype generators with 3D CNNs for few-shot text classification. Expert Syst. Appl. 2023, 225, 120124.

- Luo, S.; Zhang, R.; Pan, L.; Wu, Z. A multi-label few-shot instance-level attention prototypical network classification method. Trans. Beijing Inst. Technol. (Nat. Sci. Ed.) 2023, 43, 403–409.

- Xiao, L.; Xu, P.; Song, M.; Liu, H.; Jing, L.; Zhang, X. Triple alliance prototype orthotist network for long-tailed multi-label text classification. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 2616–2628.

- Kong, F.; Zhang, R.; Guo, X.; Chen, J.; Wang, Z. Preserving label correlation for multi-label text classification by prototypical regularizations. In Proceedings of the ACM on Web Conference 2025, Sydney, NSW, Australia, 28 April–2 May 2025; pp. 3300–3310.

- Rios, A.; Kavuluru, R. EMR coding with semi-parametric multi-head matching networks. In Proceedings of the conference Association for Computational Linguistics North American Chapter Meeting, New Orleans, LA, USA, 23 August 2018; p. 2081.

- Yuan, Z.; Tan, C.; Huang, S. Code synonyms do matter: Multiple synonyms matching network for automatic ICD coding. arXiv 2022, arXiv:2203.01515.

- Thrun, S.; Pratt, L. Learning to learn: Introduction and overview. In Learning to Learn; Springer: Berlin/Heidelberg, Germany, 1998; pp. 3–17.

- Jiang, X.; Havaei, M.; Chartrand, G.; Chouaib, H.; Vincent, T.; Jesson, A.; Chapados, N.; Matwin, S. Attentive task-agnostic meta-learning for few-shot text classification. In Proceedings of the ICLR 2019 Conference Blind Submission, New Orleans, LA, USA, 28 September 2018.

- Wang, R.; Su, X.A.; Long, S.; Dai, X.; Huang, S.; Chen, J. Meta-LMTC: Meta-learning for large-scale multi-label text classification. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 8633–8646.

- Xiao, L.; Zhang, X.; Jing, L.; Huang, C.; Song, M. Does head label help for long-tailed multi-label text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; pp. 14103–14111.

- Zhou, F.; Qi, X.; Xiao, C.; Wang, J. MetaRisk: Semi-supervised few-shot operational risk classification in banking industry. Inf. Sci. 2021, 552, 1–16.

- Teng, F.; Zhang, Q.; Zhou, X.; Hu, J.; Li, T. Few-shot ICD coding with knowledge transfer and evidence representation. Expert Syst. Appl. 2024, 238, 121861.

- Wang, X.; Ye, Y.; Gupta, A. Zero-shot recognition via semantic embeddings and knowledge graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6857–6866.

- Rios, A.; Kavuluru, R. Few-shot and zero-shot multi-label learning for structured label spaces. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; p. 3132.

- Ge, X.; Williams, R.D.; Stankovic, J.A.; Alemzadeh, H. DKEC: Domain knowledge enhanced multi-label classification for electronic health records. arXiv 2023, arXiv:2310.07059.

- Lu, J.; Du, L.; Liu, M.; Dipnall, J. Multi-label few/zero-shot learning with knowledge aggregated from multiple label graphs. arXiv 2020, arXiv:2010.07459.

- Chen, L.; Yan, X.; Wang, Z.; Huang, H. Neural architecture search with heterogeneous representation learning for zero-shot multi-label text classification. In Proceedings of the 2023 International Joint Conference on Neural Networks (IJCNN), Gold Coast, QLD, Australia, 18–23 June 2023; pp. 1–8.

- Chalkidis, I.; Fergadiotis, M.; Kotitsas, S.; Malakasiotis, P.; Aletras, N.; Androutsopoulos, I. An empirical study on large-scale multi-label text classification including few and zero-shot labels. arXiv 2020, arXiv:2010.01653.

- Wang, S.; Ren, P.; Chen, Z.; Ren, Z.; Liang, H.; Yan, Q.; Kanoulas, E.; de Rijke, M. Few-shot electronic health record coding through graph contrastive learning. arXiv 2021, arXiv:2106.15467.

- Chen, J.; Li, X.; Xi, J.; Yu, L.; Xiong, H. Rare codes count: Mining inter-code relations for long-tail clinical text classification. In Proceedings of the 5th Clinical Natural Language Processing Workshop, Toronto, ON, Canada, 14 July 2023; pp. 403–413.

- Rajaonarivo, L.; Mine, T.; Arakawa, Y. Few-shot and LightGCN learning for multi-label estimation of lesser-known tourist sites using tweets. In Proceedings of the 2023 IEEE/WIC International Conference on Web Intelligence and Intelligent Agent Technology (WI-IAT), Venice, Italy, 26–29 October 2023; pp. 103–110.

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

- Vu, T.; Nguyen, D.Q.; Nguyen, A. A label attention model for ICD coding from clinical text. arXiv 2020, arXiv:2007.06351.

- Wang, Y.; Zhou, L.; Zhang, W.; Zhang, F.; Wang, Y. A soft prompt learning method for medical text classification with simulated human cognitive capabilities. Artif. Intell. Rev. 2025, 58, 118.

- Yogarajan, V.; Pfahringer, B.; Smith, T.; Montiel, J. Improving predictions of tail-end labels using concatenated biomed-transformers for long medical documents. arXiv 2021, arXiv:2112.01718.

- Rethmeier, N.; Augenstein, I. Self-supervised contrastive zero to few-shot learning from small, long-tailed text data. In Proceedings of the ICLR 2021 Conference Blind Submission, Vienna, Austria, 4 May 2021.

- Yao, Y.; Zhang, J.; Zhang, P.; Sun, Y. A dual-branch learning model with gradient-balanced loss for long-tailed multi-label text classification. ACM Trans. Inf. Syst. 2023, 42, 1–24.

- Xu, H.; Vucetic, S.; Yin, W. X-SHOT: A Single System to Handle Frequent, Few-shot and Zero-shot Labels in Classification. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7 May 2024.

- Schopf, T.; Blatzheim, A.; Machner, N.; Matthes, F. Efficient few-shot learning for multi-label classification of scientific documents with many classes. arXiv 2024, arXiv:2410.05770.

- Pontiki, M.; Galanis, D.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S.; AL-Smadi, M.; Al-Ayyoub, M.; Zhao, Y.; Qin, B.; Clercq, O.D.; et al. SemEval-2016 task 5: Aspect based sentiment analysis. Int. Workshop Semant. Eval. 2016, 19–30.

- Hu, M.; Zhao, S.; Guo, H.; Xue, C.; Gao, H.; Gao, T.; Cheng, R.; Su, Z. Multi-label few-shot learning for aspect category detection. arXiv 2021, arXiv:2105.14174.

- Liu, H.; Zhang, F.; Zhang, X.; Zhao, S.; Sun, J.; Yu, H.; Zhang, X. Label-enhanced prototypical network with contrastive learning for multi-label few-shot aspect category detection. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 14–18 August 2022; pp. 1079–1087.

- Zhao, F.; Shen, Y.; Wu, Z.; Dai, X. Label-driven denoising framework for multi-label few-shot aspect category detection. arXiv 2022, arXiv:2210.04220.

- Wang, Z.; Iwaihara, M. Few-shot multi-label aspect category detection utilizing prototypical network with sentence-level weighting and label augmentation. In Proceedings of the International Conference on Database and Expert Systems Applications, Penang, Malaysia, 28–30 August 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 363–377.

- Zhao, S.; Chen, W.; Wang, T. Learning few-shot sample-set operations for noisy multi-label aspect category detection. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, Macao, China, 19–25 August 2023; pp. 5306–5313.

- Peng, C.; Chen, K.; Shou, L.; Chen, G. Variational hybrid-attention framework for multi-label few-shot aspect category detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 14590–14598.

- Guan, C.; Bai, Y.; Zhou, X. Few-shot multi-label aspect category detection based on prompt-enhanced prototypical networks. J. Shanxi Univ. (Nat. Sci. Ed.) 2024, 47, 494–505.

- Guan, C.; Zhu, Y.; Bai, Y.; Wang, L. Label-guided prompt for multi-label few-shot aspect category detection. arXiv 2024, arXiv:2407.20673.

- Zhao, S.; Chen, W.; Wang, T.; Yao, J.; Lu, D.; Zheng, J. Less is Enough: Relation graph guided few-shot learning for multi-label aspect category detection. In Proceedings of the ICASSP 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–5.

- Young, S.; Gašić, M.; Thomson, B.; Williams, J.D. POMDP-based statistical spoken dialog systems: A review. Proc. IEEE 2013, 101, 1160–1179.

- Qin, L.; Xu, X.; Che, W.; Liu, T. TD-GIN: Token-level dynamic graph-interactive network for joint multiple intent detection and slot filling. arXiv 2020, arXiv:2004.10087.

- Xu, P.; Sarikaya, R. Exploiting shared information for multi-intent natural language sentence classification. Interspeech 2013, 3785–3789.

- Hou, Y.; Lai, Y.; Wu, Y.; Che, W.; Liu, T. Few-shot learning for multi-label intent detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; pp. 13036–13044.

- Zhang, R.; Luo, S.; Pan, L.; Ma, Y.; Wu, Z. Strengthened multiple correlation for multi-label few-shot intent detection. Neurocomputing 2023, 523, 191–198.

- Zhang, F.; Chen, W.; Ding, F.; Wang, T. Dual class knowledge propagation network for multi-label few-shot intent detection. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 8605–8618.

- Zhang, X.; Li, X.; Liu, H.; Liu, X.; Zhang, X. Label hierarchical structure-aware multi-label few-shot intent detection via prompt tuning. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 14–18 July 2024; pp. 2482–2486.

- Zhang, X.; Li, X.; Zhang, F.; Wei, Z.; Liu, J.; Liu, H. A coarse-to-fine prototype learning approach for multi-label few-shot intent detection. Find. Assoc. Comput. Linguist. EMNLP 2024, 2024, 2489–2502.

- Sun, A.; Lim, E.-P. Hierarchical text classification and evaluation. In Proceedings of the 2001 IEEE International Conference on Data Mining, San Jose, CA, USA, 29 November–2 December 2001; pp. 521–528.

- Chen, H.; Ma, Q.; Lin, Z.; Yan, J. Hierarchy-aware label semantics matching network for hierarchical text classification. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Bangkok, Thailand, 1–6 August 2021; pp. 4370–4379.

- Ji, K.; Lian, Y.; Gao, J.; Wang, B. Hierarchical verbalizer for few-shot hierarchical text classification. arXiv 2023, arXiv:2305.16885.

- Ji, K.; Wang, P.; Ke, W.; Li, G.; Liu, J.; Gao, J.; Shang, Z. Domain-hierarchy adaptation via chain of iterative reasoning for few-shot hierarchical text classification. arXiv 2024, arXiv:2407.08959.

- Kim, G.; Im, S.; Oh, H.-S. Hierarchy-aware biased bound margin loss function for hierarchical text classification. Find. Assoc. Comput. Linguist. ACL 2024, 2024, 7672–7682.

- Chen, H.; Zhao, Y.; Chen, Z.; Wang, M.; Li, L.; Zhang, M.; Zhang, M. Retrieval-style in-context learning for few-shot hierarchical text classification. Trans. Assoc. Comput. Linguist. 2024, 12, 1214–1231.

- Zhao, X.; Yan, H.; Liu, Y. Hierarchical multilabel classification for fine-level event extraction from aviation accident reports. Inf. J. Data Sci. 2025, 4, 51–66.

- McAuley, J.; Leskovec, J. Hidden factors and hidden topics: Understanding rating dimensions with review text. In Proceedings of the 7th ACM Conference on Recommender Systems, Hong Kong, China, 12–16 October 2013; pp. 165–172.

- Johnson, A.E.; Pollard, T.J.; Shen, L.; Lehman, L.W.H.; Feng, M.; Ghassemi, M.; Moody, B.; Szolovits, P.; Celi, L.A.; Mark, R.G. MIMIC-III, a freely accessible critical care database. Sci. Data 2016, 3, 1–9.

- Lee, J.; Scott, D.J.; Villarroel, M.; Clifford, G.D.; Saeed, M.; Mark, R.G. Open-access MIMIC-II database for intensive care research. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 8315–8318.

- Yang, P.; Sun, X.; Li, W.; Ma, S.; Wu, W.; Wang, H. SGM: Sequence generation model for multi-label classification. arXiv 2018, arXiv:1806.04822.

- Lewis, D.D.; Yang, Y.; Rose, T.G.; Li, F. RCV1: A new benchmark collection for text categorization research. J. Mach. Learn. Res. 2004, 5, 361–397.

- Loza Mencía, E.; Fürnkranz, J. Efficient pairwise multilabel classification for large-scale problems in the legal domain. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Antwerp, Belgium, 15–19 September 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 50–65.

- Zubiaga, A. Enhancing navigation on Wikipedia with social tags. arXiv 2012, arXiv:1202.5469.

- Lehmann, J.; Isele, R.; Jakob, M.; Jentzsch, A.; Kontokostas, D.; Mendes, P.N.; Hellmann, S.; Morsey, M.; Kleef, P.V.; Auer, S.; et al. DBpedia–a large-scale, multilingual knowledge base extracted from Wikipedia. Semant. Web 2015, 6, 167–195.

- Williams, J.D.; Raux, A.; Ramachandran, D.; Black, A. Dialog State Tracking Challenge Handbook; Microsoft Research: Redmond, WA, USA, 2012.

- Eric, M.; Manning, C.D. Key-value retrieval networks for task-oriented dialogue. arXiv 2017, arXiv:1705.05414.

- Chalkidis, I.; Fergadiotis, M.; Malakasiotis, P.; Androutsopoulos, I. Large-scale multi-label text classification on EU legislation. arXiv 2019, arXiv:1906.02192.

- Bauman, K.; Liu, B.; Tuzhilin, A. Aspect based recommendations: Recommending items with the most valuable aspects based on user reviews. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 717–725.

- Kowsari, K.; Brown, D.E.; Heidarysafa, M.; Meimandi, K.J.; Gerber, M.S.; Barnes, L.E. HDLTex: Hierarchical deep learning for text classification. In Proceedings of the 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; pp. 364–371.

| Method Category | Models/Methods | Limitations | Addressed Challenges |

|---|---|---|---|

| Methods Based on Data Augmentation | LAIAugment [7] | Self-generated pseudo-labels may introduce noise and bias, leading to the accumulation of errors. | Insufficient labeled data. |

| GDA [8] | When data is scarce (e.g., when only 1% of the training data is available), GDA tends to produce lower-quality results due to difficulties in fine-tuning, often performing worse than rule-based methods. | Long-tail label distribution. | |

| Falis et al. [6] | Relies on external NER + L tools. | Long-tail label distribution. | |

| LSFA [9] | Feature transfer depends on the data quality of head labels. | Long-tail label distribution. | |

| XDA [10] | Methods based on high-quality pre-trained models (e.g., T5) exhibit superior performance, but their high computational cost limits practical applicability. | Long-tail label distribution. | |

| Transfer Learning-Based Approaches | Rios et al. [12] | Relies on two independent datasets (PubMed and EMR), increasing costs, and is unable to handle rare or unseen codes. | Data sparsity and long document handling. |

| LCOAKT [13] | Relies on the construction of label co-occurrence graphs and requires further optimization in hyperparameter tuning. | Long-tail label distribution. | |

| Prompt Learning-Based Approaches | AMuLaP [14] | Performance is limited by fixed prompt templates. | Manual design of label mappings requires extensive trial and error. |

| PTMLTC [16] | Ignores the natural graph structure relationships between knowledge concepts, affecting classification performance. | Reduces reliance on large amounts of labeled data. | |

| KPT [17] | Relies on the quality of external knowledge bases, which may introduce noise or malicious terms. | Mitigates issues of incomplete label-to-word mapping, bias, and instability in prompt learning. | |

| KEPTLongformer [18] | Due to memory constraints, it cannot be directly applied to tasks with a large number of labels (e.g., 8692 ICD codes). | Long-tail label distribution and data sparsity. | |

| GPsoap [19] | Generation speed is slow, and it relies on a large amount of proprietary clinical data for pretraining. | Complexity of long-tail label distribution and high-dimensional label space. | |

| PFT [20] | Relies on the accuracy of intent prediction and is sensitive to prompt design and data sampling strategies in few-shot scenarios. | Difficulty in threshold estimation and insufficient capture of intent correlations. | |

| PLMA [21] | Relies on LLM-generated templates and expanded answer space, which may increase computational costs and complexity. | Alleviates data sparsity and label dependency issues. | |

| MPBCNER [22] | Model computational efficiency is low, and an independent decoder must be designed for each entity type. | Challenges in Chinese named entity recognition under low-resource and complex structural conditions. | |

| Metric Learning-Based Approaches | HSCNN [23] | Relies on sampling strategies and threshold settings, with high computational complexity. | Long-tail label distribution. |

| Csányi et al. [24] | Performs poorly in binary classification tasks, with significant label overlap reducing classification effectiveness. | Label overlap and sample scarcity. | |

| Luo et al. [27] | Relies on label words as prior knowledge, without fully mitigating noise interference from multi-label samples in the support set. | Noise interference and prototype confusion issues. | |

| TAPON [28] | Performance may be unstable under extreme data scarcity (e.g., when tail labels have only 1–3 documents). | Long-tail label distribution. | |

| ProtoMix [29] | Performance may be limited when the number of labels is extremely large. | Label correlation and overfitting issues. | |

| Match–CNN [30] | The sampling method for the support set is relatively simple, potentially affecting performance. | Label sparsity and insufficient key information in long texts. | |

| MSMN [31] | Relies on external knowledge bases (e.g., UMLS) for synonym acquisition. | Addressing the diversity of ICD code expressions in electronic health records. | |

| Meta-Learning-Based Approaches | ATAML [33] | Performance may be limited when task differences are large or data distributions are complex. | Data scarcity. |

| Meta-LMTC [34] | High computational complexity. | Long-tail label distribution. | |

| HTTN [35] | Meta-knowledge learning may be insufficient when head labels are limited. | Long-tail label distribution. | |

| MetaRisk [36] | Dependency on unlabeled data may introduce noise. | Scarcity of labeled data and insufficient multi-label combination samples. | |

| EPEN [37] | Relies on high-quality training samples and does not fully utilize external knowledge. | Long-tail label distribution. | |

| Graph Neural Network-Based Approaches | ZAGCNN [39] | The model performs slightly worse than ACNN on frequent labels (e.g., 0.3% lower R@10 on MIMIC-III), and its reliance on structured label information and natural language descriptions limits its generalizability. | Information dispersion in long documents and label data sparsity. |

| DKEC [40] | Performance depends on label structure and logical rule design, which may limit generalization to datasets with large label discrepancies. | Long-tail label distribution. | |

| KAMG [41] | The model relies on a predefined label relationship graph, resulting in high computational complexity. | Poor classification performance for small-sample and zero-shot labels. | |

| NAS-HRL [42] | High computational cost and reliance on a predefined heterogeneous search space limit flexibility. | Alleviates the issue of heterogeneous data feature fusion between text and labels. | |

| Chalkidis et al. [43] | Due to text truncation and term fragmentation, BERT-based models perform poorly on the MIMIC-III dataset. Some methods (e.g., GC-BIGRU-LWAN) rely on label hierarchies but show limited effectiveness when labels are sparse. | Label distribution imbalance and underutilization of label hierarchy. | |

| CoGraph [44] | The model only relies on high-frequency words and entities, without fully leveraging medical knowledge or rules. | Extremely imbalanced distribution of ICD codes. | |

| Chen et al. [45] | Using multiple GCN modules may lead to overparameterization and increased training difficulty. | Long-tail label distribution. | |

| Rajaonarivo et al. [46] | Relies on tweet data, and if there are no related tweets for a location, it cannot estimate the category. | Data scarcity in specialized domains. | |

| Attention Mechanism-Based Approaches | LAAT [50] | The model is sensitive to hyperparameters (e.g., LSTM hidden layer size and projection dimension) and has high computational costs. | Data imbalance. |

| Wang et al. [51] | When training samples are insufficient, performance improvements are limited, and it struggles to distinguish between the “diagnosis” and “etiology” categories. | Lack of semantic association between pseudo-labels and original text in soft prompt learning. | |

| Other Research Approaches | Yogarajan et al. [52] | The sequential model has lower resource requirements but performs slightly worse than long-sequence dedicated models like TransformerXL and does not fully address the zero-value issue in low-frequency label prediction. | The performance bottleneck of transformers in handling long texts. |

| Rethmeier et al. [53] | On small-scale data, the model’s robustness to noise and sparse labels still has room for improvement, and performance is limited by the quality and quantity of self-supervised signals. | Alleviates the issue of high data dependence and poor performance of traditional methods in low-resource long-tail scenarios. | |

| DBGB [54] | The dual-branch structure may increase computational complexity and is not optimized for scenarios with extremely large label sets (e.g., millions of labels). | Long-tail label distribution. | |

| X-Shot [55] | Relying on pre-trained language models to generate weakly supervised data may introduce noise, and the method is sensitive to task type overlap. | Alleviates the issue of needing separate optimization for frequent, few-shot, and zero-shot labels. | |

| FusionSent [56] | Training costs are high (requires training two models and merging parameters). | Labeled data scarcity and a large number of categories. |

| Method Category | Models/Methods | P@1 | P@3 | P@5 | nDCG@3 | nDCG@5 |
|---|---|---|---|---|---|---|
| Methods Based on Data Augmentation | GDA [8] 2020 | 96.29 | 83.06 | 67.49 | 91.84 | 90.03 |
| | XDA [10] 2024 | 96.67 | / | 67.40 | / | / |
| Metric Learning-Based Approaches | TAPON [28] 2023 | 95.19 | 80.67 | 65.68 | 89.48 | 87.29 |
| | M-PON [28] 2023 | 95.65 | 81.03 | 66.19 | 90.21 | 88.01 |
| Other Research Approaches | DBGB [54] 2023 | 96.59 | 83.61 | 68.25 | 92.34 | 90.57 |
| | DBGB-ens [54] 2023 | 96.66 | 83.78 | 68.49 | 92.48 | 90.80 |

| Method Category | Models/Methods | P@1 | P@3 | P@5 | nDCG@3 | nDCG@5 |
|---|---|---|---|---|---|---|
| Methods Based on Data Augmentation | LSFA [9] 2023 | 97.21 | 82.52 | 57.52 | 94.20 | 95.42 |
| Transfer Learning-Based Approaches | LCOAKT [13] 2022 | 95.61 | 79.98 | 55.87 | 90.91 | 91.82 |
| Metric Learning-Based Approaches | HSCNN [23] 2020 | 94.90 | 77.60 | 54.37 | 81.77 | 64.60 |
| | ProtoMix [29] 2025 | 97.48 | 83.24 | 57.82 | 94.12 | 94.64 |
| | TAPON [28] 2023 | 95.09 | 77.84 | 54.47 | 89.56 | 89.34 |
| | M-PON [28] 2023 | 95.89 | 78.81 | 55.23 | 89.95 | 90.69 |
| Meta-Learning-Based Approaches | HTTN [35] 2021 | 94.70 | 77.83 | 54.21 | 88.49 | 89.05 |
| | EHTTN [35] 2021 | 95.86 | 78.92 | 55.27 | 89.61 | 90.86 |

| Method Category | Models/Methods | P@1 | P@3 | P@5 | nDCG@3 | nDCG@5 |
|---|---|---|---|---|---|---|
| Methods Based on Data Augmentation | LSFA [9] 2023 | 86.95 | 62.88 | 43.43 | 83.96 | 87.53 |
| Transfer Learning-Based Approaches | LCOAKT [13] 2022 | 82.83 | 59.34 | 40.51 | 78.49 | 82.24 |
| Metric Learning-Based Approaches | ProtoMix [29] 2025 | 86.83 | 62.72 | 42.75 | 82.67 | 86.49 |
| | TAPON [28] 2023 | 83.34 | 59.91 | 41.01 | 79.38 | 83.12 |
| | M-PON [28] 2023 | 83.89 | 60.56 | 41.43 | 79.72 | 83.54 |
| Meta-Learning-Based Approaches | HTTN [35] 2021 | 82.49 | 58.72 | 40.31 | 78.20 | 81.24 |
| | EHTTN [35] 2021 | 83.84 | 59.92 | 40.79 | 79.27 | 82.67 |

| Method Category | Models/Methods | P@1 | P@3 | P@5 | nDCG@3 | nDCG@5 |
|---|---|---|---|---|---|---|
| Methods Based on Data Augmentation | LSFA [9] 2023 | 83.75 | 70.74 | 58.95 | 74.13 | 68.25 |
| Transfer Learning-Based Approaches | LCOAKT [13] 2022 | 81.93 | 68.89 | 57.30 | 72.32 | 66.68 |
| Metric Learning-Based Approaches | ProtoMix [29] 2025 | 87.75 | 74.86 | 62.15 | 78.34 | 72.03 |
| | TAPON [28] 2023 | 80.98 | 67.70 | 56.06 | 70.65 | 64.22 |
| | M-PON [28] 2023 | 81.37 | 68.09 | 56.51 | 70.97 | 64.67 |
| Meta-Learning-Based Approaches | HTTN [35] 2021 | 81.14 | 67.62 | 56.38 | 70.89 | 64.42 |
| Other Research Approaches | DBGB [54] 2023 | 87.61 | 75.21 | 62.54 | 78.53 | 72.30 |
| | DBGB-ens [54] 2023 | 88.93 | 76.38 | 63.53 | 79.83 | 73.48 |
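For readers who want to reproduce the ranking columns in the preceding tables, the following is a minimal sketch of how P@k and nDCG@k are commonly computed for a single document with binary label relevance. The function names and the toy scores are illustrative assumptions rather than code from any of the compared papers, and reported figures are typically averages of these per-document values over the test set, expressed as percentages.

```python
import math


def top_k_labels(scores, k):
    """Indices of the k highest-scored labels (ties keep index order)."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def precision_at_k(scores, relevant, k):
    """Fraction of the top-k predicted labels that are truly relevant."""
    return sum(1 for i in top_k_labels(scores, k) if i in relevant) / k


def ndcg_at_k(scores, relevant, k):
    """DCG of the top-k ranking divided by the best achievable DCG."""
    dcg = sum(
        1.0 / math.log2(rank + 2)
        for rank, i in enumerate(top_k_labels(scores, k))
        if i in relevant
    )
    ideal = sum(1.0 / math.log2(rank + 2) for rank in range(min(k, len(relevant))))
    return dcg / ideal if ideal > 0 else 0.0


# Toy example (hypothetical scores): five labels, labels 0 and 4 are relevant.
scores = [0.9, 0.1, 0.8, 0.3, 0.7]
relevant = {0, 4}
print(precision_at_k(scores, relevant, 3), ndcg_at_k(scores, relevant, 3))
```

Because nDCG@k discounts hits by rank, a method can trail on P@k yet lead on nDCG@k when its correct labels sit nearer the top of the ranking.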

| Method Category | Models/Methods | AUC (Macro) | AUC (Micro) | F1 (Macro) | F1 (Micro) | P@5 | P@8 | P@15 |
|---|---|---|---|---|---|---|---|---|
| Prompt Learning-Based Approaches | GPsoap [19] 2023 | / | / | 13.4 | 49.8 | / | / | / |
| | Reranker (MSMN + GPsoap) [19] 2023 | / | / | 14.6 | 59.1 | / | / | 60.5 |
| | Concater (MSMN + GPsoap) [19] 2023 | / | / | 14.0 | 55.0 | / | / | / |
| Metric Learning-Based Approaches | MSMN [31] 2022 | 95.0 | 99.2 | 10.3 | 58.4 | / | 75.2 | 59.9 |
| Graph Neural Network-Based Approaches | Chen et al. [45] 2023 | 95.0 | 99.2 | 10.3 | 58.0 | / | 75.3 | 59.9 |
| | Chen et al. w/EnrichedDescriptions [45] 2023 | 95.2 | 99.2 | 10.8 | 58.6 | / | 75.3 | 60.3 |
| Attention Mechanism-Based Approaches | LAAT [50] 2020 | 91.9 | 98.8 | 9.9 | 57.5 | 81.3 | 73.8 | 59.1 |
| | JointLAAT [50] 2020 | 92.1 | 98.8 | 10.7 | 57.5 | 80.6 | 73.5 | 59.0 |

| Method Category | Models/Methods | AUC (Macro) | AUC (Micro) | F1 (Macro) | F1 (Micro) | P@5 | P@8 | P@15 |
|---|---|---|---|---|---|---|---|---|
| Prompt Learning-Based Approaches | KEPTLongformer [18] 2022 | 92.63 | 94.76 | 68.91 | 72.85 | 67.26 | / | / |
| Metric Learning-Based Approaches | MSMN [31] 2022 | 92.8 | 94.7 | 68.3 | 72.5 | 68.0 | / | / |
| Attention Mechanism-Based Approaches | LAAT [50] 2020 | 92.5 | 94.6 | 66.6 | 71.5 | 67.5 | 54.7 | 35.7 |
| | JointLAAT [50] 2020 | 92.5 | 94.6 | 66.1 | 71.6 | 67.1 | 54.6 | 35.7 |
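The large gap between macro and micro F1 in the first of the two preceding tables (for example, 10.3 versus 58.4 for MSMN) is what one would expect when many labels are rare. The sketch below, with hypothetical function names and toy data, shows how the two averages are commonly computed and why a model that ignores a tail label is penalized far more by the macro average.

```python
def f1_from_counts(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN); defined as 0 when the denominator is 0."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(y_true, y_pred, labels):
    """y_true / y_pred: lists of label sets, one set per document."""
    counts = {label: [0, 0, 0] for label in labels}  # [tp, fp, fn] per label
    for true_set, pred_set in zip(y_true, y_pred):
        for label in labels:
            if label in pred_set and label in true_set:
                counts[label][0] += 1
            elif label in pred_set:
                counts[label][1] += 1
            elif label in true_set:
                counts[label][2] += 1
    # Macro: average the per-label F1 scores, so every label weighs the same.
    macro = sum(f1_from_counts(*c) for c in counts.values()) / len(labels)
    # Micro: pool the counts first, so frequent labels dominate.
    tp, fp, fn = (sum(c[i] for c in counts.values()) for i in range(3))
    return f1_from_counts(tp, fp, fn), macro


# Toy data (assumed): label "a" is frequent, label "b" is rare and never predicted.
y_true = [{"a"}, {"a"}, {"a"}, {"b"}]
y_pred = [{"a"}, {"a"}, {"a"}, {"a"}]
micro, macro = micro_macro_f1(y_true, y_pred, ["a", "b"])
print(round(micro, 3), round(macro, 3))
```

In this toy case the pooled counts give a micro F1 of 0.75, while the zero score on the rare label pulls the macro F1 down to about 0.43.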

| Support Set | |
|---|---|
| staff | (1) It’s the rude staff and bland food that truly ruin the experience. (2) The staff were attentive and polite, and the food exceeded our expectations in both taste and presentation! |
| food | (1) It’s the rude staff and bland food that truly ruin the experience. (2) The staff were attentive and polite, and the food exceeded our expectations in both taste and presentation! |
| experience | (1) Unforgettable dining experience! (2) Every visit has been a pleasant experience with great food, friendly service, and a cozy atmosphere. |
| Query Set | |
| experience and staff | (1) It was an awful experience. The staff was impatient, unhelpful, and completely unprofessional. I won’t be returning. |
| staff and food | (2) The lobby was stunning, our room was spotless, the food was outstanding, and the staff made us feel truly welcome. |
| food | (3) We had lunch at the rooftop restaurant, and the food impressed us with its rich flavors and beautiful presentation. |
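The support/query layout illustrated above can be sketched as an episode sampler. This is a simplified illustration under stated assumptions, not the sampling procedure of any specific benchmark: the function name `sample_episode`, its parameters, and the toy sentences are hypothetical. As in the example, one sentence may serve as a support instance for several categories because it carries several labels.

```python
import random


def sample_episode(dataset, n_way, k_shot, n_query, seed=0):
    """dataset: list of (text, label_set). Returns (classes, support, query).

    support maps each sampled class to k_shot dataset indices;
    query is a list of indices not used in any support set.
    """
    rng = random.Random(seed)
    by_label = {}
    for idx, (_, labels) in enumerate(dataset):
        for label in labels:
            by_label.setdefault(label, []).append(idx)
    # Only classes with at least k_shot examples can form a support set.
    eligible = sorted(l for l, ids in by_label.items() if len(ids) >= k_shot)
    classes = rng.sample(eligible, n_way)
    support = {c: rng.sample(by_label[c], k_shot) for c in classes}
    used = {i for ids in support.values() for i in ids}
    # Query candidates: unused sentences carrying at least one episode class.
    pool = [
        i
        for i, (_, labels) in enumerate(dataset)
        if i not in used and labels & set(classes)
    ]
    query = rng.sample(pool, min(n_query, len(pool)))
    return classes, support, query


# Toy data (assumed), loosely modeled on the restaurant-review example.
toy = [
    ("rude staff and bland food", {"staff", "food"}),
    ("attentive staff, great food", {"staff", "food"}),
    ("unforgettable dining experience", {"experience"}),
    ("a pleasant experience every visit", {"experience"}),
    ("awful experience, impatient staff", {"experience", "staff"}),
    ("the food impressed us", {"food"}),
]
classes, support, query = sample_episode(toy, n_way=3, k_shot=2, n_query=2)
```

With so few toy sentences the query pool can be small or even empty; real benchmarks draw episodes from much larger class-partitioned splits.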

| Model | 5-Way 5-Shot AUC | 5-Way 5-Shot F1 | 5-Way 10-Shot AUC | 5-Way 10-Shot F1 | 10-Way 5-Shot AUC | 10-Way 5-Shot F1 | 10-Way 10-Shot AUC | 10-Way 10-Shot F1 |
|---|---|---|---|---|---|---|---|---|
| Proto-AWATT [58] 2021 | 91.45 | 71.72 | 93.89 | 77.19 | 89.80 | 58.89 | 92.34 | 66.76 |
| LPN [59] 2022 | 95.66 | 79.48 | 96.55 | 82.81 | 94.51 | 67.28 | 95.66 | 71.87 |
| LDF [60] 2022 | 92.62 | 73.38 | 94.34 | 78.81 | 90.87 | 62.06 | 92.93 | 68.23 |
| FSO [62] 2023 | 96.01 | 81.04 | 96.67 | 82.22 | 94.93 | 70.26 | 95.71 | 72.46 |
| VHAF [63] 2024 | 97.09 | 84.64 | 97.57 | 87.31 | 96.01 | 75.92 | 96.78 | 79.43 |
| ProtPrompt [64] 2024 | 95.73 | 82.49 | 96.81 | 85.49 | 94.80 | 72.43 | 95.94 | 76.53 |
| LGP [65] 2024 | 97.67 | 85.22 | 97.86 | 86.08 | 95.89 | 75.01 | 96.35 | 76.97 |

| User Utterances | Intent Labels |
|---|---|
| What is the date and time of my next lab appointment? | Request_date, Request_time |
| Tell me Redwood City’s forecast today. | Request_weather |

| Shots | Encoder | Model | It | Ac | At | Fo | Tr |
|---|---|---|---|---|---|---|---|
| 1-shot | Electra-small | ALR + MCT [70] 2021 | 39.98 | 51.55 | 55.16 | 52.16 | 55.36 |
| | | DCKPN [72] 2023 | 44.21 | 55.91 | 59.74 | 56.55 | 57.48 |
| | | LHS [73] 2024 | 47.07 | 57.11 | 60.75 | 57.38 | 59.21 |
| | BERT-base | ALR + MCT [70] 2021 | 44.58 | 57.11 | 60.34 | 56.49 | 60.18 |
| | | DCKPN [72] 2023 | 48.19 | 58.32 | 60.93 | 58.22 | 61.05 |
| | | LHS [73] 2024 | 51.05 | 59.24 | 62.10 | 59.12 | 61.69 |
| 5-shot | Electra-small | ALR + MCT [70] 2021 | 44.21 | 51.37 | 55.76 | 54.50 | 55.37 |
| | | HCC-FSML [71] 2023 | 45.06 | 53.36 | 59.18 | 56.80 | 57.48 |
| | | DCKPN [72] 2023 | 47.76 | 55.83 | 59.48 | 60.06 | 60.23 |
| | | LHS [73] 2024 | 47.28 | 58.30 | 60.82 | 61.04 | 60.44 |
| | BERT-base | ALR + MCT [70] 2021 | 46.80 | 54.79 | 59.95 | 59.11 | 60.13 |
| | | HCC-FSML [71] 2023 | 48.64 | 57.60 | 60.69 | 60.78 | 60.59 |
| | | DCKPN [72] 2023 | 49.58 | 56.93 | 60.65 | 61.26 | 60.89 |
| | | LHS [73] 2024 | 52.87 | 58.30 | 61.60 | 62.35 | 61.23 |

| Encoder | Model | 1-Shot Sc | 1-Shot Na | 1-Shot We | 1-Shot Ave. | 5-Shot Sc | 5-Shot Na | 5-Shot We | 5-Shot Ave. |
|---|---|---|---|---|---|---|---|---|---|
| Electra-small | ALR + MCT [70] 2021 | 40.61 | 40.76 | 46.16 | 42.51 | 51.83 | 46.44 | 54.17 | 50.82 |
| | HCC-FSML [71] 2023 | / | / | / | / | 59.08 | 58.34 | 70.65 | 62.69 |
| | DCKPN [72] 2023 | 52.08 | 51.37 | 66.29 | 56.58 | 55.04 | 55.64 | 75.32 | 62.00 |
| | LHS [73] 2024 | 64.48 | 58.24 | 73.79 | 65.50 | 67.61 | 67.53 | 78.14 | 71.09 |
| BERT-base | ALR + MCT [70] 2021 | 42.55 | 56.95 | 53.14 | 50.88 | 52.17 | 60.36 | 59.63 | 57.39 |
| | HCC-FSML [71] 2023 | / | / | / | / | 54.69 | 64.41 | 68.64 | 62.58 |
| | DCKPN [72] 2023 | 53.81 | 58.48 | 74.02 | 62.10 | 57.81 | 63.71 | 93.83 | 71.78 |
| | LHS [73] 2024 | 65.98 | 67.64 | 80.12 | 71.25 | 70.37 | 75.37 | 89.79 | 78.51 |
| | CFPL [74] 2024 | 67.11 | 68.04 | 80.57 | 71.91 | 70.28 | 75.89 | 93.56 | 79.91 |

| Datasets | Brief Description | Number of Samples | Number of Labels | Average Labels per Sample | Access URL |
|---|---|---|---|---|---|
| AmazonCat-13K | Amazon product categorization dataset | 1,493,021 | 13,330 | 5.04 | http://manikvarma.org/downloads/XC/XMLRepository.html |
| MIMIC-III | A high-quality clinical database containing both structured and unstructured data | 39,771 | 6932 | 13.6 | https://mimic.physionet.org/ |
| MIMIC II | A publicly available dataset for intensive care medicine research | 21,104 | 7042 | 36.7 | https://archive.physionet.org/pn5/mimic2db |
| AAPD | An academic paper dataset in the field of computer science | 55,840 | 54 | 2.41 | https://github.com/lancopku/SGM |
| RCV1 | Reuters news article dataset | 806,791 | 103 | 3.24 | http://www.daviddlewis.com/resources/testcollections/rcv1 |
| EUR-Lex | European Union legal documents dataset | 19,596 | 3993 | 5.37 | http://eur-lex.europa.eu/ |
| Wiki10-31K | Wikipedia article dataset | 20,764 | 30,938 | 18.64 | http://nlp.uned.es/social-tagging; https://github.com/yourh/AttentionXML/tree/master/data |
| DBPedia | A large-scale, multilingual, open knowledge graph constructed by extracting structured knowledge from Wikipedia | 381,025 | 298 | - | https://www.dbpedia.org/ |
| TourSG | A dataset designed for multi-label intent recognition and dialogue state tracking | 25,751 | 102 | - | https://github.com/AtmaHou/FewShotMultiLabel |
| StanfordLU | A multi-domain task-oriented dialogue dataset | 8038 | 32 | - | https://github.com/AtmaHou/FewShotMultiLabel |
| EURLEX57K | European Union legislation dataset | 57,000 | 4271 | 5.07 | https://github.com/iliaschalkidis/lmtc-eurlex57k |
| FewAsp (multi) | Few-shot multi-label aspect category detection dataset | 40,000 | 100 | - | https://github.com/1429904852/LDF |
| RCV1-V2 | Reuters news articles dataset | 804,414 | 103 | 3.24 | http://www.ai.mit.edu/projects/jmlr/papers/volume5/lewis04a/lyrl2004_rcv1v2_README.htm |
| WOS | Academic paper dataset | 46,985 | 141 | 7 | http://archive.ics.uci.edu/index.php |

| Model/Method | Acc | Pre | R | F1 | Micro-F1 | Macro-F1 | P@k | nDCG@k | PSP@k | R@k | AUC |

|---|---|---|---|---|---|---|---|---|---|---|---|

| LAIAugment [7] | √ | √ | √ | ||||||||

| GDA [8] | √ | √ | |||||||||

| Falis et al. [6] | √ | √ | √ | ||||||||

| LSFA [9] | √ | √ | √ | ||||||||

| XDA [10] | √ | √ | |||||||||

| Rios et al. [12] | √ | √ | |||||||||

| LCOAKT [13] | √ | √ | √ | ||||||||

| AMuLaP [14] | √ | √ | |||||||||

| PTMLTC [16] | √ | √ | |||||||||

| KPT [17] | √ | ||||||||||

| KEPTLongformer [18] | √ | √ | √ | ||||||||

| GPsoap [19] | √ | √ | √ | √ | √ | √ | √ | ||||

| PFT [20] | √ | ||||||||||

| PLMA [21] | √ | ||||||||||

| MPBCNER [22] | √ | ||||||||||

| HSCNN [23] | √ | √ | √ | √ | |||||||

| Csányi et al. [24] | √ | √ | √ | √ | |||||||

| Luo et al. [27] | √ | ||||||||||

| TAPON [28] | √ | √ | √ | √ | √ | ||||||

| ProtoMix [29] | √ | √ | |||||||||

| Match–CNN [30] | √ | √ | √ | √ | √ | √ | |||||

| MSMN [31] | √ | √ | √ | ||||||||

| ATAML [33] | √ | √ | √ | ||||||||

| Meta-LMTC [34] | √ | √ | |||||||||

| HTTN [35] | √ | √ | √ | ||||||||

| MetaRisk [36] | √ | √ | √ | ||||||||

| EPEN [37] | √ | √ | |||||||||

| ZAGCNN [39] | √ | √ | √ | ||||||||

| DKEC [40] | √ | √ | √ | ||||||||

| KAMG [41] | √ | √ | |||||||||

| NAS-HRL [42] | √ | ||||||||||

| Chalkidis et al. [43] | √ | ||||||||||

| CoGraph [44] | √ | √ | √ | √ | |||||||

| Chen et al. [45] | √ | √ | √ | ||||||||

| Rajaonarivo et al. [46] | √ | ||||||||||

| LAAT [50] | √ | √ | √ | ||||||||

| Wang et al. [51] | √ | √ | √ | √ | |||||||

| Yogarajan et al. [52] | √ | √ | |||||||||

| DBGB [54] | √ | √ | √ | √ | |||||||

| FusionSent [56] | √ | √ | √ | ||||||||

| Proto-AWATT [58] | √ | √ | |||||||||

| LPN [59] | √ | √ | |||||||||

| LDF [60] | √ | √ | |||||||||

| Proto-SLWLA [61] | √ | √ | |||||||||

| FSO [62] | √ | √ | |||||||||

| VHAF [63] | √ | √ | |||||||||

| ProtPrompt [64] | √ | √ | |||||||||

| LGP [65] | √ | √ | |||||||||

| Zhao et al. [66] | √ | √ | |||||||||

| ALR + MCT [70] | √ | ||||||||||

| HCC-FSML [71] | √ | ||||||||||

| DCKPN [72] | √ | ||||||||||

| LHS [73] | √ | ||||||||||

| CFPL [74] | √ | ||||||||||

| HiMatch [76] | √ | √ | √ | √ | |||||||

| HierVerb [77] | √ | √ | |||||||||

| HierICRF [78] | √ | √ | |||||||||

| H2B [79] | √ | √ | |||||||||

| Chen et al. [80] | √ | √ | |||||||||

| Zhao et al. [81] | √ | √ | √ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, W.; Fan, Q.; Yan, H.; Xu, X.; Huang, S.; Zhang, K. A Survey of Multi-Label Text Classification Under Few-Shot Scenarios. Appl. Sci. 2025, 15, 8872. https://doi.org/10.3390/app15168872
