Abstract

Surgical education and training have seen significant advancements with the integration of innovative technologies. This paper presents a novel approach to surgical education using a multi-view capturing system and bullet-time generation techniques to enhance the learning experience for aspiring surgeons. The proposed system uses an array of synchronized cameras strategically positioned around a surgical simulation environment to capture surgical procedures from multiple angles simultaneously. The captured multi-view data is then processed with computer vision and image processing algorithms to create a “bullet-time” effect, similar to the iconic scenes in the film The Matrix, allowing educators and trainees to freeze time and view the surgical procedure from any desired perspective. In this paper, we describe the technical aspects of the multi-view capturing system, the bullet-time generation process, and the integration of these technologies into surgical education programs. We also discuss potential applications in various surgical specialties and the benefits of this system for both novice and experienced surgeons. Finally, we present preliminary results from pilot studies and user feedback, highlighting the promising potential of this approach to revolutionize surgical education and training.

1. Introduction

Surgical capture systems now serve a growing range of purposes in practice. The motivation behind developing a multi-view capturing system and bullet-time generation for surgical education is to address the limitations of traditional training methods [1], enhance the learning experience, and, combined with automated assessment [2], make surgical education more efficient and accessible.

Enhancing Learning Efficacy: Surgical procedures are complex and often require a deep understanding of spatial relationships and fine motor skills. Traditional two-dimensional educational materials, such as textbooks and videos, may not adequately convey these nuances, particularly those that depend on spatial cognition [3]. The motivation is to provide a more effective learning tool that offers a comprehensive and immersive educational experience.

Safety and Skill Development: Surgery is inherently high-risk, and the consequences of mistakes during surgery can be severe. The motivation is to create a safe and controlled learning environment where aspiring surgeons can develop their skills, practice procedures, and make mistakes without putting patients at risk [4].

Advancements in Technology: Advances in camera technology, computer vision, and image processing have made it feasible to capture surgical procedures from multiple angles simultaneously and generate dynamic, interactive visualizations. The motivation is to leverage these technological advancements to improve surgical education [5].

Objective Assessment: Traditional surgical training relies heavily on subjective evaluations by experienced surgeons. The motivation is to introduce objective assessment methods by recording and analyzing trainee performance, enabling educators to provide data-driven feedback and track progress over time.

Innovation in Medical Education: Medical education, including surgical training, has been evolving with the integration of new technologies. The motivation is to keep pace with these innovations and explore novel approaches to improve the quality and effectiveness of surgical education [4].

Motivated by these considerations, we developed a multi-viewpoint capturing system dedicated to surgical education [6]. This system accelerates the learning of surgical instrument skills by capturing simulated suturing processes and generating bullet-time educational videos. Unlike conventional videos, bullet-time videos emphasize interactivity and active learning, while also mitigating occlusion problems through viewpoint switching.

Building upon our previous work [6], this article presents several key advancements. First, we developed an improved gaze-point setting strategy and enhanced the image processing algorithm. Second, we designed a comprehensive evaluation framework to assess the effectiveness of bullet-time videos in surgical education through both quantitative and qualitative analyses. Finally, we evaluated the system’s applicability from a user-oriented perspective, specifically verifying its ability to effectively mitigate occlusion problems.

2. Related Work

2.1. Surgical Video Recording

The primary role of the surgical imaging system is to document the operative procedure, which is crucial both for post-operative assessment and for developing surgical skills through education. Prior work [7] introduced a multi-camera array mounted on the shadowless operating lamp, an advance over traditional single-camera recording setups. Despite this improvement, a persistent challenge remains: the surgeon’s head can occlude the surgical field, compromising the captured video. To address this occlusion issue specifically, our proposed system adopts a novel placement strategy, positioning the capture unit between the surgeon and the patient.

2.2. Object Detection

Numerous object detection frameworks have gained significant popularity and widespread adoption in recent years [8,9,10]. The You Only Look Once Version 7 (YOLOv7) algorithm [11], a prominent single-stage detector, integrates object recognition and localization within a unified network architecture. This approach enables substantially faster inference speeds compared to traditional two-stage methods while maintaining competitive accuracy. Furthermore, YOLOv7 facilitates streamlined model customization through transfer learning, allowing efficient fine-tuning on domain-specific datasets and iterative refinement during research development.

2.3. Bullet-Time Video Application

Bullet-time video technology [12] employs an array of fixed cameras strategically positioned around a subject. These cameras are typically triggered either simultaneously or in rapid sequence. Frames captured by each individual camera are subsequently stitched together to synthesize a cohesive video sequence [13]. This technique creates the illusion of a smoothly rotating perspective around a static subject, enabling detailed observation of the target object’s state at a precise instant in time [14]. Within surgical contexts, bullet-time visualization proves particularly valuable for gaining enhanced visibility during critical procedural moments, facilitating in-depth analysis [15].

3. Proposed Method

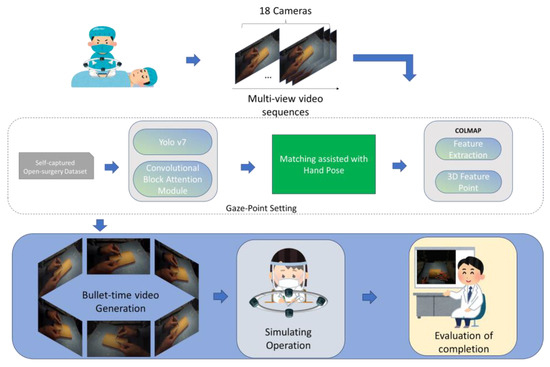

The workflow of our proposed methodology is shown in Figure 1. First, a medical professional filmed a multi-view suturing video in a simulated environment. Next, object detection and hand pose estimation locate the key points of interest in the 2D images; these key points are transformed into 3D coordinates and then projected back into each viewpoint. A bullet-time video is then generated around the corresponding points in all viewpoints. Finally, we tested the educational value of the bullet-time video by evaluating how well participants completed a simulated suture.

Figure 1.

Overview of the proposed system workflow. The pipeline encompasses: (1) multi-view surgical scene capture using a synchronized camera array; (2) image processing, including target/pose detection and per-frame 3D reconstruction; (3) generation of the interactive bullet-time suturing training video; (4) recruitment of participants who watch the bullet-time video before performing simulated suturing; and (5) skill assessment by expert surgeons.

3.1. Multi-View Surgical Capturing System

The multi-view system consists of 20 cameras mounted on a specially designed support frame [6], as shown in Figure 2. To accommodate the wide range of surgeries anticipated in future use, we also designed an adjustment module that allows both the height of the frame and the positions of the cameras to be adjusted. An LED strip serves as a status module indicating the state of each camera, and a dedicated surgical spotlight serves as an illumination module that minimizes shadow interference.

Figure 2.

Our prototype system. The blue circle marks the intended position of the surgeon and the red circle that of the patient (for this study, data were collected on a suture model).

3.1.1. Calibration of Multi-View Capturing System

The system uses Structure from Motion (SfM), implemented with COLMAP [16], for camera calibration. Multi-view images serve as input, from which the camera intrinsic parameters are estimated and salient image features are extracted [17]. Using the correspondences between these features, the system estimates both the spatial positions and the sequential order of the cameras. The 3D coordinates of the corresponding points are then reconstructed by triangulation, and bundle adjustment iteratively refines both the estimated 3D point positions and the camera calibration parameters.
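COLMAP performs triangulation and bundle adjustment internally. Purely as an illustration, the following Python sketch shows linear (DLT) triangulation of a single point from two or more calibrated views, the step that produces initial 3D coordinates before bundle adjustment refines them. It assumes known 3 × 4 projection matrices P = K[R | t] and noise-free observations; the function names are hypothetical, and this is not COLMAP’s implementation.

```python
import numpy as np

def project(P, X):
    """Project one 3D point X (3,) with a 3x4 projection matrix P to pixel (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate_dlt(proj_mats, pts2d):
    """Linear (DLT) triangulation of one 3D point from >= 2 views.

    proj_mats: list of 3x4 projection matrices P = K [R | t]
    pts2d:     list of (u, v) pixel observations, one per view
    """
    rows = []
    for P, (u, v) in zip(proj_mats, pts2d):
        # Each view gives two linear constraints on the homogeneous point X
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.asarray(rows)
    # Least-squares solution: right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

Bundle adjustment would then minimize the reprojection error, i.e. the pixel distance between `project(P, X)` and each observation, jointly over all points and camera parameters.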

3.1.2. Capturing and Camera Control: A Control System for a Large Number of “Driverless” Cameras

Our system uses a large number of UVC (USB Video Class) cameras [18], which offer high resolution, small size, light weight, customizability, and adjustability, all of which are essential for recording surgical procedures. However, these cameras usually rely on Microsoft’s generic UVC driver rather than a dedicated driver. When multiple cameras of the same model are connected, identifying them consumes memory, and the order in which they are enumerated is not fixed. More importantly, running many cameras at the same time places a heavy burden on the host for load management and video stream processing.

To solve these problems, we used Microsoft’s KSMedia library to write the low-level control logic directly, allocating resources and running multiple cameras synchronously in multiple threads [19]. The design logic of the camera control system is shown in Figure 3.
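Our implementation calls KSMedia directly; the Python sketch below is not that implementation. It only illustrates the general pattern of one thread per camera with a shared barrier, so that every camera reads frame k before any camera moves on to frame k + 1. Each `read_frame` is a hypothetical stand-in for a real camera read (for example, a wrapper around OpenCV’s `cv2.VideoCapture.read`), and all function names are illustrative.

```python
import threading
import time

def capture_worker(cam_id, read_frame, barrier, n_frames, results, lock):
    """Read n_frames from one camera, stepping in lockstep with the other cameras."""
    for k in range(n_frames):
        barrier.wait()                 # every camera starts frame k together
        frame = read_frame()           # hypothetical read callable for this camera
        stamp = time.perf_counter()    # host-side timestamp for later alignment
        with lock:
            results[cam_id].append((k, stamp, frame))

def run_synchronized_capture(readers, n_frames):
    """Start one thread per camera reader; return {cam_id: [(k, t, frame), ...]}."""
    barrier = threading.Barrier(len(readers))
    lock = threading.Lock()
    results = {cam_id: [] for cam_id in range(len(readers))}
    threads = [
        threading.Thread(target=capture_worker,
                         args=(cam_id, reader, barrier, n_frames, results, lock))
        for cam_id, reader in enumerate(readers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

A barrier keeps frame indices aligned across cameras at the cost of running at the pace of the slowest camera; the stored timestamps can then be used to check residual skew.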

Figure 3.

Multi-camera control system’s design logic graph.

3.1.3. Comparative Testing and Performance Improvement of Multiple Video Capture Methods

Surgical recordings are often long, and during extended operation a multi-camera system can suffer from excessive resource consumption by the cameras, chip overheating, and delayed release of resources, all of which can lead to thread interruptions.

For this reason, we measured the frame-rate variation when recording video with four UVC cameras running simultaneously for one hour under the same system environment, as shown in Figure 4. The nominal frame rate was set to 60 fps at a resolution of 1080p.
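A frame-rate curve such as the one in Figure 4 can be derived from per-frame capture timestamps. The minimal Python sketch below assumes each camera thread logs a monotonic timestamp in seconds for every received frame; it counts frames per one-second window (ignoring the trailing partial window) and summarizes the mean, the spread, and the number of windows falling below a chosen fraction of the nominal 60 fps. The drop threshold `drop_ratio` and the helper names are illustrative choices, not part of our system.

```python
import statistics

def frame_rate_series(timestamps, window=1.0):
    """Frames per second in consecutive full windows of `window` seconds."""
    if len(timestamps) < 2:
        return []
    start = timestamps[0]
    n_full = int((timestamps[-1] - start) // window)   # drop the trailing partial window
    counts = [0] * n_full
    for t in timestamps:
        idx = int((t - start) // window)
        if idx < n_full:
            counts[idx] += 1
    return [c / window for c in counts]

def summarize(fps, nominal=60.0, drop_ratio=0.5):
    """Mean, population SD, and count of windows below drop_ratio * nominal fps."""
    return {
        "mean": statistics.fmean(fps),
        "stdev": statistics.pstdev(fps),
        "drops": sum(1 for f in fps if f < drop_ratio * nominal),
    }
```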

Figure 4.

Frame rate graph for one hour of continuous camera video capture (purple line shows the average frame rate of a one-hour recording stress test of the recording system we developed).

When a capture thread crashed, a script automatically restarted it, and after each restart the system’s acquisition performance dropped. This explains the precipitous drops that some methods show at certain points in time. In terms of average frame rate and stability, the KSMedia-based control system we designed showed varying degrees of advantage over the methods based on OpenCV [20] and libuvc.

3.2. Multi-View Surgical Image Processing System

3.2.1. Object Detection and Optimization

Among the objects that appear in the suturing scene, the doctor’s hands and the surgical instruments require particular attention [21]. We therefore train a YOLOv7-based detector on our dataset and optimize the model for the surgical scene.

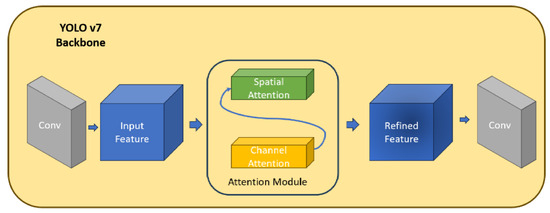

To improve the detection of small objects, we integrated the CBAM attention mechanism [22] into the backbone of YOLOv7, as shown in Figure 5.

Figure 5.

Integrating the CBAM mechanism into YOLOv7’s backbone.

Owing to the attention mechanism and the fine-tuned backbone, our model tracks blurred, small objects better than the original object detection algorithm. As shown in Table 1, this corresponds to a 59.9% increase in mAP50. In addition, the reduced number of parameters increases detection speed, at the cost of a slight decrease in mAP95. This indicates that the original YOLOv7 still has an advantage when objects are completely free of occlusion and motion.

Table 1.

Wrist prediction results for a suturing video of 20,164 frames in total.

3.2.2. Hand Pose Estimation and Optimization

To set the bullet-time gaze-point, we incorporated expert opinion that the position of the surgical instrument’s tip is critical. However, although object detection can determine whether an object is present in the current scene, it cannot reliably estimate the object’s pose or localize its key points.

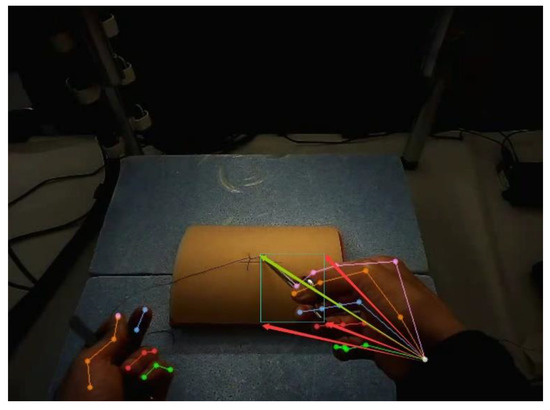

Therefore, we use a pre-trained HRNet for hand pose estimation [23]. Within the hand pose, we give extra weight to the detection of the wrist joint, and for bullet-time generation we take the corner of the hand-held instrument’s bounding box that lies furthest from the wrist as the instrument tip and use it as the gaze-point [24].

Table 1 reports the wrist joint key points detected by the pre-trained HRNet in a video clip of 20,164 frames.

3.2.3. Gaze-Point Setting Strategy

The gaze-point serves as the center of rotation for the bullet-time video and thus defines the viewer’s perspective as the viewpoint changes. Its selection is therefore a critical determinant of the visualization’s effectiveness and relevance, particularly in surgical contexts where focus on specific anatomical structures or instrument tips (in our simulated experiment) is paramount. A reliable gaze-point setting strategy is therefore essential. To this end, we propose a novel gaze-point setting strategy that combines the results of object detection and pose estimation to obtain a 2D gaze-point, and uses SfM to lift this 2D point to a 3D point.

Analyzing the object detection bounding boxes, we found that the instrument’s tip lies at one of the four corner points of each box. To determine which corner, we observed that the tip is always the corner furthest from the operator’s wrist.

Given how surgical instruments are held, the gaze-point is set by calculating the Euclidean distance between the wrist joint coordinate and each bounding-box corner point and selecting the furthest corner. An example is shown in Figure 6.

Figure 6.

Euclidean distance of every bounding box point to hand wrist (where green box is the bounding box output by object detection algorithm, the green arrow points to the furthest distance corner-point, while the red ones are the ignored corner-points).
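The furthest-corner rule can be sketched in a few lines of Python. This assumes the detector outputs an axis-aligned box as `(x1, y1, x2, y2)` in pixels and the pose estimator outputs the wrist as `(x, y)` in the same image; the function name is hypothetical.

```python
import math

def tip_from_bbox(bbox, wrist):
    """Return the bounding-box corner furthest from the wrist (taken as the instrument tip).

    bbox:  (x1, y1, x2, y2) instrument box from the object detector, in pixels
    wrist: (x, y) wrist key point from the hand pose estimator
    """
    x1, y1, x2, y2 = bbox
    corners = [(x1, y1), (x2, y1), (x1, y2), (x2, y2)]
    # Euclidean distance from each corner to the wrist; keep the largest
    return max(corners, key=lambda c: math.dist(c, wrist))
```

For example, `tip_from_bbox((100, 100, 200, 150), (90, 160))` selects the corner `(200, 100)`, which lies diagonally opposite the wrist.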

To establish the 3D gaze-point for bullet-time rotation, our strategy leverages the 3D reconstruction capabilities of our multi-view system. First, 2D gaze-points are detected per frame in individual camera views using target and pose detection algorithms. Using our 18-camera array, we perform per-frame 3D reconstruction via COLMAP, as in Section 3.1.1, yielding dense 3D feature points for each timestep. The core of our approach selects the 3D gaze-point by identifying the 3D feature point (from the reconstructed set) closest to the highest-confidence 2D gaze-point detection in its corresponding camera view. This chosen 3D point has known world coordinates. Using the calibrated intrinsic and extrinsic parameters of all cameras, we then reproject this 3D gaze-point onto the image plane of every camera view, generating a corresponding 2D projected gaze-point for each camera, regardless of the original detection status in that view.

This reprojection mechanism provides critical robustness; it ensures continuous gaze-point estimation across all views, even when the initial 2D detection fails in some cameras due to occlusion, motion blur, or other challenges, thereby preventing tracking failure.
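The selection and reprojection steps can be sketched in Python as follows. This is a minimal illustration under two assumptions of ours: each camera is described by a 3 × 4 projection matrix, and “closest” is measured as the pixel distance between each reconstructed point’s projection and the 2D detection in the detecting view. Function names are hypothetical.

```python
import numpy as np

def project(P, X):
    """Project (N, 3) world points with a 3x4 projection matrix to (N, 2) pixels."""
    Xh = np.hstack([X, np.ones((len(X), 1))])
    x = (P @ Xh.T).T
    return x[:, :2] / x[:, 2:3]

def select_gaze_point(points3d, proj_mats, detections):
    """Pick a 3D gaze-point from a per-frame point cloud and reproject it to all views.

    points3d:   (N, 3) reconstructed feature points for the current frame
    proj_mats:  list of 3x4 projection matrices, one per camera
    detections: list of (cam_index, (u, v), confidence) 2D gaze-point detections
    """
    cam, uv, _ = max(detections, key=lambda d: d[2])   # highest-confidence detection
    pix = project(proj_mats[cam], points3d)
    # Assumption: "closest" is measured in pixels within the detecting view
    best = int(np.argmin(np.linalg.norm(pix - np.asarray(uv), axis=1)))
    gaze3d = points3d[best]
    # Reproject into every camera, including views whose own detection failed
    reproj = [project(P, gaze3d[None, :])[0] for P in proj_mats]
    return gaze3d, reproj
```

Because every view receives a reprojected point, a camera that missed the detection in a given frame still obtains a gaze-point from the others.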

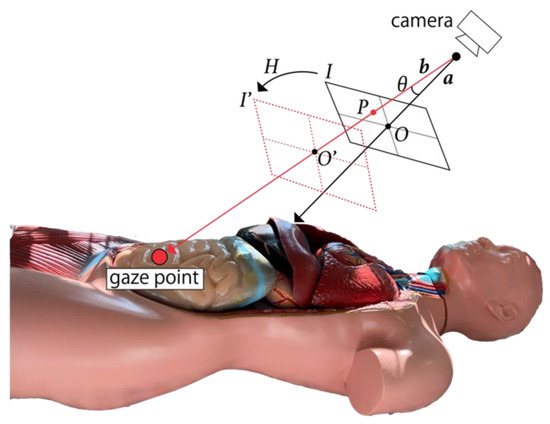

3.2.4. Calculation of Homography Matrix

To ensure that the gaze target appears at the center of every frame in the synthesized video, each camera image undergoes a geometric transformation defined by a 3 × 3 homography matrix H [6], as illustrated in Figure 7.

Figure 7.

Homography transformation of gaze-point setting.

Let O be the principal point (image center) of the original image I, and P the projection of the gaze point onto I. The 3D vectors a and b originate at the camera’s optical center and point towards O and P, respectively. From a and b, the angular deviation θ between them is computed. This angle θ is then used to derive a 3D rotation matrix R that rotates b onto a, i.e., $\mathbf{a}/\lVert\mathbf{a}\rVert = R\,\mathbf{b}/\lVert\mathbf{b}\rVert$. Computing the homography H from R additionally requires the camera’s intrinsic calibration parameters, obtained in the preceding calibration stage. The intrinsic matrix $K_i$ for each camera $i$ ($i = 1, \dots, M$, where $M$ is the number of cameras) is given by:

$$K_i = \begin{bmatrix} f_i & 0 & c_{x,i} \\ 0 & f_i & c_{y,i} \\ 0 & 0 & 1 \end{bmatrix}$$

where $f_i$ denotes the focal length of camera $i$, estimated via SfM, and $(c_{x,i}, c_{y,i})$ represents the image coordinates of the principal point (the intersection of the optical axis with the image plane).

This transformation yields views centered on the gaze-point obtained in Section 3.2.3. With the bullet-time video viewer we developed [6], the detected objects can be observed from every angle at every moment of the bullet-time video.
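The centering transformation can be sketched in Python using the standard homography induced by a pure camera rotation, $H = K_i R K_i^{-1}$; that this is exactly how H is assembled in [6] is our assumption. The sketch builds R with Rodrigues’ formula so that the ray b through P is rotated onto the ray a through O. Function names are hypothetical, and the degenerate antiparallel case is not handled.

```python
import numpy as np

def rotation_between(a, b):
    """Rotation matrix R with R @ (b/|b|) = a/|a|, via Rodrigues' formula."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    axis = np.cross(b, a)
    s = np.linalg.norm(axis)          # sin(theta)
    c = float(np.dot(b, a))           # cos(theta)
    if s < 1e-12:
        return np.eye(3)              # rays already aligned (antiparallel not handled)
    k = axis / s
    Kx = np.array([[0.0, -k[2], k[1]],
                   [k[2], 0.0, -k[0]],
                   [-k[1], k[0], 0.0]])
    return np.eye(3) + s * Kx + (1.0 - c) * (Kx @ Kx)

def centering_homography(K, gaze_px):
    """Homography moving the projected gaze point P onto the principal point O."""
    K_inv = np.linalg.inv(K)
    a = K_inv @ np.array([K[0, 2], K[1, 2], 1.0])         # ray through O (optical axis)
    b = K_inv @ np.array([gaze_px[0], gaze_px[1], 1.0])   # ray through P
    R = rotation_between(a, b)
    return K @ R @ K_inv
```

Applying the returned H to the homogeneous pixel of P and dividing by the third coordinate gives the principal point $(c_x, c_y)$; the whole image can then be warped with this H, for example with OpenCV’s `cv2.warpPerspective`.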

4. Experiments and Results

This section presents the three experiments we designed to evaluate, respectively, the occlusion rate of the system compared with existing configurations, an ablation study of fusing the CBAM mechanism into object detection, and a simulated surgical suturing experiment to validate the effectiveness of the bullet-time video.

4.1. Experiment for Occlusion Comparison

Increasing the number of cameras not only allows smoother viewpoint switching in the generated bullet-time video, reducing visual discontinuity, but also reduces the impact of occlusion. To quantitatively evaluate the occlusion suppression capability of our 18-camera multi-view system, we compared it with two configurations simulating existing surgical imaging systems, giving three configurations in total:

- Single-View system: one camera

- Multi-Lamp mounted system: five cameras

- Proposed multi-view system: 18 cameras

In all configurations, the cameras were set at a height of 55 cm, based on existing medical imaging standards [25].

To quantify the improvement in occlusion rate, we use whether each object in the scene can be recognized as the criterion for occlusion, evaluated on suturing video clips totaling 20,164 frames.

The main target categories in this experimental scenario are left hand, right hand, needle holder, and tweezers. After obtaining the video streams, we first align the frames of the multi-view videos and then run object detection on each view separately [26]. Based on the detection results, all targets in each frame are analyzed, and the frame is categorized for that experimental configuration. This procedure is shown in Figure 8.

Figure 8.

Workflow of frames’ category analysis.

In this process, if all targets in the current experimental configuration are detected in a frame, we call it a Global Detection Stability Frame; the proportion of such frames is the CDR (Complete Detection Rate):

$$\mathrm{CDR} = \frac{1}{T}\sum_{t=1}^{T}\prod_{c\in\mathcal{C}} \mathbb{I}_{c,t}$$

where $T$ is the total number of frames, $\mathcal{C}$ is the set of target classes, and $\mathbb{I}_{c,t}$ is an indicator function equal to 1 if an object of class $c$ is detected in frame $t$ and 0 otherwise.

Correspondingly, if only some of the objects are detected, we say that partial occlusion has occurred in this frame and count it toward the PDTR (Partial Detection Tier Rate). To distinguish the severity of occlusion, we further divide partial occlusion into PDTR-T1, PDTR-T2, and PDTR-T3, which denote frames in which three, two, and one of the target classes are detected, respectively.

FMR (Full Miss Rate), the counterpart of CDR, is the proportion of frames in which none of the objects can be detected.

OIMR is the percentage of frames in which at least one object is not detected because of occlusion; it shows whether the proposed system can still detect an object through other viewpoints when that object is occluded in some views.

For problem frames in which not all four targets were detected, we manually annotated an occlusion indicator $\mathrm{occ}_{c,t}$, which is combined with the detection results to determine whether a missed or erroneous detection of target $c$ in frame $t$ is caused by occlusion.
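The frame-level metrics can be computed from boolean detection arrays. The following Python sketch is a minimal illustration under two assumptions of ours: a class counts as detected in a frame if any view of the configuration detects it, and the manual occlusion annotation is available as a class-by-frame boolean array. With four classes, tiers T1, T2, and T3 correspond to three, two, and one detected classes. The function name is hypothetical.

```python
import numpy as np

def occlusion_metrics(detected, occluded):
    """Frame-level CDR, PDTR tiers, FMR, and OIMR.

    detected: (V, C, T) bool array, True if class c is detected in view v at frame t
    occluded: (C, T) bool array (manual annotation), True if a miss of class c at
              frame t is attributed to occlusion
    Assumption: a class counts as detected in a frame if any view detects it.
    """
    det = detected.any(axis=0)                 # (C, T) after merging views
    n_classes = det.shape[0]
    n_found = det.sum(axis=0)                  # number of classes detected per frame
    metrics = {
        "CDR": float(np.mean(n_found == n_classes)),
        "FMR": float(np.mean(n_found == 0)),
    }
    # PDTR-T1 .. PDTR-T(C-1): frames with C-1, C-2, ..., 1 classes detected
    for tier in range(1, n_classes):
        metrics[f"PDTR-T{tier}"] = float(np.mean(n_found == n_classes - tier))
    # OIMR: frames where at least one class is missed because of occlusion
    metrics["OIMR"] = float(np.mean((~det & occluded).any(axis=0)))
    return metrics
```

By construction, CDR, the PDTR tiers, and FMR partition the frames and sum to 1; a single-view configuration is simply the case V = 1.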

The PDTR, FMR, CDR, and OIMR values for the three simulated experimental environments are shown in Table 2:

Table 2.

Assessment of occlusion rate improvement of the proposed system compared to the existing system.

4.2. Object Detection Ablation Study

In our multi-view image processing pipeline, the accuracy of the object detection algorithm directly affects the quality of the generated bullet-time video. We therefore examine the effectiveness of the object detection algorithm and the improvement in its metrics when combined with the CBAM attention mechanism [27].

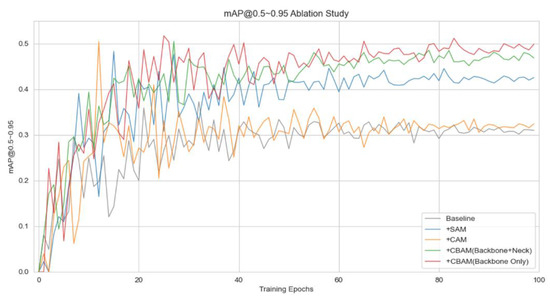

With YOLOv7 as the baseline, there are several options for integrating the attention module. For example, the channel attention module or the spatial attention module can be integrated alone, and the module can be inserted into the backbone, the neck, or both, with different effects. To compare these options, we trained each variant for 100 epochs for the ablation study; the mAP@0.5:0.95 curves are shown in Figure 9.

Figure 9.

mAP@0.5:0.95 curves of the ablation variants over 100 training epochs.

We also recorded the final mAP@0.5:0.95 value and the FPS of each variant, the latter indicating processing speed, as shown in Table 3.

Table 3.

mAP@0.5:0.95 and FPS results of the YOLOv7 baseline, YOLOv7 + SAM, YOLOv7 + CAM, YOLOv7 + CBAM (Backbone and Neck), and YOLOv7 + CBAM (Backbone, ours).

Of the two CBAM components, the channel attention module enhances the response of the channels associated with the objects of interest, here the metal surgical instruments in our simulated surgical scene. The spatial attention module, on the other hand, focuses on the simulated surgical region, thus suppressing background interference and false detections.

We attribute the advantage of integrating CBAM into the backbone only to the following. YOLOv7’s backbone performs multi-scale feature extraction and is the core source of semantic information for detection; the quality of its features directly affects the subsequent neck and head, so improvements made early benefit the whole network. Adding attention elsewhere, however, may disrupt the multi-scale balance of YOLOv7’s design by over-emphasizing deep features [28].
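The two CBAM components described above can be sketched as a NumPy forward pass on a single feature map. This follows the standard CBAM design (a shared MLP over average- and max-pooled channel descriptors, then a k × k convolution over channel-pooled maps, channel attention applied before spatial attention). It is an illustrative sketch rather than our training code: the weights `W1`, `W2`, and `kernel` are placeholders that would be learned inside YOLOv7 in practice, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(F, W1, W2):
    """F: (C, H, W); W1: (C//r, C) and W2: (C, C//r) weights of the shared MLP."""
    def mlp(v):
        return W2 @ np.maximum(W1 @ v, 0.0)       # C -> C/r (ReLU) -> C
    avg = F.mean(axis=(1, 2))                      # average-pooled channel descriptor
    mx = F.max(axis=(1, 2))                        # max-pooled channel descriptor
    Mc = sigmoid(mlp(avg) + mlp(mx))               # (C,) channel weights in (0, 1)
    return F * Mc[:, None, None]

def spatial_attention(F, kernel):
    """kernel: (2, k, k) weights of a single-output k x k convolution (k odd)."""
    desc = np.stack([F.mean(axis=0), F.max(axis=0)])   # (2, H, W), pooled over channels
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(desc, ((0, 0), (pad, pad), (pad, pad)))   # zero padding keeps H, W
    H, W = desc.shape[1:]
    out = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    Ms = sigmoid(out)                                   # (H, W) spatial weights in (0, 1)
    return F * Ms[None, :, :]

def cbam(F, W1, W2, kernel):
    # CBAM applies channel attention first, then spatial attention
    return spatial_attention(channel_attention(F, W1, W2), kernel)
```

Since both attention maps lie in (0, 1), CBAM only rescales features, which is consistent with its role of amplifying instrument-related channels and regions relative to the background.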

4.3. Suturing Simulating Experimental Design and Content

We aimed to validate the effectiveness of bullet-time video for surgical education by having participants perform simulated suturing after watching either a bullet-time video or a normal instructional video. Surgical procedures vary with site and purpose, but suturing is the common finishing stage of open surgery.

4.3.1. Subgroups and Rationale

To improve the reliability of the results, we used a controlled-variable grouping approach, dividing participants into two groups according to suturing difficulty [29] and their skill level.

From a total of 20 participants, we selected four pairs whose proficiency with surgical instruments was most similar, to serve as matched controls. In the experiment, the two members of each pair were shown different types of videos (a general video or a bullet-time video) and, after viewing, were asked to reproduce the operation in the video as closely as possible, allowing us to compare bullet-time videos with traditional single-view videos.

Based on the above, we classified participants according to their skill and experience with surgical instruments as follows:

- General participants;

- Medical-related participants.

Almost all medical-related participants had already mastered simple sutures. To explore how bullet-time videos help participants who already have basic skills improve further, we prepared two additional suture types alongside the simple suture [30]:

- Simple Suture;

- Mattress Suture;

- Figure-of-8 Suture.

The latter two require more steps than the simple suture and a higher degree of skill. The assignment of participants is shown in Table 4 and Table 5.

Table 4.

Definition of participants’ category.

Table 5.

Implementation program for all participants, where S indicates Simple Suture, M indicates Mattress Suture, and 8 indicates Figure-of-8 suture.

4.3.2. Qualitative Results—Participants’ Implementation Feedback

Through a questionnaire administered after the experiment, we collected participants’ feedback on how it felt to operate with the multi-view system in place, together with their self-assessment of whether the bullet-time video was useful for learning. The results are shown in Table 6.

Table 6.

Participants’ feedback (average values). A higher bothering rate indicates that the respondent perceives the system as more intrusive, while a higher usefulness score indicates that the participant considers the bullet-time video more helpful.

Most participants found the educational effect of bullet-time videos to be better than that of regular videos, and the lower their skill level, the more pronounced the effect. The multi-view system was rated as minimally intrusive, although medical participants appeared to have higher requirements and standards when operating with the system, as well as concerns about its physical shape.

4.3.3. Quantitative Results

We recorded the time each participant took to complete the suturing operation and used it as a rough indicator of proficiency, as shown in Table 7.

Table 7.

Completion time of general participants for Simple Suture.

Medical participants performed two different suture operations, and the completion times of the two groups were compared for each operation. Watching the bullet-time video was associated with a reduction in operation time that was not statistically significant, as shown in Table 8.

Table 8.

Completion time of medical-related participants for high-difficulty suturing operations (Mattress Suture and Figure-of-8 Suture).

In minimally invasive procedures, the precision and effectiveness of surgical instrument trajectories are critical metrics for evaluating operator proficiency [31]. This study employs a trajectory-matching evaluation framework based on the procedural characteristics of different suturing techniques. Specifically, temporal keyframes corresponding to critical operative phases were systematically identified. Quantitative comparison was performed by calculating the three-dimensional displacement between instrument tip positions in the expert-derived Ground Truth trajectories and the trainee-generated trajectories [32]. The Root Mean Square Error (RMSE) was used to measure positional deviations across corresponding keyframes. This approach objectively quantifies the differences between trainees and the expert in instrument control accuracy and motion economy, providing a standardized metric for surgical skill assessment.

First, corresponding points between the two trajectories must be established. For both the reference (Ground Truth) trajectory and the trainee trajectory, each point is the tip coordinate of the key surgical instrument in a keyframe that is either manually extracted at a specific critical operation or aligned to a common time point by interpolation. If the timestamps of the two trajectories do not match, temporal alignment or interpolation must be performed first.

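Next, for each pair of corresponding points, we compute their Euclidean distance $d_i$ in 3D space:

$$d_i = \left\lVert \mathbf{p}_i^{\mathrm{GT}} - \mathbf{p}_i \right\rVert_2 = \sqrt{(x_i^{\mathrm{GT}} - x_i)^2 + (y_i^{\mathrm{GT}} - y_i)^2 + (z_i^{\mathrm{GT}} - z_i)^2}$$

where $\mathbf{p}_i^{\mathrm{GT}} = (x_i^{\mathrm{GT}}, y_i^{\mathrm{GT}}, z_i^{\mathrm{GT}})$ and $\mathbf{p}_i = (x_i, y_i, z_i)$ are the $i$-th corresponding instrument-tip positions in the Ground Truth and trainee trajectories, respectively.

Subsequently, we take the mean of the squared distances over all corresponding points:

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N} d_i^{2}$$

where $N$ represents the total number of corresponding key points in the trajectory.

The RMSE is then obtained by taking the square root:

$$\mathrm{RMSE} = \sqrt{\mathrm{MSE}} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} d_i^{2}}$$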
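The alignment and RMSE steps can be sketched in Python as follows. This is a minimal illustration assuming trajectories are stored as `(N, 3)` arrays of tip positions in millimetres, and that alignment, when needed, is done by per-axis linear interpolation onto the reference timestamps (one possible choice of “some interpolation method”; `np.interp` requires increasing source timestamps). Function names are hypothetical.

```python
import numpy as np

def align_trajectory(t_src, xyz_src, t_ref):
    """Linearly interpolate an (M, 3) trajectory onto reference timestamps t_ref."""
    return np.column_stack([np.interp(t_ref, t_src, xyz_src[:, k]) for k in range(3)])

def trajectory_rmse(gt, trainee):
    """gt, trainee: (N, 3) arrays of corresponding instrument-tip positions (mm)."""
    d = np.linalg.norm(gt - trainee, axis=1)   # Euclidean distance d_i per key point
    mse = np.mean(d ** 2)                      # mean of the squared distances
    return float(np.sqrt(mse)), d              # RMSE and the per-point distances
```

Returning the per-point distances alongside the RMSE keeps the individual $d_i$ available for per-keyframe analysis.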

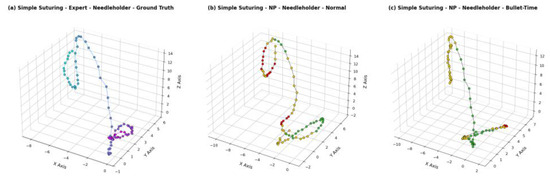

As demonstrated in Figure 10a–c, the three-dimensional trajectory comparison among Ground Truth (expert-derived), novice participants, and medical trainees during simple suturing reveals distinct operational patterns. Medical trainees with prior surgical experience exhibited significantly higher trajectory congruence with the Ground Truth (mean RMSE = 1.8 ± 0.3 mm) compared to novice participants (mean RMSE = 3.4 ± 0.5 mm, p < 0.01 via paired t-test). This inverse correlation between RMSE values and surgical experience aligns with the skill quantification framework, confirming that trajectory fidelity effectively reflects subjects’ baseline operative competence. Furthermore, the systematic deviation patterns observed in novice trajectories (e.g., excessive instrument rotation at needle entry points) provide actionable metrics for structured surgical training interventions.

Figure 10.

Visualization of sample trajectories: Ground Truth (left, (a)), normal-video subject (middle, (b)), and bullet-time-video subject (right, (c)); point colors follow the scheme described in the text.

As illustrated in Figure 10, the left figure depicts the 3D trajectory of the needle holder tip during expert-level simple suturing procedures. This trajectory was reconstructed from 80 keyframes predicted by our proposed processing pipeline, with chromatic progression (blue → purple) encoding temporal dynamics of instrument movement. The central and right panels visualize trainee trajectories under contrasting instructional conditions. The corresponding RMSE calculation utilized these keyframes as positional ground truth.

A color-coded error mapping scheme was implemented to enhance interpretability:

- Red: Critical deviations (RMSE > 5 mm);

- Yellow: Moderate errors (3 mm ≤ RMSE ≤ 5 mm);

- Green: Expert-like precision (RMSE < 3 mm).
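The color mapping can be expressed as a small classification function. In this Python sketch we assume the thresholds are applied to each keyframe’s deviation $d_i$ in millimetres and that the categories are then counted per trajectory; the function names are hypothetical.

```python
from collections import Counter

def classify_deviation(d_mm):
    """Map one keyframe deviation (mm) to the color-coded error category."""
    if d_mm > 5.0:
        return "Red"      # Critical deviation
    if d_mm >= 3.0:
        return "Yellow"   # Moderate error (3 mm <= d <= 5 mm)
    return "Green"        # Expert-like precision (< 3 mm)

def count_categories(distances):
    """Count how many keyframes fall into each category."""
    return Counter(classify_deviation(d) for d in distances)
```

For example, `count_categories([1.0, 3.0, 5.0, 5.1, 7.2])` yields one Green, two Yellow, and two Red keyframes; the boundary values 3 mm and 5 mm both fall in Yellow, matching the inclusive range above.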

The trajectory of the bullet-time video group is closer to the Ground Truth than that of the control group, which watched only the normal video: the experimental group had 3 Critical deviations in total, while the control group had 17. To minimize individual differences, we selected these two participants because they performed almost identically after watching the first normal video (25 Critical deviations each in the same step).

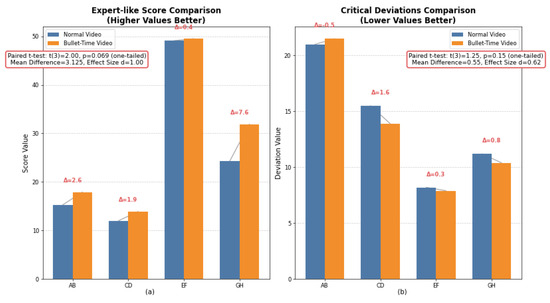

Extending this rating method to the full dataset yields the data shown in Table 9 below:

Table 9.

Distribution of RMSE for all data. A paired-sample design compares participants who watched different video types, with four matched pairs (AB, CD, EF, GH) performing identical content under different educational methods.

As shown in Figure 11, both metrics consistently favored the bullet-time modality. Expert-like scores showed a trend toward significance (p < 0.07, t(3) = 2.002), and Critical deviations showed a consistent directional trend (t(3) = 1.248). The large effect size for Expert-like (d = 1.00) and the medium effect size for Critical deviations (d = 0.62) suggest that bullet-time video provides a measurable performance advantage.
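For a paired design, the t statistic and the effect size $d_z$ (mean difference divided by the standard deviation of the differences) are linked by $d_z = t/\sqrt{n}$. With n = 4 matched pairs this gives $d_z = t/2$, so t(3) = 2.002 corresponds to d ≈ 1.00 and t(3) = 1.248 to d ≈ 0.62, consistent with the reported values. The Python sketch below computes both quantities from matched scores; the function name and the example inputs are hypothetical. A p-value additionally requires the t distribution, for which SciPy’s `scipy.stats.ttest_rel` can be used.

```python
import math
import statistics

def paired_t(before, after):
    """Paired t statistic, Cohen's d_z, and degrees of freedom for matched samples."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    mean = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)            # sample SD with n - 1 denominator
    t = mean / (sd / math.sqrt(n))
    d_z = mean / sd                         # equals t / sqrt(n)
    return t, d_z, n - 1
```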

Figure 11.

Comparison of Expert-like (a) and Critical deviation (b) results between the normal and bullet-time video groups, evaluated with paired t-tests.

With the exception of subject B, whose rate of Critical deviations increased after watching the bullet-time video, all participants showed improved educational outcomes compared with the normal video control group.

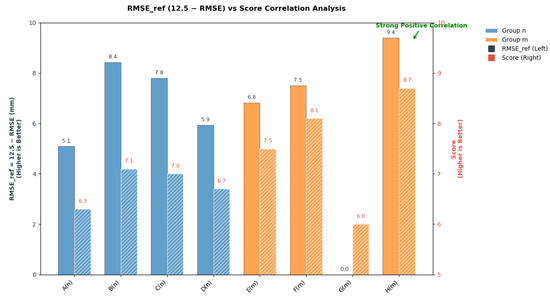

For each of the eight participants in the trial, we also calculated the mean score (derived from the RMSE values), the completion time, and the size of the sutured wound at completion, as shown in Figure 12.

Figure 12.

Comparison of completion time and wound size (blue: non-medical participants, group n; orange: medical-related participants, group m).

In addition, as shown in Figure 13, the expert scores (score) and the trajectory fit values change in the same direction. This suggests that our trajectory fit can explain the educational outcomes. It also indicates that the bullet-time teaching method helps non-medical participants master basic instrument control and helps medical participants learn higher-level procedures.

Figure 13.

Comparison of score and RMSE_ref (blue: non-medical participants, group n; orange: medical-related participants, group m). Black numbers show RMSE_ref; red numbers and dashed boxes show manual scores.

Because a lower RMSE indicates better performance, whereas a higher expert score does, we defined RMSE_ref as an inverted expression of RMSE so that both measures increase with performance. This makes the results more intuitive.

A strong positive correlation is observed between the expert ratings and the automated RMSE_ref scores (r = 0.780, p = 0.022, 95% CI [0.18, 0.95]), indicating significant alignment in their evaluation trends. In 5 of 8 cases (62.5%), the expert rating exceeded the calculated RMSE_ref. A Wilcoxon signed-rank test found no significant difference between the two scores (T+ = 16.5, Z = −1.26, p = 0.207), with a moderate effect size (r = −0.44). Together, these results indicate that the two assessments follow highly consistent trends and that, apart from a few extreme samples, the system's rating (RMSE_ref) can serve as a basis for judging task completion.
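The agreement statistics above can be reproduced from summary values with a short standard-library sketch. It computes a Pearson correlation, its t statistic (df = n − 2), an approximate confidence interval via the Fisher z-transform, and the Wilcoxon effect size r = Z/√N with a two-sided normal-approximation p-value. The text does not state how its 95% CI was obtained, so the Fisher interval is an assumption and may differ slightly from the reported bounds if another method (e.g., bootstrapping) was used; N = 8 follows the eight participants.

```python
import math
from statistics import NormalDist, mean


def pearson_r(x: list[float], y: list[float]) -> float:
    """Sample Pearson correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


def fisher_ci(r: float, n: int, level: float = 0.95) -> tuple[float, float]:
    """Approximate CI for r via the Fisher z-transform (requires n > 3)."""
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    crit = NormalDist().inv_cdf(0.5 + level / 2)
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


def wilcoxon_effect_size(z: float, n: int) -> tuple[float, float]:
    """Effect size r = Z / sqrt(N) and two-sided normal-approximation p."""
    p_two = 2 * (1 - NormalDist().cdf(abs(z)))
    return z / math.sqrt(n), p_two


n = 8  # eight participants
print("t(6) for r = 0.780:", round(r_to_t(0.780, n), 2))
print("Fisher-z 95% CI for r = 0.780:", fisher_ci(0.780, n))
print("Wilcoxon r and p for Z = -1.26:", wilcoxon_effect_size(-1.26, n))
```

The effect size r = −1.26/√8 ≈ −0.45 and the two-sided p ≈ 0.21 agree with the values reported above.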

5. Discussion

Although we can tentatively conclude that bullet-time videos are effective for surgical education in terms of completion time, individual differences cannot be ignored with a sample of only four matched pairs. We therefore plan to expand the scale of the experiment to reduce this source of error.

Lack of Real-Time Performance: The current processing pipeline, from data acquisition through post-processing, cannot yet operate in real time. This limitation stems primarily from the computationally intensive frame-by-frame 3D reconstruction stage. More efficient methods for mapping 2D image points to their corresponding 3D positions could significantly alleviate this bottleneck. Such an advance would extend the system's applicability beyond its current uses in case analysis, surgical suturing training, international trends, and surgical education to intraoperative navigation scenarios, as in [33].

Limited Portability: While the current study demonstrates the efficacy of bullet-time video and the multi-view capture system in enhancing surgical instrument handling skills, deploying them in actual operating rooms faces significant challenges related to hardware portability and stringent operating room requirements. These include maintaining sterility protocols, coping with heat emitted by surgical lighting (e.g., spotlights), and adapting the camera array configuration to specific surgical procedures and patient anatomy. Crucially, any necessary hardware adjustment must preserve the system's ability to generate the high-quality bullet-time video on which its demonstrated effectiveness depends.

Challenges in Implementing Bullet-Time Video: In this work, we demonstrated the benefits of bullet-time video over regular video for acquiring surgical instrument skills. However, as we advance this work toward actual surgery, the scarcity of multi-view surgical video data and the difficulty of deploying the system in real operations together leave few datasets available for bullet-time video generation. This might be addressed by generating virtual surgical data with AI, but it remains to be shown how closely such generated data match real surgical data, and hence how much reference value they offer.

Novelty Summary: Our work establishes three fundamental innovations. The first is surgical bullet-time pedagogy: although we proposed a similar approach in previous work [6], that work lacked a complete pipeline demonstrating its effectiveness. The second is a gaze-point setting strategy that combines CBAM-embedded object detection, hand pose estimation, and 2D-to-3D transformation. The third is an automated evaluation system, designed specifically for the proposed system, that is based on the movement trajectories of the instrument tips. Its assessments are highly consistent with manual ratings and indicate that bullet-time video accelerates the learning of instrument-handling skills in suturing scenarios of graded difficulty.

6. Conclusions

This study proposes a multi-view bullet-time video system to enhance surgical education through immersive, interactive training. The system integrates 18 synchronized high-resolution cameras arranged on adjustable frames to capture surgical procedures from multiple angles. The footage is processed with computer vision algorithms (e.g., YOLOv7 with attention mechanisms) for object detection, and the scene is reconstructed in 3D using Structure from Motion (SfM) and stereo vision. This enables dynamic viewpoint manipulation, allowing trainees to pause playback, rotate the viewpoint, or slow the action to analyze intricate techniques such as needle trajectory.

Key advantages include resolving spatial ambiguities caused by occlusions and providing multi-angle insight into instrument–tissue interactions. Preliminary tests compared trainees learning from bullet-time videos with trainees learning from traditional single-view videos. The experimental group achieved, on average, 29.5% lower trajectory error (RMSE) and 22% faster task completion; novices rated the system's usefulness at 7.75/10, versus 6.0/10 for medical professionals. The system maintained stable synchronization during hour-long recordings, demonstrating its technical viability for additional capture scenarios.

By combining multi-view capture, 3D reconstruction, and interactive visualization to generate high-quality bullet-time videos, this approach addresses the limitations of passive 2D video training. Future work will expand clinical validation and explore adaptive learning modules. The system has the potential to serve as a scalable tool for improving spatial reasoning, reducing procedural errors, and standardizing surgical skill assessment.

Author Contributions

Conceptualization, T.O. and I.K.; methodology, I.K. and Y.W.; software, Y.W.; validation, Y.W. and D.K.; investigation, Y.W. and C.X.; data curation, K.K. and D.K.; writing—original draft preparation, Y.W.; writing—review and editing, C.X. and I.K.; supervision, I.K. and T.O.; funding acquisition, S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the JSPS Grant-in-Aid for Scientific Research (Grant Numbers 25K03146 and 22K08791). This work was also funded by MEXT Promotion of Development of a Joint Usage/Research System Project: Coalition of Universities for Research Excellence Program (CURE) (Grant Number JPMXP1323015474).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the University of Tsukuba (protocol code 22-008; 1 November 2023 to 1 April 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Due to privacy and ethical restrictions, the data created and used in this study will not be made publicly available.

Acknowledgments

I sincerely thank my principal supervisor and laboratory members for their invaluable guidance and support throughout this research. Special thanks go to collaborators for their essential contributions to experimental design and data interpretation, and to colleagues for their critical feedback. I also acknowledge technical experts for their experimental and technical guidance. This work was made possible by the participants’ contributions and the collective efforts of the research team members.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Saun, T.J.; Zuo, K.J.; Grantcharov, T.P. Video Technologies for Recording Open Surgery: A Systematic Review. Surg. Innov. 2019, 26, 599–612.

- Shaharan, S.; Ryan, D.M.; Neary, P.C. Motion Tracking System in Surgical Training. InTech 2017, 1, 1–24.

- Vajsbaher, T.; Schultheis, H.; Francis, N.K. Spatial cognition in minimally invasive surgery: A systematic review. BMC Surg. 2018, 18, 94.

- Shahrezaei, A.; Sohani, M.; Taherkhani, S.; Zarghami, S.Y. The impact of surgical simulation and training technologies on general surgery education. BMC Med. Educ. 2024, 24, 1297.

- Varas, J.; Coronel, B.V.; Villagrán, I.; Escalona, G.; Hernandez, R.; Schuit, G.; Durán, V.; Lagos-Villaseca, A.; Jarry, C.; Neyem, A.; et al. Innovations in surgical training: Exploring the role of artificial intelligence and large language models (LLM). Rev. Col. Bras. Cir. 2023, 50, e20233605.

- Wang, Y.; Xie, C.; Shishido, H.; Hashimoto, S.; Oda, T.; Kitahara, I. A surgical bullet-time video capturing system depending on surgical situation. In Proceedings of the 10th IEEE Global Conference on Consumer Electronics (GCCE 2021), Kyoto, Japan, 12–15 October 2021; pp. 571–574.

- Shimizu, T.; Oishi, K.; Hachiuma, R.; Kajita, H.; Takatsume, Y.; Saito, H. Surgery recording without occlusions by multi-view surgical videos. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020), Volume 5: VISAPP, Valletta, Malta, 27–29 February 2020; Farinella, G.M., Radeva, P., Braz, J., Eds.; SciTePress: Setúbal, Portugal, 2020; pp. 837–844.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of Computer Vision – ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

- Wachowski, L.; Wachowski, L. (Directors). The Matrix [Film]. Warner Bros.; Village Roadshow Pictures; Groucho Film Partnership. 1999.

- Akechi, N.; Kitahara, I.; Sakamoto, R.; Ohta, Y. Multi-resolution bullet-time effect. In Proceedings of the SIGGRAPH-ASIA, Shenzhen, China, 3–6 December 2014.

- Nagai, T.; Shishido, H.; Kameda, Y.; Kitahara, I. An on-site visual feedback method using bullet-time video. In Proceedings of the 1st International Workshop on Multimedia Content Analysis in Sports, Seoul, Republic of Korea, 26 October 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 39–44.

- Zuckerman, I.; Werner, N.; Kouchly, J.; Huston, E.; DiMarco, S.; DiMusto, P.; Laufer, S. Depth over RGB: Automatic evaluation of open surgery skills using depth camera. Int. J. CARS 2024, 19, 1349–1357.

- Schönberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- XMOS Limited. AN00127: USB Video Class Device (Version 2.0.1 Release Candidate 1) [Application Note]. n.d. Available online: https://www.xmos.com/download/AN00127:-USB-Video-Class-Device(2.0.1rc1).pdf/ (accessed on 14 May 2022).

- Microsoft. KSMedia.h Header. Windows Driver Kit (WDK) Documentation. n.d. Available online: https://learn.microsoft.com/en-us/windows-hardware/drivers/ (accessed on 14 May 2022).

- OpenCV Python Team. Opencv-Python (Version 4.11.0) [Python Package]. 2025. Available online: https://pypi.org/project/opencv-python/ (accessed on 14 May 2022).

- Jiang, K.; Pan, S.; Yang, L.; Yu, J.; Lin, Y.; Wang, H. Surgical Instrument Recognition Based on Improved YOLOv5. Appl. Sci. 2023, 13, 11709.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. arXiv 2018, arXiv:1807.06521.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Wu, Y.; Tan, M.; Wang, X.; et al. Deep High-Resolution Representation Learning for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364.

- Cao, Z.; Hidalgo Martinez, G.; Simon, T.; Wei, S.-E.; Sheikh, Y.A. OpenPose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.

- Kelts, G.I.; McMains, K.C.; Chen, P.G.; Weitzel, E.K. Monitor height ergonomics: A comparison of operating room video display terminals. Allergy Rhinol. (Provid. R.I.) 2015, 6, 28–32.

- Ha, H.; Xiao, L.; Richardt, C.; Nguyen-Phuoc, T.; Kim, C.; Kim, M.H.; Lanman, D.; Khan, N. Geometry-guided Online 3D Video Synthesis with Multi-View Temporal Consistency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 11–15 June 2025.

- Chen, X.; Wang, Y.; Zhang, Z. CBAM-STN-TPS-YOLO: Enhancing agricultural object detection through spatially adaptive attention mechanisms. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 11–15 June 2025; pp. 1124–1133.

- Qin, Q.; Zhou, X.; Gao, J.; Wang, Z.; Naer, A.; Hai, L.; Alatan, S.; Zhang, H.; Liu, Z. YOLOv8-CBAM: A study of sheep head identification in Ujumqin sheep. Front. Vet. Sci. 2025, 12, 1514212.

- Gonzalez-Navarro, A.R.; Quiroga-Garza, A.; Acosta-Luna, A.S.; Salinas-Alvarez, Y.; Martinez-Garza, J.H.; de la Garza-Castro, O.; Gutierrez-de la O, J.; de la Fuente-Villarreal, D.; Elizondo-Omaña, R.E.; Guzman-Lopez, S. Comparison of suturing models: The effect on perception of basic surgical skills. BMC Med. Educ. 2021, 21, 250.

- Youssef, S.C.; Aydin, A.; Canning, A.; Khan, N.; Ahmed, K.; Dasgupta, P. Learning Surgical Skills Through Video-Based Education: A Systematic Review. Surg. Innov. 2023, 30, 220–238.

- Pan, M.; Wang, S.; Li, J.; Li, J.; Yang, X.; Liang, K. An Automated Skill Assessment Framework Based on Visual Motion Signals and a Deep Neural Network in Robot-Assisted Minimally Invasive Surgery. Sensors 2023, 23, 4496.

- Ebina, K.; Abe, T.; Yan, L.; Hotta, K.; Shichinohe, T.; Higuchi, M.; Iwahara, N.; Hosaka, Y.; Harada, S.; Kikuchi, H.; et al. A surgical instrument motion measurement system for skill evaluation in practical laparoscopic surgery training. PLoS ONE 2024, 19, e0305693.

- Bayareh-Mancilla, R.; Medina-Ramos, L.A.; Toriz-Vázquez, A.; Hernández-Rodríguez, Y.M.; Cigarroa-Mayorga, O.E. Automated Computer-Assisted Medical Decision-Making System Based on Morphological Shape and Skin Thickness Analysis for Asymmetry Detection in Mammographic Images. Diagnostics 2023, 13, 3440.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).