MDNet: A Differential-Perception-Enhanced Multi-Scale Attention Network for Remote Sensing Image Change Detection

Abstract

1. Introduction

2. Related Work

2.1. Feature Aggregation

2.2. Attention Mechanism

2.3. Transformer-Based Network

3. Methodology

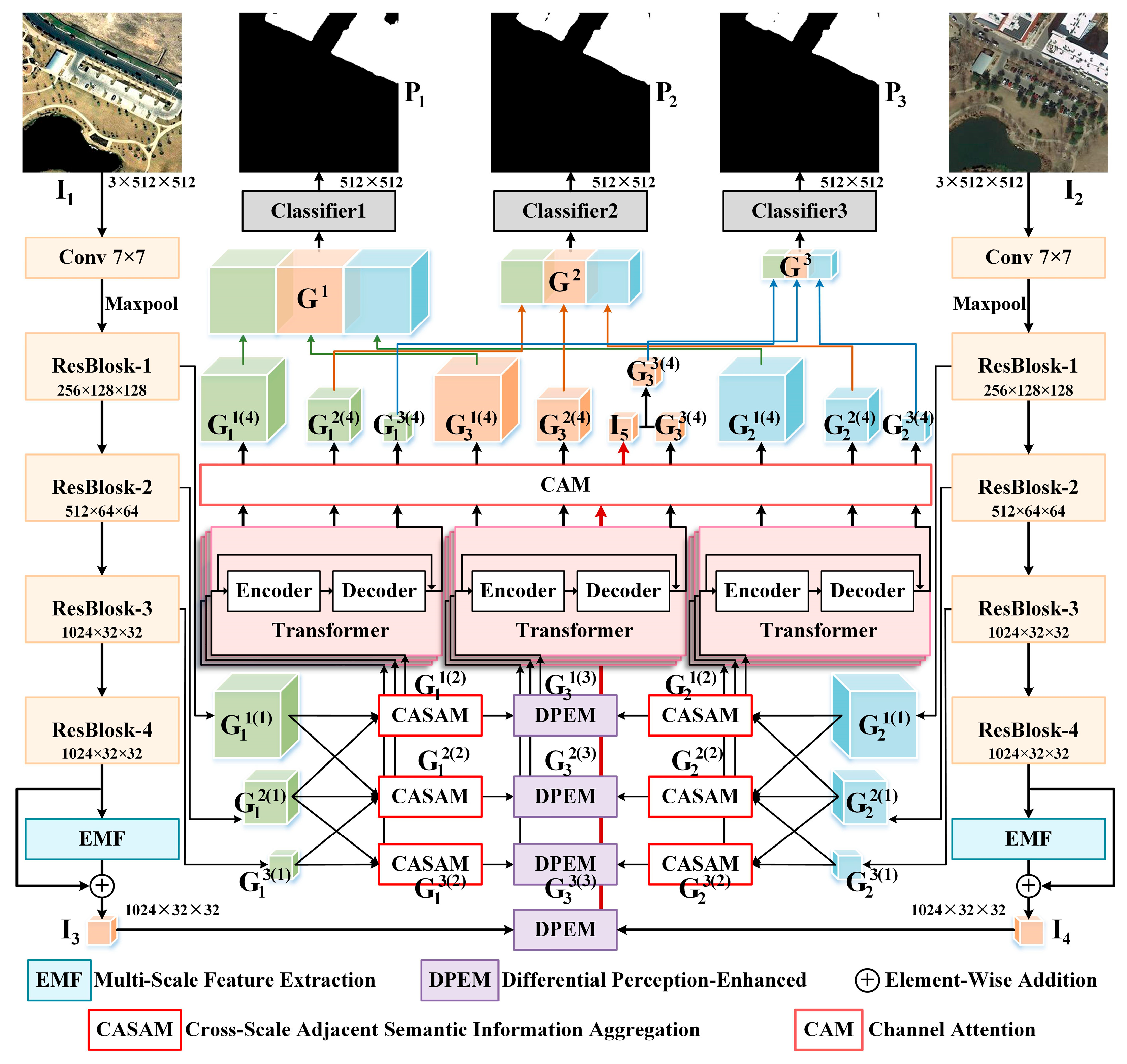

3.1. Overview

3.2. Multi-Scale CNN Feature Extractor

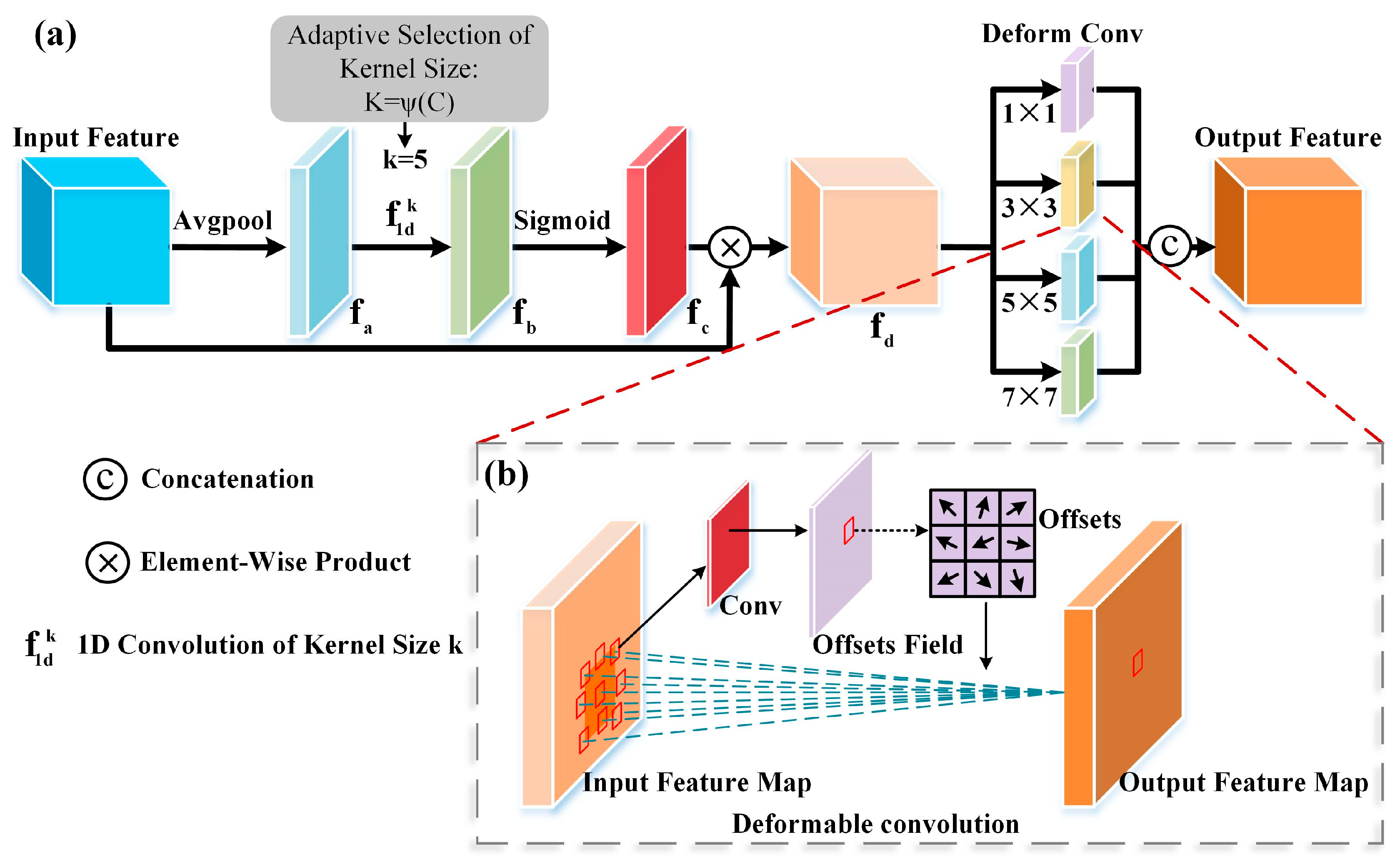

Multi-Scale Feature Extraction Module (EMF)

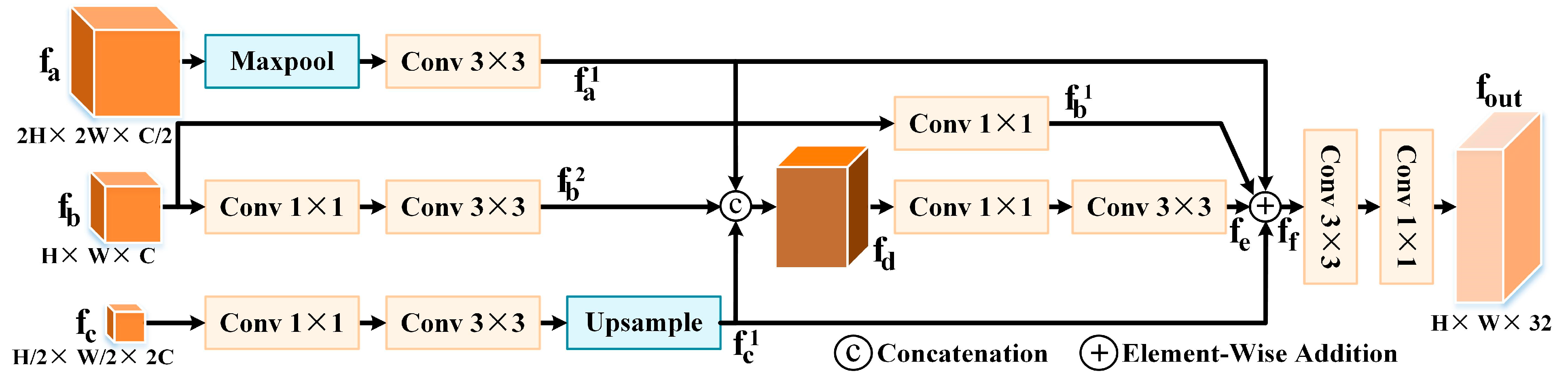

3.3. Cross-Scale Adjacent Semantic Information Aggregation Module (CASAM)

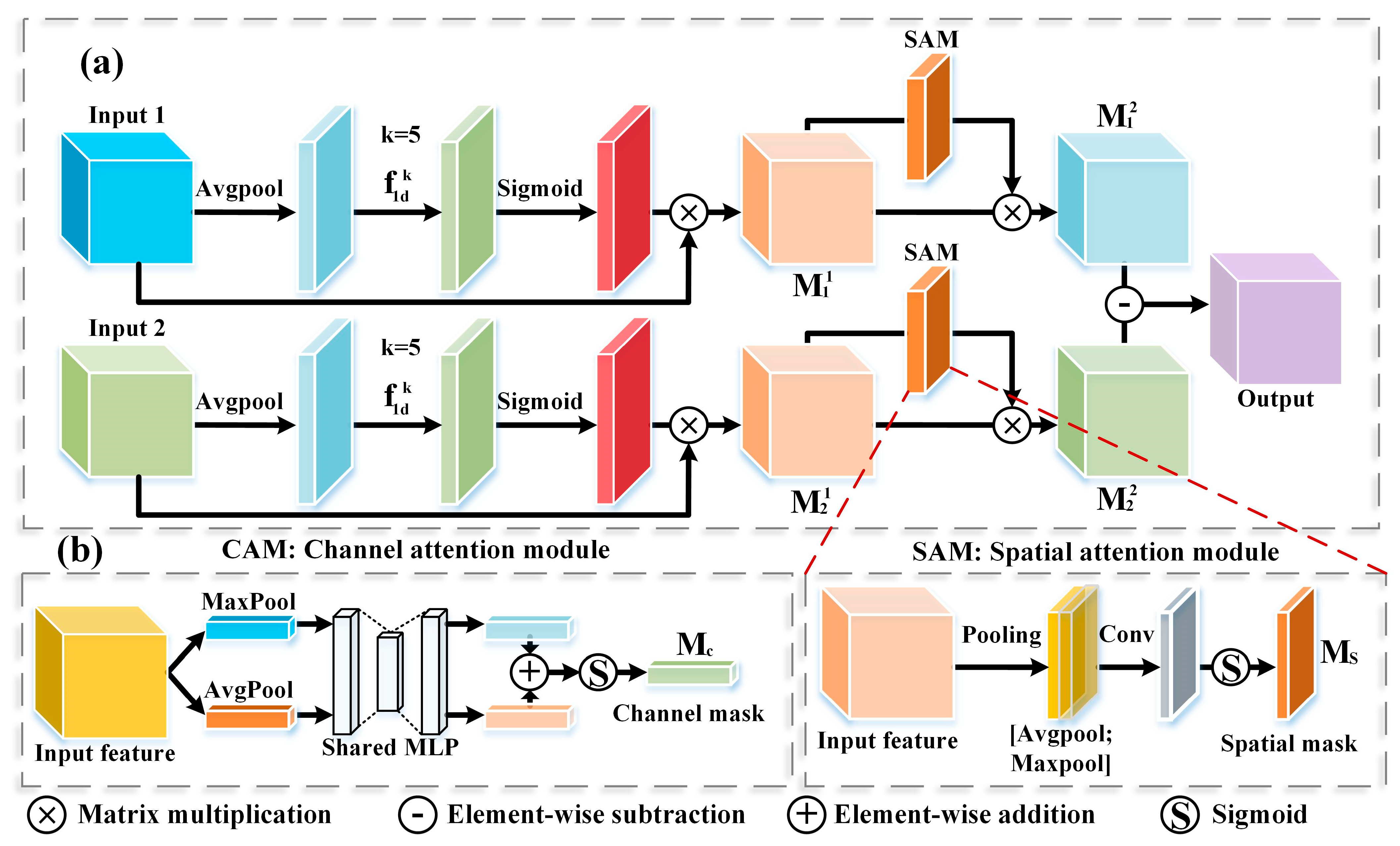

3.4. Differential-Perception-Enhanced Module (DPEM)

3.5. Transformer and Channel Attention Module (CAM)

3.6. Overall Loss Function

4. Experiments

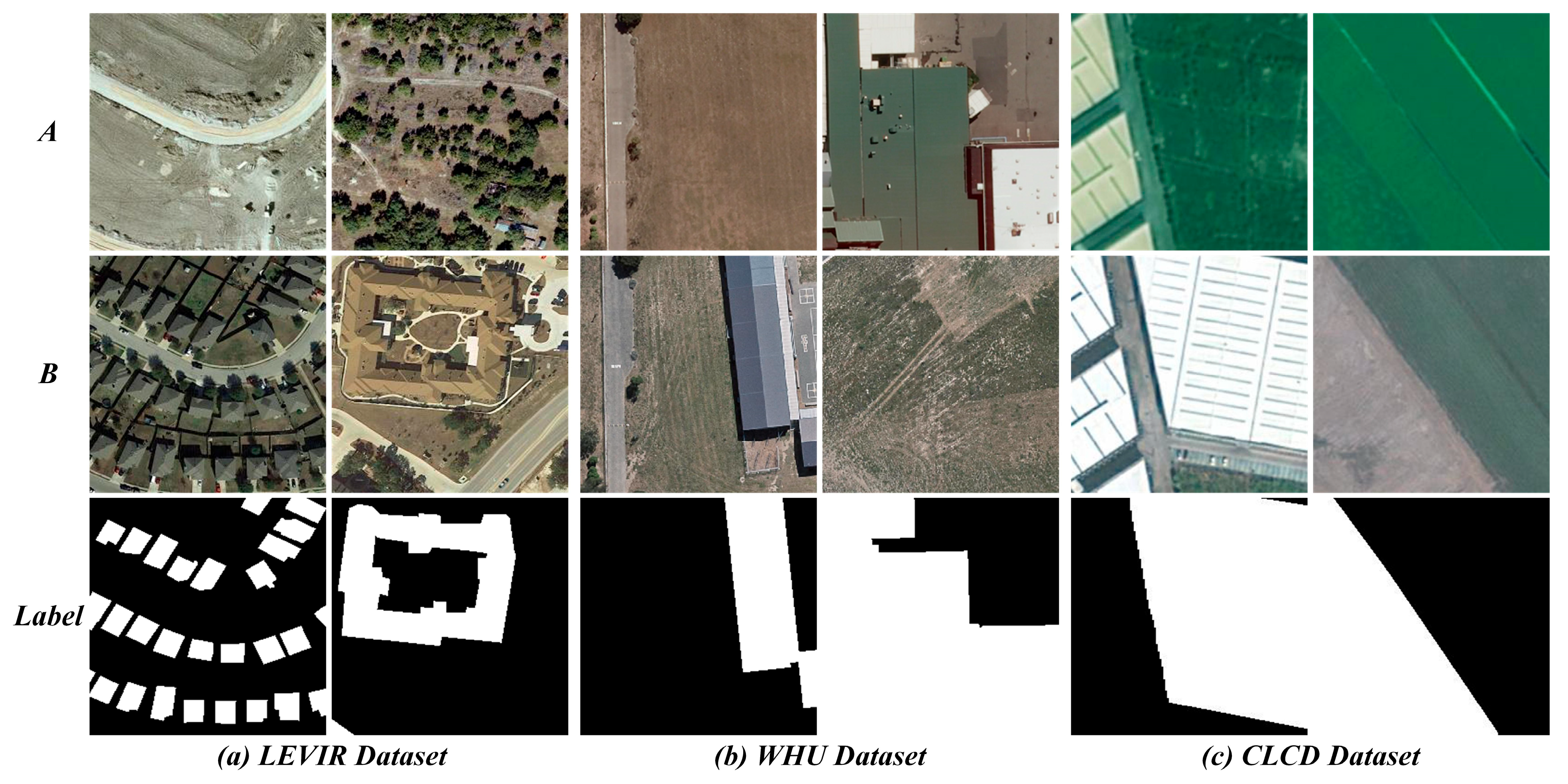

4.1. Dataset Introduction

4.2. Compared Methods

4.3. Implementation Details and Metrics
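The result tables report precision (P), recall (R), F1 and IoU. The following is a minimal sketch of how these metrics are conventionally computed for the changed class of a binary change map. It assumes that counts are accumulated over all pixels (0 = unchanged, 1 = changed) and that values are reported as percentages. The function name `change_metrics` is hypothetical, and the exact evaluation protocol used for MDNet is not stated here.

```python
from typing import Dict, Sequence


def change_metrics(pred: Sequence[int], gt: Sequence[int]) -> Dict[str, float]:
    """P, R, F1 and IoU (in %) for the 'changed' class (label 1).

    pred and gt are flattened binary change maps of equal length.
    Hypothetical helper: assumes pixel counts are pooled over the whole set.
    """
    if len(pred) != len(gt):
        raise ValueError("prediction and ground truth must have the same length")
    # Confusion-matrix counts for the changed class
    tp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 1)
    fp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    # Scale to percentages, as in the result tables
    scores = {"P": precision, "R": recall, "F1": f1, "IoU": iou}
    return {name: 100.0 * value for name, value in scores.items()}


if __name__ == "__main__":
    # Toy example: 2 true positives, 1 false positive, 1 false negative
    print(change_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0]))
```

In the toy example, P, R and F1 are all 2/3 (about 66.67%) and IoU is 2/4 = 50%. Pooling counts over the whole test set, rather than averaging per-image scores, is a design choice. The two conventions can give different numbers, so results are only comparable when every method is evaluated the same way.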

4.4. Ablation Experiments and Result Analysis

4.4.1. EMF

4.4.2. CASAM

4.4.3. DPEM

4.4.4. Transformer

4.4.5. CAM

4.4.6. EMF + CASAM

4.4.7. DPEM + Transformer

4.4.8. Complete Model

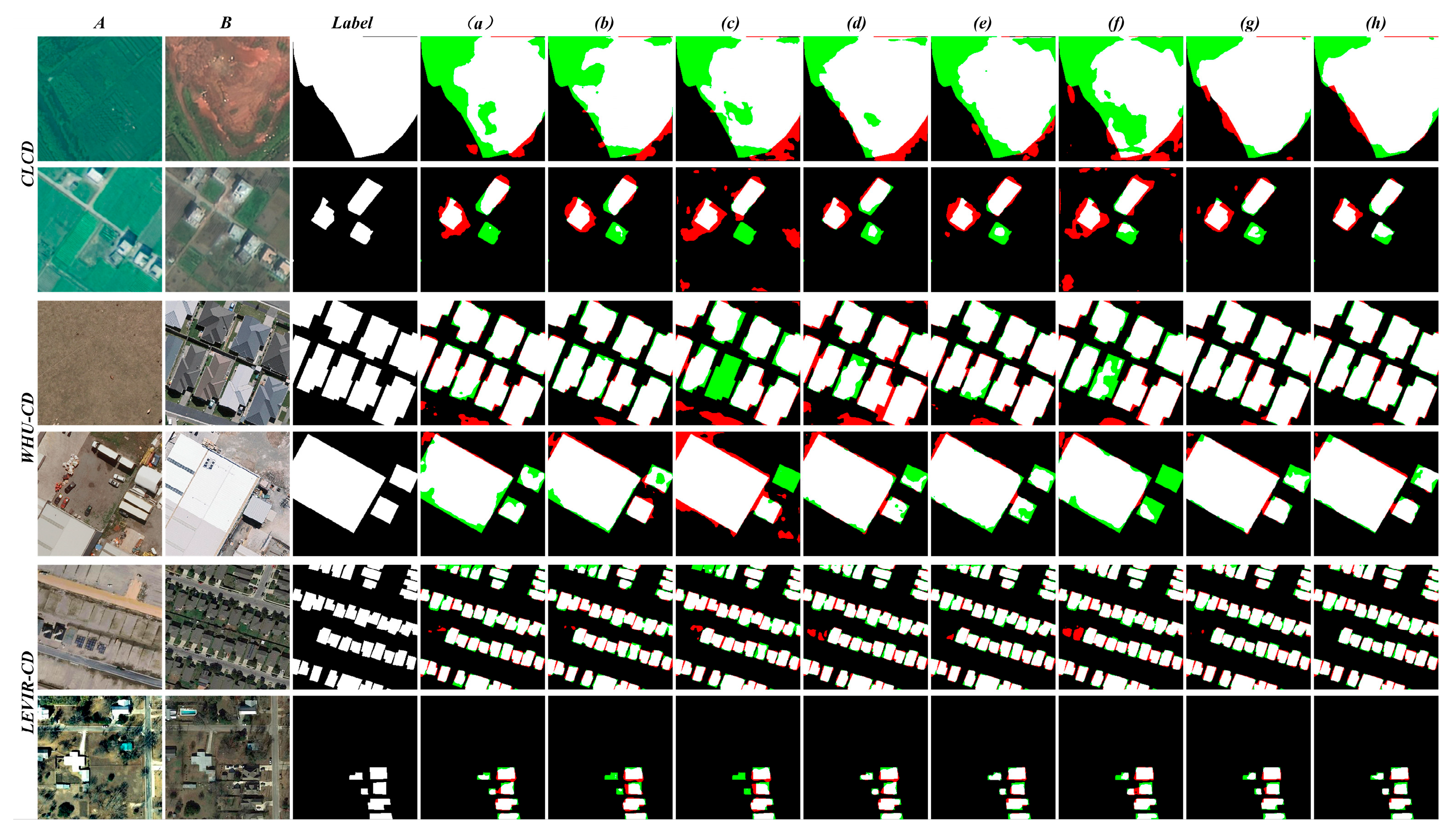

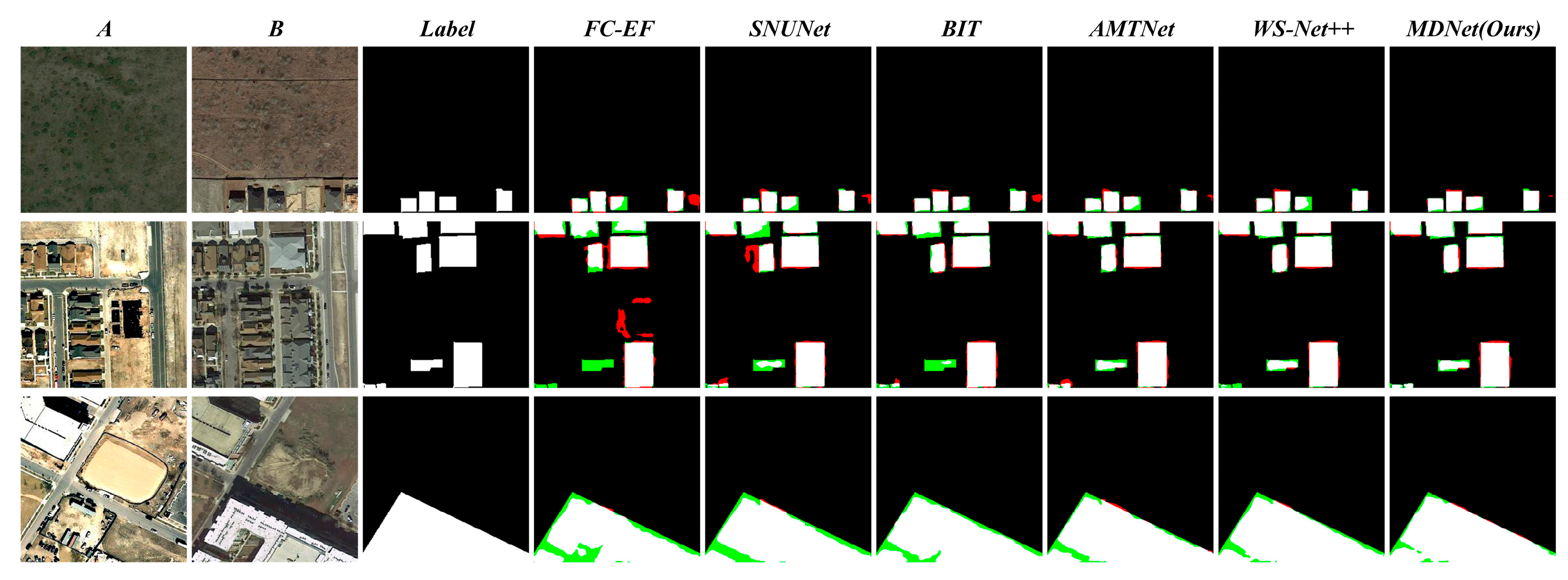

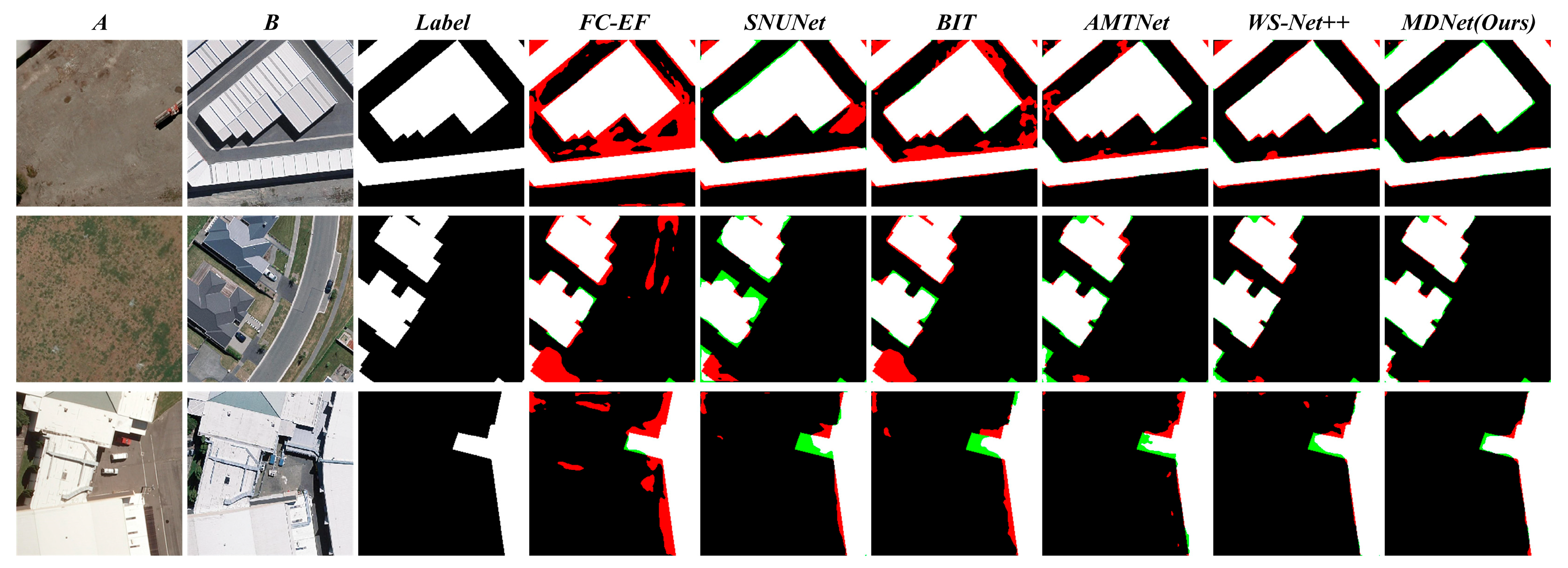

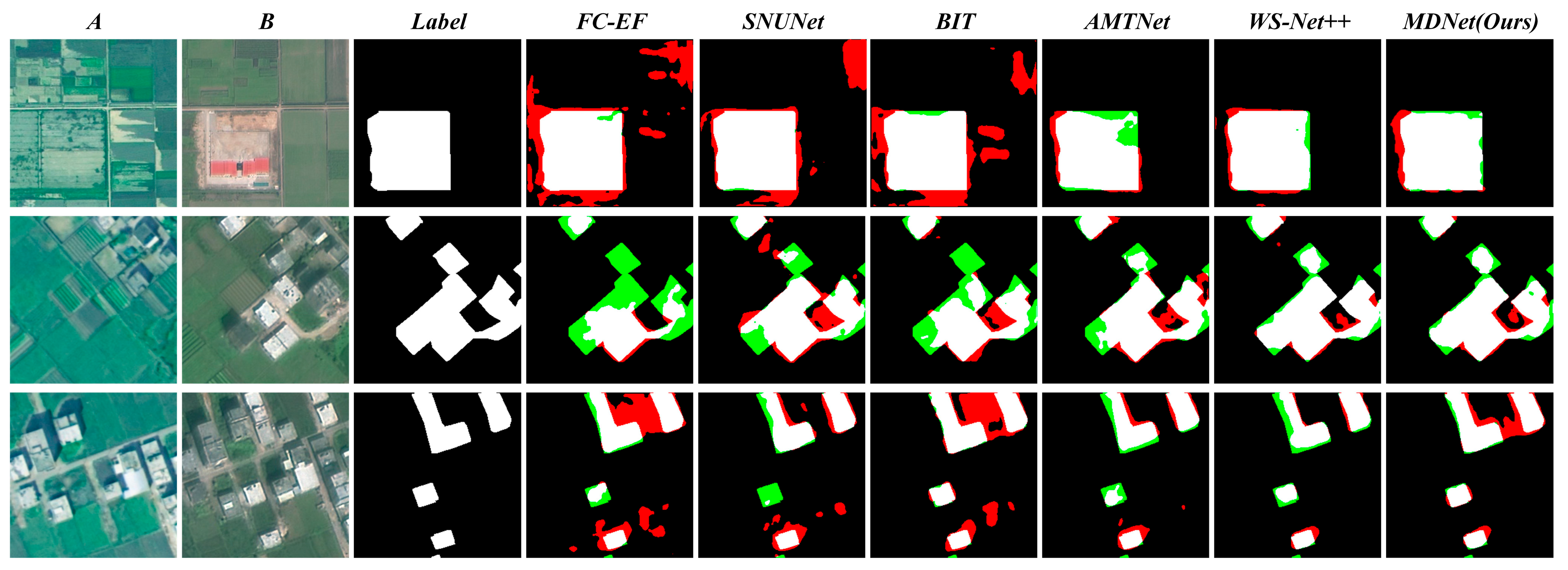

4.5. Comparative Experiment and Result Analysis

4.5.1. Comparisons on the LEVIR-CD Dataset

4.5.2. Comparisons on the WHU-CD Dataset

4.5.3. Comparisons on the CLCD Dataset

4.6. Model Efficiency

4.7. Analysis of Model Generalization and Real-Time Application Feasibility

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wu, C.; Zhang, L.; Du, B.; Chen, H.; Wang, J.; Zhong, H. UNet-like Remote Sensing Change Detection: A Review of Current Models and Research Directions. IEEE Geosci. Remote Sens. Mag. 2024, 12, 305–334.

- Feng, Y.; Jiang, J.; Xu, H.; Zheng, J. Change Detection on Remote Sensing Images Using Dual-Branch Multilevel Intertemporal Network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4401015.

- Li, Z.; Tang, C.; Liu, X.; Zhang, W.; Dou, J.; Wang, L.; Zomaya, A.Y. Lightweight Remote Sensing Change Detection with Progressive Feature Aggregation and Supervised Attention. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5602812.

- Cheng, G.; Huang, Y.; Li, X.; Lyu, S.; Xu, Z.; Zhao, H.; Zhao, Q.; Xiang, S. Change Detection Methods for Remote Sensing in the Last Decade: A Comprehensive Review. Remote Sens. 2024, 16, 2355.

- Xu, Y.; Lei, T.; Ning, H.; Lin, S.; Liu, T.; Gong, M.; Nandi, A.K. From Macro to Micro: A Lightweight Interleaved Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4406114.

- Afaq, Y.; Manocha, A. Analysis on Change Detection Techniques for Remote Sensing Applications: A Review. Ecol. Inform. 2021, 63, 101310.

- Peng, D.; Bruzzone, L.; Zhang, Y.; Guan, H.; Ding, H.; Huang, X. SemiCDNet: A Semisupervised Convolutional Neural Network for Change Detection in High Resolution Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5891–5906.

- Bai, B.; Fu, W.; Lu, T.; Li, S. Edge-Guided Recurrent Convolutional Neural Network for Multitemporal Remote Sensing Image Building Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5610613.

- Luo, F.; Zhou, T.; Liu, J.; Guo, T.; Gong, X.; Ren, J. Multiscale Diff-Changed Feature Fusion Network for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5502713.

- Ying, Z.; Tan, Z.; Zhai, Y.; Jia, X.; Li, W.; Zeng, J.; Genovese, A.; Piuri, V.; Scotti, F. DGMA2-Net: A Difference-Guided Multiscale Aggregation Attention Network for Remote Sensing Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

- Zhang, J.; Shao, Z.; Ding, Q.; Huang, X.; Wang, Y.; Zhou, X.; Li, D. AERNet: An Attention-Guided Edge Refinement Network and a Dataset for Remote Sensing Building Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5617116.

- Chen, J.; Yuan, Z.; Peng, J.; Chen, L.; Huang, H.; Zhu, J.; Liu, Y.; Li, H. DASNet: Dual Attentive Fully Convolutional Siamese Networks for Change Detection in High-Resolution Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1194–1206.

- Zhang, C.; Wang, L.; Cheng, S.; Li, Y. SwinSUNet: Pure Transformer Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5224713.

- Yan, T.; Wan, Z.; Zhang, P.; Cheng, G.; Lu, H. TransY-Net: Learning Fully Transformer Networks for Change Detection of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

- Zhang, X.; Cheng, S.; Wang, L.; Li, H. Asymmetric Cross-Attention Hierarchical Network Based on CNN and Transformer for Bitemporal Remote Sensing Images Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 2000415.

- Lei, T.; Xu, Y.; Ning, H.; Lv, Z.; Min, C.; Jin, Y.; Nandi, A.K. Lightweight Structure-Aware Transformer Network for Remote Sensing Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2023, 21, 6000305.

- Jiang, H.; Peng, M.; Zhong, Y.; Xie, H.; Hao, Z.; Lin, J.; Ma, X.; Hu, X. A Survey on Deep Learning-Based Change Detection from High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 1552.

- Chen, H.; Wu, C.; Du, B.; Zhang, L.; Wang, L. Change Detection in Multisource VHR Images via Deep Siamese Convolutional Multiple-Layers Recurrent Neural Network. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2848–2864.

- Xiang, S.; Wang, M.; Jiang, X.; Xie, G.; Zhang, Z.; Tang, P. Dual-Task Semantic Change Detection for Remote Sensing Images Using the Generative Change Field Module. Remote Sens. 2021, 13, 3336.

- Hou, X.; Bai, Y.; Li, Y.; Shang, C.; Shen, Q. High-Resolution Triplet Network with Dynamic Multiscale Feature for Change Detection on Satellite Images. ISPRS J. Photogramm. Remote Sens. 2021, 177, 103–115.

- Zhang, L.; Hu, X.; Zhang, M.; Shu, Z.; Zhou, H. Object-Level Change Detection with a Dual Correlation Attention-Guided Detector. ISPRS J. Photogramm. Remote Sens. 2021, 177, 147–160.

- Zhang, M.; Liu, Z.; Li, W.-Y.; Liu, L.; Jiao, L. Remote Sensing Image Change Detection Based on Deep Multi-Scale Multi-Attention Siamese Transformer Network. Remote Sens. 2023, 15, 842.

- Song, K.; Jiang, J. AGCDetNet: An Attention-Guided Network for Building Change Detection in High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4816–4831.

- Wang, W.; Tan, X.; Zhang, P.; Wang, X. A CBAM Based Multiscale Transformer Fusion Approach for Remote Sensing Image Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 6817–6825.

- Jiang, S.; Lin, H.; Ren, H.; Hu, Z.; Weng, L.; Xia, M. MDANet: A High-Resolution City Change Detection Network Based on Difference and Attention Mechanisms under Multi-Scale Feature Fusion. Remote Sens. 2024, 16, 1387.

- Zhao, Y.; Chen, P.; Chen, Z.; Bai, Y.; Zhao, Z.; Yang, X. A Triple-Stream Network with Cross-Stage Feature Fusion for High-Resolution Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5600417.

- Li, Z.; Cao, S.; Deng, J.; Wu, F.; Wang, R.; Luo, J.; Peng, Z. STADE-CDNet: Spatial–Temporal Attention with Difference Enhancement-Based Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5611617.

- Sun, S.; Mu, L.; Wang, L.; Liu, P. L-UNet: An LSTM Network for Remote Sensing Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8004505.

- Bandara, W.G.C.; Patel, V.M. A Transformer-Based Siamese Network for Change Detection. Available online: https://ieeexplore.ieee.org/document/9883686 (accessed on 12 January 2025).

- Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection with Transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607514.

- Chen, P.; Zhang, B.; Hong, D.; Chen, Z.; Yang, X.; Li, B. FCCDN: Feature Constraint Network for VHR Image Change Detection. ISPRS J. Photogramm. Remote Sens. 2022, 187, 101–119.

- Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A Deeply Supervised Image Fusion Network for Change Detection in High Resolution Bi-Temporal Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200.

- Lin, H.; Wang, X.; Li, M.; Huang, D.; Wu, R. A Multi-Task Consistency Enhancement Network for Semantic Change Detection in HR Remote Sensing Images and Application of Non-Agriculturalization. Remote Sens. 2023, 15, 5106.

- Li, Y.; Zou, S.; Zhao, T.; Su, X. MDFA-Net: Multi-Scale Differential Feature Self-Attention Network for Building Change Detection in Remote Sensing Images. Remote Sens. 2024, 16, 3466.

- Wu, B.; Xu, C.; Dai, X.; Wan, A.; Zhang, P.; Yan, Z.; Tomizuka, M.; Gonzalez, J.; Keutzer, K.; Vajda, P. Visual Transformers: Token-Based Image Representation and Processing for Computer Vision. Available online: https://arxiv.org/abs/2006.03677 (accessed on 12 January 2025).

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-To-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision (ECCV) 2020, Glasgow, UK, 23–28 August 2020; Volume 12346, pp. 213–229.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-To-End Object Detection. Available online: https://arxiv.org/abs/2010.04159 (accessed on 12 January 2025).

- Jiang, B.; Wang, Z.; Wang, X.; Zhang, Z.; Chen, L.; Wang, X.; Luo, B. VcT: Visual Change Transformer for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14.

- Tang, W.; Wu, K.; Zhang, Y.; Zhan, Y. A Siamese Network Based on Multiple Attention and Multilayer Transformers for Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5219015.

- Wu, Y.; Li, L.; Wang, N.; Li, W.; Fan, J.; Tao, R.; Wen, X.; Wang, Y. CSTSUNet: A Cross Swin Transformer-Based Siamese U-Shape Network for Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5623715.

- Feng, Y.; Xu, H.; Jiang, J.; Liu, H.; Zheng, J. ICIF-Net: Intra-Scale Cross-Interaction and Inter-Scale Feature Fusion Network for Bitemporal Remote Sensing Images Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4410213.

- Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like Transformer for Efficient Semantic Segmentation of Remote Sensing Urban Scene Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

- Li, W.; Xue, L.; Wang, X.; Li, G. ConvTransNet: A CNN–Transformer Network for Change Detection with Multiscale Global–Local Representations. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5610315.

- Zhao, J.; Jiao, L.; Wang, C.; Liu, X.; Liu, F.; Li, L.; Yang, S. GeoFormer: A Geometric Representation Transformer for Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4410617.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V. Going Deeper with Convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Liu, M.; Chai, Z.; Deng, H.; Liu, R. A CNN-Transformer Network with Multiscale Context Aggregation for Fine-Grained Cropland Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4297–4306.

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Urban Change Detection for Multispectral Earth Observation Using Convolutional Neural Networks. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018.

- Fang, S.; Li, K.; Shao, J.; Li, Z. SNUNet-CD: A Densely Connected Siamese Network for Change Detection of VHR Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8007805.

- Liu, W.; Lin, Y.; Liu, W.; Yu, Y.; Li, J. An Attention-Based Multiscale Transformer Network for Remote Sensing Image Change Detection. ISPRS J. Photogramm. Remote Sens. 2023, 202, 599–609.

- Xiong, F.; Li, T.; Yang, Y.; Zhou, J.; Lu, J.; Qian, Y. Wavelet Siamese Network with Semi-Supervised Domain Adaptation for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5633613.

Ablation results on the three datasets (√ = module included, × = module removed).

| Model | EMF | CASAM | DPEM | Transformer | CAM | LEVIR-CD F1 (%) | LEVIR-CD IoU (%) | WHU-CD F1 (%) | WHU-CD IoU (%) | CLCD F1 (%) | CLCD IoU (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MDNet | × | √ | √ | √ | √ | 90.43 | 82.32 | 93.79 | 88.66 | 77.25 | 62.39 |
| MDNet | √ | × | √ | √ | √ | 90.3 | 83.1 | 93.12 | 89.05 | 77.47 | 62.86 |
| MDNet | × | × | √ | √ | √ | 88.89 | 80.74 | 91.45 | 85.36 | 71.38 | 58.12 |
| MDNet | √ | √ | × | √ | √ | 89.39 | 81.5 | 92.56 | 88.24 | 77.42 | 63.35 |
| MDNet | √ | √ | √ | × | √ | 90.69 | 83.05 | 93.5 | 88.58 | 77.65 | 62.61 |
| MDNet | √ | √ | × | × | √ | 89.08 | 81.06 | 91.7 | 85.86 | 72.24 | 58.64 |
| MDNet | √ | √ | √ | √ | × | 90.81 | 83.39 | 94.13 | 89.35 | 78.23 | 64.05 |
| MDNet | √ | √ | √ | √ | √ | 91.56 | 84.05 | 94.77 | 90.21 | 78.68 | 64.43 |
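The ablation comparison is easier to read as the F1 loss of each variant relative to the complete model. The sketch below transcribes the F1 columns of the ablation table and computes those drops. The variant labels and the helper name `f1_drops` are illustrative, not names used by the authors.

```python
from typing import Dict, Tuple

DATASETS = ("LEVIR-CD", "WHU-CD", "CLCD")

# F1 scores (%) transcribed from the ablation table, ordered as DATASETS
FULL: Tuple[float, ...] = (91.56, 94.77, 78.68)
VARIANTS: Dict[str, Tuple[float, ...]] = {
    "w/o EMF": (90.43, 93.79, 77.25),
    "w/o CASAM": (90.3, 93.12, 77.47),
    "w/o EMF + CASAM": (88.89, 91.45, 71.38),
    "w/o DPEM": (89.39, 92.56, 77.42),
    "w/o Transformer": (90.69, 93.5, 77.65),
    "w/o DPEM + Transformer": (89.08, 91.7, 72.24),
    "w/o CAM": (90.81, 94.13, 78.23),
}


def f1_drops(full: Tuple[float, ...], variants: Dict[str, Tuple[float, ...]]) -> Dict[str, Tuple[float, ...]]:
    """Full-model F1 minus variant F1 on each dataset, rounded to 2 decimals."""
    return {
        name: tuple(round(f - v, 2) for f, v in zip(full, scores))
        for name, scores in variants.items()
    }


if __name__ == "__main__":
    for name, drops in f1_drops(FULL, VARIANTS).items():
        print(name, dict(zip(DATASETS, drops)))
```

Every drop is positive. The paired removals cost the most on CLCD. Removing EMF and CASAM together costs 7.30 F1 points, compared with 1.43 and 1.21 when each is removed alone. Removing DPEM and the Transformer together costs 6.44 points, compared with 1.26 and 1.03.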

Comparison results on the LEVIR-CD dataset.

| Method | P (%) | R (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|
| FC-EF | 86.91 | 80.17 | 83.4 | 71.53 |
| SNUNet | 89.18 | 87.17 | 88.16 | 78.83 |
| BiT | 89.24 | 89.37 | 89.31 | 80.68 |
| AMTNet | 91.82 | 89.71 | 90.76 | 83.08 |
| WS-Net++ | 93.32 | 88.97 | 90.96 | 83.51 |
| MDNet (Ours) | 92.25 | 90.64 | 91.56 | 84.05 |

Comparison results on the WHU-CD dataset.

| Method | P (%) | R (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|
| FC-EF | 80.87 | 75.43 | 78.05 | 64.01 |
| SNUNet | 83.25 | 91.35 | 87.11 | 77.17 |
| BiT | 83.05 | 88.8 | 85.83 | 75.18 |
| AMTNet | 92.86 | 91.99 | 92.27 | 85.64 |
| WS-Net++ | 95.36 | 92.7 | 94.02 | 89.41 |
| MDNet (Ours) | 94.35 | 93.84 | 94.77 | 90.21 |

Comparison results on the CLCD dataset.

| Method | P (%) | R (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|
| FC-EF | 71.7 | 47.6 | 57.22 | 40.07 |
| SNUNet | 70.82 | 62.37 | 66.32 | 49.62 |
| BiT | 61.42 | 62.75 | 62.08 | 45.01 |
| AMTNet | 78.64 | 75.06 | 76.81 | 62.35 |
| WS-Net++ | 82.58 | 75.47 | 79.64 | 65.34 |
| MDNet (Ours) | 80.97 | 76.52 | 78.68 | 64.43 |

Model efficiency of the compared methods.

| Method | FLOPs (G) | Params (M) |
|---|---|---|
| FC-EF | 3.58 | 1.35 |
| SNUNet | 54.83 | 12.03 |
| BiT | 8.75 | 3.49 |
| AMTNet | 21.56 | 24.67 |
| WS-Net++ | 40.59 | 906.16 |
| MDNet (Ours) | 26.43 | 36.62 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Zhao, M.; Wei, X.; Shao, Y.; Wang, Q.; Yang, Z. MDNet: A Differential-Perception-Enhanced Multi-Scale Attention Network for Remote Sensing Image Change Detection. Appl. Sci. 2025, 15, 8794. https://doi.org/10.3390/app15168794


Chicago/Turabian Style: Li, Jingwen, Mengke Zhao, Xiaoru Wei, Yusen Shao, Qingyang Wang, and Zhenxin Yang. 2025. "MDNet: A Differential-Perception-Enhanced Multi-Scale Attention Network for Remote Sensing Image Change Detection." Applied Sciences 15, no. 16: 8794. https://doi.org/10.3390/app15168794
