1. Introduction

The digital age enables both global innovation and a growing cybercrime economy, which is projected to cost almost 10.5 trillion USD annually by 2025 [1], surpassing the GDP of all but the two biggest countries in the world to become the third largest “economy” [2]. This leads not only to enormous financial losses but also to operational damage, decreased public trust, and reputational damage for public and private organizations. The average cost of a data breach increased by 10% year-over-year to reach 4.88 million USD in 2024 [3]. Moreover, cyberattacks have evolved into sophisticated, continuous threats. More specifically, an estimated 3.4 billion malicious emails circulate daily, with phishing being the dominant attack, accounting for 45.56% of all scam emails in 2021 [4]. Cyberattacks occur every few seconds, whilst an organization needs 258 days on average to contain a data breach [3]. This alarming increase is reflected in IBM’s report, which indicated a 266% increase in infostealer deployments and a 71% rise in valid credentials attacks [5]. Also, emerging technologies like generative Artificial Intelligence (e.g., Large Language Models (LLMs) like ChatGPT) enabled a remarkable increase of 4151% in hyper-personalized phishing and deepfake scams [6], while IoT attacks surged 124% together with a 93% rise in encrypted threats in 2024 [7].

This ever-evolving landscape has turned attention to the development of state-of-the-art, AI-powered solutions that can predict, prevent, tackle, and remedy cyberattacks. A significant rise in related scientific research supports this paradigm shift toward AI-driven solutions. According to Purnama et al.’s [8] bibliometric analysis, the number of published studies on this topic grew by 1392% over the course of five years, from 13 publications in 2019 to 194 in 2023. There is a clear trend in this growing body of work toward integrative and hybrid approaches. These methods improve detection performance, system resilience, and adaptive capacity by carefully combining several AI techniques, including generative modeling, ensemble classification, and transfer learning. This development recognizes that no single model is always the best approach to counter the various and changing methods utilized by threat actors.

The performance of AI-driven solutions is highly dependent on data availability and quality. Given this context, data augmentation in cybersecurity, whether applied to existing or newly collected datasets, has gained a lot of interest. Generative Adversarial Networks (GANs), introduced by Goodfellow et al. [9] in 2014, offer significant potential in this area by generating synthetic data records nearly indistinguishable from real input samples. GANs can address the ongoing scarcity of network intrusion records and malware images by generating realistic synthetic samples. These samples can closely resemble real-world occurrences and, in addition to supplementing limited datasets, allow for the simulation of controlled scenarios for uncommon attack types, such as zero-day exploits.

Dedicated AI solutions are often limited to a very specific task. So, in complex, unbalanced, or noisy operating environments, ensemble methods—combining the predictions of a set of classifiers—consistently exhibit higher accuracy and robustness than single-model approaches. Transfer learning techniques, on the other hand, enable models that have been trained on a single dataset or domain to be successfully adapted for new, related tasks. This improves model generalization across different network infrastructures and significantly reduces the need for extensive retraining.

This study presents an integrative ML framework designed to significantly advance cybersecurity threat detection. Its primary purpose is to unify and enhance detection capabilities across diverse and evolving threat modalities (including zero-day attacks, botnets, and malware concealed within images) through a single, modular pipeline architecture. The framework addresses this goal by starting with synthetic data generation using GANs and continuing with threat detection that combines transfer learning for image-based malware detection and ensemble learning for tabular network intrusion data analysis. This research directly builds upon the authors’ prior work in GAN-based malware synthesis and classification [10], zero-day attack data generation [11], and multi-model ensemble evaluation for intrusion detection [12], aiming to create a comprehensive and scalable solution for heterogeneous cyber threats.

The main added value of the approach presented in the current study lies in its highly flexible, scalable, and adaptable modular architecture, which uniquely supports different input data types (image-based threats and tabular network data) without requiring standardization. It features two independently operating branches: an image-processing branch optimized for malware classification using CNNs and transfer learning, and a tabular-processing branch employing a soft-voting ensemble classifier for network anomaly detection. Each branch functions autonomously, providing binary (malicious/benign) outputs/predictions. Crucially, these outputs can be fused at a decision layer when both data modalities pertain to the same input instance. This optional fusion combines the strengths of each specialized detection approach, significantly boosting the system’s resilience against sophisticated, evasive, and zero-day threats—delivering enhanced, unified protection where traditional single-modality systems fall short. This design enables the system to operate effectively in complex threat scenarios, where multimodal indicators (e.g., obfuscated malware executables, traces of botnet or DDoS attacks) are present. Real-world platforms such as malware sandboxing systems, Security Information and Event Management (SIEM), and Extended Detection and Response (XDR) architectures often capture both static payloads and their dynamic behaviors across various channels. By unifying these modalities in a modular and flexible pipeline, the framework supports either independent or joint analysis, enhancing adaptability, improving detection accuracy, and reducing false positives.

The remainder of the paper is organized as follows: Section 2 presents related works, focusing on the domain of cybersecurity and mainly revolving around GANs, zero-day attacks, transfer learning, and ensemble methods. Section 3 describes in detail the proposed methodology, whilst Section 4 elaborates on the produced results. Finally, Section 5 concludes the paper.

2. Related Works

In recent scientific literature, many publications utilize GANs for detecting and fighting cybersecurity threats. Mu et al. [13] proposed a Wasserstein Generative Adversarial Network with Gradient Penalty (WGAN-GP) model for generating synthetic network traffic data that can realistically simulate patterns of zero-day attacks. Experimental testing using the NSL-KDD dataset [14] showed that the WGAN-GP model could improve the detection accuracy for both binary classification and multi-classification (2.3% and 2% improvement, respectively). The model helped the classification models in identifying even subtle signatures of zero-day attacks. A GAN-based model for detecting zero-day malware in Android mobile devices was introduced by Chhaybi and Lazaar [15]. This model was capable of producing previously unknown viruses and threats, which were used for the training process. Both the sigmoid and ReLU activation functions were employed, and the Fréchet Inception Distance (FID) and Inception Score (IS) were used for evaluating the performance of the model. The FID metric is commonly used for assessing the performance of GANs [16] and measures the similarity between the training set distribution and the distribution of the generated samples. The IS evaluates the diversity and quality of the images generated by a GAN model [17]. The model proposed by the authors reached an IS of 7.65 and an FID of 2.34, indicating that its generated results were very realistic and of high quality. Won et al. [18] presented a GAN-based malware training and augmentation framework. The so-called PlausMal-GAN framework was capable of generating high-quality zero-day malware images of high diversity. For the classification of malware images, both real and generated malware data were utilized. Furthermore, the framework was tested with four different GAN models, namely the Least Squares Generative Adversarial Network (LSGAN), WGAN-GP, Deep Convolutional Generative Adversarial Network (DCGAN), and Evolutionary Generative Adversarial Network (E-GAN), yielding classification results of up to 98.74% accuracy. Alabrah [19] proposed a GAN-based framework for binary and multi-class intrusion detection. To address the class imbalance issue of many publicly available datasets for NIDS, the author utilized GAN hyperparameter optimization followed by the chi-square method for feature selection. Experimental testing on the UNSW-NB15 dataset indicated high accuracy (98.14% and 87.44%) and F1-score (98.14% and 86.79%) for binary and multi-class classification, respectively.

GANs are also employed to counter attacks on IoT and Internet of Vehicles (IoV) applications. Benaddi et al. [20] proposed an Intrusion Detection System (IDS) and utilized Conditional Generative Adversarial Networks (cGANs) to improve the training process, which often suffers from missing or unbalanced data. More specifically, the IDS model was CNN-LSTM-based. These kinds of models combine the strengths of CNNs and Long Short-Term Memory (LSTM) networks. The IDS model was evaluated both before and after applying cGANs. This combination yielded better overall accuracy, precision, and F1-scores for different attack types (e.g., Denial-of-Service (DoS), Distributed Denial-of-Service (DDoS), Info Theft, Info Gathering) and increased the theft attack detection accuracy by 40%. Saurabh et al. [21] proposed a Semi-supervised GAN model (SGAN) for detecting botnets in Internet of Things (IoT) environments. This model aimed to overcome the challenge of many supervised models, which, due to unlabeled network traffic, sometimes cannot directly categorize botnets that are responsible for a specific attack. The SGAN achieved a binary classification accuracy of 99.89% and a multi-classification accuracy of 59%. These results were better than the results achieved by an Artificial Neural Network (ANN) model and a CNN model when tested on the same dataset. The model also did not require the large, labelled datasets that supervised learning models often need.

To overcome the issues of imbalanced datasets, which are often present in IoT applications, as well as the difficulties of contemporary NIDS in detecting previously unknown threats, Li et al. [22] proposed an algorithm that combined the strengths of GANs and Transformer models. More specifically, the so-called TransGAN-IDS model combined the generative capabilities of GANs and the ability of Transformers to capture long-range dependencies in data. Experimental testing indicated an F1-score of 95.2% using the CIC-IDS2017 dataset [23] and of 83.2% using the Kitsune dataset [24]. Chu and Lin [25] proposed a model for IoT attack classification. This model made use of GANs for data augmentation as well as a Multilayer Perceptron classifier. The authors reported an F1-score of over 90% when using the BoT-IoT dataset [26] or the ToN-IoT dataset [27].

A model for IoV applications was showcased by Xu et al. [28]. This model aimed at improving the detection of zero-day attacks in IoV applications, which often lack labelled data. The authors designed an attack sample augmentation algorithm, also incorporating a collaborative focal loss function into the discriminator to improve classification results. Experimental testing of this approach indicated higher F1 scores than similar models, yielding an average 93.32% F1 score across four different attack types (i.e., DoS, Disruptive, RandomSpeed (RS), RandomPosOffset (RPO)). The loss function used by the authors also outperformed other loss functions (Wasserstein Distance, Cross-Entropy Loss, Euclidean Distance, Kullback-Leibler Divergence) in terms of F1-score and AUC score. Another model for Intrusion Detection was proposed by Kumar and Sinha [29]. This model combined Wasserstein Conditional Generative Adversarial Networks (WCGANs) and an XGBoost classifier. It was used for both synthetic data generation and the classification of different kinds of attacks. It made use of a gradient penalty for updating weights and was experimentally tested on three datasets (i.e., BoT-IoT, UNSW-NB15, and NSL-KDD). Its performance was also compared to the DGM model [30], achieving better results in terms of Precision, Recall, and F1-score (86.7%, 88.47%, and 87.58%, respectively, as compared to 63.82%, 57.43%, and 60.46% for the DGM model).

DDoS attacks and botnet detection are the main focal points in different GAN-based publications. Lent et al. [31] proposed a GAN-based anomaly detection system for the detection and mitigation of DDoS attacks on software-defined networks. The model was tested with the Orion and the CIC-DDoS2019 datasets, yielding an F1-score of 98.5% without being seriously affected by the imbalance in the datasets. The model also supported mitigation by determining which network flows are placed on a block list and which on a safe list. Botnet detectors are often targets of adversarial evasion attacks. Taking this into consideration, Randhawa et al. [32] introduced a GAN model that also utilized deep reinforcement learning for both exploring semantically aware samples and hardening the detection of botnets. The so-called RELEVAGAN was also experimentally tested and compared to a similar model called EVAGAN [33]. The results indicated that RELEVAGAN outperformed EVAGAN in terms of convergence speed. More specifically, it converged at least 20 iterations earlier than EVAGAN on all the tested datasets. Aiming to address the issue of critical information leakage during the training of GANs for botnet detection, Feizi and Ghaffari [34] presented a method combining Deep Convolutional GANs (DCGANs) and Differential Privacy (DP). The authors used DCGANs to distinguish real and fake botnets, applied DP, and implemented a mix-up method for stabilizing the training process. Experimental testing of the method indicated a classification accuracy of 87.4% while keeping information leakage during the training process at acceptable levels.

In the following publications, ensemble machine learning techniques were applied for botnet detection. Afrifa et al. [35] combined three ML models (i.e., Random Forest (RF), Generalized Linear Model (GLM), and Decision Trees (DT)), building a stacking ensemble model for detecting botnets in computer traffic. Out of the three individual ML models, the RF yielded the best coefficient of determination (R²), reaching 0.9977, followed by the DT with 0.9882, and the GLM with 0.9522. The use of the stacking ensemble model led to an increase in R² as compared to the use of individual ML models, resulting in a 0.2% improvement compared to RF and 1.15% and 3.75% as compared to the DT model and the GLM model, respectively. Another model based on ensemble learning was proposed by Abu Al-Haija and Al-Dala’ien [36]. The so-called ELBA-IoT model aimed to serve as a lightweight botnet detection system for IoT applications. More specifically, the authors combined three DT techniques (i.e., AdaBoosted, RUSBoosted, Bagged). The proposed model was capable of profiling behavioral features in IoT networks and detecting anomalous traffic from compromised IoT nodes. Experimental testing showed that ELBA-IoT could reach high accuracy rates (up to 99.6%) while having a very low inference time of 40 μs. The accuracy of the ensemble classifier was higher than the accuracy of each individual classifier (AdaBoosted reached 97.3%, RUSBoosted reached 97.7%, and Bagged reached 96.2%). Hossain and Islam [37] presented a model for botnet detection that used ensemble learning and combined different feature selection techniques. More specifically, for the feature selection, the Principal Component Analysis, Mutual Information, and Categorical Analysis techniques were combined. Furthermore, Extra Trees ensemble classification models were used, in which every decision tree was trained on a random subset of features from the input dataset. Experimental testing using different datasets (e.g., N-BaIoT, Bot-IoT, CTU-13, ISCX, CCC, CICIDS) indicated very high performance in terms of botnet detection, reaching a true positive rate of 99%. Finally, Srinivasan and Deepalakshmi [38] presented an ensemble classifier for botnet detection with a stacking process called ECASP. The proposed model yielded an accuracy of 94.08%, a sensitivity of 86.5%, and a specificity of 85.68% when tested on publicly available datasets. ECASP outperformed three different models, i.e., an Extreme Learning Machine (ELM), a Support Vector Machine (SVM), and a CNN model.

While there is significant research interest around GAN-based models for detecting network attacks, most publications focus on specific attack types or on specific data modalities. On the contrary, the work presented in this paper follows an integrative and scalable approach that encompasses diverse attack types and data modalities. As a result, it can handle both tabular data and images, and detect zero-day attacks, botnet attacks, as well as malware hidden within image files.

3. Proposed Methodology

Section 3 presents the proposed integrative ML framework, which has been designed for advanced cybersecurity threat detection and mitigation actions. This unified system combines synthetic data generation using GANs, transfer learning for image-based malware detection, and ensemble learning for tabular network intrusion detection. The main goal of this research is to unify and enhance detection capabilities across diverse threat modalities and types, from zero-day and botnet attacks to malware included within images, through a modular and flexible pipeline architecture. The suggested methodology builds upon the authors’ previous work in related areas, including GAN-based malware image synthesis and classification [10], zero-day attack data generation using tailored GAN models [11], and multi-model ensemble evaluations for intrusion detection [12].

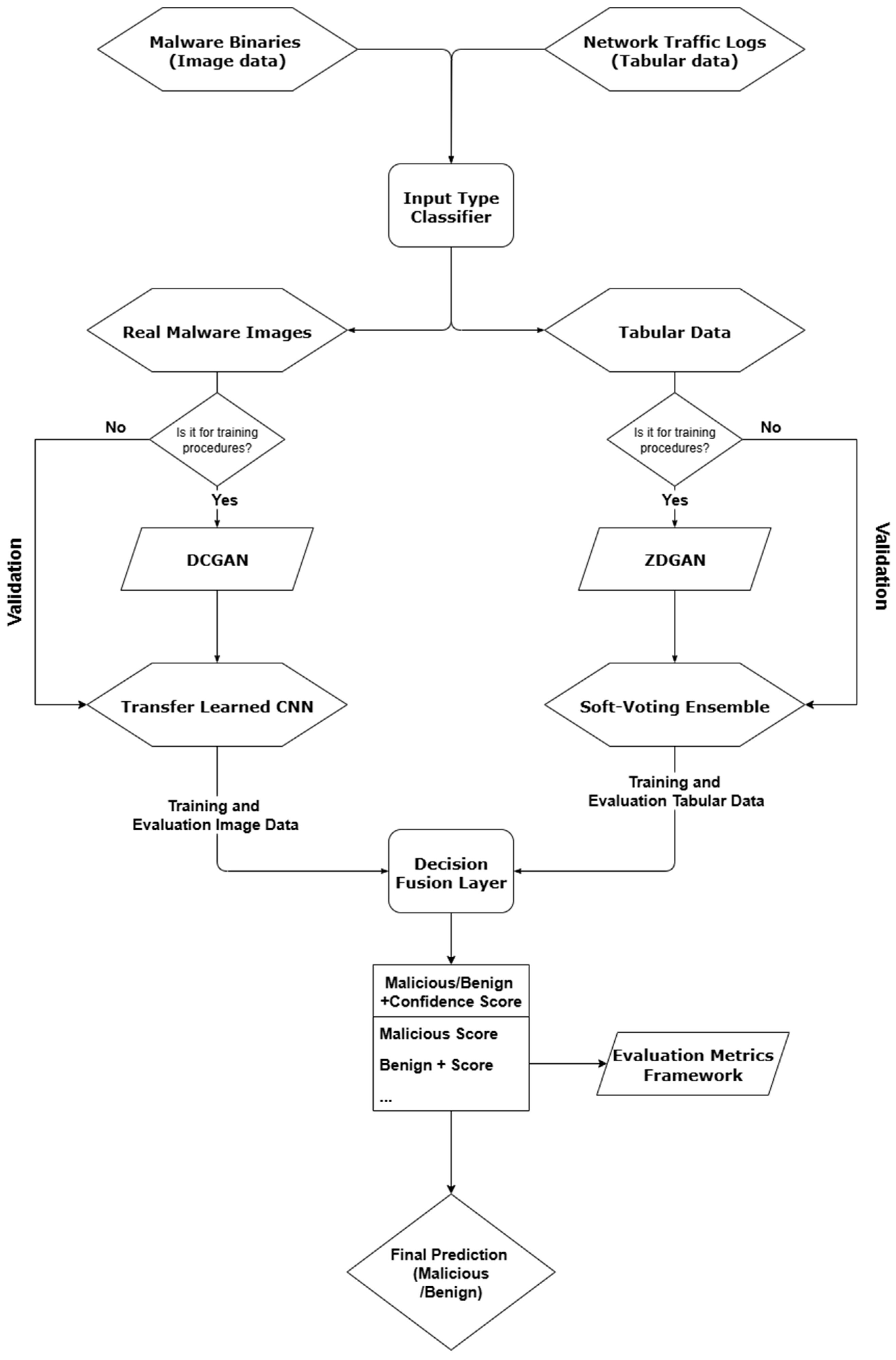

3.1. Overview of the Unified Threat Detection Pipeline

The unified threat detection pipeline has been designed to support multiple input modalities of different types and formats and to integrate them effectively within a modular architecture. It includes an input recognition component, two parallel processing branches (one for image-based threats and one for tabular network intrusion data), and a final fusion layer for decision support. The modular architecture was chosen for its flexibility, scalability, and adaptability to diverse threat modalities, including zero-day attacks, malware embedded within images, and network intrusion data. Each component operates independently, enabling the system to scale and adapt based on input availability and the deployment environment. One of its core strengths lies in its ability to adapt to different types of cyber threat datasets, including image-based malware representations and structured tabular data of network traffic logs, without necessarily requiring standardization of the data inputs. This is achieved through a two-branch system on the first layer of the architecture, in which each branch specializes in a different data modality.

To route incoming data to the appropriate branch, the pipeline includes a lightweight input-type classification layer that not only checks metadata and structure but also leverages traditional (shallow) ML models such as decision trees or entropy-based filters. These models are trained to distinguish malware image files from tabular network records based on statistical features. This approach enhances reliability compared to simple header-based detection and adds resilience to mislabeled or adversarial inputs. Based on the inferred type, each sample is automatically dispatched to the corresponding processing branch. This mechanism is intended to route even ambiguous or manipulated inputs correctly, while maintaining modular adaptability for future extensions.
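As an illustration of the routing idea described above, the following minimal Python sketch combines a header check with two statistical features (byte entropy and printable-character ratio). It is not the classification layer used in this study: the function names, the magic-number list, and all thresholds are hypothetical choices made only for this example, and a trained shallow model would replace the hand-written rules in practice.

```python
import math
from collections import Counter

# Magic-number prefixes for two common image formats (illustrative subset).
IMAGE_MAGIC = (b"\x89PNG\r\n\x1a\n", b"\xff\xd8\xff")


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of a byte string in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def printable_ratio(data: bytes) -> float:
    """Fraction of bytes that are printable ASCII, tab, CR, or LF."""
    if not data:
        return 0.0
    printable = sum(1 for b in data if 32 <= b < 127 or b in (9, 10, 13))
    return printable / len(data)


def recognize_input_type(data: bytes, delimiter: bytes = b",") -> str:
    """Route a raw sample to 'image', 'tabular', or 'unknown'.

    Hypothetical rule set: header check first, then statistical features.
    """
    if data.startswith(IMAGE_MAGIC):
        return "image"
    lines = [ln for ln in data.splitlines() if ln.strip()]
    if lines and printable_ratio(data) > 0.95:
        # Tabular text: every non-empty row has the same number of fields (> 1).
        field_counts = {ln.count(delimiter) + 1 for ln in lines}
        if len(field_counts) == 1 and field_counts.pop() > 1:
            return "tabular"
    # High-entropy, mostly non-printable content without a known header is
    # treated here as a raw binary (image-like) payload. Thresholds are assumed.
    if shannon_entropy(data) > 6.0 and printable_ratio(data) < 0.5:
        return "image"
    return "unknown"
```

Samples that return "unknown" would be logged or rejected rather than forced into a branch, which keeps the routing step conservative.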

The first, image-processing branch is optimized for malware image classification using CNNs and transfer learning, while the second, tabular-processing branch applies a soft-voting ensemble classifier to identify anomalies in network activity. Each branch is trained independently and functions autonomously, producing a binary classification result (malicious or benign). These outputs can optionally be merged in a decision-level fusion layer when both data modalities refer to the same input instance. This option allows the system to combine the strengths of each detection approach and offers higher resilience against evasive or zero-day threats.
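The optional decision-level fusion can be sketched as follows. This is a minimal example under stated assumptions: the text does not fix a fusion rule, so a weighted average of the two branches' malicious-class probabilities is assumed here, and the names `BranchOutput`, `fuse_decisions`, `w_img`, and `threshold` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BranchOutput:
    """Binary decision of one branch plus its malicious-class probability."""
    label: int          # 1 = malicious, 0 = benign
    p_malicious: float  # value in [0, 1]


def fuse_decisions(
    img: Optional[BranchOutput],
    tab: Optional[BranchOutput],
    w_img: float = 0.5,      # assumed weight of the image branch
    threshold: float = 0.5,  # assumed decision threshold
) -> Optional[BranchOutput]:
    """Fuse the branch outputs; fall back to the single available branch."""
    if img is None and tab is None:
        return None
    if img is None:
        return tab
    if tab is None:
        return img
    # Weighted average of the two malicious-class probabilities.
    p = w_img * img.p_malicious + (1.0 - w_img) * tab.p_malicious
    return BranchOutput(label=int(p >= threshold), p_malicious=p)
```

When only one modality is present, the function returns that branch's output unchanged, which mirrors the independent operation of the two branches.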

For interoperability purposes, the architecture includes logging modules and configurable parameters for each branch, enabling the system to be tuned for different operational environments. Moreover, the modular nature of the pipeline allows future extensions to accept additional data modalities, such as textual, user activity, and/or behavioral analytics data, as input. The flexibility, extensibility, and modularity with which the pipeline was designed make it well-suited for deployment in heterogeneous cybersecurity environments, including enterprise networks, endpoint detection systems, and malware analysis sandboxes. The overall architecture of the proposed system is illustrated in Figure 1. It demonstrates the modular structure of the unified pipeline, composed of two specialized processing branches (one for image-oriented threats and one for tabular intrusion data), along with the input classification module, the decision-level fusion logic, and the logging/configuration components. The figure highlights how diverse threat data types can be handled independently but integrated coherently for final decision-making, within a layered and flexible design that combines different AI techniques to ensure scalability, adaptability, and extensibility.

The pseudocode below clarifies the internal operational steps of the unified pipeline architecture depicted in Figure 1. This step-by-step representation outlines how incoming data is processed, classified, and optionally fused, providing a clear abstraction of the pipeline’s dynamic execution flow.

Pseudocode: Dual-Modality Threat Detection with GAN Augmentation.

Input:

x_img ← Malware binary image data

x_tab ← Network traffic log tabular data

mode ∈ {‘train’, ‘val’} // Indicates training or validation phase

Output:

y ← Final threat label (Malicious/Benign)

τ_img ← RecognizeInputType(x_img)

τ_tab ← RecognizeInputType(x_tab)

// ===== Image Processing Branch =====

if τ_img == ‘image’ then

if mode == ‘train’ then

x_img^s ← DCGAN(x_img) // Synthetic image generation

D_img ← x_img ∪ x_img^s // Combine real and synthetic images

else

D_img ← x_img // Use real images only

end if

Z_img ← φ_img(D_img) // Feature extraction via CNN

Y_img ← h_img(Z_img) // Image-based classification

end if

// ===== Tabular Processing Branch =====

if τ_tab == ‘tabular’ then

if mode == ‘train’ then

x_tab^s ← ZDGAN(x_tab) // Synthetic tabular data generation

D_tab ← x_tab ∪ x_tab^s // Combine real and synthetic tabular data

else

D_tab ← x_tab // Use real tabular data only

end if

Z_tab ← φ_tab(D_tab) // Tabular feature extraction

Y_tab ← h_tab(Z_tab) // Ensemble-based classification

end if

// ===== Fusion and Evaluation =====

y ← Fuse(Y_img, Y_tab) // Decision-level fusion of the available branch outputs

s_conf ← ComputeConfidence(Y_img, Y_tab) // Score estimation

if mode == ‘val’ then

EvaluatePerformance(y, s_conf) // Metrics computation during validation

end if

return y // Final prediction (Malicious/Benign)

3.2. Synthetic Data Generation

3.2.1. ZDGAN for Zero-Day Tabular Data Synthesis

Data scarcity remains a critical challenge for both labeled and unlabeled cybersecurity datasets. To address this issue, particularly in the context of labeled zero-day attack data in structured network intrusion logs, we have adopted the Zero-Day Generative Adversarial Network (ZDGAN), an enhanced GAN variant introduced and developed within our prior work [11]. Table 1 and Table 2 present the features, which are derived from open-source cybersecurity datasets (e.g., NSL-KDD, CTU-13) and have been validated in prior GAN-based data generation studies [11,39]. These features have been preprocessed and used as input for training the different GAN architectures, as presented in the following sections of the current study. ZDGAN has been specifically designed to simulate rare or previously unseen attack patterns by learning complex feature relationships from open-source datasets such as NSL-KDD and CTU-13.

To this end, we utilize the CTU-13 dataset, a widely used benchmark in cybersecurity research, which includes network traffic captures of both benign and botnet-infected traffic. The original dataset comprises over 32 million packets collected during controlled malware-injection scenarios and includes diverse network protocol types (e.g., TCP, UDP, ICMP). From this, we extract a training subset of 216,352 labeled flows, consisting of 140,849 botnet (malicious) and 75,503 legitimate samples. Additionally, an unlabeled evaluation subset of 88,258 flows has been used. The dataset includes 57 initial features (such as IP addresses, port numbers, timestamps, and traffic volume indicators), which were reduced through preprocessing and feature selection techniques, as described in our previous study [39]. Specifically, we applied univariate feature selection using the SelectKBest method with a chi-squared scoring function, in combination with feature importance scoring using an Extra Trees Classifier. This resulted in a final optimized dataset of 8 independent variables. The selected features include a mix of categorical (e.g., protocol state), numerical (e.g., packet counts, byte volume), and port-related attributes. Categorical fields were transformed via label encoding, while all numeric attributes were normalized to ensure compatibility with downstream neural network models. This structured preprocessing ensures that the dataset is not only clean and balanced but also optimized for deep learning-based data synthesis and classification tasks.

The selected dataset was used during the training and evaluation of ZDGAN, though the model has been designed to receive additional data sources as input in future extensions. The model architecture consists of fully connected layers in both the generator and discriminator, trained adversarially to synthesize tabular data that preserves the structural and statistical properties of real-world network traffic and events. The model’s primary objective is to preserve class-conditional feature distributions while also generating realistic variations that reflect emerging attack behaviors.
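The preprocessing steps described above (label encoding, normalization, and chi-squared feature ranking) can be sketched in plain Python as follows. This is a simplified illustration, not the pipeline used in this study: the helper names are hypothetical, the chi-squared score uses the usual observed-versus-expected form for non-negative features, and the Extra Trees importance scoring is omitted for brevity. In practice, a library routine such as scikit-learn's SelectKBest would normally be used instead.

```python
from typing import Dict, List, Sequence


def label_encode(values: Sequence[str]) -> List[int]:
    """Map each category to an integer index (sorted order, for determinism)."""
    mapping: Dict[str, int] = {v: i for i, v in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values]


def min_max_normalize(column: Sequence[float]) -> List[float]:
    """Scale a numeric column to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]


def chi2_score(feature: Sequence[float], labels: Sequence[int]) -> float:
    """Chi-squared statistic between a non-negative feature and class labels.

    Observed = per-class sum of the feature values.
    Expected = class frequency times the overall feature total.
    """
    total = sum(feature)
    n = len(labels)
    score = 0.0
    for cls in set(labels):
        observed = sum(x for x, y in zip(feature, labels) if y == cls)
        expected = total * sum(1 for y in labels if y == cls) / n
        if expected > 0:
            score += (observed - expected) ** 2 / expected
    return score


def select_k_best(
    columns: Dict[str, Sequence[float]], labels: Sequence[int], k: int
) -> List[str]:
    """Return the names of the k columns with the highest chi-squared score."""
    ranked = sorted(
        columns, key=lambda name: chi2_score(columns[name], labels), reverse=True
    )
    return ranked[:k]
```

With the setting described in the text, `k` would be 8 and `columns` would hold the 57 preprocessed CTU-13 features; those values are taken from the description above, not from this sketch.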

The synthetic records generated by ZDGAN serve as the augmentation layer for the training of the ensemble classifier, which is detailed in Section 3.4. This integration enables the tabular processing branch not only to detect known threats but also to generalize to approximations of zero-day behaviors. To assess the quality and fidelity of the generated samples, we apply validation techniques such as log-mean deviation analysis, feature-wise cosine similarity, and visual inspection of class-wise t-SNE plots. These techniques are used to confirm both the diversity and realism of the synthetic dataset. Table 1 summarizes the structure and data feature types of the ZDGAN-generated dataset used in this study.
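To make the feature-wise cosine similarity check more concrete, the sketch below compares one real and one synthetic feature column through their normalized histograms. The exact formulation used in this study is not specified in the text, so the histogram-based comparison, the bin count, and the function names are assumptions made for illustration only.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if one is all zeros)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def histogram(values: Sequence[float], bins: int, lo: float, hi: float) -> List[float]:
    """Normalized histogram of `values` over [lo, hi] with `bins` equal-width bins."""
    if not values:
        raise ValueError("values must not be empty")
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width) if width > 0 else 0
        counts[min(max(idx, 0), bins - 1)] += 1  # clamp to the valid bin range
    return [c / len(values) for c in counts]


def feature_similarity(
    real: Sequence[float], synthetic: Sequence[float], bins: int = 10
) -> float:
    """Cosine similarity between the histograms of a real and a synthetic feature."""
    lo = min(min(real), min(synthetic))
    hi = max(max(real), max(synthetic))
    return cosine_similarity(
        histogram(real, bins, lo, hi), histogram(synthetic, bins, lo, hi)
    )
```

Values close to 1 indicate that the synthetic feature follows a distribution similar to the real one, while values near 0 indicate little overlap. Applying `feature_similarity` to every column yields one score per feature.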

3.2.2. ZDGAN for Tabular Botnets Data Synthesis

To further enrich the training set of the tabular threat detection branch, we incorporate synthetic botnet intrusion data generated from subsets of the CTU-13 dataset. These samples represent a variety of botnet behaviors, including Neris, Rbot, and Virut traffic patterns. For the generation process, we adopt the same ZDGAN architecture as described in Section 3.2.1, due to its proven ability to capture rare patterns across sparse and imbalanced features. This approach ensures architectural consistency while enabling the synthesis of an expanded range of malicious behaviors.

The generated botnet samples augment the existing dataset used by the ensemble classifier, improving its ability to generalize to diverse threat categories. To support the generation of synthetic botnet traffic, we utilized a labeled and preprocessed subset of the CTU-13 dataset, following the methodology introduced in our previous study [11]. From the original dataset of over 32 million flow records, we extracted 140,849 botnet samples and 75,503 benign ones for training the ZDGAN model. The dataset was preprocessed through a tailored pipeline designed for network flow data, including protocol filtering, removal of incomplete connections, normalization, and label encoding of categorical variables. The selected features emphasized flow-level attributes (e.g., connection duration, bytes exchanged, state transitions) that are known to be effective for botnet detection. This configuration ensured the synthesized data retained realistic behavioral and structural traits observed in previously unseen botnet families. A representative portion of these synthetic samples was later used to simulate botnet attacks in our classification experiments. Validation of these botnet-specific synthetic samples follows the same methodology as the zero-day variants, using feature distribution comparisons, deviation metrics, and clustering visualizations. The combined augmentation strategy strengthens the classifier’s detection capabilities across a wider spectrum of real-world attack types without requiring additional data collection from live environments.

Table 2. Characteristics of the ZDGAN-generated botnet data sample.

| Feature | Data Type | Value Range/Examples | Description |

|---|---|---|---|

| Flow Duration | Float | 0.0–1200 s | Total duration of the network flow |

| Src Packets | Integer | 1–4000 | Packets sent from the source IP |

| Dst Packets | Integer | 1–3500 | Packets received by the destination IP |

| Bytes Sent | Integer | 0–2,000,000 | Volume of outgoing traffic |

| Bytes Received | Integer | 0–1,500,000 | Volume of incoming traffic |

| Protocol | Categorical | TCP, UDP, ICMP | Protocol type used in the session |

| Botnet Family | Categorical | Neris, Rbot, Virut | Botnet label from CTU-13 annotations |

| Label | Binary | 0 = benign, 1 = botnet | Ground truth label |

It is important to highlight how the two uses of ZDGAN in this framework differ in terms of the data sources and the modeling objectives involved. Although both implementations rely on the same GAN (ZDGAN) architecture, the nature of the training data and the targets of the sample generation differ significantly. In the case of zero-day attacks, ZDGAN is trained using NSL-KDD and generic CTU-13 entries to simulate infrequent or unknown threat vectors generally categorized as anomalies. In contrast, the botnet data synthesis variant of ZDGAN is constrained to a subset of CTU-13 focused specifically on botnet activity, generating labeled records that reflect behavior from families like Neris, Rbot, and Virut. This results in different feature schemas, label distributions, and operational roles, despite the shared architecture. Table 3 summarizes these differences to clarify how ZDGAN contributes to diversified threat modeling within the tabular processing pipeline and during synthetic data generation on different data modalities.

3.2.3. DCGAN for Malware Image Synthesis

To mitigate the limited availability of malware image datasets and improve the robustness of image-based threat classification, this particular study [

10] adopts a DCGAN for synthetic data generation. This suggested approach follows our prior work [

10], where malware binary samples were transformed into grayscale or RGB images and then used to train a DCGAN model. The generator maps random noise vectors into visually coherent 64 × 64-pixel malware images, while the discriminator learns to distinguish between real and synthetic samples. During training, the adversarial dynamics of the DCGAN gradually improve the visual fidelity and statistical consistency of the generated images. The generator employs transposed convolutional layers with batch normalization and ReLU activations, whereas the discriminator is built with strided convolutional layers and Leaky ReLU activations.

These generated images augment the training set of the CNN classifier in the image-processing branch, enhancing its ability to generalize to unseen variants of known malware families. Evaluation metrics from our prior work show that DCGAN-augmented datasets yield improved classification accuracy and reduced false positive rates compared to training on real samples alone. Additionally, frequency histograms, FID, and classifier-based evaluation results confirm the quality and utility of the synthetic samples. For this study, new malware images were generated using the pretrained DCGAN model from our earlier work, maintaining consistency with the architecture while enabling seamless integration into the proposed multimodal threat detection pipeline. Reusing a validated model provides methodological consistency and cost-efficient experimentation in a real-world deployment setting.

Table 4 presents the image generation format and key attributes used in the DCGAN-generated dataset.

3.3. Transfer Learning for Image-Based Detection

The first branch of the proposed framework employs a transfer learning approach, consisting of an image-based threat detection mechanism centered around a pretrained ResNet50 CNN. This strategy capitalizes on the ability of deep residual networks to extract high-level visual features from structured image datasets, including those derived from malware binary samples. Taking the limited availability of labeled malware image datasets into account, transfer learning can be considered as a particularly effective solution, allowing the reuse of robust feature extractors trained on large-scale image datasets such as ImageNet.

The ResNet50 architecture has been adjusted by freezing the initial convolutional layers and fine-tuning the final dense layers using a domain-specific dataset consisting of real and DCGAN-generated malware images (as described in

Section 3.2.3, ‘DCGAN for Malware Image Synthesis’). The input malware images are uniformly resized to 64 × 64 pixels and normalized to ensure compatibility with the pretrained layers. The training set has been split and balanced to include both benign and malicious samples, with augmentation further improving generalization. The model is trained using a binary cross-entropy loss function, the Adam optimizer with weight decay regularization, and a learning rate scheduler with early stopping based on validation loss.
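The early stopping criterion on validation loss mentioned above can be illustrated with a small framework-independent helper. This is a sketch only: the `patience` and `min_delta` parameters and the loss values are hypothetical, since the text does not report the settings that were actually used.

```python
class EarlyStopping:
    """Stop training when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # assumed value; not reported in the text
        self.min_delta = min_delta    # minimum decrease that counts as improvement
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation-loss curve, for illustration only.
val_losses = [0.60, 0.45, 0.40, 0.41, 0.42, 0.43, 0.30]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss):
        stopped_at = epoch
        break
```

With this curve, training halts after three consecutive epochs without improvement, so the final value in the list is never reached. A larger patience trades extra training time for robustness against such temporary plateaus.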

To make the model’s decisions easier to understand, Gradient-weighted Class Activation Mapping (Grad-CAM) is optionally used to show which parts of the image influenced the prediction, supporting the classification decision-making. This helps to ensure that the model focuses on semantically meaningful visual features, rather than noise or artifacts introduced by the GAN generator. Evaluation metrics include classification accuracy, F1-score, false positive rate, and confusion matrix analysis. In previous experiments, incorporating DCGAN-generated malware samples during training led to a statistically significant improvement in the classifier’s ability to generalize to previously unseen malware sets, especially those with obfuscated or polymorphic structures.

The outputs of this second-layer branch are binary predictions denoting whether an input sample is classified as benign or malicious. These results are used in isolation or combined in the decision-level fusion stage (

Section 3.5) when tabular intrusion data is also available for the same case. Moreover, the simple but effective use of transfer learning ensures the system remains scalable for real-time or resource-constrained environments and procedures, while still maintaining high detection performance.

The network’s initial layers are frozen, and only the final dense layers are fine-tuned using both real and synthetic malware images. The classification task is binary (malware vs. benign), and training is optimized using early stopping and learning rate scheduling. This setup enables effective learning from modest domain-specific datasets.

Table 5 summarizes the setup used to adapt the ResNet50 model for the image-based classification task. This table outlines the key architectural and training parameters applied during the transfer learning processes.

3.4. Ensemble Learning for Tabular Threat Detection

The tabular data processing branch adopts a soft voting ensemble classifier composed of five distinct base learners: K-Nearest Neighbors (KNN), Support Vector Classifier (SVC), RF, DT, and Stochastic Gradient Descent (SGD). Each of these base classifiers is selected for its unique inductive bias and learning characteristics, enhancing the ensemble’s robustness and generalization capabilities when applied to heterogeneous network intrusion datasets.

These classifiers are trained on a composite dataset that includes both real-world network traffic records and synthetic samples generated by the ZDGAN model, as previously discussed in

Section 3.2. To manage class imbalance commonly present in intrusion detection datasets, two complementary strategies are applied: (1) oversampling of minority attack classes (using SMOTE or GAN-augmented sampling) and/or (2) undersampling of dominant benign records. This ensures that each model within the ensemble learns from a balanced distribution and is less susceptible to bias.
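The two balancing strategies can be sketched with plain random resampling. Note the substitution: this example duplicates minority records at random instead of using SMOTE or GAN-based sampling, and the `rebalance` function, its `target` parameter, and the toy data are hypothetical.

```python
import random
from collections import defaultdict


def rebalance(records, labels, target, seed=0):
    """Resample every class to exactly `target` records.

    Classes larger than `target` are randomly undersampled; smaller classes
    are randomly oversampled with replacement (plain duplication, not SMOTE).
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for record, label in zip(records, labels):
        by_class[label].append(record)

    out_records, out_labels = [], []
    for label, items in by_class.items():
        if len(items) >= target:
            chosen = rng.sample(items, target)                 # undersample
        else:
            extra = rng.choices(items, k=target - len(items))
            chosen = items + extra                             # oversample
        out_records.extend(chosen)
        out_labels.extend([label] * target)
    return out_records, out_labels


records = list(range(12))
labels = [0] * 10 + [1] * 2        # 10 benign, 2 attack (toy data)
bal_records, bal_labels = rebalance(records, labels, target=5)
```

Resampling of this kind should be applied to the training split only, so that validation and test sets keep their original class distribution.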

Training and hyperparameter tuning are performed independently for each base classifier, using stratified cross-validation on a training-validation split. Key hyperparameters, including the number of neighbors in KNN, kernel type for SVC, depth and number of trees in RF and DT, and learning rate (lr) in SGD, are optimized through a grid search. The ensemble then aggregates predictions from all base models using a soft voting mechanism, which averages the predicted class probabilities and selects the class with the highest mean probability as the final output.
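The soft voting rule described above (average the predicted class probabilities, then select the class with the highest mean) can be written in a few lines. The probability values below are invented for illustration and do not come from the experiments.

```python
def soft_vote(probabilities):
    """Average per-class probabilities across base learners and pick the argmax.

    `probabilities` holds one entry per base learner; each entry is the list
    of class probabilities that learner assigns to a single sample.
    """
    n_models = len(probabilities)
    n_classes = len(probabilities[0])
    mean = [sum(p[c] for p in probabilities) / n_models for c in range(n_classes)]
    predicted = max(range(n_classes), key=mean.__getitem__)
    return predicted, mean


# Hypothetical outputs of the five base learners for one flow: [P(benign), P(attack)].
per_model = [
    [0.40, 0.60],  # KNN
    [0.55, 0.45],  # SVC
    [0.20, 0.80],  # RF
    [0.70, 0.30],  # DT
    [0.35, 0.65],  # SGD
]
label, mean_probs = soft_vote(per_model)   # mean P(attack) is about 0.56
```

Unlike hard voting, which counts only each learner's predicted label, this rule lets a highly confident learner outweigh several weakly confident ones.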

This ensemble approach offers enhanced resilience to overfitting, improved detection of low-frequency threats, and greater adaptability across different network environments and data modalities. Its integration with the synthetic data generation process ensures that the system remains capable of detecting rare or evolving attack patterns that would otherwise be underrepresented in real-world datasets. The base models are trained on a combination of real and synthetic tabular records, and the experiments include undersampled and oversampled variants of the training dataset to address class imbalance. As a result, the ensemble improves detection robustness and reduces reliance on any single classifier.

Before concluding this section,

Table 6 summarizes the key training configurations of the base learners that comprise the soft-voting ensemble classifier.

3.5. Fusion Output and Decision Logic

The Fusion and Decision Logic layer produces the final decision of the proposed pipeline. When both malware image and tabular data are available for the same input instance, their respective predictions are merged at the decision level using a confidence-weighted averaging strategy. This method calculates the final threat classification by averaging the probability scores produced by the two branches (image-based and tabular-based), assigning greater weight to predictions made with higher classifier confidence. The strategy provides a balanced, probabilistic fusion mechanism that accommodates uncertainty in individual predictions, rather than relying on fixed thresholds or rigid rule-based logic.

This probabilistic fusion ensures that threats indicated with high certainty in one modality are properly weighed even when the other modality shows uncertainty. By prioritizing average confidence scores, the system mitigates false positives from noisy inputs, while strengthening the decision boundary in ambiguous cases. Also, this approach balances sensitivity and accuracy while preserving real-time operability.

In cases where only one input modality is available, because of possible sensor limitations, missing data, or partial logs, the available branch processes the input independently and provides the final prediction. The fusion module is bypassed in such cases, and the system adjusts seamlessly to maintain functional reliability under partial input conditions.
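The fusion and bypass behavior can be sketched as below. The text does not give the exact weighting formula, so this example assumes one plausible choice: each branch's confidence is its distance from the 0.5 decision boundary, rescaled to [0, 1]. The function names and the `threshold` parameter are likewise hypothetical.

```python
def confidence(p):
    """Confidence of a binary probability: 0 at p=0.5, 1 at p=0 or p=1 (assumed)."""
    return abs(2.0 * p - 1.0)


def fuse(p_image=None, p_tabular=None, threshold=0.5):
    """Return (is_threat, fused_probability) from the available branch outputs."""
    available = [p for p in (p_image, p_tabular) if p is not None]
    if not available:
        raise ValueError("at least one branch output is required")
    if len(available) == 1:
        fused = available[0]                 # fusion bypassed: single modality
    else:
        weights = [confidence(p) for p in available]
        total = sum(weights)
        if total == 0.0:
            fused = sum(available) / len(available)   # both maximally uncertain
        else:
            fused = sum(w * p for w, p in zip(weights, available)) / total
    return fused >= threshold, fused


# A confident image branch (0.95) outweighs an uncertain tabular branch (0.45).
is_threat, fused = fuse(p_image=0.95, p_tabular=0.45)   # fused is about 0.90
```

A plain unweighted average of the same two scores would give 0.70, so the weighting moves the fused score toward the branch that is more certain of its prediction.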

Moreover, several alternative methods can be applied at the decision-level fusion stage of multimodal threat detection systems, including:

- Majority Voting: Combines binary predictions from multiple branches and selects the majority class as the final decision [40]
- Confidence-Weighted Averaging: Averages probability scores from each branch, giving higher influence to more confident predictions [41, 42]
- Bayesian Inference: Models each branch as a probabilistic source and uses posterior distributions to infer the most likely class [43]
- Dempster-Shafer Theory: Applies evidence theory to combine beliefs from different branches, accounting for uncertainty and conflict [44]
- Rule-Based Method: Applies logical rules (e.g., OR) to combine binary decisions from multiple branches [45]
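For comparison, the two simplest alternatives in this list, Majority Voting and the OR-based rule, can be sketched as follows. With only two branches a vote can end in a tie, so the `tie_break` parameter below is an assumed policy that is not specified in the text.

```python
def or_rule(decisions):
    """Rule-based (Boolean OR): flag a threat if any branch flags one."""
    return int(any(decisions))


def majority_vote(decisions, tie_break=1):
    """Majority class among binary branch decisions.

    With an even number of branches a tie is possible; `tie_break` is an
    assumed policy (default: treat ties as threats).
    """
    positives = sum(decisions)
    negatives = len(decisions) - positives
    if positives == negatives:
        return tie_break
    return int(positives > negatives)


# Image branch says benign (0), tabular branch says malicious (1).
or_decision = or_rule([0, 1])                              # threat flagged
vote_decision = majority_vote([0, 1])                      # tie, resolved as threat
cautious_decision = majority_vote([0, 1], tie_break=0)     # tie, resolved as benign
```

The OR rule maximizes sensitivity at the cost of more false positives, which is one reason a probabilistic scheme can be preferable when the branches disagree.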

During the experimental section of this study in

Section 4, we focus on evaluating three representative fusion methods: the Rule-Based (Boolean) approach for simplicity and explainability, Majority Voting for ensemble-oriented consensus, and Confidence-Weighted Averaging to incorporate probabilistic certainty in decision-making. These methods are benchmarked and compared to identify the most effective strategy for robust multimodal threat detection within the Fusion layer.

3.6. Evaluation Phase

The evaluation phase of the proposed integrative cybersecurity threat detection framework has been designed to assess each pipeline’s component in isolation as well as in its full multimodal configuration. Its main goal is to measure the contribution of individual modules (GAN-based synthetic data generation, image-based CNN classifier, tabular ensemble classifier) and evaluate the fusion layer’s impact on overall threat detection performance.

To validate the effectiveness of the proposed system in realistic cybersecurity configurations, a structured and coherent experimental setup was carefully established. Each component of the pipeline, whether it is related to image-based threats, tabular intrusion detection, or their multimodal fusion, is evaluated under consistent and reproducible conditions. This setup aims to replicate a wide range of attack scenarios and operational conditions.

The evaluation relies on three key dimensions:

Datasets: Three datasets are used, namely Malimg for the image-based malware detection branch, and NSL-KDD and CTU-13 for tabular network intrusion analysis. Synthetic data augmentation relies on models developed in previous works, DCGAN (image modality) and ZDGAN (tabular modality, including zero-day and botnet variants), to enhance generalization across different cyberthreat categories.

Data Splitting and Preprocessing: Each dataset is divided into stratified training (70%), validation (15%), and test (15%) sets to preserve label distributions. In GAN-augmented scenarios, synthetic samples are combined with real data prior to training. Preprocessing includes normalization and encoding tailored to each modality.

Environment and Execution: Experiments are run in a GPU-accelerated environment. Training uses early stopping and learning rate scheduling, and cross-validation is applied to ensure robust model evaluation.
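The stratified 70/15/15 partition described under Data Splitting and Preprocessing can be sketched as follows. This is an illustrative implementation only: the `stratified_split` helper, the seed, and the toy labels are assumptions, and a library routine could be used in practice.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=42):
    """Return (train, val, test) index lists with per-class proportions preserved."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train.extend(indices[:n_train])
        val.extend(indices[n_train:n_train + n_val])
        test.extend(indices[n_train + n_val:])   # remainder goes to the test set
    return train, val, test


labels = [0] * 80 + [1] * 20      # toy 80/20 class ratio
train_idx, val_idx, test_idx = stratified_split(labels)
```

Because each class is split separately, the 80/20 class ratio of the toy labels is reproduced in all three partitions.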

Table 7 provides a summary of the metrics used in this study, describing their role and the branch or component to which each applies. Each classification has been evaluated using particular metrics as clearly stated below.

Table 8 summarizes the configuration of evaluation experiments applied to each branch of architecture. It includes the datasets used, any synthetic augmentation involved, the base classifiers employed, and the final structure of the outputs for the decision fusion stage.

The last layer of the suggested pipeline, the fusion component, plays a pivotal role in consolidating predictions from multiple data modalities. The evaluation of various fusion strategies allows this work to identify and select the most reliable method to combine the extracted results from both the image and tabular branches of the pipeline.

To ensure a fair evaluation of the impact of the fusion mechanism, we focus on performance improvements when both modalities are available for an input instance. Several fusion strategies are tested to understand their comparative benefits before selecting the optimal one. A representative subset of matched input samples, each containing both image-based and tabular features, is passed through the full pipeline. Experiments are structured to compare how the image-only, tabular-only, and fusion-enabled configurations perform across the evaluation metrics. Among the tested fusion strategies, the confidence-weighted averaging method is benchmarked against majority voting and OR-based logic to validate its overall effectiveness. The remaining two strategies, Dempster-Shafer and Bayesian Inference, while theoretically robust, were excluded from full evaluation due to computational and integration constraints in the current pipeline design. The evaluation focuses on threat detection accuracy, F1-score, and false positive rates under scenarios that simulate zero-day attacks, botnet intrusions, and complex multi-source evasive threats.

Table 9 lists each evaluated fusion approach along with a short description.

4. Experimental Analysis and Results

4.1. Evaluation Process

Section 4 provides an extensive analysis and evaluation of the experimental results aimed at validating the effectiveness, robustness, and generalizability of the proposed integrative threat detection framework under diverse cybersecurity scenarios. Specifically, the extended evaluation presented in this chapter has been structured to assess how each component, including the input-type recognition layer, synthetic data generation modules, data classifiers, and final decision-level fusion module, contributes individually and in an integrated manner to improve the overall threat detection performance.

The key objectives of this evaluation process include assessing the accuracy of the input-type recognition module, which ensures proper routing of image and tabular data to the appropriate pipeline branches. Additionally, the evaluation quantifies the detection performance of each individual branch (the image-based CNN classifier and the tabular-based ensemble model) on the corresponding representative datasets. Another objective is to examine the contribution of GAN-based synthetic data augmentation to generalization, particularly for rare threat types such as zero-day and botnet attacks, and the extensibility of this layer to further types of cybersecurity threat datasets. The comparative effectiveness of multiple decision-level fusion strategies is also investigated in order to determine the most reliable method for combining multimodal outputs when available. Lastly, the evaluation considers performance across specific threat categories, such as zero-day exploits, botnet intrusions, and multi-source evasive threats, to understand the system’s adaptability in realistic deployment scenarios.

To support these objectives, multiple experiments are conducted using publicly available datasets augmented with synthetic data, with evaluation metrics such as accuracy, precision, recall, F1-score, and false positive rate. This chapter presents both quantitative and qualitative insights into the system’s behavior under varying operational conditions and scenarios.

4.2. Evaluation of the Input-Type Recognition Layer

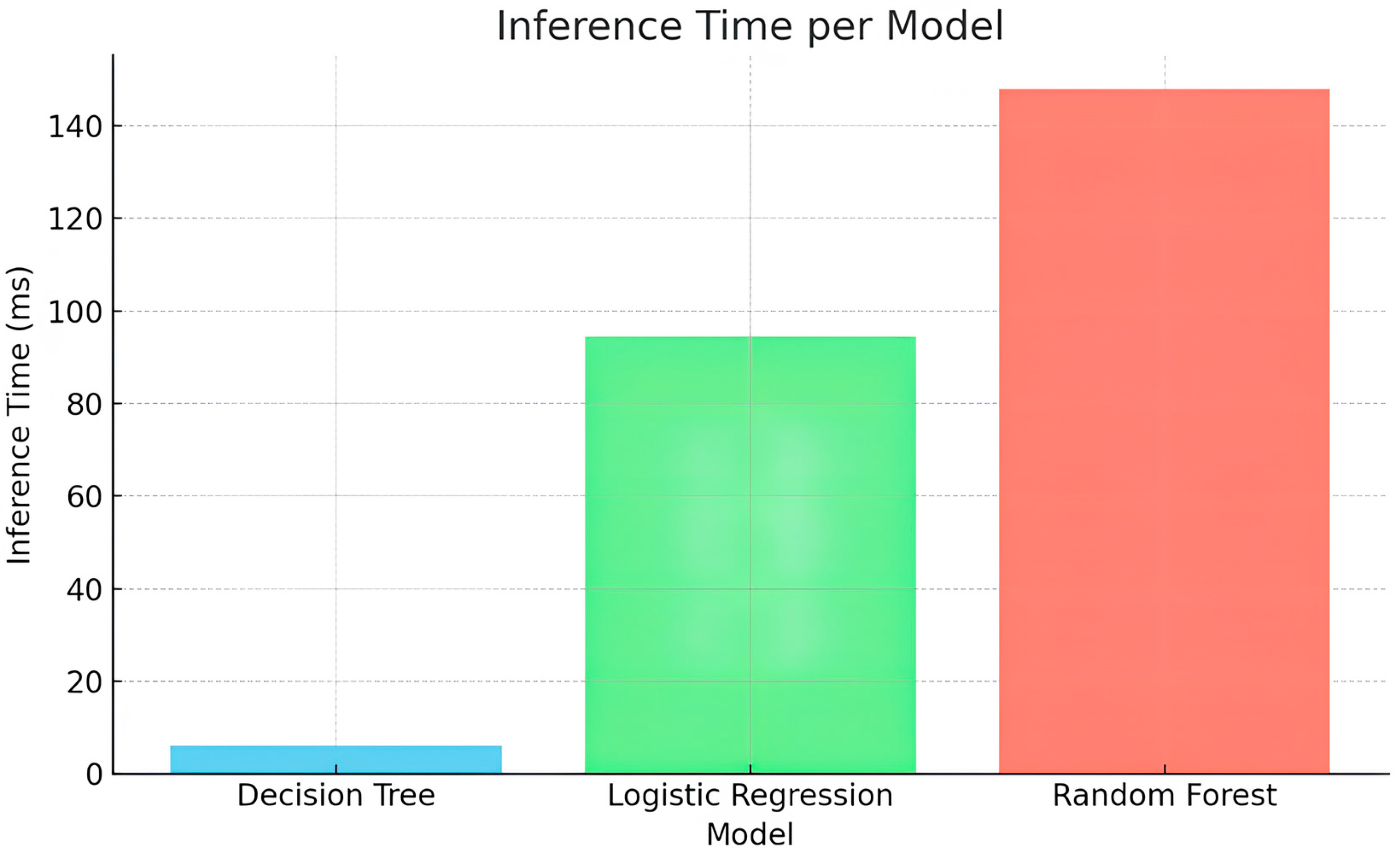

The efficiency and accuracy of the input-type recognition layer were evaluated in a comparative experiment using three lightweight traditional (shallow) ML classifiers: DT, Logistic Regression, and RF. A synthetic dataset of 10,000 samples, consisting of 5000 image-based files and 5000 tabular records, was generated to simulate structural differences in typical cybersecurity inputs. Each sample was characterized by features such as file size, entropy, and encoded file extension. To preserve label balance across the evaluation process, the dataset was split using a stratified 70/30 train-test partition.
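The three routing features named above (file size, entropy, and encoded file extension) can be computed as in the sketch below. Entropy is taken here to mean the Shannon entropy of the byte distribution, which is an assumption, and the `EXTENSION_CODES` mapping is hypothetical because the encoding used in the experiment is not listed.

```python
import math
from collections import Counter

# Hypothetical extension codes; the actual encoding is not given in the text.
EXTENSION_CODES = {".png": 0, ".jpg": 1, ".csv": 2, ".log": 3}


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    counts = Counter(data)
    total = len(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def routing_features(name: str, data: bytes):
    """Feature vector [size_in_bytes, entropy, extension_code] for the router."""
    dot = name.rfind(".")
    ext = name[dot:].lower() if dot != -1 else ""
    return [len(data), shannon_entropy(data), EXTENSION_CODES.get(ext, -1)]


features = routing_features("flows.CSV", b"abab")   # [4, 1.0, 2]
```

Compressed image files tend to have byte entropy close to 8 bits, while delimited text records are far more repetitive, which is consistent with the two input types being easy to separate using so few features.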

As shown in

Table 10, all models achieved perfect accuracy (1.00) and F1-scores, indicating that the two input types are highly separable using minimal features. However, the Decision Tree classifier significantly outperformed the others in terms of inference time, classifying inputs in just 6.04 milliseconds. In contrast, Logistic Regression and Random Forest required 94.45 ms and 147.96 ms, respectively. These findings led to the selection of the Decision Tree-based input classifier, which balances high performance with minimal computational cost. This validates the design choice of employing a shallow ML model to ensure real-time routing in the first (input) layer of the proposed pipeline.

A visual comparison of the inference times of the three models is illustrated in

Figure 2, highlighting the efficiency advantage of the Decision Tree classifier in time-sensitive routing scenarios.

4.3. Evaluation of GAN Models for Data Synthesis

4.3.1. Evaluation of ZDGAN for Zero-Day Data Synthesis

The ZDGAN, introduced in the previous chapter, has been adopted to generate synthetic zero-day attack records for tabular network intrusion data. The main goal of this model, in the context of this integrated system, is to enhance the generalization capacity of the tabular-processing branch by introducing simulated examples of novel or rare cyberattacks. Rather than retraining or modifying the ZDGAN architecture, this evaluation focuses on quantifying the quality and diversity of the generated data, ensuring that the augmentation process contributes to improved threat detection coverage in a meaningful way.

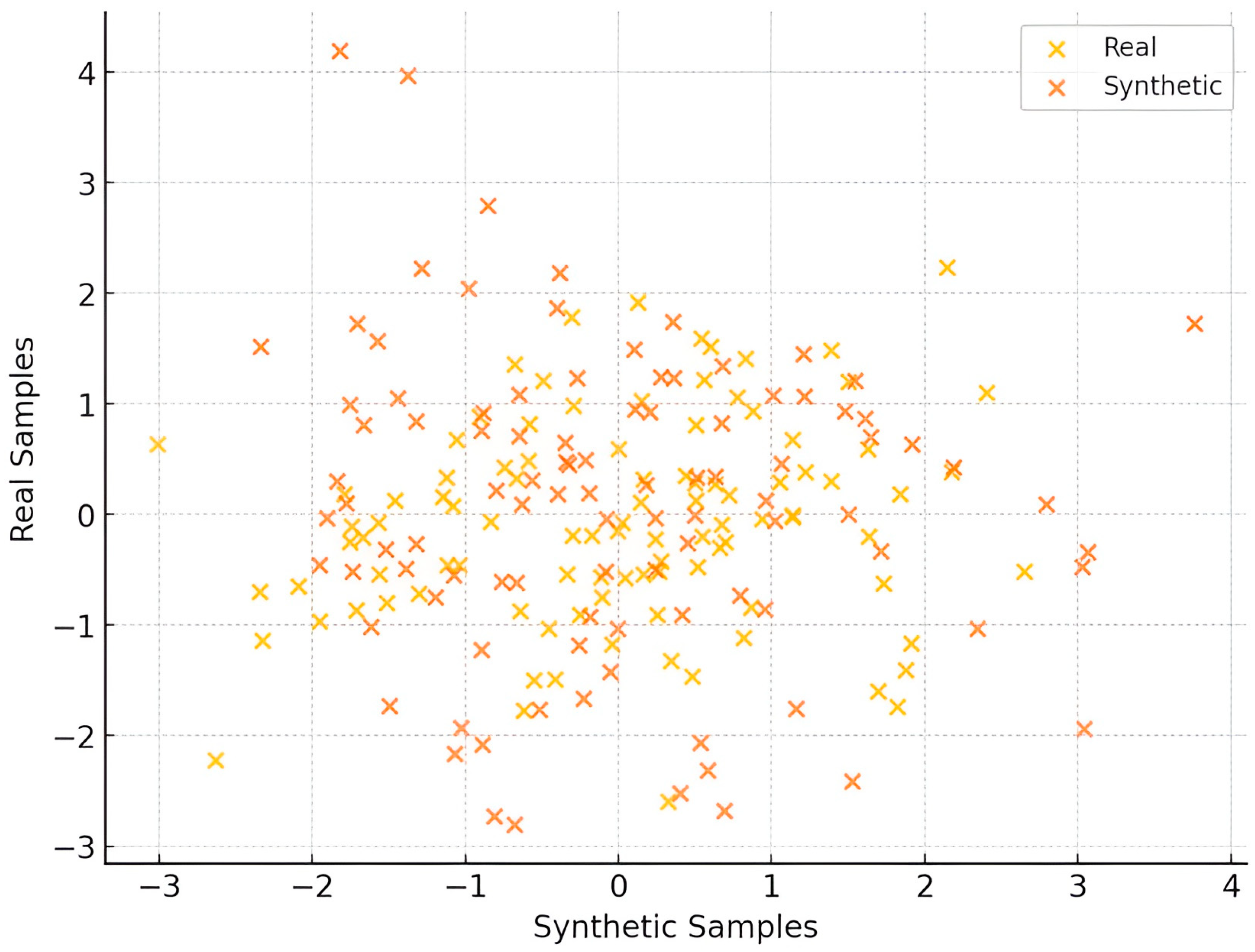

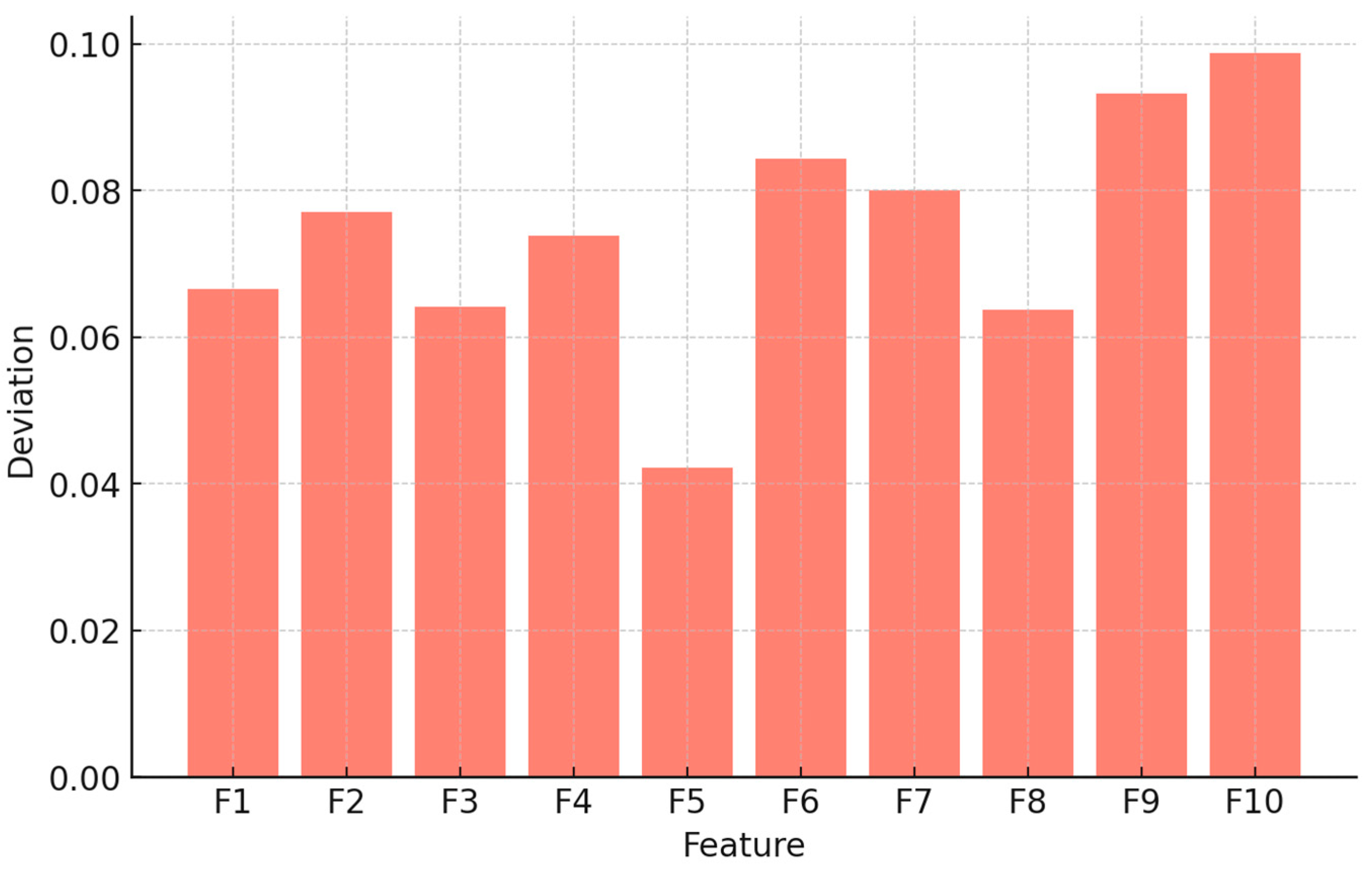

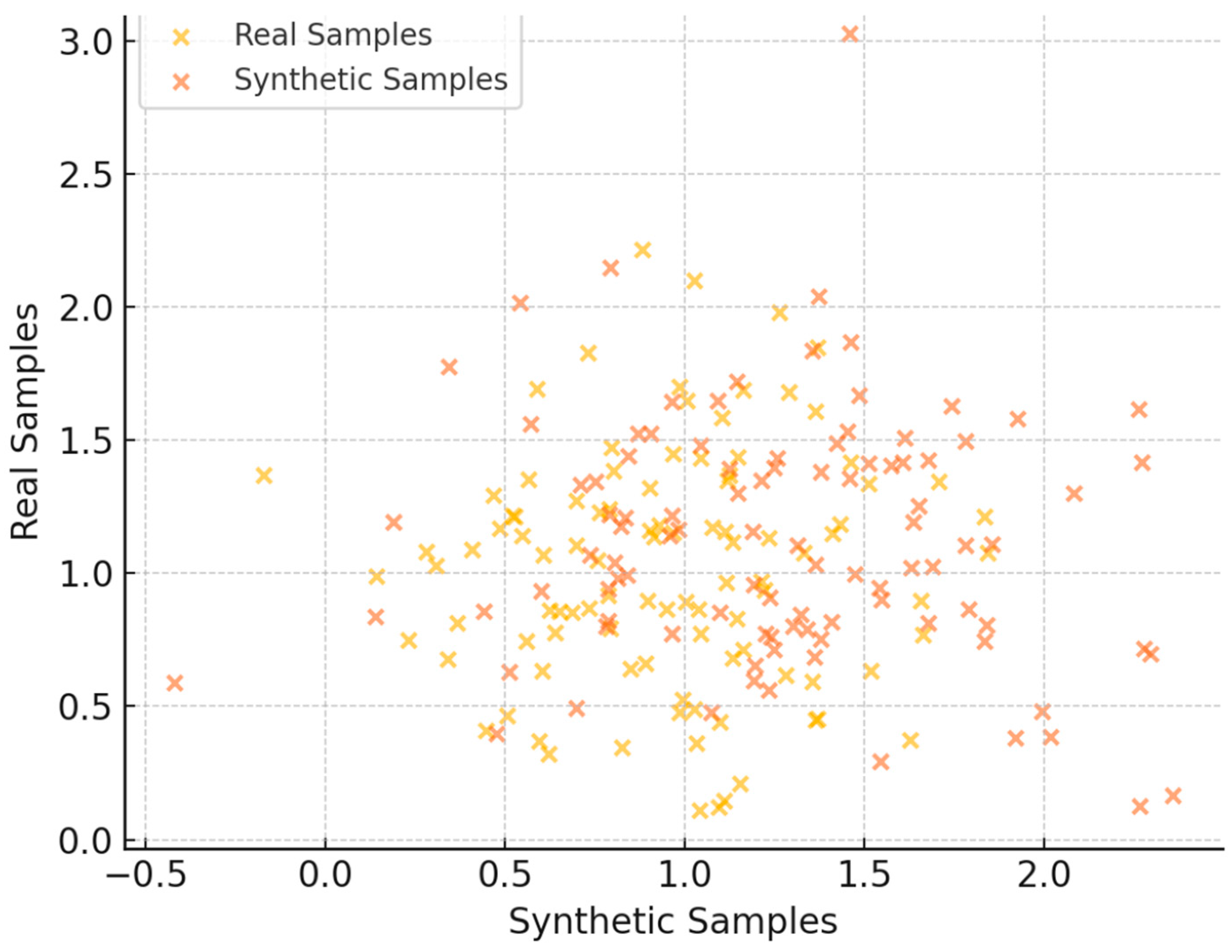

To evaluate the output of ZDGAN, a set of synthetic tabular samples was generated, using the pre-trained generator, which was then compared against real data records from NSL-KDD and CTU-13. A total of 10,000 synthetic and 10,000 real samples were used for comparison purposes. Hence, their similarity underwent further evaluation using five key metrics: mean cosine similarity, log-mean deviation, Jensen-Shannon divergence, diversity index, and dimensionality reduction-based cluster visualization. Cosine similarity measures directional alignment across feature vectors, while log-mean deviation quantifies statistical distance between distributions of real and synthetic records. To visualize how well synthetic records cluster with their real counterparts, the method of Principal Component Analysis (PCA) was selected and applied to project both datasets into a 2D embedding space.
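Two of the similarity measures named above have standard definitions that can be written down directly. The sketch below computes cosine similarity between two feature vectors and the Jensen-Shannon divergence between two discrete distributions. How real and synthetic records were paired or binned in the study is not stated, so the inputs here are toy values, and the log-mean deviation is omitted because its exact definition is not given.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q) in bits; terms with p_i = 0 are skipped."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence in bits: 0 for identical, 1 for disjoint distributions."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Toy histograms of one feature for real and synthetic records.
real_hist = [0.5, 0.3, 0.2]
synthetic_hist = [0.4, 0.4, 0.2]
jsd = js_divergence(real_hist, synthetic_hist)
```

With base-2 logarithms the divergence is bounded between 0 and 1, so it can be reported as a percentage in the same way as the other metrics.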

Table 11 reports a detailed summary of the metrics obtained when evaluating the ZDGAN model. These metrics offer strong evidence of the synthetic data’s structure and statistical distribution and of its capacity to serve as a meaningful augmentation to real-world intrusion datasets. The average cosine similarity was 81.9%, indicating strong feature-level alignment. The average log-mean deviation across all features was found to be 82%, confirming that the synthetic records remain close to the real data distributions without exhibiting mode collapse or over-smoothing. Beyond these core metrics, the Jensen-Shannon divergence was estimated at 10.4%, reflecting a modest divergence in probability distributions that is still within acceptable limits for high-quality data synthesis. Additionally, a synthetic sample diversity index of 77% suggests that the generator introduces meaningful variation among samples, which helps prevent overfitting in downstream classifiers. Finally, the t-SNE cluster metric reached 87%, confirming that synthetic records were embedded well within the high-dimensional space of their real counterparts. Together, these metrics indicate that the ZDGAN-generated samples are not only statistically similar to real data but also diverse and semantically meaningful, an aspect essential for improving generalization in zero-day attack detection tasks.

To mitigate potential overfitting and bias introduced by the synthetic data, several validation mechanisms were adopted. First, feature-wise log-mean deviation and cosine similarity were used to verify that the synthetic samples closely approximate the statistical structure and properties of real-world data without collapsing into redundant or unrealistic outputs. Second, PCA visualizations were used to confirm that synthetic and real samples are grouped in similar ways based on their features. Additionally, a diversity index was computed to verify variation among generated records, minimizing the risk of mode collapse. From a model training perspective, stratified data splits, early stopping, and regularization techniques were employed to avoid overfitting to synthetic samples. These combined strategies ensure that the synthetic data serves as a meaningful augmentation rather than a source of bias.

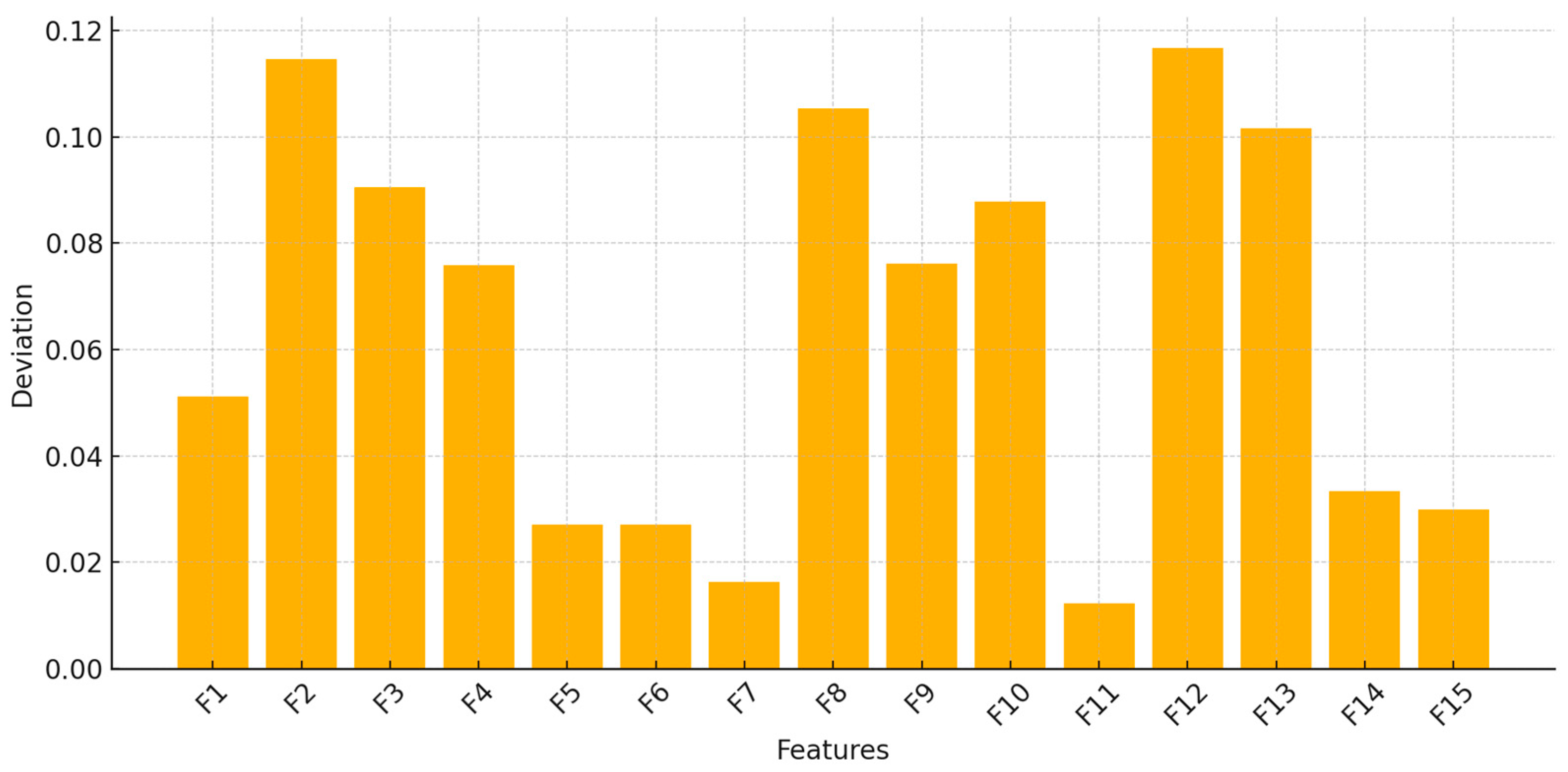

Figure 3 illustrates how synthetic and real samples group together, showing that the generator learns important patterns from the input data. In addition to this,

Figure 4 depicts a feature-level comparison of the extracted deviations. These results demonstrate that ZDGAN can produce reliable, diverse, and structurally and statistically consistent synthetic samples that generalize well to unseen threats, thereby strengthening the detection performance of the ensemble classifier described in detail in

Section 3.

4.3.2. Evaluation of ZDGAN for Botnet Data Synthesis

Following the logic of

Section 4.3.1, to evaluate ZDGAN’s applicability beyond zero-day intrusion synthesis, the model was applied to a separate dataset focused on botnet attack traffic. The goal was to determine whether the generator could generalize effectively across different threat categories. The same architecture, training configuration, and evaluation pipeline were reused, and only the dataset and class schema were modified.

As shown in

Table 12, the generated samples retained strong alignment with the original botnet traffic data. A mean cosine similarity of 80.3% indicated solid feature-level consistency, while the average log-mean deviation was calculated at 9.3%, suggesting only minor dispersion across synthetic instances. The Jensen-Shannon divergence remained relatively low at 11.7%, reinforcing the statistical proximity between real and synthetic distributions. The diversity index of 74% confirmed that the model did not collapse into repetitive patterns, and a t-SNE overlap score of 85% demonstrated that synthetic clusters were meaningfully embedded within the real feature space.

As before,

Figure 5 illustrates how synthetic and real samples group together, while

Figure 6 demonstrates the feature-level comparison of the extracted deviations. These results validate the portability of ZDGAN to different structured intrusion datasets, confirming that its generative capabilities can be extended across threat domains. This generalizability is particularly useful in real-world deployments, where emerging threats—such as botnets—demand timely simulation to strengthen detection pipelines.

4.3.3. Evaluation of DCGAN for Malware Image Data Synthesis

To evaluate the synthetic image generation capability for the layer of the image-based pipeline, a Deep Convolutional GAN (DCGAN) was adopted to generate grayscale malware images that follow the structural patterns of the Malimg dataset, as previously detailed within

Section 3. The primary goal of this evaluation is to assess how realistic and diverse the synthetic images are in comparison to real malware instances and to ensure they preserve intra-class visual features while introducing meaningful inter-class diversity.

The generated images were compared to real malware samples using four key metrics that assess both structural similarity and distributional diversity. These metrics include the Structural Similarity Index Measure (SSIM), FID, average entropy, and an inter-class diversity index. The results, shown in

Table 13, indicate that the DCGAN-generated samples maintain high structural integrity, with an SSIM of 87.3%, and achieve a moderate FID of 24.6, which indicates limited deviation between the real and synthetic image feature distributions. Although this FID is not as low as those reported for more generic image generation tasks, it can be considered very competitive for domain-specific image generation given the specialized nature and class imbalance of the malware image set. To further examine the quality and randomness of the samples, average entropy was calculated per image and reached 6.38, indicating high pixel variability and confirming that the generated samples do not suffer from mode collapse or over-smoothing. Finally, the diversity index of 0.72, which measures variation across generated malware classes, signifies adequate inter-class diversity and supports generalization across different malware families, which benefits the future extensibility of this layer of the pipeline.

Figure 7 shows the resulting PCA visualization, highlighting the inter-class and intra-class coherence of the samples. The results confirm the DCGAN model’s capability to enrich the training space with diverse malware-like patterns.

4.4. Performance of Individual Branches

This section evaluates the performance of the two modality-specific detection branches of the proposed framework: the CNN-based image classifier for malware detection and the ensemble learning model for network intrusion analysis using tabular data. Each branch is assessed independently to measure its detection capacity when operating autonomously, prior to fusion.

The CNN-based branch leverages a pre-trained VGG16 architecture, fine-tuned on malware image data synthesized and augmented using a DCGAN. A balanced dataset of 50,000 real and 50,000 synthetic grayscale malware samples was used to train and test the model.

The tabular branch integrates three classifiers—Random Forest, XGBoost, and SVM—into a soft voting ensemble. It was trained and tested on a combination of NSL-KDD and CTU-13 datasets, augmented by synthetic samples from ZDGAN. The test set also included 50,000 real and 50,000 synthetic intrusion records.

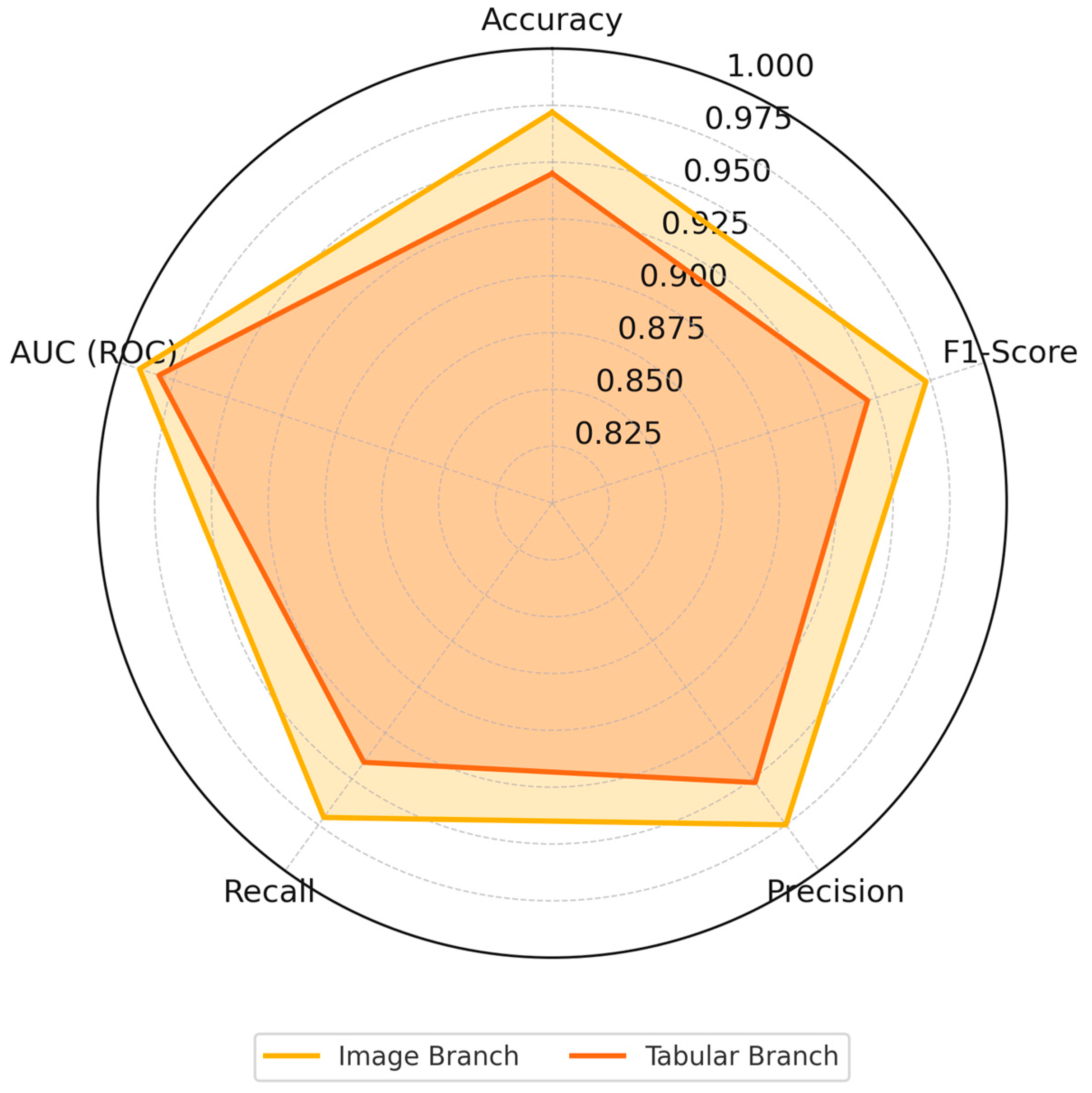

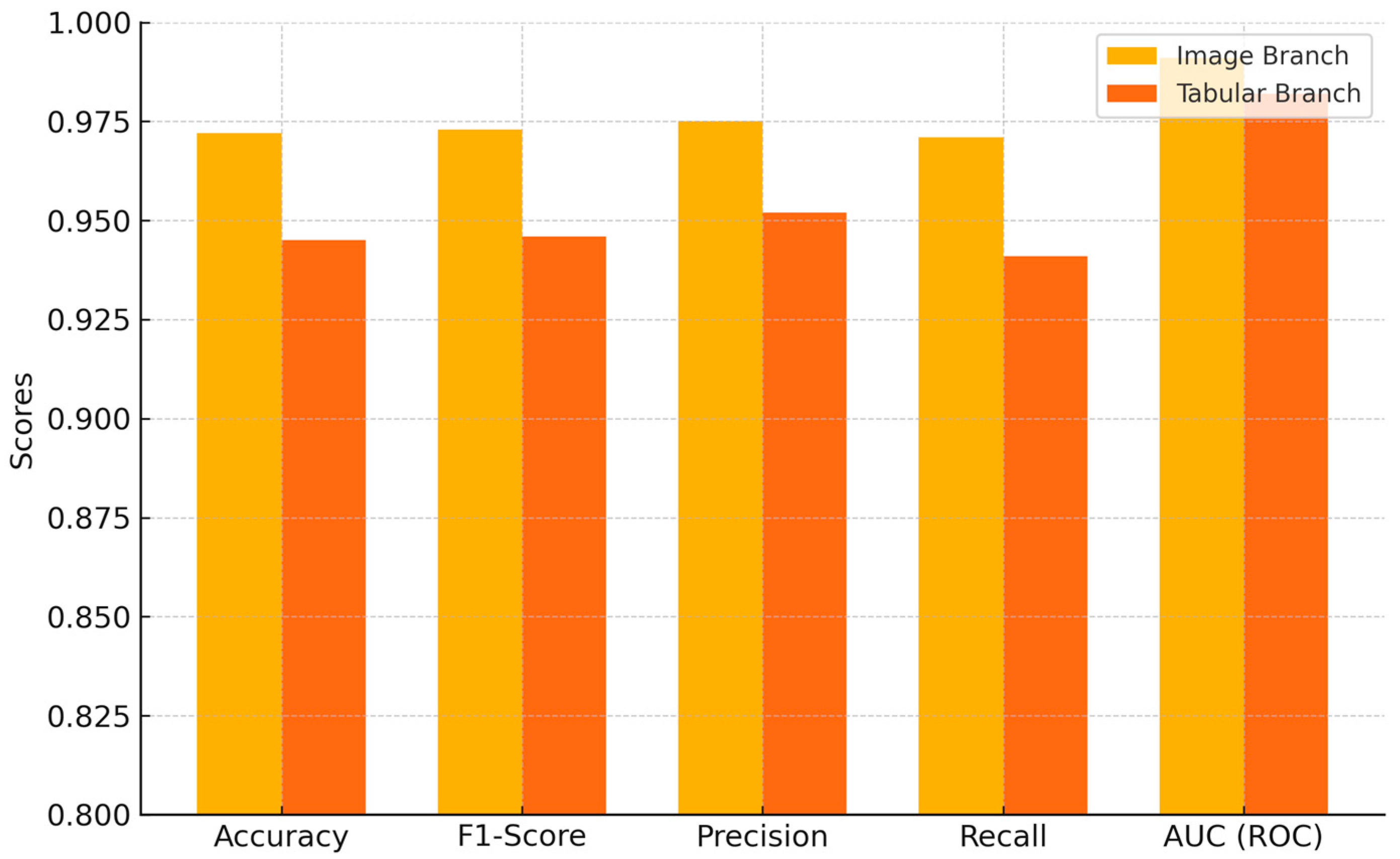

Table 14 presents a comprehensive summary of the evaluation metrics for the two detection branches of the proposed framework. The image-based CNN classifier achieved an accuracy of 97.2% and an F1-score of 97.3% when trained on a balanced dataset of 100,000 grayscale malware images (50% real, 50% synthetic). Precision and recall remained closely aligned at 97.5% and 97.1% respectively, while the Area Under the ROC Curve (AUC) was exceptionally high at 99.1%, indicating the model’s strong discriminative ability even under varied data sample complexities. On the other hand, the tabular-based classifier also showed reliable performance. Trained on a similarly sized dataset of 100,000 intrusion records (comprising real and ZDGAN-synthesized samples from NSL-KDD and CTU-13), it reached 94.5% accuracy and an F1-score of 94.6%. Its precision and recall were also high, 95.2% and 94.1%, respectively, with a robust AUC of 98.2%, which reflects consistent performance across various intrusion types, including zero-day and botnet attacks. Together, these results establish the individual and combined efficiency of both detection branches before fusion integration.
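As a quick consistency check, the reported F1-scores can be recomputed from the reported precision and recall, since F1 is their harmonic mean. The `metrics_from_counts` helper is included only to show how the remaining metrics, including the false positive rate, follow from a confusion matrix. The counts passed to it are hypothetical and are not results from this study.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def metrics_from_counts(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": f1_score(precision, recall),
        "fpr": fp / (fp + tn),   # false positive rate
    }


# Precision and recall as reported for the two branches.
image_f1 = f1_score(0.975, 0.971)      # about 0.973
tabular_f1 = f1_score(0.952, 0.941)    # about 0.946

# Hypothetical confusion-matrix counts, for illustration only.
example = metrics_from_counts(tp=90, fp=10, tn=85, fn=15)
```

Both recomputed values agree with the reported F1-scores of 97.3% and 94.6% to three decimal places.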

Figure 8 and

Figure 9 provide a visual comparison of the classification performance metrics between the image and tabular branches.

Figure 8 illustrates a radar chart capturing five key metrics, as presented in

Table 14, for both branches, allowing for an intuitive side-by-side performance overview. The chart reveals a consistently higher metric profile for the image-based CNN branch, particularly in precision and AUC, while the tabular branch remains competitive, especially in recall.

Figure 9 further reinforces this comparison through a grouped bar chart, highlighting metric-wise differences between the two branches. The visual separation between bars clearly highlights the quantitative margins, with the CNN-based model achieving slightly higher performance in most categories. These visualizations emphasize the complementary strengths of each modality and justify their joint use in a unified fusion architecture as described in

Section 3.

To further validate the performance of the tabular branch of our proposed framework, we compare it against a set of recent and representative GAN-based models designed for intrusion detection and botnet traffic analysis.

Table 15 presents a comparative analysis of the Zero-Day GAN (ZDGAN) module against three representative state-of-the-art GAN-based approaches for tabular intrusion detection: FLGAN [

28], WCGAN + XGBoost [

29], and TransGAN-IDS [

22]. These models were selected based on their architectural similarity, applicability to zero-day or synthetic data scenarios, and the availability of reproducible results using comparable datasets such as CTU-13 and NSL-KDD. Our proposed ZDGAN consistently achieves the best results across all evaluated metrics, with an F1-score of 0.946, AUC of 0.982, and accuracy of 0.945, outperforming FLGAN by 3.1% in F1-score and 2.9% in AUC. These results highlight ZDGAN’s improved generalization capacity and its ability to model rare or unseen threat patterns more effectively. The performance gains further confirm the effectiveness of our integrated pipeline, which couples synthetic data generation with ensemble transfer learning, and support its role as a state-of-the-art solution in the domain of tabular threat detection.

To assess the effectiveness of the malware image detection branch of our proposed framework, we conducted a comparative analysis against a recent state-of-the-art GAN-based approach.

Table 16 shows the results for the malware image detection branch, comparing our pipeline to PlausMal-GAN [

18], which applies ensemble DCGAN variants for image augmentation on the Malimg dataset. Our framework surpasses PlausMal-GAN by a substantial margin (F1-score 0.973 vs. 0.922 and AUC 0.991 vs. 0.947), mainly due to the use of transfer learning and ensemble classification, which enhance robustness and feature discrimination. The observed performance gains validate the utility of synthetic image generation in conjunction with deep learning ensembles for effective malware detection. It is important to note that we limit this comparison to PlausMal-GAN and DPGAN models, as these were the only recent and publicly documented GAN-based approaches evaluated on malware image data (e.g., Malimg), with reproducible metrics and architectural transparency. No other directly comparable models currently meet these criteria within the literature.

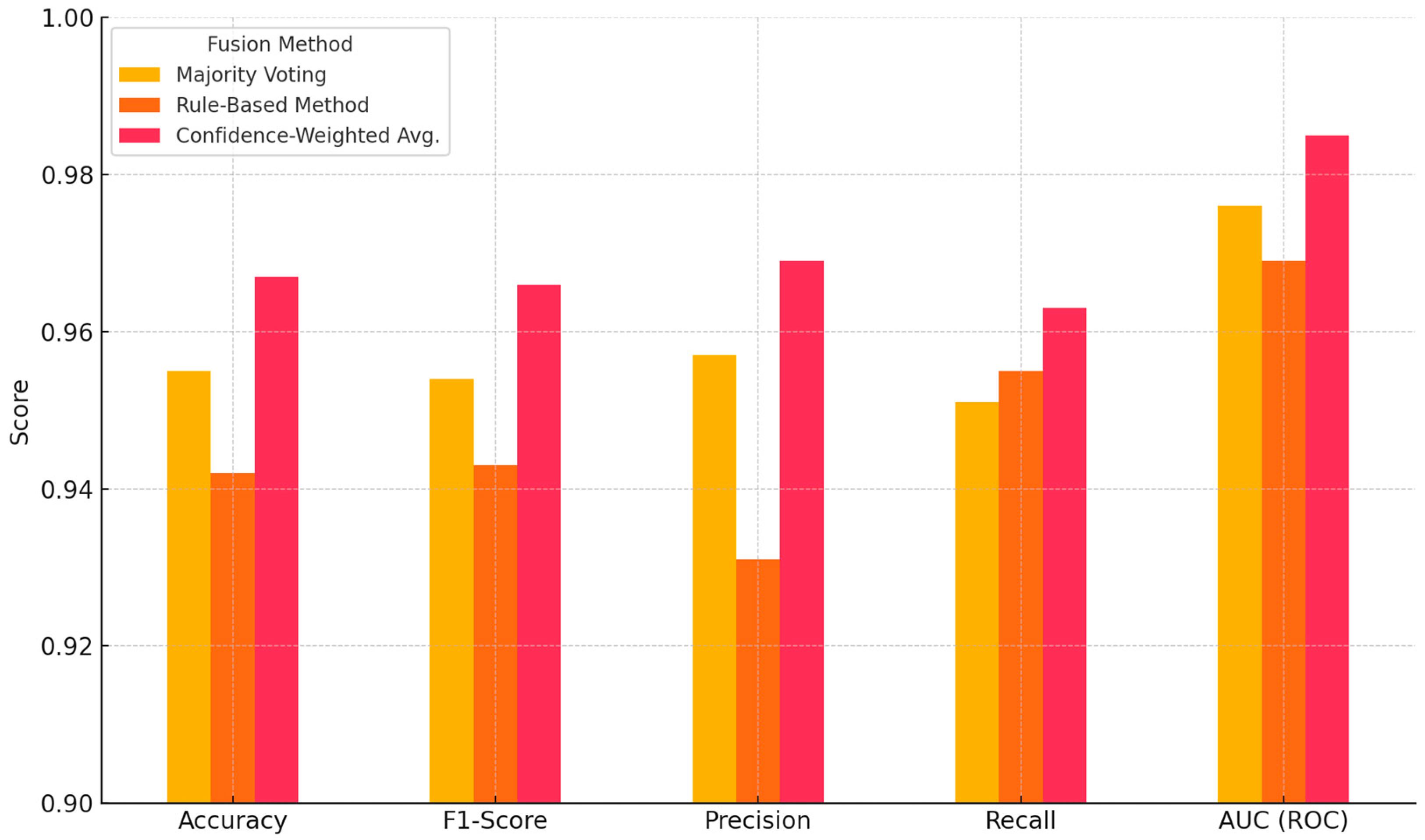

4.5. Comparison of Different Fusion Strategies

The fusion layer plays a crucial role when both data modalities are available for a given input instance, enabling the system to combine the predictions of the independent models into a final decision. This section evaluates and compares the performance of multiple decision-level fusion strategies used to integrate outputs from the image and tabular branches of the proposed threat detection pipeline. To this end, we selected and evaluated three distinct fusion methods, as indicated within

Section 3.5: Majority Voting, Rule-Based (Boolean Logic), and Confidence-Weighted Averaging. Each strategy was applied to a subset of test samples that included both image-based and tabular-based data, enabling multimodal fusion under realistic simulated conditions. The goal was to measure the effectiveness of each method in terms of threat detection accuracy, precision, recall, F1-score, and false positive rate when both predictions are integrated rather than taken from a single branch individually.
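The three decision-level strategies named above can be illustrated with a minimal Python sketch. It is not the implementation used in this study: the function names, the 0.5 decision threshold, the tie-breaking rule for voting, the choice of a Boolean OR rule, and the confidence weight |p − 0.5| · 2 are all assumptions made for illustration. Each branch is assumed to output a probability that the sample is malicious.

```python
from typing import Sequence, Tuple


def majority_vote(probs: Sequence[float], threshold: float = 0.5,
                  tie_is_threat: bool = True) -> bool:
    """Flag a threat if most branches vote 'malicious'.

    With only two branches a 1-1 tie is possible; resolving ties toward
    'threat' is an assumption of this sketch, not the paper's rule.
    """
    if not probs:
        raise ValueError("at least one branch prediction is required")
    votes = sum(p >= threshold for p in probs)
    if 2 * votes == len(probs):
        return tie_is_threat
    return 2 * votes > len(probs)


def rule_based_or(probs: Sequence[float], threshold: float = 0.5) -> bool:
    """Boolean-logic rule (assumed here to be OR): flag if any branch flags."""
    return any(p >= threshold for p in probs)


def confidence_weighted(probs: Sequence[float],
                        threshold: float = 0.5) -> Tuple[float, bool]:
    """Average branch probabilities, weighting each by its confidence.

    Confidence is taken as the distance of the probability from 0.5,
    rescaled to [0, 1] (an illustrative choice).
    """
    if not probs:
        raise ValueError("at least one branch prediction is required")
    weights = [abs(p - 0.5) * 2.0 for p in probs]
    total = sum(weights)
    if total == 0.0:
        fused = 0.5  # every branch is maximally uncertain
    else:
        fused = sum(w * p for w, p in zip(weights, probs)) / total
    return fused, fused >= threshold


if __name__ == "__main__":
    # Hypothetical outputs: image branch is confident, tabular branch is not.
    branch_probs = [0.9, 0.4]
    print(majority_vote(branch_probs))
    print(rule_based_or(branch_probs))
    print(confidence_weighted(branch_probs))
```

In this sketch the confidence-weighted rule lets a confident branch dominate an uncertain one, whereas the voting and Boolean rules discard the probability magnitudes once each branch has been thresholded.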

Table 17 presents the comparative results for each strategy. Among the methods tested, Confidence-Weighted Averaging outperformed the others across most key evaluation metrics, achieving a superior balance between precision and recall, as well as the lowest false positive rate. These results confirm the effectiveness, robustness, and operational feasibility of dynamically weighting predictions by each model’s confidence, especially in heterogeneous data environments.

Figure 10 illustrates the F1-score and AUC performance of each strategy, highlighting the advantage of probabilistic combination techniques over simpler rules like majority voting or Boolean logic.

The evaluation confirms that the integrative framework can adapt to multiple data formats and leverage synthetic augmentation to strengthen detection robustness, while the selected fusion mechanism plays a key role in enhancing the reliability of decision-making across the framework.

To further validate the importance of each individual component integrated in our proposed multimodal threat detection framework, we conducted ablation experiments isolating or removing core modules, namely synthetic data generation, transfer learning, and the decision-level fusion layer. This allows us to quantify their respective contributions to the overall system performance. To this end, four configurations were considered and evaluated using the same test dataset.

Full Model: All components enabled (considered as the main experiment in the current study).

No GAN-based Augmentation: Real data only, synthetic samples removed.

No Transfer Learning: CNN trained from scratch on malware images.

No Decision-Level Fusion Layer: Only tabular branch used (ignoring image input).
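The four configurations listed above can be expressed as simple on/off switches over the three modules. The sketch below is only an illustrative way to organize such an ablation study. The class name `PipelineConfig` and its field names are hypothetical and do not come from the original implementation.

```python
from dataclasses import asdict, dataclass, replace
from typing import Dict, List


@dataclass(frozen=True)
class PipelineConfig:
    """Hypothetical switches for the three ablated modules."""
    use_gan_augmentation: bool = True   # synthetic samples added to training data
    use_transfer_learning: bool = True  # pretrained CNN vs. training from scratch
    use_fusion: bool = True             # decision-level fusion of both branches


FULL = PipelineConfig()

# One entry per configuration named in the text; each ablation
# disables exactly one module relative to the full model.
ABLATIONS: Dict[str, PipelineConfig] = {
    "Full Model": FULL,
    "No GAN-based Augmentation": replace(FULL, use_gan_augmentation=False),
    "No Transfer Learning": replace(FULL, use_transfer_learning=False),
    "No Decision-Level Fusion Layer": replace(FULL, use_fusion=False),
}


def disabled_components(cfg: PipelineConfig) -> List[str]:
    """Return the names of the modules switched off in a configuration."""
    return [name for name, enabled in asdict(cfg).items() if not enabled]


if __name__ == "__main__":
    for label, cfg in ABLATIONS.items():
        print(f"{label}: disabled={disabled_components(cfg)}")
```

Keeping every ablation as a single-field change from the full model makes it easier to attribute a metric difference to one module, provided all runs share the same test set.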

Table 18 summarizes the ablation experiment results for the selected configurations.

The results confirm that each module provides meaningful performance gains. Removing GAN-based augmentation resulted in a notable F1-score drop of 3.9% and a 6.4% decrease in accuracy, highlighting the critical role of synthetic data in improving generalization and supporting rare threat detection. Eliminating transfer learning also reduced F1-score by 2.7% and accuracy by 4.4%, underscoring the advantage of leveraging pretrained feature extractors for image-based malware detection. Lastly, bypassing the decision-level fusion led to a 2.2% drop in F1-score and a reduction in overall precision, confirming the added value of combining multimodal predictions to improve robustness and reduce false positives. These findings reinforce the architectural design choices made in this study and validate the integrative contribution of each module within the proposed framework.

5. Conclusions and Future Work

The current study presented an integrative framework for advanced cybersecurity threat detection that combines GAN-based synthetic data generation, modality-specific classifiers, and decision-level fusion strategies. Through a modular and adaptable architecture, the system efficiently processes both image-based malware samples and structured tabular network intrusion data. Experimental results demonstrated high detection accuracy for each individual branch, specifically 97.2% for the CNN-based malware detector and 94.5% for the ensemble tabular classifier. In parallel, the confidence-weighted averaging fusion strategy yielded the best overall performance, achieving 96.7% accuracy and 98.5% AUC, confirming the added value of multimodal fusion, as the last layer of the proposed pipeline, over standalone detection components.

The framework’s robustness was validated across diverse threat types, including zero-day exploits and botnet intrusions. Synthetic data generated through the selected ZDGAN and DCGAN models significantly improved generalization and resilience to rare or unseen threats. Moreover, the architecture’s ability to handle heterogeneous data streams and perform input-type recognition dynamically highlights its adaptability to real-world deployment environments. The extensive evaluation highlighted its scalability, modularity, and extensibility, making it suitable for cybersecurity infrastructures such as intrusion detection systems, malware analysis sandboxes, and endpoint security platforms.

The proposed multimodal framework has been designed with deployment adaptability in mind. Its modular architecture enables seamless integration into real-world IDS environments such as Security Information and Event Management (SIEM) or Extended Detection and Response (XDR) platforms. Each processing branch can function independently or jointly, depending on available data inputs, allowing the system to adapt to partial or multimodal threat contexts. Modern SIEM/XDR systems increasingly support the integration of external AI/ML modules, either through dedicated APIs, plugin environments, or data enrichment pipelines, making it feasible to deploy our threat detection branches as external microservices or real-time classification components. Additionally, the inclusion of an input-type recognition layer and support for configurable logging and fusion strategies makes the pipeline suitable for deployment within malware sandboxing tools, enterprise detection networks, or endpoint monitoring environments. These features, combined with its low computational footprint and reliance on standard deep learning components, ensure that the proposed system is not only theoretically sound but also practically feasible.
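The independent-or-joint operation of the branches described above can be sketched as a small routing function. This is a simplified illustration under stated assumptions, not the deployed system: the function `detect`, the callable-based branch interface, and the stub classifiers in the usage example are hypothetical, and the input-type recognition is reduced to checking which inputs are present.

```python
from typing import Callable, Optional, Sequence

# Each branch is modeled as a callable returning P(malicious) in [0, 1].
Branch = Callable[[Sequence[float]], float]
Fusion = Callable[[Sequence[float]], float]


def detect(image: Optional[Sequence[float]],
           tabular: Optional[Sequence[float]],
           image_branch: Branch,
           tabular_branch: Branch,
           fuse: Fusion) -> float:
    """Route a sample to the available branch(es) and fuse when both apply."""
    scores = []
    if image is not None:
        scores.append(image_branch(image))
    if tabular is not None:
        scores.append(tabular_branch(tabular))
    if not scores:
        raise ValueError("sample contains neither image nor tabular data")
    if len(scores) == 1:
        return scores[0]  # single-modality input: no fusion needed
    return fuse(scores)


if __name__ == "__main__":
    # Stub classifiers and a plain average stand in for the real models.
    cnn_stub = lambda pixels: 0.8
    ensemble_stub = lambda features: 0.6
    mean_fusion = lambda scores: sum(scores) / len(scores)

    print(detect([0.1, 0.2], None, cnn_stub, ensemble_stub, mean_fusion))
    print(detect([0.1, 0.2], [5.0, 1.0], cnn_stub, ensemble_stub, mean_fusion))
```

Passing the branches and the fusion rule as arguments mirrors the modular design discussed in the text: a wrapper of this kind could sit behind an API endpoint, with any single branch or fusion strategy swapped out without changing the routing logic.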

Despite the strengths of the proposed framework, some limitations should be acknowledged. The experiments were conducted using public benchmark datasets, which may not fully reflect the complexity and variability of real-world network traffic. Furthermore, while efforts were made to validate the synthetic data’s quality and diversity, its real-world representativeness cannot be guaranteed. The framework also assumes clean input labels and no adversarial manipulation, assumptions that may not hold in dynamic or hostile environments. Future work could address these limitations through deployment in live traffic settings, adversarial robustness evaluation, and integration of additional data modalities such as behavioral or textual data.

Future extensions of this framework can explore the inclusion of additional modalities, such as user activity logs, behavioral analytics, and natural language textual inputs (e.g., phishing emails or threat intelligence feeds). The integration of online learning components could further enhance adaptability in dynamic environments. Moreover, explainability mechanisms (e.g., SHAP or LIME) can be incorporated to interpret model decisions and increase transparency for cybersecurity analysts. The framework’s design is also extensible to accommodate future advances in AI, including alternative deep learning (DL) architectures and large language models (LLMs), enabling hybrid reasoning or semantic enrichment. Lastly, real-time benchmarking on live network traffic and adversarial robustness evaluations will be explored to advance the framework’s maturity toward production-grade deployment.

In addition to the fusion strategies evaluated within this study, future work could explore the integration of information market-based decision fusion approaches, which offer a theoretically grounded framework for aggregating classifier outputs based on their information value or utility. By modeling each detection branch (e.g., image-based and tabular classifiers) as a “market participant” that submits probabilistic results, this method would enable more dynamic, context-aware, and trust-sensitive fusion for enhancing decision reliability, especially under ambiguous, adversarial, or partially observable conditions.