A Rotation Target Detection Network Based on Multi-Kernel Interaction and Hierarchical Expansion

Abstract

1. Introduction

- (1) Progressive Scale Variation: Within a single scene, targets of different sizes (for example, ships of various classes) vary continuously in scale. This progressive variation challenges traditional detectors, because fixed-scale designs struggle to detect targets of all sizes at once. Existing methods commonly address scale variation by fusing features through multi-level structures such as feature pyramids. Stacking more layers covers a wider scale range but significantly increases computational complexity, whereas reducing the number of layers compromises detection accuracy, so existing methods struggle to balance detection accuracy against computational efficiency. Against this backdrop, LSKNet [26] selectively expands the spatial receptive field for large-scale targets to capture more scene context by introducing large-kernel convolutions and dilated convolutions into the backbone network. However, large-kernel convolutions may introduce substantial background noise, which harms the precise detection of small targets. Dilated convolutions, while effective at expanding the receptive field, may overlook fine-grained information within it, leading to overly sparse feature representations that reduce detection accuracy.

- (2) Coupling of Scale and Orientation: In remote sensing target detection, existing methods typically model scale variation and orientation variation independently, overlooking the deep coupling between the two. When a target is large, the limited local receptive field of convolutional operations prevents the extracted features from covering the entire target, so critical directional information is missing from local features. Relying solely on local scale features therefore makes it difficult to capture the target’s orientation and shape accurately, which degrades detection accuracy. In other words, increasing target scale not only exacerbates the locality problem of feature extraction but also indirectly weakens orientation perception. Addressing this challenge requires first performing fine-grained scale modeling during feature extraction so that local features have sufficient global perception. An orientation modeling mechanism can then be integrated to fully exploit orientation features under scale variation, achieving collaborative perception and enhancement of scale and orientation and improving the adaptability and robustness of detectors in complex remote sensing scenarios.
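The sparsity concern raised for dilated convolutions in item (1) can be made concrete with a little arithmetic. A k × k kernel with dilation rate d spans k + (k − 1)(d − 1) pixels per side but still reads only k² of them. The sketch below is illustrative only: the function names and the example kernel/dilation pairs are assumptions chosen for this example, not settings taken from LSKNet [26] or from this paper.

```python
def effective_kernel_size(k: int, dilation: int) -> int:
    """Side length (in pixels) spanned by a k x k kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)


def sampling_density(k: int, dilation: int) -> float:
    """Fraction of pixels inside the spanned window that the kernel actually reads."""
    span = effective_kernel_size(k, dilation)
    return (k * k) / (span * span)


if __name__ == "__main__":
    # Hypothetical (kernel, dilation) pairs, chosen for illustration only.
    for k, d in [(3, 1), (3, 3), (5, 3), (7, 1)]:
        span = effective_kernel_size(k, d)
        print(f"k={k}, d={d}: spans {span}x{span}, density={sampling_density(k, d):.2f}")
```

A 3 × 3 kernel with dilation 3 spans the same 7 × 7 window as a dense 7 × 7 kernel but reads only 9 of its 49 positions. Non-dilated kernels avoid that gap at the cost of more weights.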

- (1) This paper is the first to systematically analyze the deep coupling between target scale variation and direction perception in remote sensing images. When the target scale is large, local feature extraction is limited by the receptive field, so local features cannot effectively express the overall direction of the target, which reduces the accuracy of target localization and recognition. To address this, we begin with scale modeling and then integrate direction modeling strategies to achieve collaborative perception and enhancement of scale and direction, offering a new approach to joint scale-direction modeling in remote sensing target detection.

- (2) A multi-kernel contextual interaction (MCI) module is constructed to address gradual changes in target scale. Building on existing multi-scale feature extraction methods, this paper arranges non-dilated depth-wise convolution kernels of different sizes in parallel to extract multi-scale features efficiently and model local contextual information. This design alleviates the feature sparsity caused by dilated convolutions and improves the density and integrity of the feature representation, thereby enhancing the detector’s ability to model targets of different scales in complex remote sensing scenes.

- (3) By jointly optimizing the multi-kernel contextual interaction module and the hierarchical expansion attention (HEA) mechanism, the proposed method establishes a complementary relationship between multi-scale feature extraction and global contextual modeling, thereby improving remote sensing target detection performance.

- (4) Finally, an anchor box convergence method is proposed. Using pre-extracted fine-scale feature information, it generates boundary-center offsets for the external oriented rectangular anchor boxes. This offset regression mitigates the abrupt changes in the loss function caused by angle regression while allowing the anchor boxes to converge compactly and accurately.
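Item (4) describes regressing offsets of boundary midpoints on an external rectangle instead of regressing an angle directly. The paper's exact formulation is not reproduced in this excerpt, so the sketch below uses the midpoint offset representation of Oriented R-CNN [44] as a stand-in: an external axis-aligned box (x, y, w, h) plus two offsets, here called `d_alpha` and `d_beta`. The function and argument names are hypothetical, and the code should be read as an illustration of the general idea, not as the authors' method.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def decode_midpoint_offset(x: float, y: float, w: float, h: float,
                           d_alpha: float, d_beta: float) -> List[Point]:
    """Return four vertices from an external box and two midpoint offsets.

    Assumption: Oriented R-CNN-style convention, in which each vertex lies on
    one side of the external box, displaced from that side's midpoint.
    """
    v1 = (x + d_alpha, y - h / 2)  # on the side y = y - h/2, shifted by +d_alpha
    v2 = (x + w / 2, y + d_beta)   # on the side x = x + w/2, shifted by +d_beta
    v3 = (x - d_alpha, y + h / 2)  # on the side y = y + h/2, shifted by -d_alpha
    v4 = (x - w / 2, y - d_beta)   # on the side x = x - w/2, shifted by -d_beta
    return [v1, v2, v3, v4]


if __name__ == "__main__":
    # Zero offsets place the vertices at the four side midpoints.
    print(decode_midpoint_offset(0.0, 0.0, 4.0, 2.0, 0.0, 0.0))
    # Non-zero offsets slide the vertices along the sides; no angle is regressed.
    print(decode_midpoint_offset(0.0, 0.0, 4.0, 2.0, 1.0, 0.5))
```

In this convention every regression target is a bounded linear offset, so there is no periodic angle term that wraps around. Opposite vertices are symmetric about the box center, which means the decoded shape is in general a parallelogram that a detector would still need to rectify into an oriented rectangle.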

2. Related Works

2.1. Rotation Feature Extraction

2.2. Bounding Box Representation

3. Methodology

3.1. MCI Module

3.2. HEA Module

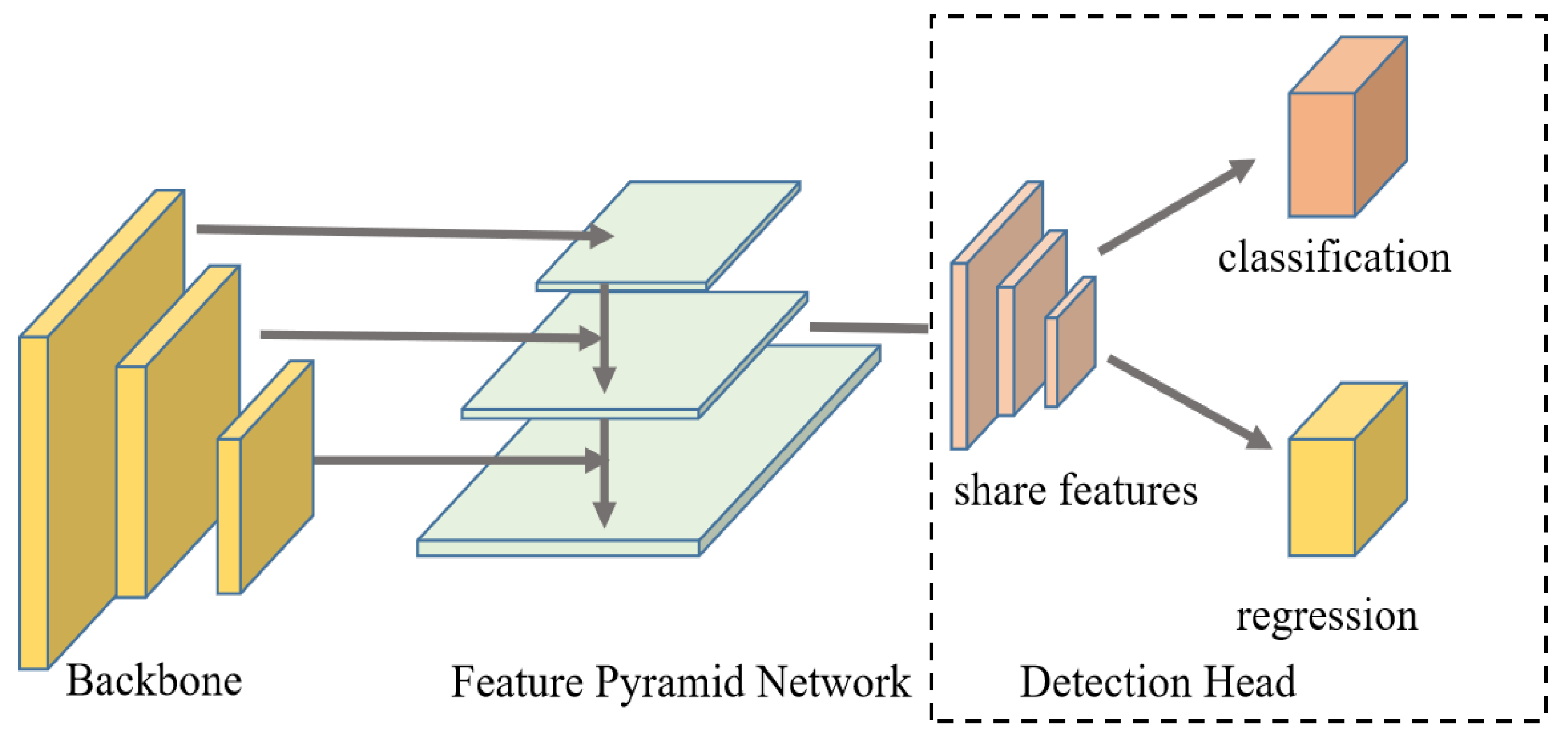

3.3. Overall Structure

3.4. Midpoint Offset Loss Function

4. Experiments

4.1. Datasets

4.2. Experimental Evaluation Metrics

4.3. Parameter Setting

4.4. Ablation Experiment

4.5. Comparison of Results for Different Datasets

4.5.1. Evaluation on DOTA

4.5.2. Evaluation on HRSC2016

4.5.3. Evaluation on UCAS-AOD

4.5.4. Failure Case Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Mei, J.; Zheng, Y.B.; Cheng, M.M. D2ANet: Difference-aware attention network for multi-level change detection from satellite imagery. Comput. Vis. Media 2023, 9, 563–579.
2. Sun, X.; Tian, Y.; Lu, W.; Wang, P.; Niu, R.; Yu, H.; Fu, K. From single-to multi-modal remote sensing imagery interpretation: A survey and taxonomy. Sci. China Inf. Sci. 2023, 66, 140301.
3. Zaidi, S.S.A.; Ansari, M.S.; Aslam, A.; Kanwal, N.; Asghar, M.; Lee, B. A survey of modern deep learning based object detection models. Digit. Signal Process. 2022, 126, 103514.
4. Ding, J.; Xue, N.; Xia, G.S.; Bai, X.; Yang, W.; Yang, M.Y.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; et al. Object detection in aerial images: A large-scale benchmark and challenges. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7778–7796.
5. Zhang, J.; Zhang, R.; Xu, L.; Lu, X.; Yu, Y.; Xu, M.; Zhao, H. Fastersal: Robust and real-time single-stream architecture for rgb-d salient object detection. IEEE Trans. Multimed. 2024, 27, 2477–2488.
6. Zhang, R.; Yang, B.; Xu, L.; Huang, Y.; Xu, X.; Zhang, Q.; Jiang, Z.; Liu, Y. A benchmark and frequency compression method for infrared few-shot object detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5001711.
7. Hong, D.; Han, Z.; Yao, J.; Gao, L.; Zhang, B.; Plaza, A. SpectralFormer: Rethinking hyperspectral image classification with transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5518615.
8. Chen, H.; Chu, X.; Ren, Y.; Zhao, X.; Huang, K. Pelk: Parameter-efficient large kernel convnets with peripheral convolution. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 5557–5567.
9. Huang, J.; Yuan, X.; Lam, C.T.; Ke, W.; Huang, G. Large kernel convolution application for land cover change detection of remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2024, 132, 104077.
10. Hou, Q.; Lu, C.Z.; Cheng, M.M.; Feng, J. Conv2former: A simple transformer-style convnet for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 8274–8283.
11. Hu, Z.; Gao, K.; Zhang, X.; Wang, J.; Wang, H.; Yang, Z.; Li, C.; Li, W. EMO2-DETR: Efficient-matching oriented object detection with transformers. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5616814.
12. Deng, C.; Jing, D.; Han, Y.; Wang, S.; Wang, H. FAR-Net: Fast anchor refining for arbitrary-oriented object detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6505805.
13. Deng, C.; Jing, D.; Han, Y.; Chanussot, J. Toward hierarchical adaptive alignment for aerial object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5615515.
14. Qiao, S.; Chen, L.C.; Yuille, A. Detectors: Detecting objects with recursive feature pyramid and switchable atrous convolution. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10213–10224.
15. Wang, X.; Chen, H.; Chu, X.; Wang, P. AODet: Aerial Object Detection Using Transformers for Foreground Regions. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4106711.
16. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.
17. Li, Y.; Mao, H.; Girshick, R.; He, K. Exploring plain vision transformer backbones for object detection. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; pp. 280–296.
18. Yang, X.; Yang, X.; Yang, J.; Ming, Q.; Wang, W.; Tian, Q.; Yan, J. Learning high-precision bounding box for rotated object detection via kullback-leibler divergence. Adv. Neural Inf. Process. Syst. 2021, 34, 18381–18394.
19. Yang, X.; Zhou, Y.; Zhang, G.; Yang, J.; Wang, W.; Yan, J.; Zhang, X.; Tian, Q. The KFIoU loss for rotated object detection. arXiv 2022, arXiv:2201.12558.
20. Pu, Y.; Wang, Y.; Xia, Z.; Han, Y.; Wang, Y.; Gan, W.; Wang, Z.; Song, S.; Huang, G. Adaptive rotated convolution for rotated object detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 6589–6600.
21. Zhang, R.; Cao, Z.; Huang, Y.; Yang, S.; Xu, L.; Xu, M. Visible-infrared person re-identification with real-world label noise. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4857–4869.
22. Zhu, H.; Jing, D. Optimizing slender target detection in remote sensing with adaptive boundary perception. Remote Sens. 2024, 16, 2643.
23. Yang, X.; Yan, J.; Ming, Q.; Wang, W.; Zhang, X.; Tian, Q. Rethinking rotated object detection with gaussian wasserstein distance loss. arXiv 2021, arXiv:2101.11952.
24. Yang, X.; Yan, J. Arbitrary-oriented object detection with circular smooth label. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 677–694.
25. Han, J.; Ding, J.; Li, J.; Xia, G.S. Align deep features for oriented object detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–11.
26. Li, Y.; Hou, Q.; Zheng, Z.; Cheng, M.M.; Yang, J.; Li, X. Large selective kernel network for remote sensing object detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 16794–16805.
27. Yuan, X.; Zheng, Z.; Li, Y.; Liu, X.; Liu, L.; Li, X.; Hou, Q.; Cheng, M.M. Strip R-CNN: Large Strip Convolution for Remote Sensing Object Detection. arXiv 2025, arXiv:2501.03775.
28. Yang, X.; Yan, J.; Feng, Z.; He, T. R3det: Refined single-stage detector with feature refinement for rotating object. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21), Online, 2–9 February 2021; Volume 35, pp. 3163–3171.
29. Huang, Z.; Li, W.; Xia, X.G.; Wu, X.; Cai, Z.; Tao, R. A novel nonlocal-aware pyramid and multiscale multitask refinement detector for object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5601920.
30. Han, J.; Ding, J.; Xue, N.; Xia, G.S. Redet: A rotation-equivariant detector for aerial object detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 2786–2795.
31. Guan, J.; Xie, M.; Lin, Y.; He, G.; Feng, P. Earl: An elliptical distribution aided adaptive rotation label assignment for oriented object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5619715.
32. Huang, Z.; Li, W.; Xia, X.G.; Tao, R. A general Gaussian heatmap label assignment for arbitrary-oriented object detection. IEEE Trans. Image Process. 2022, 31, 1895–1910.
33. Zhang, Z.; Guo, W.; Zhu, S.; Yu, W. Toward arbitrary-oriented ship detection with rotated region proposal and discrimination networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1745–1749.
34. Li, C.; Xu, C.; Cui, Z.; Wang, D.; Jie, Z.; Zhang, T.; Yang, J. Learning object-wise semantic representation for detection in remote sensing imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019; pp. 20–27.
35. Li, Z.; Hou, B.; Wu, Z.; Guo, Z.; Ren, B.; Guo, X.; Jiao, L. Complete rotated localization loss based on super-Gaussian distribution for remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5618614.
36. Xia, G.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.J.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.
37. Liu, Z.; Yuan, L.; Weng, L.; Yang, Y. A high resolution optical satellite image dataset for ship recognition and some new baselines. In Proceedings of the 6th International Conference on Pattern Recognition Applications and Methods ICPRAM, Porto, Portugal, 25–28 January 2017; Volume 1, pp. 324–331.
38. Zhu, H.; Chen, X.; Dai, W.; Fu, K.; Ye, Q.; Jiao, J. Orientation robust object detection in aerial images using deep convolutional neural network. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3735–3739.
39. Ming, Q.; Zhou, Z.; Miao, L.; Zhang, H.; Li, L. Dynamic anchor learning for arbitrary-oriented object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 2355–2363.
40. Ming, Q.; Miao, L.; Zhou, Z.; Dong, Y. CFC-Net: A critical feature capturing network for arbitrary-oriented object detection in remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5605814.
41. Ming, Q.; Miao, L.; Zhou, Z.; Song, J.; Yang, X. Sparse label assignment for oriented object detection in aerial images. Remote Sens. 2021, 13, 2664.
42. Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning roi transformer for oriented object detection in aerial images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.
43. Ming, Q.; Miao, L.; Zhou, Z.; Yang, X.; Dong, Y. Optimization for Arbitrary-Oriented Object Detection via Representation Invariance Loss. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8021505.
44. Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3520–3529.
45. Ming, Q.; Miao, L.; Zhou, Z.; Song, J.; Dong, Y.; Yang, X. Task interleaving and orientation estimation for high-precision oriented object detection in aerial images. ISPRS J. Photogramm. Remote Sens. 2023, 196, 241–255.
46. Nabati, R.; Qi, H. Rrpn: Radar region proposal network for object detection in autonomous vehicles. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3093–3097.
47. Qian, W.; Yang, X.; Peng, S.; Guo, Y.; Yan, J. Learning Modulated Loss for Rotated Object Detection. arXiv 2019, arXiv:1911.08299.
48. Yang, X.; Yan, J. On the Arbitrary-Oriented Object Detection: Classification based Approaches Revisited. arXiv 2020, arXiv:2003.05597.
49. Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. arXiv 2018, arXiv:1808.01244.
50. Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

| Kernel Design | Parameters (MB) | FLOPs (G) | mAP |
|---|---|---|---|
| (3, 3, 3, 3, 3) | 12.62 | 62.40 | |
| (3, 5, 7, 9, 11) | 13.69 | 70.20 | |
| (3, 5, 9, 13, 17) | 14.99 | 79.57 | |
| (11, 11, 11, 11, 11) | 15.13 | 80.61 | |
| (15, 15, 15, 15, 15) | 17.44 | 92.45 | |
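The parameter trend in the table above is consistent with how depth-wise kernels scale: a k × k depth-wise kernel holds k² weights per channel, so a parallel set of kernels holds the sum of their squares. The sketch below computes that per-channel count for the listed kernel designs, assuming they refer to parallel depth-wise kernels as described in the Introduction. It counts only depth-wise weights (no biases, pointwise layers, or the rest of the network), so it illustrates the relative ordering and is not meant to reproduce the Parameters (MB) column. The function name is hypothetical.

```python
from typing import Sequence


def depthwise_weights_per_channel(kernel_sizes: Sequence[int]) -> int:
    """Total depth-wise weights per channel for parallel k x k kernels (biases excluded)."""
    return sum(k * k for k in kernel_sizes)


# Kernel designs as listed in the table above.
DESIGNS = [
    (3, 3, 3, 3, 3),
    (3, 5, 7, 9, 11),
    (3, 5, 9, 13, 17),
    (11, 11, 11, 11, 11),
    (15, 15, 15, 15, 15),
]

if __name__ == "__main__":
    for design in DESIGNS:
        print(design, depthwise_weights_per_channel(design))
```

The per-channel counts rise in the same order as the reported parameter and FLOP figures, which is the cost side of the trade-off between kernel size and efficiency.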

| Kernel Number | Parameters (MB) | FLOPs (G) | mAP |
|---|---|---|---|
| 2 | 12.56 | 61.95 | |
| 3 | 12.78 | 63.57 | |
| 4 | 13.13 | 66.24 | |
| 5 | 13.69 | 70.20 | |
| 6 | 14.35 | 75.26 | |

| Kernel Design | Parameters (MB) | FLOPs (G) | mAP |
|---|---|---|---|
| (3, 3, 3) | 13.50 | 68.95 | |
| (5, 5, 5) | 13.52 | 69.08 | |
| (5, 7, 7) | 13.54 | 69.21 | |
| (7, 11, 11) | 13.58 | 69.47 | |
| Expansive | 13.69 | 70.20 | |

| Stage Apply | Parameters (MB) | FLOPs (G) | mAP |
|---|---|---|---|
| None | 12.03 | 61.72 | |
| 1 | 12.19 | 64.04 | |
| 2 | 12.31 | 65.45 | |
| 3 | 12.97 | 66.59 | |
| ALL | 13.69 | 70.20 | |

| With MCI | With HEA | With Midpoint Offset | mAP |
|---|---|---|---|
| ✗ | ✗ | ✗ | |
| ✓ | ✗ | ✗ | |
| ✗ | ✓ | ✗ | |
| ✗ | ✗ | ✓ | |
| ✓ | ✓ | ✓ | |

| With MCI | With HEA | With Midpoint Offset | mAP |
|---|---|---|---|
| ✗ | ✗ | ✗ | |
| ✓ | ✓ | ✗ | |
| ✗ | ✓ | ✓ | |
| ✓ | ✗ | ✓ | |
| ✓ | ✓ | ✓ | |

| Methods | Backbone | PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DAL [39] | ResNet-101 | 88.68 | 76.55 | 45.08 | 66.80 | 67.00 | 76.76 | 79.74 | 90.84 | 79.54 | 78.45 | 57.71 | 62.27 | 69.05 | 73.14 | 60.11 | 71.44 |
| CFC-Net [40] | ResNet-101 | 89.08 | 80.41 | 52.41 | 70.02 | 76.28 | 78.11 | 87.21 | 90.89 | 84.47 | 85.64 | 60.51 | 61.52 | 67.82 | 68.02 | 50.09 | 73.50 |
| SLA [41] | ResNet-50 | 88.33 | 84.67 | 48.78 | 73.34 | 77.47 | 77.82 | 86.53 | 90.72 | 86.98 | 86.43 | 58.86 | 68.27 | 74.10 | 73.09 | 69.30 | 76.36 |
| RoI Transformer [42] | ResNet-101 | 88.64 | 78.52 | 43.44 | 75.92 | 68.81 | 73.68 | 83.59 | 90.74 | 77.27 | 81.46 | 58.39 | 53.54 | 62.83 | 58.93 | 47.67 | 69.56 |
| RIDet-O [43] | ResNet-101 | 88.94 | 78.45 | 46.87 | 72.63 | 77.63 | 80.68 | 88.18 | 90.55 | 81.33 | 83.61 | 64.85 | 63.72 | 73.09 | 73.13 | 56.87 | 74.70 |
| Oriented R-CNN [44] | ResNet-50 | 89.84 | 85.43 | 61.09 | 79.82 | 78.71 | 85.35 | 88.82 | 90.88 | 86.68 | 87.73 | 72.21 | 70.80 | 82.42 | 78.18 | 74.11 | 80.87 |
| TIOE-Det [45] | DarkNet-50 | 89.76 | 85.23 | 56.32 | 76.17 | 80.17 | 85.58 | 88.41 | 90.81 | 85.93 | 87.27 | 68.32 | 70.32 | 68.93 | 78.33 | 68.87 | 78.69 |
| S2A-Net [25] | ResNet-101 | 89.28 | 84.11 | 56.95 | 79.21 | 80.18 | 82.93 | 89.21 | 90.86 | 84.66 | 87.61 | 71.66 | 68.23 | 78.58 | 78.20 | 65.55 | 79.15 |
| LSKNet-T [26] | - | 89.14 | 83.20 | 60.78 | 83.50 | 80.54 | 85.87 | 88.64 | 90.83 | 88.02 | 87.31 | 71.55 | 70.74 | 78.66 | 79.81 | 78.16 | 81.07 |
| MIHE-Net | ResNet-50 | 87.25 | 81.32 | 58.76 | 78.41 | 77.83 | 83.57 | 86.29 | 90.15 | 86.73 | 85.92 | 70.28 | 69.45 | 76.82 | 80.17 | 83.26 | 79.83 |
| MIHE-Net | ResNet-101 | 88.62 | 83.07 | 60.57 | 81.17 | 80.06 | 85.41 | 87.76 | 90.82 | 88.42 | 87.30 | 73.16 | 71.76 | 79.12 | 82.46 | 84.76 | 81.72 |

| Methods | Backbone | Parameters (MB) | FLOPs (G) | Size | mAP |
|---|---|---|---|---|---|
| RRPN [46] | ResNet-101 | 44.5 | 125 | 800 × 800 | 79.08 |
| RoI-Transformer [42] | ResNet-101 | 55.1 | 200 | 512 × 800 | 86.20 |
| RSDet [47] | ResNet-50 | 28.9 | 73 | 800 × 800 | 86.5 |
| DAL [39] | ResNet-101 | 36.4 | 216 | 416 × 416 | 88.95 |
| R3Det [28] | ResNet-101 | 41.9 | 216 | 800 × 800 | 89.26 |
| SLA [41] | ResNet-101 | 49.0 | 130 | 768 × 768 | 89.51 |
| CSL [48] | ResNet-50 | 37.4 | 236 | 800 × 800 | 89.62 |
| GWD [23] | ResNet-101 | 55.2 | 121 | 800 × 800 | 89.85 |
| TIOE-Det [45] | ResNet-101 | 48.6 | 139 | 800 × 800 | 90.16 |
| S2A-Net [25] | ResNet-101 | 38.6 | 198 | 512 × 800 | 90.17 |
| ReDet [30] | ResNet-101 | 31.6 | 89 | 512 × 800 | 90.46 |
| Oriented RCNN [44] | ResNet-101 | 41.1 | 199 | 1333 × 800 | 90.50 |
| MIHE-Net | ResNet-101 | 13.69 | 71 | 800 × 800 | 92.43 |

| Methods | Backbone | Size | Car | Airplane | mAP |
|---|---|---|---|---|---|
| SLA [41] | ResNet-50 | 800 × 800 | 88.57 | 90.30 | 89.44 |
| TIOE-Det [45] | ResNet-50 | 800 × 800 | 88.83 | 90.15 | 89.49 |
| CornerNet [49] | Hourglass Network | 800 × 800 | 89.69 | 89.25 | 89.47 |
| FCOS [50] | ResNet-50 | 800 × 800 | 89.49 | 90.05 | 89.77 |
| RIDet-O [43] | ResNet-50 | 800 × 800 | 88.88 | 90.35 | 89.62 |
| DAL [39] | ResNet-50 | 800 × 800 | 89.25 | 90.49 | 89.87 |
| S2A-Net [25] | ResNet-50 | 800 × 800 | 89.56 | 90.42 | 89.99 |
| MIHE-Net | ResNet-101 | 800 × 800 | 91.71 | 92.01 | 91.86 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Q.; Xu, G.; Jing, D. A Rotation Target Detection Network Based on Multi-Kernel Interaction and Hierarchical Expansion. Appl. Sci. 2025, 15, 8727. https://doi.org/10.3390/app15158727
