EEG-Based Attention Classification for Enhanced Learning Experience

Abstract

1. Introduction

Related Work

- Development of a real-time EEG-based neuroadaptive learning framework integrating frequency band selection, graph-theoretic connectivity, and interpretable classification.

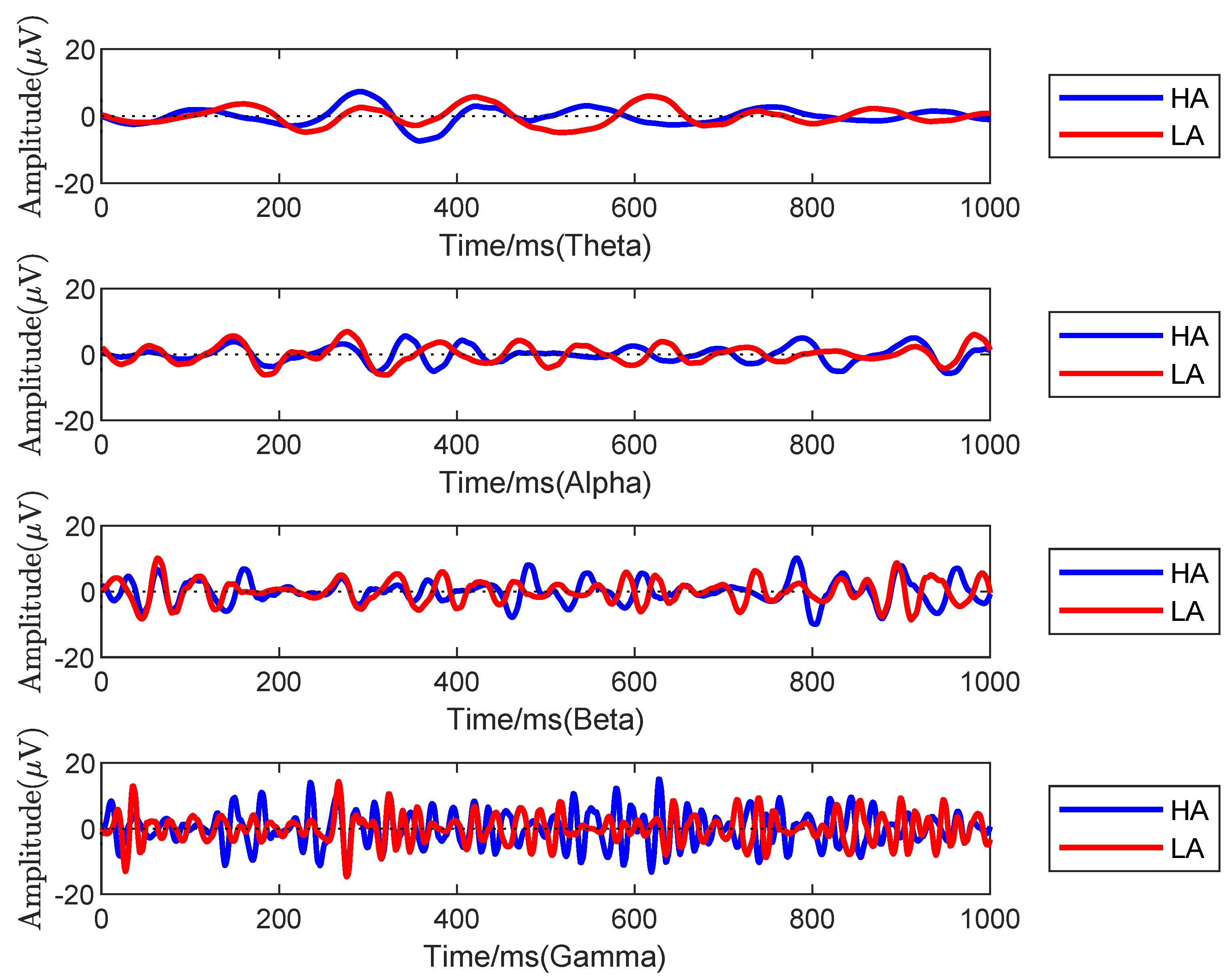

- Empirical validation showing that the gamma band gives the best high-attention (HA) versus low-attention (LA) classification, supported by connectivity metrics.

- Demonstration of improved engagement and learning efficiency through dynamic task adjustment based on real-time EEG feedback.

- A practical implementation tailored for Mandarin vocabulary learning, with potential generalizability to broader educational contexts.

2. Materials and Methods

2.1. Task Design and Stimuli

- Response accuracy: Correct answers during recall were considered indicative of high attention (HA), while incorrect answers signaled low attention (LA).

2.2. Noise Removal and Artifact Correction

- Filtering: A 4th-order Butterworth bandpass filter (1–40 Hz) was applied to the EEG signals to retain relevant frequency components while removing low-frequency drifts and high-frequency noise. Additionally, a 50 Hz notch filter was used to suppress power line interference.

- Bad Channel Detection: Noisy EEG channels were identified through a combination of visual inspection and automated thresholding, using an absolute amplitude criterion of ±80 µV.

- Independent Component Analysis (ICA): ICA decomposition was performed with the runica algorithm in EEGLAB, with the number of components set equal to the number of EEG channels remaining after bad-channel exclusion. Components highly correlated with the vertical and horizontal EOG signals were removed to eliminate ocular artifacts, following the guidelines of Delorme et al. (2007) [21].

- Channel Interpolation: Following ICA, bad channels were interpolated using spherical spline interpolation in EEGLAB. Interpolating only after ICA keeps the data at full rank during decomposition, which avoids the rank-deficiency problems that degrade component separation, as recommended by Kim et al. (2023) [22].

- Baseline Correction: Baseline correction was applied using a prestimulus interval (relative to stimulus onset) to eliminate low-frequency drifts and enable consistent comparisons across epochs.

- Epoch Rejection: EEG epochs exceeding a fixed absolute amplitude threshold (in µV) were automatically rejected; this step removed approximately 5% of the total data.

- Re-referencing: All EEG signals were re-referenced to the common average reference to reduce spatial bias and enhance overall signal quality.
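The filtering and amplitude-based steps listed above can be sketched with NumPy and SciPy. This is a minimal illustration under stated assumptions, not the authors' EEGLAB pipeline: ICA and spherical-spline interpolation are omitted, the data layout (channels × samples, in µV), zero-phase filtering, and the notch quality factor `q` are assumptions, and the epoch-rejection threshold and baseline window are left as caller-supplied arguments because their values are not given in the text.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt


def bandpass_and_notch(eeg, fs, band=(1.0, 40.0), order=4, notch_hz=50.0, q=30.0):
    """Zero-phase Butterworth band-pass (1-40 Hz) followed by a 50 Hz notch.

    eeg: (n_channels, n_samples) array in microvolts. SciPy's band-pass design
    doubles N (N=4 yields an 8th-order filter) and forward-backward filtering
    squares the magnitude response; whether the paper counts "4th-order" the
    same way is an assumption, as is the notch quality factor q.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    out = sosfiltfilt(sos, eeg, axis=-1)
    b, a = iirnotch(notch_hz, q, fs=fs)
    return filtfilt(b, a, out, axis=-1)


def flag_bad_channels(eeg, limit_uv=80.0):
    """Indices of channels whose absolute amplitude exceeds limit_uv (+/-80 uV)."""
    return np.flatnonzero(np.abs(eeg).max(axis=-1) > limit_uv)


def baseline_correct(epochs, times, t_start, t_end):
    """Subtract each epoch/channel mean over the prestimulus window [t_start, t_end).

    epochs: (n_epochs, n_channels, n_times); times in seconds relative to onset.
    The window bounds are caller-supplied because the text does not state them.
    """
    mask = (times >= t_start) & (times < t_end)
    return epochs - epochs[..., mask].mean(axis=-1, keepdims=True)


def reject_epochs(epochs, limit_uv):
    """Drop epochs whose absolute amplitude exceeds limit_uv on any channel."""
    keep = np.abs(epochs).max(axis=(1, 2)) <= limit_uv
    return epochs[keep], keep


def common_average_reference(data):
    """Subtract the mean across channels (channel axis is -2) at each sample."""
    return data - data.mean(axis=-2, keepdims=True)
```

The functions follow the order of the list: filter the continuous data, flag bad channels, then (after ICA and interpolation in EEGLAB) baseline-correct, reject epochs, and re-reference.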

2.3. Frequency Division Using Wavelet Transform

2.4. Feature Extraction

Phase Correlation and Weighted Networks

2.5. Network Construction and Thresholding

2.6. Brain Functional Network Analysis (BFNs)

- Network Degree (ND): $k_i = \sum_{j \neq i} a_{ij}$, the total number of edges connected to node i, where $a_{ij}$ is the corresponding element of the adjacency matrix.

- Clustering Coefficient (Cc): $C_i = \frac{2t_i}{k_i(k_i - 1)}$, where $t_i$ is the number of triangles through node i and $k_i$ is the degree of node i. This metric evaluates the local interconnectivity, or cliquishness, of a node’s neighborhood.

- Path Length (PL): $L = \frac{1}{N(N-1)} \sum_{i \neq j} d_{ij}$, the average shortest path length $d_{ij}$ over all pairs of nodes i and j in a network of N nodes. It reflects the network’s overall routing efficiency.

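- Global Efficiency (GE): $E_{\mathrm{glob}} = \frac{1}{N(N-1)} \sum_{i \neq j} \frac{1}{d_{ij}}$, where $d_{ij}$ is the shortest path length between nodes i and j; it quantifies the efficiency of information exchange across the whole network as the average inverse shortest path length.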

- Network Density (NDn): $\rho = \frac{2E}{N(N-1)}$, where E is the number of existing edges and N is the number of nodes. This metric describes how densely the network is connected.

- Small-World Network Coefficient (SW): The small-world property was quantified using the small-worldness coefficient $\sigma = \frac{C / C_{\mathrm{rand}}}{L / L_{\mathrm{rand}}}$, where $C$ and $L$ are the clustering coefficient and characteristic path length of the actual brain network, and $C_{\mathrm{rand}}$ and $L_{\mathrm{rand}}$ are the same metrics computed from randomized networks that preserve the node degree distribution.

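- Small-Worldness Criterion: The network is considered to exhibit small-world characteristics when $\gamma = C / C_{\mathrm{rand}} > 1$ and $\lambda = L / L_{\mathrm{rand}} \lesssim 1$, so that $\sigma = \gamma / \lambda > 1$, indicating higher clustering than a random graph with similar or shorter path lengths.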
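The network measures defined above can be computed for a thresholded network with a short NumPy sketch. Assumptions, not taken from the paper: the network is binary and undirected (weighted variants are not covered), the network-level C is the mean of the per-node $C_i$, unreachable node pairs are skipped when averaging path length, and the degree-preserving random reference is built with double-edge swaps, one common scheme chosen here for illustration. All function names are hypothetical.

```python
import numpy as np


def degree(A):
    """k_i = sum_j a_ij for a binary, undirected adjacency matrix A."""
    return A.sum(axis=1)


def clustering(A):
    """Per-node C_i = 2 t_i / (k_i (k_i - 1)); nodes with k_i < 2 get 0."""
    k = degree(A).astype(float)
    t = np.diag(A @ A @ A) / 2.0  # diag(A^3) counts each triangle through i twice
    denom = k * (k - 1)
    return np.divide(2.0 * t, denom, out=np.zeros_like(k), where=denom > 0)


def shortest_paths(A):
    """All-pairs hop distances d_ij (Floyd-Warshall); np.inf marks unreachable pairs."""
    D = np.where(A > 0, 1.0, np.inf)
    np.fill_diagonal(D, 0.0)
    for m in range(A.shape[0]):
        D = np.minimum(D, D[:, [m]] + D[[m], :])
    return D


def path_length_and_efficiency(A):
    """Characteristic path length L and global efficiency over all pairs i != j."""
    d = shortest_paths(A)[~np.eye(A.shape[0], dtype=bool)]
    L = d[np.isfinite(d)].mean()  # assumption: unreachable pairs are skipped
    E_glob = np.mean(1.0 / d)     # 1/inf = 0, so unreachable pairs contribute nothing
    return L, E_glob


def density(A):
    """rho = 2E / (N (N - 1)) with E undirected edges and N nodes."""
    n = A.shape[0]
    return 2.0 * np.triu(A, 1).sum() / (n * (n - 1))


def degree_preserving_random(A, n_swaps, rng):
    """Double-edge swaps (a-b, c-d -> a-d, c-b) that keep every node's degree."""
    R = A.copy()
    for _ in range(n_swaps):
        edges = np.argwhere(np.triu(R, 1))
        (a, b), (c, d) = edges[rng.choice(len(edges), size=2, replace=False)]
        if rng.random() < 0.5:
            c, d = d, c  # randomize the orientation of the second edge
        if len({a, b, c, d}) < 4 or R[a, d] or R[c, b]:
            continue  # skip swaps that would create self-loops or duplicate edges
        R[a, b] = R[b, a] = R[c, d] = R[d, c] = 0
        R[a, d] = R[d, a] = R[c, b] = R[b, c] = 1
    return R


def small_world_sigma(A, n_rand=10, seed=0):
    """sigma = (C / C_rand) / (L / L_rand), averaged over n_rand random references."""
    rng = np.random.default_rng(seed)
    n_swaps = 10 * int(np.triu(A, 1).sum())  # assumption: ~10 swap attempts per edge
    C = clustering(A).mean()
    L, _ = path_length_and_efficiency(A)
    C_r, L_r = [], []
    for _ in range(n_rand):
        R = degree_preserving_random(A, n_swaps, rng)
        C_r.append(clustering(R).mean())
        L_r.append(path_length_and_efficiency(R)[0])
    gamma = C / np.mean(C_r)
    lam = L / np.mean(L_r)
    return gamma / lam, gamma, lam
```

Floyd-Warshall is cubic in the number of nodes, which is acceptable for electrode-level networks of a few dozen nodes; larger graphs would call for breadth-first search per node.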

2.7. Statistical Analysis

2.8. Feature Reduction and Classification

2.9. Implementing Real-Time Neurofeedback-Based Learning Using the EAC Model

2.10. Data Analysis

3. Results

3.1. Training the k-NN Classifier

3.2. Real-Time Neurofeedback-Based Learning

4. Discussion

Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chen, D.; Huang, H.; Bao, X.; Pan, J.; Li, Y. An EEG-based attention recognition method: Fusion of time domain, frequency domain, and non-linear dynamics features. Front. Neurosci. 2023, 17, 1194554.

2. Debettencourt, M.T.; Cohen, J.D.; Lee, R.F.; Norman, K.A.; Turk-Browne, N.B. Closed-loop training of attention with real-time brain imaging. Nat. Neurosci. 2015, 18, 470–475.

3. Hagmann, P.; Cammoun, L.; Gigandet, X.; Meuli, R.; Honey, C.J.; Wedeen, V.J.; Sporns, O. Mapping the structural core of human cerebral cortex. PLoS Biol. 2008, 6, e159.

4. Walter, C.; Rosenstiel, W.; Bogdan, M.; Gerjets, P.; Spüler, M. Online EEG-based workload adaptation of an arithmetic learning environment. Front. Hum. Neurosci. 2017, 11, 286.

5. Mills, C.; Fridman, I.; Soussou, W.; Waghray, D.; Olney, A.M.; D’Mello, S.K. Put your thinking cap on: Detecting cognitive load using EEG during learning. In Proceedings of the 7th International Learning Analytics & Knowledge Conference, Vancouver, BC, Canada, 13–17 March 2017; pp. 80–89.

6. Apicella, A.; Arpaia, P.; Frosolone, M.; Improta, G.; Moccaldi, N.; Pollastro, A. EEG-Based measurement system for student engagement detection in Learning 4.0. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 5857.

7. Lingelbach, K.; Gado, S.; Bauer, W. Neuro-adaptive tutoring systems. Competence Dev. Learn. Assist. Syst. Data-Driven Future 2021, 243–260.

8. Rehman, A.U.; Shi, X.; Ullah, F.; Wang, Z.; Ma, C. Measuring student attention based on EEG brain signals using deep reinforcement learning. Expert Syst. Appl. 2025, 269, 126426.

9. Wang, H.; Chang, W.; Zhang, C. Functional brain network and multichannel analysis for the P300-based brain computer interface system of lying detection. Expert Syst. Appl. 2016, 53, 117–128.

10. Zhang, J.; Wang, T.; Hu, D.; Cao, J. EEG Channel and Spectrum Weighting Based Attention State Evaluation. In Proceedings of the 2023 China Automation Congress (CAC), Chongqing, China, 17–19 November 2023; pp. 8597–8601.

11. Hua, C.; Wang, H.; Wang, H.; Lu, S.; Liu, C.; Khalid, S.M. A novel method of building functional brain network using deep learning algorithm with application in proficiency detection. Int. J. Neural Syst. 2019, 29, 1850015.

12. Gogna, Y.; Tiwari, S.; Singla, R. Towards a versatile mental workload modeling using neurometric indices. Biomed. Eng./Biomed. Technik 2023, 68, 297–316.

13. Alhagry, S.; Fahmy, A.A.; El-Khoribi, R.A. Emotion recognition based on EEG using LSTM recurrent neural network. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 355–361.

14. Watts, D.J.; Strogatz, S.H. Collective dynamics of ‘small-world’ networks. Nature 1998, 393, 440–442.

15. Renton, A.I.; Painter, D.R.; Mattingley, J.B. Optimising the classification of feature-based attention in frequency-tagged electroencephalography data. Sci. Data 2022, 9, 296.

16. Gamboa, P.; Varandas, R.; Rodrigues, J.; Cepeda, C.; Quaresma, C.; Gamboa, H. Attention classification based on biosignals during standard cognitive tasks for occupational domains. Computers 2022, 11, 49.

17. Verma, D.; Bhalla, S.; Santosh, S.V.S.; Yadav, S.; Parnami, A.; Shukla, J. AttentioNet: Monitoring Student Attention Type in Learning with EEG-Based Measurement System. In Proceedings of the 2023 11th International Conference on Affective Computing and Intelligent Interaction (ACII), Cambridge, MA, USA, 10–13 September 2023; pp. 1–8.

18. Tuckute, G.; Hansen, S.T.; Kjaer, T.W.; Hansen, L.K. Real-time decoding of attentional states using closed-loop EEG neurofeedback. Neural Comput. 2021, 33, 967–1004.

19. Syed, M.K.; Wang, H. EEG analysis of the Brain Language Processing oriented to Intelligent Teaching Robot. In Proceedings of the 2018 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Shenyang, China, 24–27 August 2018; pp. 278–281.

20. EEGLAB. EEGLAB: An Open Source Toolbox for Analysis of Single-Trial EEG Dynamics Including Independent Component Analysis. Available online: https://sccn.ucsd.edu/eeglab/ (accessed on 16 December 2024).

21. Delorme, A.; Sejnowski, T.; Makeig, S. Enhanced detection of artifacts in EEG data using higher-order statistics and independent component analysis. Neuroimage 2007, 34, 1443–1449.

22. Kim, H.; Luo, J.; Chu, S.; Cannard, C.; Hoffmann, S.; Miyakoshi, M. ICA’s bug: How ghost ICs emerge from effective rank deficiency caused by EEG electrode interpolation and incorrect re-referencing. Front. Signal Process. 2023, 3, 1064138.

23. Niimura, Y.; Takemoto, J.; Kai, A.; Nakagawa, S. Attention-based CNN and Relative Phase Feature Modeling for Improved Imagined Speech Recognition. In Proceedings of the 2023 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Taipei, Taiwan, 31 October–3 November 2023; pp. 8–14.

24. Stam, C.J. Characterization of anatomical and functional connectivity in the brain: A complex networks perspective. Int. J. Psychophysiol. 2010, 77, 186–194.

25. Sawicki, J.; Hartmann, L.; Bader, R.; Schöll, E. Modelling the perception of music in brain network dynamics. Front. Netw. Physiol. 2022, 2, 910920.

26. Huang, D.; Wang, Y.; Fan, L.; Yu, Y.; Zhao, Z.; Zeng, P.; Wang, K.; Li, N.; Shen, H. Decoding Subject-Driven Cognitive States from EEG Signals for Cognitive Brain–Computer Interface. Brain Sci. 2024, 14, 498.

| Variable | Condition | Mean | Std. Dev. | p-Value | Cohen’s d | Comparison |
|---|---|---|---|---|---|---|
| Theta | HA | 0.5212 | 0.2528 | | 0.031 | HA > LA |
| | LA | 0.5136 | 0.2356 | | | |
| Alpha | HA | 0.4462 | 0.2551 | | | HA < LA |
| | LA | 0.4520 | 0.2354 | | | |
| Beta | HA | 0.3656 | 0.2455 | | | HA < LA |
| | LA | 0.3819 | 0.2368 | | | |
| Gamma | HA | 0.3620 | 0.2420 | | | HA < LA |
| | LA | 0.3853 | 0.2349 | | | |
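As a consistency check, the theta effect size can be recomputed from the listed means and standard deviations. This assumes Cohen’s d with an equal-group pooled standard deviation; the pooling actually used is not stated. Under that assumption, the result matches the 0.031 value in the theta row:

```latex
d = \frac{\bar{x}_{\mathrm{HA}} - \bar{x}_{\mathrm{LA}}}{s_p}, \qquad
s_p = \sqrt{\frac{s_{\mathrm{HA}}^2 + s_{\mathrm{LA}}^2}{2}}

s_p = \sqrt{\frac{0.2528^2 + 0.2356^2}{2}} \approx 0.2444, \qquad
d_{\theta} = \frac{0.5212 - 0.5136}{0.2444} \approx 0.031
```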

| Parameter | Theta HA | Theta LA | Alpha HA | Alpha LA | Beta HA | Beta LA | Gamma HA | Gamma LA | p-Value |
|---|---|---|---|---|---|---|---|---|---|
| CC | 0.7767 | 0.7337 | 0.8322 | 0.6636 | 0.8695 | 0.7899 | 0.8650 | 0.8270 | 0.06 |
| PL | 1.3070 | 1.3643 | 1.1636 | 1.3135 | 1.1269 | 1.1773 | 1.1528 | 1.2123 | 0.04 |
| C_real | 1.1184 | 1.0754 | 0.9995 | 1.1095 | 1.0148 | 1.0291 | 1.0379 | 0.9997 | 0.06 |
| PL_real | 1.0005 | 1.0235 | 1.0004 | 1.0005 | 0.9996 | 1.0000 | 1.0004 | 1.0046 | 0.03 |
| Degree | 15.53 | 14.73 | 20.87 | 15.20 | 22.47 | 20.27 | 21.33 | 19.13 | 0.02 |

| Band | NA + KNN Acc. (%) | NA + KNN Prec. (%) | NA + KNN Rec. (%) | NA + KNN F1-Score (%) | NA + PCA + KNN Acc. (%) | NA + PCA + KNN Prec. (%) | NA + PCA + KNN Rec. (%) | NA + PCA + KNN F1-Score (%) |
|---|---|---|---|---|---|---|---|---|
| Theta | 71.67 * | 72.89 * | 69.00 | 70.89 | 80.00 * | 78.00 | 81.25 * | 80.00 * |
| Alpha | 74.83 | 73.96 | 76.67 * | 75.29 * | 80.00 * | 80.00 * | 80.00 * | 80.00 * |
| Beta | 83.17 * | 85.41 * | 80.00 | 82.62 | 83.00 | 80.33 | 84.86 | 83.00 |
| Gamma | 89.67 * | 92.20 * | 86.67 * | 89.35 * | 91.00 * | 89.00 * | 92.71 * | 91.00 * |
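The comparison in the table above (k-NN with and without a PCA reduction step) can be illustrated with a plain NumPy sketch. It is not the authors' implementation: the feature matrix, the label coding (1 = HA, 0 = LA), the number of neighbours `k`, and the number of principal components are all hypothetical, and the usage runs on synthetic data rather than EEG features.

```python
import numpy as np


def pca_fit(X, n_components):
    """Center X (n_samples, n_features) and return (mean, top principal axes)."""
    mu = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mu, full_matrices=False)
    return mu, Vt[:n_components]


def pca_transform(X, mu, W):
    """Project samples onto the principal axes learned on the training set."""
    return (X - mu) @ W.T


def knn_predict(X_train, y_train, X_test, k=5):
    """Binary k-NN: majority vote of the k nearest training samples (Euclidean).

    Labels are assumed to be 1 = HA and 0 = LA; an odd k avoids ties.
    """
    dist = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=-1)
    nearest = np.argsort(dist, axis=1)[:, :k]
    return (y_train[nearest].mean(axis=1) > 0.5).astype(int)


def f1_score(precision, recall):
    """Harmonic mean of precision and recall, F1 = 2PR / (P + R)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 for the chosen positive class."""
    tp = np.sum((y_pred == positive) & (y_true == positive))
    fp = np.sum((y_pred == positive) & (y_true != positive))
    fn = np.sum((y_pred != positive) & (y_true == positive))
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r, f1_score(p, r)


# Usage on synthetic, clearly separable data (not the paper's EEG features):
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (40, 10)), rng.normal(3, 1, (40, 10))])
y = np.r_[np.zeros(40, dtype=int), np.ones(40, dtype=int)]
idx = rng.permutation(len(y))
train, test = idx[:60], idx[60:]
mu, W = pca_fit(X[train], n_components=3)
pred = knn_predict(pca_transform(X[train], mu, W), y[train], pca_transform(X[test], mu, W))
print(precision_recall_f1(y[test], pred))
```

The F1 relation can be checked against the table: for the gamma band with NA + KNN, 2 · 92.20 · 86.67 / (92.20 + 86.67) ≈ 89.35, the listed F1-Score.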

| Aspect | Details |
|---|---|
| Model Name | EEG Adaptive Classification (EAC) Model |
| Purpose | Real-time quiz difficulty adjustment based on attention levels |
| Performance Metric | Classification accuracy, precision, recall, and F1-Score |

| Attention State | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| High Attention (HA) | 87% | 91% | 87% | 88% |
| Low Attention (LA) | 87% | 83% | 85% | 84% |

| Metric | Before EAC Adjustment | After EAC Adjustment |
|---|---|---|
| Average Correct Answers (%) | 71 | 89 |
| Standard Deviation (%) | 15 | 10 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Syed, M.K.; Wang, H.; Siddiqi, A.A.; Qureshi, S.; Gouda, M.A. EEG-Based Attention Classification for Enhanced Learning Experience. Appl. Sci. 2025, 15, 8668. https://doi.org/10.3390/app15158668