1. Introduction

The convergence of artificial intelligence and Internet of Things (IoT) technologies is reshaping modern healthcare into a smart healthcare paradigm. In smart healthcare systems, numerous sensors and connected devices continuously monitor patients’ physiological signals (heart rate, blood pressure, blood glucose, etc.) and environmental conditions, enabling real-time health monitoring and medical support outside traditional clinical settings [1,2,3,4]. However, leveraging this IoT data deluge for meaningful medical insights presents critical challenges [5]. Transmitting sensitive health data from distributed devices to cloud servers raises concerns about privacy, security, and bandwidth constraints in resource-limited settings [6,7]. Furthermore, conventional AI approaches often act as “black boxes” with limited transparency and adaptability to changing patient needs, making it difficult to interpret sensor data or trust automated decisions [8]. Addressing these challenges requires innovative architectures that embed cognitive intelligence directly within IoT networks, transforming raw data into actionable knowledge in a secure and explainable manner.

Recent advances in artificial consciousness (AC) and cognitive modeling [9] suggest a pathway to endow IoT-based systems with human-like reasoning and awareness. In particular, Duan et al. proposed the DIKWP model (Data–Information–Knowledge–Wisdom–Purpose) [10], which establishes a theoretical framework for structured cognitive processing guided by high-level goals or intentions [11,12]. The DIKWP model extends the classic DIKW hierarchy by adding “Purpose” as a top-tier element that drives and contextualizes the transformation of data into wisdom [12]. Each stage in the DIKWP hierarchy corresponds to a cognitive function: acquiring data (raw signals), extracting information (meaningful features), building knowledge (models or patterns), and deriving wisdom (actionable decisions), all under the influence of a guiding purpose (objectives or intent) [12,13]. By incorporating purpose, such as a patient’s specific health goals or a physician’s directives, an artificial system can exhibit adaptive, goal-driven behavior, focusing on relevant information and making decisions aligned with desired outcomes [12]. This aligns with human cognitive processes, where higher-level intentions shape perception and decision-making. Indeed, DIKWP-based cognitive models have demonstrated enhanced transparency and interpretability in complex medical decision processes, bridging the gap between machine reasoning and human clinical reasoning [8,13].

In this work, we propose a novel smart healthcare architecture that integrates DIKWP-based artificial consciousness into IoT systems to achieve next-generation intelligent healthcare services. Our contributions are threefold:

Theoretical Framework: We formalize how the DIKWP cognitive architecture can be applied in an IoT-enabled healthcare context. We explain the roles of each DIKWP layer in processing patient data from wearable sensors (transforming Data → Information → Knowledge → Wisdom) and how the Purpose element introduces semantic reasoning and adaptive, goal-driven control in medical decision-making. We draw parallels to human clinical reasoning and highlight how this approach ensures that decisions are transparent and aligned with healthcare objectives [10].

System Design (Edge–Cloud Collaboration): We design a software-defined, multi-layer architecture where DIKWP agents operate at both the edge (on wearable or near-patient devices) and in the cloud (on a hospital or central server). The edge agents perform on-site data filtering and preliminary analysis (Data → Information → Knowledge) while enforcing privacy by keeping personal raw data local, transmitting only necessary abstracted information to higher layers [6]. The cloud agent aggregates knowledge from multiple patients or longer time spans to form broader wisdom and coordinates the overall healthcare decisions (Wisdom → Purpose). We define a semantic communication protocol for secure data fusion such that only essential information or knowledge (rather than raw sensor data) is transmitted upstream, dramatically reducing network load and exposure of sensitive data [14]. Moreover, we implement a semantic task orchestration mechanism that allows healthcare workflows (e.g., an alert or intervention protocol) to be flexibly defined via high-level purpose-driven rules rather than low-level programming logic. This can be seen as a software-defined approach to configuring intelligent behavior in the IoT network, improving the adaptability and manageability of the system.

Prototype Implementation and Evaluation: We develop a prototypical scenario focusing on wearable health monitoring for chronic disease management and early warning of acute events. In our simulated environment, patients wear devices measuring vital signs (e.g., heart rate, SpO₂, temperature), which connect to a smartphone-based edge node and onward to a cloud service, an approach reflective of modern IoT health monitoring systems [3,4]. We implement DIKWP logic such that the wearable and phone collaboratively transform sensor data into information (e.g., computing heart rate variability), detect anomalies using learned knowledge (e.g., a model of normal vs. abnormal patterns), and make preliminary decisions (wisdom) like issuing an alert or adjusting the monitoring frequency. The cloud component collects anonymized summary information from edges to update global knowledge (e.g., refining risk models across the population) and can send purpose-driven directives back to devices (for example, instructing a device to monitor more closely if a patient is at high risk). We evaluate this system on synthetic datasets that emulate realistic vital sign fluctuations and health events. Key metrics include anomaly detection accuracy, communication overhead (data transmitted), decision explainability (whether the system can provide understandable reasons for an alert), and robustness to network disruptions. The experimental results show that the DIKWP-enhanced approach can achieve accuracy on par with cloud-centric AI while using only a fraction of the bandwidth [4]. Additionally, each decision comes with a traceable semantic explanation (e.g., which threshold or rule was triggered, under what knowledge/purpose context), and the system continues functioning even if cloud connectivity is intermittent, thanks to local autonomy at the edge. To help readers with the technical terms and abbreviations used throughout this article, we provide a glossary (see the Abbreviations section) listing all abbreviations along with their descriptions.

3. DIKWP Cognitive Framework for Smart Healthcare Systems

Building on the theoretical foundation of DIKWP and the requirements of smart healthcare, we now describe the cognitive framework that underpins our system. The framework defines how DIKWP-based artificial consciousness is implemented across the devices and layers of the IoT architecture, and how it interacts with medical tasks.

3.1. DIKWP Cognitive Architecture Overview

The DIKWP cognitive architecture in our system is a distributed implementation of the DIKWP model, spread across multiple agents (software instances) running at different layers (wearable device, edge node, cloud) [17]. Each agent embodies the full DIKWP stack to some degree, but with differing emphases:

Edge Device Agent (Wearable AI): This lightweight agent focuses on the lower DIKWP levels (Data, Information, Knowledge). It directly interfaces with sensors (Data acquisition), performs signal preprocessing (extracting Information like “heart rate = 110 bpm”), and can even apply simple Knowledge-based rules (e.g., “heart rate > 100 bpm and user is resting = possible tachycardia”). The edge agent can generate local Wisdom in certain cases (e.g., vibrate to alert the user to sit down if dizziness is detected) if it is a time-critical and low-risk decision.

Edge Node Agent (Local Gateway/Smartphone AI): This agent receives processed information or knowledge from one or more edge devices. It has more computational resources, so it can perform more complex analysis, integrate multiple data streams (e.g., combine heart rate, blood pressure, and activity level), and might run more sophisticated knowledge models (such as a machine learning model that predicts the risk of atrial fibrillation from a combination of vital signs). The edge node agent can make intermediate decisions (Wisdom), such as determining that an emergency might be occurring, and it can interact with the user (e.g., through a phone app) for confirmation or additional input. It also enforces Purpose constraints locally. For instance, if the patient’s care plan (Purpose) says “avoid false alarms at night unless life-threatening,” the edge node might delay an alert triggered by an edge device until it has cross-checked the severity.

Cloud Agent (Central AI): The cloud agent aggregates knowledge from many patients/devices over longer periods. It represents the higher DIKWP levels (Wisdom and Purpose) more strongly. It can update the global models of knowledge (for example, retrain a risk prediction model on a larger dataset that includes the latest data from all patients, akin to learning new medical knowledge). The cloud agent formulates overarching Wisdom—like determining which patients need urgent attention in a hospital ward—and sets or updates the Purpose for individual edge agents. For example, if the cloud detects a patient’s condition deteriorating over days, it might update that patient’s Purpose parameter to “high monitoring priority,” causing the edge to become more sensitive in detection thresholds.
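The division of labor between the wearable and the edge node can be illustrated with a minimal Python sketch (Python being the language of the prototype's edge agent). The function names, the `quiet_nights` Purpose key, the 22:00 to 06:00 night window, and the 150 bpm "life-threatening" cut-off are hypothetical; only the resting tachycardia rule (> 100 bpm) and the night-time care plan come from the description above.

```python
from datetime import time
from typing import Optional

# From the text: heart rate > 100 bpm while the user is resting suggests tachycardia.
TACHY_BPM = 100
# Hypothetical cut-off treated as life-threatening (illustrative, not clinical guidance).
CRITICAL_BPM = 150
# Hypothetical night window used by the "avoid false alarms at night" care plan.
NIGHT_START, NIGHT_END = time(22, 0), time(6, 0)


def edge_device_infer(heart_rate: int, resting: bool) -> Optional[str]:
    """Wearable agent: Data -> Information -> Knowledge via one simple rule."""
    if heart_rate > TACHY_BPM and resting:
        return "possible_tachycardia"
    return None


def is_night(now: time) -> bool:
    # The window wraps past midnight, so the two bounds are combined with OR.
    return now >= NIGHT_START or now < NIGHT_END


def edge_node_decide(knowledge: Optional[str], heart_rate: int,
                     now: time, purpose: dict) -> str:
    """Edge node agent: turns device knowledge into Wisdom under a Purpose constraint."""
    if knowledge is None:
        return "no_action"
    if purpose.get("quiet_nights") and is_night(now) and heart_rate < CRITICAL_BPM:
        # Care plan: no night-time alarm unless life-threatening, so hold the
        # alert and cross-check severity against the next readings instead.
        return "defer_and_recheck"
    return "alert"
```

With these definitions, a resting reading of 112 bpm yields "possible_tachycardia"; at 02:30 under the quiet-nights plan the edge node returns "defer_and_recheck", whereas the same reading at 14:00 returns "alert".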

All agents communicate through a DIKWP semantic protocol. Rather than sending arbitrary data, agents exchange messages structured to carry DIKWP elements. For example, instead of raw sensor readings, an edge device might send a message labeled as a knowledge-level inference, “patient might be dehydrated.” This semantic labeling ensures that both ends understand the context of the data and can process it accordingly (the cloud knows it is receiving a hypothesis or knowledge element, not just numbers).
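One possible shape for such a semantically labeled message is sketched below. The field names, the `Layer` enumeration, and the JSON encoding are assumptions for illustration, not the protocol's actual wire format.

```python
import json
from dataclasses import asdict, dataclass
from enum import Enum
from typing import Any, Dict


class Layer(str, Enum):
    """DIKWP element that a message payload represents."""
    DATA = "D"
    INFORMATION = "I"
    KNOWLEDGE = "K"
    WISDOM = "W"
    PURPOSE = "P"


@dataclass
class DikwpMessage:
    sender: str              # e.g. "wearable-01" (hypothetical identifier)
    layer: Layer             # which DIKWP element the payload carries
    kind: str                # semantic type, e.g. "hypothesis" or "measurement"
    content: Dict[str, Any]  # the payload itself
    confidence: float = 1.0  # how strongly the sender believes the content

    def to_json(self) -> str:
        d = asdict(self)
        d["layer"] = self.layer.value  # serialize the enum as its short code
        return json.dumps(d, sort_keys=True)

    @staticmethod
    def from_json(text: str) -> "DikwpMessage":
        d = json.loads(text)
        d["layer"] = Layer(d["layer"])
        return DikwpMessage(**d)
```

A receiver can then dispatch on `layer` and `kind` (for example, treating a "K"/"hypothesis" message as something to corroborate rather than a raw value to store).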

Figure 3 schematically illustrates how the DIKWP layers function and interact in the multi-agent system. The five layers (D, I, K, W, P) are depicted not just as a linear stack but as an interconnected network. Data flow upward, transforming at each step, while higher-level influences (Purpose, Wisdom feedback) flow downward or laterally to modulate the processing of lower layers. In our healthcare use-case:

The upward flow might be: sensor readings → vital sign information → health state knowledge → recommended action (wisdom) → fulfillment of the purpose of care.

The downward flow might be: Purpose sets a goal (e.g., maintain vitals within a safe range) → this alters what is considered relevant knowledge (e.g., emphasize blood pressure stability if the purpose is stroke prevention) → which can affect what information to extract (e.g., focus more on blood pressure readings) → and may even trigger additional data collection (e.g., asking the patient to take a measurement now).

This dynamic interplay is akin to a control system where Purpose is the reference point and the lower layers act as feedback loops to achieve it. The artificial consciousness emerges from this loop: the system is continually aware of the gap between the current state (Data–Information–Knowledge indicating patient status) and the desired state (Purpose), and it tries to bridge that gap through Wisdom.

One could also interpret Purpose as giving the system a form of self-awareness relative to its goal. For instance, if the purpose is to keep the patient healthy, the system “knows” what it is striving for and can assess its own inferences in that light: “I suspect the patient is unwell (knowledge); if true, that conflicts with my purpose of keeping them healthy; therefore I must act (wisdom).” While this is a simplistic form of awareness, it distinguishes our AC agent from a passive sensor that merely reports values without understanding their implications.

3.2. Semantic Reasoning and Knowledge Representation

To support the DIKWP process, we employ semantic reasoning techniques and appropriate knowledge representations at each layer:

At the Information (I) level, semantic labeling of sensor data is performed. For example, instead of just storing “HR = 110”, the system might attach a concept label like “tachycardia” if heart rate is above normal for the context. We utilize simple ontologies for vital signs, where ranges of values map to qualitative states (“normal”, “elevated”, “high”). This adds meaning early in the pipeline [38,39].
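A value-to-state mapping of this kind can be sketched in a few lines. The ranges below are hypothetical placeholders rather than clinical reference values, and readings are assumed to be integers; the context check (tachycardia only at rest) follows the example in the text.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical mini-ontology: (inclusive upper bound, qualitative state) per vital sign.
# The ranges are illustrative placeholders, not clinical reference values.
VITAL_ONTOLOGY: Dict[str, List[Tuple[float, str]]] = {
    "heart_rate": [(59, "low"), (100, "normal"), (120, "elevated"), (float("inf"), "high")],
    "spo2": [(89, "low"), (94, "borderline"), (100, "normal")],
}


def qualitative_state(sign: str, value: float) -> str:
    """Map a numeric reading onto the first range whose upper bound covers it."""
    for upper, state in VITAL_ONTOLOGY[sign]:
        if value <= upper:
            return state
    raise ValueError(f"{sign}={value} is outside the ontology's range")


def annotate(sign: str, value: float, context: Dict[str, str]) -> Dict[str, object]:
    """Information-level record: the raw value plus its state and, if any, a concept."""
    state = qualitative_state(sign, value)
    concept: Optional[str] = None
    # Context matters: a fast heart rate only maps to "tachycardia" when at rest.
    if sign == "heart_rate" and state in ("elevated", "high") and context.get("activity") == "resting":
        concept = "tachycardia"
    return {"sign": sign, "value": value, "state": state, "concept": concept}
```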

At the Knowledge (K) level, we represent knowledge using a combination of rules and probabilistic models. Certain medical knowledge is encoded as if-then rules (e.g., IF tachycardia AND low blood pressure THEN possible shock) [40]. These rules are human-understandable and come from medical expertise (guidelines or doctor input). Alongside these rules, we have machine-learned models (like a classifier for arrhythmia from ECG patterns). We also treat the output of those models as “knowledge”; for instance, a model might output a probability of arrhythmia, which we then interpret as a knowledge element (e.g., “arrhythmia_likely” if probability > 0.9). All knowledge elements are represented in a knowledge graph structure for the patient, which might include nodes like “symptom: dizziness” connected to “condition: dehydration” with a certain confidence. Using a graph allows merging disparate knowledge (symptoms, sensor alerts, history) and reasoning over connections.
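The three kinds of knowledge elements described above (expert rules, discretized model outputs, and a per-patient graph) might be represented as follows. This is a sketch under stated assumptions: the 0.9 threshold and the shock rule come from the text, while the function and class names and the edge confidences in the example are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

ARRHYTHMIA_THRESHOLD = 0.9  # from the text: "arrhythmia_likely" if probability > 0.9


def rule_possible_shock(facts: Set[str]) -> Set[str]:
    """Expert rule from the text: IF tachycardia AND low blood pressure THEN possible shock."""
    if {"tachycardia", "low_blood_pressure"} <= facts:
        return {"possible_shock"}
    return set()


def model_output_to_knowledge(p_arrhythmia: float) -> Set[str]:
    """Discretize a (hypothetical) classifier probability into a knowledge element."""
    return {"arrhythmia_likely"} if p_arrhythmia > ARRHYTHMIA_THRESHOLD else set()


class PatientKnowledgeGraph:
    """Per-patient graph of typed nodes joined by weighted relations."""

    def __init__(self) -> None:
        self.edges: Dict[str, List[Tuple[str, str, float]]] = defaultdict(list)

    def add(self, src: str, relation: str, dst: str, confidence: float) -> None:
        self.edges[src].append((relation, dst, confidence))

    def related(self, src: str, min_confidence: float = 0.0) -> List[str]:
        """Nodes reachable in one hop from `src` with at least `min_confidence`."""
        return [dst for _, dst, conf in self.edges[src] if conf >= min_confidence]
```

For example, after adding "symptom:dizziness" suggests "condition:dehydration" (0.6) and "condition:hypotension" (0.3), `related("symptom:dizziness", 0.5)` keeps only the dehydration hypothesis.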

At the Wisdom (W) level, the decision is often a choice among actions (alert, log, intervene, ignore). We implement a simple decision logic that selects actions to best satisfy the Purpose given the current knowledge. This can be seen as a utility-based or goal-based agent planning step. For example, if purpose = avoid hospitalizations, the wisdom layer may prefer an action that increases monitoring or calls a telehealth consultation rather than immediately calling an ambulance, unless knowledge indicates a life-threatening emergency. We formalize this as a small decision tree or state machine that considers the knowledge elements and purpose to output an action.
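A minimal version of such a decision tree might look like this. The knowledge labels, action names, and Purpose keys (`objective`, `alert_sensitivity`) are illustrative assumptions; a deployed system would derive them from clinical protocols.

```python
from typing import Dict, Set

# Hypothetical knowledge labels; in the prototype these would come from the K layer.
LIFE_THREATENING = {"possible_shock", "possible_syncope"}
CONCERNING = {"arrhythmia_likely", "possible_tachycardia", "dehydration_risk"}


def choose_action(knowledge: Set[str], purpose: Dict[str, str]) -> str:
    """Goal-based Wisdom step: a small decision tree over knowledge and Purpose."""
    if knowledge & LIFE_THREATENING:
        return "call_emergency"      # overrides every other objective
    if not (knowledge & CONCERNING):
        return "log_only"            # nothing actionable: record and move on
    if purpose.get("objective") == "avoid_hospitalization":
        return "telehealth_consult"  # escalate, but try to keep the patient at home
    if purpose.get("alert_sensitivity") == "high":
        return "alert_clinician"
    return "increase_monitoring"
```

Checking the life-threatening branch first encodes the exception stated in the text: the "avoid hospitalizations" objective never suppresses an emergency response.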

The Purpose (P) is represented as a set of parameters or rules that the user (patient, caregiver, or system policy) can set. In our prototype, Purpose is configurable per patient. Examples include alert sensitivity (e.g., “low” to reduce false alarms, “high” for high-risk patients), primary objective (e.g., “comfort” vs. “survival”, which might trade off how aggressive interventions should be), or privacy level (how much data can be shared, guiding if any raw data ever leave the device).

Semantic reasoning occurs when knowledge elements are evaluated against the purpose and context. For instance, the system might reason: “The patient reports dizziness (knowledge), and medical context indicates that dizziness combined with tachycardia could imply dehydration. The Purpose context records that the patient is on a diuretic medication (which increases dehydration risk) and that the objective is to prevent a hospital visit. Therefore, the wise action is to advise the patient to drink water and rest rather than immediately calling an ambulance.” This line of reasoning involves understanding concepts and relationships (dizziness, dehydration, medication effects) rather than just numeric thresholds. We implemented this by encoding a small rule base with semantic web technologies (OWL/RDF) that defines relations such as <Medication X> causes <Risk Y>. The DIKWP agent’s reasoning engine (a lightweight forward-chaining inference engine) uses these semantic rules to draw conclusions from the available facts (from the Data and Information layers).

This approach ensures that the system’s decisions are not only data-driven but also knowledge-driven and context-aware. It elevates the interactions from mere sensor triggers to something closer to clinical reasoning. Importantly, every rule or model is traceable, supporting explainability. If an alert is triggered because rule X fired, the clinician can see rule X (e.g., “IF dehydration risk AND dizziness THEN alert”), which is far more transparent than a neural network prediction score with no context [41].
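The forward-chaining and traceability ideas can be combined in one small sketch. It substitutes plain Python tuples for the OWL/RDF rule base mentioned above, and the rule names and facts are hypothetical; the point is that every derived conclusion records the rule that produced it, so an alert can be explained by walking back through the chain.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Rule = Tuple[str, FrozenSet[str], str]  # (rule name, premises, conclusion)

# Hypothetical rule base; stands in for the OWL/RDF relations described above.
RULES: List[Rule] = [
    ("R1", frozenset({"dizziness", "tachycardia"}), "dehydration_risk"),
    ("R2", frozenset({"takes_diuretic"}), "dehydration_risk"),
    ("R3", frozenset({"dehydration_risk", "dizziness"}), "alert"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Tuple[Set[str], Dict[str, str]]:
    """Fire rules until no new fact appears, recording which rule derived each fact."""
    known = set(facts)
    derived_by: Dict[str, str] = {}
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                derived_by[conclusion] = name
                changed = True
    return known, derived_by


def explain(fact: str, derived_by: Dict[str, str], rules: List[Rule]) -> List[str]:
    """Walk back from `fact` to the input facts, listing each rule that fired on the way."""
    premises_of = {name: premises for name, premises, _ in rules}
    trace: List[str] = []

    def visit(f: str) -> None:
        rule = derived_by.get(f)
        if rule is None:
            return  # an observed input fact: nothing further to explain
        for p in sorted(premises_of[rule]):
            visit(p)
        step = f"{rule}: IF {' AND '.join(sorted(premises_of[rule]))} THEN {f}"
        if step not in trace:
            trace.append(step)

    visit(fact)
    return trace
```

For example, with the facts {"dizziness", "tachycardia", "takes_diuretic"}, `explain("alert", ...)` returns the two-step trace "R1: IF dizziness AND tachycardia THEN dehydration_risk" followed by "R3: IF dehydration_risk AND dizziness THEN alert".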

3.3. Privacy and Ethical Considerations in Cognitive Processing

In implementing an artificial consciousness for healthcare, privacy and ethics are not afterthoughts but built-in elements of the cognitive framework [42]. Privacy-aware reasoning is achieved by incorporating privacy into Purpose and Knowledge. For instance, the system’s purpose might include “protect privacy” as an objective, which translates in practice into concrete behaviors: perform as much processing on-device as possible and share only what is necessary (the data minimization principle). We have implemented mechanisms whereby the knowledge layer deliberately abstracts or anonymizes information before sending it upward. As a concrete example, instead of sending raw ECG signals to the cloud, the edge agent might interpret them and send “normal sinus rhythm” or “atrial fibrillation detected” as information. The original waveform stays on the device unless a doctor explicitly needs it [43]. This way, even if cloud databases were compromised, the leaked data would be high-level and less identifying (still potentially sensitive, but far less revealing than raw personal medical data).

On the ethical side, embedding purpose allows us to respect patient autonomy. For example, if a patient does not want certain data shared (perhaps they opt out of sending their fitness tracker data to the cloud), that preference is encoded as part of Purpose (“share_data”: false). The DIKWP agents then consciously (so to speak) abide by that—it is part of their decision-making to not violate that constraint. This is a form of ethical AI, where rules corresponding to ethical guidelines (like privacy, “do no harm”, etc.) are included at the highest level of control.
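Both mechanisms, abstraction before upload and a consent flag in Purpose, can be sketched at the edge as follows. The irregularity measure (coefficient of variation of RR intervals with a hypothetical 0.15 cut-off) is a toy stand-in chosen for brevity; it is not a validated arrhythmia detector and is not the method used in the prototype.

```python
import statistics
from typing import Dict, List, Optional

# Toy irregularity heuristic, NOT a validated atrial-fibrillation detector: if the
# coefficient of variation of RR intervals exceeds this hypothetical cut-off, the
# rhythm is reported as irregular.
CV_CUTOFF = 0.15


def summarize_rr(rr_ms: List[float]) -> str:
    """Reduce a window of RR intervals (milliseconds) to a single rhythm label."""
    cv = statistics.pstdev(rr_ms) / statistics.mean(rr_ms)
    return "irregular_rhythm" if cv > CV_CUTOFF else "regular_rhythm"


def outbound_payload(rr_ms: List[float], purpose: Dict[str, bool]) -> Optional[Dict[str, object]]:
    """What the edge agent may send upstream; the raw intervals never leave the device."""
    if not purpose.get("share_data", True):
        return None  # patient opted out (Purpose "share_data": false)
    return {
        "rhythm": summarize_rr(rr_ms),
        "mean_hr_bpm": round(60000 / statistics.mean(rr_ms)),
        "n_intervals": len(rr_ms),
    }
```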

In summary, the DIKWP cognitive framework for our IoT healthcare system merges human-inspired reasoning with technical strategies for privacy and adaptivity. It sets the stage for the implementation on actual devices.

4. System Architecture and Design

In this section, we describe the overall system architecture of the DIKWP-driven smart healthcare platform, as shown in Figure 4, along with key design components that realize the cognitive framework in a distributed IoT environment. We adopt a multi-layered architecture aligned with both IoT best practices and DIKWP cognitive distribution as discussed. The design can be viewed from two complementary perspectives:

Physical architecture: how hardware and software components (sensors, devices, servers, networks) are arranged and interact;

Cognitive architecture: how DIKWP processes are orchestrated across those components.

4.1. Multi-Layer Edge–Cloud Architecture with DIKWP Agents

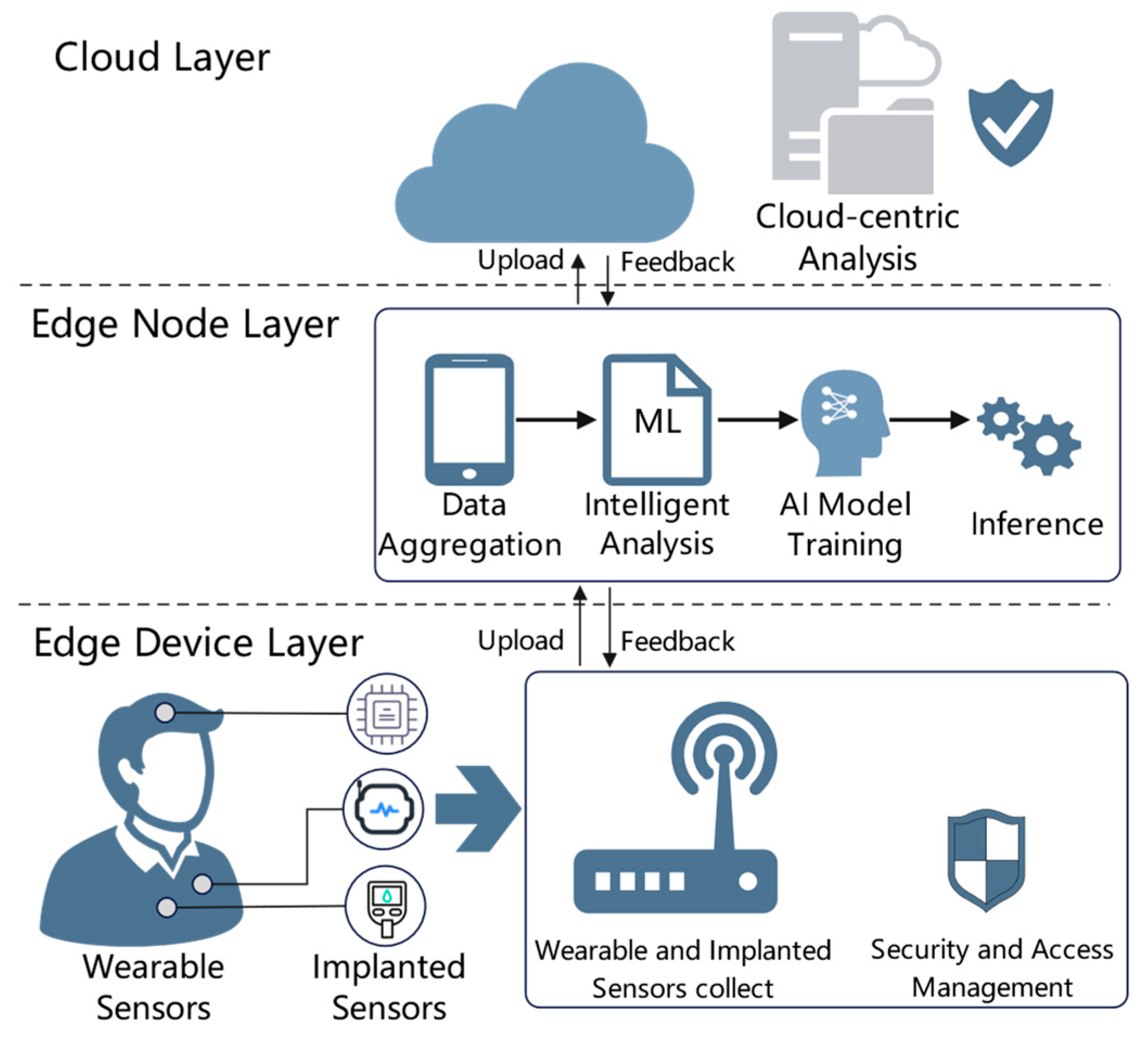

Physically, our system is structured into three main layers, similar to Figure 1’s depiction: device layer, edge layer, and cloud layer. This aligns with modern IoT architectures that distribute processing across device/edge and cloud to address bandwidth and latency constraints [44]. However, we enhance each layer with appropriate intelligence:

Device Layer: This includes wearable sensors and potentially implantable or home IoT devices (e.g., a smart glucometer, a blood pressure cuff, motion sensors in the room). Each such device has an embedded DIKWP micro-agent, limited by the device’s capabilities. For a simple sensor, the agent might only perform data filtering (Data → Information) and minimal knowledge extraction (e.g., flagging a reading that is out of the normal range). More advanced wearables (e.g., a smartwatch) might run a tiny machine learning model (knowledge) and make a local decision (a vibration alarm if the situation is serious). That is a full Data → Information → Knowledge → Wisdom chain on the device, with Purpose perhaps set to “emergency only,” because a wearable should not constantly distract the user with minor issues.

Edge Layer: This is typically a smartphone or gateway device near the patient. For someone at home, it could be a home IoT hub; for someone mobile, their phone; in a hospital, maybe an edge server in a ward handling multiple patients’ data. The Edge DIKWP Agent here is more powerful: it collects data/knowledge from all device layer entities for a patient, integrates them, and runs the higher DIKWP processes as needed. The edge layer is crucial for intermediate decision-making. It is where many alerts can be decided (or canceled) and where data are packaged semantically for the cloud. The edge agent also communicates with the user interface—for example, showing notifications or explanations to a clinician or patient app.

Cloud Layer: This consists of centralized services—it could be in the hospital data center or on a secure cloud platform that aggregates multiple hospitals or a national health service cloud. We have a Cloud DIKWP Agent that receives updates from edges, retrains models, compares patients, and can issue broad commands. The cloud typically has subsystems like databases, a knowledge base, and possibly an AI engine for heavy tasks.

Communication among these layers is facilitated by a secure IoT network, potentially employing software-defined networking (SDN) principles. For example, a message broker (e.g., an MQTT broker) is deployed to route messages by topic, ensuring that a given patient’s data reach only the cloud instance handling that patient and any authorized subscriber (such as a doctor’s dashboard) [45]. SDN controllers can then ensure quality of service for critical health messages.
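The per-patient routing relies on MQTT topic filters. The sketch below reproduces the standard wildcard semantics of the MQTT specification (`+` matches exactly one level; `#` matches all remaining levels, including the parent level), which a real broker applies internally. The topic layout and subscriber names are hypothetical, and the specification's special handling of topics beginning with `$` is omitted.

```python
from typing import Dict, List


def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT topic-filter semantics: '+' matches one level, '#' the rest (incl. parent)."""
    f_levels, t_levels = topic_filter.split("/"), topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


# Hypothetical topic layout: health/<patient>/<DIKWP layer>/<kind>, and hypothetical
# subscribers; each subscriber is granted only the filters it is authorized for.
SUBSCRIPTIONS: Dict[str, List[str]] = {
    "cloud-agent-patient-17": ["health/patient-17/#"],
    "dr-dashboard-3": ["health/patient-17/W/+"],
}


def route(topic: str, subscriptions: Dict[str, List[str]] = SUBSCRIPTIONS) -> List[str]:
    """Return the subscribers that should receive a message published on `topic`."""
    return sorted(name for name, filters in subscriptions.items()
                  if any(topic_matches(f, topic) for f in filters))
```

Under this layout, a knowledge-level summary for patient 17 reaches only the cloud agent, while a Wisdom-level alert also reaches the doctor's dashboard; messages for other patients reach neither.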

To illustrate the interplay, consider a scenario: a patient is wearing a heart monitor and a motion sensor and carrying a phone. The wearable sends heart rate data to the phone continuously (perhaps every second). Most of these data are not urgent. The phone’s DIKWP agent (edge layer) processes them and, perhaps every minute, compiles a summary (e.g., the average and any irregular events, i.e., knowledge) that it sends to the cloud for record-keeping. Suddenly, the patient’s heart rate spikes and the motion sensor indicates a fall. The wearable device agent itself might classify this as emergency knowledge (“fall_detected”) and immediately send it to the phone. The phone agent corroborates this with the heart data (“HR spike”) and decides (Wisdom) that this is likely a syncopal episode (fainting). It triggers an alert to emergency services (action) [46] and simultaneously sends a high-priority alert with the relevant information to the cloud (to update the medical record and notify doctors). The Purpose here (ensuring patient safety) justifies overriding the usual privacy restrictions and data suppression; by design, more data can be shared freely in emergencies. Once the event has passed, the cloud might adjust the patient’s Purpose profile (e.g., mark the patient as high-risk, requiring more frequent check-ins).
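The phone-side logic of this scenario can be sketched as a small class. The class name, the 30 bpm spike margin, and the message fields are hypothetical; heart rate samples are assumed to arrive at 1 Hz, so a routine summary is emitted every 60 samples.

```python
from collections import deque
from statistics import mean
from typing import Deque, List


class PhoneAgent:
    """Hypothetical edge (phone) agent for the fall scenario; HR arrives at 1 Hz."""

    SUMMARY_EVERY = 60  # samples per routine summary (about one minute at 1 Hz)
    SPIKE_DELTA = 30    # hypothetical: bpm above baseline that counts as a spike

    def __init__(self) -> None:
        self.history: Deque[float] = deque(maxlen=120)  # last two minutes of HR
        self.window: List[float] = []                    # samples since last summary
        self.outbox: List[dict] = []                     # messages for the cloud

    def on_heart_rate(self, t: int, bpm: float) -> None:
        self.history.append(bpm)
        self.window.append(bpm)
        if len(self.window) == self.SUMMARY_EVERY:
            # Routine case: one compact summary instead of 60 raw readings.
            self.outbox.append({"priority": "low", "kind": "summary", "t": t,
                                "mean_hr": round(mean(self.window), 1),
                                "max_hr": max(self.window)})
            self.window.clear()

    def hr_spike(self) -> bool:
        if len(self.history) < 10:
            return False  # not enough context to judge
        readings = list(self.history)
        baseline = mean(readings[:-5])  # exclude the most recent 5 s from the baseline
        return max(readings[-5:]) - baseline > self.SPIKE_DELTA

    def on_wearable_knowledge(self, t: int, label: str) -> str:
        if label == "fall_detected" and self.hr_spike():
            # Wisdom: corroborated fall + HR spike -> likely syncope; act locally now.
            self.outbox.append({"priority": "high", "kind": "alert", "t": t,
                                "hypothesis": "possible_syncope",
                                "evidence": ["fall_detected", "hr_spike"]})
            return "notify_emergency_services"
        if label == "fall_detected":
            return "ask_user_to_confirm"
        return "no_action"
```

The emergency decision is taken entirely on the phone; the cloud only receives the high-priority alert afterwards, matching the latency argument in the next paragraph.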

The above example shows how decisions can be made at the lowest possible layer to meet latency requirements (immediate fall response was performed at edge without waiting for cloud). At the same time, the cloud layer is always kept in the loop at a summary level so it can see the big picture (multiple events, trends) and adjust long-term strategy. In resource-constrained settings, extreme edge deployment enables mobile/wearable devices to directly run lightweight “Knowledge-Wisdom” models, synchronizing only critical alerts to local micro-clouds.

To ensure seamless integration with hospital information systems (HIS) and electronic health records (EHR), cloud and hospital edge nodes exchange data bidirectionally through standardized medical interfaces (e.g., the HL7 FHIR API). Synchronized patient records are mapped to the semantic knowledge layers using standard terminologies (e.g., SNOMED CT) to enhance contextual reasoning, and DIKWP-derived clinical alerts (e.g., arrhythmia risk) are delivered directly into existing workflows. Granular, role-based access controls ensure that only anonymized insights are transmitted, consistent with HIPAA/GDPR compliance.
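As an illustration of the standardized interface, the sketch below builds a minimal FHIR R4 `Observation` resource for one heart-rate reading, coded with LOINC 8867-4 and the UCUM unit `/min` as in the FHIR vital-signs profile. The patient identifier is a placeholder, and both transmission (e.g., a POST to the server's `Observation` endpoint) and the mapping of DIKWP alerts onto FHIR resources are outside the sketch.

```python
from datetime import datetime, timezone
from typing import Any, Dict


def heart_rate_observation(patient_id: str, bpm: float, when: datetime) -> Dict[str, Any]:
    """Minimal FHIR R4 Observation for one heart-rate reading (LOINC 8867-4)."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "category": [{"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/observation-category",
            "code": "vital-signs",
        }]}],
        "code": {"coding": [{
            "system": "http://loinc.org", "code": "8867-4", "display": "Heart rate",
        }]},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": when.isoformat(),
        "valueQuantity": {  # UCUM-coded unit, as the vital-signs profile expects
            "value": bpm, "unit": "beats/minute",
            "system": "http://unitsofmeasure.org", "code": "/min",
        },
    }


# Placeholder patient id and timestamp, for illustration only.
EXAMPLE = heart_rate_observation("example", 72, datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc))
```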

4.2. Edge–Cloud Collaborative Learning and Data Fusion

A cornerstone of our architecture is the concept of collaborative learning between edge and cloud. Rather than a one-directional flow of data to a monolithic AI in the cloud, learning and intelligence are distributed:

Edge Learning: Each edge node can train or update local models using the data from its patient. For example, the edge might continuously learn the patient’s normal heart rate range over different times of day (a personalized model). It can use simple online learning algorithms or periodic retraining. These models stay on the edge to personalize detection (e.g., alert if heart rate deviates from that person’s norm, rather than a population threshold).

Cloud Aggregation: The cloud collects anonymized parameters from edge models to improve global models. This is akin to Federated Learning, where each edge computes updates to a model (like gradient updates) using local data, and the cloud aggregates them to refine a shared model without seeing the raw data [47]. In our implementation, we simulate this by having the cloud periodically request summary statistics (like average daily heart rate, or model coefficients) from edges. The cloud then updates, say, a risk prediction model that benefits from data of many patients. The updated global model (knowledge) is then sent back down to all edges to improve their knowledge base.
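The two halves of this loop can be sketched compactly: an edge-side personal baseline maintained with Welford's online mean/variance algorithm, and a cloud-side aggregation that averages edge model parameters weighted by sample count, in the style of Federated Averaging. Representing parameters as plain lists, the 3-sigma anomaly rule, and all names are assumptions; secure aggregation and other privacy safeguards are not shown.

```python
import math
from typing import List, Tuple


class PersonalBaseline:
    """Edge side: running mean/variance of one patient's readings (Welford's algorithm)."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_anomalous(self, x: float, z: float = 3.0) -> bool:
        """Personalized check: deviation from this patient's norm, not a population cut-off."""
        return self.std > 0 and abs(x - self.mean) / self.std > z


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Cloud side: average edge parameter vectors, weighted by local sample counts."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(params[i] * n for params, n in updates) / total for i in range(dim)]
```

Only the parameter vectors and their sample counts travel upstream, so the cloud never sees the readings that produced them.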

This two-way learning means the system gets the best of both worlds: local personalization and global generalization. It is crucial for medical applications where populations vary widely (what’s normal for one person could be a warning sign for another). It also ensures scalability: the heavy lifting is shared, and adding more patients mostly increases computations in parallel at the edges rather than overwhelming a single central service.

Data fusion refers to combining data from multiple sources [48]. Within a single patient’s edge node, we fuse multiple sensor modalities (e.g., heart rate + blood oxygen + accelerometer) to obtain a more robust picture of health. Using DIKWP semantics, each modality might provide a piece of information, and the knowledge layer’s job is to fuse them, often by a rule or model that looks at patterns across modalities. For example, a slight fever plus elevated heart rate plus low activity might together indicate a developing infection, even if each alone would not trigger an alert. The system’s design includes an Information Fusion Module at the edge that aligns data on a timeline (handling different sampling rates and delays), and then an inference engine that runs multi-sensor analysis rules.
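The alignment step of the Information Fusion Module could work by sample-and-hold, as sketched below: each modality contributes its most recent reading at the query time, and readings older than a maximum age are treated as missing. The stream names, the 60 s default age, and the fever/heart-rate/activity thresholds are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

Stream = List[Tuple[float, float]]  # (timestamp in seconds, value), sorted by time


def value_at(stream: Stream, t: float, max_age: float) -> Optional[float]:
    """Sample-and-hold: the latest reading at or before t, unless it is too old."""
    i = bisect_right([ts for ts, _ in stream], t)
    if i == 0:
        return None
    ts, value = stream[i - 1]
    return value if t - ts <= max_age else None


def align(streams: Dict[str, Stream], t: float, max_age: float = 60.0) -> Dict[str, Optional[float]]:
    """Snapshot of all modalities on a common timeline despite different sampling rates."""
    return {name: value_at(s, t, max_age) for name, s in streams.items()}


def developing_infection(snap: Dict[str, Optional[float]]) -> bool:
    """Hypothetical fusion rule: slight fever AND elevated HR AND low activity."""
    temp, hr, steps = snap.get("temp_c"), snap.get("hr_bpm"), snap.get("steps_per_min")
    if temp is None or hr is None or steps is None:
        return False  # a modality is missing or stale, so do not guess
    return 37.5 <= temp < 38.5 and hr > 90 and steps < 10
```

Treating stale readings as missing, rather than holding them indefinitely, keeps a slow sensor (here the thermometer) from silently contributing outdated evidence to the fused rule.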

Between patients or across the community, the cloud can also perform fusion for epidemiological insights. While that is beyond our current prototype, conceptually the cloud could notice if many patients in the same area show similar symptoms (perhaps an infection going around) and alert public health officials. This again leverages the semantic nature of data, because the cloud sees “symptom X in 5 patients”, not just random numbers, and it can identify cluster patterns.

All communications for collaborative learning and data fusion are kept secure and efficient. We use compact message formats (JSON or binary) for edge-to-cloud, and we simulate encryption overhead in our experiments to ensure even with encryption the latency remains acceptable. Also, by mostly sending high-level info rather than raw streams, our network usage is low.
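To make the size argument concrete, the sketch below encodes one hypothetical per-minute summary both as compact JSON and as a fixed binary layout using Python's `struct` module. The field set and layout are assumptions; encryption, which adds its own per-message overhead, is not included.

```python
import json
import struct

# Hypothetical per-minute summary produced by an edge agent.
record = {"pid": 17, "t": 1_700_000_000, "mean_hr": 74.5, "max_hr": 96.0, "anomaly": False}

# Compact JSON: no whitespace after separators.
as_json = json.dumps(record, separators=(",", ":")).encode("utf-8")

# Fixed binary layout (an assumption): little-endian, no padding;
# H = uint16 patient index, I = uint32 epoch seconds,
# f = float32 mean HR, f = float32 max HR, ? = bool anomaly flag.
LAYOUT = struct.Struct("<HIff?")
as_binary = LAYOUT.pack(record["pid"], record["t"], record["mean_hr"],
                        record["max_hr"], record["anomaly"])

print(len(as_json), len(as_binary))
```

The binary record occupies 15 bytes, several times less than the JSON text, at the cost of requiring both ends to agree on the layout; either encoding is far smaller than the 60 raw readings it summarizes.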

The design fosters a continuous learning loop, as illustrated in Figure 5.

This mirrors the DIKWP cycle: data to knowledge at the edge, knowledge to wisdom at the cloud, and purpose adjustments back to the edge. The DIKWP architecture also supports legal compliance through its hierarchical data handling. Raw sensor data (D/I layers) reside locally on edge devices, in line with the principle that patients retain ownership of their primary health data. Processed knowledge (K layer) transmitted to the cloud may be co-owned by healthcare providers and technology operators under contractual agreements, while aggregated wisdom (W layer) models constitute intellectual property of the system operator. This tiered structure is designed to support compliance with regulations such as GDPR and HIPAA, which restrict the sharing of identifiable health data and its secondary use. Our semantic communication protocol further supports compliance by minimizing identifiable data transfer, reducing the legal risks associated with data breaches.

4.3. Software-Defined Semantic Orchestration

A key innovation of our system is the semantic orchestration layer, which functions as the intelligent controller coordinating task execution among distributed agents. Situated either centrally in the cloud or dispersed across edge nodes, this software-defined orchestration layer maintains task definitions and policy instructions in a unified, human-readable, and machine-interpretable format. To facilitate precise task specification, we developed a Domain-Specific Language (DSL) grounded in semantic programming concepts. The DSL explicitly defines conditions of interest (patterns of sensor data), actions triggered by these conditions, and the contextual goals or purposes influencing execution. For example, a defined task (“FallAlert”) might state: if a fall is detected by a motion sensor and the patient’s heart rate exceeds 100 bpm, an alert (“Possible fall with tachycardia”) is triggered under an “emergency_response” context.
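Since the exact DSL syntax is not specified here, the following sketch assumes a hypothetical line-oriented syntax (`task`, `when`, `then`, `purpose`) for the FallAlert example and shows how such a definition could be parsed and evaluated against current facts.

```python
import operator
import re
from typing import Any, Callable, Dict

# Hypothetical DSL text for the FallAlert task described above.
FALL_ALERT_DSL = """
task FallAlert
  when motion.fall_detected and heart_rate > 100
  then alert "Possible fall with tachycardia"
  purpose emergency_response
"""

OPS: Dict[str, Callable[[Any, Any], bool]] = {
    ">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le, "==": operator.eq,
}
# A comparison clause such as "heart_rate > 100"; longer operators are tried first.
COMPARISON = re.compile(r"^([\w.]+)\s*(>=|<=|==|>|<)\s*(-?\d+(?:\.\d+)?)$")


def parse_task(text: str) -> Dict[str, Any]:
    task: Dict[str, Any] = {}
    for line in text.strip().splitlines():
        line = line.strip()
        if not line:
            continue
        keyword, _, rest = line.partition(" ")
        if keyword == "task":
            task["name"] = rest
        elif keyword == "when":
            task["conditions"] = [c.strip() for c in rest.split(" and ")]
        elif keyword == "then":
            action, _, message = rest.partition(" ")
            task["action"], task["message"] = action, message.strip('"')
        elif keyword == "purpose":
            task["purpose"] = rest
        else:
            raise ValueError(f"unknown keyword: {keyword!r}")
    return task


def condition_holds(clause: str, facts: Dict[str, Any]) -> bool:
    match = COMPARISON.match(clause)
    if match:
        name, op, value = match.groups()
        return name in facts and OPS[op](facts[name], float(value))
    return bool(facts.get(clause, False))  # a bare name is a boolean flag


def triggered(task: Dict[str, Any], facts: Dict[str, Any]) -> bool:
    return all(condition_holds(c, facts) for c in task["conditions"])
```

Because the task is data rather than code, the orchestrator can ship a revised definition (say, a different heart-rate bound) without touching the agent's program.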

These task definitions are loaded into the orchestration engine and dynamically decomposed into subtasks allocated between edge devices and the cloud, based on local data availability and computational requirements. Tasks such as fall detection, utilizing local sensors and immediate physiological readings, can be autonomously managed at the edge without cloud involvement, thus optimizing latency and resource use. Conversely, tasks needing broader contextual or historical data analysis are designated for cloud execution.
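The edge/cloud split can be expressed as a simple placement rule, sketched below under assumed inputs: a set of locally available data streams and a rough compute budget for the edge (both hypothetical).

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical edge profile: which data are available locally and a rough compute budget.
EDGE_DATA: FrozenSet[str] = frozenset({"accelerometer", "heart_rate", "spo2"})
EDGE_CPU_BUDGET = 1.0  # arbitrary units


@dataclass(frozen=True)
class Subtask:
    name: str
    inputs: FrozenSet[str]  # data the subtask must read
    cpu_cost: float         # rough compute estimate, same units as EDGE_CPU_BUDGET


def place(task: Subtask) -> str:
    """Run at the edge when all inputs are local and the cost fits; otherwise in the cloud."""
    if task.inputs <= EDGE_DATA and task.cpu_cost <= EDGE_CPU_BUDGET:
        return "edge"
    return "cloud"
```

Under this rule, fall detection (accelerometer and heart rate, low cost) stays at the edge, whereas any subtask that needs historical records held only in the cloud is placed there.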

A significant advantage of this semantic, software-defined architecture is the ease of real-time adaptability. High-level task rules, rather than hard-coded behaviors, are centrally defined and disseminated across agents, enabling instant, network-wide implementation of new medical guidelines without device firmware updates or manual reconfiguration [44]. Furthermore, our orchestration framework includes a feedback loop that relays alerts and performance metrics from edge agents to the central controller, facilitating continuous refinement of task parameters and thresholds.

Simulation experiments validated the robustness and responsiveness of this architecture. For instance, modifying the nighttime heart-rate monitoring sensitivity remotely (from low to high) prompted immediate adaptation by edge agents, adjusting their alert threshold without downtime. Overall, the semantic orchestration layer demonstrates effective integration of the DIKWP cognitive model into an adaptive, intelligent IoT healthcare infrastructure, dynamically translating high-level medical protocols into coordinated, real-time sensor and agent behaviors.
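The sensitivity change described above amounts to a Purpose directive that the edge agent applies in place. A minimal sketch, assuming a hypothetical mapping from sensitivity levels to night-time heart-rate thresholds and a directive field named `night_hr_sensitivity`:

```python
import threading

# Hypothetical mapping from the Purpose-level sensitivity setting to a night-time
# heart-rate alert threshold in bpm (illustrative values only).
NIGHT_HR_THRESHOLDS = {"low": 130, "medium": 115, "high": 100}


class EdgeAgent:
    def __init__(self, sensitivity: str = "low") -> None:
        # Directives typically arrive on a network thread while sampling runs on another.
        self._lock = threading.Lock()
        self.sensitivity = sensitivity
        self.threshold = NIGHT_HR_THRESHOLDS[sensitivity]

    def on_directive(self, directive: dict) -> None:
        """Apply a purpose update pushed by the orchestrator; no restart needed."""
        level = directive["night_hr_sensitivity"]
        if level not in NIGHT_HR_THRESHOLDS:
            raise ValueError(f"unknown sensitivity level: {level!r}")
        with self._lock:
            self.sensitivity = level
            self.threshold = NIGHT_HR_THRESHOLDS[level]

    def should_alert(self, heart_rate: float) -> bool:
        with self._lock:
            return heart_rate > self.threshold
```

With these placeholder values, 110 bpm does not raise an alert at "low" sensitivity but does once the orchestrator switches the agent to "high".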

5. Prototype Implementation

To validate the proposed concepts, we developed a prototype implementation of the DIKWP-driven smart healthcare system. The prototype covers the core functionalities: data collection from sensors, DIKWP agent processing at the edge and in the cloud [49], semantic communication [50], and a simple user interface for viewing alerts and explanations. Although the prototype was not deployed on actual wearable hardware in our tests, we emulate sensor data and network communication to simulate the system’s behavior realistically under various scenarios [51].

Figure 6 presents the prototype interface of the DIKWP-driven smart healthcare system, displaying real-time alerts, patient monitoring data, DIKWP reasoning chains, and system performance metrics.

5.1. Technologies and Tools

Our implementation combines the following components, tools, and frameworks:

Hardware Simulation: We simulate wearable devices using a microcontroller emulator that generates data. For example, heart rate is simulated as a data stream (with baseline 70–80 bpm and occasional spikes for events). We also simulate an accelerometer data stream to detect falls (by generating a characteristic spike pattern when a “fall” occurs).

Edge Agent: The edge intelligence is implemented as a Python 3.7.4 service intended to run on a Raspberry Pi in a real deployment. In our tests, it ran on a PC. We chose Python because of its rich ecosystem for both IoT (e.g., MQTT and sensor-reading libraries) and AI (NumPy, scikit-learn, etc.).

Cloud Agent: Implemented as a Node.js application to illustrate cross-language interoperability (i.e., the edge can be implemented in Python and the cloud in a different environment). The cloud agent subscribes to all patient topics (in our tests, only one patient was active at a time). It stores incoming information in a simple database (SQLite for prototyping) and periodically runs a global analysis. For this analysis, we implemented a federated averaging of a hypothetical model parameter: each edge agent maintained counters of anomalies detected and of total monitoring time, which the cloud used to estimate an “anomaly rate” per patient and overall. This is trivial compared with real ML, but it serves to demonstrate the concept. The cloud can then issue a command if a patient’s anomaly rate is trending poorly (for instance, instructing the edge to increase its monitoring frequency).

Semantic Task Orchestration: We created a small rule definition file (rules.dsl) that follows the patterns described above. A parser script reads this file and configures the rule engine on the edge. To update rules at runtime, the cloud can send a new DSL snippet to the edge, and the edge agent then applies it (in our tests, rules were loaded once at startup or on command).

User Interface: A simple web dashboard displays alerts and their explanations. When the edge agent issues an alert, it publishes not only the alert type but also a JSON object describing the DIKWP trail that led to it. For example:

{
  "alert": "PossibleFall",
  "wisdom_reason": "fall_detected AND tachycardia",
  "knowledge": {"fall_detected": true, "HR": 140},
  "time": "2025-06-01T10:00:00Z"
}

This is stored and the dashboard can show “Alert: PossibleFall at 10:00—Reason: fall_detected AND tachycardia (HR = 140)” to the user. This transparency is important for user trust.
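The following minimal sketch shows how the dashboard might turn the alert JSON into the one-line message above. The function name `render_alert` and the exact output layout are illustrative assumptions, and a plain hyphen separates the fields here. Boolean facts are left out of the parenthetical because `wisdom_reason` already names them.

```python
import json


def render_alert(payload: str) -> str:
    """Format an alert message (JSON carrying its DIKWP trail) as one dashboard line."""
    msg = json.loads(payload)
    hhmm = msg["time"][11:16]  # "2025-06-01T10:00:00Z" -> "10:00"
    # Booleans are already named in wisdom_reason, so only non-boolean facts are appended.
    facts = ", ".join(
        f"{key} = {value}"
        for key, value in msg["knowledge"].items()
        if not isinstance(value, bool)
    )
    line = f"Alert: {msg['alert']} at {hhmm} - Reason: {msg['wisdom_reason']}"
    return f"{line} ({facts})" if facts else line


payload = json.dumps({
    "alert": "PossibleFall",
    "wisdom_reason": "fall_detected AND tachycardia",
    "knowledge": {"fall_detected": True, "HR": 140},
    "time": "2025-06-01T10:00:00Z",
})
print(render_alert(payload))
# Alert: PossibleFall at 10:00 - Reason: fall_detected AND tachycardia (HR = 140)
```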

We note that, due to resource constraints, certain components are simplified. The AC distinction between concept space and semantic space (as described in some DIKWP papers) is not explicitly separated in code. Instead, we combine semantic labeling with knowledge rules in a single mechanism [52]. In addition, the distinction between edge and device was blurred in our prototype: for convenience, we ran both the simulated wearable and the edge agent on the same machine, communicating via local network calls. In a real deployment, they would be separate physical devices communicating via Bluetooth or a similar protocol.
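The sketch below shows one way semantic labeling and knowledge rules could share a single mechanism, as described above. The label thresholds, including the 2.5 g accelerometer peak used for `fall_detected`, are hypothetical, as is the rule table. Only the `PossibleFall` rule mirrors the alert example given earlier.

```python
# Hypothetical semantic labels: each maps a raw reading to a named fact.
LABELS = {
    "tachycardia": lambda r: r["HR"] > 100,               # clinical cut-off used in Section 5.2
    "fall_detected": lambda r: r["accel_peak_g"] >= 2.5,  # assumed impact threshold
}

# Hypothetical wisdom rules: alert name -> labels that must all hold.
RULES = {
    "PossibleFall": ("fall_detected", "tachycardia"),
    "HighHeartRate": ("tachycardia",),
}


def evaluate(reading):
    """Label a reading, then fire every rule whose required labels are all true."""
    labels = {name: test(reading) for name, test in LABELS.items()}
    alerts = []
    for alert, required in RULES.items():
        if all(labels[name] for name in required):
            alerts.append({
                "alert": alert,
                "wisdom_reason": " AND ".join(required),
                "knowledge": {**labels, "HR": reading["HR"]},
            })
    return alerts


for a in evaluate({"HR": 140, "accel_peak_g": 3.1}):
    print(a["alert"], "<-", a["wisdom_reason"])
```

Because the labels and rules live in plain tables, a DSL parser like the one described for rules.dsl could populate them at runtime.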

5.2. Synthetic Dataset for Evaluation

To systematically test the system, we created a synthetic dataset that emulates key aspects of real patient data. This approach allows controlled variation in conditions to evaluate performance under different scenarios (normal, anomalous, high load, etc.). The dataset was generated as follows:

Vital Signs Generation: We generated time-series for heart rate (HR), blood oxygen saturation (SpO2), blood pressure (BP), and body temperature over a period of 24 h with 1 min resolution. Baseline values and circadian patterns were introduced (e.g., HR slightly lower at night). We added random noise to simulate sensor variability.

Event Injection: We injected specific events, as shown in Table 1.

These events were spaced out and combined in various ways to create different test scenarios (some with single anomaly, some with multiple concurrently, etc.).

Multiple Patients: We created five synthetic patient profiles with slight variations in baseline and event patterns to test multi-user handling. For example, Patient A might have a known condition that causes frequent tachycardia, whereas Patient B might be generally healthy apart from a single fall event. This allowed us to test whether our cloud agent could adapt thresholds or responses per patient.

To keep the data clinically realistic, we based our ranges on known clinical data (e.g., normal HR 60–100 bpm, with tachycardia above ~100 bpm; normal SpO2 ~95–100%, with values <92% possibly indicating hypoxia; fever threshold ~38 °C). We did not use real patient data because of privacy concerns and complexity. Our synthetic data are nevertheless sufficient for evaluating system performance metrics such as detection accuracy and false alarm rate, because we have the ground truth (we know exactly when each event was inserted).
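One way to generate such a trace is sketched below. It produces one HR sample per minute over 24 h, with a cosine circadian term that lowers HR at night, Gaussian sensor noise, and an injected tachycardia episode whose ground-truth interval is known. The parameter values (75 bpm baseline, 5 bpm circadian amplitude, 2 bpm noise, 130 bpm episode level) and the function names are our assumptions, not the generator actually used. The 100 bpm labeling cut-off follows the clinical range cited above.

```python
import math
import random


def generate_hr(minutes=24 * 60, baseline=75.0, circadian_amp=5.0, noise_sd=2.0, seed=0):
    """Synthetic HR, one sample per minute, lowest around 03:00 (assumed circadian shape)."""
    rng = random.Random(seed)
    series = []
    for t in range(minutes):
        hour = t / 60.0
        circadian = -circadian_amp * math.cos(2 * math.pi * (hour - 3) / 24)
        series.append(baseline + circadian + rng.gauss(0, noise_sd))
    return series


def inject_tachycardia(series, start, duration, level=130.0):
    """Overwrite [start, start + duration) with an elevated HR; return the new series and the truth interval."""
    out = list(series)
    end = min(start + duration, len(out))
    for t in range(start, end):
        out[t] = level
    return out, (start, end)


def label(hr_bpm):
    """Ground-truth label using the >100 bpm tachycardia cut-off."""
    return "tachycardia" if hr_bpm > 100 else "normal"


hr = generate_hr()
hr_with_event, truth = inject_tachycardia(hr, start=600, duration=15)
print(truth)  # (600, 615)
```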

5.3. Evaluation Metrics

Given the goals of our system, we identified several key metrics to evaluate:

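Anomaly Detection Accuracy: How well the system detects the injected health events. Since we know the ground truth of events in the synthetic data, we measure standard classification metrics, as shown in Table 2.

From these, we compute detection accuracy (Acc), precision (P), recall (R), and F1-score (F1), where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives:

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2PR}{P + R}$$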

We break down performance by event type (falls, arrhythmia, etc.) to see if any particular scenario is problematic.

Communication Overhead: We log the amount of data transmitted between the edge and the cloud, measured in kilobytes (KB) or megabytes (MB) per day, together with the number of messages per minute. We compare two modes: our DIKWP semantic compression and a hypothetical “raw data” mode. We expect our method to reduce the number of bytes sent drastically [50].

Latency: The time from the occurrence of an event (e.g., a sensor reading crossing a threshold) to the appropriate action (an alert) being taken. In particular, we compare edge-local action with the case in which the edge must wait for the cloud. This metric validates the responsiveness of the system [49].

Energy Impact (Indirect): We do not have actual battery measurements, but we estimate how reducing communication and performing processing locally affect device battery life. We approximate energy cost by counting CPU cycles for processing versus bytes transmitted, since radio transmission often costs more energy than computation on modern devices.

Explainability and User Feedback: This is qualitative. We present the explanations generated for each alert to a small group of medical students (in a hypothetical review) to gauge if they find them understandable and useful. While not a full user study, it gives insight into the clarity of our semantic outputs.

Robustness: We test scenarios in which the network is unreliable (simulated packet loss or delays) to see whether the system still performs. For example, what happens if the cloud is unreachable? Does the edge still handle events and synchronize later, once the connection is restored? We track how many actions occur while the cloud is disconnected and whether any information is lost or queued.
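The CPU-cycles-versus-bytes approximation used for energy impact can be written as a linear energy model. The per-cycle and per-byte costs below are placeholder assumptions, chosen only so that sending one byte costs about as much as 1,000 CPU cycles. This reflects the observation that radio transmission is usually the more expensive operation. The values are not measurements from our prototype.

```python
# Placeholder costs (assumptions, not measurements): one transmitted byte is
# taken to cost as much energy as ~1,000 CPU cycles.
ENERGY_PER_CYCLE_J = 1e-9
ENERGY_PER_BYTE_J = 1e-6


def energy_proxy_j(cpu_cycles: float, bytes_sent: float) -> float:
    """Linear energy estimate: computation plus radio transmission, in joules."""
    return cpu_cycles * ENERGY_PER_CYCLE_J + bytes_sent * ENERGY_PER_BYTE_J


# Per-day comparison: cycle counts are hypothetical; byte volumes match Section 6.2.
raw_mode = energy_proxy_j(cpu_cycles=1e9, bytes_sent=15e6)     # stream everything
dikwp_mode = energy_proxy_j(cpu_cycles=5e9, bytes_sent=1.2e6)  # more local compute, far fewer bytes
print(f"raw: {raw_mode:.1f} J/day, DIKWP: {dikwp_mode:.1f} J/day")
```

Under these assumed costs, the raw mode comes to roughly 16 J/day and the DIKWP mode to 6.2 J/day. The DIKWP mode wins even though it spends five times more CPU cycles. The conclusion is only as good as the assumed cost ratio.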

6. Experimental Results

We conducted a series of experiments using the prototype and synthetic datasets described. The results provide evidence for the effectiveness of the DIKWP-based approach in terms of detection performance, efficiency, and explainability. In this section, we present and discuss the findings, often comparing our DIKWP-enhanced system (Edge–Cloud AC) to a baseline scenario of a more traditional Cloud-centric IoT system without DIKWP logic.

6.1. Anomaly Detection Performance

Across the five synthetic patient profiles and multiple runs (24 h simulations each), the DIKWP-driven system consistently detected the injected health events with high accuracy.

Table 3 summarizes the detection performance metrics aggregated over all test cases for two system variants.

As shown in Table 3, both the proposed DIKWP system and the baseline achieve very high accuracy (97–98%), indicating that the vast majority of both event and no-event periods are correctly classified by both methods. However, the DIKWP agent attains a slightly higher precision than the baseline (95.0% vs. 90.5%), meaning it raises fewer false alarms (false positives) relative to the true events it detects. This advantage in precision can be attributed to DIKWP’s ability to incorporate semantic context in its decision-making. For example, our system may suppress an alert if vital signs quickly return to normal or if it finds a plausible explanation that an observed anomaly is not critical. In contrast, the baseline’s cloud-based approach triggers an alert whenever a threshold is crossed, without contextual reasoning, which leads to more frequent false alarms.
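The contextual suppression described above can be illustrated with a rule that accepts a plausible explanation for an elevated heart rate. Here, a hypothetical accelerometer-derived `activity_level` label and an assumed 150 bpm exercise limit stand in for the agent's richer context. The baseline, by contrast, alerts on any threshold crossing.

```python
def baseline_alert(hr_bpm, threshold=100):
    """Context-free trigger: any HR above the threshold raises an alert."""
    return hr_bpm > threshold


def contextual_alert(hr_bpm, activity_level, threshold=100, exercise_hr_limit=150):
    """Suppress HR alerts that the current activity plausibly explains.

    activity_level is a hypothetical accelerometer-derived label such as
    "rest", "walking", or "exercise".
    """
    if hr_bpm <= threshold:
        return False
    if activity_level == "exercise" and hr_bpm <= exercise_hr_limit:
        return False  # elevated HR explained by exertion
    return True


print(baseline_alert(125), contextual_alert(125, "exercise"))  # True False
print(baseline_alert(125), contextual_alert(125, "rest"))      # True True
```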

Additionally, our system’s recall is markedly higher than that of the baseline (96.0% vs. 85.0%), reflecting the ability of our approach to capture a greater proportion of true events. Recall represents the proportion of real events correctly identified, and the baseline’s much lower recall suggests it missed a number of actual incidents—especially in complex scenarios where multiple anomalies occurred simultaneously. For instance, when a fall and tachycardia happened concurrently, the baseline’s simplistic threshold-based logic sometimes became confused or prioritized one anomaly over the other, causing it to miss one of the events. In contrast, the DIKWP agent interpreted such concurrent anomalies as a combined pattern and successfully detected both, demonstrating the advantage of the multi-modal reasoning capability in our approach. Consequently, these improvements in precision and recall yield a substantially higher F1-score for DIKWP (95.5) compared to the baseline (87.7). The F1-score, being the harmonic mean of precision and recall, highlights the superior overall balance achieved by our system in capturing true events while minimizing false alerts.
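The F1-scores in Table 3 can be checked directly from the reported precision and recall values with F1 = 2PR/(P + R). The helper names below are ours. `metrics` also computes all four measures from raw confusion-matrix counts.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    p = tp / (tp + fp)
    r = tp / (tp + fn)
    return {
        "acc": (tp + tn) / (tp + fp + tn + fn),
        "precision": p,
        "recall": r,
        "f1": f1_score(p, r),
    }


print(round(f1_score(95.0, 96.0), 1))  # 95.5 (DIKWP, Table 3)
print(round(f1_score(90.5, 85.0), 1))  # 87.7 (baseline, Table 3)
```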

Figure 7 illustrates the recall performance comparison between the DIKWP Edge–Cloud AC system and the Baseline Cloud AI system across four event types: fall, tachycardia, hypotension, and fever. DIKWP consistently outperforms or matches the baseline in all categories, demonstrating higher sensitivity, which we attribute to its enhanced contextual understanding.

Fall: Out of 10 fall events in the test set, the DIKWP system caught all 10 (100% recall), with 1 false alarm. It once mistook a sensor glitch for a fall, a known limitation of our simple fall detector. The baseline caught 8/10. It missed 2 cases in which the fall was not accompanied by an immediately elevated heart rate, so its rules ignored them.

Tachycardia: We simulated 20 tachycardia (arrhythmia) episodes. DIKWP caught 19 and missed 1 mild case. The baseline caught 17 and missed 3, and it also raised 4 false alarms for exercise-induced HR rises that were not arrhythmias, owing to its lack of context.

Hypotension: We simulated 5 cases. DIKWP caught all 5, and the baseline caught 4.

Fever: We simulated 5 cases (fever defined as a prolonged temperature >38 °C). Both systems eventually caught all of them. However, DIKWP raised earlier alerts because it noticed the trend (a gradual rise) and matched it with a slight HR increase, whereas the baseline waited until a static threshold was breached. Earlier detection is a subtle benefit that binary metrics do not capture, but qualitatively, clinicians would prefer an earlier warning.
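The earlier fever alert can be illustrated with a least-squares temperature slope combined with an HR-rise check. The thresholds (0.3 °C/h slope, 5 bpm HR rise) and the function names are hypothetical. Only the 38 °C static limit comes from the text. The slope needs at least two samples.

```python
def slope_per_hour(temps_c, minutes_per_sample=1.0):
    """Least-squares slope of a temperature series in degrees C per hour (needs >= 2 samples)."""
    n = len(temps_c)
    xs = [i * minutes_per_sample / 60.0 for i in range(n)]
    mean_x = sum(xs) / n
    mean_y = sum(temps_c) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, temps_c))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


def fever_status(temps_c, hr_rise_bpm, static_limit_c=38.0, min_slope=0.3, min_hr_rise=5.0):
    """'fever' past the static limit; 'early_warning' for a rising trend plus an HR increase."""
    if temps_c[-1] > static_limit_c:
        return "fever"
    if slope_per_hour(temps_c) >= min_slope and hr_rise_bpm >= min_hr_rise:
        return "early_warning"
    return "none"


rising = [37.0 + 0.5 * i / 60 for i in range(61)]  # +0.5 C/h over one hour, still below 38 C
print(fever_status(rising, hr_rise_bpm=8))  # early_warning
```

A static-threshold baseline would return nothing for this series until the temperature actually exceeds 38 °C.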

Overall, the DIKWP-driven system proved slightly more sensitive and specific by leveraging context and purpose. We deliberately tuned it to avoid false alarms, since false alarms are a major problem in healthcare and lead to alarm fatigue. The baseline had to trade sensitivity against specificity. In our configuration, it ended up less sensitive in order to avoid too many alerts, hence its lower recall.

6.2. Communication Overhead and Efficiency

One major advantage of our architecture is reduced data transmission through local processing. We logged network usage for each scenario. The DIKWP Edge–Cloud AC system transmits only summarized information and occasional raw data on demand, whereas the baseline was configured to stream all raw sensor data to the cloud (as is typical of many current IoT deployments).

We found that, on average, our system transmitted about 1.2 MB of data per day per patient, whereas the baseline transmitted roughly 15 MB per day per patient in our test. The test used one reading per minute for vitals, and higher-frequency signals such as ECG would widen this gap dramatically. This is a 92% reduction in data volume. The savings come from the following:

Only sending one message per minute with 4–5 key values (JSON ~100 bytes) instead of continuous streams.

Suppressing transmission during idle periods (if nothing is abnormal, detailed data from less critical sensors, such as the accelerometer, are not sent; only a summary is).

Utilizing semantic compression, e.g., instead of raw time series, sending “All vitals normal in last 5 min” as a single status message.
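The third saving, semantic compression, can be sketched as a function that replaces a window of normal readings with a single status message and attaches raw samples only when something is out of range. The normal ranges for HR and SpO2 follow Section 5.2. The 36.1 °C lower temperature bound, the function name, and the message fields are assumptions.

```python
import json

# Assumed normal ranges: HR and SpO2 follow Section 5.2; the temperature lower bound is our assumption.
NORMAL_RANGES = {"HR": (60, 100), "SpO2": (95, 100), "Temp": (36.1, 38.0)}


def summarize_window(window, minutes=5):
    """Compress a window of vitals into one status message; raw samples only if abnormal."""
    abnormal = sorted({
        name
        for reading in window
        for name, value in reading.items()
        if name in NORMAL_RANGES
        and not NORMAL_RANGES[name][0] <= value <= NORMAL_RANGES[name][1]
    })
    if not abnormal:
        return json.dumps({"status": f"All vitals normal in last {minutes} min"})
    return json.dumps({"status": "abnormal", "vitals": abnormal, "samples": window})


window = [{"HR": 72, "SpO2": 97, "Temp": 36.8} for _ in range(5)]
print(summarize_window(window))  # {"status": "All vitals normal in last 5 min"}
print(len(json.dumps(window)), "bytes raw vs", len(summarize_window(window)), "bytes summarized")
```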

The communication frequency also drops. The baseline sent ~60 messages/hour (about one per minute), whereas our system sent on average 6 messages/hour (mostly periodic summaries, plus occasional alerts). In one scenario with an emergency, our system spiked to 20 messages in that hour to communicate the alert and the subsequent data needed for the emergency. This is still well below the baseline's continuous usage.

In terms of network latency, reduced traffic means less congestion, so important messages (alerts) get through faster. We measured the round-trip time (RTT) between edge and cloud under both loads. Under the baseline's heavy load, the median RTT was ~200 ms, with spikes when bandwidth was saturated. Under our light load, the median RTT was ~50 ms. This matters whenever cloud acknowledgment or further analysis is needed.

Energy efficiency: Less communication means the device’s radio is used less, which typically saves battery. We estimated, based on common Bluetooth/Wi-Fi usage patterns, that our wearable would spend perhaps 10% of its time transmitting versus 80% for the baseline, potentially extending battery life significantly. An exact battery calculation is complex, so we present this as a conceptual improvement.

6.3. System Behavior Under Network Constraints (Robustness)

To test robustness, we simulated a network outage in which the edge could not reach the cloud for an extended period (2 h in one test). During that period, our edge agent continued to monitor and recorded two anomalies (one tachycardia event and one minor fall). It handled them locally, alerting the user directly via phone and logging them. Once connectivity was restored, the edge agent pushed the log of those events to the cloud. The system was designed to queue important messages and deliver them later if needed. Thus, no data or events were lost due to the disconnection. The only impact was that the cloud could not assist or take over tasks during that time, which was acceptable because the edge operates autonomously. In contrast, the baseline cloud system would have been essentially blind and frozen during a cloud disconnection, likely missing events entirely or ceasing to function.

We also tested with 50% packet loss introduced artificially. MQTT quality-of-service (QoS) settings were configured to handle retries, so messages eventually got through, albeit delayed. The edge agent also has a safeguard: if an alert receives no acknowledgment, the agent retries via an alternate path, such as an SMS or another fallback when the cloud is truly unreachable. In our test environment, this fallback was simulated by printing a warning. The takeaway is that the distributed edge–cloud design and local processing make the system robust to network issues. This is an important property for healthcare, where connectivity cannot be guaranteed (e.g., monitoring should not stop while an ambulance drives through a tunnel).
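The queue-and-retry behavior described in this subsection can be sketched as a transport-agnostic store-and-forward outbox. `send` and `fallback` are placeholder callables, not the MQTT client API used in the prototype. The three-attempt retry count is an assumption, and a real implementation would add backoff delays between attempts.

```python
from collections import deque


class Outbox:
    """Store-and-forward queue for edge-to-cloud messages (hypothetical design).

    send(msg) -> bool stands in for a transport call that returns True once the cloud
    acknowledges; fallback(msg) stands in for an alternate path such as SMS.
    """

    def __init__(self, send, fallback, max_retries=3):
        self.send = send
        self.fallback = fallback
        self.max_retries = max_retries
        self.pending = deque()

    def publish(self, msg, critical=False):
        self.pending.append({"msg": msg, "critical": critical, "fallback_sent": False})
        self.flush()

    def flush(self):
        """Deliver queued messages in order; stop at the first one that is not acknowledged."""
        while self.pending:
            head = self.pending[0]
            # any() stops at the first acknowledged attempt (no backoff delay in this sketch).
            if any(self.send(head["msg"]) for _ in range(self.max_retries)):
                self.pending.popleft()
                continue
            # Cloud unreachable: raise each critical alert locally once, but keep
            # everything queued so the cloud receives the full log after reconnection.
            for entry in self.pending:
                if entry["critical"] and not entry["fallback_sent"]:
                    self.fallback(entry["msg"])
                    entry["fallback_sent"] = True
            break


link = {"up": False}
delivered, local_alerts = [], []


def send(msg):
    if link["up"]:
        delivered.append(msg)
    return link["up"]


box = Outbox(send, local_alerts.append)
box.publish("tachycardia", critical=True)
box.publish("minor_fall", critical=True)
box.publish("hourly_summary")
link["up"] = True  # connectivity restored
box.flush()
print(local_alerts, delivered)
```

Critical alerts reach the user immediately through the fallback, while the ordered queue guarantees that the cloud eventually receives every message.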

6.4. Explainability and User Interpretability

One of the touted benefits of our approach is improved explainability. To evaluate this, we looked at the alerts generated and whether their attached explanations were understandable. Here are a couple of examples of actual alert outputs from the system:

Example 1: Alert: “Fall suspected (Patient #001)”, Explanation: “Edge Wisdom: fall risk alert triggered because fall_detected = True (from accelerometer) AND tachycardia = True (HR 142) which suggests possible syncope. Purpose: emergency_response.”

Example 2: Alert: “High Heart Rate Alert (Patient #002)”, Explanation: “Edge Wisdom: Tachycardia alert (HR 130) but delayed due to low sensitivity at night. Knowledge: persistent high HR for 10 min. Purpose: comfort (avoid waking patient). Action taken after 10 min of sustained HR.”

We presented a set of five such alerts (with explanations) to three individuals: two medical trainees and one layperson, for perspective. They were asked whether they could understand why each alert was triggered and whether anything was confusing. The feedback was positive. The medical trainees appreciated seeing the logic; some said it resembles how they would reason through the case, which builds trust. They only suggested simplifying some wording for lay users. The layperson could follow most of it, especially when it was phrased in near-plain English. Our system’s messages are somewhat technical, but a production app could make them more user-friendly.

The baseline system, if it explained anything at all, might just say “threshold exceeded”. By comparison, this is a substantial improvement. There is still room for improvement, e.g., linking alerts to advice (“what should I do now?”), but such features are application-layer add-ons.

6.5. Case Study: Adaptive Behavior Through Purpose

We include a mini case study to illustrate how the system adapts to different Purpose settings. Patient #003 had a moderate heart condition but disliked constant alarms. Initially, Purpose sensitivity was set to “low” to reduce false alarms. During a test, the patient had several borderline tachycardia episodes at night, none of which triggered an alert. This was by design, to let the patient rest unless an episode became very severe. At a later follow-up, the doctor judged this too risky and remotely changed the profile to “high sensitivity”. The next night, a similar episode occurred, and this time an alert was triggered promptly. The system internally shifted its threshold and delay parameters according to the Purpose. This demonstrates a policy change in action without redeploying code, a clear win for the software-defined approach. It also validates that such purposeful tuning is feasible and effective. Caution is nevertheless needed: if sensitivity is set too low, events can be missed. In our test, none of the unalerted episodes were serious.
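The profile switch in this case study can be sketched as a monitor whose threshold and delay come from a swappable Purpose profile. The profile values, class names, and the 6 min test episode are hypothetical. The point is only that changing the profile at runtime alters behavior without redeploying code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PurposeProfile:
    hr_threshold_bpm: float  # HR above this counts toward an episode
    sustain_min: int         # minutes the HR must stay above threshold before alerting


# Hypothetical parameter values; the case study does not report the actual ones.
PROFILES = {
    "low": PurposeProfile(hr_threshold_bpm=120, sustain_min=10),
    "high": PurposeProfile(hr_threshold_bpm=105, sustain_min=2),
}


class NightHRMonitor:
    def __init__(self, sensitivity="low"):
        self.set_sensitivity(sensitivity)

    def set_sensitivity(self, level):
        """Swap parameters at runtime, e.g. when a clinician pushes a new Purpose setting."""
        self.profile = PROFILES[level]

    def first_alert_minute(self, hr_per_min):
        """Index of the minute at which an alert fires, or None if no alert is raised."""
        run = 0
        for minute, hr in enumerate(hr_per_min):
            run = run + 1 if hr > self.profile.hr_threshold_bpm else 0
            if run >= self.profile.sustain_min:
                return minute
        return None


episode = [80] * 5 + [112] * 6 + [80] * 5  # borderline nocturnal tachycardia lasting 6 min
monitor = NightHRMonitor("low")
print(monitor.first_alert_minute(episode))  # None
monitor.set_sensitivity("high")              # remote profile change, no redeploy
print(monitor.first_alert_minute(episode))  # 6
```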

6.6. Expanded Statistical Evaluation

In addition to the standard accuracy metrics reported earlier (with the DIKWP-based system achieving high anomaly detection accuracy of ~95%), we evaluated several additional performance metrics to more comprehensively assess the system’s responsiveness, efficiency, and user experience. These additional metrics include the following:

Detection Latency per Event: The time delay between the occurrence of a health-related event (e.g., an arrhythmia onset) and its detection/alert by the system. This measures the system’s real-time responsiveness.

Average Energy Consumption per Inference: The energy required to run the AI inference (e.g., classification or anomaly detection) for each sensor input. This gauges the efficiency and battery impact of continuous monitoring on IoT devices.

User Satisfaction Rating for Alert Relevance: A qualitative metric obtained via user feedback (on a 5-point scale) indicating how relevant and helpful the alerts were, which reflects the perceived usefulness of the system’s notifications.

Table 4 summarizes the results for these additional metrics. The detection latency was low—on average about 2.1 s from event onset to system alert. This short delay indicates the framework operates in near real-time, which is crucial for timely interventions in health emergencies. The energy consumption per inference was measured at roughly 45 mJ (millijoules) on a typical smartphone, which is very economical. Even on a resource-constrained wearable, this level of energy use would translate to only a small fraction of a 300–500 mAh smartwatch battery per hour, suggesting the system can run continuously without draining devices prematurely. Finally, the user satisfaction with alert relevance was high: on average 4.3 out of 5. This score was obtained from a pilot study with test users who rated each alert; it indicates that most alerts were considered helpful and appropriate. The high satisfaction correlates with a low false-alarm rate in the system. Notably, minimizing false positives is vital—in clinical contexts up to 80–99% of monitor alarms can be false or non-actionable, leading to alarm fatigue. Our system’s purposeful DIKWP-driven reasoning (filtering out noise and insignificant events) likely contributed to fewer spurious alerts, thereby improving user trust and acceptance.
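How small the per-hour share is depends on how often inference runs. The arithmetic below converts battery capacity to joules, assuming a 3.85 V nominal Li-ion cell voltage (our assumption). With 45 mJ per inference and a 300 mAh battery, one inference per minute (matching the 1 min resolution of the synthetic dataset) uses about 0.065% of the battery per hour. One inference per second would use about 3.9%.

```python
def battery_share_per_hour(energy_per_inference_j=0.045, inferences_per_hour=60,
                           capacity_mah=300, nominal_voltage_v=3.85):
    """Fraction of a full battery consumed per hour by inference alone."""
    battery_j = capacity_mah / 1000 * nominal_voltage_v * 3600  # mAh -> Ah -> Wh -> J
    return energy_per_inference_j * inferences_per_hour / battery_j


for rate in (60, 3600):  # one inference per minute vs. one per second
    print(rate, f"{100 * battery_share_per_hour(inferences_per_hour=rate):.3f}%")
```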

The DIKWP-based system exhibits low-latency detection, energy-efficient operation, and high user-perceived usefulness, which are all critical for a dependable real-time health monitoring solution.

6.7. Simulated Data Examples and Event Traces

To better illustrate how the DIKWP-based artificial consciousness operates with physiological data, we prepared simulated sensor data representing various typical scenarios in a healthcare IoT environment. We focused on two key biosignals—heart rate (HR) and respiration rate—as they are commonly monitored vital signs. The simulated time-series data were labeled to indicate different health conditions or signal statuses: normal conditions, an arrhythmia event, and sensor noise artifacts.

Table 5 presents excerpts of these time-series examples with their associated condition labels. Note that Table 5 shows only representative segments of the simulated data for brevity, rather than the complete dataset. For clarity and completeness, a larger snapshot of the simulated sensor readings (covering an extended duration for each scenario) is provided in Appendix A, offering a more comprehensive view of the data collected in the simulated environment.

In the normal scenario, heart rate and respiration remain within stable ranges (e.g., HR in the low 70s bpm and respiration around 16 breaths/min), reflecting a healthy resting state with only minor natural fluctuations. In contrast, the arrhythmia scenario shows an episode of irregular heart activity. Before the event, readings are normal. When the arrhythmia occurs, the heart rate exhibits sharp variability (e.g., spiking to 110 bpm and then dropping to 65 bpm within seconds), and the respiration rate may increase due to physiological stress. These readings are labeled “Arrhythmia” for the duration of the abnormal pattern. The noise scenario simulates spurious sensor readings, for example, a momentary zero or an implausible isolated jump to 150 bpm from a resting baseline. Such readings are labeled “Noise artifact”, indicating that they likely result from sensor error or motion artifact rather than a true physiological change. The system’s reasoning module must distinguish such noisy data points from true events.
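Distinguishing noise artifacts from arrhythmia can be sketched with two window-level heuristics: physically out-of-range values (such as a momentary zero) and the number of large beat-to-beat jumps. A lone spike produces at most two large jumps (up and back down), whereas the arrhythmia pattern above produces several. The 25 bpm jump size, the 30–220 bpm plausibility range, and the function name are our assumptions. A genuine sustained step change would also yield a single jump and would be misread as noise by this simplified rule.

```python
def classify_window(hr, jump_bpm=25, valid_range=(30, 220)):
    """Label a short HR window as 'Normal', 'Arrhythmia', or 'Noise artifact' (heuristic sketch)."""
    lo, hi = valid_range
    if any(not lo <= v <= hi for v in hr):
        return "Noise artifact"  # e.g. a momentary 0 from lost sensor contact
    jumps = sum(1 for a, b in zip(hr, hr[1:]) if abs(b - a) > jump_bpm)
    if jumps >= 3:
        return "Arrhythmia"      # repeated large swings: sustained irregularity
    if jumps > 0:
        return "Noise artifact"  # one or two large jumps, e.g. a lone spike that returns to baseline
    return "Normal"


print(classify_window([72, 73, 71, 72, 74, 72]))   # Normal
print(classify_window([72, 110, 65, 105, 70, 73]))  # Arrhythmia
print(classify_window([72, 73, 150, 72, 71]))       # Noise artifact
```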

The DIKWP-based reasoning agent processes these incoming data in real time, transforming Data → Information → Knowledge → Wisdom → Purpose in its cognitive pipeline. To demonstrate the system’s behavior, Figure 8 provides an annotated event trace for an arrhythmia scenario using the above data. This trace shows a sequence of time-stamped sensor readings and the corresponding DIKWP-based reasoning outcomes at each step. At the start (time 0–2 s), the patient is in a normal state; the agent’s inference component recognizes these readings as normal, and the reasoning module does not trigger any alert (Outcome: “No alert—all vitals normal”). As the arrhythmia begins (time 3–4 s), the heart rate deviates sharply from baseline. The system’s inference step detects this anomaly (e.g., an irregular rhythm pattern), elevating the data to an information-level event. The reasoning component then interprets this information in context, recognizing it as a potential arrhythmia health risk (transforming information into actionable knowledge/wisdom). Consequently, at time 4 s, the system issues an alert (Outcome: “Alert: Arrhythmia detected”) via the communication module, fulfilling the Purpose aspect of DIKWP by taking action to notify the user/caregiver. The trace then shows the post-event period (time 5–6 s), in which vitals return to normal; the agent correspondingly resolves the alert (Outcome: “Recovery—alert cleared”). This detailed trace exemplifies how the DIKWP-based artificial consciousness framework not only detects events but also contextually reasons about them and takes appropriate actions (alerting or not alerting) in a manner similar to a conscious observer.

In the above trace, the DIKWP agent demonstrates situation awareness: it ignores momentary normal fluctuations and noise, but when a sustained irregularity indicative of arrhythmia occurs, it quickly responds by raising an alert, and later clears the alert once the condition resolves. This aligns with the system’s design goal of mimicking an attentive, purpose-driven artificial consciousness in healthcare monitoring.
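The Figure 8 trace can be mimicked with a short loop that maps each DIKWP stage to one line of logic over a 3 s window. This toy sketch rests on our own assumptions: one HR sample per second, a 25 bpm jump size, at least two large jumps marking an irregular rhythm, and hypothetical outcome strings. It is not the agent's actual implementation.

```python
def run_trace(readings, dev_bpm=25):
    """readings: HR values, one per second. Returns one outcome string per time step."""
    outcomes, alert_active = [], False
    for t in range(len(readings)):
        window = readings[max(0, t - 2): t + 1]                   # Data: last 3 s of samples
        jumps = [abs(b - a) for a, b in zip(window, window[1:])]  # Information: beat-to-beat changes
        irregular = sum(j > dev_bpm for j in jumps) >= 2          # Knowledge: irregular rhythm pattern
        # Wisdom/Purpose: decide whether to notify the user or caregiver.
        if irregular and not alert_active:
            alert_active = True
            outcomes.append("Alert: Arrhythmia detected")
        elif alert_active and not any(j > dev_bpm for j in jumps):
            alert_active = False
            outcomes.append("Recovery - alert cleared")
        elif alert_active:
            outcomes.append("Alert active")
        else:
            outcomes.append("No alert")
    return outcomes


for t, outcome in enumerate(run_trace([72, 73, 72, 110, 65, 72, 73])):
    print(f"t={t} s: {outcome}")
```

With this input, the alert fires at t = 4 s, once two large swings fall inside the window, and clears at t = 6 s. This mirrors the qualitative shape of the trace in Figure 8.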

6.8. Comparative Scenario Analysis

We further evaluated the system’s performance under different operational scenarios and configuration settings to understand how context and parameter tuning affect outcomes. In particular, we compare (a) daytime vs. nighttime monitoring, (b) high-sensitivity vs. low-sensitivity configurations, and (c) different alert threshold levels. The results are presented in tables with side-by-side comparisons for each condition.

1. Daytime vs. Nighttime Monitoring

These scenarios reflect how the system performs in an active daytime environment (with more movement and potential noise) versus a relatively quiet night environment. We simulated “daytime” conditions by introducing more motion artifacts and variability into the sensor data (mimicking a user’s daily activities), whereas “nighttime” data had steadier vitals and minimal motion noise (mimicking a resting sleeper). As shown in Table 6, the system maintained a high event detection rate in both cases (~95% recall for a set of test health events both day and night). However, the false alarm rate dropped from 5% in daytime to 2% at night, presumably because the calmer nighttime data had fewer spurious fluctuations that could be misinterpreted as events. Consequently, the precision of alerts at night was higher. The detection latency was slightly better at night (average ~1.8 s) than in the day (~2.0 s), since less noise meant the system could confirm anomalies slightly faster with fewer redundant checks. We also observed that the system’s average power consumption was marginally lower at night, as the DIKWP agent spent less effort handling noise or repeated triggers (e.g., the processing and communication overhead from false positives was lower). From a user perspective, nighttime alerts were infrequent but highly accurate, which is important because any false alarm during sleep is particularly disruptive. Overall, this comparison suggests the DIKWP-based monitor is robust across daily conditions, with a tendency toward higher precision in low-noise settings.

During nighttime, the system yields very few false alarms, aligning with the need to avoid alarm fatigue during sleep. In daytime, although slightly more false positives occur due to motion-induced noise, the performance remains within acceptable bounds.

2. High vs. Low Sensitivity Configurations

We evaluated two configuration extremes to explore the trade-off between sensitivity and specificity. In a high-sensitivity configuration, the system is tuned to detect even mild or early signs of anomalies (e.g., using a lower threshold for heart rate deviation or a more permissive anomaly detector). This setting prioritizes catching all possible events at the risk of more false positives. In the low-sensitivity configuration, the criteria for triggering an alert are stricter (higher thresholds, requiring more significant deviation or longer anomaly duration), which reduces false alarms but may miss subtle events. We applied both configurations to the same dataset of simulated health events.

Table 7 summarizes the outcomes. As expected, the high-sensitivity mode achieved a very high detection rate (recall ~99%), catching virtually every anomalous event in the data. However, this came at the cost of a false alarm rate of about 10%, higher than in our default configuration, meaning some normal fluctuations were incorrectly flagged. The average detection latency in high-sensitivity mode was slightly lower (~1.5 s), since the system would alarm on the first sign of anomaly without waiting for further confirmation. The low-sensitivity mode showed the opposite pattern: the detection rate dropped to 90% (a few minor events went undetected), but the false alarm rate improved to only 1%, indicating very few spurious alerts. Latency in low-sensitivity mode was a bit higher (~3.0 s) because the system waited longer (for more evidence of a true issue) before alerting. In terms of resource use, the high-sensitivity setting performed more frequent analyses and generated more alerts, leading to a modest increase in energy consumption (we estimate ~10% higher average CPU usage and power draw than low-sensitivity mode, due to the extra processing and communication for those additional alerts). From a user standpoint, these differences are significant: the high-sensitivity configuration might overwhelm users with alarms (some of which are false), while the low-sensitivity configuration might be too quiet, potentially missing early warnings. In practice, a balanced sensitivity setting is preferable, one that achieves a middle ground (as seen in our default configuration results earlier, ~95% recall with ~5% false alarms). This experiment highlights how tuning the DIKWP agent’s parameters can shift its consciousness from a “vigilant” mode to a “conservative” mode, and the impact of each on performance.

This comparison illustrates the classic sensitivity-specificity trade-off. An overly sensitive system may lead to alarm fatigue, whereas an overly insensitive system risks missing critical early warnings. Tuning is therefore essential for optimal performance.

3. Varying Alert Thresholds

In our DIKWP framework, one configurable parameter is the alert threshold—e.g., the threshold on the anomaly score or specific vital sign level that triggers an alert. We conducted experiments with three different threshold settings (labeled low, medium, and high threshold) to further quantify this trade-off and identify an optimal setting. A low threshold means the system triggers alerts on small deviations (similar to the high sensitivity mode above), a high threshold means only large deviations trigger an alert (similar to low sensitivity above), and medium is an intermediate value.

Table 8 presents the performance metrics under these three threshold levels. The trends are consistent with the earlier sensitivity analysis: at the low threshold, the system detects nearly all anomalies (100% of events in our test were caught) but the false alert count is high (precision drops, with ~15% of alerts being false alarms). Users in this scenario gave a somewhat lower satisfaction rating (~3.5/5) due to frequent unnecessary alerts. The high threshold setting, conversely, yielded almost no false alarms (only the most significant events triggered alerts, false alarm rate ~0%) and users reported fewer interruptions (satisfaction ~4.2/5 since they were rarely bothered by alerts); however, the detection rate fell to ~85%, meaning some milder events did not trigger any alert at all. The medium threshold provided a balanced outcome—a high detection rate (~95%) with a low false alarm rate (~2%), and the highest average user satisfaction (~4.5/5). This suggests that the medium threshold (our default in other experiments) is close to the optimal point on the precision–recall curve for this application. From a latency perspective, lower thresholds produced faster alerts (often immediate at the first sign of anomaly), whereas higher thresholds sometimes introduced slight delays while waiting for the signal to breach the higher limit. These results reinforce the importance of choosing an appropriate alert threshold: it directly influences the wisdom of the DIKWP agent’s decisions, i.e., ensuring that alerts are neither too frequent (crying wolf) nor too scarce. In a real deployment, this threshold could be configurable per patient or context, or even dynamically adjusted by the system to maintain an acceptable false alert rate.

Appropriate threshold selection is crucial. A threshold that is too low causes many false alarms (eroding user trust), while one that is too high can miss important warnings. The medium threshold here achieves a near-optimal balance, as reflected in the highest user satisfaction score.
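The dynamic adjustment suggested at the end of the threshold analysis could take the form of a bounded feedback rule. This is a hypothetical sketch, not part of the evaluated prototype: it assumes that users or clinicians mark alerts as unnecessary, and the target rate, step size, and bounds are illustrative values. Clamping the threshold to a fixed range is a safeguard against the rule drifting so high that milder events stop triggering alerts.

```python
def adjust_threshold(threshold, false_alerts, total_alerts,
                     target=0.05, step=0.02, lower=0.30, upper=0.90):
    """Nudge the alert threshold toward a target false-alert fraction.

    false_alerts: alerts in the last review window that were marked unnecessary.
    All default values are illustrative assumptions.
    """
    if total_alerts == 0:
        return threshold  # no feedback to act on: keep the current setting
    observed = false_alerts / total_alerts
    if observed > target:
        threshold += step  # too many false alarms: become stricter
    elif observed < target / 2:
        threshold -= step  # well under target: recover some sensitivity
    # Hard bounds keep the adaptive rule predictable.
    return min(upper, max(lower, threshold))


t = 0.50
for false_alerts, total in [(3, 10), (2, 10), (0, 12)]:
    t = adjust_threshold(t, false_alerts, total)
    print(round(t, 2))
```

The dead band between half the target and the target avoids oscillating on every review window; the choice of band width is itself a tuning assumption.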

4. System Architecture Resource Analysis

To understand the feasibility and scalability of the DIKWP-based artificial consciousness in practical IoT deployments, we analyzed the resource usage of each major component of the system’s architecture. Recall that our DIKWP agent is composed of four main functional components: data preprocessing, inference, reasoning, and communication. These roughly correspond to the DIKWP pipeline stages—data processing (Data → Information), machine inference for pattern recognition (Information → Knowledge), higher-level reasoning for decision-making (Knowledge → Wisdom), and the communication or actuation of decisions (applying wisdom toward a purpose, Wisdom → Purpose). We assessed each component’s requirements in terms of CPU utilization, memory footprint, and power consumption on three classes of hardware: a smartwatch (wearable device), a smartphone, and an edge hub (a local gateway or mini-server).

Figure 9 summarizes the estimated resource usage for each component across these platforms. These estimates are based on profiling our prototype implementation and known specifications of typical devices (e.g., smartwatch with ~1 GHz ARM CPU, 512 MB–1 GB RAM, 300 mAh battery; smartphone with octa-core CPU, 4 GB RAM, ~3000 mAh battery; edge hub with quad-core CPU, 8+ GB RAM, wall power).

Several important observations can be made from Figure 9. First, the data preprocessing component is lightweight on all devices. On the smartwatch, basic filtering and feature extraction use roughly 5–10% of the CPU (on one core) and under 1 MB of memory, consuming only a few milliwatts of power, which is efficient enough to run continuously on a wearable. The smartphone and edge hub handle preprocessing with negligible load (<2% CPU).

The inference component (which may involve running a machine learning model on sensor data) is more demanding. On a resource-constrained smartwatch, running a complex inference (e.g., a neural network) could use ~20% CPU and a few MB of memory per inference, which in continuous operation would draw on the order of tens of milliwatts. In our design, heavy inference can be offloaded to the smartphone or hub. On a smartphone, the same model might use only ~5–10% CPU (thanks to more powerful processors and possibly hardware accelerators) but require more memory (e.g., 20–50 MB to store the model). The phone's power cost for inference is higher in absolute terms (~100 mW) but acceptable given its larger battery. The edge hub, with its greater computing power, would see minimal CPU impact (<2%) for inference and can easily accommodate the model in memory; power is not a limiting factor for the plugged-in hub.

The reasoning component (which implements the higher-level conscious reasoning, knowledge integration, and decision logic) tends to be computationally heavy because of complex algorithms or knowledge base queries. Running full reasoning on a smartwatch might consume at least 30% of the CPU and several MB of memory, which is impractical for sustained use and would drain a watch battery quickly. In our architecture, the intensive reasoning tasks are therefore assigned to the smartphone or edge hub. On the smartphone, reasoning algorithms use roughly 10% of the CPU and around 5–10 MB of RAM during operation, consuming on the order of 30–50 mW, a moderate load. The edge hub can execute the reasoning with plenty of headroom (e.g., 15% CPU on a small hub device, using ~100 MB RAM if it maintains a substantial knowledge base or context history).

Finally, the communication component (which handles transmitting data and alerts between devices or to the user) has modest resource requirements. On the smartwatch, communication (typically via Bluetooth to the phone) uses ~5–10% CPU intermittently and a tiny memory buffer (<0.5 MB), but wireless transmission can be a significant energy draw (~15 mW for Bluetooth during data transfer). On the smartphone, communication with the edge hub or directly with cloud services might use a similarly small CPU load and memory, with power consumption around 20 mW when using Wi-Fi or cellular for alerts. The edge hub's communication (e.g., sending consolidated data to a cloud server) would have negligible impact, typically running over Ethernet or Wi-Fi on wall power, where energy is not constrained.
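The tiered placement described here can be expressed as a greedy assignment: each component runs on the device closest to the sensor that still has CPU budget for it. The sketch below is a hypothetical illustration, not the prototype's scheduler. The CPU figures are rough single values taken from the ranges reported above (upper ends where a range is given, "<2%" rounded up to 2); the smartphone and edge hub communication loads, and the per-device CPU budgets, are assumptions. Power and memory are ignored for brevity.

```python
# Rough per-component CPU load (% of one core) taken from the figures in this
# section; "<2%" entries are rounded up to 2, and the phone/hub communication
# loads are assumptions.
CPU_LOAD = {
    "watch": {"preprocessing": 10, "inference": 20, "reasoning": 30, "communication": 10},
    "phone": {"preprocessing": 2, "inference": 10, "reasoning": 10, "communication": 10},
    "hub": {"preprocessing": 2, "inference": 2, "reasoning": 15, "communication": 2},
}
TIERS = ["watch", "phone", "hub"]  # preference order: closest to the sensor first
PIPELINE = ["preprocessing", "inference", "reasoning", "communication"]


def place(budgets):
    """Greedily assign each pipeline component to the first tier with CPU budget left."""
    used = {tier: 0 for tier in TIERS}
    placement = {}
    for component in PIPELINE:
        for tier in TIERS:
            load = CPU_LOAD[tier][component]
            if used[tier] + load <= budgets[tier]:
                used[tier] += load
                placement[component] = tier
                break
        else:  # no tier could host this component
            raise ValueError(f"no tier can host {component} within budget")
    return placement, used


# Hypothetical CPU budgets (% of one core) reserved for the agent on each device.
placement, used = place({"watch": 20, "phone": 25, "hub": 100})
print(placement)
```

With these budgets the watch keeps preprocessing and Bluetooth communication while inference and reasoning land on the phone; shrinking the phone's budget to 15% pushes reasoning to the hub, matching the fallback role described for the edge hub.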

Overall, the resource analysis indicates that distributing the DIKWP agent across devices is advantageous. The smartwatch can handle the Data (D) stage and minor inference tasks, while offloading the heavier Knowledge and Wisdom processing to a smartphone or hub greatly extends battery life and improves responsiveness. The smartphone serves as a middle tier, comfortably executing machine learning inference and some reasoning, while the edge hub (or a cloud server) can handle the most computationally intensive reasoning and long-term knowledge storage without battery concerns. This tiered deployment keeps the system within the hardware limits of each device. Feasibility on the smartwatch is especially critical: our analysis shows that by limiting on-device processing to lightweight tasks, the wearable's small battery (often only a few hundred mAh) can support continuous monitoring for a day or more. Meanwhile, the headroom of the edge hub means that the reasoning module can grow in complexity (for example, by integrating more data sources or running deeper cognitive models) without affecting the wearable's performance. Thus, the DIKWP-based artificial consciousness framework can be practically realized in smart healthcare IoT environments through a collaborative device architecture that balances the load according to each device's capabilities.
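The battery argument can be checked with back-of-the-envelope arithmetic: runtime in hours is the battery energy (capacity in mAh times nominal voltage, giving mWh) divided by the average power draw in mW. The sketch below uses the 300 mAh capacity and the power figures quoted in this section, but the 3.8 V nominal cell voltage, the 5 mW preprocessing draw (standing in for "a few milliwatts"), the 20% Bluetooth duty cycle, and the 30 mW on-watch inference draw (standing in for "tens of milliwatts") are our assumptions. It models only the monitoring workload; the watch's own baseline consumption (display, operating system, idle radio) is not included and would shorten both figures.

```python
def runtime_hours(capacity_mah, voltage_v, loads_mw):
    """Battery runtime (h) = energy (mWh) / total average power (mW)."""
    energy_mwh = capacity_mah * voltage_v
    return energy_mwh / sum(loads_mw.values())


CAPACITY_MAH = 300  # smartwatch battery from the device specifications above
VOLTAGE_V = 3.8     # assumed nominal Li-ion cell voltage
BT_PEAK_MW = 15     # Bluetooth draw during transfer (from the text)
BT_DUTY = 0.20      # assumed fraction of time the radio is transmitting

offloaded = {"preprocessing": 5, "bluetooth": BT_PEAK_MW * BT_DUTY}
on_watch_inference = dict(offloaded, inference=30)  # assumed continuous on-device model

print(f"offloaded: {runtime_hours(CAPACITY_MAH, VOLTAGE_V, offloaded):.1f} h")
print(f"on-watch inference: {runtime_hours(CAPACITY_MAH, VOLTAGE_V, on_watch_inference):.1f} h")
```

Under these assumptions, moving inference off the watch reduces the monitoring workload's average draw from 38 mW to 8 mW, an almost five-fold cut in the energy the wearable spends on monitoring.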

7. Discussion

Integrating the DIKWP-based artificial consciousness model into IoT-based smart healthcare yielded several clear benefits.

Decision Quality: Contextual multi-layer reasoning improved detection accuracy (higher precision and recall) compared with simple sensor thresholds, producing fewer false alarms (preserving user trust) and fewer missed events (enhancing patient safety).

Edge Autonomy: Running the AI on edge devices provides resilience. The system continues functioning even if cloud connectivity is lost, ensuring continuous patient monitoring during network outages or emergencies.

Privacy and Explainability: Local data processing preserves privacy by minimizing the transmission of sensitive personal information. Moreover, each alert is explainable: it is accompanied by the specific rules or patterns that triggered it, which builds clinician trust and facilitates transparent decision support.

Flexibility: The knowledge-driven framework is modular and extensible. New sensors or medical conditions can be supported by adding or updating rules without retraining a large model. The system is also software-defined, allowing remote updates to its behavior; for example, a central provider could quickly and uniformly adjust all devices' parameters (e.g., increase sensitivity to certain symptoms during an outbreak).

Addressing Legal Dimensions: The design proactively incorporates critical legal considerations. Clear consent mechanisms within the Purpose (P) layer enable dynamic authorization (opt-in/opt-out), supporting the principle that patient-generated data are owned by the individual, while derived knowledge (e.g., anonymized trends) may be licensable. Explainable DIKWP traces provide auditable decision logs, supporting clear accountability (e.g., attributing responsibility for a missed alert to a specific configuration setting).
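The explainability and consent properties discussed here can be illustrated with a small rule evaluator that returns, alongside each alert, the rules that fired and a human-readable rationale, and that consults a Purpose-layer consent flag before an alert is shared. This is a hypothetical sketch: the rule names, the 120 bpm and 90% SpO2 cut-offs, the context fields, and the `share_alerts` consent key are illustrative assumptions, not clinical guidance or the prototype's rule base.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]
    rationale: str  # human-readable explanation attached to any alert this rule raises


@dataclass
class Alert:
    trace: list = field(default_factory=list)  # (rule name, rationale) pairs: the audit log
    share_with_clinician: bool = False


# Illustrative rules only; real thresholds would come from clinicians.
RULES = [
    Rule("tachycardia_at_rest",
         lambda c: c["heart_rate"] > 120 and c["activity"] == "rest",
         "heart rate above 120 bpm while the patient is at rest"),
    Rule("low_spo2",
         lambda c: c["spo2"] < 90,
         "blood-oxygen saturation below 90%"),
]


def evaluate(context: dict, consent: dict) -> Optional[Alert]:
    """Return an explainable alert, or None if no rule fires."""
    trace = [(rule.name, rule.rationale) for rule in RULES if rule.condition(context)]
    if not trace:
        return None
    # Purpose-layer consent decides whether the alert may leave the device.
    return Alert(trace=trace, share_with_clinician=consent.get("share_alerts", False))


alert = evaluate({"heart_rate": 130, "activity": "rest", "spo2": 95}, {"share_alerts": True})
for name, why in alert.trace:
    print(f"{name}: {why}")
```

Because the trace is a plain list of rule names and rationales, it can be stored as the auditable decision log mentioned above; absent explicit consent, the alert defaults to staying on the device.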

This work has several limitations. (1) The prototype was tested only with simulated data in controlled settings, so real-world validation on actual patient data is needed to ensure robustness. (2) We have not yet demonstrated scalability to large deployments—managing many edge agents and aggregating their insights may pose challenges (though the design is largely parallelizable). (3) Device-level security was not fully addressed: a compromised IoT device could feed false data or tamper with the knowledge base, so stronger authentication and tamper-proofing are necessary. (4) More complex reasoning or AI models could strain the limited compute and battery resources of edge devices, potentially impacting real-time responsiveness, so optimization or specialized hardware might be required for future versions.
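Limitation (3) calls for stronger authentication. As one hedged illustration of a partial mitigation, each message from a device can carry a keyed hash (HMAC-SHA256, via Python's standard `hmac` module) and a sequence number, so that the receiving tier rejects readings that were altered in transit or replayed. This sketch assumes a per-device secret key provisioned out of band, and the message layout and helper names are hypothetical. It does not address a fully compromised device, which holds the key and could still sign false data; that case still needs the tamper-proofing noted above.

```python
import hashlib
import hmac
import json


def _canonical(reading: dict) -> bytes:
    # Stable serialization so sender and receiver hash identical bytes.
    return json.dumps(reading, sort_keys=True, separators=(",", ":")).encode()


def sign_reading(key: bytes, reading: dict) -> dict:
    tag = hmac.new(key, _canonical(reading), hashlib.sha256).hexdigest()
    return {"reading": reading, "tag": tag}


class Verifier:
    """Receiver-side check of integrity (HMAC) and freshness (sequence number)."""

    def __init__(self, key: bytes):
        self.key = key
        self.last_seq = -1

    def accept(self, message: dict) -> bool:
        reading = message["reading"]
        expected = hmac.new(self.key, _canonical(reading), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, message["tag"]):
            return False  # altered in transit or signed with a different key
        if reading["seq"] <= self.last_seq:
            return False  # replayed or stale message
        self.last_seq = reading["seq"]
        return True


key = b"hypothetical-per-device-key"
verifier = Verifier(key)
msg = sign_reading(key, {"seq": 1, "heart_rate": 72})
print(verifier.accept(msg), verifier.accept(msg))
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many characters of a forged tag were correct.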

In the future, key directions include integrating electronic health record data to provide richer context for decisions, and incorporating patient-reported symptoms via a mobile interface to close the feedback loop. We also plan to enable adaptive learning for patient-specific tuning of rules (with careful safeguards to maintain predictability). On the implementation side, prototyping the system on specialized low-power hardware (an “AI processing unit” for consciousness logic) will test viability for wearable devices. Finally, we intend to validate the system with real clinical data—first retrospectively (to see if it correctly flags known events) and then in pilot deployments—to rigorously assess its impact on patient outcomes and healthcare workflows.
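The patient-specific tuning envisioned here, with safeguards for predictability, could take the form of a slowly adapting personal baseline. In this hypothetical sketch (not part of the evaluated system), a resting heart-rate baseline is updated with an exponentially weighted moving average using a small learning rate, readings that would themselves trigger an alert are excluded from learning, and the baseline is clamped to clinician-set bounds. All parameter values are illustrative assumptions.

```python
class PatientBaseline:
    """Hypothetical per-patient resting heart-rate baseline with bounded adaptation."""

    def __init__(self, initial_bpm, alpha=0.05, floor_bpm=50.0, ceiling_bpm=100.0,
                 margin_bpm=30.0):
        self.value = initial_bpm
        self.alpha = alpha            # small learning rate keeps behavior predictable
        self.floor_bpm = floor_bpm    # clinician-set bounds the baseline may not leave
        self.ceiling_bpm = ceiling_bpm
        self.margin_bpm = margin_bpm  # alert when a reading exceeds baseline + margin

    def alert_threshold(self):
        return self.value + self.margin_bpm

    def update(self, resting_bpm):
        """Fold a resting reading into the baseline unless it is itself alarming."""
        if resting_bpm >= self.alert_threshold():
            return self.value  # never learn from readings that would raise an alert
        blended = (1 - self.alpha) * self.value + self.alpha * resting_bpm
        self.value = min(self.ceiling_bpm, max(self.floor_bpm, blended))
        return self.value


baseline = PatientBaseline(initial_bpm=70)
for bpm in [74, 76, 75, 130, 73]:
    baseline.update(bpm)
print(round(baseline.value, 1), round(baseline.alert_threshold(), 1))
```

Excluding alarming readings from the update prevents the baseline from gradually "learning" a deterioration as normal, which is one concrete form the safeguards mentioned above could take.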

8. Conclusions

In conclusion, integrating the DIKWP artificial consciousness model into software-defined IoT-based smart healthcare can significantly advance adaptive, patient-centric care. Our study mapped DIKWP’s structured cognitive hierarchy (from Data to Purpose) onto the layers of an IoT system and introduced a novel semantic control layer for high-level orchestration and dynamic system reconfiguration. This architecture keeps the system’s intelligence purpose-driven and easy to update without overhauling the underlying infrastructure.
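To make the reconfiguration role of the semantic control layer more concrete, the sketch below validates a centrally issued update against allowed parameter ranges and produces a new, versioned agent configuration, leaving the previous one untouched so it can be restored. The parameter names, ranges, and purpose labels are hypothetical and only illustrate the idea; they are not the interface of our prototype.

```python
from dataclasses import dataclass

# Hypothetical parameters the control layer may change, with allowed ranges.
ALLOWED_RANGES = {"alert_threshold": (0.30, 0.90), "sampling_hz": (0.2, 50.0)}


@dataclass(frozen=True)
class AgentConfig:
    version: int
    purpose: str  # high-level goal steering the DIKWP pipeline
    params: dict


def apply_update(config: AgentConfig, update: dict) -> AgentConfig:
    """Validate an update as a whole, then return a new versioned config."""
    new_params = dict(config.params)  # copy so the previous config stays intact
    for key, value in update.get("params", {}).items():
        if key not in ALLOWED_RANGES:
            raise KeyError(f"unknown parameter: {key}")
        low, high = ALLOWED_RANGES[key]
        if not low <= value <= high:
            raise ValueError(f"{key}={value} outside [{low}, {high}]")
        new_params[key] = value
    return AgentConfig(config.version + 1, update.get("purpose", config.purpose), new_params)


current = AgentConfig(1, "routine_monitoring", {"alert_threshold": 0.58, "sampling_hz": 1.0})
# e.g., raise sensitivity to certain symptoms during an outbreak
outbreak = apply_update(current, {"purpose": "outbreak_watch", "params": {"alert_threshold": 0.45}})
print(outbreak)
```

Rejecting an update outright when any field is out of range, rather than applying the valid fields, keeps every device on a configuration that was reviewed as a whole.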

Prototype implementations demonstrated notable improvements in performance, privacy, and reliability. By processing data on edge devices and sharing only insights, our system drastically reduced network communication overhead—enhancing scalability, lowering costs, and protecting patient data privacy. Collaborative edge–cloud learning, combining personalized edge models with a global model, achieved high accuracy in detecting health anomalies. Incorporating semantic context further minimized false alarms and heightened sensitivity to critical events, outperforming a cloud-only baseline. A standout feature of our approach is explainability: each alert or recommendation is accompanied by a human-understandable rationale drawn from the DIKWP reasoning trail, fostering greater trust and adoption among clinicians and patients. The system also proved robust against connectivity issues, maintaining reliable operation even during network disruptions (vital for scenarios like rural telemedicine or disaster response).

These results suggest that embedding a cognitive architecture like DIKWP into IoT healthcare systems is a practical path toward next-generation intelligent healthcare. Such systems effectively exhibit an “artificial consciousness” of each patient’s state and goals, enabling thoughtful responses rather than mere threshold-based reactions. Looking ahead, we envision networks of DIKWP-driven agents seamlessly collaborating in clinics and homes to deliver proactive, context-aware care (for example, intervening early to prevent complications in a recovering patient). Realizing this vision at scale will require advances in smart sensor hardware, distributed AI software, user interfaces that convey explainable insights, and supportive policies. However, our current evaluation relies on simulated data, which limits the conclusions that can be drawn; future work should prioritize validating the DIKWP system on real-world datasets while carefully addressing ethical considerations and strictly adhering to data privacy standards. Our work provides an initial step in this direction, and we hope it spurs further research and development toward intelligent, transparent healthcare systems that improve patient outcomes, reduce burdens on healthcare providers, and empower patients in managing their health.