2. The State of the Art

The emergence of collaborative robotics has transformed the requirements for robot control interfaces. In traditional industrial environments, robots are isolated from humans and operated by experts, typically through offline programming or teach pendants. Cobots, by contrast, work alongside human workers and share tasks such as assembly assistance, tool delivery, or material handling [5]. This close interaction calls for control systems that are fast, adaptable, and safe, enabling an operator to adjust robot motions on the fly. Research shows that ease of use is a key factor in the adoption of collaborative robots: the controls should simplify programming and reduce the mental effort required of human operators [1,5]. An evaluation of human factors in collaborative robotics suggests that intuitive interaction can improve productivity and safety in hybrid work environments [5].

Traditional control methods, such as teach pendants or keyboard/mouse teleoperation, are often cumbersome for novices and too slow for dynamic collaboration. Programming even a basic motion with a teach pendant may require navigating menus and coordinate frames, which disrupts the workflow. In a human–robot partnership, a more intuitive way of expressing intent is preferable, much as a person might gesture to, or physically guide, a colleague. This has motivated the investigation of interfaces that leverage natural human communication channels: gestures, speech, and kinesthetic guidance. Gesture-based control has attracted considerable interest because it is non-intrusive and intuitive, drawing on skills that humans use naturally in everyday communication [6]. The ISO/TS 15066 technical specification for collaborative robot safety allows hand-guiding of robots, indicating that direct physical human–robot interaction is permissible in collaborative environments [1].

Industry 4.0/5.0 frameworks emphasize the use of modern digital technologies (sensors, the IoT, and AI) to develop more intelligent and responsive automation [2]. Wearable devices fit this framework by acting as mobile sensor platforms that detect human movement or physiological signals and rapidly relay that information to intelligent robotic HMIs. Wearables keep individuals “in the loop,” in line with the human-centric automation goals of Industry 5.0 [4]. An article on Industry 5.0 highlights that the future of automation will entail synergistic collaboration between humans and robots, for which interfaces designed for natural interaction are essential. Research on wearable and gesture-based control is therefore not conducted in isolation; it is part of a broader effort to humanize industrial robotics and to make instructing a robot as easy as instructing a human colleague.

Wearable human–machine interfaces provide an effective way to operate robots by detecting the operator’s physical movements or gestures with body-mounted sensors. A range of wearable systems has been proposed, from instrumented garments equipped with several sensors to basic consumer devices such as smartwatches. The primary objective is to measure human movement in real time and translate it into robot control commands [7]. Unlike vision-based tracking, body-worn sensors are unaffected by line-of-sight limitations and lighting conditions, and they often provide high-frequency, low-latency data [7]. Wearables are therefore particularly appealing for real-time teleoperation or collaboration in dynamic industrial environments.

A representative example is the study by Škulj et al., who developed a wearable IMU system for flexible robot teleoperation [6]. Their system uses multiple inertial measurement units (IMUs) attached to the operator’s chest and arms, together with hand-mounted push buttons. The IMUs capture the orientation of the operator’s upper-body segments, and the operator’s arm movements are converted into Cartesian end-effector motions of a collaborative robot [6]. Pressing the hand-held push button enables or disables robot motion, which serves as a safety measure. Škulj et al. report that this wearable IMU interface allows intuitive control of the robot: the operator simply moves as though steering the robot with an invisible handle, and the robot mirrors the operator’s posture [6]. This approach substantially lowers the skill required; an operator can “teach” the robot a trajectory through natural arm movements rather than by programming waypoints. The wearable device was unobtrusive and did not require precise sensor placement, making it suitable for use on the shop floor [6].
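The orientation-to-motion mapping described above can be sketched as a small forward-kinematic chain: each IMU supplies a segment orientation, fixed segment lengths place the elbow and wrist, and the wrist displacement is forwarded to the robot only while the enable button is held, acting as a clutch. This is a minimal sketch under our own assumptions, not Škulj et al.’s implementation: IMU outputs are taken as world-frame rotation matrices, and the segment lengths (0.30 m and 0.25 m), the 1:1 scale, and the helper names rot_z, wrist_position, and ArmTeleop are hypothetical.

```python
import numpy as np


def rot_z(angle_rad):
    """Rotation matrix about z (used here to stand in for IMU orientations)."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Hypothetical segment vectors in metres; each segment points along its local x axis.
UPPER_ARM = np.array([0.30, 0.0, 0.0])
FOREARM = np.array([0.25, 0.0, 0.0])


def wrist_position(R_upper, R_fore, shoulder=None):
    """Chain the world-frame segment orientations to locate the wrist."""
    origin = np.zeros(3) if shoulder is None else np.asarray(shoulder, dtype=float)
    elbow = origin + R_upper @ UPPER_ARM
    return elbow + R_fore @ FOREARM


class ArmTeleop:
    """Forwards wrist displacements as end-effector targets only while enabled."""

    def __init__(self, robot_start, scale=1.0):
        self.robot_target = np.asarray(robot_start, dtype=float)
        self.scale = scale
        self.last_wrist = None

    def update(self, R_upper, R_fore, enable_pressed):
        wrist = wrist_position(R_upper, R_fore)
        if enable_pressed and self.last_wrist is not None:
            # Relative (clutched) mapping: only motion since the last sample counts.
            self.robot_target = self.robot_target + self.scale * (wrist - self.last_wrist)
        # The reference always follows the arm, so the robot does not jump
        # when motion is re-enabled after the button was released.
        self.last_wrist = wrist
        return self.robot_target
```

Because the mapping is relative, releasing the button lets the operator reposition their arm without moving the robot, much like lifting a computer mouse off the desk.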

Researchers have also combined diverse wearable sensor types to improve control precision. Kurpath et al. [8] present a lightweight, exoskeleton-like controller that uses three IMUs attached to the arm and ten potentiometers on the fingers, capturing up to 17 degrees of freedom of human arm–hand movement [8]. Their method computes joint angles from IMU quaternions using a technique called the Relative Orientation Vector and maps human arm and finger movements onto a robotic arm–hand model [8]. The finger sensors allow a robotic hand (gripper) to be controlled alongside the arm, enabling more intricate telemanipulation such as grasping. The authors demonstrated accurate real-time mapping of human movements to the robot with only minimal calibration [8]. This highlights the trend toward sensor fusion in wearables, in which inertial, angular, or muscular sensors are integrated to capture the operator’s intent comprehensively.
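The basic step of deriving a joint angle from two segment-mounted IMUs can be illustrated by expressing the child segment’s orientation relative to its parent, q_rel = q_parent⁻¹ ⊗ q_child, and reading off the rotation angle. This is a generic sketch of that relative-orientation idea, not a reproduction of Kurpath et al.’s Relative Orientation Vector technique; it assumes unit quaternions in (w, x, y, z) order, and the helper names are our own.

```python
import math


def q_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q):
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_from_axis_angle(axis, angle_rad):
    """Unit quaternion for a rotation of angle_rad about axis."""
    n = math.sqrt(sum(c * c for c in axis))
    s = math.sin(angle_rad / 2.0) / n
    return (math.cos(angle_rad / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)


def relative_joint_angle(q_parent, q_child):
    """Rotation angle (rad) between parent and child segment orientations."""
    q_rel = q_mul(q_conj(q_parent), q_child)
    # q and -q encode the same rotation; abs() selects the shorter one (angle <= pi).
    w = min(1.0, abs(q_rel[0]))
    return 2.0 * math.acos(w)
```

For a hinge-like joint such as the elbow, this total rotation angle equals the flexion angle; multi-degree-of-freedom joints such as the shoulder would need the relative rotation decomposed further.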

Myoelectric armbands, typified by the Thalmic Labs Myo, represent another emerging wearable interface. Worn on the forearm, these devices use electromyography (EMG) signals from muscle activity, sometimes in combination with an IMU, to detect actions such as arm movements or hand grasps. Studies demonstrate that Myo armbands can effectively control robotic arms for basic actions. Boru and Erin [9] describe a system combining wearable and contactless technology, in which an operator’s arm movements, captured by IMU/EMG sensing, were used to control an industrial robot, with a vision sensor added for improved contextual awareness [9]. Such multimodal techniques enhance robustness: wearable sensors deliver direct motion input, while external sensors, such as depth cameras, contribute environmental awareness [9]. Although EMG-based control may be harder to learn than IMU-based tracking, it can detect the user’s muscle activation before significant movement occurs, potentially decreasing control latency. Moreover, EMG allows certain gestures to be identified without arm movement, such as a hand clench that initiates an action. This complements inertial data, particularly when the user’s arm is stationary but muscle activity is present.
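Detecting a hand clench without arm movement is commonly approached by thresholding an amplitude envelope of the EMG signal. The sketch below uses a moving root-mean-square (RMS) window over one channel; the window length, threshold, and normalized signal units are hypothetical, and a practical system, Myo-based or otherwise, would need per-user calibration against the resting EMG level and usually a more elaborate classifier.

```python
import math
from collections import deque


class ClenchDetector:
    """Flags a clench while the moving RMS of one EMG channel exceeds a threshold."""

    def __init__(self, window=50, threshold=0.3):
        # Most recent samples, in normalized units (assumed); old ones drop out automatically.
        self.samples = deque(maxlen=window)
        self.threshold = threshold

    def rms(self):
        """Root-mean-square amplitude over the current window."""
        return math.sqrt(sum(s * s for s in self.samples) / len(self.samples))

    def update(self, sample):
        """Add one EMG sample; return True while the RMS envelope exceeds the threshold."""
        self.samples.append(sample)
        return self.rms() > self.threshold
```

Because the RMS envelope rises only when muscle activity is sustained across the window, brief spikes are smoothed out, at the cost of a short detection delay proportional to the window length.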

Wearable interfaces are not limited to monitoring gross limb movements; they also include gloves designed to capture hand motions. Data gloves equipped with flex sensors or IMUs can translate finger movements into commands for robotic grippers or decode complex hand signals. A recent review shows that glove-based gesture recognition can accurately capture 3D hand pose data regardless of illumination conditions, making it well suited to human–robot interaction in manufacturing [10]. Nonetheless, gloves may be cumbersome if they are wired or hinder normal hand function. Researchers are addressing this issue by using lightweight wireless gloves or conventional smartphones as alternative controllers. Wu et al. [11] used a smartphone’s integrated sensors as a handheld controller for a robotic manipulator, targeting users with disabilities. They developed three smartphone-based interfaces: one used the phone’s 3D orientation (tilt) to move the robot along Cartesian axes, another used the touch screen as a virtual joystick, and the third combined orientation with touch input [11]. Their evaluation on activities of daily living, such as feeding and object retrieval, found smartphone control to be intuitive and economical, enabling users to perform tasks with minimal training [11]. This demonstrates how readily available consumer devices (smartphones, tablets, and watches) can be turned into efficient robot controllers.
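A tilt-to-velocity mapping of the kind Wu et al. describe can be sketched by estimating roll and pitch from the phone’s gravity reading and converting each angle into a planar velocity command. The dead zone, maximum tilt, speed limit, and axis conventions below are hypothetical choices for illustration, not the parameters of the original study.

```python
import math

DEAD_ZONE_DEG = 5.0   # tilts smaller than this are ignored (hypothetical)
MAX_TILT_DEG = 30.0   # tilt at which full speed is reached (hypothetical)
MAX_SPEED = 0.10      # m/s, maximum Cartesian speed (hypothetical)


def tilt_from_gravity(ax, ay, az):
    """Roll and pitch in degrees from a static accelerometer reading (device frame)."""
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


def axis_speed(angle_deg):
    """Map one tilt angle to a signed speed with a dead zone and saturation."""
    magnitude = abs(angle_deg)
    if magnitude < DEAD_ZONE_DEG:
        return 0.0
    fraction = min(1.0, (magnitude - DEAD_ZONE_DEG) / (MAX_TILT_DEG - DEAD_ZONE_DEG))
    return math.copysign(fraction * MAX_SPEED, angle_deg)


def tilt_to_velocity(ax, ay, az):
    """Pitch drives the robot's x axis and roll its y axis (assumed convention)."""
    roll, pitch = tilt_from_gravity(ax, ay, az)
    return axis_speed(pitch), axis_speed(roll)
```

The dead zone keeps the robot still while the phone is held roughly level, and saturation bounds the commanded speed regardless of how far the device is tilted.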

Wearable HMIs must prioritize user comfort and safety. Extended or repetitive use of wearables should not cause fatigue or discomfort. Furthermore, any wireless wearable control must be reliable and secure to prevent inadvertent robot movements. In collaborative environments, the system must always allow the human to override or halt the robot immediately, for example through a quick hand gesture or a button press on the wearable device (as in Škulj et al.’s system) [6]. The literature indicates a clear trend: wearable interfaces make robot control more natural and flexible, while current research is improving their precision (through better sensors and fusion algorithms) and usability (through ergonomic design and minimal calibration) [7,8].

Hand and arm movements are an innate mode of human communication, and using them for human–robot interaction (HRI) has become an active field of study. Gesture-based robot control can be achieved with vision-based systems (cameras observing the user’s motions) or sensor-based systems (wearables detecting gestures, as discussed above). Both approaches aim to make instructing a robot as simple as gesturing or pointing, much like giving instructions to another person.

Vision-based gesture recognition has advanced considerably owing to modern computer vision and deep learning techniques. High-resolution cameras and depth sensors, including the Kinect and stereo cameras, enable robots to observe their human counterparts and interpret hand signals or body language. A recent comprehensive review by Qi et al. [10] highlights that vision-based hand gesture recognition supports natural and intuitive communication in human–robot interaction, effectively “bridging the human–robot barrier” [10]. These systems typically involve detecting the hand in images, extracting spatio-temporal features of the gesture, and classifying the gesture with machine learning. In production environments, an employee may perform a designated hand signal (e.g., a thumbs-up or a stop gesture) that the robot’s vision system identifies and responds to. Papanagiotou et al. [12] demonstrated an egocentric vision approach in which a camera worn by the user (first-person perspective) feeds a 3D convolutional neural network (3D CNN) that recognizes gestures in order to modify a collaborative robot’s actions [12]. Their solution enabled a worker to communicate with a nearby cobot using naturally performed hand gestures from their own perspective, with reliable real-time recognition [12]. Vision-based solutions with external cameras are advantageous because they do not require the user to wear any device, but they demand robust computer vision in industrial environments, coping with variable lighting, occlusions, and background clutter.

Gesture recognition also intersects with the wearable HMIs discussed above. Rather than continuously tracking movement, several systems recognize specific gesture commands from wearable sensor data. A smartwatch or armband can recognize distinct gesture patterns, such as a double-tap or a characteristic motion, and trigger preprogrammed robot functions. The smartwatch application considered in this work, as described at the beginning of this review, supports basic gesture recognition, including two-finger taps that trigger commands [3]. Such subtle touch gestures on the wrist can serve for mode changes or task confirmation in a human–robot collaboration setting. Compared with full skeletal tracking, recognizing a limited set of gestures can be less computationally demanding and more robust to noise, but at the cost of expressiveness. As a result, several systems combine continuous control with gesture commands. Weigend et al. [13] combined verbal commands with smartwatch-based arm pose tracking, allowing users to guide the robot with arm motions while issuing spoken or tactile commands for discrete actions [13]. This multimodal approach (voice + wearable motion) significantly increased the interface’s flexibility, allowing the human operator to intervene in the robot’s operation “anytime, anywhere” [13].
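Touch gestures such as the two-finger tap in [3] are typically delivered to an app as touch events, whereas a motion gesture such as a double-tap on the watch body has to be detected from the accelerometer. One common heuristic treats it as two acceleration spikes whose spacing falls within a bounded interval. The sketch below implements that heuristic with hypothetical thresholds and timings; it is not the recognition method of the application in [3].

```python
class DoubleTapDetector:
    """Detects two acceleration spikes whose spacing lies in [min_gap, max_gap] seconds.

    The threshold (on dynamic acceleration magnitude, m/s^2) and timings are
    illustrative; a real detector would be tuned on recorded data.
    """

    def __init__(self, threshold=3.0, min_gap=0.08, max_gap=0.40):
        self.threshold = threshold
        self.min_gap = min_gap
        self.max_gap = max_gap
        self.above = False          # whether the previous sample exceeded the threshold
        self.last_spike_t = None    # time of the previous spike's rising edge

    def update(self, t, accel_mag):
        """Feed one sample (time in s, gravity-removed magnitude); True on a double-tap."""
        rising = accel_mag > self.threshold and not self.above
        self.above = accel_mag > self.threshold
        if not rising:
            return False            # only rising edges count, so one spike is one tap
        if self.last_spike_t is not None:
            gap = t - self.last_spike_t
            if self.min_gap <= gap <= self.max_gap:
                self.last_spike_t = None    # consume both taps
                return True
        # First tap, or the previous tap was too close/too far: start a new candidate.
        self.last_spike_t = t
        return False
```

Counting only rising edges prevents a single spike spanning several samples from registering as two taps, and the minimum gap rejects sensor ringing.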

Dynamic free-form motions, such as waving an arm to attract a robot’s attention or tracing a shape in the air, have also been investigated. Xie et al. [14] introduce a human–robot interaction system in which an operator uses dynamic hand gestures to teleoperate a quadruped robot equipped with a manipulator [14]. Their system uses a vision sensor to track a sequence of hand movements, rather than a static gesture, to control the robot’s actions. They report that dynamic gestures, analyzed by deep learning, enabled intuitive real-time remote control of a complex robot (a quadruped with an arm) [14]. Such a system might, for example, interpret a continuous circular hand motion as “rotate in place” or an upward wave as “stand up,” illustrating the expressive capacity of dynamic gestures [15]. The main challenge is reliability: misreading a gesture may lead to unintended movement. Current research therefore focuses on robust, context-sensitive gesture recognition. Liu and Wang [16] investigated gesture recognition for human–robot interaction and highlighted the need for robust algorithms that can handle variation in how different people perform gestures [16]. Recent work builds on deep learning advances, such as 3D CNNs and Transformers, and frequently fuses modalities, including vision and IMUs, to improve accuracy [10].
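To make the circular-motion example concrete, one simple, non-learning heuristic accumulates the signed turning angle of a 2D hand trajectory around its centroid: a total close to ±2π indicates a closed loop, and its sign gives the direction. This is a hypothetical illustration only; Xie et al. use deep learning on vision data, not this rule, and the labels and the 1.8π threshold are our own assumptions.

```python
import math


def winding_angle(points):
    """Total signed angle (rad) swept by a 2D trajectory around its centroid."""
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    total = 0.0
    prev = math.atan2(points[0][1] - cy, points[0][0] - cx)
    for x, y in points[1:]:
        ang = math.atan2(y - cy, x - cx)
        # Unwrap so each step lies in [-pi, pi) and crossing the +-pi seam is not a jump.
        step = (ang - prev + math.pi) % (2.0 * math.pi) - math.pi
        total += step
        prev = ang
    return total


def classify_gesture(points, min_turn=1.8 * math.pi):
    """Return 'rotate_ccw', 'rotate_cw', or None (hypothetical command labels)."""
    turn = winding_angle(points)
    if turn >= min_turn:
        return "rotate_ccw"
    if turn <= -min_turn:
        return "rotate_cw"
    return None
```

A partial arc sweeps well under 2π around its own centroid, so it is rejected; this kind of explicit rejection band is one crude way to reduce the misreadings discussed above.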

User training and ergonomics are important elements of gesture-based human–robot interaction. Vision-based gesture control is highly user-friendly (requiring no wearable devices); nonetheless, users must learn the specific gestures the robot recognizes and perform them distinctly. In effect, a specific gesture lexicon exists, akin to a sign language for robots. Some studies implement adaptive learning, enabling the robot to learn personalized gestures from the user by demonstration. Conversely, wearable gesture control can exploit more subtle actions, such as a slight wrist flick detectable by an IMU, which a camera may miss if the hand is occluded. Nichifor and Stroe (2021) developed a kinematic model of the ABB IRB 7600 robot, using Denavit–Hartenberg parameters and inverse kinematics methods to enable precise motion control [15]. Smartwatch-based arm tracking blurs the line between motion tracking and gesture commands: although presented as motion tracking, the system “recognizes” the operator’s arm movements (a trajectory gesture) and maps them onto the robot. Weigend et al. achieved a mean positional error of roughly 2.5 cm for the estimated wrist and elbow positions by optimizing machine learning models on smartwatch sensor data [13]. This accuracy suggests that inertial tracking from wearables can be precise enough for controlling industrial robot arms in many tasks, especially coarse positioning, although millimeter-level fine adjustment remains challenging.
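The Denavit–Hartenberg convention mentioned above composes one homogeneous transform per joint, and forward kinematics is their product. The sketch below implements the standard (distal) DH transform, chains it, and adds the closed-form inverse kinematics of a planar two-link arm as the simplest IK case. The two-link parameters in the test are hypothetical and are not the ABB IRB 7600 parameters used by Nichifor and Stroe; a six-axis industrial arm requires a more involved IK solution.

```python
import numpy as np


def dh_transform(theta, d, a, alpha):
    """Standard (distal) DH link transform: Rz(theta) Tz(d) Tx(a) Rx(alpha)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def forward_kinematics(dh_rows, joint_angles):
    """Base-to-tool transform for revolute joints; dh_rows holds (d, a, alpha) per joint."""
    T = np.eye(4)
    for (d, a, alpha), q in zip(dh_rows, joint_angles):
        T = T @ dh_transform(q, d, a, alpha)
    return T


def planar_2r_ik(x, y, a1, a2, branch=1):
    """Closed-form IK of a planar two-link arm; branch=+1 or -1 picks the elbow solution."""
    c2 = (x * x + y * y - a1 * a1 - a2 * a2) / (2.0 * a1 * a2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target out of reach")
    q2 = branch * np.arccos(np.clip(c2, -1.0, 1.0))
    q1 = np.arctan2(y, x) - np.arctan2(a2 * np.sin(q2), a1 + a2 * np.cos(q2))
    return q1, q2
```

Round-tripping a configuration through forward and inverse kinematics, as in a quick self-check, is a cheap way to validate a DH table before commanding real hardware.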

Among wearable devices, smartwatches have emerged as a particularly promising platform for robot control. Smartwatches are commercially available, affordable, and equipped with several sensors (accelerometer, gyroscope, magnetometer, etc.), a touchscreen, and wireless communication. Worn on the wrist, a smartwatch is well positioned to monitor arm and hand movements. Numerous recent studies have used smartwatches to control robotic arms, demonstrating both the feasibility and the benefits of this approach.

Weigend et al. [13] proposed a framework named “iRoCo” for intuitive robot control using a single smartwatch. In their system, the smartwatch’s inertial sensors are used to estimate the three-dimensional pose of the wearer’s arm, so the watch acts as a wrist-worn motion-capture device. They developed a machine learning model that infers the positions of the wearer’s elbow and wrist from the watch data, producing a range of plausible arm poses [13]. By combining this with voice commands (recorded through the smartwatch’s microphone or a connected device), the user can perform complex teleoperation tasks. In use-case demonstrations, an operator can promptly take control of a robot by moving their arm, for example to modify the robot’s carrying trajectory in real time, and then return it to autonomous mode [13]. The ability to “intervene anytime, anywhere” with a simple wrist movement and a spoken command illustrates the effectiveness of smartwatch-based HRI. The authors reported a 40% reduction in pose estimation error compared with previous state-of-the-art methods, yielding a mean wrist position error of approximately 2.56 cm [13]. This accuracy is notable given that only a single smartwatch is used; it reflects the effectiveness of their learning approach and, possibly, the quality of modern smartwatch sensors.

Smartwatches can capture both general arm orientation and subtle movements or contextual data. Many commercial smartwatches, for instance, support touch, double-tap, and rotary input (via the bezel or crown). These inputs can be used to control mode transitions or incremental robot motions. In a 2025 study published in Applied Sciences, Olteanu et al. developed a smartwatch-based monitoring and alert system for plant operators [3]. Although that work focuses on worker monitoring (vital signs, movements, etc.) rather than direct robot control, it shows that smartwatches are suitable for industrial settings, capable of connecting to cloud services and of enduring prolonged use throughout the workday [3]. A smartwatch that controls a robot could also log data, such as how often specific gestures are used, and exchange it with Industrial IoT systems for analytics or safety assessments.

Our experimental setup supports the feasibility of using a consumer smartwatch as a robot controller. In this system, the watch’s gyroscope readings are mapped to joint velocity commands for a compact industrial robotic arm. Preliminary findings suggest that novices can guide the robot through basic movements (such as moving the end-effector along a trajectory) after a brief orientation phase. This is consistent with the findings of Wu et al. for smartphone interfaces and extends the approach to a smaller, wrist-mounted device [11]. Because a smartwatch is portable, an operator could potentially control a robot while moving freely around the shop floor, without additional hardware. A technician could stand in front of a large robotic cell and use wrist movements to jog the robot for maintenance or programming instead of using a tethered teach pendant. This may improve efficiency and ergonomics, as the operator’s hands remain largely free.
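A gyroscope-to-joint-velocity mapping of this kind can be sketched as follows: each gyroscope axis is assigned to one robot joint, small rates are suppressed by a dead band to reject tremor and noise, the remainder is scaled, and the result is clamped to a joint-speed limit. The axis-to-joint assignment, gain, dead band, and limit below are illustrative assumptions rather than the tuned values of the system described here.

```python
import numpy as np

# Hypothetical assignment: watch gyro axes (x, y, z) drive robot joints 1-3.
AXIS_TO_JOINT = {0: 0, 1: 1, 2: 2}
GAIN = 0.5             # joint rad/s per wrist rad/s (assumed)
DEAD_BAND = 0.15       # rad/s; suppresses tremor and sensor noise (assumed)
MAX_JOINT_SPEED = 0.5  # rad/s per joint (assumed safety limit)


def gyro_to_joint_velocity(gyro_rad_s, n_joints=6):
    """Map one gyroscope sample (rad/s) to a joint velocity command vector (rad/s)."""
    gyro = np.asarray(gyro_rad_s, dtype=float)
    qdot = np.zeros(n_joints)
    for axis, joint in AXIS_TO_JOINT.items():
        rate = gyro[axis]
        if abs(rate) < DEAD_BAND:
            continue
        # Subtract the dead band so the command ramps up smoothly from zero.
        qdot[joint] = GAIN * (rate - np.sign(rate) * DEAD_BAND)
    return np.clip(qdot, -MAX_JOINT_SPEED, MAX_JOINT_SPEED)
```

In use, these velocities would be streamed to the robot at its control rate, and the robot side would command zero velocity whenever samples stop arriving, so a dropped connection cannot leave a joint moving.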

A technical concern for smartwatch-based control is aligning sensor data with the robot’s coordinate system. Without calibration or interpretation, the watch’s orientation on the wrist does not map directly onto meaningful robot motion. Strategies to address this include initial calibration poses (requiring the user to hold their arm in a predefined position) or supplementary sensors that anchor the data to context. Certain studies have combined a smartwatch with an additional device, such as pairing the watch with a smartphone in the pocket to detect body orientation [7]. Weigend et al. [7] presented WearMoCap, an open-source library that can operate in a “Watch Only” mode or use a phone attached to the upper arm for improved precision [7]. Adding the secondary device (a phone acting as an IMU on the upper arm) significantly improved wrist position estimates, reducing the error to approximately 6.8 cm [7]. This dual-sensor configuration (watch and phone) remains highly practical, as most users own a mobile phone; it effectively forms a body-area sensor network for motion capture. Even in the restricted “watch-only” mode, WearMoCap achieved an accuracy of roughly 2 cm relative to a commercial optical motion capture system for designated tasks [7]. These findings are encouraging, suggesting that a single smartwatch can provide sufficient accuracy for various robot control tasks. If greater precision is required, minimal augmentation, such as a phone or a second watch on the arm, can help bridge the gap to professional motion capture systems.
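The calibration-pose strategy can be expressed with quaternions. While the user holds the predefined pose, the watch reports an orientation q_cal that, by assumption, corresponds to a known reference orientation q_ref in the robot base frame; the fixed offset q_off = q_ref ⊗ q_cal⁻¹ then maps later watch readings into the robot frame. This generic sketch assumes the misalignment is a constant rotation between the watch’s reference frame and the robot base frame and ignores strap slippage and drift, which would require re-calibration; the function names are hypothetical.

```python
import math


def q_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q):
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_from_axis_angle(axis, angle_rad):
    """Unit quaternion for a rotation of angle_rad about axis."""
    n = math.sqrt(sum(c * c for c in axis))
    s = math.sin(angle_rad / 2.0) / n
    return (math.cos(angle_rad / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)


def calibrate(q_watch_at_pose, q_ref_robot):
    """Fixed offset from one calibration pose: q_off = q_ref * conj(q_watch_at_pose)."""
    return q_mul(q_ref_robot, q_conj(q_watch_at_pose))


def to_robot_frame(q_off, q_watch):
    """Express a later watch orientation in the robot base frame."""
    return q_mul(q_off, q_watch)
```

A single pose fixes only one constant rotation; separating the watch-to-wrist misalignment from the world-to-robot misalignment in general would require additional poses or the supplementary sensors mentioned above.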

Smartwatches also present specific limitations and challenges. First, sensor drift in IMUs can introduce errors over time, so methods such as Kalman filtering or periodic recalibration are needed to maintain accuracy [7]. Second, the watch’s user interface (a compact display) is constrained: although tactile gestures (taps and swipes) can be used on the watch, intricate inputs are impractical. Consequently, smartwatch control works best when most commands are issued implicitly through motion, with the touchscreen or buttons reserved for basic confirmations or mode switches. Third, battery life is a significant issue; continuous sensor streaming can drain a smartwatch battery within a few hours. Fortunately, industrial use may be intermittent, and energy-saving strategies (or enterprise smartwatches with larger batteries) help mitigate this issue. Finally, safety must be prioritized: an erroneous reading from the watch or a loosened strap could result in an incorrect command. Multi-sensor fusion and fail-safes, such as requiring a gesture to be held for a brief interval before it registers, are used to mitigate inadvertent activations [13].
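Drift compensation of the kind mentioned above is often done with a small Kalman filter that treats the gyroscope bias as an extra state and corrects the integrated angle with an accelerometer-derived tilt angle. The sketch below shows this common two-state formulation for a single axis. The noise parameters are illustrative, the accelerometer angle is assumed valid (the arm is not accelerating strongly), and this is not the specific filter used in [7] or [13].

```python
import numpy as np


class TiltKalman:
    """Single-axis tilt estimator with states [angle (rad), gyro bias (rad/s)]."""

    def __init__(self, q_angle=1e-3, q_bias=3e-3, r_measure=3e-2):
        self.x = np.zeros(2)                  # state estimate
        self.P = np.eye(2)                    # state covariance (deliberately uncertain start)
        self.Q = np.diag([q_angle, q_bias])   # process noise densities (illustrative)
        self.R = r_measure                    # accelerometer angle variance (illustrative)

    def step(self, gyro_rate, accel_angle, dt):
        """Fuse one gyro rate (rad/s) and one accelerometer angle (rad); return the angle."""
        F = np.array([[1.0, -dt], [0.0, 1.0]])
        B = np.array([dt, 0.0])
        # Predict: integrate the bias-corrected gyro rate; the bias is modeled as a random walk.
        self.x = F @ self.x + B * gyro_rate
        self.P = F @ self.P @ F.T + self.Q * dt
        # Update: the accelerometer observes the angle directly.
        H = np.array([1.0, 0.0])
        innovation = accel_angle - H @ self.x
        S = H @ self.P @ H + self.R
        K = self.P @ H / S
        self.x = self.x + K * innovation
        self.P = (np.eye(2) - np.outer(K, H)) @ self.P
        return self.x[0]
```

With a constant gyro bias of 0.05 rad/s, integrating the gyroscope alone would drift by 0.05 rad every second; in the filter, the accelerometer updates let the bias state absorb that offset so the angle estimate stays anchored.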

Notwithstanding these limitations, recent studies agree that smartwatch-based control is both practical and potentially highly beneficial for specific categories of tasks. It excels in situations requiring mobility and spontaneity, such as an operator promptly intervening to correct a robot’s action or teaching a robot a new task by demonstration. As research on wearable human–machine interfaces advances, specialized industrial smartwatches or wrist-mounted controllers tailored to robot operation may emerge, featuring rugged designs, additional sensors, or haptic feedback for the user. Standard smartwatches are already used in fields such as rehabilitation robotics, for evaluating patient motions and managing therapeutic robots, and in telepresence applications. This cross-domain exchange suggests that advances such as more accurate smartwatch motion tracking for medical applications can lead to improved robot control interfaces.

Although this analysis focuses on smartwatches and gesture control, both must be situated within the broader spectrum of human–robot interface modalities. Multimodal interfaces, which combine voice, gesture, gaze, and other inputs, are particularly relevant in collaborative settings. Voice commands complement gesture control; explicit spoken directives can disambiguate gestures or provide additional parameters (e.g., saying “place it there” while pointing). Lai et al. demonstrated the effectiveness of a zero-shot human–robot interaction paradigm based on voice and posture, emphasizing the integration of speech and body language for intuitive communication and employing large language models to interpret high-level commands [18]. In industrial settings, an operator may issue a command such as “next step” accompanied by a gesture, and the system uses both signals to execute an action. Smartwatches can support this by providing an always-available microphone that transmits voice together with motion data [13].

Augmented reality (AR) interfaces are relevant as well. AR glasses or tablets can superimpose the robot’s status or intended trajectories onto the user’s field of view, creating a powerful interface when combined with gesture input. An operator wearing AR glasses may see a hologram of the robot’s planned movement and approve or modify it with a simple hand gesture detected by the glasses’ camera. This relates to wearable control because the distinction between “wearable” and “vision-based” technology blurs in AR: the glasses are head-worn sensors that may also track hand movements. The overarching goal is a seamless collaborative experience in which people can easily guide the robot and receive feedback from it. Faccio et al. (2023) emphasize that a human-centric interface design, considering ergonomic and cognitive attributes, is crucial for the successful integration of cobots into modern production [1].

Finally, we note a significant convergence in wearable robotics: powered exoskeletons (which are themselves robotic wearables) have been investigated both for assisting humans and for controlling other robots. An operator wearing an upper-limb exoskeleton might have their movements mirrored directly by a larger industrial robot, an example of master–slave control. The exoskeleton can then provide the operator with haptic feedback while the slave robot is controlled remotely. Although exoskeletons are generally used for augmentation or rehabilitation, the idea of using one as a “master device” for a remote arm has been demonstrated in research settings [8]. Exoskeleton-based control remains somewhat impractical for industrial applications because of the weight and complexity of exoskeletons, but it highlights that humans can be instrumented to varying degrees, from a basic smartwatch to a full-body motion capture suit or exoskeleton, depending on the required control precision. In many industrial contexts, the lightweight approach (smartwatches, armbands, and gloves) is preferred for its practicality.

Several studies published between 2019 and 2025 complement and extend the advances in smartwatch and wearable interfaces discussed above. Liu and Wang [16] conducted a detailed review of gesture recognition systems used in human–robot collaboration (HRC), highlighting key technical issues in recognizing and classifying gestures in real-world industrial environments. Their classification of sensing techniques and recognition algorithms remains highly influential in HRC research.

Neto et al. [17] introduced gesture-based interaction for assistive manufacturing, using depth sensors and machine learning to identify hand and body movements for issuing robot commands. Their findings highlighted that users favor non-contact, natural interaction methods, especially in constrained environments.

Lai et al. [18] introduced a zero-shot learning approach that uses posture and natural voice inputs interpreted by large language models. Although it does not rely on smartwatches, this work exemplifies how future human–robot interaction systems may incorporate semantic understanding of voice and motion data collected via wearable devices.

Wang et al. [19] examined hand and arm gesture modalities in human–robot interaction, covering EMG, IMU, and vision-based methods. Their findings indicate a trend toward multimodal fusion and context-aware systems that combine wrist-worn sensor data with environmental sensing.

Oprea and Pătru [20], writing in the Scientific Bulletin of UPB, developed IRIS, a wearable device for monitoring motion disorders. Although centered on healthcare, their techniques for movement measurement and noise filtering could benefit smartwatch sensor processing.

Villani et al. [21] performed an extensive survey of collaborative robots, emphasizing that they require intuitive interfaces and safe interaction in industrial settings. Their analysis underscores the relevance of intuitive wearable interfaces, such as smartwatch-based systems, to future HRC designs.

Recent advancements in control theory have led to the widespread adoption of methods such as Active Disturbance Rejection Control (ADRC), filtered disturbance rejection control, and multilayer neurocontrol schemes, particularly in handling uncertain, high-order nonlinear systems. For example, Zhang and Zhou [22] applied an ADRC-based voltage controller to stabilize DC microgrids using an extended state observer, achieving high robustness in fluctuating conditions. Similarly, Herbst [23] provided a frequency-domain analysis and practical guidelines for ADRC implementation in industrial settings. While these methods are highly effective, they often require complex modeling, parameter tuning, and expert intervention. In contrast, the smartwatch-based gesture control system proposed in this study emphasizes accessibility, rapid deployment, and user-friendliness. Rather than competing with advanced control frameworks, this approach complements them by enabling intuitive human–robot interaction, especially in collaborative or assistive scenarios.

These articles offer an extensive overview of how wearable human–machine interfaces, particularly smartwatch-based systems, are transforming human–robot interaction in collaborative industrial settings. Each study deepens our understanding of how gesture-based, intuitive, and wearable control methods may improve safety, efficiency, and accessibility in robotic systems.

2.1. Challenges and Opportunities for Future Work

The current state of the art demonstrates that smartwatch- and wearable-based control of robotic arms is feasible and offers notable advantages in intuitiveness and versatility. Nonetheless, several obstacles remain unresolved, and they point to opportunities for future work:

Accuracy and Drift: Although techniques such as Weigend et al.’s ML model [13] have significantly improved arm pose estimation from a single watch, achieving millimeter-level precision for intricate manipulation tasks remains challenging. Future work may integrate more sophisticated sensor fusion (e.g., combining an IMU with a camera on the watch) or online calibration learning to continuously correct drift. Methods from smartphone augmented reality, such as simultaneous localization and mapping (SLAM), may be adapted to improve the tracking of watch movement in global coordinates.

Delay and Communication: Reducing latency is crucial for real-time control. Bluetooth links from smartwatches introduce delay and potential packet loss. Investigating high-speed wireless links or on-device processing (executing parts of the control algorithm on the smartwatch) could improve responsiveness. Škulj et al. opted for a Wi-Fi UDP connection to achieve control updates at about 30 Hz [6]. As wearable technology progresses with faster processors and advances in Bluetooth Low Energy, this gap is likely to narrow.
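Because UDP neither orders nor retransmits datagrams, a receiver has to decide which packets are safe to act on. A lightweight approach is to stamp each command with a sequence number and a send time so the robot side can discard out-of-order or stale packets; at 30 Hz, a new packet arrives roughly every 33 ms. The packet layout, field choices, and 0.1 s staleness limit below are hypothetical, and the staleness check assumes the watch and robot clocks are synchronized.

```python
import struct
import time

# Hypothetical layout, network byte order: uint32 sequence number,
# float64 send timestamp (s), three float32 angular rates (rad/s).
PACKET = struct.Struct("!Id3f")
MAX_AGE_S = 0.1  # about three periods at 30 Hz (assumed staleness limit)


def encode(seq, rates, t_send=None):
    """Build one command datagram; in practice it would be sent with socket.sendto()."""
    if t_send is None:
        t_send = time.time()
    return PACKET.pack(seq, t_send, *rates)


class CommandReceiver:
    """Keeps only fresh, in-order packets and drops the rest.

    Assumes synchronized clocks (e.g., via NTP); sequence-number wrap-around
    is ignored for brevity.
    """

    def __init__(self, max_age_s=MAX_AGE_S):
        self.max_age_s = max_age_s
        self.last_seq = None

    def accept(self, data, t_now):
        """Return the decoded rates, or None if the packet should be ignored."""
        if len(data) != PACKET.size:
            return None                      # malformed datagram
        seq, t_send, wx, wy, wz = PACKET.unpack(data)
        if self.last_seq is not None and seq <= self.last_seq:
            return None                      # duplicate or out of order
        if t_now - t_send > self.max_age_s:
            return None                      # too old to act on safely
        self.last_seq = seq
        return (wx, wy, wz)
```

Dropping late packets rather than queuing them is the usual choice for velocity-style teleoperation: a newer command always supersedes an older one, so replaying stale motion would only add latency.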

User Training and Ergonomics: Developing intuitive, non-fatiguing gesture vocabularies or motion mappings is an interdisciplinary challenge that spans robotics and human factors. Studies demonstrate that users may quickly become accustomed to wearable controls for simple operations; however, complex tasks may require support. Augmented reality or auditory feedback may help train people to operate the robot, for example by displaying an AR rendering of the arm that illustrates the “optimal” motion. The long-term comfort of wearing the equipment, especially during prolonged shifts, requires evaluation. Lightweight, breathable straps and modular wearables that users can easily put on or take off between tasks would be beneficial.

Safety and Intent Detection: A promising direction is to make the wearable interface context-aware. If the user suddenly moves quickly, for instance in reaction to a falling object, the system could interpret this not as a control command but as a startle reaction and stop the robot. Distinguishing intentional control gestures from unintentional human movements remains an open problem. Machine learning techniques that classify motion patterns (control versus non-control) could be used to prevent inadvertent robot activation. Moreover, adding tactile or haptic feedback to wearables can close the feedback loop: a watch or wristband could vibrate to confirm that the robot has recognized a command, or to warn the user when approaching a limit or a hazardous situation.
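As a simple stand-in for the learned control/non-control classifier proposed above, a rule-based version can already separate idle motion, deliberate gestures, and startle reactions by peak angular speed. This is a sketch under assumed thresholds; the values and function names are hypothetical and would need per-user tuning or replacement by a trained model.

```python
import math

# Hypothetical thresholds on angular-speed magnitude (rad/s).
CONTROL_MIN_RATE = 1.0  # below this, motion is treated as idle
STARTLE_MIN_RATE = 8.0  # above this, motion is treated as a startle reaction


def classify_motion(gyro_window):
    """Label a short window of (wx, wy, wz) gyro samples as idle, control or startle.

    The peak angular-speed magnitude in the window decides the label.
    """
    peak = max(math.sqrt(wx * wx + wy * wy + wz * wz) for wx, wy, wz in gyro_window)
    if peak >= STARTLE_MIN_RATE:
        return "startle"
    if peak >= CONTROL_MIN_RATE:
        return "control"
    return "idle"


def robot_command_for(label, gesture_command):
    """Stop the robot on a startle, forward deliberate gestures, ignore idle motion."""
    if label == "startle":
        return "STOP"
    if label == "control":
        return gesture_command
    return None
```

Failing safe (stopping) on unusually violent motion is the conservative choice: a false stop costs a moment of productivity, whereas a false command could move the robot toward a person.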

Scalability to Multiple Operators and Robots: In a production facility with numerous cobots and workers, how do wearable interfaces scale? One option assigns each worker a dedicated robot (one-to-one). Another is a many-to-many configuration, in which any worker may operate any cobot, subject to authentication. This requires robust pairing mechanisms and user authentication methods. Personalization may also be implemented, allowing the system to learn each user’s motion patterns or gesture idiosyncrasies, as existing gesture recognition models have done with personalized frameworks [6]. Coordination mechanisms are essential to prevent conflicts, such as two workers attempting to control a single robot, although in practice duties are typically assigned clearly.

Integration with Robotic Intelligence: Ultimately, wearable control should be considered one point on a continuum of autonomy. The best use of cobots likely comes from combining intuitive human guidance with the robot’s precision and computational capabilities. For example, an operator can use a smartwatch to roughly position a robotic arm, after which the robot’s vision system fine-tunes the alignment for insertion. This cooperative approach, often referred to as shared control, can significantly improve efficiency and precision [13]. Future robots may transition seamlessly between autonomous operation and operator-directed activity through wearables. In some situations, the robot may proactively request input through the wearable device, e.g., the watch vibrates and displays “Need confirmation to proceed with the next step,” which the user can authorize with a gesture or tap.
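The shared-control idea above is often realized by linearly blending the operator's command with the autonomous one. The sketch below shows that common arbitration scheme; it is not claimed to be the method of [13], and the authority schedule (`authority_from_error`, the 10 mm handover distance) is a hypothetical example.

```python
def blend_commands(human_cmd, auto_cmd, alpha):
    """Linear shared-control arbitration: u = alpha * u_h + (1 - alpha) * u_a.

    human_cmd, auto_cmd: velocity commands (vx, vy, vz) in mm/s.
    alpha in [0, 1]: 1 gives the operator full authority, 0 full autonomy.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return tuple(alpha * h + (1.0 - alpha) * a for h, a in zip(human_cmd, auto_cmd))


def authority_from_error(alignment_error_mm, handover_mm=10.0):
    """Hypothetical schedule: the operator keeps authority for coarse positioning,
    and the robot takes over as the vision-measured alignment error shrinks."""
    return min(1.0, max(0.0, alignment_error_mm / handover_mm))
```

For example, with the part 5 mm from alignment, `authority_from_error(5.0)` gives 0.5, so a watch command of (100, 0, 0) mm/s and a vision correction of (0, 20, 0) mm/s blend to (50, 10, 0) mm/s.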

Challenges such as recognition latency, sensor noise, and gesture ambiguity threaten reliable control. To mitigate these issues, the system pre-filters raw IMU data and uses a finite set of gestures with minimal motion overlap. Gestures were selected and designed to reduce confusion between similar movements (e.g., side swing vs. tap). Latency was further reduced by prioritizing short, distinct gestures such as taps and flicks. These strategies contributed to improved recognition precision and real-time responsiveness, as confirmed by the evaluation results presented in Section 6 (Results and Discussion).

In conclusion, using smartwatches to operate industrial robots is a significant step toward making robotics in the production sector more accessible and responsive. These interfaces draw on humans’ intrinsic communication methods (motion and gesture), narrowing the gap between intention and action in human–robot interaction (HRI). As collaborative robots advance and wearable technology evolves, we can anticipate a deeper convergence of the two. The anticipated result is a new generation of wearable-enabled collaborative robots that operators can effortlessly “wear and direct”: robots that resemble colleagues rather than devices to be programmed, capable of understanding our gestures and intentions. This perspective bodes well for the future of human-centered automation, enhancing both productivity and the work experience of people in industrial settings.

To address the battery drain caused by continuous IMU data streaming, the system restricts data transmission to short intervals through low-latency gesture segmentation and avoids redundant sampling. Future implementations will also explore event-driven data acquisition, adaptive sampling rates based on motion activity, and energy-efficient Bluetooth protocols (e.g., BLE 5.0) to extend operating time in practical deployments.
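Adaptive sampling based on motion activity can be sketched as a small state machine: sample fast while the wrist is moving and for a short hold period afterwards, then drop to a low idle rate. The rates, threshold, and hold time below are hypothetical, and whether a given watch OS lets an app change its sensor rate at runtime is an assumption to verify.

```python
import math

# Hypothetical values; real ones depend on the watch hardware and gesture set.
IDLE_RATE_HZ = 10
ACTIVE_RATE_HZ = 50
ACTIVITY_THRESHOLD = 0.5  # rad/s; angular-speed magnitude that counts as motion
IDLE_HOLD_S = 2.0  # keep the high rate this long after the last detected motion


class AdaptiveSampler:
    """Choose a sampling rate from recent motion activity, with hysteresis."""

    def __init__(self):
        self.last_motion_time = None

    def rate_for(self, gyro, now):
        """Return the sampling rate (Hz) to use after observing one gyro sample at time now (s)."""
        speed = math.sqrt(sum(w * w for w in gyro))
        if speed >= ACTIVITY_THRESHOLD:
            self.last_motion_time = now
        recently_active = (
            self.last_motion_time is not None
            and now - self.last_motion_time < IDLE_HOLD_S
        )
        return ACTIVE_RATE_HZ if recently_active else IDLE_RATE_HZ
```

The hold period prevents the rate from oscillating between the two settings during the brief pauses inside a gesture.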

2.2. Contribution to Future Work Directions

The core innovation introduced in this paper was fully conceptualized and implemented by the authors. This includes the design of the smartwatch-based gesture recognition interface for robot control, the development of a custom signal mapping layer linking IMU features to robot actions, and the integration with a real-time cloud monitoring platform (ThingWorx 9.3.16, an IIoT platform). Although existing APIs such as TouchSDK 0.7.0 and WowMouse were used for gesture sensing, the multi-layer control logic, gesture classification thresholds, experimental setup, and evaluation protocol were developed entirely by the authors. The architecture was designed for modularity, scalability, and low-latency interaction, prioritizing usability in collaborative industrial environments.

The proposed approach contributes directly to several future research directions identified in the literature, especially intuitive, wearable interfaces for collaborative robots. Our smartwatch-based control technology demonstrates the effectiveness of consumer-grade wearables as ergonomic and mobile human–machine interfaces (HMIs) for industrial robotic arms. We specifically address the need for low-latency, real-time control through inertial sensor fusion, robust gesture detection, and wireless data transmission. By mapping gyroscopic input to joint velocity instructions and connecting to platforms such as ThingWorx for monitoring, our approach demonstrates scalable, user-friendly robotic interaction with little calibration. This supports research trends advocating context-aware, multimodal control systems, real-time responsiveness, and reduced training requirements for operators. Furthermore, our research aligns with the goal of integrating wearable HMIs into Industry 5.0 paradigms, where human-centric automation and intuitive robotic support are essential.

3. Methodology and Infrastructure

This study assesses the efficacy and usability of a smartwatch-operated control interface for an industrial robotic arm. The system comprises a smartwatch with inertial sensors (a gyroscope and accelerometer) and a touchscreen; Python scripts for data acquisition and analysis; a real-time communication platform (ThingWorx); and an xArm robotic arm. The sensor data are converted into motion and orientation metrics that drive robot actions. Although various commercial gesture recognition solutions exist, such as the Doublepoint Kit [24] (Doublepoint, Helsinki, Finland), this study did not employ any proprietary or closed-source packages. Instead, we used the Xiaomi Smartwatch 2 Pro (Xiaomi, Beijing, China), which offers built-in inertial sensors (IMUs) and runs Wear OS, combined with TouchSDK, a flexible, platform-agnostic development kit, and the WowMouse application, both offered by Doublepoint.

TouchSDK was selected because it provides direct access to real-time IMU data, making it suitable for integration with Python-based robotic control systems. This approach enhanced system adaptability, interoperability across smartwatch models, and reproducibility, independent of proprietary engines or vendor-specific algorithms.

The system uses Doublepoint’s open-source TouchSDK to access real-time IMU data from the smartwatch. WowMouse enabled fast integration during prototyping, while gesture recognition and signal processing are handled in custom Python scripts. The architecture remains modular and can be adapted to other smartwatch platforms or gesture engines.

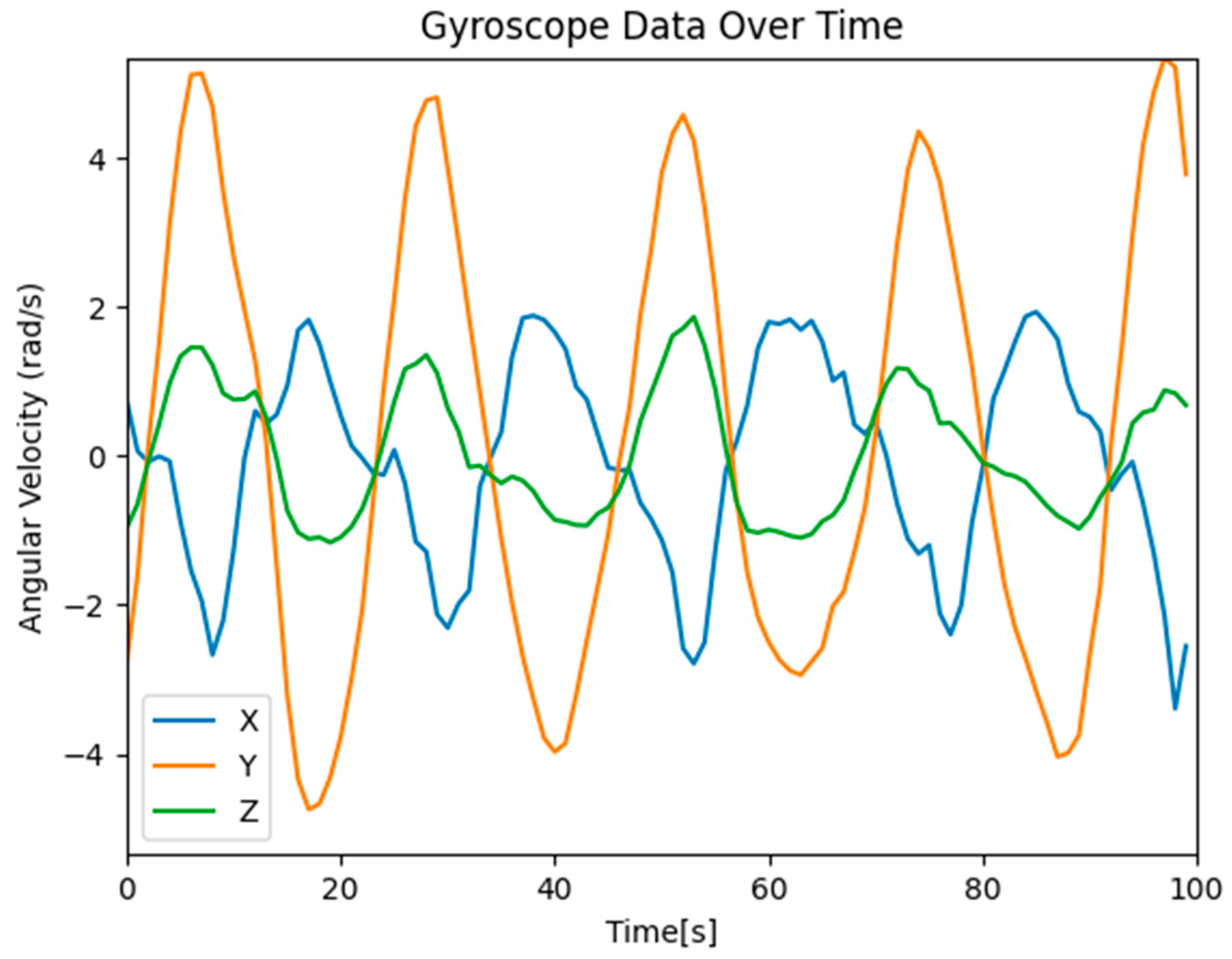

Figure 1 illustrates the unprocessed output of the smartwatch’s inertial measurement unit (IMU), depicting the temporal progression of rotational data over all three axes: X (blue), Y (green), and Z (orange). These signals indicate the angular velocities or orientation changes seen during a gesture-based robotic control session.

Real-time analysis and interpretation of inertial sensor data are essential for the efficacy and dependability of gesture-based robotic control systems. Several factors motivate continuous monitoring:

Fluctuations in angular velocity, especially sudden spikes along particular axes (e.g., the Z-axis), enable the recognition of deliberate actions such as “rotate,” “tilt,” or “tap.” Each identified motion can be mapped to a predefined robotic command, such as end-effector rotation or gripper activation. Accurate real-time interpretation ensures a responsive and intuitive interaction between humans and robots.

Verification of Sensor Fusion and Filtering Algorithms: Raw IMU data are intrinsically noisy, showing significant instability on the axes most affected by dynamic user movement, often X and Y. Comparing these signals before and after filtering makes it possible to assess and refine the sensor fusion algorithms, such as the complementary filter or Kalman filter, used to stabilize input and improve orientation estimation precision.

Characterization of the Dominant Axis: Sensor monitoring makes it possible to determine the most responsive axis during gesture execution. In the observed trials, the Z-axis showed high amplitude and low noise, indicating its suitability for rotation-based control commands. This insight improves command mapping and strengthens the robustness of the control strategy.

Calibration and System Debugging: Real-time signal tracking allows developers to identify and correct mismatches between input gestures and robotic responses. Such monitoring is essential for validating control loop integrity, reducing latency, and ensuring operational safety in industrial environments.

Advantages for Research and Reproducibility: Access to both raw and processed sensor data improves transparency in the human–machine interaction process. It improves consistency across experimental setups and facilitates comparative evaluations of different signal processing techniques. Furthermore, it supports ongoing advances in wearable robotics, human–machine interface design, and intuitive robot programming.
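Identifying the dominant axis, as described for the Z-axis above, can be done by comparing the root-mean-square (RMS) response of each axis over a recorded gesture. The RMS criterion and the function names are assumptions for illustration; the text does not state which statistic was used.

```python
import math


def axis_rms(samples):
    """Root-mean-square angular velocity per axis for a list of (x, y, z) samples."""
    n = len(samples)
    return tuple(math.sqrt(sum(s[i] ** 2 for s in samples) / n) for i in range(3))


def dominant_axis(samples, axes=("X", "Y", "Z")):
    """Return the name of the axis with the largest RMS response, plus all RMS values."""
    rms = axis_rms(samples)
    best = max(range(3), key=lambda i: rms[i])
    return axes[best], rms
```

RMS rewards sustained, high-amplitude motion. A noisy axis with only occasional spikes scores lower than one that carries the gesture consistently, which matches the "high amplitude, low noise" property sought here.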

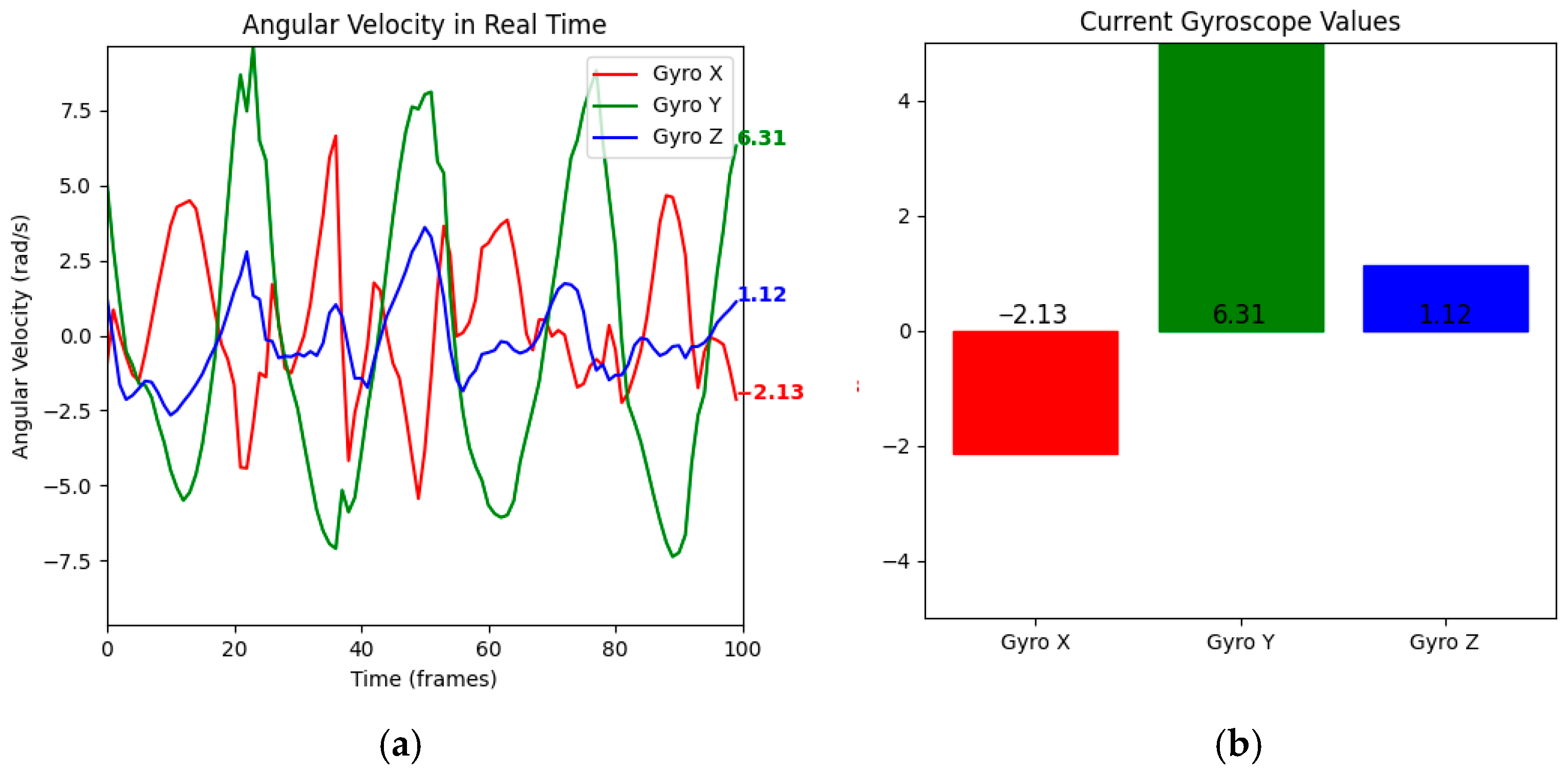

Figure 2 illustrates the time progression of gyroscope sensor values from the smartwatch and their analysis within the context of robotic control.

The graph on the left depicts fluctuations in angular velocity over time across all three axes. The dominant variation on the Y-axis indicates rotational motion, which could be associated with a robotic arm rotation command.

Immediate Feedback: The bar chart on the right summarizes the latest gyroscope measurements. This visual feedback is essential for calibration and helps identify the prevailing direction of movement at a given moment.

Functional Utility: It allows the operator to evaluate the strength and clarity of a gesture for system recognition, and it helps developers identify sensor anomalies or delays in data processing.

Scientific Contribution: This type of visualization supports research on sensor fusion optimization by providing insight into the stability and reliability of gyroscope data during gesture-based robotic control.

Algorithm 1 initializes the smartwatch-based robot control system. It configures control modes, gesture state tracking, and sensor filtering parameters, and initializes the robotic arm using the xArm Python SDK 1.15.0 API. It sets thresholds for motion filtering, response smoothing, and communication intervals, providing the core runtime environment for real-time gesture processing and robot movement.

Algorithm 1: Initialization of Robotic Arm Controller

```python
import time
from enum import Enum, auto

from touch_sdk import Watch  # Doublepoint TouchSDK (Python)


class ControlMode(Enum):
    """Control modes used by the controller (definition assumed; not shown in the original listing)."""
    POSITION = auto()
    ORIENTATION = auto()
    GESTURE = auto()


class RoboticArmWatch(Watch):
    """
    Control a robotic arm using a smartwatch via TouchSDK.
    This class processes sensor data from the watch to extract
    position information and control the robotic arm.
    """

    def __init__(self, name_filter=None, robot_ip="192.168.1.160"):
        """Initialize the robotic arm controller."""
        super().__init__(name_filter)
        self.robot_ip = robot_ip  # the xArm connection itself is not shown in this excerpt
        # Control state
        self.active = False
        self.mode = ControlMode.POSITION
        self.last_tap_time = 0
        self.double_tap_threshold = 0.5  # seconds
        self.threading = False
        # Position tracking
        self.current_position = {"x": 0.0, "y": 0.0, "z": 0.0}
        self.target_position = {"x": 0.0, "y": 0.0, "z": 0.0}
        self.reference_orientation = None
        self.reference_acceleration = None
        self.last_update_time = time.time()
        # Motion processing parameters
        self.position_scale = 100.0  # Scale factor for position mode
        self.orientation_scale = 2.0  # Scale factor for orientation mode
        self.movement_threshold = 0.1  # Minimum movement to register (increased from 0.05)
        self.smoothing_alpha = 0.3  # Low-pass filter coefficient (0-1); slightly more responsive
        # Robot control parameters
        self.update_interval = 0.2  # Send position updates at most every 200 ms
        self.last_send_time = 0
        self.position_change_threshold = 5.0  # Minimum position change to send update (mm)
        self.speed_factor = 50  # Speed factor for robot movement (mm/s)
        self.max_speed = 200  # Maximum speed (mm/s)
        # Gesture state
        self.pinch_active = False
        self.last_gesture_time = 0
        self.gesture_cooldown = 1.0  # seconds
        # Data filtering
        self.filtered_acceleration = [0.0, 0.0, 0.0]
        self.filtered_gravity = [0.0, 0.0, 0.0]
        self.filtered_angular_velocity = [0.0, 0.0, 0.0]
        self.filtered_orientation = [0.0, 0.0, 0.0, 1.0]  # quaternion (identity rotation)
```
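The filtering helpers that use `smoothing_alpha` and `movement_threshold` are not listed. Assuming they implement a standard exponential moving average (a first-order low-pass filter) followed by a deadband, their effect can be sketched as below; `ema_update` and `apply_deadband` are hypothetical names, and applying the threshold per component is an assumption.

```python
def ema_update(previous, sample, alpha=0.3):
    """One step of an exponential moving average, applied per axis.

    filtered = alpha * sample + (1 - alpha) * previous
    A larger alpha tracks the input faster; a smaller alpha smooths more.
    """
    return [alpha * s + (1.0 - alpha) * p for p, s in zip(previous, sample)]


def apply_deadband(vector, threshold=0.1):
    """Zero out components whose magnitude is below the movement threshold."""
    return [v if abs(v) >= threshold else 0.0 for v in vector]
```

With `alpha = 0.3`, a step input reaches 1 - 0.7^n of its final value after n samples (about 97% after 10), so the choice of alpha directly trades responsiveness against noise rejection.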

5. Experimental Configuration

Experiments were conducted in a laboratory setting with a single operator wearing the smartwatch. Predefined gestures were assessed for accuracy, latency, and responsiveness. The ThingWorx platform recorded real-time motion data for analysis. The control system was assessed based on command repeatability, perceived usability, and the correlation between smartwatch movements and robotic actions.

The proposed method was implemented using a Xiaomi Smartwatch 2 Pro and the WowMouse application created by Doublepoint. This application was chosen for its versatility and cross-platform compatibility, which facilitate communication with various smartwatch devices running either Wear OS (Android-based) or watchOS (iOS-based). The WowMouse application enabled real-time communication between the smartwatch and the robotic platform. The TouchSDK Python library was used to collect sensor data from the smartwatch’s integrated inertial sensors. The data streams, comprising accelerometer, gyroscope, and optionally magnetometer readings, were then processed for gesture detection and motion tracking, forming the basis for robot control commands.

Figure 4 illustrates the practical interaction between the smartwatch interface and the industrial robotic arm during control tasks. In Figure 4a, the operator’s hand is in a neutral pose, ready to execute a control gesture. In Figure 4b, the WowMouse app on the Xiaomi Smartwatch 2 Pro detects and highlights an active gesture (e.g., pinch or tap), which is then interpreted by the processing script via TouchSDK and transmitted to the robot for execution. This visual feedback confirms successful gesture recognition and gives the user real-time awareness during control tasks.

Figure 5 depicts the three principal hand movement directions aligned with the Cartesian coordinate axes—X, Y, and Z—as recorded by the inertial sensors (IMUs) within the smartwatch.

X-Axis Movement: Lateral (left–right) displacement of the hand, usually identified using accelerometer data on the horizontal plane.

Y-Axis Movement: Vertical displacement, typically linked to the elevation or descent of the hand.

Z-Axis Movement: Rotational movement (roll), demonstrated by a twisting motion of the forearm that alters the hand’s orientation around its central axis.

These motion directions are crucial for interpreting user intent in a smartwatch-controlled robotic system. The inertial measurement unit (IMU) embedded in the smartwatch captures acceleration and angular velocity in real time. The data are filtered and fused to track movement along the three axes. Distinguishing these directional movements allows the system to associate each one with a specific robotic command, such as moving the robotic arm along a specified axis or rotating its joints. Accurate calibration ensures the uniform and precise identification of these movements across users and under varying operating conditions.

This visualization in Figure 6 confirms the alignment between the interpreted gesture and its spatial projection. It supports debugging of the sensor-to-robot mapping and allows trajectory comparison in gesture recognition training tasks.

During the initial phase of testing, the focus was placed on acquiring and interpreting raw sensor data from the smartwatch’s embedded inertial measurement unit (IMU). The IMU comprises an accelerometer, gyroscope, and optionally a magnetometer, facilitating multi-dimensional motion tracking and orientation estimation. Sensor fusion and signal processing algorithms were employed to extract the following components:

Linear acceleration from the accelerometer captured the device’s movement relative to an inertial reference frame. Raw acceleration data along the X-, Y-, and Z-axes were low-pass filtered to reduce noise before being integrated into motion trajectories, enabling the translational control of the robotic arm.

Angular velocity obtained via the gyroscope measured rotational motion around the roll, pitch, and yaw axes. These signals were also filtered exponentially and integrated to estimate relative rotation, especially useful for detecting Z-axis rotations and contributing to gravity vector estimation.

Gravity vector, computed as a software-based virtual sensor combining accelerometer and gyroscope data, allowed the extraction of device orientation relative to the vertical axis. A similar low-pass filtering approach was performed to stabilize the resulting vector, which was utilized to estimate “up/down” directions based on wrist posture.

Three-dimensional orientation, represented as a quaternion, was computed through fusion of accelerometer, gyroscope, and optionally magnetometer inputs. These quaternions were normalized and later converted into Euler angles for interpretation within the robot control logic.
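The normalization and quaternion-to-Euler step can be sketched as follows. This assumes the (x, y, z, w) component order suggested by the identity value `[0.0, 0.0, 0.0, 1.0]` in Algorithm 1 and the common Z-Y-X (yaw-pitch-roll) convention; the conventions actually used by TouchSDK should be checked against its documentation.

```python
import math


def normalize_quaternion(q):
    """Scale an (x, y, z, w) quaternion to unit length."""
    norm = math.sqrt(sum(c * c for c in q))
    if norm == 0.0:
        raise ValueError("zero-length quaternion")
    return tuple(c / norm for c in q)


def quaternion_to_euler(q):
    """Convert an (x, y, z, w) quaternion to (roll, pitch, yaw) in radians.

    Uses the Z-Y-X (yaw-pitch-roll) convention.
    """
    x, y, z, w = normalize_quaternion(q)
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp to avoid a math domain error from rounding near +/-90 degrees of pitch.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw
```

Keeping the quaternion as the internal representation and converting to Euler angles only at the control-logic boundary avoids accumulating errors in the angles themselves; the Euler form is easier to threshold but becomes ill-conditioned near ±90° pitch.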

This 3D plot (Figure 7) illustrates the spatial trajectory and orientation of the smartwatch as interpreted during one complete gesture cycle. It plays a crucial role in evaluating spatial accuracy, motion stability, and system responsiveness.

The blue line shows the entire motion trajectory in three-dimensional space, depicting the operator’s hand movement within a volumetric frame. This graphic verifies whether the gesture stays within expected spatial limits (e.g., safe working areas adjacent to the robot).

Orientation Mapping: Deriving the orientation axes (RGB) from quaternions provides a robust representation of rotational movement that avoids the singularities of Euler angles. This is critical for differentiating gestures that have similar trajectories but differ in wrist rotation.

Current Position Feedback: The red marker gives immediate visual feedback on the final position at the end of the gesture. This is useful for comparing start and end positions and for determining gesture completion.

Utility in Research: This figure supports the development of more advanced sensor fusion techniques that combine orientation and position tracking. It also enables the evaluation of gesture precision and repeatability, two essential metrics in human–robot collaboration scenarios.

Sensor fusion was achieved using algorithms like complementary and Kalman filters, which improved the stability and accuracy of orientation estimates. The TouchSDK provided access to both quaternion and gravity vector data, allowing smooth integration into the robot control system.

Figure 8 illustrates the end-to-end data flow in the smartwatch-based robotic control architecture. It begins with the Xiaomi Smartwatch 2 Pro, which captures raw sensor data, including linear acceleration, angular velocity, the gravity vector, and 3D orientation (quaternion). These values are processed using internal filtering (low-pass and sensor fusion), generating clean, interpretable motion data.

The processed data are then handled by a Python script, which applies further smoothing, maps gestures to robotic commands, and manages system-level control logic. This Python layer serves as the central interface for robot execution and communication.

Next, two transmission paths are established:

One towards the xArm robot, where real-time motion commands are executed using Python APIs.

One towards the ThingWorx platform, which receives sensor streams and robot states via REST API, enabling cloud-based monitoring and digital twin visualization.

This visual flow summarizes the signal path from wearable sensor acquisition to final actuation and cloud monitoring. Each layer in the system—sensing, processing, command generation, execution, and visualization—plays a critical role in enabling an intuitive, real-time, and traceable control loop between the human operator and the robotic system. The integration of ThingWorx ensures transparency and robustness in both development and industrial deployment contexts.

The connection to the robot is established using the UFactory xArm libraries.

Data transmission to ThingWorx begins upon program initialization, during which the system performs calibration and starts a separate thread to continuously stream the robot’s current positional data and the smartwatch’s sensor readings. This real-time data flow enables the live monitoring and verification of system behavior. The connection to ThingWorx is implemented via a REST API.
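The REST link and the separate streaming thread can be pictured as follows. This is a sketch assuming ThingWorx's standard REST pattern for writing several Thing properties in one call (`PUT .../Things/<Thing>/Properties/*` with an `appKey` header and a JSON body). The server URL, Thing name, app key, and property names (borrowed from the Table 1 columns) are placeholders, and the 200 ms period is an assumption.

```python
import json
import urllib.request

# Placeholders (hypothetical): replace with the real server, Thing and application key.
THINGWORX_URL = "https://thingworx.example.com/Thingworx"
THING_NAME = "RobotWatchThing"
APP_KEY = "YOUR-APP-KEY"


def build_property_update(values, base_url=THINGWORX_URL, thing=THING_NAME, app_key=APP_KEY):
    """Build a PUT request that writes several Thing properties, e.g. {"X_R": 1.5, "X_W": 1.4}."""
    url = f"{base_url}/Things/{thing}/Properties/*"
    body = json.dumps(values).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={
            "appKey": app_key,
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


def stream_to_thingworx(read_values, stop_event, period_s=0.2):
    """Push the latest robot/watch values periodically until stop_event is set."""
    while not stop_event.is_set():
        request = build_property_update(read_values())
        try:
            with urllib.request.urlopen(request, timeout=2.0):
                pass
        except OSError as exc:  # URLError is a subclass of OSError; keep streaming
            print("ThingWorx update failed:", exc)
        stop_event.wait(period_s)


# Usage (not executed here), running the stream in a background thread:
# import threading
# stop = threading.Event()
# threading.Thread(target=stream_to_thingworx, args=(get_values, stop), daemon=True).start()
```

Running the upload in its own thread keeps slow or failed HTTP requests from stalling the gesture-processing loop, which matches the separate streaming thread described above.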

APIs (Application Programming Interfaces) are essential for the interaction between software and hardware, allowing the exchange of data and commands between different components of the system. In our project, we use two main APIs:

xArm API—for controlling the robotic arm.

ThingWorx API—for real-time communication and monitoring between the local system and the cloud platform, allowing sensor and robot data to be sent, visualized, and monitored remotely.

For controlling the robotic arm, we use the XArmAPI class of the xArm Python SDK to interact with the xArm robotic arm. This API allows the initialization, control, and monitoring of the robotic arm’s status.
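A minimal sketch of the robot side, using calls from the public xArm Python SDK (`XArmAPI`, `motion_enable`, `set_mode`, `set_state`, `set_position`). The relative-step strategy, the 200 mm/s cap (taken from `max_speed` in Algorithm 1), and the use of `set_state(state=4)` for the stop gesture are assumptions for illustration, not a description of the authors' implementation. The SDK import is deferred so that the helpers can be exercised without hardware.

```python
MAX_SPEED = 200  # mm/s, same cap as max_speed in Algorithm 1


def connect_arm(ip="192.168.1.160"):
    """Connect to the xArm and prepare it for position control."""
    from xarm.wrapper import XArmAPI  # imported lazily; requires the xArm Python SDK

    arm = XArmAPI(ip)
    arm.motion_enable(enable=True)
    arm.set_mode(0)  # 0 = position control mode
    arm.set_state(state=0)  # 0 = ready to move
    return arm


def send_relative_step(arm, dx, dy, dz, speed=50):
    """Hypothetical helper: command a small Cartesian step (mm) without blocking."""
    speed = min(speed, MAX_SPEED)
    return arm.set_position(x=dx, y=dy, z=dz, relative=True, speed=speed, wait=False)


def stop_arm(arm):
    """Hypothetical helper: software stop, e.g. triggered by the dedicated stop gesture."""
    return arm.set_state(state=4)  # 4 = stop state
```

Using `wait=False` returns control to the gesture loop immediately, so new commands (including a stop) are never queued behind a blocking motion call.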

The function in Algorithm 2 processes incoming sensor data from the smartwatch. It applies filtering, checks the activation status, ensures that calibration is applied, and updates the robot’s position accordingly. The function enables real-time, data-driven control based on the selected mode (position or orientation).

Algorithm 2: Sensor Frame Processing

```python
# Method of RoboticArmWatch (Algorithm 1); relies on its imports (time, ControlMode).
# TouchSDK calls on_sensors for every new sensor frame. The underscore-prefixed
# helper methods are not shown in this excerpt.
def on_sensors(self, sensor_frame):
    """Process sensor data updates from the watch."""
    # Apply low-pass filter to smooth sensor data
    self._apply_smoothing_filter(sensor_frame)
    # Skip processing if not active
    if not self.active:
        return
    # Initialize reference on first activation
    if self.reference_orientation is None:
        self._calibrate()
        return
    # Calculate time delta for integration
    current_time = time.time()
    dt = current_time - self.last_update_time
    self.last_update_time = current_time
    # Process position based on current mode
    if self.mode == ControlMode.POSITION:
        self._update_position_from_motion(dt)
    elif self.mode == ControlMode.ORIENTATION:
        self._update_position_from_orientation()
    # GESTURE mode only responds to gestures, not sensor updates
    # Only send position update if enough time has passed and position changed significantly
    self._update_arm_position()
```
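The final call in Algorithm 2, `_update_arm_position`, is not listed. The function below is a hypothetical sketch of the rule stated in its comment: a new target is sent only if at least `update_interval` seconds have elapsed and the target has moved by at least `position_change_threshold` millimeters. Both defaults come from Algorithm 1; measuring the change as a Euclidean distance is an assumption.

```python
import math


def should_send_update(now, last_send_time, current, target,
                       update_interval=0.2, change_threshold_mm=5.0):
    """Decide whether a new target position is worth sending to the robot.

    current and target use the {"x": ..., "y": ..., "z": ...} layout of Algorithm 1 (mm).
    """
    if now - last_send_time < update_interval:
        return False  # rate limit: at most one command per update_interval
    distance = math.dist([current[k] for k in "xyz"], [target[k] for k in "xyz"])
    return distance >= change_threshold_mm  # ignore jitter-sized changes
```

The two conditions work together: the time gate bounds the command rate the robot controller must absorb, and the distance gate suppresses micro-commands caused by residual sensor noise.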

6. Results and Discussion

Results showed consistent gesture recognition with minimal latency. Rotational gestures using gyroscope data had a delay under 200 ms, while tap gestures were executed almost instantly. This confirms the system’s ability to support real-time robotic control based on wrist motion.

Sensor drift in Inertial Measurement Units (IMUs), particularly over extended durations or dynamic motions, leads to degraded orientation accuracy. This can be addressed using sensor fusion algorithms such as Extended Kalman Filters (EKFs), complementary filtering, or periodic orientation resets based on a known reference position.
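Of the remedies listed, the complementary filter is the simplest to illustrate. For one tilt axis it integrates the gyroscope (smooth but drifting) and continuously pulls the result toward the accelerometer's gravity-based estimate (noisy but drift-free). The sketch below is a generic textbook form with an assumed gain `k = 0.98`. It cannot correct yaw, because gravity carries no heading information; yaw still needs a magnetometer or the periodic reset mentioned above.

```python
import math


def accel_tilt(ax, ay, az):
    """Roll and pitch (rad) implied by the gravity direction in one accelerometer sample."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    return roll, pitch


def complementary_step(angle, gyro_rate, accel_angle, dt, k=0.98):
    """Fuse one axis for one time step.

    angle_new = k * (angle + gyro_rate * dt) + (1 - k) * accel_angle
    The gyro term follows fast motion; the accelerometer term removes slow drift.
    """
    return k * (angle + gyro_rate * dt) + (1.0 - k) * accel_angle
```

With a constant gyro bias b, pure integration drifts without bound, whereas this filter settles at k·b·dt / (1 - k). For b = 0.01 rad/s at 100 Hz, that is about 0.005 rad instead of 0.2 rad after 20 s.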

Calibration challenges arise when mapping user gestures to robot commands due to individual variability in wearing position and biomechanics. To improve consistency, a startup calibration routine that includes pose alignment and guided gestures can be implemented.
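One ingredient of such a startup routine is estimating the gyroscope bias while the user holds a guided rest pose, and rejecting the calibration if the watch moved. The minimum sample count and the 0.05 rad/s spread limit below are hypothetical values chosen for illustration.

```python
import statistics


def estimate_gyro_bias(rest_samples, max_std=0.05):
    """Average gyroscope readings recorded while the watch is held still.

    rest_samples: list of (wx, wy, wz) in rad/s captured during a short
    calibration pose. Raises ValueError if the data suggest the watch moved.
    """
    if len(rest_samples) < 10:
        raise ValueError("need at least 10 samples captured at rest")
    spread = max(statistics.pstdev([s[i] for s in rest_samples]) for i in range(3))
    if spread > max_std:
        raise ValueError("watch moved during calibration; repeat the pose")
    return tuple(statistics.fmean(s[i] for s in rest_samples) for i in range(3))


def remove_bias(sample, bias):
    """Subtract the stored bias from a new gyroscope sample."""
    return tuple(v - b for v, b in zip(sample, bias))
```

Checking the spread matters because a bias estimated during motion would be subtracted from every later sample, turning a one-off calibration error into a constant drift.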

Battery life is a critical concern due to the continuous transmission of high-frequency IMU data. Energy efficiency can be enhanced through adaptive sampling (lower frequency during inactivity), motion-triggered transmission, and the use of industrial-grade smartwatches with extended battery capacities.

To avoid unintended actions, the system applies gesture confirmation mechanisms such as minimum duration thresholds and optional double-tap inputs. These safety measures help distinguish deliberate commands from natural arm movements.
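The timing side of these confirmation mechanisms can be sketched with the `double_tap_threshold` (0.5 s) and `gesture_cooldown` (1.0 s) values from Algorithm 1. How the authors combine them is not shown, so the logic below is an assumption. A single tap is confirmed only after the double-tap window expires without a second tap, and a cooldown then suppresses repeated triggers.

```python
class TapInterpreter:
    """Turn raw tap timestamps (s) into 'single' / 'double' commands."""

    def __init__(self, double_tap_threshold=0.5, gesture_cooldown=1.0):
        self.double_tap_threshold = double_tap_threshold
        self.gesture_cooldown = gesture_cooldown
        self.pending_tap_time = None
        self.last_gesture_time = float("-inf")

    def on_tap(self, t):
        """Register a tap at time t. Returns 'double' or None."""
        if t - self.last_gesture_time < self.gesture_cooldown:
            return None  # still cooling down after the previous command
        if (self.pending_tap_time is not None
                and t - self.pending_tap_time <= self.double_tap_threshold):
            self.pending_tap_time = None
            self.last_gesture_time = t
            return "double"
        self.pending_tap_time = t  # may become a single tap or the start of a double tap
        return None

    def poll(self, t):
        """Call periodically (faster than the window); returns 'single' once a lone tap times out."""
        if (self.pending_tap_time is not None
                and t - self.pending_tap_time > self.double_tap_threshold):
            self.pending_tap_time = None
            self.last_gesture_time = t
            return "single"
        return None
```

The price of this disambiguation is that a single tap is recognized up to 0.5 s late. For a stop command, this is an argument for acting on the first tap immediately.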

To ensure safe operation, the system incorporates a dedicated stop gesture, which immediately halts the robot’s motion upon detection. This acts as a software-level emergency interruption. Furthermore, the robotic arm used in the experimental setup includes a physical emergency stop button, as commonly found in industrial systems. Together, these features form a multi-layered fail-safe approach, combining gesture-driven and hardware-based safety mechanisms.

The ThingWorx platform effectively visualized state changes and position tracking. Data from the experiment logs confirm accurate alignment between smartwatch motion and robotic action, with the robot values (X_R, Y_R, Z_R) and the smartwatch values (X_W, Y_W, Z_W) closely matching during synchronized gestures.

Table 1 presents a sample of timestamped Cartesian coordinate data captured simultaneously from the smartwatch (columns marked “W”) and the robotic arm (columns marked “R”), highlighting motion synchronization with millimeter precision. These data logs were exported from the ThingWorx platform and capture real-time sensor values from both the industrial robot and the smartwatch. Each row corresponds to a timestamped event with the following columns:

X_R, Y_R, and Z_R: Real-time positional or orientation values along the X-, Y-, and Z-axes of the robot.

X_W, Y_W, and Z_W: Corresponding sensor values from the smartwatch along the same axes.

This dataset is used to monitor and evaluate the synchronization accuracy between the operator’s smartwatch gestures and the robot’s response. It serves several key purposes:

Calibration verification: It allows developers to detect lag, mismatches, or drift between input and output.

System performance monitoring: It enables an analysis of sensor stability, noise, and gesture recognition reliability over time.

Debugging and improvement: The ability to log both device data streams in parallel helps optimize sensor fusion algorithms and data smoothing techniques.

Human–robot alignment tracking: It aids in understanding how effectively the smartwatch input translates into robotic motion, supporting both safety and precision in deployment scenarios.
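The calibration-verification use above (detecting lag and mismatch between the W and R columns) can be quantified with a per-axis root-mean-square error and a simple lag search. The sketch below is one possible analysis, not the one used in the study; it searches only non-negative lags, on the assumption that the robot follows the watch.

```python
import math


def rmse(a, b):
    """Root-mean-square difference between two equally long series."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def best_lag(watch, robot, max_lag=10):
    """Return (lag, rmse) for the shift of robot relative to watch that fits best.

    A lag of n samples means the robot trace follows the watch trace n samples later.
    """
    best = (float("inf"), 0)
    for lag in range(0, max_lag + 1):
        aligned_watch = watch[: len(watch) - lag]
        aligned_robot = robot[lag:]
        if len(aligned_watch) < 2:
            break
        best = min(best, (rmse(aligned_watch, aligned_robot), lag))
    return best[1], best[0]
```

Multiplying the returned lag by the logging period converts it to seconds. The residual RMSE at that lag then separates pure delay from genuine mapping error, which a single unshifted comparison would conflate.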

This level of real-time visibility makes ThingWorx a powerful diagnostic and analytics tool within the architecture of wearable-controlled robotic systems.

Figure 9 presents synchronized real-time plots and bar graphs displaying motion data for both the smartwatch and the robotic arm along the X-, Y-, and Z-axes.

This visualization reflects the correlation between smartwatch input and robotic response in real time. The near-synchronous fluctuation patterns across the axes suggest effective mapping and communication between the wearable IMU and the robotic control logic. Differences in magnitude and delay are minimal, confirming successful data acquisition, processing, and actuation within the integrated system. The display also provides diagnostic value during system calibration and gesture testing.

A preliminary user study was conducted to evaluate the real-world usability of the smartwatch-based robotic control system. Ten participants (aged 22–31), none of whom had prior experience with robotics or wearable interfaces, were recruited for the test. After a 5 min guided tutorial, users were asked to perform a sequence of three predefined robot control tasks using wrist-based gestures.

All participants were able to complete the tasks successfully, requiring on average fewer than three attempts per gesture to reach consistent execution. Qualitative feedback was gathered through short interviews, with users highlighting the system’s intuitiveness, low cognitive load, and physical comfort. The use of familiar consumer hardware (the smartwatch) contributed to rapid adaptation and positive user acceptance. These results support the potential applicability of the proposed system in assistive and collaborative industrial environments and lay the groundwork for more comprehensive human-centered evaluations in future work.

To control the robot, a predefined set of discrete wrist gestures is used. These include the following:

Single tap: starts or stops the robot’s following behavior (also serves as a general stop command).

Double tap: toggles the gripper (open/close).

Lateral wrist flick (X-axis movement): triggers left/right motion.

Wrist tilt forward/backward (Y-axis movement): initiates forward or backward motion.

Wrist rotation (Z-axis): controls vertical translation (up/down).

These gestures are recognized using thresholds applied to IMU data (the accelerometer and gyroscope) and are not based on continuous positional tracking. This allows for robust gesture recognition regardless of user position or orientation, minimizing false triggers from natural arm movement.
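Threshold-based recognition of the directional gestures can be sketched as follows. For each axis, the peak reading in a short window is compared with a per-axis threshold; the axis that exceeds its threshold by the largest ratio wins, and the sign of its peak selects the direction. The threshold values and the sign-to-direction assignments are hypothetical, and tap gestures are left to the TouchSDK/WowMouse tap events.

```python
# Hypothetical per-axis thresholds (IMU units, e.g. rad/s) and sign conventions.
THRESHOLDS = {"x": 2.0, "y": 2.0, "z": 2.5}
COMMANDS = {
    ("x", +1): "MOVE_RIGHT", ("x", -1): "MOVE_LEFT",
    ("y", +1): "MOVE_FORWARD", ("y", -1): "MOVE_BACKWARD",
    ("z", +1): "MOVE_UP", ("z", -1): "MOVE_DOWN",
}


def classify_gesture(window):
    """Map a short window of readings [{'x': .., 'y': .., 'z': ..}, ...] to a command.

    Returns None when no axis crosses its threshold.
    """
    best = None
    for axis, threshold in THRESHOLDS.items():
        peak = max((sample[axis] for sample in window), key=abs)
        ratio = abs(peak) / threshold
        if ratio >= 1.0 and (best is None or ratio > best[0]):
            best = (ratio, axis, 1 if peak > 0 else -1)
    return None if best is None else COMMANDS[(best[1], best[2])]
```

Normalizing by the threshold, rather than comparing raw peaks, keeps an axis with a naturally larger signal (here Z) from overriding the others, which helps with the overlap between similar movements discussed earlier.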

Table 2 summarizes the mapping between gestures and robot actions.

The quantitative results presented in Table 2 confirm the feasibility of utilizing smartwatch-based gesture recognition for real-time robotic control. Among the evaluated gestures, the two-finger tap demonstrated superior performance, achieving the lowest average latency and the highest precision, which highlights its robustness and suitability for time-critical tasks. In contrast, lateral movements along the X-axis exhibited greater variability, primarily due to increased sensitivity to inertial sensor noise and the presence of involuntary micro-movements. This type of gesture typically involves smaller amplitude displacements, which can overlap with natural arm sway during normal activities, thereby reducing classification confidence. These findings underscore the importance of selecting gesture types with high signal-to-noise ratios and further motivate the development of filtering and stabilization techniques to enhance the reliability of horizontal gesture detection in wearable control systems.
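Per-gesture precision and average latency of the kind reported in Table 2 can be computed from trial logs as sketched below. The record format is an assumption, and the example values in the test are synthetic, not the study's data. Precision is taken here as correct recognitions of a gesture divided by all recognitions reported as that gesture.

```python
from statistics import mean


def summarize_trials(trials):
    """Per-gesture precision and mean latency.

    Each trial is (intended_gesture, recognized_gesture or None, latency_ms).
    Missed gestures (recognized is None) do not count toward any precision.
    """
    recognized_as = {}
    for intended, recognized, latency in trials:
        if recognized is None:
            continue
        stats = recognized_as.setdefault(recognized, {"tp": 0, "n": 0, "lat": []})
        stats["n"] += 1
        stats["lat"].append(latency)
        if recognized == intended:
            stats["tp"] += 1
    return {
        gesture: {"precision": s["tp"] / s["n"], "mean_latency_ms": mean(s["lat"])}
        for gesture, s in recognized_as.items()
    }
```

Because precision ignores missed gestures, it should be reported alongside recall (or a miss rate) to reflect the detection difficulty described for X-axis movements.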

To enable multi-robot and multi-operator deployment, the system architecture must incorporate user identification, session management, and conflict arbitration mechanisms. Each smartwatch can be uniquely paired with a robot or assigned dynamically based on task context using authentication tokens or device IDs. Large-scale tasks, such as collaborative manipulation or distributed inspection, require a centralized coordination platform. This can be achieved using middleware such as ROS or IoT platforms like ThingWorx, which allow dynamic routing of gesture commands, task scheduling, and state synchronization across devices.
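The pairing and conflict-arbitration step can be sketched as a small session registry. In this design, each robot is controlled by at most one smartwatch at a time, and every gesture command must carry a valid session token before it is routed. The `SessionManager` class, its methods, and the token scheme (random hex strings from Python's `secrets` module) are hypothetical illustrations. They are not part of ROS or ThingWorx.

```python
import secrets
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Session:
    watch_id: str
    robot_id: str
    token: str


class SessionManager:
    """Hypothetical registry: at most one controlling smartwatch per robot."""

    def __init__(self) -> None:
        self._by_robot: Dict[str, Session] = {}
        self._by_token: Dict[str, Session] = {}

    def pair(self, watch_id: str, robot_id: str) -> Optional[str]:
        """Grant control of robot_id to watch_id; return a token, or None on conflict."""
        current = self._by_robot.get(robot_id)
        if current is not None:
            # Same watch re-pairing gets its existing token; another watch is refused.
            return current.token if current.watch_id == watch_id else None
        session = Session(watch_id, robot_id, secrets.token_hex(16))
        self._by_robot[robot_id] = session
        self._by_token[session.token] = session
        return session.token

    def release(self, token: str) -> None:
        """End a session so the robot can be paired with another watch."""
        session = self._by_token.pop(token, None)
        if session is not None:
            del self._by_robot[session.robot_id]

    def route(self, token: str, command: str) -> Optional[Tuple[str, str]]:
        """Return (robot_id, command) for a valid token; drop commands otherwise."""
        session = self._by_token.get(token)
        return None if session is None else (session.robot_id, command)
```

In a deployed system, the routed `(robot_id, command)` pair would be forwarded to the middleware layer. Refusing a second pairing outright is the simplest arbitration policy. A priority-based policy is a natural alternative.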

Scalability is challenged by latency bottlenecks, wireless interference, and gesture ambiguity when multiple users operate simultaneously. These can be mitigated through frequency isolation (e.g., using BLE channel management), gesture personalization (per-user gesture profiles), and priority-based task allocation. Future developments may also explore cloud–edge hybrid models, where gesture recognition runs locally on the smartwatch for a fast response, while high-level orchestration is handled in the cloud. These architectural upgrades will be essential for real-world industrial use cases involving complex robot networks and heterogeneous human teams.
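Priority-based allocation among simultaneous operators can be sketched with a binary heap. In this sketch, commands are served in priority order, and commands with equal priority are served in arrival order. The priority table below is hypothetical. It ranks a stop command above gripper toggles, and gripper toggles above ordinary motion commands, on the assumption that safety-related commands should never wait behind motion requests.

```python
import heapq
import itertools
from typing import List, Optional, Tuple

# Hypothetical priority levels: a lower value is served first.
PRIORITY = {"stop": 0, "toggle_gripper": 1}
DEFAULT_PRIORITY = 2  # ordinary motion commands


class CommandArbiter:
    """Merge gesture commands from several operators into one ordered stream."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str, str]] = []
        self._seq = itertools.count()  # FIFO tie-break among equal priorities

    def submit(self, operator_id: str, command: str) -> None:
        prio = PRIORITY.get(command, DEFAULT_PRIORITY)
        heapq.heappush(self._heap, (prio, next(self._seq), operator_id, command))

    def next_command(self) -> Optional[Tuple[str, str]]:
        """Pop the most urgent (operator_id, command), or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, operator_id, command = heapq.heappop(self._heap)
        return operator_id, command
```

The monotonically increasing sequence number serves two purposes. It keeps the ordering stable, and it ensures that tuples with equal priority never fall through to comparing operator IDs.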

While the system shows strong potential for alignment with Industry 4.0 and 5.0 paradigms—particularly in terms of human-centric interaction and digital connectivity—its current validation is limited to laboratory conditions. Full industrial readiness would require further testing under real-world conditions involving ambient noise, electromagnetic interference, and multiple simultaneous users. These aspects are planned for future investigations to assess system scalability and robustness in operational environments.

The current study was designed around a fixed set of predefined gestures to ensure controlled evaluation conditions and consistent baseline performance. However, such an approach does not fully capture the variability present in real-world scenarios. Gesture execution can be influenced by factors such as user fatigue, stress, or individual motor patterns. To address these challenges, future developments will focus on adaptive gesture recognition systems capable of continuous learning and real-time recalibration. Expanding the training dataset across diverse user profiles and usage contexts will also be essential to improve robustness and long-term usability.
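A simple form of real-time recalibration is to track each user's idle signal level and place the gesture threshold a fixed number of standard deviations above it. With this approach, the threshold rises when baseline tremor or sway grows (for example, with fatigue) and falls again when it settles. The sketch below uses an exponentially weighted running mean and variance. The forgetting factor, the multiplier `k`, the 60°/s floor, and the assumption that idle samples can be identified are all hypothetical.

```python
import math


class AdaptiveThreshold:
    """Gesture threshold = EW mean + k * EW std of idle gyro magnitude (deg/s)."""

    def __init__(self, lam: float = 0.05, k: float = 4.0, floor: float = 60.0) -> None:
        self.lam = lam      # forgetting factor (hypothetical)
        self.k = k          # standard deviations above the idle baseline
        self.floor = floor  # never let the threshold drop below this, deg/s
        self.mean = 0.0
        self.var = 0.0
        self.initialized = False

    def update(self, idle_magnitude: float) -> None:
        """Feed one sample that is assumed to contain no deliberate gesture."""
        if not self.initialized:
            self.mean, self.initialized = idle_magnitude, True
            return
        delta = idle_magnitude - self.mean
        self.mean += self.lam * delta
        # Incremental exponentially weighted variance update.
        self.var = (1.0 - self.lam) * (self.var + self.lam * delta * delta)

    @property
    def threshold(self) -> float:
        return max(self.floor, self.mean + self.k * math.sqrt(self.var))
```

A learned per-user model could replace this rule later. Even so, a statistical baseline of this kind provides a cheap fallback that adapts without retraining.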

7. Conclusions

The smartwatch control system offers a practical and ergonomic alternative to traditional robot human–machine interfaces (HMIs). Its portable design and intuitive gesture-based interface make it particularly suitable for flexible or small-scale industrial applications. Compared with conventional methods such as teach pendants or script-based programming, the smartwatch system can shorten user onboarding and allow non-expert operators to engage with robotic systems more comfortably and efficiently.

More specifically, the smartwatch-based approach offers three benefits over teach pendants and coding environments. First, it enables intuitive, gesture-driven control without requiring prior programming skills, shortening the learning curve for users with limited technical backgrounds. Second, it supports untethered, mobile operation, freeing operators from stationary consoles and wired connections. Third, it relies on familiar consumer technology (smartwatches), which increases user comfort and acceptance, particularly in assistive or collaborative contexts. Together, these attributes contribute to faster onboarding, better ergonomics, and improved accessibility in dynamic industrial and service environments.

While the preliminary findings are encouraging, further research should focus on benchmarking this smartwatch interface against traditional programming methods. Such evaluations should quantitatively assess differences in task efficiency, error rates, and user learning trajectories across diverse application scenarios.

To quantify how closely the robot's motion follows the operator's input, a quantitative analysis was performed using 2038 synchronized samples capturing angular velocity in degrees per second (°/s) along the X-, Y-, and Z-axes for both the smartwatch and the robotic arm. The results are consolidated in Table 3, which reports the mean absolute differences (MADs) and Pearson correlation coefficients between the two data sources.

The Y-axis showed the smallest deviation, indicating consistent alignment for vertical motion. The X- and Z-axes exhibited larger deviations, attributed to dynamic rotations and sensor drift. Nevertheless, the Z-axis showed the highest correlation (r = 0.855), indicating strong consistency between smartwatch input and robotic motion for circular motions. Overall, the correlation values indicate moderate to strong alignment across all axes, supporting the system's suitability for intuitive control.
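The two metrics in Table 3 follow their standard definitions. For N time-aligned samples w_i (smartwatch) and r_i (robot) on one axis, MAD = (1/N) Σ |w_i − r_i|, and Pearson's r is the covariance divided by the product of the standard deviations. The sketch below computes both metrics for a single axis. It assumes the two series have already been synchronized and resampled to equal length, and it does not reproduce the study's data.

```python
import math
from typing import Sequence


def mad(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally long, time-aligned series."""
    if len(a) != len(b) or not a:
        raise ValueError("series must be non-empty and of equal length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def pearson_r(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("series must have equal length >= 2")
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    if saa == 0 or sbb == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sab / math.sqrt(saa * sbb)
```

The two metrics capture different properties. A robot that reproduces the shape of the operator's motion with a gain or offset error yields a high r but a large MAD. This explains why an axis can show both large deviations and the highest correlation, as the Z-axis does.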

This study demonstrates the practicality and dependability of controlling an industrial robotic arm via natural gestures using a commercially available smartwatch with built-in inertial sensors. The proposed solution enables accurate and natural human–machine interaction by combining data fusion methods, real-time processing in Python, and cloud synchronization with ThingWorx.

The experimental evaluation reveals the following:

A strong correlation between smartwatch input and robotic motion (e.g., r = 0.855 on the Z-axis);

Mean absolute difference in angular velocity below 65°/s on the Y-axis;

High stability in 3D orientation and position tracking based on quaternion processing.
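The paper does not detail its quaternion pipeline. As an illustration, a basic building block of quaternion-based orientation tracking is integrating gyroscope rates into a unit quaternion. The sketch below applies one axis-angle increment per sample. It assumes body-frame rates in °/s and a fixed sample period. It deliberately omits drift correction, which a full fusion filter would obtain from the accelerometer.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), Hamilton convention


def quat_mul(p: Quat, q: Quat) -> Quat:
    """Hamilton product p * q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def integrate_gyro(q: Quat, rate_dps: Tuple[float, float, float], dt: float) -> Quat:
    """Rotate q by the body-frame angular rate (deg/s) applied for dt seconds."""
    wx, wy, wz = (math.radians(r) for r in rate_dps)
    norm = math.sqrt(wx * wx + wy * wy + wz * wz)
    if norm * dt < 1e-12:
        return q  # no measurable rotation this step
    half = 0.5 * norm * dt
    s = math.sin(half) / norm  # unit axis times sin(angle / 2)
    dq = (math.cos(half), wx * s, wy * s, wz * s)
    w, x, y, z = quat_mul(q, dq)  # body-frame increment: right-multiply
    n = math.sqrt(w * w + x * x + y * y + z * z)  # renormalize against rounding drift
    return (w / n, x / n, y / n, z / n)


def yaw_deg(q: Quat) -> float:
    """Yaw (rotation about Z) in degrees, ZYX Euler convention."""
    w, x, y, z = q
    return math.degrees(math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z)))
```

For example, applying a constant 90°/s rate about Z for 100 steps of 0.01 s yields a yaw of approximately 90°. Quaternions avoid the gimbal-lock singularities of Euler angles, which is a common reason to prefer them for wrist orientation.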

Beyond the technical achievements, this work introduces a novel and cost-effective approach for implementing wearable robot control with several practical applications:

Assistive control for individuals with mobility impairments, allowing them to initiate or influence robotic actions in environments such as rehabilitation, assisted manufacturing, or emergency intervention.

Hands-free command execution in industrial settings, enabling operators to remotely change robot tools (e.g., switch grippers) or initiate pre-programmed routines without interacting with physical interfaces.

Intuitive teleoperation in constrained or hazardous environments, where traditional controllers may be impractical or unsafe to use.

Overall, the smartwatch-based interface provides a scalable, ergonomic, and accessible solution that aligns with Industry 5.0 objectives by enhancing collaboration between humans and intelligent machines.

Future work will also explore the integration of multimodal input channels, such as voice commands and gaze tracking, to improve interaction flexibility and naturalness. Voice input can enable command confirmation or mode switching without requiring additional gestures, while gaze-based interaction can support intuitive robot targeting or selection in collaborative settings. Combining these modalities with inertial gesture control has the potential to create a seamless, context-aware human–robot interface suitable for industrial and assistive environments.
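Voice-based command confirmation could be layered on the gesture channel as a simple gate. In this arrangement, commands marked as critical are held until a spoken confirmation arrives within a time window, while all other gestures pass through immediately. This is a hypothetical sketch. The set of critical commands, the 3 s window, and the assumption that a speech recognizer delivers plain text phrases such as "confirm" are all illustrative choices.

```python
from dataclasses import dataclass
from typing import Optional

CRITICAL = {"toggle_gripper"}  # hypothetical commands that need voice confirmation
CONFIRM_WINDOW_S = 3.0         # hypothetical confirmation timeout, s


@dataclass
class Pending:
    command: str
    t: float  # time the gesture was recognized, s


class ConfirmationGate:
    """Hold critical gesture commands until a matching voice confirmation."""

    def __init__(self) -> None:
        self.pending: Optional[Pending] = None

    def on_gesture(self, command: str, t: float) -> Optional[str]:
        """Return the command to execute now, or None if it awaits confirmation."""
        if command in CRITICAL:
            self.pending = Pending(command, t)  # a newer request replaces an older one
            return None
        return command

    def on_voice(self, phrase: str, t: float) -> Optional[str]:
        """Release the pending command on a timely 'confirm'; anything else discards it."""
        if self.pending is None:
            return None
        pending, self.pending = self.pending, None
        if phrase == "confirm" and t - pending.t <= CONFIRM_WINDOW_S:
            return pending.command
        return None
```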

Future improvements to the system will focus on integrating adaptive and context-aware control algorithms. By leveraging real-time feedback from inertial sensors and robot status data, the system could dynamically adjust gesture sensitivity, motion thresholds, or control mappings based on environmental or task-specific factors. Additionally, machine learning models such as recurrent neural networks or decision trees could be employed to improve robustness by adapting to individual user behavior or the operational context. These adaptive mechanisms are essential for enabling intuitive and reliable human–robot interaction in variable industrial conditions, where noise, fatigue, or workflow changes may affect input quality and system response.
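Before any learned model is introduced, context-aware adjustment can be prototyped as a small set of hand-written rules. In the sketch below, the gesture threshold is raised in proportion to the measured ambient vibration, and the Cartesian step per motion gesture shrinks when the robot is carrying a part or is near a person. This rule-based mapping stands in for the recurrent networks or decision trees mentioned above. The `RobotStatus` fields, base values, and scaling factors are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

BASE_THRESHOLD_DPS = 120.0  # hypothetical default gesture threshold, deg/s
BASE_STEP_MM = 20.0         # hypothetical default translation per motion gesture, mm


@dataclass
class RobotStatus:
    holding_part: bool  # gripper currently closed on a payload
    near_human: bool    # e.g., reported by a proximity or safety scanner


def adapt(status: RobotStatus, ambient_vibration_dps: float) -> Tuple[float, float]:
    """Return (gesture threshold in deg/s, step size in mm) for the current context."""
    # Require gestures to stand clearly above ambient vibration.
    threshold = max(BASE_THRESHOLD_DPS, 3.0 * ambient_vibration_dps)
    step = BASE_STEP_MM
    if status.holding_part:
        step *= 0.5   # finer motion while carrying a payload
    if status.near_human:
        step *= 0.25  # much finer motion in shared space
    return threshold, step
```

Because the rules are explicit, they can also serve as a baseline against which a learned adaptation model is evaluated.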