The Role of Environmental Assumptions in Shaping Requirements Technical Debt

Abstract

1. Introduction

- A case study from the domain of small uncrewed aerial systems (sUASs) is analyzed, focusing on safety requirements and their associated environmental assumptions.

- This analysis illustrates how missing or incorrect environmental assumptions lead to different types of RTD.

- A new classification of RTD grounded in environmental assumptions is proposed. Specifically, it highlights the types of RTD that emerge from incomplete, incorrect, or evolving environmental assumptions.

- An initial attempt is made to relate these RTD types to the RTD quantification model (RTDQM), in order to show how environmental assumptions can influence measurable dimensions of debt.

2. Background and Related Work

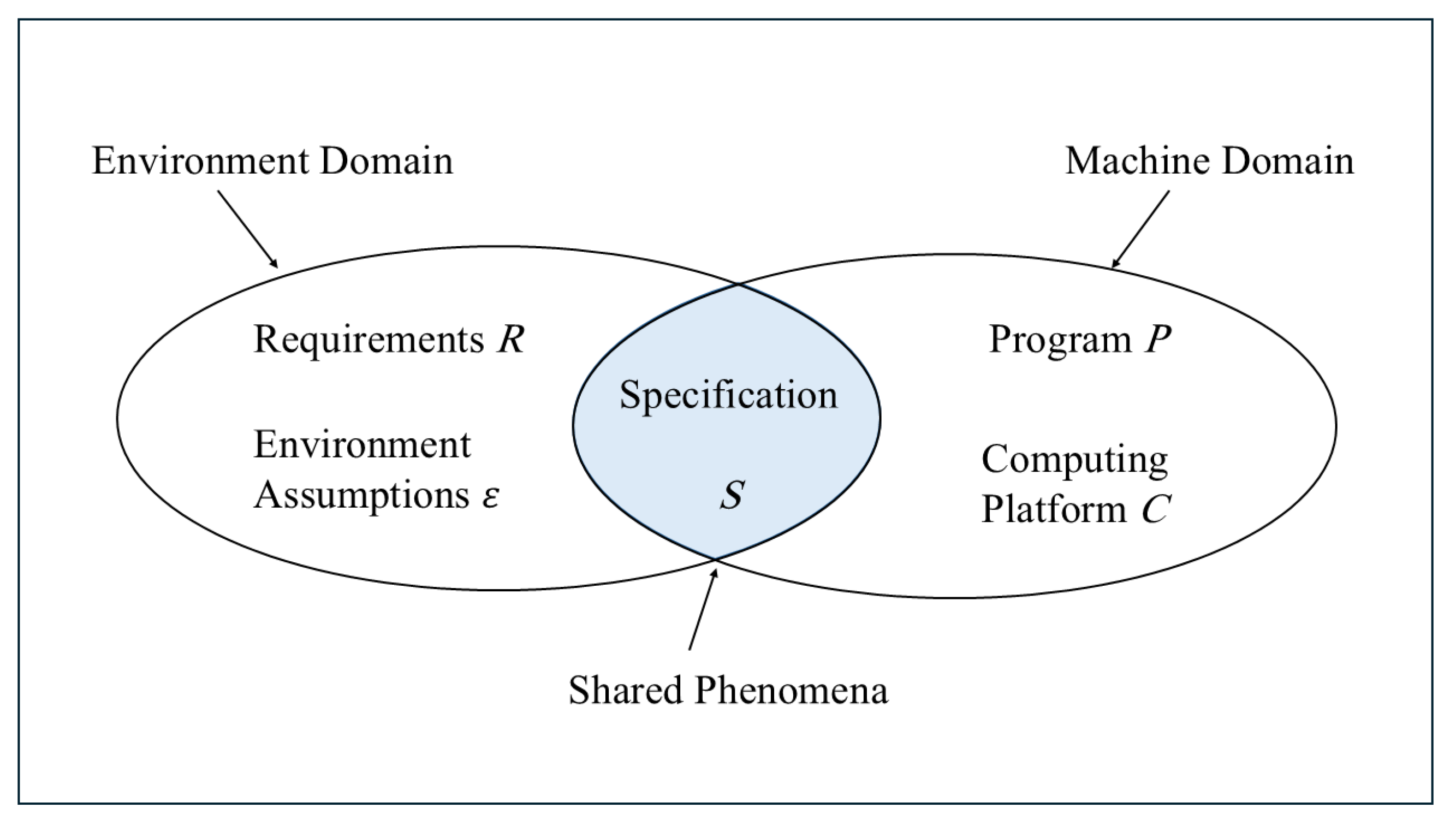

2.1. Environmental Assumptions

2.2. Requirements Technical Debt

3. Methodology

3.1. Case Study Context

3.2. Relevance to Environmental Assumptions

3.3. Analytical Procedure

1. Document Review: A thorough examination of the DroneResponse case study presented by Granadeno et al. [34] was conducted, with particular attention to how environmental assumptions were linked to derived safety requirements.

2. Scenario Manipulation: For each requirement–assumption pair, hypothetical scenarios were explored in which assumptions were omitted, altered, or allowed to evolve. These variations reflect plausible conditions such as increased communication latency, sensor inaccuracies, or changes in wind dynamics.

3. RTD Impact Evaluation: Using well-established concepts from the RTD literature [4,6,7], the potential consequences of each assumption breakdown were analyzed. The evaluation focused on how these assumption failures could introduce issues into the requirement specifications, such as ambiguity, misalignment, or degradation over time.

4. Interpretive Analysis: The analysis leveraged domain knowledge in cyber-physical systems and requirements engineering to interpret how assumption failures contribute to RTD formation.
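To make the scenario-manipulation step above concrete, the following Python sketch generates the three kinds of variation (omitted, altered, evolved) for a single requirement–assumption pair. It is a hypothetical illustration rather than tooling used in this analysis: the `Assumption`, `Scenario`, and `manipulate` names, the example latency assumption, and the scaling and drift factors are assumptions introduced here, not values from the DroneResponse case study.

```python
from dataclasses import dataclass, replace
from enum import Enum
from typing import Optional


class Variation(Enum):
    """Ways an assumption can break down in a hypothetical scenario."""
    OMITTED = "omitted"  # the assumption is missing from the specification
    ALTERED = "altered"  # the assumed value is wrong from the outset
    EVOLVED = "evolved"  # the assumed value drifts after deployment


@dataclass(frozen=True)
class Assumption:
    ident: str          # hypothetical identifier, e.g. "A-lat"
    description: str
    value: float        # nominal assumed value (e.g. latency in seconds)
    unit: str


@dataclass(frozen=True)
class Scenario:
    requirement: str                   # e.g. "DR4"
    variation: Variation
    assumption: Optional[Assumption]   # None when the assumption is omitted


def manipulate(requirement: str, assumption: Assumption,
               altered_factor: float = 2.0,
               drift_per_year: float = 0.25,
               years: int = 2) -> list[Scenario]:
    """Derive one scenario per variation for a requirement-assumption pair.

    The multiplicative factors are illustrative placeholders, not values
    taken from the case study.
    """
    altered = replace(assumption, value=assumption.value * altered_factor)
    drifted = assumption.value * (1 + drift_per_year) ** years  # compound drift
    evolved = replace(assumption, value=drifted)
    return [
        Scenario(requirement, Variation.OMITTED, None),
        Scenario(requirement, Variation.ALTERED, altered),
        Scenario(requirement, Variation.EVOLVED, evolved),
    ]


if __name__ == "__main__":
    latency = Assumption("A-lat", "status updates arrive within the assumed latency", 0.2, "s")
    for s in manipulate("DR4", latency):
        shown = "absent" if s.assumption is None else f"{s.assumption.value:g} {s.assumption.unit}"
        print(s.variation.value, shown)
```

Each generated scenario can then be carried into the RTD impact evaluation, where the question is how the requirement text would mislead developers under that variation.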

4. Results and Analysis

4.1. Geolocation Uncertainty (DR1)

4.2. Stopping Distance Without Wind (DR2)

4.3. Impact of Wind on Stopping Distance (DR3)

4.4. Projected Distance During Communication Delays (DR4)

4.5. Safe Minimum Separation (DR5)

4.6. RTD Classification Summary

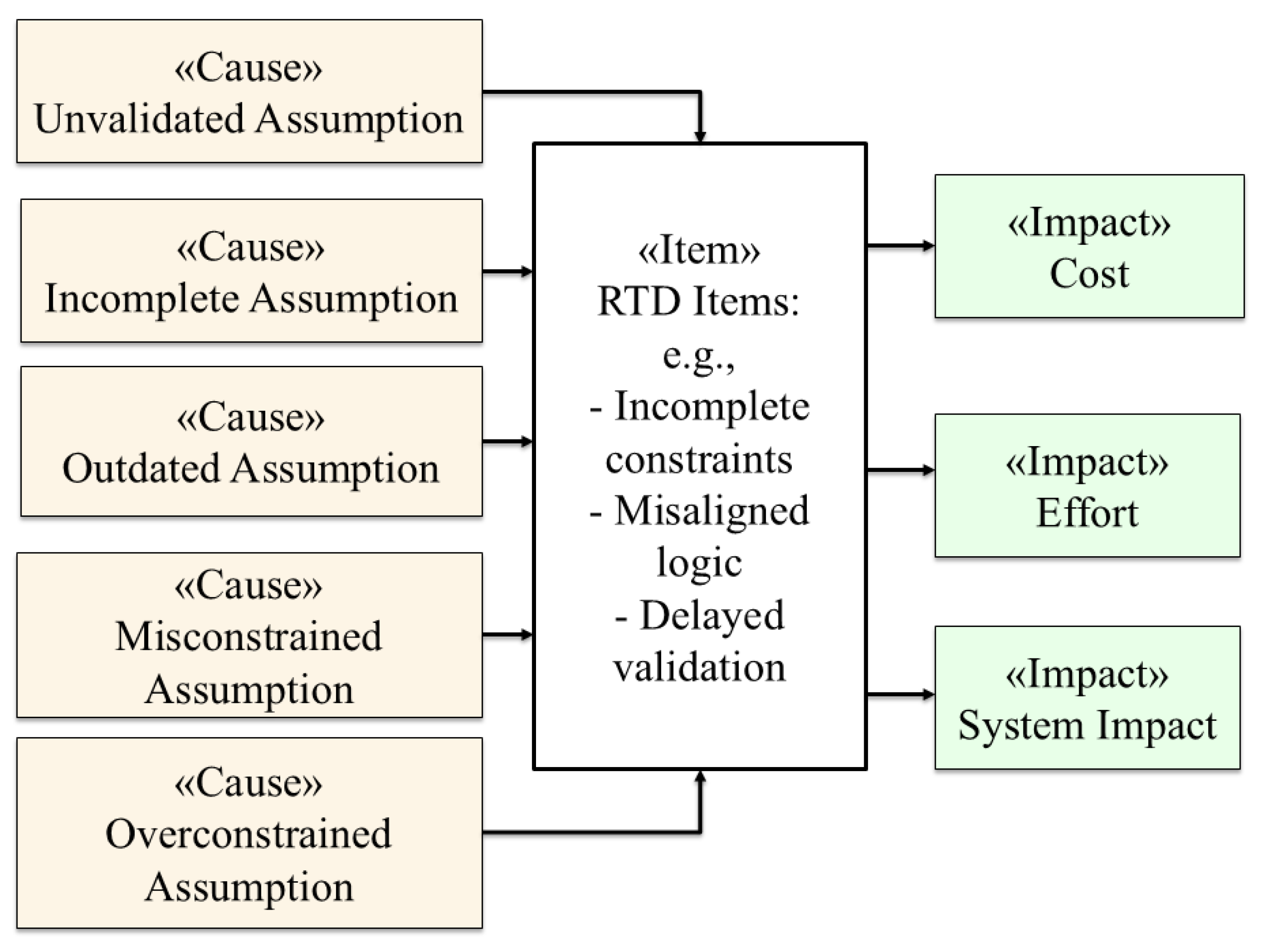

- Unvalidated assumption debt: This type of debt refers to requirements grounded in assumptions that were never verified or tested during development.

- Incomplete assumption debt: This type arises when necessary environmental assumptions are omitted, entirely or partially, during the formulation of requirements.

- Outdated assumption debt: This type refers to the technical debt that accumulates when requirements are not updated to reflect changes in environmental assumptions.

- Misconstrained assumption debt: This type refers to technical debt arising from requirements that are based on environmental assumptions imposing incorrect or overly rigid constraints.

- Overconstrained assumption debt: This type refers to requirements that are overly detailed because they are based on rigid assumptions, leaving no flexibility to adapt to changing conditions.
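One way to apply these definitions consistently is to reduce each requirement–assumption pair to a handful of yes/no facts and derive the debt types from them. The Python sketch below is a hypothetical encoding, not a procedure from the study: the `AssumptionRecord` fields and the rules in `classify` are assumptions chosen to mirror the definitions above, and a single requirement may exhibit several types at once.

```python
from dataclasses import dataclass
from enum import Enum, auto


class AssumptionDebt(Enum):
    UNVALIDATED = auto()
    INCOMPLETE = auto()
    OUTDATED = auto()
    MISCONSTRAINED = auto()
    OVERCONSTRAINED = auto()


@dataclass(frozen=True)
class AssumptionRecord:
    """Hypothetical facts about one environmental assumption behind a requirement."""
    stated: bool               # is the assumption written down at all?
    validated: bool            # was it tested under expected conditions?
    held_at_design: bool       # was it correct when the requirement was written?
    holds_now: bool            # is it still correct in the current deployment?
    varies_in_operation: bool  # can the environmental factor change during operation?
    adaptive: bool             # does the requirement provide an alternative if it varies?


def classify(rec: AssumptionRecord) -> set[AssumptionDebt]:
    """Return every assumption-driven RTD type the record exhibits."""
    if not rec.stated:
        # Nothing was written down, so the remaining checks do not apply.
        return {AssumptionDebt.INCOMPLETE}
    debts: set[AssumptionDebt] = set()
    if not rec.validated:
        debts.add(AssumptionDebt.UNVALIDATED)
    if not rec.held_at_design:
        debts.add(AssumptionDebt.MISCONSTRAINED)   # wrong from the outset
    elif not rec.holds_now:
        debts.add(AssumptionDebt.OUTDATED)         # was right, has since changed
    if rec.varies_in_operation and not rec.adaptive:
        debts.add(AssumptionDebt.OVERCONSTRAINED)  # rigid despite a varying environment
    return debts


if __name__ == "__main__":
    fixed_latency = AssumptionRecord(stated=True, validated=False, held_at_design=False,
                                     holds_now=False, varies_in_operation=True, adaptive=False)
    print(sorted(d.name for d in classify(fixed_latency)))
```

For instance, a fixed communication-latency assumption that was never tested, was wrong from the start, and offers no fallback is flagged as unvalidated, misconstrained, and overconstrained at once.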

5. Discussion and Implications

6. Threats to Validity

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RTD | Requirements Technical Debt |
| sUAS | small Uncrewed Aerial System |
| RTDQM | Requirements Technical Debt Quantification Model |
| DR | Derived Requirement |
| RE | Requirements Engineering |
| CPS | Cyber-Physical Systems |
| EKF | Extended Kalman Filter |
| GPS | Global Positioning System |
| IMU | Inertial Measurement Unit |
| IoT | Internet of Things |

References

1. McConnell, S. Managing Technical Debt; Technical Report; Construx Software Builders: Bellevue, WA, USA, 2008.

2. Brown, N.; Cai, Y.; Guo, Y.; Kazman, R.; Kim, M.; Kruchten, P.; Lim, E.; MacCormack, A.; Nord, R.; Ozkaya, I.; et al. Managing technical debt in software-reliant systems. In Proceedings of the FSE/SDP Workshop on Future of Software Engineering Research (FoSER ’10), Santa Fe, NM, USA, 7–8 November 2010; pp. 47–52.

3. Kruchten, P.; Nord, R.L.; Ozkaya, I. Technical Debt: From Metaphor to Theory and Practice. IEEE Softw. 2012, 29, 18–21.

4. Ernst, N.A. On the role of requirements in understanding and managing technical debt. In Proceedings of the 2012 Third International Workshop on Managing Technical Debt (MTD), Zurich, Switzerland, 5 June 2012; pp. 61–64.

5. Abad, Z.S.H.; Ruhe, G. Using real options to manage technical debt in requirements engineering. In Proceedings of the 2015 IEEE 23rd International Requirements Engineering Conference (RE), Ottawa, ON, Canada, 24–28 August 2015; pp. 230–235.

6. Melo, A.; Fagundes, R.; Lenarduzzi, V.; Santos, W.B. Identification and measurement of Requirements Technical Debt in software development: A systematic literature review. J. Syst. Softw. 2022, 194, 111483.

7. Perera, J.; Tempero, E.; Tu, Y.C.; Blincoe, K. Modelling the quantification of requirements technical debt. Requir. Eng. 2024, 29, 421–458.

8. Robiolo, G.; Scott, E.; Matalonga, S.; Felderer, M. Technical debt and waste in non-functional requirements documentation: An exploratory study. In Proceedings of the Product-Focused Software Process Improvement: 20th International Conference, PROFES 2019, Barcelona, Spain, 27–29 November 2019; Springer: Cham, Switzerland, 2019; pp. 220–235.

9. Alenazi, M. Requirements Technical Debt Through the Lens of Environment Assumptions. In Proceedings of the 2025 IEEE/ACM International Conference on Technical Debt (TechDebt), Ottawa, ON, Canada, 27–28 April 2025; pp. 40–46.

10. Jackson, M. The Meaning of Requirements. Ann. Softw. Eng. 1997, 3, 5–21.

11. Yang, C.; Liang, P.; Avgeriou, P. Assumptions and their management in software development: A systematic mapping study. Inf. Softw. Technol. 2018, 94, 82–110.

12. Lann, G.L. An analysis of the Ariane 5 flight 501 failure-a system engineering perspective. In Proceedings of the 1997 Workshop on Engineering of Computer-Based Systems (ECBS ’97), Monterey, CA, USA, 24–28 March 1997; pp. 339–346.

13. Knight, J. Safety critical systems: Challenges and directions. In Proceedings of the 24th International Conference on Software Engineering (ICSE 2002), Orlando, FL, USA, 25 May 2002; pp. 547–550.

14. Steingruebl, A.; Peterson, G. Software Assumptions Lead to Preventable Errors. IEEE Secur. Priv. 2009, 7, 84–87.

15. Samin, H.; Walton, D.; Bencomo, N. Surprise! Surprise! Learn and Adapt. In Proceedings of the 24th International Conference on Autonomous Agents and Multiagent Systems (AAMAS ’25), Detroit, MI, USA, 19–23 May 2025; pp. 1821–1829.

16. Sawyer, P.; Bencomo, N.; Whittle, J.; Letier, E.; Finkelstein, A. Requirements-Aware Systems: A Research Agenda for RE for Self-adaptive Systems. In Proceedings of the RE 2010, 18th IEEE International Requirements Engineering Conference, Sydney, NSW, Australia, 27 September–1 October 2010; pp. 95–103.

17. Lewis, G.A.; Mahatham, T.; Wrage, L. Assumptions Management in Software Development; Technical Report; Software Engineering Institute, Carnegie Mellon University: Pittsburgh, PA, USA, 2004.

18. Yang, C.; Liang, P.; Avgeriou, P.; Eliasson, U.; Heldal, R.; Pelliccione, P. Architectural Assumptions and Their Management in Industry—An Exploratory Study. In Proceedings of the Software Architecture—11th European Conference (ECSA 2017), Canterbury, UK, 11–15 September 2017; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10475, pp. 191–207.

19. Ali, S.; Lu, H.; Wang, S.; Yue, T.; Zhang, M. Uncertainty-Wise Testing of Cyber-Physical Systems. Adv. Comput. 2017, 106, 23–94.

20. Zhang, M.; Selic, B.; Ali, S.; Yue, T.; Okariz, O.; Norgren, R. Understanding Uncertainty in Cyber-Physical Systems: A Conceptual Model. In Proceedings of the European Conference on Modelling Foundations and Applications (ECMFA), Vienna, Austria, 6–7 July 2016; pp. 247–264.

21. Liu, C.; Zhang, W.; Zhao, H.; Jin, Z. Analyzing Early Requirements of Cyber-physical Systems through Structure and Goal Modeling. In Proceedings of the 20th Asia-Pacific Software Engineering Conference (APSEC 2013), Bangkok, Thailand, 2–5 December 2013; Volume 1, pp. 140–147.

22. Jackson, M. Problems and requirements (software development). In Proceedings of the Second IEEE International Symposium on Requirements Engineering, York, UK, 27–29 March 1995; pp. 2–9.

23. Peng, Z.; Rathod, P.; Niu, N.; Bhowmik, T.; Liu, H.; Shi, L.; Jin, Z. Testing software’s changing features with environment-driven abstraction identification. Requir. Eng. 2022, 27, 405–427.

24. Ghezzi, C.; Sharifloo, A.M. Quantitative verification of non-functional requirements with uncertainty. In Proceedings of the Sixth International Conference on Dependability and Computer Systems DepCoS-RELCOMEX 2011, Wroclaw, Poland, 27 June–11 July 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 47–62.

25. Bloem, R.; Chockler, H.; Ebrahimi, M.; Strichman, O. Specifiable robustness in reactive synthesis. Form. Methods Syst. Des. 2022, 60, 259–276.

26. Mohammadinejad, S.; Deshmukh, J.V.; Puranic, A.G. Mining environment assumptions for cyber-physical system models. In Proceedings of the 2020 ACM/IEEE 11th International Conference on Cyber-Physical Systems (ICCPS), Sydney, NSW, Australia, 21–25 April 2020; pp. 87–97.

27. Fifarek, A.W.; Wagner, L.G.; Hoffman, J.A.; Rodes, B.D.; Aiello, M.A.; Davis, J.A. SpeAR v2.0: Formalized past LTL specification and analysis of requirements. In Proceedings of the NASA Formal Methods: 9th International Symposium (NFM 2017), Moffett Field, CA, USA, 16–18 May 2017; Springer: Cham, Switzerland, 2017; pp. 420–426.

28. Yang, X.; Chen, X.; Wang, J. A Model Checking Based Software Requirements Specification Approach for Embedded Systems. In Proceedings of the 2023 IEEE 31st International Requirements Engineering Conference Workshops (REW), Hannover, Germany, 4–5 September 2023; pp. 184–191.

29. van Lamsweerde, A.; Letier, E. Integrating Obstacles in Goal-Driven Requirements Engineering. In Proceedings of the 1998 International Conference on Software Engineering (ICSE 98), Kyoto, Japan, 19–25 April 1998; pp. 53–62.

30. Alrajeh, D.; Kramer, J.; van Lamsweerde, A.; Russo, A.; Uchitel, S. Generating obstacle conditions for requirements completeness. In Proceedings of the 34th International Conference on Software Engineering (ICSE 2012), Zurich, Switzerland, 2–9 June 2012; pp. 705–715.

31. Alrajeh, D.; Cailliau, A.; van Lamsweerde, A. Adapting requirements models to varying environments. In Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering (ICSE ’20), Seoul, Republic of Korea, 5–11 October 2020; pp. 50–61.

32. Sturmer, S.; Niu, N.; Bhowmik, T.; Savolainen, J. Eliciting environmental opposites for requirements-based testing. In Proceedings of the 2022 IEEE 30th International Requirements Engineering Conference Workshops (REW), Melbourne, VIC, Australia, 15–19 August 2022; pp. 10–13.

33. Amin, M.R.; Bhowmik, T.; Niu, N.; Savolainen, J. Environmental Variations of Software Features: A Logical Test Cases’ Perspective. In Proceedings of the 2023 IEEE 31st International Requirements Engineering Conference Workshops (REW), Hannover, Germany, 4–5 September 2023; pp. 192–198.

34. Granadeno, P.A.A.; Bernal, A.M.R.; Al Islam, M.N.; Cleland-Huang, J. An Environmentally Complex Requirement for Safe Separation Distance Between UAVs. In Proceedings of the 2024 IEEE 32nd International Requirements Engineering Conference Workshops (REW), Reykjavik, Iceland, 24–25 June 2024; pp. 166–175.

35. van Lamsweerde, A. Requirements Engineering: From System Goals to UML Models to Software Specifications, 1st ed.; Wiley Publishing: Hoboken, NJ, USA, 2009.

36. Cunningham, W. The WyCash portfolio management system. ACM SIGPLAN OOPS Messenger 1992, 4, 29–30.

37. Rios, N.; Spínola, R.O.; Mendonça, M.; Seaman, C. The most common causes and effects of technical debt: First results from a global family of industrial surveys. In Proceedings of the 12th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, Oulu, Finland, 11–12 October 2018; pp. 1–10.

38. Perera, J.; Tempero, E.; Tu, Y.C.; Blincoe, K. Quantifying requirements technical debt: A systematic mapping study and a conceptual model. In Proceedings of the 2023 IEEE 31st International Requirements Engineering Conference (RE), Hannover, Germany, 4–8 September 2023; pp. 123–133.

39. Fowler, M. Technical Debt Quadrant. 2009. Available online: https://martinfowler.com/bliki/TechnicalDebtQuadrant.html (accessed on 17 July 2025).

40. Lenarduzzi, V.; Fucci, D. Towards a holistic definition of requirements debt. In Proceedings of the 2019 ACM/IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM), Porto de Galinhas, Brazil, 19–20 September 2019; pp. 1–5.

41. Sommerville, I. Software Engineering, 9th ed.; Pearson Education Inc.: London, UK, 2011.

42. AACE. RECALL: Medtronic MiniMed Insulin Pumps Recalled for Incorrect Insulin Dosing. 2020. Available online: https://pro.aace.com/recent-news-and-updates/recall-medtronic-minimed-insulin-pumps-recalled-incorrect-insulin-dosing (accessed on 17 July 2025).

43. Tirumala, A.S. An Assumptions Management Framework for Systems Software. Ph.D. Dissertation, University of Illinois at Urbana-Champaign, Champaign, IL, USA, 2006.

44. Kohli, P.; Chadha, A. Enabling pedestrian safety using computer vision techniques: A case study of the 2018 Uber Inc. self-driving car crash. In Proceedings of the Future of Information and Communication Conference, San Francisco, CA, USA, 14–15 March 2019; Springer: Cham, Switzerland, 2019; pp. 261–279.

45. Leveson, N.G.; Turner, C.S. An investigation of the Therac-25 accidents. Computer 1993, 26, 18–41.

| Aspect | Requirement Phase | Implementation Phase |
|---|---|---|
| Origin | Incomplete, vague, or misunderstood requirements; missing or incorrect assumptions; neglecting user needs; failure to capture user feedback. | Poor coding practices; shortcuts; lack of refactoring, testing, or architecture planning; outdated dependencies; insufficient modularization. |
| Impact on Development | Incomplete functionality, safety risks, rework, misalignment with business goals, or large-scale redesigns. | Poor code quality, increased maintenance, localized bugs, and higher refactoring costs. |
| Visibility | Often unnoticed until late-stage testing or post-deployment; typically emerges during integration. | Usually detected during late development, testing, or feature addition. |
| Cost of Resolution | High, as it may require redesign, extensive rework, or addressing regulatory compliance. | Moderate, as it is typically resolved through targeted refactoring or added tests. |
| Risk Level | Strategic risk (e.g., functional failure, safety, or compliance issues). | Technical or operational risk (e.g., performance degradation, maintainability issues). |

| Requirement | Description | Environmental Assumptions | Type |
|---|---|---|---|
| DR1: Geolocation Accuracy | Drones must determine their geolocation and neighboring drones’ positions with at least 99% confidence within a 3D region. | | Adjacent System |
| DR2: Stopping Distance Without Wind | Each drone must compute its maximum stopping distance under non-windy conditions, considering its velocity. | | Operational environment (A8), Adjacent System (A9, A10) |
| DR3: Impact of Wind on Stopping Distance | Drones must account for wind effects when computing stopping distance. | | Process (A11), Physical environment (A12, A13), Process (A14) |
| DR4: Projected Distance During Communication Delays | Drones must estimate the distance they and their neighbors will travel between status updates. | | Operational environment |
| DR5: Maintaining Safe Minimum Separation | Drones must maintain a safe minimum separation distance at all times. | | Operational environment |
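The derived requirements in the table above can be read as building on one another: DR1 through DR4 each contribute a distance term that the separation in DR5 must cover. The Python sketch below shows one simplified way to compose them, assuming constant braking deceleration, wind modelled only as a reduction of the usable deceleration, and two drones closing head-on so that every term adds up. These modelling choices, the `DroneState` fields, and the function names are hypothetical and are not the formulation used by Granadeno et al. [34].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DroneState:
    speed: float            # ground speed toward the neighbor, m/s
    max_decel: float        # maximum braking deceleration in still air, m/s^2
    reaction_time: float    # delay before braking begins, s
    geo_uncertainty: float  # radius of the position-uncertainty region (DR1), m


def stopping_distance(d: DroneState, wind_decel: float = 0.0) -> float:
    """DR2/DR3: reaction distance plus constant-deceleration braking distance.

    wind_decel is a hypothetical tailwind term that reduces the usable
    deceleration; 0.0 gives the no-wind case (DR2).
    """
    usable = d.max_decel - wind_decel
    if usable <= 0:
        raise ValueError("wind exceeds braking capability; stopping distance is unbounded")
    return d.speed * d.reaction_time + d.speed ** 2 / (2 * usable)


def projected_distance(d: DroneState, update_interval: float, latency: float) -> float:
    """DR4: distance travelled before the next status update is received."""
    return d.speed * (update_interval + latency)


def min_separation(own: DroneState, other: DroneState, *, wind_decel: float,
                   update_interval: float, latency: float, buffer: float = 0.0) -> float:
    """DR5: worst case for two drones closing head-on, so all terms add up."""
    total = buffer
    for d in (own, other):
        total += d.geo_uncertainty
        total += stopping_distance(d, wind_decel)
        total += projected_distance(d, update_interval, latency)
    return total


if __name__ == "__main__":
    drone = DroneState(speed=10.0, max_decel=5.0, reaction_time=0.2, geo_uncertainty=2.0)
    assumed = min_separation(drone, drone, wind_decel=0.0, update_interval=1.0, latency=0.2)
    actual = min_separation(drone, drone, wind_decel=1.0, update_interval=1.0, latency=0.4)
    print(f"separation under stated assumptions: {assumed:.1f} m")
    print(f"separation with wind and higher latency: {actual:.1f} m")
```

Re-running `min_separation` with a larger `latency` or a nonzero `wind_decel` shows how an outdated latency assumption or an omitted wind assumption leaves a specified separation too small, which is exactly the kind of hidden shortfall the RTD types describe.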

| Assumption-Driven RTD | Defining Condition | Distinguishing Feature | Example from Case Study |
|---|---|---|---|
| Unvalidated Assumption Debt | The assumption is stated, but not verified or tested under real-world or expected conditions. | The assumption is explicit, but its correctness is uncertain. | Assuming GPS accuracy is always within 2 m without field validation. |
| Incomplete Assumption Debt | Key environmental factors are omitted entirely from the requirement specification. | The assumption is absent or only partially captured. | Not specifying wind variability in the separation distance requirements. |
| Outdated Assumption Debt | The assumption was once valid but has changed due to external/system evolution. | Reflects temporal decay or shifts in operational conditions. | Assuming reliable cloud connectivity that degrades as deployment context changes. |
| Incorrect Assumption Debt | The assumption is explicitly stated but factually wrong. | The assumption is invalid in context or leads to faulty behavior. | Assuming fixed communication latency when network delays fluctuate. |
| Overconstrained Assumption Debt | The system lacks flexibility to adapt when assumptions break or vary. | No alternative or adaptive mechanism is provided. | Assuming static geofence boundaries, with no support for dynamic airspace constraints. |
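In the table above, unvalidated assumption debt differs from incorrect or outdated assumption debt mainly in whether an explicit assumption has ever been checked against field data. One lightweight way to surface this is to monitor each stated assumption at run time. The Python sketch below is hypothetical: `MonitoredAssumption`, `check`, the 2 m bound borrowed from the GPS example, and the 1% tolerance (chosen to mirror the 99% confidence in DR1) are illustrative choices, not mechanisms described in the case study.

```python
from dataclasses import dataclass, field


@dataclass
class MonitoredAssumption:
    """A stated environmental assumption with an upper bound checked against field data."""
    name: str
    limit: float                                   # assumed bound, e.g. 2.0 m GPS error
    observations: list[float] = field(default_factory=list)

    def record(self, value: float) -> None:
        """Store one field measurement of the environmental quantity."""
        self.observations.append(value)


def check(a: MonitoredAssumption, allowed_violation_rate: float = 0.01) -> str:
    """Classify an assumption as 'unvalidated', 'holds' or 'violated'.

    The violation rate is a simple point estimate over all recorded
    observations, not a statistical confidence test.
    """
    if not a.observations:
        return "unvalidated"  # stated but never checked against field data
    exceed = sum(1 for v in a.observations if v > a.limit)
    rate = exceed / len(a.observations)
    return "holds" if rate <= allowed_violation_rate else "violated"


if __name__ == "__main__":
    gps = MonitoredAssumption("GPS horizontal error (m)", limit=2.0)
    print(check(gps))
    for err in [0.8, 1.4, 1.9, 2.6]:
        gps.record(err)
    print(check(gps))
```

Calling `check` before any data are recorded reports the assumption as unvalidated; once observations accumulate, a violation rate above the tolerance is one signal that the assumption is incorrect or has become outdated. Telling those two apart would additionally require comparing against data gathered when the requirement was written.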


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alenazi, M. The Role of Environmental Assumptions in Shaping Requirements Technical Debt. Appl. Sci. 2025, 15, 8028. https://doi.org/10.3390/app15148028
