Abstract

Cross-lingual summarization (XLS) involves generating a summary in one language from an article written in another language. XLS presents substantial hurdles due to the complex linguistic structures across languages and the challenges in transferring knowledge effectively between them. Although Large Language Models (LLMs) have demonstrated capabilities in cross-lingual tasks, the integration of retrieval-based in-context learning remains largely unexplored, despite its potential to overcome these linguistic barriers by providing relevant examples. In this paper, we introduce Multilingual Retrieval-based Cross-lingual Summarization (MuRXLS), a robust framework that dynamically selects the most relevant summarization examples for each article using multilingual retrieval. Our method leverages multilingual embedding models to identify contextually appropriate demonstrations for various LLMs. Experiments across twelve XLS setups (six language pairs in both directions) reveal a notable directional asymmetry: our approach significantly outperforms baselines in many-to-one (X→English) scenarios, while showing comparable performance in one-to-many (English→X) directions. We also observe a strong correlation between article-example semantic similarity and summarization quality, demonstrating that intelligently selecting contextually relevant examples substantially improves XLS performance by providing LLMs with more informative demonstrations.

1. Introduction

Cross-lingual summarization (XLS) requires a system to condense a source-language document while simultaneously producing the summary in another language. This task combines the challenges of both summarization and translation, demanding systems that can effectively extract key information while navigating linguistic differences between languages. Early approaches decomposed the problem into two pipelines: translate-then-summarize and summarize-then-translate [1,2]. The former converts the source document into the target language before summarizing it; the latter distills the content in the source language and then translates the summary. While conceptually straightforward, both pipelines suffered from fundamental limitations: error propagation between processing stages [3], inefficient resource utilization [4], and an inability to jointly optimize for the summarization and translation objectives [5]. In practice, these limitations surfaced as verbose translations or as information lost during condensation. These constraints prompted researchers to explore integrated solutions that handle both tasks simultaneously.

The paradigm shift toward end-to-end architectures gained momentum with advances in multilingual representation learning [6]. Neural approaches began to directly generate cross-lingual summaries without intermediate steps, demonstrating that unified architectures could outperform pipeline approaches while requiring fewer computational resources. Building on this foundation, researchers introduced dual attention mechanisms specifically designed to align cross-lingual content during the summarization process [7]. Despite these advances, significant challenges remain for low-resource language pairs where parallel summarization data is scarce.

More recently, Large Language Models (LLMs) have opened new possibilities for addressing these challenges, as they have demonstrated promising capabilities on cross-lingual tasks [8,9]. These studies leverage multilingual knowledge to perform zero-shot and few-shot learning across various NLP tasks, showing that few-shot in-context learning can significantly enhance performance without task-specific fine-tuning [10,11,12]. Building on this potential, research has increasingly applied LLMs to XLS. Initial evaluations of GPT-3.5’s zero-shot capabilities across several language pairs found results that approached supervised baselines for some high-resource languages. Previous work has also shown that few-shot in-context learning can significantly improve the XLS performance of LLMs, particularly in low-resource settings. However, these efforts have focused on basic example selection rather than on the quality and relevance of demonstrations for cross-lingual contexts. Constrained by context length, earlier few-shot approaches for XLS typically sampled examples at random and favored those with the shortest token counts so that they fit within the prompt, which limited the semantic appropriateness of the selected demonstrations.

Despite these constraints, the emergence of powerful open-source models such as Llama-3-70B-Instruct and Mistral-7B-Instruct [13,14] has opened further possibilities. These models have demonstrated impressive results across various multilingual NLP tasks. Their performance on cross-lingual understanding and generation suggests significant potential for enhancing XLS, especially with respect to context length and languages with limited resources [15,16]. This potential has led to increased interest in retrieval-based approaches that can better leverage the capabilities of LLMs. Few-shot in-context learning has emerged as a powerful paradigm for leveraging LLMs in various NLP tasks without task-specific fine-tuning [17]. The approach gives the model a few examples of the task before asking it to perform the same task on a new input. Its effectiveness depends heavily on the quality and relevance of those examples, especially in cross-lingual contexts where linguistic nuances vary significantly. Recognizing this dependency, retrieval-augmented in-context learning methods have gained prominence [18]. These methods dynamically select relevant examples from a larger pool to construct the prompt, often yielding substantial performance improvements over fixed or randomly selected examples [19].

Building upon this concept, recent research has explored sophisticated retrieval techniques specifically designed to identify the most informative demonstrations [20]. The selection of appropriate examples can dramatically impact performance, particularly within the cross-lingual domain, where cross-lingual retrieval approaches have employed dual retrieval strategies to source effective demonstrations from high-resource languages to bolster performance on low-resource language tasks [21]. Such cross-lingual transfer is fundamental to improving XLS, aligning closely with the objectives of leveraging knowledge from well-resourced languages. Despite the promise of these techniques, their specific application and optimization for the nuances of XLS remain underexplored, as many existing retrieval approaches prioritize fitting examples within limited prompt lengths, sometimes resorting to shorter or randomly sampled demonstrations rather than optimizing for semantic relevance crucial for cross-lingual transfer.

This gap in the literature highlights a critical opportunity for improvement in cross-lingual summarization systems. As the field moves toward larger and more capable language models, the potential impact of intelligently selected examples becomes increasingly important to explore. Current explorations of LLMs for XLS have primarily focused on model selection and demonstration quantity rather than quality, leaving the critical question of demonstration selection—specifically, how to identify the most informative examples for a given test article—largely unexplored.

To address these limitations, we propose MuRXLS (Multilingual Retrieval-based Cross-lingual Summarization), an enhanced framework for XLS that leverages multilingual retrieval techniques to dynamically select the most relevant examples for each query article. Existing few-shot approaches for XLS, constrained by context length, select examples by random sampling or shortest token count. Our approach instead uses multilingual embedding models to compute article embeddings and retrieves the closest matching examples by semantic similarity. Because similarity is computed in a shared multilingual space, this identifies truly analogous examples across linguistic boundaries. Each article is therefore paired with contextually appropriate demonstrations that better guide the LLM in generating accurate cross-lingual summaries, substantially enhancing the effectiveness of in-context learning for this complex task.

Our comprehensive evaluation demonstrates the effectiveness of this approach across multiple dimensions. We evaluate our framework with several LLMs (Mistral-7B-Instruct-v0.3, Llama-3-70B-Instruct, GPT-3.5-turbo, and GPT-4o). The evaluation covers twelve XLS tasks formed by pairing six low-resource languages (Thai, Gujarati, Marathi, Pashto, Burmese, and Sinhala) with English in both directions. Our evaluation reveals an interesting asymmetry: the retrieval-based approach significantly outperforms random example selection in many-to-one (X→English) scenarios but shows comparable performance in one-to-many (English→X) settings. This directional difference provides valuable insights into the dynamics of cross-lingual transfer and the impact of example selection across different language directions.

A key innovation of our work lies in examining how the effectiveness of retrieval-based in-context learning varies with summarization direction. We analyze the relationship between example similarity and summarization quality. This reveals distinct patterns for X→English versus English→X and provides insight into how retrieval-based methods enhance cross-lingual transfer. Our findings demonstrate that intelligently selecting contextually relevant examples can substantially improve XLS performance by providing LLMs with more informative demonstrations, particularly when summarizing from low-resource languages into English.

Table 1 summarizes the key differences between our approach and existing cross-lingual summarization methods, highlighting the unique advantages of MuRXLS in terms of example selection strategy and cross-lingual effectiveness.

Table 1.

Comparison of cross-lingual summarization approaches.

The main contributions of our work are as follows:

- We demonstrate significant performance improvements across twelve language pairs, with particularly strong gains in X→English summarization.

- We identify a notable directional asymmetry where retrieval-based selection benefits high-resource target languages substantially more than low-resource targets.

- We establish a strong correlation between example similarity and summarization quality, providing statistical evidence for the efficacy of our approach.

- We verify the effectiveness of our method across multiple LLMs of varying sizes and capabilities.

2. Methods

2.1. Dataset and Language Selection

2.1.1. Dataset Selection Rationale

We utilize the CrossSum dataset [22] as our primary data source for cross-lingual summarization experiments. CrossSum was selected based on several methodological criteria. First, it provides comprehensive language coverage with parallel article-summary pairs for over 1500 language pairs, enabling systematic evaluation across diverse linguistic contexts. Second, the dataset employs rigorous quality control measures, including human verification of cross-lingual alignments and content preservation validation. Third, CrossSum provides established train/validation/test splits and evaluation protocols, ensuring reproducibility and comparability with existing research. Finally, the dataset specifically addresses low-resource language scenarios, directly aligning with our research objectives.

2.1.2. Language Selection

Six low-resource languages were systematically selected based on multiple methodological considerations to ensure robust evaluation across different language families and typological structures.

For resource availability assessment, all selected languages meet low-resource criteria while ensuring sufficient data for meaningful evaluation. Each language has approximately 1000 article-summary pairs in the training corpus, which provides adequate statistical power for evaluation while maintaining low-resource characteristics. These languages have limited representation in multilingual LLM training data compared to high-resource languages and insufficient data for robust fine-tuning of large models, which typically requires over 10,000 examples.

Importantly, these six languages represent the optimal subset from CrossSum that balances data adequacy with low-resource characteristics. Other languages in the dataset either had significantly fewer pairs (typically < 500), making them unsuitable for reliable statistical evaluation, or exceeded low-resource thresholds. This constraint-based selection ensures that our evaluation is both statistically valid and representative of genuine low-resource scenarios.

Data quality validation was applied using specific metrics: minimum article length of 100 tokens, summary length ratio between 0.1–0.4 of source article, language detection confidence above 0.95 for both source and target, and content preservation score above 0.7 based on cross-lingual semantic similarity. The selection of these specific language pairs is methodologically crucial, as linguistic distance directly impacts embedding space alignment and retrieval effectiveness in our similarity calculation framework.
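The four quality criteria above can be expressed as a single predicate. The following is a minimal Python sketch, not the authors' filtering code. It assumes that the language-detection confidences and the content preservation score have already been computed upstream. The whitespace-based `count_tokens` helper is a hypothetical placeholder for a real tokenizer.

```python
from dataclasses import dataclass

# Thresholds taken from the criteria listed above.
MIN_ARTICLE_TOKENS = 100
MIN_RATIO, MAX_RATIO = 0.1, 0.4
MIN_LANG_CONF = 0.95
MIN_PRESERVATION = 0.7


@dataclass
class Pair:
    article: str
    summary: str
    src_lang_conf: float  # language-ID confidence for the article (computed upstream)
    tgt_lang_conf: float  # language-ID confidence for the summary (computed upstream)
    preservation: float   # cross-lingual semantic similarity, article vs. summary


def count_tokens(text: str) -> int:
    # Hypothetical stand-in: whitespace tokens. A real pipeline would use a
    # subword tokenizer, since Thai and Burmese do not reliably separate words with spaces.
    return len(text.split())


def passes_quality_filter(p: Pair) -> bool:
    n_article = count_tokens(p.article)
    if n_article < MIN_ARTICLE_TOKENS:
        return False
    ratio = count_tokens(p.summary) / n_article
    return (
        MIN_RATIO <= ratio <= MAX_RATIO
        and p.src_lang_conf > MIN_LANG_CONF
        and p.tgt_lang_conf > MIN_LANG_CONF
        and p.preservation > MIN_PRESERVATION
    )
```

Checking the cheap length criteria first avoids consulting the more expensive language and similarity scores for pairs that would be rejected anyway.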

For linguistic diversity, we selected languages from different families and branches. Gujarati, Marathi, and Sinhala come from the Indo-Aryan branch of Indo-European, with Sinhala written in its own unique script. Pashto comes from the Iranian branch of Indo-European, written in an Arabic-based script and with different morphological patterns. Burmese comes from the Sino-Tibetan family, and Thai from the Kra-Dai (Tai-Kadai) family; both have distinct scripts and tonal systems. This diversity ensures comprehensive testing of our framework across varied linguistic structures.

Regarding typological distance from English, we chose languages with varying degrees of structural difference to assess cross-lingual transfer robustness. The selected languages use diverse script systems, with no Latin script representation: Gujarati script (Gujarati), Devanagari (Marathi), Thai script, Burmese script, Arabic-based script (Pashto), and Sinhala script. Thai shares English's SVO order, while Gujarati, Marathi, Pashto, Burmese, and Sinhala are predominantly SOV. Morphological complexity ranges from agglutinative to inflectional to isolating tendencies.

2.2. BGE-M3 Multilingual Embedding

2.2.1. Model Architecture and Technical Specifications

We employ BGE-M3 (BAAI General Embedding Model) [23] for computing dense vector representations across languages. BGE-M3 is a transformer-based encoder with cross-lingual alignment capabilities that produces 1024-dimensional dense vectors. The model was trained on over 100 languages using cross-lingual contrastive learning with a maximum context window of 8192 tokens. The alignment methodology combines self-knowledge distillation with multilingual contrastive learning to ensure effective cross-lingual representation.

The training methodology employs several alignment techniques. Contrastive learning uses positive pairs (translations) versus negative pairs (random texts) to learn cross-lingual similarities. Knowledge distillation applies a teacher-student framework for cross-lingual knowledge transfer. Multi-task learning simultaneously optimizes for multiple cross-lingual tasks to improve overall representation quality.
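To make the contrastive objective concrete, here is a minimal NumPy sketch of a generic in-batch InfoNCE loss over translation pairs. It illustrates the positive-versus-negative idea described above. It is not BGE-M3's actual training objective, and the temperature of 0.05 is an illustrative assumption.

```python
import numpy as np


def info_nce_loss(src: np.ndarray, tgt: np.ndarray, temperature: float = 0.05) -> float:
    """In-batch contrastive loss: row i of `src` and row i of `tgt` form a
    translation pair (positive); every other row of `tgt` acts as a negative."""
    # L2-normalize so that dot products are cosine similarities.
    src = src / np.linalg.norm(src, axis=1, keepdims=True)
    tgt = tgt / np.linalg.norm(tgt, axis=1, keepdims=True)
    logits = src @ tgt.T / temperature           # (batch, batch) similarity matrix
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability for exp
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with the diagonal (the true translation) as the target class.
    return float(-np.mean(np.diag(log_probs)))
```

Minimizing this loss pulls each translation pair together and pushes apart the other texts in the batch. This is the property that later lets semantically similar articles in different languages land near each other.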

2.2.2. Embedding Computation

For each article x, the embedding process follows a systematic procedure. First, tokenization converts the article into individual tokens. Second, contextual encoding applies BGE-M3 to produce contextualized representations. Third, mean pooling across token representations creates a single article-level embedding. Finally, L2 normalization ensures unit vector length for cosine similarity computation.

The mathematical formulation can be expressed as:

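$$\mathbf{e}_x = \frac{\operatorname{MeanPool}\big(\operatorname{BGE\text{-}M3}(x)\big)}{\big\lVert \operatorname{MeanPool}\big(\operatorname{BGE\text{-}M3}(x)\big) \big\rVert_2}$$

where $\mathbf{e}_x$ represents the final article embedding in $\mathbb{R}^{1024}$, $\operatorname{MeanPool}(\cdot)$ computes the element-wise mean across token dimensions, and division by the L2 norm $\lVert \cdot \rVert_2$ ensures unit vector length for similarity calculations.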
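The following is a minimal NumPy sketch of the pooling and normalization steps. It assumes the encoder's per-token hidden states are already available; the BGE-M3 forward pass is not shown. Note that the reference BGE-M3 release derives its dense vectors from the [CLS] token's hidden state, whereas this sketch follows the mean pooling described in the text.

```python
import numpy as np


def embed_article(token_states: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Mean-pool contextual token vectors into one unit-length article vector.

    token_states:   (seq_len, 1024) encoder outputs for one article (assumed given)
    attention_mask: (seq_len,) with 1 for real tokens and 0 for padding
    """
    mask = attention_mask.astype(token_states.dtype)[:, None]
    # Element-wise mean over real tokens only, so padding does not dilute the average.
    pooled = (token_states * mask).sum(axis=0) / max(mask.sum(), 1.0)
    # L2 normalization: afterwards, cosine similarity is a plain dot product.
    return pooled / np.linalg.norm(pooled)
```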

2.2.3. Similarity Computation

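Cosine similarity is calculated for the query article embedding $\mathbf{e}_q$ and a candidate example embedding $\mathbf{e}_c$ using the following formula:

$$\operatorname{sim}(\mathbf{e}_q, \mathbf{e}_c) = \frac{\mathbf{e}_q \cdot \mathbf{e}_c}{\lVert \mathbf{e}_q \rVert_2 \, \lVert \mathbf{e}_c \rVert_2}$$

This metric has several important mathematical properties. Its range is $[-1, 1]$, where 1 indicates perfect similarity. It is symmetric, $\operatorname{sim}(\mathbf{e}_q, \mathbf{e}_c) = \operatorname{sim}(\mathbf{e}_c, \mathbf{e}_q)$. Its computational complexity is $O(d)$, where $d = 1024$. Because the embeddings are L2-normalized, the denominator equals 1 and the similarity reduces to the dot product $\mathbf{e}_q \cdot \mathbf{e}_c$.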

We validate embedding quality through cross-lingual validation methods. Translation pairs should achieve similarity scores above 0.8, same-language articles should cluster together in the embedding space, and semantic similarity should transcend language boundaries to enable effective cross-lingual retrieval.
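The following sketch implements the cosine formula directly and applies the translation-pair check (similarity above 0.8) described above. The helper name `translation_pair_check` and the mismatched-pair baseline it reports are illustrative assumptions, not part of the described protocol.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    # O(d) dot product divided by the two norms; the result lies in [-1, 1].
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def translation_pair_check(src: np.ndarray, tgt: np.ndarray, threshold: float = 0.8):
    """src[i] and tgt[i] embed an article and its translation.

    Returns the fraction of aligned pairs scoring above `threshold` and, as a
    rough baseline, the mean similarity of mismatched pairs (i != j).
    """
    sims = np.array([cosine_similarity(s, t) for s, t in zip(src, tgt)])
    n = len(src)
    off_diag = [cosine_similarity(src[i], tgt[j])
                for i in range(n) for j in range(n) if i != j]
    return float((sims > threshold).mean()), float(np.mean(off_diag))
```

A well-aligned encoder should yield a fraction close to 1 together with a mismatched-pair baseline well below the threshold.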

2.3. Evaluation Metrics

2.3.1. Primary Evaluation Metric: ROUGE-L

ROUGE-L (Longest Common Subsequence) [24] was selected as our primary evaluation metric based on several considerations. It demonstrates cross-lingual suitability by being less sensitive to exact lexical matching, which is crucial for cross-lingual evaluation where direct word overlap may be limited. The metric preserves sequence order information, capturing content flow and organization. ROUGE-L represents an established standard widely adopted in summarization research, ensuring comparability with existing work. Additionally, it provides balanced precision-recall assessment, considering both content coverage and conciseness.

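The mathematical formulation for ROUGE-L is:

$$R_{lcs} = \frac{\operatorname{LCS}(X, Y)}{m}, \qquad P_{lcs} = \frac{\operatorname{LCS}(X, Y)}{n}, \qquad F_{lcs} = \frac{(1 + \beta^2)\, R_{lcs} P_{lcs}}{R_{lcs} + \beta^2 P_{lcs}}$$

where $R_{lcs}$ is recall and $P_{lcs}$ is precision. $X$ is the reference summary of length $m$, and $Y$ is the generated summary of length $n$. Setting $\beta = 1$ gives equal weighting of precision and recall, and $\operatorname{LCS}(X, Y)$ represents the length of the longest common subsequence.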
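ROUGE-L reduces to a longest-common-subsequence computation. The sketch below is a minimal pure-Python version of the formula above. It takes pre-tokenized lists, so how text is tokenized is left as an assumption; this matters especially for scripts such as Thai and Burmese that do not reliably mark word boundaries with spaces. The values in Section 3 appear to use a 0–100 scale, i.e., this score multiplied by 100.

```python
def lcs_length(x: list[str], y: list[str]) -> int:
    # Classic O(m * n) dynamic program, keeping only one row at a time.
    prev = [0] * (len(y) + 1)
    for xi in x:
        curr = [0]
        for j, yj in enumerate(y, start=1):
            curr.append(prev[j - 1] + 1 if xi == yj else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(reference: list[str], candidate: list[str], beta: float = 1.0) -> float:
    lcs = lcs_length(reference, candidate)
    if lcs == 0:
        return 0.0
    r = lcs / len(reference)  # R_lcs: recall against the reference (length m)
    p = lcs / len(candidate)  # P_lcs: precision against the candidate (length n)
    return (1 + beta**2) * r * p / (r + beta**2 * p)
```

For example, the reference "police killed the gunman" and the candidate "police kill the gunman" share the subsequence "police the gunman", so recall, precision, and F-score are all 0.75.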

2.3.2. Secondary Evaluation: BERTScore

For secondary evaluation, we employ BERTScore [25] using XLM-RoBERTa [26] as the underlying multilingual model for contextual embeddings. The BERTScore calculation involves several steps. First, each token in the generated and reference summaries is converted to a contextual embedding. Second, a similarity matrix is constructed from the cosine similarity between all token pairs. Third, greedy matching pairs each token with its most similar token in the other summary. Finally, score aggregation computes the harmonic mean of precision and recall.

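The mathematical formulation is:

$$R_{\mathrm{BERT}} = \frac{1}{|x|} \sum_{x_i \in x} \max_{\hat{x}_j \in \hat{x}} \mathbf{x}_i^{\top} \hat{\mathbf{x}}_j, \qquad P_{\mathrm{BERT}} = \frac{1}{|\hat{x}|} \sum_{\hat{x}_j \in \hat{x}} \max_{x_i \in x} \mathbf{x}_i^{\top} \hat{\mathbf{x}}_j, \qquad F_{\mathrm{BERT}} = 2\, \frac{P_{\mathrm{BERT}} \cdot R_{\mathrm{BERT}}}{P_{\mathrm{BERT}} + R_{\mathrm{BERT}}}$$

where $x$ is the reference summary and $\hat{x}$ is the generated summary. $\mathbf{x}_i$ and $\hat{\mathbf{x}}_j$ are the L2-normalized XLM-RoBERTa embeddings of their tokens, so each inner product is a cosine similarity.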

XLM-RoBERTa’s cross-lingual training ensures multilingual robustness through consistent semantic similarity assessment across languages, reduced bias toward lexical overlap, and contextual understanding preservation across different linguistic contexts.
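The greedy matching in the BERTScore formulas takes only a few lines once token embeddings are available. This NumPy sketch assumes the XLM-RoBERTa token embeddings have been computed elsewhere. It omits the optional IDF weighting and baseline rescaling offered by the reference `bert_score` package.

```python
import numpy as np


def bertscore_from_embeddings(ref: np.ndarray, cand: np.ndarray) -> tuple[float, float, float]:
    """Greedy-matching BERTScore on precomputed contextual token embeddings.

    ref:  (|x|, d) reference token embeddings
    cand: (|x_hat|, d) candidate (generated) token embeddings
    """
    ref = ref / np.linalg.norm(ref, axis=1, keepdims=True)
    cand = cand / np.linalg.norm(cand, axis=1, keepdims=True)
    sim = ref @ cand.T                         # cosine similarity of every token pair
    recall = float(sim.max(axis=1).mean())     # each reference token -> best candidate token
    precision = float(sim.max(axis=0).mean())  # each candidate token -> best reference token
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

Because each token is matched independently to its best counterpart, a candidate containing only part of the reference keeps high precision while recall drops. For example, two of three orthogonal reference tokens give P = 1, R = 2/3, and F = 0.8.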

2.3.3. Language Adherence Evaluation: Correct Language Rate (CLR)

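Correct Language Rate (CLR) measures the percentage of model outputs correctly generated in the intended target language. The mathematical formulation is:

$$\mathrm{CLR} = \frac{100\%}{N} \sum_{i=1}^{N} \mathbb{1}\big[\operatorname{lang}(\hat{y}_i) = \ell_{\mathrm{tgt}}\big]$$

where $N$ is the number of generated summaries and $\operatorname{lang}(\hat{y}_i)$ is the language detected for output $\hat{y}_i$. $\ell_{\mathrm{tgt}}$ is the intended target language, and $\mathbb{1}[\cdot]$ is the indicator function.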

This metric is critical for cross-lingual generation evaluation, as incorrect language output indicates fundamental failure regardless of content quality.

We employ FastText language detection [27,28] using the lid.176.bin model, which supports 176 languages. Our protocol requires a minimum confidence threshold of 0.95 for positive classification, with manual verification on a random 10% sample for accuracy confirmation. This rigorous approach ensures reliable language adherence assessment across all experimental conditions.
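Here is a minimal sketch of the CLR computation with the 0.95 confidence threshold. The detector is passed in as a function so that the metric can be tested without the model file. `fasttext_detector` is a hypothetical helper showing how a model loaded with `fasttext.load_model("lid.176.bin")` could be wrapped. Treating below-threshold predictions as incorrect is an assumption about how the threshold is applied.

```python
from typing import Callable, Sequence, Tuple

# A detector returns (language_code, confidence) for one text.
Detector = Callable[[str], Tuple[str, float]]


def correct_language_rate(outputs: Sequence[str], target_lang: str,
                          detect: Detector, min_conf: float = 0.95) -> float:
    """Percentage of outputs detected as `target_lang` with confidence >= min_conf."""
    if not outputs:
        return 0.0
    hits = 0
    for text in outputs:
        lang, conf = detect(text)
        if lang == target_lang and conf >= min_conf:
            hits += 1
    return 100.0 * hits / len(outputs)


def fasttext_detector(model) -> Detector:
    """Hypothetical wrapper around a loaded fastText language-ID model."""
    def detect(text: str) -> Tuple[str, float]:
        # fastText predicts on a single line, so newlines are replaced first.
        labels, probs = model.predict(text.replace("\n", " "), k=1)
        return labels[0].removeprefix("__label__"), float(probs[0])
    return detect
```

The lid.176.bin labels use ISO 639 codes, so a Burmese target would be checked against `"my"` and a Sinhala target against `"si"`.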

2.3.4. Statistical Analysis

Statistical analysis employs the Pearson correlation coefficient to measure the relationship between example similarity and performance:

$$r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}$$

where $x_i$ represents similarity scores, $y_i$ represents ROUGE-L scores, and $\bar{x}$ and $\bar{y}$ are their means. For significance testing, we use paired t-tests to compare MuRXLS versus baseline performance across language pairs. Effect size assessment employs Cohen’s d for practical significance evaluation, and Bonferroni correction addresses multiple comparison issues across different language pairs.
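The statistics above can be sketched with the Python standard library. This is an illustrative sketch rather than the authors' analysis script. Cohen's d is computed on the paired differences (the d_z variant, one of several conventions), and p-values are left to a statistics package such as `scipy.stats.ttest_rel`. The Bonferroni-corrected threshold divides α by the number of comparisons, for example twelve when each language-pair task is tested once.

```python
import math
from statistics import mean, stdev


def pearson_r(x, y):
    # Direct implementation of the Pearson formula above.
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def paired_t_and_cohens_d(treatment, baseline):
    """Paired t statistic and Cohen's d computed on per-pair differences (d_z)."""
    diffs = [t - b for t, b in zip(treatment, baseline)]
    d = mean(diffs) / stdev(diffs)  # effect size in units of the difference SD
    t = d * math.sqrt(len(diffs))   # t = mean(diff) / (sd(diff) / sqrt(n))
    return t, d


def bonferroni_alpha(alpha: float, n_comparisons: int) -> float:
    # Per-comparison significance threshold under Bonferroni correction.
    return alpha / n_comparisons
```

Writing t as d_z multiplied by √n makes explicit that the same mean improvement becomes more significant with more paired observations, while the effect size itself does not change.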

2.4. Experimental Configuration

2.4.1. Large Language Model Selection

We selected four LLMs representing different scales and capabilities to ensure comprehensive evaluation. Mistral-7B-Instruct-v0.3 represents resource-efficient models: a dense transformer with 7 billion parameters and instruction tuning. This aligns with recent trends toward computationally efficient architectures that maintain performance while reducing resource requirements [29]. This model provides insight into performance with smaller, deployable models. Llama-3-70B-Instruct represents large-scale models with 70 billion parameters. It employs a dense transformer architecture with extensive instruction tuning and serves to assess the high-capacity performance ceiling.

GPT-3.5-turbo serves as an API-based commercial model representing practical deployment scenarios. Its parameter count has not been officially disclosed; it is commonly estimated at about 175 billion, the size of GPT-3. GPT-4o represents the current state of the art as a large multimodal model of undisclosed scale, providing performance benchmarking against the most advanced available systems.

The selection methodology prioritizes diversity across multiple dimensions. Scale variation ranges from 7B-parameter models to large commercial models of undisclosed size, enabling scalability analysis. Training approach comparison includes open-source versus commercial models for accessibility assessment. Architectural variation spans openly documented dense transformers and commercial models with undisclosed architectures, to understand architectural impact on cross-lingual summarization performance.

2.5. Token Count Analysis

2.5.1. Token Calculation Protocol

Token calculation employs model-specific tokenization to ensure accuracy. Mistral uses a SentencePiece tokenizer with a 32k vocabulary, Llama-3 uses a BPE tokenizer with a 128k vocabulary, and GPT model tokens are counted with OpenAI's tiktoken library. Each model is evaluated with its native tokenizer for consistency.

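Context length measurement includes all prompt components:

$$L_{\text{total}} = L_{\text{instruction}} + \sum_{i=1}^{k} L_{\text{example}_i} + L_{\text{query}}$$

where $k$ is the number of in-context examples and $L_{\text{example}_i}$ is the token count of the $i$-th demonstration (article and summary).

Token distribution analysis shows that instructions require a fixed 20–30 tokens, examples vary with article length and the number of examples, and queries typically range from 200 to 800 tokens depending on source article length.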
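The context-length formula is a sum over prompt components. The sketch below takes the tokenizer as a function so that each model's native tokenizer can be plugged in. The helper name is hypothetical, and any chat-template or special tokens added by a model's chat format are not counted.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[str, str]  # (source article, target-language summary)

# Possible token counters (not executed here):
#   enc = tiktoken.encoding_for_model("gpt-4o"); count = lambda t: len(enc.encode(t))
#   tok = AutoTokenizer.from_pretrained(model_id)
#   count = lambda t: len(tok.encode(t, add_special_tokens=False))


def context_length(instruction: str, examples: Sequence[Example], query: str,
                   count_tokens: Callable[[str], int]) -> int:
    """L_total = L_instruction + sum over the k examples (article + summary) + L_query."""
    demo_tokens = sum(count_tokens(a) + count_tokens(s) for a, s in examples)
    return count_tokens(instruction) + demo_tokens + count_tokens(query)
```

Because the example term grows linearly with k, each additional shot adds roughly one more article-summary pair to the budget. This is the cost weighed against performance in the shot-count analysis.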

3. Results

3.1. MuRXLS Framework Implementation

This section presents the implementation of our Multilingual Retrieval-based Cross-lingual Summarization (MuRXLS) framework, which addresses the limitations of existing cross-lingual summarization approaches through intelligent example selection.

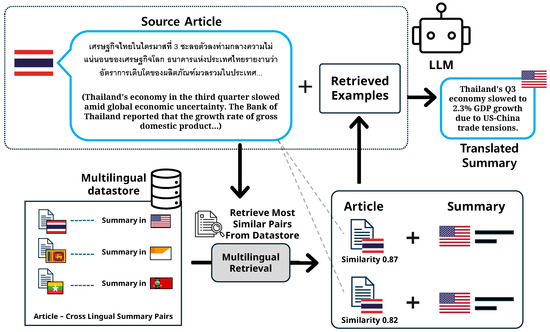

3.1.1. System Architecture Overview

The MuRXLS framework operates through three integrated components designed to optimize example selection for cross-lingual summarization tasks, as illustrated in Figure 1. The system processes source articles through multilingual embedding, retrieves semantically similar examples, and constructs contextually relevant prompts for target language models.

Figure 1.

Overview of the MuRXLS (Multilingual Retrieval-based Cross-lingual Summarization) framework. Our approach leverages a multilingual datastore processed through BGE-M3 for retrieval. For each article in the source language, we identify semantically similar examples based on cosine similarity scores. The selected examples and the test article are then combined in a prompt for the target LLM, which generates the cross-lingual summary.

The framework addresses the fundamental challenge in cross-lingual summarization by moving beyond random or length-based example selection to semantic relevance-based retrieval. Unlike previous approaches that prioritize fitting examples within context length constraints, our method ensures that each article is paired with contextually appropriate demonstrations that better guide the LLM in generating accurate cross-lingual summaries.

3.1.2. Multilingual Datastore Construction and Retrieval Process

The framework begins by processing the training corpus using BGE-M3 to create dense embeddings for all source articles. This creates a searchable multilingual datastore where semantically similar articles across languages are positioned closely in the embedding space. The datastore enables efficient retrieval of relevant examples regardless of source language, leveraging the cross-lingual alignment properties of BGE-M3.

Algorithm 1 presents the detailed procedure for our MuRXLS framework. The algorithm takes as input the training dataset, query article, number of examples (k), encoder model, and target LLM, then outputs a cross-lingual summary.

Algorithm 1.

Multilingual Retrieval-based Cross-lingual Summarization (MuRXLS).

As detailed in Algorithm 1, for each test article the system computes an embedding with the BGE-M3 encoder, calculates its cosine similarity to every article in the datastore, and selects the top-k most similar examples. This process identifies the most similar source articles together with their corresponding target summaries, providing the LLM with contextually relevant source-summary pairs for in-context learning.
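The retrieval step of Algorithm 1 can be sketched as a brute-force nearest-neighbour search over the datastore. This NumPy sketch assumes the embeddings are already L2-normalized, so cosine similarity is a dot product. It also assumes the datastore is small enough to score exhaustively; for a much larger pool, an approximate-nearest-neighbour index would be a natural substitute. The function name is hypothetical.

```python
import numpy as np


def retrieve_top_k(query_emb: np.ndarray, datastore_embs: np.ndarray,
                   pairs: list[tuple[str, str]], k: int = 2):
    """Return the k (article, summary) pairs whose article embeddings are most
    similar to the query, each with its similarity score, best match first.

    query_emb:      (d,) L2-normalized embedding of the test article
    datastore_embs: (N, d) L2-normalized embeddings of the datastore articles
    pairs:          N (article, summary) tuples aligned with datastore_embs
    """
    sims = datastore_embs @ query_emb           # (N,) cosine similarities
    top = np.argsort(-sims, kind="stable")[:k]  # indices of the k largest scores
    return [(pairs[i], float(sims[i])) for i in top]
```

The returned pairs would then be inserted into the prompt, which is sent to the target LLM to complete the procedure. The similarity scores are also what the correlation analysis relates to ROUGE-L.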

3.1.3. Dynamic Example Selection and Prompt Construction

The retrieval-based approach transforms traditional prompt engineering for cross-lingual summarization through an embedding-guided example selection process. Unlike conventional approaches that randomly select or prioritize shorter examples, our framework dynamically constructs prompts by integrating semantically aligned demonstrations identified through multilingual embedding similarity, as shown in Figure 2.

Figure 2.

Example prompts for different 2-shot settings in Gujarati-to-English cross-lingual summarization. The figure shows how each prompt is structured with the instruction, the retrieved examples, and the test article.

For k-shot settings where $k \ge 1$, we select the top-k most similar examples according to cosine similarity. The demonstration set thus contains the k most semantically relevant article-summary pairs, which provide contextually appropriate guidance for the target task. The prompt follows the template presented in Table 2.

Table 2.

Template for XLS prompts with retrieved examples.

The prompt construction ensures that each test article receives demonstrations that are semantically aligned with its content, enabling more effective knowledge transfer during in-context learning. Upon receiving the prompt, the LLM interprets the retrieved examples as demonstrations guiding the execution of cross-lingual summarization, analyzing source-summary relationships to infer both content selection strategies and target-specific linguistic patterns.
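Table 2's exact wording is not reproduced in this text. The template below is therefore a hypothetical stand-in that follows the structure described here and in Figure 2: instruction, retrieved demonstrations, then the test article. The function name and phrasing are illustrative assumptions.

```python
def build_xls_prompt(examples: list[tuple[str, str]], article: str,
                     src_lang: str, tgt_lang: str) -> str:
    """Assemble instruction + retrieved demonstrations + test article.

    The wording is a hypothetical template, not the one in Table 2.
    `examples` holds (article, summary) pairs, most similar first; an empty
    list yields a zero-shot prompt.
    """
    parts = [f"Summarize the following {src_lang} article in {tgt_lang}."]
    for i, (ex_article, ex_summary) in enumerate(examples, start=1):
        parts.append(f"Example {i}:\nArticle: {ex_article}\nSummary: {ex_summary}")
    # The test article ends with an open "Summary:" slot for the LLM to complete.
    parts.append(f"Article: {article}\nSummary:")
    return "\n\n".join(parts)
```

Placing the most similar example first is one choice. Because LLMs can be sensitive to demonstration order, placing it closest to the test article is an equally plausible alternative.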

3.2. Comprehensive Performance Evaluation

3.2.1. Overall Performance Analysis

Our comprehensive evaluation across twelve cross-lingual summarization tasks reveals significant performance improvements with the MuRXLS framework, as shown in Table 3. The results demonstrate consistent benefits of retrieval-based example selection across different models, language pairs, and shot configurations.

Table 3.

ROUGE-L results for multiple models and shot settings on the twelve XLS language-pair tasks. Bold values indicate the best performance for each language pair and model. Values in red show improvements, values in blue show decreases, and values in gray show no change of MuRXLS over the Random (random sampling) baseline.

Several key findings emerge from the performance analysis. MuRXLS consistently outperforms random sampling across most experimental configurations, with improvements ranging from modest gains to substantial enhancements depending on the translation direction and language pair. The framework shows particular strength in scenarios involving linguistically distant language pairs, where semantic similarity becomes more crucial for effective cross-lingual transfer.

3.2.2. Direction-Specific Performance Patterns

Our results reveal a notable directional asymmetry in the effectiveness of retrieval-based example selection. For the X→EN direction (summarizing from low-resource languages to English), MuRXLS consistently outperforms random sampling across all models and language pairs. The average ROUGE-L improvements range from +0.26 to +1.53 points, with particularly substantial gains for Burmese→English (+4.20 points with Llama-3-70B in 1-shot setting) and Sinhala→English (+2.34 points with Llama-3-70B in 2-shot setting).

In contrast, for the EN→X direction (summarizing from English to low-resource languages), the performance improvements are more modest and variable. While MuRXLS still provides an overall positive impact (+0.17 to +0.35 average improvement), the gains are less consistent across language pairs. This asymmetry suggests that retrieval-based example selection is particularly beneficial when the target language is high-resource (English), where models have stronger generation capabilities.

3.2.3. Performance Among Models

Among the evaluated models, Llama-3-70B shows the most substantial improvements from retrieval-based example selection in the X→EN direction, with average ROUGE-L gains of +1.53 and +1.49 points in 1-shot and 2-shot settings respectively. This indicates that larger, more capable models can better leverage contextually relevant examples for cross-lingual transfer. GPT-4o demonstrates the highest absolute performance, achieving ROUGE-L scores of up to 12.27 for Burmese→English, while still benefiting from MuRXLS with consistent improvements across all language pairs.

Despite being the smallest model in our evaluation, Mistral-7B demonstrates respectable performance gains when utilizing retrieval-based selection. This is particularly evident for challenging language pairs such as Pashto→English (+1.36 points) and Burmese→English (+1.04 points). These results suggest that even smaller models can effectively leverage retrieval-based examples when transferring information from low-resource languages to English.

3.2.4. Performance Among Language Pairs

The impact of MuRXLS varies significantly across language pairs, revealing interesting patterns in cross-lingual transfer:

- Burmese→English shows the largest improvements across all models, with gains of up to +4.20 ROUGE-L points. This suggests that for languages with significant structural differences from English, contextually relevant examples provide crucial guidance for the summarization process.

- Pashto→English consistently benefits from retrieval-based examples, with substantial improvements across all models and shot settings. This further supports the observation that linguistically distant language pairs benefit more from retrieval augmentation.

- Thai→English and Gujarati→English show moderate but consistent improvements, indicating that even for language pairs where baseline performance is already reasonable, retrieval-based selection still provides meaningful benefits.

3.2.5. Performance Among the Number of Examples

We evaluate our approach in zero-shot, one-shot, and two-shot configurations. Our experiments were deliberately limited to a maximum of two-shot settings based on extensive preliminary analysis of three-shot performance that revealed two critical limitations: computational inefficiency and performance degradation.

3.2.6. Semantic Quality Assessment with BERTScore

While ROUGE metrics provide insights into lexical overlap, they may not fully capture semantic similarity in cross-lingual settings. To complement our evaluation, we compute BERTScore across all language pairs using XLM-RoBERTa, which provides contextual embeddings better suited for cross-lingual assessment.

- MuRXLS consistently outperforms random sampling. Table 4 presents BERTScore results across all language pairs, comparing our retrieval-based example selection (MuRXLS) against random sampling for both GPT-4o and Llama-3-70B models. Our analysis shows that MuRXLS consistently outperforms random sampling across nearly all language pairs and models. The average improvement in BERTScore F1 ranges from +0.02 to +0.07 depending on the model and direction. This confirms that retrieval-based examples enhance both lexical overlap and semantic similarity, providing summaries that better preserve the meaning of source articles.

Table 4. BERTScore F1 comparison between MuRXLS and Random approaches across language pairs. Values in red indicate improvements of MuRXLS over the random-sampling (Random) baseline. Bold values indicate the highest BERTScore for each language pair and model.

- Two-shot settings generally outperform one-shot configurations for MuRXLS. This aligns with our ROUGE findings. Interestingly, random sampling does not consistently benefit from additional examples, particularly in the EN→X direction.

- Directional asymmetry remains evident in both ROUGE and BERTScore. The directional asymmetry observed in ROUGE evaluation is also evident in BERTScore results, with X→EN generally achieving higher semantic similarity than EN→X across both models and methods. This persistent pattern suggests fundamental challenges in generating content in low-resource languages that retrieval-based methods help mitigate but cannot fully overcome.

- BERTScore highlights moderate semantic similarity for English-Pashto despite low lexical overlap. Particularly notable is the case of English-Pashto. While ROUGE scores for this language pair were consistently near zero in the EN→X direction, our BERTScore analysis reveals moderate semantic similarity (around 0.73 for GPT-4o with MuRXLS), indicating that generated summaries maintain semantic correspondence with references despite lacking direct lexical matches. This highlights BERTScore’s ability to detect meaning preservation even when vocabulary choices differ substantially.

- Three-shot configurations significantly increase computational costs without commensurate gains. As reported in Table 5, the average context length of three-shot prompts (three examples plus the query) exceeds 4000 tokens across all language pairs, approximately 50% more than in two-shot settings, while performance does not improve correspondingly.

Table 5. Token count analysis and performance comparison (ROUGE-L) for all 12 language pairs. Three-shot settings require approximately 50% more tokens than two-shot settings while rarely improving performance, and often degrading it significantly. Bold values indicate the higher performance between two-shot and three-shot settings. Red and blue values indicate performance degradations and improvements, respectively, of three-shot over two-shot settings.

- Minimal performance improvements from three-shot usage justify focusing on up to two-shot settings. Our preliminary experiments showed that this increased token usage rarely translated into performance improvements. For GPT-4o, three-shot settings improved performance for only 3 of 12 language pairs, and only marginally. GPT-3.5-turbo underperformed in 10 of 12 language pairs when using three examples. The performance impact was directionally asymmetric, with X→EN language pairs showing slightly better resilience to the three-shot format than EN→X pairs. Based on these findings, we focused our main experiments on zero-shot through two-shot settings, which offered the best balance between performance and computational efficiency in our experiments.
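The token-overhead and win-count figures above reduce to simple arithmetic over per-pair statistics. The helper names and the `(two_shot, three_shot)` input layout below are hypothetical; the sketch only illustrates how a relative increase such as the reported ~50% and a count such as "3 of 12 language pairs" can be derived from a table like Table 5.

```python
def relative_increase(two_shot_tokens: float, three_shot_tokens: float) -> float:
    """Fractional growth in prompt length from two-shot to three-shot (0.5 means +50%)."""
    return (three_shot_tokens - two_shot_tokens) / two_shot_tokens

def mean_relative_increase(token_counts: dict) -> float:
    """Average increase over language pairs; token_counts maps pair -> (two_shot, three_shot)."""
    increases = [relative_increase(two, three) for two, three in token_counts.values()]
    return sum(increases) / len(increases)

def three_shot_wins(rouge_two: dict, rouge_three: dict) -> int:
    """Number of language pairs whose three-shot ROUGE-L strictly exceeds two-shot."""
    return sum(1 for pair, score in rouge_two.items() if rouge_three[pair] > score)
```

Averaging per-pair ratios, as `mean_relative_increase` does, weights every language pair equally; dividing total three-shot tokens by total two-shot tokens would instead weight pairs with longer articles more heavily.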

3.2.7. Comparison with Fine-Tuned Models

While the fine-tuned mT5-Base model still outperforms in-context learning approaches for most language pairs, the gap narrows considerably when MuRXLS is used with powerful LLMs such as GPT-4o and Llama-3-70B. In some cases, such as Burmese→English summarization with Llama-3-70B, MuRXLS achieves reasonable performance (13.32 vs. 22.07 ROUGE-L) without any language-specific fine-tuning, suggesting that retrieval-based in-context learning is a viable alternative for cross-lingual tasks where parallel data is limited.

3.2.8. Impact of Retrieval-Based Examples on Language Adherence

In addition to evaluating summarization quality through ROUGE metrics, we measure the Correct Language Rate (CLR), which quantifies the percentage of model outputs correctly written in the intended target language.
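Computing CLR requires deciding which language each output is written in, and this section does not state which language identifier was used. The Python sketch below therefore takes the detector as a parameter and, purely as a hypothetical stand-in, guesses the language from the dominant Unicode script. This heuristic can separate the six evaluated languages only because each is the sole evaluated language in its script; a proper language-identification model would be needed to distinguish, for example, Marathi from Hindi.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical script-based stand-in for a language identifier. Scripts are shared
# across languages (e.g., Devanagari by Marathi and Hindi), so this is only a heuristic.
SCRIPT_RANGES: Dict[str, List[Tuple[int, int]]] = {
    "en": [(0x0041, 0x005A), (0x0061, 0x007A)],  # Basic Latin letters
    "th": [(0x0E00, 0x0E7F)],  # Thai
    "gu": [(0x0A80, 0x0AFF)],  # Gujarati
    "mr": [(0x0900, 0x097F)],  # Devanagari
    "ps": [(0x0600, 0x06FF)],  # Arabic script
    "my": [(0x1000, 0x109F)],  # Myanmar
    "si": [(0x0D80, 0x0DFF)],  # Sinhala
}

def guess_language(text: str) -> Optional[str]:
    """Return the language whose script covers the most characters, or None if none match."""
    counts = {lang: 0 for lang in SCRIPT_RANGES}
    for ch in text:
        cp = ord(ch)
        for lang, ranges in SCRIPT_RANGES.items():
            if any(lo <= cp <= hi for lo, hi in ranges):
                counts[lang] += 1
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else None

def correct_language_rate(outputs: List[str], target_lang: str,
                          detect: Callable[[str], Optional[str]] = guess_language) -> float:
    """Percentage of outputs whose detected language equals target_lang."""
    if not outputs:
        return 0.0
    hits = sum(1 for text in outputs if detect(text) == target_lang)
    return 100.0 * hits / len(outputs)
```

Passing a different `detect` function swaps in a real language identifier without changing how the rate itself is computed.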

Table 6 presents a comprehensive comparison of CLR performance between our MuRXLS approach and random sampling baselines across all language pairs and models. Our analysis reveals distinct patterns in language adherence across translation directions:

Table 6. Comparison of Correct Language Rate (CLR) between random (Random) and retrieval-based (MuRXLS) example selection for Mistral-7B and GPT-3.5. Bold values indicate the highest CLR for each language pair and direction. Values in red show improvements and values in blue show declines of MuRXLS over Random. CLR measures the percentage of model outputs correctly written in the target language.

- X→EN Direction Performance: Both approaches achieve excellent language adherence when generating English summaries, with near-perfect CLR scores across all settings. Zero-shot approaches already achieve 100% CLR for both models in this direction, indicating the inherent strength of LLMs in generating content in English regardless of the input language. MuRXLS provides slight improvements over random sampling in specific language pairs, particularly for Marathi→English and Pashto→English where improvements of +0.87 percentage points are observed.

- EN→X Direction Performance: In this more challenging direction, MuRXLS demonstrates significant improvements over random sampling for most language pairs:

  - Mistral-7B shows substantial CLR gains with MuRXLS in the one-shot and two-shot settings, respectively, for Thai (+0.46, +0.99), Gujarati (+1.16, +0.96), Marathi (+3.34, +3.45), and Pashto (+4.63, +1.10), and especially for Burmese, where the improvement reaches +7.24 percentage points in the two-shot setting.

  - GPT-3.5 demonstrates strong improvements with MuRXLS for Gujarati (+2.04 in one-shot, +0.70 in two-shot), Marathi (+0.84 in one-shot, +0.76 in two-shot), and Pashto (+0.68 in one-shot, +1.53 in two-shot).

  - Slight declines are observed for Sinhala with both models, suggesting potential language-specific challenges.

- Zero-shot vs. Few-shot Comparison: Zero-shot performance reveals significant directional asymmetry, with perfect X→EN performance but highly variable EN→X performance. Particularly challenging cases include Mistral-7B’s performance for Marathi (38.70%), Pashto (27.50%), and Burmese (71.07%). This highlights the value of in-context learning approaches for improving language adherence in these challenging directions.

- Shot Setting Impact: Two-shot configurations outperform one-shot settings for most language pairs, particularly with MuRXLS. The improvements for Mistral-7B with Burmese (+7.24 in two-shot vs. +2.90 in one-shot) suggest that multiple semantically relevant examples provide complementary benefits. Similarly, GPT-3.5 shows stronger two-shot performance across many language pairs.

- Language-Specific Patterns: Languages with lower baseline CLR (e.g., Marathi for Mistral-7B at 38.70% in zero-shot) show larger improvements with MuRXLS, while languages with already high CLR occasionally show slight declines. This suggests that retrieval-based selection is particularly valuable for the most challenging language pairs.

These findings show that retrieval-based example selection substantially improves target-language adherence, particularly in the more challenging EN→X direction. Given semantically relevant examples, models appear to better capture both the expected content structure and the characteristics of the target language. The improvements in CLR likely contribute to the higher ROUGE-L scores observed in our main experiments, suggesting that language adherence is an important factor in XLS quality.

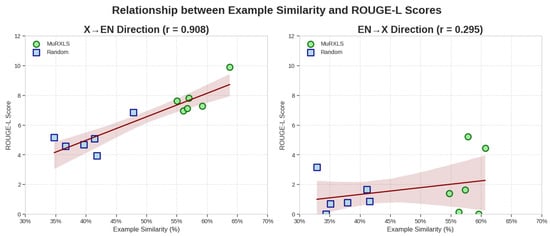

3.2.9. Correlation Between Example Similarity and Summarization Performance

To validate our hypothesis that semantically similar examples lead to better XLS performance, we analyze the correlation between example similarity and ROUGE-L scores. For each test article, we compute the cosine similarity between its embedding and the embeddings of its selected examples, then measure the correlation between these similarity scores and the resulting ROUGE-L scores, comparing how MuRXLS and random sampling differ in selecting semantically relevant examples.
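The analysis above can be sketched as follows: compute each article's cosine similarity to its selected examples, then correlate those similarities with ROUGE-L. As an illustrative assumption, the sketch aggregates an article's similarity as the mean over its k examples and uses Pearson's r; this section does not state the exact aggregation or the unit of analysis (per article, or per language pair and model) behind the reported r values.

```python
import numpy as np

def mean_example_similarity(article_vec: np.ndarray, example_vecs: np.ndarray) -> float:
    """Mean cosine similarity between one article embedding and its k example embeddings."""
    a = article_vec / np.linalg.norm(article_vec)
    e = example_vecs / np.linalg.norm(example_vecs, axis=1, keepdims=True)
    return float((e @ a).mean())

def pearson_r(x, y) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()  # center both variables
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))
```

`pearson_r` agrees with `np.corrcoef(x, y)[0, 1]`; it is written out only to make the formula explicit.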

As shown in Figure 3, both the similarity scores and their correlation with performance differ markedly between the two directions. In the X→EN direction, there is a strong positive correlation (r = 0.908) between example similarity and ROUGE-L scores, indicating that more semantically similar examples consistently lead to better summarization performance when summarizing from low-resource languages into English. The MuRXLS-selected examples (circles) show consistently higher similarity scores (55% to 65%) than randomly sampled examples (squares, 35% to 48%), which is consistent with their superior performance.

Figure 3. Relationship between example similarity and ROUGE-L scores. Light green circles represent MuRXLS-selected examples, and light blue squares represent random sampling examples. The regression line shows a strong positive correlation (r = 0.908) in the X→EN direction, while the EN→X direction shows a weaker correlation (r = 0.295). This suggests that semantically similar examples have a stronger impact on summarization quality when translating from low-resource languages to English than vice versa.

In contrast, the EN→X direction exhibits a weaker correlation (r = 0.295) between similarity and performance. While MuRXLS still selects examples with higher similarity (54% to 61%) compared to random sampling (33% to 42%), this advantage translates less consistently to performance improvements. This weaker correlation helps explain the directional asymmetry observed in our main results: the benefits of retrieval-based example selection are more pronounced when generating content in English than in low-resource languages.

The strong correlation in the X→EN direction suggests that when LLMs are tasked with summarizing content in a language they are well-trained on (English), the semantic relevance of examples has a substantial impact on their ability to extract and organize key information from the source article. Conversely, when generating content in low-resource languages, other factors such as the model’s inherent language generation capabilities may limit the extent to which semantically relevant examples can improve performance.

This analysis provides statistical evidence supporting our core hypothesis that selecting examples by semantic similarity improves cross-lingual summarization performance, particularly in the X→EN direction. The consistency of this pattern across the evaluated language pairs suggests that the benefit of retrieval-based example selection reflects a general principle for improving few-shot XLS rather than an effect specific to particular languages or models, although the strength of the effect differs markedly between directions.

4. Discussion

Our comprehensive evaluation of MuRXLS across twelve diverse language pairs reveals several important insights about retrieval-based in-context learning for XLS. In this section, we discuss the broader implications of our findings, limitations of our approach, and promising directions for future research.

4.1. Directional Asymmetry in Cross-Lingual Transfer

One of the most striking findings from our experiments is the significant directional asymmetry in performance gains. The retrieval-based example selection consistently yields substantial improvements in the X→English direction while showing more modest and variable gains in the English→X direction. This asymmetry provides valuable insights into the nature of cross-lingual transfer in LLMs.

We hypothesize that this phenomenon stems from two key factors. First, LLMs generally have stronger generation capabilities in English due to the dominance of English data in their training corpora. When the target language is English, providing semantically relevant examples helps the model extract and organize key information from the source article, leveraging its strong English generation abilities. Second, the correlation analysis between example similarity and performance (r = 0.908 for X→English versus r = 0.295 for English→X) suggests that semantic similarity plays a fundamentally different role depending on the direction of transfer.

This asymmetry has important practical implications for cross-lingual applications. It suggests that different strategies may be optimal depending on the direction of translation. For the X→English direction, focusing on semantic similarity yields substantial benefits. For the English→X direction, other factors such as target language fluency and stylistic considerations may need to be prioritized alongside semantic relevance.

4.2. Language Selection Limitations and Their Impact

Our study acknowledges several important limitations in language selection that may affect the generalizability of our findings. The six low-resource languages evaluated—Thai, Gujarati, Marathi, Pashto, Burmese, and Sinhala—exhibit notable geographical and linguistic concentration. Four of these languages (Gujarati, Marathi, Pashto, and Sinhala) belong to the Indo-European language family, while Thai and Burmese represent the Tai-Kadai and Sino-Tibetan families, respectively. This linguistic distribution creates potential biases in our evaluation.

The geographical concentration primarily spans South and Southeast Asia, which may limit the applicability of our findings to languages from other regions such as Africa, indigenous languages of the Americas, or Pacific languages. Different linguistic regions may present unique challenges for cross-lingual transfer that are not captured in our current evaluation. For instance, languages with writing systems not covered here (such as various African scripts or logographic systems) might exhibit different patterns of semantic similarity encoding and retrieval effectiveness.

Furthermore, the predominance of Indo-European languages in our dataset may overrepresent the effectiveness of our approach for languages that share certain structural similarities with English. The strong performance improvements observed in our X→English direction might be partially attributed to underlying linguistic relatedness that facilitates cross-lingual transfer, rather than purely representing the universal effectiveness of retrieval-based example selection.

Future work should evaluate MuRXLS across a more diverse set of languages, including representatives from major language families such as Niger-Congo (e.g., Swahili, Yoruba), Afro-Asiatic (e.g., Arabic, Amharic), and indigenous language families. Such evaluation would provide stronger evidence for the universal applicability of our approach and reveal potential language-family-specific patterns in retrieval effectiveness.

4.3. Language-Specific Effectiveness Patterns

Our analysis reveals that the effectiveness of MuRXLS varies significantly across different languages, suggesting that intrinsic linguistic properties influence the success of retrieval-based example selection. Several key patterns emerge from our cross-linguistic comparison:

Morphological Complexity and Performance Gains: Languages with different morphological structures show varying degrees of improvement from retrieval-based selection. Burmese, which employs a relatively isolating morphology, demonstrates the largest performance gains (+4.20 ROUGE-L points with Llama-3-70B), while languages with more complex morphological systems show more modest improvements. This pattern suggests that languages with simpler morphological structures may benefit more from semantic similarity-based retrieval, as surface-level variations are less likely to obscure underlying semantic relationships.

Script Systems and Embedding Quality: The writing systems employed by different languages appear to influence the effectiveness of multilingual embeddings used in our retrieval mechanism. Thai and Burmese, which use unique scripts, show substantial but variable improvements, while Devanagari-script languages (Marathi) and Arabic-script languages (Pashto) exhibit different response patterns. This variation suggests that the quality of multilingual embeddings varies across script systems, potentially affecting the accuracy of semantic similarity computations.

Language Resource Availability: The baseline performance levels across languages correlate with their relative resource availability, which in turn affects the magnitude of improvements achievable through retrieval-based methods. Languages with extremely limited baseline performance (such as Pashto in some configurations) show larger absolute improvements, suggesting that retrieval-based methods are particularly valuable for the most resource-constrained scenarios.

Syntactic Distance from English: Languages that exhibit greater syntactic divergence from English tend to benefit more from retrieval-based example selection in the X→English direction. This pattern aligns with our directional asymmetry findings and suggests that when translating from syntactically distant languages, providing contextually relevant examples helps bridge the structural gaps more effectively.

4.4. Comparison with Traditional Fine-Tuning Approaches

While our retrieval-based in-context learning approach does not fully match the performance of dedicated fine-tuning in all scenarios (e.g., mT5-Base fine-tuned specifically for each language pair), the gap is considerably narrowed for some language pairs, particularly in the X→English direction. This is remarkable considering that our approach requires no language-specific training and can be applied immediately to new language pairs with minimal example data.

For resource-constrained scenarios where fine-tuning large models is impractical, or for languages with extremely limited parallel data, MuRXLS offers a practical alternative that leverages the inherent cross-lingual capabilities of LLMs. Moreover, the flexibility of our approach allows it to be complementary to fine-tuning—organizations could deploy fine-tuned models for high-resource language pairs while using MuRXLS for emerging language needs.

4.5. Example Quality vs. Quantity

Our experiments with different shot settings reveal an interesting relationship between example quality and quantity. While traditional approaches often focus on maximizing the number of examples within context length constraints (often at the expense of example relevance), our findings suggest that the quality of examples—measured by semantic similarity to the query—plays a more crucial role than quantity.

This is particularly evident in our comparison of one-shot versus two-shot settings. While adding a second example generally improves performance, the gains are most substantial when both examples are highly relevant to the query. This suggests that practitioners should prioritize example relevance over quantity, especially when working with limited context windows.
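The advice to prioritize relevance over quantity under a limited context window can be made concrete with the hypothetical selection rule below: candidates are considered in order of similarity, selection stops once similarity falls below a threshold, and any candidate that would exceed a token budget is skipped. The threshold, the budget, and the function name are illustrative assumptions; the MuRXLS experiments reported here use fixed zero-, one-, and two-shot settings.

```python
from typing import List, Sequence

def select_within_budget(similarities: Sequence[float], example_tokens: Sequence[int],
                         query_tokens: int, token_budget: int,
                         max_shots: int = 2, min_similarity: float = 0.0) -> List[int]:
    """Greedily pick the most similar examples that clear a relevance threshold and fit the budget."""
    # Sort candidate indices by similarity, highest first (Python's sort is stable).
    order = sorted(range(len(similarities)), key=lambda i: similarities[i], reverse=True)
    chosen: List[int] = []
    used = query_tokens  # the query itself always occupies part of the context
    for i in order:
        if len(chosen) == max_shots:
            break
        if similarities[i] < min_similarity:
            break  # all remaining candidates are even less similar
        if used + example_tokens[i] <= token_budget:
            chosen.append(i)
            used += example_tokens[i]
    return chosen
```

Under this rule, a tight budget or a high threshold naturally yields fewer but more relevant demonstrations, rather than filling the context with weakly related ones.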

4.6. Implications for Low-Resource Languages

Our work has significant implications for low-resource languages, which often lack sufficient parallel data for traditional XLS methods. By demonstrating that even a small number of semantically relevant examples can substantially improve performance, we provide a practical pathway for extending XLS capabilities to more languages.

The MuRXLS approach is particularly valuable for the X→English direction, which is often the primary need for information access from low-resource languages. For example, our results with Burmese→English show improvements of up to 4.20 ROUGE-L points using semantically selected examples, making information in Burmese significantly more accessible to English readers.

These language-specific patterns indicate that while MuRXLS provides consistent benefits across diverse languages, the magnitude and nature of improvements are influenced by fundamental linguistic properties. Understanding these patterns can inform future development of language-adaptive retrieval strategies and help practitioners set appropriate expectations for different language pairs.

4.7. Study Limitations

While our work demonstrates the effectiveness of retrieval-based in-context learning for cross-lingual summarization, several limitations should be acknowledged that may affect the generalizability and applicability of our findings.

Language Selection and Geographic Bias. Our evaluation is constrained by the selection of six low-resource languages that exhibit notable geographic and linguistic concentration. Four of the evaluated languages (Gujarati, Marathi, Pashto, and Sinhala) belong to the Indo-European family, while the remaining two (Thai and Burmese) represent Tai-Kadai and Sino-Tibetan families respectively. This distribution creates potential biases in our assessment, as the predominance of Indo-European languages may overrepresent the effectiveness of cross-lingual transfer due to underlying structural similarities with English. Furthermore, our language selection primarily spans South and Southeast Asia, limiting the applicability of findings to languages from other regions such as Africa, indigenous languages of the Americas, or Pacific language families. Different linguistic regions may present unique challenges for cross-lingual transfer that are not captured in our current evaluation framework.

Methodological and Technical Constraints. The quality of multilingual embeddings varies significantly across different script systems and languages, potentially affecting the accuracy of semantic similarity computations fundamental to our retrieval mechanism. Our reliance on BGE-M3 embeddings, while comprehensive, may introduce language-specific biases that influence retrieval effectiveness. Additionally, the computational overhead of three-shot configurations and beyond limits the practical scalability of our approach, particularly for resource-constrained deployment scenarios. The token count analysis reveals that extending beyond two-shot settings incurs substantial computational costs without corresponding performance improvements, constraining the potential benefits of additional examples.

Evaluation and Domain Limitations. Our evaluation framework, while comprehensive, relies primarily on lexical overlap metrics (ROUGE-L) and contextual similarity measures (BERTScore) that may not fully capture the nuanced aspects of cross-lingual semantic preservation. The assessment is further limited to news article summarization, which may not reflect performance across other domains such as scientific literature, legal documents, or creative content. The quality of reference summaries in low-resource languages presents additional challenges for reliable evaluation, as human annotation resources are often limited for these languages. Moreover, our correlation analysis between example similarity and performance, while statistically significant, may not account for confounding factors related to specific linguistic phenomena or model-specific biases that could influence the observed relationships.

5. Conclusions

In this paper, we introduced MuRXLS, a retrieval-based framework for cross-lingual summarization that dynamically selects semantically relevant examples for low-resource languages. By leveraging the BGE-M3 multilingual embedding model, our approach enhances few-shot learning for various LLMs including Mistral-7B, Llama-3-70B, GPT-3.5, and GPT-4o.

Our experiments across twelve language pairs revealed a clear directional asymmetry: MuRXLS significantly outperformed random sampling in X→English scenarios (with improvements up to 4.20 ROUGE-L points) while showing more modest gains in English→X settings. Analysis of Correct Language Rate (CLR) demonstrated that our approach substantially improves language adherence in challenging target languages.

The strong correlation (r = 0.908) between example similarity and summarization quality in the X→English direction provides statistical evidence for our core hypothesis, while the weaker correlation in the reverse direction (r = 0.295) helps explain the observed asymmetry. These findings suggest that the benefits of retrieval-based selection are most pronounced when generating content in a high-resource language.

While MuRXLS does not fully match dedicated fine-tuning in all scenarios, it offers a practical alternative that requires no language-specific training, making it valuable for low-resource languages with limited parallel data.

Future Research Directions. The findings of this work open several promising avenues for advancing cross-lingual summarization and retrieval-based in-context learning methodologies. Future research should prioritize evaluation across a more linguistically diverse set of languages, particularly including representatives from major language families such as Niger-Congo (e.g., Swahili, Yoruba), Afro-Asiatic (e.g., Arabic, Amharic), and indigenous language families from the Americas and Pacific regions. Such expansion would provide stronger evidence for the universal applicability of retrieval-based approaches and reveal potential language-family-specific patterns in cross-lingual transfer effectiveness.

Several technical directions could substantially improve the effectiveness of retrieval-based cross-lingual summarization. Developing ensemble approaches that combine multiple multilingual embedding models could enhance retrieval robustness and reduce language-specific biases. Dynamic example selection algorithms that adaptively determine the optimal number of examples based on query complexity and available context length represent another promising direction. Furthermore, exploring domain-specific embedding models trained on specialized corpora (scientific, legal, medical) could extend the applicability of our framework beyond news summarization.

Future work should also focus on developing more sophisticated evaluation metrics that better capture semantic preservation across languages, potentially incorporating human evaluation protocols specifically designed for cross-lingual content assessment. The application of our framework to real-world scenarios such as multilingual information retrieval systems, cross-lingual document indexing, and educational content generation for low-resource languages presents significant practical opportunities. These research directions collectively aim to enhance the accessibility, effectiveness, and practical applicability of cross-lingual summarization technologies, particularly for underserved language communities that would benefit most from improved multilingual information access capabilities.

Author Contributions

Conceptualization, G.P. and H.L.; methodology, G.P. and H.L.; software, G.P.; validation, G.P., J.P. and H.L.; formal analysis, G.P. and J.P.; investigation, G.P. and J.P.; resources, H.L.; data curation, G.P.; writing—original draft preparation, G.P. and J.P.; writing—review and editing, G.P., J.P. and H.L.; visualization, G.P.; supervision, H.L.; project administration, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2021-0-01341, Artificial Intelligence Graduate School Program, Chung-Ang University) and the Chung-Ang University Graduate Research Scholarship in 2023.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: https://github.com/csebuetnlp/CrossSum (accessed on 9 March 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Parnell, J.; Unanue, I.J.; Piccardi, M. Sumtra: A differentiable pipeline for few-shot cross-lingual summarization. arXiv 2024, arXiv:2403.13240. [Google Scholar]

- Wan, X.; Luo, F.; Sun, X.; Huang, S.; Yao, J.-G. Cross-language document summarization via extraction and ranking of multiple summaries. Knowl. Inf. Syst. 2019, 58, 481–499. [Google Scholar] [CrossRef]

- Ladhak, F.; Durmus, E.; Cardie, C.; McKeown, K. WikiLingua: A new benchmark dataset for cross-lingual abstractive summarization. arXiv 2020, arXiv:2010.03093. [Google Scholar]

- Zhang, R.; Ouni, J.; Eger, S. Cross-lingual Cross-temporal Summarization: Dataset, Models, Evaluation. Comput. Linguist. 2024, 50, 1001–1047. [Google Scholar] [CrossRef]

- Zhang, Y.; Gao, S.; Huang, Y.; Tan, K.; Yu, Z. A Cross-Lingual Summarization method based on cross-lingual Fact-relationship Graph Generation. Pattern Recognit. 2024, 146, 109952. [Google Scholar] [CrossRef]

- Zhu, J.; Wang, Q.; Wang, Y.; Zhou, Y.; Zhang, J.; Wang, S.; Zong, C. NCLS: Neural Cross-Lingual Summarization. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 3054–3064. [Google Scholar]

- Cao, Y.; Liu, H.; Wan, X. Jointly Learning to Align and Summarize for Neural Cross-Lingual Summarization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 6220–6231. [Google Scholar]

- Wang, J.; Liang, Y.; Meng, F.; Zou, B.; Li, Z.; Qu, J.; Zhou, J. Zero-Shot Cross-Lingual Summarization via Large Language Models. arXiv 2023, arXiv:2302.14229. [Google Scholar]

- Wang, J.; Meng, F.; Zheng, D.; Liang, Y.; Li, Z.; Qu, J.; Zhou, J. A survey on cross-lingual summarization. Trans. Assoc. Comput. Linguist. 2022, 10, 1304–1323. [Google Scholar] [CrossRef]

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165. [Google Scholar]

- Lin, X.V.; Mihaylov, T.; Artetxe, M.; Wang, T.; Chen, S.; Simig, D.; Ott, M.; Goyal, N.; Bhosale, S.; Du, J.; et al. Few-shot Learning with Multilingual Generative Language Models. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, UAE, 7–11 December 2022; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 9019–9052. [Google Scholar]

- Asai, A.; Kudugunta, S.; Yu, X.; Blevins, T.; Gonen, H.; Reid, M.; Tsvetkov, Y.; Ruder, S.; Hajishirzi, H. BUFFET: Benchmarking Large Language Models for Few-shot Cross-lingual Transfer. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Mexico City, Mexico, 16–21 June 2024; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 1771–1800. [Google Scholar]

- Jiang, A.Q.; Sablayrolles, A.; Mensch, A.; Bamford, C.; Chaplot, D.S.; de las Casas, D.; Bressand, F.; Lengyel, G.; Lample, G.; Saulnier, L.; et al. Mistral 7B. arXiv 2023, arXiv:2310.06825.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.

- Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 Herd of Models. arXiv 2024, arXiv:2407.21783.

- Zhang, P.; Shao, N.; Liu, Z.; Xiao, S.; Qian, H.; Ye, Q.; Dou, Z. Extending Llama-3’s Context Ten-Fold Overnight. arXiv 2024, arXiv:2404.19553.

- Li, P.; Zhang, Z.; Wang, J.; Li, L.; Jatowt, A.; Yang, Z. ACROSS: An Alignment-based Framework for Low-Resource Many-to-One Cross-Lingual Summarization. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 2458–2472.

- Ram, O.; Levine, Y.; Dalmedigos, I.; Muhlgay, D.; Shashua, A.; Leyton-Brown, K.; Shoham, Y. In-Context Retrieval-Augmented Language Models. arXiv 2023, arXiv:2302.00083.

- Zhang, Y.; Feng, S.; Tan, C. Active Example Selection for In-Context Learning. arXiv 2022, arXiv:2211.04486.

- Min, S.; Lyu, X.; Holtzman, A.; Artetxe, M.; Lewis, M.; Hajishirzi, H.; Zettlemoyer, L. Rethinking the Role of Demonstrations: What Makes In-Context Learning Work? arXiv 2022, arXiv:2202.12837.

- Nie, E.; Liang, S.; Schmid, H.; Schütze, H. Cross-Lingual Retrieval Augmented Prompt for Low-Resource Languages. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 8320–8340.

- Bhattacharjee, A.; Hasan, T.; Ahmad, W.U.; Li, Y.-F.; Kang, Y.-B.; Shahriyar, R. CrossSum: Beyond English-Centric Cross-Lingual Summarization for 1500+ Language Pairs. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 2541–2564.

- Chen, J.; Xiao, S.; Zhang, P.; Luo, K.; Lian, D.; Liu, Z. BGE M3-Embedding: Multi-Lingual, Multi-Functionality, Multi-Granularity Text Embeddings Through Self-Knowledge Distillation. arXiv 2024, arXiv:2402.03216.

- Lin, C.-Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Stroudsburg, PA, USA, 2004; pp. 74–81.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. arXiv 2020, arXiv:1904.09675.

- Conneau, A.; Khandelwal, K.; Goyal, N.; Chaudhary, V.; Wenzek, G.; Guzmán, F.; Grave, E.; Ott, M.; Zettlemoyer, L.; Stoyanov, V. Unsupervised Cross-lingual Representation Learning at Scale. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 8440–8451.

- Joulin, A.; Grave, E.; Bojanowski, P.; Douze, M.; Jégou, H.; Mikolov, T. FastText.zip: Compressing text classification models. arXiv 2016, arXiv:1612.03651.

- Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of Tricks for Efficient Text Classification. arXiv 2016, arXiv:1607.01759.

- Zhang, J.; Zhang, R.; Xu, L.; Lu, X.; Yu, Y.; Xu, M.; Zhao, H. FasterSal: Robust and Real-Time Single-Stream Architecture for RGB-D Salient Object Detection. IEEE Trans. Multimed. 2025, 27, 2477–2488.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).