Abstract

Hydraulic concrete is a critical infrastructure material, with its surface condition playing a vital role in quality assessments for water conservancy and hydropower projects. However, images taken in complex hydraulic environments often suffer from degraded quality due to low lighting, shadows, and noise, making it difficult to distinguish defects from the background and thereby hindering accurate defect detection and damage evaluation. In this study, following systematic analyses of hydraulic concrete color space characteristics, we propose a Dual-Branch Luminance–Chrominance Attention Network (DBLCANet-HCIE) specifically designed for low-light hydraulic concrete image enhancement. Inspired by human visual perception, the network simultaneously improves global contrast and preserves fine-grained defect textures, which are essential for structural analysis. The proposed architecture consists of a Luminance Adjustment Branch (LAB) and a Chroma Restoration Branch (CRB). The LAB incorporates a Luminance-Aware Hybrid Attention Block (LAHAB) to capture both the global luminance distribution and local texture details, enabling adaptive illumination correction through comprehensive scene understanding. The CRB integrates a Channel Denoiser Block (CDB) for channel-specific noise suppression and a Frequency-Domain Detail Enhancement Block (FDDEB) to refine chrominance information and enhance subtle defect textures. A feature fusion block is designed to fuse and learn the features of the outputs from the two branches, resulting in images with enhanced luminance, reduced noise, and preserved surface anomalies. To validate the proposed approach, we construct a dedicated low-light hydraulic concrete image dataset (LLHCID). Extensive experiments conducted on both LOLv1 and LLHCID benchmarks demonstrate that the proposed method significantly enhances the visual interpretability of hydraulic concrete surfaces while effectively addressing low-light degradation challenges.

1. Introduction

Hydraulic concrete is a widely used construction material in hydraulic engineering that plays a crucial role in the development of infrastructure. Accurate analyses and assessments of hydraulic concrete properties are essential for ensuring the quality, safety, and durability of concrete structures []. Image-based analysis has emerged as a powerful tool for the non-destructive evaluation of hydraulic concrete, enabling the extraction of valuable information about its physical characteristics.

Many hydraulic structures are located in mountainous regions, where the environment is complex and includes numerous structural elements that require detailed inspection []. The quality of hydraulic concrete images is significantly influenced by factors such as the lighting conditions, precipitation, haze, and other environmental elements, with these effects being particularly pronounced in such challenging natural settings. The inherent complexity of hydraulic concrete images is manifested in drastic variations in luminance, contrast, and color, which pose significant challenges for subsequent visual tasks such as defect detection, as shown in Figure 1. Therefore, it is crucial to explore efficient image enhancement techniques to meet the stringent demands for image quality and clarity in practical applications []. In recent years, numerous methods have emerged in the field of image enhancement, including techniques based on histogram equalization [], methods grounded in Retinex theory [], strategies within deep learning frameworks [], and, more recently, transformer-based techniques []. However, most of these methods focus on enhancing images in general scenes, with relatively little research on specialized enhancement methods for hydraulic concrete images. As a result, when these methods are applied to hydraulic concrete images, further adaptation and optimization are often necessary to fully realize their potential.

Figure 1.

Images of a hydraulic concrete surface with poor luminance: (a) low light; (b) non-uniform luminance.

To address the unique challenges of hydraulic concrete image enhancement, we propose a Dual-Branch Luminance–Chrominance Attention Network, termed DBLCANet-HCIE. Our approach explicitly targets the texture-rich and defect-sensitive characteristics of hydraulic concrete surfaces. We first perform a detailed analysis of the color space properties of hydraulic concrete images and demonstrate the representational advantages of the YCbCr color space. Based on this, we decompose the enhancement process into two complementary subtasks: luminance adjustment and chrominance restoration. By incorporating hybrid attention mechanisms tailored to each branch and channel-wise denoising operations, our model effectively enhances low-light regions while preserving critical defect textures and structural details that are essential for downstream analysis. To support model training and evaluation, we construct a dedicated dataset of low-light hydraulic concrete images. Extensive experiments show that our method outperforms several state-of-the-art techniques across multiple image quality assessment metrics. Furthermore, in practical surface defect detection tasks on real hydraulic concrete structures, our enhanced images yield a substantial improvement in detection accuracy compared to their unenhanced counterparts, validating the effectiveness of our design in real-world scenarios.

Our main contributions are summarized as follows:

- We analyze the color space characteristics of low-light hydraulic concrete images and propose a novel Dual-Branch Luminance–Chrominance Attention Network (DBLCANet-HCIE) that is specifically designed to address the challenges of poor visibility and degraded texture in such images. The network jointly performs luminance adjustment and chrominance restoration to enhance both global contrast and fine structural details.

- We design a Luminance Adjustment Branch (LAB) and a Chroma Restoration Branch (CRB). The LAB integrates a Luminance-Aware Hybrid Attention Block (LAHAB) to adaptively enhance brightness in low-contrast regions where surface defects are often obscured. The CRB incorporates a Channel Denoiser Block (CDB) and a Frequency-Domain Detail Enhancement Block (FDDEB), effectively preserving and enhancing fine-grained defect textures and chrominance information crucial for structural inspection.

- We construct a low-light hydraulic concrete image dataset (LLHCID) to facilitate effective training and validation of the model. Our experiments show that our DBLCANet-HCIE achieves satisfactory results in terms of several image quality metrics.

2. Related Work

Relatively few studies have specifically focused on hydraulic concrete images alone, but the related image enhancement methods are worth considering for reference. The image enhancement methods include traditional methods and deep-learning-based methods. The following section reviews these two method types, along with an exploration of recent advancements in transformer-based image enhancement techniques.

2.1. Traditional Methods

The traditional methods can mainly be classified into those based on histogram equalization and those based on Retinex. The principle of histogram equalization entails the uniform dissemination of grayscale values in an initial image, originally confined within a relatively narrow range, across the entire grayscale spectrum []. Zuiderveld [] proposed Contrast Limited Adaptive Histogram Equalization (CLAHE). By imposing a clipping limit on the histogram prior to redistributing the intensities, CLAHE ensures that the enhancement process remains constrained within a reasonable range. Lee et al. [] utilized the Local Difference Representation (LDR) technique to augment the differentiation of neighboring pixel intensities within a two-dimensional histogram. Li et al. [] proposed an underwater image enhancement method for defogging based on the underwater dark channel prior, which operates with minimal information loss and incorporates histogram prior distributions.

Unlike the histogram equalization methods, Retinex theory, introduced by Land et al. [], facilitates image enhancement by decomposing images into their illumination and reflectance components. Building on this foundation, Jobson et al. [,] developed Single-scale Retinex (SSR) and Multi-scale Retinex (MSR) techniques for improving low-light images. SSR is noted for its effective edge enhancement but may result in over-enhancement and subsequent information loss. Conversely, MSR offers advantages in color enhancement, color constancy, and dynamic range compression; however, it faces challenges in preserving smooth edges and high-frequency details. To address these issues, Si et al. [] proposed SSRBF, which integrates SSR with a Bilateral Filter (BF) to mitigate lighting problems and enhance video image quality. Xiao et al. [] introduced a scaled Retinex method incorporating fast mean filtering in the hue–saturation–value (HSV) color space for rapid image enhancement. Gu et al. [] suggested a fractional-order variation-based Retinex model for enhancing severely underlit images, while Hao et al. [] presented a semi-decoupled decomposition approach for low-light image enhancement. Zhang et al. [] transformed the Retinex approach into a statistical inference problem by utilizing a bidirectional perceptual similarity method to enhance underexposed images. Xi et al. [] proposed a composite algorithm for shadow removal from UAV remote sensing images by combining the Retinex algorithm with a two-dimensional gamma function. Nevertheless, when these algorithms are applied to images captured in the complex environment of hydraulic concrete structures, issues of distortion and restoration persist.

2.2. Deep-Learning-Based Methods

With the advancement of deep learning, researchers have increasingly attempted to apply methods such as Convolutional Neural Networks (CNNs) to image enhancement tasks. LLNet [] is an early deep-learning-based algorithm for low-light image enhancement that employs a modified stacked sparse denoising autoencoder capable of learning from synthetically darkened and noise-injected training samples. It can effectively enhance the quality of images captured in natural low-light environments or those impacted by hardware degradation. Numerous image enhancement methods based on CNNs or the integration of CNNs with Retinex theory have subsequently been proposed. For example, Li et al. [] presented LightenNet, a trainable CNN for weakly illuminated image enhancement that outputs an illumination map for enhanced images based on the Retinex model. Wei et al. [] introduced a deep Retinex-Net trained on a low-light dataset (LOL) comprising low-/normal-light image pairs, including Decom-Net for decomposition and Enhance-Net for illumination adjustment and joint denoising on reflectance. Zhang et al. [] proposed the Deep Color Consistency Network (DCC-Net), which learns to enhance low-light images by decomposing color images into two main components: a grayscale image and a color histogram. In addition, Park et al. [] constructed a lighting block using a U-shaped network and a saturation-guided fusion module. Wei et al. [] proposed a Degradation-Aware Deep Retinex Network (DA-DRN) for enhancing low-light images. DA-DRN is composed of a decomposition U-Net and an enhancement U-Net. Yan et al. [] proposed a novel trainable color space, named Horizontal/Vertical Intensity (HVI), which not only decouples luminance and color from RGB channels to mitigate the instability during enhancement but also adapts to low-light images in different illumination ranges due to its trainable parameters. Wang et al. [] proposed a luminance perception-based recursive enhancement framework for high-dynamic-range low-light image enhancement (LLIE). This framework consists of two parallel subnetworks: an adaptive contrast and texture enhancement network (ACT-Net) and a luminance perception network (BP-Net). Furthermore, several studies have investigated GAN-based approaches for underwater image enhancement, aiming to emphasize and preserve the salient features of underwater targets. Wang et al. [] proposed a Content-Style Control Network (CSC-SCL) for underwater image enhancement that improves domain generalization by integrating content and style normalization in the generator. To enhance visual quality, they introduced style contrastive learning that aligns enhanced image styles with clear images. Chen et al. [] proposed an underwater crack image enhancement network (UCE-CycleGAN). This network employs multi-feature fusion, incorporating edge maps and dark channels to mitigate blurring and enhance texture details.

2.3. Transformer-Based Methods

Recently, the introduction of transformer [] architectures has propelled Vision Transformers (ViTs) [] to outstanding performance across a range of visual tasks. This has facilitated the proliferation of transformer-based methods in the field of image enhancement. These methods harness the self-attention mechanisms of transformers to address complex spatial relationships and nonlinear degradation patterns, ultimately enhancing image quality and detail [,]. Wang et al. [] introduced a transformer-based network named LLFormer, specifically for enhancing low-light images. This network utilizes an axis-based transformer block that executes self-attention operations along the height and width dimensions within a defined window. Cai et al. [] integrated Retinex theory with the transformer architecture to propose Retinexformer, a model designed for low-light image enhancement. Dang et al. [] proposed PPformer, a lightweight and effective network based on pixel-wise and patch-wise cross-attention mechanisms. Wang et al. [] proposed a novel luminance and chrominance dual-branch network, termed LCDBNet, for low-light image enhancement. It divides low-light image enhancement into two sub-tasks: luminance adjustment and chrominance restoration. Brateanu et al. [] introduced LYT-Net, a low-light image enhancement model based on lightweight transformers in the YUV color space. Complementary to these efforts, Li et al. [] designed ERNet with parallel multiview attention branches, effectively enhancing and preserving discriminative defect features from multiple perspectives, which is particularly beneficial for detail-sensitive enhancement tasks.

The related methods are developing towards multi-branch tasks and combinations of multiple methods. The above methods have rarely been applied in the study of hydraulic concrete image enhancement. Our study analyzes the characteristics of hydraulic concrete images and designs a targeted hydraulic concrete image enhancement method.

3. Methods

3.1. Color Space

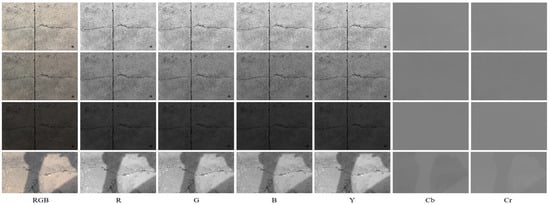

As shown in Figure 2, we conducted a comparative analysis of hydraulic concrete images with varying luminance levels in both the RGB and YCbCr color spaces. The results indicate that hydraulic concrete images exhibit similar content characteristics across each channel in the RGB space, primarily due to the limited color richness of these images. In the YCbCr color space, the Y channel effectively reflects the overall luminance of the image, while the Cb and Cr channels preserve image detail information well, particularly in terms of defect details.

Figure 2.

Color space analysis of hydraulic concrete images.

Because the input images of hydraulic concrete are in the RGB space, we place a color space conversion module at the first stage of our model. This module transforms images from the RGB space to the YCbCr space, separating the information carried by the three channels: Y (luminance), Cb, and Cr. These channels are then passed to the subsequent modules for further processing and enhancement.

Based on the BT.601 [] specification, a color space transformation block (CSTB) was designed to transform RGB into YCbCr. The specific conversion method is outlined as follows:

$$
\begin{aligned}
Y &= 0.299R + 0.587G + 0.114B \\
C_b &= -0.169R - 0.331G + 0.500B + 128 \\
C_r &= 0.500R - 0.419G - 0.081B + 128
\end{aligned}
$$

where $Y$ represents the luminance channel and $C_b$ and $C_r$ represent the chrominance channels. $R$, $G$, and $B$ represent the red, green, and blue channels, respectively, in the RGB color space of an image. Based on the distinct characteristics of $Y$, $C_b$, and $C_r$, various processing methods have been specifically designed for luminance and chrominance handling, with the aim of obtaining high-quality images of hydraulic concrete.
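As a minimal sketch of the conversion performed by the CSTB, the following NumPy snippet applies the full-range BT.601 matrix. It assumes images scaled to [0, 1], so the chroma offset is 0.5 (128 for 8-bit data). The function names and the choice of the full-range variant are illustrative assumptions, not details confirmed by the text.

```python
import numpy as np

# Full-range BT.601 coefficients (JPEG/JFIF convention). Assumed here:
# the exact range and offset used in the paper are not stated in the text.
RGB2YCBCR = np.array([
    [0.299, 0.587, 0.114],
    [-0.168736, -0.331264, 0.5],
    [0.5, -0.418688, -0.081312],
])
CHROMA_OFFSET = np.array([0.0, 0.5, 0.5])


def rgb_to_ycbcr(rgb):
    """Convert an (H, W, 3) float RGB image in [0, 1] to YCbCr."""
    return rgb @ RGB2YCBCR.T + CHROMA_OFFSET


def ycbcr_to_rgb(ycbcr):
    """Invert rgb_to_ycbcr using the inverse of the same matrix."""
    return (ycbcr - CHROMA_OFFSET) @ np.linalg.inv(RGB2YCBCR).T
```

Because concrete surfaces are close to gray, most pixels map to chroma values near the offset, which is consistent with the observation that the RGB channels carry similar content.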

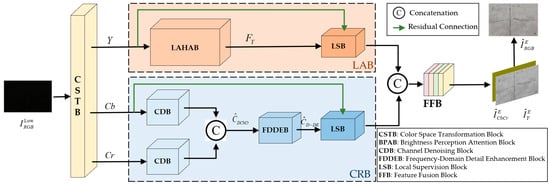

3.2. Overall Framework

Figure 3 illustrates the architecture of our DBLCANet-HCIE network for hydraulic concrete image enhancement, which features a dual-path structure integrating four principal components: (a) a color space transformation block (CSTB), (b) a Luminance Adjustment Branch (LAB), (c) a Chroma Restoration Branch (CRB), and (d) a feature fusion block (FFB). The input image is converted into the YCbCr color space using the CSTB, resulting in three components: Y, Cb, and Cr. The Y component is processed in the LAB via the LAHAB, which extracts both global and local luminance features. These features are then directed to the LSB for local supervised enhancement guided by luminance attention features. Meanwhile, the Cb and Cr components enter the CDB, where channel denoising is performed. After denoising, the Cb and Cr components are merged and passed to the FDDEB for texture detail enhancement, followed by local supervision in the LSB. The outputs from both branches are fused through the FFB to achieve overall image enhancement. The enhanced image remains in the YCbCr space. The final enhanced RGB image is obtained by performing a color space conversion.

Figure 3.

Overall framework of DBLCANet-HCIE.

3.3. Luminance Adjustment Branch (LAB)

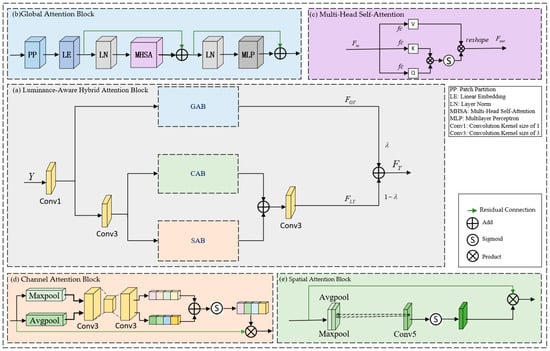

The LAB is responsible for adaptively enhancing the luminance of low-light hydraulic concrete images. At its core lies the LAHAB, the structure of which is illustrated in Figure 4. To effectively extract luminance features, the LAHAB adopts a multi-branch attention mechanism operating on the Y channel, integrating both global and local luminance perception for comprehensive modeling.

Figure 4.

The framework of the LAHAB. (a) Luminance-Aware Hybrid Attention Block. (b) Global Attention Block. (c) Multi-head self-attention. (d) Channel Attention Block. (e) Spatial Attention Block.

The Global Attention Block (GAB) is constructed based on the Swin Transformer architecture [], with its core comprising multi-head self-attention (MHSA). The GAB is responsible for capturing global luminance representations, enabling the model to understand the overall brightness distribution and structural context of the image. Inspired by [], the input luminance feature map is first partitioned into non-overlapping windows, followed by window-based self-attention and shifted window operations to model long-range dependencies. We adopt the feature separation operation to divide the query (Q), key (K), and value (V) into equal parts and obtain $Q_i$, $K_i$, and $V_i$. The calculation formula for each attention head is as follows:

$$
\mathrm{Attention}(Q_i, K_i, V_i) = \mathrm{Softmax}\left(\frac{Q_i K_i^{T}}{\sqrt{d_k}}\right) V_i
$$

where $d_k = C/h$, $h$ is the number of attention heads, and $C$ represents the number of channels.
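The per-head attention computation can be illustrated with a small NumPy sketch for the tokens of a single window. The projection matrices `w_q`, `w_k`, and `w_v` are hypothetical placeholders for learned weights, and window partitioning, window shifting, and relative position bias are deliberately omitted.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, num_heads):
    """x: (N, C) tokens of one window; w_q, w_k, w_v: (C, C) projections."""
    n, c = x.shape
    d = c // num_heads  # channels per head

    def split_heads(t):
        # (N, C) -> (heads, N, d): channels are divided into equal parts
        return t.reshape(n, num_heads, d).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d)  # (heads, N, N)
    out = softmax(scores, axis=-1) @ v              # (heads, N, d)
    # Concatenate the heads back into (N, C)
    return out.transpose(1, 0, 2).reshape(n, c)
```

For an 8 × 8 window, `x` would hold 64 tokens, so the attention matrix per head is 64 × 64 regardless of the image size, which is what keeps window attention tractable.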

The Y-channel data are convolved to obtain the luminance feature map, which is then processed by the different attention modules. Finally, the resulting features are adaptively fused. The process is as follows:

$$
F_{la} = \alpha F_{g} + (1 - \alpha) F_{l}
$$

where $\alpha$ is an adaptive fusion parameter whose value is updated automatically during training. The initial value of $\alpha$ is set to 0.5. $F_{la}$ represents the luminance-aware feature, $F_{g}$ represents the global luminance feature, and $F_{l}$ represents the local luminance feature.
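A minimal sketch of the adaptive fusion step follows, under the assumption that the global and local luminance features are blended as a convex combination controlled by a single learnable scalar initialized to 0.5. The exact fusion form is not recoverable from the text, so both function names and the blending rule are illustrative.

```python
import numpy as np


def fuse_luminance_features(f_global, f_local, alpha=0.5):
    """Blend global and local luminance features with one scalar weight.

    Assumed form: alpha * f_global + (1 - alpha) * f_local.
    """
    return alpha * f_global + (1.0 - alpha) * f_local


def alpha_gradient(upstream_grad, f_global, f_local):
    """Gradient of a scalar loss with respect to alpha for the blend above.

    Since d(fused)/d(alpha) = f_global - f_local, the chain rule gives the
    sum of upstream_grad * (f_global - f_local) over all elements.
    """
    return float(np.sum(upstream_grad * (f_global - f_local)))
```

The gradient expression shows why such a parameter can be learned: it moves toward whichever branch reduces the loss more on the current batch.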

To complement the global perception with finer detail understanding, we further design a Channel Attention Block (CAB) and a Spatial Attention Block (SAB), following the principles proposed in [,], respectively. These two modules focus on local luminance features, such as crack boundaries, shadows, and texture variations, which are critical for highlighting defect regions. The CAB and SAB are connected in parallel, allowing the model to simultaneously attend to inter-channel relevance and spatial saliency. Convolutional layers with a kernel size of 3 are applied both before and after the attention modules to refine feature representation and ensure local consistency.

3.4. Chroma Restoration Branch (CRB)

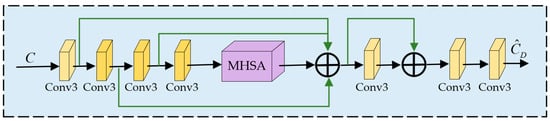

The CRB is responsible for recovering the color information (Cb and Cr channels) of hydraulic concrete images, which is essential for restoring visual realism and preserving defect-related chromatic cues. As shown in Figure 3, the CRB is primarily composed of two core modules: the CDB and the FDDEB.

Inspired by the work in [] and as illustrated in Figure 5, we design the CDB with MHSA as its core component, integrating convolutional and attention mechanisms to independently denoise the Cb and Cr channels. Specifically, each chroma channel is passed through the CDB, where it undergoes four convolutional layers, the first with a stride of 1 to preserve local information and the remaining three with strides of 2 to progressively down-sample and extract features at multiple spatial scales. This design enables the network to capture both fine and coarse chrominance representations. The multi-scale features are then processed through MHSA to model global dependencies. A residual connection is applied to maintain feature integrity, followed by an up-sampling operation to recover the original resolution, thus completing the channel-wise denoising process.
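To make the multi-scale structure of the CDB concrete, the sketch below traces the spatial size of a feature map through four convolutions with strides 1, 2, 2, and 2. The kernel size of 3 and padding of 1 are assumptions for illustration; the text specifies only the strides.

```python
def conv_out_size(size, kernel=3, stride=1, padding=1):
    """Spatial output size of a convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def cdb_feature_sizes(size, strides=(1, 2, 2, 2)):
    """Spatial sizes after each of the four CDB convolutions."""
    sizes = []
    for stride in strides:
        size = conv_out_size(size, stride=stride)
        sizes.append(size)
    return sizes


# A 128 x 128 training patch shrinks by a factor of 8 overall,
# so the final up-sampling must scale by 8 to restore the resolution.
print(cdb_feature_sizes(128))
```

Under these assumptions, MHSA operates on a 16 × 16 map for a 128 × 128 patch, which keeps the cost of global attention modest.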

Figure 5.

The framework of the Channel Denoiser Block (CDB).

The CDB independently denoises the Cb and Cr channels and leverages multi-scale feature extraction to effectively suppress chromatic noise while preserving the subtle chrominance details essential for detecting surface defects in low-light hydraulic concrete images.

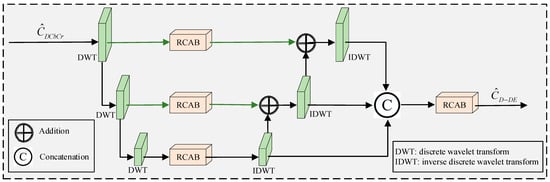

Inspired by [], we design an FDDEB within the CRB, aiming to more effectively capture and preserve the structural and textural information critical to hydraulic concrete surface inspection. In particular, this design is tailored to address the challenges of low-contrast defect boundaries, fine cracks, and subtle surface variations that are commonly present in real-world hydraulic concrete images.

As illustrated in Figure 6, within the FDDEB, denoised–merged chroma channel information undergoes a three-level discrete wavelet transform (DWT) for progressive down-sampling, followed by a symmetrical three-level inverse discrete wavelet transform (IDWT) during up-sampling. This wavelet-based decomposition captures both low-frequency chrominance context and high-frequency detail features, enabling the model to retain multi-scale texture cues such as crack edges, rough surfaces, and defect contours that are often lost in conventional down-sampling operations.
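The three-level decomposition and its symmetric reconstruction can be sketched with a 2D Haar transform in NumPy. The Haar wavelet is an assumption (the text does not name the wavelet family), and the RCAB processing between levels is omitted, so this shows only the lossless down-sampling and up-sampling path.

```python
import numpy as np


def haar_dwt2(x):
    """One level of an orthonormal 2D Haar DWT; H and W must be even."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    low = (a + b + c + d) / 2.0      # low-frequency approximation
    d_cols = (a - b + c - d) / 2.0   # differences across columns
    d_rows = (a + b - c - d) / 2.0   # differences across rows
    d_diag = (a - b - c + d) / 2.0   # diagonal differences
    return low, (d_cols, d_rows, d_diag)


def haar_idwt2(low, bands):
    """Exact inverse of haar_dwt2."""
    d_cols, d_rows, d_diag = bands
    h, w = low.shape
    x = np.empty((2 * h, 2 * w), dtype=float)
    x[0::2, 0::2] = (low + d_cols + d_rows + d_diag) / 2.0
    x[0::2, 1::2] = (low - d_cols + d_rows - d_diag) / 2.0
    x[1::2, 0::2] = (low + d_cols - d_rows - d_diag) / 2.0
    x[1::2, 1::2] = (low - d_cols - d_rows + d_diag) / 2.0
    return x


def wavedec(x, levels=3):
    """Apply the DWT repeatedly to the low-frequency band."""
    details = []
    for _ in range(levels):
        x, bands = haar_dwt2(x)
        details.append(bands)
    return x, details


def waverec(low, details):
    """Rebuild the input from the coarsest band and stored details."""
    for bands in reversed(details):
        low = haar_idwt2(low, bands)
    return low
```

Unlike strided convolution or pooling, each level keeps the three detail bands, so crack edges removed from the low-frequency path remain available for the reconstruction.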

Figure 6.

The framework of the Frequency-Domain Detail Enhancement Block.

To further refine these decomposed features, each DWT level is processed through a Residual Channel Attention Block (RCAB) [], which selectively enhances informative channels while suppressing irrelevant or noisy responses. These RCAB-processed features are then connected via residual links to their corresponding IDWT levels, ensuring effective gradient flow and feature reuse. After up-sampling, the fused multi-scale outputs are again refined by an RCAB module to produce the final chrominance-restored feature map.

This hierarchical design allows the network to emphasize defect-relevant high-frequency textures in the chroma channels, which are often critical for visual distinction between damaged and intact regions. The FDDEB achieves a fine balance between detail enhancement and chroma fidelity, thereby improving the interpretability of enhanced images.

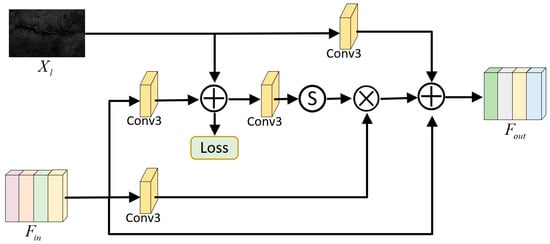

3.5. Local Supervision Block (LSB)

The LSB is designed in the LAB and CRB to obtain the locally enhanced output of each branch while strengthening the effective feature map and improving the model’s training efficiency. For the design of the block, we referred to the literature []. The structure of the LSB is shown in Figure 7.

Figure 7.

The structure of the LSB.

In the LSB, the input features generate residual features through convolution operations. The residual features are added to the low-light image to obtain an enhanced image, which is supervised locally by the ground-truth image. The enhanced image is then convolved and passed through a sigmoid function to generate an attention mask, which recalibrates the transformed local features to produce an attention-guided feature. Finally, the enhanced feature is obtained by adding the input features through a residual link.
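The data flow of the LSB can be sketched as follows. Pointwise (1 × 1) convolutions stand in for the convolution layers, and the weight matrices `w_res`, `w_feat`, and `w_mask` are hypothetical placeholders for learned parameters; the kernel sizes actually used are not stated in the text.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv1x1(x, w):
    """Pointwise convolution: x is (C_in, H, W), w is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def local_supervision_block(feat, low_img, w_res, w_feat, w_mask):
    """Return (enhanced feature, locally enhanced image).

    feat:    (C, H, W) branch features
    low_img: (C_img, H, W) low-light input of the branch
    """
    residual = conv1x1(feat, w_res)        # features -> image residual
    enhanced_img = low_img + residual      # compared with ground truth in training
    mask = sigmoid(conv1x1(enhanced_img, w_mask))  # attention mask in (0, 1)
    attended = conv1x1(feat, w_feat) * mask        # recalibrated local features
    return feat + attended, enhanced_img           # residual link to the input
```

The second return value is what a per-branch loss would be computed on, while the first is passed on to the next stage.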

3.6. Feature Fusion Block (FFB)

The output feature maps from the LAB and CRB are then fed into the FFB, which combines the luminance-adjusted and chrominance-restored features to produce the final enhanced hydraulic concrete image.

The FFB employs a series of convolutional layers and skip connections to integrate the learned features from both the luminance adjustment and chrominance restoration branches. Feature fusion is carried out with six layers of 3 × 3 convolution operations, and one 3 × 3 convolution with three output channels is used to produce an enhanced YCbCr image, which is finally converted to the RGB space. This fusion process ensures that the enhanced image retains the desired balance between luminance and chrominance, optimizing the overall visual quality.

3.7. Loss Function

In our approach, a hybrid loss function was designed to train the DBLCANet-HCIE model, obtained by weighting the luminance branch loss, the chrominance branch loss, and the luminance–chrominance fusion loss. The overall loss formula is as follows:

$$
\mathcal{L} = \mathcal{L}_{F} + \lambda_1 \mathcal{L}_{LAB} + \lambda_2 \mathcal{L}_{CRB}
$$

where $\mathcal{L}_{LAB}$ and $\mathcal{L}_{CRB}$ are used to optimize the LAB and CRB, respectively, and $\mathcal{L}_{F}$ is the loss function optimized by the YCbCr image enhancement model. $\lambda_1$ and $\lambda_2$ are hyperparameters that balance the contributions of $\mathcal{L}_{LAB}$ and $\mathcal{L}_{CRB}$. After extensive experimentation, the optimal values for $\lambda_1$ and $\lambda_2$ were both set to 0.15, as this combination yielded the most favorable trade-off between training stability and enhancement performance.

To address the challenges of hydraulic concrete image enhancement, including defect visibility improvement and surface texture preservation, the enhancement loss $\mathcal{L}_{enh}$ synergistically combines the structural similarity (SSIM) loss, edge-aware loss, and Charbonnier loss. It is expressed as follows:

$$
\mathcal{L}_{enh} = \omega_1 \mathcal{L}_{SSIM} + \omega_2 \mathcal{L}_{edge} + \omega_3 \mathcal{L}_{char}
$$

where $\omega_1$, $\omega_2$, and $\omega_3$ are weight hyperparameters reflecting the contribution degrees of each sub-loss. After extensive experimentation, the optimal values for $\omega_1$, $\omega_2$, and $\omega_3$ were set to 0.6, 0.3, and 0.1, respectively, as this combination yielded the most favorable trade-off between training stability and enhancement performance.
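The weighting scheme can be summarized in a few lines of Python. This is a sketch under two assumptions: the weights 0.6, 0.3, and 0.1 are assigned to the SSIM, edge-aware, and Charbonnier terms in the order listed, and the fusion loss enters the total with unit weight while the two branch losses are scaled by 0.15.

```python
def combined_sub_loss(l_ssim, l_edge, l_char, weights=(0.6, 0.3, 0.1)):
    """Weighted sum of the SSIM, edge-aware, and Charbonnier losses.

    The mapping of weights to terms follows the order in the text (assumed).
    """
    w_ssim, w_edge, w_char = weights
    return w_ssim * l_ssim + w_edge * l_edge + w_char * l_char


def total_loss(l_fusion, l_lab, l_crb, lam_lab=0.15, lam_crb=0.15):
    """Fusion loss plus down-weighted branch (local supervision) losses."""
    return l_fusion + lam_lab * l_lab + lam_crb * l_crb
```

With both branch weights at 0.15, the branch outputs contribute at most 30% of the fusion term's scale when all three losses are equal, so the fused output dominates the gradient signal.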

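The SSIM loss preserves the global structural consistency between enhanced and reference images through luminance, contrast, and pattern comparisons within local windows. The SSIM [] is calculated as follows:

$$
\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)}
$$

where $x$ and $y$ are the recovered image and ground-truth image, $\mu_x$ and $\mu_y$ are the average values for image $x$ and image $y$ within local windows, $\sigma_x^2$ and $\sigma_y^2$ are the variances of image $x$ and image $y$, $\sigma_{xy}$ denotes the covariance between image $x$ and image $y$, and $c_1$ and $c_2$ are two fixed small constants to avoid division by zero. The SSIM loss [] is calculated as follows:

$$
\mathcal{L}_{SSIM} = 1 - \mathrm{SSIM}(x, y)
$$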
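For illustration, the snippet below evaluates the SSIM expression over a single window covering the whole image, which is a simplification of the local-window computation described above. The stabilizing constants use the common choice of (0.01 L)² and (0.03 L)² for dynamic range L; that choice is an assumption about the configuration used here.

```python
import numpy as np


def ssim_global(x, y, data_range=1.0):
    """SSIM computed with one window spanning the entire image."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def ssim_loss(x, y, data_range=1.0):
    """Loss that is zero for identical images and grows as structure diverges."""
    return 1.0 - ssim_global(x, y, data_range)
```

A practical implementation would slide a Gaussian or uniform window over the image and average the local SSIM values, which makes the loss sensitive to where structural errors occur.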

The Charbonnier loss [] serves as a noise-resilient alternative to conventional L1 norms, combining the advantages of gradient stability and outlier robustness. Its formulation is as follows:

$$
\mathcal{L}_{char} = \sqrt{\lVert x - y \rVert^2 + \varepsilon^2}
$$

where $x$ and $y$ denote the recovered and ground-truth images, and $\varepsilon$ ensures continuous gradients while maintaining robustness to the sensor noise and compression artifacts prevalent in field-captured concrete images. $\varepsilon$ was set to 0.001.
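A short NumPy sketch of the Charbonnier penalty follows. It uses the element-wise mean variant that is common in implementations, which is an assumption about how the norm is reduced; the constant 0.001 is taken from the text.

```python
import numpy as np


def charbonnier_loss(pred, target, eps=1e-3):
    """Mean of sqrt(diff^2 + eps^2): a smooth approximation of the L1 loss."""
    diff = pred - target
    return float(np.mean(np.sqrt(diff * diff + eps * eps)))
```

For differences much larger than `eps` the penalty behaves like an absolute error, while near zero it is quadratic, so the gradient stays defined where a plain L1 loss has a kink.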

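To explicitly enforce edge consistency between enhanced and reference images, we propose a gradient alignment loss based on Sobel operators. Its formulation is as follows:

$$
\mathcal{L}_{edge} = \sqrt{\lVert \nabla_h x - \nabla_h y \rVert^2 + \lVert \nabla_v x - \nabla_v y \rVert^2 + \varepsilon^2}
$$

where $x$ and $y$ denote the recovered and ground-truth images, and $\nabla_h$ and $\nabla_v$ represent the horizontal and vertical gradient operators, respectively. $\varepsilon$ was again set to 0.001.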
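The gradient alignment idea can be sketched with explicit Sobel filtering in NumPy. The way the two gradient differences are combined (a Charbonnier-style penalty averaged over pixels) is an assumption, as are the function names; only the use of Sobel operators and the constant 0.001 come from the text.

```python
import numpy as np

SOBEL_H = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])  # responds to horizontal intensity changes
SOBEL_V = SOBEL_H.T                     # responds to vertical intensity changes


def filter2d_valid(img, kernel):
    """Cross-correlate a 2D image with a 3x3 kernel, without padding."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * img[i:i + h - 2, j:j + w - 2]
    return out


def edge_loss(pred, target, eps=1e-3):
    """Penalize differences between the Sobel gradients of two images."""
    gh = filter2d_valid(pred, SOBEL_H) - filter2d_valid(target, SOBEL_H)
    gv = filter2d_valid(pred, SOBEL_V) - filter2d_valid(target, SOBEL_V)
    return float(np.mean(np.sqrt(gh ** 2 + gv ** 2 + eps ** 2)))
```

Because the Sobel kernels sum to zero, a uniform brightness offset between the two images leaves this loss at its minimum, so the term targets edge structure such as crack boundaries rather than overall luminance.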

4. Experiments and Results

In this section, we introduce the dataset employed in our experiment, detail the experimental environment, and elucidate the training procedures. Furthermore, we present the evaluation metrics and demonstrate the superiority of our approach through a multitude of experimental comparisons. Additionally, ablation studies are conducted on various branches, modules, and loss functions to thoroughly assess their individual contributions.

4.1. Dataset

We trained our model on the LOLv1 dataset [] and a self-built low-light hydraulic concrete image dataset (LLHCID). The LOLv1 dataset, which is frequently utilized for low-light image enhancement methods, comprises 500 pairs of low-light and normal-light images, with 485 pairs designated for training purposes and the remaining 15 pairs for testing.

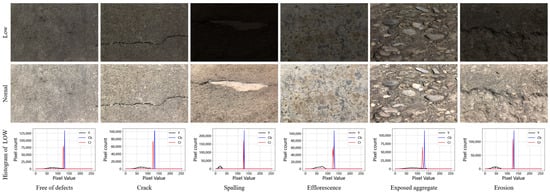

In our research, we found that there is currently no publicly available dataset specifically designed for low-light hydraulic concrete image enhancement. To address this gap and rigorously validate the effectiveness of our proposed method, we constructed a low-light hydraulic concrete image dataset (LLHCID) through a combination of on-site image acquisition and post-processing techniques. The dataset consists of 2000 image pairs, each containing a low-light image and a corresponding bright-light image. Among them, 1600 pairs were used for training, 200 pairs were used for validation, and 200 pairs were used for testing. All images were resized to 600 × 400 pixels using high-quality interpolation with the Pillow library (Python 3.10) and saved in JPEG format to balance detail preservation and input consistency for the model.

All images in the LLHCID were of real-world hydraulic concrete structures located in Southwest China. The image acquisition was conducted using a Huawei Mate60 Pro smartphone—purchased from the official website of Huawei Technologies Co., Ltd. (Shenzhen, China)—mounted on a fixed tripod, with the aperture consistently set to f/2.0. The bright-light images were captured under controlled conditions, with ISO sensitivity ranging from 100 to 2000 and shutter speeds ranging from 1/125 to 1/60 s. The corresponding low-light images were captured at the same sites, using ISO sensitivity ranging from 50 to 80 and shutter speeds between 1/250 and 1/125 s, resulting in brightness levels that were approximately 20–60% of their bright-light counterparts. This acquisition protocol ensures significant luminance contrast while maintaining spatial alignment. Importantly, a large portion of the collected images contain visible surface defects, including cracks, spalling, and corrosion, making the dataset highly relevant for defect-aware enhancement and detection tasks.

To ensure the reliability and diversity of the dataset, all image pairs were manually reviewed and aligned. Figure 8 presents representative samples from the LLHCID, showcasing a variety of paired low-light and bright-light hydraulic concrete images captured under different illumination conditions. The samples include diverse surface states, such as those free of defects and those with cracks, spalling, efflorescence, exposed aggregate, and erosion.

Figure 8.

Sample diagram of the LLHCID.

We further analyzed the histogram distribution of low-light images in the YCbCr color space. The Y channel effectively reflects the overall luminance levels, while the Cb and Cr channels exhibit more concentrated pixel distributions in background regions. In contrast, defect regions tend to show distinguishable deviations in chrominance, allowing subtle defect textures to be better preserved and distinguished through chrominance restoration.

We plan to publicly release part of the dataset in future work to support broader research in low-light enhancement and structural inspection of concrete surfaces.

We used the Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index Measure (SSIM) as two indicators to quantitatively evaluate the performance of the model.
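For reference, PSNR can be computed from the mean squared error as shown in the sketch below. It assumes images scaled to [0, 1]; for 8-bit images, `data_range` would be 255.

```python
import numpy as np


def psnr(pred, target, data_range=1.0):
    """Peak Signal-to-Noise Ratio in decibels."""
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))
```

Because PSNR is logarithmic in the mean squared error, a gain of about 3 dB corresponds to roughly halving the error.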

4.2. Experimental Environment

We implemented our method in the PyTorch 2.5.1 [] framework. All experiments were performed on a system with an Intel(R) Xeon(R) E5-2680 2.70 GHz CPU (Intel, Santa Clara, CA, USA), 256 GB of DDR4 ECC RAM (2133 MHz), and a GeForce RTX 3070 Ti GPU. The Adam [] optimizer with default parameters was used to train the proposed DBLCANet-HCIE model. The learning rate was decayed from its initial value to its minimum value using a cosine annealing schedule over 2000 epochs. During training, we randomly cropped the training images to patches of size 128 × 128 and then applied random flipping and rotation for data augmentation. The batch size was set to 8. To ensure a fair comparison, all baseline methods were reproduced using the officially released source code from their respective publications and executed under identical hardware and software conditions.
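The schedule and augmentation described above can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the authors' training code: `LR_INIT` and `LR_MIN` are hypothetical placeholder values, and the flip probabilities and the restriction of rotations to multiples of 90° are also assumptions. The key point is that the same crop, flip, and rotation must be applied to both images of a pair so that they remain spatially aligned.

```python
import math
import numpy as np

def cosine_annealing_lr(epoch, total_epochs, lr_init, lr_min):
    """Cosine-annealed learning rate, going from lr_init (epoch 0) to lr_min."""
    cos_term = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return lr_min + (lr_init - lr_min) * cos_term

def paired_augment(low, bright, patch=128, rng=None):
    """Apply one random crop, flip, and 90-degree rotation to both images."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = low.shape[:2]
    top = int(rng.integers(0, h - patch + 1))
    left = int(rng.integers(0, w - patch + 1))
    low = low[top:top + patch, left:left + patch]
    bright = bright[top:top + patch, left:left + patch]
    if rng.random() < 0.5:  # horizontal flip
        low, bright = low[:, ::-1], bright[:, ::-1]
    if rng.random() < 0.5:  # vertical flip
        low, bright = low[::-1], bright[::-1]
    k = int(rng.integers(0, 4))  # number of 90-degree rotations
    low, bright = np.rot90(low, k), np.rot90(bright, k)
    return np.ascontiguousarray(low), np.ascontiguousarray(bright)

# Hypothetical placeholder values (NOT the values used in the paper).
LR_INIT, LR_MIN, EPOCHS = 1e-3, 1e-6, 2000
lr_start = cosine_annealing_lr(0, EPOCHS, LR_INIT, LR_MIN)
lr_mid = cosine_annealing_lr(EPOCHS // 2, EPOCHS, LR_INIT, LR_MIN)
lr_end = cosine_annealing_lr(EPOCHS, EPOCHS, LR_INIT, LR_MIN)

# Synthetic 400 x 600 pair; bright = 2 * low lets alignment be verified.
rng = np.random.default_rng(0)
low_img = rng.uniform(0.0, 0.5, size=(400, 600, 3))
bright_img = 2.0 * low_img
low_patch, bright_patch = paired_augment(low_img, bright_img, rng=rng)
```

In a PyTorch training loop, the schedule would normally be delegated to the framework's built-in cosine annealing scheduler rather than computed by hand.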

4.3. Results and Evaluation

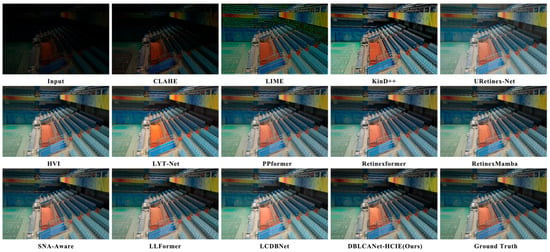

We trained the DBLCANet-HCIE model on the LOLv1 dataset and compared it with 12 other low-light image enhancement methods: CLAHE [], LIME [], KinD++ [], URetinex-Net [], HVI [], LYT-Net [], PPformer [], Retinexformer [], RetinexMamba [], SALLIE [], LCDBNet [], and LLFormer []. Table 1 presents the quantitative performance of these enhancement methods on the LOLv1 dataset. Our proposed method outperformed the majority of these methods in both PSNR and SSIM. Notably, it achieved the best SSIM and the second-highest PSNR, trailing the leading method, HVI, by only 0.12 dB.

Table 1.

Quantitative results on the LOLv1 dataset in terms of the PSNR and SSIM. For each metric, bold indicates the second-best result.

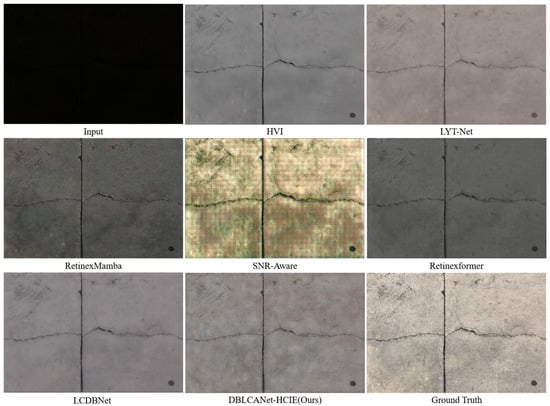

Figure 9 illustrates the results of qualitative comparisons between our proposed method and the existing approaches. The CLAHE method exhibited limited enhancement effects, while LIME improved the luminance but introduced severe distortion artifacts. Although KinD++ and LYT-Net achieved luminance enhancement, they simultaneously developed color over-saturation issues. Compared with the other enhancement methods illustrated in the images, our approach demonstrated better performance in terms of chromatic consistency, detail clarity, and luminance optimization.

Figure 9.

Visual comparisons of our method and others on the LOLv1 dataset.

As presented in Table 2, the PSNR and SSIM values of the seven models trained on the LOLv1 dataset decreased significantly when tested on our self-constructed LLHCID. Although our method still achieved the highest PSNR and SSIM scores, the overall drop indicates that the actual enhancement effects of LOLv1-trained models on hydraulic concrete images are far from satisfactory, as further illustrated in Figure 10: the luminance enhancement is insufficient, and the detailed features are severely distorted.

Table 2.

Quantitative results for models trained on the LOLv1 dataset and tested on the LLHCID in terms of PSNR and SSIM. For each metric, bold indicates the second-best result.

Figure 10.

Visual comparisons of direct testing on the LLHCID with models trained on the LOLv1 dataset.

To ensure a fair and unbiased comparison, we retrained and evaluated all seven models on our self-constructed LLHCID under consistent experimental conditions. Specifically, the training data, preprocessing procedures, and key hyperparameter settings, such as learning rate, batch size, optimizer, and number of epochs, were kept consistent across all models. For the baseline models, we adopted their publicly available official implementations and retained their default hyperparameter settings, which were established by the original authors.

The results of the quantitative comparison of the LLHCID test set are shown in Table 3, where our proposed method exhibits excellent performance in terms of the PSNR metric, achieving 28.61 dB. Our method outperforms the second-best approach, Retinexformer [], by 1.48 dB in PSNR. Compared to the other competing methods, including LYT-Net [], RetinexMamba [], SNR-Aware [], HVI [], and LCDBNet [], our approach achieves improvements of 4.96 dB, 1.87 dB, 3.81 dB, 1.98 dB, and 2.05 dB, respectively. In terms of SSIM, which reflects structural similarity and perceptual fidelity, our method achieves the highest score of 0.9574, outperforming LYT-Net by 0.1022 and showing improvements of 0.0361, 0.0473, 0.0498, 0.0298, and 0.0215 over Retinexformer, RetinexMamba, SNR-Aware, HVI, and LCDBNet, respectively. These results clearly demonstrate the superior ability of our DBLCANet-HCIE to enhance low-light hydraulic concrete images while effectively preserving structural and texture details.

Table 3.

Quantitative results on the LLHCID in terms of the PSNR and SSIM. For each metric, bold indicates the second-best result.

To comprehensively evaluate the computational efficiency and complexity of the proposed model, we compared seven low-light image enhancement methods in terms of floating-point operations (FLOPs), number of parameters, and average inference time per image. To ensure fairness and comparability, all models were evaluated in the same hardware environment, with input tensors uniformly set to a size of 3 × 400 × 600, consistent with the training dataset. For inference time evaluation, each model was run multiple times, and the average value was recorded to reduce the impact of random fluctuations. As shown in Table 3, DBLCANet-HCIE contains 2.13 million parameters and requires 63.90 GFLOPs, achieving the best performance among all compared approaches while maintaining a moderate level of model complexity.
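The three complexity measures can be illustrated with a small sketch. The helpers below are hypothetical and are not the profiling tools used in the paper: they count parameters and FLOPs for a single convolution layer at the 400 × 600 evaluation resolution and average the wall-clock time of an arbitrary callable after a few warm-up runs. The FLOP count assumes 2 FLOPs per multiply-accumulate; some profilers report multiply-accumulates instead, and the paper does not state which convention it uses.

```python
import time

def conv2d_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Number of learnable parameters in a k x k convolution layer."""
    return k * k * c_in * c_out + (c_out if bias else 0)

def conv2d_flops(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> int:
    """FLOPs of a k x k convolution, counting 2 FLOPs per multiply-accumulate."""
    return 2 * k * k * c_in * c_out * h_out * w_out

def average_runtime(fn, n_warmup: int = 3, n_runs: int = 10) -> float:
    """Average wall-clock seconds per call of fn, after warm-up calls."""
    for _ in range(n_warmup):
        fn()
    start = time.perf_counter()
    for _ in range(n_runs):
        fn()
    return (time.perf_counter() - start) / n_runs

# Example: a 3 -> 32 channel, 3 x 3 convolution applied to a 400 x 600 input
# with "same" padding, so the output resolution is also 400 x 600.
params = conv2d_params(3, 32, 3)
gflops = conv2d_flops(3, 32, 3, 400, 600) / 1e9

# Stand-in workload (assumption); a real measurement would call the model.
seconds_per_call = average_runtime(lambda: sum(range(10_000)))
```

When timing a model on a GPU, the device should be synchronized before each clock reading, because kernel launches are asynchronous and would otherwise make the measured time too short.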

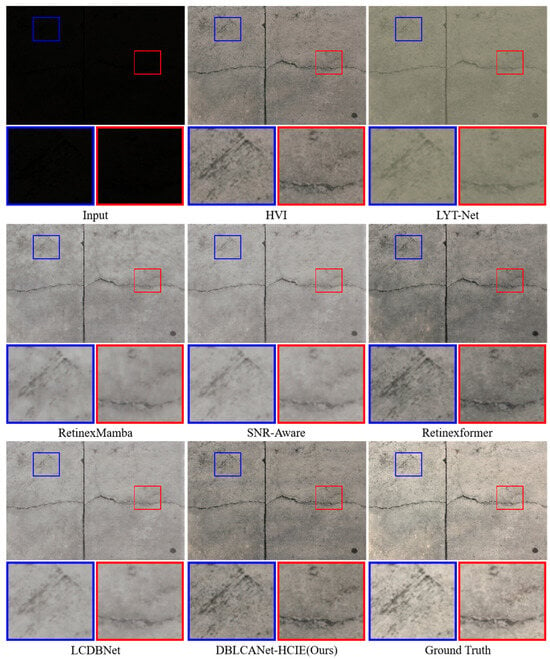

Figure 11 presents a visual comparison of the results after training on the LLHCID, clearly illustrating that the images enhanced using our proposed method most closely resembled the ground-truth images. The HVI, LYT-Net, and Retinexformer methods exhibited certain distortions in color representation, while the RetinexMamba, LCDBNet, and SNR-Aware methods showed less than ideal performance in terms of detail preservation. The DBLCANet-HCIE method exhibited the best performance among the seven methods in terms of its luminance enhancement and detailed feature preservation. The defect edge details were also well preserved.

Figure 11.

Visual comparisons of our method and others on the LLHCID. The red and blue boxes represent the enhanced contrast of the localized defects.

Comparisons of the enlarged defect regions in the visual results reveal that RetinexMamba, LCDBNet, and SNR-Aware introduce noticeable noise and artifacts around defect areas, which significantly compromise visual quality. Although LYT-Net, HVI, and Retinexformer suppress such artifacts more effectively, they still suffer from detail loss and substantial color distortion in defect regions. In contrast, the proposed method not only enhances overall luminance effectively but also preserves fine-grained defect textures and removes noise, producing results that are visually closer to the reference bright-light images.

4.4. Ablation Study

To verify the effectiveness of DBLCANet-HCIE, we designed a series of ablation studies examining the individual contributions of the modules, branches, and sub-loss functions. All studies were run on the LLHCID under an identical training framework and environment to ensure consistent and unbiased evaluation.

We performed ablation experiments by sequentially excluding the LAB, CRB, CDB, and FDDEB from the complete model architecture. The results of the ablation studies on sub-blocks and sub-branches are shown in Table 4. The exclusion of the LAB reduced the model’s PSNR by 5.09 dB and its SSIM by 0.114. The absence of the CRB resulted in decreases of 2.96 dB and 0.0961 in the model’s PSNR and SSIM, respectively. These results demonstrate the effectiveness of the luminance adjustment and chrominance restoration sub-branches. The ablation results of the CDB and FDDEB modules demonstrate their distinct contributions to chrominance restoration. Specifically, the CDB effectively suppresses chromatic noise in the Cb and Cr channels, while the FDDEB enhances fine-grained detail features, enabling more accurate and perceptually consistent chrominance reconstruction.

Table 4.

The results of ablation studies on sub-blocks and sub-branches.

As shown in Table 5, the ablation results support the effectiveness of the proposed joint loss function. Training with only a single loss term yielded a PSNR of 26.21 dB and an SSIM of 0.8827. Adding each of the other two loss terms individually improved these metrics to 28.14 dB/0.9253 and 27.86 dB/0.9168, respectively. Combining all three losses achieved the best performance, with a PSNR of 28.61 dB and an SSIM of 0.9574. These findings support the integration of holistic and branch-specific losses and validate the need for a joint loss function for hydraulic concrete image enhancement.

Table 5.

Quantitative results on the LLHCID in terms of the PSNR and SSIM.

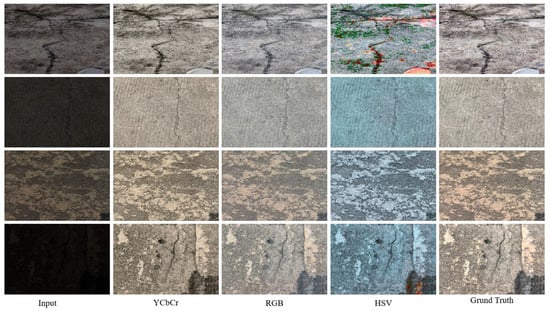

4.5. Evaluation in Different Color Spaces

To evaluate the influence of color space selection on the enhancement of hydraulic concrete images, we conducted experiments using three widely adopted color spaces: RGB, HSV, and YCbCr. The same network structure and training configuration were used in all cases to ensure a fair comparison.

Table 6 shows the quantitative results on the LLHCID across different color spaces. The results indicate that the YCbCr color space significantly outperforms the RGB and HSV color spaces in both the PSNR and SSIM metrics when applied to low-light hydraulic concrete images. This improvement is largely due to the explicit separation of luminance (Y) and chrominance (Cb/Cr) in the YCbCr space, which aligns well with the characteristics of our dual-branch architecture. In contrast, the RGB and HSV color spaces entangle brightness and color information, which makes it more difficult to isolate and enhance subtle surface features such as fine cracks, erosion marks, efflorescence, and aggregate exposure, often resulting in color distortion or detail loss during enhancement.
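The luminance and chrominance separation discussed above can be made concrete with a short conversion sketch. It assumes the full-range (JFIF-style) BT.601 coefficients with inputs scaled to [0, 1]; the section does not state whether the paper uses the full-range or the studio-range variant, so the matrix and the function names are illustrative only.

```python
import numpy as np

# Full-range BT.601 RGB -> YCbCr matrix (assumption: JFIF-style variant).
_RGB2YCBCR = np.array([
    [0.299, 0.587, 0.114],          # Y: luminance
    [-0.168736, -0.331264, 0.5],    # Cb: blue-difference chroma
    [0.5, -0.418688, -0.081312],    # Cr: red-difference chroma
])
_OFFSET = np.array([0.0, 0.5, 0.5])  # centers Cb and Cr at 0.5

def rgb_to_ycbcr(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) RGB image in [0, 1] to YCbCr."""
    return rgb @ _RGB2YCBCR.T + _OFFSET

def ycbcr_to_rgb(ycbcr: np.ndarray) -> np.ndarray:
    """Invert rgb_to_ycbcr using the matrix inverse."""
    return (ycbcr - _OFFSET) @ np.linalg.inv(_RGB2YCBCR).T

rng = np.random.default_rng(0)
rgb = rng.uniform(0.0, 1.0, size=(8, 8, 3))
ycbcr = rgb_to_ycbcr(rgb)

# In a dual-branch design, Y would feed the luminance branch and
# the stacked Cb/Cr channels would feed the chrominance branch.
y_channel = ycbcr[..., :1]
cbcr_channels = ycbcr[..., 1:]

restored = ycbcr_to_rgb(ycbcr)
gray_pixel = rgb_to_ycbcr(np.full((1, 1, 3), 0.5))
```

Because a neutral gray pixel maps to Cb = Cr = 0.5, any deviation from 0.5 in the chroma channels reflects color information alone, which is the property that allows brightness correction and color restoration to be handled separately.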

Table 6.

Quantitative results of different color spaces on the LLHCID.

Figure 12 presents the qualitative comparison results. In the RGB and HSV color spaces, the proposed model performs suboptimally on low-light hydraulic concrete images, exhibiting color distortion and loss of detail. Attempts to use the CIELAB color space (distinct from the Luminance Adjustment Branch, LAB) resulted in non-convergence, likely due to its strong nonlinearity and incompatibility with the current architecture. In contrast, images processed in the YCbCr color space show a more natural color balance, clearer defect textures, and improved structural consistency. These results highlight the suitability of YCbCr for defect-aware enhancement and support its use as the preferred color space in our proposed framework.

Figure 12.

Visual comparisons of different color spaces on the LLHCID.

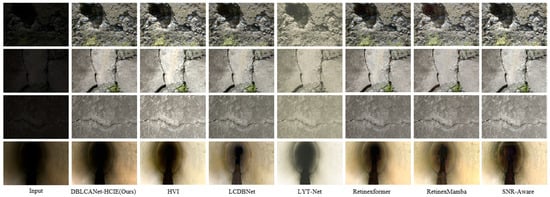

4.6. Real-World Low-Light Hydraulic Concrete Image Enhancement

To evaluate the effectiveness of the proposed method for enhancing real low-light hydraulic concrete images, we collected images from various low-light hydraulic concrete scenes, including dam bodies, water cushion slopes, and drainage pipelines. We compared the enhancement results of our method with several other image enhancement techniques. The visual results are presented in Figure 13. Other methods exhibit varying degrees of noise, color distortion, and detail loss in their enhanced outputs. In contrast, our method achieves superior enhancement performance, demonstrating its potential for hydraulic concrete image enhancement. Nevertheless, there remains room for further improvement in enhancing pipeline images.

Figure 13.

Comparisons between our method and others on real-world low-light concrete images.

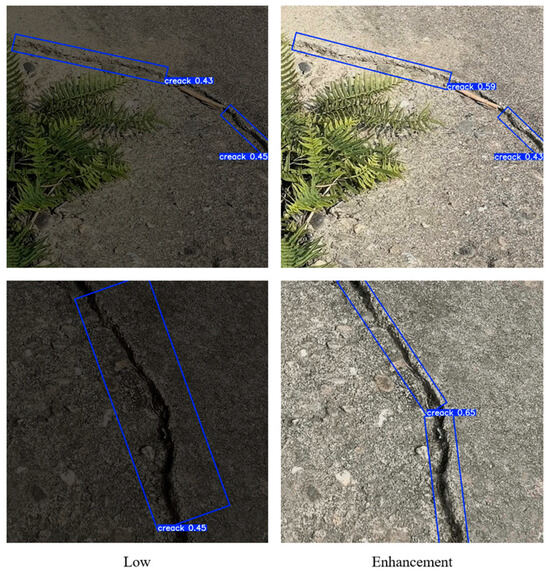

4.7. Low-Light Hydraulic Concrete Defect Detection

Building upon our previous work on rotational crack detection in hydraulic concrete, where we constructed a dataset of 5432 crack images with oriented bounding box annotations, we fine-tuned a YOLO11n-obb model to serve as a baseline detector. To evaluate the impact of our enhancement method on downstream detection tasks, we selected 452 low-light crack images from our hydraulic concrete inspection dataset and manually annotated them using the same rotation-based protocol. These images were tested using the pre-trained YOLO11n-obb model under two conditions: without enhancement and after applying various enhancement methods. As reported in Table 7, our method yielded the highest performance, improving precision by 11.38% and mAP@50 by 13.80% compared to the unenhanced input, surpassing all competing approaches. The visual comparisons in Figure 14 further illustrate this advantage: unprocessed low-light images often suffer from missed detections, whereas our enhanced images show notably improved crack localization with minimal omission. These results highlight the practical effectiveness of our enhancement model in improving not only image quality but also the accuracy and reliability of downstream defect detection in real-world hydraulic concrete inspection scenarios.

Table 7.

Quantitative defect detection results on low-light hydraulic concrete crack images in terms of precision and mAP@50.

Figure 14.

Comparison of low-light hydraulic concrete defect detection.

5. Conclusions

In this study, we propose a Dual-Branch Luminance–Chrominance Attention Network (DBLCANet-HCIE) for low-light hydraulic concrete image enhancement. The network decomposes the task into two subtasks: luminance correction and chrominance restoration. The LAB employs global–local hybrid attention to enhance luminance and preserve structural details, while the CRB integrates CDB and FDDEB to suppress noise and enhance subtle chrominance and defect textures. Each branch integrates localized supervision modules to accelerate model convergence and generate intermediate enhancement results, complemented by a feature fusion block optimized for YCbCr space integration. Training was guided by a composite loss function combining structural preservation constraints and perceptual optimization. Extensive quantitative and qualitative evaluations on both the LOLv1 dataset and our custom LLHCID demonstrated the potential of the proposed framework for effective low-light hydraulic concrete image enhancement; our model achieved a PSNR of 28.61 dB and an SSIM of 0.9574 on hydraulic concrete images. Furthermore, real-world defect detection results show that the enhanced images significantly improve detection accuracy, highlighting the model’s potential for practical structural inspection under low-light conditions.

Future research will aim to extend the proposed method to a wider range of infrastructure types and to explore more advanced deep learning techniques to further improve enhancement performance. Additionally, efforts will be made to develop lightweight model architectures to facilitate real-time image enhancement on inspection devices with limited computational resources.

Author Contributions

Conceptualization, Z.P. and L.L.; methodology, Z.P.; software, Z.P., C.C., G.Z., J.J. and Z.T.; validation, Z.P., C.C., G.Z., R.T. and Z.T.; formal analysis, Z.P., L.L., R.T. and S.Z.; investigation, Z.P., R.T., G.Z., Z.T. and S.Z.; resources, Z.P., L.L., C.C., R.T., M.W., J.J., S.Z., Z.T. and Z.L.; data curation, Z.P., C.C., G.Z., R.T., M.W., S.Z. and Z.T.; writing—original draft preparation, Z.P., L.L., C.C., M.W. and Z.L.; writing—review and editing, Z.P., C.C., G.Z., J.J. and Z.L.; visualization, Z.P. and R.T.; supervision, Z.P. and L.L.; project administration, Z.P. and Z.L.; funding acquisition, L.L. and Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number U21A20157.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| LAB | Luminance Adjustment Branch |
| CRB | Chroma Restoration Branch |
| LLHCID | Low-light hydraulic concrete image dataset |
| LAHAB | Luminance-Aware Hybrid Attention Block |
| CDB | Channel Denoiser Block |
| FDDEB | Frequency-Domain Detail Enhancement Block |
| CNN | Convolutional Neural Network |
| CLAHE | Contrast Limited Adaptive Histogram Equalization |
| SSR | Single-scale Retinex |
| MSR | Multi-scale Retinex |
| ViTs | Vision Transformers |
| GAB | Global Attention Block |
| CAB | Channel Attention Block |
| SAB | Spatial Attention Block |
| RCAB | Residual Channel Attention Block |
| MHSA | Multi-head self-attention |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index Measure |

References

- Zhu, Y.; Tang, H. Automatic damage detection and diagnosis for hydraulic structures using drones and artificial intelligence techniques. Remote Sens. 2023, 15, 615.

- Chen, Y.; Wang, H.; Li, Y. Theoretical and technical research prospects for intelligent inspection and safety evaluation of flood discharge and energy dissipation buildings in high dam hubs. Adv. Eng. Sci. 2023, 55, 1–13.

- Guo, J.; Ma, J.; García-Fernández, Á.F.; Zhang, Y.; Liang, H. A survey on image enhancement for Low-light images. Heliyon 2023, 9, e14558.

- Wang, Q.; Ward, R.K. Fast image/video contrast enhancement based on weighted thresholded histogram equalization. IEEE Trans. Consum. Electron. 2007, 53, 757–764.

- Xu, H.; Zhang, H.; Yi, X.; Ma, J. CRetinex: A progressive color-shift aware Retinex model for low-light image enhancement. Int. J. Comput. Vis. 2024, 132, 3610–3632.

- Wang, Z.; Tao, H.; Zhou, H.; Deng, Y.; Zhou, P. A content-style control network with style contrastive learning for underwater image enhancement. Multimed. Syst. 2025, 31, 1–13.

- Wen, Y.; Xu, P.; Li, Z.; Ato, W.X. An illumination-guided dual attention vision transformer for low-light image enhancement. Pattern Recognit. 2025, 158, 111033.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

- Zuiderveld, K.J. Contrast limited adaptive histogram equalization. Graph. Gems 1994, 4, 474–485.

- Lee, C.; Lee, C.; Kim, C.-S. Contrast enhancement based on layered difference representation of 2D histograms. IEEE Trans. Image Process. 2013, 22, 5372–5384.

- Li, C.-Y.; Guo, J.-C.; Cong, R.-M.; Pang, Y.-W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- Land, E.H. The retinex theory of color vision. Sci. Am. 1977, 237, 108–129.

- Jobson, D.J.; Rahman, Z.-U.; Woodell, G.A. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

- Jobson, D.J.; Rahman, Z.-U.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

- Si, L.; Wang, Z.; Xu, R.; Tan, C.; Liu, X.; Xu, J. Image enhancement for surveillance video of coal mining face based on single-scale retinex algorithm combined with bilateral filtering. Symmetry 2017, 9, 93.

- Xiao, J.; Peng, H.; Zhang, Y.; Tu, C.; Li, Q. Fast image enhancement based on color space fusion. Color Res. Appl. 2016, 41, 22–31.

- Gu, Z.; Li, F.; Fang, F.; Zhang, G. A novel retinex-based fractional-order variational model for images with severely low light. IEEE Trans. Image Process. 2019, 29, 3239–3253.

- Hao, S.; Han, X.; Guo, Y.; Xu, X.; Wang, M. Low-light image enhancement with semi-decoupled decomposition. IEEE Trans. Multimed. 2020, 22, 3025–3038.

- Zhang, Q.; Nie, Y.; Zhu, L.; Xiao, C.; Zheng, W.-S. Enhancing underexposed photos using perceptually bidirectional similarity. IEEE Trans. Multimed. 2020, 23, 189–202.

- Xi, W.; Zuo, X.; Sangaiah, A.K. Enhancement of unmanned aerial vehicle image with shadow removal based on optimized retinex algorithm. Wirel. Commun. Mob. Comput. 2022, 2022, 3204407.

- Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit. 2017, 61, 650–662.

- Li, C.; Guo, J.; Porikli, F.; Pang, Y. LightenNet: A convolutional neural network for weakly illuminated image enhancement. Pattern Recognit. Lett. 2018, 104, 15–22.

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

- Zhang, Z.; Zheng, H.; Hong, R.; Xu, M.; Yan, S.; Wang, M. Deep color consistent network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1899–1908.

- Park, J.Y.; Park, C.W.; Eom, I.K. ULBPNet: Low-light image enhancement using U-shaped lightening back-projection. Knowl.-Based Syst. 2023, 281, 111099.

- Wei, X.; Lin, X.; Li, Y. DA-DRN: A degradation-aware deep Retinex network for low-light image enhancement. Digit. Signal Process. 2024, 144, 104256.

- Yan, Q.; Feng, Y.; Zhang, C.; Wang, P.; Wu, P.; Dong, W.; Sun, J.; Zhang, Y. You only need one color space: An efficient network for low-light image enhancement. arXiv 2024, arXiv:2402.05809.

- Wang, H.; Peng, L.; Sun, Y.; Wan, Z.; Wang, Y.; Cao, Y. Brightness perceiving for recursive low-light image enhancement. IEEE Trans. Artif. Intell. 2023, 5, 3034–3045.

- Chen, D.; Kang, F.; Li, J.; Zhu, S.; Liang, X. Enhancement of underwater dam crack images using multi-feature fusion. Autom. Constr. 2024, 167, 105727.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.-H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

- Zhang, K.; Li, Y.; Liang, J.; Cao, J.; Zhang, Y.; Tang, H.; Fan, D.-P.; Timofte, R.; Gool, L.V. Practical blind image denoising via Swin-Conv-UNet and data synthesis. Mach. Intell. Res. 2023, 20, 822–836.

- Wang, T.; Zhang, K.; Shen, T.; Luo, W.; Stenger, B.; Lu, T. Ultra-high-definition low-light image enhancement: A benchmark and transformer-based method. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 2654–2662.

- Cai, Y.; Bian, H.; Lin, J.; Wang, H.; Timofte, R.; Zhang, Y. Retinexformer: One-stage retinex-based transformer for low-light image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 12504–12513.

- Dang, J.; Zhong, Y.; Qin, X. PPformer: Using pixel-wise and patch-wise cross-attention for low-light image enhancement. Comput. Vis. Image Underst. 2024, 241, 103930.

- Wang, H.; Yan, X.; Hou, X.; Li, J.; Dun, Y.; Zhang, K. Division gets better: Learning brightness-aware and detail-sensitive representations for low-light image enhancement. Knowl.-Based Syst. 2024, 299, 111958.

- Brateanu, A.; Balmez, R.; Avram, A.; Orhei, C.; Ancuti, C. Lyt-net: Lightweight yuv transformer-based network for low-light image enhancement. IEEE Signal Process. Lett. 2025, 32, 2065–2069.

- Li, P.; Tao, H.; Zhou, H.; Zhou, P.; Deng, Y. Enhanced Multiview attention network with random interpolation resize for few-shot surface defect detection. Multimed. Syst. 2025, 31, 36.

- BT.601: Studio Encoding Parameters of Digital Television for Standard 4:3 and Wide-Screen 16:9 Aspect Ratios; CCIR Rep 2011; International Radio Consultative Committee International Telecommunication Union: Geneva, Switzerland, 2011.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Chen, X.; Pan, J.; Lu, J.; Fan, Z.; Li, H. Hybrid cnn-transformer feature fusion for single image deraining. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 378–386.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

- Zhu, X.; Cheng, D.; Zhang, Z.; Lin, S.; Dai, J. An empirical study of spatial attention mechanisms in deep networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6688–6697.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.-H.; Shao, L. Multi-stage progressive image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14821–14831.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Loss functions for image restoration with neural networks. IEEE Trans. Comput. Imaging 2016, 3, 47–57.

- Zhang, D.; Huang, F.; Liu, S.; Wang, X.; Jin, Z. Swinfir: Revisiting the swinir with fast fourier convolution and improved training for image super-resolution. arXiv 2022, arXiv:2208.11247.

- Paszke, A. Pytorch: An imperative style, high-performance deep learning library. arXiv 2019, arXiv:1912.01703.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2016, 26, 982–993.

- Zhang, Y.; Guo, X.; Ma, J.; Liu, W.; Zhang, J. Beyond brightening low-light images. Int. J. Comput. Vis. 2021, 129, 1013–1037.

- Wu, W.; Weng, J.; Zhang, P.; Wang, X.; Yang, W.; Jiang, J. Uretinex-net: Retinex-based deep unfolding network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5901–5910.

- Yan, Q.; Feng, Y.; Zhang, C.; Pang, G.; Shi, K.; Wu, P.; Dong, W.; Sun, J.; Zhang, Y. Hvi: A new color space for low-light image enhancement. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 5678–5687.

- Bai, J.; Yin, Y.; He, Q.; Li, Y.; Zhang, X. Retinexmamba: Retinex-based mamba for low-light image enhancement. arXiv 2024, arXiv:2405.03349.

- Xu, X.; Wang, R.; Fu, C.-W.; Jia, J. Snr-aware low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17714–17724.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).