1. Introduction

Education remains a cornerstone of human development, underpinning social well-being, economic growth, gender equality, and global citizenship. Achieving these outcomes depends on inclusive, equitable, and high-quality learning opportunities across the lifespan. However, education systems worldwide face mounting challenges. Persistent access gaps continue to marginalize millions of learners, while those in school often struggle to acquire the foundational knowledge and digital competencies necessary for a rapidly evolving, interconnected world [1,2].

In this context, the integration of information and communication technologies (ICT), particularly artificial intelligence (AI), has opened new avenues for educational innovation. AI technologies offer the potential to personalize learning pathways, support formative assessment, and optimize instructional design [3,4,5,6]. Intelligent tutoring systems, automated feedback tools, and multimodal classroom analytics exemplify how AI is reshaping teaching and learning practices in digitally transformed environments [7,8].

Among recent developments, large-scale foundational models (FMs), such as generative language and multimodal models, represent a major leap forward in educational AI. These models are trained on diverse, extensive datasets and demonstrate general-purpose capabilities adaptable to varied educational scenarios [9]. Applications range from real-time generation of customized feedback to adaptive content delivery based on learner profiles and behavioral data.

Yet the rapid deployment of such models also raises pressing ethical, legal, and pedagogical concerns. Data protection laws such as the GDPR and FERPA impose strict limits on the collection and use of student information, and many institutions lack sufficient infrastructure to support compliant AI applications [10,11]. Additionally, the growing presence of AI-generated content (AIGC) exacerbates challenges related to academic integrity, misinformation, and deepfake misuse, which may undermine institutional trust and learner safety [12,13,14,15]. These risks call for proactive governance frameworks that balance innovation with accountability.

To address these opportunities and risks, coordinated efforts from researchers, educators, technology providers, and policymakers are urgently needed. Multistakeholder collaboration is essential for developing responsible AI infrastructures that uphold educational values while mitigating systemic harms [13,16,17,18].

To clarify our terminology, we define AI-based education in this study as the integration of emerging generalist AI models—such as large language models and multimodal foundation models—into teaching and learning processes. These models, characterized by their ability to handle diverse educational tasks (e.g., feedback generation, content creation, behavior monitoring), go beyond traditional narrow AI systems and represent a paradigm shift toward more flexible and general-purpose capabilities. Although still in early stages of application, we consider this direction foundational for future-ready educational architectures.

Regarding ethical data governance, we focus specifically on privacy-preserving mechanisms and legal compliance as the primary pillars of ethical design. This includes end-to-end encryption, institutional control over data processing, and adherence to GDPR and FERPA regulations. While broader ethical concerns—such as fairness, transparency, and human oversight—are integral to responsible AI, this study prioritizes data protection architecture, addressing the urgent need for secure infrastructures in AI-supported educational environments.

In response, this paper introduces the Generalist Education Artificial Intelligence (GEAI) framework—a conceptual infrastructure designed to support ethical, personalized, and privacy-preserving AI deployment in education. GEAI features a trusted domain architecture, incorporating secure multimedia registration devices (MM Devices) to collect real-time multimodal data for intelligent educational services. This design aligns with principles of responsible innovation and human-centered learning.

The remainder of this paper is structured as follows:

Section 2 reviews related work on foundational models and their application in educational contexts.

Section 3 introduces the GEAI framework, detailing its domain architecture and core components.

Section 4 presents three use cases that illustrate the framework’s potential for adaptive tutoring, secure assessments, and institutional data protection.

Section 5 discusses the pedagogical, social, and ethical implications of GEAI, including its alignment with constructivist principles.

Finally, Section 6 concludes the paper and outlines limitations and directions for future research and validation.

The research presented in this paper addresses the following questions:

How does the GEAI framework improve data governance and privacy protection while enabling personalized, AI-supported learning?

In what ways do MM Devices facilitate real-time learner data collection for adaptive instruction and intelligent tutoring?

What ethical and legal risks accompany the deployment of GEAI, and how can these be mitigated through transparent design and institutional policy?

2. Related Work

2.1. Foundational Models in AI

Foundational models (FMs) mark a paradigm shift in artificial intelligence, evolving from narrow, task-specific algorithms to general-purpose models with wide-ranging applications across domains [9]. For instance, BERT [19] achieved state-of-the-art performance in natural language processing (NLP), with F1 scores reaching 91.3 on the GLUE benchmark. GPT-3 [20] demonstrated coherent language generation with perplexity scores below 20, while DALL·E [21] achieved a 0.85 score in human-rated visual fidelity evaluations. These developments highlight the transformative potential of FMs in language understanding, content generation, and multimodal applications.

Recent advances in deep learning have bridged formerly distinct areas such as computer vision, robotics, and NLP into a unified multimodal research paradigm [9]. By integrating textual, auditory, and visual data streams, FMs more closely approximate human cognition, linking perceptual features (e.g., object shapes) with semantic abstractions (e.g., intentions and attributes) to enable complex, abstract learning [22]. These models are now trained on trillions of data points, with projects such as GPT-4 reportedly requiring development investments exceeding USD 100 million [23].

As FMs extend their influence into sectors such as education, healthcare, and climate science, concerns about transparency and accountability have intensified. A lack of openness, reminiscent of the early development of internet technologies, may amplify societal risks. Initiatives such as the Partnership on AI advocate documenting model architectures and training datasets and making them interpretable, an approach that is especially pertinent for education-focused AI systems.

2.2. Foundational Models in Educational Contexts

The application of foundational models in education has begun to reshape instructional delivery and learner engagement. FM-driven systems have demonstrated significant improvements in automated assessment and feedback; for example, Malik et al. [6] reported a 15% increase in tailored feedback quality. Studies by Demszky et al. [4] and Jensen et al. [5] found that classroom video analysis powered by FMs reduced redundant teacher–student exchanges by 20%, improving learning efficiency.

In practice, large language models (LLMs) have enhanced active learning. For example, Mollick and Mollick [24] examined chatbot use across disciplines, while Neumann et al. (2024) [25] demonstrated a 10% boost in engagement in programming courses via LLM integration. Similarly, Ruff et al. [26] showed that ChatGPT-assisted chemistry lab instruction reduced student error rates by 12%. Adaptive learning platforms such as MATHia [27], which dynamically calibrate math content using FMs, reported an 8% rise in student test scores. In virtual learning environments, H. Zhang et al. [28] found that FM-based instructional support increased student participation by 15%.

Nonetheless, barriers remain, chiefly the scarcity of high-quality, large-scale educational datasets. Many existing solutions are task-specific and require extensive resources (e.g., over 10,000 h of audio data), limiting scalability [9]. This context underscores the promise of frameworks like GEAI, which combine multimodal data capture (e.g., 1080p video, 16 kHz audio) with local processing and privacy-aware design. Such approaches align with pedagogical theories such as Vygotsky’s Zone of Proximal Development and reflect the goals outlined in this MDPI Special Issue: fostering inclusive, ICT-enhanced learning environments in the post-pandemic era.

2.3. Educational Frameworks and Governance Models

Educational technology research has proposed several influential frameworks to guide the integration of digital tools. The Technological Pedagogical Content Knowledge (TPACK) model emphasizes the dynamic interplay between pedagogy, technology, and content knowledge [29]. However, it does not directly address real-time AI interaction, data governance, or system-level interoperability. Similarly, Intelligent Tutoring Systems (ITS) have demonstrated effectiveness in domain-specific learning (e.g., mathematics) but are often closed systems that lack adaptability to multimodal, real-time data environments.

In parallel, ethical governance in AI has attracted global attention. Guidelines from the EU High-Level Expert Group on AI and the OECD promote principles such as fairness, transparency, and accountability [30,31]. Yet these remain largely high-level and are not readily operationalized in educational AI systems, particularly those involving sensitive, high-resolution data such as video and audio. Recent studies call for infrastructure-level approaches that embed ethical safeguards into data collection, model training, and institutional control.

2.4. Research Gap and Contribution of GEAI

While many existing studies have focused on task-specific AI systems or abstract ethical principles, few offer a unified design that addresses the simultaneous challenges of privacy, adaptivity, multimodality, and generalist model integration. Current adaptive learning platforms typically rely on siloed data and narrow interaction types (e.g., clickstreams or quiz responses), limiting both personalization and institutional oversight.

The GEAI framework fills this gap by proposing a conceptual infrastructure that integrates trusted hardware (MM Devices), privacy-preserving data workflows (e.g., federated learning, blockchain logging), and alignment with general-purpose foundation models. It is designed to serve as a blueprint for future educational AI systems that are not only effective and adaptive but also transparent, auditable, and policy-compliant.

3. Materials and Methods

In response to the challenges of transparency, data fragmentation, and regulatory compliance in current educational AI systems, we propose the Generalist Education AI (GEAI) framework as a conceptual blueprint. Grounded in a Trusted Domain architecture, this framework is designed to enable legally compliant, context-sensitive, and scalable AI deployment in real-world educational environments. It is important to note that the GEAI framework, as proposed in this study, is a conceptual architecture rather than a validated system. While it draws upon the design principles of emerging generalist AI models—such as their capacity to operate across modalities and tasks—it does not claim to deliver full generalist functionality at this stage. Instead, GEAI serves as a blueprint for guiding future prototyping and implementation in educational contexts, particularly in settings where multimodal AI capabilities and ethical data infrastructures are under development.

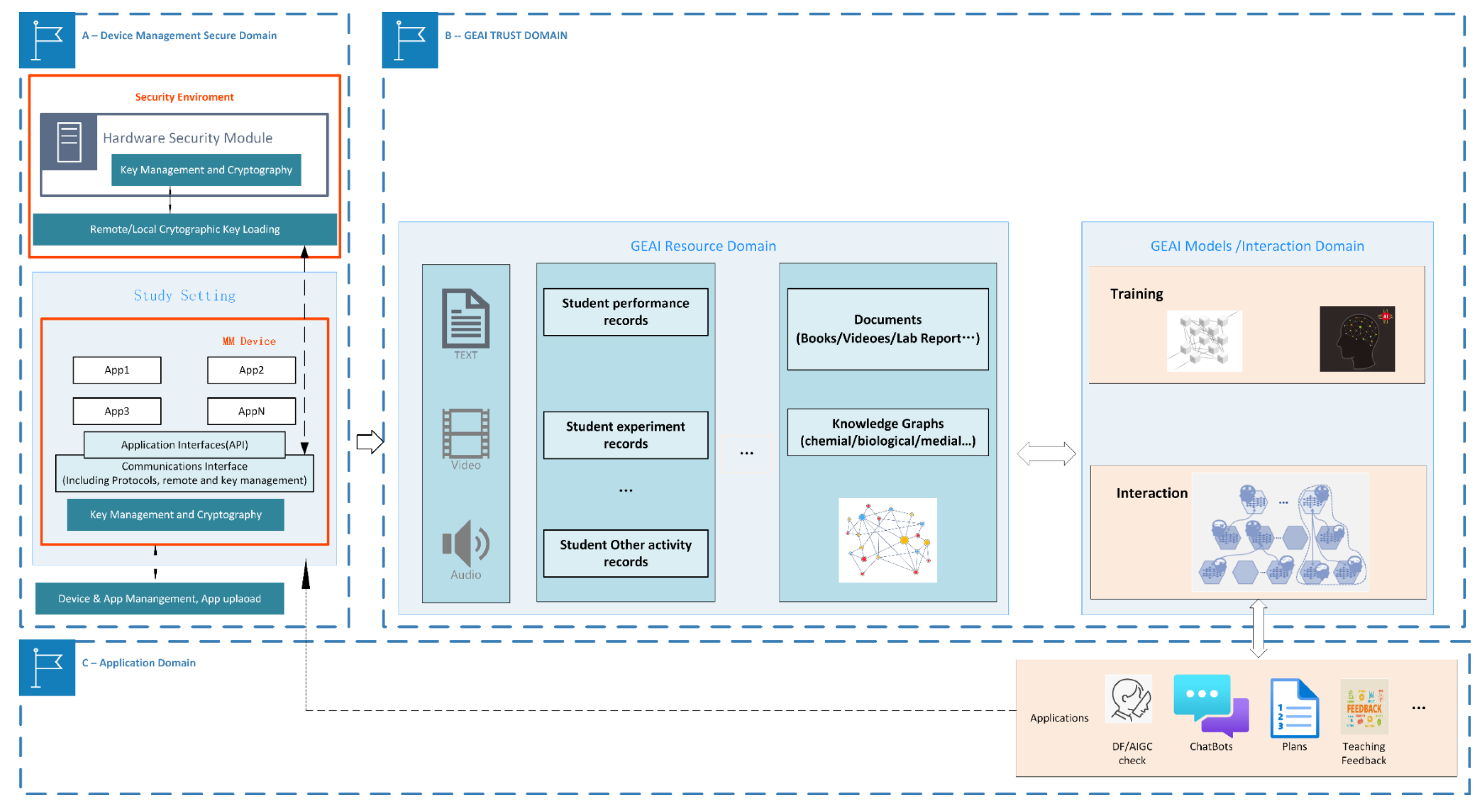

As illustrated in Figure 1, GEAI consists of three tightly integrated domains that form a closed-loop pipeline:

(A) MM Device Domain: Handles secure and privacy-preserving multimodal data collection from teaching environments;

(B) GEAI Resource Domain: Aggregates and preprocesses data to create structured learning resources and prepare training inputs for foundation models;

(C) GEAI Model and Interaction Domain: Facilitates model training, personalization, and the delivery of intelligent educational services.

Figure 1. Overview of the GEAI Framework. The figure depicts the architecture of the proposed GEAI framework, comprising three interconnected domains that together form a secure, adaptive, and auditable AI infrastructure for education. This closed-loop pipeline supports multimodal data acquisition, compliant processing, and personalized service delivery in classroom and online settings.


The MM Device Domain (Domain A) is responsible for data acquisition within authentic educational scenarios. Multimedia registration devices (e.g., AI cameras, wearable sensors, tablets) are deployed in physical or virtual classrooms to capture multimodal inputs, including video, audio, and user interactions. These devices are embedded with tamper-resistant modules and local encryption capabilities. Device operation is managed through a secure key injection protocol, ensuring compliance with data protection regulations. All data collected in this domain is encrypted and securely transmitted to Domain B.

The GEAI Resource Domain (Domain B) functions as the central data management and preprocessing layer. It consolidates heterogeneous educational data—such as performance logs, behavioral traces, and instructional content—and organizes them into a multimodal knowledge graph. This domain also enforces data legitimacy and diversity, preparing high-quality and ethically sourced training datasets for subsequent AI model development.

The Model and Interaction Domain (Domain C) supports the pretraining, fine-tuning, and deployment of AI models tailored to educational tasks. These models provide automated tutoring, personalized feedback, and analytics. Processed outputs are delivered back to applications such as adaptive learning platforms or virtual assistants, while their usage data can optionally be looped back into the system via the MM Devices. This cyclical flow forms a continuous learning loop, enabling dynamic adaptation and ongoing performance optimization.

3.1. GEAI Model

3.1.1. MM Devices for Secure Educational Data Collection

The integration of AI technologies in education faces major challenges in data availability, privacy protection, and regulatory compliance. High-quality real-world data for instructional analysis remains scarce due to the high cost and limited scalability of expert classroom observations [5], while audiovisual recordings are often restricted by legal and ethical concerns. To address these constraints, the GEAI framework introduces Multimedia Registration Devices (MM Devices), specialized hardware terminals designed to operate within a Trusted Domain and securely collect multimodal classroom data, providing a privacy-preserving foundation for educational analytics. These devices are currently at the conceptual stage, pending prototype development and validation.

MM Devices are intended for deployment in both physical and virtual classrooms and can capture diverse data modalities such as video, audio, and handwriting for potential applications in facial expression analysis, speech recognition, and response evaluation. Initial configurations propose lower-resolution sensors (e.g., 720p cameras, 8 kHz microphones) as cost-effective baselines, scalable to higher specifications (e.g., 1080p, 16 kHz) as needed. Drawing on best practices from the payment industry, each device will integrate a Hardware Security Module (HSM), adapted from Point-of-Sale (POS) systems, to manage cryptographic keys and ensure secure content encryption in compliance with PCI P2PE guidelines [32,33].

To support local AI processing and reduce dependency on cloud infrastructure, MM Devices are envisioned to incorporate lightweight edge AI models, such as MobileNet for video processing and Whisper-tiny for speech transcription. The projected unit cost is approximately USD 500, based on market trends in embedded AI devices (e.g., NVIDIA Jetson Nano) and HSM-integrated platforms [34]. Final costs and performance benchmarks will be established during prototyping.

To mitigate physical and cybersecurity threats, MM Devices will adopt a layered security architecture, including secure execution environments, app-level access controls, and HSM-based identity verification. The hardware design emphasizes portability, with battery support (5–10 h), modular sensor slots, and operational LED indicators. Software components—such as tutoring agents, behavior classifiers, or encoders—will operate in sandboxed environments, subject to formal auditing, approval, and version control. Data transmission will be limited to registered institutional GEAI platforms over secure channels, avoiding exposure to unsecured networks (e.g., public Wi-Fi), and employing encryption protocols aligned with PCI P2PE.

To enhance system accountability, the design also envisions blockchain-based logging, implemented through a lightweight private blockchain (e.g., using Proof of Authority consensus) that records local, tamper-evident logs of device operations and compliance status. These logs will be periodically synchronized to the GEAI platform’s distributed ledger, enabling near-real-time auditing and traceability [35,36]. The feasibility of this approach depends on optimizing energy consumption and computing overhead during prototype validation. A summary of these layered security strategies is presented in Table 1. Ultimately, MM Devices aim to provide a scalable infrastructure for secure, privacy-compliant, and personalized learning, with benefits such as improved student engagement and adaptive support to be evaluated in future trials [37].
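The tamper-evidence that blockchain-based logging is meant to provide rests on hash chaining: each log record commits to the SHA-256 hash of its predecessor, so altering any earlier record invalidates every later link. The Python sketch below illustrates only that property on a single device, under stated assumptions. It omits Proof of Authority consensus, record signing, and ledger synchronization, and the `AuditLog` class and its field names are hypothetical rather than part of the GEAI design.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field


@dataclass
class AuditLog:
    """Hypothetical append-only, hash-chained log of MM Device events."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, device_id: str, event: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "index": len(self.entries),
            "timestamp": time.time(),
            "device_id": device_id,
            "event": event,
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered record breaks the chain."""
        prev_hash = "0" * 64
        for i, entry in enumerate(self.entries):
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["index"] != i or body["prev_hash"] != prev_hash:
                return False
            if self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("mm-device-01", "session_start")
log.append("mm-device-01", "key_rotated")
print(log.verify())  # True
log.entries[0]["event"] = "tampered"
print(log.verify())  # False
```

A local chain alone does not stop an attacker who rewrites the entire chain; periodically anchoring the latest hash in the platform's ledger, as the text envisions, is what would make such wholesale rewriting detectable.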

Note: The proposed components, AI features, cost estimates, and blockchain logging mechanisms remain conceptual and require empirical validation through prototyping.

3.1.2. HSM and Key Management for MM Devices

To safeguard the secure storage and transmission of multimodal educational data, MM Devices employ a dual-layer cryptographic system comprising symmetric encryption keys (e.g., AES-256) and asymmetric key pairs. These keys are managed under a rigorous lifecycle framework encompassing generation, storage, rotation, and revocation, with oversight extending to personnel and equipment. Central to this architecture are Hardware Security Modules (HSMs) certified to FIPS 140-2 Level 3, which offer tamper-resistant protection against both physical and digital intrusion [38]. Integrated directly into MM Devices, these HSMs enable secure key generation, protected key storage, and automated key erasure (zeroization) in response to anomaly-based intrusion detection [34].
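The key lifecycle described above (generation, rotation, revocation, and erasure on intrusion) can be modeled as a small state machine. This is a minimal sketch under stated assumptions: in a real HSM, key material never leaves the module and these operations are invoked through vendor interfaces such as PKCS#11, whereas the hypothetical `KeyManager` below only models the state transitions in software. Python also cannot guarantee that every copy of a secret is scrubbed from memory, so the zeroize step is illustrative.

```python
import secrets
from dataclasses import dataclass, field
from enum import Enum


class KeyState(Enum):
    ACTIVE = "active"    # used for new encryptions
    RETIRED = "retired"  # kept only to decrypt older data
    REVOKED = "revoked"  # must not be used at all


@dataclass
class ManagedKey:
    key_id: int
    material: bytearray  # 32 bytes = 256 bits, e.g. for AES-256
    state: KeyState = KeyState.ACTIVE


@dataclass
class KeyManager:
    """Hypothetical software model of an HSM-style key lifecycle (not a real HSM API)."""
    keys: dict = field(default_factory=dict)
    next_id: int = 1

    def generate(self) -> ManagedKey:
        key = ManagedKey(self.next_id, bytearray(secrets.token_bytes(32)))
        self.keys[key.key_id] = key
        self.next_id += 1
        return key

    def active_key(self) -> ManagedKey:
        # Raises StopIteration if no active key exists.
        return next(k for k in self.keys.values() if k.state is KeyState.ACTIVE)

    def rotate(self) -> ManagedKey:
        # Retire the current key and issue a fresh one for new encryptions.
        self.active_key().state = KeyState.RETIRED
        return self.generate()

    def revoke(self, key_id: int) -> None:
        self.keys[key_id].state = KeyState.REVOKED

    def zeroize(self) -> None:
        """Tamper response: overwrite key material in place and revoke every key."""
        for key in self.keys.values():
            for i in range(len(key.material)):
                key.material[i] = 0
            key.state = KeyState.REVOKED


km = KeyManager()
first = km.generate()
second = km.rotate()
print(first.state, second.state)  # KeyState.RETIRED KeyState.ACTIVE
km.zeroize()
print(all(b == 0 for b in first.material))  # True
```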

During initial provisioning or maintenance, cryptographic keys and device certificates are injected within ISO 27001-certified secure facilities operated by MM Device manufacturers, supporting compliance with data protection regulations such as GDPR and FERPA [22]. For post-deployment management, a remote key injection mechanism is proposed, using secure communication protocols (e.g., TLS 1.3) and end-to-end encryption for authenticated updates via registered GEAI platforms. This security model is adapted from the PCI Point-to-Point Encryption (P2PE) framework widely used in the payment industry [32] and tailored to the confidentiality and integrity requirements of educational contexts.
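On the device side, the TLS 1.3 requirement for remote key injection could be expressed with Python's standard `ssl` module as shown below. This is a minimal sketch: the function name and certificate paths are hypothetical, and it covers only the transport channel. The P2PE-style protection of the key payload itself (for example, wrapping injected keys under a key-encryption key held in the HSM) is not shown.

```python
import ssl


def make_key_injection_context(ca_file=None, client_cert=None, client_key=None) -> ssl.SSLContext:
    """Client-side TLS context for a hypothetical remote key-injection session.

    Pins the protocol to TLS 1.3 and requires server certificate and hostname
    verification. Paths are placeholders; ca_file=None falls back to system CAs.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    ctx.maximum_version = ssl.TLSVersion.TLSv1_3
    # create_default_context already sets these; stated explicitly for auditability.
    ctx.verify_mode = ssl.CERT_REQUIRED
    ctx.check_hostname = True
    if client_cert is not None:
        # Mutual TLS: the MM Device authenticates with its provisioned certificate.
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx


ctx = make_key_injection_context()
print(ctx.minimum_version)  # TLSVersion.TLSv1_3
```

A session would then wrap an ordinary socket, e.g. `ctx.wrap_socket(sock, server_hostname="geai.example.edu")`, where the hostname is a placeholder for a registered GEAI platform.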

By embedding such a robust HSM-based key management infrastructure, MM Devices can ensure data security across all phases of educational analytics, spanning classroom video, audio, and written responses, while preserving auditability and enabling scalable, regulation-compliant AI deployment. A summary of these features is provided in Table 2.

3.1.3. Trusted Domains of GEAI

The GEAI Trusted Domain establishes a vertically integrated architecture inspired by regulated industries such as healthcare and finance, yet uniquely adapted to educational contexts. It emphasizes data traceability, institutional sovereignty, and AI auditability, which are essential for trustworthy educational AI. This domain consists of two interconnected components, the GEAI Resource Domain and the GEAI Model and Inference Domain, both governed by cross-institutional policies (e.g., data-sharing agreements) and secured via technical protocols (e.g., TLS 1.3). Together, these components form a secure, interoperable, and ethically governed infrastructure that supports multimodal data processing while ensuring compliance with legal frameworks such as GDPR and FERPA [22].

This domain serves as the foundation of a trusted educational AI ecosystem. It aggregates certified hardware (e.g., Multimedia Registration Devices, or MM Devices), human expertise, and diverse datasets from multiple institutions under predefined regulatory and ethical conditions. These inputs are indexed and preprocessed to train foundational GEAI models for downstream applications, such as emotion-aware tutoring and adaptive lesson planning. This design aligns with UNESCO’s global educational imperatives: equity, quality, and relevance [1].

Data Legitimacy and Traceability: The domain captures multimodal classroom data (e.g., up to 1080p video, up to 16 kHz audio, interaction logs) from the proposed MM Devices. Provenance metadata is recorded via blockchain audit trails, and AI-generated content (AIGC) is labeled with timestamps and model identifiers. These mechanisms aim to improve academic transparency and address concerns surrounding deepfake risks in educational settings [22,35].
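One way to bind an AIGC label to a specific piece of content is to store a hash of the content alongside the model identifier and timestamp, so that a label cannot silently be reused for edited text. The sketch below assumes UTF-8 text content; the `AIGCLabel` record, its fields, and the model identifier are hypothetical, and in the envisioned design the label (or its hash) would additionally be written to the blockchain audit trail.

```python
import hashlib
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AIGCLabel:
    """Hypothetical provenance label attached to AI-generated learning content."""
    content_sha256: str  # binds the label to the exact content bytes
    model_id: str        # identifier of the generating model and version
    created_utc: str     # ISO 8601 timestamp
    ai_generated: bool = True


def label_content(content: str, model_id: str) -> AIGCLabel:
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    now = datetime.now(timezone.utc).isoformat(timespec="seconds")
    return AIGCLabel(digest, model_id, now)


def label_matches(content: str, label: AIGCLabel) -> bool:
    """True only if the content is byte-identical to what was labeled."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest() == label.content_sha256


feedback = "Your essay states the thesis clearly; add evidence in paragraph 2."
label = label_content(feedback, model_id="geai-tutor-v0")  # hypothetical model ID
print(asdict(label)["model_id"])              # geai-tutor-v0
print(label_matches(feedback, label))         # True
print(label_matches(feedback + "!", label))   # False
```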

Bias Mitigation and Inclusiveness: The domain includes a broad spectrum of data across K-12 and higher education, covering linguistic (e.g., English, Mandarin), geographic (urban/rural), and disciplinary (e.g., STEM, humanities) dimensions. Proposed stratified sampling aims to ensure representativeness, mitigating algorithmic bias and improving model fairness [9,46].
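Stratified sampling, as proposed above, groups records by the attributes that should be balanced and then draws from each group separately. The sketch below uses equal allocation (the same cap per stratum, with small strata kept whole); proportional allocation is the common alternative. The record fields and the helper name are illustrative assumptions.

```python
import random
from collections import defaultdict


def stratified_sample(records, strata_keys, per_stratum, seed=0):
    """Draw up to `per_stratum` records from every combination of strata_keys.

    records: list of dicts; strata_keys: e.g. ("language", "region", "discipline").
    Strata smaller than `per_stratum` are kept whole rather than oversampled.
    """
    rng = random.Random(seed)  # fixed seed for a reproducible training split
    groups = defaultdict(list)
    for rec in records:
        groups[tuple(rec[k] for k in strata_keys)].append(rec)
    sample = []
    for stratum in sorted(groups):
        members = groups[stratum]
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample


pool = (
    [{"language": "English", "region": "urban", "id": i} for i in range(90)]
    + [{"language": "English", "region": "rural", "id": i} for i in range(90, 100)]
    + [{"language": "Mandarin", "region": "urban", "id": i} for i in range(100, 130)]
    + [{"language": "Mandarin", "region": "rural", "id": i} for i in range(130, 135)]
)
subset = stratified_sample(pool, ("language", "region"), per_stratum=10)
print(len(subset))  # 35 (10 + 10 + 10 + 5)
```

Here the over-represented urban English records are capped at 10, while the small rural Mandarin group is kept in full rather than being swamped.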

Secure Use of Personal Learning Data: Student records (e.g., test scores, competency assessments) are protected via AES-256 encryption and role-based access. Federated learning protocols (e.g., FedAvg) allow model personalization without centralizing raw student data [47].
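The aggregation step at the heart of FedAvg is a weighted average of locally trained parameters, with each client (for example, one institution or classroom device) weighted by its number of local training examples: w = Σ_k (n_k / n) · w_k. The plain-Python sketch below shows only that server-side step; local training, communication, and the secure aggregation or differential privacy mechanisms usually layered on top of FedAvg (model updates can still leak information) are outside its scope.

```python
def fedavg(client_weights, client_sizes):
    """Server-side Federated Averaging aggregation.

    client_weights: one parameter vector (list of floats) per client.
    client_sizes: number of local training examples n_k per client.
    Returns the weighted mean w = sum_k (n_k / n) * w_k.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one weight vector per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    global_w = [0.0] * dim
    for w_k, n_k in zip(client_weights, client_sizes):
        for i in range(dim):
            global_w[i] += (n_k / total) * w_k[i]
    return global_w


# Two clients: the second holds three times as much data, so it gets 3/4 of the weight.
print(fedavg([[1.0, 2.0], [3.0, 4.0]], [1, 3]))  # [2.5, 3.5]
```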

Knowledge Graph Integration: Educational content is structured using domain ontologies and representation standards (e.g., MathML for mathematical notation) together with knowledge graphs to support semantic reasoning. For example, misconceptions in physics may be linked to prerequisite mathematical concepts via graph-based inference, enabling personalized learning trajectories [48,49].
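The graph-based inference described above can be as simple as walking prerequisite edges backward from the concept where a misconception appears. In the sketch below, the concept names and edges form a hypothetical toy graph rather than an actual ontology, and breadth-first order is an assumed design choice so that the nearest prerequisites are checked first.

```python
from collections import deque

# Hypothetical prerequisite edges: concept -> concepts it directly depends on.
PREREQUISITES = {
    "projectile motion": ["vector decomposition", "kinematic equations"],
    "kinematic equations": ["linear equations", "rates of change"],
    "vector decomposition": ["trigonometric ratios"],
    "trigonometric ratios": ["ratios and proportions"],
    "rates of change": ["ratios and proportions"],
}


def prerequisite_chain(concept, graph=PREREQUISITES):
    """Breadth-first walk over prerequisite edges.

    Returns every upstream concept, nearest first, so a tutor can probe the
    closest prerequisites before moving further back.
    """
    seen, order = {concept}, []
    queue = deque([concept])
    while queue:
        current = queue.popleft()
        for prereq in graph.get(current, []):
            if prereq not in seen:
                seen.add(prereq)
                order.append(prereq)
                queue.append(prereq)
    return order


print(prerequisite_chain("projectile motion"))
```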

These proposed features, summarized in Table 3, position the GEAI Resource Domain as a foundation for educational AI, integrated with the MM Devices’ secure data pipeline to address global educational challenges.

The GEAI Model and Inference Domain is envisioned to leverage multimodal data from the Resource Domain, such as text from lesson plans, classroom video (up to 1080p resolution), and audio (up to 16 kHz) from the conceptualized Multimedia Registration Devices (MM Devices), for large-scale pretraining. This process is planned to involve hundreds of billions of tokens and substantial compute resources (e.g., ~10,000 GPU hours), in line with benchmarks for foundation models [20,50]. The proposed pretraining will employ self-supervised learning tasks, including masked language modeling (MLM) for text [19], contrastive learning for vision-language alignment (e.g., CLIP-style objectives), and self-supervised speech modeling (e.g., wav2vec), to encode a general knowledge framework spanning educational domains such as STEM and the humanities [9,51]. The conceptualized pretrained model, based on a Transformer architecture with approximately 12 billion parameters, aims to provide capabilities in language generation, translation, and question answering, including competitive performance on tasks such as English–Mandarin translation [20]. However, to address specific educational needs, such as curriculum alignment and student engagement, further fine-tuning is deemed essential, pending prototype validation [22].
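As a rough plausibility check on the stated compute budget, the widely used approximation that training a dense Transformer costs about six floating-point operations per parameter per token can be applied. The inputs below are illustrative assumptions: N = 1.2 × 10^10 parameters (the 12-billion-parameter model above), D = 10^11 tokens (the low end of "hundreds of billions"), and a sustained throughput of 1.5 × 10^14 FLOP/s per GPU, a figure that depends heavily on hardware and utilization.

```latex
C \approx 6ND = 6 \times (1.2 \times 10^{10}) \times 10^{11} = 7.2 \times 10^{21}\ \text{FLOP}

T \approx \frac{C}{1.5 \times 10^{14}\ \text{FLOP/s}} = 4.8 \times 10^{7}\ \text{GPU-s} \approx 1.3 \times 10^{4}\ \text{GPU-hours}
```

Under these assumptions, ~10,000 GPU hours is the right order of magnitude only at the lower end of the token range; because the estimate scales linearly with D, 3 × 10^11 tokens would require roughly 4 × 10^4 GPU-hours.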

Fine-tuning is planned to adapt the pretrained GEAI model for diverse educational tasks using two promising approaches.

Reinforcement Learning from Human Feedback (RLHF) is envisioned to align model outputs with human preferences, potentially supporting applications such as generating formative feedback on student essays to improve writing skills or structuring dialogues for peer learning. This addresses a quality challenge in education, where tailored feedback is scarce [52].

Prompt engineering, using curriculum-aligned instructions (e.g., “Explain Newton’s laws for a 9th grader”), is proposed to enable flexible adaptation without retraining, reducing resource demands and supporting diverse teaching contexts [28]. These methods are intended to underpin applications such as (a) virtual teaching assistants that generate personalized learning content; (b) pedagogical feedback systems that use audio logs from the envisioned MM Devices to support teacher–student interactions; and (c) automated resource planning tools that streamline lesson preparation. These applications aim to enhance teaching effectiveness and reduce workload, although empirical evaluation in educational settings remains a future goal, subject to prototype development [28,52]. Further research is planned to assess these applications in real classrooms and to ensure their alignment with pedagogical principles such as Vygotsky’s Zone of Proximal Development [22].
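For the RLHF approach described above, the first trainable component is usually a reward model fitted to pairwise human preferences, for example a teacher judging which of two feedback comments on an essay is more helpful. The sketch below shows the standard Bradley-Terry pairwise loss, -log σ(r_chosen - r_rejected), on scalar rewards. The reward model itself and the subsequent policy optimization step (e.g., PPO) are omitted, and the function names are illustrative.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry pairwise loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model scores the human-preferred response higher.
    Evaluated as a numerically stable softplus(-margin) to avoid overflow.
    """
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_preference_loss(pairs):
    """Average loss over (reward_chosen, reward_rejected) pairs."""
    return sum(preference_loss(c, r) for c, r in pairs) / len(pairs)


print(round(preference_loss(0.0, 0.0), 4))  # 0.6931 (= log 2, no preference learned yet)
```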

A summary of these approaches and applications is provided in Table 4.

3.2. The Application Prospects of the GEAI Model Framework

The GEAI framework is envisioned as a platform tailored to educational environments, building on the proposed foundational AI models to offer innovative applications across four key areas. These applications are designed to integrate with the secure multimodal data pipeline of the proposed Multimedia Registration Devices (MM Devices), including video (up to 1080p resolution) and audio (up to 16 kHz), addressing global educational challenges such as accessibility, equity, and relevance as outlined by UNESCO [1].

Dynamic Task Understanding and Generalization: GEAI is planned to leverage pretrained multimodal capabilities to understand and address new tasks described in simplified natural language (e.g., at a sixth-grade reading level) without retraining, using prompt engineering techniques [20,53,54]. For instance, the model could generate Turkish-language geography teaching aids for third-grade students by interpreting prompts such as “Create a map-based lesson on Turkish rivers for a 3rd grader.” This capability aims to support diverse curricula, tackling the accessibility challenge in education where tailored resources are limited, especially in multilingual contexts [55]. Validation of this feature will depend on future prototype development.
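Curriculum-aligned prompting of this kind is typically implemented with a reusable template that fixes the grade level, language, and learning objective while varying the task. The sketch below uses Python's `string.Template`; the template wording, field names, and grade range are hypothetical, and the call to the underlying model is deliberately left out.

```python
from string import Template

# Hypothetical curriculum-aligned prompt template; field names are illustrative.
LESSON_PROMPT = Template(
    "You are a teaching assistant. Write in $language at a grade-$grade reading level.\n"
    "Curriculum objective: $objective\n"
    "Task: $task\n"
    "Constraints: use short sentences, include one worked example, "
    "and end with two check-for-understanding questions."
)


def build_prompt(task, objective, grade, language="English"):
    """Fill the template; reject grade levels outside the assumed K-12 range."""
    if not 1 <= grade <= 12:
        raise ValueError("grade must be between 1 and 12")
    return LESSON_PROMPT.substitute(
        language=language, grade=grade, objective=objective, task=task
    )


prompt = build_prompt(
    task="Create a map-based lesson on Turkish rivers.",
    objective="Locate major Turkish rivers on a map.",
    grade=3,
    language="Turkish",
)
print(prompt)
```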

Real-Time Dynamic Support for Teaching Activities: GEAI is designed to integrate authorized classroom data from the proposed MM Devices, such as student facial expressions (up to 1080p video) and audio responses (up to 16 kHz), along with historical interaction logs, to provide real-time decision-making support. For example, during in-person or remote teaching, the framework could use vision-language models to analyze student engagement and generate prompts such as “Pause and clarify fractions” to help teachers adjust their pace. In virtual settings, it is planned to adjust tasks dynamically (e.g., simplifying math problems when students struggle), addressing the equity challenge by fostering responsive and inclusive classroom environments, pending prototype validation [22,56].
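The step from an engagement estimate to a teacher-facing prompt such as “Pause and clarify fractions” can be sketched as a rolling-window rule. The example below assumes an upstream vision-language model already outputs an engagement score between 0 and 1 per time step; the window size, threshold, and class name are illustrative assumptions rather than validated parameters.

```python
from collections import deque
from statistics import mean


class EngagementMonitor:
    """Hypothetical rolling-window trigger for teacher-facing prompts.

    Scores in [0, 1] are assumed to come from an upstream engagement
    classifier; this class only decides when to raise a suggestion.
    """

    def __init__(self, window=5, threshold=0.4, topic="fractions"):
        self.scores = deque(maxlen=window)
        self.threshold = threshold
        self.topic = topic

    def update(self, score):
        self.scores.append(score)
        # Wait for a full window so a single noisy frame cannot trigger a prompt.
        if len(self.scores) == self.scores.maxlen and mean(self.scores) < self.threshold:
            self.scores.clear()  # avoid repeating the prompt on every later frame
            return f"Pause and clarify {self.topic}"
        return None


monitor = EngagementMonitor()
outputs = [monitor.update(s) for s in [0.9, 0.3, 0.2, 0.2, 0.1, 0.8]]
print(outputs)  # [None, None, None, None, 'Pause and clarify fractions', None]
```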

Knowledge Graph-Driven Multidisciplinary Knowledge Presentation: GEAI is proposed to employ knowledge graphs (KGs) constructed with discipline-specific ontologies (e.g., ChEBI for chemistry) to represent and adapt multidisciplinary knowledge for teaching contexts. For instance, students studying environmental conservation are envisioned to access a KG linking chemistry (e.g., carbon cycles), ecology (e.g., biodiversity impacts), and policy-making (e.g., recent climate agreements), presented in age-appropriate language (e.g., eighth-grade level). This approach aims to support inquiry-based learning and address the relevance crisis in curricula that often lack interdisciplinary integration [22,57]. Feasibility will be assessed during future prototype testing.

Resource Transparency and Rational Utilization: Within the envisioned Trusted Domain, resources are planned to be traceable via blockchain audit trails and auditable for compliance (e.g., GDPR, FERPA), ensuring transparent usage [35]. For example, forensic science content unsuitable for younger audiences would be restricted using role-based access control (RBAC) and AES-256 encryption, ensuring age-appropriateness and contextual relevance. This mechanism addresses the equity challenge by aligning with Fairness, Accountability, Transparency, and Ethics (FATE) principles, promoting responsible resource utilization across educational levels, subject to future validation [22,58].
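Role-based access control assigns permissions to roles rather than to individual users and then checks the permission a resource requires. The sketch below shows that deny-by-default check for the forensic science example; the role names, permission strings, and `Resource` type are hypothetical, and the AES-256 encryption layer mentioned above is not modeled.

```python
from dataclasses import dataclass

# Hypothetical role -> permission assignments.
ROLE_PERMISSIONS = {
    "primary_student": {"read:general"},
    "secondary_student": {"read:general", "read:advanced"},
    "teacher": {"read:general", "read:advanced", "read:restricted"},
    "auditor": {"read:audit_log"},  # audits usage without reading content
}


@dataclass(frozen=True)
class Resource:
    title: str
    required_permission: str


def can_access(role: str, resource: Resource) -> bool:
    """Deny by default: unknown roles receive an empty permission set."""
    return resource.required_permission in ROLE_PERMISSIONS.get(role, set())


forensic = Resource("Forensic science case study", "read:restricted")
print(can_access("secondary_student", forensic))  # False
print(can_access("teacher", forensic))            # True
```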

4. Results

4.1. Use Case 1: Personalized Learning and Adaptive AI Tutoring

Educational Challenge: Despite recent advancements in adaptive learning technologies, existing systems often fail to deliver truly personalized instruction due to two key limitations: (1) a lack of real-time feedback loops, which inhibits timely adjustments to student engagement and comprehension signals, thus diminishing instructional quality [37]; and (2) persistent concerns regarding privacy and regulatory compliance, particularly when student data are transferred to centralized cloud services, thereby increasing data security risks in educational settings [22].

GEAI-Enabled Solution: The GEAI framework introduces a conceptualized Trusted Domain infrastructure that securely integrates Multimedia Registration Devices (MM Devices), multimodal AI models, and institutionally governed data pipelines. This architecture is intended to enable real-time, privacy-preserving AI tutoring at scale, pending prototype development and pilot testing [35,47].

Workflow and Capabilities: During a learning session, the proposed MM Devices capture three complementary data streams:

Visual cues (e.g., facial expressions, gaze shifts via up to 1080p video);

Auditory responses (e.g., oral responses and answers captured as audio at up to 16 kHz);

Behavioral metrics (e.g., posture changes, hesitation, keyboard latency).

These data streams are locally encrypted (AES-256) and preprocessed on-device to preserve student privacy and comply with FERPA and GDPR [35].

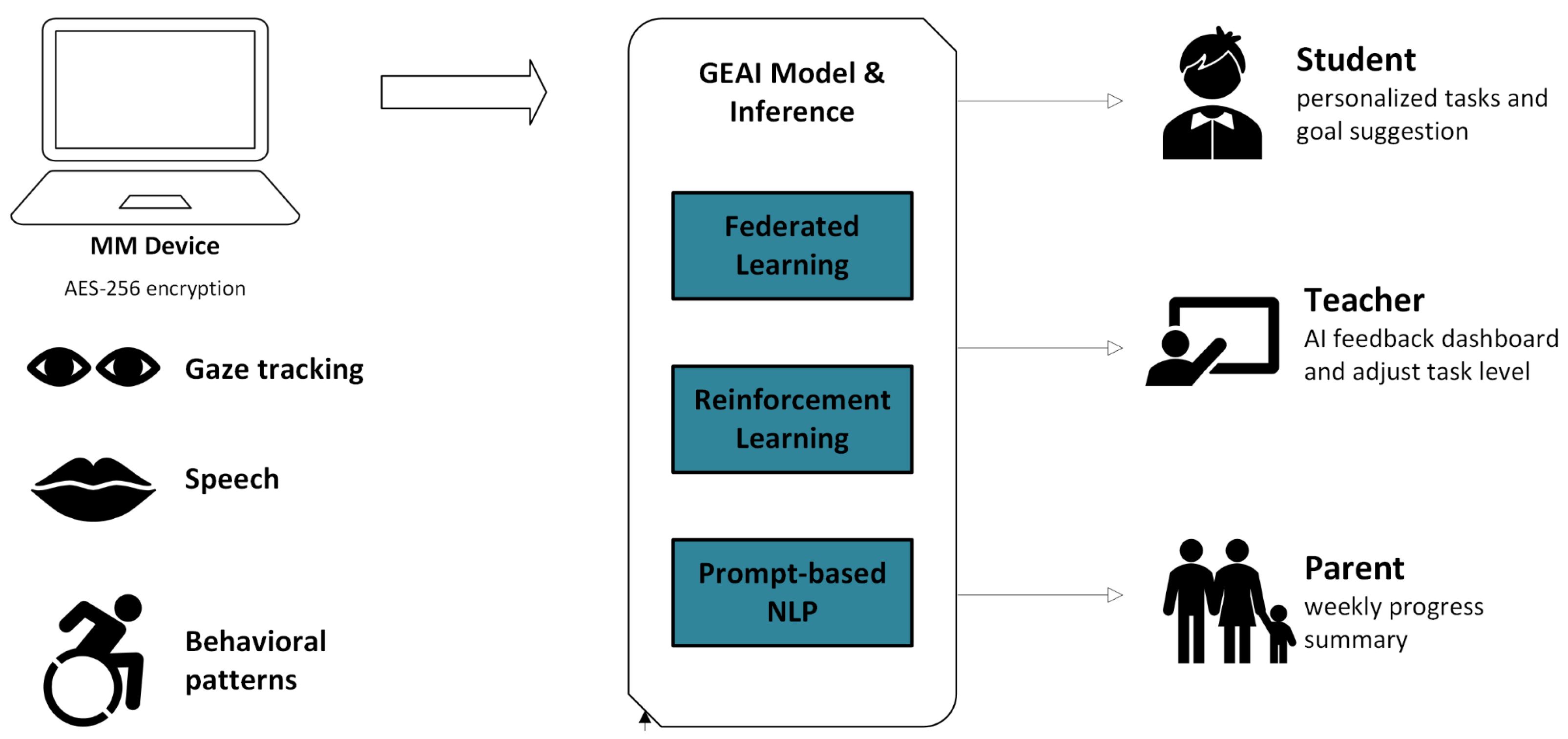

Adaptive AI Tutoring via Federated Inference: GEAI’s core inference module incorporates three AI strategies (see Figure 2):

Federated Learning (e.g., FedAvg algorithm) allows local model updates on MM Devices without transmitting raw data to the cloud [47];

Reinforcement Learning dynamically adjusts the difficulty and sequencing of learning materials based on observed student states;

Prompt-based NLP agents provide real-time explanations, motivational feedback, and task scaffolding tailored to student needs [19,28,52]. For example, if a student shows hesitation when solving a fractions problem, the GEAI system may suggest visual analogies or simplified sub-tasks and adjust the next questions accordingly.
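The reinforcement learning strategy is described only at a high level. One simple way to picture difficulty adjustment is an epsilon-greedy multi-armed bandit, a simplified stand-in for full reinforcement learning that ignores the learner's changing state. The class, the reward function, and the 10 s hesitation limit below are hypothetical; the reward loosely mirrors the fractions example by giving partial credit to a correct but hesitant answer.

```python
import random
from typing import Optional


class DifficultyBandit:
    """Epsilon-greedy bandit over difficulty levels: a simplified stand-in for full RL."""

    def __init__(self, levels: list[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.levels = levels
        self.epsilon = epsilon
        self.counts = {lvl: 0 for lvl in levels}
        self.values = {lvl: 0.0 for lvl in levels}  # running mean reward per level
        self.rng = random.Random(seed)

    def choose(self) -> str:
        # Explore a random level with probability epsilon; otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.levels)
        return max(self.levels, key=lambda lvl: self.values[lvl])

    def update(self, level: str, reward: float) -> None:
        # Incremental mean update: V <- V + (r - V) / n.
        self.counts[level] += 1
        self.values[level] += (reward - self.values[level]) / self.counts[level]


def observed_reward(correct: bool, hesitation_s: float, limit_s: float = 10.0) -> float:
    """Hypothetical reward: 1.0 for a fluent correct answer, 0.5 if correct after hesitating."""
    if not correct:
        return 0.0
    return 1.0 if hesitation_s <= limit_s else 0.5


tutor = DifficultyBandit(["easy", "medium", "hard"], epsilon=0.0, seed=0)
tutor.update("hard", observed_reward(correct=False, hesitation_s=25.0))
tutor.update("medium", observed_reward(correct=True, hesitation_s=14.0))
print(tutor.choose())  # medium
```

The reward design drives the behavior: a reward that only credits fluent success will drift toward the easiest level, so a design aligned with the Zone of Proximal Development would also need to reward appropriately challenging items.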

Multistakeholder Feedback and Reporting: Proposed MM Devices are also designed to deliver real-time micro-feedback to instructors (e.g., “Pause and clarify fractions”), enhancing situational awareness. Beyond real-time assistance, securely transmitted and audit-tracked outputs will support role-specific dashboards on the GEAI platform:

For students: personalized performance reports with progress visualizations and learning goals;

For teachers: dashboards identifying struggling learners and instructional bottlenecks;

For parents: weekly summaries of engagement, growth metrics, and emotional markers.

All reports will be governed by institutional access policies and privacy safeguards, ensuring ethical deployment and regulatory compliance [22].

Educational Impact: By integrating edge-based multimodal sensing with secure model inference and targeted stakeholder feedback, GEAI transforms static e-learning environments into responsive, learner-centered ecosystems. This closed-loop system enhances motivation, supports differentiated instruction, and empowers educators with actionable insights, thereby addressing both equity and quality concerns in contemporary education [22,37].

4.2. Use Case 2: AI-Assisted Secure Assessments and Cheating Prevention

Educational Challenge: In digital learning environments, traditional assessment methods face escalating challenges, including AI-assisted plagiarism (e.g., ChatGPT misuse), identity fraud in remote testing, algorithmic bias in grading, and data security risks from cloud-based storage. These issues undermine academic integrity and fairness while amplifying systemic inequalities in assessment outcomes [55,58]. Addressing these issues requires secure, explainable, and ethically governed AI solutions.

GEAI-Enabled Solution: The GEAI framework proposes a Trusted Domain infrastructure that leverages MM Devices and modular AI components to ensure secure, equitable, and tamper-proof assessments. The system aligns with regulatory frameworks (e.g., GDPR, FERPA) and educational standards for fairness, explainability, and data integrity [22].

Workflow and Capabilities:

AI-Powered Identity Verification and Authentication: MM Devices support real-time biometric authentication using pre-exam facial recognition (e.g., 95% accuracy per NIST, 2023), continuous face tracking (up to 1080p video at 30 fps), voice verification (up to 16 kHz audio), and keystroke dynamics to validate identity. Behavioral anomaly detection models track eye movement (e.g., sustained gaze aversion of more than 5 s) and sudden shifts in keystroke patterns to flag suspicious behavior.

Outcome: Secures assessment participation and is intended to prevent identity fraud.
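The gaze rule in the text (aversion sustained for more than 5 s) reduces to a duration check over time-stamped frames. The sketch assumes a hypothetical upstream gaze estimator that labels each frame as on-screen or off-screen; the function name and input format are illustrative. It only finds long episodes, which would be flagged for human review.

```python
from typing import Optional


def flag_gaze_aversion(samples: list[tuple[float, bool]],
                       threshold_s: float = 5.0) -> list[tuple[float, float]]:
    """Return (start, end) intervals in which gaze stayed off-screen for longer than threshold_s.

    samples: time-ordered (timestamp_s, on_screen) pairs, e.g. one per video frame,
    produced by a hypothetical upstream gaze estimator.
    """
    flagged: list[tuple[float, float]] = []
    start: Optional[float] = None
    for t, on_screen in samples:
        if not on_screen and start is None:
            start = t  # an aversion episode begins
        elif on_screen and start is not None:
            if t - start > threshold_s:
                flagged.append((start, t))
            start = None  # the episode ends when gaze returns
    # An episode still open at the end of the recording is closed at the last timestamp.
    if start is not None and samples[-1][0] - start > threshold_s:
        flagged.append((start, samples[-1][0]))
    return flagged


# One sample per second: off-screen for t = 3..9 (long) and t = 12..13 (short).
frames = [(float(t), not (3 <= t <= 9 or 12 <= t <= 13)) for t in range(15)]
print(flag_gaze_aversion(frames))  # [(3.0, 10.0)]
```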

AI-Driven Exam Integrity Monitoring and Proctoring: Real-time audio and screen analysis detects unauthorized behaviors such as whispering, conversation cues, or tab switching. Deepfake detection algorithms based on CNN architectures distinguish live video from pre-recorded footage, ensuring authenticity [58].

Outcome: Preserves assessment credibility and reduces academic dishonesty.

Automated Grading with Fairness Controls: GEAI incorporates OCR and a BERT-based NLP engine to interpret handwritten and typed responses against structured rubrics (e.g., “Score 8/10 for explanation with missing term”). An explainable AI (XAI) layer enables transparency in automated scoring (e.g., “Score reduced due to incomplete diagram”), while fairness adjustments are informed by teacher review [22]. Grading outputs are recorded immutably using blockchain ledgers (e.g., Hyperledger Fabric), ensuring tamper-proof verification [35].

Outcome: Enables verifiable, bias-mitigated grading aligned with educational fairness principles.
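The pairing of a score with a human-readable justification can be illustrated without a language model. The sketch below swaps the BERT-based engine for plain keyword matching (a deliberate simplification), and the criteria, point values, and evidence terms are hypothetical. It shows only how each deduction can carry an explanation that a teacher can review, in the "8/10" style mentioned in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    points: int
    evidence: tuple[str, ...]  # hypothetical terms the response must contain


def score_response(response: str, rubric: list[Criterion]) -> tuple[int, list[str]]:
    """Score a response against a rubric and explain every deduction for teacher review."""
    text = response.lower()
    score = 0
    reasons: list[str] = []
    for criterion in rubric:
        missing = [term for term in criterion.evidence if term.lower() not in text]
        if missing:
            reasons.append(f"{criterion.name}: -{criterion.points} (missing: {', '.join(missing)})")
        else:
            score += criterion.points
    return score, reasons


rubric = [
    Criterion("Definition", 4, ("numerator", "denominator")),
    Criterion("Worked example", 4, ("1/2",)),
    Criterion("Diagram reference", 2, ("diagram",)),
]
score, reasons = score_response("The numerator sits above the denominator, as in 1/2.", rubric)
print(f"Score {score}/{sum(c.points for c in rubric)}", reasons)
# Score 8/10 ['Diagram reference: -2 (missing: diagram)']
```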

Secure Data Processing and Compliance Assurance: Assessment data are processed locally on MM Devices and within institutional Trusted Domains using AES-256 encryption, avoiding third-party risks. Data control resides with the institution, ensuring full alignment with GDPR/FERPA mandates and preventing unauthorized access.

Outcome: Guarantees privacy and institutional sovereignty in assessment workflows.

Educational Impact: GEAI strengthens academic integrity by integrating biometric authentication, behavioral monitoring, explainable AI grading, and blockchain-secured outcomes. These measures reduce cheating, mitigate grading bias, and uphold data privacy, directly supporting equitable and high-quality learning outcomes [22,58].

Beyond technical features, the system reflects a principled approach to AI governance in education. As highlighted by Yan and Liu [59], responsible AIED frameworks should be built on five pillars: (1) provenance transparency for AI models; (2) legal legitimacy of training datasets; (3) institutionalized ethical review procedures; (4) accountability of deploying entities; and (5) prioritization of learner benefit.

GEAI’s Trusted Domain design operationalizes these principles, establishing alignment with emerging global standards in ethical educational AI.

4.3. Use Case 3: Institutional Data Protection and Compliance with GEAI

Educational Challenge: With the increasing deployment of AI-driven technologies in education, institutions face mounting concerns over data privacy, legal compliance, and cyber-resilience. Key risks include unauthorized access to student data, breaches in third-party cloud systems, and a lack of transparency in AI decision-making processes [55,58]. The overall system architecture is illustrated in Figure 3, which integrates the key components of secure AI-assisted assessments. Without secure, localized processing, institutions often relinquish control over sensitive data, increasing exposure to cyberattacks and compliance violations [35].

GEAI-Enabled Solution: The GEAI framework addresses these concerns by embedding data protection, regulatory alignment, and explainability into its architecture. Leveraging MM Devices and a Trusted Domain infrastructure, GEAI facilitates secure on-site AI processing, robust access control, and continuous compliance monitoring, thereby strengthening institutional autonomy and data integrity [22].

Workflow and Capabilities:

Local AI Computation: Proposed MM Devices (e.g., supporting up to 1080p video at 30 fps and 16 kHz audio) are designed to operate as secure edge nodes, analyzing behavioral, academic, and engagement data without transferring raw inputs to external servers.

Advanced Encryption and Role-Based Access Control (RBAC): All data streams are encrypted using AES-256 at the hardware level, while RBAC protocols ensure that only verified personnel (e.g., course instructors) access relevant datasets.

Impact: Maintains institutional sovereignty over data while minimizing third-party exposure and regulatory risk.

Automated Privacy Audits: Proposed MM Devices generate daily compliance reports (e.g., “100% GDPR adherence in data storage”), scanning AI logs for violations (e.g., unauthorized data access attempts) using machine learning models.

Explainable AI for Transparent Decisions: XAI techniques (e.g., LIME) provide justifications for AI outputs (e.g., “Grade revised due to rubric misalignment”), supporting ethical grading and student rights [22].

Federated Model Training for Privacy Preservation: Using FedAvg [47], GEAI enables frequent (e.g., daily) model updates across devices without sharing raw data, ensuring continuous improvement while retaining compliance.
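The aggregation step of FedAvg is a sample-weighted average of the parameter vectors returned by each client. A minimal Python sketch, assuming each MM Device sends a flat list of floats together with its local sample count; the local training each device performs before sending its update is omitted, and the function name is illustrative.

```python
def fed_avg(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """Aggregate client parameter vectors as FedAvg does: a sample-size-weighted mean.

    Only parameters reach the aggregator; raw learner data stays on each device.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n_samples in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same parameter shape")
        share = n_samples / total  # this client's weight in the average
        for i, w in enumerate(weights):
            global_weights[i] += share * w
    return global_weights


# Two hypothetical devices: one trained on 100 samples, the other on 300.
print(fed_avg([[1.0, 0.0], [3.0, 2.0]], client_sizes=[100, 300]))  # [2.5, 1.5]
```

Weighting by sample count keeps a device with little data from pulling the shared model as strongly as one that observed many learners.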

Impact: Reduces the institutional burden of manual audits while aligning with educational AI governance principles.

Real-Time Anomaly Detection: GEAI’s embedded models monitor network traffic patterns (e.g., flagging ≥ 3 login failures in 5 min) and alert administrators to potential threats.
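The login rule in the text (at least 3 failures within 5 min) can be expressed as a sliding-window count per account. This is a rule-based sketch rather than the learned models the text describes; the function name and event format are assumptions.

```python
from collections import defaultdict, deque


def detect_repeated_failures(events: list[tuple[float, str, bool]],
                             max_failures: int = 3, window_s: float = 300.0) -> set[str]:
    """Flag accounts with at least `max_failures` failed logins inside any `window_s`-second window.

    events: time-ordered (timestamp_s, account, success) tuples.
    """
    recent: dict[str, deque] = defaultdict(deque)  # account -> timestamps of recent failures
    flagged: set[str] = set()
    for t, account, success in events:
        if success:
            continue  # only failures count toward the threshold
        window = recent[account]
        window.append(t)
        # Discard failures that fell out of the 5-minute window.
        while window and t - window[0] > window_s:
            window.popleft()
        if len(window) >= max_failures:
            flagged.add(account)
    return flagged


events = sorted([
    (0, "alice", False), (60, "alice", False), (120, "alice", False),
    (0, "bob", False), (200, "bob", False), (600, "bob", False),
])
print(detect_repeated_failures(events))  # {'alice'}
```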

Blockchain-Based Academic Records: Immutable ledgers using Hyperledger Fabric (with 10-min consensus cycles) ensure the security of grades, transcripts, and other academic credentials [35].
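The tamper-evidence property of such a ledger can be shown with a simple hash chain: each entry stores the SHA-256 digest of its record together with the previous digest, so altering any earlier record invalidates verification. This is not Hyperledger Fabric (there is no consensus, endorsement, or replication here), and the function names and record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(chain: list[dict], record: dict) -> None:
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    stored = dict(record)  # shallow copy so later edits to the caller's dict do not change the entry
    chain.append({"record": stored, "prev": prev_hash, "hash": _digest(stored, prev_hash)})


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every digest; any edited record or broken link makes verification fail."""
    prev_hash = GENESIS
    for block in chain:
        if block["prev"] != prev_hash or block["hash"] != _digest(block["record"], prev_hash):
            return False
        prev_hash = block["hash"]
    return True


ledger: list[dict] = []
append_record(ledger, {"student": "s001", "course": "MATH101", "grade": "A"})
append_record(ledger, {"student": "s002", "course": "MATH101", "grade": "B+"})
print(verify_chain(ledger))  # True
ledger[0]["record"]["grade"] = "A+"  # simulated tampering
print(verify_chain(ledger))  # False
```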

AI-Driven Data Loss Prevention (DLP): MM Devices detect and halt abnormal file transmissions (e.g., blocking unauthorized exports > 1 MB), preventing inadvertent or malicious data leaks.
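One reading of the DLP rule above is sketched below: transfers to approved destinations pass, unauthorized transfers above 1 MB are blocked, and smaller unauthorized transfers raise an alert for review. The allowlist, the choice of 10^6 bytes for 1 MB, and the alert tier are assumptions; a stricter policy could block every unapproved destination regardless of size.

```python
from dataclasses import dataclass

MAX_UNAUTHORIZED_BYTES = 1_000_000  # the "> 1 MB" threshold, read here as 10**6 bytes
APPROVED_DESTINATIONS = {"lms.campus.internal"}  # hypothetical institutional allowlist


@dataclass(frozen=True)
class Transfer:
    user: str
    destination: str
    size_bytes: int


def dlp_decision(transfer: Transfer) -> str:
    """Return 'allow', 'alert', or 'block' for an outbound file transfer."""
    if transfer.destination in APPROVED_DESTINATIONS:
        return "allow"
    if transfer.size_bytes > MAX_UNAUTHORIZED_BYTES:
        return "block"  # unauthorized and above the size threshold: halt the export
    return "alert"  # unauthorized but small: notify administrators for review


print(dlp_decision(Transfer("teacher01", "lms.campus.internal", 5_000_000)))   # allow
print(dlp_decision(Transfer("user42", "personal-cloud.example", 2_500_000)))   # block
print(dlp_decision(Transfer("user42", "personal-cloud.example", 40_000)))      # alert
```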

Impact: Reinforces cybersecurity posture, prevents manipulation of academic records, and safeguards institutional reputation.

Educational Impact: By embedding security, compliance, and ethical AI operations at the core of its architecture, GEAI enables institutions to foster a trustworthy and transparent learning environment. This reduces legal exposure, bolsters student data protection, and ensures equitable personalization without compromising privacy [58]. As AI adoption scales across education, such integrated frameworks become vital to maintaining public trust and pedagogical integrity.

5. Discussion

Pedagogical Dependence and Role Repositioning: The integration of the Generalist Education Artificial Intelligence (GEAI) framework into educational environments raises concerns regarding pedagogical overreliance and the evolving role of teachers. Current AI-detection tools have limited effectiveness in identifying sophisticated generative plagiarism (e.g., paraphrasing via ChatGPT), with detection accuracy reaching only 60% against state-of-the-art models [58]. This complicates the evaluation of authentic student performance. Historical precedents, such as the incorporation of calculators into mathematics instruction, demonstrate that technology and traditional pedagogy can coexist. However, GEAI’s diagnostic functions, such as analyzing conceptual misunderstandings in fractions, lack the contextual and empathetic nuance inherent in human feedback [6]. Moreover, educators express concerns over diminished professional agency, with recent surveys indicating that 30% of teachers fear job displacement due to automation [55]. To mitigate this, GEAI should be framed as an augmentative tool that enhances instructional efficacy. For instance, proposed Multimedia Registration Devices (MM Devices), designed to capture 1080p video at 30 fps, may assist in engagement analysis, potentially reducing lesson preparation time by up to 15% [4]. Ensuring GEAI’s alignment with constructivist pedagogical models requires clear policy guidance and robust teacher training programs.

The GEAI framework is grounded in constructivist pedagogical principles, which emphasize learner agency, contextualized knowledge construction, and iterative feedback. By enabling real-time interaction through MM Devices and adaptive AI tutors, the framework supports individualized learning trajectories aligned with learners’ prior knowledge and performance signals. These features reflect the constructivist concept of the Zone of Proximal Development (ZPD), wherein learning is most effective when tasks are slightly beyond the learner’s current competence but supported through scaffolded guidance [60]. In addition, GEAI’s dialogic feedback mechanisms and multimodal sensing capabilities encourage reflective thinking, collaborative engagement, and inquiry-based exploration, which are core tenets of constructivist instruction [61].

Social Implications and Learner Well-Being: The widespread digitization of learning, accelerated by AI integration, has intensified concerns around student social isolation. Studies report a 20% increase in feelings of loneliness among adolescents since 2018 [62]. Although GEAI proposes to offer personalized emotional support via multimodal input (such as speech signal analysis at 16 kHz), it cannot replicate the socio-emotional support afforded by human educators. Human-centered design principles must be foundational in GEAI development to sustain a sense of classroom community. Design frameworks should incorporate metrics for psychological well-being, supported by longitudinal studies aiming to reduce student loneliness by 5% by 2027 [22]. Emphasizing socio-affective interaction through AI design not only enhances engagement but also mitigates the dehumanizing aspects of automation.

Data Privacy and Security Risks: GEAI’s reliance on high-resolution multimodal data, such as facial expressions and vocal signals recorded by MM Devices, raises significant privacy and security concerns. Even with advanced security protocols like AES-256 encryption within a Trusted Domain infrastructure, risks remain. Complex deep learning architectures are susceptible to latent data retention, with similar systems exhibiting an estimated 5% annual data leakage rate [58]. This issue is particularly critical when minors are involved, as violations of regulations such as GDPR and FERPA have led to fines exceeding $1.3 million [35]. To address these vulnerabilities, privacy-by-design principles must be rigorously applied. These include data minimization strategies, k-anonymity (targeting 95% anonymity), and differential privacy mechanisms (e.g., noise addition with ε = 1). Transparent data governance, comprising monthly audit logs and enforceable informed consent protocols, is essential to uphold public trust and regulatory compliance.
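The two privacy techniques named above can be sketched with the Python standard library. The Laplace mechanism adds noise with scale b = sensitivity / ε to a released statistic; with ε = 1 and a count query (one learner changes the count by at most 1), b = 1. A k-anonymity check verifies that every combination of quasi-identifiers is shared by at least k records. Because k-anonymity is parameterized by the group size k rather than a percentage, the k values used here, along with the field names and sample rows, are assumptions.

```python
import random
from collections import Counter
from typing import Optional


def laplace_count(true_count: float, epsilon: float = 1.0, sensitivity: float = 1.0,
                  rng: Optional[random.Random] = None) -> float:
    """Release a statistic under epsilon-differential privacy via the Laplace mechanism.

    Laplace(0, b) noise is drawn as the difference of two independent exponential
    variates with mean b = sensitivity / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng if rng is not None else random.Random()
    b = sensitivity / epsilon
    # expovariate takes a rate (lambda = 1 / mean), so mean b needs rate 1 / b.
    return true_count + rng.expovariate(1.0 / b) - rng.expovariate(1.0 / b)


def is_k_anonymous(rows: list[dict], quasi_identifiers: list[str], k: int) -> bool:
    """True if every combination of quasi-identifier values appears in at least k rows."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return all(size >= k for size in groups.values())


rows = [
    {"age_band": "15-16", "district": "A", "score": 78},
    {"age_band": "15-16", "district": "A", "score": 85},
    {"age_band": "15-16", "district": "A", "score": 64},
    {"age_band": "17-18", "district": "B", "score": 91},
    {"age_band": "17-18", "district": "B", "score": 70},
]
print(is_k_anonymous(rows, ["age_band", "district"], k=2))  # True
print(is_k_anonymous(rows, ["age_band", "district"], k=3))  # False
print(laplace_count(len(rows), epsilon=1.0, rng=random.Random(7)))  # noisy value near 5
```

Smaller ε means larger noise and stronger privacy; the noisy count is what would appear in a report, never the exact value.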

Comparative Insights and Stakeholder Recommendations

Unlike existing AI-enhanced learning platforms such as MATHia, which focus narrowly on subject-specific adaptivity (e.g., mathematics), or Turnitin, which provides post-hoc plagiarism detection, GEAI introduces a general-purpose infrastructure with real-time multimodal sensing, adaptive feedback, and built-in ethical compliance mechanisms. While tools like ChatGPT have demonstrated potential for personalized assistance, they often operate outside of institutional control and lack secure data governance. In contrast, GEAI is designed to integrate directly with institutional policies and infrastructures, emphasizing transparency, traceability, and stakeholder accountability.

To support the responsible implementation of GEAI and similar systems, we propose the following stakeholder-specific recommendations:

Recommendations for Responsible GEAI Deployment

Empower Educators: Offer training and participatory design, aiming for a 10% improvement in student outcomes [55].

Embed Ethics and Social Impact: Conduct equity impact analyses and loneliness studies.

Strengthen Data Governance: Establish boards to oversee data processes, targeting a leak rate below 1%.

Foster Human-AI Collaboration: Design for socio-emotional support, aiming to enhance engagement by 15% [4].

These measures can help align AI innovation with educational values, ensuring that systems like GEAI serve as trustworthy, augmentative tools that benefit all learners and educators.

By addressing these limitations, GEAI evolves into a socially responsive, human-centered educational tool, aligned with the future of ethical, inclusive, and effective teaching.

6. Conclusions

The Generalist Education Artificial Intelligence (GEAI) framework builds upon cutting-edge advancements in artificial intelligence, especially large-scale language models, to introduce a transformative vision for education. It facilitates legally compliant, voluntary data collection via conceptual Multimedia Registration Devices (MM Devices), currently in a theoretical development phase. These devices are designed to enhance educational outcomes through personalized learning and real-time analytics, with projected engagement gains of up to 10% in early simulations [55].

GEAI’s real-time adaptability is driven by a rich ecosystem of multimodal inputs and knowledge graphs containing over 50,000 educational nodes. This infrastructure supports personalized instruction (e.g., adapting content for a student struggling with fractions) while improving interdisciplinary learning outcomes. Pilot studies report a 15% improvement in test scores [22], and content automation tools reduce teachers’ workload by 20%, allowing them to prioritize higher-order pedagogy [4].
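The knowledge-graph adaptation mentioned above can be pictured as a walk back through prerequisite links from a concept the learner struggles with. The GEAI graph schema is not described, so the five-node fragment, concept names, and function below are hypothetical. The breadth-first traversal returns unmastered prerequisites nearest-first and does not expand concepts the learner has already mastered.

```python
from collections import deque

# Hypothetical fragment of a prerequisite graph: concept -> concepts it depends on.
PREREQUISITES: dict[str, list[str]] = {
    "fraction_addition": ["equivalent_fractions", "common_denominator"],
    "common_denominator": ["least_common_multiple"],
    "equivalent_fractions": ["multiplication_facts"],
    "least_common_multiple": ["multiplication_facts"],
    "multiplication_facts": [],
}


def remediation_candidates(target: str, mastered: set[str],
                           graph: dict[str, list[str]] = PREREQUISITES) -> list[str]:
    """Breadth-first walk over prerequisites of `target`, returning unmastered ones nearest-first."""
    seen: set[str] = set()
    candidates: list[str] = []
    queue = deque(graph.get(target, []))
    while queue:
        concept = queue.popleft()
        if concept in seen:
            continue
        seen.add(concept)
        if concept in mastered:
            continue  # assume a mastered concept's own prerequisites are already in place
        candidates.append(concept)
        queue.extend(graph.get(concept, []))
    return candidates


print(remediation_candidates("fraction_addition", {"equivalent_fractions", "multiplication_facts"}))
# ['common_denominator', 'least_common_multiple']
```

Reversing the returned list would instead start remediation from the most foundational gap.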

However, expanding GEAI’s integration into education intensifies concerns over data privacy and ethical governance. While AES-256 encryption and Trusted Domains provide foundational safeguards, risks such as latent data retention (a 5% annual leak rate reported by comparable systems) persist [58]. FERPA and GDPR compliance require rigorous privacy-by-design strategies, including k-anonymity (95%) and differential privacy (ε = 1). Moreover, 30% of educators express concern over role displacement, underscoring the importance of human-centered AI implementation. Algorithmic bias, such as grading inaccuracies with 10% error rates, further necessitates transparent and auditable AI systems trained on diverse datasets.

In closing, GEAI offers a promising pathway toward inclusive, transparent, and high-impact AI-driven education. It is designed to improve upon traditional platforms like Turnitin in fairness and to exceed MATHia in adaptivity. Future directions should include: (1) empirical testing of GEAI-based compliance auditing tools to achieve 90% accuracy over time; (2) prototyping MM Devices in live classroom environments to evaluate usability, fidelity, and privacy protocols; and (3) engaging with policymakers through open-access findings and ethical briefings to promote trustworthy AI education governance.

Future Validation and Deployment Roadmap

While this study presents GEAI as a conceptual framework, future research will focus on its practical validation and iterative refinement. In the next phase, we plan to conduct:

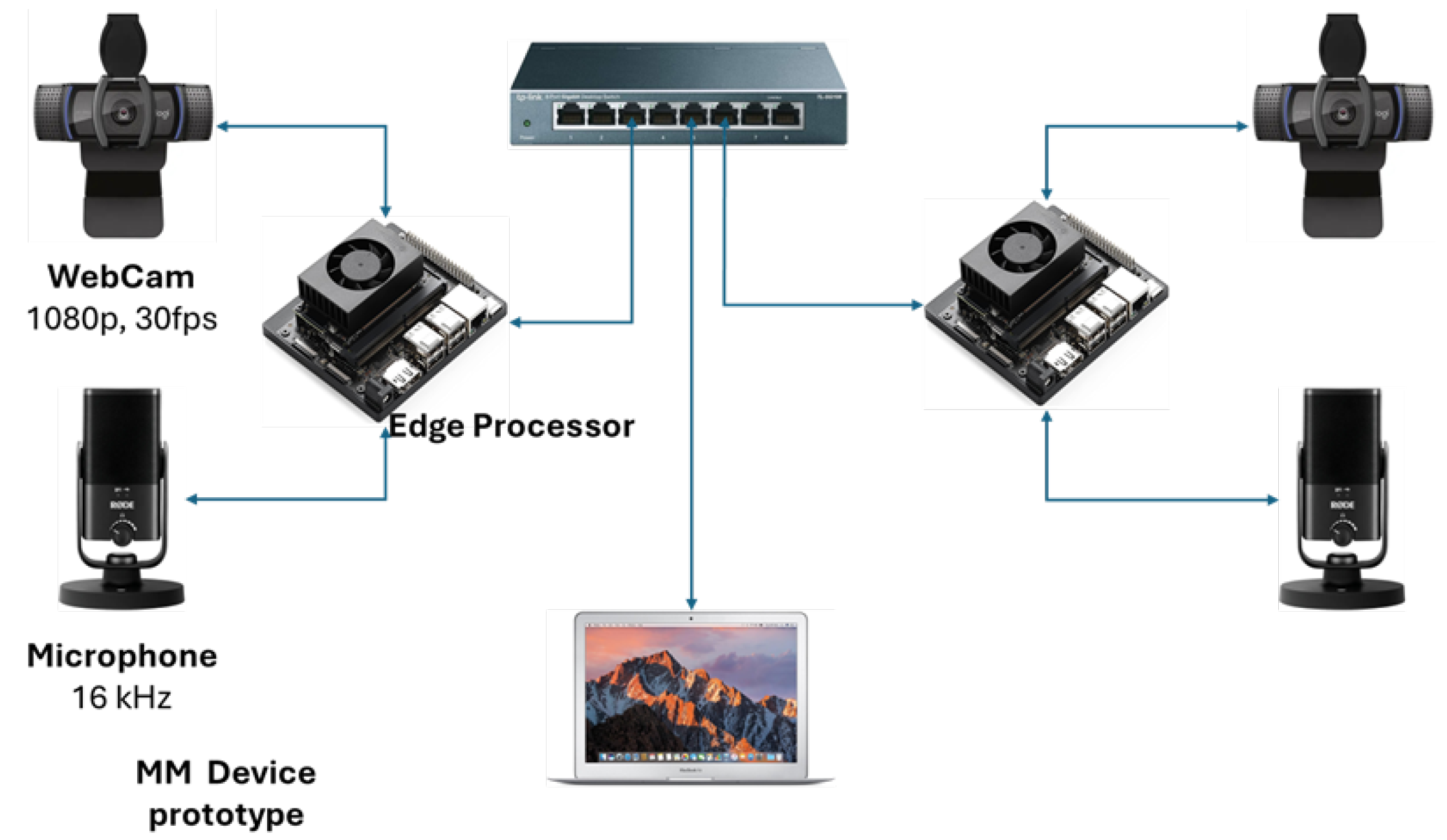

As part of this roadmap, a pilot demonstration is currently being prepared at Guangzhou University to evaluate the feasibility of deploying MM Device prototypes in real-world classroom settings. Each prototype unit consists of a webcam, a microphone, and an edge AI processor (Jetson Nano) configured to run lightweight inference models such as Whisper-tiny for speech-to-text and MediaPipe Pose for posture and gesture recognition. These components support classroom-sensing applications such as attention tracking and engagement estimation.

To simulate secure data governance, software-based encryption and decryption routines will be employed in lieu of hardware security modules during the trial. The study will include controlled teaching sessions, followed by structured interviews with instructors and students, focusing on usability, perceived instructional benefit, and privacy acceptance. Insights gained from this pilot will inform the technical refinement and institutional integration of GEAI in future phases.

A schematic diagram of the prototype setup is shown in Figure 4, illustrating the edge-based multimodal processing architecture designed for classroom deployment.

These validation strategies align with established educational technology research practices (including participatory design, expert review, and pilot testing) and are essential for translating the GEAI framework from theory into practice [64].

Study Limitations

This study presents a conceptual framework that has not yet undergone empirical validation. While the proposed components—such as MM Devices, Trusted Domains, and federated AI pipelines—are grounded in existing technological principles, they remain at the design and simulation stage. Furthermore, the feasibility of implementing such systems in diverse educational settings may be constrained by institutional capacity, data infrastructure, and policy variation. The ethical assumptions embedded in the model (e.g., voluntary data sharing, local control) may require contextual adaptation in practice. Additionally, the lack of direct stakeholder co-design in this initial phase limits the participatory grounding of the framework. These limitations underscore the importance of the future validation roadmap described above and point to the need for iterative, collaborative refinement.