Abstract

Accurate pigment classification is essential for the analysis and conservation of historical paintings. This study presents a non-invasive approach based on supervised machine learning for classifying pigments using image data acquired under three distinct spectral illumination conditions: visible-light reflectography (VIS), ultraviolet false-color imaging (UVFC), and infrared false-color imaging (IRFC). A dataset was constructed by extracting 32 × 32 pixel patches from pigment samples, resulting in 200 classes representing 40 unique pigments with five preparation variants each. A total of 600 raw images were acquired, from which 4000 image patches were extracted for feature engineering. Feature vectors were obtained from the VIS, UVFC, and IRFC images using statistical descriptors derived from the RGB channels. Among the evaluated classifiers, the random forest model achieved the highest accuracy (99.30%). The trained model was subsequently validated on annotated regions of reference paintings that emulate historical techniques, demonstrating its robustness and applicability. These results show that accurate pigment classification can be achieved with a minimal set of three illumination types, offering a practical and cost-effective alternative to hyperspectral imaging in real-world conservation practice. The proposed framework offers a lightweight, interpretable, and scalable solution for non-invasive pigment analysis in cultural heritage research that can be implemented with accessible imaging hardware and minimal post-processing.

1. Introduction

The accurate identification of painting pigments is a crucial aspect of art conservation, historical research, and authentication. The ability to determine the materials used in a painting provides valuable insights into an artist’s techniques, historical context, and restoration history. Traditional pigment analysis methods, such as X-ray fluorescence (XRF), Raman spectroscopy, and Fourier transform infrared spectroscopy (FTIR), are highly accurate but can be spatially limited and time-consuming, or they require specific conditions that make them impractical for routine, large-scale, or in situ analysis of fragile objects [1].

Non-invasive imaging techniques have been developed to overcome these limitations, preserving the integrity of artworks while providing meaningful data. However, many rely on subjective visual interpretation by human experts, which can introduce potential for error due to perceptual variation. While hyperspectral imaging offers improved classification accuracy, it typically requires complex instrumentation and significant computational resources. These factors limit its applicability in conservation practice [2].

A fundamental challenge in non-invasive pigment classification, particularly when utilizing a limited number of broad spectral bands, is the issue of spectral overlap [3,4,5]. Certain pigments, such as ultramarine blue and Prussian blue, exhibit highly similar spectral responses across the visible, ultraviolet, and near-infrared ranges, making their differentiation challenging solely based on these modalities. This inherent ambiguity necessitates careful consideration of the diagnostic resolution achievable with a reduced spectral set. Furthermore, the spectral response of pigments in real-world artworks can be significantly influenced by various factors beyond their intrinsic properties, including the type of binding medium used, the effects of aging, and various degradation processes [6,7]. Such variations can alter the pigment’s spectral signature, complicating accurate classification. A major challenge also arises from the common historical painting practices of pigment mixing and layering. Simple classification models, which often assume a single dominant pigment per analyzed region, may struggle to accurately resolve these complex compositions [3]. Finally, there is an inherent trade-off between non-invasiveness and diagnostic resolution. While non-invasive methods are crucial for preserving fragile artworks, they typically provide less detailed chemical information compared to other advanced spectroscopic techniques like XRF or Raman spectroscopy. Acknowledging these limitations upfront establishes a clearer problem statement, demonstrating how the proposed lightweight solution aims to provide a practical and accessible alternative that balances these challenges rather than offering a complete replacement for all analytical needs. Despite significant progress in hyperspectral pigment classification, there remains a need for simpler and more accessible solutions that maintain diagnostic accuracy.

This study introduces an efficient and non-invasive methodology for pigment classification based on imaging performed across three well-defined spectral bands: the ultraviolet (320–400 nm), visible (400–700 nm), and near-infrared (700–1000 nm) bands. By capturing the characteristic spectral responses of pigments under these illumination conditions, the proposed approach enables accurate classification while maintaining low computational complexity. Unlike hyperspectral imaging, which typically requires the processing of hundreds of contiguous spectral bands, this method relies on a reduced set of three strategically selected bands. This design choice significantly minimizes the burden of data acquisition and post-processing while still ensuring reliable performance [3].

The principal advantage of this approach lies in its operational efficiency and ease of implementation. The use of only three spectral bands allows for the rapid extraction of discriminative pigment features without the need for specialized hyperspectral instrumentation. As such, the method is particularly well-suited for conservators, heritage professionals, and researchers who require a fast and dependable tool for pigment identification in situ. The results indicate that spectral information acquired via visible-light reflectography (VIS), ultraviolet reflectography (RUV), and near-infrared reflectography (NIR) provides sufficient discriminatory power to support accurate pigment classification, thereby offering a pragmatic alternative to more complex and resource-intensive imaging systems [8].

2. Related Work

Recent advancements in pigment classification have focused on improving cultural heritage analysis by replacing invasive techniques with imaging-based alternatives. Traditional methods such as X-ray fluorescence (XRF), Raman spectroscopy, and Fourier transform infrared spectroscopy (FTIR) are highly accurate but can be spatially limited and time-consuming or require specific conditions that make them impractical for routine, large-scale, or in situ analysis of complex artworks. To address these limitations, non-invasive approaches such as Visible Spectral Imaging (VSI) and Hyperspectral Imaging (HSI) have been developed [3,9,10]. Early computational techniques for analyzing such data included Spectral Angle Mapping (SAM) and Principal Component Analysis (PCA), which proved effective in simplified scenarios but are often insufficient for dealing with complex pigment mixtures [11,12].

Machine learning and deep learning methodologies have since emerged as powerful tools capable of addressing these challenges. Notably, Pouyet et al. [13] introduced a deep neural network (DNN) for pigment classification in the short-wave infrared range, achieving robust multi-label performance on complex surfaces such as Tibetan thangkas. Their method significantly outperforms SAM in both speed and accuracy. Similarly, Liu et al. [11] reviewed the growing application of neural networks in hyperspectral pigment analysis, emphasizing their ability to model nonlinear relationships and manage high-dimensional spectral data effectively. Flachot et al. [14] approached the problem from a perceptual perspective, training networks to replicate human color constancy under variable lighting conditions using synthetic data. Their DeepCC model achieved strong performance in reflectance classification tasks, demonstrating the broader applicability of biologically inspired models in cultural heritage imaging.

Within the domain of visible spectral imaging, Wei et al. [9] proposed a multi-stage framework incorporating boundary segmentation, material recognition, and mixture proportion estimation. Their results highlighted the diagnostic potential of reflectance spectra while acknowledging limitations caused by pigment particle variability and spectral mixing. Expanding on this work, Liu et al. [10] developed a quadtree-based superpixel segmentation algorithm combined with geometric spectral descriptors for database-driven pigment identification, successfully automating region-level classification in polychrome surfaces. Reflectance unmixing strategies have also been explored. Valero et al. [12] demonstrated that transforming reflectance data into the logarithmic domain can enhance the performance of linear unmixing models, particularly in aged or stratified paint layers.

The present study builds on these developments by proposing a lightweight classification strategy that leverages three distinct illumination modalities (visible, ultraviolet, and infrared) to extract compact statistical features from reflectographic images. This design significantly reduces the complexity of data acquisition and processing in comparison to full-spectrum hyperspectral imaging, offering a practical and efficient solution for use in conservation contexts.

Further contributions to scalable pigment analysis have emerged from the combination of deep learning and simulation-based workflows. Kogou et al. [15] employed Kohonen Self-Organizing Maps (SOMs) to cluster high-resolution reflectance imaging data from mural paintings in the Mogao Caves, enabling the identification of materials in both accessible and inaccessible regions. Their results underscore the potential of unsupervised learning in processing large-scale cultural heritage datasets. In the field of XRF imaging, Jones et al. [16] addressed the scarcity of training data by generating synthetic pigment spectra using the Fundamental Parameters (FP) method. A convolutional neural network (CNN) trained on these synthetic spectra achieved near-perfect results on simulated inputs and, following transfer learning with a limited number of real measurements, reached a classification accuracy of 96%. Their approach illustrates the feasibility of combining physics-based simulations with deep learning for pigment identification. Ugail et al. [17] developed a transfer learning pipeline that combines ResNet50 and support vector machines (SVMs) for authorship and stylistic attribution in Renaissance paintings. By incorporating edge detection algorithms, their model captures brushstroke-level patterns, demonstrating how stylistic and material analysis can be unified through deep feature extraction.

Kleynhans et al. [18] returned the focus to pigment classification using a supervised one-dimensional CNN trained on reflectance spectra obtained from 14th-century illuminated manuscripts. Their end-to-end approach removes the need for manual clustering and produces accurate pigment maps with a mean per-class accuracy exceeding 98%. Building upon this, Radpour et al. [19] extended the training set to include ten 15th-century manuscripts and introduced a dual-CNN model: one trained on raw reflectance spectra and the other on first-derivative data. The derivative-based model exhibited improved generalization, emphasizing the importance of spectral preprocessing in enhancing class separability.

More recent studies have begun to address the challenges posed by pigment mixtures and layered structures. Taufique and Messinger [20] applied the opaque form of the Kubelka–Munk theory to estimate pigment concentrations from hyperspectral data, achieving higher accuracy than traditional reflectance models. Wang et al. [21] applied spectral unmixing techniques to the surfaces of painted artifacts, explicitly accounting for spectral variability, an essential consideration in the analysis of complex heritage materials. From a deep learning perspective, Rohani et al. [22] proposed a neural network-based spectral unmixing model capable of effectively separating pigments in hyperspectral images of paintings. Multi-label classification schemes were examined by Haidar and Oramas [23], who compared training strategies for hyperspectral remote sensing images, a relevant foundation for the modeling of overlapping pigment distributions. Xu et al. [24] introduced a deep learning framework for analyzing stratified XRF data in painted surfaces, showing that layered pigment compositions can be automatically inferred with high accuracy.

Collectively, these efforts reflect a clear progression toward intelligent, automatic, and domain-adaptable pigment classification systems. Leveraging a range of imaging modalities, including reflectance and XRF, and incorporating both supervised and unsupervised learning strategies, these innovations increasingly rely on simulated and transfer-learned datasets. As the field evolves, such methodologies are expected to deliver scalable, non-invasive solutions tailored to the analytical demands of cultural heritage science.

3. Dataset Preparation

In the initial stage of the study, a reference database was developed, comprising samples of pigments and organic dyes commonly employed by artists in historical painting layers. To facilitate this, twenty medium-density fiberboard (MDF) panels, each measuring 45 × 35 cm, were prepared as substrates. Each panel was coated with five uniform layers of a 10% glue–chalk ground formulated from rabbit-skin glue and Champagne chalk. The surfaces were subsequently refined by sequential sanding using abrasive papers with grit sizes of 500, 800, and 1000 to achieve a smooth and consistent painting ground.

Following surface preparation, pigment and dye samples were applied using two traditional binding media: (i) a tempera emulsion consisting of egg yolk and distilled water in a 1:1 ratio and (ii) a linseed oil-based varnish derived from sun-bleached linseed oil. The resulting painted samples encompassed both aqueous and oil-based techniques, reflecting common historical practices.

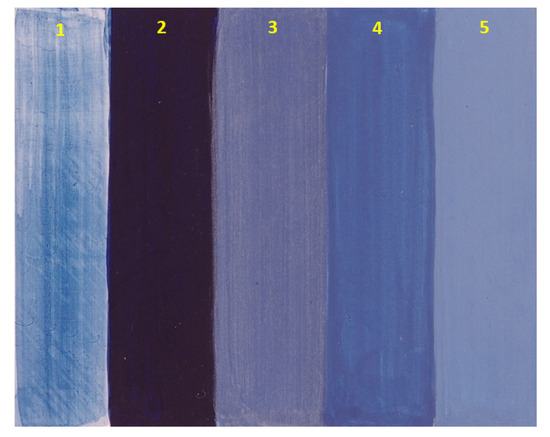

Pigments and organic dyes commonly employed by artists in historical painting layers were used as reference samples. These included but were not limited to traditional pigments such as ultramarine blue, umber, iron oxide red, vegetable black, and titanium white. They were categorized into seven chromatic families: white, yellow, red, green, blue, brown, and black. All pigments and dyes stored in the database were supplied by Kremer Pigments GmbH & Co. KG (Aichstetten, Germany). For each pigment–binder combination, the sample was structured into five discrete sections, arranged according to the following standardized scheme (Figure 1):

- Diluted transparent paint: pigment or dye;

- Opaque paint: pigment or dye;

- Opaque paint: pigment or dye + lead white (1:1);

- Opaque paint: pigment or dye + zinc white (1:1);

- Opaque paint: pigment or dye + titanium white (1:1).

Figure 1.

Example of a pigment sample (Prussian Blue), structured into five layers: (1) diluted transparent layer; (2) opaque layer; (3) opaque layer mixed with lead white (1:1); (4) opaque layer mixed with zinc white (1:1); and (5) opaque layer mixed with titanium white (1:1). Samples were prepared with tempera emulsion and linseed oil varnish.

Reference samples of pigments and organic dyes were imaged using a medium-format digital camera (Pentax 645Z, 50 megapixels; Tokyo, Japan) equipped with a sensor sensitive to a broad spectral range of approximately 320–1000 nm [25]. Imaging was conducted using reflectography techniques under three distinct illumination conditions, each corresponding to a specific spectral band and wavelength range:

- Ultraviolet (UV) imaging utilized a black-light blue ultraviolet source emitting at a peak wavelength of 365 nm, covering the 320–400 nm band.

- Visible light (VIS) imaging employed halogen photographic lamps, covering the 400–700 nm band.

- Near-infrared (NIR) imaging also used halogen photographic lamps, covering the 700–1000 nm band.

For each spectral range, appropriate optical filters were mounted on the camera lens to isolate the desired band: a filter transmitting ultraviolet radiation (300–400 nm), a UV and IR cut-off filter (transmitting 400–700 nm), and infrared filters transmitting radiation above 780 nm. Illumination was provided by halogen photographic lamps in conjunction with a black-light blue ultraviolet source emitting at a peak wavelength of 365 nm.

To ensure reproducibility and radiometric consistency, standardized color and grayscale calibration targets were incorporated during each image acquisition session (FADGI Device Level Target Standard, FADGI ISO 19264 Device Level Target). The images were recorded in RAW format and subsequently processed using dedicated software to perform white-balance correction and exposure adjustment. In the subsequent stage, false-color imaging techniques were applied:

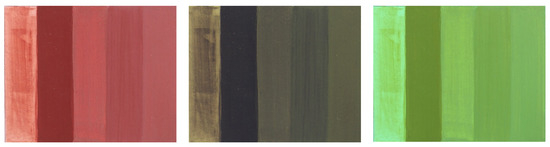

- For the ultraviolet false-color composite (UVFC), high-resolution images obtained in the visible (VIS) and ultraviolet (RUV) domains served as the input. These images were processed using an RGB channel substitution method as follows: the green (G) channel from the VIS image was mapped to the red (R) channel, the blue (B) channel from the VIS image was assigned to the green (G) channel, and the ultraviolet (RUV) image was inserted into the blue (B) channel. This resulted in a synthesized GBUV image (Figure 2, middle).

- Simultaneously, an infrared false-color composite (IRFC) was generated using images acquired in the visible and near-infrared (NIR) ranges. The processing followed a similar RGB substitution scheme: the near-infrared (NIR) image was mapped to the red (R) channel, the original red (R) channel from the VIS image was shifted to the green (G) channel, and the green (G) channel from the VIS image was mapped to the blue (B) channel. This resulted in the construction of the IRRG image (Figure 2, right), enhancing the perceptual contrast of infrared-responsive materials.

Figure 2. Iron Oxide Red pigment sample in (left to right) VIS, UVFC (GBUV image), and IRFC (IRRG image) techniques.
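The channel substitutions used for the UVFC and IRFC composites can be illustrated with a short NumPy sketch. It is only an illustration under stated assumptions: the VIS image is taken to be an 8-bit RGB array, the RUV and NIR images are taken to be single-channel arrays already co-registered with it, and the function names `make_uvfc` and `make_irfc` are hypothetical rather than part of the original processing software.

```python
import numpy as np

def make_uvfc(vis_rgb: np.ndarray, ruv: np.ndarray) -> np.ndarray:
    """GBUV composite: R <- VIS green, G <- VIS blue, B <- UV reflectance."""
    return np.stack([vis_rgb[..., 1], vis_rgb[..., 2], ruv], axis=-1)

def make_irfc(vis_rgb: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """IRRG composite: R <- NIR reflectance, G <- VIS red, B <- VIS green."""
    return np.stack([nir, vis_rgb[..., 0], vis_rgb[..., 1]], axis=-1)

# Tiny synthetic example (assumed co-registered, 8-bit inputs).
vis = np.zeros((2, 2, 3), dtype=np.uint8)
vis[..., 0], vis[..., 1], vis[..., 2] = 10, 20, 30   # R, G, B
ruv = np.full((2, 2), 40, dtype=np.uint8)            # single-channel UV image
nir = np.full((2, 2), 50, dtype=np.uint8)            # single-channel NIR image

uvfc = make_uvfc(vis, ruv)
irfc = make_irfc(vis, nir)
```

In this sketch the VIS blue channel is dropped from the IRFC composite and the VIS red channel is dropped from the UVFC composite, which follows directly from the substitution schemes described above.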

4. Methodology

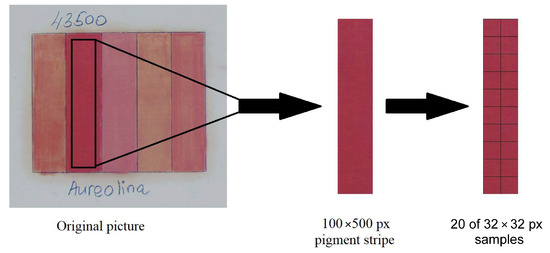

To construct the dataset for classification, each pigment sample was initially cropped into a 100 × 500 pixel stripe. This stripe was subsequently subdivided into 20 smaller image patches, each measuring 32 × 32 pixels, as illustrated in Figure 3. This oversampling strategy enabled the generation of a more comprehensive feature set and contributed to increased robustness during the training phase. In total, the dataset comprised 200 distinct classes, corresponding to 40 unique pigments, each represented by five formulation variants.

Figure 3.

Visualization of the oversampling procedure used for training data preparation. Each pigment sample was cropped into a 100 × 500 pixel stripe, which was then subdivided into 20 image patches measuring 32 × 32 pixels, yielding individual training examples.
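The exact placement of the 20 patches within each stripe is not specified here, so the following sketch assumes one plausible layout: a non-overlapping grid of 2 rows by 10 columns spread evenly over a stripe that is 100 pixels high and 500 pixels wide. The function name `extract_patches` and the grid parameters are hypothetical.

```python
import numpy as np

PATCH = 32  # patch edge length in pixels

def extract_patches(stripe: np.ndarray, rows: int = 2, cols: int = 10) -> np.ndarray:
    """Cut rows * cols patches of PATCH x PATCH pixels, spread evenly over the stripe."""
    h, w = stripe.shape[:2]
    if rows * PATCH > h or cols * PATCH > w:
        raise ValueError("stripe too small for the requested non-overlapping grid")
    # Evenly spaced top-left corners; the first and last patches touch the borders.
    ys = np.linspace(0, h - PATCH, rows).astype(int)
    xs = np.linspace(0, w - PATCH, cols).astype(int)
    return np.stack([stripe[y:y + PATCH, x:x + PATCH] for y in ys for x in xs])

# Synthetic 100 x 500 RGB stripe standing in for a cropped pigment sample.
stripe = (np.arange(100 * 500 * 3) % 256).astype(np.uint8).reshape(100, 500, 3)
patches = extract_patches(stripe)  # array of shape (20, 32, 32, 3)
```

The same patch coordinates would be applied to the VIS, UVFC, and IRFC images of a sample so that each training example describes the same physical area in all three modalities.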

From each image patch, a set of features was extracted from the visible (VIS), ultraviolet false-color (UVFC), and infrared false-color (IRFC) images. For each RGB channel, the mean and standard deviation were computed, resulting in six statistical descriptors per image modality. These values were concatenated into a unified feature vector of 18 dimensions (3 channels × 3 modalities × 2 statistics). Given that all pixel values were in the range of 0 to 255, no additional normalization was applied.
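A minimal sketch of this feature extraction is given below. The ordering of the 18 values (per modality: three channel means followed by three channel standard deviations) and the use of the population standard deviation are assumptions made for illustration, and `patch_features` is a hypothetical name.

```python
import numpy as np

def patch_features(vis: np.ndarray, uvfc: np.ndarray, irfc: np.ndarray) -> np.ndarray:
    """Return an 18-dimensional vector: per modality, mean and std of each RGB channel."""
    feats = []
    for img in (vis, uvfc, irfc):
        pixels = img.reshape(-1, 3).astype(np.float64)  # one row per pixel
        feats.extend(pixels.mean(axis=0))               # mean of R, G, B
        feats.extend(pixels.std(axis=0))                # std of R, G, B (ddof=0)
    return np.asarray(feats)

# Synthetic 32 x 32 patches with known statistics.
vis = np.full((32, 32, 3), 100, dtype=np.uint8)
uvfc = np.zeros((32, 32, 3), dtype=np.uint8)
uvfc[:16] = 200                                         # half 200, half 0
irfc = np.full((32, 32, 3), 7, dtype=np.uint8)

features = patch_features(vis, uvfc, irfc)
```

Casting to floating point before computing the statistics avoids integer overflow and truncation on 8-bit data.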

A comprehensive evaluation of classical machine learning algorithms was conducted using the scikit-learn library [26]. The tested classifiers included Support Vector Machine (SVM), Stochastic Gradient Descent (SGD), Decision Tree, AdaBoost, Bagging, Extra Trees, K-Nearest Neighbors (KNN), Random Forest, Logistic Regression, and Gaussian Naive Bayes (NB). All models were used with their default hyperparameter configurations unless otherwise stated (Table 1).

Table 1.

Default hyperparameters used for each classifier (scikit-learn) [27].

Each classifier was trained on the 18-dimensional feature vectors using five-fold cross-validation with class stratification. The entire procedure was repeated twice, with shuffling of the dataset prior to each iteration to enhance the reliability of the performance estimates. The final evaluation metrics—accuracy, precision, recall, and F1 score—were averaged across all ten folds (2 repetitions × 5 folds), and their respective standard deviations were also computed and reported.
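The evaluation protocol can be sketched with scikit-learn's `RepeatedStratifiedKFold` and `cross_validate`. This is an illustrative reconstruction rather than the original script: macro averaging of precision, recall, and F1 is an assumption, the random seed is arbitrary, and the synthetic data merely stand in for the 18-dimensional pigment features.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_validate

SCORING = ["accuracy", "precision_macro", "recall_macro", "f1_macro"]

def evaluate(clf, X, y, seed=0):
    """Mean and std of each metric over 2 x 5 stratified folds."""
    # A fixed random_state gives every classifier the same partitions.
    cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=2, random_state=seed)
    res = cross_validate(clf, X, y, cv=cv, scoring=SCORING)
    return {m: (res[f"test_{m}"].mean(), res[f"test_{m}"].std()) for m in SCORING}

# Synthetic stand-in data: 4 well-separated classes, 20 samples each, 18 features.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(4), 20)
X = rng.normal(loc=y[:, None] * 50.0, scale=1.0, size=(80, 18))

scores = evaluate(RandomForestClassifier(random_state=0), X, y)
for metric, (mean, std) in scores.items():
    print(f"{metric}: {mean:.4f} ± {std:.4f}")
```

Reusing the same seed for every model keeps the comparison between classifiers free of partition effects.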

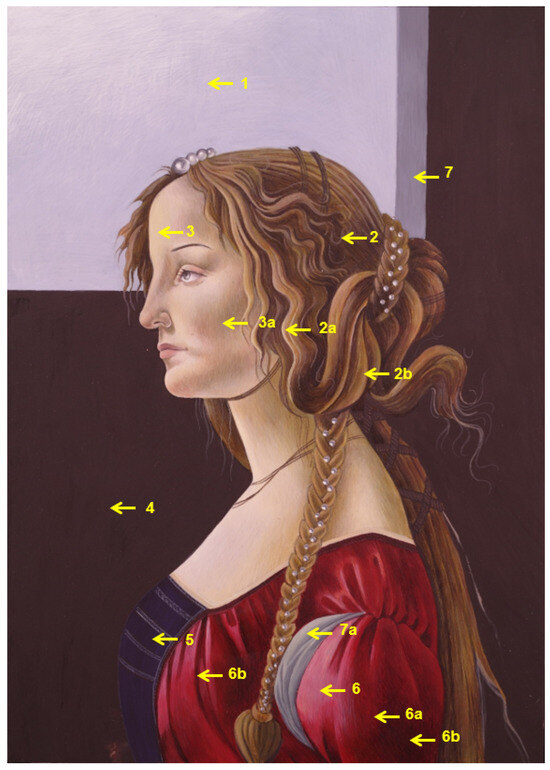

Given the limited size of the laboratory dataset, no separate holdout set was reserved. Instead, final validation was conducted using data from real-world painting-like samples to ensure greater diversity. For this purpose, domain experts annotated regions of interest (ROIs) in four modern reference paintings designed to emulate 14th–15th-century Italian panel painting techniques (Figure 4). These artworks were created with egg tempera using well-documented pigments sourced from Kremer Pigments GmbH & Co. KG (Aichstetten, Germany). As a result, the ground-truth labels for annotated regions were established based on the known materials used during their fabrication; no instrumental analyses (e.g., XRF or Raman spectroscopy) were conducted on these validation samples. The annotated regions were segmented into 32 × 32 pixel patches, consistent with the training setup. The same feature extraction and classification pipeline was applied. The final classifier, selected based on cross-validation performance, was used to predict pigment labels within these ROIs. Evaluation metrics (accuracy, precision, recall, and F1 score) were computed to assess the model’s performance on these external, practice-oriented samples (Figure 5), reflecting its real-world applicability in conservation contexts.

Figure 4.

Example of an annotated painting used for end-to-end classifier evaluation. Regions of interest were labeled by domain experts as follows: 1—sky: titanium white, ultramarine blue; 2—hair (glaze brown): ocher, vegetable black; 2a—highlights: titanium white; 2b—warm glaze: ocher; 3—complexion, highlights: titanium white, ocher, iron oxide red; 3a—shadows: titanium white, ocher, vegetable black; 4—dark brown: vegetable black, iron oxide red; 5—dark blue: ultramarine blue, vegetable black, with a small addition of titanium white; 6—red robe, highlights: titanium white, iron oxide red; 6a—red halftone: madder lake, ocher; 6b—deep shadows: madder lake, ocher, vegetable black; 7—grays: titanium white, vegetable black, ocher; 7a—gray halftone (underpainting): titanium white, vegetable black, ocher; Highlights—titanium white, ocher.

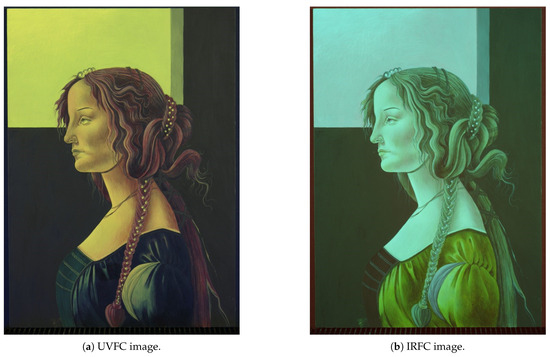

Figure 5.

False-color representations of the painting shown in Figure 4: (a) ultraviolet false color (UVFC), (b) infrared false color (IRFC). Images were acquired using different optical filters, while the illumination setup remained constant.
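The segmentation of annotated regions into 32 × 32 pixel patches described in this section could be implemented as a simple grid tiling. The sketch below assumes that each ROI is available as a boolean mask and that only tiles lying entirely inside the mask are kept. Both are assumptions made for illustration, and `roi_patch_origins` is a hypothetical helper.

```python
import numpy as np

def roi_patch_origins(mask: np.ndarray, size: int = 32) -> list[tuple[int, int]]:
    """Top-left (row, col) of every grid tile that lies entirely inside the ROI mask."""
    h, w = mask.shape
    origins = []
    for y in range(0, h - size + 1, size):
        for x in range(0, w - size + 1, size):
            if mask[y:y + size, x:x + size].all():  # keep fully covered tiles only
                origins.append((y, x))
    return origins

# Synthetic rectangular ROI inside a 100 x 100 image.
mask = np.zeros((100, 100), dtype=bool)
mask[10:80, 0:70] = True

origins = roi_patch_origins(mask)
```

Each returned origin would then be used to crop the VIS, UVFC, and IRFC images at the same location before feature extraction. Discarding partially covered tiles keeps pixels from neighboring regions out of the patch statistics.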

5. Results

To provide a reliable and comparative evaluation of all classifiers, a stratified five-fold cross-validation protocol was adopted. This procedure was repeated twice (i.e., 2 × 5-fold), with random shuffling of the dataset prior to each repetition. Within each fold, 80% of the data were used for training and 20% for validation. The same data partitions were consistently applied across all evaluated models to ensure comparability. As a result, each sample appeared once in a validation fold and four times in a training set during each repetition. Performance metrics, including accuracy, precision, recall, and F1 score, were computed for each fold. The final reported values represent the mean scores across all ten validation subsets, accompanied by standard deviation values to reflect result variability.

The classification results from this cross-validation procedure are presented in Table 2. Among all tested models, the random forest classifier achieved the highest overall performance, with an average accuracy of 99.30% and precision, recall, and F1 scores all exceeding 99.29%. The K-Nearest Neighbors (KNN) algorithm demonstrated similarly strong performance, with an accuracy of 99.26%. The Gaussian naïve Bayes classifier also performed well, reaching 96.60%, which suggests a notable generalization ability despite the assumption of feature independence. The support vector machine (SVM) achieved a lower accuracy of 90.00% but still provided competitive results.

Table 2.

Cross-validation results of selected classification algorithms.

Other classifiers yielded more variable outcomes. The extra trees classifier achieved relatively good performance (94.25%), while logistic regression reached 69.03% and AdaBoost performed substantially worse (54.26%). Several algorithms, including the decision tree (5.83 ± 0.02%), stochastic gradient descent (SGD) (23.82 ± 3.95%), and bagging (44.05 ± 2.96%), exhibited low accuracy, and SGD and bagging additionally showed high variability across folds, indicating limited suitability for the classification task addressed in this study.

In addition to evaluating the classifiers themselves, two labeling strategies were considered. In the unified-class strategy, all five preparation variants of a given pigment (transparent, opaque, and mixtures with lead, zinc, or titanium white) were grouped into a single class label. In contrast, the subclass-based strategy assigned a distinct class to each variant, enabling more detailed categorization. In the real-world validation, both approaches produced the same top-1 accuracy of 25.0%, but the subclass-based model consistently outperformed the unified strategy in top-3 and top-10 accuracy. This suggests that more granular class definitions improve the model’s ability to exclude implausible candidates and rank relevant pigments more effectively.
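The two labeling schemes and the top-N metric can be made concrete with a small sketch. It assumes that subclass labels are integers enumerated pigment by pigment (five consecutive identifiers per pigment), so that the unified label is obtained by integer division, and that the classifier outputs one score per class. The names `to_unified_labels` and `top_k_accuracy` are hypothetical.

```python
import numpy as np

def to_unified_labels(subclass_ids: np.ndarray, n_variants: int = 5) -> np.ndarray:
    """Map subclass ids (0..199) to pigment ids (0..39), assuming pigment-major numbering."""
    return subclass_ids // n_variants

def top_k_accuracy(scores: np.ndarray, y_true: np.ndarray, k: int) -> float:
    """Fraction of samples whose true class is among the k highest-scoring classes."""
    top_k = np.argsort(scores, axis=1)[:, -k:]  # indices of the k largest scores
    return float(np.mean([t in row for t, row in zip(y_true, top_k)]))

# Toy scores for three samples and three classes (rows sum to 1).
scores = np.array([[0.1, 0.2, 0.7],
                   [0.5, 0.3, 0.2],
                   [0.2, 0.5, 0.3]])
y_true = np.array([2, 1, 0])

top1 = top_k_accuracy(scores, y_true, k=1)
top2 = top_k_accuracy(scores, y_true, k=2)
unified = to_unified_labels(np.array([0, 4, 5, 199]))
```

With a scikit-learn classifier, the score matrix would typically come from `predict_proba`, with columns ordered as in the fitted model's `classes_` attribute.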

To further assess the model’s applicability in conservation-oriented contexts, a real-world validation study was conducted using 41 annotated pigment samples selected from four modern reference paintings. These artworks were created to emulate the techniques of 14th- and 15th-century Italian panel painting, using egg tempera as a binder and well-documented pigments obtained from Kremer Pigments GmbH & Co. KG. All pigment compositions were known in advance based on documentation, and no additional instrumental analyses were performed prior to annotation. Ground-truth labels for selected regions of interest (ROIs) were assigned by domain experts based on this prior knowledge.

The validation focused on visually homogeneous regions, where the assumption of a single dominant pigment is more likely to hold. Segmentation was performed using 32 × 32 pixel patches, consistent with the model’s training configuration. The classifier trained on laboratory data was applied to these patches using the same feature extraction and prediction pipeline. Results presented in Table 3 show that, within homogeneous regions, the subclass-based classifier reached top-10 accuracy values of up to 100%, demonstrating the system’s potential to generalize effectively beyond controlled datasets.

Table 3.

Accuracy of pigment classification on real painting samples.

Despite this strong performance, certain limitations were observed. Misclassification occurred in cases where pigments had similar spectral profiles, such as ultramarine blue and Prussian blue, due to overlapping reflectance across the three imaging modalities (visible reflectance, ultraviolet false color, and infrared false color). Additionally, organic lakes and degraded materials, which often exhibit minimal spectral contrast, proved more difficult to identify reliably. These issues highlight the limited spectral discrimination afforded by the current imaging setup.

This scenario reflects a trade-off between the simplicity of the imaging protocol and the level of diagnostic precision. While the use of three imaging modalities offers a low-complexity and accessible workflow, it may be insufficient for distinguishing between pigments with overlapping visual or spectral characteristics. Future improvements could include the incorporation of multi-label classification frameworks or spectral unmixing techniques to enhance performance in more complex or ambiguous cases.

These findings demonstrate that the classification framework, particularly when using ensemble methods and subclass-based labeling, achieves high accuracy and generalization capacity across both experimental and real-world data, supporting its applicability in conservation-focused pigment analysis.

6. Discussion

The proposed framework offers a lightweight, interpretable, and cost-effective solution for non-invasive pigment classification in cultural heritage contexts. Its reliance on accessible imaging hardware and compact statistical features ensures operational efficiency and rapid pigment identification, making it practical for institutions without access to advanced spectroscopic systems.

The high classification performance achieved by ensemble methods, particularly the random forest classifier (99.30% accuracy), confirms their suitability for modeling the complex, non-linearly separable feature space of pigment data. In contrast, simpler models such as linear classifiers and shallow decision trees struggled to generalize, often exhibiting underfitting or overfitting depending on the class distribution and feature granularity. This highlights the benefit of using more flexible learning algorithms in small, high-dimensional pigment datasets.

Furthermore, the subclass-based classification strategy, which differentiates between pigment variants (e.g., transparent, opaque, or mixed with white pigments), consistently outperformed the unified-class approach in top-N accuracy metrics. This indicates that capturing preparation-specific distinctions improves the model’s ability to generate more precise, ranked lists of plausible pigment candidates, which is particularly valuable in conservation workflows.

Despite these strengths, several limitations must be acknowledged. A primary challenge is the difficulty of distinguishing pigments with highly similar spectral profiles using only three broad spectral bands. For example, misclassifications were observed between ultramarine blue and Prussian blue, particularly in the near-infrared and ultraviolet bands. The model also showed reduced sensitivity in detecting organic lakes and degraded materials, which tend to exhibit weak or ambiguous reflectographic signals. While the laboratory dataset included samples prepared using various traditional binding media, systematic testing of artificially aged binders and varnishes was not conducted as part of this pilot study. The influence of such aging processes on spectral characteristics remains a complex and open research question.

An additional limitation is the framework’s assumption of a single dominant pigment per patch. This simplification, while suitable for visually homogeneous regions, does not account for the presence of pigment mixtures or stratified layers, which are common in historical artworks. More realistic modeling of such scenarios will require the integration of multi-label classification approaches or spectral unmixing techniques capable of resolving complex pigment combinations.

Compared to hyperspectral imaging systems, the framework offers a significantly more accessible and resource-efficient alternative. While deep learning methods provide end-to-end solutions in related domains, the classical machine learning approach employed here prioritizes interpretability, low computational overhead, and compatibility with limited training data. This makes the framework well-suited for deployment in smaller research environments or field-based conservation projects. Overall, the results support the practical viability of the approach and establish a foundation for future methodological extensions.

7. Conclusions

This study presented a supervised machine learning framework for non-invasive pigment classification based on image data acquired under three spectral modalities: visible-light reflectography (VIS), ultraviolet false-color imaging (UVFC), and infrared false-color imaging (IRFC). Compact statistical descriptors extracted from each modality enabled efficient feature representation, supporting the application of classical classifiers. Among the tested models, the random forest classifier achieved the highest performance in cross-validation (99.30 %) and demonstrated strong generalization during external validation on annotated real-world painting samples.
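To make the idea of compact statistical descriptors concrete, the sketch below builds one feature vector from co-registered VIS, UVFC, and IRFC patches. It assumes per-channel mean and standard deviation as the descriptors and uses synthetic 32 × 32 patches; the function names and the choice of statistics are hypothetical and may differ from the descriptors actually used in this study.

```python
import numpy as np

def channel_stats(patch):
    """Per-channel mean and standard deviation of an (H, W, 3) RGB patch."""
    pixels = patch.reshape(-1, 3).astype(np.float64)
    return np.concatenate([pixels.mean(axis=0), pixels.std(axis=0)])

def patch_features(vis, uvfc, irfc):
    """Concatenate the descriptors of the three modality patches (assumed order)."""
    return np.concatenate([channel_stats(p) for p in (vis, uvfc, irfc)])

# Synthetic 32 x 32 RGB patches standing in for real image data.
rng = np.random.default_rng(0)
vis, uvfc, irfc = (
    rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8) for _ in range(3)
)

features = patch_features(vis, uvfc, irfc)
print(features.shape)  # (18,) = 3 modalities x (3 means + 3 standard deviations)
```

A vector of this size is small enough for classical classifiers to train on a few thousand patches, which is consistent with the lightweight design described here.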

The comparative analysis of classification models revealed that simpler methods, such as stochastic gradient descent, logistic regression, and decision trees, were insufficient for modeling the nonlinear and high-dimensional structure of the pigment dataset. These models tended to either underfit or overfit, resulting in poor predictive performance. In contrast, ensemble learning techniques (random forest and extra trees) and instance-based methods (KNN) achieved significantly better results, confirming their suitability for learning flexible decision boundaries in complex feature spaces.
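A comparison of this kind can be sketched with scikit-learn estimators evaluated under cross-validation. The example below is only an outline: it uses synthetic data from `make_classification` in place of the pigment features, five folds, and a fixed `random_state`, all of which are assumptions made for reproducibility rather than settings reported in this study.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the pigment feature matrix (not the study's data).
X, y = make_classification(
    n_samples=300, n_features=18, n_informative=10, n_classes=5, random_state=0
)

# Estimators left at their default hyperparameters, apart from the fixed seed.
models = {
    "decision tree": DecisionTreeClassifier(random_state=0),
    "random forest": RandomForestClassifier(random_state=0),
    "extra trees": ExtraTreesClassifier(random_state=0),
    "KNN": KNeighborsClassifier(),
}

# Mean accuracy over five stratified folds for each model.
mean_accuracy = {
    name: cross_val_score(model, X, y, cv=5).mean() for name, model in models.items()
}

for name, score in mean_accuracy.items():
    print(f"{name}: {score:.3f}")
```

Scores obtained on synthetic data say nothing about pigment classification; the sketch only shows how the models could be placed on an equal footing before comparing them.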

Two classification strategies were examined: a unified-class approach that groups pigment variants into 40 general categories and a subclass-based approach that assigns a distinct label to each pigment formulation, such as transparent, opaque, or mixed with white pigments. While the two approaches achieved identical top-1 accuracy (25.0%) during real-world validation, the subclass-based model consistently outperformed the unified model in top-3 and top-10 metrics. This indicates that subclassing enables the model to better capture subtle differences in spectral and visual properties, which improves its ability to filter out implausible candidates. Although the unified model simplifies the classification scheme, it sacrifices specificity, which is critical for practical applications in heritage science.

An error analysis of the predictions highlighted specific limitations. Misclassifications were most prominent among pigment pairs with similar spectral characteristics, particularly ultramarine blue and Prussian blue, whose profiles overlap in the near-infrared and ultraviolet bands. Furthermore, the method showed limited effectiveness in detecting organic lakes and degraded materials, which often lack strong spectral signatures across the available imaging modalities. While the dataset included samples prepared with different binding media, no systematic tests were performed on artificially aged binders or varnishes. These factors may influence spectral behavior and should be considered in future investigations.

Another important limitation is the current assumption of a single dominant pigment per image patch. Although this assumption holds in homogeneous regions, it does not reflect the common practice of pigment mixing and layering in historical artworks. While the subclass-based model achieved top-10 accuracy of up to 100% in visually uniform areas, future improvements will require multi-label classification frameworks or spectral unmixing methods capable of resolving complex pigment compositions.

Despite these constraints, the results demonstrate that a lightweight and cost-effective imaging setup—based on a high-resolution digital camera and selected optical filters—can provide meaningful pigment identification capabilities. This system offers a scalable, non-destructive, and interpretable alternative to advanced hyperspectral imaging, making it suitable for smaller laboratories and institutions lacking specialized spectroscopic instrumentation.

Future research will focus on expanding the training dataset to include a broader range of pigment formulations, artificial aging effects, and layered samples. Additionally, deep learning architectures, synthetic data generation, and advanced unmixing strategies will be explored to improve model robustness and diagnostic precision. Ultimately, this framework contributes to the development of accessible artificial intelligence tools for cultural heritage diagnostics, bridging the gap between complex analytical instrumentation and practical conservation workflows.

Author Contributions

Conceptualization, B.S. and J.R.; methodology, M.P.M. and B.S.; validation, M.M. and R.B.; investigation, M.P.M. and S.G.; data curation, M.P.M. and S.G.; writing—original draft preparation, M.P.M. and B.S.; writing—review and editing, M.P.M., S.G., M.M., R.B., J.R. and B.S.; visualization, S.G.; project administration, B.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Wrocław University of Science and Technology through a basic research grant (No. 82 111 04 160).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

B.S. and M.P.M. gratefully acknowledge Ivan Danylenko for his constructive feedback and valuable assistance. The authors would like to thank Wrocław University of Science and Technology for financial support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Derbyshire, A.; Withnall, R. Non-Destructive Pigment Analysis Using Raman Microscopy. V&A Conserv. J. 1999. Available online: http://www.vam.ac.uk/content/journals/conservation-journal/issue-30/non-destructive-pigment-analysis-using-raman-microscopy/ (accessed on 25 June 2025).

- Cucci, C.; Delaney, J.K.; Picollo, M. Reflectance Hyperspectral Imaging for Investigation of Works of Art: Old Master Paintings and Illuminated Manuscripts. Acc. Chem. Res. 2016, 49, 2070–2079.

- Cosentino, A. Identification of Pigments by Multispectral Imaging: A Flowchart Method. Herit. Sci. 2014, 2, 8.

- Berns, R.S.; Imai, F.H. The application of Kubelka-Munk theory for the digital imaging of paintings. In Proceedings of the Ninth Color Imaging Conference: Color Science and Engineering Systems, Technologies, Applications, Scottsdale, AZ, USA, 6–9 November 2001; Society for Imaging Science and Technology: Cambridge, MA, USA, 2001; pp. 178–182.

- Kleynhans, T.; Messinger, D.W.; Easton, R.L., Jr.; Delaney, J.K. Low-Cost Multispectral System Design for Pigment Analysis in Works of Art. Sensors 2021, 21, 5138.

- Karapanagiotis, I.; Amanatiadis, S.A.; Apostolidis, G.; Karagiannis, G. Characterization of Color Degradation of Byzantine Pigments Due to Artificial Aging. Minerals 2021, 11, 782.

- Nevin, A.; Cather, S.; Burnstock, A.; Anglos, D. The influence of visible light and inorganic pigments on fluorescence excitation-emission spectra of egg-, casein- and collagen-based painting media. Appl. Phys. A 2008, 92, 69–76.

- Delaney, J.K.; Thoury, M.; Zeibel, J.G.; Ricciardi, P.; Morales, K.M.; Dooley, K.A. Visible and Infrared Imaging Spectroscopy of Paintings and Improved Reflectography. Herit. Sci. 2016, 4, 6.

- Wei, C.A.; Li, J.; Liu, S. Applications of Visible Spectral Imaging Technology for Pigment Identification of Colored Relics. Herit. Sci. 2024, 12, 321.

- Liu, S.; Wei, C.A.; Li, M.; Cui, X.; Li, J. Adaptive Superpixel Segmentation and Pigment Identification of Colored Relics Based on Visible Spectral Images. Herit. Sci. 2024, 12, 350.

- Liu, L.; Miteva, T.; Delnevo, G.; Mirri, S.; Walter, P.; de Viguerie, L.; Pouyet, E. Neural Networks for Hyperspectral Imaging of Historical Paintings: A Practical Review. Sensors 2023, 23, 2419.

- Valero, E.M.; Martínez-Domingo, M.A.; López-Baldomero, A.B.; López-Montes, A.; Abad-Muñoz, D.; Vílchez-Quero, J.L. Unmixing and Pigment Identification Using Visible and Short-Wavelength Infrared: Reflectance vs Logarithm Reflectance Hyperspaces. J. Cult. Herit. 2023, 64, 290–300.

- Pouyet, E.; Miteva, T.; Rohani, N.; de Viguerie, L. Artificial Intelligence for Pigment Classification Task in the Short-Wave Infrared Range. Sensors 2021, 21, 6150.

- Flachot, A.; Akbarinia, A.; Schütt, H.H.; Fleming, R.W.; Wichmann, F.A.; Gegenfurtner, K.R. Deep Neural Models for Color Classification and Color Constancy. J. Vis. 2022, 22, 17.

- Kogou, S.; Shahtahmassebi, G.; Lucian, A.; Liang, H.; Shui, B.; Zhang, W.; Su, B.; van Schaik, S. From Remote Sensing and Machine Learning to the History of the Silk Road: Large Scale Material Identification on Wall Paintings. Sci. Rep. 2020, 10, 19312.

- Jones, C.; Daly, N.S.; Higgitt, C.; Rodrigues, M.R.D. Neural Network-Based Classification of X-ray Fluorescence Spectra of Artists’ Pigments: An Approach Leveraging a Synthetic Dataset Created Using the Fundamental Parameters Method. Herit. Sci. 2022, 10, 88.

- Ugail, H.; Stork, D.G.; Edwards, H.; Seward, S.C.; Brooke, C. Deep Transfer Learning for Visual Analysis and Attribution of Paintings by Raphael. Herit. Sci. 2023, 11, 268.

- Kleynhans, T.; Schmidt Patterson, C.M.; Dooley, K.A.; Messinger, D.W.; Delaney, J.K. An Alternative Approach to Mapping Pigments in Paintings with Hyperspectral Reflectance Image Cubes Using Artificial Intelligence. Herit. Sci. 2020, 8, 84.

- Radpour, R.; Kleynhans, T.; Facini, M.; Pozzi, F.; Westerby, M.; Delaney, J.K. Advances in Automated Pigment Mapping for 15th-Century Manuscript Illuminations Using 1-D Convolutional Neural Networks and Hyperspectral Reflectance Image Cubes. Appl. Sci. 2024, 14, 6857.

- Taufique, A.M.N.; Messinger, D.W. Hyperspectral Pigment Analysis of Cultural Heritage Artifacts Using the Opaque Form of Kubelka-Munk Theory. arXiv 2021, arXiv:2104.04884.

- Wang, Y.; Lyu, S.; Ning, B.; Yan, D.; Hou, M.; Sun, P.; Li, L. Spectral Unmixing of Pigments on Surface of Painted Artefacts Considering Spectral Variability. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, X-4-2024, 403–409.

- Rohani, N.; Pouyet, E.; Walton, M.; Cossairt, O.; Katsaggelos, A.K. Pigment Unmixing of Hyperspectral Images of Paintings Using Deep Neural Networks. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3217–3221.

- Haidar, S.; Oramas, J. Training Methods of Multi-label Prediction Classifiers for Hyperspectral Remote Sensing Images. arXiv 2023.

- Xu, B.J.; Wu, Y.; Hao, P.; Vermeulen, M.; McGeachy, A.; Smith, K.; Eremin, K.; Rayner, G.; Verri, G.; Willomitzer, F.; et al. Can deep learning assist automatic identification of layered pigments from XRF data? J. Anal. At. Spectrom. 2022, 37, 2672–2682.

- Ricoh Imaging. PENTAX 645Z IR Product Page. Available online: https://www.ricoh-imaging.co.jp/english/products/645z-ir/ (accessed on 25 June 2025).

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- scikit-learn Developers. scikit-learn API Reference: Classifier Estimators. scikit-learn. 2025. Default hyperparameters for SVC, SGDClassifier, DecisionTreeClassifier, RandomForestClassifier, ExtraTreesClassifier, AdaBoostClassifier, BaggingClassifier, KNeighborsClassifier, LogisticRegression, GaussianNB (Version 1.7.0). Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html (accessed on 25 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).