Pointer Meter Reading Recognition Based on YOLOv11-OBB Rotated Object Detection

Abstract

1. Introduction

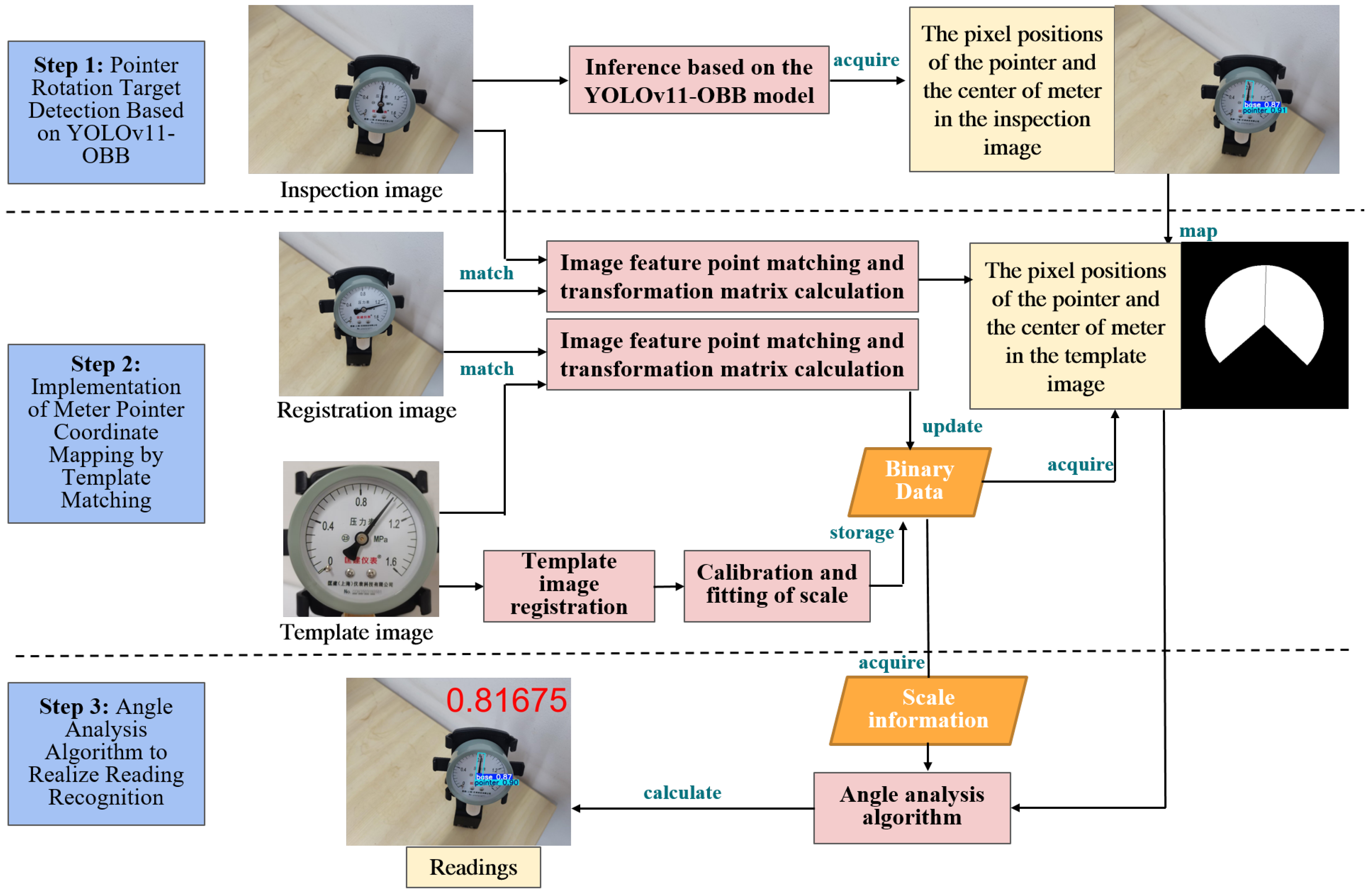

- Rotated Object Detection Network Design: This paper presents YOLOv11-OBB, a rotated object detection network built on the YOLOv11 framework. Using bounding boxes defined through a rotation matrix, the network accurately localizes both the position and the orientation of the pointer. A long-edge representation combined with circular smooth labeling (CSL) is introduced to discriminate pointer directions robustly, while the dial center is detected within the same unified learning framework.

- Adaptive Model Library Construction and Matching: A pointer meter model library is used to establish the transformation between inspection images and template images. For each meter, key variables are identified and stored after standard model registration and calibration fitting. The transformation matrix is then computed with a feature point matching pipeline that combines brute-force matching with the RANSAC algorithm, ensuring precise alignment between inspection and template images. This approach improves the system's adaptability across different instruments and supports meter detection in complex industrial environments.

- Experimental Verification and Performance Analysis: On the pointer instrument dataset, the proposed method achieved a mean Average Precision (mAP) of 99.5%, an average relative reading error of 0.4157%, and a maximum relative error of 1.1464%. Its robustness was further validated on low-quality images affected by blur, darkness, overexposure, and tilt, for which the readings still met industrial standards. These results indicate that the method is an adaptable and reliable automated reading solution for pointer instruments in intelligent industrial applications, with substantial practical engineering value.
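The long-edge representation and circular smooth labeling named in the first contribution can be illustrated with a minimal sketch. It assumes boxes are given as (w, h, θ) with θ in degrees, rewrites them so that w is the long edge and θ lies in [0, 180), and encodes θ as a CSL classification target: a truncated Gaussian window over angle bins, with distances measured around the circle so that 179° and 0° count as neighbours. The function names, the 1° bin width, and the window radius of 6 bins are illustrative assumptions, not settings taken from the paper.

```python
import numpy as np


def long_edge_box(w, h, theta_deg):
    """Hypothetical helper: make w the long edge and wrap theta into [0, 180).

    A rectangle is unchanged by a 180-degree rotation, so the long-edge
    angle only needs a 180-degree period.
    """
    if h > w:
        w, h = h, w
        theta_deg += 90.0  # the long edge is perpendicular to the old w edge
    return w, h, theta_deg % 180.0


def circular_smooth_label(theta_deg, num_bins=180, radius=6):
    """Encode an angle as a CSL vector: a Gaussian window around the target bin."""
    bin_width = 180.0 / num_bins
    target = int(round((theta_deg % 180.0) / bin_width)) % num_bins
    d = np.abs(np.arange(num_bins) - target)
    d = np.minimum(d, num_bins - d)  # circular distance in bins
    label = np.exp(-(d.astype(float) ** 2) / (2.0 * radius**2))
    label[d > radius] = 0.0  # window of finite radius
    return label


def decode_angle(label, num_bins=180):
    """Recover the angle from a (predicted) CSL vector via its peak bin."""
    return float(np.argmax(label)) * 180.0 / num_bins


w, h, theta = long_edge_box(10.0, 40.0, 30.0)
print(w, h, theta)  # 40.0 10.0 120.0
csl = circular_smooth_label(179.6)
print(decode_angle(csl), csl[179] > 0)  # 0.0 True
```

Treating the angle as a circular classification target rather than a regressed value is what avoids the large loss jump at the 0°/180° boundary.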

2. Previous Works

2.1. Pointer Detection

2.2. Reading Recognition

3. Materials and Methods

3.1. Pointer Rotation Object Detection Based on YOLOv11-OBB

3.1.1. YOLOv11-OBB Object Detection Model

3.1.2. Pointer Rotation Object Detection

3.2. Implementation of Meter Pointer Coordinate Mapping by Template Matching

3.2.1. Standard Model Construction of Pointer Meter

- Interactive recording of rectangular frame coordinates: Mouse interaction is tracked in real time, and each rectangular frame drawn over a scale mark is automatically converted into the precise center point of that mark, as illustrated in Figure 4a. This makes scale positioning faster and more accurate, and it supplies the calibration points required by the subsequent calculations and analyses.

- Circle fitting and angle calculation: A circle is fitted by the least squares method to the coordinates of all calibration points, yielding its center coordinates and radius, as illustrated in Figure 4b. This step is crucial because the fitted center defines the pointer's center of rotation. The offset and angle of each rectangular frame's center point relative to the circle's center are then computed, and each angle is checked against the specified range.

- Pointer area determination: The radius obtained from circle fitting is used as the outer radius of the pointer area. Combined with a predefined inner radius parameter, it bounds an annular region that precisely delimits the pointer area, as illustrated in Figure 4c.
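The three calibration steps above can be sketched as follows. This is a minimal illustration under stated assumptions: frames are stored as (x1, y1, x2, y2) pixel corners, the least-squares fit is the algebraic (Kasa) variant that solves x² + y² + Dx + Ey + F = 0 linearly, angles are measured in image coordinates (y pointing down, so they grow clockwise), and the inner radius and angle limits are hypothetical parameters. The paper may use a different least-squares formulation.

```python
import math

import numpy as np


def rect_centers(rects):
    """Convert (x1, y1, x2, y2) frames drawn over scale marks to center points."""
    r = np.asarray(rects, dtype=float)
    return np.column_stack([(r[:, 0] + r[:, 2]) / 2.0, (r[:, 1] + r[:, 3]) / 2.0])


def fit_circle(points):
    """Algebraic (Kasa) least-squares circle fit.

    Solves x^2 + y^2 + D*x + E*y + F = 0 for D, E, F, then recovers the
    center (-D/2, -E/2) and the radius sqrt(cx^2 + cy^2 - F).
    """
    p = np.asarray(points, dtype=float)
    x, y = p[:, 0], p[:, 1]
    a = np.column_stack([x, y, np.ones_like(x)])
    b = -(x**2 + y**2)
    (d, e, f), *_ = np.linalg.lstsq(a, b, rcond=None)
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx**2 + cy**2 - f)


def point_angles(center, points):
    """Angle in degrees, [0, 360), of each point around the center (clockwise in images)."""
    p = np.asarray(points, dtype=float)
    return np.degrees(np.arctan2(p[:, 1] - center[1], p[:, 0] - center[0])) % 360.0


def angles_within(angles, lo, hi):
    """Hypothetical range check: every calibration angle lies in [lo, hi]."""
    a = np.asarray(angles)
    return bool(np.all((a >= lo) & (a <= hi)))


def in_pointer_area(center, outer_r, inner_r, point):
    """True if a point lies in the annulus inner_r <= distance <= outer_r."""
    dist = math.hypot(point[0] - center[0], point[1] - center[1])
    return inner_r <= dist <= outer_r


# Synthetic dial: marks on a circle of radius 80 around (120, 95).
pts = [(120 + 80 * math.cos(math.radians(t)), 95 + 80 * math.sin(math.radians(t)))
       for t in (135, 180, 225, 270, 315)]
rects = [(x - 5, y - 4, x + 5, y + 4) for x, y in pts]
cx, cy, r = fit_circle(rect_centers(rects))
print(round(cx, 3), round(cy, 3), round(r, 3))  # 120.0 95.0 80.0
```

The algebraic fit is linear and needs no initial guess, which suits a one-off calibration; a geometric (orthogonal-distance) fit is usually more accurate when the marks cover only a short arc.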

3.2.2. Template Images and Registration Images Matching

3.2.3. Matching of Inspection Image and Template Image
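The introduction describes aligning inspection and template images by computing a transformation matrix from matched feature points with brute-force matching and RANSAC. A minimal NumPy sketch of the RANSAC step follows. It assumes that brute-force matching has already produced point correspondences, that the transformation is a planar homography, and it uses an unnormalized direct linear transform (DLT), a 3-pixel inlier threshold, and 500 iterations as illustrative choices; all function names are hypothetical.

```python
import numpy as np


def homography_dlt(src, dst):
    """Direct linear transform: 3x3 H with dst ~ H @ src from >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)  # singular vector of the smallest singular value
    return h / h[2, 2]


def project(h, pts):
    """Map N x 2 points through homography h, with perspective division."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]


def ransac_homography(src, dst, thresh=3.0, iters=500, seed=0):
    """Fit random 4-point samples and keep the model with the most inliers."""
    src, dst = np.asarray(src, dtype=float), np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=4, replace=False)
        with np.errstate(divide="ignore", invalid="ignore"):  # degenerate samples
            h = homography_dlt(src[idx], dst[idx])
            err = np.linalg.norm(project(h, src) - dst, axis=1)
        inliers = err < thresh
        if inliers.sum() > best.sum():
            best = inliers
    # Refit on every inlier of the best model for the final estimate.
    return homography_dlt(src[best], dst[best]), best


# Synthetic matches: 40 template points, the first 8 deliberately mismatched.
rng = np.random.default_rng(1)
h_true = np.array([[1.02, 0.05, 12.0], [-0.03, 0.98, -7.0], [1e-4, 2e-4, 1.0]])
template_pts = rng.uniform(0, 200, size=(40, 2))
inspection_pts = project(h_true, template_pts)
inspection_pts[:8] += rng.uniform(30, 60, size=(8, 2))
h_est, inliers = ransac_homography(template_pts, inspection_pts)
print(inliers.sum())  # 32
```

In an OpenCV pipeline the same step is usually a call to `cv2.findHomography(src, dst, cv2.RANSAC, 3.0)` after descriptor matching with `cv2.BFMatcher`; the resulting matrix can then map points between the two images with `cv2.perspectiveTransform`.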

3.3. Angle Analysis Algorithm to Realize Reading Recognition
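One common form of the angle method, assumed here rather than taken from the paper, converts the pointer-tip angle around the fitted dial center into a reading by piecewise-linear interpolation between the calibrated scale marks of Section 3.2.1. The sketch assumes the pointer-tip and center coordinates are already known, that angles are measured clockwise in image coordinates from the zero mark, and uses a hypothetical 0 to 1.6 gauge with a 270° sweep for the usage example.

```python
import math
from bisect import bisect_right


def clockwise_angle(center, point, zero_deg=0.0):
    """Angle of point around center in degrees, in [0, 360).

    Image y grows downward, so atan2(dy, dx) increases clockwise; zero_deg is
    the raw angle of the zero mark, which is mapped to 0.
    """
    dx, dy = point[0] - center[0], point[1] - center[1]
    return (math.degrees(math.atan2(dy, dx)) - zero_deg) % 360.0


def angle_to_reading(angle, mark_angles, mark_values):
    """Piecewise-linear interpolation between calibrated scale marks.

    mark_angles must be ascending and measured from the same zero as angle.
    Angles outside the scale (including the dead zone past full scale) are
    clamped to the end values in this sketch.
    """
    if angle <= mark_angles[0]:
        return mark_values[0]
    if angle >= mark_angles[-1]:
        return mark_values[-1]
    i = bisect_right(mark_angles, angle) - 1
    a0, a1 = mark_angles[i], mark_angles[i + 1]
    v0, v1 = mark_values[i], mark_values[i + 1]
    return v0 + (angle - a0) / (a1 - a0) * (v1 - v0)


# Hypothetical 0-1.6 gauge: zero mark at 135 degrees (lower left), 270-degree sweep.
marks = [0.0, 67.5, 135.0, 202.5, 270.0]
values = [0.0, 0.4, 0.8, 1.2, 1.6]
tip_angle = clockwise_angle(center=(100, 100), point=(100, 40), zero_deg=135.0)
print(angle_to_reading(tip_angle, marks, values))  # 0.8 (pointer straight up)
```

Interpolating between neighbouring marks, rather than across the whole dial, tolerates scales that are not perfectly uniform.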

4. Experimental Results

4.1. Datasets and Experimental Setting

4.1.1. Datasets Configuration

4.1.2. Environment Configuration

4.2. Model Comparison Experiment

4.2.1. Model Performance Metrics

4.2.2. Model Comparison Result

4.3. Pointer Meter Reading Recognition Experiment

4.3.1. Pointer Meter Reading Evaluation Metrics

4.3.2. Experimental Results and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Model | Class | Precision (%) | Recall (%) | mAP@50 (%) | mAP@75 (%) | mAP@50:95 (%) | Weight Size (MB) | Parameters (MB) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | YOLOv8n | all | 97.7 | 99.6 | 98.8 | 96.2 | 83.7 | 6.3 | 11.4 | 8.1 |
| | | base | 95.5 | 99.1 | 98.1 | 93.1 | 76.2 | | | |
| | | pointer | 99.9 | 100 | 99.5 | 99.5 | 91.8 | | | |
| 2 | YOLOv9m | all | 98 | 99.7 | 98.3 | 95.6 | 85.3 | 40.8 | 20.0 | 76.5 |
| | | base | 95.9 | 99.4 | 97.0 | 92 | 78 | | | |
| | | pointer | 99.9 | 100 | 99.5 | 99.2 | 92.7 | | | |
| 3 | YOLOv10n | all | 97.5 | 99.7 | 98.5 | 95.7 | 83.2 | 5.8 | 10.3 | 8.2 |
| | | base | 95.3 | 98.9 | 97.5 | 92.1 | 75.7 | | | |
| | | pointer | 99.9 | 100 | 99.5 | 99.4 | 92.2 | | | |
| 4 | YOLOv11n | all | 97.7 | 99.7 | 98.8 | 96 | 83.4 | 5.5 | 10.3 | 6.3 |
| | | base | 96.2 | 99.3 | 98.2 | 92.5 | 76 | | | |
| | | pointer | 100 | 100 | 99.4 | 99.4 | 92.5 | | | |
| 5 | YOLOv11s | all | 97.8 | 99.6 | 98.7 | 96.5 | 85.0 | 19.2 | 9.41 | 21.3 |
| | | base | 95.8 | 99.2 | 97.9 | 93.6 | 77.4 | | | |
| | | pointer | 99.9 | 100 | 99.5 | 99.4 | 92.6 | | | |
| 6 | YOLOv11-OBB | all | 98.1 | 99.7 | 99.1 | 98.7 | 88.7 | 6.1 | 10.6 | 6.6 |
| | | base | 96.2 | 99.4 | 98.7 | 98.1 | 88.1 | | | |
| | | pointer | 100 | 100 | 99.5 | 99.4 | 89.3 | | | |

| Model | Preprocess (ms) | Inference (ms) | Postprocess (ms) | Total (ms) | FPS |
|---|---|---|---|---|---|
| YOLOv8n | 0.5 | 0.8 | 0.8 | 2.1 | 476 |
| YOLOv9m | 0.5 | 3.9 | 0.8 | 5.2 | 192 |
| YOLOv10n | 0.5 | 1.9 | 0.2 | 1.7 | 588 |
| YOLOv11n | 0.5 | 0.9 | 0.8 | 2.2 | 454 |
| YOLOv11s | 0.5 | 1.6 | 0.8 | 2.9 | 345 |
| YOLOv11-OBB | 0.5 | 1.6 | 2.4 | 4.5 | 222 |

| No. | v | v′ | L | ε | No. | v | v′ | L | ε |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.815 | 0.81675 | 1.6 | 0.1094% | 23 | 9.45 | 9.49973 | 10 | 0.4973% |
| 2 | 0.475 | 0.47422 | 1.6 | −0.049% | 24 | 9.4 | 9.48564 | 10 | 0.8564% |
| 3 | 0.475 | 0.47422 | 1.6 | −0.049% | 25 | 9.3 | 9.35447 | 10 | 0.5447% |
| 4 | 0.1475 | 0.14717 | 0.16 | −0.206% | 26 | 9.5 | 9.57793 | 10 | 0.7793% |
| 5 | 0.1475 | 0.14684 | 0.16 | −0.412% | 27 | 9.35 | 9.38994 | 10 | 0.3994% |
| 6 | 0.1475 | 0.14714 | 0.16 | −0.225% | 28 | 8.4 | 8.44358 | 10 | 0.4358% |
| 7 | 0.1475 | 0.14821 | 0.16 | 0.4438% | 29 | 8.4 | 8.41409 | 10 | 0.1409% |
| 8 | 0.1475 | 0.14739 | 0.16 | −0.069% | 30 | 8.45 | 8.55788 | 10 | 1.0788% |
| 9 | 0.1325 | 0.13271 | 0.16 | 0.1312% | 31 | 8.25 | 8.36464 | 10 | 1.1464% |
| 10 | 0.1325 | 0.13224 | 0.16 | −0.163% | 32 | 8.25 | 8.34174 | 10 | 0.9174% |
| 11 | 2.4 | 2.42996 | 10 | 0.2996% | 33 | 5.3 | 5.26465 | 10 | −0.3535% |
| 12 | 0.1325 | 0.13141 | 0.16 | −0.681% | 34 | 5.45 | 5.40727 | 10 | −0.4273% |
| 13 | 0.086 | 0.08664 | 0.16 | 0.4% | 35 | 5.1 | 5.08627 | 10 | −0.1373% |
| 14 | 0.086 | 0.08669 | 0.16 | 0.4313% | 36 | 2.35 | 2.30057 | 10 | −0.4943% |
| 15 | 0.086 | 0.08637 | 0.16 | 0.2313% | 37 | 2.4 | 2.35854 | 10 | −0.4146% |
| 16 | 0.084 | 0.08392 | 0.16 | −0.05% | 38 | 2.4 | 2.32309 | 10 | −0.7691% |
| 17 | 0.046 | 0.04559 | 0.16 | −0.256% | 39 | 2.3 | 2.31063 | 10 | 0.1063% |
| 18 | 0.044 | 0.04286 | 0.16 | −0.712% | 40 | 2.5 | 2.46894 | 10 | −0.3106% |
| 19 | 0.045 | 0.04395 | 0.16 | −0.656% | 41 | 2.4 | 2.35407 | 10 | −0.4593% |
| 20 | 0.0425 | 0.04183 | 0.16 | −0.419% | 42 | 2.3 | 2.30689 | 10 | 0.0689% |
| 21 | 0.045 | 0.04372 | 0.16 | −0.8% | 43 | 2.5 | 2.47312 | 10 | −0.2688% |
| 22 | 0.0445 | 0.04339 | 0.16 | −0.694% | 44 | 2.45 | 2.42295 | 10 | −0.2704% |
| Avg | 0.41568% | | | | | | | | |
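The ε column is consistent with the relative error ε = (v′ − v)/L × 100%, reading v as the reference value, v′ as the recognized value, and L as the meter's full-scale range; these column meanings are inferred from the numbers, since the table carries no legend. A short check against three rows of the table:

```python
def relative_error_pct(v, v_prime, full_range):
    """Reading error as a percentage of the range L: (v' - v) / L * 100."""
    return (v_prime - v) / full_range * 100.0


# Rows 1, 13 and 31 of the table above: (v, v', L, tabulated epsilon in %).
rows = [(0.815, 0.81675, 1.6, 0.1094), (0.086, 0.08664, 0.16, 0.4), (8.25, 8.36464, 10, 1.1464)]
for v, v_prime, span, eps in rows:
    print(f"computed {relative_error_pct(v, v_prime, span):.4f}% vs tabulated {eps}%")
```

Normalizing by the full-scale range rather than by the true value keeps near-zero readings (rows 17 to 22) from producing inflated errors.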

| Group | No. | v | v′ | v′ − v | L | ε |
|---|---|---|---|---|---|---|
| Original Image | 1 | 0.81 | 0.81675 | 0.00675 | 1.6 | 0.422% |
| | 2 | 0.086 | 0.08637 | 0.00037 | 0.16 | 0.231% |
| | 3 | 5.2 | 5.16308 | −0.03692 | 10 | −0.369% |
| Blurred Image | 1 | 0.81 | 0.79725 | −0.01275 | 1.6 | −0.797% |
| | 2 | 0.086 | 0.08646 | 0.00046 | 0.16 | 0.288% |
| | 3 | 5.2 | 5.16158 | −0.03842 | 10 | −0.384% |
| Dark Image | 1 | 0.81 | 0.81781 | 0.00781 | 1.6 | 0.488% |
| | 2 | 0.086 | 0.08656 | 0.00056 | 0.16 | 0.35% |
| | 3 | 5.2 | 5.16155 | −0.03845 | 10 | −0.385% |
| Overexposed Image | 1 | 0.81 | 0.81811 | 0.00811 | 1.6 | 0.507% |
| | 2 | 0.086 | 0.08633 | 0.00033 | 0.16 | 0.206% |
| | 3 | 5.2 | 5.16986 | −0.03014 | 10 | −0.301% |
| Tilted Image | 1 | 0.81 | 0.81860 | 0.0086 | 1.6 | 0.538% |
| | 2 | 0.086 | 0.08627 | 0.00027 | 0.16 | 0.169% |
| | 3 | 5.2 | 5.19130 | −0.0087 | 10 | −0.087% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, X.; Wang, L.; Deng, C.; He, B. Pointer Meter Reading Recognition Based on YOLOv11-OBB Rotated Object Detection. Appl. Sci. 2025, 15, 7460. https://doi.org/10.3390/app15137460
