1. Introduction

Computer Vision (CV) technologies have become indispensable in agri-food industry tasks, such as quality control, counting and grading, where camera hardware is paired with dedicated software pipelines [1,2,3,4]. The latest leap in performance has come from deep learning, particularly Artificial Neural Networks (ANNs) [5,6,7,8,9,10]. Although ANNs deliver high accuracy and real-time throughput, they demand extensive datasets, careful annotation, hyper-parameter tuning and long training cycles [4,8,11,12].

Manual annotation can inadvertently introduce noisy labels that impair supervised learning. Recent literature describes two complementary mitigation strategies: (i) PSSCL, a progressive-sample-selection framework with contrastive loss that detects and prunes potentially mis-labelled instances [13]; and (ii) BPT-PLR, which partitions the data into balanced mini-batches and employs a pseudo-label-relaxed contrastive loss to dampen label noise during training [14]. Within our table olive workflow, this risk is virtually eliminated because SAM is used in zero-shot mode, obviating the need for a dedicated dataset or additional annotation; nevertheless, the aforementioned strategies would be valuable if domain-specific models are trained in the future.

A different paradigm emerged with the Segment Anything Model (SAM), released by Meta AI in 2023 [15,16]. The word Anything underscores SAM’s capacity to generalize to previously unseen objects and scenes, which stems from pre-training on more than 1 billion masks and a prompt-driven interface: given only a simple prompt (point, box or text), it can segment virtually any object without additional fine-tuning. Its zero-shot capability offers an attractive shortcut for industrial environments where building task-specific models is costly. Even so, SAM has been explored mainly in remote sensing and precision agriculture [17,18,19,20], while its potential for inline food product inspection remains largely untested.

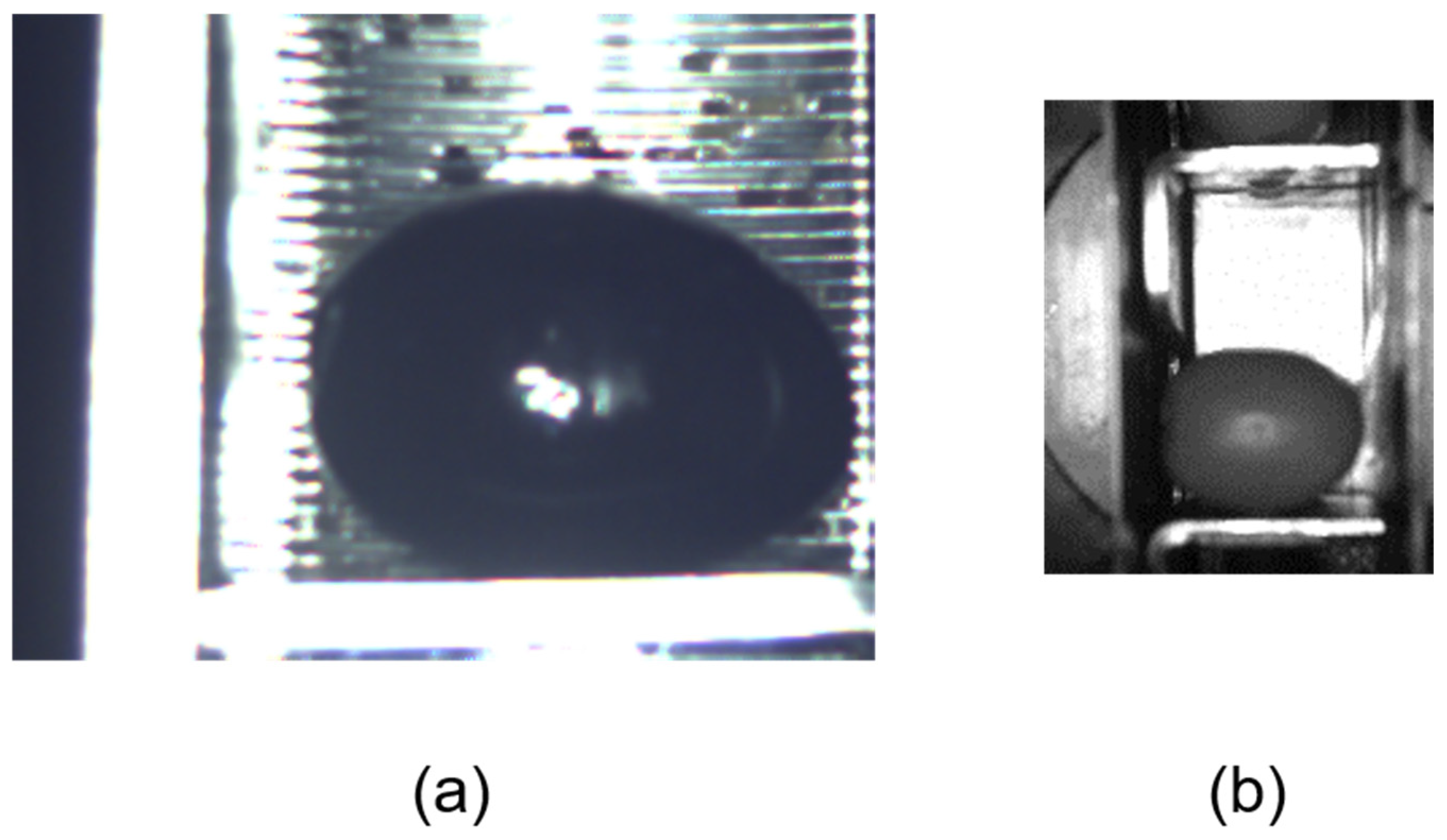

Table olive processing is an ideal test bed. Pitting, slicing and stuffing machines (DRRs) run at 2500–3000 olives min⁻¹ but still suffer from the so-called boat error: olives that arrive vertically oriented are pierced off-axis, producing pulp damage and pit splinters that jeopardize product safety [8,9]. CV can either (i) diagnose the root causes of these misorientations or (ii) close the loop with electromechanical actuators. In both cases, fast and reliable segmentation of each olive on the carrier chain is a prerequisite.

1.1. State-of-the-Art Segmentation Models for Industrial and Agri-Food Applications

Image segmentation under variable illumination, background clutter and occlusion is challenging [1]. Traditional algorithms (thresholding, active contours, region growing) have gradually been replaced by deep-learning approaches that are more robust to such variability [1].

Four families dominate current practice:

U-Net [12]: a symmetric encoder–decoder that yields accurate semantic masks even with medium-sized datasets but does not separate individual instances.

Mask R-CNN [10]: adds an instance-mask branch to Faster R-CNN, producing highly precise masks at the cost of heavy computation and dense supervision.

DeepLab v3+ [21]: leverages atrous (dilated) convolutions and ASPP to achieve state-of-the-art IoU, yet relies on deep backbones that increase latency.

SAM [16]: a foundation model trained on SA-1B; delivers zero-shot masks but uses a ViT-H encoder (~632 M parameters) that is computation-intensive. MobileSAM [22] shrinks SAM ≈ 60×, reaching ~10 ms image⁻¹.

Agri-food studies have begun to adapt SAM to crop imagery [17], employ it as an annotation accelerator [1], or benchmark it against task-specific CNNs [20]. UAV trials confirm MobileSAM’s ability to delineate fruits at video rate [19].

Table 1 summarizes the main trade-offs of these standalone models.

1.2. Hybrid Detector–Prompt Pipelines

To meet strict cycle-time budgets, recent studies couple a fast detector with a prompt-based segmenter. A one-stage detector (e.g., YOLOv8) first supplies bounding-box prompts; SAM (or MobileSAM) then refines pixel-level masks inside these regions, cutting SAM’s workload by one to two orders of magnitude [23]. Other combinations use Grounding DINO for open-vocabulary boxes [24], light objectness CNNs feeding MobileSAM on edge devices [25], or FastSAM paired with a proposal network [26]. Conversely, SAM can propose global masks that a tiny CNN filters [27].

Table 2 profiles the most representative hybrids.

1.3. Scope of the Present Work

The current study integrates SAM without any fine-tuning into an olive-pitting line. Images are captured by a machine-vision unit mounted above the carrier chain; SAM segments the olives, and a lightweight post-processing step selects the correct mask. After that, morphological/statistical analyses are performed to quantify the boat-error incidence and to examine whether off-caliber olives increase that error. By relying on a foundation model plus minimal code, the extensive data collection and training phases typical of bespoke CNN-based solutions are eliminated, which demonstrates a practical, low-overhead path to 100% inline inspection.

4. Discussion

The zero-shot application of SAM made it possible to segment olive images, captured directly from the carrier chain during an industrial pitting operation, into masks. This bypasses the complexity of developing a task-specific ANN [8,10,12] as well as stages that commonly depend on algorithmic development [4,8,10,40] or manual intervention [4,40], thereby addressing a significant bottleneck. Furthermore, the segmentation results showed good definition, judged by the contours of the olive relative to the background, in images whose lack of color or low resolution frequently causes identification problems in OpenCV-based industrial analysis. In these cases, SAM performed well, even without special attention to scene conditions, such as lighting, background or framing. Such operations are currently entrusted to more advanced and precise technologies based on self-learning architectures, such as CNNs or U-Net [8,9,10,12,40]. However, these approaches incur high design costs and long processing times and often require several iterations before a satisfactory solution is obtained. Furthermore, they are typically tied to a single case study: when the product changes or the system is installed on a different machine, a new dataset must be collected and annotated, and the model must be redesigned, retrained and re-optimized.

Although SAM itself is a large vision-transformer neural network, it is released as a frozen foundation model; therefore, no additional architecture design, training or tuning is required for our task. SAM thus removes the burden of developing a task-specific ANN while still leveraging the power of deep neural networks, providing full adaptability to diverse segmentation scenarios.

Recent work in industrial vision corroborates this trend. Purohit and Dave [41] present a comparative study, “Leveraging Deep Learning Techniques to Obtain Efficacious Segmentation Results”, that benchmarks classical CNNs (U-Net, DeepLab v3+, Mask R-CNN) against pretrained transformer models in low-contrast settings. They report that foundation-style models retain high accuracy without case-specific retraining, precisely the advantage observed here with SAM. By adopting SAM as a frozen backbone, our pipeline achieves sub-pixel geometric errors and an IoU of ≈0.95 while avoiding the extensive data collection and network redesign normally required in industrial automation.

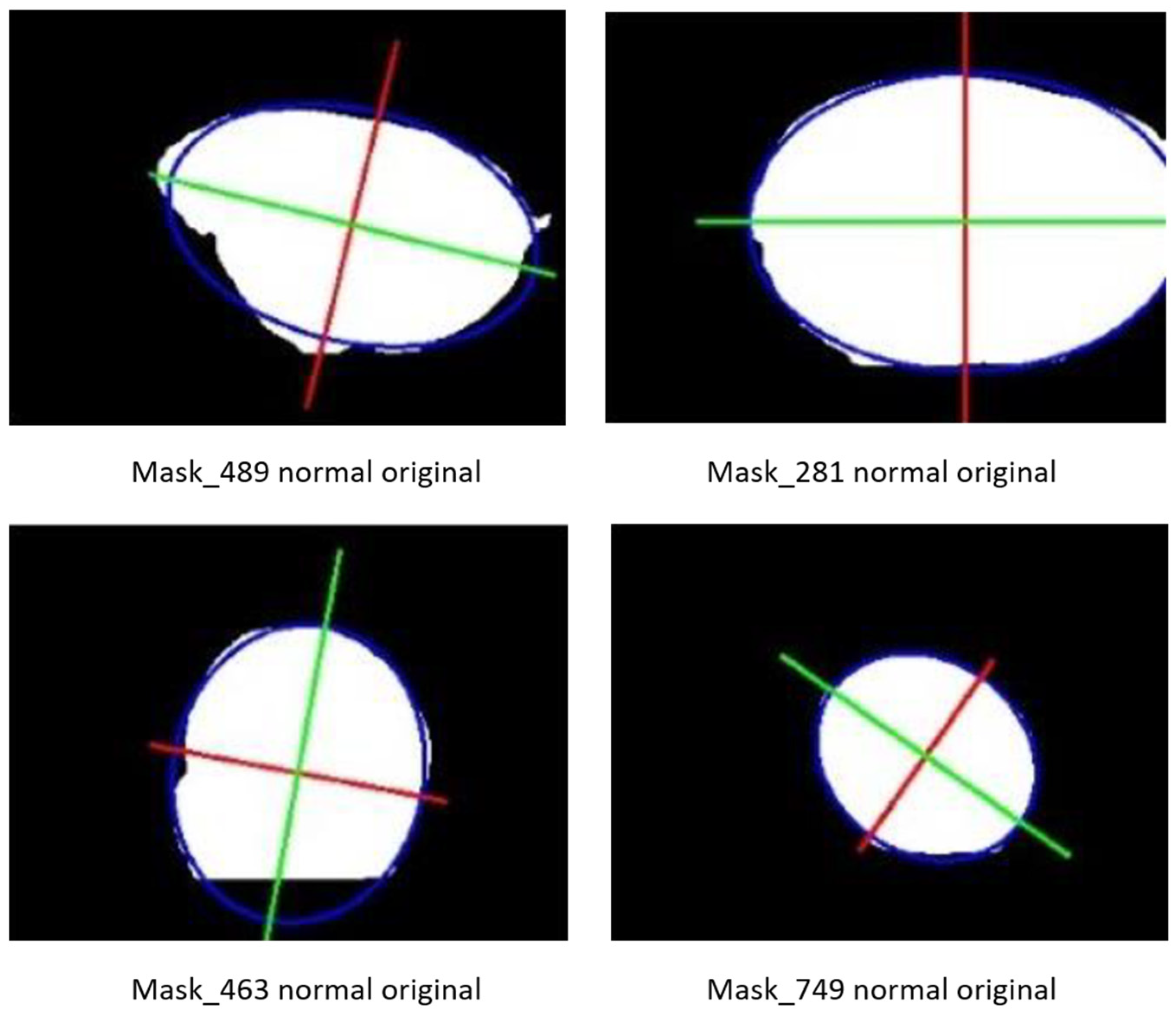

Assuming a Cartesian reference frame XYZ, the working plane of the pitting machine corresponds to the XY plane, and the conveyor carries the olives toward the pitting station along the Y-axis:

Normal position (long axis ≈ X). While advancing, the olives roll about their own longitudinal axis, which facilitates alignment.

Boat position (long axis ≈ Y). The fruit lies broad-side, does not roll and accounts for roughly 1–2% of the flow.

Pivoted position (long axis ≈ Z). The fruit stands upright on one pole; this orientation is very rare (fewer than 1 in 1000 olives).

The levelling brush mounted above the feed chain effectively corrects pivoted olives by applying a torque that forces them to roll and return to the normal position, but it is ineffective for olives travelling in boat position. Consequently, the brush alone cannot guarantee proper alignment. The machine-vision system presented in this study monitors the orientation of every olive in real time and records any fruit that reaches the pitting knives in a misaligned state, providing objective feedback for line adjustment or, if desired, for triggering external rejection mechanisms.
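As a rough illustration of how the three positions listed above could be told apart in a top-view image, the following sketch assumes that an ellipse has already been fitted to the olive mask, that the major-axis angle is measured from the image X-axis, and that a pivoted olive looks nearly circular from above. The function name and both thresholds (`round_ratio`, `tolerance_deg`) are hypothetical and are not values used in this study.

```python
def classify_position(major_axis, minor_axis, angle_deg,
                      round_ratio=1.15, tolerance_deg=45.0):
    """Label a top-view olive as 'normal', 'boat' or 'pivoted'.

    angle_deg: orientation of the major axis, measured from the X-axis
    (assumed convention); the conveyor is taken to move along Y.
    round_ratio and tolerance_deg are illustrative thresholds only.
    """
    if minor_axis <= 0 or major_axis < minor_axis:
        raise ValueError("expected major_axis >= minor_axis > 0")
    # Seen from above, an olive standing on one pole looks almost circular.
    if major_axis / minor_axis < round_ratio:
        return "pivoted"
    # Fold the angle into [0, 180) and measure its distance to the X-axis.
    folded = angle_deg % 180.0
    offset_from_x = min(folded, 180.0 - folded)
    return "normal" if offset_from_x < tolerance_deg else "boat"
```

In practice the thresholds would have to be calibrated per cultivar, since rounder fruit leaves less margin between the pivoted class and the other two.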

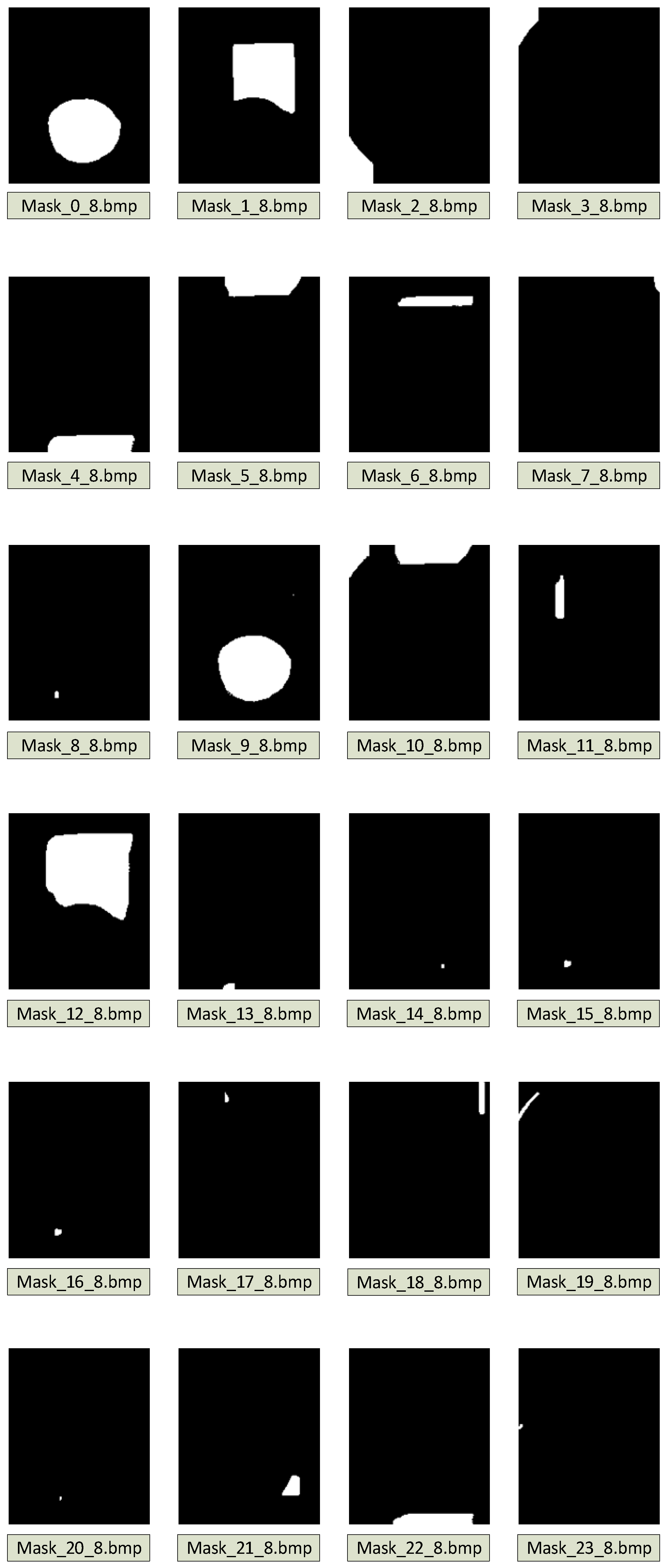

In addition, the raw camera images were fed to SAM without any pre-processing, such as ROI adjustment, scale normalization, RGB-channel separation or noise filtering. The study deliberately ran SAM in its “everything” mode [16] instead of prompting the actual olive with points or bounding boxes; this choice necessitated only minor post-processing of the masks. Such post-processing is not a limitation of SAM itself but a corrective step for occasional noise caused by highlights and shadows in high-illumination, color-poor scenes. While some reports [16,18,20] have questioned SAM’s utility in specialized industrial tasks without supervision, the well-defined contours obtained for olives demonstrate that the model remains highly effective and can be refined with lightweight post-processing, even at the cost of a slightly longer computation time.
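To make the idea of selecting one mask from an “everything”-mode result more concrete, the sketch below filters candidates by relative area and keeps the one nearest the image centre. It assumes each candidate is a dictionary with an `area` entry (pixels) and a `bbox` entry in `[x, y, w, h]` form, which is the layout returned by SAM’s automatic mask generator. The selection rule itself, the function name and the area bounds are illustrative assumptions, not the post-processing used in this study.

```python
def select_olive_mask(masks, image_width, image_height,
                      min_area_frac=0.02, max_area_frac=0.60):
    """Pick one candidate mask from an 'everything'-mode result.

    masks: list of dicts with at least 'area' (pixels) and 'bbox'
    ([x, y, w, h]), the layout produced by SAM's automatic mask generator.
    Returns the chosen dict, or None if no mask passes the area filter.
    The area bounds and the centre-distance rule are illustrative guesses.
    """
    image_area = image_width * image_height
    cx, cy = image_width / 2.0, image_height / 2.0

    def centre_distance_sq(mask):
        x, y, w, h = mask["bbox"]
        return (x + w / 2.0 - cx) ** 2 + (y + h / 2.0 - cy) ** 2

    # Discard specks (highlights, shadows) and background-sized regions.
    candidates = [
        m for m in masks
        if min_area_frac * image_area <= m["area"] <= max_area_frac * image_area
    ]
    if not candidates:
        return None
    # Among plausible sizes, prefer the mask nearest the image centre.
    return min(candidates, key=centre_distance_sq)
```

A rule of this kind only works when the camera trigger keeps the olive roughly centred in the frame; otherwise a shape criterion would be a more robust choice.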

Processing time remains the primary limitation for executing the model, as its high computational cost currently prevents real-time operation at rates of 40–50 images per second, restricting its use to offline processing. Nevertheless, the demo version of SAM remains open to critique and potential improvements [16,20] in both classification and segmentation, as well as processing time, and future specialized versions may emerge for industrial applications. Enhancements to the model itself, combined with the next generation of quantum computing, may eventually enable the use of SAM beyond what has been demonstrated in this experiment. In the meantime, a hybrid strategy that integrates SAM with lightweight models, such as a traditional classifier or a small ANN, could be a viable alternative.
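The 40–50 images per second quoted above follow from the machine rate of 2500–3000 olives per minute if one image is captured per olive, which is the assumption made in this short worked calculation. The function name and the `olives_per_frame` parameter are introduced here for illustration only.

```python
def frame_budget_ms(olives_per_minute, olives_per_frame=1):
    """Return (frames per second, milliseconds available per frame)."""
    if olives_per_minute <= 0 or olives_per_frame <= 0:
        raise ValueError("rates must be positive")
    # One frame per olive is assumed unless olives_per_frame says otherwise.
    fps = olives_per_minute / 60.0 / olives_per_frame
    return fps, 1000.0 / fps


for rate in (2500, 3000):
    fps, budget = frame_budget_ms(rate)
    print(f"{rate} olives/min -> {fps:.1f} frames/s, {budget:.1f} ms per frame")
```

Under that assumption, the whole chain of acquisition, segmentation and post-processing has roughly 20–24 ms per olive.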

The corrected segmented images allowed for a morphological analysis by extracting representative data on the size and shape of the processed olives. In this case, a method based on contour fitting with an ellipse was chosen, enabling straightforward measurement of parameters, such as area, axis length and orientation. The statistical data generated provide precise insights into the total size distribution and the orientation of each olive before pitting. It is well established that an orientation between 30° and 150° [8] is a determining factor in whether the olive will be properly pitted, thereby validating this approach.
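The ellipse parameters mentioned above can be approximated in several ways. The sketch below uses second-order image moments of the binary mask rather than a least-squares contour fit, so it is an equivalent-ellipse estimate and not necessarily the procedure applied in this study. The function name and the angle convention (degrees from the image X-axis, folded into [0, 180)) are assumptions; how that angle maps onto the 30° to 150° band depends on the reference axis adopted in [8].

```python
import math


def ellipse_from_mask(mask):
    """Estimate area, axis lengths and orientation of a binary mask.

    mask: list of rows, each a list of 0/1 values.
    Returns (area_px, major_len, minor_len, angle_deg), where angle_deg is
    the major-axis direction in [0, 180), measured from the image X-axis
    towards increasing row index (assumed convention).
    """
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    area = len(pixels)
    if area == 0:
        raise ValueError("mask is empty")
    mean_x = sum(x for x, _ in pixels) / area
    mean_y = sum(y for _, y in pixels) / area
    # Central second-order moments, normalised by the area.
    mu20 = sum((x - mean_x) ** 2 for x, _ in pixels) / area
    mu02 = sum((y - mean_y) ** 2 for _, y in pixels) / area
    mu11 = sum((x - mean_x) * (y - mean_y) for x, y in pixels) / area
    # Eigenvalues of the covariance matrix give the axis variances.
    half_trace = (mu20 + mu02) / 2.0
    spread = math.hypot((mu20 - mu02) / 2.0, mu11)
    # A solid ellipse with semi-axis a has variance a^2 / 4 along that axis.
    major_len = 4.0 * math.sqrt(half_trace + spread)
    minor_len = 4.0 * math.sqrt(max(half_trace - spread, 0.0))
    angle_deg = math.degrees(0.5 * math.atan2(2.0 * mu11, mu20 - mu02)) % 180.0
    return area, major_len, minor_len, angle_deg
```

Axis lengths are returned in pixels; converting them to millimetres requires the camera scale factor, which is not part of this sketch.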

Currently, the boat error is evaluated occasionally to adjust machinery settings, maintaining a minimal loss threshold of 1–2%. This is achieved by adjusting the density of a brine tank and assessing the percentage of freshly pitted olives that sink due to their weight, as they often contain whole pits or fragments. However, this method is not fully effective, requiring an additional expert assessment to evaluate the percentage of olives that may have floated due to trapped air, allowing them to reach the consumer. The alternative proposed in this study enables an exact measurement of this error, eliminating the randomness of the flotation and sensory evaluation phases. Moreover, it can be applied to any process without the need for evaluation personnel or additional equipment, preventing product loss and providing a cost-effective solution.

Additionally, assessing the range of processed sizes (Figure 11 left and Figure 12 left) represents a novel contribution, as size control is often overlooked, relying solely on effective calibration before the olives enter the DRR machine. However, this study demonstrates that such control is not always rigorously enforced, at least not to the level required by these systems, whose efficiency is maximized through precise selection of pieces and mechanical couplings [8].

The size and orientation data made it possible to test whether off-caliber size increases the boat error, by checking for a direct correlation between the two parameters (Table 10 and Table 11); the result was negative. In other words, despite the need for precise adjustments, these systems do not experience a decline in performance when processing sizes that deviate from the nominal value. This finding supports the necessity of implementing CV systems in these machines to achieve full efficiency in the short term and remove the speed limitation imposed to increase production.
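One simple way to check for a direct correlation of this kind is the Pearson coefficient, sketched below. Using Pearson is an assumption made for illustration, since the statistical test behind Tables 10 and 11 is not restated here, and the numbers in the example are synthetic values chosen only to exercise the function.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Synthetic, made-up values purely to exercise the function.
size_deviation_mm = [-2.0, -1.0, 0.0, 1.0, 2.0]
boat_error_flag = [0, 1, 0, 1, 0]
r = pearson_r(size_deviation_mm, boat_error_flag)
```

A coefficient close to zero, as in this synthetic case, is what an absence of linear association between size deviation and boat error would look like.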

4.1. Future Work

The following lines of research are planned to enable full real-time and closed-loop deployment of the system:

Operator feedback and active ejection: The current vision module can already display, in real time, the share of olives travelling in boat or pivoted positions. Showing these metrics on the HMI enables operators to fine-tune brush height or conveyor speed until the misalignment rate is minimized, thereby closing a manual feedback loop. A lightweight, quantized version of SAM (≥50 fps) could also drive an array of air nozzles located upstream of the pitting knives: any mis-oriented olive would be blown back onto the in-feed conveyor for a second pass, further reducing the fraction of off-axis fruit without interrupting line throughput.

Digital-twin integration: The same orientation data can be streamed to a digital-twin layer that supervises the pitting line and automatically adjusts variables, such as brush height or belt speed. A pertinent precedent is the framework developed for an electrical submersible pump by Don et al. [42], where continuous sensor streams are synchronized with an electromechanical model to enable predictive control. Adapting this architecture to a table olive pitter would allow the image-based orientation signals reported here to update PLC set-points in real time, closing the feedback loop and enabling data-driven optimization.

Real-time hybrid architectures: The current pipeline validates SAM offline, yet factory floor deployment demands inference latencies below 20 ms per frame. Two complementary strategies will therefore be pursued:

- (i) Cascaded SAM → lightweight model: Full-size SAM will be executed once on ~5 k representative frames to generate high-quality masks that are then used to distil a compact network (e.g., a MobileNet-V3 encoder with a three-level FPN decoder). A Dice + IoU distillation loss will transfer SAM’s spatial priors, while TensorRT INT8 quantization is expected to reduce model size to <12 MB and runtime to ≈8 ms on an NVIDIA Jetson Orin-NX.

- (ii) FastSAM/MobileSAM variants: Recent works, such as FastSAM [43] and MobileSAM [22], replace the heavy ViT encoder with a YOLOv8-based segmentation network or a distilled TinyViT encoder, respectively, achieving 50–120 fps on a laptop GPU. Both variants will be benchmarked on olive images, fine-tuning only the prompt encoder (points + box) to keep the annotation cost negligible.

A hybrid schedule—FastSAM on every frame, full SAM every 30 s for self-supervised drift correction—will be evaluated to maintain IoU ≥ 0.94 while meeting the 50 fps throughput of the pitting line.
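The Dice + IoU distillation loss mentioned in strategy (i) could take the form sketched below, written in plain Python on flattened masks for readability. Equal weighting of the two terms, the smoothing constant `eps` and the function name are assumptions; a training implementation would express the same arithmetic with differentiable tensor operations.

```python
def dice_iou_loss(student_probs, teacher_mask, eps=1e-6):
    """Soft Dice + soft IoU loss between two flattened masks.

    student_probs: predicted foreground probabilities in [0, 1].
    teacher_mask:  reference mask values in [0, 1] (here, SAM's output).
    Returns a value in [0, 2]; 0 means the two masks coincide.
    """
    if len(student_probs) != len(teacher_mask):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p * t for p, t in zip(student_probs, teacher_mask))
    total = sum(student_probs) + sum(teacher_mask)
    union = total - intersection
    # eps keeps both ratios defined when the two masks are empty.
    dice = (2.0 * intersection + eps) / (total + eps)
    iou = (intersection + eps) / (union + eps)
    return (1.0 - dice) + (1.0 - iou)
```

For example, a student mask that covers two pixels when the teacher covers only one of them gives a Dice of 2/3 and an IoU of 1/2, hence a loss of about 0.83.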

4.2. Cyber-Security Considerations

The prototype is currently deployed on a normally air-gapped local network inside the factory, isolating the pitting line from the corporate backbone and thus shielding production data. Remote connectivity is enabled only intermittently via a 4G router for online diagnostics. Even under this restricted topology, an IoT-enabled version must address the well-known vulnerabilities of industrial edge devices: unsecured brokers, weak TLS, device spoofing and adversarial inputs to the vision model. Aryavalli and Kumar’s survey “Safeguarding Tomorrow: Strengthening IoT-Enhanced Immersive Research Spaces with State-of-the-Art Cybersecurity” [44] reviews these threats and recommends a layered defense: device attestation, zero-trust segmentation, encrypted OPC UA or MQTT transport, secure-boot on Jetson/Edge-TPU boards, and runtime monitoring for anomalous inference patterns. These counter-measures will be adopted in the next project phase so that feedback signals, digital-twin commands and potential air-jet actuations remain authenticated and tamper-proof, even when 4G connectivity is temporarily enabled.

4.3. Beyond Incremental Change

Classical table olive graders rely on bespoke CNNs or hand-tuned morphology that must be retrained, re-lit and re-calibrated whenever the cultivar, camera or conveyor geometry changes. In contrast, the pipeline proposed here leverages a frozen foundation model (SAM) that transfers zero-shot to two morphologically distinct cultivars (Gordal vs. Hojiblanca) without additional labels, delivers sub-pixel geometric accuracy (IoU ≥ 0.94) under harsh specular lighting and integrates seamlessly with deterministic post-processing to recover millimetric morphometrics (area, ellipse, major axis orientation). The ability to generalize across cultivars, cup designs and illumination regimes (with real-time throughput still to be reached through the lighter variants outlined in Section 4.1) positions SAM not as an incremental replacement for U-Net or Mask R-CNN but as a paradigm shift: segmentation moves from task-specific model engineering to prompt-based configuration. This decouples algorithm development from plant maintenance, lowers the entry barrier for small processors and creates a reusable foundation for digital-twin feedback loops and predictive control across the broader agri-food sector.

5. Conclusions

SAM, developed by Meta AI, helps mitigate the segmentation bottleneck in industrial visual inspection operations, such as the olive pitting process, by providing a ready-made ANN that does not have to be developed in-house. In this specific case, SAM adapted without any task-specific training, and without re-training between cases, to two types of images that are challenging for conventional OpenCV-based systems: black olives against a metallic gray background and grayscale images of Hojiblanca olives. The model stands out for its high definition and versatility. Additionally, these images were processed without any preprocessing, exactly as they were captured by the camera. The segmentation errors were minimal and could be easily corrected using complementary MATLAB scripts, positioning this demo version as a viable, cost-effective and hardware-efficient alternative for certain industrial applications. Future improvements in both the model and hardware could make it a highly effective solution by providing fundamental image analysis operations, such as segmentation, pre-solved, making it particularly valuable if it could operate at machine speed (40–50 images per second).

The segmentation of olive images immediately before pitting, using this simple, alternative and optimized method, enables the extraction of size and orientation data for each olive, facilitating a more rigorous diagnostic approach compared to the conventional method based on the flotation tank and expert assessment. This method can be applied in every pitting operation, recognizing parameters, such as area, axis length and orientation. Additionally, the generated data have, for the first time, identified the absence of correlation between miscalibration and boat error, demonstrating that DRR machines do not experience a decline in performance when processing sizes different from the nominal caliber (for which the machine was adjusted), despite their precise calibration requirements [8]. This finding supports the integration of CV systems alongside other automated solutions as a strategy to achieve full efficiency (100%) in the short term while eliminating the imposed speed limitation, preventing an increase in error and enhancing the productivity of these mechanical systems.

This initiative represents an optimized alternative method of interest for modernizing the table olive industry. However, given the general-purpose nature of SAM, it is also fully applicable to other automated processing operations in the agri-food sector, being accessible remotely at any time via the internet.