Product Image Generation Method Based on Morphological Optimization and Image Style Transfer

Abstract

1. Introduction

2. Literature Review

2.1. Intelligent Design of Product Form

2.2. Aesthetic Evaluation of Product Form

2.3. Image Style Transfer

- 1.

- We construct a model aimed at optimizing product forms. By utilizing Bézier curves to delineate the contours of the products, we employ an esthetic comprehensive evaluation model as the fitness function. Subsequently, genetic algorithms are leveraged to refine the product forms, ultimately yielding esthetically superior solutions.

- 2.

- We employ genetic algorithms for morphological optimization. Initially, an initial population is established by encoding key morphological parameters of the target product. Through iterative application of stochastic genetic operators—selection, crossover, and mutation—successive generations are generated. An esthetic index serves as the objective fitness function, quantifying individual fitness values within each population. This cyclic process of fitness evaluation, operator application, and population renewal continues until convergence criteria are satisfied. Systematic evaluation of algorithmic outputs enables identification of Pareto-optimal solutions, ultimately achieving computational optimization and innovative morphological generation.

- 3.

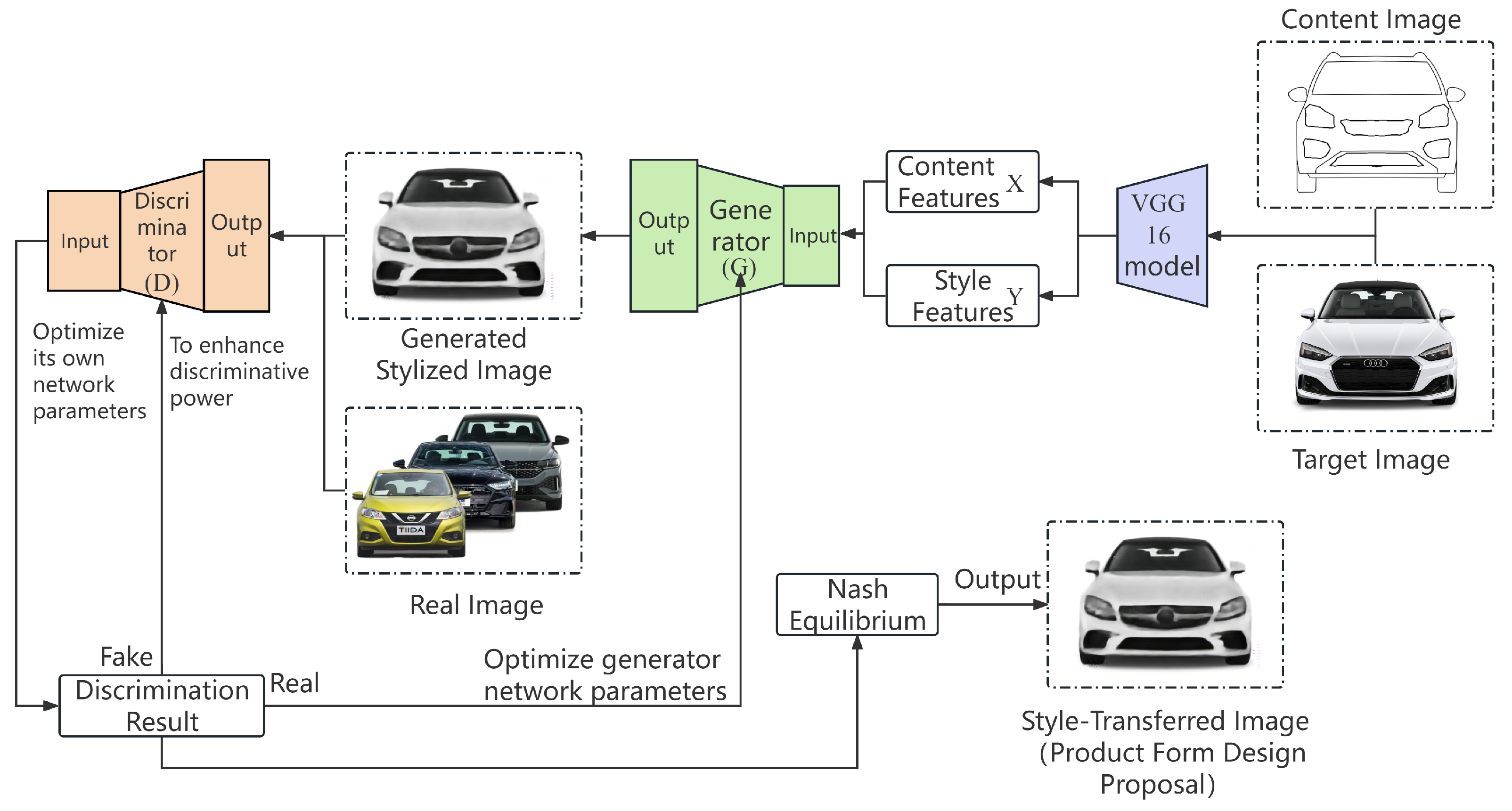

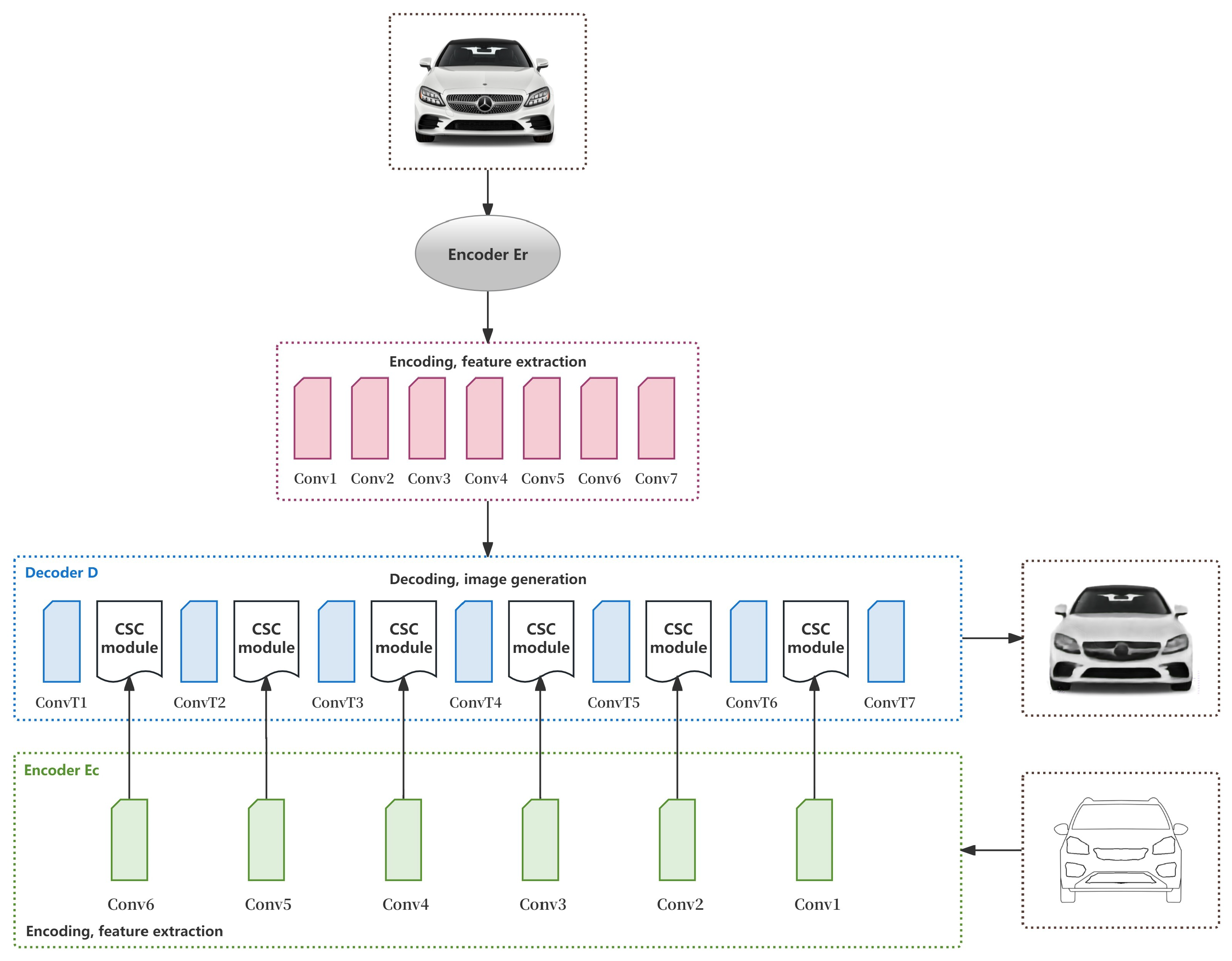

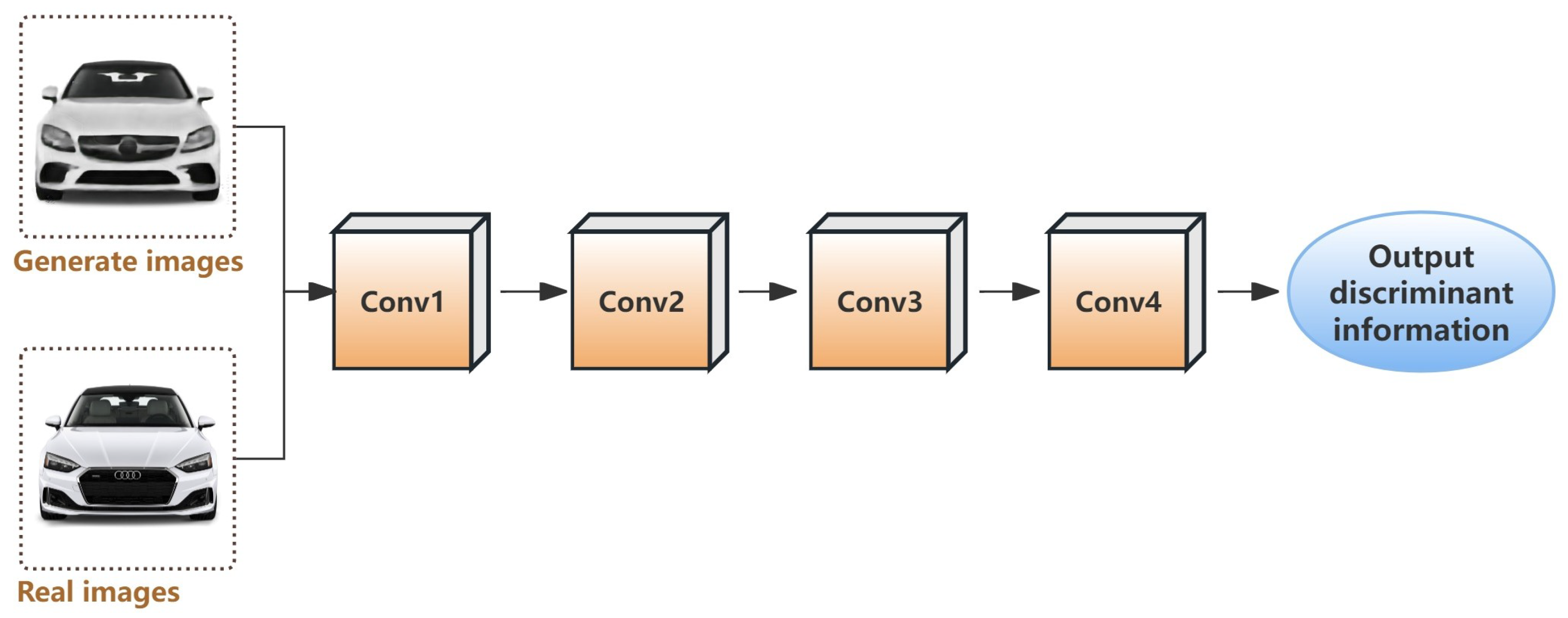

- We construct a GAN model for IST. Utilizing web crawling techniques, we compile an image dataset comprising car front faces to train the GAN model. The product form solutions serve as content images, while car front-face images from the market are chosen as the target style images. The encoder is responsible for extracting both content and style features. These features are then fed into the generator to produce style-transferred images. Ultimately, the discriminator assesses whether the generated image’s style aligns with the target image. Through iterative optimization, we obtain product form solutions that are consistent with the desired target style.
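
The genetic loop described in the second contribution can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function name `run_ga`, the tournament selection, single-point crossover, elitism, the fixed generation budget used as the stopping rule, and the placeholder `toy_fitness` (which stands in for the esthetic index) are all assumptions made for the example.

```python
import random

def run_ga(fitness, n_bits=16, pop_size=20, generations=40,
           p_cross=0.8, p_mut=0.02, seed=0):
    """Maximize `fitness` over fixed-length bit strings with a basic GA."""
    rng = random.Random(seed)
    # Initial population: random binary chromosomes.
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    for _ in range(generations):  # assumed stopping rule: fixed generation budget
        scores = [fitness(ind) for ind in pop]

        def tournament():
            # Selection: keep the fitter of two randomly drawn individuals.
            a, b = rng.randrange(pop_size), rng.randrange(pop_size)
            return pop[a] if scores[a] >= scores[b] else pop[b]

        nxt = [best[:]]  # elitism: the best individual survives unchanged
        while len(nxt) < pop_size:
            p1, p2 = tournament(), tournament()
            if rng.random() < p_cross:
                cut = rng.randrange(1, n_bits)  # single-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Mutation: flip each bit independently with probability p_mut.
            child = [1 - g if rng.random() < p_mut else g for g in child]
            nxt.append(child)
        pop = nxt
        best = max(pop, key=fitness)
    return best, fitness(best)

def toy_fitness(bits):
    """Hypothetical stand-in for the esthetic index: fraction of 1-bits."""
    return sum(bits) / len(bits)

best, score = run_ga(toy_fitness)
print(best, score)
```

Because the best individual is copied into every new generation, the best fitness never decreases from one generation to the next; in practice the placeholder fitness would be replaced by an esthetic score computed from the decoded form parameters.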

3. Optimization Design Model of Product Form Based on Genetic Algorithm and Esthetic Evaluation

3.1. A Morphological Optimization Design Model Based on the Genetic Algorithm

- (1) Compatibility: Binary encoding is the classical representation in genetic algorithms, so standard crossover and mutation operators can be applied directly;

- (2) Scalability: The coordinate values of each control point are mapped from bounded continuous intervals to binary segments, which supports high-precision parameter optimization;

- (3) Efficiency: Binary encoding balances the size of the bounded discrete search space against computational cost in morphological optimization.
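
As an illustration of the mapping between bounded intervals and binary segments, the sketch below decodes a chromosome into control points and evaluates the resulting Bézier curve. The interval bounds `[0, 100]`, the 8-bit segment length, and the helper names `encode`, `decode`, `decode_control_points`, and `bezier_point` are assumptions chosen for the example; the source does not state these values.

```python
from math import comb

def decode(bits, lo, hi):
    """Map a list of 0/1 bits to a real value in [lo, hi]."""
    n = int("".join(map(str, bits)), 2)
    return lo + (hi - lo) * n / (2 ** len(bits) - 1)

def encode(x, lo, hi, n_bits):
    """Quantize x in [lo, hi] to the nearest n_bits-wide binary segment."""
    n = round((x - lo) / (hi - lo) * (2 ** n_bits - 1))
    return [int(c) for c in format(n, f"0{n_bits}b")]

def decode_control_points(chromosome, n_bits, lo, hi):
    """Split a chromosome into segments and pair them up as (x, y) points."""
    coords = [decode(chromosome[i:i + n_bits], lo, hi)
              for i in range(0, len(chromosome), n_bits)]
    return list(zip(coords[0::2], coords[1::2]))

def bezier_point(ctrl, t):
    """Evaluate a Bézier curve of degree len(ctrl) - 1 at t in [0, 1]."""
    n = len(ctrl) - 1
    basis = [comb(n, i) * (1 - t) ** (n - i) * t ** i for i in range(n + 1)]
    x = sum(b * p[0] for b, p in zip(basis, ctrl))
    y = sum(b * p[1] for b, p in zip(basis, ctrl))
    return x, y

# One control point encoded as two 8-bit segments (x then y).
print(decode_control_points([0] * 8 + [1] * 8, 8, 0.0, 100.0))
# Midpoint of a cubic curve defined by four hypothetical control points.
print(bezier_point([(0, 0), (1, 2), (3, 2), (4, 0)], 0.5))
```

With this scheme the resolution of each coordinate is `(hi - lo) / (2**n_bits - 1)`, so precision is raised by lengthening the segment at the cost of a larger search space.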

3.2. A Comprehensive Esthetic Evaluation Model for Product Form

3.2.1. Construction Method of Esthetic Index Formula

3.2.2. Construction of the Esthetic Index Formula

3.2.3. Stability

3.2.4. Hierarchy

3.2.5. Contrast

3.2.6. Complexity

3.2.7. Esthetic Evaluation Model of Product Form Based on Entropy Weighting Method

4. IST Model Based on GAN

4.1. Architecture of GAN

4.2. Building a Style Transfer Product Image Generation Model

4.2.1. Generator Module

4.2.2. Discriminator Module

4.2.3. Loss Function

5. Case Study

5.1. Experimental Design for Car Front-Face Form Optimization

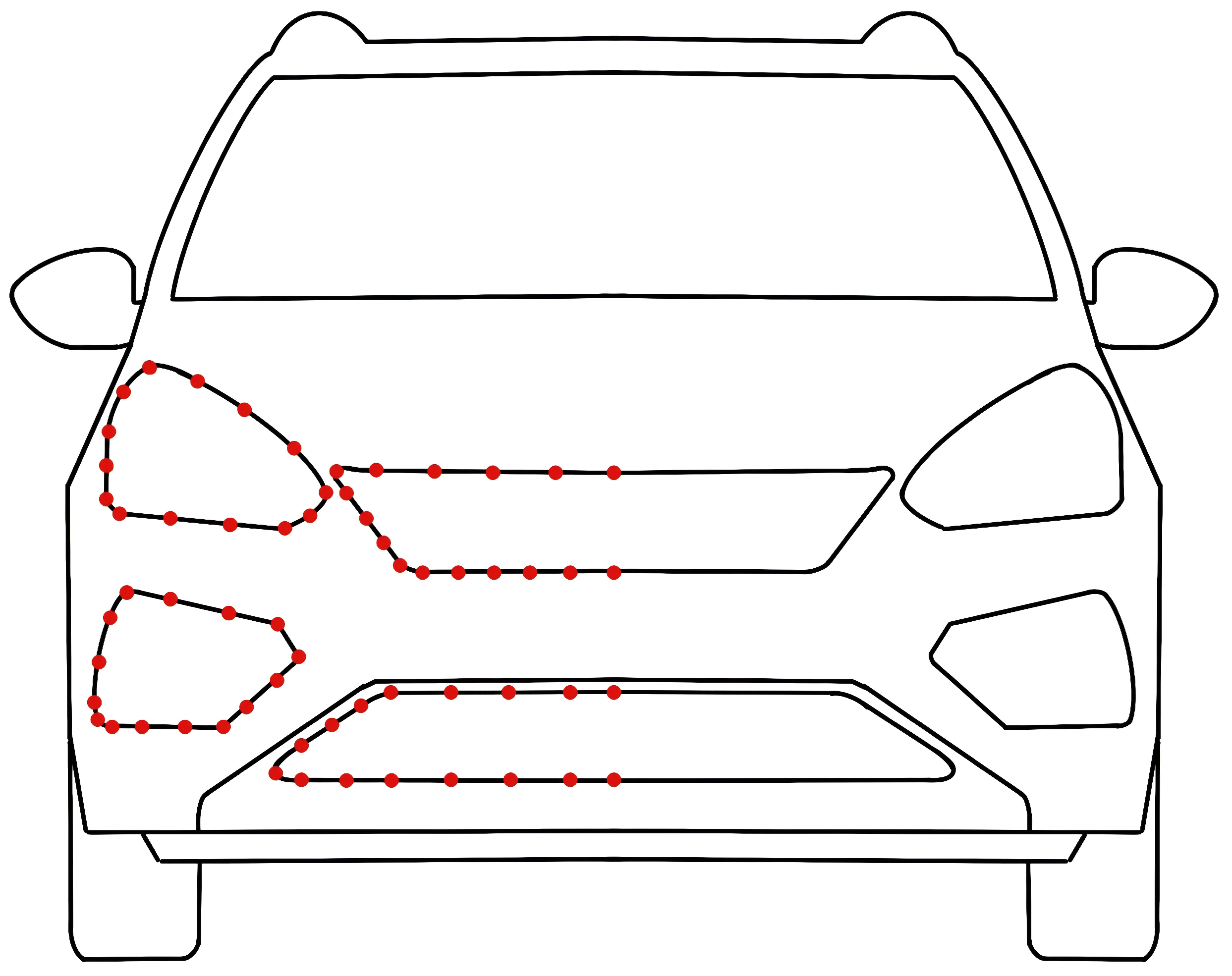

5.1.1. Car Front-Face Form Design Parameters

5.1.2. Optimizing the Front Fascia of the Automobile

5.1.3. Calculating the Esthetic Index for the Front Fascia of Automobiles

5.1.4. Weight Analysis Based on Entropy Method

5.2. Automotive Front-Face Style Transfer Experiment Design

5.2.1. Experimental Setup and Dataset

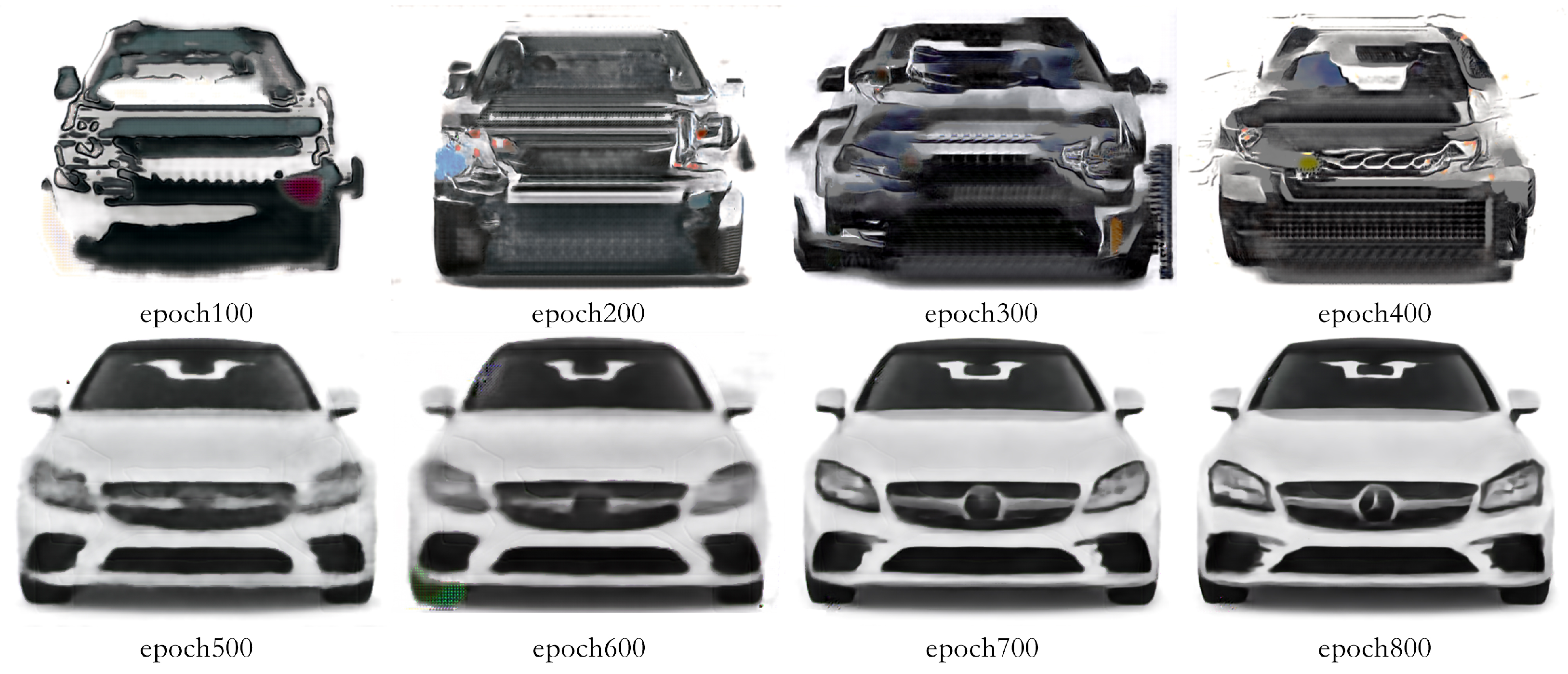

5.2.2. Product Image Generation

5.2.3. Comparative Assessment of Multi-Style Transfer

6. Discussion

- (1) By integrating Bézier curves with genetic algorithms, the key-point parameters of car front-face form samples are extracted. The esthetic index serves as the fitness function for assessing the outcomes of the genetic operations. Bézier curves represent curvature changes in a form clearly, whereas genetic algorithms offer robust parameter optimization for extracting the most representative features from intricate data. Because the method starts directly from form parameters, it spares designers lengthy model training, minimizes interference from human factors, and keeps the evaluation process objective. The approach offers designers an abundance of product form designs and supports the rapid development of diverse product form solutions.

- (2) Product form design is conducted on the basis of esthetic index calculation. The esthetic index formulas determine the index values of the experimental samples, while the entropy weight method determines the weights of the indices and provides an objective basis for the esthetic evaluation of product form. In contrast to the traditional manual evaluation used in intelligent product form design, this methodology uses computational esthetics to select solutions, yielding greater objectivity and reliability.

- (3) We apply a GAN to generate realistic images from car front-face outlines. A dataset of paired outline images and their corresponding real images is created so that the network can learn the mapping in a complex feature space. The GAN examines both the input form scheme image and the real image, identifying structural proportions and fine details, which enables it to train effectively and generate realistic images of car front faces. Compared with previous product outline optimization designs, this methodology produces more realistic images that align more closely with actual requirements. Compared with traditional style transfer methods, it also offers better controllability over the generated content images.
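
To make the adversarial part of point (3) concrete, the sketch below computes the standard GAN discriminator and generator losses from discriminator output probabilities. It is only an illustration under assumptions: the article's own loss function is not reproduced in this text and may well contain additional content or style terms, and the function names and the non-saturating generator loss are choices made for the example.

```python
import math

def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one probability p against a 0/1 target."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))

def discriminator_loss(d_real, d_fake):
    """Push outputs on real images toward 1 and on generated images toward 0."""
    real = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real + fake

def generator_loss(d_fake):
    """Non-saturating loss: reward the generator when D scores its images near 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs for a batch of real and generated images.
print(discriminator_loss([0.9, 0.8], [0.2, 0.1]))
print(generator_loss([0.2, 0.1]))
```

When the discriminator cannot tell real from generated images (all outputs 0.5), the discriminator loss equals `2 * ln 2`, which is the equilibrium value of this objective.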

- (1) Dataset: Training the GAN requires a substantial amount of high-quality data, and the limited experimental data is a primary constraint; the dataset may also contain inconsistencies. In addition, the distribution of car front-face data is complex, so training the GAN requires careful parameter tuning, and inadequate parameter settings can readily impair model performance.

- (2) Design: This study is a preliminary exploration of car front-face contour design. Future work can analyze and evaluate contour images of more diverse product types to observe how the method performs across product forms, and can explore strategies to make the method more general.

- (3) Image Generation Quality: The method produces relatively clear, high-resolution images, yet challenges remain in achieving optimal image quality. For instance, the generated images may lack realism, handle complex contours poorly, and omit color details. Further research is needed so that the generated car appearances closely resemble reality and yield high-precision designs that meet designers’ expectations.

- (4) Algorithm Application: This research explores only the application of a GAN. New deep learning models continue to emerge, hardware processing capabilities continue to improve, and research results in deep learning increasingly drive solutions to practical problems. Future work can introduce more efficient algorithms for product form design and may integrate them with AIGC technology to tackle more intricate design challenges.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Aesthetic Index | Explanation of Meaning |
|---|---|
| Balance | The difference between the total weight of the elements on either side of the horizontal and vertical axes of symmetry. |
| Symmetry | The degree of symmetry between interface elements in three directions: vertical, horizontal, and diagonal. |
| Proportionality | Calculates and compares the similarity between the scale values and the value scale between interface elements and layouts. |
| Rhythmicity | Rhythm is the dynamic generated when elements recur according to certain rules; it is expressed through the arrangement order, size ratio, quantity distribution, and form changes of the elements. |
| Sequentiality | Sequentiality quantifies whether the arrangement of interface elements conforms to the natural reading order of the human eye (top to bottom, left to right, and large to small). |
| Integrity | Integrity measures how compact the layout of the elements is. The higher the integrity, the lower the morphological complexity, the easier the form is to identify, and the more harmonious the morphology. |
| Regularity | Regularity is the degree of alignment of the elements, including their left, right, top, and bottom alignment and the horizontal and vertical alignment of their centroids (the center of the smallest circumscribed rectangle). |
| Common Directionality | The degree to which the contour lines are parallel to one another. |
| Continuity | The degree to which the morphological elements within the contour line are grouped by visual continuity. |
| Simplification | The degree to which the morphological elements within the contour line are visually simplified. |
| Similarity | The degree of visual similarity among the morphological elements within the contour line. |
| Proportional Similarity | The similarity of the aspect ratios of the elements; the higher the proportional similarity, the more harmonious the form. |
| Stability | The ability of the morphological elements within the contour line to maintain a visually stable form. |
| Hierarchy | Hierarchy is affected by two factors: first, the distances between and within levels, and second, the number of levels. |
| Contrast | The difference in form and size of design elements, usually represented by changes in the length of the lines. |
| Complexity | The relative increase of the contour perimeter over the perimeter of its convex hull. |
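
The Complexity entry above can be read as a ratio of perimeters. The sketch below is one possible interpretation, assuming the index is `(P_contour - P_hull) / P_hull` for a closed polygonal contour; the exact formula and any normalization used in the article are not shown in this text, and the function names are hypothetical.

```python
from math import dist

def perimeter(pts):
    """Length of the closed polygon through pts, in the given order."""
    return sum(dist(pts[i], pts[(i + 1) % len(pts)]) for i in range(len(pts)))

def convex_hull(pts):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(pts))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def complexity(contour):
    """Relative increase of the contour perimeter over its convex hull perimeter."""
    hull_len = perimeter(convex_hull(contour))
    return (perimeter(contour) - hull_len) / hull_len

square = [(0, 0), (2, 0), (2, 2), (0, 2)]            # already convex
notched = [(0, 0), (4, 0), (4, 4), (2, 2), (0, 4)]   # one concave notch
print(complexity(square), complexity(notched))
```

A convex contour scores zero under this reading, and every concavity adds length relative to the hull, so the value grows with the amount of indentation in the outline.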

| Number | | | | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.891 | 0.943 | 0.870 | 0.421 | 0.875 | 0.547 | 0.083 | 0.981 | 0.993 | 0.400 | 0.180 | 0.515 | 0.592 | 0.764 | 0.734 | 0.626 |
| 2 | 0.961 | 0.989 | 0.851 | 0.445 | 0.500 | 0.467 | 0.191 | 0.975 | 0.992 | 0.588 | 0.108 | 0.542 | 0.573 | 0.854 | 0.556 | 0.659 |
| 3 | 0.939 | 0.944 | 0.834 | 0.425 | 0.750 | 0.456 | 0.094 | 0.974 | 0.993 | 0.333 | 0.111 | 0.493 | 0.584 | 0.743 | 0.717 | 0.668 |
| 4 | 0.914 | 0.755 | 0.861 | 0.341 | 1.000 | 0.475 | 0.256 | 0.975 | 0.992 | 0.650 | 0.162 | 0.585 | 0.581 | 0.832 | 0.509 | 0.616 |
| 5 | 0.911 | 0.906 | 0.795 | 0.457 | 0.500 | 0.521 | 0.375 | 0.981 | 0.993 | 0.533 | 0.207 | 0.567 | 0.599 | 0.828 | 0.516 | 0.733 |
| 6 | 0.923 | 0.679 | 0.857 | 0.429 | 0.625 | 0.481 | 0.352 | 0.976 | 0.993 | 0.682 | 0.294 | 0.577 | 0.581 | 0.722 | 0.730 | 0.644 |
| 7 | 0.924 | 0.370 | 0.846 | 0.445 | 0.500 | 0.585 | 0.231 | 0.978 | 0.993 | 0.600 | 0.216 | 0.565 | 0.914 | 0.753 | 0.717 | 0.599 |
| 8 | 0.935 | 0.549 | 0.846 | 0.411 | 0.750 | 0.544 | 0.103 | 0.981 | 0.993 | 0.529 | 0.189 | 0.527 | 0.879 | 0.769 | 0.738 | 0.561 |
| 9 | 0.886 | 0.787 | 0.851 | 0.416 | 1.000 | 0.322 | 0.054 | 0.979 | 0.993 | 0.357 | 0.157 | 0.567 | 0.595 | 0.844 | 0.516 | 0.644 |
| 10 | 0.939 | 1.104 | 0.858 | 0.432 | 0.750 | 0.418 | 0.053 | 0.980 | 0.993 | 0.421 | 0.174 | 0.504 | 0.589 | 0.765 | 0.721 | 0.608 |
| 11 | 0.936 | 1.118 | 0.814 | 0.431 | 0.750 | 0.402 | 0.115 | 0.980 | 0.993 | 0.417 | 0.053 | 0.525 | 0.590 | 0.766 | 0.720 | 0.738 |
| 12 | 0.899 | 1.168 | 0.874 | 0.420 | 0.750 | 0.401 | 0.074 | 0.979 | 0.992 | 0.588 | 0.213 | 0.530 | 0.580 | 0.841 | 0.530 | 0.663 |
| 13 | 0.882 | 1.078 | 0.844 | 0.445 | 0.625 | 0.507 | 0.102 | 0.976 | 0.993 | 0.563 | 0.210 | 0.532 | 0.590 | 0.834 | 0.529 | 0.658 |
| 14 | 0.916 | 0.409 | 0.823 | 0.487 | 0.500 | 0.536 | 0.075 | 0.981 | 0.993 | 0.400 | 0.170 | 0.564 | 0.580 | 0.738 | 0.733 | 0.712 |
| 15 | 0.926 | 0.816 | 0.846 | 0.439 | 0.750 | 0.311 | 0.075 | 0.977 | 0.985 | 0.200 | 0.040 | 0.507 | 0.589 | 0.749 | 0.729 | 0.813 |
| 16 | 0.901 | 0.100 | 0.814 | 0.417 | 0.625 | 0.502 | 0.054 | 0.980 | 0.993 | 0.429 | 0.133 | 0.568 | 0.590 | 0.843 | 0.511 | 0.685 |
| 17 | 0.915 | 1.415 | 0.878 | 0.444 | 0.750 | 0.548 | 0.086 | 0.977 | 0.993 | 0.563 | 0.148 | 0.513 | 0.882 | 0.778 | 0.721 | 0.662 |
| 18 | 0.929 | 0.722 | 0.842 | 0.412 | 0.750 | 0.602 | 0.063 | 0.979 | 0.993 | 0.333 | 0.115 | 0.472 | 0.589 | 0.754 | 0.738 | 0.720 |
| 19 | 0.906 | 0.922 | 0.865 | 0.429 | 1.000 | 0.458 | 0.045 | 0.978 | 0.992 | 0.636 | 0.207 | 0.557 | 0.600 | 0.838 | 0.520 | 0.558 |
| 20 | 0.909 | 0.305 | 0.813 | 0.444 | 0.500 | 0.385 | 0.037 | 0.977 | 0.993 | 0.200 | 0.035 | 0.407 | 0.591 | 0.828 | 0.522 | 0.832 |
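
A matrix of this shape (samples in rows, indices in columns) is the usual input to the entropy weighting method named in the section headings. The sketch below shows the textbook form of that method under stated assumptions: raw column values are turned into proportions without prior min-max normalization, and the demo uses only the first three columns of samples 1 to 5 as an arbitrary excerpt, so the printed weights are not the article's weights.

```python
import math

def entropy_weights(matrix):
    """Entropy weights for the columns of a samples-by-indices matrix.

    Assumes positive entries and at least one non-constant column.
    """
    m, n = len(matrix), len(matrix[0])
    k = 1.0 / math.log(m)
    divergences = []
    for j in range(n):
        col = [row[j] for row in matrix]
        total = sum(col)
        p = [v / total for v in col]                      # share of each sample
        e = -k * sum(pi * math.log(pi) for pi in p if pi > 0)  # entropy in [0, 1]
        divergences.append(1.0 - e)                       # more spread, more weight
    s = sum(divergences)
    return [d / s for d in divergences]

def composite_scores(matrix, weights):
    """Weighted sum of index values for each sample."""
    return [sum(w * v for w, v in zip(weights, row)) for row in matrix]

# Excerpt: first three index columns of samples 1-5 from the table above.
excerpt = [
    [0.891, 0.943, 0.870],
    [0.961, 0.989, 0.851],
    [0.939, 0.944, 0.834],
    [0.914, 0.755, 0.861],
    [0.911, 0.906, 0.795],
]
w = entropy_weights(excerpt)
print(w)
print(composite_scores(excerpt, w))
```

Columns whose values vary more across samples carry more information and therefore receive larger weights; in this excerpt the second column varies most and gets the largest weight.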

| Sample ID | Evaluation Value | Ranking | Sample ID | Evaluation Value | Ranking |
|---|---|---|---|---|---|
| 1 | 0.600 | 6 | 11 | 0.592 | 9 |
| 2 | 0.568 | 16 | 12 | 0.580 | 11 |
| 3 | 0.578 | 12 | 13 | 0.573 | 15 |
| 4 | 0.612 | 5 | 14 | 0.548 | 18 |
| 5 | 0.595 | 7 | 15 | 0.568 | 16 |
| 6 | 0.614 | 4 | 16 | 0.526 | 19 |
| 7 | 0.656 | 2 | 17 | 0.685 | 1 |
| 8 | 0.655 | 3 | 18 | 0.578 | 12 |
| 9 | 0.574 | 14 | 19 | 0.595 | 7 |
| 10 | 0.584 | 10 | 20 | 0.507 | 20 |
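
The rankings in the table above follow standard competition ranking, in which tied evaluation values share a rank and the next rank is skipped (for example, samples 5 and 19 both rank 7 and no sample ranks 8). The short check below reproduces the Ranking column from the Evaluation Value column; the function name is a hypothetical helper introduced for the example.

```python
def competition_rank(values):
    """Rank in descending order; tied values share the best rank (1, 2, 2, 4, ...)."""
    return [1 + sum(other > v for other in values) for v in values]

# Evaluation values for samples 1..20, copied from the table.
scores = [0.600, 0.568, 0.578, 0.612, 0.595, 0.614, 0.656, 0.655, 0.574, 0.584,
          0.592, 0.580, 0.573, 0.548, 0.568, 0.526, 0.685, 0.578, 0.595, 0.507]

ranks = competition_rank(scores)
print(ranks)
```

Sample 17 has the highest evaluation value and sample 20 the lowest, matching ranks 1 and 20 in the table.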

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, A.; Wang, X.; Huang, Y.; Wang, W.; Zhang, S.; Ouyang, J. Product Image Generation Method Based on Morphological Optimization and Image Style Transfer. Appl. Sci. 2025, 15, 7330. https://doi.org/10.3390/app15137330