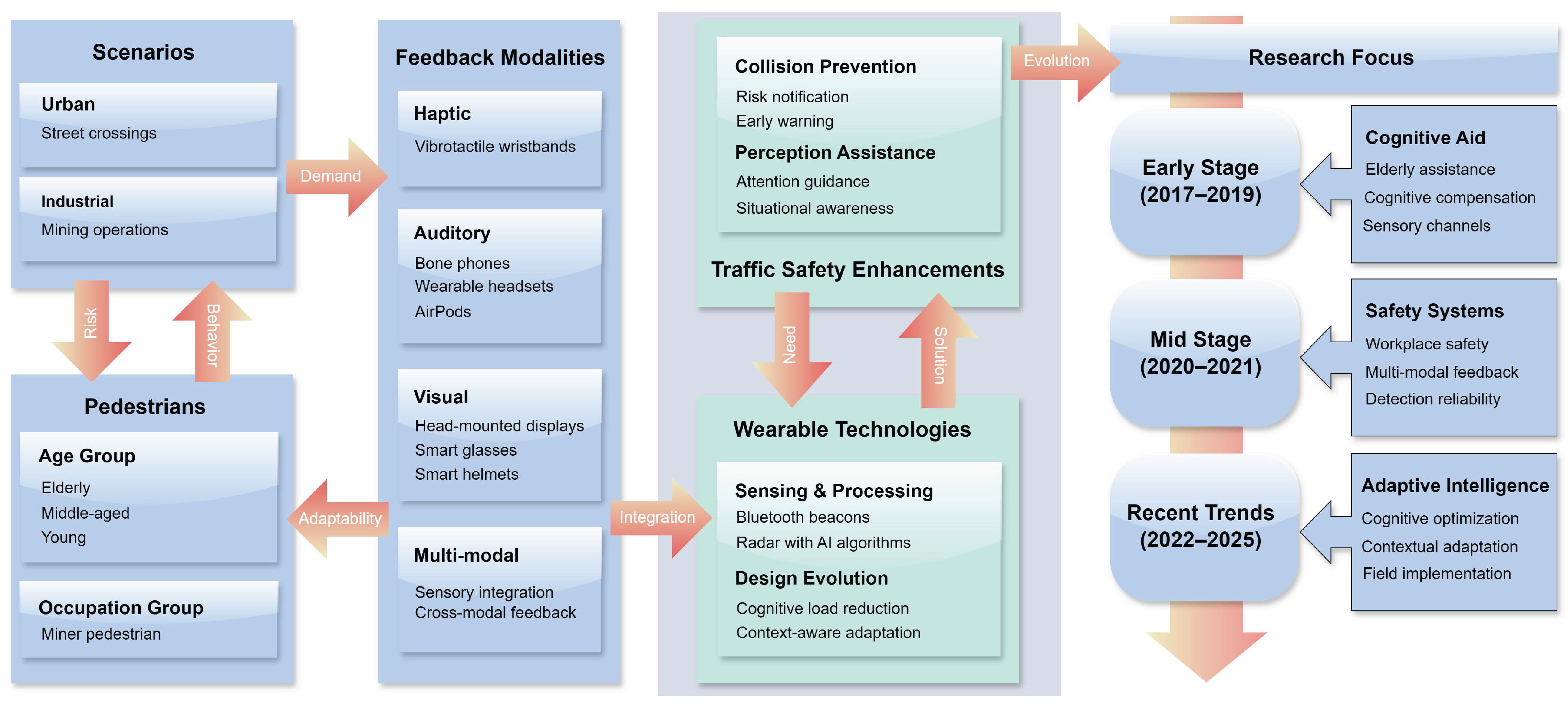

4.1. Wearable Safety Solutions for Pedestrians

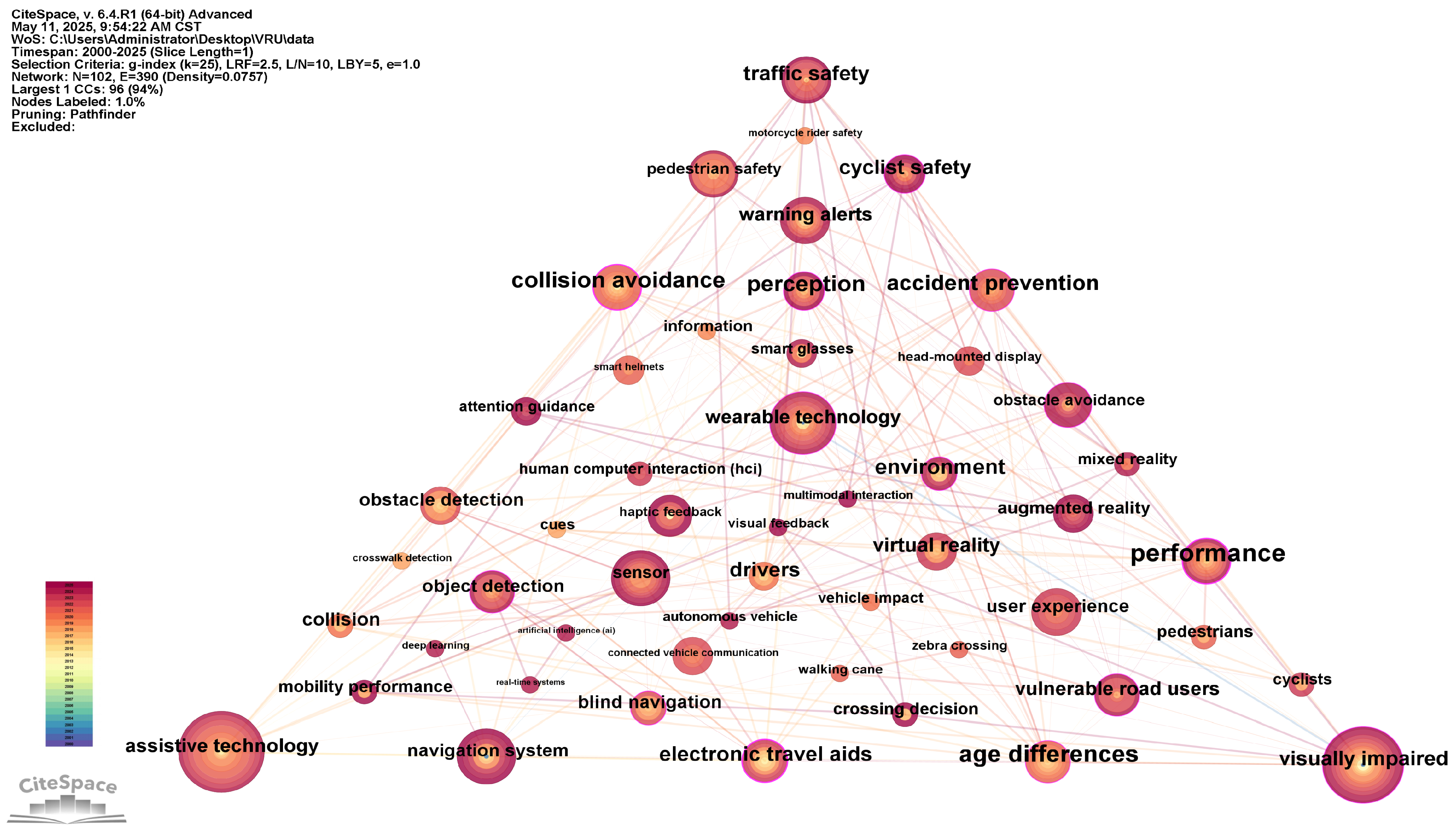

Eleven research papers have focused on utilizing wearable technology to enhance pedestrian traffic safety (Table 6), with three targeting elderly pedestrians [30,31,32] and two addressing mine workers [33,34]. Existing wearable devices in these studies can be categorized into haptic feedback devices, such as vibrotactile wristbands [30,31]; auditory feedback devices, including bone conduction headphones [31] and wearable headsets [35]; visual feedback devices, such as AR systems [31,36,37,38,39] and VR headsets [32]; smart protective equipment, including smart glasses [33] and helmets [34]; and wearables with integrated radar sensors [40].

Among these studies, Cœugnet et al. conducted the earliest research targeting elderly pedestrians, developing a vibrotactile wristband in 2017 that significantly improved street-crossing safety for older adults [30], particularly by reducing collision risks for older women in far lanes and with fast-approaching vehicles. The tactile system compensated for older pedestrians' cognitive deficits in perceiving gaps between vehicles. Building on this work, Dommes et al. compared vibration wristbands, bone conduction headphones, and AR visual feedback for navigation by older adults [31], finding all sensory assistance methods superior to traditional paper maps; combined audiovisual feedback proved especially effective for the oldest participants. Stafford et al. used head-mounted displays to deliver perceptual training to older adults in virtual street-crossing tasks [32]. Their results showed that different auditory cues could effectively guide older adults to reallocate attention to specific or non-specific visual information during street-crossing decisions, suggesting new directions for perceptual training in road safety interventions for older people.

Beyond research on older adults, wearable devices for pedestrian safety in specialized work environments have also received attention. Baek et al. developed a smart glasses system that receives proximity warnings from heavy equipment or vehicles via Bluetooth beacons and delivers visual feedback to workers [33]. The system detected equipment at distances of at least 10 m regardless of the pedestrian's orientation, achieving a 100% alarm success rate across all 40 tests [33] while maintaining workers' efficiency. Subsequently, Kim et al. from the same research team developed a smart helmet system [34] that also uses Bluetooth beacons to receive vehicle approach information but provides visual warnings through LED light strips. Compared with the smart glasses, the smart helmet reduced workers' cognitive burden and stress, kept their hands free so that work efficiency was maintained, and received lower overall workload scores, indicating a better user experience.
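Neither study's signal processing is described in this review, but beacon-based proximity warning is commonly built on received signal strength (RSSI). The sketch below assumes that approach: it converts RSSI to an approximate distance with the log-distance path-loss model and raises an alarm inside the 10 m range reported for the smart glasses. The calibration constants (`tx_power_dbm`, the RSSI at 1 m, and the path-loss exponent `n`) and the function names are hypothetical, not taken from [33] or [34].

```python
from statistics import median


def rssi_to_distance(rssi_dbm: float, tx_power_dbm: float = -59.0, n: float = 2.0) -> float:
    """Estimate distance (m) with the log-distance path-loss model.

    tx_power_dbm: hypothetical calibrated RSSI at 1 m from the beacon.
    n: path-loss exponent (about 2 in free space, larger in cluttered sites).
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * n))


def proximity_alarm(rssi_samples: list[float], threshold_m: float = 10.0, **model) -> bool:
    """Return True if the smoothed distance estimate is within threshold_m.

    The median of recent samples is used because individual RSSI readings
    fluctuate strongly (multipath, body shadowing).
    """
    distance_m = rssi_to_distance(median(rssi_samples), **model)
    return distance_m <= threshold_m
```

With the default constants, an RSSI of -79 dBm corresponds to 10 m; in a real deployment these constants would have to be calibrated for each site and beacon.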

Meanwhile, research on wearable devices and augmented reality for pedestrian safety in ordinary road environments has also deepened. Tong et al. developed an AR warning system that provides collision risk information to pedestrians through wearable glasses [36]. The system displays vehicle paths, conflict points, and collision times in real time and guides attention with elements such as sidebars and arrows, making it particularly suitable for pedestrians with obstructed views or low situational awareness. Wu et al. examined how matching distraction with warning modality affects behavior [37]: when the distraction and the warning used different sensory channels (visual and auditory), pedestrians crossed more cautiously, walking more slowly, scanning more widely, and responding faster to vehicles. Tran et al. refined research on visual prompts through VR simulation [38], comparing dynamic zebra crossings, green vehicle overlays, and their combination; pedestrians felt safer when multiple visual prompts were combined, although green vehicle overlays tended to be overlooked in multi-vehicle environments. Moving toward practical application, Clérigo et al. provided pedestrians with two types of visual feedback through AR headsets [39]: arrow-indicated collision warnings and virtual traffic lights. These AR assistance methods significantly improved pedestrians' sense of safety and reduced cognitive load, further confirming the value of AR technology in pedestrian crossing safety.
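The collision times displayed by systems such as Tong et al.'s [36] can be estimated in several ways. One simple option, assumed here rather than taken from the paper, treats the pedestrian and the vehicle as points moving at constant velocity and computes the time and distance of closest approach; a warning is raised if the predicted separation falls below a safety radius within a short horizon. The `Agent` type, the 2 m radius, and the 5 s horizon are illustrative choices.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    x: float   # position (m)
    y: float
    vx: float  # velocity (m/s)
    vy: float


def closest_approach(ped: Agent, veh: Agent) -> tuple[float, float]:
    """Return (time_s, distance_m) of closest approach under constant velocity."""
    rx, ry = veh.x - ped.x, veh.y - ped.y      # relative position
    wx, wy = veh.vx - ped.vx, veh.vy - ped.vy  # relative velocity
    w2 = wx * wx + wy * wy
    # With zero relative velocity the separation never changes; otherwise
    # minimise |r + w t| over t >= 0 (past approaches are ignored).
    t = 0.0 if w2 == 0 else max(0.0, -(rx * wx + ry * wy) / w2)
    return t, math.hypot(rx + wx * t, ry + wy * t)


def collision_warning(ped: Agent, veh: Agent, safety_radius_m: float = 2.0,
                      horizon_s: float = 5.0):
    """Return the time of closest approach (a proxy for time to collision)
    if the agents come within safety_radius_m inside horizon_s, else None."""
    t, d = closest_approach(ped, veh)
    return t if d <= safety_radius_m and t <= horizon_s else None
```

For example, a pedestrian walking north at 1.4 m/s and a vehicle 30 m to the west travelling east at 10 m/s meet at the conflict point after 3 s, so a warning is issued.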

Beyond visual feedback, auditory feedback and radar sensing also have important applications in pedestrian safety. Ito et al.'s study of an AirPods Pro-based auditory notification system showed high acceptance of voice notifications during outdoor walking [35], although the system needs to identify appropriate moments to deliver them. This echoes Wu et al.'s emphasis on how the choice of sensory channel affects pedestrian behavior [37]; together, these studies indicate that notification modality and timing both require careful consideration when designing pedestrian safety systems. Wang et al. developed a wearable device based on radar sensors [40] that provides 4–8 s of warning time through fuzzy evaluation and neural network algorithms, achieving 80% warning accuracy across 1000 scenarios and significantly improving the system's adaptability and safety assurance under various environmental conditions [40].
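Wang et al.'s fuzzy evaluation and neural network pipeline [40] is not reproduced here. As a rough illustration of how fuzzy evaluation can turn a time-to-collision (TTC) estimate into a graded warning, the sketch below defines three fuzzy sets over TTC with breakpoints at 4, 6, and 8 s (chosen only to mirror the 4–8 s warning window) and combines them with a zero-order Sugeno-style weighted average. The set shapes, rule outputs, and function names are assumptions.

```python
import math


def clamp(v: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, v))


def time_to_collision(range_m: float, closing_speed_mps: float) -> float:
    """Range divided by closing speed; infinite if the target is not approaching."""
    return range_m / closing_speed_mps if closing_speed_mps > 0 else math.inf


def fuzzy_risk(ttc_s: float) -> float:
    """Risk score in [0, 1] from three fuzzy TTC sets (hypothetical breakpoints)."""
    high = clamp((6.0 - ttc_s) / 2.0)  # fully 'high' at TTC <= 4 s, 0 at >= 6 s
    low = clamp((ttc_s - 6.0) / 2.0)   # 0 at TTC <= 6 s, fully 'low' at >= 8 s
    medium = 1.0 - high - low          # memberships sum to 1 (peak at 6 s)
    # Zero-order Sugeno rules: high -> 1.0, medium -> 0.5, low -> 0.0
    return 1.0 * high + 0.5 * medium + 0.0 * low
```

A target 50 m away closing at 10 m/s (TTC 5 s) scores 0.75, and a receding target scores 0; a threshold on this score would then decide when to warn.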

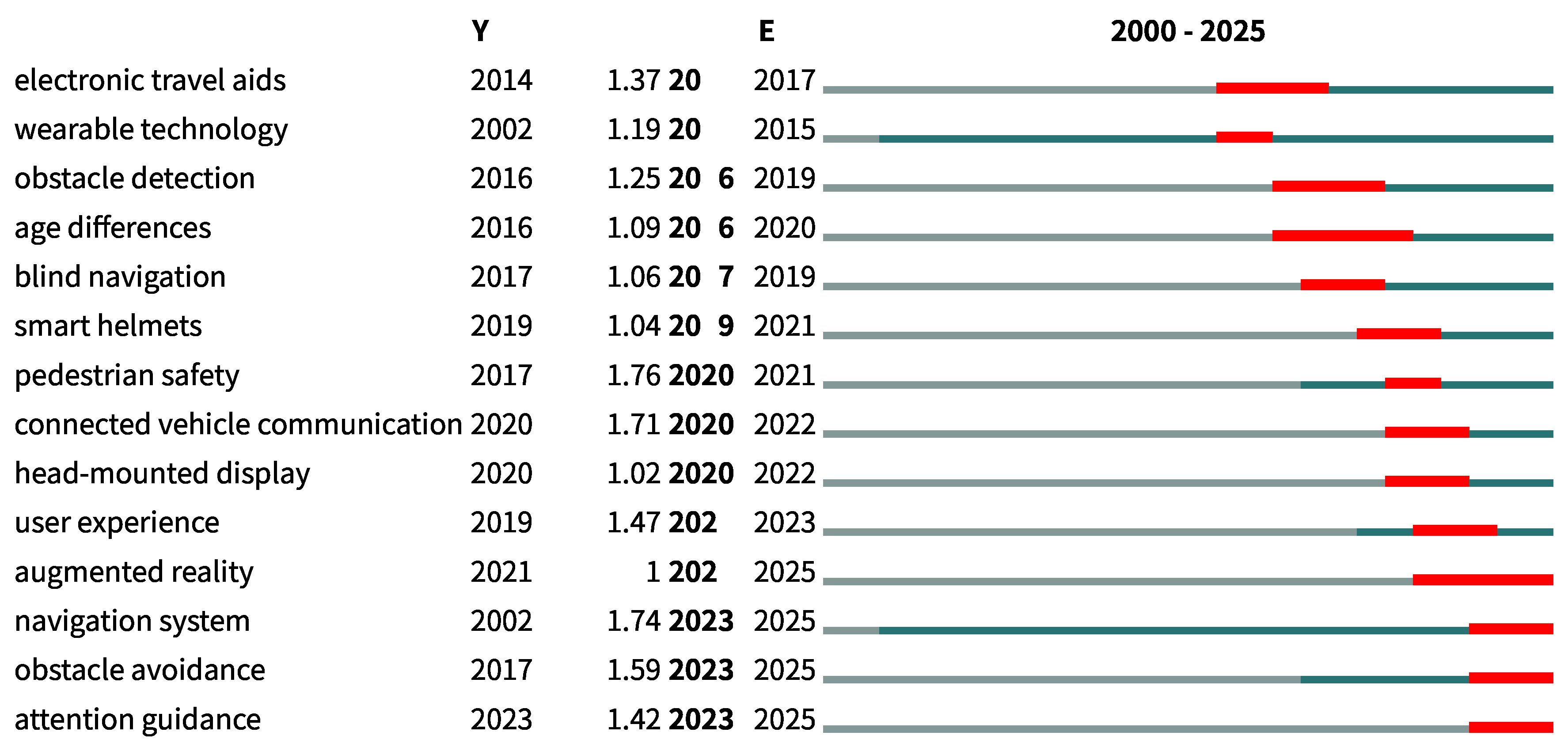

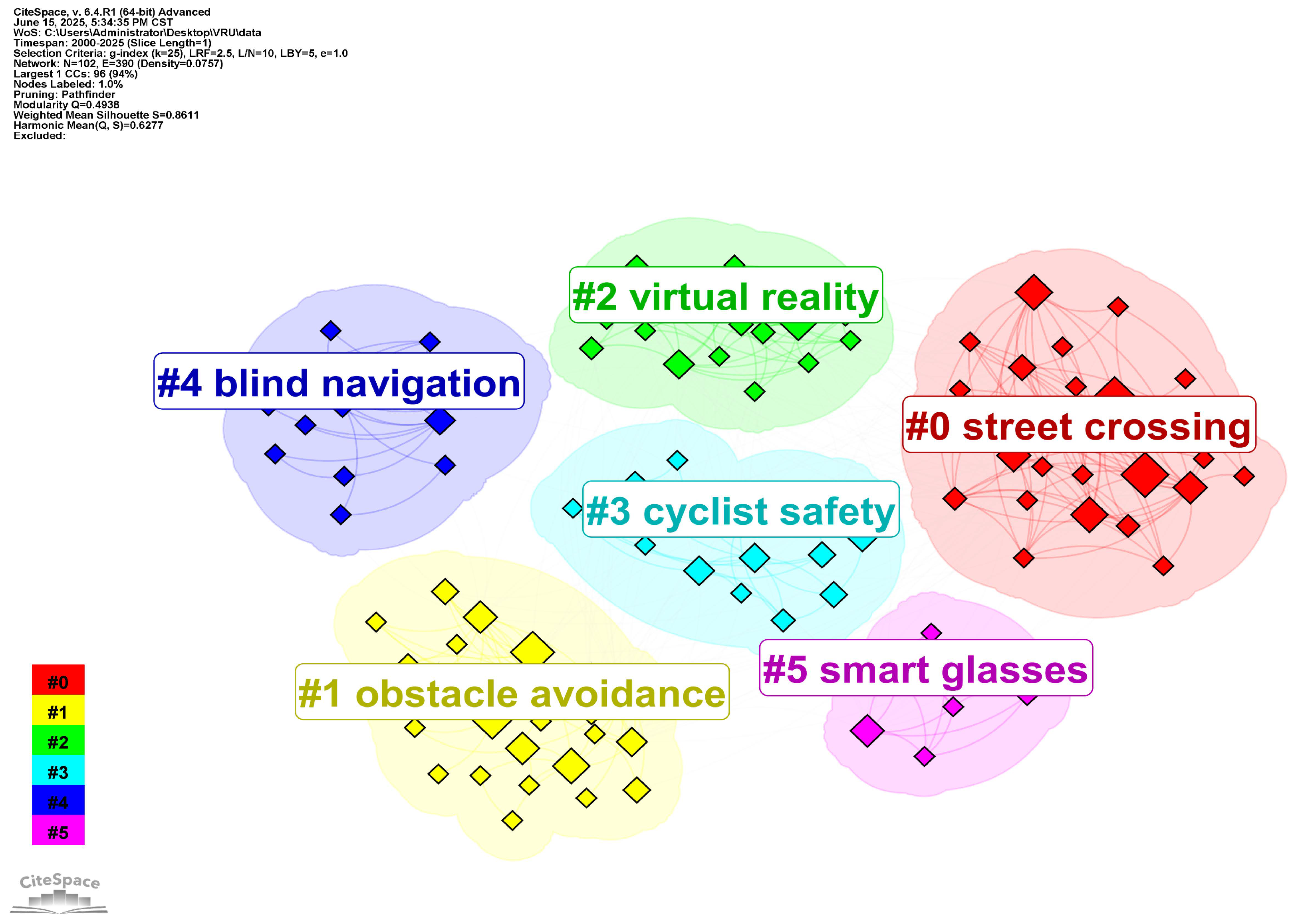

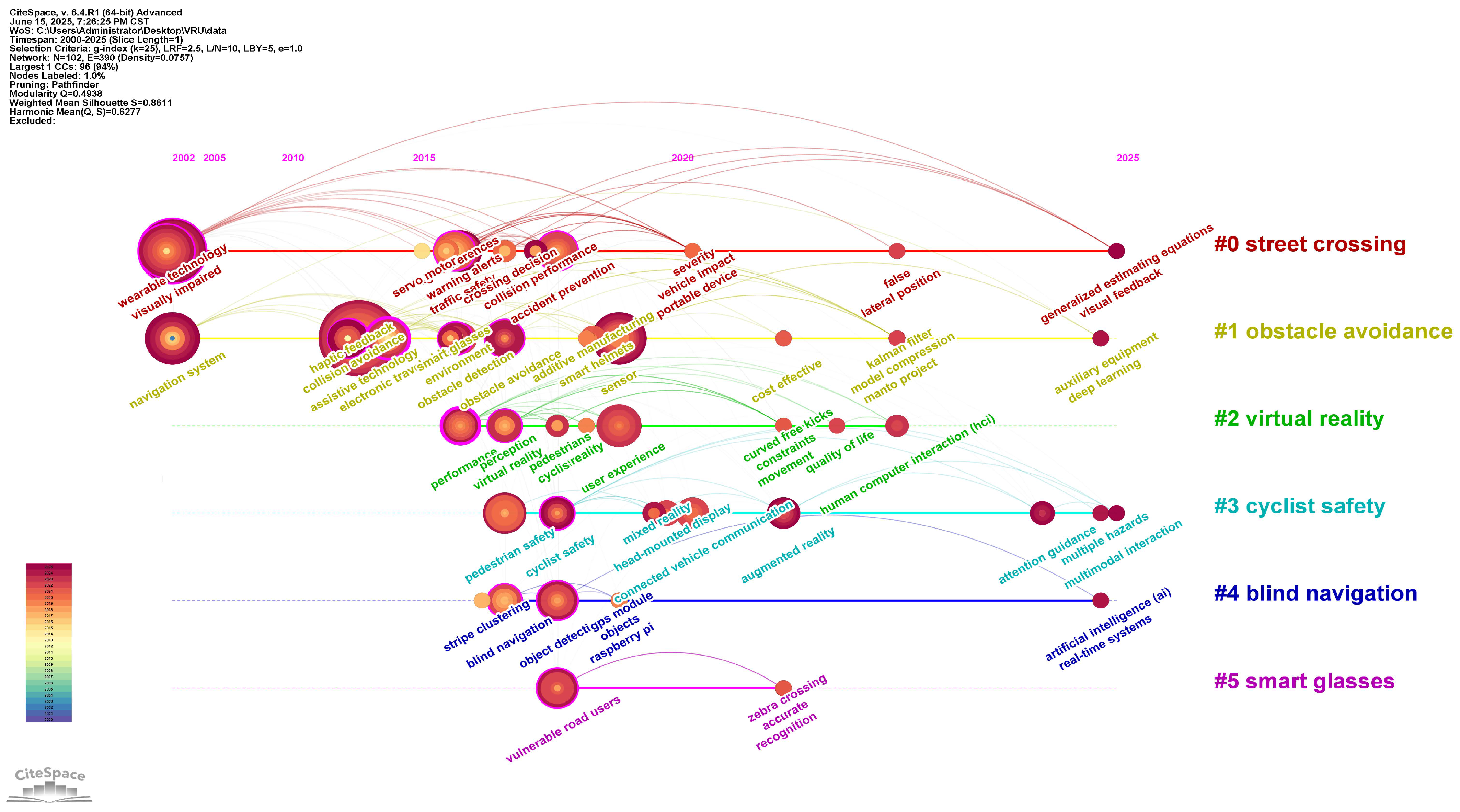

As Table 6 and Figure 9 show, research on pedestrian safety wearables has progressed from simple alerts through a single sensory channel to intelligent warning systems with multisensory integration, in line with the evolutionary trend from “pedestrian safety” toward “multimodal interaction” in the “#3 cyclist safety” cluster. These advanced solutions deliver warnings on a timescale of seconds before pedestrians make crossing decisions, dynamically allocate attention, and significantly reduce cognitive load, reflecting a critical shift from passive protection to active perception enhancement. Current research is advancing in two key directions. The first is enhancing long-term wearability and user compliance through lightweight hardware design and personalized interaction mechanisms. The second is validating both behavioral and physiological indicators in real-road environments or immersive mixed-reality settings, which resonates with the virtual reality application methods in the “#2 virtual reality” cluster. Together, these directions establish solid engineering foundations and human-factors support for large-scale deployment of smart transportation systems.

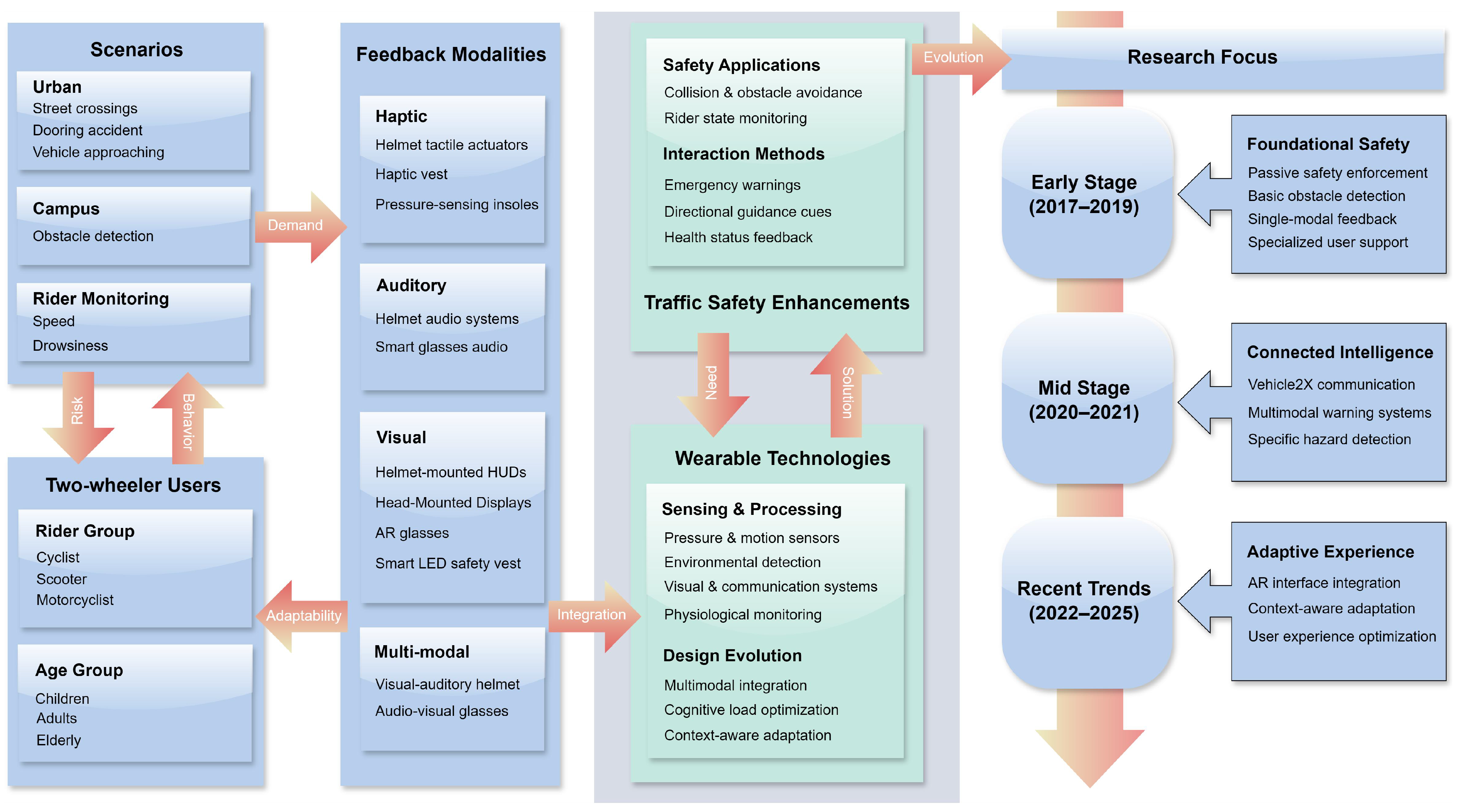

4.2. Wearable Technologies for Two-Wheeler Users

Research on enhancing traffic safety for two-wheeler users through wearable technology encompasses 16 studies (Table 7). These studies address various types of two-wheeler users, including cyclists [41,42,43,44,45,46,47,48,49,50], scooter riders [51,52,53], and motorcyclists [54,55,56]. Existing research on wearable devices for two-wheeler users can be primarily categorized as smart helmets [41,44,45,46,47,51,54,55,56], smart glasses and augmented reality displays [49,50,53], smart clothing, such as jerseys and vests [43,48,50], wearable sensor systems [42], and specialized footwear [52].

With advances in connected vehicle technology, research on cyclist safety systems has evolved from single-function to multi-function integration and from unimodal to multimodal interaction. Matviienko et al. found that unimodal signals are suitable for directional cues, whereas multimodal signals are more effective in emergencies, reducing children's reaction times and accident rates [41]. von Sawitzky et al. developed a helmet-mounted display system that warns against “dooring” accidents [44,46] and showed that combined audiovisual cues enhance cycling safety, with participants strongly preferring interactions that included auditory feedback [47]. Their subsequent research [49] emphasized that effective warning systems should be concise and intuitive and should display only critical hazard information. Ren et al.'s systematic evaluation [50] further supported this direction, finding that arrow visual cues were the most efficient unimodal interface, while combined visual-haptic feedback significantly improved user experience and reduced cognitive load.

To achieve these objectives, researchers have explored technical solutions for improving bicycle safety along two key dimensions: perception and interaction. In perception, Bieshaar et al. [42] and Bonilla et al. [43] focused on rider behavior recognition and physiological monitoring, respectively; the former achieved high-precision detection of starting movements using smart devices, while the latter developed an integrated solution combining physiological monitoring with a light signaling system. In interaction, Vo et al.'s haptic feedback helmet [45] demonstrated that head-based tactile feedback efficiently communicates directional and proximity information, while Mejia et al.'s smart vest [48] showed the value of adaptive visual feedback in low-visibility conditions. Together, these studies indicate that cycling safety systems must select information modalities flexibly according to context, keeping feedback simple in ordinary situations and adding multimodal redundancy in high-risk scenarios, while avoiding information overload so that cyclists can allocate attention appropriately. Overall, cycling safety research has progressed from purely technical validation toward user experience optimization, with priorities gradually shifting from performance metrics to usability and user acceptance in real-world cycling environments, reflecting the field's transition into a more mature application phase.
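The context-dependent modality selection described above can be expressed as a small rule table. The sketch below is hypothetical and is not taken from any cited system: it uses at most one channel in ordinary conditions, adds a second channel as risk rises (preferring haptic over auditory feedback when traffic noise would mask sound), and uses all channels in critical situations. The risk levels, the noise flag, and the channel names are illustrative.

```python
from enum import Enum


class Risk(Enum):
    LOW = 0
    ELEVATED = 1
    CRITICAL = 2


def select_modalities(risk: Risk, directional_cue: bool, noisy: bool) -> set[str]:
    """Return the feedback channels to use for one (hypothetical) warning event."""
    if risk is Risk.LOW:
        # Ordinary riding: at most one unobtrusive channel, e.g. a visual arrow
        # for a directional cue, and nothing otherwise to avoid overload.
        return {"visual"} if directional_cue else set()
    if risk is Risk.ELEVATED:
        # Add one redundant channel; haptic is preferred when noise masks audio.
        return {"visual", "haptic" if noisy else "auditory"}
    # Critical hazard: use every channel for maximum redundancy.
    return {"visual", "auditory", "haptic"}
```

Keeping the policy as explicit rules makes the trade-off between redundancy and overload easy to inspect and to tune in user studies.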

With the proliferation of electric micro-mobility, electric scooter safety technology has become a research focus, with solutions incorporating features from both bicycle and motorcycle safety systems. Hung et al. designed a helmet system for elderly riders that uses ultrasonic sensing [51] to detect surrounding obstacles and provide visual warnings prompting riders to slow down in hazardous situations. Gupta et al. developed pressure-sensitive insoles [52] that monitor rider balance in real time and provide intelligent path assistance, which is particularly beneficial for riders of varying proficiency navigating complex terrain. Matviienko et al. compared warning mechanisms in an augmented reality environment [53] and found that combined auditory and AR visual warnings yielded faster hazard reaction times and enhanced safety experiences, pointing to a direction for future electric scooter safety technology. This progression reveals a gradual shift in electric scooter safety technology from simple sensing to multidimensional interactive warnings, with deeper integration of sensing technologies and augmented reality promising more intuitive and efficient safety systems.
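A common way to quantify balance from insole pressure sensors is the center of pressure (CoP), the pressure-weighted mean of the sensor positions. The sketch below computes the CoP for each insole and the left/right load asymmetry, and flags imbalance when either exceeds a threshold. The four-sensor layout, the thresholds, and the function names are assumptions for illustration, not the design in [52].

```python
# Hypothetical 4-sensor insole layout: (x, y) in cm, x = lateral offset
# from the insole centreline, y = position along the foot (toes positive).
SENSOR_POSITIONS = [(-2.0, 20.0), (2.0, 20.0), (-2.0, 2.0), (2.0, 2.0)]


def center_of_pressure(pressures, positions=SENSOR_POSITIONS):
    """Pressure-weighted mean of sensor positions, returned as (x, y) in cm."""
    total = sum(pressures)
    if total <= 0:
        raise ValueError("no load detected on the insole")
    x = sum(p * px for p, (px, _) in zip(pressures, positions)) / total
    y = sum(p * py for p, (_, py) in zip(pressures, positions)) / total
    return x, y


def load_asymmetry(left, right):
    """Signed left/right load imbalance in [-1, 1]; 0 means evenly loaded."""
    l, r = sum(left), sum(right)
    if l + r <= 0:
        raise ValueError("no load detected on either insole")
    return (l - r) / (l + r)


def balance_alert(left, right, max_asymmetry=0.3, max_lateral_cm=1.5):
    """Flag imbalance when load shifts to one foot or a CoP drifts sideways."""
    if abs(load_asymmetry(left, right)) > max_asymmetry:
        return True
    return any(abs(center_of_pressure(foot)[0]) > max_lateral_cm
               for foot in (left, right))
```

Evenly loaded insoles give a centred CoP and no alert, whereas loading only the inner edge of one foot moves its CoP 2 cm sideways and triggers the alert.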

Similar safety enhancement principles have been applied to motorcycles, though with greater emphasis on rider state monitoring and proactive hazard detection. Mohd Rasli et al. developed a system that uses pressure sensors to verify proper helmet wearing as a prerequisite for engine ignition [54] and that also provides speed warnings. Muthiah et al. advanced helmet intelligence by using three-axis accelerometers to sense head movements and control headlight direction [55], while incorporating drowsiness detection that automatically triggers alerts when riders show signs of fatigue. Chang et al.'s system further integrated license plate recognition, using infrared transceivers and image processing to identify approaching large vehicles in real time with 75% daytime and 70% nighttime recognition accuracy [56] and issuing timely voice warnings that help riders address blind-spot hazards. These developments indicate that motorcycle safety technology is rapidly evolving toward multisensor fusion and intelligent warning systems, with the focus expanding from purely passive safety constraints to comprehensive safety systems that include rider physiological state monitoring and active identification of environmental hazards.
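Muthiah et al. [55] used three-axis accelerometers to sense head movement. When the head is roughly still, gravity dominates the accelerometer reading, so head tilt can be recovered with the standard formulas `pitch = atan2(-ax, sqrt(ay**2 + az**2))` and `roll = atan2(ay, az)`. The sketch below assumes x forward, y left, and z up, maps roll to a clamped headlight pan angle, and treats a sustained forward nod as a drowsiness cue. The mapping, thresholds, and function names are hypothetical. An accelerometer alone cannot observe turning the head left or right (yaw); that would require a gyroscope or magnetometer.

```python
import math


def tilt_degrees(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Return (pitch, roll) in degrees from a static accelerometer reading in g.

    Assumed axes: x forward, y left, z up (reads +1 g on z when level).
    Positive pitch = head tilted forward (chin down);
    positive roll = head tilted toward the right shoulder.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def headlight_pan(roll_deg: float, limit_deg: float = 30.0) -> float:
    """Hypothetical mapping: follow head roll, clamped to the lamp's range."""
    return max(-limit_deg, min(limit_deg, roll_deg))


def drowsy(pitch_series, sample_hz: int = 10, nod_deg: float = 25.0,
           min_duration_s: float = 2.0) -> bool:
    """True if the head stays tilted forward beyond nod_deg for min_duration_s."""
    needed = int(min_duration_s * sample_hz)
    run = 0
    for pitch in pitch_series:
        run = run + 1 if pitch > nod_deg else 0  # reset when the head lifts
        if run >= needed:
            return True
    return False
```

Requiring a sustained run of samples, rather than a single reading, avoids treating a brief glance at the instruments as a fatigue event.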

As Table 7 and Figure 10 show, research on wearable safety technologies for two-wheeler users has progressed from basic single-function alerts to intelligent systems that integrate multiple sensing and feedback capabilities, consistent with the rapid evolution of cycling safety research toward intelligent systems in the “#3 cyclist safety” cluster. These solutions select information modalities flexibly according to the scenario, keeping feedback simple and intuitive in ordinary situations while adding redundancy in high-risk contexts, thereby balancing safety assurance against cognitive load. Current research is advancing in two key directions. First, the emphasis is shifting from purely technical validation toward user experience optimization, focusing on usability and acceptance in practical settings; this reflects the movement from basic “cyclist safety” research toward “head-mounted display” and “connected vehicle communication.” Second, the integration of sensing technologies with intelligent algorithms is transforming passive safety constraints into active hazard identification and warning, echoing the emerging research priorities of “attention guidance” and “multimodal interaction” and giving riders more intuitive and efficient safety protection.

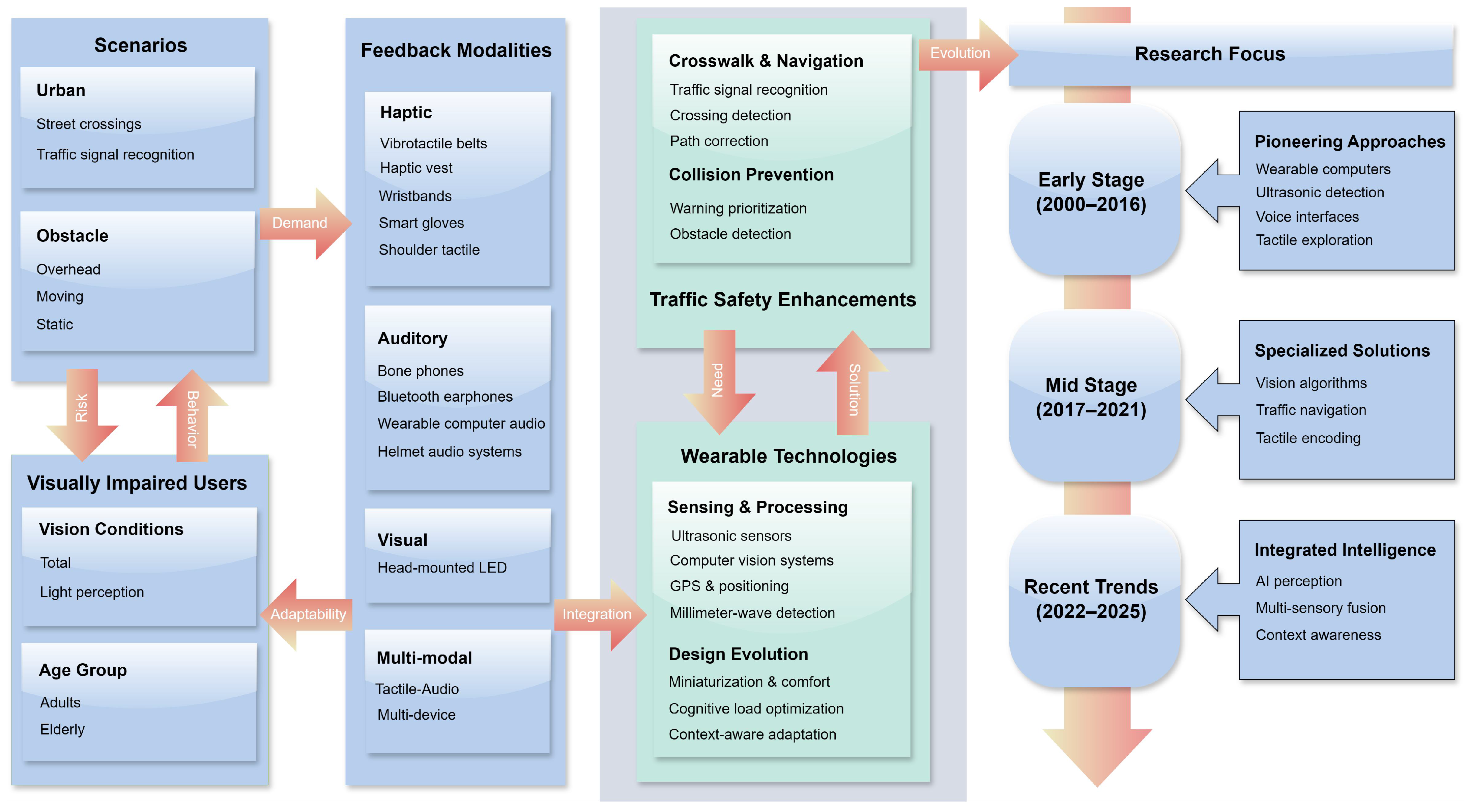

4.3. Wearable Assistive Devices for Visually Impaired Users

Visual impairment presents numerous challenges in daily activities, particularly in crossing streets, independent navigation, and obstacle avoidance. With the advancement of wearable technology, researchers have developed various assistive devices to enhance mobility safety and independence for visually impaired individuals. The literature review identifies 31 studies (Table 8 and Table 9), which can be categorized into smart glasses and head-mounted systems [6,29,57,58,59,60], tactile feedback and wearable vibration systems [8,61,62,63,64,65,66,67], chest/neck-mounted systems [5,68,69,70,71], multimodal sensing systems [72,73,74,75,76,77,78], and wearable computers with voice interfaces [79,80,81,82,83].

In the early development of assistive technologies for the visually impaired, wearable computer systems played a pioneering role. Ross et al. evaluated three interfaces (virtual sound beacons, digitized speech, and tactile tapping) in street crossing scenarios [61] and found that combining tactile cues with voice output most effectively helped visually impaired pedestrians cross streets safely. In subsequent research, they optimized this system [79], confirming that shoulder tactile feedback performed best in body orientation mode, significantly reducing users' deviation when crossing streets and establishing a theoretical foundation for multimodal interaction. Meanwhile, Helal et al. developed a navigation system that calculates optimal routes based on user preferences [80] and provides real-time guidance through voice prompts, significantly enhancing the independent mobility of visually impaired individuals in complex campus environments. To address the difficulty of recognizing navigation instructions in noisy environments, Wilson et al. replaced traditional voice commands with non-speech sound beacons [72], overcoming the limitations of spoken instructions in noise and improving the system's adaptability across environmental conditions. Taking a broader view of wearable solutions, Tapu et al. compared 12 different wearable technologies [70] and concluded that no single system could fully meet all needs and that future development should complement rather than replace the traditional white cane. Although these early systems pioneered various feedback modes, they were limited by the processing capabilities and sensor technologies of their time and faced practical challenges such as large device size and short battery life. These limitations prompted researchers to seek lighter solutions focused on specific needs, such as chest- and neck-mounted systems for upper-body protection.
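Route calculation based on user preferences, as in Helal et al.'s system [80], can be illustrated with Dijkstra's shortest-path algorithm over a walkway graph in which each edge costs its length plus penalties for features the user wishes to avoid. The feature tags (such as `"unsignalized_crossing"`), the penalty values, and the example graph are hypothetical; the cited system's actual routing method is not described in this review.

```python
import heapq

# Hypothetical campus graph: node -> [(neighbor, length_m, feature_tags)]
EXAMPLE_CAMPUS = {
    "library": [("quad", 100.0, set()),
                ("road_edge", 60.0, {"unsignalized_crossing"})],
    "quad": [("lab", 100.0, set())],
    "road_edge": [("lab", 60.0, set())],
    "lab": [],
}


def preferred_route(graph, start, goal, penalties):
    """Dijkstra search where edge cost = length + sum of the user's penalties
    for that edge's features. Returns (path, cost) or None if unreachable."""
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            break
        if d > dist[node]:
            continue  # stale entry superseded by a cheaper path
        for nbr, length_m, features in graph.get(node, []):
            cost = d + length_m + sum(penalties.get(f, 0.0) for f in features)
            if cost < dist.get(nbr, float("inf")):
                dist[nbr] = cost
                prev[nbr] = node
                heapq.heappush(heap, (cost, nbr))
    if goal not in dist:
        return None
    path = [goal]
    while path[-1] != start:  # walk predecessors back to the start
        path.append(prev[path[-1]])
    return path[::-1], dist[goal]
```

Without penalties the shorter route through the unsignalized crossing wins (120 m); with a 200 m penalty on that feature, the longer but safer route through the quad is chosen instead.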

Compared with these wearable computer systems, neck- and chest-mounted devices offered an innovative approach, particularly for upper-body obstacles that traditional white canes struggle to detect. Jameson et al. developed a head collision warning system using chest-mounted miniature ultrasonic sensors [68] that detects obstacles within a 1.5 m range, giving visually impaired users sufficient reaction time. Based on a similar concept, Villamizar et al. designed a necklace-like ultrasonic system [69] that provides environmental awareness through tactile feedback, reducing upper-body collisions during walking and significantly improving the safety of independent travel for visually impaired individuals. With advances in computer vision, the chest-mounted camera systems of Abobeah et al. [71] and Li et al. [75] not only detected obstacles but also identified traffic signals and pedestrian crossings, guiding users safely across intersections through voice instructions. Asiedu Asante et al.'s chest-mounted binocular camera system [5] further improved the accuracy and intelligence of obstacle detection, automatically prioritizing obstacles by distance and movement status to give users more precise warnings. These designs address the blind spots of white canes without interfering with cane use, and they show a technological evolution from simple ultrasonic sensing to the integration of computer vision and intelligent processing. Although these systems effectively addressed upper-body protection, they still relied mainly on voice prompts to convey environmental information, which is limiting in noisy environments; this prompted researchers to explore alternative perception channels such as tactile feedback.
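Ultrasonic systems such as Jameson et al.'s [68] measure the round-trip time of an echo, so distance follows from d = v·t/2, where v is the speed of sound (approximately 331.3 + 0.606·T m/s at air temperature T in °C). The sketch below adds a simple prioritization loosely inspired by Asiedu Asante et al.'s distance- and motion-based ranking [5]: obstacles inside the 1.5 m range are always reported, farther ones only if they will be reached within a short horizon, and the list is ordered by time to contact. The ranking rule, the 3 s horizon, and the `Obstacle` type are assumptions, not either paper's method.

```python
import math
from dataclasses import dataclass


def speed_of_sound(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in air (m/s), linear in temperature (deg C)."""
    return 331.3 + 0.606 * temp_c


def echo_to_distance(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Convert a round-trip echo time to one-way distance: d = v * t / 2."""
    return speed_of_sound(temp_c) * echo_time_s / 2.0


@dataclass
class Obstacle:
    label: str
    distance_m: float
    closing_speed_mps: float  # relative speed toward the user; <= 0 if not closing


def time_to_contact(ob: Obstacle) -> float:
    return ob.distance_m / ob.closing_speed_mps if ob.closing_speed_mps > 0 else math.inf


def prioritize(obstacles, alert_range_m: float = 1.5, horizon_s: float = 3.0):
    """Keep obstacles inside alert_range_m or reachable within horizon_s,
    ordered most urgent first (smallest time to contact, then nearest)."""
    relevant = [ob for ob in obstacles
                if ob.distance_m <= alert_range_m or time_to_contact(ob) <= horizon_s]
    return sorted(relevant, key=lambda ob: (time_to_contact(ob), ob.distance_m))
```

In this scheme a fast cyclist 6 m away can outrank a static wall 1.4 m away, because what matters for the warning order is how soon contact would occur.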

Among sensory feedback technologies, tactile feedback has become an essential component of navigation systems for the visually impaired because it is intuitive and reliable in noisy environments. Adame et al. developed a belt that integrates mobile phone vibration motors into a wearable device [62], conveying direction and distance through vibrations of varying position and intensity; the belt was simple to use and required almost no special training. Also using a waist-worn design, Hung et al.'s smart belt [67] drove vibration motors on the left and right sides at different frequencies to distinguish guidance directions from obstacle positions. For hand-based tactile feedback, Petsiuk et al.'s wrist-worn ultrasonic sensor system [63] enabled users to complete complex navigation tasks after brief training, while the smart gloves of Bhattacharya et al. [65] and Shen et al. [66] conveyed more detailed spatial information by stimulating different fingers; in tests, users identified obstacle positions with high accuracy and avoided collisions. Pundlik et al.'s dual wristband system provided collision warnings in three directions [64], significantly reducing the number of collisions and, when directional information was available, increasing the rate of correct navigation decisions. Regarding the choice of feedback mode, a comparative study by Ren et al. [8] found that although visual feedback (LED light strips) offered some advantages in decision-making efficiency, most visually impaired users preferred the comfort and intuitiveness of tactile feedback (vibration vests). These systems are particularly suitable for noisy environments and for situations in which users must listen to environmental sounds, giving visually impaired individuals a supplementary perception channel beyond hearing. Research is moving toward more refined vibration encoding that expresses richer spatial relationships through varied vibration patterns. Despite its strong performance in noisy environments, tactile feedback has limited information capacity and struggles to convey complex scene details, a limitation that has driven the development of more comprehensive head-mounted systems.
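The direction-and-distance encoding used by vibrotactile belts such as Adame et al.'s [62] can be sketched as two small mappings: the obstacle's bearing selects the nearest of several motors spaced evenly around the waist, and its distance sets the vibration intensity. The eight-motor layout, the 3 m range, the linear intensity ramp, and the function names are assumptions; real systems may instead vary vibration frequency, as Hung et al.'s belt does [67].

```python
def motor_index(bearing_deg: float, n_motors: int = 8) -> int:
    """Index of the motor nearest to the obstacle bearing.

    0 deg = straight ahead, angles increase clockwise; motor 0 faces forward
    and motors are spaced 360 / n_motors degrees apart (hypothetical layout).
    """
    sector = 360.0 / n_motors
    return int(((bearing_deg % 360.0) + sector / 2) // sector) % n_motors


def intensity(distance_m: float, max_range_m: float = 3.0) -> float:
    """Vibration intensity in [0, 1]: 1 at contact, 0 at or beyond max_range_m."""
    return max(0.0, min(1.0, 1.0 - distance_m / max_range_m))


def encode(bearing_deg: float, distance_m: float, n_motors: int = 8,
           max_range_m: float = 3.0) -> tuple[int, float]:
    """Return (motor_index, intensity) for one obstacle."""
    return motor_index(bearing_deg, n_motors), intensity(distance_m, max_range_m)
```

An obstacle 1.5 m to the user's right maps to motor 2 at half intensity; bearings just left of straight ahead (e.g. 350°) wrap around to motor 0.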

Beyond tactile feedback systems, smart glasses and head-mounted systems have gradually become mainstream in assistive research for the visually impaired because they are convenient to wear and align naturally with the user's field of view, and they show a development trend from single feedback modes to multimodal integration. Mohamed Kassim et al. designed an electronic glasses system that provides obstacle warnings through single-ear headphones or wrist vibrators [57], enabling users to identify obstacle positions correctly within a short time and effectively enhancing environmental perception. Kang et al.'s glasses system applied perspective projection geometry to measure changes in grid shape [81], provided directional auditory feedback through Bluetooth headphones, and achieved significant progress in obstacle detection accuracy and processing speed. Addressing the critical scenario of traffic safety, Cheng et al. developed specialized systems for crosswalk navigation [82] and intersection navigation [83] that use head-mounted cameras and bone conduction headphones to identify crosswalks and traffic light status in complex environments, improving the crossing safety of visually impaired pedestrians. Building on this foundation, Son et al. conducted two further studies [58,59] that improved the accuracy and fluency of crosswalk navigation by optimizing traffic light recognition algorithms and navigation instruction systems. Recent research shows a trend toward multisensory integration: Scalvini et al. combined 3D spatialized sound with helmet-mounted cameras [60] to create a more intuitive obstacle perception experience, and Gao et al.'s system, which combines smart glasses with a smartphone, integrated advanced computer vision and multisensor fusion, provided both voice and tactile feedback, and achieved a 100% obstacle avoidance rate in tests in complex indoor and outdoor environments [6]. Because they approximate natural visual perception, these systems are particularly suitable for complex street and traffic scenarios. The field is moving toward multisensory integration and adaptability across all scenarios, combining advanced sensors and intelligent algorithms to provide more intuitive and precise environmental understanding. However, even the most advanced single-type systems struggle to meet the needs of visually impaired users in every scenario, which has prompted researchers to explore multimodal systems that deeply integrate the strengths of different technologies.
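Scalvini et al. [60] used 3D spatialized sound. Full 3D spatialization usually relies on head-related transfer functions (HRTFs); the sketch below uses a much simpler stand-in, constant-power stereo panning plus inverse-distance attenuation, to show how an obstacle's azimuth and distance can be mapped to left and right ear gains. It is not the cited system's method, and the 1 m reference distance and the function name are assumptions.

```python
import math


def stereo_gains(azimuth_deg: float, distance_m: float,
                 ref_distance_m: float = 1.0) -> tuple[float, float]:
    """Constant-power pan plus inverse-distance attenuation.

    azimuth_deg: -90 (hard left) .. +90 (hard right); values outside are clamped.
    Returns (left_gain, right_gain); left**2 + right**2 equals the squared
    attenuation, so perceived loudness does not dip as a sound moves across.
    """
    az = max(-90.0, min(90.0, azimuth_deg))
    theta = (az + 90.0) / 180.0 * (math.pi / 2)  # maps azimuth to 0 .. pi/2
    # No boost for sources closer than the reference distance.
    attenuation = ref_distance_m / max(distance_m, ref_distance_m)
    return attenuation * math.cos(theta), attenuation * math.sin(theta)
```

Stereo panning conveys only left-right position; elevation and front-back cues are exactly what HRTF-based rendering adds.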

From the perspective of overall technological evolution, multimodal systems embody the convergent development direction of assistive technologies for the visually impaired by integrating different types of sensors and feedback methods. Kumar et al. developed a system combining head-mounted cameras, ultrasonic sensors, and voice output [73] that provides comprehensive obstacle information and positional navigation under various environmental conditions. Khan et al.'s auditory display framework achieved efficient obstacle recognition and scene understanding in outdoor environments through computer vision and multisensor data fusion [74]. Addressing the key challenge of street crossing, Chang et al. designed an assistive system integrating smart glasses, waist-mounted devices, and smart canes [29], forming a complete information collection and feedback loop that recognizes crosswalks in real time and guides users to maintain the correct path. As mobile computing became more capable, Meliones et al. used smartphones as the core processing unit [76], working with wearable cameras and servo motor-controlled ultrasonic sensors to achieve real-time obstacle detection and precise voice instructions. Joshi et al.'s AI-SenseVision system [78] provided more intelligent environmental perception by combining advanced object detection algorithms with ultrasonic sensing. On sensor optimization, Flórez Berdasco et al. provided important design guidance for multimodal systems, finding that high-gain antennas performed better in medium-distance obstacle detection, while low-gain antennas were more effective in short-distance imaging [77]; this finding is relevant for integrated systems that must serve different usage scenarios at the same time. By integrating multiple perception channels and feedback methods, these systems are particularly suitable for complex, changing urban environments and high-risk traffic scenarios. The field is evolving from simple superposition of technologies toward deep, intelligent integration, with the spread of mobile computing platforms such as smartphones providing a solid foundation for practical applications.
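Multimodal systems that combine, for example, cameras and ultrasonic sensors [73,78] must reconcile overlapping measurements of the same obstacle. A standard technique, assumed here and not attributed to any cited paper, is inverse-variance weighting: independent estimates of the same distance are averaged with weights 1/σ², so the more precise sensor dominates and the fused uncertainty is smaller than either input's. The sensor noise figures in the test values are hypothetical.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent estimates.

    estimates: iterable of (value, std_dev) pairs, e.g. distances in metres
    from a camera and an ultrasonic sensor. Returns (fused_value, fused_std_dev).
    """
    estimates = list(estimates)
    if not estimates or any(s <= 0 for _, s in estimates):
        raise ValueError("need at least one estimate with positive std_dev")
    weights = [1.0 / (s * s) for _, s in estimates]  # w_i = 1 / sigma_i^2
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return fused, (1.0 / total) ** 0.5  # fused sigma = 1 / sqrt(sum w_i)
```

For a camera reading of 2.0 m (σ = 0.4 m) and an ultrasonic reading of 1.8 m (σ = 0.1 m), the fused estimate is about 1.81 m with σ below 0.1 m, reflecting the ultrasonic sensor's higher precision at short range.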

As Table 8, Table 9, and Figure 11 show, research on wearable assistive technologies for visually impaired individuals has progressed from early basic obstacle detection and simple path navigation to intelligent systems capable of handling complex street scenarios and precisely identifying traffic signals. This trajectory is highly consistent with the evolution from basic research on “wearable technology” and “visually impaired” to “warning alerts” and “vehicle impact” in the “#0 street crossing” cluster. These solutions select perception modalities flexibly according to usage scenarios and environmental conditions, prioritizing tactile feedback in noisy environments while integrating auditory and multimodal feedback when complex information must be conveyed, thereby balancing information transmission against cognitive load. Current research is advancing in three key directions. First, research is shifting from technology-driven approaches toward a deeper orientation on user needs, designing differentiated solutions for totally blind, low-vision, and light-perception-capable users and evolving from basic assistance toward precise prevention. Second, miniaturization and intelligent algorithm optimization are improving device wearability and long-term use while reducing users' physical and cognitive burden. Third, integrating wearable assistive devices with smart city infrastructure enhances the synergies among environmental perception, information processing, and user feedback. This direction echoes the technological path from “object detection” toward “artificial intelligence” and “active system” in the “#4 blind navigation” cluster and provides more comprehensive technical support for the independent and safe mobility of visually impaired individuals.