Enhanced Localisation and Handwritten Digit Recognition Using ConvCARU

Abstract

1. Introduction

- New gradient-regularised GAN loss: A modified GAN loss function is proposed that stabilises adversarial training and improves feature extraction, thereby enhancing the visual fidelity of the predicted frames.

- Parameter-efficient ConvCARU gate: By replacing the traditional linear operations in the gating mechanisms with convolutional computations, our ConvCARU design captures extended spatio-temporal dependencies while reducing computational overhead.

- Ablation-verified performance gain: Systematic ablation studies show that each component of our approach, from the adaptive fusion of features to the decoupling of foreground and background information, contributes to the model's performance relative to state-of-the-art methods, as measured by SSIM, PSNR, and memory usage.

2. Related Work

3. Proposed Method

3.1. Feature Extraction from Frame Sequences

3.2. Enhanced Feature Extraction and Sequence Decoupling

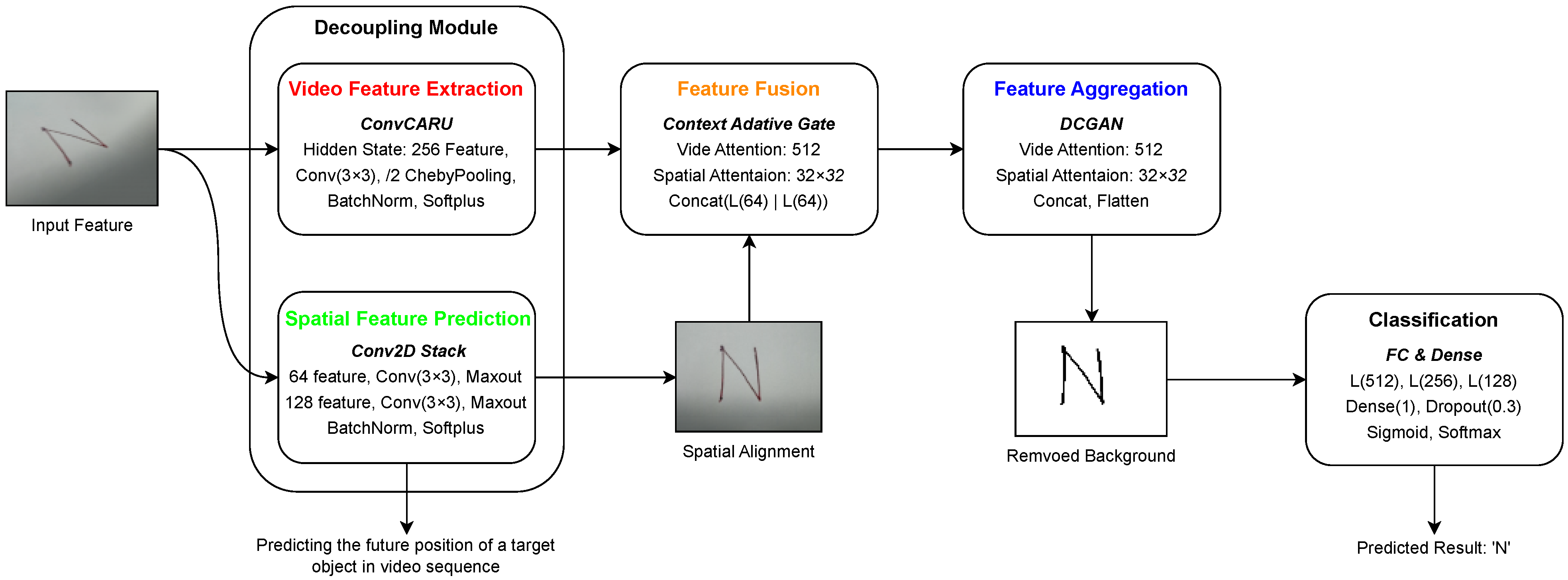

- Video feature extraction: The initial processing step uses ConvCARU to refine the input features through a series of convolutional operations, followed by a ChebyPooling layer [59], batch normalisation, and activation functions. This structure is intended to encode temporal dependencies across the video frames efficiently.

- Spatial feature prediction: A sequence of convolutional layers extracts spatial attributes using multi-channel feature maps, normalisation, and activation functions such as Maxout. The resulting spatial descriptors capture variations in the object’s position.

- Feature fusion: The extracted video and spatial features are integrated to improve contextual perception, using attention layers to prioritise the most relevant information from each source.

- Feature aggregation: A DCGAN-based aggregation unit processes the fused features to further refine structured patterns before classification.

- Final classification: The refined feature representations pass through fully connected (FC) layers with dropout regularisation and activation functions (e.g., Sigmoid or Softmax) to produce the final prediction.

- ConvCARU for temporal modelling: This component captures and propagates spatio-temporal dependencies using convolutional operations embedded in its gating mechanisms. Because the recurrent unit avoids FC layers, it preserves spatial information and uses fewer parameters.

- Modified DCGAN for background and foreground separation: Rather than combining several candidate pooling or segmentation strategies, we adopt a modified DCGAN that replaces conventional pooling with strided convolutions. This stabilises training and decouples the dynamic (foreground) features from the static (background) features.

- ChebyPooling for feature abstraction: We incorporate a ChebyPooling layer, which uses a Chebyshev polynomial formulation to make the pooling process more robust and to help retain spatial detail.
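The attention-based feature fusion step can be pictured as multi-head cross-attention between the two feature streams. The NumPy sketch below is illustrative only: treating the ConvCARU (video) features as queries and the spatial descriptors as keys and values, the residual connection, the random projection weights and the function name `cross_attention_fusion` are all assumptions. Only the use of eight attention heads is taken from the model configuration in Section 4.1, and the 64-channel toy size stands in for the 512-channel fusion component.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the maximum before exponentiating for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention_fusion(video_feats, spatial_feats, num_heads=8, seed=0):
    """Hypothetical attention-based fusion of video and spatial features.

    video_feats:   (Tq, d) tokens, e.g. flattened ConvCARU outputs (queries)
    spatial_feats: (Tk, d) tokens, e.g. flattened spatial descriptors (keys/values)
    Returns the fused (Tq, d) features and the (num_heads, Tq, Tk) attention weights.
    """
    d = video_feats.shape[-1]
    if d % num_heads != 0:
        raise ValueError("feature size must be divisible by num_heads")
    dh = d // num_heads
    rng = np.random.default_rng(seed)
    # Random projections stand in for learned weights in this sketch.
    w_q, w_k, w_v, w_o = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4))

    def split_heads(x):  # (T, d) -> (num_heads, T, dh)
        return x.reshape(x.shape[0], num_heads, dh).transpose(1, 0, 2)

    q = split_heads(video_feats @ w_q)
    k = split_heads(spatial_feats @ w_k)
    v = split_heads(spatial_feats @ w_v)
    # Scaled dot-product attention: each video token weights every spatial token.
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh), axis=-1)
    heads = weights @ v                                # (num_heads, Tq, dh)
    merged = heads.transpose(1, 0, 2).reshape(-1, d)   # (Tq, d)
    # Residual connection keeps the original video features in the output.
    return video_feats + merged @ w_o, weights


rng = np.random.default_rng(1)
video = rng.standard_normal((10, 64))     # 10 video tokens, 64 channels (toy size)
spatial = rng.standard_normal((49, 64))   # a 7x7 spatial map flattened to 49 tokens
fused, attn = cross_attention_fusion(video, spatial, num_heads=8)
print(fused.shape, attn.shape)  # (10, 64) (8, 10, 49)
```

Each row of the attention weights sums to one, so every fused video token is the original token plus a learned mixture of spatial descriptors; in a trained model the projections would be learned rather than random.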

3.3. Feature Extraction and Adaptive Fusion

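- $x^{(t)} = W_{vn} v^{(t)} + b_{vn}$ (6a)

- The input $v^{(t)}$ is projected by a linear layer. The result $x^{(t)}$ is used to form the next hidden state and is also passed to the content-adaptive gate. If the previous hidden state is unavailable (at the first step), $x^{(t)}$ directly becomes the hidden state.

- $n^{(t)} = \tanh\left(W_{hn} h^{(t)} + b_{hn} + x^{(t)}\right)$ (6b)

- A linear layer transforms the previous hidden state $h^{(t)}$, the output of (6a) is added, and the sum is passed through the tanh activation function to give the candidate state $n^{(t)}$, which integrates the current input with the history.

- $z^{(t)} = \sigma\left(W_{hz} h^{(t)} + b_{hz} + W_{vz} v^{(t)} + b_{vz}\right)$ (6c)

- The update gate $z^{(t)}$, where $\sigma$ is the logistic sigmoid, controls the hidden-state transition by combining the current input with the previous hidden state. This captures relationships across the content but, on its own, handles long-term dependencies poorly.

- $l^{(t)} = \sigma\left(x^{(t)}\right) \odot z^{(t)}$ (6d)

- To address long-term dependencies, the content-adaptive gate $l^{(t)}$ scales the update gate by a function of the current input alone, where $\odot$ denotes element-wise multiplication. It can therefore boost or suppress each input according to its content, supporting accurate predictions during RNN decoding.

- $h^{(t+1)} = \left(1 - l^{(t)}\right) \odot h^{(t)} + l^{(t)} \odot n^{(t)}$ (6e)

- The next hidden state $h^{(t+1)}$ is a linear interpolation between the previous hidden state and the candidate state, weighted element-wise by $l^{(t)}$.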

3.4. Feature Aggregation and DCGAN

Role of DCGAN in Feature Aggregation

3.5. Integration with Attention Mechanism

4. Experimental Results and Discussion

4.1. Training Configuration and Strategy

- Training configuration: The model is trained with the Adam optimiser [63] using a learning rate of 0.001, $\beta_1 = 0.5$ and $\beta_2 = 0.999$. The batch size is 32 per GPU, and training runs for 100 epochs. The learning rate follows a cosine annealing schedule, preceded by a warm-up phase that stabilises the early stages of training.

- Model architecture: The architecture uses ConvCARU layers with hidden dimensions of 128 and 256 for sequential processing, an eight-head attention mechanism that focuses the model on relevant features, and a feature fusion component with 512 channels for multi-level information integration. The model has 2.8 million parameters in total.

- Data augmentation: To improve robustness, training images undergo random rotation, random scaling by a factor between 0.9 and 1.1, random translation of up to 10%, and additive Gaussian noise with a standard deviation of 0.01 to simulate real-world uncertainty. These augmentations make the model less sensitive to minor distortions and variations in the input.
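The optimisation schedule and augmentation settings above can be summarised in a small sketch. The base learning rate, the Adam betas, the epoch count and the scaling, translation and noise settings come from the text; the five-epoch linear warm-up, the zero floor of the cosine schedule and the per-epoch (rather than per-step) updates are assumptions, and the rotation range is a caller-supplied placeholder because its value is not given. The function names are hypothetical.

```python
import math
import random

BASE_LR = 1e-3          # from the text
BETAS = (0.5, 0.999)    # Adam beta_1 and beta_2, from the text (used by the optimiser, not here)
EPOCHS = 100            # from the text
WARMUP_EPOCHS = 5       # assumption: the warm-up length is not stated


def learning_rate(epoch, base_lr=BASE_LR, warmup=WARMUP_EPOCHS, total=EPOCHS, min_lr=0.0):
    """Linear warm-up to base_lr, then cosine annealing towards min_lr (per epoch)."""
    if epoch < warmup:
        return base_lr * (epoch + 1) / warmup
    progress = (epoch - warmup) / max(1, total - warmup)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def sample_augmentation(rng, max_rotation_deg):
    """Draw one set of augmentation parameters for a training image.

    max_rotation_deg is a caller-supplied placeholder: the rotation range is not given.
    """
    return {
        "rotation_deg": rng.uniform(-max_rotation_deg, max_rotation_deg),
        "scale": rng.uniform(0.9, 1.1),                                      # scaling 0.9 to 1.1
        "translate_frac": (rng.uniform(-0.1, 0.1), rng.uniform(-0.1, 0.1)),  # up to 10%
        "noise_std": 0.01,                                                   # Gaussian noise std
    }


for epoch in (0, 4, 5, 50, 99):
    print(epoch, f"{learning_rate(epoch):.2e}")
print(sample_augmentation(random.Random(0), max_rotation_deg=10.0))  # 10.0 is a placeholder
```

In PyTorch, a comparable recipe could be assembled from `torch.optim.Adam(model.parameters(), lr=1e-3, betas=(0.5, 0.999))` with `torch.optim.lr_scheduler.LinearLR` for the warm-up and `CosineAnnealingLR` for the decay, chained by `SequentialLR`.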

4.2. Performance Comparison

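$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)},$$

where

- $\mu_x$ and $\mu_y$ are the mean values of images $x$ and $y$,

- $\sigma_x^2$ and $\sigma_y^2$ are the variances,

- $\sigma_{xy}$ is the covariance,

- $C_1$ and $C_2$ are small constants that stabilise the division when the denominator is close to zero.

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{MAX_I^2}{\mathrm{MSE}}\right),$$

where

- $MAX_I$ is the maximum possible pixel value,

- $\mathrm{MSE}$ is the Mean Squared Error.

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(x_i - y_i\right)^2,$$

where

- $x_i$ and $y_i$ are pixel values of the ground-truth and predicted images,

- $N$ is the number of pixels.

$$\mathrm{FVD} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right),$$

where

- $\mu_r$ and $\mu_g$ are the mean feature vectors of real and generated videos,

- $\Sigma_r$ and $\Sigma_g$ are the corresponding covariance matrices,

- $\mathrm{Tr}$ denotes the trace operation.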
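The four evaluation metrics can be computed with NumPy as sketched below. This is a simplified sketch: `ssim_global` evaluates SSIM once over the whole image with the common constants $K_1 = 0.01$ and $K_2 = 0.03$, whereas most SSIM implementations average over local windows, and `frechet_distance` expects feature vectors that, for FVD, would come from a pretrained video network such as I3D (feature extraction is not shown). Values from this sketch may therefore differ from those produced by standard toolkits.

```python
import numpy as np


def mse(x, y):
    # Mean of squared pixel differences over all N pixels.
    return float(np.mean((np.asarray(x, float) - np.asarray(y, float)) ** 2))


def psnr(x, y, max_val=1.0):
    # 10 * log10(MAX_I^2 / MSE); infinite for identical images.
    m = mse(x, y)
    return float("inf") if m == 0 else float(10.0 * np.log10(max_val ** 2 / m))


def ssim_global(x, y, max_val=1.0, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (no sliding window)."""
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    c1, c2 = (k1 * max_val) ** 2, (k2 * max_val) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float((2 * mx * my + c1) * (2 * cov + c2)
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))


def frechet_distance(feats_real, feats_gen):
    """Fréchet distance between Gaussians fitted to two feature sets (rows = samples)."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((Sigma_r Sigma_g)^(1/2)) equals the sum of square roots of the eigenvalues of
    # Sigma_r Sigma_g, which are real and non-negative up to numerical error.
    eig = np.linalg.eigvals(cov_r @ cov_g)
    tr_sqrt = np.sqrt(np.clip(eig.real, 0.0, None)).sum()
    return float(((mu_r - mu_g) ** 2).sum() + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_sqrt)


rng = np.random.default_rng(0)
truth = rng.random((64, 64))
pred = np.clip(truth + 0.05 * rng.standard_normal(truth.shape), 0.0, 1.0)
print(mse(truth, pred), psnr(truth, pred), ssim_global(truth, pred))
real = rng.standard_normal((256, 16))          # stand-in for real-video features
fake = rng.standard_normal((256, 16)) + 0.5    # stand-in for generated-video features
print(frechet_distance(real, fake))
```

The eigenvalue route avoids a dedicated matrix square root: $\Sigma_r \Sigma_g$ is similar to the positive semi-definite matrix $\Sigma_r^{1/2} \Sigma_g \Sigma_r^{1/2}$, so only its trace of square roots is needed.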

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sharma, V.; Gupta, M.; Kumar, A.; Mishra, D. Video Processing Using Deep Learning Techniques: A Systematic Literature Review. IEEE Access 2021, 9, 139489–139507.

- Jiao, L.; Zhang, R.; Liu, F.; Yang, S.; Hou, B.; Li, L.; Tang, X. New Generation Deep Learning for Video Object Detection: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 3195–3215.

- Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A review of convolutional neural networks in computer vision. Artif. Intell. Rev. 2024, 57, 99.

- Moetesum, M.; Diaz, M.; Masroor, U.; Siddiqi, I.; Vessio, G. A survey of visual and procedural handwriting analysis for neuropsychological assessment. Neural Comput. Appl. 2022, 34, 9561–9578.

- Haddad, L.E.; Hanoune, M.; Ettaoufik, A. Computer Vision with Deep Learning for Human Activity Recognition: Features Representation. In Engineering Applications of Artificial Intelligence; Springer Nature: Cham, Switzerland, 2024; pp. 41–66.

- Diaz, M.; Moetesum, M.; Siddiqi, I.; Vessio, G. Sequence-based dynamic handwriting analysis for Parkinson’s disease detection with one-dimensional convolutions and BiGRUs. Expert Syst. Appl. 2021, 168, 114405.

- Hasan, T.; Rahim, M.A.; Shin, J.; Nishimura, S.; Hossain, M.N. Dynamics of Digital Pen-Tablet: Handwriting Analysis for Person Identification Using Machine and Deep Learning Techniques. IEEE Access 2024, 12, 8154–8177.

- Tse, R.; Monti, L.; Im, M.; Mirri, S.; Pau, G.; Salomoni, P. DeepClass: Edge based class occupancy detection aided by deep learning and image cropping. In Proceedings of the Twelfth International Conference on Digital Image Processing (ICDIP 2020), Osaka, Japan, 19–22 May 2020; Fujita, H., Jiang, X., Eds.; SPIE: Bellingham, WA, USA, 2020; p. 13.

- Sánchez-DelaCruz, E.; Loeza-Mejía, C.I. Importance and challenges of handwriting recognition with the implementation of machine learning techniques: A survey. Appl. Intell. 2024, 54, 6444–6465.

- AlKendi, W.; Gechter, F.; Heyberger, L.; Guyeux, C. Advancements and Challenges in Handwritten Text Recognition: A Comprehensive Survey. J. Imaging 2024, 10, 18.

- Huang, X.; Chan, K.H.; Wu, W.; Sheng, H.; Ke, W. Fusion of Multi-Modal Features to Enhance Dense Video Caption. Sensors 2023, 23, 5565.

- Gao, Z.; Tan, C.; Wu, L.; Li, S.Z. SimVP: Simpler yet Better Video Prediction. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 3160–3170.

- Tan, C.; Gao, Z.; Li, S.; Li, S.Z. SimVPv2: Towards Simple yet Powerful Spatiotemporal Predictive Learning. IEEE Trans. Multimed. 2025, 1–15.

- Hu, X.; Huang, Z.; Huang, A.; Xu, J.; Zhou, S. A Dynamic Multi-Scale Voxel Flow Network for Video Prediction. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 6121–6131.

- Barrere, K.; Soullard, Y.; Lemaitre, A.; Coüasnon, B. Training transformer architectures on few annotated data: An application to historical handwritten text recognition. Int. J. Doc. Anal. Recognit. (IJDAR) 2024, 27, 553–566.

- Ahmed, S.F.; Alam, M.S.B.; Hassan, M.; Rozbu, M.R.; Ishtiak, T.; Rafa, N.; Mofijur, M.; Shawkat Ali, A.B.M.; Gandomi, A.H. Deep learning modelling techniques: Current progress, applications, advantages, and challenges. Artif. Intell. Rev. 2023, 56, 13521–13617.

- Im, S.K.; Chan, K.H. More Probability Estimators for CABAC in Versatile Video Coding. In Proceedings of the 2020 IEEE 5th International Conference on Signal and Image Processing (ICSIP), Nanjing, China, 23–25 October 2020; pp. 366–370.

- Chan, K.H.; Ke, W.; Im, S.K. CARU: A Content-Adaptive Recurrent Unit for the Transition of Hidden State in NLP. In Neural Information Processing; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 693–703.

- Liu, B.; Lv, J.; Fan, X.; Luo, J.; Zou, T. Application of an Improved DCGAN for Image Generation. Mob. Inf. Syst. 2022, 2022, 9005552.

- Vilchis, C.; Perez-Guerrero, C.; Mendez-Ruiz, M.; Gonzalez-Mendoza, M. A survey on the pipeline evolution of facial capture and tracking for digital humans. Multimed. Syst. 2023, 29, 1917–1940.

- Aldausari, N.; Sowmya, A.; Marcus, N.; Mohammadi, G. Video Generative Adversarial Networks: A Review. ACM Comput. Surv. 2022, 55, 1–25.

- Ometov, A.; Shubina, V.; Klus, L.; Skibińska, J.; Saafi, S.; Pascacio, P.; Flueratoru, L.; Gaibor, D.Q.; Chukhno, N.; Chukhno, O.; et al. A Survey on Wearable Technology: History, State-of-the-Art and Current Challenges. Comput. Netw. 2021, 193, 108074.

- Edriss, S.; Romagnoli, C.; Caprioli, L.; Zanela, A.; Panichi, E.; Campoli, F.; Padua, E.; Annino, G.; Bonaiuto, V. The Role of Emergent Technologies in the Dynamic and Kinematic Assessment of Human Movement in Sport and Clinical Applications. Appl. Sci. 2024, 14, 1012.

- Pinheiro Cinelli, L.; Araújo Marins, M.; Barros da Silva, E.A.; Lima Netto, S. Variational Autoencoder. In Variational Methods for Machine Learning with Applications to Deep Networks; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 111–149.

- Zhang, Y.; Zhang, Y.; Yan, D.; Deng, S.; Yang, Y. Revisiting Graph-based Recommender Systems from the Perspective of Variational Auto-Encoder. ACM Trans. Inf. Syst. 2023, 41, 1–28.

- Wang, T.; Hou, B.; Li, J.; Shi, P.; Zhang, B.; Snoussi, H. TASTA: Text-Assisted Spatial and Temporal Attention Network for Video Question Answering. Adv. Intell. Syst. 2023, 5, 2200131.

- Wang, Z.; Zhang, Z.; Qi, W.; Yang, F.; Xu, J. FreqGAN: Infrared and Visible Image Fusion via Unified Frequency Adversarial Learning. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 728–740.

- Navidan, H.; Moshiri, P.F.; Nabati, M.; Shahbazian, R.; Ghorashi, S.A.; Shah-Mansouri, V.; Windridge, D. Generative Adversarial Networks (GANs) in networking: A comprehensive survey & evaluation. Comput. Netw. 2021, 194, 108149.

- Khoramnejad, F.; Hossain, E. Generative AI for the Optimization of Next-Generation Wireless Networks: Basics, State-of-the-Art, and Open Challenges. IEEE Commun. Surv. Tutor. 2025, 1.

- Chan, K.H.; Im, S.K. Using Four Hypothesis Probability Estimators for CABAC in Versatile Video Coding. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–17.

- Im, S.K.; Chan, K.H. Multi-lambda search for improved rate-distortion optimization of H.265/HEVC. In Proceedings of the 2015 10th International Conference on Information, Communications and Signal Processing (ICICS), Singapore, 2–4 December 2015; pp. 1–5.

- Xing, Z.; Feng, Q.; Chen, H.; Dai, Q.; Hu, H.; Xu, H.; Wu, Z.; Jiang, Y.G. A Survey on Video Diffusion Models. ACM Comput. Surv. 2024, 57, 1–42.

- Liu, Y.; Feng, S.; Liu, S.; Zhan, Y.; Tao, D.; Chen, Z.; Chen, Z. Sample-Cohesive Pose-Aware Contrastive Facial Representation Learning. Int. J. Comput. Vis. 2025, 133, 3727–3745.

- Li, S.; Yang, J.; Bao, H.; Xia, D.; Zhang, Q.; Wang, G. Cost-Sensitive Neighborhood Granularity Selection for Hierarchical Classification. IEEE Trans. Knowl. Data Eng. 2025, 1–12.

- Archana, R.; Jeevaraj, P.S.E. Deep learning models for digital image processing: A review. Artif. Intell. Rev. 2024, 57, 11.

- Xie, L.; Luo, Y.; Su, S.F.; Wei, H. Graph Regularized Structured Output SVM for Early Expression Detection with Online Extension. IEEE Trans. Cybern. 2023, 53, 1419–1431.

- Kuba, R.; Rahimi, S.; Smith, G.; Shute, V.; Dai, C.P. Using the first principles of instruction and multimedia learning principles to design and develop in-game learning support videos. Educ. Technol. Res. Dev. 2021, 69, 1201–1220.

- Chan, K.H. Using admittance spectroscopy to quantify transport properties of P3HT thin films. J. Photonics Energy 2011, 1, 011112.

- Ehrhardt, J.; Wilms, M. Autoencoders and variational autoencoders in medical image analysis. In Biomedical Image Synthesis and Simulation; Elsevier: Amsterdam, The Netherlands, 2022; pp. 129–162.

- Wang, J.Z.; Zhao, S.; Wu, C.; Adams, R.B.; Newman, M.G.; Shafir, T.; Tsachor, R. Unlocking the Emotional World of Visual Media: An Overview of the Science, Research, and Impact of Understanding Emotion. Proc. IEEE 2023, 111, 1236–1286.

- Huang, T.; Ru, S.R.; Zeng, Z.H.; Zhang, L. Research on motion recognition algorithm based on bag-of-words model. Microsyst. Technol. 2019, 27, 1647–1654.

- Xie, L.; Hang, F.; Guo, W.; Lv, Y.; Ou, W.; Vignesh, C.C. Machine learning-based security active defence model - security active defence technology in the communication network. Int. J. Internet Protoc. Technol. 2022, 15, 169.

- Liu, Y.; Liu, B. A modified uncertain maximum likelihood estimation with applications in uncertain statistics. Commun. Stat. Theory Methods 2023, 53, 6649–6670.

- Cheng, K.; Xue, X.; Chan, K. Zero emission electric vessel development. In Proceedings of the 2015 6th International Conference on Power Electronics Systems and Applications (PESA), Hong Kong, China, 15–17 December 2015; pp. 1–5.

- Im, S.K.; Pearmain, A.J. Unequal error protection with the H.264 flexible macroblock ordering. In Proceedings of the Visual Communications and Image Processing 2005, Beijing, China, 12–15 July 2005; p. 110.

- Xu, J.; Park, S.H.; Zhang, X. A Temporally Irreversible Visual Attention Model Inspired by Motion Sensitive Neurons. IEEE Trans. Ind. Inform. 2020, 16, 595–605.

- Chan, K.; Im, S. Sentiment analysis by using Naïve-Bayes classifier with stacked CARU. Electron. Lett. 2022, 58, 411–413.

- Li, L.; Chang, J.; Vakanski, A.; Wang, Y.; Yao, T.; Xian, M. Uncertainty quantification in multivariable regression for material property prediction with Bayesian neural networks. Sci. Rep. 2024, 14, 10543.

- Gomes, A.; Ke, W.; Im, S.K.; Siu, A.; Mendes, A.J.; Marcelino, M.J. A teacher’s view about introductory programming teaching and learning—Portuguese and Macanese perspectives. In Proceedings of the 2017 IEEE Frontiers in Education Conference (FIE), Indianapolis, IN, USA, 18–21 October 2017; pp. 1–8.

- Zahari, Z.; Shafie, N.A.B.; Razak, N.B.A.; Al-Sharqi, F.A.; Al-Quran, A.A.; Awad, A.M.A.B. Enhancing Digital Social Innovation Ecosystems: A Pythagorean Neutrosophic Bonferroni Mean (PNBM)-DEMATEL Analysis of Barriers Factors for Young Entrepreneurs. Int. J. Neutrosophic Sci. 2024, 23, 170–180.

- Liu, B.; Lam, C.T.; Ng, B.K.; Yuan, X.; Im, S.K. A Graph-Based Framework for Traffic Forecasting and Congestion Detection Using Online Images From Multiple Cameras. IEEE Access 2024, 12, 3756–3767.

- Benbakhti, B.; Kalna, K.; Chan, K.; Towie, E.; Hellings, G.; Eneman, G.; De Meyer, K.; Meuris, M.; Asenov, A. Design and analysis of the As implant-free quantum-well device structure. Microelectron. Eng. 2011, 88, 358–361.

- Liu, Q.; Netrapalli, P.; Szepesvari, C.; Jin, C. Optimistic MLE: A Generic Model-Based Algorithm for Partially Observable Sequential Decision Making. In Proceedings of the 55th Annual ACM Symposium on Theory of Computing (STOC ’23), Orlando, FL, USA, 20–23 June 2023; pp. 363–376.

- Li, D.; Hu, J.; Wang, C.; Li, X.; She, Q.; Zhu, L.; Zhang, T.; Chen, Q. Involution: Inverting the Inherence of Convolution for Visual Recognition. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

- Li, Y.; Wang, Y.; Yang, X.; Im, S.K. Speech emotion recognition based on Graph-LSTM neural network. EURASIP J. Audio Speech Music Process. 2023, 2023, 40.

- Xu, C.; Qiao, Y.; Zhou, Z.; Ni, F.; Xiong, J. Enhancing Convergence in Federated Learning: A Contribution-Aware Asynchronous Approach. Comput. Life 2024, 12, 1–4.

- Zheng, H.; Yang, Z.; Liu, W.; Liang, J.; Li, Y. Improving deep neural networks using softplus units. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–4.

- Sun, W.; Su, F.; Wang, L. Improving deep neural networks with multi-layer maxout networks and a novel initialization method. Neurocomputing 2018, 278, 34–40.

- Chan, K.H.; Pau, G.; Im, S.K. Chebyshev Pooling: An Alternative Layer for the Pooling of CNNs-Based Classifier. In Proceedings of the 2021 IEEE 4th International Conference on Computer and Communication Engineering Technology (CCET), Beijing, China, 13–15 August 2021; pp. 106–110.

- Chan, K.H.; Im, S.K.; Ke, W. Variable-Depth Convolutional Neural Network for Text Classification. In Neural Information Processing; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 685–692.

- Deng, L. The MNIST Database of Handwritten Digit Images for Machine Learning Research [Best of the Web]. IEEE Signal Process. Mag. 2012, 29, 141–142.

- Ansel, J.; Yang, E.; He, H.; Gimelshein, N.; Jain, A.; Voznesensky, M.; Bao, B.; Bell, P.; Berard, D.; Burovski, E.; et al. PyTorch 2: Faster Machine Learning Through Dynamic Python Bytecode Transformation and Graph Compilation. In Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Volume 2 (ASPLOS ’24), La Jolla, CA, USA, 27 April–1 May 2024; pp. 929–947.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In Proceedings of the Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28.

- Le Guen, V.; Thome, N. Disentangling Physical Dynamics From Unknown Factors for Unsupervised Video Prediction. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11471–11481.

- Wang, Y.; Long, M.; Wang, J.; Gao, Z.; Yu, P.S. PredRNN: Recurrent Neural Networks for Predictive Learning using Spatiotemporal LSTMs. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Yu, W.; Lu, Y.; Easterbrook, S.; Fidler, S. Efficient and Information-Preserving Future Frame Prediction and Beyond. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26 April–1 May 2020.

- Wang, Y.; Zhang, J.; Zhu, H.; Long, M.; Wang, J.; Yu, P.S. Memory in Memory: A Predictive Neural Network for Learning Higher-Order Non-Stationarity From Spatiotemporal Dynamics. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9146–9154.

- Wu, H.; Yao, Z.; Wang, J.; Long, M. MotionRNN: A Flexible Model for Video Prediction with Spacetime-Varying Motions. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15430–15439.

- Tang, S.; Li, C.; Zhang, P.; Tang, R. SwinLSTM: Improving Spatiotemporal Prediction Accuracy using Swin Transformer and LSTM. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 13424–13433.

| Model | SSIM↑ | PSNR↑ (dB) | MSE↓ | FVD↓ | Memory Usage (GB) |
|---|---|---|---|---|---|
| ConvLSTM [64] | 0.873 | 26.46 | 0.055 | 185.2 | 9.7 |
| PhyDNet [65] | 0.885 | 27.52 | 0.048 | 175.4 | 12.3 |
| PredRNN [66] | 0.887 | 27.83 | 0.046 | 172.1 | 10.5 |
| CrevNet [67] | 0.888 | 27.99 | 0.045 | 172.1 | 11.5 |
| MIM [68] | 0.892 | 24.29 | 0.041 | 169.5 | 13.7 |
| SimVP [12] | 0.893 | 28.34 | 0.043 | 168.2 | 11.2 |
| MotionRNN [69] | 0.899 | 28.46 | 0.042 | 159.7 | 10.1 |
| SwinLSTM [70] | 0.891 | 26.55 | 0.040 | 158.6 | 11.5 |
| DMVFN [14] | 0.902 | 29.11 | 0.039 | 156.3 | 10.8 |
| Proposed Model | 0.901 | 29.31 | 0.041 | 155.5 | 10.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Im, S.-K.; Chan, K.-H. Enhanced Localisation and Handwritten Digit Recognition Using ConvCARU. Appl. Sci. 2025, 15, 6772. https://doi.org/10.3390/app15126772
