2. Materials and Methods

This section, structured in four steps, outlines the process for developing a methodology aimed at improving production and service systems, specifically within the context of solid waste management. It begins with the identification of sector-specific needs and challenges, followed by the proposal of a general definition of DTs, along with an architecture and a framework. Subsequently, a replicable methodology for the implementation of DTs is presented, and finally, the approach is validated through a real-world case study.

Step 1: Contextual Analysis and Problem Definition: Assessment of the waste management sector’s context, identifying challenges related to efficiency and sustainability in organic waste management. This review included the analysis of data on waste generation, current practices, and limitations of conventional systems.

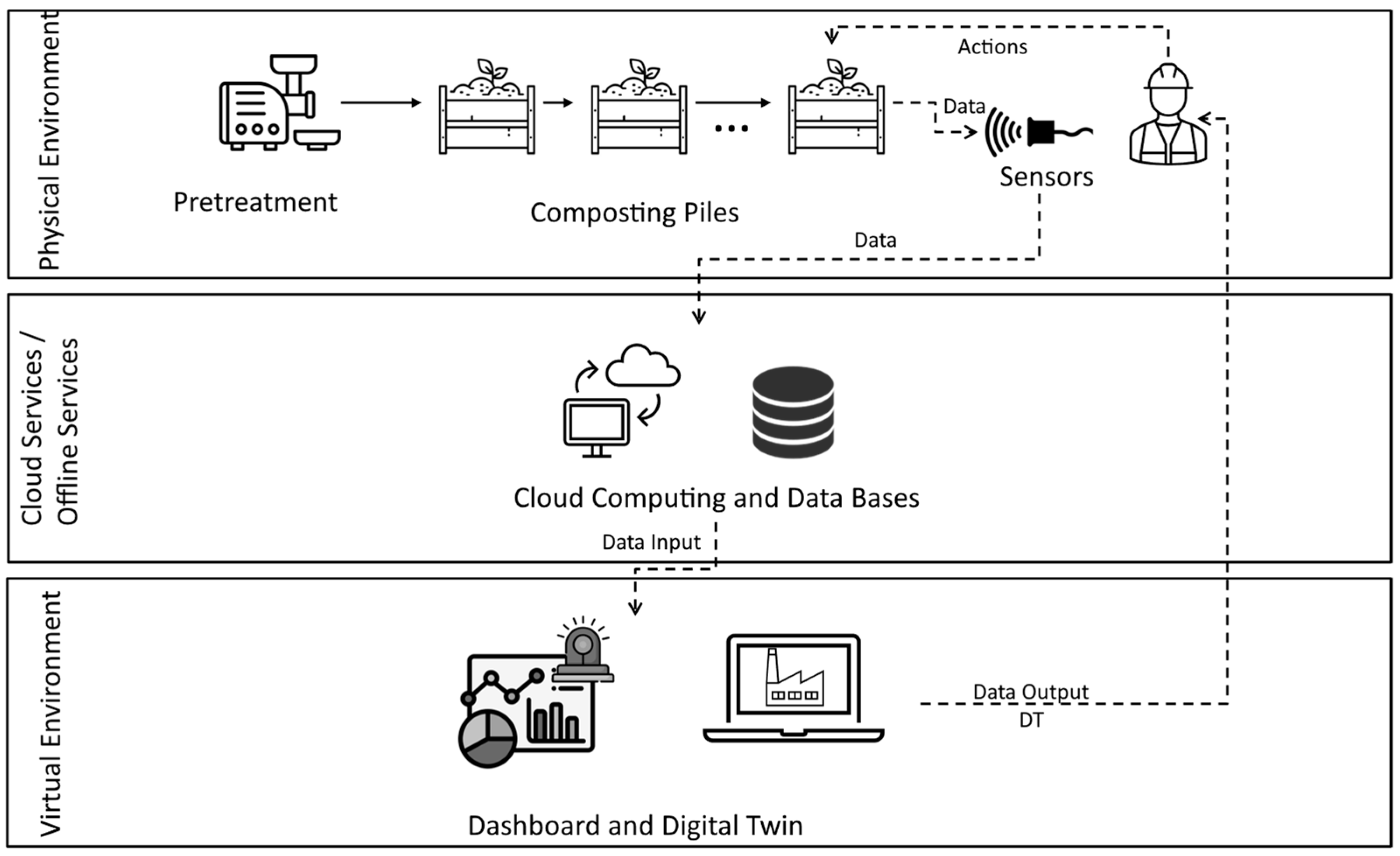

Step 2: Definition and Design of the DTs Architecture and Framework: In this step, the conceptual and technical framework guiding the implementation of the DTs is established. This process involves defining the functional layers that structure the system and detailing how they interact to ensure an efficient flow of information. The proposed architecture is designed to enable bidirectional interaction between the physical system and its digital counterpart, integrating advanced capabilities for real-time monitoring, analysis, and optimization.

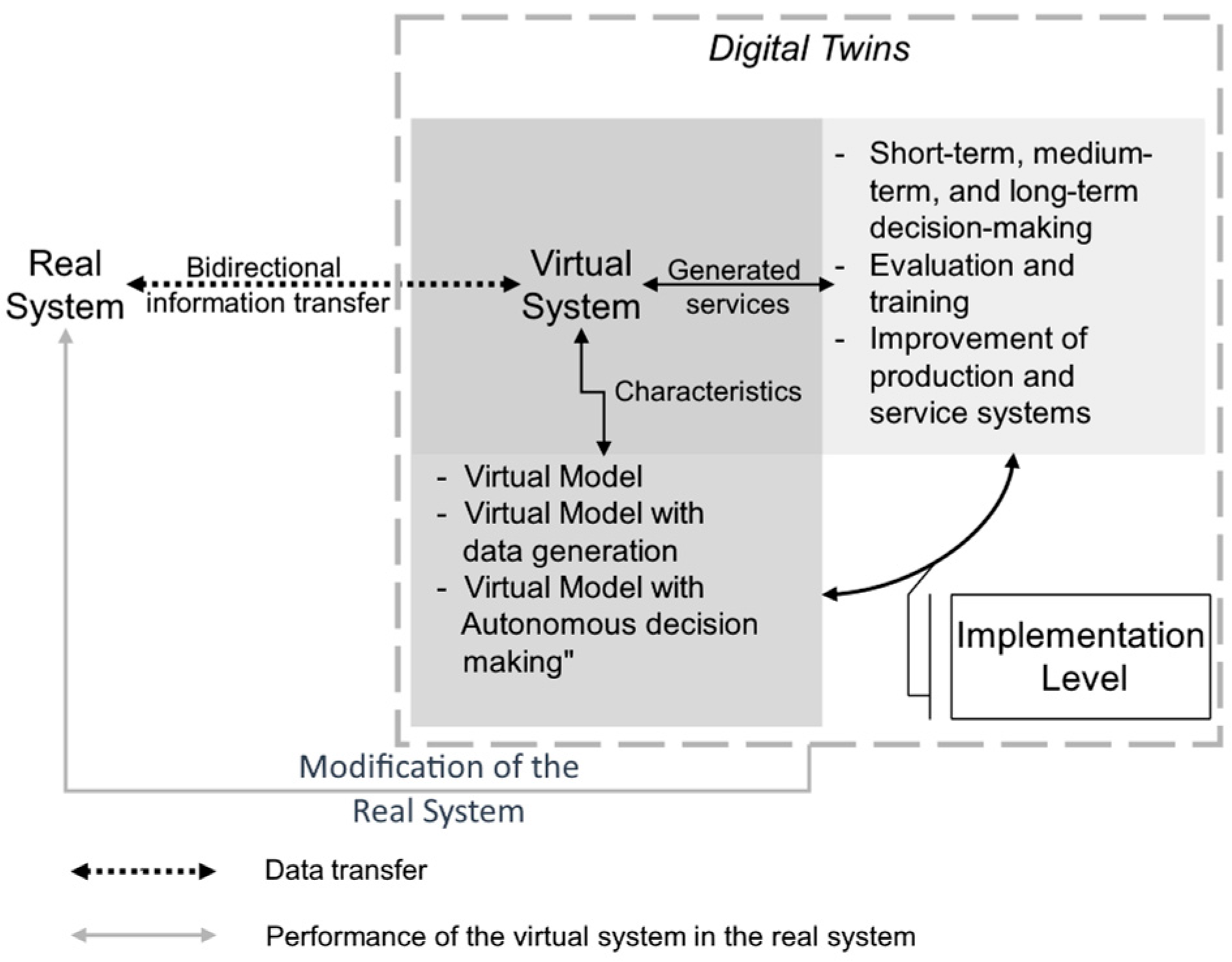

The proposed definition of DTs is illustrated in Figure 1, which highlights the characteristics, levels of implementation, and the feedback flow between the physical and virtual systems.

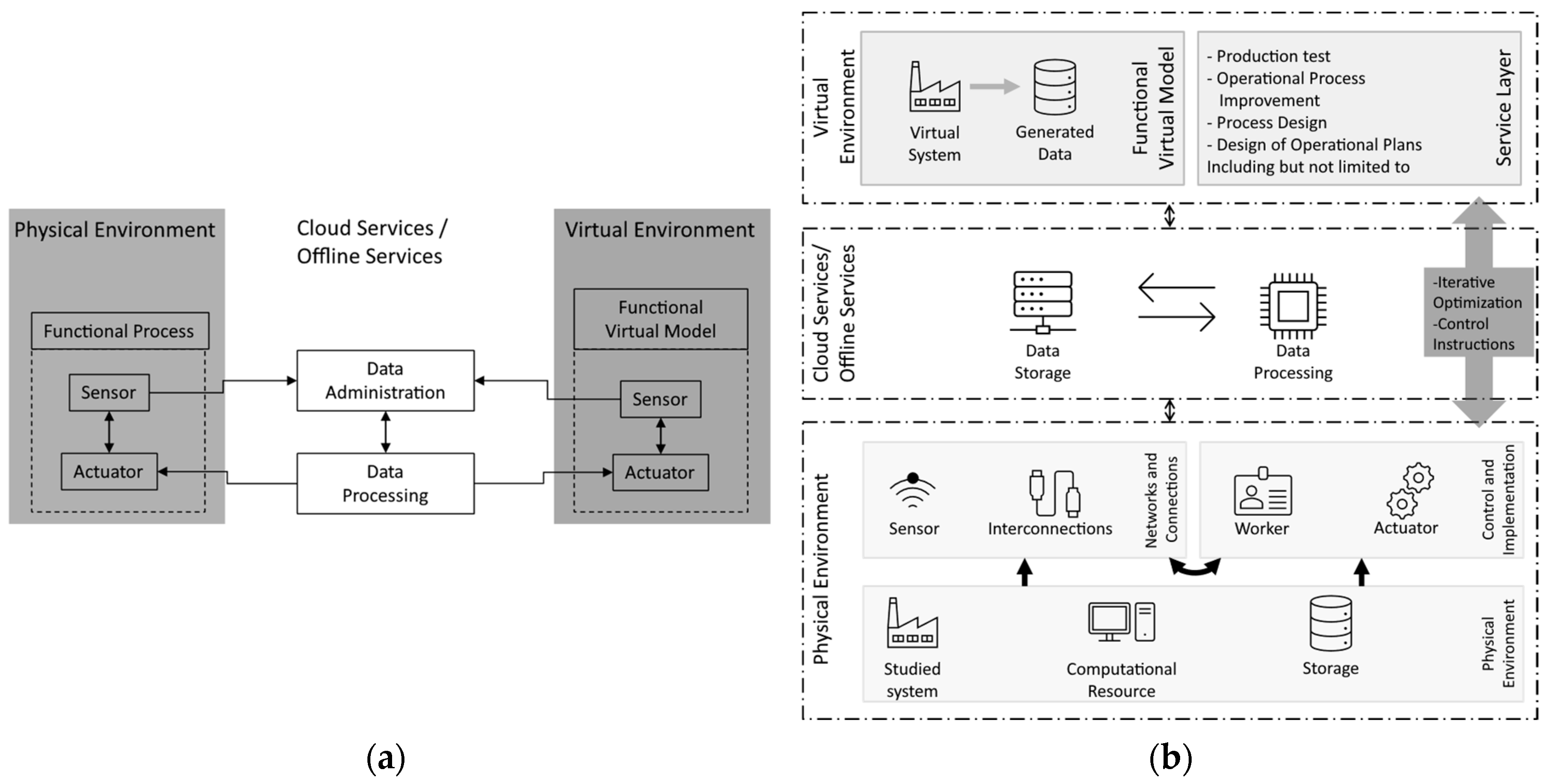

Figure 2 shows the modular three-layer architecture and the associated implementation framework, which structures the bidirectional interaction between the physical environment and the digital twin.

Physical Environment: Hosts the system under study with sensors to capture data and actuators to execute actions based on processed information.

Cloud Services: Facilitate the storage, analysis, and management of data, ensuring bidirectional information transfer and enabling both batch and real-time processing.

Virtual Environment: Digitally replicates the physical system, allowing for simulations, predictive analysis, and the generation of services such as process optimization, predictive maintenance, and training.
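As a rough illustration of how the three layers exchange data, the sketch below walks one cycle through the loop: a physical sensor reading is stored by the cloud layer, the virtual layer produces a forecast, and a recommendation flows back to the physical environment. All class names, the moving-average "model", and the 55 °C setpoint are hypothetical placeholders rather than part of the proposed architecture; in the case study the virtual layer is an AnyLogic simulation, not a moving average.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class Reading:
    """One measurement captured in the physical environment (hypothetical schema)."""
    sensor_id: str
    variable: str  # e.g. "temperature_c"
    value: float


@dataclass
class CloudLayer:
    """Stand-in for cloud services: stores readings and serves them per variable."""
    history: List[Reading] = field(default_factory=list)

    def ingest(self, reading: Reading) -> None:
        self.history.append(reading)

    def series(self, variable: str) -> List[float]:
        return [r.value for r in self.history if r.variable == variable]


class VirtualLayer:
    """Deliberately naive digital replica: forecasts the next value as the mean
    of the last `window` observations (placeholder for a real simulation model)."""

    def __init__(self, window: int = 3):
        self.window = window

    def forecast(self, series: List[float]) -> float:
        if not series:
            raise ValueError("no data available for forecasting")
        return mean(series[-self.window:])


def feedback(forecast: float, setpoint: float) -> str:
    """Turn the virtual layer's output into an action for the physical layer
    (hypothetical rule: aerate more when the pile is predicted to run hot)."""
    return "increase_aeration" if forecast > setpoint else "hold"


# One pass around the loop: physical -> cloud -> virtual -> back to physical.
cloud = CloudLayer()
for value in (52.0, 54.5, 55.0, 57.5):  # illustrative readings, not study data
    cloud.ingest(Reading("T1", "temperature_c", value))
prediction = VirtualLayer(window=3).forecast(cloud.series("temperature_c"))
action = feedback(prediction, setpoint=55.0)
```

Keeping each layer behind a small interface is what makes the architecture modular: the placeholder forecaster could be swapped for a simulation model without touching the ingestion code.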

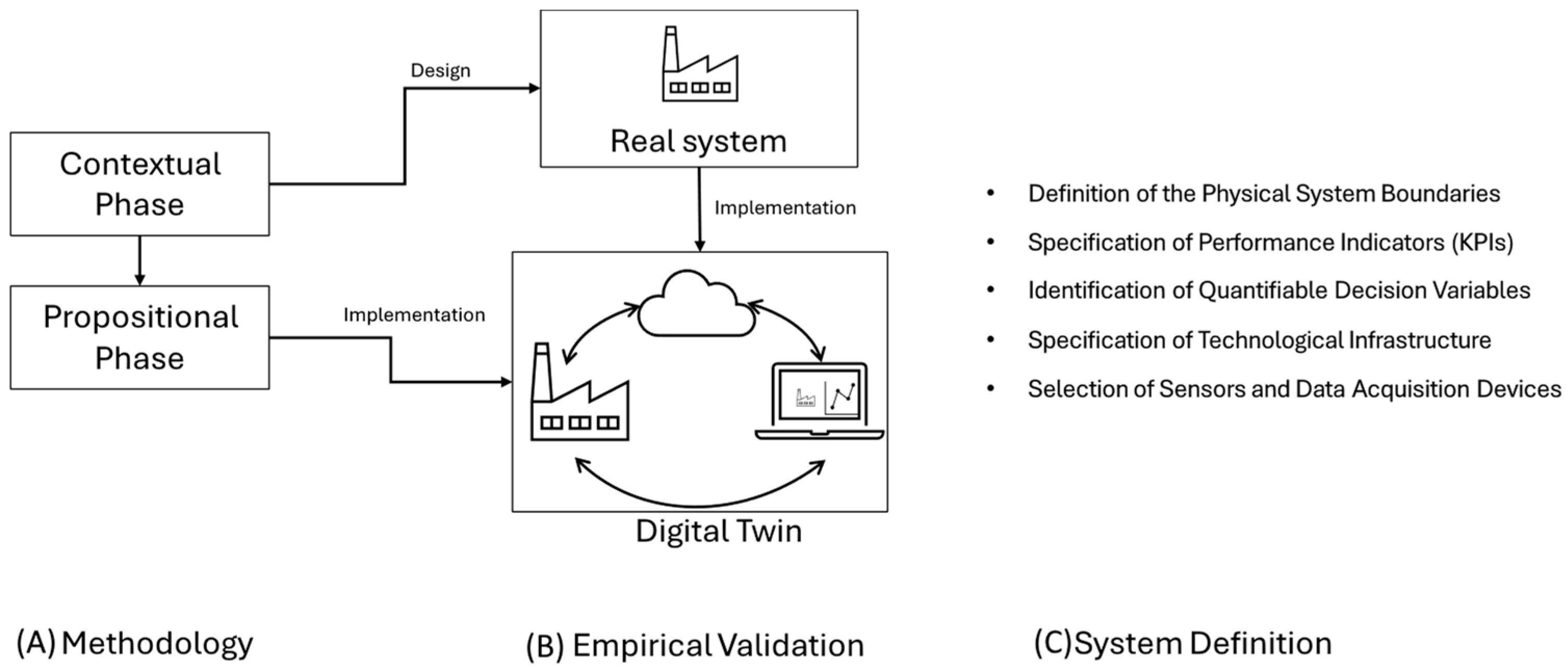

Step 3: Methodology Development: This step focuses on designing a structured methodology for implementing DTs in production and service systems, considering the identified needs and the characteristics of the target system. Technical and operational procedures are defined, including the selection of tools, integration strategies, and guidelines for evaluating system performance. The modular and adaptive approach ensures the methodology is customizable, providing scalability, efficiency, and interoperability across various industrial environments.

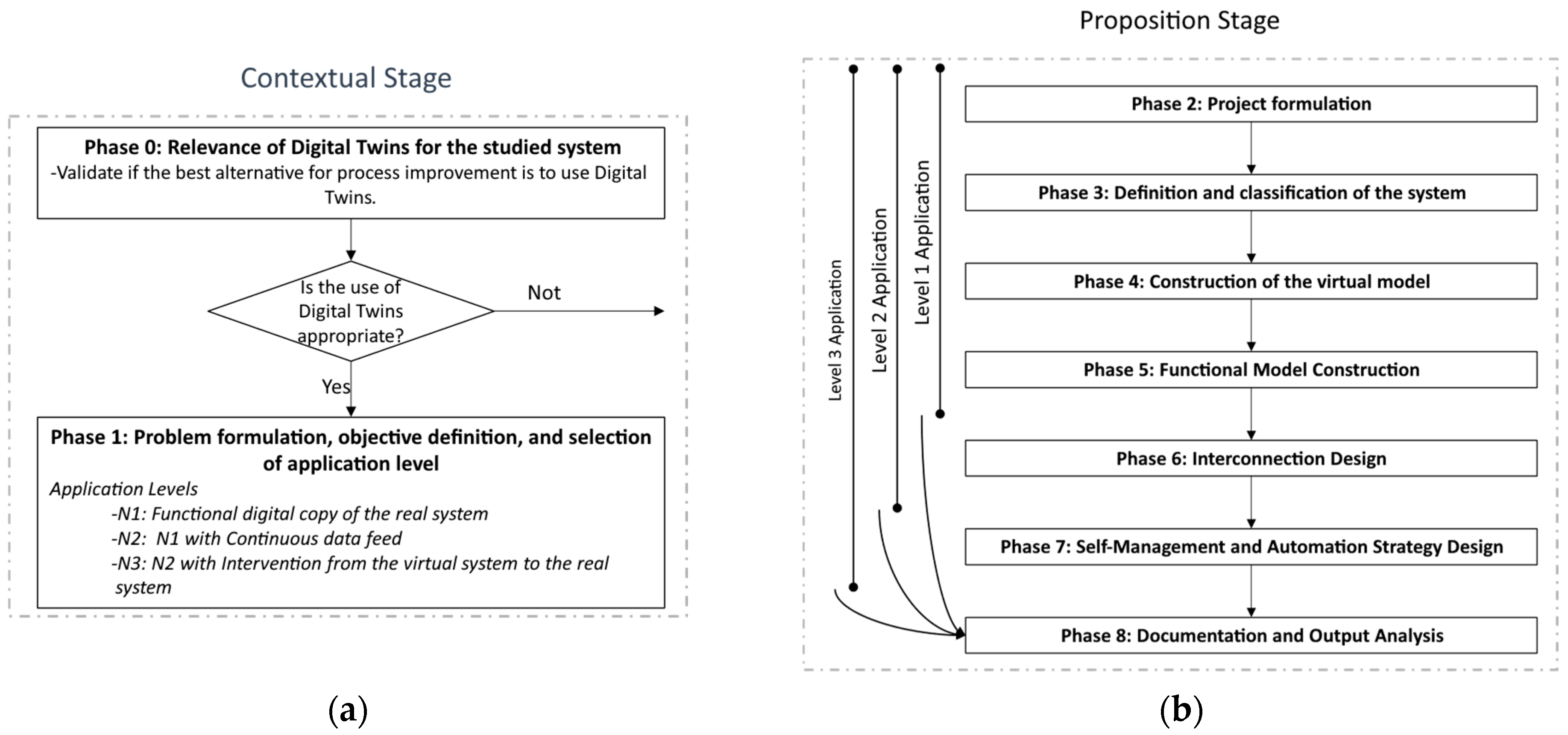

Figure 3 presents the proposed methodology, divided into two main stages (contextual and propositional), each comprising structured phases to guide implementation.

The proposed methodology consists of two sequential stages: the contextual stage defines the system’s suitability for DT implementation by establishing objectives, KPIs, and technical requirements; the propositional stage involves the design, modeling, and integration of the DT based on those parameters, enabling decision support and performance evaluation.

Step 4: Validation of the Developed Methodology: In this step, the designed methodology was implemented and validated through a quasi-experimental setup carried out at a rural composting facility located in Cajamarca, Colombia. The validation involved the deployment of low-cost temperature and humidity sensors, a cloud-based data processing layer, and a virtual simulation environment to assess real-time operational performance.

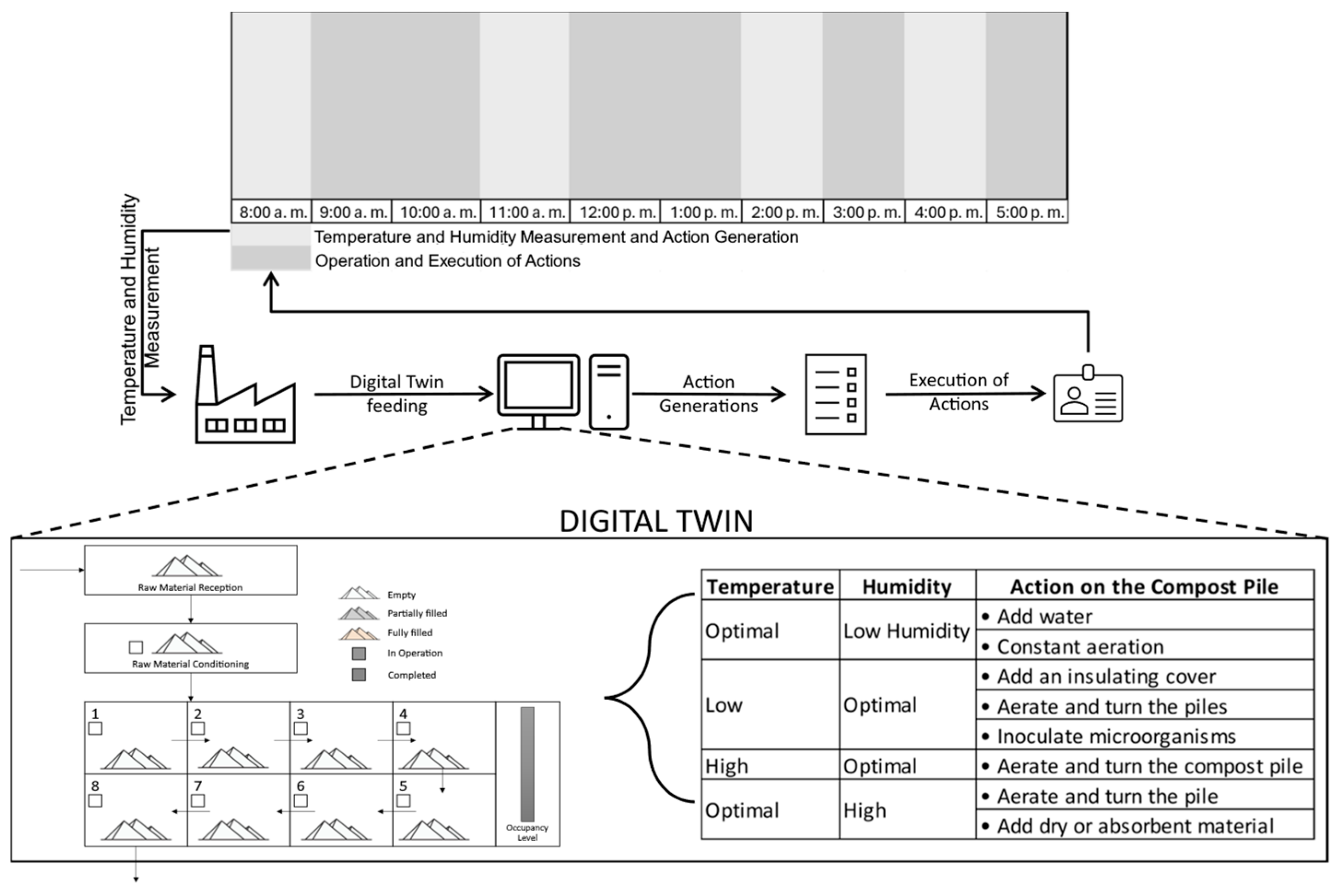

Figure 4 illustrates the overall structure of the developed DT system, showing the integration of physical, cloud, and virtual components, as well as their interaction with system operators.

The proposed DT was structured according to a three-layer architecture: physical, cloud, and virtual.

The physical layer consisted of a real composting plant operated manually, equipped with a DS18B20 temperature sensor (Maxim Integrated, Shenzhen, China) and a stainless-steel soil moisture sensor with an LM393 comparator (Texas Instruments, Shenzhen, China), both connected to an Arduino Uno (Arduino AG, Ivrea, Italy).
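The raw values delivered by these two sensors must be converted before they become process variables. The sketch below shows this conversion in Python for readability (on the Arduino it would normally happen in firmware). It uses the documented DS18B20 register format in 12-bit mode (two's complement, 0.0625 °C per least significant bit) and, assuming the moisture module's analog output is wired to one of the Uno's 10-bit analog inputs, a linear mapping onto a percentage scale. The dry and wet calibration counts are hypothetical and would have to be measured on site.

```python
def ds18b20_celsius(raw: int) -> float:
    """Convert a 16-bit DS18B20 temperature register (12-bit resolution mode)
    to degrees Celsius: two's complement, 0.0625 degC per least significant bit."""
    if not 0 <= raw <= 0xFFFF:
        raise ValueError("raw register value must fit in 16 bits")
    if raw & 0x8000:  # sign bit set: negative temperature
        raw -= 1 << 16
    return raw * 0.0625


def moisture_percent(adc: int, dry_adc: int, wet_adc: int) -> float:
    """Linearly map a 10-bit ADC reading (0-1023) to 0-100 % between two
    site-specific calibration points, clamping values outside that range."""
    if dry_adc == wet_adc:
        raise ValueError("calibration points must differ")
    pct = (dry_adc - adc) / (dry_adc - wet_adc) * 100.0
    return max(0.0, min(100.0, pct))


# Register values from the DS18B20 datasheet examples.
print(ds18b20_celsius(0x0191))  # 25.0625
print(ds18b20_celsius(0xFF5E))  # -10.125
# Hypothetical calibration: 900 counts in dry material, 400 in saturated material.
print(moisture_percent(650, dry_adc=900, wet_adc=400))  # 50.0
```

Because the mapping interpolates between the two calibration points, it works regardless of whether the probe's output rises or falls with moisture.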

The cloud layer handled real-time data acquisition, processing, and storage, using Node-RED v3.0.x for data routing, visualization, and rule-based analysis.
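Rule-based analysis of this kind amounts to comparing each incoming reading against an operating window and emitting a recommendation when the reading falls outside it. In Node-RED this logic would typically be placed in a switch node or a JavaScript function node; the sketch below expresses the same idea in Python. The temperature and moisture windows and the action texts are illustrative assumptions, not the thresholds used at the facility.

```python
from typing import Optional

# Illustrative operating windows (lower, upper); real values are site-specific.
THRESHOLDS = {
    "temperature_c": (45.0, 65.0),
    "moisture_pct": (40.0, 60.0),
}

# Hypothetical recommendations shown to operators when a window is left.
ACTIONS = {
    ("temperature_c", "low"): "check pile activity and moisture",
    ("temperature_c", "high"): "increase aeration or turn the pile",
    ("moisture_pct", "low"): "irrigate",
    ("moisture_pct", "high"): "pause irrigation and aerate",
}


def evaluate(variable: str, value: float) -> Optional[str]:
    """Return a recommendation if `value` lies outside its window, else None."""
    low, high = THRESHOLDS[variable]
    if value < low:
        return ACTIONS[(variable, "low")]
    if value > high:
        return ACTIONS[(variable, "high")]
    return None


# Example: a dry pile triggers an irrigation recommendation.
print(evaluate("moisture_pct", 35.0))  # irrigate
```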

The virtual layer was developed using AnyLogic Professional 8.7, enabling a digital replica of the composting process that simulated key parameters to support prediction, performance evaluation, and dynamic adjustment.

This architecture enabled continuous interaction between real-world data and the virtual model to support decision-making and feedback.

The field validation was conducted over a 29-week period in a high-capacity composting facility located in Cajamarca, Colombia. Trained personnel recorded four measurements per day (Monday through Friday) targeting key parameters such as internal pile temperature, humidity, and transformation indicators. This data collection strategy was aligned with the operational routine of the facility, ensuring that no disruptions were introduced to daily workflows while capturing diurnal variations and medium-term process trends. Data were collected manually at the scheduled times from the embedded sensors (DS18B20 and LM393-based soil moisture probes), which were connected to an Arduino Uno that transmitted the information to a local gateway for cloud processing. Node-RED served as the data acquisition and routing platform, while AnyLogic handled the virtual simulation.
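The sampling design can be checked arithmetically: 29 weeks × 5 weekdays × 4 readings gives 580 scheduled measurements per monitored variable, assuming no day is missed, which is consistent with the "over 500 data points" mentioned in the Discussion. The sketch below builds such a schedule; the start date is an arbitrary placeholder, and holidays or outages are not modeled.

```python
from datetime import date, timedelta
from typing import List, Tuple


def sampling_schedule(start_monday: date, weeks: int,
                      readings_per_day: int) -> List[Tuple[date, int]]:
    """List (day, slot) pairs for Monday-Friday sampling.
    Holidays, outages, and missed readings are not modeled."""
    if start_monday.weekday() != 0:
        raise ValueError("start date must be a Monday")
    schedule = []
    for offset in range(weeks * 7):
        day = start_monday + timedelta(days=offset)
        if day.weekday() < 5:  # 0 = Monday ... 4 = Friday
            schedule.extend((day, slot) for slot in range(1, readings_per_day + 1))
    return schedule


# 2024-01-01 is a Monday; the date itself is a placeholder.
plan = sampling_schedule(date(2024, 1, 1), weeks=29, readings_per_day=4)
print(len(plan))  # 580
```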

This configuration allowed for the collection and analysis of performance indicators under authentic field conditions, thereby ensuring ecological validity and practical applicability of the proposed DT framework. The validation process includes measuring key performance indicators (KPIs), such as transformation performance, which assesses the efficiency of the process in converting organic matter into compost, and variability reduction, which measures consistency across processed batches. These indicators allow for a comparison between the obtained results and the initial objectives, determining the success of the process.
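Both KPIs can be made operational in a few lines. The sketch below assumes that transformation performance is the mass of stable compost divided by the mass of raw organic input (in line with the definition of process efficiency given in the Results) and measures variability reduction as the relative drop in the sample standard deviation of batch performance. Both formulas are assumptions for illustration, and the example numbers are not study data.

```python
from statistics import stdev
from typing import Sequence


def transformation_performance(compost_kg: float, raw_kg: float) -> float:
    """Fraction of raw organic input recovered as stable compost."""
    if raw_kg <= 0:
        raise ValueError("raw input must be positive")
    return compost_kg / raw_kg


def variability_reduction(before: Sequence[float], after: Sequence[float]) -> float:
    """Relative drop in the sample standard deviation of batch performance
    (0.6 means 60% less spread after the intervention; negative means more spread)."""
    s_before = stdev(before)
    if s_before == 0:
        raise ValueError("baseline has no variability to reduce")
    return (s_before - stdev(after)) / s_before


# Illustrative numbers only.
print(transformation_performance(400.0, 1000.0))  # 0.4
```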

Additionally, specific criteria are defined to evaluate the integration, functionality, and replicability of the model:

Integration: It is verified that the physical, cloud, and virtual layers interact seamlessly and enable real-time feedback.

Functionality: The model is evaluated to ensure it enables predictive adjustments based on data and facilitates the operational control of the system.

Replicability: It is validated that the results obtained are reproducible under similar conditions, ensuring the adaptability of the methodology to other contexts.

3. Results

Building upon the methodology presented in the previous section, this section presents the results derived from its application in a real-world scenario. The findings are organized following the same four-step structure: (1) identification of needs, (2) design of the DT architecture and framework, (3) development of the implementation methodology, and (4) validation through a case study in a composting facility. This structure allows for a comprehensive evaluation of the proposed approach, demonstrating its feasibility, effectiveness, and potential for replication in other production and service systems.

Step 1: Contextual Analysis and Problem Definition: Municipal solid waste represents a major environmental challenge on a global scale [6]. Agriculture and household activities are the most common sources of organic waste, and the primary challenge in organic waste management lies in transforming organic matter into usable products [3]. Among the processes for waste reduction and utilization, composting stands out as a potential technology capable of transforming organic substances into stable fertilizers, making it a viable recycling option [6]. The global increase in waste, driven by economic development and population growth, makes sustainable management essential. This approach must align with the circular economy, prioritizing reduction, reuse, and recycling before resorting to recovery [43]. Inadequate management of organic waste causes environmental and health issues, prompting municipalities to adopt strategies aimed at reducing improper disposal, as highlighted in specialized literature [44].

Proper management of organic waste, which accounts for 28% to 64% of municipal solid waste, is crucial to preventing significant environmental impacts, such as gas emissions and leachate production in landfills [2]. Smart cities, driven by cyber infrastructure, promote sustainable development by integrating economic growth, improved quality of life, and efficient resource management [45]. To achieve this, Industry 4.0 drives full automation through cyber–physical systems, cloud computing, and IoT, fostering smart manufacturing. This approach enables the design and management of complex ecosystems with real-time information and autonomous interaction between systems [46]. In Industry 4.0, DTs stand out by representing the duality of systems: a physical version and a digital or informational counterpart of the system [47]. DTs offer a promising solution to enhance automation and advance towards smart manufacturing through a bidirectional connection between the physical and digital worlds [48]. Despite their potential in various fields, DTs lack a formal definition, a unified implementation process, and a framework to classify and define their use cases [49]. DTs have the potential to transform composting systems by optimizing processes, improving efficiency, and minimizing environmental impacts.

Step 2: Definition and Design of the DTs Architecture and Framework

3.1. Proposed Definition

It is proposed to define DTs as an active representation of a real system that, through continuous feedback and the use of tools such as machine learning, data mining, and sensors, generates predictive and actionable information in a virtual environment. Their implementation enables decision-making, evaluation, training, and continuous improvement of systems, facilitating simulations, real-time adjustments, and operational optimization. The planning of a DT should follow project management principles, considering the relevance of the problem to be solved. Its complexity may range from a basic virtual replica to an intelligent system capable of influencing the physical environment through continuous feedback, always aligned with predefined objectives. The proposed definition, previously introduced in Figure 1 (Section 2), emphasizes the key characteristics of a DT, its implementation levels, and the flow of information between the physical and virtual domains.

3.2. Proposed Architecture and Framework

The proposed architecture for DTs organizes the flow of information into three fundamental layers: the physical environment, cloud services, and the virtual environment. Each layer includes key elements that enable efficient and bidirectional integration between the real system and its digital counterpart. This architectural structure, as presented in Figure 2 (Section 2), depicts both the multi-layered configuration and the implementation framework that guides the interaction across these components.

The DTs can be integrated with IoT platforms for enhanced sensor communication, AI for predictive modeling, and Big Data platforms for large-scale performance analytics. This flexibility enables synergistic innovation in complex industrial environments. The proposed framework, based on this architecture, guides the development of DTs for the improvement of production and service systems. This structured approach enables continuous iteration between the physical and virtual environments, optimizing performance through a continuous cycle of data capture, analysis, and feedback. The services generated by the DTs include data analysis, simulations, system integration, and operational improvement strategies, ensuring sustainable and effective implementation. The framework is scalable by design, with modular data flows and API-based integration. For larger-scale applications, deployment would require cloud-based orchestration, parallel sensor networks, and retraining of predictive models to match local dynamics.
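API-based integration depends largely on an agreed message format between layers. The sketch below shows one possible JSON payload for a sensor reading and a validation step on arrival in the cloud layer; the field names and device identifier are hypothetical and are not prescribed by the framework.

```python
import json
from datetime import datetime, timezone

# Hypothetical message schema shared by the physical and cloud layers.
REQUIRED_FIELDS = {"device_id", "variable", "value", "unit", "timestamp"}


def encode_reading(device_id: str, variable: str, value: float,
                   unit: str, ts: datetime) -> str:
    """Serialize one reading to a JSON string (timestamp in ISO 8601)."""
    return json.dumps({
        "device_id": device_id,
        "variable": variable,
        "value": value,
        "unit": unit,
        "timestamp": ts.isoformat(),
    })


def decode_reading(payload: str) -> dict:
    """Parse a payload and reject it if any required field is missing."""
    message = json.loads(payload)
    missing = REQUIRED_FIELDS - set(message)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    message["timestamp"] = datetime.fromisoformat(message["timestamp"])
    return message


ts = datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)
msg = decode_reading(encode_reading("arduino-01", "temperature_c", 56.25, "degC", ts))
```

Validating at the boundary keeps malformed readings out of the virtual layer, which matters when several sensor networks feed the same model.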

Step 3: Methodology Development: The proposed methodology consists of two main stages, each with phases designed to guide the structured development and implementation of DTs, ensuring continuous improvement of production and service systems. These stages, as previously illustrated in Figure 3 (Section 2), encompass the entire process, from the initial assessment to the integration and validation of the DT.

3.3. Contextual Stage

In the contextual stage, the relevance assessment ensures that the system under study is suitable for the implementation of a DT, considering its complexity, available data, and the problems to be addressed. Clear objectives are defined, such as simulations, predictions, or predictive maintenance, along with key performance indicators (KPIs). Additionally, the scope of the physical system is delineated by identifying its boundaries, critical variables, and necessary technological resources, such as sensors, actuators, and communication systems.
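The outputs of the contextual stage can be recorded in a small structured form and checked for completeness before the propositional stage begins. The sketch below is one hypothetical encoding; the field names and the completeness rule are assumptions, not part of the published methodology.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ContextualSpec:
    """Outputs of the contextual stage (illustrative field names)."""
    objectives: List[str] = field(default_factory=list)          # e.g. simulation, prediction
    kpis: List[str] = field(default_factory=list)                # key performance indicators
    critical_variables: List[str] = field(default_factory=list)  # inside the system boundary
    resources: List[str] = field(default_factory=list)           # sensors, actuators, communications

    def missing_items(self) -> List[str]:
        """Names of the elements that are still undefined."""
        checks = {
            "objectives": self.objectives,
            "KPIs": self.kpis,
            "critical variables": self.critical_variables,
            "technological resources": self.resources,
        }
        return [name for name, items in checks.items() if not items]

    def ready_for_propositional_stage(self) -> bool:
        return not self.missing_items()


spec = ContextualSpec(
    objectives=["predict pile temperature"],
    kpis=["transformation performance", "variability reduction"],
    critical_variables=["temperature", "moisture"],
)
print(spec.missing_items())  # ['technological resources']
```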

3.4. Propositional Stage

In the propositional stage, the complete construction of the DTs is carried out based on the objectives, scope, and level of integration established in the contextual stage. This sequential stage aims to generate the DTs that will support decision-making related to the process, system, or product under study. To achieve this, a structured development project is formulated, the system under study is interpreted, the system is coded, modeled, and connected to the real system, and, finally, the results obtained are analyzed.

In alignment with existing literature that differentiates between Digital Models (DM), Digital Shadows (DS), and DTs [50], this study proposes three practical levels of application for DT implementations in production and service systems. These levels are structured to guide the progressive adoption of DTs according to the available infrastructure, integration capacity, and system complexity:

Level 1—Monitoring (Digital Model): At this level, the digital system receives data from the physical environment at predefined intervals. The representation is static or manually updated, and its main function is to support system observation and historical analysis.

Level 2—Diagnosis and Simulation (Digital Shadow): Here, data is automatically collected in real time and used to simulate or predict system behavior. Although the digital system does not influence the physical process directly, it offers valuable insights for operators, enabling decision support.

Level 3—Closed-Loop Optimization (DTs): This level enables bidirectional communication between the digital and physical environments. The virtual model performs predictive analysis and provides actionable outputs that are integrated into the operational workflow, either automatically or through operator-mediated execution. This dynamic feedback loop allows the system to adapt continuously and optimize performance in real time.
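The three levels can be read as a decision rule over an implementation's capabilities. The function below is one possible encoding of the descriptions above, offered as a sketch: reducing each level to boolean criteria is a simplification, and a real assessment would weigh these capabilities in more detail.

```python
from enum import IntEnum


class DTLevel(IntEnum):
    MONITORING = 1              # Level 1: Digital Model
    DIAGNOSIS_SIMULATION = 2    # Level 2: Digital Shadow
    CLOSED_LOOP = 3             # Level 3: Digital Twin


def classify(automatic_realtime_data: bool,
             simulates_or_predicts: bool,
             feedback_to_physical: bool) -> DTLevel:
    """Map implementation capabilities to a level.

    feedback_to_physical counts both automatic actuation and
    operator-mediated execution of the model's recommendations."""
    if automatic_realtime_data and simulates_or_predicts:
        if feedback_to_physical:
            return DTLevel.CLOSED_LOOP
        return DTLevel.DIAGNOSIS_SIMULATION
    return DTLevel.MONITORING


print(classify(True, True, True).name)  # CLOSED_LOOP
```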

The implementation presented in this study reaches Level 3, as it integrates real-time sensing, cloud-based processing, simulation, and a feedback mechanism that informs operational adjustments. This ensures continuous system adaptation, aligning with the core principles of fully functional DTs.

Step 4: Validation of the Developed Methodology: The field validation was carried out in a rural composting facility located in Cajamarca, Tolima (Colombia), at an altitude of approximately 1700 m above sea level. The region has a temperate-humid climate, with average daytime temperatures of 18 °C to 26 °C and relative humidity between 70% and 85%, conditions that can influence composting dynamics and sensor behavior. The composting process studied consists of a material reception area, where the incoming organic material is homogenized; eight composting beds equipped with a forced aeration system and operating in a linear arrangement, with the product rotated every eight days; and a packaging area. The control variables are the moisture and temperature of the compost piles, which are essential to ensuring high-quality compost. The DT developed for composting in Cajamarca is a comprehensive system based on a three-layer architecture: physical, cloud, and virtual. In the physical layer, temperature and humidity sensors monitor the process conditions in real time, while operators adjust parameters such as ventilation and irrigation. The cloud layer processes and stores the collected data, feeding a predictive model in the virtual layer that simulates and optimizes the system's behavior. The DT was developed following the methodology and architecture proposed in Table 2.

The real system is operated by personnel responsible for executing the necessary actions. To integrate it with the DT, specific schedules were established for feeding the DT with data from the real system and for implementing the actions recommended by the DT in the real system. This type of integration not only optimizes operations by enabling real-time feedback and predictive decision-making but also improves resource efficiency and process consistency. For instance, dynamic control of aeration and irrigation based on sensor feedback reduced energy consumption and minimized the overuse of water. Additionally, the DT enabled early detection of deviations in temperature profiles, allowing for timely interventions that enhanced composting homogeneity and reduced batch variability. The overall structure of the DTs implemented in the composting facility is shown in Figure 4 (Section 2). This diagram summarizes the integration of the physical, cloud, and virtual layers, as well as their interaction with system operators. It also defines the timing of data acquisition and the corresponding actions triggered based on the measurements taken.

Table 3 summarizes the technical configuration of the DT implemented for composting process monitoring and optimization. It presents the core system components, including sensor types, data acquisition frequency, operational thresholds, and modeled failure scenarios. The table also outlines the specific actions triggered by the DT system when defined thresholds are crossed or anomalies are detected. This structured representation complements the architectural description and provides insight into the practical functioning and responsiveness of the proposed methodology under field conditions.

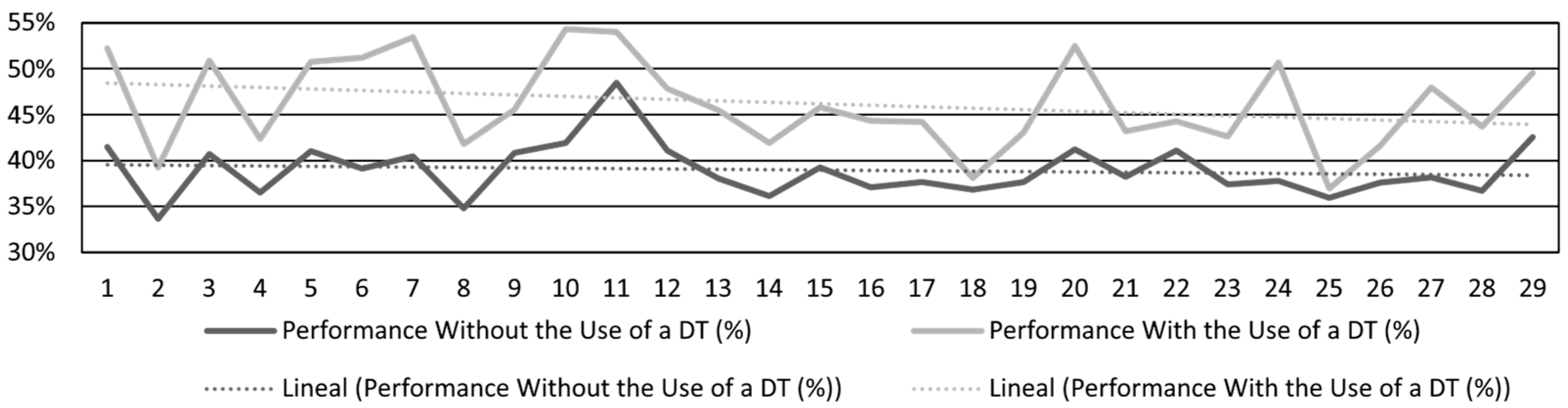

As illustrated, the physical layer captures real-time data through temperature and moisture sensors, which are then transmitted to the cloud layer for processing and storage. The virtual layer uses this data to simulate process behavior and generate predictions. The structure also highlights the role of human operators in executing system adjustments based on feedback from the DT. This bidirectional interaction between physical and digital components enables dynamic optimization of the composting process. The implementation of the DT increased composting efficiency from an average of 40% (35–43%) to 50% (40–54%), reducing variability and optimizing the process through real-time dynamic adjustments. The implementation of the DTs was evaluated over a 30-week period to gather performance data, which is presented in Figure 5. The performance reflects the weekly output measured at the time the products were packaged.

To evaluate the impact of the DT implementation on process efficiency, a structured and sustained data collection process was conducted. In this context, “Process Efficiency” refers to the amount of stable compost produced per unit of raw organic material over a defined period, accounting for factors such as moisture optimization and accelerated transformation rates. Over a period of 29 consecutive weeks, trained personnel recorded four measurements per day, Monday through Friday, targeting key variables such as internal pile temperature, humidity, and material transformation indicators. This schedule, aligned with the facility’s operational availability, allowed the capture of diurnal variation and gradual trends without disrupting daily workflows. The volume and temporal resolution of the resulting dataset provided sufficient statistical power for rigorous analysis. A one-way analysis of variance (ANOVA) was performed to compare the mean efficiency before and after the deployment of the DTs. The null hypothesis (H0) stated that no significant difference would be observed between both conditions, while the alternative hypothesis (H1) posited that the DTs would produce a measurable improvement. The results yielded high statistical significance (p < 0.001), confirming that the implementation led to a notable increase in average efficiency. Additionally, the Levene test showed a significant reduction in performance variability (p < 0.01), indicating that the process became more consistent and stable. These findings validate not only the effectiveness but also the robustness and reliability of the methodology under real-world operational conditions.
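For readers who want to reproduce this kind of before/after comparison, the sketch below computes the one-way ANOVA F statistic and Levene's W statistic from first principles with the standard library only; it is not the analysis code used in the study. Levene's test is implemented as an ANOVA on absolute deviations from each group's center: `center="median"` gives the Brown-Forsythe variant, `center="mean"` the original formulation. The p-values reported above require the F distribution with (k − 1, N − k) degrees of freedom, which in practice would come from a statistics package (for example `scipy.stats.f_oneway` and `scipy.stats.levene`, whose default center is the median).

```python
from statistics import mean, median
from typing import Sequence


def one_way_anova_f(*groups: Sequence[float]) -> float:
    """F = between-group mean square / within-group mean square."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    group_means = [mean(g) for g in groups]
    grand_mean = mean([x for g in groups for x in g])
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


def levene_w(*groups: Sequence[float], center: str = "median") -> float:
    """Levene's W: one-way ANOVA F on |x - center(group)|."""
    if center not in ("median", "mean"):
        raise ValueError("center must be 'median' or 'mean'")
    pick = median if center == "median" else mean
    deviations = [[abs(x - pick(g)) for x in g] for g in groups]
    return one_way_anova_f(*deviations)


# Toy check: two groups with identical spread but shifted means.
print(one_way_anova_f([1, 2, 3], [4, 5, 6]))  # 13.5
```

With the study's data, the "before" and "after" efficiency series would be passed as the two groups: a large F indicates a shift in the mean, and a large W indicates a change in spread.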

During this validation, significant results were observed. First, composting efficiency increased by 10 percentage points (from 40% to 50%, a relative increase of 25%), significantly improving the transformation of organic matter into fertilizer. Additionally, the implementation of the DTs minimized inconsistencies between processed batches, resulting in more homogeneous and higher-quality products. Another key outcome was the optimization of resources, thanks to the DT's capabilities for facilitating predictive analysis and real-time adjustments, which improved operational control and reduced waste during the process. Because the case study was conducted in a real operational environment with non-specialized personnel and low-cost technology, it adheres to principles of ecological validity, enhancing the applicability of the model in similar contexts. The presence of external factors was not considered a limitation, but rather an intentional part of the methodological design. To ensure reliable results, specific control and mitigation measures were implemented.

Table 4 outlines the main external factors that could potentially affect the performance and consistency of the composting process during the DT validation. These include environmental variables (e.g., ambient temperature and humidity), human operator variability, raw material heterogeneity, and sensor reliability. Each factor is associated with mitigation measures that were implemented to minimize its impact. This framework helps to contextualize the operational conditions of the study and supports the interpretation of the experimental results under realistic field constraints.

This workflow diagram summarizes the main components and data flows of the proposed DT implementation (see Figure 6). The physical environment includes composting piles and operators, which are monitored using low-cost sensors. Data is acquired via microcontrollers and transmitted to a cloud-based processing layer using wireless protocols. The virtual environment receives real-time data for simulation and decision support, while visualization dashboards enable human-in-the-loop supervision. Operational feedback is then applied manually or automatically, closing the control loop and enabling continuous optimization under real-world conditions.

The implementation of the DTs in the composting plant, with an initial investment of USD 50 covering low-cost hardware such as sensors and an Arduino board, resulted in a monthly production increase of 1200 kg of compost. This improvement translated into additional revenues of USD 789.9 per month, or approximately USD 9478.8 annually.

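The Return on Investment (ROI) was calculated using the standard formula:

$$\mathrm{ROI} = \frac{G - I}{I} \times 100\%$$

where $G$ is the additional annual revenue attributable to the DT and $I$ is the initial investment.

Substituting the actual values:

$$\mathrm{ROI} = \frac{9478.8 - 50}{50} \times 100\% = 18{,}857.6\%$$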
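The ROI figure can be reproduced with the short calculation below. It assumes, as the reported 18,857.6% implies, that the gain term is the first-year additional revenue (USD 789.9 × 12) and that no operating or labor costs are deducted, which is consistent with the simplified economic analysis described in this section.

```python
def roi_percent(gain: float, investment: float) -> float:
    """Simple return on investment, in percent: (gain - investment) / investment * 100."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (gain - investment) / investment * 100.0


investment_usd = 50.0                 # low-cost sensors and Arduino board
monthly_revenue_usd = 789.9           # additional revenue from +1200 kg/month
annual_revenue_usd = monthly_revenue_usd * 12

print(f"{annual_revenue_usd:.1f}")                               # 9478.8
print(f"{roi_percent(annual_revenue_usd, investment_usd):.1f}")  # 18857.6
```

Because the investment is very small relative to the revenue, the ROI is highly sensitive to the cost side; including labor or maintenance costs in the numerator would lower it substantially.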

The economic analysis, focused on the calculation of Return on Investment (ROI), was intentionally simplified to demonstrate the viability of the model in contexts with limited access to structured financial and technological data. This approach aligns with the characteristics of the rural composting facility studied, where indirect costs and accounting processes are not formally digitalized. Nevertheless, the ROI was calculated based on actual investment and revenue data, reinforcing its validity as a decision-support tool. In future implementations, particularly in more complex industrial environments, sensitivity analyses, advanced financial indicators, and more detailed cost models will be incorporated, in line with best practices for the economic evaluation of emerging technologies.

In addition to financial performance, the methodology supports core aspects of environmental sustainability. By enabling real-time feedback and predictive control, the DT improved the uniformity of compost batches, reduced process variability, and optimized the use of resources such as energy and water. For example, more accurate aeration control minimized electricity usage, while targeted irrigation reduced water consumption.

These outcomes are aligned with the principles of the circular economy, particularly in terms of:

Waste reduction: By maximizing the conversion of organic matter into usable compost.

Resource efficiency: Through optimized inputs and reduction in rework.

Closed-loop systems: Where outputs are reintegrated into productive agricultural cycles.

The DT system not only improves process performance but also strengthens the regenerative logic of circular systems by enabling data-driven decision-making, local deployment, and continuous improvement.

The proposed methodology was also compared to that of Qamsane [16], revealing significant advantages in several dimensions. In terms of adaptability and customization, the proposed approach demonstrated superior flexibility by allowing adjustments tailored to specific contexts, such as composting, through a modular and scalable design. This contextual focus contrasts with the more general-purpose orientation of Qamsane's methodology. Regarding implementation cost, the use of existing infrastructure and open technologies resulted in substantial savings, significantly outperforming the compared model. While Qamsane's solution offered greater interoperability, especially in standardized manufacturing environments, the methodology presented here excelled in domain-specific flexibility by enabling iterative refinements throughout the deployment cycle. Both methodologies proved to be scalable; however, the proposed approach stands out for its continuous customization capabilities. Additionally, the reduced technical complexity of the system makes it more accessible and practical for low-tech environments, increasing its potential impact in developing regions or resource-constrained scenarios. A more extensive comparative analysis, including additional methodologies and evaluation criteria, is available in the author's doctoral thesis, where the proposed methodology is examined in greater technical and contextual depth.

The economic impact was also significant. The application of the DTs increased the monthly compost production by 1200 kg, from 5600 to 6800 kg. This increase was accompanied by an exceptional ROI of 18,857.6%, establishing it as a highly profitable investment in industrial processes. Additionally, more efficient resource management was achieved, reducing operational costs and optimizing operations.

4. Discussion

The results obtained in this study were made possible through the application of the developed methodology in a controlled environment at a composting plant located in Cajamarca, Colombia. The conditions included the availability of historical data, technological infrastructure for integrating sensors and actuators, as well as a management system that allowed real-time operational adjustments. This context facilitated the implementation of the DTs and enabled the validation of its capability to improve both the efficiency and quality of the compost produced. The composting process is inherently sensitive to various external variables that can affect the validity and reproducibility of experimental results. These include ambient temperature and humidity, human intervention, sensor drift over time, and variability in the composition of organic matter. If unaccounted for, these factors may introduce biases or mask the actual performance of the DTs system.

In this study, the system was operated under normal facility conditions, without artificial isolation of the composting beds. The decision not to cover the composting rows allowed the DT to be exposed to real environmental fluctuations, enabling the evaluation of its adaptive behavior in operationally realistic scenarios, such as changing weather and uncontrolled humidity. To mitigate human-induced variability, a standard operating procedure (SOP) was established for key manual tasks such as irrigation, pile turning, and data logging. Additionally, incoming organic material was preselected and homogenized prior to composting to reduce heterogeneity in its chemical and biological properties. While sensors were not recalibrated during the trial period, they were configured using verified factory settings and validated prior to deployment, ensuring acceptable accuracy levels throughout the experiment. Under these conditions, the DT system demonstrated stable behavior and generated valuable recommendations despite the presence of environmental noise. This highlights its operational robustness and suitability for deployment in non-laboratory, real-world environments.

The decision to use one-way ANOVA and Levene's test was driven by the need to clearly and accessibly demonstrate the statistically significant difference in process efficiency before and after the implementation of the DTs. Given the applied nature of the study and the operational context in which it was conducted, interpretative clarity was prioritized over methodological complexity. Nonetheless, the collection of over 500 data points provides a robust foundation for conducting multivariate analyses and regression modeling, which are currently being developed as part of the project's next phase. This initial stage successfully fulfills the objective of validating the model's effectiveness under real-world conditions with strong statistical power.

Beyond the results obtained in the composting case study, the proposed methodology stands out for its modular, scalable, and adaptable structure, which enables its application in other productive sectors with specific adjustments. This adaptability lies in the functional separation of its three layers—physical, cloud, and virtual—which can be reconfigured according to the technological and operational requirements of each new context. In precision agriculture, the methodology could incorporate sensors for soil nutrients, crop stress, or satellite imagery, alongside decision models focused on irrigation scheduling, fertilization, or pest control. The virtual layer could simulate plant growth dynamics and provide real-time recommendations. Expected benefits include greater resource efficiency, increased agricultural productivity, and reduced environmental impact. The validation process revealed several key aspects that contributed to the success of the proposed methodology. What worked particularly well was the integration of low-cost sensors with cloud-based analytics and virtual modeling, which enabled accurate and timely operational feedback. This setup proved effective even in a rural environment with limited resources and minimal technical expertise. However, the implementation also exposed some technological risks, such as sensor drift, data communication interruptions, and dependence on local connectivity infrastructure. These risks were mitigated through calibration protocols, standard operating procedures (SOPs), and redundancy in manual data verification. The conditions of success identified include the presence of at least a basic digital infrastructure, clearly defined performance indicators (KPIs), and personnel with the capacity to respond to system recommendations. 
Moreover, the ability to adapt the virtual layer dynamically and to simulate future scenarios in response to environmental or operational changes was critical in achieving the desired outcomes. These insights will be instrumental in future deployments of the methodology across other sectors.
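The closed loop described above can be sketched in a few classes. In this loop, the physical layer senses, the cloud layer stores and smooths data, and the virtual layer recommends actions that are fed back to the physical system. This is a minimal Python sketch, not the authors' implementation. The class names, the moving-average smoothing, and the moisture and temperature bands in the example are hypothetical placeholders. They serve only to show how the layers remain separable, so that one layer can be replaced (for example, by a simulation model in the virtual layer) without modifying the others.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List, Tuple


@dataclass
class PhysicalLayer:
    """Physical environment: exposes sensor readings and executes actions."""
    read_sensors: Callable[[], Dict[str, float]]
    actions_log: List[str] = field(default_factory=list)

    def actuate(self, action: str) -> None:
        # With human-in-the-loop operation, "actuating" may mean handing the
        # recommendation to an operator; here it is only logged.
        self.actions_log.append(action)


@dataclass
class CloudLayer:
    """Cloud layer: stores readings and provides smoothed values."""
    history: List[Dict[str, float]] = field(default_factory=list)

    def ingest(self, reading: Dict[str, float]) -> None:
        self.history.append(reading)

    def recent_mean(self, variable: str, window: int = 3) -> float:
        # Averaging the last few readings dampens sensor noise
        return mean(r[variable] for r in self.history[-window:])


@dataclass
class VirtualLayer:
    """Virtual layer: compares the smoothed state with target bands."""
    targets: Dict[str, Tuple[float, float]]  # variable -> (low, high), hypothetical

    def recommend(self, cloud: CloudLayer) -> List[str]:
        actions = []
        for variable, (low, high) in self.targets.items():
            value = cloud.recent_mean(variable)
            if value < low:
                actions.append(f"increase {variable}")
            elif value > high:
                actions.append(f"decrease {variable}")
        return actions


def run_cycle(physical: PhysicalLayer, cloud: CloudLayer, virtual: VirtualLayer) -> None:
    """One pass of the bidirectional loop: sense, store, analyze, act."""
    cloud.ingest(physical.read_sensors())
    for action in virtual.recommend(cloud):
        physical.actuate(action)


# Hypothetical reading and placeholder target bands
readings = iter([{"moisture": 35.0, "temperature": 55.0}])
physical = PhysicalLayer(read_sensors=lambda: next(readings))
cloud = CloudLayer()
virtual = VirtualLayer(targets={"moisture": (40.0, 60.0), "temperature": (45.0, 65.0)})
run_cycle(physical, cloud, virtual)
print(physical.actions_log)  # ['increase moisture']
```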

In wastewater treatment, the methodology could integrate parameters such as pH, dissolved oxygen, and sludge retention time to optimize system performance and ensure regulatory compliance. Potential benefits include lower energy consumption, improved treatment quality, and greater operational stability. Beyond these sectors, the methodology could be extended to food processing, recycling, industrial fermentation, and smart logistics, where continuous monitoring and simulation could support process optimization, traceability, and waste reduction. However, transferring the methodology to more complex or regulated environments poses specific challenges. These include developing domain-specific simulation models, integrating diverse data sources, training specialized personnel, and ensuring compatibility with existing systems such as SCADA or LIMS. Even so, the associated benefits, such as greater operational resilience, process transparency, and data-driven decision support, justify its implementation in line with circular economy strategies and Industry 5.0 objectives.

Regarding limitations, the methodology was tested in a single case study, which limits the generalizability of the results. Furthermore, the availability of technological infrastructure and the initial costs may hinder implementation in sectors with limited resources. The technical complexity of the setup could also be challenging in environments with little experience in advanced technologies. Compared with methodologies such as [17,24], this approach offers greater adaptability to context-specific variables and lower technical implementation costs. Whereas previous work focused on manufacturing lines, our proposal addresses environmental applications with high variability. In addition to the comparison with the methodology of [24], this study includes a broader comparative analysis with other relevant works on waste treatment and DTs. For example, the methodology proposed by [17] focuses on data-driven modeling of production systems using invariant patterns. However, it lacks a contextualized application to biological or waste-related processes and does not demonstrate real-world validation or economic impact [17].

Similarly, ref. [4] developed a digital portfolio tool for waste mitigation based on cognitive DTs, emphasizing portfolio management over real-time operational control. Their work, while conceptually rich, does not implement a closed-loop feedback system or show measurable improvements in process efficiency [4]. In contrast, our approach integrates physical sensors, simulation, and dynamic feedback loops, achieving a 10% increase in composting efficiency and over 1200 kg/month of additional product yield. Ref. [13] explored the use of DTs in waste systems through business process modeling. However, their implementation remains theoretical and lacks both performance metrics and real-time interaction between the physical and virtual environments [13].

The case study also adheres to the principles of ecological validity, as it was conducted in a real operational environment using low-cost technologies and non-specialized personnel. This reinforces the applicability of the proposed model in similar low-tech contexts. Far from being a limitation, validation under real conditions, combined with statistically significant results (ANOVA, p < 0.001), demonstrates the model's viability in settings where advanced technologies are rarely applied. Compared with previous works, our case study provides not only conceptual advances but also empirically validated operational impact through an accessible and cost-effective implementation. The methodology was intentionally designed to be modular, scalable, and replicable so that it can be deployed in other sectors and regions, as discussed further below.

To strengthen the comparative analysis, a detailed evaluation was conducted against the methodology proposed by [24], which is widely recognized for its robustness in industrial DT implementations. Qamsane's approach is rooted in system development life cycle (SDLC) principles and aimed primarily at manufacturing environments. By contrast, our methodology showed greater adaptability, cost-efficiency, and contextual relevance, particularly for composting systems. A comprehensive quantitative analysis, developed as part of a doctoral dissertation, evaluated both methodologies against a broad set of criteria, including scalability, interoperability, implementation costs, and customization capacity. The results showed that the proposed methodology consistently outperformed Qamsane's approach in areas requiring contextual flexibility and cost efficiency. Qamsane's framework aligns strongly with industrial standards and has a robust structural design. Nevertheless, it showed limitations when applied to specific domains such as composting, where adaptability and practical deployment in low-tech environments are essential. This comparative evaluation reinforces the methodological soundness and practical suitability of our proposal for low-tech or decentralized systems where flexibility and economic feasibility are critical.

Future research will focus on multi-case studies in fields such as wastewater treatment, precision agriculture, and decentralized manufacturing, where the minimum replication conditions have already been outlined. At this stage, however, the depth, traceability, and robustness of the validation process outweigh the need for multiple study sites.

Beyond composting, recent studies in anaerobic digestion [7] and recycling systems [3] highlight the need for intelligent monitoring and process optimization in decentralized waste streams. Our methodology directly addresses these needs through its adaptable architecture and capacity for local deployment, offering a solution that balances technical performance with economic and operational viability. Moreover, in the context of smart cities, ref. [50] emphasizes the importance of classifying DTs based on their lifecycle and integration levels. While their taxonomy is useful for conceptual design, it does not provide an implementation framework. In contrast, the methodology proposed here offers a replicable process tested in real conditions with measurable impact, aligning with smart city objectives such as sustainability, circularity, and resource efficiency.

An analysis of potential negative effects reveals that implementing the DTs could increase reliance on complex digital systems, potentially leading to vulnerabilities in the event of technological failures or cyberattacks. Additionally, poorly planned scalability could result in overspending and difficulties maintaining consistency in results. In the case of adaptations to other contexts, it will be crucial to analyze the specificities of the target system to avoid imbalances in the integration of the digital and physical layers.

Integration with other waste management systems represents a significant opportunity. DTs can be linked with IoT platforms and geographic information systems (GIS) to improve coordination and decision-making. However, this requires designs that ensure compatibility and real-time data exchange.

This study was deliberately limited to a single case application at a composting facility in Cajamarca, Colombia, due to its alignment with the research scope of the doctoral project and the availability of a controlled environment appropriate for initial validation. This methodological choice reflects a strategic decision to conduct a deep, context-sensitive validation under real operating conditions. Implementing DTs in low-tech environments such as rural composting facilities requires a high degree of customization, close control over contextual variables, and extended observation periods. These constraints justify the adoption of an intensive single-case study rather than a multi-site or extensive approach, which could have compromised the level of detail, control, and iterative refinement necessary at this stage of development.

Far from being a limitation, this focused approach enabled rigorous control of operational variables and robust validation under real-world conditions. The modular architecture of the proposed model, together with explicitly defined minimum replication conditions, supports its adaptation to other sectors and use cases. Rather than relying on abstract claims, the study presents verifiable evidence in the form of statistically significant results with high statistical power and a quantifiable return on investment (ROI). This evidence reinforces both its scientific rigor and its practical relevance.

Although the experimental validation focused on composting, the selected performance indicators, such as process duration, material homogeneity, and operational efficiency, are fundamental metrics that apply across many production and service systems. They were chosen for their practical relevance, ease of measurement, and direct responsiveness to DT interventions, especially in low-digitalization contexts. Their statistically significant changes demonstrate the feasibility and added value of the proposed methodology under real-world conditions. While the current study prioritizes clarity and robustness over scope, future research will expand the range of indicators to include, for example, emissions, process variability, and economic return. This will further validate the methodology's applicability in sectors such as precision agriculture, water treatment, and decentralized manufacturing.

In addition, the methodology was designed to balance academic validity with operational feasibility in rural, low-digitalization contexts, prioritizing traceability, interpretability, and the use of accessible technologies. The absence of complex explainable AI (XAI) components is therefore not a shortcoming but a strategic choice aimed at maximizing sustainability and technology transfer in constrained environments.
In this regard, the study not only delivers an effective technical solution but also introduces a replicable conceptual framework aligned with international DT standards, digital maturity models, and explicit scalability criteria. This positions the work as a robust and transferable contribution to the field of DTs in low-tech environments and opens the door to future multi-case validations, progressive XAI integration, and cross-sector applications.
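To illustrate how the indicators named above can be tracked, the sketch below compares KPI samples before and after DT deployment. It rests on two hypothetical assumptions. First, material homogeneity is summarized by the coefficient of variation, where lower values indicate more homogeneous material. Second, improvement is expressed as the relative change of the KPI mean. The KPI name and values in the example are placeholders, not results from the study.

```python
from statistics import mean, stdev
from typing import Dict, List


def percent_change(before: float, after: float) -> float:
    """Relative change of a KPI in percent (negative means a reduction)."""
    return (after - before) / before * 100.0


def coefficient_of_variation(samples: List[float]) -> float:
    """Sample standard deviation divided by the mean, in percent.

    Used here as a hypothetical homogeneity proxy: lower is more homogeneous.
    """
    return stdev(samples) / mean(samples) * 100.0


def kpi_report(before: Dict[str, List[float]],
               after: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Compare each KPI's mean and variability before and after deployment."""
    report = {}
    for name in before:
        report[name] = {
            "change_pct": percent_change(mean(before[name]), mean(after[name])),
            "cv_before_pct": coefficient_of_variation(before[name]),
            "cv_after_pct": coefficient_of_variation(after[name]),
        }
    return report


# Placeholder samples for a hypothetical "duration_days" KPI
baseline = {"duration_days": [30, 32, 31]}
with_dt = {"duration_days": [27, 28, 27]}
print(kpi_report(baseline, with_dt))
```

Reporting variability alongside the mean reflects the study's emphasis on process consistency as well as efficiency.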

A direct performance comparison between the proposed DT methodology and other existing approaches was not possible due to the lack of standardized quantitative metrics or publicly available field data in most reviewed studies. As identified in the literature review, the majority of related works remain at a conceptual or simulated stage, often lacking empirical validation in real-world conditions. This study contributes to closing that gap by offering one of the first field-tested implementations specifically applied to rural composting systems, with measurable improvements in efficiency, consistency, and economic return. The results obtained serve as a practical benchmark for future DT deployments in low-tech environments.

This focused implementation allowed for a rigorous evaluation of the methodology’s effectiveness, including real-time deployment, sensor integration, operator interaction, and statistical validation of performance improvements. Nonetheless, we acknowledge that reliance on a single use case may limit the generalizability of the findings.

The proposed methodology, due to its modular and scalable structure, holds significant potential for application in other sectors beyond composting. In precision agriculture, it could be adapted to monitor variables such as soil moisture, nutrient levels, and crop stress, enabling real-time decision-making for irrigation, fertilization, and pest control. In the context of wastewater treatment, the methodology could integrate parameters like pH, dissolved oxygen, and sludge retention time to optimize biological treatment processes and ensure regulatory compliance. Additionally, in decentralized manufacturing or cold chain logistics, DTs could be used to monitor equipment performance or temperature-sensitive goods, respectively. These examples illustrate the flexibility of the model to support operational efficiency, predictive maintenance, and data-driven decision-making across diverse industrial and environmental scenarios.

For successful replication, certain minimum conditions must be met:

Basic digital infrastructure, including sensors for critical variables and connectivity (e.g., WiFi, LoRa, or 4G).

Access to cloud or edge computing platforms capable of running simulations and storing data.

Defined performance indicators (KPIs) relevant to the target process.

Operational control points that can be influenced via feedback (manually or automatically).

Willingness and capacity for operator engagement, especially in environments with human-in-the-loop systems.
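The five minimum conditions listed above can be expressed as a simple readiness checklist. In the following Python sketch, the `SiteProfile` fields and the `readiness_gaps` function are hypothetical names introduced for illustration. The function only reports which conditions are unmet and makes no judgment about how to close the gaps.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class SiteProfile:
    """Hypothetical description of a candidate site for DT replication."""
    critical_variables: Set[str]
    sensed_variables: Set[str]
    connectivity: Set[str]        # e.g. {"WiFi", "LoRa", "4G"}
    has_cloud_or_edge: bool       # platform for simulation and data storage
    kpis: List[str] = field(default_factory=list)
    control_points: List[str] = field(default_factory=list)
    operators_engaged: bool = False


def readiness_gaps(site: SiteProfile) -> List[str]:
    """Return the unmet minimum replication conditions (empty list means ready)."""
    gaps = []
    missing = site.critical_variables - site.sensed_variables
    if missing:
        gaps.append("no sensor for: " + ", ".join(sorted(missing)))
    if not site.connectivity:
        gaps.append("no connectivity")
    if not site.has_cloud_or_edge:
        gaps.append("no cloud or edge platform")
    if not site.kpis:
        gaps.append("no KPIs defined")
    if not site.control_points:
        gaps.append("no control points for feedback")
    if not site.operators_engaged:
        gaps.append("no operator engagement")
    return gaps


site = SiteProfile({"temperature", "moisture"}, {"temperature"}, {"LoRa"}, True,
                   ["process duration"], ["irrigation"], True)
print(readiness_gaps(site))  # ['no sensor for: moisture']
```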

These foundational requirements enable the methodology to maintain its modular integrity while ensuring contextual adaptability. Future studies will focus on piloting the proposed framework in these sectors to test its scalability and robustness under different technical, environmental, and organizational conditions. The proposed methodology is particularly applicable to scenarios characterized by operational variability, limited automation, and the need for contextualized feedback systems. Suitable environments include rural composting units, decentralized agricultural operations, small-scale wastewater treatment plants, and logistics systems requiring traceability and real-time monitoring. Common enabling conditions include access to basic sensing infrastructure, connectivity, and human operators capable of interacting with digital feedback. In these contexts, the modular and scalable design of the methodology facilitates adaptation without requiring full digital maturity or high capital investment.

The proposed methodology was designed to support technical interoperability across various systems and industrial contexts using standardized and modular communication protocols. At the physical and edge layers, data acquisition devices (e.g., sensors, PLCs, microcontrollers) can communicate using MQTT, Modbus RTU/TCP, or OPC-UA, depending on the sector’s requirements and available infrastructure. These protocols allow for efficient, low-latency, and scalable data transfer across heterogeneous devices. At the middleware and cloud levels, RESTful APIs, WebSocket connections, and Node-RED-based orchestration layers are used to ensure flexible integration with enterprise systems, digital dashboards, and decision support tools. The virtual environment may operate on simulation engines such as AnyLogic, MATLAB R2021a, or custom Python 3.8 models, allowing bidirectional data flows between the DT and external applications, such as ERP, MES, or GIS platforms.
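To make the protocol layer concrete, the sketch below defines a hypothetical JSON message schema for a single sensor reading and an MQTT-style topic hierarchy (`site/asset/variable`). Only serialization is shown, using the Python standard library. Publishing the payload would be delegated to an MQTT client library such as Eclipse Paho, which is omitted here. The schema fields and topic layout are illustrative assumptions, not the study's configuration.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class Telemetry:
    """Hypothetical message schema for one sensor reading."""
    site: str
    asset: str
    variable: str
    value: float
    unit: str
    timestamp: str  # ISO 8601 in UTC


def topic_for(msg: Telemetry) -> str:
    """Hypothetical MQTT topic hierarchy: site/asset/variable."""
    return f"{msg.site}/{msg.asset}/{msg.variable}"


def encode(msg: Telemetry) -> bytes:
    """Serialize to a compact UTF-8 JSON payload."""
    return json.dumps(asdict(msg), separators=(",", ":")).encode("utf-8")


def decode(payload: bytes) -> Telemetry:
    """Parse a payload back into the schema; unknown or missing keys raise TypeError."""
    return Telemetry(**json.loads(payload.decode("utf-8")))


msg = Telemetry("site-01", "bed-03", "temperature", 58.4, "degC",
                datetime.now(timezone.utc).isoformat())
payload = encode(msg)
print(topic_for(msg))  # site-01/bed-03/temperature
print(payload)
```

The same payload could be carried over REST or WebSocket connections unchanged. Keeping the schema independent of the transport is one way to support the protocol flexibility described above.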

For integration with more complex or emergent systems, such as smart factories, circular supply chains, or urban infrastructure (smart cities), the architecture can be scaled horizontally and vertically. Horizontal scaling involves replicating DT modules across multiple assets or units (e.g., composting beds, fields, tanks), while vertical scaling allows integration into enterprise-level analytics platforms or AI-based supervisory control systems. Containerization technologies (e.g., Docker) and standardized message schemas (e.g., JSON, XML, OPC-UA nodesets) facilitate deployment in hybrid environments that combine on-premises and cloud resources. This interoperability approach ensures not only the extensibility of the methodology across industrial sectors but also its resilience and adaptability to evolving technological ecosystems. It allows organizations to adopt DT technologies gradually without complete system overhauls, in line with the principles of sustainable digital transformation.
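Horizontal and vertical scaling can be illustrated with a registry that creates one DT module per asset on demand and exposes fleet-level summaries to higher-level platforms. The sketch below is hypothetical: the class names and summary fields do not come from the study, and all data stays in memory. In a containerized deployment, each module would more plausibly run as its own service.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List


@dataclass
class TwinModule:
    """One DT instance bound to a single physical asset (e.g. a composting bed)."""
    asset_id: str
    readings: Dict[str, List[float]] = field(default_factory=dict)

    def update(self, variable: str, value: float) -> None:
        self.readings.setdefault(variable, []).append(value)

    def latest(self, variable: str) -> float:
        return self.readings[variable][-1]


class TwinRegistry:
    """Horizontal scaling: one module per asset, created on first use.
    Vertical scaling: fleet-level summaries for enterprise dashboards."""

    def __init__(self) -> None:
        self.modules: Dict[str, TwinModule] = {}

    def module(self, asset_id: str) -> TwinModule:
        if asset_id not in self.modules:
            self.modules[asset_id] = TwinModule(asset_id)
        return self.modules[asset_id]

    def fleet_summary(self, variable: str) -> Dict[str, float]:
        # Only assets that actually report this variable are summarized
        values = [m.latest(variable) for m in self.modules.values()
                  if variable in m.readings]
        return {"assets": len(values), "mean": mean(values),
                "min": min(values), "max": max(values)}


registry = TwinRegistry()
for bed, temp in [("bed-01", 55.0), ("bed-02", 61.0), ("bed-03", 58.0)]:
    registry.module(bed).update("temperature", temp)
print(registry.fleet_summary("temperature"))
```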

To ensure that the proposed methodology can be successfully verified and transferred to other domains, its implementation should follow a structured validation process. This involves (i) adapting the system configuration to local operational and environmental characteristics, (ii) defining measurable key performance indicators (KPIs), and (iii) conducting case study trials to monitor short-term and long-term impacts.

For this purpose, the following minimum requirements are necessary:

Logistical support: Routine access to physical infrastructure, operational continuity, and availability of basic tools for system monitoring and adjustment.

Technological infrastructure: At least one sensor or data acquisition device per critical variable; a local gateway or cloud platform for processing and storage; and basic connectivity (WiFi, 4G, LoRa).

Human resources: One or more personnel trained in system operation, familiar with basic process variables, and capable of following operational procedures and responding to DT suggestions.

Integration capability: The ability to connect with existing systems, such as PLCs, spreadsheets, SCADA, or ERP platforms, directly or via APIs, depending on the digital maturity of the target environment.
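For sites whose integration capability is limited to spreadsheets, a CSV export is often the simplest bridge into the DT. The sketch below assumes a hypothetical manual-log layout with the columns `day`, `bed`, `moisture_pct`, and `temperature_c`. Rows with missing or malformed numeric values are counted and skipped rather than imputed, in keeping with the manual data verification mentioned earlier.

```python
import csv
import io
from typing import Dict, List, Tuple


def load_manual_log(text: str,
                    numeric_columns: List[str]) -> Tuple[List[Dict[str, object]], int]:
    """Parse a CSV export of a manual operations log.

    Returns (rows, skipped). Numeric columns are converted to float; rows whose
    numeric fields are empty, malformed, or missing are skipped and counted so
    that they can be flagged for manual verification instead of being guessed.
    """
    rows, skipped = [], 0
    for row in csv.DictReader(io.StringIO(text)):
        try:
            parsed = {key: float(value) if key in numeric_columns else value
                      for key, value in row.items()}
        except (TypeError, ValueError):  # None for short rows, "" or text otherwise
            skipped += 1
            continue
        rows.append(parsed)
    return rows, skipped


# Hypothetical export with one incomplete entry
export = """day,bed,moisture_pct,temperature_c
1,bed-01,52.5,57.0
1,bed-02,,55.5
"""
rows, skipped = load_manual_log(export, ["moisture_pct", "temperature_c"])
print(len(rows), skipped)  # 1 1
```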

Meeting these requirements allows the methodology to retain its modular and adaptable nature while ensuring operational feasibility. The architecture also leaves room to validate its integration with technologies and platforms other than those discussed in this document. This strategic approach facilitates deployment even in resource-limited environments and lays the foundation for scaling the methodology to more complex industrial or urban contexts. The robustness of the methodology is also reflected in its modular architecture, which allows continuous iteration and facilitates adaptation to various contexts. In conclusion, the proposed methodology represents a significant advance in the application of DTs to the improvement of production and service systems. However, future research should address the identified limitations, improve interoperability, and explore strategies for sustainable and scalable implementation across different industrial sectors.

5. Conclusions

This work represents a substantial advance in the conceptualization, implementation, and validation of DTs for production and service systems, with a special focus on organic waste management. Its contributions are grouped into three dimensions (methodological, practical, and theoretical), followed by their long-term effects and an assessment of implementation costs and scalability:

(a) Methodological Contributions

This study introduces a set of methodological innovations that advance the implementation of DTs in low-tech, variable environments:

An applicable and operational definition of DTs was proposed, which integrates predictive capabilities, dynamic feedback, and autonomous management. This conceptual clarity facilitates the distinction between Digital Models, Shadows, and Twins, and supports their implementation across different maturity levels.

A modular and scalable three-layer architecture—composed of physical, cloud, and virtual environments—was designed to enable integration with emerging technologies such as IoT, AI, and cloud computing. This structure supports real-time monitoring, simulation, and feedback loops in dynamic contexts.

The step-by-step methodology, structured in contextual and propositional phases, offers a replicable framework for guiding the development of DTs. It includes stages for system diagnosis, tool selection, simulation modeling, physical integration, and iterative validation.

A novel approach to gradual DT adoption was established through three levels of implementation: Digital Model (monitoring), Digital Shadow (simulation), and Digital Twin (optimization). This staged framework enables organizations to scale up according to their available infrastructure and operational complexity.

Additionally, the methodology introduces the concept of minimum replicability conditions, outlining the essential requirements for transferring the model to new sectors or environments. These include the availability of sensors, KPI definitions, connectivity, and human-operator engagement.
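The three implementation levels listed above can be represented as an ordered scale. The following sketch assumes that the levels are cumulative, which is an interpretation rather than a rule stated in the study. Under this assumption, a site reaches the Digital Shadow level only if monitoring is already in place, and the Digital Twin level only if simulation is in place as well. The enum and function names are hypothetical.

```python
from enum import IntEnum


class DTLevel(IntEnum):
    NONE = 0
    DIGITAL_MODEL = 1   # monitoring
    DIGITAL_SHADOW = 2  # simulation
    DIGITAL_TWIN = 3    # optimization with feedback to the physical system


def implementation_level(monitoring: bool, simulation: bool,
                         optimization: bool) -> DTLevel:
    """Highest level reached, assuming each level requires all levels below it."""
    steps = [(monitoring, DTLevel.DIGITAL_MODEL),
             (simulation, DTLevel.DIGITAL_SHADOW),
             (optimization, DTLevel.DIGITAL_TWIN)]
    level = DTLevel.NONE
    for available, candidate in steps:
        if not available:
            break  # a missing capability caps the level here
        level = candidate
    return level


print(implementation_level(True, True, False).name)  # DIGITAL_SHADOW
```

Because `IntEnum` members are ordered, a planning tool could compare a site's current level with a target level directly (for example, `level < DTLevel.DIGITAL_TWIN`).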

This set of contributions provides a robust foundation not only for technical implementation but also for strategic planning of DT adoption in sectors traditionally excluded from digital transformation efforts.

To consolidate the main contributions of this study, Figure 7 provides an integrative representation of the proposed DT methodology. It illustrates the logical flow of the two-phase framework (contextual and propositional), along with its empirical validation through real-world deployment in a rural composting facility. It also outlines the methodology's potential adaptability to other domains, including wastewater treatment, smart agriculture, and decentralized bio-processing systems. Beyond synthesizing the case study, the figure conveys the generalizable procedure, or baseline structure, required to replicate and scale the methodology across different sectors. It thereby reinforces the modular nature, strategic sequence, and cross-sectoral transferability of the approach, making it a practical foundation for applying DTs in circular economy contexts with limited digital infrastructure.

(b) Practical Contributions

The methodology was successfully validated in a real composting facility located in Cajamarca, Colombia, under operational conditions and using non-specialized personnel. This validation generated several concrete and replicable outcomes:

A 10% increase in efficiency and reduction in process variability.

A monthly gain of 1200 kg of compost, increasing total production without changes to the physical process, solely through digital optimization.

A return on investment (ROI) of 18,957.6%, derived from a minimal capital investment of approximately USD 50, showing that DT adoption is feasible in low-budget contexts.
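The ROI figure above depends on how ROI is defined. The sketch below assumes the conventional definition, ROI = (gain - investment) / investment × 100, and includes its inverse so that the reported figure can be cross-checked. Taking the reported ROI and the approximate USD 50 investment at face value, the inverse gives a total gain of about USD 9,529, or a net benefit of about USD 9,479. Both values inherit the approximation in the investment figure and refer to whatever evaluation period the study's ROI calculation covered.

```python
def roi_percent(gain: float, investment: float) -> float:
    """ROI in percent, assuming ROI = (gain - investment) / investment * 100."""
    return (gain - investment) / investment * 100.0


def implied_gain(roi_pct: float, investment: float) -> float:
    """Invert roi_percent: the total gain implied by a given ROI and investment."""
    return investment * (1.0 + roi_pct / 100.0)


gain = implied_gain(18957.6, 50.0)         # reported ROI, approximate investment
print(round(gain, 1))                      # 9528.8
print(round(roi_percent(gain, 50.0), 1))   # 18957.6
```

Because the investment is the denominator, small absolute changes in a very small cost base produce large swings in ROI. This is why the absolute gain is also worth reporting.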

The DT enabled dynamic adjustments to aeration and irrigation, improving resource use and reducing waste. The case study confirmed that real-time decision support, even with basic connectivity and computing infrastructure, can significantly enhance operational outcomes.

Importantly, the implementation demonstrated ecological validity: results were obtained in an uncontrolled, real-world setting with external factors such as climate and operator variability, reinforcing the robustness and practical reliability of the model.

(c) Theoretical Contributions

This work advances the theoretical foundations of DTs by proposing a context-sensitive definition that incorporates predictive intelligence, dynamic feedback, and actionable virtual environments. Unlike purely conceptual frameworks, this definition is grounded in measurable outcomes and operational metrics.

The research addresses an evident gap in DT literature by providing a domain-specific implementation framework for organic waste treatment, especially composting, which has traditionally been excluded from industrial digital transformation efforts. While most existing methodologies focus on manufacturing, this work contributes a novel application schema that supports real-time adaptation in biologically variable processes.

In addition, the study provides a quantitative comparison with established methodologies, such as the model by [24], using a multi-criteria evaluation that includes scalability, interoperability, cost, and replicability. The results of this comparative analysis, supported by a doctoral research framework, highlight the superiority of the proposed model in terms of domain adaptation, economic impact, and practical relevance.

Finally, this work introduces the concept of minimum replicability conditions—a set of foundational requirements (e.g., sensor access, KPIs, human engagement) that allow organizations to assess their readiness for DT adoption. This theoretical contribution bridges the gap between abstract frameworks and operational feasibility.

(d) Long-Term Effects

The results suggest that DTs can act as catalysts for systemic transformation in production systems by enabling continuous improvement and real-time optimization. In the long term, the methodology can support transitions toward circular economy models, improving sustainability through data-driven decisions and feedback-controlled operations.

Future expansions include:

Multi-case validations across sectors like precision agriculture, wastewater treatment, and smart logistics.

Integration with AI, blockchain, and XAI (explainable artificial intelligence) to enhance transparency, traceability, and adaptive learning.

The framework’s modularity and extensibility allow for continuous evolution, keeping pace with technological advances without requiring disruptive infrastructure overhauls.

(e) Implementation Costs and Scalability

Despite the initial perception of high investment for DT projects, this study demonstrates that costs can be dramatically reduced through strategic design choices and open technologies. The extremely high ROI observed in the case study confirms that even low-resource organizations can benefit from DT adoption.

The model is scalable both horizontally (multiple units or assets) and vertically (integration into enterprise-level platforms), with compatibility ensured through standard protocols (e.g., MQTT, REST, OPC-UA). This facilitates deployment in hybrid environments and enhances system resilience.

To ensure sustainability and broader adoption, future efforts should focus on:

Capacity-building initiatives to train personnel in DT operations.

Funding and incentive mechanisms to support small and medium enterprises (SMEs) and public sector entities.

Policy frameworks to promote digital innovation in circular economy ecosystems.