1. Introduction

Artificial intelligence (AI) and the Internet of Things (IoT) have emerged as two of the most significant technologies in the contemporary world. The IoT is a network of physical objects, including cars, household appliances, and other devices, that are embedded with sensors, software, electronics, and network connectivity. These components enable the objects to collect and exchange data over the Internet. The IoT has found applications in many areas. For example, IoT systems enable comprehensive home management. Before arriving home, we can use a smartphone to turn on the heating so that the temperature is at an ideal level. Smart locks close automatically, and the alarm system is activated. The refrigerator checks whether products are running low and can send a shopping list to the user's phone. In addition, Internet-connected cameras and sensors provide immediate notifications about unwanted visitors. The system can distinguish between household members and intruders, record the event, and notify security services [1,2].

The computer science field of AI develops intelligent machines and computer systems that can perform tasks typically associated with human intelligence. AI enables machines to replicate human cognitive abilities through learning, reasoning, problem-solving, and language understanding capabilities [3,4].

AI transforms the IoT by enabling devices to operate without continuous human supervision. AI acts as the central processing element of IoT systems, analysing sensor data and device information in real time across the whole system. Its main functions include event prediction, automatic response to environmental changes, and process optimisation. Integrating AI also allows IoT systems to learn continuously, so their algorithms improve as more data are processed. As a result, decision-making becomes more precise and effective, and the system becomes an intelligent environment that adapts to user needs [5,6,7].

Developing intelligent acoustic systems for the IoT requires building distributed networks of microphones and sound sensors. Such networks can cover residential buildings, industrial production halls, cities, and forest areas. Each sensor operates as an information node that gathers environmental sound data. AI algorithms then analyse and interpret the acoustic signals acquired by the network. This enables automatic sound classification, which recognises the type of sound, identifies anomalies and unusual events, and determines the context and source of their occurrence. Sound analysis can be applied in many areas, including security and cybersecurity. In these areas, appropriate analysis of acoustic information, combined with a proper response, can save lives and property.

The integration of AI and the IoT in acoustic systems opens up a wide range of possibilities. As technology advances, we can expect increasingly sophisticated systems that not only record sounds but also understand them and predict how acoustic events will evolve.

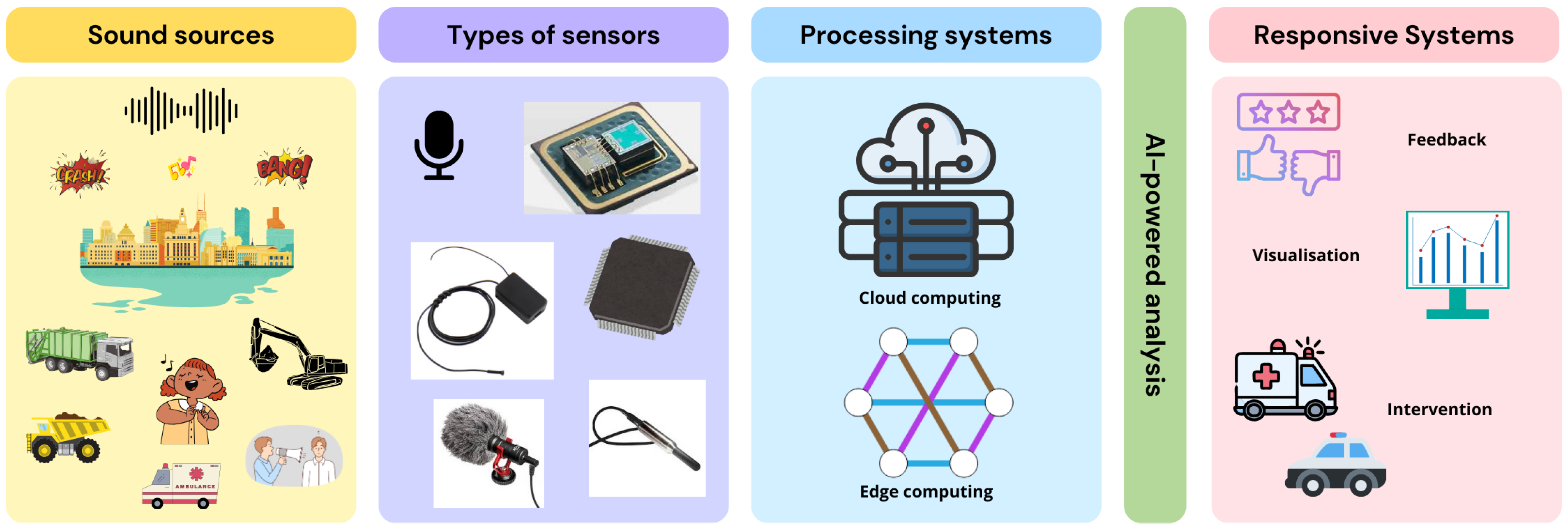

Figure 1 provides a symbolic overview of acoustic sensing and analysis in IoT ecosystems, including various sound sources and sensors, as well as processing and responsive systems.

1.1. Motivations and Contributions

The increasing need to track, comprehend, and respond to technical and environmental acoustic signals in real time inspired this article. Thanks to the rapid development of IoT infrastructure and AI techniques, sound is no longer only a carrier of information for human hearing. It is also a rich data source for intelligent machines. The increased availability of MEMS sensors, edge computing devices, and wireless networks allows acoustic monitoring systems to be deployed widely in urban planning, predictive maintenance, health monitoring, and public safety. However, the application domains are diverse, research efforts are fragmented, and technology is advancing rapidly. A comprehensive overview of state-of-the-art solutions is therefore needed to identify trends, challenges, and research gaps.

This manuscript advances the field in the following ways. First, it provides a cross-domain, organised overview of contemporary acoustic data collection and analysis techniques. Second, it identifies key technological enablers, including acoustic sensor networks, edge and cloud computing paradigms, and artificial intelligence algorithms. Third, it showcases new applications and use cases with practical implications. Finally, it outlines potential avenues for future research, including closer integration of acoustic monitoring with cyber-physical system architectures and smart city systems.

This manuscript examines applications in specific domains, such as medical auscultation, mechanical diagnostics, and environmental noise monitoring, while providing a unified perspective on acoustic information in intelligent systems. Our work extends beyond summarising current methods. Through the integration of AI and the IoT, it establishes conceptual connections between different use cases.

The main contributions of this manuscript are as follows:

We provide a structured and comparative overview of acoustic sensing solutions across healthcare, industry, smart cities, and environmental monitoring.

We identify common technological enablers and patterns, such as the role of MEMS sensors, edge computing, and neural network architectures.

We highlight methodological gaps, particularly in how distributed systems handle uncertainty, interference, and real-time inference.

We propose a conceptual synthesis that frames acoustic data as a unifying layer in cyber-physical systems, suggesting new directions for research into context-aware, adaptive, and resilient monitoring platforms. This broader view enables researchers and practitioners to recognise overlaps between currently fragmented efforts, paving the way for more holistic and interoperable solutions.

1.2. Research Methodology

This article is based on a systematic literature review conducted between 2020 and 2024, encompassing peer-reviewed journal articles, conference proceedings, and white papers in the fields of AI, the IoT, and acoustic sensing. The research process involved utilising scientific databases, including IEEE Xplore, ScienceDirect, SpringerLink, and MDPI. Keywords included combinations of “acoustic sensing,” “machine learning,” “IoT,” “edge computing,” “5G,” “MEMS microphones,” and “sound-based diagnostics.”

The articles were selected based on their relevance to the thematic scope, novelty of the approach, and clarity of methodological description. Each publication was analysed in terms of the following aspects: type of acoustic signal, sensor technology, data preprocessing and feature extraction methods, AI/ML models applied, and practical application domain. The findings were categorised and synthesised to identify common patterns, gaps, and promising future directions. Additionally, real-world case studies were reviewed to assess the feasibility and impact of the technologies in operational environments.

1.3. Organisation

The structure of the article introduces the reader to the basics of the technologies and their practical applications. First, the problem is outlined, explaining why sound, although often overlooked, can be a valuable carrier of information in intelligent systems. The following sections describe the key technologies used for acquiring and analysing acoustic data, including sensors, machine learning algorithms, and data processing architectures. Particular attention is paid to real-world applications of acoustic systems, from medicine and industry to public safety. Finally, the article discusses current challenges, possible development directions, and the future of sound monitoring in the context of AI and the IoT.

2. Related Works

In recent years, acoustic technologies supported by artificial intelligence and the Internet of Things (IoT) have developed rapidly in medical, industrial, and urban applications. Reviews in the literature increasingly focus on specific aspects of these issues. Examples range from wearable sound sensors for health monitoring to fault detection in transportation systems and acoustic machine diagnostics. Despite numerous valuable studies, few papers cover this topic in a holistic, cross-sectional manner or consider the possibilities arising from integrating acoustics, AI, and the IoT. This article addresses this gap. It presents a broad view of modern technologies for acquiring and analysing acoustic information, from both a theoretical perspective and the perspective of real applications. In this way, the article provides an overview of the current state of knowledge. It also serves as a practical compendium for researchers and engineers seeking a comprehensive understanding of this rapidly evolving field.

In [8], Kong et al. provided a comprehensive review of recent developments in portable and wearable acoustic sensors for monitoring human health. They focused on technologies for detecting body sounds, such as heart, lung, and gastrointestinal sounds. They also analysed sensing mechanisms based on capacitive, piezoelectric, and triboelectric principles, among others. They discussed specific examples of portable devices (for example, electronic stethoscopes) and modern wearable sensors in skin patches, smart fabrics, and bioinspired MEMSs [9]. They also analysed the use of these devices in remote care, diagnostics, and telemedicine. The authors assessed the advantages and limitations of individual solutions and indicated directions for further research. They emphasised the potential of these technologies for precise, non-invasive health monitoring, especially at home and for patients requiring constant supervision.

In [10], Mallegni et al. reviewed the applications of miniature microelectromechanical systems (MEMSs) in medicine, particularly in analysing acoustic signals from the body, such as heart and breathing sounds. They showed that MEMSs are gradually replacing traditional stethoscopes, offering greater precision in recording and analysing small vibrations and pressure changes. The article describes the operating principles of these sensors and their advantages, namely miniaturisation, accuracy, and potential for personalised care. It also covers their current limitations. These include low signal amplitude, susceptibility to interference, limited patient acceptance of wearing them, and challenges related to production costs and recycling. The authors highlight the need to develop new materials, improve data analysis algorithms, and integrate these devices more comprehensively with Internet of Things systems and artificial intelligence. They also point out that developing this technology requires not only technical progress but also greater trust from the clinical community. In their opinion, the future of MEMSs in medicine lies in systems with four properties: greater intelligence, greater comfort for users, integration with remote and personalised care, and the ability to record less-studied signals, such as swallowing sounds.

In [11], Aalharbi et al. conducted a comprehensive literature review on belt idler fault detection using acoustic and vibration signals and machine learning methods. Idlers are an essential but difficult-to-monitor element of conveyor systems due to their large number and dispersion in the industrial environment. The review encompasses all key stages of the diagnostic process, from data collection, signal processing, and feature extraction to the construction of machine learning models. Various machine learning and deep learning models, such as SVM, KNN, decision trees, ANN, and CNN, are discussed, with a particular emphasis on their effectiveness in detecting early signs of damage. The authors note that the lack of publicly available datasets significantly hinders further development in this field and poses challenges in selecting optimal model parameters. Drones are proposed as a future tool for automatic fault detection in distributed idlers. The work provides a solid foundation for researchers and practitioners developing belt diagnostic systems using modern ML methods.

In [12], Alshorman et al. comprehensively reviewed methods for the early fault diagnosis of induction motors using sound analysis and acoustic emission. The authors discuss techniques for detecting four main types of faults (bearing, rotor, stator, and compound faults), focusing on signal acquisition and processing methods, feature extraction methods, and applied machine and deep learning algorithms. The review also covers available datasets and practical challenges, such as industrial noise or difficulties in locating fault sources. The article organises current knowledge and identifies research gaps, for example, the lack of public data and limited testing in real-world conditions. It provides a valuable starting point for further research on acoustic diagnostics in industrial systems.

Many existing studies focus on specific aspects, such as the detection of body sounds, engine diagnostics, or vibration-based fault detection. However, no approach yet combines these areas into a coherent vision of future acoustic technologies. This paper compares the latest solutions from different domains and indicates directions for their joint development. Importantly, we emphasise the potential synergy between artificial intelligence, acoustic sensors, and the Internet of Things. This synergy matters especially for distributed, adaptive, and interference-resistant systems. The article is therefore not only a review but also a conceptual contribution. It indicates new possibilities for using sound as a source of knowledge about the state of humans, machines, and the environment.

3. Technologies Supporting Intelligent Acoustic Monitoring

This section presents background information on widely used technologies that support intelligent acoustic monitoring.

3.1. IoT in Acoustic Systems

The IoT is a networked system that connects everyday devices with specialised systems and sensors so that processes can be automated through communication between devices. IoT technology is used in various sectors, including residential smart spaces, urban areas, industrial operations, healthcare facilities, and military command systems. It depends on sensors that detect environmental data and transmit them to analytical or control systems. Acoustic sensors detect sounds and acoustic waves under various environmental conditions, which makes them essential components of IoT systems. They serve multiple functions, including speech recognition, structural analysis, the study of underwater sound waves, and monitoring in security systems [13,14,15,16].

MEMS (microelectromechanical system) microphones represent a significant advancement in IoT acoustic sensor technology. Semiconductor manufacturing allows these devices to be made very small while maintaining good sound quality and sensitivity. MEMS microphones operate at low power levels, making them suitable for mobile devices, smart speakers, speech recognition systems, and wearable technology. A thin silicon membrane inside the microphone converts acoustic pressure fluctuations into electrical signals. MEMS microphones have been widely adopted in IoT systems because they can be integrated with CMOS circuitry and other electronic systems [17,18,19,20].
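The Python sketch below makes the conversion from acoustic pressure to a digital value concrete. It relates sound pressure level (SPL) to the output level of a digital MEMS microphone. For illustration only, it assumes a linear response and a datasheet sensitivity of −26 dBFS at the customary 94 dB SPL (1 Pa) reference. Real sensitivity values differ between devices, and the function names are hypothetical.

```python
import math

P_REF_PA = 20e-6    # reference pressure for SPL in air (20 micropascals)
REF_SPL_DB = 94.0   # sensitivity is commonly quoted at 94 dB SPL (1 Pa RMS)

def spl_db(pressure_rms_pa: float) -> float:
    """Sound pressure level (dB SPL) of an RMS acoustic pressure in pascals."""
    return 20.0 * math.log10(pressure_rms_pa / P_REF_PA)

def expected_dbfs(spl: float, sensitivity_dbfs: float = -26.0) -> float:
    """Digital output level (dBFS) predicted for a given SPL.

    Assumes a linear microphone whose output at 94 dB SPL is sensitivity_dbfs;
    -26 dBFS is an illustrative value, not a standard.
    """
    return sensitivity_dbfs + (spl - REF_SPL_DB)

def spl_from_dbfs(level_dbfs: float, sensitivity_dbfs: float = -26.0) -> float:
    """Inverse mapping: estimate the acoustic SPL from a measured digital level."""
    return level_dbfs - sensitivity_dbfs + REF_SPL_DB

print(round(spl_db(1.0), 2))    # SPL of 1 Pa RMS
print(expected_dbfs(74.0))      # digital level for a 74 dB SPL input
```

Under these assumptions, the inverse function lets an IoT node report calibrated SPL values instead of raw digital levels.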

Hydrophones and ultrasonic sensors are fundamental components for acoustic analysis in water environments and industrial applications. Hydrophones are specialised microphones that record sound underwater. They are used in oceanographic and biological monitoring, as well as in sonar navigation systems. In industry, hydrophones are used to detect pipeline leaks and monitor the condition of underwater installations. Ultrasonic sensors are used for quality control inspections and object detection in industry and for diagnostic imaging in medicine. In the IoT, ultrasound is used for contactless distance measurement in car parking systems and in logistics process automation [21,22,23,24].
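Contactless distance measurement of this kind typically relies on the time of flight of an ultrasonic echo. The sketch below assumes propagation in dry air and uses the common linear approximation c ≈ 331.3 + 0.606·T m/s, where T is the temperature in °C. The pulse travels to the object and back, so the distance is half of c·t. The function names are illustrative.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c

def echo_distance_m(round_trip_s: float, temp_c: float = 20.0) -> float:
    """Distance to a reflecting object from the round-trip time of an ultrasonic echo.

    The pulse covers the distance twice (out and back), hence the division by 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_of_sound(temp_c) * round_trip_s / 2.0

# A 10 ms echo at 20 degrees Celsius corresponds to roughly 1.7 m.
print(f"{echo_distance_m(0.010):.3f} m")
```

The temperature argument matters in practice. A 20 °C change in air temperature alters the speed of sound, and hence the estimated distance, by roughly 3.5%.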

Directional microphones and microphone arrays can significantly improve sound recording quality. Directional microphones focus on the sound source and attenuate background noise, which is critical in conference systems, voice recording, and security applications. Microphone arrays consist of multiple microphones working together. They enable even more precise filtering of unwanted sounds and localisation of the sound source in space. Beamforming algorithms allow an array to adjust its directional sensitivity to its surroundings. This makes arrays useful in robotics, smart voice assistants, and acoustic monitoring systems [25,26,27,28].
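Delay-and-sum is the simplest beamforming algorithm. Each channel is shifted in time so that a wavefront arriving from the chosen direction lines up across the microphones, and the channels are then averaged. Sound from the steered direction adds coherently, while sound from other directions partly cancels. The NumPy sketch below assumes a uniform linear array, a far-field source, and a speed of sound of 343 m/s. Delays are applied in the frequency domain, which treats each block as periodic, so this is an illustration rather than a production beamformer.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at about 20 degrees Celsius)

def delay_and_sum(signals: np.ndarray, mic_x: np.ndarray, fs: float, angle_deg: float) -> np.ndarray:
    """Steer a linear microphone array towards angle_deg (0 = broadside).

    signals: shape (n_mics, n_samples), one row per microphone.
    mic_x:   microphone positions along the array axis in metres.
    Far-field model: a plane wave from angle theta reaches the microphone at x
    with a delay of x * sin(theta) / c relative to the array origin.
    """
    n = signals.shape[1]
    tau = mic_x * np.sin(np.deg2rad(angle_deg)) / SPEED_OF_SOUND   # arrival delays (s)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectra = np.fft.rfft(signals, axis=1)
    # Advance each channel by its arrival delay so the wavefronts line up, then average.
    aligned = spectra * np.exp(2j * np.pi * freqs[None, :] * tau[:, None])
    return np.fft.irfft(aligned.mean(axis=0), n=n)

# Usage: a 1 kHz tone arriving from 30 degrees at a 4-microphone array with 4 cm spacing.
fs, n = 16000, 1600
mic_x = np.arange(4) * 0.04
t = np.arange(n) / fs
true_tau = mic_x * np.sin(np.deg2rad(30.0)) / SPEED_OF_SOUND
signals = np.sin(2 * np.pi * 1000.0 * (t[None, :] - true_tau[:, None]))
for angle in (30.0, -60.0):
    out = delay_and_sum(signals, mic_x, fs, angle)
    print(angle, round(float(np.mean(out**2)), 3))   # output power for each steering angle
```

The output power is highest when the array is steered towards the true direction of arrival. Scanning the angle and picking the maximum is a basic way to localise a source.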

Modern technology relies on IoT sensors and devices, including microphones, hydrophones, and ultrasonic devices. Their use extends beyond sound recording to environmental monitoring, acoustic anomaly detection, and the structural analysis of buildings and critical infrastructure. Sensors in IoT systems are connected to communication modules that enable both remote control and immediate data analysis. When combined with artificial intelligence algorithms, these sensors can not only record sounds but also interpret their meaning. This ability opens up new possibilities for security systems, monitoring technologies, and the way people interact with devices [26,29,30,31].

Figure 2 illustrates a conceptual four-layer architecture for intelligent acoustic IoT systems. It encompasses sensor hardware, wireless communication protocols, edge intelligence (for example, TinyML), and cloud-based analytics and integration. Each layer comprises typical components commonly found in real-world implementations, providing a modular and scalable foundation for applications in public safety, health monitoring, smart environments, and industrial diagnostics.

3.2. Artificial Intelligence in Acoustic Analysis

AI is the computer science discipline that develops systems capable of performing tasks that require human intelligence. The field encompasses a range of techniques, from basic search algorithms to sophisticated machine learning models and neural networks. AI is applied in various domains, including data analysis, process automation, robotics, medicine, and cybersecurity. In acoustic processing, AI enables sound analysis that goes beyond the capabilities of traditional methods. In addition to recording and storing sound, it supports real-time analysis that extracts meaning and classifies patterns, enabling immediate conclusions [4]. AI is used in many areas, such as medicine [32,33,34], critical systems [35], security [36,37,38,39], and logistics [40,41,42].

AI plays a key role in sound analysis, especially in Natural Language Processing (NLP) [43,44,45], sound classification [46,47,48], and acoustic pattern recognition [49,50,51]. NLP is the branch of artificial intelligence that enables machines to understand and process human language in both spoken and written forms. In sound analysis applications, NLP enables speech recognition, language translation, sentiment analysis, and transcription of recordings. Voice assistants and voice control systems rely on this technology, and conversation monitoring systems use it to detect undesirable content. Sound classification is crucial because it allows AI systems to identify various types of sound signals, including background noise, machine sounds, alarms, and human speech. It supports automated fault detection in industrial systems, the evaluation of recorded anomalies, and music and voice identification. Acoustic pattern recognition has applications such as diagnosing lung diseases through cough analysis, speaker recognition for security, and the study of animal vocalisations in ecological research.

Sound analysis relies on multiple AI algorithms that process and interpret acoustic signals. The Convolutional Neural Network (CNN) [52,53,54] is a widely used model that has performed very well in the analysis of images and sound spectrograms. Sequential signals with temporal dependencies are analysed using recurrent neural networks (RNNs) [55,56,57] and their advanced variant, Long Short-Term Memory (LSTM) networks [58,59,60]. Transformer-based models, including Whisper from OpenAI [61] and Wav2Vec2 from Meta AI [62], have revolutionised speech recognition and acoustic analysis, delivering highly accurate speech transcription and interpretation. Sound classification and recognition systems also use classical models. Examples are Support Vector Machines (SVMs) [63,64,65], which are trained in a supervised manner, and Gaussian Mixture Models (GMMs) [66,67,68], which model the distribution of acoustic features and are used for sound source segmentation and recognition. These algorithms enable automatic sound analysis, real-time processing of large volumes of recordings, speaker identification, and anomaly detection.
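The NumPy sketch below illustrates the GMM-based approach in its simplest form. It fits one Gaussian with a diagonal covariance per sound class, which is a GMM with a single component. A new feature vector is assigned to the class with the highest log-likelihood plus log-prior. This is a deliberate simplification, because practical systems fit several mixture components per class with the expectation-maximisation algorithm. The two-dimensional synthetic features and the class names are hypothetical.

```python
import numpy as np

class DiagonalGaussianClassifier:
    """One diagonal-covariance Gaussian per class (a single-component GMM)."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "DiagonalGaussianClassifier":
        self.classes_ = np.unique(y)
        self.means_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # A small variance floor avoids division by zero for constant features.
        self.vars_ = np.array([X[y == c].var(axis=0) + 1e-6 for c in self.classes_])
        self.log_priors_ = np.log([np.mean(y == c) for c in self.classes_])
        return self

    def scores(self, X: np.ndarray) -> np.ndarray:
        """Class log-likelihood plus log prior, shape (n_samples, n_classes)."""
        diff = X[:, None, :] - self.means_[None, :, :]
        log_lik = -0.5 * np.sum(
            np.log(2 * np.pi * self.vars_)[None] + diff**2 / self.vars_[None], axis=2
        )
        return log_lik + self.log_priors_[None, :]

    def predict(self, X: np.ndarray) -> np.ndarray:
        return self.classes_[np.argmax(self.scores(X), axis=1)]

# Usage with synthetic two-dimensional "features" for two hypothetical classes.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (200, 2)), rng.normal(3.0, 1.0, (200, 2))])
y = np.array(["speech"] * 200 + ["alarm"] * 200)
model = DiagonalGaussianClassifier().fit(X, y)
print("training accuracy:", np.mean(model.predict(X) == y))
```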

3.3. Audio Signal Processing Techniques

Noise reduction and feature extraction are fundamental components of sound analysis in modern AI systems. Together, these methods produce clean and structured signals. Such signals support applications such as speech recognition, security sound detection, factory defect identification, and the analysis of breath sounds for diagnosing lung diseases.

Pattern analysis and sound classification rely heavily on artificial intelligence and machine learning. AI algorithms can recognise, interpret, and handle sound signals almost instantly, which is valuable in security, healthcare, industrial analysis, and intelligent support systems. Machine learning models can recognise particular sound patterns and classify them based on previously learnt information. They can also detect unusual signals that could indicate equipment issues, eavesdropping attempts, or other undesirable circumstances. Sound classification converts sound waves into numerical features and then identifies the sound type. Typical techniques include neural networks (CNNs, RNNs, and Transformers), SVMs, and GMMs. Provided the signal is properly processed and analysed, AI can differentiate between speech, music, background noise, and unusual noises in industrial settings [3].

Sound capture techniques are crucial for the quality of the recorded signal and its subsequent analysis. Classic microphones use a membrane that reacts to acoustic waves, converting them into electrical signals. Despite their widespread use, they are susceptible to interference and background noise. Adaptive microphones, unlike classical ones, can dynamically adjust their characteristics to the surrounding conditions, filtering out unwanted sounds and focusing on the sound source. They are used in speech recognition systems, IoT devices, and intelligent acoustic monitoring systems. Another advanced technique is beamforming, which tracks sound sources using microphone arrays. It combines the signals of multiple microphones to selectively amplify sound arriving from a specific direction. This significantly improves the recorded audio quality and reduces background noise. Beamforming is a key technology in video conferencing systems, speech recognition in noisy environments, and military and industrial applications. Sound signals can be captured in two ways. Passive methods listen to ambient sounds, while active methods emit sound waves and analyse their reflections. Active methods, such as sonar or echolocation, are used in object detection, underwater navigation, and intrusion detection systems [69,70].

Advanced audio signal processing techniques make it possible to extract key sound characteristics and analyse them accurately. A crucial tool for identifying the harmonic and other frequency components of sound is the fast Fourier transform (FFT) [71], which transforms a signal from the time domain to the frequency domain. The method is essential for sound recognition systems, disturbance detection, and signal spectrum analysis. Sound classification and speech recognition applications rely on Mel-Frequency Cepstral Coefficients (MFCCs) [72]. MFCCs are a valuable feature extraction method because they emulate the human auditory perception of sounds. The short-time Fourier transform (STFT) [73] produces spectrograms that show how the frequency content of a signal changes over time. This helps to detect abnormal sounds, analyse music, and enhance security systems. Combining these methods with AI algorithms yields advanced sound analysis systems capable of pattern recognition, signal sorting, and adaptation to the acoustic environment.
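The NumPy sketch below shows how FFT and STFT fit together by computing a magnitude spectrogram. The signal is cut into overlapping frames, each frame is multiplied by a Hann window, and an FFT is taken per frame. The frame length of 512 samples and the hop of 256 samples are illustrative choices. In the usage example, the dominant frequency of each frame tracks a tone that switches from 500 Hz to 2 kHz.

```python
import numpy as np

def stft_magnitude(x: np.ndarray, fs: float, frame_len: int = 512, hop: int = 256):
    """Magnitude spectrogram via a Hann-windowed short-time Fourier transform.

    Requires len(x) >= frame_len. Returns (freqs_hz, frame_times_s, mag),
    with mag shaped (n_frames, frame_len // 2 + 1).
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop : i * hop + frame_len] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))              # one FFT per windowed frame
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + frame_len / 2) / fs  # frame centres
    return freqs, times, mag

# Usage: one second at 500 Hz followed by one second at 2 kHz.
fs = 8000
t = np.arange(fs) / fs
x = np.concatenate([np.sin(2 * np.pi * 500 * t), np.sin(2 * np.pi * 2000 * t)])
freqs, times, mag = stft_magnitude(x, fs)
dominant = freqs[np.argmax(mag, axis=1)]   # strongest frequency in each frame
print(dominant[0], dominant[-1])
```

A single FFT of the whole signal would show both tones but not when each occurs. The frame-by-frame view is what makes the STFT useful for detecting events in time.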

Noise reduction is essential for enhancing the quality of sound signals, especially in systems that recognise speech, classify sounds, and process microphone signals in noisy places. Classical noise reduction techniques use frequency-selective filters, such as low-pass, high-pass, band-pass, or band-stop filters, which remove the frequency ranges that contain unwanted noise. More advanced methods employ adaptive filtering algorithms (for example, Wiener or Kalman filters) [74], which adjust their parameters to changing acoustic conditions. Contemporary AI-based methods include deep neural networks (DNNs) [75,76], autoencoders [77,78], and Generative Adversarial Networks (GANs) [79,80]. These methods achieve very effective noise reduction by training models on large datasets of clean and noisy audio samples. Popular solutions, such as Deep Speech Enhancement (DSE) or those using Wave-U-Net models, can separate the wanted sounds from background noise, making audio more intelligible even in challenging conditions [81,82].
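Adaptive noise cancellation is a classic example of adaptive filtering. The NumPy sketch below uses the least-mean-squares (LMS) algorithm, a stochastic-gradient method whose weights approach the Wiener solution. It is an illustrative substitute, not the specific method of the cited works. It assumes a second reference sensor that records the noise source but not the wanted signal. The filter length and step size are hypothetical values that need tuning, because too large a step size makes LMS unstable.

```python
import numpy as np

def lms_noise_canceller(primary: np.ndarray, reference: np.ndarray,
                        n_taps: int = 16, mu: float = 0.01) -> np.ndarray:
    """Adaptive noise cancellation with the least-mean-squares (LMS) algorithm.

    primary:   microphone signal = wanted signal + noise passed through an unknown path.
    reference: second sensor that picks up the noise source only.
    The filter learns to predict the noise in `primary` from `reference`;
    the prediction error is the cleaned output.
    """
    w = np.zeros(n_taps)
    out = np.zeros(len(primary))
    for n in range(n_taps - 1, len(primary)):
        x = reference[n - n_taps + 1 : n + 1][::-1]   # most recent sample first
        y = w @ x                                      # current noise estimate
        e = primary[n] - y                             # error = cleaned sample
        w += 2 * mu * e * x                            # stochastic-gradient weight update
        out[n] = e
    return out

# Usage: a 440 Hz tone corrupted by noise that reaches the microphone via a short FIR path.
rng = np.random.default_rng(1)
fs = 8000
t = np.arange(2 * fs) / fs
clean = 0.5 * np.sin(2 * np.pi * 440 * t)
noise = rng.normal(size=t.size)
primary = clean + np.convolve(noise, [0.6, -0.3, 0.1])[: t.size]
cleaned = lms_noise_canceller(primary, noise)
half = t.size // 2   # evaluate after the initial convergence period
before = np.mean((primary[half:] - clean[half:]) ** 2)
after = np.mean((cleaned[half:] - clean[half:]) ** 2)
print(f"residual noise power: {before:.3f} -> {after:.3f}")
```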

Audio signal processing relies on feature extraction, which keeps the essential information in a sound signal and discards superfluous data. Traditional audio features include MFCCs, linear predictive coding (LPC), and perceptual linear prediction (PLP). MFCCs and PLP incorporate models of human auditory perception, whereas LPC models the human vocal tract. The speech recognition field primarily uses MFCCs because they represent human frequency perception well, while LPC and PLP are also useful for voice and music signal analysis. Modern approaches also use unsupervised methods, such as autoencoders and principal component analysis (PCA), to reduce data dimensionality while preserving critical information. Transformer-based models, such as Wav2Vec2 and HuBERT, learn sound representations directly from raw audio in a self-supervised manner. These representations improve the effectiveness of classification and signal recognition [83,84].
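The dimensionality-reduction step can be sketched with PCA computed from a singular value decomposition. In the NumPy example below, the feature matrix is synthetic and stands in for, say, per-clip MFCC statistics. The number of retained components is an assumption, to be chosen, for instance, from the explained-variance ratio.

```python
import numpy as np

def pca_reduce(features: np.ndarray, n_components: int):
    """Project feature vectors onto their top principal components.

    features: shape (n_samples, n_features).
    Returns (projected, explained_variance_ratio_of_kept_components).
    """
    centred = features - features.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(centred, full_matrices=False)
    projected = centred @ vt[:n_components].T
    explained = s**2 / np.sum(s**2)
    return projected, explained[:n_components]

# Usage: 3-D synthetic features that vary almost entirely along one direction.
rng = np.random.default_rng(2)
latent = rng.normal(size=(500, 1))
features = latent @ np.array([[1.0, 2.0, 3.0]]) + 0.01 * rng.normal(size=(500, 3))
reduced, ratio = pca_reduce(features, n_components=1)
print(reduced.shape, ratio)
```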


3.4. Integration with Other Technologies

Combining IoT technology with 5G networks, cloud computing, and edge computing systems enhances the capabilities of systems that use acoustic sensors. Network infrastructure development and edge-device computational power enable real-time sound signal analysis and transmission, essential for environmental monitoring, security systems, and industrial fault detection applications [85,86].

The IoT connects with audio systems through distributed acoustic sensor networks, which gather data at multiple locations and send them to central processing systems. Such networks include MEMS microphones in mobile devices and smart speakers. They also include advanced monitoring equipment, such as hydrophones for underwater use and ultrasonic sensors for industrial applications. The main challenge in such solutions is sensor synchronisation and calibration, which keep the data consistent enough for accurate sound analysis. Security systems use distributed microphones to generate real-time acoustic maps that detect disturbing sounds, such as gunshots, explosions, and screams, before sending this information to the relevant authorities. A similar approach is used in environmental acoustic monitoring to track animal populations based on their vocalisations [87,88].
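Synchronised sensors make it possible to localise a source from time differences of arrival (TDOA). A basic estimator takes the lag that maximises the cross-correlation between two microphone signals, as in the NumPy sketch below. It assumes that the two recordings share a common clock. Its resolution is limited to one sample, and practical systems often use generalised cross-correlation and sub-sample interpolation instead. The burst and the delay in the usage example are synthetic.

```python
import numpy as np

def tdoa_seconds(sig_a: np.ndarray, sig_b: np.ndarray, fs: float) -> float:
    """Time difference of arrival of sig_b relative to sig_a (positive: B hears it later).

    Uses the peak of the full cross-correlation, so accuracy is one sample.
    """
    corr = np.correlate(sig_b, sig_a, mode="full")
    lag = int(np.argmax(corr)) - (len(sig_a) - 1)   # convert index to signed lag
    return lag / fs

# Usage: the same impulsive burst reaches microphone B 25 samples after microphone A.
rng = np.random.default_rng(3)
fs = 48000
burst = rng.normal(size=256)
mic_a, mic_b = np.zeros(2000), np.zeros(2000)
mic_a[500:756] = burst
mic_b[525:781] = burst
delay = tdoa_seconds(mic_a, mic_b, fs)
print(f"TDOA = {delay * 1e6:.1f} us, path difference = {delay * 343.0:.3f} m")
```

With three or more synchronised microphones, such pairwise delays constrain the source position. This is the basis of the acoustic maps described above.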

Modern network technologies and computational solutions make real-time signal transmission and processing possible. The low data transmission latency provided by 5G networks plays a key role here. In traditional IoT systems, audio data were typically processed in the cloud, which required transmitting large quantities of data to remote servers. Contemporary solutions, however, increasingly use edge computing, in which data are processed directly on edge devices or local network nodes. Sounds can then be filtered, analysed, and classified in a preliminary way before they are sent to the central system. This reduces bandwidth requirements and speeds up the response of control systems [89,90].
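One simple form of preliminary filtering at the edge is an energy gate. The device computes the level of each short frame and forwards only frames that exceed a threshold, so silence does not consume bandwidth. The NumPy sketch below assumes samples normalised to [−1, 1]. The frame length and the −40 dB threshold are hypothetical parameters, and a real system would typically add a classifier after the gate.

```python
import numpy as np

def frames_to_upload(x: np.ndarray, frame_len: int = 1024, threshold_db: float = -40.0) -> np.ndarray:
    """Indices of frames whose RMS level (dB relative to full scale 1.0)
    exceeds threshold_db; only these frames would be sent to the cloud."""
    n_frames = len(x) // frame_len
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt(np.mean(frames**2, axis=1))
    level_db = 20 * np.log10(rms + 1e-12)   # small offset avoids log(0) on digital silence
    return np.flatnonzero(level_db > threshold_db)

# Usage: quiet background noise with a loud event in frames 4 and 5.
rng = np.random.default_rng(4)
fs, frame_len = 16000, 1024
x = 1e-4 * rng.normal(size=10 * frame_len)                 # background at about -80 dB
t = np.arange(2 * frame_len) / fs
x[4 * frame_len : 6 * frame_len] += 0.5 * np.sin(2 * np.pi * 1000 * t)
print(frames_to_upload(x, frame_len))                      # indices of frames to forward
```

In this example, only 2 of the 10 frames would leave the device. This is the kind of bandwidth saving that motivates edge-side preprocessing.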

Cloud and edge computing are complementary technologies that process data in different ways. Cloud computing provides extensive computational power, making it suitable for the long-term analysis of large datasets, including recording, archiving, and the application of AI algorithms. Edge computing processes data locally, which makes it suitable for applications that require low latency, such as smart alarm systems and speech recognition on mobile devices. A common arrangement is a hybrid system that performs elementary processing at the network edge and more advanced operations in the cloud infrastructure [91,92].

5G connectivity is crucial for IoT acoustic systems because it enables fast data transfer with low latency. In security systems, 5G allows alarms and recordings to be transmitted immediately, which makes threat detection more effective. In smart cities, 5G has made real-time noise detection and acoustic environment quality monitoring possible. In industry, 5G enables more precise control of acoustic sensors on robots used for machine fault diagnosis based on the analysis of operational sounds [93,94].

The choice between cloud computing and edge AI for audio data processing depends on the application requirements. For speech recognition and alarm monitoring, edge AI often outperforms cloud-based systems because data are analysed locally, which allows faster and more efficient processing. In contrast, where long-term analysis and result accuracy are crucial, cloud processing offers greater computational capacity and access to more advanced machine learning algorithms. Advanced acoustic anomaly detection systems are an example of this case. A hybrid approach, combining the computational power of the cloud with the speed of edge AI, appears to be the optimal solution for most IoT systems related to sound analysis [95].

3.5. Summary and Comparison

The technologies described in this section demonstrate how the intelligent interplay between sensing hardware, signal processing methods, and AI algorithms shapes acoustic monitoring. On the one hand, we have diverse and highly specialised sensor types—from MEMS microphones to hydrophones and directional arrays—which differ in cost, deployment complexity, and target environment. On the other hand, the increasing use of edge AI and adaptive processing brings advanced analytical capabilities closer to the source of the signal. What emerges is a modular system where sound is not only captured but also intelligently processed and interpreted in near real time. Compared to earlier, more centralised approaches, modern architectures promote decentralisation, context awareness, and low-latency responses, especially in safety-critical or mobile applications.

4. Applications of Intelligent Acoustic Monitoring

In this section, we present the applications of intelligent acoustic monitoring in key areas of human life. We focus on public safety, including the detection of gunshots, explosions, or screams in cities. Then, we deal with environmental monitoring and methods for analysing noise and detecting ecosystem changes. We also study industry and machine diagnostics in the context of sound-based fault detection. Next, we turn to health care, focusing on voice assistants and on monitoring patient conditions through speech and ambient sound analysis. We also consider intelligent buildings and smart cities, including the automatic adjustment of acoustic conditions.

4.1. Public Safety and Security

This subsection presents the use of acoustic monitoring to detect threats such as gunshots, explosions, firearms, and drones in public spaces. It covers gunshot detection and weapon classification [96,97,98,99], explosion detection using smartphones [100], drone detection [102], and, as a vision-based complement, weapon detection in images [101].

4.1.1. Shot Detection and Weapon Classification

In [96,97], Svatos et al. presented a method and system for the acoustic detection of gunshots, which could detect, localise, and classify acoustic events. The system was tested at open and closed shooting ranges, using various firearms with both subsonic and supersonic ammunition. It employed the continuous wavelet transform [103] and Mel Frequency Transformation [104] methods for signal processing, along with a two-layer fully connected neural network for classification. The results showed that the acoustic detector could reliably detect, localise, and classify gunshots.

In [98], Raza et al. noted that gunshot sounds are common in crimes, cause fear and panic among victims, and are associated with high mortality rates. The authors therefore regarded efficient gunshot detection as crucial for crime prevention. They proposed a novel Discrete Wavelet Transform Random Forest Probabilistic (DWT-RFP) feature engineering approach and a meta-learning-based Meta-RF-KN (MRK) method. Experiments on a gunshot sound dataset of 851 audio clips from YouTube showed that the MRK approach achieved 99% accuracy under k-fold cross-validation, outperforming state-of-the-art methods, which suggests that it could help prevent crimes.
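The exact DWT-RFP feature pipeline is not detailed here, so the following is only a generic sketch of wavelet sub-band energy features of the kind that could be passed to a random forest. It assumes a plain orthonormal Haar wavelet and hypothetical function names (`haar_step`, `dwt_energy_features`); in practice a library such as PyWavelets and other wavelet families would typically be used.

```python
import math


def haar_step(x):
    """One level of the orthonormal Haar DWT; a trailing odd sample is dropped."""
    s = 1.0 / math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) * s for i in range(0, len(x) - 1, 2)]
    detail = [(x[i] - x[i + 1]) * s for i in range(0, len(x) - 1, 2)]
    return approx, detail


def dwt_energy_features(signal, levels=3):
    """Energy of each detail band (finest first) plus the final approximation band."""
    features = []
    approx = list(signal)
    for _ in range(levels):
        approx, detail = haar_step(approx)
        features.append(sum(d * d for d in detail))
    features.append(sum(a * a for a in approx))
    return features


if __name__ == "__main__":
    clip = [0.0, 0.9, -0.8, 0.7, -0.1, 0.05, 0.0, -0.02]  # toy impulsive snippet
    print(dwt_energy_features(clip, levels=3))
```

Because the Haar transform is orthonormal, the band energies sum to the signal energy when the length is a multiple of 2^levels; an impulsive gunshot concentrates energy in the fine detail bands, which is what makes such features discriminative.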

In [99], Valliappan et al. used YAMNet, a deep learning-based classification model, to identify firearm types from the sounds of gunfire, with features extracted from the audio as Mel spectrograms [105]. Using 1174 audio samples from 12 distinct weapons, the study achieved an accuracy of 94.96%. The research highlighted the effectiveness of YAMNet for real-world weapon detection and its relevance to forensic, military, and defence applications. The model outperformed existing deep learning and hybrid techniques, and the study emphasised the value of adding extra Keras layers to improve training efficacy.
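Mel spectrograms such as those used with YAMNet place their filters uniformly on the mel scale rather than in hertz. The sketch below shows that mapping with the common HTK-style formula m = 2595 · log10(1 + f/700); the helper names and the example band settings are assumptions for illustration, and full implementations (for example in librosa) also build the triangular filters and apply them to a short-time power spectrum.

```python
import math


def hz_to_mel(f_hz):
    """HTK-style mel scale: m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_band_edges(n_mels, f_min, f_max):
    """Return n_mels + 2 frequencies (Hz) equally spaced in mel.

    Each consecutive triple (lower edge, centre, upper edge) defines one
    triangular filter of a mel filterbank.
    """
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_mels + 2)]


if __name__ == "__main__":
    edges = mel_band_edges(n_mels=64, f_min=125.0, f_max=7500.0)  # example settings
    widths = [b - a for a, b in zip(edges, edges[1:])]
    print(f"first band width {widths[0]:.1f} Hz, last band width {widths[-1]:.1f} Hz")
```

The band widths grow with frequency, so mel features resolve low-frequency structure more finely than high-frequency structure.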

4.1.2. Explosion Detection Using Smartphones

In [100], Takazawa et al. noted that monitoring explosions was challenging because infrasound and seismoacoustic sensor networks are unevenly distributed. They therefore proposed using smartphone sensors for explosion detection, combining two machine learning models (D-YAMNet [106] and LFM [107]) into an ensemble model. They observed that machine learning-based explosion detection on smartphone sensors was a relatively new endeavour and that no specialised models were available against which the ensemble's performance could be evaluated.
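How the two models were combined is not specified here; one common way to build such an ensemble is soft voting, in which the class probabilities of the member models are averaged, optionally with weights. The sketch below assumes both models output probabilities over the same class list; the function names and class labels are hypothetical.

```python
def soft_vote(prob_lists, weights=None):
    """Weighted average of per-class probabilities from several models."""
    n_models = len(prob_lists)
    if weights is None:
        weights = [1.0] * n_models
    total = float(sum(weights))
    n_classes = len(prob_lists[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, prob_lists)) / total
        for c in range(n_classes)
    ]


def ensemble_predict(prob_lists, labels, weights=None):
    """Return the winning label and its fused probability."""
    fused = soft_vote(prob_lists, weights)
    best = max(range(len(labels)), key=lambda c: fused[c])
    return labels[best], fused[best]


if __name__ == "__main__":
    labels = ["explosion", "other"]
    model_a = [0.70, 0.30]  # e.g. an audio-based model
    model_b = [0.40, 0.60]  # e.g. a second, differently trained model
    print(ensemble_predict([model_a, model_b], labels))
```

Weights let a more reliable member dominate; with equal weights the fused decision simply follows the average confidence of the two models.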

4.1.3. Weapon Detection in Images

In [101], Yadav et al. developed the WeaponVision AI system, which automatically identified weapons, such as pistols or rifles, in images, videos, and surveillance camera streams. The primary objective was a system that could rapidly detect threats and operate autonomously, which is crucial in situations such as armed robberies in public areas. The system was built on a modified version of the YOLOv7 model, which is known for its high object detection efficiency. The model was trained on over 79,000 images, including images taken in challenging conditions such as blurry shots, nighttime photos, dark backgrounds, or intricate, noisy scenes. As a result, WeaponVision AI also coped well in unfavourable conditions, achieving a detection precision of almost 92%. The system was also equipped with a night vision function and was designed to run on ordinary processors and graphics cards, making it more universal and easier to deploy. A straightforward graphical interface allowed both specialists and non-technical users to operate the system without prior training. In practice, WeaponVision AI can therefore support security services, enabling a faster and more accurate response to threats involving weapons.

4.1.4. Drone Detection

In [102], Fang et al. presented a new approach to drone detection using fibre-optic acoustic technology. They developed fibre-optic acoustic sensors (FOAS) that, as part of a distributed acoustic sensing (DAS) system, allowed highly sensitive recording of airborne sounds, including drone signals. Unlike traditional microphones, the system provided synchronisation and centralised data processing on a large scale. In field tests, it detected and located a drone with high accuracy (an RMSE of 1.47°), confirming the potential of this technology for security and urban monitoring applications.
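The localisation method used with the FOAS sensors is not described here. As a generic illustration of how an angular error such as the reported RMSE is obtained, the sketch below estimates a far-field direction of arrival from the time difference of arrival (TDOA) at two sensors, θ = arcsin(c·τ/d), and computes the RMSE against ground truth. The two-sensor geometry, the 343 m/s speed of sound, and the function names are assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed)


def doa_from_tdoa(tdoa_s, spacing_m, c=SPEED_OF_SOUND):
    """Far-field direction of arrival in degrees from broadside for a sensor pair.

    tdoa_s: arrival-time difference between the two sensors (s);
    spacing_m: distance between the sensors (m).
    """
    ratio = c * tdoa_s / spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp small overshoots caused by timing noise
    return math.degrees(math.asin(ratio))


def rmse(estimates, truths):
    """Root-mean-square error between estimated and true angles (degrees)."""
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimates, truths)) / len(truths))


if __name__ == "__main__":
    spacing = 0.5  # m, hypothetical sensor separation
    true_angles = [10.0, 25.0, 40.0]
    # Simulate measured TDOAs with a small timing error of 20 microseconds.
    tdoas = [spacing * math.sin(math.radians(a)) / SPEED_OF_SOUND + 20e-6 for a in true_angles]
    estimates = [doa_from_tdoa(t, spacing) for t in tdoas]
    print(f"RMSE = {rmse(estimates, true_angles):.2f} deg")
```

The example shows why timing precision matters: with a short baseline, even microsecond-level TDOA errors translate into angular errors of around a degree.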

These works show the increasing effectiveness of acoustic threat detection, thanks to the combination of sensors, sound processing methods, and neural networks. An interesting trend is the use of common devices, such as smartphones, and the development of low-cost, easy-to-implement systems.

4.2. Smart Cities and Urban Monitoring

This subsection describes the monitoring of urban noise, street traffic, user positions, and the presence of emergency vehicles, covering indoor positioning [108], urban sound classification [109], traffic monitoring with DAS [110,111,112] and acoustic vehicle counting [115], noise monitoring with 5G/IoT [113], emergency vehicle signal detection [114], footstep detection in homes [116], and crowdsensing and acoustic zone classification [117,118].

4.2.1. Indoor Positioning

In [108], Zhang et al. proposed a hybrid indoor positioning system (H-IPS) that integrated data from three sources: mobile device MEMS sensors, Wi-Fi fingerprinting, and acoustic measurements. The primary objective was accurate user localisation in challenging indoor environments, particularly under non-line-of-sight (NLOS) conditions caused by obstacles. The authors developed the DMDD (data and model dual-driven) model, which improved accuracy across various phone-holding modes by combining a deep learning-based speed predictor with the traditional inertial navigation system (INS) approach. They also developed a drift estimator (ADE) and a technique for detecting NLOS errors in acoustic signals, and they enhanced the Wi-Fi fingerprinting algorithm with a dual-weight K-NN (DW-KNN) and an accuracy index (WAI). A data fusion algorithm based on the Adaptive Unscented Kalman Filter (AUKF) combined all these components. In field tests, the system achieved an accuracy of about 2 m, even under challenging conditions with signal interference. The authors showed that stable and accurate positioning at the scale of entire buildings was possible by combining different data sources and error compensation techniques.
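The DW-KNN variant is not specified in detail here, so the sketch below shows only the standard inverse-distance weighted K-NN (WKNN) fingerprinting step it builds on: the K reference points whose stored received-signal-strength (RSS) vectors are closest to the current measurement are averaged, with weights inversely proportional to their RSS distance. The fingerprint format, the value of K, and the function name are assumptions.

```python
import math


def wknn_position(rss, fingerprints, k=3, eps=1e-6):
    """Estimate an (x, y) position from an RSS vector by weighted K-NN.

    rss:          measured signal strengths (dBm), one value per access point.
    fingerprints: list of ((x, y), rss_vector) reference points from a site survey.
    Weights are 1 / (distance + eps), so closer fingerprints count more.
    """
    nearest = sorted(
        ((math.dist(rss, ref_rss), pos) for pos, ref_rss in fingerprints),
        key=lambda item: item[0],
    )[:k]
    weights = [1.0 / (d + eps) for d, _ in nearest]
    total = sum(weights)
    x = sum(w * pos[0] for w, (_, pos) in zip(weights, nearest)) / total
    y = sum(w * pos[1] for w, (_, pos) in zip(weights, nearest)) / total
    return x, y


if __name__ == "__main__":
    survey = [
        ((0.0, 0.0), [-40, -70, -80]),
        ((10.0, 0.0), [-70, -42, -75]),
        ((0.0, 10.0), [-72, -78, -45]),
        ((5.0, 5.0), [-58, -60, -62]),
    ]
    print(wknn_position([-55, -62, -64], survey, k=3))
```

In a hybrid system such as H-IPS, an estimate like this would be one input to the fusion filter, alongside the inertial and acoustic position estimates.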

4.2.2. Urban Sound Classification

In [109], Özseven et al. investigated how to classify urban sounds effectively by comparing two primary approaches: traditional signal processing with manually selected acoustic features, and deep learning, in which sounds are transformed into images (such as mel-spectrograms or scalograms) and then classified by CNN models. They conducted experiments on two popular datasets, ESC-10 and UrbanSound8k, without any prior audio preprocessing. They applied classic SVM and k-NN algorithms to the acoustic features, as well as deep learning models including ResNet-18, ShuffleNet, and their own five-layer CNN. A hybrid approach combining acoustic features with visual representations of sound proved the most effective: combining scalograms and acoustic features in the AVCNN model reached 98.33% accuracy on ESC-10, while combining mel-spectrograms with acoustic features and classifying them with an SVM reached 97.70% on UrbanSound8k. The authors concluded that combining classical sound parameters with images generated from acoustic signals yielded the best results and that techniques known from speech recognition could be effectively transferred to sound classification in the context of smart cities.

4.2.3. Traffic Monitoring with DAS

In [110], Fakhruzi et al. described an experiment using Distributed Acoustic Sensing (DAS) technology to monitor road traffic in real urban conditions, using existing telecommunication optical fibres in Granada (Spain) as sensors. The aim was to determine whether DAS could be used for continuous and precise observation of street traffic events. By analysing the signals captured by the optical fibres, they identified various types of moving objects, such as cars, and used domain knowledge to build a feature vector that separated acoustic events more precisely. Clustering methods were employed to identify the optimal frequency bands, key measurement points, and distinctive signal characteristics, and exploratory data analysis (EDA) was also conducted. Besides offering a valuable approach to processing DAS data in an urban setting, the study opened the door to future research on the AI-based automatic detection of anomalies and irregular urban traffic events. Similar observations were made by Ende et al. [112] and Garcia et al. [111]. Garcia et al. concluded that DAS technology could be used effectively for continuous and anonymous monitoring of urban traffic, enabling the automatic recognition of different types of traffic participants and generating data that help plan innovative and sustainable cities. They also demonstrated that appropriate signal processing made it possible to obtain useful information despite interference and changing environmental conditions.

In [115], Szwoch et al. developed and tested an algorithm for detecting and counting road vehicles based on sound intensity measured by a small, passive acoustic sensor placed on the road. The innovative element of the method was two-dimensional sound intensity measurement (parallel and perpendicular to the roadway), which allowed not only the detection of a vehicle but also the determination of its direction of movement. The system was tested on a 24 h recording made in real conditions and showed high efficiency (precision, sensitivity, and F-score of 0.95) in increased traffic conditions. Compared with other acoustic detection methods and with technologies such as induction loops or radars, the authors' solution stood out for its simplicity, low cost, absence of emitted waves, ability to run on less powerful hardware, and no need for installation in the road surface. Owing to these features, the system could be widely used in traffic monitoring networks within smart cities. In the future, the authors plan to conduct further tests under more challenging conditions, such as increased traffic intensity, environmental noise, or vehicle occlusion.
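The principle of using two intensity components can be illustrated with a short sketch. Active sound intensity along an axis is the time average of the product of sound pressure p(t) and particle velocity u(t) along that axis; the component parallel to the road changes sign as a vehicle passes the sensor, and the order of the signs gives the travel direction. This is a simplified illustration, not the authors' algorithm: the sign convention, the function names, and the use of per-block intensity values are assumptions.

```python
def mean_intensity(pressure, velocity):
    """Time-averaged active intensity along one axis: mean of p(t) * u(t)."""
    return sum(p * u for p, u in zip(pressure, velocity)) / len(pressure)


def travel_direction(parallel_intensity):
    """Infer travel direction from per-block intensity along the road (x axis).

    Assumed convention: positive values mean sound energy flows towards +x.
    A vehicle approaching from -x and leaving towards +x therefore produces
    positive values first and negative values after it passes the sensor.
    """
    signs = [1 if v > 0 else -1 for v in parallel_intensity if v != 0]
    if not signs:
        return None
    if signs[0] > 0 and signs[-1] < 0:
        return "+x"
    if signs[0] < 0 and signs[-1] > 0:
        return "-x"
    return None  # no sign reversal observed: no complete pass-by


if __name__ == "__main__":
    # Hypothetical per-block parallel intensity values during one pass-by.
    blocks = [0.02, 0.15, 0.40, 0.05, -0.30, -0.12, -0.01]
    print(travel_direction(blocks))  # +x
```

The perpendicular component, which peaks when the vehicle is closest, would serve for detection and counting, while the parallel component resolves direction.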

4.2.4. Noise Monitoring with 5G/IoT

In [113], Segura et al. designed and implemented a comprehensive environmental noise monitoring system for smart cities, based on 5G technology and the Internet of Things (IoT). Their work showed that 5G capabilities could be used to offload sensor computations and to distribute psychoacoustic data processing among system elements. They developed several variants for splitting the functions of the noise metric algorithms and placed these functions at different points in the system (for example, at the network edge, in the cloud, and in end devices). Finally, they compared the performance of these variants, analysing how each affected the efficiency and feasibility of the entire system.
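Many of the noise metrics that such systems distribute between devices, edge, and cloud start from the equivalent continuous sound level, an energetic rather than arithmetic average. A minimal sketch, assuming unweighted pressure samples in pascals (the A-weighting required for LAeq is omitted) and hypothetical function names:

```python
import math

P_REF = 20e-6  # Pa, reference pressure for sound in air


def leq_from_pressure(samples_pa):
    """Equivalent continuous level (dB) of a block of pressure samples."""
    mean_square = sum(p * p for p in samples_pa) / len(samples_pa)
    return 10.0 * math.log10(mean_square / P_REF ** 2)


def leq_from_levels(levels_db):
    """Combine Leq values of equally long intervals into one Leq (energetic mean)."""
    mean_energy = sum(10.0 ** (level / 10.0) for level in levels_db) / len(levels_db)
    return 10.0 * math.log10(mean_energy)


if __name__ == "__main__":
    # One minute at 60 dB and one at 70 dB: the energetic mean is about 67.4 dB,
    # noticeably higher than the arithmetic mean of 65 dB.
    print(round(leq_from_levels([60.0, 70.0]), 1))
```

One possible split, not necessarily one of the variants evaluated in [113], is for each sensor to compute short Leq values locally and send only those upstream, where longer-term indicators are aggregated with `leq_from_levels`.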

4.2.5. Emergency Vehicle Signal Detection

In [114], Shabbir et al. proposed an innovative system for detecting emergency vehicle signals by analysing road noise recorded by microphones in real conditions. The aim was to improve traffic management and shorten the response times of emergency services in smart cities. They developed a deep learning model as a stacking ensemble, with MLP and DNN networks as base models and an LSTM as the meta-learner. The system relied on the analysis of acoustic features such as MFCCs, mel-spectrograms, spectral centroid, spectral flux, tonnetz, and chroma. Thanks to this design, the model achieved up to 99.12% accuracy and an F1 score of 98%, outperforming previous solutions. Experiments were conducted on a real dataset containing road traffic and siren sounds, and the proposed approach proved precise and scalable for smart city monitoring applications. The authors emphasised that their method could also be adapted to other fields, such as sound recognition in smart homes, nature monitoring, or patient analysis in healthcare systems.

4.2.6. Footstep Detection in Homes

In [116], Summoogum et al. proposed a new approach to passive footstep detection at home, which could support the development of assistive technologies, especially for monitoring the mobility of the elderly in their natural environment. They used a deep learning model, a recurrent neural network with an attention mechanism, to detect footsteps in the complex, noisy soundscapes typical of home environments. The model was trained on synthetic data representing realistic acoustic scenes, and its effectiveness was assessed with the polyphonic sound detection score (PSDS) and compared with systems previously described in the literature. The proposed solution achieved the best PSDS among the compared methods, comparable to the results of general-purpose domestic sound event detection systems in the DCASE challenge. The system was designed to detect and track footsteps, making it a promising tool for supporting remote healthcare and independent living for seniors.

4.2.7. Crowdsensing and Acoustic Zone Classification

In [117], Pita et al. applied an unsupervised learning method, the k-means algorithm, to long-term acoustic data collected by a network of sound sensors deployed in Barcelona. The aim was to classify urban areas by their acoustic characteristics, using annual noise indices (such as Lday, Lnight, and Lden) without requiring labelled data. The model identified and characterised four main classes of acoustic environments and made it possible to track seasonal changes and the impact of special events (such as lockdowns), making it a valuable tool for urban noise management strategies. Notably, a comparison with the official noise map showed high consistency and demonstrated that new areas requiring attention could be detected. The work has significant practical implications, as it shows how relatively simple machine learning methods can monitor and manage the acoustic environment of a large city in an automated and scalable manner.
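Both ingredients of this approach are easy to sketch. Lden combines the day, evening, and night levels with the 12/4/8-hour split and the +5 dB evening and +10 dB night penalties defined in the EU Environmental Noise Directive, and k-means then groups sensor locations by their noise indices. The sketch below assumes per-sensor (Lday, Lnight) feature vectors, uses a deliberately simple initialisation (the first k points), and its function names are hypothetical; it is not the authors' exact pipeline.

```python
import math


def lden(l_day, l_evening, l_night):
    """Day-evening-night level (dB) with 12/4/8 h periods and +5/+10 dB penalties."""
    return 10.0 * math.log10(
        (12 * 10 ** (l_day / 10)
         + 4 * 10 ** ((l_evening + 5) / 10)
         + 8 * 10 ** ((l_night + 10) / 10)) / 24
    )


def kmeans(points, k, iterations=50):
    """Plain Lloyd's k-means; initial centroids are simply the first k points."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(axis) / len(members) for axis in zip(*members)]
    return labels, centroids


if __name__ == "__main__":
    # Hypothetical annual (Lday, Lnight) values for six sensor locations.
    sensors = [(55, 45), (56, 47), (71, 63), (70, 62), (63, 50), (64, 52)]
    labels, centres = kmeans(sensors, k=3)
    print(labels)
    print(round(lden(65, 62, 57), 1))
```

Because k-means needs no labels, the resulting clusters must be interpreted afterwards, which is why the comparison with the official noise map matters.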

In [118], Jezdovic et al. developed and implemented a scalable crowdsensing system for monitoring city noise pollution, based on a mobile application, cloud computing, and big data infrastructure. The solution enabled residents to collect acoustic data from microlocations inaccessible to traditional measurement systems, either actively or passively, and then send them for real-time analysis. The experiment conducted in Belgrade confirmed the system's usefulness for citizens and city authorities, enabling the identification of local noise problems and supporting planning and environmental decisions. Compared to existing solutions, the system stood out with its technological openness, flexible methodology for selecting locations, the possibility of integration with other systems, and the use of micro-scale data analytics. Although the authors acknowledged certain limitations, including measurement quality variability and the risk of abuse, the overall assessment suggested that the platform had great potential as a cost-effective, adaptive, and socially engaging tool for smart cities.

These studies span a wide variety of approaches, from extensive infrastructure systems to participatory crowdsensing applications. Their common denominator is the pursuit of low-cost, scalable, and socially oriented monitoring.

4.3. Health Monitoring and Ambient Intelligence

This subsection presents the use of sound in health diagnostics and support for everyday functioning, in the context of speech monitoring and disorder detection [119] and MEMS microphones in biomedical monitoring [120].

4.3.1. Speech Monitoring and Disorder Detection

In [119], Ali et al. developed an intelligent system for the automatic detection of voice disorders that could be used in healthcare in smart cities. The system extracted speech signal features based on linear prediction (LP) analysis and assessed the distribution of acoustic energy to identify phonation disorders; the low-frequency range (1–1562 Hz) proved crucial for detecting irregularities. The solution achieved very high accuracy, close to 100%, for both sustained sounds and continuous speech, making it suitable for everyday use. The system could support prevention among people particularly exposed to occupational voice hazards (for example, teachers, lawyers, and singers) and among seniors, whose number is growing worldwide. The work offers a valuable proposition for e-health: a simple, effective, and ready-to-implement technology for monitoring voice health in urban environments.
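Linear prediction models each speech sample as a weighted sum of the previous p samples, x[n] ≈ a1·x[n−1] + ... + ap·x[n−p]. The coefficients follow from the signal's autocorrelation via the Levinson-Durbin recursion, and the resulting all-pole model gives a smooth spectral envelope whose energy can be compared across frequency bands. A minimal sketch of that standard technique follows; it is not the authors' implementation, and the function names, model order, and sampling rate are assumptions.

```python
import cmath
import math


def autocorrelation(x, order):
    """Autocorrelation r[0..order] of a (windowed) frame."""
    n = len(x)
    return [sum(x[i] * x[i + lag] for i in range(n - lag)) for lag in range(order + 1)]


def levinson_durbin(r, order):
    """Solve for predictor coefficients with x[n] ~ sum_k a[k] x[n-k].

    Returns (a, err): a is the list [a1, ..., ap]; err is the final
    prediction-error power.
    """
    a = [0.0] * (order + 1)  # a[0] is unused
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err  # reflection coefficient
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= 1.0 - k * k
    return a[1:], err


def lp_envelope(a, err, freqs_hz, fs):
    """All-pole power spectrum err / |1 - sum_k a_k e^{-jwk}|^2 at given frequencies."""
    out = []
    for f in freqs_hz:
        w = 2.0 * math.pi * f / fs
        denom = 1.0 - sum(ak * cmath.exp(-1j * w * (k + 1)) for k, ak in enumerate(a))
        out.append(err / abs(denom) ** 2)
    return out


if __name__ == "__main__":
    fs = 8000  # Hz, assumed sampling rate
    # Toy "voiced" frame: a decaying 200 Hz oscillation.
    frame = [math.exp(-n / 80) * math.sin(2 * math.pi * 200 * n / fs) for n in range(240)]
    a, err = levinson_durbin(autocorrelation(frame, 8), 8)
    freqs = list(range(0, 4000, 50))
    env = lp_envelope(a, err, freqs, fs)
    low = sum(e for f, e in zip(freqs, env) if f <= 1562)
    print(f"share of envelope energy up to 1562 Hz: {low / sum(env):.2f}")
```

The last step mirrors the idea of comparing envelope energy in a low-frequency band with the total; the band limit here is simply taken from the text.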

4.3.2. MEMS Microphones in Biomedical Monitoring

In [120], Okamoto et al. designed and tested a new MEMS microphone based on a piezoresistive cantilever, characterised by exceptionally high sensitivity and the ability to detect very low sound pressures, down to 0.2 mPa, in the frequency range of 0.1 to 250 Hz. The key element of the sensor was an ultra-thin cantilever, only 340 nm thick, with a wide platform and narrow hinges, which gave a sensitivity about 40 times greater than that of previous designs of this type. In tests, the device measured very low-frequency sounds such as heart sounds: the S1 and S2 sounds were recorded with a high signal-to-noise ratio of 58 dB. The microphone could find applications in many areas, including wearable health monitoring systems, photoacoustic gas sensors, and the monitoring of natural phenomena such as earthquakes or changes in atmospheric pressure.
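The figures quoted for this sensor can be put in context with two standard conversions: sound pressure level relative to the 20 µPa reference, L = 20·log10(p/p0), and an amplitude signal-to-noise ratio in decibels, 20·log10(A_signal/A_noise). The short sketch below (function names are illustrative) shows that 0.2 mPa corresponds to 20 dB SPL and that, if the SNR is expressed in amplitude terms, 58 dB means the signal amplitude is roughly 800 times the noise amplitude. It assumes airborne sound and RMS quantities.

```python
import math

P_REF = 20e-6  # Pa, standard reference pressure for airborne sound


def spl_db(p_rms_pa):
    """Sound pressure level (dB re 20 uPa) of an RMS pressure in pascals."""
    return 20.0 * math.log10(p_rms_pa / P_REF)


def snr_db(signal_rms, noise_rms):
    """Amplitude signal-to-noise ratio in decibels."""
    return 20.0 * math.log10(signal_rms / noise_rms)


def db_to_amplitude_ratio(db):
    """Inverse of snr_db: the amplitude ratio corresponding to a dB value."""
    return 10.0 ** (db / 20.0)


if __name__ == "__main__":
    print(f"0.2 mPa -> {spl_db(0.2e-3):.1f} dB SPL")                         # 20.0 dB SPL
    print(f"58 dB SNR -> amplitude ratio {db_to_amplitude_ratio(58):.0f}")  # 794
```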

We are seeing the growing importance of passive and non-invasive diagnostic methods. These systems are critical in the context of ageing societies and care for dependent people.

4.4. Industry and Predictive Maintenance

This subsection shows the detection of faults in machines and industrial infrastructure based on sound and vibration analysis, in the context of machine and rotor diagnostics [121,122,123,124,125,126,127].

In [121], Shubita et al. presented a fault diagnosis system for rotating machines, such as drills, based on acoustic emission (AE) analysis and edge machine learning (ML). Their approach enabled the real-time detection and classification of bearing, gear, and fan faults without transmitting data to the cloud, thereby increasing security, reducing latency, and conserving bandwidth. The system was implemented on a low-cost, low-power STM32F407 microcontroller, on which all stages, from data acquisition, filtering, and feature extraction to classification, were performed locally. Using analysis of variance (ANOVA) for feature selection and a decision tree classifier, the system achieved a high accuracy of 96.1%, outperforming many previous methods, especially in terms of performance on limited hardware. The authors demonstrated that edge ML can be an effective and practical solution for industrial machine health monitoring and that, owing to its low computational requirements, it is well suited for deployment in real-world conditions.
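ANOVA-based feature selection ranks each feature by the one-way ANOVA F statistic, the ratio of between-class to within-class variance, so features whose values separate the fault classes well score highly. A minimal pure-Python sketch of that ranking step, with hypothetical function names and toy data, is shown below; scikit-learn's `f_classif` computes the same statistic, and the decision-tree classifier and the embedded deployment are outside the scope of this example.

```python
def anova_f(groups):
    """One-way ANOVA F statistic of one feature, given its values for each class."""
    values = [v for g in groups for v in g]
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    if ss_within == 0:
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def rank_features(X, y, top_k):
    """Indices of the top_k features by ANOVA F score, plus all scores."""
    classes = sorted(set(y))
    scores = []
    for j in range(len(X[0])):
        groups = [[row[j] for row, label in zip(X, y) if label == c] for c in classes]
        scores.append(anova_f(groups))
    order = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return order[:top_k], scores


if __name__ == "__main__":
    # Hypothetical AE features per recording: [RMS, kurtosis, spectral centroid (kHz)].
    X = [[0.10, 3.1, 12.0], [0.12, 2.9, 11.5], [0.11, 3.0, 12.4],
         [0.35, 3.0, 11.8], [0.33, 3.2, 12.1], [0.36, 2.8, 12.2]]
    y = ["healthy"] * 3 + ["bearing_fault"] * 3
    selected, scores = rank_features(X, y, top_k=1)
    print(selected, [round(s, 1) for s in scores])
```

Keeping only the top-ranked features shrinks the input to the classifier, which is what makes this kind of pipeline feasible on a microcontroller.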

In [122], Brusa et al. demonstrated that deep learning networks previously trained to recognise sounds and music could be effectively applied to machine fault diagnostics, particularly for detecting bearing damage. For this purpose, they used the YAMNet model, which was originally developed to classify sounds from video recordings (for example, from YouTube). They applied transfer learning, reusing the learned network features to analyse machine vibration spectrograms. The studies showed that even with a limited amount of training data, very high classification efficiency could be achieved (up to 100% on some datasets), and that the appropriate use of dropout layers reduced overfitting in the case of uneven class distribution. The results confirmed that transferring knowledge from sound recognition to machine diagnostics was not only possible but also highly practical, especially where access to data is limited. Building on this approach, the authors planned further research on the impact of dataset size, noise, and hyperparameter selection on diagnostic efficiency.

In [123], Tagawa et al. presented a deep learning-based anomaly detection system for industrial machine sounds that worked effectively even under high ambient noise, one of the main challenges in less automated industrial plants. The main innovation was the use of a GAN to reconstruct sound signals and identify deviations from normal operating patterns, combined with Kalman filter preprocessing and an L2-regularised loss function. Experiments on the MIMII dataset demonstrated that the proposed solution outperformed other methods (OC-SVM and a classic autoencoder) in fault detection accuracy (AUC), particularly on noisy pump data. Based on the analysis of Log-Mel spectrograms and optimised for operation in industrial conditions, the system could support predictive maintenance in the spirit of Industry 4.0. The authors planned to develop the method further by designing more noise-tolerant features and loss functions and by implementing deep convolutional networks for shorter audio segments, which was expected to improve the system's performance in real production conditions.
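Reconstruction-based detectors such as this one score each clip by how poorly the model reproduces it, and the AUC then measures how well those scores separate faulty from normal clips. The sketch below shows both steps in their simplest form: mean squared reconstruction error as the anomaly score, and the AUC computed as the probability that a randomly chosen anomalous clip scores higher than a randomly chosen normal one (ties counted as one half). The GAN itself is not reproduced; the scores and function names are placeholders.

```python
def anomaly_score(frame, reconstruction):
    """Mean squared error between a (log-mel) feature frame and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(frame, reconstruction)) / len(frame)


def roc_auc(normal_scores, anomalous_scores):
    """AUC via pairwise comparison (Mann-Whitney form); O(n*m), fine for small sets."""
    wins = 0.0
    for s_anom in anomalous_scores:
        for s_norm in normal_scores:
            if s_anom > s_norm:
                wins += 1.0
            elif s_anom == s_norm:
                wins += 0.5
    return wins / (len(anomalous_scores) * len(normal_scores))


if __name__ == "__main__":
    # Hypothetical scores: normal pump clips reconstruct well, faulty ones less so.
    normal = [0.10, 0.12, 0.09, 0.20]
    faulty = [0.35, 0.18, 0.50]
    print(f"AUC = {roc_auc(normal, faulty):.3f}")
```

Since the AUC depends only on the ranking of scores, it allows detectors to be compared without fixing a decision threshold.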

In [124], Bondyra et al. developed a prototype diagnostic system for detecting and locating damaged rotor blades in multi-rotor drones (UAVs) by analysing acoustic signals recorded by an onboard microphone array. Their data-driven solution used artificial neural networks and MFCC-based features, which enabled the precise detection of rotor damage, including tip cracks and blade edge damage, and the identification of the failed rotor. The system was built on a lightweight onboard single-board computer and tested in realistic flight conditions, including both hovering and intensive manoeuvres. An extensive comparison of models based on convolutional networks and LSTM showed the latter to be more computationally efficient, achieving over 98% accuracy in classifying faults from 200 ms samples. According to the authors, theirs was the first comprehensive solution for acoustic FDI that operated with onboard signal recording, bringing it significantly closer to practical use. In the future, they plan to optimise the algorithm further for real-time operation, extend the system to detect other types of faults (for example, engine bearing faults), and integrate data from vibration and acoustic sensors to increase detection reliability.

In [125], Song et al. developed a method for diagnosing faults in mine fans based on the analysis of acoustic signals. The authors proposed a four-element expert system that covered the acquisition and denoising of sound signals, feature extraction, recognition of the operating state, and fault diagnosis. As part of the work, they analysed the sources of fan noise and used wavelet transforms and wavelet packets to denoise the signals and extract characteristic features. To identify faults, the authors generated artificial sound signals corresponding to three failure scenarios (bearing overheating, bearing damage, and drive belt problems). The results showed that the different failure states were characterised by clearly different feature vectors, allowing them to be distinguished effectively. The evaluation indicated the method's great potential as an inexpensive, non-contact tool for 24/7 monitoring of the technical condition of fans in difficult mine conditions.

In [126], Yaman et al. presented a lightweight and effective method for the early detection of brushless motor faults in unmanned aerial vehicles (UAVs), based on sound analysis with MFCC feature extraction followed by SVM classification. In that study, four UAV models were built (a helicopter, a duocopter, a tricopter, and a quadcopter), and sound data were collected from their motors in a healthy state and with different types of faults (imbalance, magnet, propeller, and bearing damage). The method achieved very high classification accuracy, from 90.53% to 100%, with the highest accuracy obtained for the single- and twin-motor models. The key advantage of the approach was its low computational complexity, which enabled real-time use on embedded systems and distinguished it from more resource-intensive deep learning solutions. The work is valuable for its practical application and its extensive evaluation of system performance on various UAV configurations.

In [127], Suman et al. proposed an intelligent device for the early detection of mechanical faults in vehicles, based on the analysis of acoustic and vibration signals recorded inside the car. The system, equipped with a microphone, vibration sensors, and a microcontroller, used a Kalman filter to reduce noise and MFCCs to analyse the characteristics of the engine sound, whose changes may indicate a failure. The results indicated that the solution effectively identified mechanical problems at an early stage, thereby reducing the risk of road accidents. The strength of the work lay in combining simple implementation with real-world applicability, as well as its openness to future extensions. The authors envisaged integrating the system with vehicular ad hoc networks (VANETs) to monitor the technical condition of vehicles in real time. This practical approach makes a valuable contribution to the development of intelligent road safety systems.
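The filter design used in this work is not specified here. As a hedged illustration of the idea, the sketch below is a textbook scalar Kalman filter with a random-walk state model, applied to a slowly varying, noisy measurement such as frame-by-frame engine-sound energy; the noise variances q and r, the initial values, and the function name are assumptions that would need tuning on real data.

```python
def kalman_filter_1d(measurements, q=1e-4, r=1e-2, x0=0.0, p0=1.0):
    """Scalar Kalman filter assuming the true value follows a random walk.

    q: process-noise variance (how fast the true value may drift)
    r: measurement-noise variance (how noisy each reading is)
    Returns the filtered estimate after each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p += q               # predict: estimate unchanged, uncertainty grows
        gain = p / (p + r)   # Kalman gain balances prediction and measurement
        x += gain * (z - x)  # update with the innovation z - x
        p *= 1.0 - gain      # posterior uncertainty shrinks
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    import random

    random.seed(0)
    true_level = 1.0
    noisy = [true_level + random.gauss(0.0, 0.1) for _ in range(200)]
    smoothed = kalman_filter_1d(noisy)
    print(f"last raw reading {noisy[-1]:.3f}, filtered {smoothed[-1]:.3f}")
```

The ratio q/r sets the trade-off: a larger q tracks genuine changes in the engine sound faster, while a larger r suppresses more measurement noise.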

Acoustic diagnostic systems are becoming a real alternative to more complex and expensive solutions, offering good accuracy even in harsh industrial conditions. Edge computing and lightweight ML models are increasingly used here.

4.5. Environmental Monitoring and Biodiversity

This subsection focuses on the analysis of environmental sounds and their use in assessing the condition of ecosystems when monitoring urban parks and biota [128].

In [128], Benocci et al. conducted a pilot study using passive acoustic monitoring (PAM) and eco-acoustic indicators to assess the sound environment in Milan's Parco Nord urban park. They used a network of inexpensive sound recorders, distributed over approximately 20 hectares, to capture sounds in various locations and at different times of day. A set of seven eco-acoustic indicators, including ACI, ADI, BI, and AEI, was calculated from the recordings. Principal component analysis (PCA) and clustering were applied to distinguish areas with varying degrees of biotic activity and anthropogenic disturbance. The results revealed distinct zones dominated by road and construction noise, as well as areas with richer biotic activity, which field listening also confirmed. The method proved effective and low-cost, and the authors emphasised its potential as a diagnostic tool for monitoring biodiversity, detecting early effects of climate change, and supporting planning and conservation decisions. In the future, they plan to expand the study to other habitat types to evaluate the effectiveness of this methodology in different natural environments.
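Of the indices listed, the Acoustic Complexity Index (ACI) is a good example of how such indicators work: it rewards intensity that fluctuates from frame to frame within a frequency bin, as bird song does, while nearly constant sounds such as traffic hum contribute little. The sketch below follows the commonly used definition (for each frequency bin, the sum of absolute intensity differences between adjacent frames divided by the total intensity in that bin, summed over bins) for a single time window; the function name and input layout are assumptions, and dedicated eco-acoustics packages add options such as temporal clustering.

```python
def acoustic_complexity_index(spectrogram):
    """ACI for one time window.

    spectrogram[f][t] is the non-negative intensity of frequency bin f in frame t.
    For each bin: sum_t |I[t+1] - I[t]| / sum_t I[t]; the bin values are summed.
    """
    total = 0.0
    for row in spectrogram:
        energy = sum(row)
        if energy > 0:
            variation = sum(abs(row[t + 1] - row[t]) for t in range(len(row) - 1))
            total += variation / energy
    return total


if __name__ == "__main__":
    steady_hum = [[5.0, 5.1, 4.9, 5.0, 5.0]]  # traffic-like: almost constant
    birdsong = [[0.2, 3.0, 0.1, 2.5, 0.3]]    # strongly modulated
    print(acoustic_complexity_index(steady_hum) < acoustic_complexity_index(birdsong))  # True
```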

Environmental acoustics is gaining importance as a low-cost and non-invasive tool in ecological research and urban greenery management.

4.6. Infrastructure and Road Quality

This subsection focuses on monitoring the quality of road surfaces using acoustic sensors, in the context of road surface diagnostics [129] and road noise modelling [130].

In [129], Jagatheesaperumal et al. developed an innovative system for monitoring road surface conditions in smart cities, based on acoustic analysis and artificial intelligence. The proposed solution consisted of placing a sensor equipped with a microphone and an ultrasonic module in the rim of a vehicle wheel, which collected sound data and information about the depth of road surface damage. The system classified the road as smooth, slippery, grassy, or uneven, and the data were analysed using machine learning algorithms such as MLP, SVM, RF, and kNN. The MLP model performed best, achieving an accuracy of 98.98%. The distinguishing features of the system were its practical, hardware-based design and its ability to automatically inform road services about the location and nature of road surface damage. The authors suggested that the system may support vehicle-to-vehicle (V2V) communication in the future, enabling warnings about poor road conditions and the dynamic designation of alternative routes, which could significantly enhance city safety and traffic flow.

In [130], Pascale et al. presented a new road noise estimation model (RTNM) that enabled the dynamic prediction of noise levels in smart cities based on real-time traffic intensity and speed data. The model integrated their own noise emission model for light vehicles (VNSP), which accounts for differences between vehicle types (diesel, petrol, hybrid, LPG), with the CNOSSOS model (for heavy vehicles) and with a sound propagation model that converted sound power levels (Lw) into sound pressure levels (LAeq) at the receiver points. The input data came from a radar in Aveiro (Portugal), and the model results were compared with data from a professional noise sensor. The results showed good agreement with the measurements (the average error did not exceed 5.8%), although the model slightly overestimated noise during rush hours and underestimated it at night. This suggested the need to incorporate background noise and vehicle acceleration data in future studies. The proposed solution was scalable, could be supported by data from low-cost sensors, and provided valuable information to city decision-makers for noise management. The authors emphasised the potential for further model development, including the addition of more vehicle types, detailed micro-motion data, and corrections for terrain slope and acceleration manoeuvres.
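The conversion from sound power level Lw to sound pressure level at a receiver rests on geometric spreading; the propagation part of CNOSSOS adds ground, air-absorption, and screening terms on top of that. The simplified sketch below keeps only geometric spreading from a point source, Lp = Lw − 10·log10(Ω·r²) with Ω = 4π for free-field and 2π for hemispherical radiation over a reflecting ground, and sums several vehicles energetically. These simplifications and the function names are assumptions, not the RTNM model itself.

```python
import math


def lp_from_lw(lw_db, distance_m, hemispherical=True):
    """Sound pressure level at a receiver from a point source, geometric spreading only."""
    solid_angle = 2.0 * math.pi if hemispherical else 4.0 * math.pi
    return lw_db - 10.0 * math.log10(solid_angle * distance_m ** 2)


def energetic_sum(levels_db):
    """Total level of incoherent sources: 10 * log10(sum of 10^(L/10))."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))


if __name__ == "__main__":
    # Hypothetical sound power levels (dB) and distances (m) of three passing vehicles.
    vehicles = [(100.0, 10.0), (103.0, 15.0), (98.0, 25.0)]
    levels = [lp_from_lw(lw, r) for lw, r in vehicles]
    print([round(level, 1) for level in levels], round(energetic_sum(levels), 1))
```

Doubling the distance lowers the level by about 6 dB, and two equal sources add only about 3 dB, which is why traffic volume changes alter noise levels less than intuition might suggest.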

These works demonstrate the practical potential of acoustics in road infrastructure management. Integration with vehicles (V2V, VANET) creates new possibilities for automatic and dynamic response to faults.

Summary and Comparison

The applications described above show that acoustic monitoring is employed in a wide variety of fields, yet several patterns recur. In smart cities and public safety, the primary goals are speed, reliability, and the ability to use common devices (such as smartphones) for large-scale deployment. In healthcare and industry, the focus shifts to accuracy, non-invasiveness, and the ability to operate in loud or confined spaces. Environmental systems vary widely in type and deployment setting, but they share a priority on long-term monitoring, low cost, and minimal maintenance. A unifying thread across all of these areas is the increasing use of lightweight machine learning models and edge computing to make decisions in real time, close to the source. Rather than isolated systems, we are witnessing the growth of distributed acoustic intelligence that is flexible, adaptable, and increasingly autonomous.

5. Challenges and Limitations

AI-based acoustic systems are continually improving and are increasingly being integrated with IoT infrastructure. However, numerous technical and practical issues still make intelligent acoustic monitoring difficult to deploy. Data quality is a major problem. Acoustic signals are susceptible to environmental noise, reverberation, and overlapping sound sources, which makes it more difficult to extract useful features and increases the likelihood of false positives in detection systems. Advanced filtering and deep learning methods can mitigate some of these effects, but they often require large labelled datasets, which are costly and time-consuming to collect, especially for real-world applications.
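A simple way to see how noise and short transients lead to false positives is a frame-energy event detector. The sketch below is a generic illustration, not a method from the reviewed works: it assumes the median frame energy approximates the background noise floor, flags frames more than `margin_db` above it, and discards runs shorter than `min_frames` as likely transients. Parameter values and function names are hypothetical.

```python
import math
from statistics import median


def frame_energies_db(samples, frame_len):
    """Mean power of each non-overlapping frame, in dB (relative units)."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        power = sum(s * s for s in frame) / frame_len
        energies.append(10 * math.log10(power + 1e-12))  # epsilon avoids log(0)
    return energies


def detect_events(energies_db, margin_db=10.0, min_frames=3):
    """Return (first_frame, last_frame) pairs of detected events.

    The noise floor is estimated as the median frame energy; runs of active
    frames shorter than `min_frames` are rejected as probable false alarms.
    """
    threshold = median(energies_db) + margin_db
    events, start = [], None
    # A trailing -inf sentinel closes any run still open at the end.
    for i, e in enumerate(list(energies_db) + [float("-inf")]):
        if e > threshold and start is None:
            start = i
        elif e <= threshold and start is not None:
            if i - start >= min_frames:
                events.append((start, i - 1))
            start = None
    return events


# Hypothetical frame energies: one sustained event and one 1-frame click.
energies = [40.0] * 10 + [60.0] * 4 + [40.0] * 5 + [62.0] + [40.0] * 5
print(detect_events(energies))  # [(10, 13)]
```

The minimum-duration rule trades a little detection latency for fewer spurious alarms; learned detectors replace the fixed threshold but face the same trade-off.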

Another problem is sensor heterogeneity: sensors differ in their hardware and are deployed in diverse locations. Distributed acoustic sensor networks require calibration and synchronisation to produce consistent results, since differences in hardware (microphones, enclosures, or gain settings) can bias the data and degrade system performance. Additionally, edge devices have limited computing power, which makes it difficult to deploy complex models, particularly in applications that require real-time analysis.
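Calibration and synchronisation can both be illustrated with standard signal-processing steps. The sketch below is an assumption-laden illustration rather than a procedure from the reviewed works: it derives a per-sensor dB correction from a reference calibrator tone (a 94 dB SPL calibrator corresponds to roughly 1 Pa RMS), and estimates the relative delay between two sensors by brute-force cross-correlation of a shared event. Function names and signals are hypothetical.

```python
import math

P_REF = 20e-6  # reference sound pressure in pascals (0 dB SPL)


def spl_db(rms_pa):
    """Sound pressure level in dB for an RMS pressure in pascals."""
    return 20 * math.log10(rms_pa / P_REF)


def calibration_offset_db(measured_level_db, reference_level_db=94.0):
    """Correction (dB) to add to a sensor's readings so that a calibrator
    tone of known level reads correctly."""
    return reference_level_db - measured_level_db


def estimate_lag(reference, other, max_lag):
    """Integer lag (in samples) maximising the cross-correlation between two
    recordings of the same event. A positive result means `other` is delayed."""
    best_lag, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = sum(
            r * other[i + lag]
            for i, r in enumerate(reference)
            if 0 <= i + lag < len(other)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


reference = [0, 1, 3, 1, 0, -1, -2, 0]
other = [0, 0, 0, 1, 3, 1, 0, -1]  # same event arriving 2 samples later
print(estimate_lag(reference, other, max_lag=3))  # 2
print(round(spl_db(1.0), 2))                      # 93.98
print(calibration_offset_db(92.5))                # 1.5
```

For long recordings, an FFT-based cross-correlation would replace the quadratic loop used here for clarity.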

Scalability is another major obstacle. Many systems developed in research settings cannot easily be deployed at scale because of cost, infrastructure limitations, or data privacy concerns. Finally, in areas such as health and safety, regulatory and ethical issues (such as the right to acoustic privacy or liability for missed detections) impose constraints that are often overlooked in the technical literature but are crucial for real-world acceptance.

Smart sound processing has come a long way, but numerous issues remain with acoustic monitoring systems that use AI and the IoT. Classification accuracy and the false alarm rate are two key factors. False alarms in security or medical systems can trigger unnecessary interventions and erode user trust, so detection must be highly reliable and must work even in the presence of strong noise or movement. Ethics and privacy are also concerns: when devices record in private spaces, it can be hard to tell where legitimate monitoring ends and surveillance begins. This raises questions about the social acceptability of such technology and the need to familiarise the public with it.
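Because acoustic events are usually rare, overall accuracy can hide a heavy false alarm burden. The sketch below computes standard detection metrics from confusion-matrix counts; the counts in the example (1000 analysis windows containing 10 true events) are hypothetical, as is the helper name.

```python
def detection_metrics(tp, fp, fn, tn):
    """Standard detection metrics from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    false_alarm_rate = fp / (fp + tn) if fp + tn else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "false_alarm_rate": false_alarm_rate,
        "f1": f1,
    }


# Hypothetical detector: 9 of 10 events found, but 20 false alarms.
m = detection_metrics(tp=9, fp=20, fn=1, tn=970)
print(round(m["accuracy"], 3), round(m["precision"], 2))  # 0.979 0.31
```

Here accuracy looks excellent, yet roughly two of every three alarms are false, which is why precision and false alarm rate should be reported alongside accuracy.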

Issues related to the security of audio data, especially data that are sensitive in health or legal contexts, are no less important and call for effective encryption, anonymisation, and access control mechanisms. At the same time, distributed systems that collect data from many sources make it challenging to manage large datasets, from their transmission and storage to the optimisation of real-time processing and analysis. Efficient edge computing architectures and cloud integration therefore become crucial for the scalability of such solutions.
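One common building block for the anonymisation mentioned above is keyed pseudonymisation of identifiers combined with data minimisation. The sketch below, using only Python's standard `hmac` and `hashlib` modules, replaces a device identifier with an HMAC-SHA256 pseudonym and forwards only whitelisted, non-audio fields. The record field names (`device_id`, `timestamp`, `laeq_db`, `event_label`, `raw_audio`) and helper names are hypothetical, and pseudonymisation alone is not full anonymisation, since whoever holds the key can still link records.

```python
import hashlib
import hmac


def pseudonymise(device_id: str, secret_key: bytes) -> str:
    """Stable, keyed pseudonym for a device identifier (HMAC-SHA256, hex)."""
    return hmac.new(secret_key, device_id.encode("utf-8"), hashlib.sha256).hexdigest()


def to_shareable_record(record: dict, secret_key: bytes,
                        allowed=("timestamp", "laeq_db", "event_label")) -> dict:
    """Keep only whitelisted fields; raw audio and the real ID never leave."""
    shared = {k: record[k] for k in allowed if k in record}
    shared["device"] = pseudonymise(record["device_id"], secret_key)
    return shared


record = {
    "device_id": "mic-07",
    "timestamp": "2024-05-01T12:00:00Z",
    "laeq_db": 58.2,
    "event_label": "siren",
    "raw_audio": b"\x00\x01",
}
shared = to_shareable_record(record, secret_key=b"edge-gateway-key")
print(sorted(shared))  # ['device', 'event_label', 'laeq_db', 'timestamp']
```

Keeping the key on the edge gateway means the cloud side can still group events per device without learning which physical device produced them.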

6. Future of Intelligent Acoustic Monitoring

The future of intelligent acoustic monitoring looks bright. AI and the IoT are coming together to create new generations of systems that can sense their surroundings. We expect a shift towards more self-sufficient and flexible solutions that continually learn from new data while maintaining user privacy and operating effectively on low-power edge devices. Federated learning and transformer-based architectures could allow future models to combine the accuracy of centralised training with the privacy benefits of decentralised processing.
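The privacy benefit of federated learning comes from sharing model parameters instead of raw audio. The sketch below shows only the server-side aggregation step of Federated Averaging (FedAvg), under the simplifying assumptions that each client's model is a flat list of floats and that local training has already happened on the device; the function name and example numbers are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg aggregation: average client parameter vectors, weighting each
    client by the number of local training examples it used."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical edge devices; the second trained on three times as much audio.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300])
print(global_weights)  # [2.5, 3.5]
```

In a full system this averaged model would be sent back to the devices for the next round of local training; note that shared updates can still leak some information, which is why federated learning is often combined with secure aggregation or differential privacy.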

Another area of focus will be multimodal integration, which involves combining acoustic data with other types of data, such as vibration, video, environmental sensors, or contextual metadata, to make detection systems more reliable and easier to understand. For example, combining sound with vibration patterns can enhance industrial diagnostics, and combining sound with geolocation data could improve the safety of smart city applications.
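The industrial example above can be sketched as late fusion, where each modality produces its own class probabilities and a weighted average decides. This is one simple fusion strategy among several (early, feature-level fusion is another); the class names, scores, and equal weights below are hypothetical.

```python
def late_fusion(scores_by_modality, weights):
    """Weighted average of per-modality class probabilities.

    Returns the winning label and the fused score for every class.
    """
    modalities = list(scores_by_modality)
    classes = scores_by_modality[modalities[0]].keys()
    total_weight = sum(weights[m] for m in modalities)
    fused = {
        c: sum(weights[m] * scores_by_modality[m][c] for m in modalities) / total_weight
        for c in classes
    }
    return max(fused, key=fused.get), fused


weights = {"acoustic": 0.5, "vibration": 0.5}
# The microphone alone leans towards a fault; vibration strongly says normal.
label, fused = late_fusion(
    {"acoustic": {"normal": 0.45, "bearing_fault": 0.55},
     "vibration": {"normal": 0.90, "bearing_fault": 0.10}},
    weights,
)
print(label)  # normal
```

In this example the second modality overrules a marginal acoustic decision, which is how multimodal systems can reduce false alarms caused by noise in a single channel.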

In the medical field, tiny MEMS microphones built into wearables could enable continuous, passive health monitoring, including real-time detection of breathing problems, vocal fatigue, or emotional stress. Smart acoustic systems are also likely to help protect the environment by monitoring biodiversity and climate change indicators or by detecting wildfires early.

Ultimately, intelligent acoustic systems are expected to move from reacting to sounds to predicting what will happen next, turning the sounds of our environment into useful information about its physical, biological, and social state.

The development of intelligent sound monitoring systems is not slowing down. On the contrary, in the coming years, we can expect breakthroughs that will further integrate technology into the everyday functioning of societies. Increasingly advanced artificial intelligence algorithms, including self-learning, generative models, and graph networks, will enable better differentiation of acoustic patterns, particularly in conditions of high noise, interference, and uncertainty. One of the development directions is integrating acoustic systems with multimodal monitoring platforms, combining sound with video image analysis, motion detection, vibrations, or environmental parameters. Such systems will not only be more accurate but will also better understand the context of the observed situation.

We can expect experimental implementations using quantum technologies in the medium term, particularly for rapidly analysing complex signals or modelling nonlinear acoustic phenomena in real time. Another direction is applications in new, rarely explored areas—for example, precision agriculture, where sound monitoring can support pest detection or crop condition diagnosis, and space exploration, where acoustics can be used to test structures and diagnose the operation of mechanisms in vacuum conditions or controlled environments.

This points to a future in which intelligent acoustic analysis becomes a standard component of IoT and AI systems, whether in urban, industrial, medical, or planetary environments. However, realising this vision requires not only technological progress but also appropriate regulations, ethical guidelines, and social acceptance.

7. Discussion and Comparative Analysis

The analysis of recent studies presented in the section Applications of Intelligent Acoustic Monitoring reveals a growing interest in using AI-based acoustic methods for a wide range of practical applications. Although the works differ in scope, technology, and purpose, several recurring patterns and comparative insights emerge.

First, many systems rely on Mel-Frequency Cepstral Coefficients (MFCCs) as a fundamental feature extraction method, regardless of the application domain. This is observed in works related to UAV fault detection [126], vehicle diagnostics [127], and voice disorder detection [119]. MFCCs are a lightweight and effective representation of sound, enabling high classification accuracy even with limited computing resources.
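Since MFCCs recur across so many of the reviewed systems, a compact NumPy sketch of the standard pipeline (framing, Hamming window, power spectrum, triangular mel filterbank, logarithm, DCT-II) may help. It is a minimal illustration, not the implementation used in any cited work: pre-emphasis, liftering, and delta features are omitted, the parameter defaults are assumptions, and established libraries such as `librosa.feature.mfcc` differ in details such as filter normalisation.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters evenly spaced on the mel scale: (n_filters, n_fft//2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, centre, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, centre):  # rising edge
            fbank[m - 1, k] = (k - left) / max(centre - left, 1)
        for k in range(centre, right):  # falling edge
            fbank[m - 1, k] = (right - k) / max(right - centre, 1)
    return fbank


def dct_ii(x, n_coeffs):
    """Orthonormal DCT-II along the last axis, keeping the first n_coeffs."""
    n = x.shape[-1]
    k = np.arange(n_coeffs)[:, None]
    i = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * i + 1) / (2 * n))
    scale = np.full(n_coeffs, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale


def mfcc(signal, sample_rate, n_fft=512, hop=256, n_filters=26, n_coeffs=13):
    """MFCC matrix of shape (n_frames, n_coeffs)."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < n_fft:
        raise ValueError("signal shorter than one frame")
    window = np.hamming(n_fft)
    frames = np.array([signal[s:s + n_fft] * window
                       for s in range(0, len(signal) - n_fft + 1, hop)])
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    mel_energy = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    return dct_ii(np.log(mel_energy + 1e-10), n_coeffs)


sr = 16000
t = np.arange(sr) / sr
features = mfcc(np.sin(2 * np.pi * 440 * t), sr)  # 1 s test tone
print(features.shape)  # (61, 13)
```

Thirteen coefficients per 32 ms frame is a far smaller input than the raw waveform, which is one reason MFCCs pair well with lightweight classifiers on constrained hardware.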

Second, the accuracy of classification models across studies is generally very high. For instance, Yaman et al. reported up to 100% accuracy for UAVs with fewer motors, while Ali et al. achieved over 99% accuracy in detecting voice disorders. This suggests that well-preprocessed acoustic data, paired with classical machine learning methods such as SVM, can be highly effective without resorting to deep neural networks, which are often more computationally demanding.

In contrast, more complex urban environments, such as those addressed in the works of Jezdovic et al. [118] and Benocci et al. [128], require scalable infrastructure and spatio-temporal analysis, often employing unsupervised methods such as k-means clustering or eco-acoustic indices. These approaches emphasise the importance of context and long-term monitoring rather than event-based classification alone.

Another interesting difference lies in the level of hardware integration. Some approaches, such as that of Jagatheesaperumal et al. [129], integrate acoustic modules into physical hardware (vehicle wheel rims), while others, like the crowdsensing system of Jezdovic et al., leverage mobile devices and user participation. This contrast highlights two strategies, passive integration into the environment versus active human involvement, each with its own pros and cons.

Finally, many works touch on future scalability and integration with other systems (for example, VANET, smart city platforms, and healthcare monitoring). Yet none of them proposes a unified, multimodal acoustic platform that could operate across domains, which is the gap our work addresses with an integrative vision.

The reviewed works demonstrate that acoustic monitoring is evolving rapidly, with robust, efficient, and domain-specific solutions. However, the novelty of our article lies in offering a cross-sector perspective that outlines how AI, the IoT, and acoustics converge to form intelligent, scalable systems. This conceptual synthesis, absent from the surveyed literature, makes our contribution both timely and unique.

To better illustrate the diversity of approaches in intelligent acoustic monitoring, Table 1 summarises selected publications discussed in this article. It compares their main features, including the application, the type of acoustic data analysed, the feature extraction and classification methods used, the accuracy achieved, and the key innovations of each work. Although the core audio processing is similar across studies, the approaches differ strongly depending on the application context. The highest accuracies (above 99%) were achieved in biomedical applications and UAV damage detection, where the acoustic environment is relatively controlled. By contrast, works carried out in urban environments (for example, crowdsensing or noise mapping) focused not on classification but on spatio-temporal analysis and on supporting decision-makers by interpreting large datasets. Distinctive approaches include, among others, lightweight classification methods that can run on edge devices and the integration of multiple sensors (for example, microphones and ultrasonic modules), indicating that acoustic solutions are developing towards effective, context-aware, low-cost real-time monitoring.

8. Conclusions

This review aimed to explore how sound, often overlooked or treated as a secondary signal, has come to play a much more central role in intelligent systems. We found that across very different application areas, from monitoring road traffic to diagnosing health conditions, sound was not merely useful but essential.

The range of current implementations is already impressive. Some systems detect mechanical faults from just a few seconds of engine noise, while others analyse city soundscapes to identify patterns in biodiversity. But what makes them effective is not only the use of advanced AI techniques. Equally important are practical elements, including the placement and type of sensors, the method of data processing (whether locally or in the cloud), and how the system handles disruptions, noise, or limited power.

As we reviewed dozens of studies, a few clear themes emerged. One was the steady move toward combining multiple data sources: not just sound, but also video, temperature, and motion. Another was the increasing reliance on decentralised processing, in which data are analysed where they are captured instead of all being sent to the cloud. That shift matters, especially for applications where real-time feedback or data privacy is critical.

However, the field still faces significant challenges. Many systems struggle with consistency in noisy or unpredictable environments. Some models perform well in the lab but lose accuracy in the real world. Additionally, there is the question of privacy—continuous listening, even for legitimate reasons, can raise serious concerns when it is carried out in public or semi-private spaces.

We also noticed several research gaps. For instance, there was a lack of shared benchmarks across domains, making it difficult to compare results or replicate findings. Very few studies looked at long-term performance or system durability. And in many cases, there was too little discussion of what happens when these systems fail—something we will need to understand better as adoption grows.