Abstract

Many dynamic systems experience unwanted actuation caused by an unknown exogenous input. Typically, when these exogenous inputs are stochastically bounded and a basis set cannot be identified, a Kalman-like estimator may suffice for state estimation, provided there is minimal uncertainty regarding the true system dynamics. However, such exogenous inputs can encompass environmental factors that constrain and influence system dynamics and overall performance. These environmental factors can modify the system’s internal interactions and constitutive constants. The proposed control scheme examines the case where the true system’s plant changes due to environmental or health factors while being actuated by stochastic variances. This approach updates the reference model by utilizing the input and output of the true system. Lyapunov stability analysis guarantees that both internal and external error states will converge to a neighborhood around zero asymptotically, provided the assumptions and constraints of the proof are satisfied.

1. Introduction

Dynamic systems can often experience unwanted actuation caused by an exogenous input. For aerospace systems, the input can be a gust of wind, a change in atmospheric pressure, or a variation in temperature, among other factors [1,2,3]. These outside conditions can affect the dynamics and overall performance of the system. Two main methods that deal with exogenous inputs are disturbance accommodating control (DAC) and robustness techniques. Several variations of DAC exist; one notable technique involves identifying the disturbance’s basis and utilizing adaptive control to determine the appropriate linear combination of the basis to remove the unknown input [4,5]. Notably, the adaptive control method of DAC is derived from a fixed gain method, which requires solving a set of matching conditions (also known as solvability conditions) before implementing DAC [6]. The fixed gain method requires significant knowledge of the system. Conversely, the DAC can be implemented for the adaptive case if system stability conditions are satisfied. An alternative viewpoint is to treat disturbances as unknown inputs to the system, for which an observer-estimator can be formulated to estimate the disturbance [7,8].

In many instances, the unknown exogenous input behaves stochastically, and the basis set of the disturbance remains unidentified. In 2002, Fuentes and Balas explored the case of Robust Adaptive DAC, given that a set of known and unknown input basis functions exist [9]. Robustness techniques emerged from a limitation of Luenberger-like estimators, namely their inability to account for stochastic noise adequately [10,11]. This limitation ultimately led to the development of traditional Kalman-like filters, which operate under the assumption that the stochastic variation in the unknown input follows a Gaussian distribution centered around zero [12,13]. For Luenberger and Kalman-like estimators to work, there must be minimal uncertainty about the true system. The sensitivity of Luenberger and Kalman-like estimators to uncertainty about the true system led to the creation of plant-robust techniques [14,15].

The proposed control technique expands our original finding by accounting for unknown input stochastic variation, where the input basis and plant model uncertainty are unknown [16]. The control technique can accommodate significant changes to the true system’s plant, as defined in the derivation, which may arise from environmental factors, aging, or usage effects. Changes to the plant can involve modifications to internal interactions and constitutive constants. In 2022, Griffith and Balas developed an open-loop coupled approach for robust adaptive plant and input estimation. Plant estimation depends on adaptive control, and input estimation uses a fixed gain approach [17]. The proposed control technique is a closed-loop approach, enabling the decoupling of plant and input estimation. The closed-loop method allows access to the true system’s input and output to update the reference model adaptively.

The proposed control scheme is designed for a generic system and can, in theory, be implemented in any system that falls within the assumptions and constraints of the proof. Nonetheless, practical and numerical limitations may arise, some of which will be mentioned later in the text. While the proof is presented for globally linear time-invariant (LTI) systems, the proposed observer-estimator can also be applied to local LTI systems, provided that the assumptions and constraints of both the local LTI system and the derived theorem are satisfied. Given that it is common engineering practice to linearize non-linear systems around an operating point, the proposed scheme is well suited to aid state estimation in such cases [18,19]. Moreover, the proposed method can either complement or replace gain estimation scheduling techniques, which are often employed to estimate the state of a system under varying operational conditions or non-linear dynamics [20]. Gain estimation scheduling can be computationally intensive, especially when a large number of operating points must be considered, and it often requires detailed knowledge of the system dynamics.

Although the required decomposition of the true plant may seem restrictive, it is one of the scheme’s strongest attributes. This becomes especially apparent when the true system is derived from a non-linear model linearized at a nominal operating point. If the system subsequently undergoes a health-related change, such that the original non-linear model no longer accurately captures the dynamics, the theory still guarantees that the error states will converge to a neighborhood around zero, provided that the altered system continues to satisfy the structured decomposition. However, it is essential to note that there may still be numerical and practical limitations. While the theorem is often framed in the context of health monitoring, it can also be interpreted as an adaptive observer that tracks changes in the true system across different localized operating regimes.

Additionally, given that the proposed estimator is non-invasive—meaning that none of the estimated states are fed back into the true system—the control scheme can be utilized without adversely affecting the true system. The robustness proof depends on two stability criteria: Strictly Positive Real (SPR) and Almost Strictly Dissipative (ASD). For a formal definition, along with SPR and ASD assumptions and constraints, refer to [16,21].

2. Main Result

The work presented herein aims to determine the internal and external state of the true physical system given a form of health change. In this context, a health change constitutes any deviation that can be captured within the prescribed decomposition of the true plant, typically manifesting as variations in constitutive constants or internal interactions. Such changes may arise due to environmental influences, aging, operational wear, or shifts in the system’s operating point. The initial model and the true system may differ substantially; as long as the true system conforms to the prescribed decomposition, the proposed proof guarantees that the estimation error will converge to a neighborhood around zero. However, the theorem may have real-world limitations, some of which are mentioned in the discussion section of the paper.

This study extends our previous findings by considering the case where the true system experiences stochasticities and non-linearities in the dynamics, summarized in Theorem 1. In contrast, earlier results only considered model uncertainties [16]. Here, given an initial model of the true plant, states are estimated using known inputs and the true system’s external state. Due to the inherent stochasticities, the state error can only be guaranteed to converge to a neighborhood around zero. In the absence of such stochasticities, our prior results demonstrate that the estimation error asymptotically converges to zero [16].

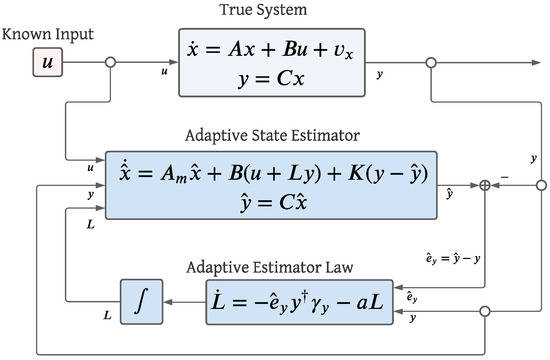

Although presenting the main theorem prior to introducing the true system and the proposed observer may seem unconventional, this structure is intended to provide a high-level overview before delving into the technical details. The control diagram for Theorem 1 is expressed in Figure 1.

Theorem 1.

Output Feedback on the Reference Model for Robust Adaptive Plant and State Estimation.

Consider the following state error system:

where is the estimated internal state error, is the estimated external state error, is the fixed-correction matrix, is the variability-uncertainty term, K is a fixed gain, is an interaction tuning term, ‘a’ is a positive scalar feedback term, and v are the unknown stochastic variations present in the system.

Given:

1. The triples of and are ASD and SPR, respectively.
2. A model plant must exist.
3. Input matrix and output matrix are known.
4. Allow .
5. The eigenvalues of the true and reference plants are stable (i.e., & ).
6. Input must be bounded and continuous.
7. Allow the stochastic variation to be bounded .
8. Allow ‘a’ to be a scalar value , where is the minimum eigenvalue of matrix and is the maximum eigenvalue of matrix .
9. Allow to be bounded ∋.
10. Allow to satisfy .

If conditions are met, then asymptotically, where is the radius confidence around zero. is guaranteed to be bounded; however, there is no guarantee of converging.

Figure 1.

Control diagram for adaptive plant and state estimation accounting for stochastic variations and environmental/health changes in the true system using known inputs and outputs.

2.1. Defining True System Dynamics

This case study assumes the true plant dynamics are linear time-invariant and either weak stochasticities or non-linearities are bounded and present in the dynamics:

The input matrix , output matrix , output (external) state , and input are assumed to be known and well understood. The true plant is assumed to have stable eigenvalues , also referred to as a Hurwitz stable matrix. Given that the true plant experiences a health change, the true plant dynamics related to internal interactions and constitutive constants are unknown, but an initial plant model exists.
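The true-system description above can be sketched numerically. The following is a minimal sketch, assuming the true dynamics take the form `x' = A x + B u + v`, `y = C x` with a bounded disturbance `v`; the function name `simulate_true_system`, the uniform disturbance model, and all matrix values are hypothetical placeholders rather than the paper's values.

```python
import numpy as np

def simulate_true_system(A, B, C, u, v_bound, x0, dt, steps, seed=0):
    """Forward-Euler simulation of x' = A x + B u(t) + v(t), y = C x.

    Assumption: each component of v(t) is drawn uniformly from
    [-v_bound, v_bound], which is one simple way to model a bounded
    stochastic variation.
    """
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=float)
    ys = np.empty((steps, C.shape[0]))
    for k in range(steps):
        ys[k] = C @ x                                      # measured (external) state
        v = rng.uniform(-v_bound, v_bound, size=x.shape)   # bounded disturbance
        x = x + dt * (A @ x + B @ u(k * dt) + v)
    return ys

# Hypothetical 2-state plant (placeholder values)
A = np.array([[0.0, 1.0], [-2.0, -3.0]])
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])

# Hurwitz check: every eigenvalue must have a negative real part
is_hurwitz = bool(np.all(np.linalg.eigvals(A).real < 0))

# Unit-step response over 20 s with a small bounded disturbance
ys = simulate_true_system(A, B, C, lambda t: np.array([1.0]),
                          v_bound=0.05, x0=[0.0, 0.0], dt=1e-3, steps=20000)
```

For this placeholder plant, the noise-free steady-state output to a unit step is `-C A^{-1} B = 0.5`, so the simulated output should settle near that value with a small disturbance-induced ripple.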

2.2. Overview of Updating the Reference Model

The following sections will derive a control law to minimize the state error between the true (Equation (2)) and model (Equation (3)) systems given the presence of stochasticities in the true system. Note that both the true and model systems match in dimensions but may differ in internal interactions and constitutive constants. The eigenvalues of the initial model plant are stable and the weak stochasticities are unknown, bounded, and not accounted for in the reference model. Accounting for stochasticities in the true system adds an additional layer of complexity, potentially making the reference model dynamics less reliable.

As a broad overview, the reference model considers both the input and output of the true system to modify its dynamics, aiming to minimize the estimated state errors . To update the reference model, the true system’s plant is assumed to be decomposable into two components: the initial plant model and the plant correction term :

The decomposition of the true plant is structured so that the initial model can be updated and corrected in the estimator via a correction term :

where is the variability-uncertainty term. As derived later, the correction term is updated adaptively using the output state and the output error between the model and true systems. By adjusting the reference dynamics, both internal and external errors can be guaranteed to converge to a neighborhood around zero asymptotically. For the derived control scheme to be feasible, the triple of must be ASD and must be SPR.
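Whether a given plant fits the structured decomposition can be checked numerically. The sketch below assumes the decomposition has the form `A = A_m + B L_star C` (an output-feedback-shaped correction); the helper name `fit_correction` and all numerical values are hypothetical. It computes the least-squares correction matrix with pseudoinverses and reports how much of the plant mismatch is left unexplained.

```python
import numpy as np

def fit_correction(A_true, A_m, B, C):
    """Least-squares L such that A_m + B L C best matches A_true.

    Returns L and the Frobenius norm of the unexplained residual.
    A residual near zero means A_true fits the assumed decomposition.
    """
    L = np.linalg.pinv(B) @ (A_true - A_m) @ np.linalg.pinv(C)
    residual = np.linalg.norm(A_true - (A_m + B @ L @ C))
    return L, residual

# Hypothetical model plant and input/output matrices (placeholders)
A_m = np.array([[-1.0, 0.0], [0.0, -2.0]])
B = np.array([[1.0], [1.0]])
C = B.T
L_star = np.array([[-0.5]])

# A plant that satisfies the assumed decomposition exactly
A_ok = A_m + B @ L_star @ C
L_ok, res_ok = fit_correction(A_ok, A_m, B, C)

# A plant with an extra term that B L C cannot represent
A_bad = A_ok + np.array([[0.0, 0.3], [0.0, 0.0]])
L_bad, res_bad = fit_correction(A_bad, A_m, B, C)
```

In practice the true plant is unknown, so this check is only usable offline, for example when assessing whether a candidate health change in a simulation model stays within the assumed structure.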

2.3. Estimated State Error

Internal state information is often blended into a linear combination or omitted from the external state . Therefore, an estimator is required to capture the full state vector . However, the estimator complexity increases when the true system undergoes a health change and when bounded stochasticities exist in the system dynamics. Given the initial plant and using the true system’s external state, an estimator-observer can be created:

Note that the estimator and true systems are actuated using the same input .

To minimize the error between both the estimator and the true systems, consider the following error states:

If both internal and external error states converge to zero ∋, the true plant states have been numerically captured. To guarantee , error state dynamics must be investigated:

Therefore, the state error equations can be written as follows:

where and . In addition, the estimator can be modified to use fixed gain :

Resulting in the following error equations:

In order to use the fixed gain , must be observable and selected fixed gain must satisfy: .
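The two requirements on the fixed gain can be checked with a few lines of linear algebra. This is a minimal sketch, assuming the gain condition is that `A_m - K C` is Hurwitz; the matrices and the gain `K` are hypothetical placeholders.

```python
import numpy as np

def observability_matrix(A, C):
    """Stack C, C A, ..., C A^(n-1)."""
    n = A.shape[0]
    return np.vstack([C @ np.linalg.matrix_power(A, k) for k in range(n)])

def is_hurwitz(M):
    """True when every eigenvalue of M has a negative real part."""
    return bool(np.all(np.linalg.eigvals(M).real < 0))

# Hypothetical model plant, output matrix, and fixed gain (placeholders)
A_m = np.array([[0.0, 1.0], [-2.0, -3.0]])
C = np.array([[1.0, 0.0]])
K = np.array([[2.0], [1.0]])

# The pair (A_m, C) is observable when the observability matrix has full rank
observable = bool(np.linalg.matrix_rank(observability_matrix(A_m, C)) == A_m.shape[0])

# Assumed gain condition: the closed-loop estimator matrix is stable
gain_ok = is_hurwitz(A_m - K @ C)
```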

Independent of the desired estimator, cannot guarantee to converge to zero because of the two residual terms in the error equations. An additional argument will be needed to remove and bound residual terms.

2.4. Lyapunov Stability for the Estimated State Error

Due to the presence of residual terms in the error equations, cannot be guaranteed. To address this, Lyapunov stability analysis will be employed to minimize and possibly remove all of the error state. In this analysis, the internal error state will be minimized by considering an energy-like function for the error system with assumed real scalars:

where is the conjugate-transpose operator. Following, is a positive-definite and self-adjoint matrix , alternatively written as . To understand how the error state in the energy-like function evolves with time, take the time derivative of and substitute error dynamics:

The inner product operator is denoted as .

Consider the SPR condition modified for the reference model:

where . Applying the reference model SPR condition on ,

Removing the residual terms in Equation (15) results in .
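An SPR certificate can be verified numerically for a candidate matrix `P`. The sketch below assumes the standard Kalman–Yakubovich form of the condition, `A_m^T P + P A_m = -Q` with `P` and `Q` positive definite and `P B = C^T`; the function name `check_spr_certificate` and the example matrices are hypothetical.

```python
import numpy as np

def check_spr_certificate(A, B, C, P, tol=1e-9):
    """Check that P certifies the (assumed) SPR conditions for (A, B, C):
    P symmetric positive definite, Q = -(A^T P + P A) positive definite,
    and P B = C^T."""
    Q = -(A.T @ P + P @ A)
    p_symmetric = np.allclose(P, P.T, atol=tol)
    p_posdef = np.all(np.linalg.eigvalsh(P) > 0)
    q_posdef = np.all(np.linalg.eigvalsh((Q + Q.T) / 2) > 0)
    coupling = np.allclose(P @ B, C.T, atol=tol)
    return bool(p_symmetric and p_posdef and q_posdef and coupling)

# Hypothetical reference model (placeholders): with C = B^T, P = I is a candidate
A_m = np.array([[-1.0, 0.0], [0.0, -2.0]])
B = np.array([[1.0], [1.0]])
C = B.T
P = np.eye(2)

spr_ok = check_spr_certificate(A_m, B, C, P)

# Changing the output matrix breaks the coupling condition P B = C^T
spr_broken = check_spr_certificate(A_m, B, np.array([[1.0, 0.0]]), P)
```

Note that this only verifies a given `P`; finding a `P` that satisfies both conditions simultaneously is a separate feasibility problem.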

In order to remove or compensate the two residual terms in Equation (15), consider another Lyapunov energy-like function:

To determine the energy-like time rate of change of , take the time derivative of :

A control law is defined for the time rate of change of plant correction variance :

where a is a scalar. Additional constraints for a will be derived later. The term in Equation (18) functions as a feedback filter. Substituting Equation (18) into Equation (17):

Therefore, the closed-loop Lyapunov function for the error system can be written as follows:

Following, the closed-loop energy-like time rate of change for the error system follows:

While adaptive feedback has eliminated some of the residual terms in the closed-loop time derivative of the Lyapunov function, as demonstrated in Equation (21), residual terms still exist such that is not guaranteed. As a result, the Barbalat–Lyapunov Lemma cannot be applied and therefore does not guarantee asymptotically. An additional argument will be presented to establish an upper bound on the estimated state error term, confining both the internal and external error to a neighborhood around zero.
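The closed-loop behavior discussed in this section can be illustrated with a small simulation. This is a sketch under several labeled assumptions: the true plant is `A = A_m + B L_star C`, the estimator is `x_hat' = A_m x_hat + B L y + B u` (no fixed gain), and the adaptive law is `L' = -gamma e_y y^T - a L` with `e_y = y_hat - y`. All numerical values, and the helper name `run`, are hypothetical.

```python
import numpy as np

def run(adapt, gamma=5.0, a=0.01, dt=1e-3, T=20.0, v_bound=0.05, seed=0):
    """Simulate the true plant and a non-invasive estimator.

    Returns the mean of ||x_hat - x|| over the final second and the final L.
    """
    rng = np.random.default_rng(seed)
    A_m = np.array([[-1.0, 0.0], [0.0, -2.0]])   # hypothetical initial model plant
    B = np.array([[1.0], [1.0]])
    C = B.T                                       # P = I then gives P B = C^T
    L_star = np.array([[-0.5]])                   # hypothetical fixed correction
    A = A_m + B @ L_star @ C                      # assumed true-plant decomposition
    x = np.array([1.0, -1.0])
    x_hat = np.zeros(2)
    L = np.zeros((1, 1))
    errs = []
    for k in range(int(T / dt)):
        t = k * dt
        u = np.array([1.0 + 0.5 * np.sin(t)])          # known bounded input
        v = rng.uniform(-v_bound, v_bound, size=2)     # bounded disturbance (true system only)
        y, y_hat = C @ x, C @ x_hat
        e_y = y_hat - y                                # external (output) error
        dx = A @ x + B @ u + v
        dx_hat = A_m @ x_hat + B @ (L @ y) + B @ u     # estimator is never fed back to the plant
        # Assumed adaptive law with leakage term -a L (the feedback filter)
        dL = -gamma * np.outer(e_y, y) - a * L if adapt else np.zeros_like(L)
        x, x_hat, L = x + dt * dx, x_hat + dt * dx_hat, L + dt * dL
        if t >= T - 1.0:
            errs.append(np.linalg.norm(x_hat - x))
    return float(np.mean(errs)), L

err_adapt, L_final = run(adapt=True)
err_fixed, _ = run(adapt=False)
```

With these placeholder values, the adapted estimator ends with a much smaller internal error than the unadapted reference model, and `L_final` settles close to `L_star`, slightly biased by the leakage term and the disturbance.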

2.5. Robustness Lyapunov Analysis for Error System

To determine the radius of convergence for the estimated state error , each component from the right-hand side (RHS) of Equation (21) must be bounded. The following sections will derive bounds for each component on the RHS of Equation (21) by combining Sylvester’s Inequality, the Cauchy–Schwarz Inequality, and algebraic manipulation.

2.5.1. Upper Bounding

In order to find the upper bound of , first consider bounding the Lyapunov function in Equation (12) using Sylvester’s Inequality:

where are the minimum and maximum eigenvalues of matrix , respectively, and is the norm of the internal state error . Continuing, can be upper-bounded via:

From Equation (23), can be solved for:

Moving forward, consider using Sylvester’s inequality to bound the term from Equation (21):

where are the minimum and maximum eigenvalues of matrix , respectively. Therefore, the upper-bound of Equation (25) follows:

Substituting the term from Equation (24) into Equation (26) results in the following:

where and is a scalar. Substituting Equation (27) into Equation (21),

results in an updated closed-loop energy-like time rate of change for the error system.
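The eigenvalue bound used throughout this subsection (called Sylvester's Inequality in the text, and also commonly known as the Rayleigh quotient bound) states that `lambda_min ||e||^2 <= e^T P e <= lambda_max ||e||^2` for a symmetric positive-definite `P`. A quick numerical check, using a randomly generated placeholder matrix, is sketched below.

```python
import numpy as np

rng = np.random.default_rng(1)

# Build an arbitrary symmetric positive-definite matrix (placeholder)
M = rng.standard_normal((3, 3))
P = M @ M.T + 3.0 * np.eye(3)

# eigvalsh returns the eigenvalues of a symmetric matrix in ascending order
eigs = np.linalg.eigvalsh(P)
lam_min, lam_max = eigs[0], eigs[-1]

def within_bounds(e, tol=1e-9):
    """Check lam_min * ||e||^2 <= e^T P e <= lam_max * ||e||^2."""
    quad = e @ P @ e
    norm_sq = e @ e
    return lam_min * norm_sq - tol <= quad <= lam_max * norm_sq + tol

all_ok = all(within_bounds(rng.standard_normal(3)) for _ in range(1000))
```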

2.5.2. Upper Bounding

2.5.3. Upper Bounding

The right-hand side of Equation (31) can be upper bounded by taking the absolute value of terms:

To further bound , recall the Cauchy–Schwarz inequality: . Therefore, applying the Cauchy–Schwarz’s Inequality on :

From Equation (33), the term can be further bounded by applying Sylvester’s Inequality:

Therefore, the upper-bound of follows:

Given that the norm of the bounded-stochastic waveform can vary with time, the upper bound of can be expressed as a supremum () such that

Using the result from Equation (36), the upper-bound of from Equation (35) can be expressed as follows:

Therefore, using the results from Equations (33) and (37), the upper-bound of can be expressed as follows:

2.5.4. Upper Bounding

Next, consider bounding the term from Equation (32). Using the Cauchy–Schwarz inequality, alternatively written as , on :

Using the invariance of the trace operator under cyclic permutations (i.e., ), the term from Equation (39) can be rewritten as follows:

Equation (40) can be upper bounded by applying the Cauchy–Schwarz inequality:

For notation purposes, allow the following assumptions:

and

Substituting Equations (42) and (43) in Equation (41),

Following, Equation (44) can be substituted in Equation (39):
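The two tools used in this subsection, the cyclic property of the trace and the Cauchy–Schwarz inequality, can be checked numerically on a bilinear term of the shape `e_y^T dL y`. The variable names and dimensions below are hypothetical placeholders.

```python
import numpy as np

rng = np.random.default_rng(2)
dL = rng.standard_normal((2, 3))   # placeholder for the correction variance
y = rng.standard_normal(3)         # placeholder output vector
e_y = rng.standard_normal(2)       # placeholder output error

# Scalar bilinear form and its trace rewriting: e_y^T dL y = tr(dL y e_y^T)
scalar_form = e_y @ dL @ y
trace_form = np.trace(dL @ np.outer(y, e_y))

# Cauchy-Schwarz style bound using the Frobenius norm of dL
bound = np.linalg.norm(dL, "fro") * np.linalg.norm(y) * np.linalg.norm(e_y)

# Cyclic invariance of the trace for two generic matrices
X = rng.standard_normal((3, 4))
Y = rng.standard_normal((4, 3))
cyclic_ok = bool(np.isclose(np.trace(X @ Y), np.trace(Y @ X)))
```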

2.5.5. Combining Bounds to Determine Radius of Convergence

Using the result from Equations (38) and (45) in Equation (32) results in the following:

Following, Equation (46) can also be expressed as follows:

Consider an alternative expression for the left-hand side of Equation (47):

Therefore, Equation (47) can be expressed as follows:

Integrating and evaluating both sides of Equation (49) from 0 to results in the following:

Solving for :

From Equation (51), can be expressed as being bounded for all , which indicates that is also bounded. Given is a function of , it follows that both are likewise bounded.
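The integration step above follows a standard pattern: a differential inequality of the form `V' + c V <= kappa` yields `V(t) <= exp(-c t) V(0) + (kappa / c) (1 - exp(-c t))`, which tends to `kappa / c` as time grows. The sketch below checks this envelope against the worst case `V' = -c V + kappa`; the symbols `c` and `kappa` and their values are hypothetical stand-ins for the constants in the derivation.

```python
import numpy as np

def lyapunov_envelope(V0, c, kappa, t):
    """Closed-form bound obtained by integrating V' + c V <= kappa from 0 to t."""
    return np.exp(-c * t) * V0 + (kappa / c) * (1.0 - np.exp(-c * t))

c, kappa, V0, dt = 0.8, 0.2, 5.0, 1e-3   # placeholder constants

V = V0
worst_gap = -np.inf
for k in range(1, 20001):
    # Worst case of the inequality: V' = -c V + kappa (forward Euler)
    V += dt * (-c * V + kappa)
    worst_gap = max(worst_gap, V - lyapunov_envelope(V0, c, kappa, k * dt))

# Limiting value of the envelope as t grows
ultimate_bound = kappa / c
```

The simulated trajectory never exceeds the envelope, and after 20 s it sits essentially at `kappa / c`, which is the quantity that the subsequent lower bound on the Lyapunov function converts into a radius on the error norm.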

Continuing, can be lower bounded by considering the following:

Substituting the lower bound from Equation (52) into Equation (51),

Solving for results in the following:

Taking the supremum of as time approaches infinity results in the following:

Therefore, the error state norm is upper bounded by a radius of convergence around a neighborhood of zero.

Alternatively, if the following adaptive law is considered,

where from Equation (18), robustness proof can be modified accordingly, resulting in the following radius of convergence:

This version of the robustness proof has looser constraints, as Equation (43) need not be satisfied. Regardless of the adaptive law selected, robustness proof can be extended without loss of generality to consider the error system with fixed gains, as in Equation (11).

2.5.6. Determining the Bounds for

In order to determine a radius of convergence for , recall from Equation (16). Using the cyclic property of the trace, can be alternatively expressed as follows:

Applying the Cauchy–Schwarz inequality on Equation (58),

where is the Frobenius norm of . Given that can be lower-bounded by additional norms, the norm of must be constricted to . Using the previous assumption from Equation (43) on Equation (59) results in the following:

Therefore, can be expressed as follows:

Meaning, Equation (51) can alternatively be lower bounded by

Solving for in Equation (62):

Taking the supremum of as time approaches infinity results in the following:

Therefore, the correction variance norm is upper bounded by a radius of convergence of . Alternatively, if the control law in Equation (56) is considered, the supremum of as time approaches infinity results in the following:

2.5.7. Alternative Bound

As detailed in the previous section, the bound on depends on the assumption outlined in Equation (43). Consider the case where the adaptive law is considered without the feedback filter , as detailed in Equation (56), and the Equation (43) constraint is removed. Applying Sylvester’s Inequality to

Therefore, can be alternatively bounded:

Meaning, the lower bound of can be expressed as follows:

Using the result from Equation (68) to lower bound Equation (51):

Solving and taking the supremum of from Equation (69) as time approaches infinity results in the following:

There are several bounds for as depicted in Equations (64), (65), and (70); however, they are all dependent on the assumptions and constraints of the control problem.

2.6. Main Result Summarized

In conclusion, given that the true system experiences a health change and there exist weak and bounded stochasticities in the true system, the robustness proof with the adaptive law (Equation (71)) guarantees that the internal state error converges to a radius around the neighborhood of zero, . However, the stability proof is only valid when the triples of the model and true system are SPR and ASD, respectively, such that the true plant can be written as . The proof does guarantee that the plant variance is bounded; however, there is no guarantee of . If numerically, the true system’s dynamics or some energy equivalence has been numerically captured. The control diagram for the proof is shown in Figure 1.

3. Illustrative Example

This section presents an illustrative example implementing the control diagram, Figure 1, for state estimation given the uncertainty in the model plant and stochasticities existing in the true dynamics. True and model values for this example are modified from [17]. All initial values for are equal to zero, while the values for range between . While the illustrative example may be perceived as limited, the full capacity of the theorem can be constrained by the specific application and the local uncertainties related to certain operational points. See Appendix A for an alternative example, where the true plant is altered and the fixed gain in the estimator is not considered. Note the assumptions and constraints for this control scheme detailed in Theorem 1.

3.1. State Space Representations for Reference and True Systems

In order to implement the proposed control scheme, an initial model plant must exist. Allow the model system, as defined in Equation (3), to have the following properties:

For the control approach to be viable, as defined in Theorem 1, allow . For this example, allow the fixed correction matrix to follow:

Therefore, the true system, as defined in Equation (2), is written as follows:

Allow the stochasticities to be bounded such that the supremum of . Recall that the internal interactions and constitutive constants of the true system’s plant are unknown, but an initial model exists such that plant dimensions correspond. Furthermore, the input and output matrices are known.

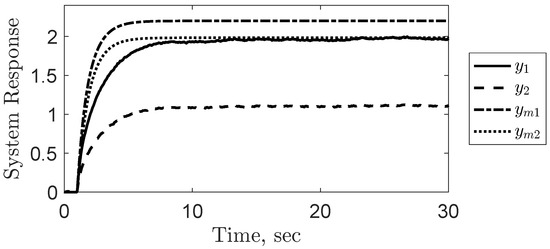

Applying a unit-step input to both the model and true systems, defined by the properties in Equations (72) and (74), respectively, results in discrepancies in the steady-state output responses, shown in Figure 2. The variation in the output response becomes particularly evident when comparing the eigenvalues of the model and the true plant:
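The comparison between model and true responses can be sketched as follows. For a Hurwitz plant, the steady-state output to a unit step is `-C A^{-1} B`. The matrices below are hypothetical placeholders, not the values of Equations (72) and (74), and the decomposition `A = A_m + B L_star C` is an assumed form.

```python
import numpy as np

def dc_gain(A, B, C):
    """Steady-state output per unit constant input for a Hurwitz A: -C A^{-1} B."""
    return -C @ np.linalg.solve(A, B)

# Hypothetical model plant and input/output matrices (placeholders)
A_m = np.array([[-1.0, 0.0], [0.0, -2.0]])
B = np.array([[1.0], [1.0]])
C = B.T

# Hypothetical true plant built from the assumed decomposition
L_star = np.array([[-0.5]])
A_true = A_m + B @ L_star @ C

# Steady-state outputs for a unit step differ between model and true plant
y_ss_model = dc_gain(A_m, B, C)[0, 0]
y_ss_true = dc_gain(A_true, B, C)[0, 0]

# Eigenvalue comparison (both plants are symmetric here, so eigenvalues are real)
eig_model = np.sort(np.linalg.eigvals(A_m).real)
eig_true = np.sort(np.linalg.eigvals(A_true).real)
```

For these placeholder values, the model predicts a steady-state output of 1.5 while the true plant settles at 6/7, and the true eigenvalues shift to approximately -2.707 and -1.293.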

3.2. Defining the Known Input

The estimator and true systems are actuated using the same known bounded and continuous input . For this example, allow the known input to be defined as follows:

This input may represent a known disturbance or any arbitrary excitation used to query the system. Alternatively, the selected input can correspond to the control input that regulates the true system response. Crucially, both the true system and the estimator must be subjected to the same input, beyond the stochastic variation acting solely on the true system.

Figure 2.

True and reference system’s output response given a unit-step input .

3.3. Adaptive State Estimation

The following section implements the control scheme outlined in Theorem 1 and illustrated in Figure 1. The proof demonstrates that the proposed control scheme can be executed with or without a fixed gain in the estimator. Utilizing a fixed gain can offer certain advantages, as it can influence the convergence of the state error. Both cases presented will utilize the same fixed gain, which is derived using a Linear Quadratic Regulator with and . In contrast, the adaptive laws will exhibit slight variations, as noted in Equations (56) and (71). Furthermore, the interaction tuning term controls the estimator’s sensitivity to output error, directly impacting how the error state converges. There are numerical limits for ; if it is increased excessively, the estimator can diverge. For this example, .
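One common way to obtain an estimator gain from a Linear Quadratic Regulator design is to solve the dual algebraic Riccati equation. The sketch below uses `scipy.linalg.solve_continuous_are` for this; the weights `Q` and `R` and the plant matrices are hypothetical placeholders, and the duality-based construction is an assumption about how the gain might be computed rather than the paper's stated procedure.

```python
import numpy as np
from scipy.linalg import solve_continuous_are

# Hypothetical model plant and output matrix (placeholders)
A_m = np.array([[0.0, 1.0], [-2.0, -3.0]])
C = np.array([[1.0, 0.0]])

# Hypothetical LQR weights (placeholders)
Q = np.eye(2)
R = np.array([[1.0]])

# Dual Riccati equation: A P + P A^T - P C^T R^{-1} C P + Q = 0
P = solve_continuous_are(A_m.T, C.T, Q, R)

# Fixed estimator gain from the dual LQR solution
K = P @ C.T @ np.linalg.inv(R)

# Residual of the Riccati equation and stability of A_m - K C
riccati_residual = A_m @ P + P @ A_m.T - P @ C.T @ np.linalg.inv(R) @ C @ P + Q
closed_loop_eigs = np.linalg.eigvals(A_m - K @ C)
```

Larger `Q` relative to `R` generally yields a larger gain and faster error decay, at the cost of greater sensitivity to the disturbance entering through the output error.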

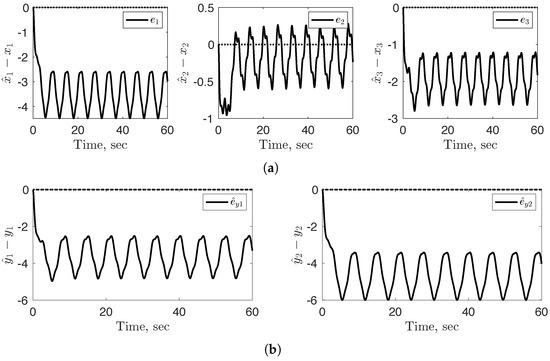

3.3.1. Adaptive Control Scheme Without a Feedback Filter ()

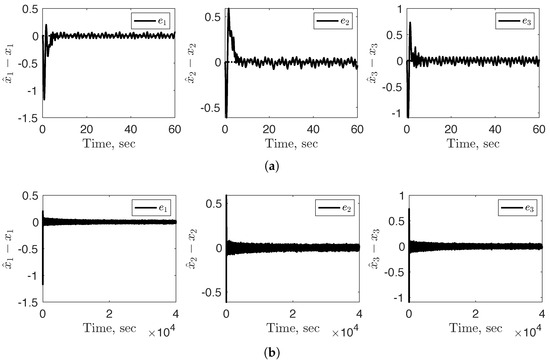

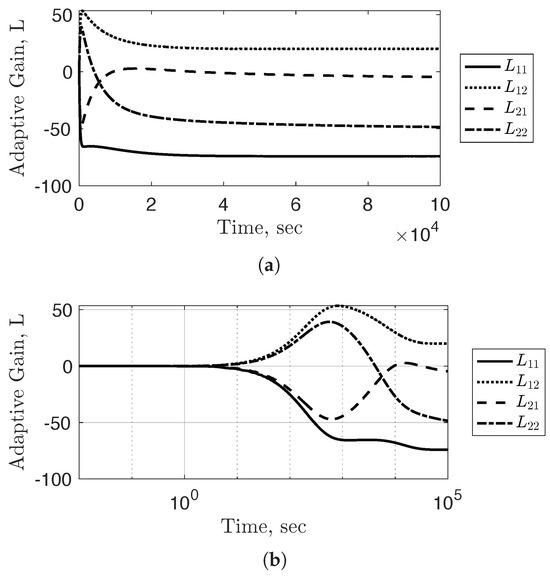

The control scheme illustrated in Figure 1 is implemented with a fixed gain and the adaptive law outlined in Equation (56), where and . As illustrated in Figure 3 and Figure 4, both internal and external state errors converge to a radius around zero. Although the proof establishes that the internal and external error states will converge to a neighborhood around zero, it only assures that the variance matrix will remain bounded. In this particular example, numerical results indicate as collapses such that , shown in Figure 5. Consequently, as , the internal error radius further reduces to a smaller neighborhood around zero, as seen in Figure 3b.

Due to the stochastic variations in the true system, values of L will show variability as time approaches infinity. In order to compare the estimated plant with the true system, the last 20 s of L’s simulated data were averaged, denoted by . Consequently, the for this example is as follows:

Therefore, the estimated plant can be expressed as follows:

where the eigenvalues of are . Observe the similarities in internal interactions and constitutive constants between true and estimated plants, as shown in Equation (74) and Equation (78), respectively.
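The averaging step described above can be sketched as follows. The helper name `tail_average`, the synthetic gain history, and the reconstruction `A_hat = A_m + B L_bar C` are hypothetical and stand in for the simulated data and the paper's estimated-plant expression.

```python
import numpy as np

def tail_average(t, L_hist, window=20.0):
    """Average the adaptive gain over the last `window` seconds of a simulation.

    t has shape (N,), L_hist has shape (N, rows, cols).
    """
    mask = t >= t[-1] - window
    return L_hist[mask].mean(axis=0)

# Synthetic gain history (placeholder): a transient plus a small steady ripple
t = np.linspace(0.0, 100.0, 10001)
L_hist = (-0.5 + 0.5 * np.exp(-t) + 0.01 * np.sin(2.0 * np.pi * t)).reshape(-1, 1, 1)

L_bar = tail_average(t, L_hist, window=20.0)

# Hypothetical model plant and input/output matrices (placeholders)
A_m = np.array([[-1.0, 0.0], [0.0, -2.0]])
B = np.array([[1.0], [1.0]])
C = B.T

# Assumed form of the estimated plant built from the averaged gain
A_hat = A_m + B @ L_bar @ C
eig_hat = np.linalg.eigvals(A_hat)
```

Averaging over a window that is long relative to the ripple period smooths out the disturbance-induced variability, so the resulting plant estimate reflects the steady-state correction rather than a single noisy sample.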

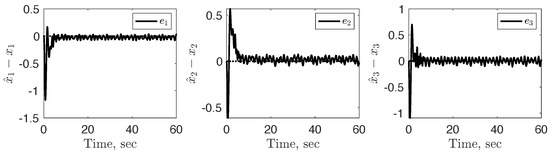

Figure 3.

Both sub-figures (a,b) illustrate the internal state error converging to a radius around a neighborhood of zero; however, they do so at two different time histories. The implemented control scheme used a fixed gain and .

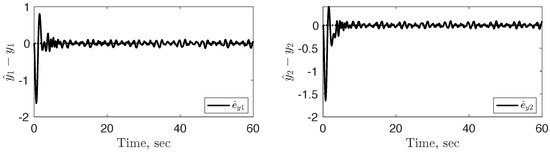

Figure 4.

External state error converging to a radius around zero with the use of the fixed gain and .

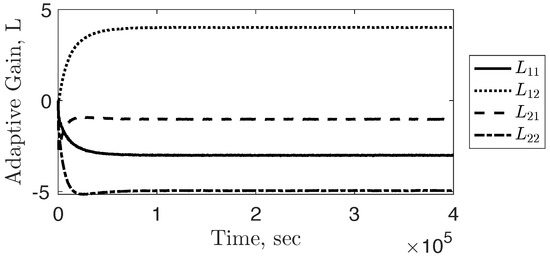

Figure 5.

Adaptive Gain converging to with the use of the fixed gain and .

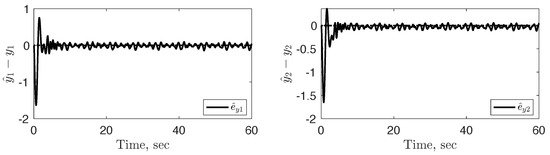

3.3.2. Adaptive Control Scheme with a Feedback Filter ()

In this section, the control scheme illustrated in Figure 1 and adaptive law (Equation (71)) are implemented using a fixed gain (i.e., ), , and . As shown in Figure 6 and Figure 7, both internal and external state errors converge to a radius around zero. Although there is variability in L as time approaches infinity, Figure 8, the average L term as time approaches infinity follows:

Consequently, this leads to the following estimated true plant matrix :

where the eigenvalues are given by . Although the estimated plant matrix does not equal the true plant, gives a better intuition into the true plant compared to the initial model plant and grants . For this example, it is important to note that as , there were no noteworthy changes in the convergence error radius for both internal and external error states, nor in L.

Note the similarities in the state error responses when implementing the adaptive control scheme both with and without the feedback filter. This is evident in the internal error comparison shown in Figure 3a and Figure 6, as well as the external error comparison in Figure 4 and Figure 7. Although the adaptive estimation law differs (as given by Equations (56) and (71)), the error states still converge to a neighborhood around zero. In the case without the feedback filter (), since , the estimation error converges to a smaller-radius neighborhood around zero. This level of reduction in error is not observed in the cases with the feedback filter .

Figure 6.

Internal state error converging to a radius around zero with the use of fixed gain and .

Figure 7.

External state error converging to a radius around zero with the use of the fixed gain and .

Figure 8.

Adaptive gain approaching a steady state with the use of a fixed gain and .

3.3.3. Kalman–Bucy Filter (Kalman Filter in Continuous Time)

In both Section 3.3.1 and Section 3.3.2, the error dynamics for the proposed adaptive state estimator are presented in two variations: without and with the feedback filter. This section depicts the error dynamics without implementing the adaptive estimator; the fixed gain value in the estimator remains unchanged compared to Section 3.3.1 and Section 3.3.2. As illustrated in Figure 9a,b, the lack of adaptation in the reference model results in significantly larger internal and external estimation errors compared to those observed when the adaptive control scheme is applied.

Figure 9.

Sub-figures (a,b) illustrate the internal state error and external state error , respectively, in the absence of reference model updating to account for changes in the true system health. Both error states exhibit significantly larger estimation errors compared to their counterparts obtained using the adaptive scheme.

4. Discussion

The theorem and illustrative example presented in this paper pertain to global LTI systems. However, since many real-world problems are inherently non-linear, the proposed theorem can also be applied to any local linear approximation of a non-linear system, provided that the assumptions and constraints of both the theorem and the linear approximation are met.

While there are theoretical hard constraints, such as that the decomposition of the true plant must follow , these constraints are essential for the proof. Future research could explore ways to relax or circumvent these constraints. Moreover, the decomposition of the true plant may appear unrealistic for many applications; however, if additional actuators and sensors can be incorporated, thereby rendering the true system over-controllable and over-observable, the feasibility of satisfying the decomposition improves. If adding extra sensors is not an option, employing sensor blending or fusion techniques can aid in meeting the decomposition constraints. Sensor blending or fusion techniques are routinely used in signal processing and state estimation applications [22,23]. Although the necessary decomposition of the true system may initially seem restrictive, given an initial model, the theory enables the error states to converge to a neighborhood around zero, regardless of how large or small the values inside are. In practice, numerical limitations may exist in the theorem due to the integration of large errors.

Knowledge of the input and output matrices is essential for the theoretical development and stability proof. This assumption is common in control design as the input matrix denotes how the input influences the system dynamics, and the output matrix specifies the linear combination of the internal states that are measured-observed. Practical issues may arise if these matrices degrade over time or with use, but those types of degradation are outside the scope of this work.

The current design of the proposed estimator is non-invasive, meaning that none of the estimated states are fed back into the true system. As a result, the estimation scheme can be implemented without any risk of harming the true system. One potential application of the estimator is to operate in conjunction with governing inputs that regulate the true system response. This parallel implementation can assist in monitoring or refining the equations of motion that govern the true system dynamics. A significant change in a specific constitutive constant or internal interaction could alert an operator to inspect a particular area for potential failures or indicate that a component within the system needs to be replaced.

The theorem guarantees only asymptotic convergence of the error states, which may limit real-time, online use. In principle, the theorem can be applied to systems with either fast or slow dynamics. However, several factors may limit its practical implementation: the accuracy of the initial system model, the operational range of the true system, and whether the estimated states are used in control design. For instance, consider a mechatronic system with a poorly identified initial model, where the goal is trajectory tracking using the estimated states. In such a case, online implementation of the proposed theorem may not be feasible, although the scenario can still be evaluated offline to assess its performance. Additionally, incorporating a fixed gain term in the estimator can aid or hinder state estimation, depending on how that term is tuned. The proof indicates, however, that the fixed gain term is not necessary for the state estimation error to converge to a neighborhood around zero.

The theorem outlined in this paper accounts for model uncertainty and stochastic noise. If the noise term is removed, the need for the robust analysis subsides. From here, our previous finding, outlined in [16], can be used to ensure . However, the result in [16] can be extended by considering the control law defined in Equation (18), which truncates the Lyapunov analysis expressed in Equation (32) to the following:

where is a scalar value greater than zero . Following a similar analysis described earlier in this text, the error state norm is bounded by the following radius of convergence, denoted as :

where represents a radius around a neighborhood of zero.

5. Conclusions

The true system is influenced by stochastic variations and health changes, resulting in alterations in internal interactions and constitutive constants. If these health changes are not considered, discrepancies may arise between the true system and the reference model. The proposed control scheme addresses these issues by updating the reference model utilizing the input and output of the true system. The stability proof ensures that both the internal and external error states will converge within a defined radius around zero. The size of this radius of convergence is contingent upon the extent of the stochastic variations. A potential application of the estimator is to operate in parallel with the system inputs that regulate the response. This parallel configuration can assist in monitoring the health status of the true system, potentially alerting operators when specific internal interactions or constitutive parameters deviate from their nominal values. Future work will focus on relaxing certain Lyapunov-based stability constraints—most notably, the requirement for a specific decomposition of the true plant.

Author Contributions

Writing—original draft, K.F.; Supervision, M.B. and J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Symbol | Meaning |
|---|---|
| A | True Plant |
| | Model Plant |
| ASD | Almost Strictly Dissipative |
| B | Input Matrix |
| | Ideal Plant Correction Term |
| ∈ | Belongs To |
| C | Output Matrix |
| | Conjugate Transpose |
| ^ | Estimate |
| ∀ | For All |
| | Fixed Correction Matrix |
| | Variance Matrix |
| | Norm |
| SPR | Strictly Positive Real |
| | Set of Eigenvalues |
| ∋ | Such That |
| | Radius of Convergence |
| Re | Real Part |
| ∃ | There Exists |
| | Inner Product |
| u | Input |
| x | Internal State (Full State Vector) |
| y | External (Output) State |

Appendix A. Alternative Illustrative Example

This section adopts the same architecture outlined in Section 3, with modifications to the parameters of the true system and the removal of the fixed gain assumption in the estimator. All other estimation parameters and inputs remain unchanged unless otherwise specified. The purpose of this example is to demonstrate that the theorem remains applicable beyond local uncertainty of the model plant.

Appendix A.1. Defining the True System Plant

Recall that, for the adaptive estimator to be utilized, the true plant must follow a specific decomposition: . In this example, consider an alternative value for given by the following:

Appendix A.2. Adaptive Control Scheme Without a Feedback Filter (aL = 0) and Without a Fixed Gain (K = 0)

The control scheme illustrated in Figure 1 is implemented without a fixed gain (K = 0), and the adaptive law is implemented without the feedback filter (aL = 0), as described in Equation (56). Additionally, the interaction tuning term and the input remain the same as in the previous example.

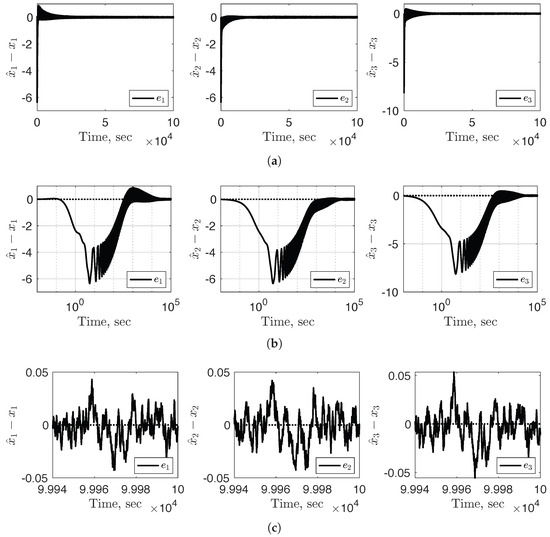

As illustrated in Figure A1 and Figure A2, both internal and external errors converge to a radius around zero. Because the theorem guarantees only asymptotic convergence and the discrepancy between the initial model and the true system is relatively large, the error states take a relatively long time to approach zero. Nonetheless, the model is updated so that the error states converge to a radius around zero.

As discussed in the main text, if there is a large discrepancy between the model and the true system, this theorem may not be realistic in an online setting for fast dynamic systems, because the time required for the error states to approach zero can be substantial. Conversely, the theorem could be beneficial offline, where extended time frames can be simulated quickly.

Furthermore, due to the true system having eigenvalues with large negative real parts, the true system response has a smaller magnitude than the previous example using the same input. Consequently, applying the same input leads to slower observable error decay. For this specific case, increasing the magnitude of the input scales the true system’s response accordingly, allowing the adaptive estimator to adjust rapidly and causing the error to subside faster.
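The role of the eigenvalue locations and of input scaling can be illustrated with the steady-state frequency-response gain of a stable LTI system, |C(jωI − A)⁻¹B|. The sketch below is only an illustration under stated assumptions: the diagonal plants, the input and output matrices, and the function name `output_gain` are hypothetical and are not the systems used in this paper.

```python
import numpy as np


def output_gain(A, B, C, omega=0.0):
    """Magnitude of C (j*omega*I - A)^{-1} B for a stable LTI system.

    For a sinusoidal input of amplitude U at frequency omega (rad/s), the
    steady-state output amplitude is U times this gain (omega = 0 is the DC gain).
    """
    A = np.atleast_2d(np.asarray(A, dtype=float))
    B = np.atleast_2d(np.asarray(B, dtype=float))
    C = np.atleast_2d(np.asarray(C, dtype=float))
    if np.max(np.linalg.eigvals(A).real) >= 0.0:
        raise ValueError("A must have all eigenvalues in the open left half-plane")
    n = A.shape[0]
    transfer = C @ np.linalg.solve(1j * omega * np.eye(n) - A, B)
    return np.abs(transfer)


# Hypothetical plants: same structure, eigenvalues ten times further left.
B = [[1.0], [1.0]]
C = [[1.0, 1.0]]
A_slow = np.diag([-1.0, -2.0])
A_fast = np.diag([-10.0, -20.0])

gain_slow = output_gain(A_slow, B, C)[0, 0]
gain_fast = output_gain(A_fast, B, C)[0, 0]

# Linearity: scaling the input amplitude scales the steady-state output equally.
input_scale = 10.0
scaled_fast_response = input_scale * gain_fast

print(f"DC gain, eigenvalues (-1, -2):   {gain_slow:.3f}")
print(f"DC gain, eigenvalues (-10, -20): {gain_fast:.3f}")
print(f"Fast plant with 10x input:       {scaled_fast_response:.3f}")
```

In this particular example, moving the eigenvalues further into the left half-plane reduces the response to the same input, and scaling the input restores the response magnitude. This is consistent with the observation that a larger input gives the adaptive estimator a larger signal to work with, although the relationship between eigenvalue location and gain depends on the specific plant.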

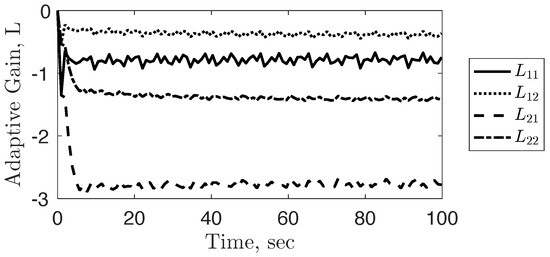

Figure A1.

Sub-figures (a–c) illustrate the internal state error converging to zero over varying time scales. Due to the relatively large discrepancies between the model and the true plant, the internal error requires a significant amount of time to converge to a small neighborhood around zero. The implemented control scheme uses neither a fixed gain nor the feedback filter on the adaptive estimator law.

Although the estimated L data exhibit variability as time approaches infinity, as shown in Figure A3, the last 20 s of simulated data were averaged, denoted by , resulting in the following:

Therefore, the estimated plant can be expressed as follows:

where the eigenvalues of follows: . Compare the similarities between the true and estimated plant, denoted by Equations (A2) and (A4), respectively.
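The averaging and eigenvalue comparison described above can be sketched as follows. This is a hedged illustration rather than the simulation used in this appendix: the matrices `A_m`, `B`, `C`, and `L_star`, the synthetic gain history, and the helper `tail_average` are hypothetical, and the decomposition is assumed to take the form A = A_m + B L C purely for the purpose of the example.

```python
import numpy as np


def tail_average(t, gain_history, window=20.0):
    """Average the gain samples whose time stamps lie in the last `window` seconds.

    Assumes uniformly sampled data, so a plain sample mean approximates
    the time average over the window.
    """
    t = np.asarray(t, dtype=float)
    gain_history = np.asarray(gain_history, dtype=float)
    mask = t >= t[-1] - window
    return gain_history[mask].mean(axis=0)


# Hypothetical model plant, input/output matrices, and ideal correction term.
A_m = np.diag([-1.0, -2.0])
B = np.eye(2)
C = np.eye(2)
L_star = np.diag([-9.0, -18.0])

# ASSUMPTION: the decomposition is taken as A = A_m + B L C for illustration only.
A_true = A_m + B @ L_star @ C

# Synthetic gain history: slow convergence to L_star plus a small persistent ripple,
# standing in for the variability seen in an adaptive gain under noise.
t = np.linspace(0.0, 100.0, 10001)
convergence = (1.0 - np.exp(-0.1 * t))[:, None, None]
ripple = 0.01 * np.sin(2.0 * np.pi * t)[:, None, None] * np.ones((2, 2))
L_history = L_star[None, :, :] * convergence + ripple

# Average the last 20 s of the gain history and rebuild the estimated plant.
L_bar = tail_average(t, L_history, window=20.0)
A_est = A_m + B @ L_bar @ C

eig_true = np.sort(np.linalg.eigvals(A_true))
eig_est = np.sort(np.linalg.eigvals(A_est))
print("true eigenvalues:     ", eig_true)
print("estimated eigenvalues:", eig_est)
```

Averaging over a tail window smooths the residual variability in the adaptive gain, so the eigenvalues of the rebuilt plant can be compared directly with those of the true plant. The window length is a design choice; a window that is too short retains the ripple, while one that is too long includes the transient.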

This example illustrates that model uncertainty can be either local or global. As long as the uncertainty adheres to the specified decomposition, the error states can be shown to converge to the neighborhood around zero. Furthermore, the decay of the error states is influenced by the true system input, emphasizing the role of excitation in the adaptation process.

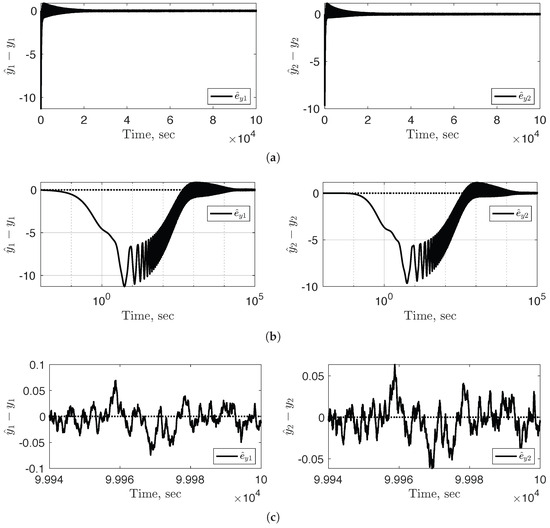

Figure A2.

Sub-figures (a–c) illustrate the external state error converging to zero over varying time scales.

Figure A3.

Sub-figures (a,b) illustrate the adaptive gain converging to without the use of the fixed gain and , across different time scales.

References

- Cook, R.G.; Palacios, R.; Goulart, P. Robust gust alleviation and stabilization of very flexible aircraft. AIAA J. 2013, 51, 330–340.

- Von Karman, T. Compressibility effects in aerodynamics. J. Spacecr. Rocket. 2003, 40, 992–1011.

- Chen, S.; Gojon, R.; Mihaescu, M. High-temperature effects on aerodynamic and acoustic characteristics of a rectangular supersonic jet. In Proceedings of the 2018 AIAA/CEAS Aeroacoustics Conference, Atlanta, GA, USA, 25–29 June 2018; p. 3303.

- Balas, M.; Fuentes, R.; Erwin, R. Adaptive control of persistent disturbances for aerospace structures. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, Monterey, CA, USA, 5–8 August 2000; p. 3952.

- Fuentes, R.J.; Balas, M.J. Direct adaptive disturbance accommodation. In Proceedings of the 39th IEEE Conference on Decision and Control (Cat. No. 00CH37187), Sydney, NSW, Australia, 12–15 December 2000; Volume 5, pp. 4921–4925.

- Wang, N.; Wright, A.D.; Balas, M.J. Disturbance accommodating control design for wind turbines using solvability conditions. J. Dyn. Syst. Meas. Control 2017, 139, 041007.

- Chen, J.; Patton, R.J.; Zhang, H.Y. Design of unknown input observers and robust fault detection filters. Int. J. Control 1996, 63, 85–105.

- Guan, Y.; Saif, M. A novel approach to the design of unknown input observers. IEEE Trans. Autom. Control 1991, 36, 632–635.

- Fuentes, R.J.; Balas, M.J. Robust model reference adaptive control with disturbance rejection. In Proceedings of the 2002 American Control Conference (IEEE Cat. No. CH37301), Anchorage, AK, USA, 8–10 May 2002; Volume 5, pp. 4003–4008.

- Luenberger, D.G. Observing the State of a Linear System. IEEE Trans. Mil. Electron. 1964, 8, 74–80.

- Luenberger, D. An introduction to observers. IEEE Trans. Autom. Control 1971, 16, 596–602.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

- Kalman, R.E.; Bucy, R.S. New Results in Linear Filtering and Prediction Theory. J. Basic Eng. 1961, 83, 95–108.

- Doyle, J.C. A review of μ for case studies in robust control. IFAC Proc. Vol. 1987, 20, 365–372.

- Nagpal, K.M.; Khargonekar, P.P. Filtering and smoothing in an H∞ setting. IEEE Trans. Autom. Control 1991, 36, 152–166.

- Fuentes, K.; Balas, M.J.; Hubbard, J. A Control Framework for Direct Adaptive Estimation With Known Inputs for LTI Dynamical Systems. In Proceedings of the AIAA SCITECH 2025 Forum, Orlando, FL, USA, 6–10 January 2025; p. 2796.

- Griffith, T.; Gehlot, V.P.; Balas, M.J. Robust adaptive unknown input estimation with uncertain system realization. In Proceedings of the AIAA SCITECH 2022 Forum, San Diego, CA, USA, 3–7 January 2022; p. 611.

- Rigatos, G.G. A Derivative-Free Kalman Filtering Approach to State Estimation-Based Control of Nonlinear Systems. IEEE Trans. Ind. Electron. 2012, 59, 3987–3997.

- Jo, N.H.; Seo, J.H. Input output linearization approach to state observer design for nonlinear system. IEEE Trans. Autom. Control 2000, 45, 2388–2393.

- Leith, D.J.; Leithead, W.E. Survey of gain-scheduling analysis and design. Int. J. Control 2000, 73, 1001–1025.

- Balas, M.; Fuentes, R. A non-orthogonal projection approach to characterization of almost positive real systems with an application to adaptive control. In Proceedings of the 2004 American Control Conference, Boston, MA, USA, 30 June–2 July 2004; Volume 2, pp. 1911–1916.

- Sun, S.L.; Deng, Z.L. Multi-sensor optimal information fusion Kalman filter. Automatica 2004, 40, 1017–1023.

- Wang, S.; Yi, S.; Zhao, B.; Li, Y.; Li, S.; Tao, G.; Mao, X.; Sun, W. Sowing Depth Monitoring System for High-Speed Precision Planters Based on Multi-Sensor Data Fusion. Sensors 2024, 24, 6331.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).