A Deep Learning-Based Algorithm for Ceramic Product Defect Detection

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset Construction

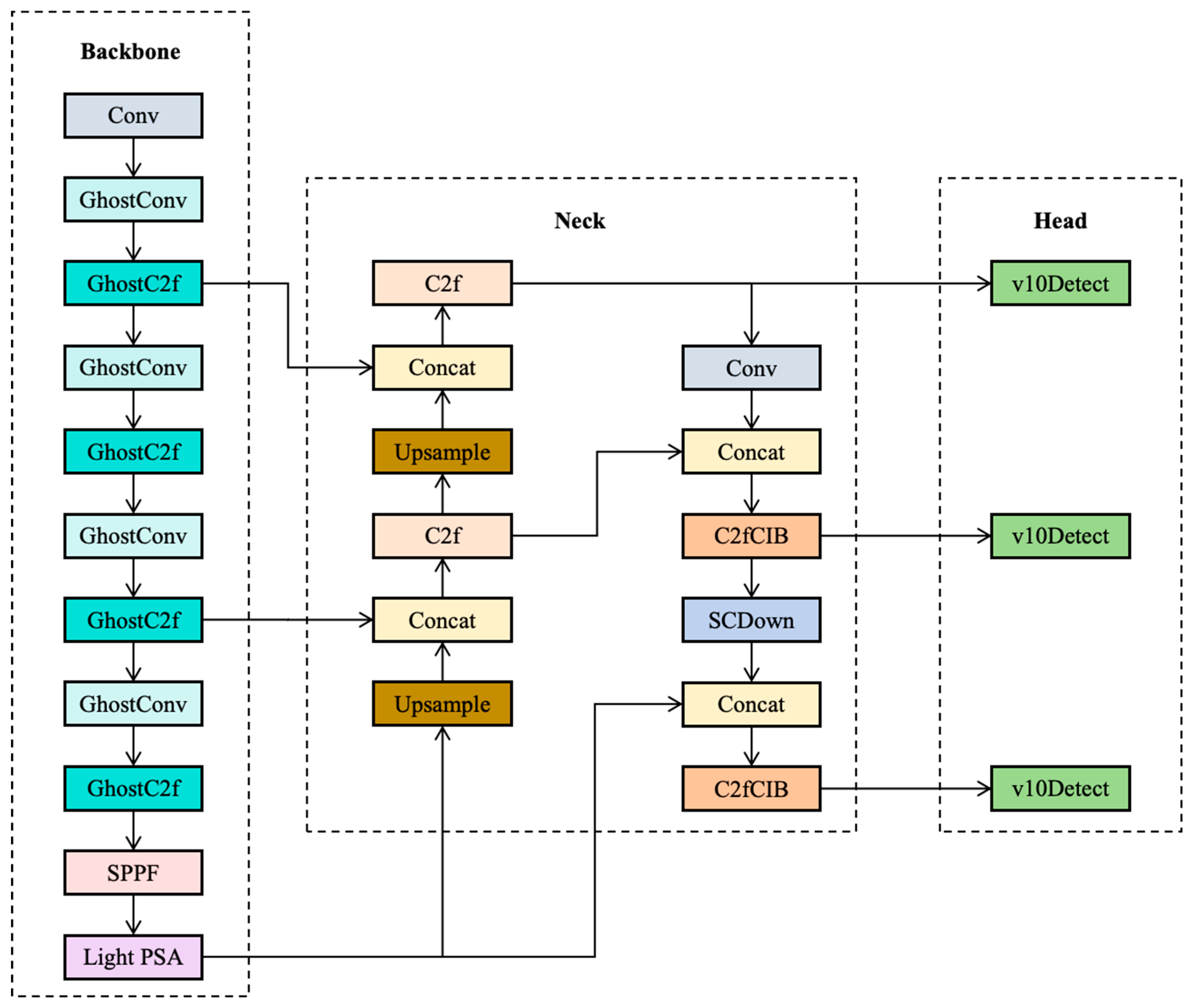

2.2. Lightweight YOLOv10s Model

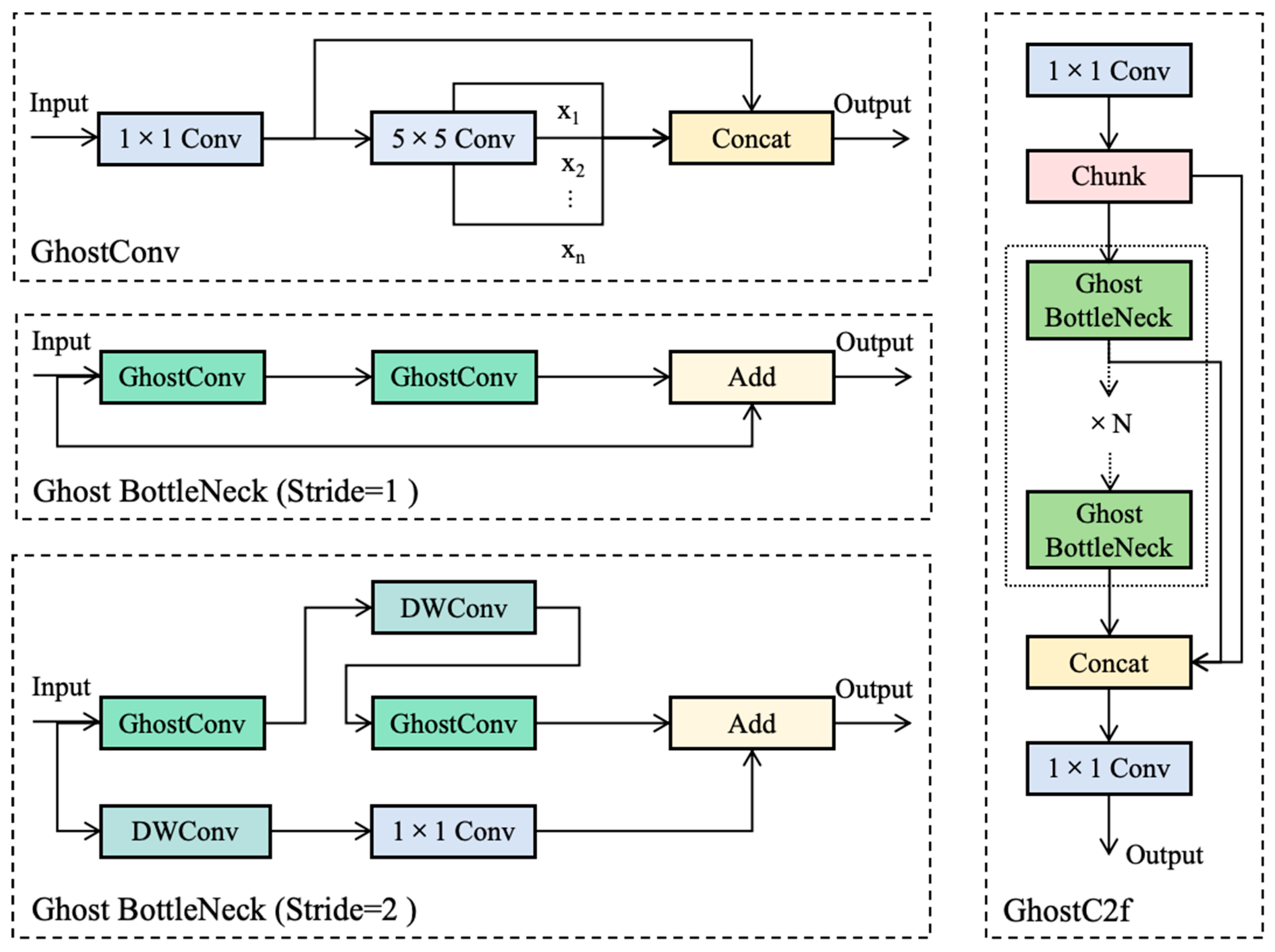

2.2.1. Lightweight Backbone Network

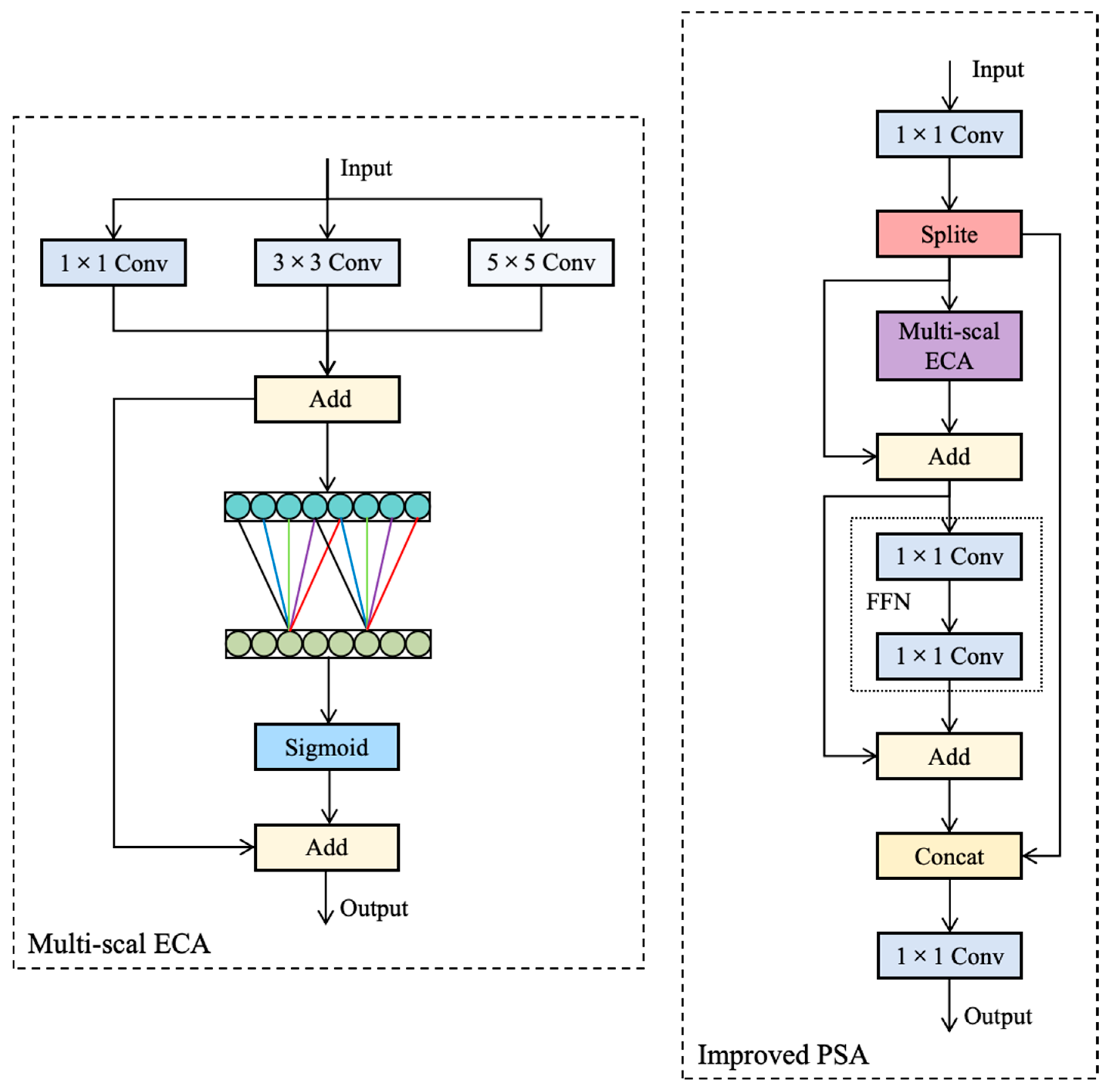

2.2.2. Improved PSA Module

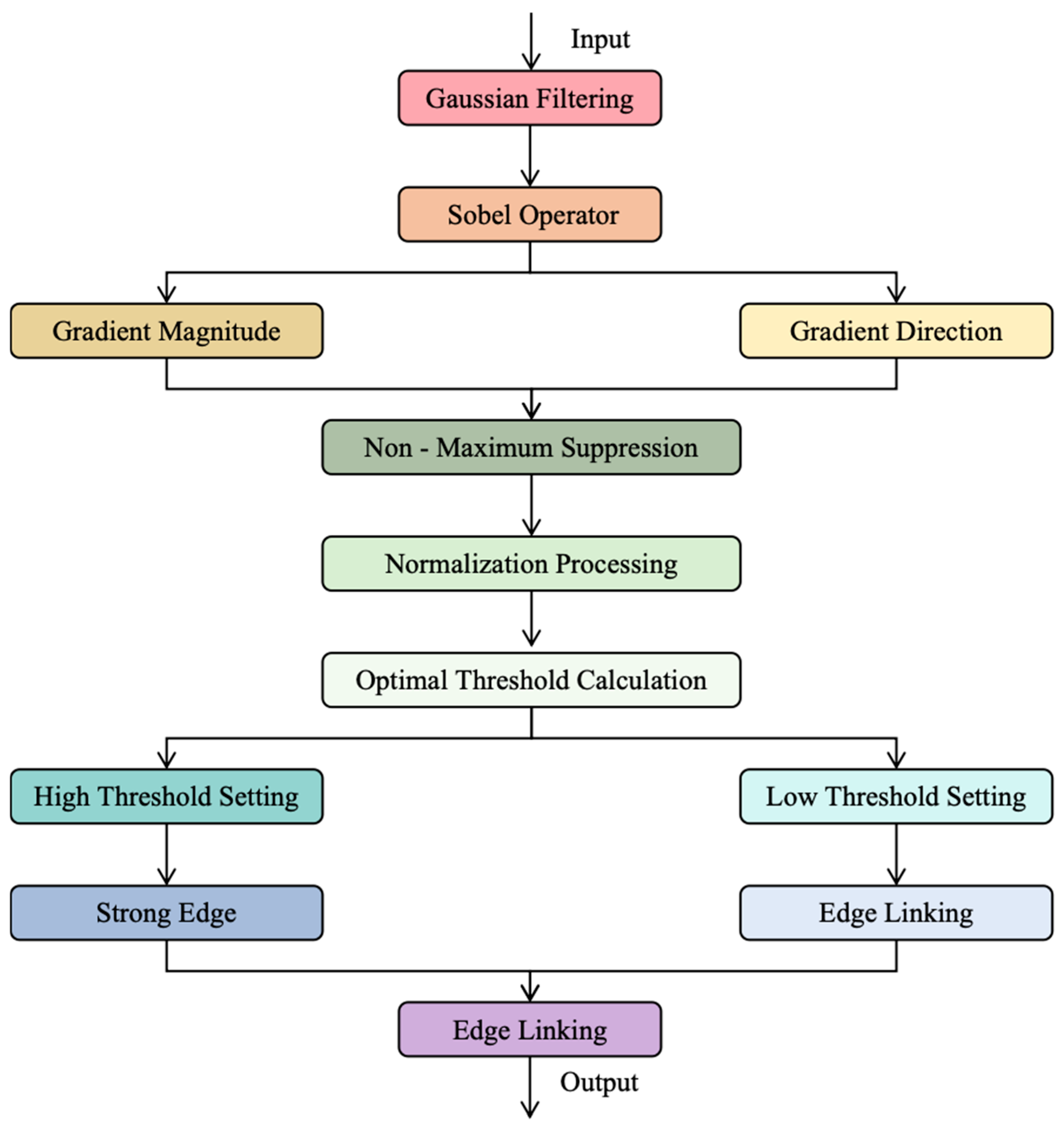

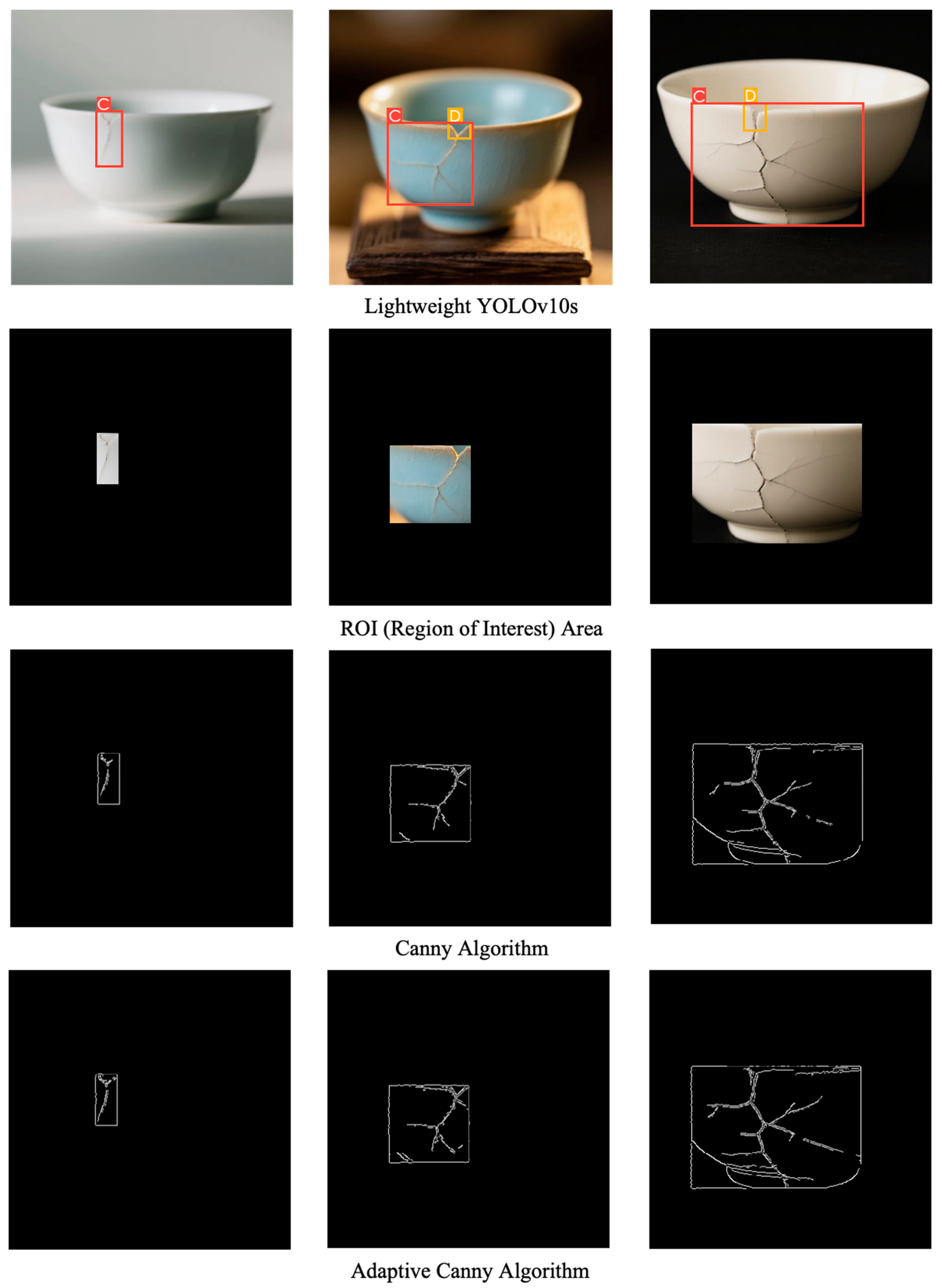

2.3. Adaptive Canny Edge Detection Algorithm

3. Experiments and Results

3.1. Ceramic Defect Detection Model Based on Lightweight YOLOv10s

3.1.1. Evaluation Metrics

3.1.2. Ablation Experiments

3.1.3. Comparative Experiments on Different Ceramic Product Defect Detection Models

3.2. Ceramic Product Crack Detection Algorithm Based on Adaptive Canny

3.2.1. Evaluation Metrics

3.2.2. Ablation Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Inspection Technology | Advantages | Disadvantages |
|---|---|---|
| Manual Visual Inspection | Intuitive, capable of handling complex defects | Low efficiency, poor data traceability |
| Traditional Machine Vision | Simple, capable of handling repetitive tasks | Limited functionality, sensitive to the environment |
| Object Detection Algorithm | Strong real-time performance, adaptable to complex environments | High model complexity, high resource requirements |
| Semantic Segmentation Algorithm | Pixel-level, provides fine-grained boundary information | Large computational load, relatively poor real-time performance |

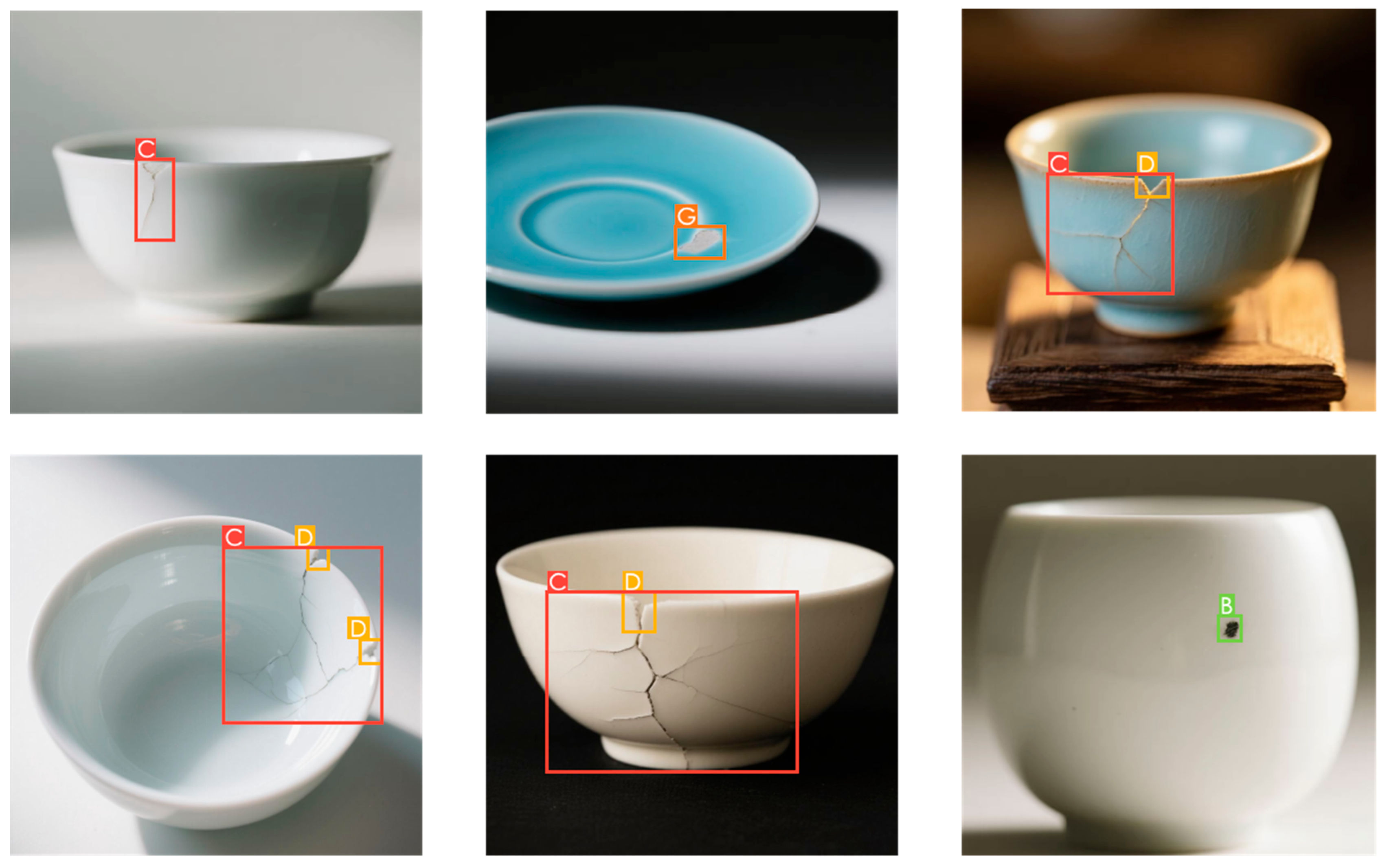

| Category | Annotation Label | Training Set | Validation Set | Test Set |
|---|---|---|---|---|
| Crack | C | 552 | 69 | 69 |
| Glaze Deficiency | G | 456 | 57 | 57 |
| Damage | D | 824 | 103 | 103 |
| Black Spot | B | 592 | 74 | 74 |
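
The counts in the dataset table imply a fixed split ratio per class. The following minimal sketch recomputes that ratio and the subset totals directly from the table. The dictionary layout and the helper name `split_fractions` are illustrative assumptions, not part of the original work, and the table does not say whether the counts are images or annotated instances.

```python
# Per-class counts copied from the dataset table: (train, validation, test).
splits = {
    "Crack": (552, 69, 69),
    "Glaze Deficiency": (456, 57, 57),
    "Damage": (824, 103, 103),
    "Black Spot": (592, 74, 74),
}


def split_fractions(counts):
    """Return each subset's share of the class total (hypothetical helper)."""
    total = sum(counts)
    return tuple(c / total for c in counts)


# Share of train/validation/test within each class.
fractions = {name: split_fractions(c) for name, c in splits.items()}

# Column sums across all four classes.
totals = tuple(sum(c[i] for c in splits.values()) for i in range(3))

for name, frac in fractions.items():
    print(name, [round(f, 3) for f in frac])
print("totals (train, validation, test):", totals)
```

Every class comes out at an 8:1:1 split, with 2424 training, 303 validation, and 303 test samples overall.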

| Model | mAP@50 (%) | F1-Score (%) | Params (M) | FLOPs (G) | Size (MB) |
|---|---|---|---|---|---|
| YOLOv10s | 92.5 | 90.1 | 8.07 | 24 | 16.8 |
| +GhostConv | 90.6 | 87.5 | 6.12 | 15.1 | 12.5 |
| +GhostConv + ECA | 90.5 | 87.7 | 5.66 | 14.6 | 12.1 |
| +GhostConv + Improved ECA (Lightweight YOLOv10s) | 92.8 | 90.3 | 5.97 | 14.9 | 12.3 |
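
To make the ablation figures easier to compare, the relative savings of the final lightweight model over the YOLOv10s baseline can be worked out from the table. This is only an arithmetic sketch: the variable names and the helper `relative_reduction` are assumptions introduced here, and the baseline compute cost is taken as 24 G, the value listed for YOLOv10s in the model comparison table.

```python
# Values copied from the ablation table (first and last rows).
baseline = {"params_m": 8.07, "gflops": 24.0, "size_mb": 16.8}
lightweight = {"params_m": 5.97, "gflops": 14.9, "size_mb": 12.3}


def relative_reduction(before, after):
    """Percentage by which `after` is smaller than `before` (hypothetical helper)."""
    return 100.0 * (before - after) / before


reductions = {
    key: relative_reduction(baseline[key], lightweight[key]) for key in baseline
}

for key, value in reductions.items():
    print(f"{key}: {value:.1f}% lower than baseline")
```

Under these inputs the lightweight model uses about 26.0% fewer parameters, 37.9% fewer GFLOPs, and a 26.8% smaller weight file, while mAP@50 and F1-score in the table change by +0.3 and +0.2 percentage points respectively.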

| Model | mAP@50 (%) | F1-Score (%) | Params (M) | FLOPs (G) | Size (MB) | FPS (frames/s) |
|---|---|---|---|---|---|---|
| YOLOv5s | 89.1 | 86.9 | 7.02 | 15.8 | 13.9 | 52 |
| YOLOv7 | 88.3 | 86.1 | 16.32 | 35.5 | 29.2 | 33 |
| YOLOv8s | 91.1 | 88.9 | 12.46 | 28.7 | 22.5 | 38 |
| YOLOv9s | 90.2 | 88.1 | 7.23 | 26.8 | 14.2 | 41 |
| YOLOv10s | 92.5 | 90.1 | 8.07 | 24 | 16.8 | 45 |
| Lightweight YOLOv10s (Ours) | 92.8 | 90.3 | 5.97 | 14.9 | 12.3 | 63 |

| Model | ELE (px) | ECR (%) | WER (%) | FPS (frames/s) |
|---|---|---|---|---|
| Lightweight YOLOv10s + Canny | 3.2 | 76 | 65 | 44 |
| Lightweight YOLOv10s + Adaptive Canny | 2.4 | 91 | 82 | 40 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Diao, J.; Wei, H.; Zhou, Y.; Diao, Z. A Deep Learning-Based Algorithm for Ceramic Product Defect Detection. Appl. Sci. 2025, 15, 6641. https://doi.org/10.3390/app15126641
