1. Introduction

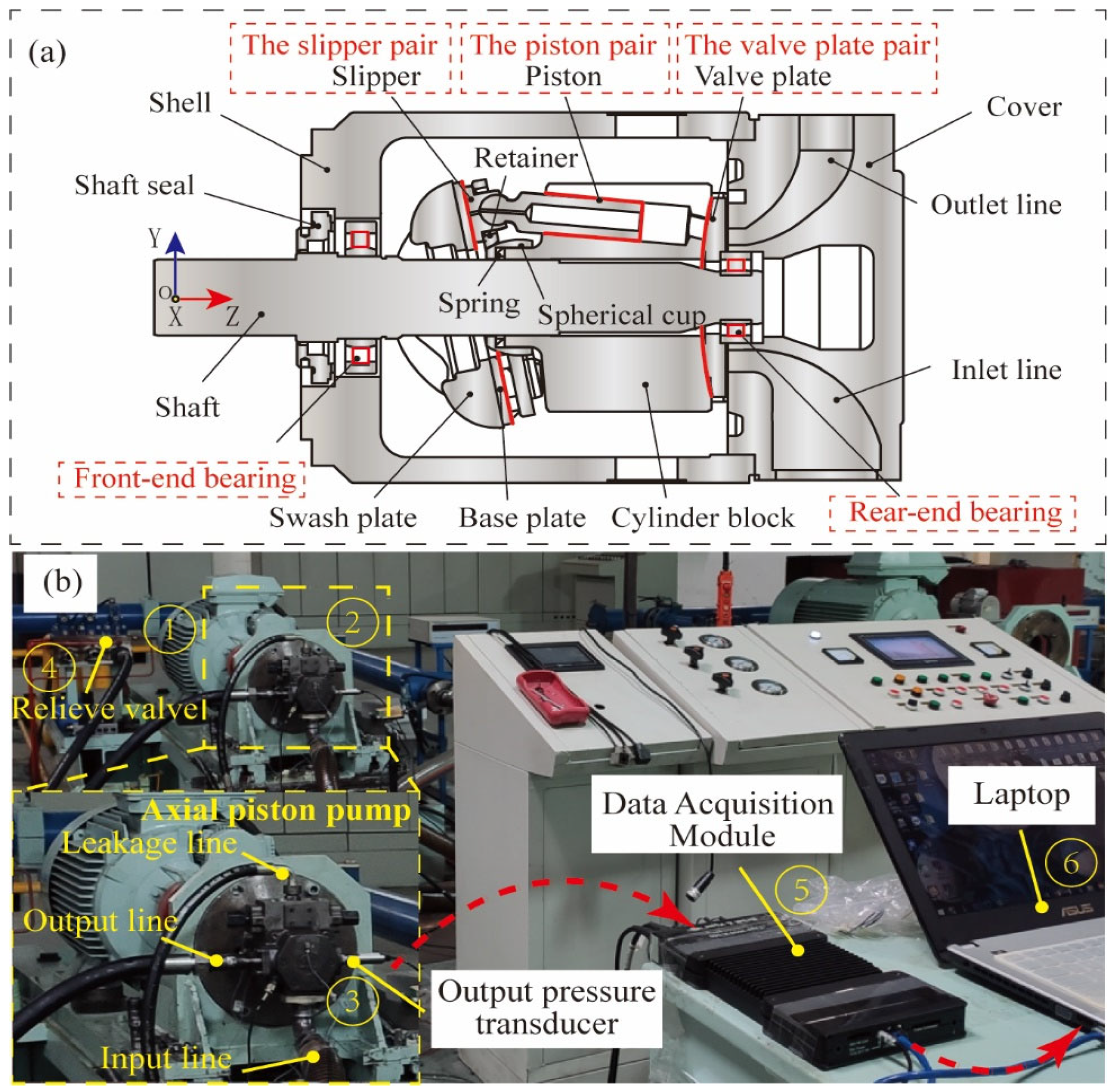

The axial piston pump is an essential component used to transmit power in hydraulic systems in various fields such as aerospace, rail transit, engineering machinery, and ship equipment. The failure of the axial piston pump can lead to severe safety hazards in equipment operation. The working conditions of axial piston pumps are typically harsh, with high levels of noise. Fault diagnosis in axial piston pumps can be challenging due to their concealed, coupled, random, and complex fault characteristics, making feature extraction and identification difficult. As a result, identifying an efficient and viable fault diagnosis method for axial piston pumps holds paramount importance in improving their performance.

Currently, fault diagnosis methods are typically either model-driven or data-driven [1,2]. Model-driven methods establish a physical model that reflects the device’s operational status based on its dynamic characteristics. However, the modeling process is complex, which results in poor model universality. Conversely, data-driven methods do not require extensive knowledge of fault mechanisms [3,4]. With the growth of artificial intelligence, data-driven approaches rooted in deep learning (DL) have made substantial progress in various domains, including, but not limited to, image processing, speech recognition, and fault diagnosis [5,6,7], and have attracted widespread attention. Tang et al. [8] introduced a normalized convolutional neural network (NCNN) framework, leveraging batch normalization for both feature extraction and fault recognition in hydraulic pumps. Their experiments revealed strong performance even in noisy environments. More recently, a small-sample ensemble intelligent fault diagnosis method based on generative adversarial networks has found notable application in practical industrial scenarios. The experimental results show that this method effectively improves the classification accuracy of bearing faults in situations where samples are limited [9]. However, axial piston pumps are complex electromechanical products with high integration, and achieving multisource-information and generalized fault diagnosis for them remains a challenge [10]. As a result, data-driven approaches could fail to detect critical diagnostic information. DL only performs nonlinear mapping and transformation in Euclidean space, and it therefore easily overlooks the interdependence between data [11].

Graph-structured data contain a wealth of relational information that is distinct from image or voice signals and exist in non-Euclidean spaces. Graph neural networks (GNNs) focus more on data connectivity [12,13]. Traditional neural networks, such as CNNs, lack translation invariance within non-Euclidean structures (convolution kernels of the same size cannot be used for convolution) and can only analyze data in Euclidean space, disregarding the significant structural relationships of signals. This results in the loss of potentially valuable information that can assist in distinguishing between failure modes [14]. Graph convolutional networks (GCNs) revolutionized the processing of such data by enabling convolution operations on irregular graph structures, which opens up new solutions for the fault diagnosis of cross-coupled electromechanical products such as axial piston pumps. Yu et al. [15] introduced a fast deep graph convolutional network (FDGCN) for diagnosing faults in wind turbine gearboxes, and their results demonstrate that the method achieves excellent fault classification ability and overcomes the noise problem of FDGCN by fusing traditional networks with GCNs. Good predictive performance was also achieved by deploying a deep graph convolutional network in the acoustic fault diagnosis of rolling bearings [16]. In response to the challenge posed by limited labeled data in industrial settings, an intelligent fault diagnosis method for electromechanical systems was proposed that introduces a semi-supervised graph convolutional deep belief network algorithm. The results show high prediction accuracy with little labeled data [17]. The correlation of vibration signals at different time points is crucial in identifying and aggregating features to improve the prediction model’s robustness. Wei et al. [18] introduced an adaptive graph convolutional network (SAGCN) that utilizes a self-attention mechanism for feature correlation without a recursive function. The multi-scale deep graph convolutional network (MSDGCN) algorithm addresses the disordered fluctuations in the measured signal of the rotor-bearing system, demonstrating high accuracy and generalization [19]. A graph isomorphism network (GIN) was also proposed that demonstrates the graph structure’s representational capability while ensuring the integration of edge weights for different fault samples, resulting in an injective graph. That model outperforms other machine learning models on all three real-world datasets [20].

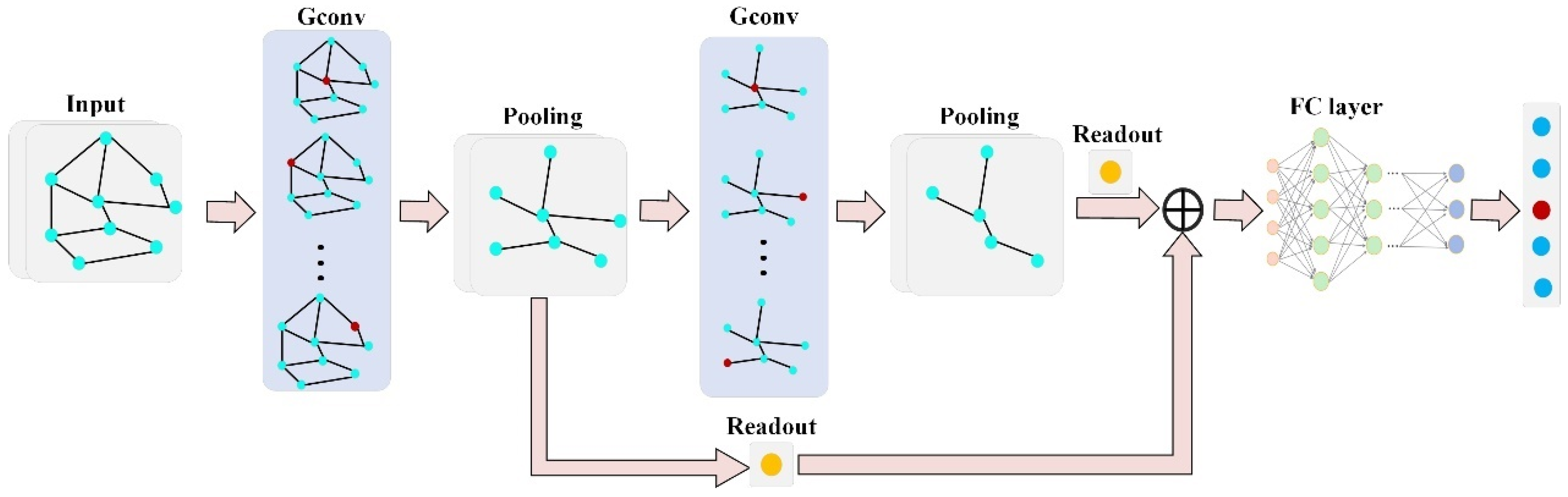

Current traditional GNN methods for fault diagnosis of complex electromechanical equipment, such as axial piston pumps, have poor classification accuracy and lack temporal interpretability due to the dynamic characteristics of fault features in time series signals. GIN [21] is a variant of the traditional GNN that was specifically designed for graph classification tasks. Inspired by previous research, this article proposes a GIN with an attention mechanism, in which a new attention-based READOUT module and Transformer encoder are designed to learn dynamic graph representations of different faults of axial piston pumps with spatio-temporal attention. The primary contributions of this paper include the following:

- (1)

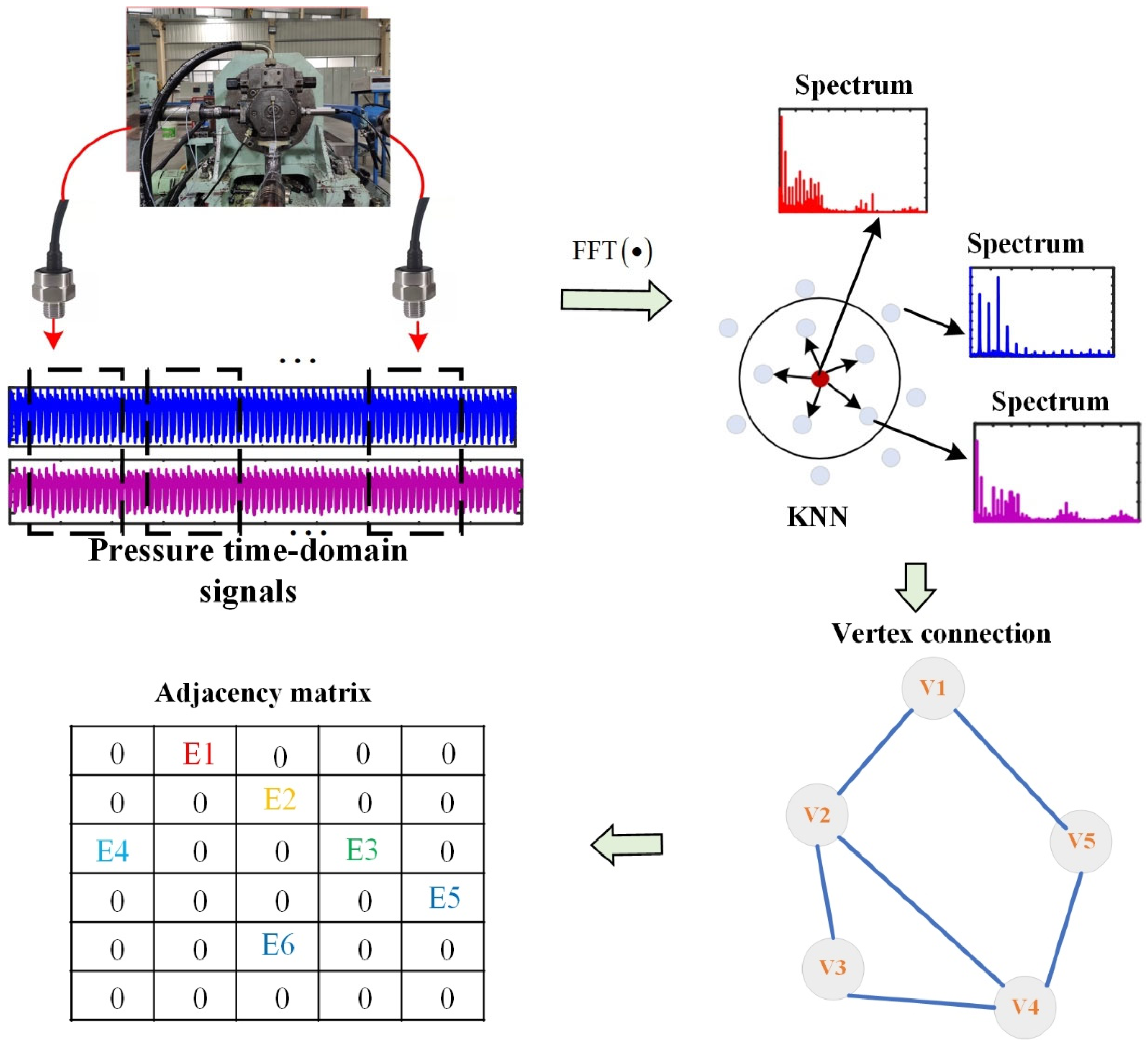

The relationship among input signals is gauged by transforming the raw signals into a weighted graph. Most current GCN implementations are built on unweighted graphs, despite the fact that, in reality, the importance of node neighbors often varies. By employing the weighted graph as the input, the proposed method (GIN-ST) exhibits a capacity to learn more comprehensive feature representations.

- (2)

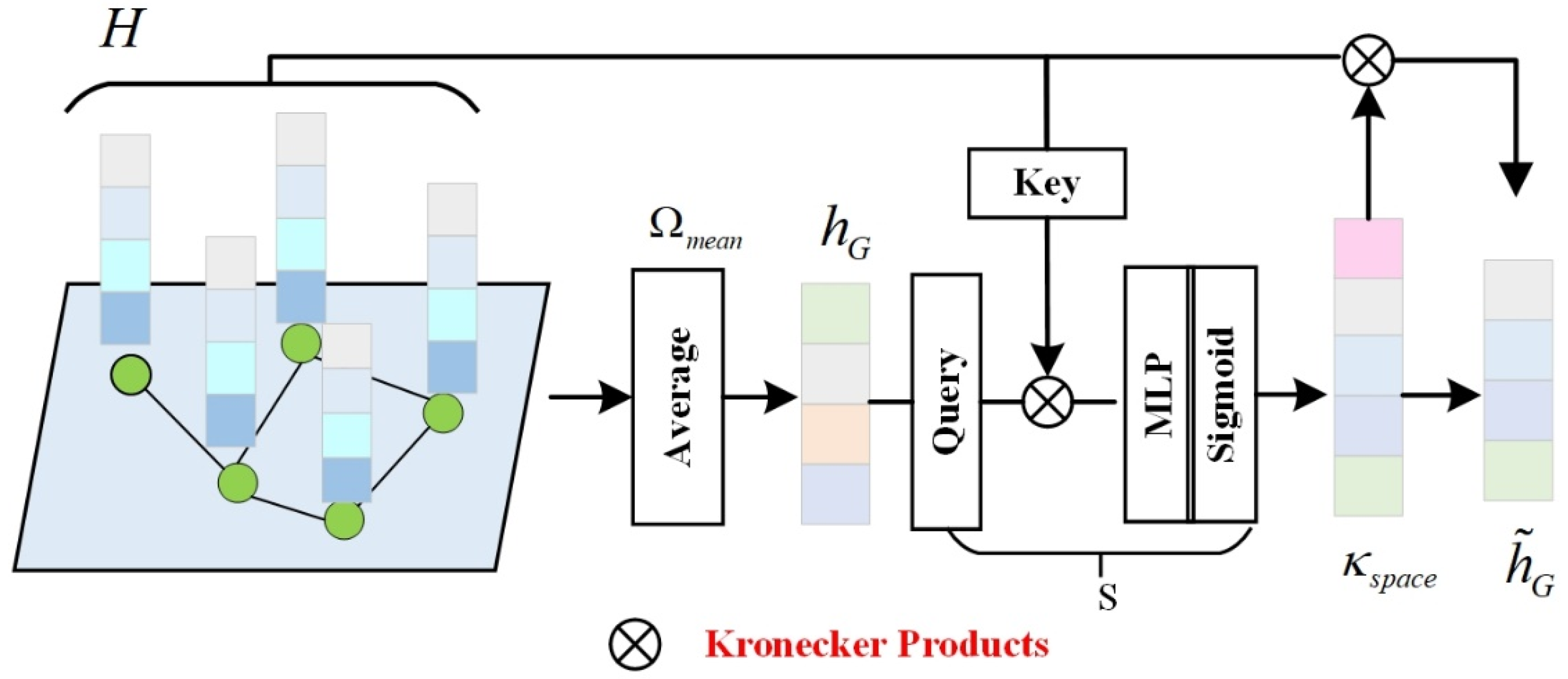

A novel attention-based READOUT module and Transformer encoder was devised in the present study. In contrast to conventional GIN networks, the designed READOUT module and Transformer encoder can decode deeper global features related to various faults in axial piston pumps. This enhancement markedly elevates the performance of the classification task, while concurrently affording spatiotemporal interpretability.

The remainder of the paper is organized as follows. Section 2 presents the theoretical derivation of graph convolutional networks (GCNs) for diagnosing faults in axial piston pumps. Section 3 describes the proposed method, and Section 4 outlines the acquisition of experimental data for the axial piston pump. Section 5 discusses the experimental results, and Section 6 summarizes the work. A brief flow of the proposed GIN-ST method for failure diagnosis of the axial piston pump is shown in Figure 1.

5. Results and Discussion

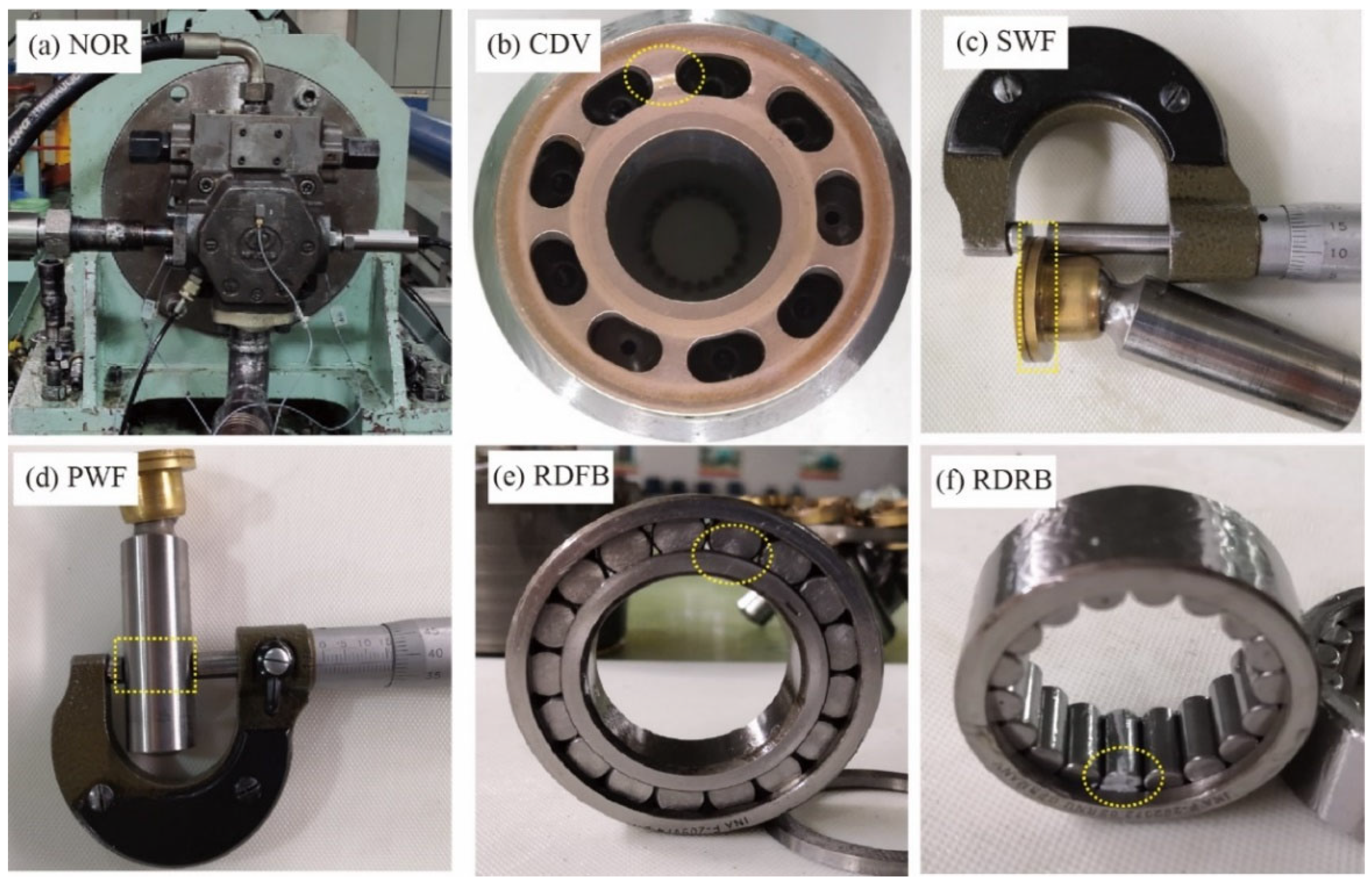

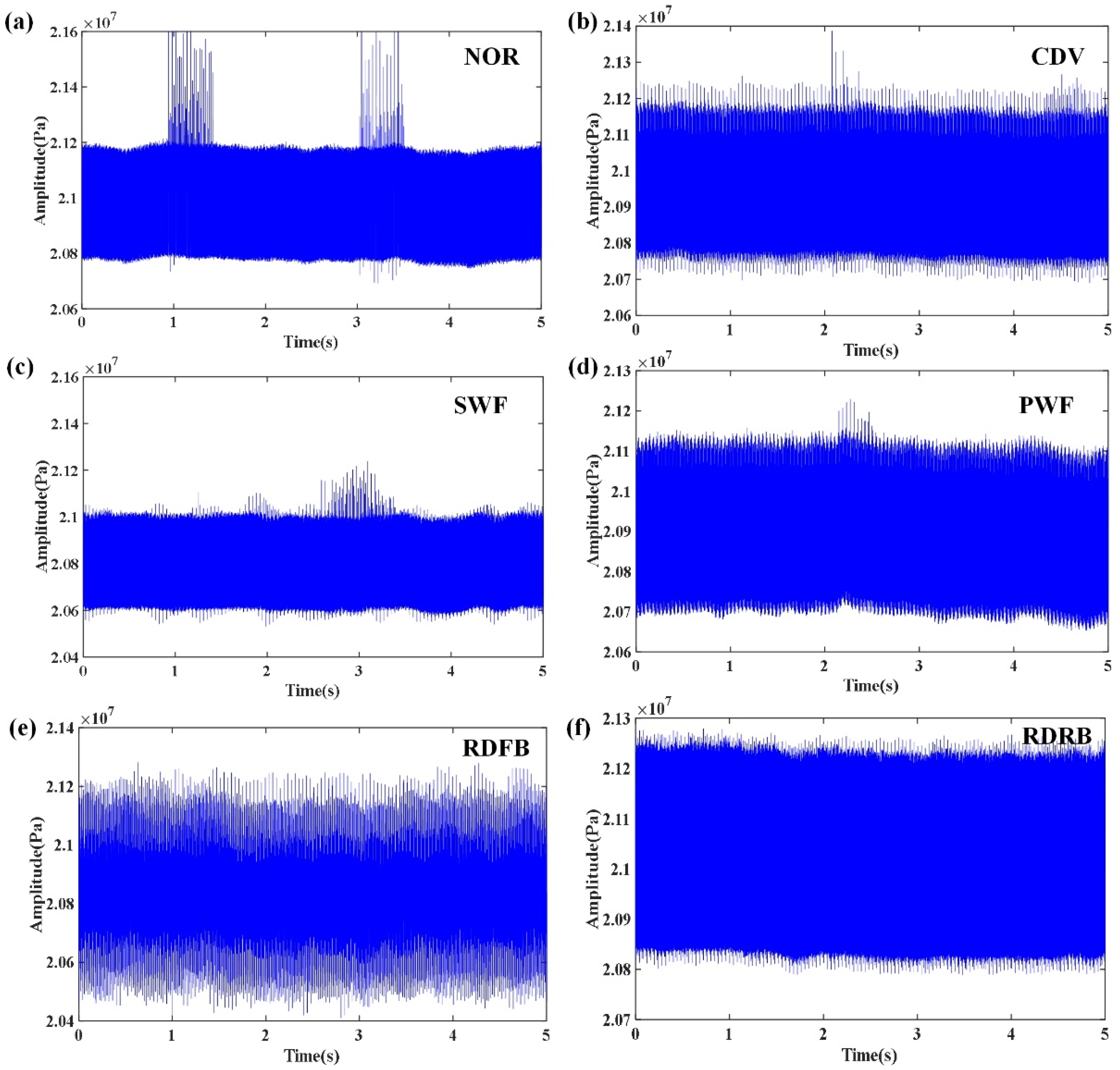

The intelligent diagnosis system for the axial piston pump can be broadly divided into three key steps. In the first stage, data acquisition equipment and monitoring software are employed to capture the pressure signals of the axial piston pump, and subsets of training and testing samples are randomly selected for analysis. In the second stage, the training subset is used to train the GIN-ST model. Finally, in the third stage, the test subset is fed into the trained GIN-ST to obtain recognition outcomes for the various fault modes.

5.1. Input Data

To ease future deployment of diagnostic models for axial piston pumps, the present study avoids using traditional diagnostic models to extract deep-level features and avoids multi-feature fusion of the input data. Instead, pressure signal data are collected from the axial piston pump outlet and then normalized by means of minimum–maximum normalization [24]. The original signal is then segmented using a sliding window of length 1024 without overlap. After data segmentation, 2000 subsamples were generated for each dataset, of which 80% formed the training set and the remaining 20% the test set. Subsequently, using the weighted graph construction method proposed in Section 3.3, the graph data were constructed from the subsamples of both the training set and the test set.
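The preprocessing steps described above can be illustrated with a minimal sketch. It assumes NumPy, uses a synthetic random signal in place of the recorded outlet pressure, and introduces the helper names `min_max_normalize`, `segment`, and `split_train_test` purely for illustration; the random seed and the shuffling strategy are likewise assumptions rather than details taken from the experiments.

```python
import numpy as np

def min_max_normalize(signal):
    """Scale a 1-D signal to the range [0, 1]."""
    signal = np.asarray(signal, dtype=float)
    lo, hi = signal.min(), signal.max()
    if hi == lo:  # constant signal: avoid division by zero
        return np.zeros_like(signal)
    return (signal - lo) / (hi - lo)

def segment(signal, window=1024):
    """Cut a signal into non-overlapping windows, dropping any remainder."""
    signal = np.asarray(signal)
    n = len(signal) // window
    return signal[: n * window].reshape(n, window)

def split_train_test(subsamples, train_ratio=0.8, seed=0):
    """Randomly split subsamples into training and test subsets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(subsamples))
    n_train = int(round(train_ratio * len(subsamples)))
    return subsamples[order[:n_train]], subsamples[order[n_train:]]

# Synthetic stand-in for a recorded pressure signal (illustrative only).
rng = np.random.default_rng(42)
raw = rng.normal(size=2000 * 1024)

subsamples = segment(min_max_normalize(raw))
train, test = split_train_test(subsamples)
print(subsamples.shape, train.shape, test.shape)
```

With 2000 windows of 1024 points, the 80/20 split yields 1600 training and 400 test subsamples, matching the proportions stated in the text.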

To further evaluate the intra-class variability of the dataset, we conducted a statistical analysis on key signal features extracted from samples within the same fault category. Specifically, we computed the standard deviation of root mean square (RMS), kurtosis, and spectral centroid across all samples in each fault class. The results reveal notable intra-class variation, particularly in the RMS and frequency domain features, which may be attributed to operating condition fluctuations and minor inconsistencies in fault progression.
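As a rough illustration of this statistical analysis, the sketch below computes the three features per sample and their standard deviation within one class. It assumes NumPy, a hypothetical sampling rate `fs`, the non-excess (Pearson) definition of kurtosis, and an amplitude-weighted spectral centroid; the exact feature definitions used in the study are not stated, so these are assumptions.

```python
import numpy as np

def rms(x):
    """Root mean square of a signal."""
    return np.sqrt(np.mean(np.square(x)))

def kurtosis(x):
    """Non-excess kurtosis: fourth central moment over squared variance."""
    centered = x - np.mean(x)
    variance = np.mean(centered ** 2)
    return np.mean(centered ** 4) / variance ** 2

def spectral_centroid(x, fs):
    """Amplitude-weighted mean frequency of the one-sided spectrum."""
    magnitude = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return np.sum(freqs * magnitude) / np.sum(magnitude)

def intra_class_std(samples, fs):
    """Standard deviation of each feature across samples of one fault class."""
    features = np.array(
        [[rms(s), kurtosis(s), spectral_centroid(s, fs)] for s in samples]
    )
    names = ("rms", "kurtosis", "spectral_centroid")
    return dict(zip(names, features.std(axis=0)))

# Toy class: three sine waves that differ only in amplitude (illustrative).
fs = 1024.0  # hypothetical sampling rate in Hz
t = np.arange(1024) / fs
samples = np.stack([a * np.sin(2 * np.pi * 50 * t) for a in (1.0, 1.1, 0.9)])
stats = intra_class_std(samples, fs)
print(stats)
```

In this toy class only the RMS varies, because scaling a sine changes its energy but neither its shape (kurtosis) nor its dominant frequency (spectral centroid).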

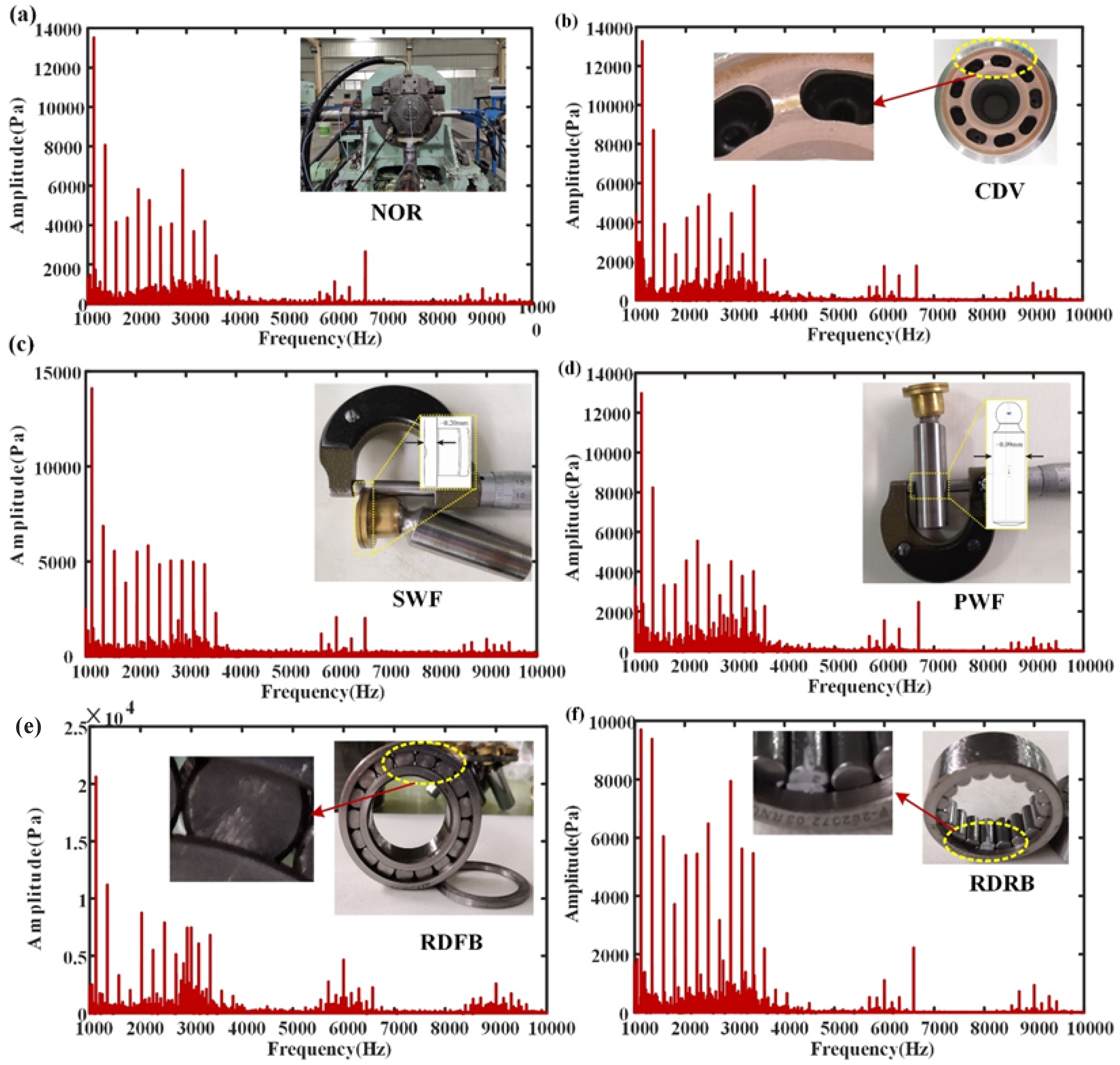

During weighted graph construction, one graph is generated from every 10 subsamples. Therefore, the resultant training set includes 160 subgraphs, and the test set comprises 40 subgraphs. Furthermore, the study considers two input types: the raw time domain (TD) input and the frequency domain (FD) input, the latter obtained by preprocessing the subsamples with the fast Fourier transform (FFT).
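The grouping and the FD preprocessing can be sketched as follows, assuming NumPy. The weighted graph construction of Section 3.3 is not reproduced here; the cosine-similarity edge weights in `cosine_weighted_adjacency` are a stand-in assumption used only to show what a weighted adjacency matrix looks like, and all function names are hypothetical.

```python
import numpy as np

def to_frequency_domain(subsamples):
    """One-sided FFT amplitude spectrum of each subsample (the FD input)."""
    return np.abs(np.fft.rfft(subsamples, axis=1))

def group_into_graphs(node_features, nodes_per_graph=10):
    """Stack consecutive subsamples so that each group of 10 forms one graph."""
    n_graphs = len(node_features) // nodes_per_graph
    usable = node_features[: n_graphs * nodes_per_graph]
    return usable.reshape(n_graphs, nodes_per_graph, -1)

def cosine_weighted_adjacency(nodes):
    """ASSUMPTION: edge weight = absolute cosine similarity of node features."""
    unit = nodes / np.linalg.norm(nodes, axis=1, keepdims=True)
    weights = np.abs(unit @ unit.T)
    np.fill_diagonal(weights, 0.0)  # no self-loops
    return weights

# Synthetic stand-in for the 1600 training subsamples (illustrative only).
rng = np.random.default_rng(0)
train_subsamples = rng.normal(size=(1600, 1024))

fd_nodes = to_frequency_domain(train_subsamples)  # 513 frequency bins per node
graphs = group_into_graphs(fd_nodes)              # 160 graphs of 10 nodes each
adjacency = cosine_weighted_adjacency(graphs[0])
print(graphs.shape, adjacency.shape)
```

Grouping 1600 training subsamples in tens gives the 160 graphs mentioned in the text; the same call on 400 test subsamples gives 40.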

5.2. Parameter Setting and Diagnostic Details

The experiment was conducted on an NVIDIA GeForce RTX 3080 Ti GPU. The software versions used for data processing are Python 3.8.6 and MATLAB R2024b. The GIN-ST model is trained using a supervised end-to-end approach with a loss that adds an orthogonal regularization term, scaled by a proportion coefficient, to the cross-entropy loss. The number of layers, the embedding dimension, and the regularization coefficient are set as hyperparameters. Hyperparameters are adjusted by trial and error, with a batch size of 64; the specific settings are shown in Table 5.
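The structure of this training objective, a cross-entropy term plus a weighted orthogonal regularization term, can be sketched as below. This is only an illustration under stated assumptions: it uses NumPy, the orthogonal penalty is taken to be the Frobenius norm of `M @ M.T - I` for some matrix `M` (which matrix is regularized, and in what form, is not specified here), and the coefficient `lam` is a placeholder rather than the value used in the experiments.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean cross-entropy from raw logits via a numerically stable log-softmax."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

def orthogonal_penalty(matrix):
    """ASSUMPTION: ||M M^T - I||_F, which is zero when the rows are orthonormal."""
    gram = matrix @ matrix.T
    return np.linalg.norm(gram - np.eye(len(matrix)))

def total_loss(logits, labels, matrix, lam):
    """Cross-entropy plus the orthogonal penalty scaled by the coefficient lam."""
    return cross_entropy(logits, labels) + lam * orthogonal_penalty(matrix)

# Toy batch: 4 samples, 3 classes, and a hypothetical 3 x 5 matrix to regularize.
rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 3))
labels = np.array([0, 2, 1, 1])
matrix = rng.normal(size=(3, 5))
print(total_loss(logits, labels, matrix, lam=1e-3))  # lam is a placeholder value
```

The penalty vanishes for a matrix with orthonormal rows, so in that case the total loss reduces to the cross-entropy alone.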

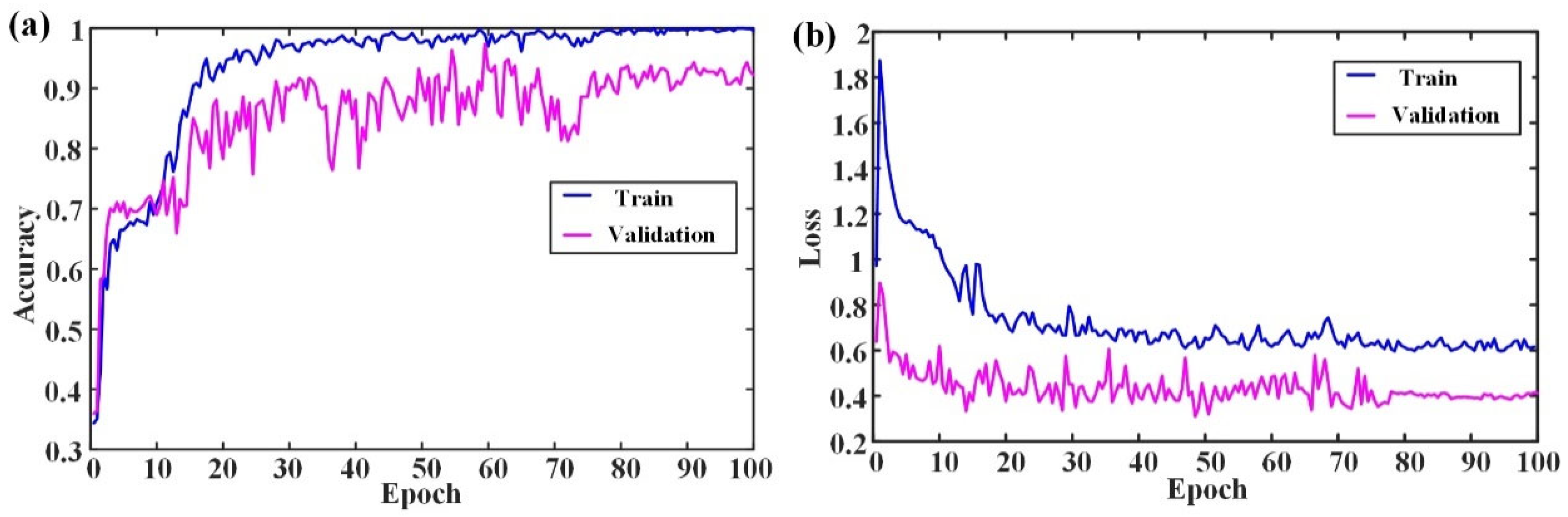

Figure 10 illustrates the training and validation process of the GIN-ST model for fault diagnosis of the axial piston pump. To eliminate potential random effects, five trials were conducted. The results in Figure 10 demonstrate that the GIN-ST model converged by epoch 100, with training and validation losses approaching zero.

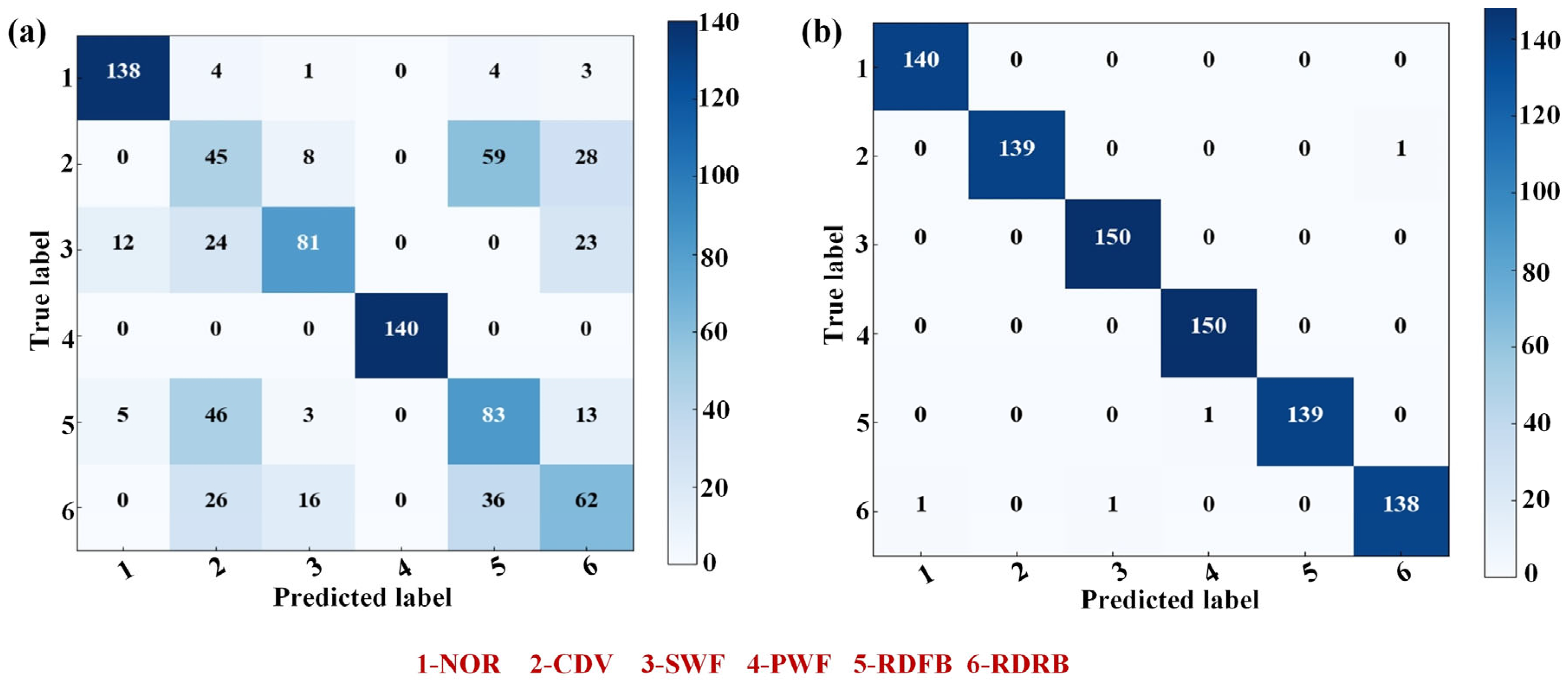

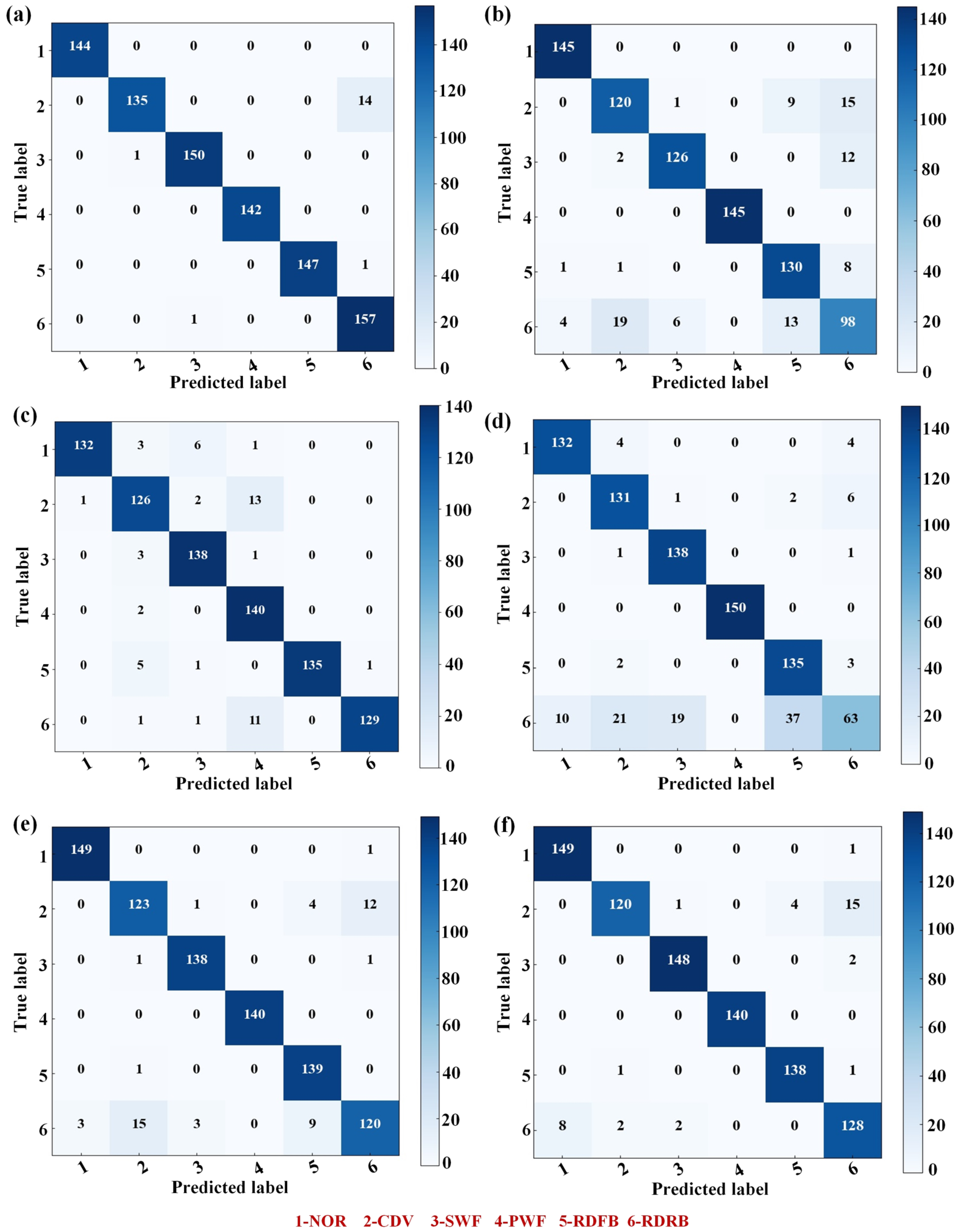

The confusion matrices in

Figure 11 illustrate the performance of the proposed diagnostic method under both time domain and frequency domain input conditions. Within the graph, frequency domain input signifies the preprocessing of subsamples through the utilization of fast Fourier transform (FFT), aiding in the mitigation of noise interference inherent in time domain signals. The application of the proposed method to fault classification with two distinct inputs yields results as depicted in

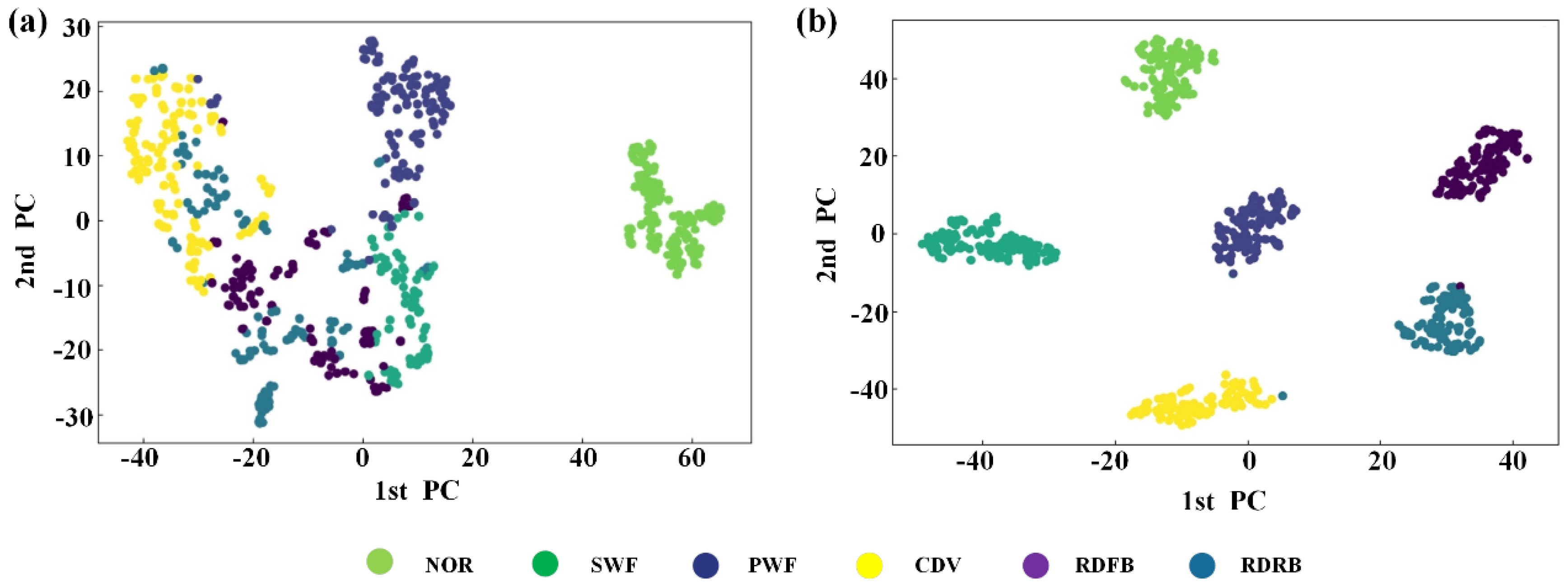

Figure 12a,b, respectively. The classification results show that predictions based on frequency domain input are markedly superior to those based on time domain input. This difference in outcome can be attributed to the substantial levels of noise present within the industrial working environment of axial piston pump systems. The application of FFT processing to the data can effectively reduce the disruptive influence of environmental noise on node features of the graph, thus enabling an easier acquisition of more meaningful node and graph representations.
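For readers less familiar with confusion matrices, the following minimal sketch shows how one is built from true and predicted labels and how the overall accuracy follows from it. It assumes NumPy; the labels are toy values chosen for illustration and are not results from this study.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Count predictions; rows index the true class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def overall_accuracy(cm):
    """Fraction of samples on the diagonal, i.e., correctly classified."""
    return np.trace(cm) / cm.sum()

# Toy labels for three fault classes (illustrative only).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
print(cm)
print(overall_accuracy(cm))
```

Off-diagonal entries show which fault classes are confused with one another, which is the information the TD and FD comparisons rely on.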

To examine the graph-based feature learning of the proposed model, we employed the widely used t-distributed stochastic neighbor embedding (t-SNE) method. The horizontal and vertical axes correspond to the two dimensions of the t-SNE embedding space, denoted as Component 1 and Component 2, respectively. As depicted in Figure 12, the t-SNE results reveal that with time domain (TD) input, apart from the typical characteristics of the piston pump being captured, the features associated with the other fault categories are not correctly separated. Conversely, with frequency domain (FD) input, nearly all fault categories can be accurately classified.

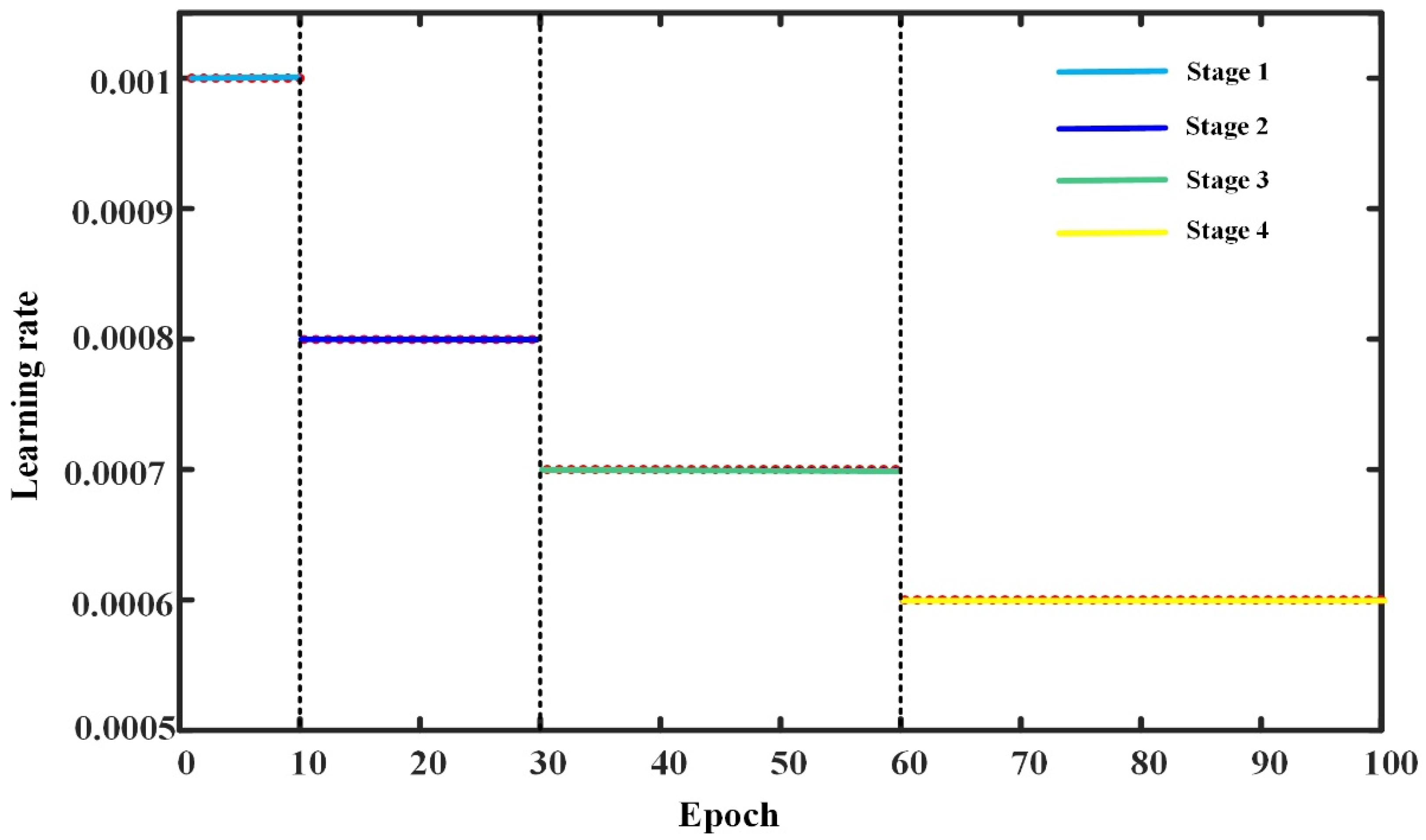

5.3. Comparison with Other Methods

To demonstrate the efficacy and superiority of the proposed diagnostic approach, it is essential to compare it with other intelligent fault diagnosis methods. Accordingly, we benchmarked our GIN-ST algorithm against ARGCN [25], DCA-BiGRU [24], GCN [26], GAT [27], GIN [28], and other similar algorithms. Moreover, we compared the proposed method against a traditional five-layer CNN [23]. In these experiments, the labeled samples before weighted-graph construction were used as the input of the CNN, while the weighted graphs were used as the input of the GCN. All models were trained for 100 epochs with the Adam optimizer and an initial learning rate of 0.001. The learning rate was adjusted through a multi-step strategy divided into four stages (training epochs 0–10, 10–30, 30–60, and 60–100), each using a different learning rate. The specific settings are shown in Figure 13.
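A multi-step schedule of this kind can be sketched in a few lines of plain Python. The stage boundaries (epochs 10, 30, and 60) and the initial rate of 0.001 come from the text; the decay factor `gamma=0.1` is an assumption made for illustration, since the actual per-stage rates are given only in Figure 13.

```python
def multistep_lr(epoch, base_lr=0.001, milestones=(10, 30, 60), gamma=0.1):
    """Piecewise-constant learning rate for a given epoch.

    gamma is an ASSUMED decay factor applied at each milestone; the
    per-stage rates actually used in the experiments may differ.
    """
    stages_passed = sum(epoch >= m for m in milestones)
    return base_lr * gamma ** stages_passed

# Print the learning rate at the first epoch of each of the four stages.
for first_epoch in (0, 10, 30, 60):
    print(first_epoch, multistep_lr(first_epoch))
```

The rate stays constant inside each of the four stages and changes only when a milestone epoch is reached, which is the behavior a multi-step strategy is meant to provide.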

To mitigate the impact of random events on our findings and to underscore the robustness and dependability of the proposed methodology, five experiments were performed. Classification performance was assessed by the overall classification accuracy, reported as maximum accuracy (max-acc), minimum accuracy (min-acc), and average accuracy (avg-acc) across the five trials, together with the training duration. The classification outcomes of the various methods are listed in Table 6.
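The three accuracy summaries are simple to compute once the per-trial accuracies are available. The sketch below uses plain Python; the five accuracy values are made-up placeholders for illustration and are not taken from Table 6.

```python
def summarize_trials(accuracies):
    """Return max-acc, min-acc, and avg-acc over repeated trials."""
    return {
        "max-acc": max(accuracies),
        "min-acc": min(accuracies),
        "avg-acc": sum(accuracies) / len(accuracies),
    }

# Placeholder accuracies for five trials (NOT results from this study).
trial_accuracies = [0.90, 0.92, 0.91, 0.93, 0.94]
summary = summarize_trials(trial_accuracies)
print(summary)
```

Reporting the minimum alongside the average makes it visible when a method reaches a high mean only through a few favorable runs.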

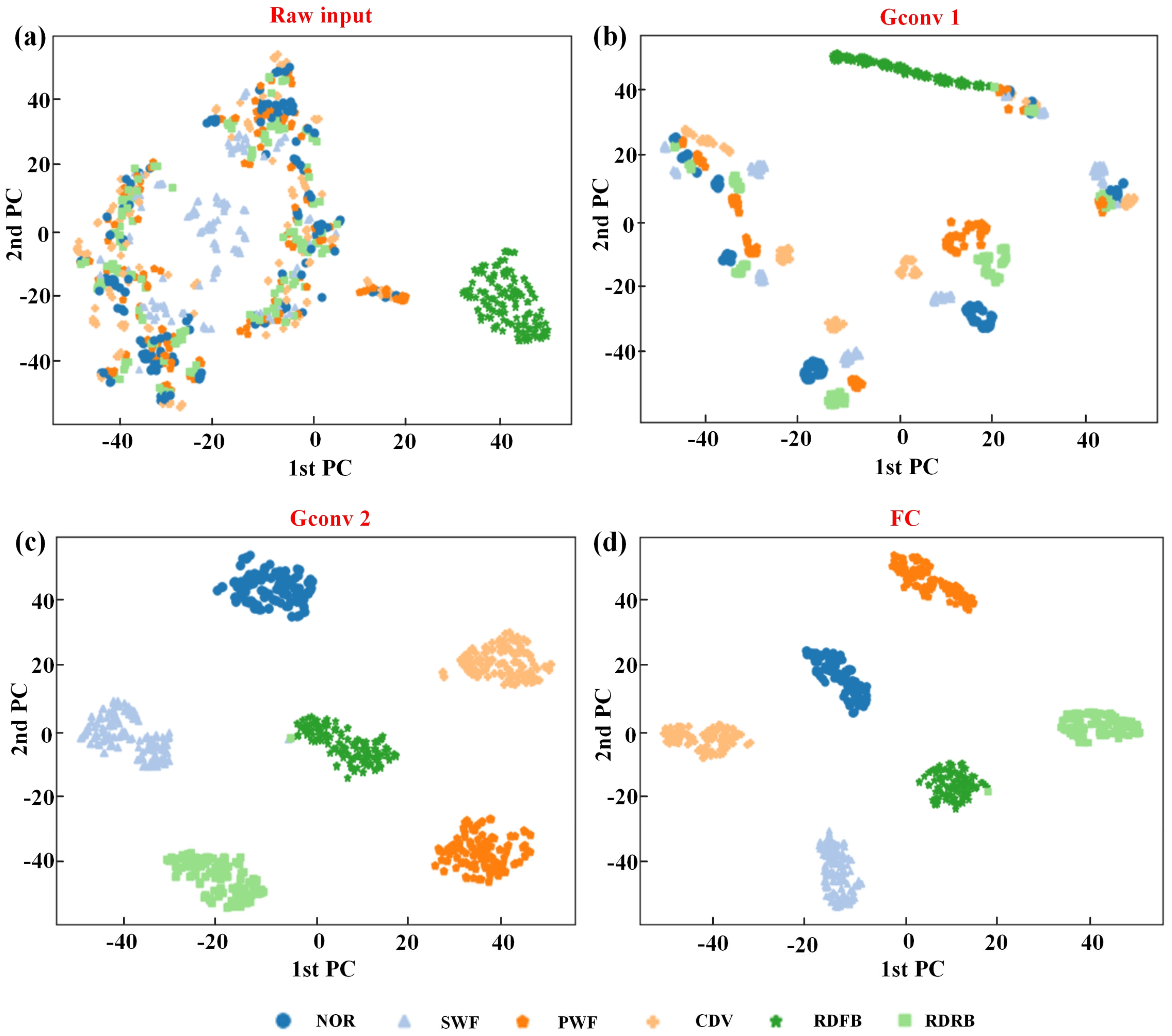

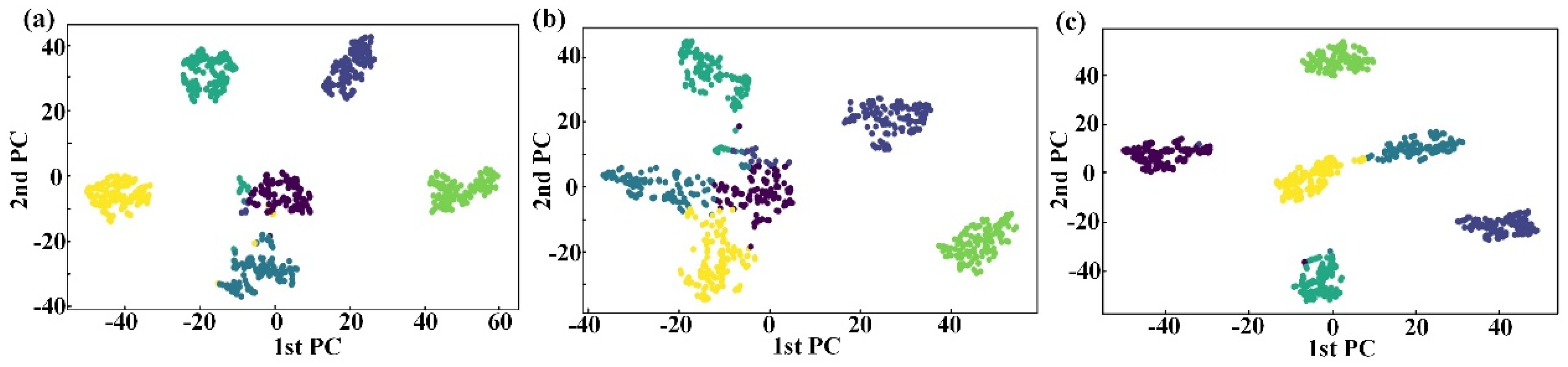

Figure 14 shows how the failure diagnosis model presented in this study learns graph features layer by layer. For the raw input, the features appear as scattered points. However, certain features in the RDFB category tend to cluster; meanwhile, the majority of features representing the other fault categories still display an almost uniform distribution, even after being processed by GConv1. Further information extraction by GConv2 enables the clustering of distinct fault features. Eventually, features of the same type are clustered through the learning of FC, and clear classes are distinguishable at this stage. By utilizing the frequency domain input of the pressure signals, GIN-ST successfully accomplishes fault classification of the axial piston pump.

Figure 15 illustrates the confusion matrices of the other intelligent failure diagnosis methods, excluding the proposed approach, under the same frequency domain input. The classification results indicate that the diagnosis models differ substantially in their sensitivity to strong noise in the input features. Moreover, the graph attention network (GAT) and GIN show significant superiority over the other methods. This observation motivates the design in this paper of a spatio-temporal attention mechanism module based on the traditional GIN model.

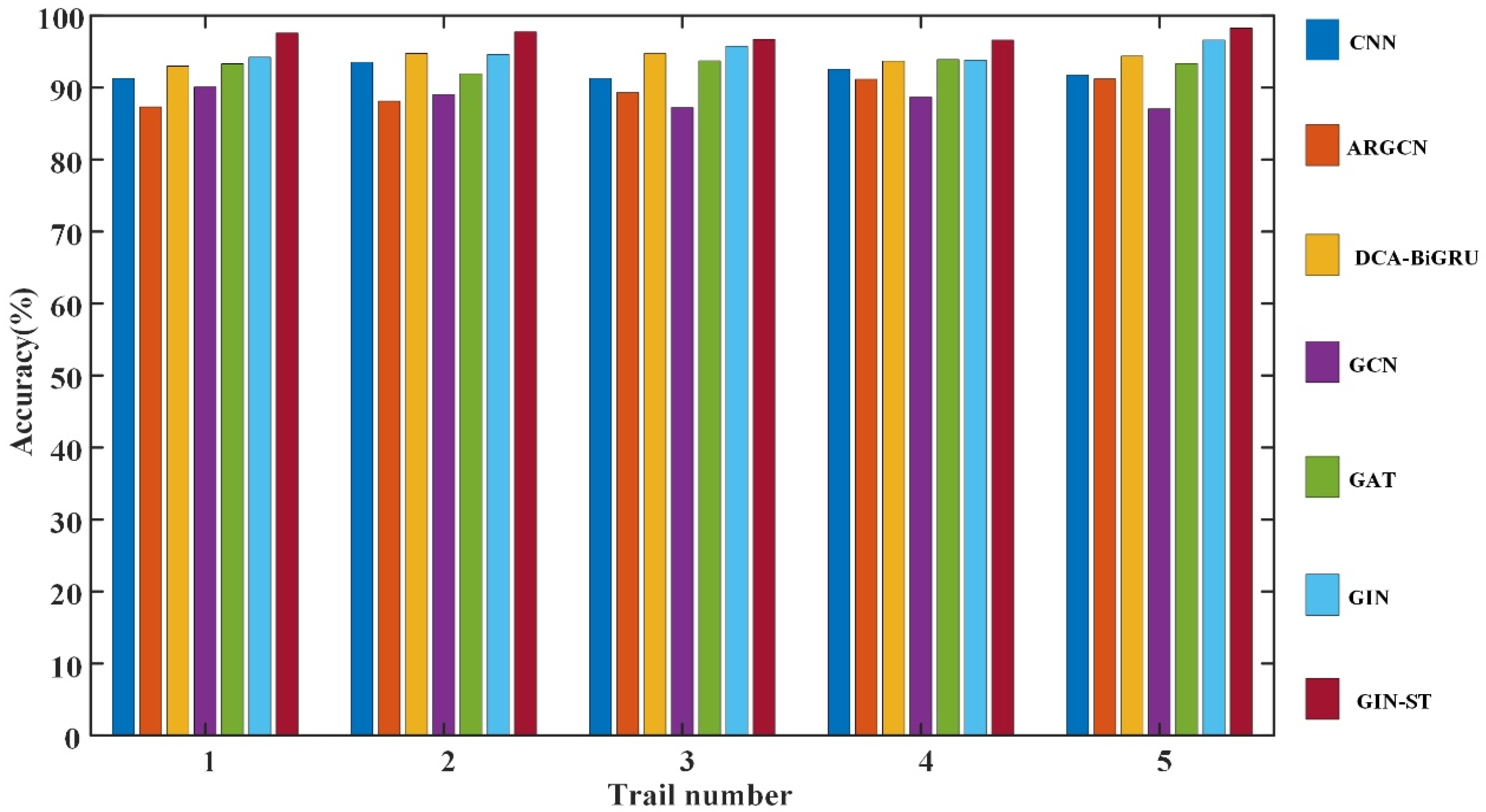

Further, the diagnostic results of the above six intelligent fault diagnosis methods in five random trials were compared with those of the proposed GIN-ST, as shown in Figure 16. GIN-ST is remarkably stable, consistently achieving the best performance across all datasets. The average diagnostic accuracies of GIN-ST for each dataset are 97.59%, 97.75%, 96.70%, 96.62%, and 98.28%, respectively. These figures indicate that the proposed method delivers the best overall results in terms of efficiency and accuracy, particularly under conditions of significant noise interference.

To make the diagnosis task more challenging, white noise with varying signal-to-noise ratios (SNRs) was added to the dataset, mimicking the noise found in various operating conditions of axial piston pumps. The resulting dataset was then randomly divided into a training set (80%) and a testing set (20%). Figure 17 shows the diagnostic accuracies of the aforementioned methods under different levels of random noise. The classification results demonstrate the superior noise resistance of the GIN-ST model compared to the other fault diagnosis methods, which is primarily attributed to the spatio-temporal attention module that emphasizes fault features. These experimental findings indicate that the proposed model offers enhanced robustness and noise resistance for diagnosing axial piston pumps under variable working conditions.
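Adding white noise at a prescribed SNR follows directly from the definition SNR(dB) = 10 log10(P_signal / P_noise). The sketch below assumes NumPy and zero-mean Gaussian white noise; the function name `add_white_noise`, the test signal, and the SNR value are illustrative assumptions rather than the settings used in the experiments.

```python
import numpy as np

def add_white_noise(signal, snr_db, rng=None):
    """Add zero-mean Gaussian white noise so that the result has the given SNR."""
    rng = np.random.default_rng() if rng is None else rng
    signal = np.asarray(signal, dtype=float)
    signal_power = np.mean(signal ** 2)
    # Solve SNR_dB = 10 * log10(P_signal / P_noise) for the noise power.
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise

# Illustrative check on a sine wave with a hypothetical target SNR of 5 dB.
rng = np.random.default_rng(0)
clean = np.sin(2 * np.pi * 50 * np.arange(100_000) / 1024.0)
noisy = add_white_noise(clean, snr_db=5.0, rng=rng)

residual = noisy - clean
measured_snr = 10 * np.log10(np.mean(clean ** 2) / np.mean(residual ** 2))
print(measured_snr)
```

Lower SNR values correspond to stronger noise, so sweeping `snr_db` downward makes the classification task progressively harder.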

5.4. Ablation Study

In this section, two variants of the GIN-ST model are used in an ablation study that evaluates the individual impact of the model components on the final prediction outcomes: (1) UWG-GIN-ST, which transforms subsamples of the original signal into unweighted graphs as inputs for GIN-ST, so that all node neighbors are treated as equally important; (2) WG-GIN, which omits the spatio-temporal dynamic attention mechanism and employs the original GIN network on weighted graphs built from subsamples of the original signal, without the designed attention-based READOUT module and Transformer encoder. The average accuracy of both model variants in diagnosing faults in the axial piston pump over five trials is presented in Table 7.

The analysis results in Table 7 indicate that the average predictive accuracy of the proposed GIN-ST is 5.13% higher than that of UWG-GIN-ST. This suggests that weighted graphs should be used as inputs during the graph convolution operation, acknowledging the varying importance of node neighbors. Furthermore, GIN-ST outperforms WG-GIN by 7.48%, demonstrating that the introduction of a novel attention-based READOUT module and Transformer encoder effectively addresses the issue of incorporating temporal information into input node features. This approach facilitates the connection of encoded timestamps with node features, enabling the decoding of deeper global features associated with various faults in the axial piston pump. Consequently, this enhances fault classification accuracy.
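The difference between weighted and unweighted inputs can be seen in a single GIN-style neighborhood aggregation, where each node combines its own feature with the sum of its neighbors' features. The sketch below assumes NumPy, omits the MLP that normally follows the aggregation, and extends the sum to edge weights in the most direct way; the toy weights and features are hypothetical and this is not necessarily the exact formulation used in GIN-ST.

```python
import numpy as np

def gin_aggregate(features, adjacency, eps=0.0):
    """Compute (1 + eps) * h_v + sum_u A[v, u] * h_u for every node v."""
    return (1.0 + eps) * features + adjacency @ features

# Toy graph with three fully connected nodes and unequal edge weights.
weights = np.array([[0.0, 0.9, 0.1],
                    [0.9, 0.0, 0.5],
                    [0.1, 0.5, 0.0]])
binary = (weights > 0).astype(float)  # the same graph with weights discarded
features = np.array([[1.0], [2.0], [4.0]])

weighted_out = gin_aggregate(features, weights)
unweighted_out = gin_aggregate(features, binary)
print(weighted_out.ravel())
print(unweighted_out.ravel())
```

On this fully connected toy graph the unweighted aggregation maps all three nodes to the same value, whereas the weighted version keeps them distinct, which illustrates why discarding edge weights can erase information that separates nodes.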

To showcase the efficacy of these three models in feature learning, the features extracted from the last layer of the feature extractor are visualized through t-SNE. Figure 18 shows the visualized features of the three models to illustrate their impact on the task.

From the feature visualization and classification results in Figure 18a,b, it is evident that both UWG-GIN-ST and WG-GIN struggle to distinguish between rolling element damage of the front-end bearing (RDFB) and rolling element damage of the rear-end bearing (RDRB). This observation suggests that employing unweighted graphs as the input and omitting the spatio-temporal dynamic attention module are both detrimental to the accurate classification of fault features in the axial piston pump. The feature visualization and classification results of the proposed method, shown in Figure 18c, demonstrate that the proposed approach classifies the various fault features comparatively accurately.

5.5. Real-Time Feasibility

To assess the applicability of the proposed method in embedded or on-board monitoring scenarios such as aircraft or railway systems, we evaluated its real-time feasibility in terms of computational latency and resource demand. The model was deployed on a representative edge computing platform (e.g., NVIDIA Jetson Nano with 4 GB RAM and quad-core ARM CPU), and inference time was measured using preprocessed input signals of fixed length (e.g., 1024 points after FFT).

The average inference time per sample was approximately 22.6 ms, which meets the real-time processing requirement for most low-frequency hydraulic monitoring tasks. Compared with a baseline 1D CNN, our GNN-based model demonstrated slightly higher latency (by ~6 ms) but provided significantly improved accuracy and noise robustness. The memory footprint remained below 250 MB during inference, ensuring compatibility with embedded environments.
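A per-sample latency figure of this kind is typically obtained by timing repeated forward passes after a short warm-up. The sketch below shows one way to do this in plain Python; the `infer` callable is a trivial stand-in for the trained model, and the warm-up and repeat counts are assumptions rather than the protocol used for the reported measurement.

```python
import time

def mean_latency_ms(infer, sample, warmup=5, repeats=50):
    """Average wall-clock time per call of infer(sample), in milliseconds."""
    for _ in range(warmup):  # warm-up calls are excluded from the timing
        infer(sample)
    start = time.perf_counter()
    for _ in range(repeats):
        infer(sample)
    elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / repeats

def dummy_infer(sample):
    """Stand-in for a model forward pass (hypothetical workload)."""
    return sum(value * value for value in sample)

sample = [float(i) for i in range(1024)]  # fixed-length input of 1024 points
latency = mean_latency_ms(dummy_infer, sample)
print(f"{latency:.3f} ms per sample")
```

Excluding warm-up iterations avoids counting one-time costs such as cache population or lazy initialization, which would otherwise inflate the average on an embedded device.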

6. Conclusions

In this paper, the GIN-ST model is introduced, presenting a novel fault diagnosis framework derived from the traditional GIN model by incorporating a spatio-temporal attention mechanism. The proposed method is applied to fault diagnosis in axial piston pumps under conditions of significant noise interference. Empirical results validate that GIN-ST surpasses other intelligent diagnostic tools in both classification accuracy and robustness. The following summarizes the conclusions drawn from this study:

- (1) A novel attention-based READOUT module and Transformer encoder were developed, which can decode the deeper whole-graph features of different faults in an axial piston pump. The proposed model enhances the performance of the classification task and offers spatio-temporal interpretability.

- (2) This study investigated the effect of different inputs on fault diagnosis models and found that frequency domain input can partially mitigate the impact of strong noise on fault graph node features. However, diagnostic methods varied in sensitivity to noise. By introducing a spatio-temporal attention mechanism into the diagnostic model, the fault diagnosis of axial piston pumps under strong noise conditions can be made more robust.

- (3) After comprehensive consideration of efficiency and accuracy, as well as a comparison of predictive results with other intelligent fault diagnosis methods, it was determined that GIN-ST exhibits superior diagnostic accuracy and robustness in the diagnosis of piston pump faults, particularly under conditions of strong noise.

Furthermore, the proposed method shows strong potential for integration into on-board aircraft health monitoring systems (AHMS). Its lightweight architecture, robustness to noise, and ability to extract discriminative features from non-stationary signals make it suitable for real-time condition monitoring under the strict operational and safety requirements of aviation environments.

In future work, we plan to explore hybrid modeling approaches that integrate physics-informed knowledge with data-driven representations. Specifically, incorporating fluid mechanics models—such as leakage flow equations, pressure drop formulations, and volumetric efficiency constraints—can provide a physically consistent foundation to augment the learned features from the GNN framework. This fusion of domain knowledge and deep learning has the potential to improve interpretability, enhance generalization to unseen fault modes, and enable failure diagnosis under sparse data conditions. Such hybrid architectures are particularly promising for safety-critical applications such as aviation hydraulics, where explainability and reliability are essential.
