Fault Diagnosis Systems for Robots: Acoustic Sensing-Based Identification of Detached Components for Fault Localization

Abstract

1. Introduction

2. Materials and Methods

2.1. Concept of Transfer Function and Frequency Response

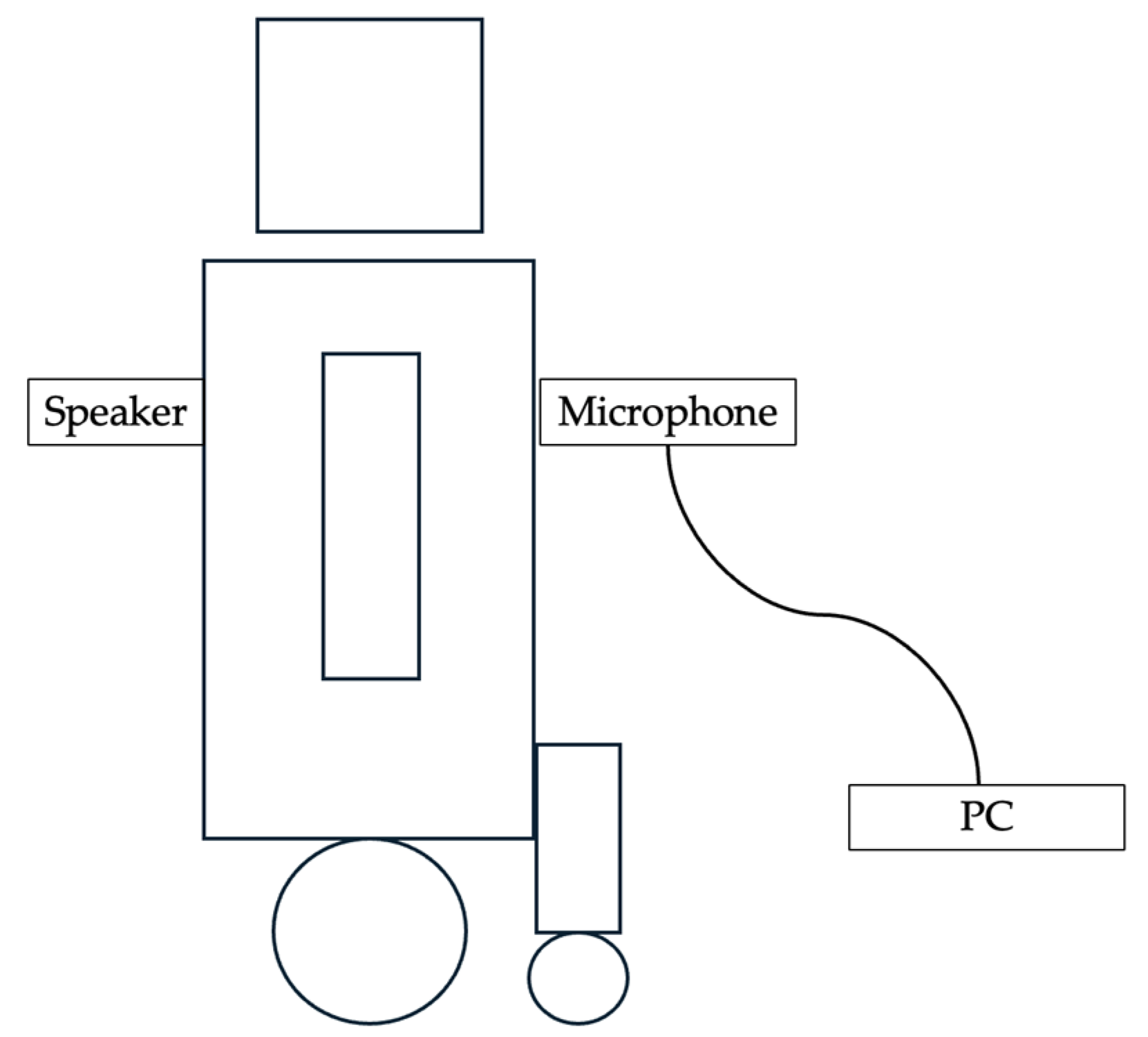

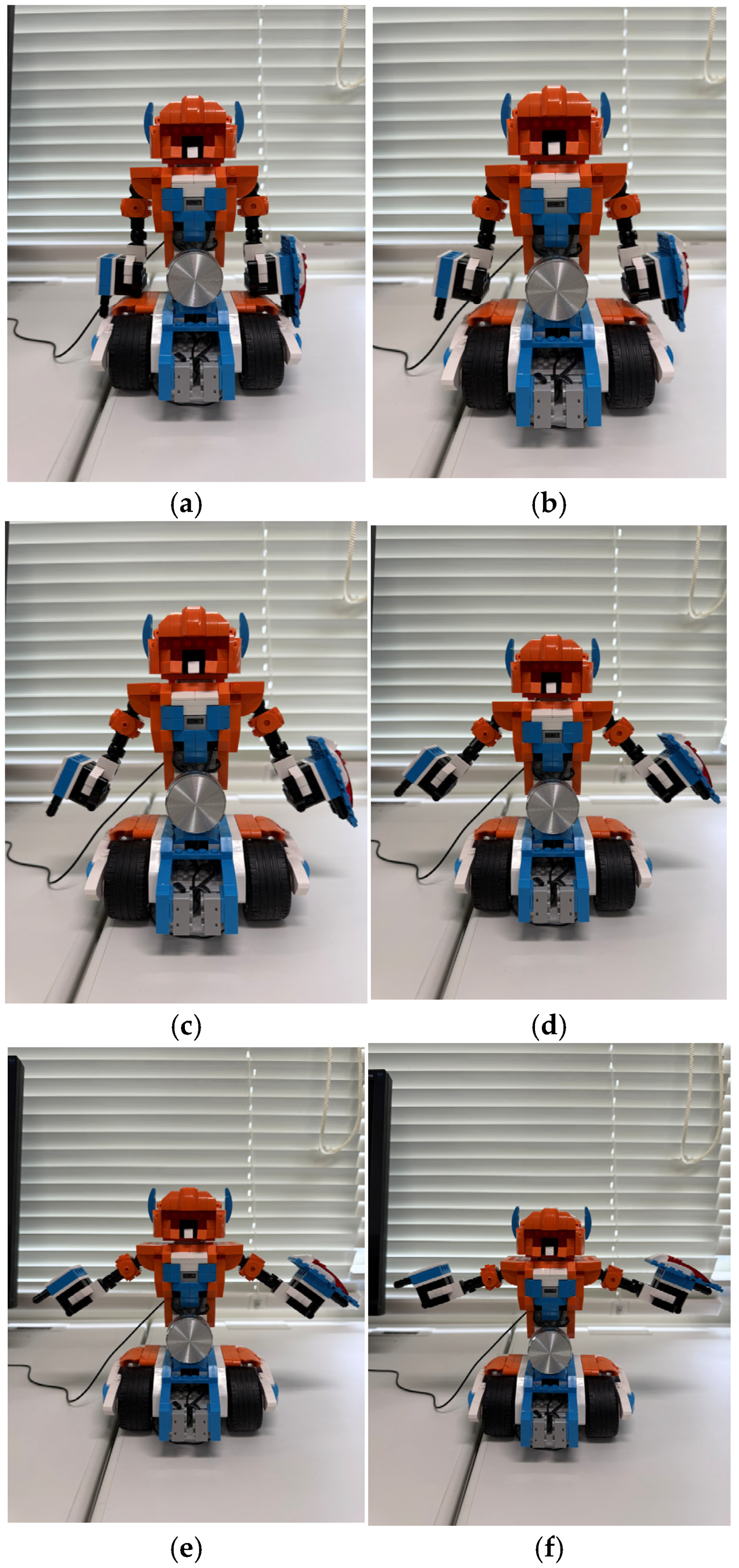

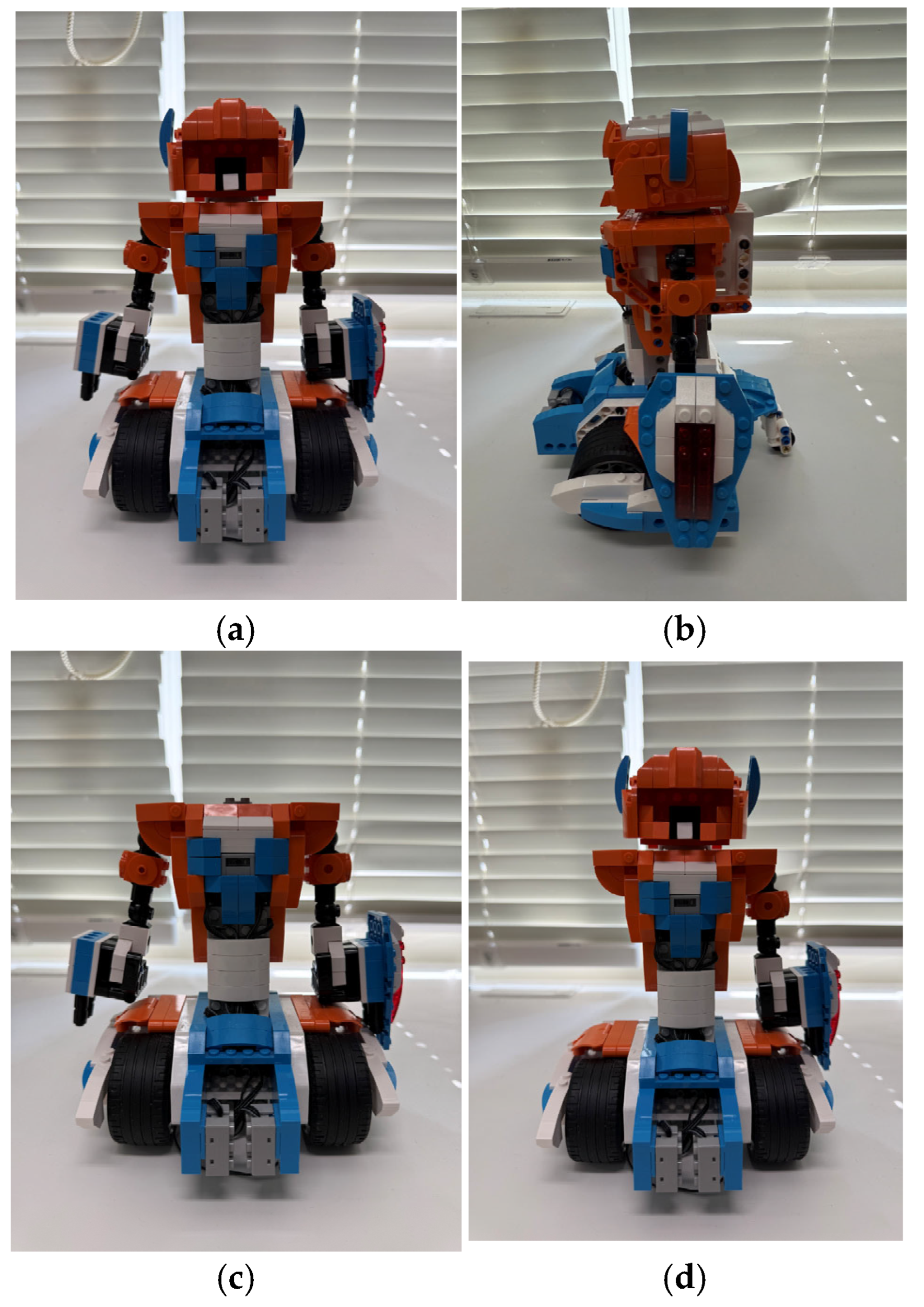

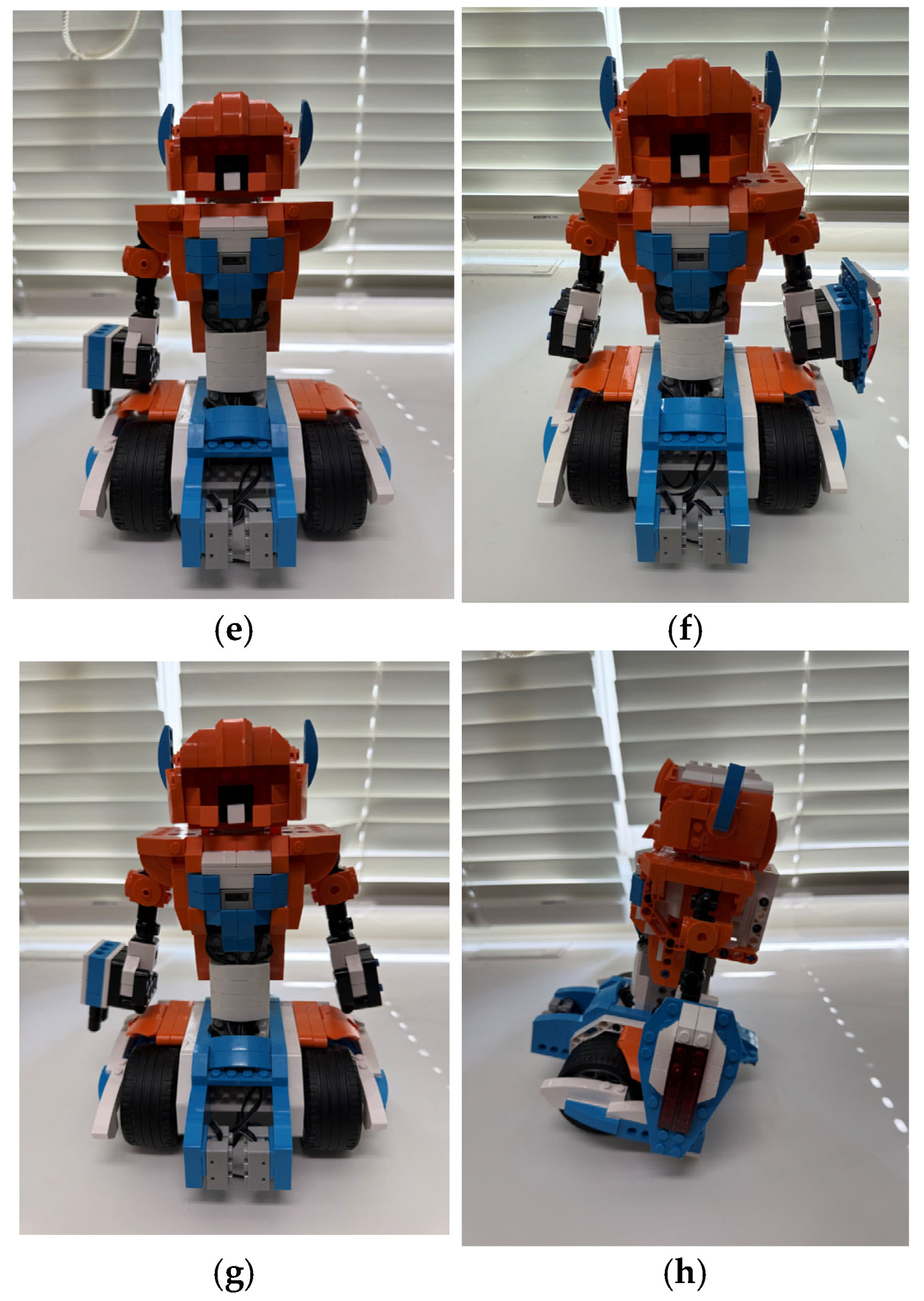
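As a minimal sketch of the concept named in this section's heading, the frequency response can be estimated as the ratio of the output spectrum to the input spectrum, H(f) = Y(f) / X(f), using the X(f) and Y(f) notation from the Abbreviations list. The symbol `H`, the function names `fft` and `frequency_response`, the `eps` guard, and the toy signals are assumptions made for illustration only; they are not taken from the authors' implementation.

```python
import cmath


def fft(samples):
    """Recursive radix-2 Cooley-Tukey FFT; the length must be a power of two."""
    n = len(samples)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a non-zero power of two")
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])  # spectrum of even-indexed samples
    odd = fft(samples[1::2])   # spectrum of odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def frequency_response(x, y, eps=1e-12):
    """Estimate H(f) = Y(f) / X(f) per frequency bin.

    Bins where |X(f)| < eps carry no input energy, so they return None.
    """
    X, Y = fft(x), fft(y)
    return [Yk / Xk if abs(Xk) >= eps else None for Xk, Yk in zip(X, Y)]


# Toy signals (hypothetical): a unit impulse as input, and the same impulse
# scaled by 0.5 and delayed by one sample as output.
x = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
y = [0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
H = frequency_response(x, y)
```

In this toy case every bin has magnitude 0.5, and the one-sample delay appears only as a phase shift that grows with frequency. With measured signals, a library FFT and averaging over repeated excitations would likely be preferable to this pure-Python version.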

2.2. Experimental Setup and Data Acquisition

2.3. Signal Processing and Feature Extraction

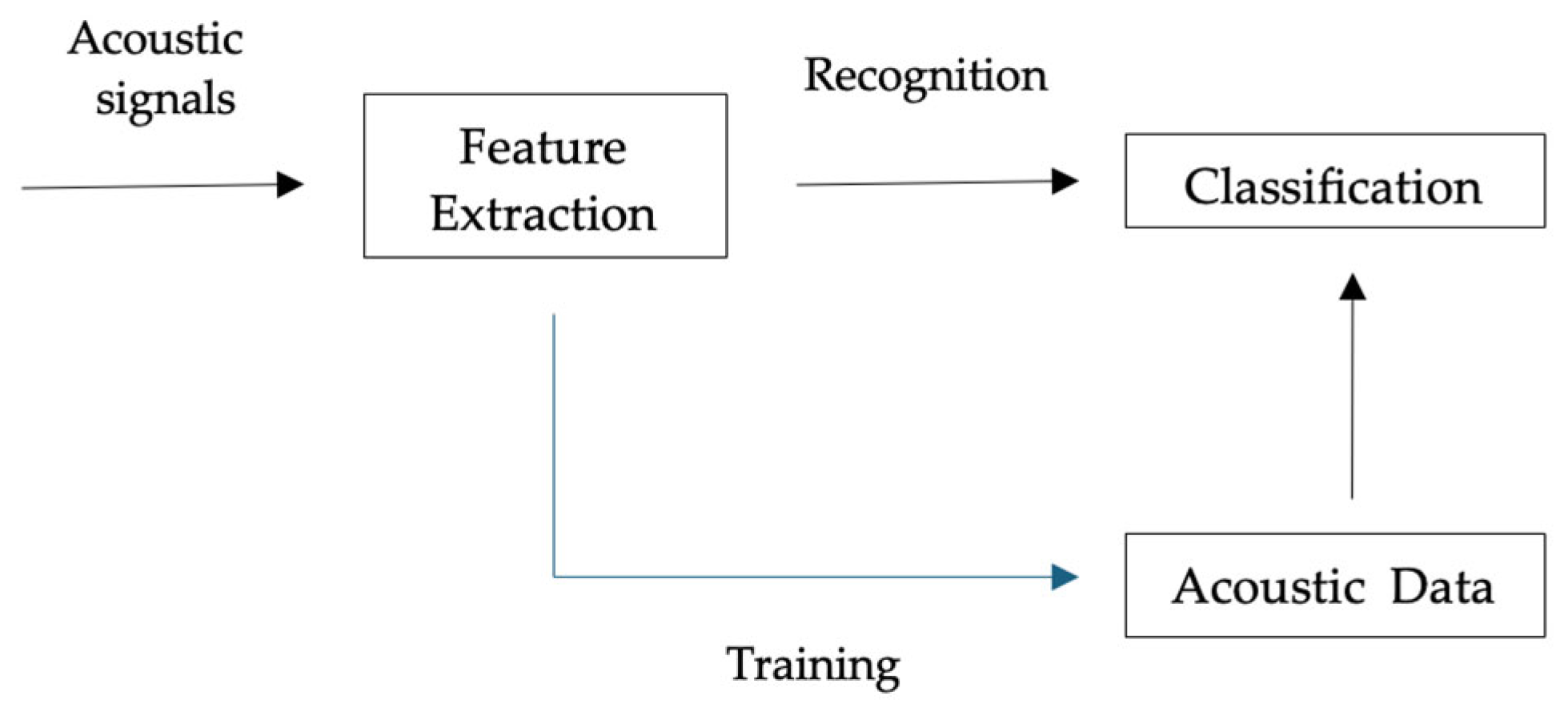

2.4. Fault Classification and Model Comparison

3. Results

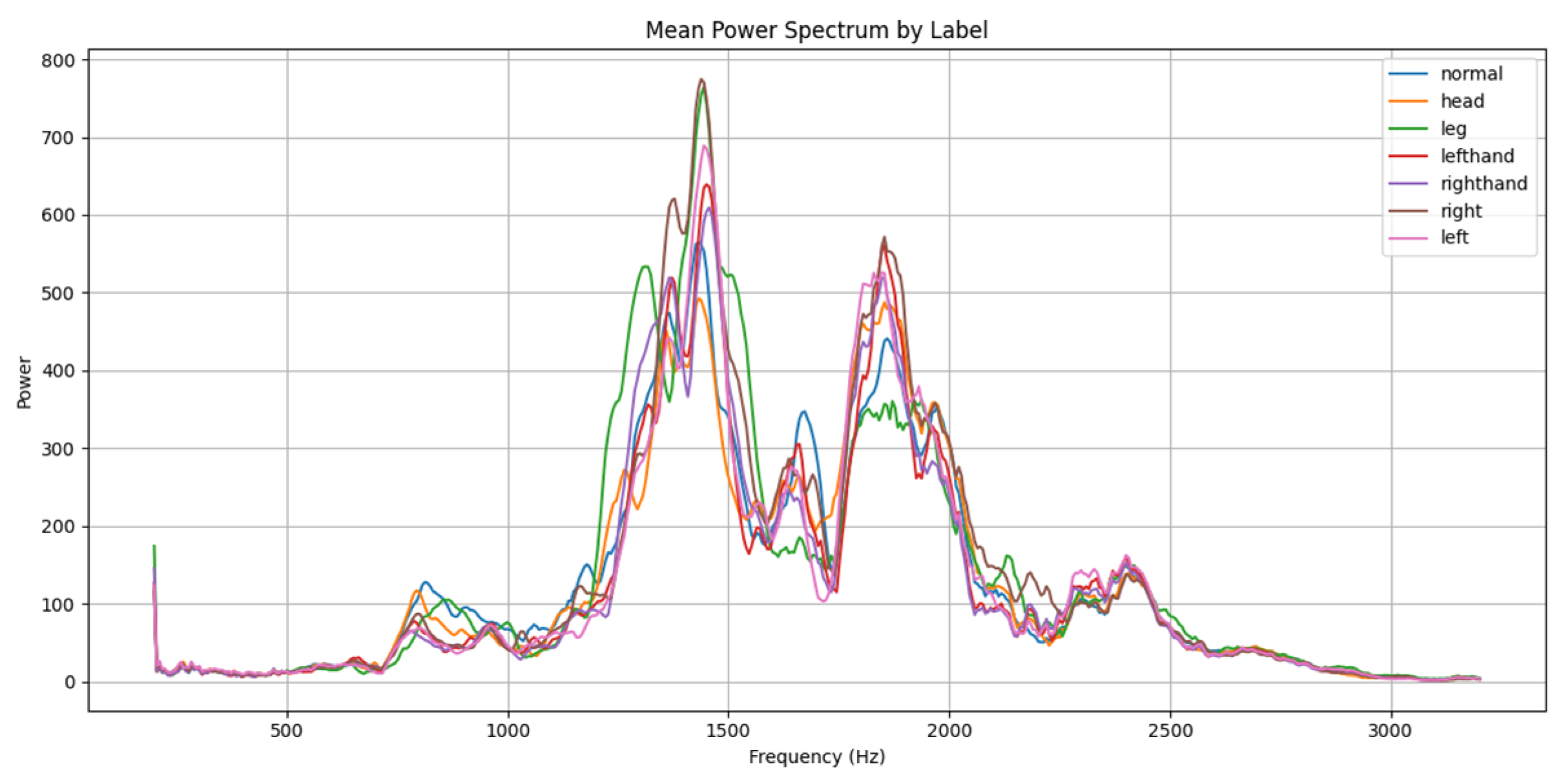

3.1. Acoustic Signal Analysis

3.2. Classification Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation or symbol | Meaning |
|---|---|
| AAS | Active acoustic sensing |
| AE | Acoustic emission |
| FFT | Fast Fourier transform |
| KNN | K-nearest neighbor |
| MLP | Multi-layer perceptron |
| RF | Random forest |
| SVM | Support vector machine |
| | Laplace transform of the input signal |
| | Laplace transform of the output signal |
| | Transfer function in the Laplace domain |
| s | Complex frequency variable |
| σ | Real part of s |
| ω | Angular frequency in radians per second |
| | Frequency response of the system |
| X(f) | Input signal in the frequency domain |
| Y(f) | Output signal in the frequency domain |
| | Transfer function in the real frequency domain |
| x(t) | Input signal in the time domain |
| y(t) | Output signal in the time domain |
| f | Frequency in Hertz |


| Model | Hyperparameters |
|---|---|
| SVM | C = 0.3, kernel = linear |
| Random Forest | n_estimators = 142, max_depth = 10 |
| KNN | n_neighbors = 4, weights = distance |
| XGBoost | boosting rounds = 100, learning rate = 0.1 |
| LightGBM | boosting rounds = 100, learning rate = 0.1 |
| MLP | single hidden layer, ReLU activation |
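The settings in the hyperparameter table can be written down as a configuration sketch. The example below assumes the scikit-learn estimator API (and the scikit-learn-style wrappers of XGBoost and LightGBM, where the number of boosting rounds is passed as `n_estimators`); the source does not state which libraries were used, and the names `SKLEARN_PARAMS`, `BOOSTING_PARAMS`, and `build_sklearn_models` are hypothetical.

```python
# Hyperparameters transcribed from the table; keyword names follow
# scikit-learn conventions (an assumption, not stated in the source).
SKLEARN_PARAMS = {
    "SVM": {"C": 0.3, "kernel": "linear"},
    "Random Forest": {"n_estimators": 142, "max_depth": 10},
    "KNN": {"n_neighbors": 4, "weights": "distance"},
    # The table says only "single hidden layer, ReLU activation". The layer
    # width is not given, so it is left at the library default, not guessed.
    "MLP": {"activation": "relu"},
}

# Boosting models: "boosting rounds" is assumed to map to n_estimators in
# the scikit-learn-style wrappers XGBClassifier and LGBMClassifier.
BOOSTING_PARAMS = {
    "XGBoost": {"n_estimators": 100, "learning_rate": 0.1},
    "LightGBM": {"n_estimators": 100, "learning_rate": 0.1},
}


def build_sklearn_models(params=SKLEARN_PARAMS):
    """Instantiate the four scikit-learn classifiers from the table.

    scikit-learn is imported lazily so the configuration above can be
    inspected even where the library is not installed.
    """
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.neural_network import MLPClassifier
    from sklearn.svm import SVC

    classes = {
        "SVM": SVC,
        "Random Forest": RandomForestClassifier,
        "KNN": KNeighborsClassifier,
        "MLP": MLPClassifier,
    }
    return {name: classes[name](**kwargs) for name, kwargs in params.items()}
```

Calling `build_sklearn_models()` returns unfitted estimators keyed by model name; each can then be trained with `fit(X_train, y_train)` on the extracted features. Any setting not listed in the table falls back to the library default, which may differ from what the authors used.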

| Model | Accuracy (%) |
|---|---|
| SVM | 65.7 |
| Random Forest | 51.4 |
| KNN | 60.0 |
| XGBoost | 57.1 |
| LightGBM | 62.9 |
| MLP | 71.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yeo, W.; Matsumoto, M. Fault Diagnosis Systems for Robots: Acoustic Sensing-Based Identification of Detached Components for Fault Localization. Appl. Sci. 2025, 15, 6564. https://doi.org/10.3390/app15126564
