An Interpretable Machine Learning Framework for Athlete Motor Profiling Using Multi-Domain Field Assessments: A Proof-of-Concept Study

Abstract

Featured Application

1. Introduction

2. Materials and Methods

2.1. Participants and Study Settings

2.2. Experimental Design and Study Period

2.3. Testing Procedures

2.3.1. Lower-Body Isometric Strength

2.3.2. Dynamic Balance

2.3.3. Functional Movement Screen

2.4. Data Preprocessing and Feature Engineering

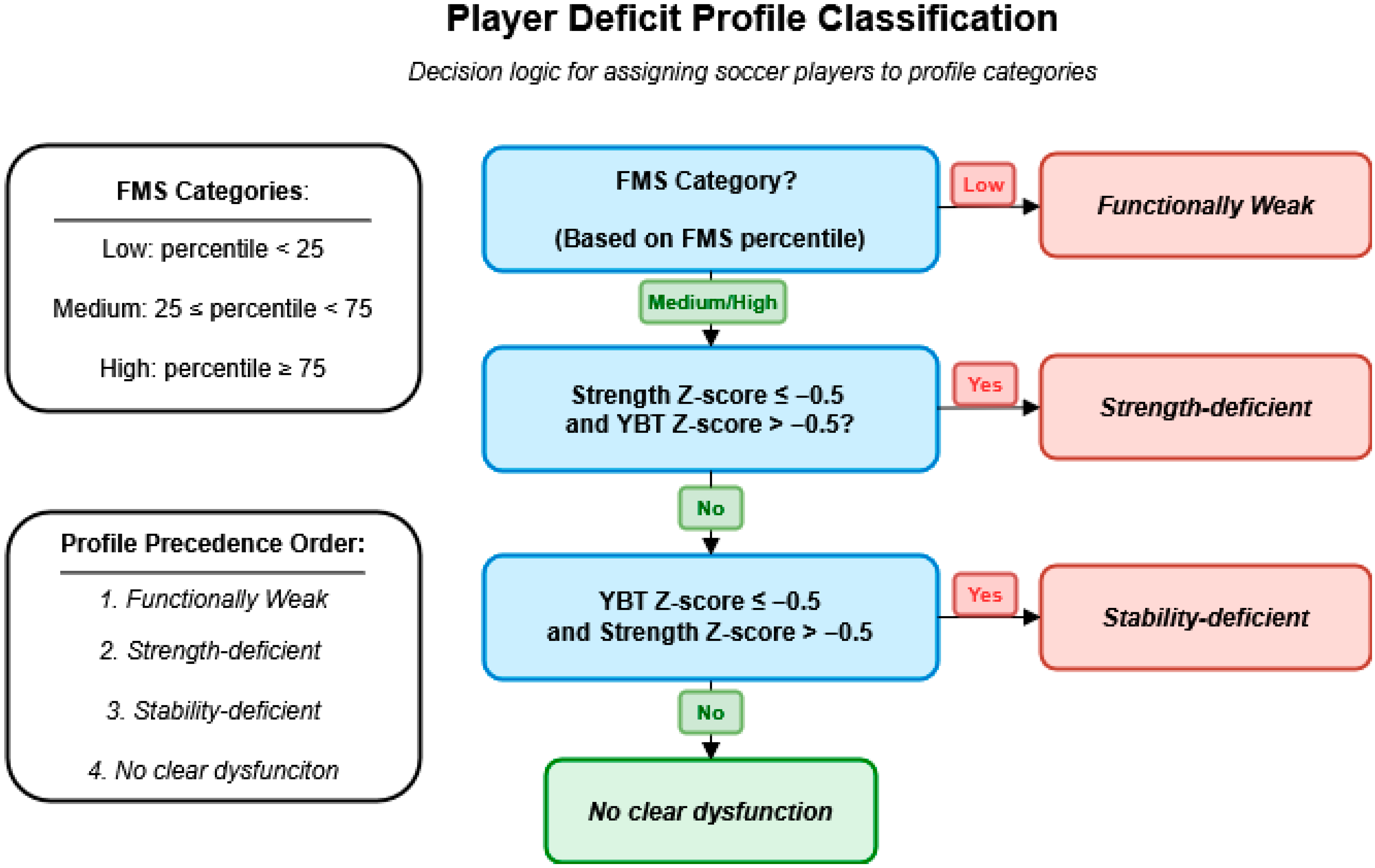

2.5. Expert Rule-Based Classification

2.6. Deficit Flagging System

2.7. Machine Learning Model Development (Training and Evaluation)

2.8. Cross-Validation Performance

2.9. Model Interpretability (SHAP Analysis)

2.10. External Validation

2.11. Software Tool: Athlete Functional Report Generator

2.12. Quantification and Statistical Analysis

3. Results

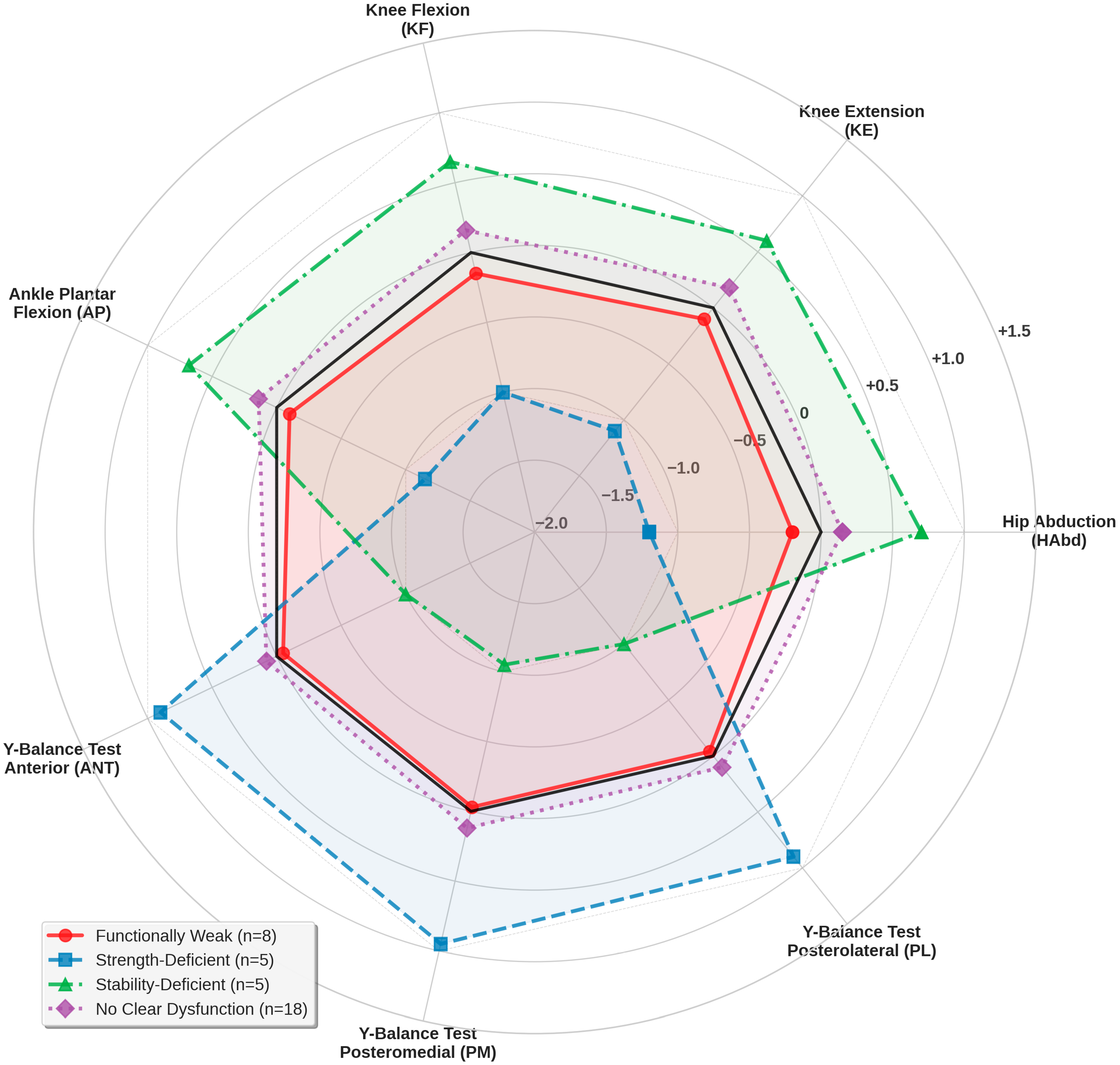

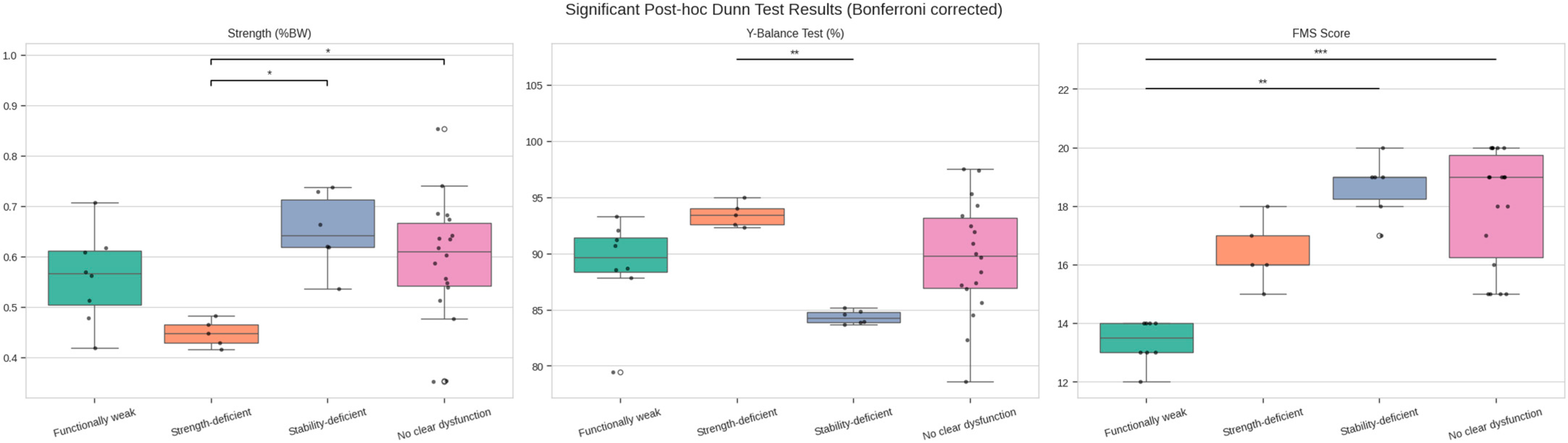

3.1. Functional Profile Distribution in Youth Soccer Players

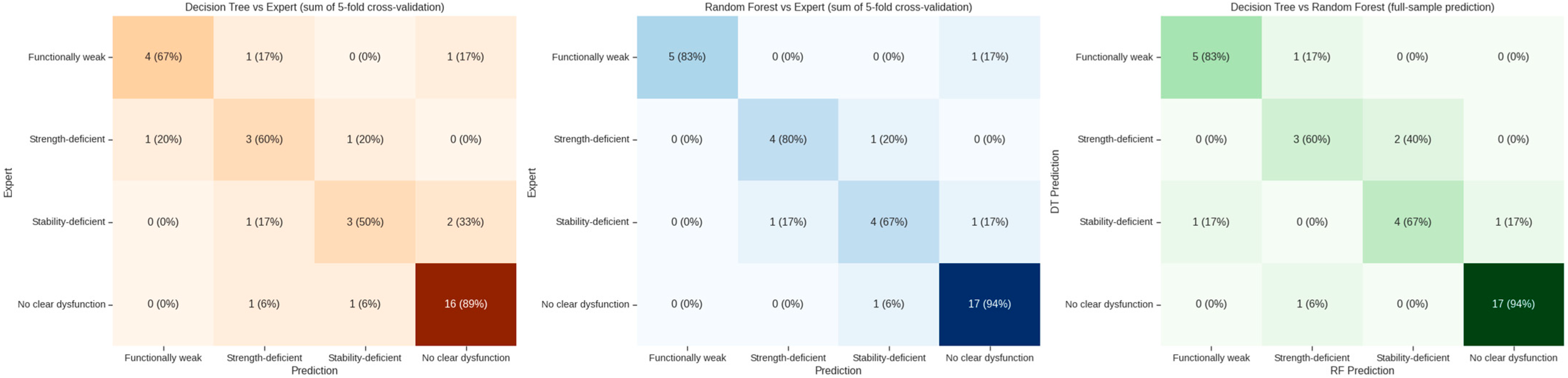

3.2. Random Forest Outperforms Decision Tree in Classifying Functional Profiles

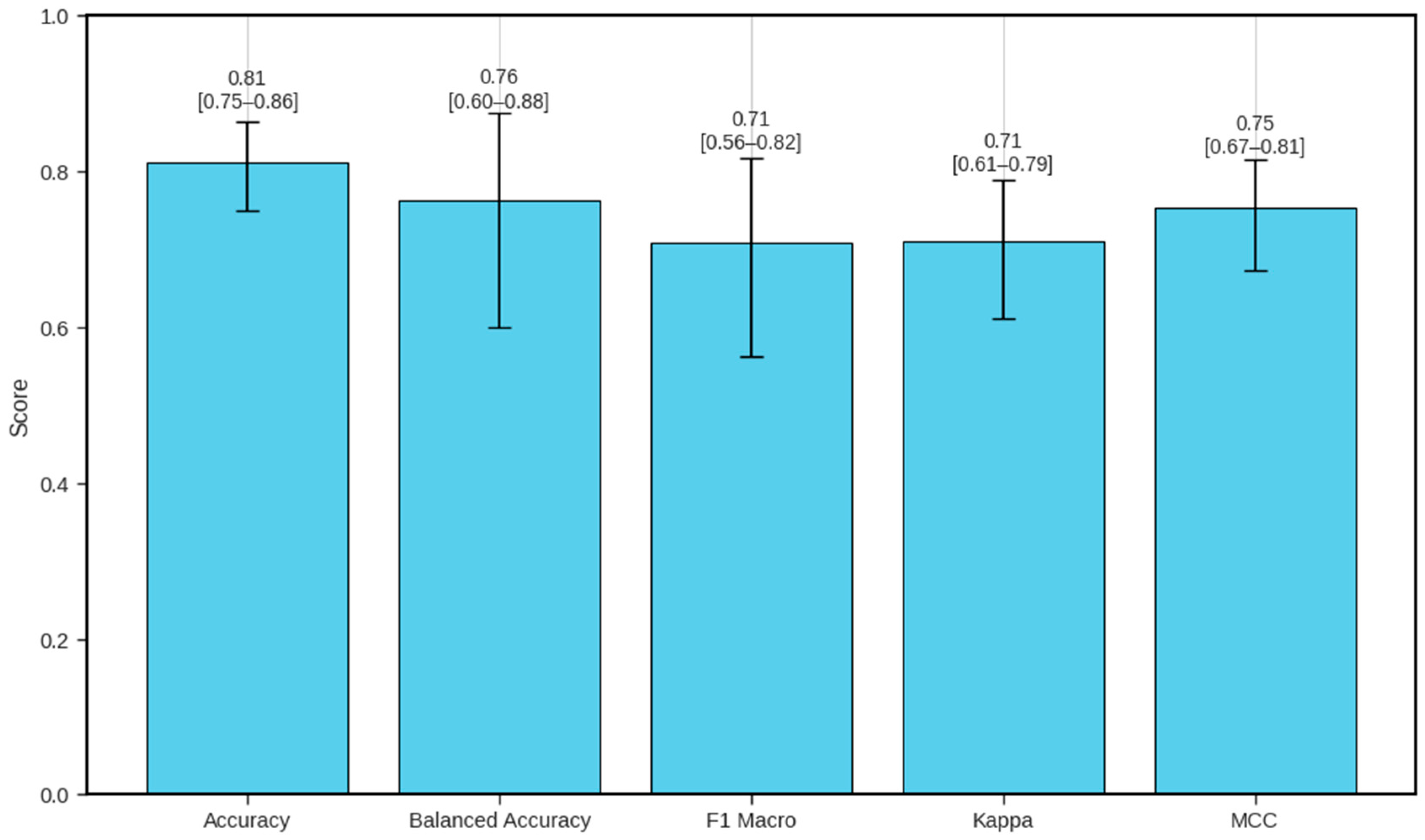

3.3. Bootstrap Validation Confirms the Reliability of the Random Forest Model

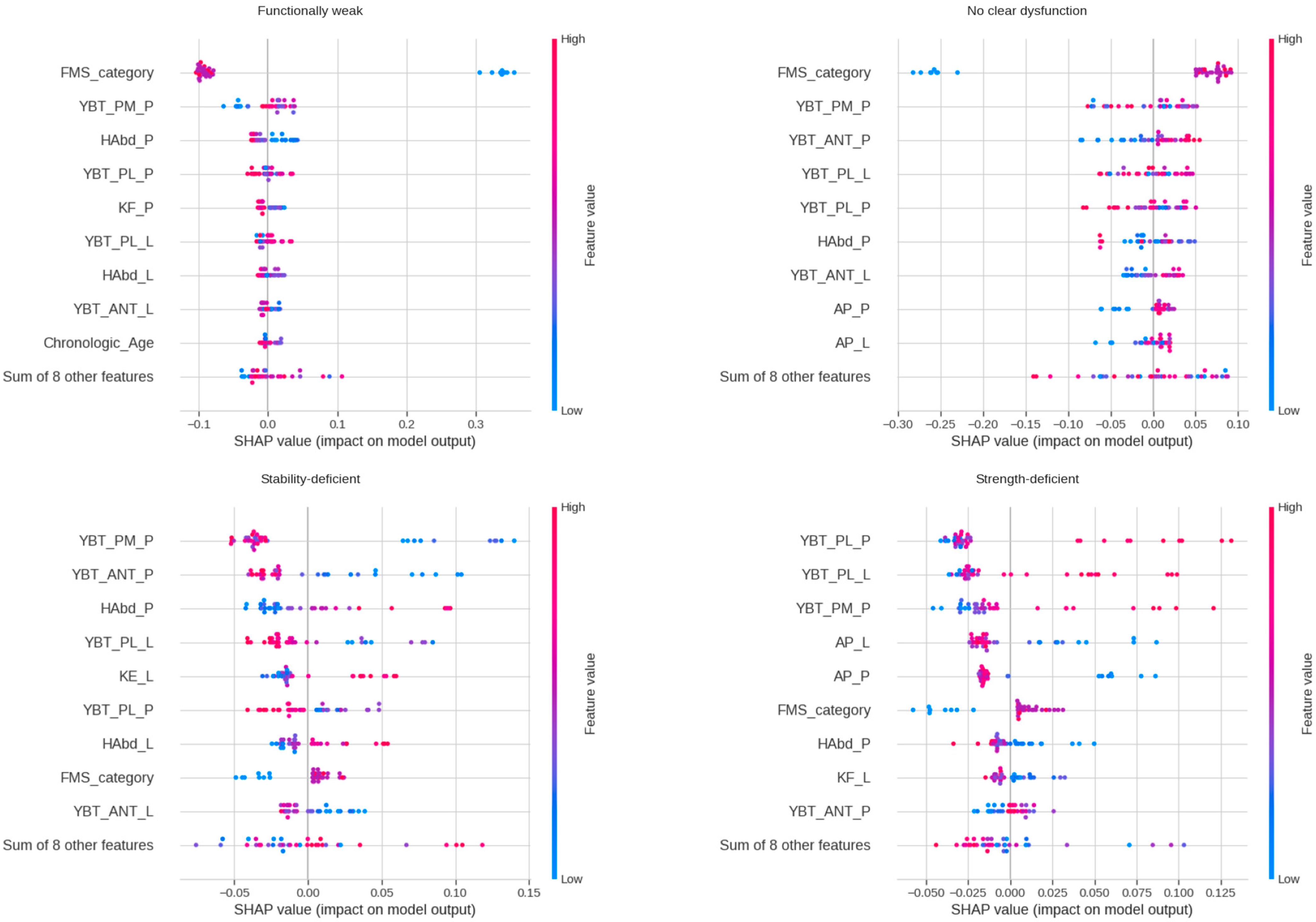

3.4. Key Features Driving Classification (SHAP Analysis)

3.5. External Validation on Handball Players

4. Discussion

4.1. Model Accuracy and Robustness

4.2. Flag System Alignment

4.3. Validation in a Separate Athlete Group

4.4. Comparison with Existing Screening Tools

4.5. Practical Implications

4.6. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Reagent or Resource | Source | Identifier |
|---|---|---|
| Deposited data | | |
| De-identified raw data of experiment | Open Science Framework | DOI: 10.17605/OSF.IO/AKUQ8 https://osf.io/akuq8/ (accessed on 5 June 2025) |
| Experimental models: Organisms/strains | | |
| Youth soccer athletes (male, 8–17 yrs) | Regional academy teams | NCT06325228 |
| Youth handball athletes (male and female, 8–17 yrs) | Regional academy teams | NCT06325228 |
| Software and algorithms | | |
| Python (v3.10) | Python Software Foundation | http://www.python.org/ (accessed on 5 June 2025) |
| scikit-learn (v1.2) | scikit-learn developers | https://scikit-learn.org/ (accessed on 5 June 2025) |
| SHAP (v0.42) | Lundberg and Lee | https://github.com/slundberg/shap (accessed on 5 June 2025) |
| Streamlit | Streamlit Inc. | https://streamlit.io/ (accessed on 5 June 2025) |
| Athlete Functional Report Generator (App) | This study/GitHub | https://athletereportgenerator.streamlit.app/ (accessed on 5 June 2025) |
| Athlete Functional Report Generator (Code) | This study/GitHub | https://github.com/BartWil/athlete_report_generator (accessed on 5 June 2025) |
| Other | | |
| Hand-held dynamometer (Model 01165) | Lafayette Instrument Company | https://lafayetteinstrument.com/ (accessed on 5 June 2025) |
| Lower Quarter Y-Balance Test Kit | Functional Movement Systems | https://www.functionalmovement.com/ (accessed on 5 June 2025) |
| Functional Movement Screen Kit | Functional Movement Systems | https://www.functionalmovement.com/ (accessed on 5 June 2025) |

- De-identified data have been deposited at OSF and are publicly available as of the date of publication. The open-access link is listed in the key resources table.

- Athlete Functional Report Generator: A publicly available, open-source web app designed to classify athletes into functional profiles using test data and generate individualized reports. https://athletereportgenerator.streamlit.app/ (accessed on 8 May 2025).

- Source code for Report Generator: GitHub repository for modification, deployment, and integration. https://github.com/BartWil/athlete_report_generator (accessed on 8 May 2025).

| Profile | Strength (Z-Score) | YBT (Z-Score) | FMS (Category) | Flags |
|---|---|---|---|---|
| Functionally Weak (n = 8) | 0.56 ± 0.09 (−0.15 ± 0.79) | 88.96 ± 4.28 (−0.04 ± 0.91) | 13.38 ± 0.74 (Low) | R = 0, Y = 4, G = 4 |
| Strength-Deficient (n = 5) | 0.45 ± 0.03 (−1.13 ± 0.24) | 93.45 ± 1.09 (0.92 ± 0.23) | 16.40 ± 1.14 (Medium) | R = 0, Y = 1, G = 4 |
| Stability-Deficient (n = 6) | 0.65 ± 0.08 (0.66 ± 0.67) | 84.34 ± 0.61 (−1.02 ± 0.13) | 18.67 ± 1.03 (Medium) | R = 0, Y = 0, G = 6 |
| No clear dysfunction (n = 18) | 0.59 ± 0.12 (0.16 ± 1.10) | 89.63 ± 5.11 (0.10 ± 1.09) | 18.00 ± 1.97 (Medium) | R = 1, Y = 3, G = 14 |

| Variable | Soccer (n = 37) | Handball (n = 9) | p |
|---|---|---|---|
| Performance | | | |
| Mean Strength (kg/%BW) | 0.58 ± 0.11 | 0.58 ± 0.08 | 1.0 |
| Mean YBT (%) | 89.15 ± 4.76 | 94.11 ± 5.90 | 0.035 * |
| FMS Total Score (0–21) | 16.89 ± 2.48 | 15.22 ± 2.68 | 0.118 |
| Profiles | | | |
| Functionally Weak (%) | 8 (21.6%) | 2 (22.2%) | 1.0 |
| Strength-Deficient (%) | 5 (13.5%) | 1 (11.1%) | 1.0 |
| Stability-Deficient (%) | 6 (16.2%) | 1 (11.1%) | 1.0 |
| No Clear Dysfunction (%) | 18 (48.6%) | 5 (55.6%) | 1.0 |
| FMS | | | |
| FMS Low (%) | 8 (21.6%) | 2 (22.2%) | 1.0 |
| FMS Medium (%) | 23 (62.2%) | 4 (44.4%) | 0.456 |
| FMS High (%) | 6 (16.2%) | 3 (33.3%) | 0.348 |
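This excerpt does not state which test produced the p-values for the count rows of the soccer versus handball comparison. As a minimal sketch, assuming a two-sided Fisher's exact test on each 2 × 2 table (athletes in a category versus not, by sport), the calculation can be illustrated with the Python standard library alone. The helper name `fisher_exact_two_sided` is hypothetical and is not taken from the study's repository.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d  # group sizes (here: soccer, handball)
    col1 = a + c               # athletes in the category across both groups
    denom = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability that x of the col1 athletes fall in row 1.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum every table that is no more likely than the observed one;
    # the small relative tolerance guards against floating-point ties.
    total = sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-9))
    return min(1.0, total)


# Counts read from the comparison table: (in category, not in category) per sport.
p_weak = fisher_exact_two_sided(8, 29, 2, 7)      # Functionally Weak: 8/37 vs 2/9
p_fms_high = fisher_exact_two_sided(6, 31, 3, 6)  # FMS High: 6/37 vs 3/9
print(f"Functionally Weak p = {p_weak:.3f}")
print(f"FMS High p = {p_fms_high:.3f}")
```

Under this assumption the sketch gives p = 1.000 for Functionally Weak and p = 0.348 for FMS High, which agrees with the tabulated values. The agreement is consistent with Fisher's exact test but does not confirm that it was the method used.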

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wilczyński, B.; Biały, M.; Zorena, K. An Interpretable Machine Learning Framework for Athlete Motor Profiling Using Multi-Domain Field Assessments: A Proof-of-Concept Study. Appl. Sci. 2025, 15, 6436. https://doi.org/10.3390/app15126436
