Abstract

Multispectral detection leverages visible and infrared imaging to improve detection performance in complex environments. However, conventional convolution-based fusion methods predominantly rely on local feature interactions, limiting their capacity to fully exploit cross-modal information and making them more susceptible to interference from complex backgrounds. To overcome these challenges, the YOLO-MEDet multispectral target detection model is proposed. Firstly, the YOLOv5 architecture is redesigned into a two-stream backbone network, incorporating a midway fusion strategy to integrate multimodal features from the C3 to C5 layers, thereby enhancing detection accuracy and robustness. Secondly, the Attention-Enhanced Feature Fusion Framework (AEFF) is introduced to optimize both cross-modal and intra-modal feature representations by employing an attention mechanism, effectively boosting model performance. Finally, the C3-PSA (C3 Pyramid Compressed Attention) module is integrated to reinforce multiscale spatial feature extraction and refine feature representation, ultimately improving detection accuracy while reducing false alarms and missed detections in complex scenarios. Extensive experiments on the FLIR, KAIST, and M3FD datasets, along with additional validation using SimuNPS simulations, confirm the superiority of YOLO-MEDet. The results indicate that the proposed model outperforms existing approaches across multiple evaluation metrics, providing an innovative solution for multispectral target detection.

1. Introduction

The integration of multispectral sensors with deep learning algorithms has proven to be a highly effective approach across various applications [1,2]. These sensors can capture data across both visible and invisible spectral ranges, thereby providing extensive environmental information [3,4,5]. Moreover, when integrated with deep learning techniques such as computer vision and target detection models, these sensors facilitate the automation of tasks in the invisible spectrum [6]. As depicted in Figure 1, under optimal lighting conditions, RGB images accurately capture objects with distinct colors, textures, and contours, whereas these visual details are often harder to distinguish in thermal images. In contrast, under low-light or nighttime conditions, object details and the boundaries between objects and the background in RGB images may become blurred and challenging to discern. In such scenarios, thermal imaging, leveraging its unique energy-radiation-based imaging mechanism, can reveal distinguishable object contours, making it a viable alternative. The integration of additional modalities significantly enhances detection robustness compared to conventional unimodal RGB images, which exhibit inherent limitations. The samples in Figure 1 are taken from the public FLIR dataset.

Figure 1.

Separate images for different scenarios. The figure on the left shows visible light images captured under varying lighting conditions. The figure on the right presents the aligned visible light and infrared images.

Multimodal information fusion techniques have recently made significant strides in target detection, particularly in improving robustness under diverse environmental conditions. Traditional fusion methods, such as concatenation or element-wise summation [7,8], provide basic multimodal integration but often struggle in complex scenarios. To address these challenges, attention-based approaches have been explored, leveraging adaptive weighting mechanisms to selectively enhance or suppress features. For instance, integrating attention modules into Siamese convolutional neural networks has been shown to improve feature extraction efficiency [9]. Similarly, recurrent fusion strategies refine feature representation post-fusion, enabling better feature discrimination within detection frameworks [10]. Beyond attention-based enhancements, novel fusion architectures have been introduced to tackle specific challenges in multispectral detection. The Gated Fusion Double SSD (GFD-SSD) incorporates specialized fusion units that dynamically integrate feature maps across intermediate layers, optimizing cross-modal feature learning [11]. Meanwhile, to address the cross-modal disparity issue, regional feature alignment techniques have been proposed to reduce misalignment errors and improve multimodal consistency [12]. Further refinements at the pixel level utilize spatial and channel-wise attention mechanisms to enhance the granularity of feature fusion, facilitating more precise target representation [13]. Recent advancements have also been driven by the adoption of Transformer-based architectures, which introduce global attention mechanisms to capture long-range dependencies and improve multimodal fusion effectiveness [14]. At the same time, the increasing complexity of multispectral datasets, such as DroneVehicle [15], VEDAI [16], and LLVIP [17], has pushed the boundaries of detection algorithms, necessitating more sophisticated fusion strategies. Despite these advancements, challenges remain in achieving optimal cross-modal feature integration, highlighting the need for further innovation in multispectral target detection.

To overcome the constrained receptive field issue in multispectral image feature fusion and the limitations of convolution-based methods in modeling long-range dependencies, this paper proposes a Transformer-based modality fusion network called YOLO-MEDet. The network integrates RGB and thermal imaging modality features and introduces a novel AEFF to optimize cross-modal information interaction and improve detection accuracy. Furthermore, to address the limitations of existing networks in multiscale feature extraction, this paper introduces the C3-PSA module to enhance the capacity for capturing multiscale spatial information and improve the network’s adaptability to targets of varying scales. Specifically, the main contributions of this paper are as follows:

- Dual-stream backbone network and midway fusion strategy: The YOLOv5 feature extraction network is restructured into a dual-stream backbone, employing midway fusion as the default setting to integrate multimodal features from the C3 to C5 layers. This design effectively leverages multispectral information to enhance detection accuracy and robustness.

- AEFF: The AEFF framework is introduced to address the limitations of convolution-based feature fusion in modeling long-range feature dependencies. By incorporating an attention mechanism, it further optimizes both cross-modal and intra-modal feature representations, thereby enhancing overall model performance.

- C3-PSA module for multiscale feature representation: The C3-PSA module is introduced to enhance multiscale spatial feature extraction using the PSA mechanism while strengthening cross-channel attention. This improves detection accuracy and adaptability while reducing false alarms and missed detections under complex imaging conditions.

The remainder of this paper is organized as follows. Section 2 briefly reviews related work in multispectral object detection and identifies existing challenges. Section 3 presents the proposed YOLO-MEDet model incorporating the AEFF and C3-PSA modules. Section 4 describes the materials and methods, including the datasets, evaluation metrics, and implementation details. Section 5 presents the experimental results, covering comparative analysis, ablation studies, Per-IoU AP analysis, and qualitative evaluations. Section 6 summarizes the key contributions and outlines future research directions.

2. Related Works

2.1. Multispectral Target Detection

In recent years, research on multispectral target detection has advanced significantly, with various approaches proposed to enhance feature fusion and improve detection performance. One direction focused on refining fused multispectral features through iterative refinement mechanisms, ensuring greater consistency in single-spectrum feature representation, while auxiliary tasks such as semantic segmentation provided additional supervisory signals to optimize detection accuracy [10]. Meanwhile, domain adaptation techniques have been explored to bridge the gap between traditional RGB-based detection and multispectral data processing, leading to the development of adversarial-learning-based models capable of robust feature representation across different spectral modalities [18]. Further enhancements in fusion strategies involved decomposing and reorganizing RGB and infrared (IR) feature maps, integrating co-selection and differential enhancement mechanisms to selectively reinforce multispectral information, which resulted in specialized detection networks like YOLOFusion [19].

Advancements in attention-based fusion techniques have also played a crucial role in improving multispectral target detection. Feature interaction and reconstruction modules were introduced to facilitate deeper modality integration, while self-attention mechanisms refined feature fusion strategies, leading to more adaptive and precise detection frameworks [20]. Multimodal fusion networks extended these efforts by integrating local, global, and channel-wise feature representations through techniques such as local window shift fusion, global interaction modeling, and cross-channel learning, enabling the generation of highly discriminative feature representations [21]. Additionally, multiscale attention-based architectures leveraged Transformer-based self-attention for feature extraction, which was complemented by dedicated fusion modules that further refined multiscale feature integration [22].

Recent research has increasingly emphasized the need to balance detection accuracy with model complexity in multispectral fusion networks. For instance, BCMFIFuse introduces a bilateral cross-modality guidance mechanism to enhance mutual information exchange while maintaining computational efficiency [23], and EfficientFuseDet adopts hierarchical attention and bottleneck modules to reduce model size without significantly sacrificing performance [24]. In contrast, our proposed AEFF and C3-PSA modules prioritize the robustness of cross-modal feature weighting and multiscale spatial enhancement, respectively. While these modules introduce moderate additional computational overhead compared to lightweight alternatives, they consistently improve detection accuracy in complex scenarios. This design philosophy aligns with recent advances in hybrid attention mechanisms, which aim to combine local and global feature modeling to strengthen cross-modal dependencies. Although Transformer-based or nonlocal networks offer powerful global context modeling, many existing implementations struggle to fully exploit global features across modalities [25]. Representative multimodal Transformer-based methods are summarized in Table 1. Our method addresses this limitation by integrating attention-based fusion strategies capable of capturing and refining both local and global representations, thereby enhancing the reliability and accuracy of multispectral object detection.

Table 1.

Comparison of different multimodal Transformer methods.

2.2. Methods Based on Attention Mechanisms

Attention mechanisms, inspired by selective attention in human vision, have been extensively adopted in computer vision to enhance feature representation and improve model performance. Channel attention mechanisms have focused on adaptively weighting feature channels to emphasize informative features while suppressing irrelevant ones. Early approaches introduced fully connected networks to recalibrate channel-wise responses, strengthening key information extraction [30]. Further refinements explored inter-channel relationships using normalization techniques, balancing competition and cooperation among channels through global context embedding and gated adaptation, thereby enhancing the network’s capacity to capture essential features [31]. Spatial attention mechanisms, on the other hand, have been designed to improve adaptability to geometric variations by dynamically transforming spatial features. Parameterized sampling grids and localized transformation modules have been employed to enable flexible spatial manipulations of feature maps, allowing networks to adjust to spatial deformations more effectively [32]. Meanwhile, hybrid attention approaches have integrated both channel and spatial attention to refine feature representations further. A common strategy involves sequentially inferring channel and spatial attention maps to achieve more comprehensive feature enhancement, leveraging multiscale feature extraction and cross-dimensional weighting for improved fusion of local and global contextual information [33,34]. Recent advancements have extended attention mechanisms beyond conventional applications, incorporating hybrid strategies that fuse global and local features while enhancing multiscale feature extraction. These improvements have proven effective in tasks such as hyperspectral image denoising, where targeted loss functions and feature calibration techniques contribute to better noise suppression and feature preservation [29].

In this study, selected attention mechanisms are integrated to strengthen unimodal feature representations, enhancing the robustness and accuracy of multimodal fusion and target detection. By selectively emphasizing critical features across modalities, the proposed approach improves detection performance in complex environments. Various attention mechanisms and their characteristics are summarized in Table 2.

Table 2.

Comparison of attention mechanisms.

2.3. Transformer for Multimodal Learning

Transformer models have emerged as a powerful tool in multimodal learning, demonstrating remarkable scalability and adaptability across various modalities, including speech, vision, and hearing. Their success in diverse tasks such as language translation, image recognition, and speech processing can be attributed to their reduced dependence on modality-specific architectural constraints, such as translational invariance or local grid attention biases [35]. Unlike conventional architectures, Transformers process input as token sequences, allowing for flexible modeling of both intra-modal feature extraction and inter-modal correlation learning through controlled self-attention mechanisms [36]. Building on this foundation, recent studies have explored Transformer-based multimodal fusion strategies to enhance representation learning. Convolution-free architectures have been employed to jointly learn audio, video, and text modalities, leveraging modal signal preprocessing, feature extraction, and contrastive learning to improve cross-modal alignment [26]. Further advancements introduced decomposition and parallel decoding techniques, enabling the extraction of edge and subject features while predicting saliency maps through a sequence of fusion modules, leading to state-of-the-art performance in RGB-D salient target detection [27]. Expanding this approach to tri-modal data, unified Transformer models have integrated multimodal attention mechanisms to refine feature fusion, benefiting from large-scale pretraining and optimization of multiple loss functions to enhance detection performance [28].

These studies collectively validate the effectiveness of Transformer models in multimodal learning. To further exploit their ability to capture global contextual features and leverage complementary information between RGB and thermal imaging, this paper integrates the Transformer framework into multispectral target detection. By providing global semantic support for cross-modal feature fusion, this approach enhances target detection accuracy and overall model robustness in complex environments. A summary of representative Transformer-based multimodal learning methods is provided in Table 3.

Table 3.

Comparison of Transformer-based multimodal learning methods.

2.4. Summary of Existing Methods

Overall, existing multispectral detection methods demonstrate considerable progress in enhancing feature fusion strategies and leveraging cross-modal information. Traditional convolution-based models benefit from strong spatial locality and mature design paradigms but often struggle with global context modeling, limiting their adaptability in complex environments. Attention-based methods, especially those combining channel and spatial mechanisms, improve cross-modal feature selection and robustness by emphasizing informative regions. However, these methods frequently introduce substantial computational overhead, posing challenges for real-time or resource-constrained deployment.

Transformer-based approaches excel in capturing global dependencies and inter-modal correlations, offering a powerful framework for modeling complex semantic relationships. Nevertheless, their effectiveness often depends on extensive annotated data and training time, which can be difficult to obtain in some practical settings. Additionally, current Transformer variants tend to lack efficient architectural designs that are well suited for lightweight applications.

In summary, current approaches achieve varying degrees of success in balancing detection accuracy, computational complexity, and generalization capability. Future work should focus on designing lightweight yet effective fusion architectures that reduce redundancy, improve robustness, and better adapt to diverse imaging conditions across modalities.

3. Proposed Methodology

3.1. YOLOv5-Based Dual-Stream Architecture

To extract and fuse multispectral features effectively, this study extends the YOLOv5 framework with a dual-stream backbone network. Unlike single-stream detectors, the design processes the visible (RGB) and thermal infrared modalities in parallel so that each stream retains its modality-specific characteristics before fusion. This subsection outlines the overall network architecture and describes how its three key components (the backbone network, the neck network, and the detection head) are adapted to improve feature learning and fusion for multispectral object detection.

The backbone network consists of Focus, CSPNet, and SPP modules. The Focus module reorganizes the original image information through slicing operations, enriching the input features without significantly increasing computational complexity. The CSPNet module employs cross-stage partial connections to enhance the network’s learning ability while reducing computational redundancy. Additionally, the spatial pyramid pooling (SPP) module performs multiscale pooling operations on feature maps, enabling the model to better adapt to different object scales.
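The slicing step of the Focus module can be illustrated with a short NumPy sketch. It assumes a (C, H, W) array layout and the 2 × 2 interleaved sampling used in the public YOLOv5 code; the function name `focus_slice` is hypothetical, and the convolution that normally follows the slicing is omitted.

```python
import numpy as np


def focus_slice(image: np.ndarray) -> np.ndarray:
    """Rearrange a (C, H, W) array into (4C, H/2, W/2) by 2x2 pixel slicing.

    Illustrative only: the real Focus module applies a convolution afterwards.
    """
    _, h, w = image.shape
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    # Four interleaved sub-grids are stacked along the channel axis, so no
    # pixel is discarded while the spatial resolution is halved.
    return np.concatenate(
        [
            image[:, 0::2, 0::2],  # even rows, even columns
            image[:, 1::2, 0::2],  # odd rows, even columns
            image[:, 0::2, 1::2],  # even rows, odd columns
            image[:, 1::2, 1::2],  # odd rows, odd columns
        ],
        axis=0,
    )


x = np.arange(3 * 4 * 4, dtype=np.float32).reshape(3, 4, 4)
y = focus_slice(x)
print(y.shape)
```

Because the operation only rearranges pixels, the total amount of information is unchanged; the channel count grows by a factor of four while each spatial dimension is halved.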

The neck network utilizes the FPN–PAN structure, which effectively transfers and fuses multiscale feature outputs from the backbone network. The Feature Pyramid Network (FPN) fuses high-resolution and low-resolution features in a top-down pathway, while the Path Aggregation Network (PAN) further enhances feature transfer in a bottom-up process. Finally, the detection head receives the fused multiscale feature maps and predicts the class, location, and size of the target through a series of convolution and prediction operations.

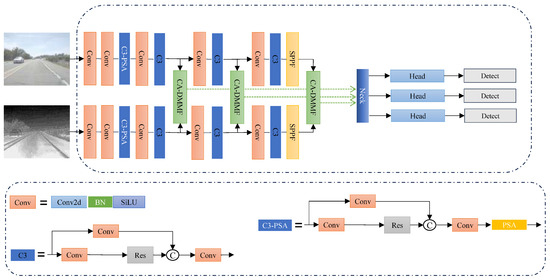

In this study, multimodal midway fusion is applied to the C3–C5 layers of the backbone network, leveraging the characteristics of multispectral data. This choice is informed by existing research demonstrating that midway fusion outperforms other fusion strategies, and it establishes a robust foundation for subsequent multimodal feature interactions. Figure 2 shows the architecture of the proposed YOLO-MEDet model and illustrates how this fusion strategy is integrated within the dual-stream backbone, which is designed to extract and fuse features from both RGB and thermal images.

Figure 2.

Architecture of the YOLO-MEDet model. For performance considerations, the C3-PSA module is applied only to the first C3 layer of the model.

The proposed dual-stream design enables the network to preserve modality-specific representations before fusion, which is essential for maintaining spectral integrity and leveraging complementary information. This design is motivated by recent advances in multispectral learning, where modality-specific encoders have shown better adaptability to spectral discrepancies compared to early fusion strategies [18,21]. Furthermore, the structure retains compatibility with YOLOv5’s modular efficiency while enabling more flexible intermediate feature interactions. These characteristics contribute to the robustness and generalization ability of our model in complex environments. This dual-stream foundation sets the stage for advanced fusion strategies, which are introduced in the next subsection.
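The data flow of a dual-stream backbone with midway fusion can be sketched as follows. This is a structural illustration only, under strong simplifying assumptions: `stand_in_stage` (2 × 2 average pooling) replaces a real backbone stage, `placeholder_fuse` (an element-wise mean) stands where a learned fusion module such as AEFF would sit, and all function names are hypothetical.

```python
import numpy as np


def stand_in_stage(x: np.ndarray) -> np.ndarray:
    """Hypothetical backbone stage: 2x2 average pooling on a (C, H, W) array."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def placeholder_fuse(rgb: np.ndarray, thermal: np.ndarray) -> np.ndarray:
    """Placeholder fusion (element-wise mean); a learned module would go here."""
    return 0.5 * (rgb + thermal)


def dual_stream_midway(rgb: np.ndarray, thermal: np.ndarray, num_fused_stages: int = 3):
    """Run both streams in parallel and fuse their features at each stage."""
    fused_maps = []
    for _ in range(num_fused_stages):
        # Each modality keeps its own stream, so modality-specific features
        # are preserved up to the point of fusion.
        rgb = stand_in_stage(rgb)
        thermal = stand_in_stage(thermal)
        fused_maps.append(placeholder_fuse(rgb, thermal))
    return fused_maps  # multiscale fused maps handed to the neck


rgb_input = np.ones((4, 32, 32))
thermal_input = 3.0 * np.ones((4, 32, 32))
maps = dual_stream_midway(rgb_input, thermal_input)
print([m.shape for m in maps])
```

The three fused maps play the role of the C3 to C5 outputs: one per scale, each combining both modalities.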

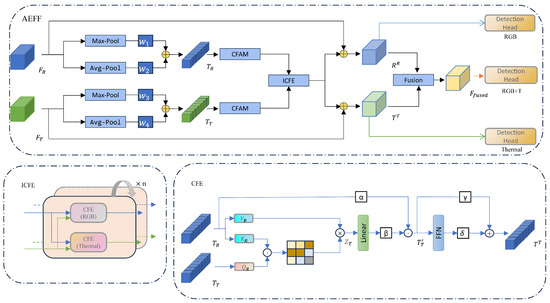

3.2. Hybrid Fusion Mechanism

Building upon the dual-stream architecture, we introduce the Attention-Enhanced Feature Fusion (AEFF) module to fully exploit multispectral information and achieve effective modality complementarity. AEFF integrates four components: cross-modal feature enhancement (CFE), an iterative learning strategy (ICFE), adaptive aggregation of average pooling and max pooling, and the convolutional and attentional fusion module (CAFM). Its primary objective is to retain as many useful features as possible while keeping computational complexity low, thereby improving the network’s adaptability to complex environments. Figure 3 illustrates the structure of the AEFF module, highlighting its role in enhancing feature fusion through convolutional and attentional mechanisms.

Figure 3.

The Attention-Enhanced Feature Fusion (AEFF) framework.

3.2.1. Cross-Modal Feature Enhancement

In this study, we introduce the CFE module to improve complementary feature extraction between RGB and thermal modalities. This module enables a single modality to learn feature information from the other modality at a global level using multiple cross-attention layers and residual structures. To fully leverage cross-modal complementarity, we implement the CFE module separately on the RGB and thermal branches, training their parameters independently. This strategy yields two distinct enhancement paths, allowing each modality to capture complementary information without parameter sharing.

Specifically, for an input RGB feature map $F_R \in \mathbb{R}^{H \times W \times C}$ and a thermal feature map $F_T \in \mathbb{R}^{H \times W \times C}$, we first flatten both and add a learnable position embedding to encode positional information, which yields the tokens $T_R \in \mathbb{R}^{HW \times C}$ and $T_T \in \mathbb{R}^{HW \times C}$. Given that RGB–thermal image pairs are often imperfectly aligned, we employ separate CFE modules for the RGB and thermal branches to extract and enhance complementary information. The output of the CFE module for the thermal branch is given by

$$\hat{T}_T = \mathcal{F}_{\text{CFE-T}}(\{T_R, T_T\}),$$

where $T_R$ and $T_T$ represent the RGB and thermal tokens input to the module, respectively. Here, $\hat{T}_T$ denotes the augmented thermal features, and $\mathcal{F}_{\text{CFE-T}}(\cdot)$ represents the CFE module for the thermal branch.

Within the CFE module, the thermal token $T_T$ is first projected onto the value matrix $V_T$ and key matrix $K_T$, while the RGB token $T_R$ is projected onto the query matrix $Q_R$ as follows:

$$V_T = T_T W^V, \qquad K_T = T_T W^K, \qquad Q_R = T_R W^Q,$$

where $W^V$, $W^K$, and $W^Q$ are learnable linear mappings. Next, the correlation matrix is computed via a dot product operation, which is normalized using the softmax function to measure the similarity between RGB and thermal features, and subsequently multiplied by $V_T$, yielding

$$Z_T = \operatorname{softmax}\!\left(\frac{Q_R K_T^{\top}}{\sqrt{D_K}}\right) V_T,$$

where $D_K$ is the dimensionality of the key vectors, used as the standard scaling factor.

To capture cross-modal dependencies from different perspectives, we employ a multihead cross-attention mechanism with eight parallel heads. The resulting matrix $Z_T$ is projected back to the original feature space through a nonlinear transformation and combined with residual connectivity to produce the fused features as follows:

$$T_T' = \alpha \cdot Z_T W^O + \beta \cdot T_T,$$

where $W^O$ is the projection matrix, and $\alpha$ and $\beta$ are learnable residual coefficients. Finally, a feedforward network (FFN) comprising two fully connected layers further enhances global information modeling:

$$\hat{T}_T = \gamma \cdot T_T' + \delta \cdot \operatorname{FFN}(T_T'),$$

where $\gamma$ and $\delta$ are additional learnable coefficients, which are initialized to 1 during training. Similarly, a corresponding CFE module is applied to the RGB branch, with the output defined as

$$\hat{T}_R = \mathcal{F}_{\text{CFE-R}}(\{T_R, T_T\}).$$

Through these two independent enhancement paths, the enhanced RGB features $\hat{T}_R$ and thermal features $\hat{T}_T$ effectively capture complementary global information, significantly improving the model’s cross-modal characterization capability and robustness.
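The cross-attention step for the thermal branch can be sketched in NumPy as below. This is a single-head illustration rather than the eight-head version described above, and it omits the position embedding and the FFN. The scaling by the square root of the key dimension is the standard attention convention and is an assumption here, as are the function name `cfe_thermal`, the weight shapes, and the random inputs.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def cfe_thermal(rgb_tokens, thermal_tokens, w_q, w_k, w_v, w_o, alpha=1.0, beta=1.0):
    """Single-head cross-attention: RGB queries attend to thermal keys/values."""
    q = rgb_tokens @ w_q        # queries come from the RGB tokens
    k = thermal_tokens @ w_k    # keys come from the thermal tokens
    v = thermal_tokens @ w_v    # values come from the thermal tokens
    # Assumed standard scaling by sqrt(key dimension).
    attn = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)
    z = attn @ v
    # Output projection plus a weighted residual on the thermal tokens.
    return alpha * (z @ w_o) + beta * thermal_tokens, attn


rng = np.random.default_rng(0)
n_tokens, dim = 6, 8  # hypothetical sizes: HW = 6 tokens, C = 8 channels
rgb = rng.normal(size=(n_tokens, dim))
thermal = rng.normal(size=(n_tokens, dim))
w_q, w_k, w_v, w_o = (rng.normal(scale=0.1, size=(dim, dim)) for _ in range(4))

enhanced, attn = cfe_thermal(rgb, thermal, w_q, w_k, w_v, w_o)
print(enhanced.shape, attn.shape)
```

Each row of `attn` is a distribution over thermal token positions for one RGB query, which is what lets every spatial location draw on the whole thermal feature map rather than a local window.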

3.2.2. Iterative Learning Strategy (ICFE Module)

To further strengthen the memory of inter-modal and intra-modal complementary information and improve the model’s performance, the ICFE module is introduced. Based on the CFE, this module deepens the network’s memory for cross-modal features by iteratively reusing shared parameters. Compared to simply stacking multiple CFE modules, the ICFE progressively optimizes features within cyclic iterations using the same parameters. This approach not only reduces the risk of overfitting caused by an overly deep model but also fully exploits complementary modal information with minimal additional parameter overhead. Taking $n$ iterations as an example, the process can be expressed as follows:

$$\{T_R^{(i)}, T_T^{(i)}\} = \mathcal{F}_{\text{CFE}}\big(\{T_R^{(i-1)}, T_T^{(i-1)}\}\big), \qquad i = 1, \ldots, n.$$

Here, $\{T_R^{(n)}, T_T^{(n)}\}$ represents the output sequence obtained after $n$ iterative operations, $\{T_R^{(0)}, T_T^{(0)}\}$ denotes the input sequence to the ICFE module, and $\mathcal{F}_{\text{CFE}}$ denotes one pass through the CFE modules. The output of each iteration serves as the input for the next iteration, with parameters shared across all iterations. Additionally, the input sequences and output sequences from the ICFE module are first converted into feature maps and then rescaled to the original feature map size using bilinear interpolation. This iterative learning strategy progressively optimizes cross-modal complementary information without increasing the number of parameters, providing an effective solution for enhancing multimodal target detection models.
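The difference between iterating with shared parameters and stacking separate modules can be illustrated with a toy sketch. The `step` function below is a deliberately simple stand-in for one CFE pass (it is not the attention computation itself), and the names `make_shared_step`, `icfe`, and the mixing weight `mix` are hypothetical.

```python
import numpy as np


def make_shared_step(mix: float):
    """Build one enhancement step whose single parameter `mix` is reused."""

    def step(rgb_tokens, thermal_tokens):
        # Both updates read the tokens from the previous iteration.
        new_rgb = rgb_tokens + mix * thermal_tokens
        new_thermal = thermal_tokens + mix * rgb_tokens
        return new_rgb, new_thermal

    return step


def icfe(step, rgb_tokens, thermal_tokens, num_iterations: int):
    """Apply the same step repeatedly; the parameter count does not grow."""
    for _ in range(num_iterations):
        rgb_tokens, thermal_tokens = step(rgb_tokens, thermal_tokens)
    return rgb_tokens, thermal_tokens


shared_step = make_shared_step(mix=0.5)
rgb_out, thermal_out = icfe(shared_step, np.array([1.0]), np.array([0.0]), num_iterations=2)
print(rgb_out, thermal_out)
```

Stacking would instead create a new `step` (with new parameters) for every iteration, so depth and parameter count would grow together; here only the number of loop passes changes.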

While the CFE and ICFE modules significantly improve multimodal information fusion, further enhancement is needed to strengthen the model’s ability to capture complex features. Inspired by DMFF and considering the specific needs of this study, we place the CAFM attention mechanism after the adaptive aggregation of average pooling and max pooling. This arrangement aims to further optimize the feature fusion process and improve the model’s capability to process diverse modal features, enabling it to handle the challenges of multimodal target detection. The pooling strategy is described in Section 3.2.3, and the principles and advantages of the CAFM attention mechanism are detailed in Section 3.2.4.

3.2.3. Adaptive Aggregation Pooling and Max Pooling

To reduce computational complexity while preserving key information, this study employs an adaptive aggregation pooling strategy that combines average pooling and max pooling. Average pooling computes the mean value over the pooled area, thereby preserving background information, while max pooling selects the maximum value, primarily retaining texture features of objects. By combining these two approaches, the model maintains useful information while effectively downscaling feature maps.

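Specifically, given an input feature map $F$, the average pooling and maximum pooling operations are defined as

$$F_{\text{avg}}(i, j) = \frac{1}{S^2} \sum_{m=0}^{S-1} \sum_{n=0}^{S-1} F(iS + m,\, jS + n), \qquad F_{\max}(i, j) = \max_{0 \le m,\, n < S} F(iS + m,\, jS + n),$$

where $S$ represents the scaling factor of the feature map. The pooled feature maps are then fused based on a learnable weight parameter:

$$F_{\text{pool}} = \lambda \cdot F_{\text{avg}} + (1 - \lambda) \cdot F_{\max},$$

where $\lambda$ is a trainable parameter, which is initialized to 0.5 at the start of training. This adaptive fusion strategy allows the model to reduce spatial dimensionality while preserving critical information, thereby enhancing the performance of subsequent processing modules.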
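A minimal NumPy sketch of this pooling strategy is given below. It assumes a (C, H, W) layout, non-overlapping S × S windows, and a convex combination of the two pooled maps weighted by a scalar; the function name `adaptive_pool` and the argument `lam` are hypothetical, and in training `lam` would be a learnable parameter rather than a fixed number.

```python
import numpy as np


def adaptive_pool(feature: np.ndarray, scale: int, lam: float = 0.5) -> np.ndarray:
    """Blend average and max pooling over non-overlapping scale x scale windows."""
    c, h, w = feature.shape
    if h % scale or w % scale:
        raise ValueError("spatial size must be divisible by the scale factor")
    # Expose each pooling window as its own pair of axes.
    blocks = feature.reshape(c, h // scale, scale, w // scale, scale)
    avg = blocks.mean(axis=(2, 4))  # smooth, background-preserving summary
    mx = blocks.max(axis=(2, 4))    # strongest response, texture-preserving
    # Assumed convex combination; lam = 0.5 mirrors the stated initial value.
    return lam * avg + (1.0 - lam) * mx


x = np.arange(16, dtype=np.float64).reshape(1, 4, 4)
pooled = adaptive_pool(x, scale=2)
print(pooled)
```

For the top-left window (values 0, 1, 4, 5) the average is 2.5 and the maximum is 5, so the blended value at the initial weight is 3.75.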

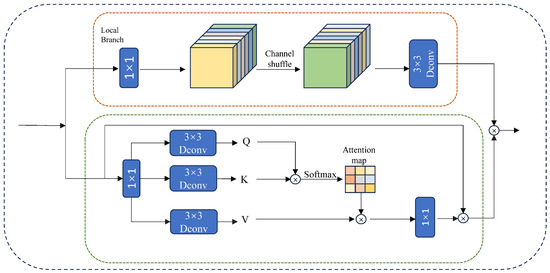

3.2.4. CAFM

To further enhance feature representation, we introduce the CAFM. This hybrid architecture synergistically combines spatial convolution operations with channel-aware attention mechanisms, enabling simultaneous capture of localized patterns and global contextual relationships. As illustrated in Figure 4, the CAFM consists of two complementary branches to handle both local and global information.

Figure 4.

Structure of CAFM.

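The local feature branch focuses on fine-grained spatial interactions through multistage convolution operations. The computation flow comprises three key steps:

$$F_1 = W_{1 \times 1}(X), \qquad F_2 = \operatorname{CS}(F_1), \qquad F_{\text{local}} = W_{3 \times 3 \times 3}(F_2),$$

where $X$ is the input feature, $W_{1 \times 1}$ and $W_{3 \times 3 \times 3}$ denote 1 × 1 and 3 × 3 × 3 convolutional layers, respectively, and $\operatorname{CS}(\cdot)$ is the channel shuffle operation. The channel shuffle operation implements cross-channel communication by dividing the input tensor into g groups along the channel dimension, applying depth-wise separable convolution within each group, and finally concatenating and rearranging channels to preserve topological structure.

Meanwhile, the global attention branch establishes long-range dependencies through compressed channel attention:

$$Q = \mathcal{R}(W_Q X), \qquad K = \mathcal{R}(W_K X), \qquad V = \mathcal{R}(W_V X),$$

$$F_{\text{global}} = W_{1 \times 1}\!\left(\operatorname{softmax}\!\left(\frac{Q K^{\top}}{\tau}\right) V\right) + X.$$

Here, $W_Q$, $W_K$, and $W_V$ are learnable projections, $\mathcal{R}(\cdot)$ reshapes tensors to $\mathbb{R}^{C \times HW}$, and $\tau$ is a learnable temperature parameter controlling the sharpness of the attention distribution. Finally, the two branches are fused through a residual connection, yielding the following CAFM output:

$$F_{\text{CAFM}} = F_{\text{local}} + F_{\text{global}}.$$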

By integrating the CAFM, the model efficiently combines local and global features, significantly improving multiscale target detection and adapting to complex backgrounds.
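Two building blocks of the CAFM can be sketched in NumPy: the channel rearrangement at the heart of channel shuffle, and a temperature-scaled attention computed across channels. The sketch assumes a (C, H, W) layout, treats the attention map as C × C (one plausible reading of "compressed channel attention"), and leaves out all convolutions and learned projections. The function names are hypothetical.

```python
import numpy as np


def channel_shuffle(x: np.ndarray, groups: int) -> np.ndarray:
    """Interleave channels across groups for a (C, H, W) array."""
    c, h, w = x.shape
    if c % groups:
        raise ValueError("channel count must be divisible by groups")
    # (groups, C/groups, H, W) -> swap the first two axes -> flatten back.
    return x.reshape(groups, c // groups, h, w).transpose(1, 0, 2, 3).reshape(c, h, w)


def channel_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray, temperature: float = 1.0):
    """Attention over channels for (C, H*W) inputs, giving a (C, C) map."""
    logits = (q @ k.T) / temperature
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


# Channel shuffle on six channels labelled 0..5, split into two groups.
labels = np.arange(6, dtype=np.float64).reshape(6, 1, 1)
shuffled = channel_shuffle(labels, groups=2)
print(shuffled[:, 0, 0])

# A lower temperature concentrates each row of the attention map.
rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(4, 9)) for _ in range(3))
_, sharp = channel_attention(q, k, v, temperature=0.1)
_, soft = channel_attention(q, k, v, temperature=10.0)
print(sharp.max(), soft.max())
```

Because the attention map has one entry per channel pair rather than per pixel pair, its size is independent of the spatial resolution, which keeps the global branch inexpensive on large feature maps.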

3.3. C3-PSA Optimization

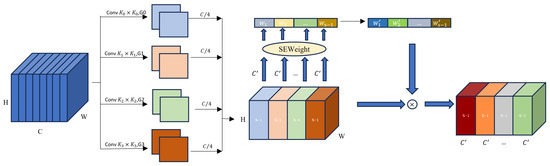

To improve the model’s capacity for multiscale and complex feature representation, we optimize the standard YOLOv5 backbone by introducing the C3-PSA module. Unlike the conventional C3 block, which struggles with scale variation and occlusion, C3-PSA enhances spatial adaptability and detail preservation by combining multibranch convolutions with dynamic channel attention. The structure of the PSA mechanism is illustrated in Figure 5.

Figure 5.

The left side of the figure depicts the input feature tensor, characterized by height H, width W, and number of channels C, passing through four parallel convolutional paths. These paths extract multiscale features by dividing the number of channels into equal segments of $C/S$ (where S is 4 in the figure). The SEWeight module, positioned at the center, applies channel attention to generate weight vectors corresponding to each branch. On the right side, these weights are multiplied, channel by channel, by their respective branch features before being fused to produce the final output feature map.

In target detection tasks, the standard YOLOv5 model often encounters performance bottlenecks when handling complex scenes and multiscale targets. This limitation primarily arises because the C3 module in the core feature extraction network relies on conventional convolution and connection methods, which struggle to effectively capture critical information under dynamically changing conditions. When targets exhibit challenges such as scale variations or occlusions, the fixed convolutional kernel and connection method lack the flexibility needed to extract features at different scales adequately. Consequently, key features may be insufficiently captured or entirely missed, leading to a decline in detection accuracy and recall rates.

To mitigate this issue, we introduce the C3-PSA module, which is designed to enhance unimodal feature extraction and optimization through the PSA mechanism. Compared to the traditional C3 module, the proposed module significantly improves multiscale feature capturing and fusion by employing a two-step processing approach. The first step involves the initial extraction of edge and texture information from unimodal images using 3 × 3 convolutional layers, batch normalization (BN), and ReLU activation functions, producing compact feature maps of varying sizes. This process is mathematically expressed as

where represents the initially extracted feature map, denotes the 3 × 3 convolution operation, and and correspond to batch normalization and rectified linear unit activation, respectively. The extracted retains the spatial integrity of the scene while emphasizing critical regions, thereby supplying more robust feature inputs for the subsequent pyramid squeezing attention phase.

Following the initial feature extraction, the module integrates a multibranch Squeeze and Patch Convolution (SPC) structure, which applies parallel multiscale convolutional kernels to the initially extracted feature map $F_{\mathrm{init}}$. The input feature channel dimensions are compressed from $C$ to $C/S$, and each branch employs a unique convolutional kernel size $k_i$ defined as

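For the $i$-th branch, the operation is defined as

$$F_i = \mathrm{Conv}(k_i \times k_i, G_i)(F_{\mathrm{init}}), \quad i = 0, 1, \ldots, S-1$$

where $\mathrm{Conv}(k_i \times k_i, G_i)$ represents a convolution operation with a kernel size of $k_i$ and a group size $G_i$, applied to the initially extracted feature map $F_{\mathrm{init}}$. Each scale generates a feature map $F_i$, and these maps are concatenated to obtain a fused multiscale feature representation defined as follows:

$$F = \mathrm{Cat}\left([F_0, F_1, \ldots, F_{S-1}]\right)$$

To further enhance channel-wise feature differentiation and suppress irrelevant information, the SEWeight module is employed to extract an attention weight vector from each branch:

$$Z_i = \mathrm{SEWeight}(F_i), \quad i = 0, 1, \ldots, S-1$$

These vectors are recalibrated using the softmax function:

$$\mathrm{att}_i = \mathrm{Softmax}(Z_i) = \frac{\exp(Z_i)}{\sum_{j=0}^{S-1} \exp(Z_j)}$$

where $\mathrm{att}_i$ represents the normalized channel weights. Subsequently, each branch’s feature map is element-wise multiplied by its respective attention vector to generate the optimized feature representation:

$$Y_i = F_i \odot \mathrm{att}_i, \quad i = 0, 1, \ldots, S-1$$

The final output is concatenated as

$$\mathrm{Out} = \mathrm{Cat}\left([Y_0, Y_1, \ldots, Y_{S-1}]\right)$$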

This yields a multiscale attention-enhanced output feature representation. Since the original C3 module contains residual structures, replacing it with the C3-PSA module at the corresponding network positions significantly enhances feature extraction capabilities without altering the overall network topology. Extensive experiments demonstrate that incorporating the C3-PSA module into the YOLOv5 backbone effectively improves the ability to capture and refine multiscale information. This results in notable gains in both detection accuracy and recall rates. Compared to the conventional YOLOv5, the C3-PSA-enhanced version achieves superior target identification and localization, particularly in high-density or large-scale variation scenarios. Furthermore, it maintains robust detection performance across complex scenes. The effectiveness and applicability of this module will be further validated through detailed experimental comparisons and analyses.
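The multibranch attention steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the kernel-size rule `k_i = 2*(i+1)+1` is borrowed from the original PSA design and is not given in this text, all function names are hypothetical, each channel is reduced to a single scalar, and the raw weights stand in for SEWeight outputs.

```python
import math

def branch_kernel_sizes(num_branches=4):
    """Kernel size per branch under the ASSUMED rule k_i = 2*(i+1)+1."""
    return [2 * (i + 1) + 1 for i in range(num_branches)]

def softmax_across_branches(branch_weights):
    """Normalize attention weights over the branch axis, separately per channel.

    branch_weights[i][c] stands in for the SEWeight output of channel c in branch i.
    """
    num_channels = len(branch_weights[0])
    normalized = [[0.0] * num_channels for _ in branch_weights]
    for c in range(num_channels):
        column = [weights[c] for weights in branch_weights]
        peak = max(column)  # subtracting the max keeps exp() numerically stable
        exps = [math.exp(value - peak) for value in column]
        total = sum(exps)
        for i, e in enumerate(exps):
            normalized[i][c] = e / total
    return normalized

def reweight_and_concat(branch_features, attention):
    """Scale every branch channel by its weight, then concatenate the branches."""
    fused = []
    for features, weights in zip(branch_features, attention):
        fused.extend(f * w for f, w in zip(features, weights))
    return fused

# Toy example: S = 4 branches with 2 channels each (one scalar per channel).
features = [[1.0, 2.0], [1.0, 2.0], [1.0, 2.0], [1.0, 2.0]]
raw_weights = [[0.0, 1.0], [0.0, 2.0], [0.0, 3.0], [0.0, 4.0]]
attention = softmax_across_branches(raw_weights)
output = reweight_and_concat(features, attention)
```

In the toy example, the first channel receives equal weights in every branch, while the second channel is weighted toward the branches with larger raw scores. A real module would operate on full feature maps and learn the weights.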

4. Materials and Methods

This section presents the datasets used in our experiments, the evaluation metrics applied for model assessment, and the implementation details for training and testing. These elements collectively provide a foundation for replicating and understanding the experimental setup and analysis.

4.1. Datasets

To ensure comprehensive and robust evaluation, our experiments were conducted on three benchmark datasets: FLIR, KAIST, and M3FD. These datasets represent a wide range of multispectral detection challenges under varying environmental and lighting conditions.

4.1.1. FLIR Dataset

The FLIR ADAS dataset presents a significant challenge for multispectral target detection, encompassing complex scenes captured during both day and night. However, the original dataset contains a substantial number of misaligned visible–thermal image pairs, which hinders the effective training of deep learning models. To mitigate this issue, an “aligned” version of the dataset has been introduced, wherein misaligned image pairs have been manually filtered to enhance data quality and usability. The aligned dataset comprises 5142 precisely aligned multispectral image pairs, with 4129 designated for training and 1013 reserved for testing. It includes three target categories: pedestrians, cars, and bicycles. In this study, all experiments were conducted using the “aligned” version of the FLIR dataset, and all subsequent references to the FLIR dataset pertain exclusively to this version.

4.1.2. KAIST Dataset

The KAIST Multi-Spectral Day/Night dataset is a large-scale dataset designed for automated driving and advanced driver assistance system (ADAS) visual perception tasks. It encompasses a diverse range of drivable scenarios, spanning urban and residential areas, and includes data collected across various time periods (day and night) as well as finer temporal divisions (dawn, morning, afternoon, dusk, and night) to facilitate all-weather visual perception research. The dataset was acquired using a multisensor platform comprising RGB/thermal imaging cameras, RGB stereo cameras, 3D LiDAR, and inertial sensors (GPS/IMU). Optical alignment and calibration techniques were employed to ensure the precise synchronization of RGB and thermal imaging data. The KAIST dataset supports multiple visual perception tasks, including target detection, drivable area detection, image enhancement, depth estimation, and thermal image pseudo-coloring. It serves as a crucial benchmark for addressing visual perception challenges under low and extreme lighting conditions.

4.1.3. M3FD Dataset

The M3FD dataset is the most comprehensive multitarget detection (MOD) dataset available, encompassing the widest variety of scenes. The dataset contains 4200 pairs of aligned and labeled visible and infrared images, most of which have a high resolution of 1024 × 768. It includes six target categories (“people”, “cars”, “buses”, “motorcycles”, “trucks”, and “traffic signals”), which are commonly found in surveillance and autonomous driving scenarios. The images were captured by optical and infrared cameras mounted on vehicles and represent a diverse range of challenging scenarios, including daytime, cloudy, nighttime, and foggy conditions. As a result, the M3FD dataset is ideally suited for multitarget detection (MOD) research.

4.2. Evaluation Metrics

To comprehensively evaluate detection performance and compare results across models, we adopted standard metrics: Precision (P), Recall (R), and mean Average Precision (mAP) at different Intersection over Union (IoU) thresholds, specifically mAP@0.50, mAP@0.75, and mAP@0.50:0.95. Precision measures the proportion of correctly predicted positive samples among all predicted positives, while Recall measures the proportion of actual positives that are correctly detected.

The mean Average Precision (mAP) is the primary evaluation metric in object detection tasks. It is calculated by averaging the Average Precision (AP) across all object classes. In particular, mAP@0.50 evaluates AP at an IoU threshold of 0.50, whereas mAP@0.50:0.95 provides a more rigorous measure by averaging AP values over IoU thresholds from 0.50 to 0.95 in increments of 0.05.

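The evaluation metrics are mathematically defined as

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad \mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{AP}_i$$

where $TP$, $FP$, and $FN$ denote the number of true positives, false positives, and false negatives, respectively, $\mathrm{AP}_i$ is the Average Precision of the $i$-th class, and $N$ is the number of object classes.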
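The metrics described in this subsection can be illustrated with a short Python sketch. It is a simplified illustration rather than the evaluation code used in the experiments: the function names are hypothetical, boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the per-class AP values are taken as given instead of being computed from precision-recall curves.

```python
def box_iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall(tp, fp, fn):
    """Precision and Recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall

def iou_thresholds(start=0.50, stop=0.95, step=0.05):
    """IoU thresholds 0.50, 0.55, ..., 0.95 used by mAP@0.50:0.95."""
    count = int(round((stop - start) / step)) + 1
    return [round(start + k * step, 2) for k in range(count)]

def mean_ap(ap_per_class):
    """mAP at one IoU threshold: the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)

def map_over_thresholds(ap_by_threshold):
    """Average the per-threshold mAP values.

    ap_by_threshold maps each IoU threshold to a list of per-class AP values.
    """
    per_threshold = [mean_ap(aps) for aps in ap_by_threshold.values()]
    return sum(per_threshold) / len(per_threshold)
```

A prediction counts as a true positive at a given threshold only if its IoU with a matching ground-truth box reaches that threshold, which is why mAP@0.50:0.95 is stricter than mAP@0.50.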

For internal ablation studies, we report both Precision and Recall alongside mAP to provide a detailed performance analysis. However, in comparison tables with prior works, only mAP is reported, as most existing methods do not disclose consistent P and R values. This ensures a fair and standardized evaluation across models.

4.3. Implementation Details

This subsection describes the experimental settings used for model training and evaluation, including hardware configuration, software framework, training strategies, and data augmentation techniques. These details ensure reproducibility and provide insight into the computational environment and optimization setup.

In this study, our approach was implemented using the PyTorch 2.5.1 framework on a computing server running Windows 11. The server is equipped with an Intel Core i7-14700KF CPU, 32 GB of RAM, and an NVIDIA RTX 4080 SUPER GPU with 16 GB of graphics memory, providing robust computational support for model training. Additionally, SimuNPS was utilized for simulation-based validation and performance analysis, further ensuring the reliability of the experimental results. During the training phase, a total of 60 training cycles were performed, with a batch size set to 8. The optimizer employed Stochastic Gradient Descent (SGD) with an initial learning rate of , a momentum coefficient of 0.937, a weight decay factor of 0.0005, and a cosine annealing learning rate decay strategy. The input image resolution was set to 640 × 640 during training, while 640 × 512 was used as the input size for testing. To enhance the model’s generalization ability, mosaic data augmentation and random flipping were applied for data expansion. The loss function followed the loss calculation methods used in the YOLOv5 and FCOS object detectors. The experimental environment and hyperparameter settings are summarized in Table 4.

Table 4.

Experimental environment configuration.
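The training settings in Section 4.3 can be sketched as a small configuration plus a cosine annealing schedule. This is an illustration only: it uses the textbook cosine formula, which may differ from the scheduler actually used with YOLOv5, and the initial learning rate below is a placeholder chosen for the example, since its value is not available in this text.

```python
import math

# Values stated in the text.
EPOCHS = 60
BATCH_SIZE = 8
MOMENTUM = 0.937
WEIGHT_DECAY = 0.0005

# NOT from the text: a placeholder used only to make the example runnable.
PLACEHOLDER_INITIAL_LR = 0.01

def cosine_annealed_lr(epoch, initial_lr, total_epochs=EPOCHS, final_lr=0.0):
    """Learning rate at a 0-based epoch under plain cosine annealing."""
    progress = epoch / total_epochs
    return final_lr + 0.5 * (initial_lr - final_lr) * (1.0 + math.cos(math.pi * progress))

# One learning rate per epoch boundary, from the start to the end of training.
schedule = [cosine_annealed_lr(e, PLACEHOLDER_INITIAL_LR) for e in range(EPOCHS + 1)]
```

The schedule starts at the initial rate, passes through half of it at the midpoint of training, and decays smoothly toward the final rate.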

5. Experimental Results

5.1. Comparison with Other Methods

This section evaluates the performance of the proposed YOLO-MEDet model against several state-of-the-art multispectral object detection methods across multiple datasets. We report the results using standard metrics and have conducted the comparisons under consistent conditions to ensure fairness and reproducibility.

5.1.1. Comparisons on FLIR

We selected several recently proposed multispectral feature fusion-based object detection methods and compared them with our YOLO-MEDet algorithm, using mAP@0.5 (mean Average Precision at an Intersection over Union (IoU) threshold of 0.5) and mAP@0.5:0.95 (mean Average Precision averaged over IoU thresholds from 0.5 to 0.95) as the evaluation metrics. Table 5 presents the evaluation results of these algorithms on the FLIR aligned test set, comparing HalfwayFusion [10], CFR_3 [10], GAFF [13], CAPTM [37], YOLO-MS [20], MRD-YOLO [38], CFT [39], CMAFF [19], CSAA [40], and ICAFusion [25].

Table 5.

Comparison of different methods on the FLIR aligned dataset.

As shown in Table 5, YOLO-MEDet outperformed the state-of-the-art methods on this dataset, achieving an 80.1% mAP@0.5 score and a 38.5% mAP@0.5:0.95 score, significantly surpassing the unimodal YOLOv5 model. Compared to ICAFusion, YOLO-MEDet improved the mAP@0.5 score by 1.6%, demonstrating its superior capability in multispectral object detection tasks.

Notably, although ICAFusion employs an intermediate feature fusion strategy, it did not achieve the same level of feature integration as YOLO-MEDet, which leverages a multidimensional fusion approach for more precise feature representation. This limitation arises because ICAFusion primarily focuses on global information fusion and interaction, whereas YOLO-MEDet integrates both local and global feature representations more effectively. Consequently, YOLO-MEDet surpassed ICAFusion and several other state-of-the-art methods in terms of its feature fusion capability and detection accuracy. Furthermore, while methods such as GAFF and CSAA have achieved strong results in this task, YOLO-MEDet further enhanced the detection accuracy through a more efficient feature fusion strategy, particularly in multispectral data processing.

5.1.2. Comparisons on M3FD

In addition, we selected 12 state-of-the-art image fusion algorithms to demonstrate the superiority of our YOLO-MEDet in fusion-based target detection tasks. These algorithms include SLBAF [42], EAEF [43], DAMSDet [44], RGB-X [45], DenseFuse [46], FusionGAN [47], RFN [48], GANMcC [49], DDcGAN [50], MFEIF [51], U2Fusion [52], and TarDAL [53]. The experiments were conducted using the M3FD dataset, where the following models were evaluated separately: (1) a mono-spectral YOLOv5 model trained using only visible or infrared data, (2) 12 fused YOLOv5 models trained with data generated by the fusion algorithms described above, and (3) our YOLO-MEDet model. Table 6 records the performance metrics for each model on the M3FD test set.

Table 6.

Comparison of different methods on the M3FD dataset.

As shown in Table 6, YOLO-MEDet achieved a mAP@0.5 score of 84.2% and a mAP@0.5:0.95 score of 52.4%, outperforming all other models in these comparisons. The high robustness of YOLO-MEDet in multispectral fusion and target detection has been further validated by its performance compared to the top-performing fusion detection model, TarDAL, where YOLO-MEDet improved the mAP@0.5 by 3.0% and the mAP@0.5:0.95 by 4.4%. Additionally, YOLO-MEDet demonstrated significant performance advantages over pixel-level fusion detection models such as RFN and MFEIF. These experimental results highlight that YOLO-MEDet not only significantly improves upon current fusion detection models but also sets a new benchmark for multispectral target detection on the M3FD dataset.

5.2. Ablation Study

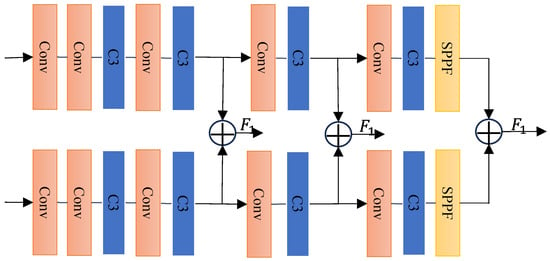

In this section, we conducted a series of ablation experiments to thoroughly evaluate the performance of the proposed network. For comparison, we constructed two parallel CSPDarknet53 networks using element-wise summation as the fusion operation to build the baseline backbone network, as shown in Figure 6. Subsequently, we introduced various improvements for experimentation, primarily including the AEFF and the C3-PSA module. In this study, we compared the performance of different model configurations based on the key evaluation metric, mean Average Precision (mAP), which covers all categories in the dataset. Specifically, M1, M2, and M3 represent the following: a YOLOv5 trained exclusively for visible light image detection, a YOLOv5 trained exclusively for infrared image detection, and a dual-stream YOLOv5 with element-wise summation for modality fusion. M4 and M5 correspond to the dual-stream YOLOv5 with AEFF and C3-PSA improvements, respectively, while M6 represents the fully enhanced dual-stream YOLOv5 with both AEFF and C3-PSA improvements. Through ablation experiments, we performed a quantitative analysis of the mean values for the three fusion metrics: mAP@0.5, mAP@0.75, and mAP@0.5:0.95. For simplicity in this section, the experiments focus on the FLIR aligned dataset, the KAIST dataset, and the M3FD dataset, with the relevant results presented in Table 7, Table 8 and Table 9.

Figure 6.

Structure of baseline.

Table 7.

Ablation findings for the FLIR dataset. ✓ indicates the component is enabled; ✗ indicates it is disabled.

Table 8.

Ablation experiments on KAIST dataset. The best-performing methods are highlighted in bold.

Table 9.

Ablation findings on the M3FD dataset. ✓ indicates the component is enabled; ✗ indicates it is disabled.
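The six ablation configurations (M1 to M6) can be summarized as a small lookup table, together with the element-wise summation used by the baseline. This is a descriptive sketch with hypothetical names, in which feature maps are simplified to flat lists of numbers; it is not the training code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AblationConfig:
    modalities: tuple  # input streams fed to the detector
    aeff: bool         # Attention-Enhanced Feature Fusion enabled
    c3_psa: bool       # C3-PSA blocks used in place of C3 blocks

ABLATION_CONFIGS = {
    "M1": AblationConfig(("visible",), aeff=False, c3_psa=False),
    "M2": AblationConfig(("infrared",), aeff=False, c3_psa=False),
    "M3": AblationConfig(("visible", "infrared"), aeff=False, c3_psa=False),
    "M4": AblationConfig(("visible", "infrared"), aeff=True, c3_psa=False),
    "M5": AblationConfig(("visible", "infrared"), aeff=False, c3_psa=True),
    "M6": AblationConfig(("visible", "infrared"), aeff=True, c3_psa=True),
}

def fuse_by_summation(visible_features, infrared_features):
    """Baseline (M3) fusion: element-wise sum of two equally sized feature vectors."""
    if len(visible_features) != len(infrared_features):
        raise ValueError("feature vectors must have the same length")
    return [v + t for v, t in zip(visible_features, infrared_features)]
```

Reading the table row by row makes the ablation logic explicit: M3 isolates the effect of dual-stream fusion, M4 and M5 each add one module, and M6 combines both.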

The results of the ablation experiments performed on the FLIR dataset show that the dual-stream YOLOv5 architecture (M3) demonstrated significant improvements across several performance metrics compared to the unimodal models (M1 and M2). In terms of the mAP@0.5 metric, M3 achieved 78.4, surpassing M1’s 67.8 and M2’s 73.9. This highlights the dual-stream architecture’s ability to effectively fuse visible and infrared image data, thereby enhancing overall detection performance. With the introduction of the AEFF module, the mAP@0.5 of M4 improved to 80.8, further validating the effectiveness of the AEFF in facilitating information interaction between different modalities. The inclusion of the AEFF enhances the network’s ability to fuse cross-modal features, thus improving the model’s robustness. Introducing the C3-PSA alone (M5) yielded an mAP@0.5 of 79.8, which is 1.0 point below M4 but still 1.4 points above the M3 baseline, while enhancing feature representation by improving detail. Finally, when both the AEFF and C3-PSA were applied (M6), the mAP@0.5 reached 79.9, and the model maintained high values for the mAP@0.75 and mAP@0.5:0.95 metrics. This indicates that the two modules are complementary in optimizing the model’s performance in multimodal target detection.

Table 8 presents the results of ablation experiments conducted on the KAIST dataset, comparing the two-stream YOLOv5 baseline model (M3) with the models incorporating the AEFF (M4), the C3-PSA module (M5), and a combination of both the AEFF and C3-PSA (M6). The performance differences in terms of the mAP@0.5 and mAP@0.5:0.95 metrics across these models are analyzed.

The baseline model, M3, achieved mAP@0.5 and mAP@0.5:0.95 scores of 74.9 and 32.8, respectively. With the addition of the AEFF (M4), these metrics improved to 75.23 and 32.95, respectively, demonstrating AEFF’s effectiveness in enhancing the interaction between cross-modal features. The application of the C3-PSA (M5) raised the mAP@0.5 to 75.73, while the mAP@0.5:0.95 remained at the baseline value of 32.8, emphasizing the C3-PSA module’s role in focusing on key information and refining feature representation. Finally, when both the AEFF and C3-PSA were integrated into the model (M6), the mAP@0.5 rose to 75.8, while the mAP@0.5:0.95 improved to 33.11, achieving the best performance in this experiment. These results highlight the synergistic effect of the AEFF and C3-PSA in improving cross-modal feature fusion and target detection tasks.
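The gains over the M3 baseline follow directly from the KAIST figures quoted above. The short sketch below reproduces that arithmetic; the dictionary layout and function name are illustrative choices, and the numbers are the ones stated in the text.

```python
# (mAP@0.5, mAP@0.5:0.95) on KAIST, as quoted in the text.
KAIST_RESULTS = {
    "M3": (74.9, 32.8),
    "M4": (75.23, 32.95),
    "M5": (75.73, 32.8),
    "M6": (75.8, 33.11),
}

def gains_over_baseline(results, baseline="M3"):
    """Absolute gain of each variant over the baseline, rounded to two decimals."""
    base_50, base_50_95 = results[baseline]
    return {
        name: (round(m50 - base_50, 2), round(m50_95 - base_50_95, 2))
        for name, (m50, m50_95) in results.items()
        if name != baseline
    }

gains = gains_over_baseline(KAIST_RESULTS)
```

The combined model (M6) gives the largest gain on both metrics, while the C3-PSA alone (M5) changes only the mAP@0.5.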

In the ablation experiments on the M3FD dataset, the dual-stream YOLOv5 architecture (M6) also demonstrated superior performance compared to the unimodal configurations (M1 and M2), particularly in the mAP@0.5 (84.2) and mAP@0.5:0.95 (52.4) metrics. Compared to the unimodal models, the dual-stream YOLOv5 architecture significantly enhanced the model’s detection capability in complex environments by effectively fusing visible and infrared image features. The dual-stream YOLOv5 (M4), with the introduction of the AEFF, reached 84.4 for the mAP@0.5 score and 51.6 for the mAP@0.5:0.95 score, showcasing the positive impact of the AEFF in improving the efficiency of inter-modal feature fusion. However, the dual-stream YOLOv5 (M5), which integrates the C3-PSA, showed a slight decrease in the Recall metric but maintained relatively stable performance in the mAP@0.5 and mAP@0.5:0.95 metrics, further highlighting the effectiveness of the C3-PSA in enhancing intra-modal feature processing. Finally, when both the AEFF and C3-PSA were applied to the dual-stream YOLOv5 (M6), the model maintained high performance across all metrics, with 84.2 for the mAP@0.5 score and 52.4 for the mAP@0.5:0.95 score. This underscores the important role of the combination of these two techniques in enhancing the model’s detection performance.

To further quantify the computational cost of our proposed improvements, we report the FLOPs and parameter counts for key model variants in Table 10. Compared to ICAFusion, which incurs 192.6 GFLOPs and 120.3 million parameters, the model using only the AEFF increases to 216.8 GFLOPs and 120.8 M parameters, while the C3-PSA-only model reduces the parameters to 108.8 M with 203.7 GFLOPs. The full YOLO-MEDet model (M6), which integrates both the AEFF and C3-PSA, reaches 227.9 GFLOPs and 121.0 M parameters. These increases in complexity are justified by the observed performance gains in the Precision, Recall, and mAP metrics across multiple datasets. Although our model is not optimized for lightweight deployment, its computational scale remains comparable to state-of-the-art architectures commonly used in multispectral detection tasks.

Table 10.

Comparison of model complexity (FLOPs and parameters).
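The relative overhead with respect to ICAFusion can be worked out from the complexity figures quoted above. The sketch below performs that calculation; the labels and helper name are illustrative, and the numbers are those stated in the text.

```python
# (GFLOPs, parameters in millions), as quoted in the text.
COMPLEXITY = {
    "ICAFusion": (192.6, 120.3),
    "AEFF only": (216.8, 120.8),
    "C3-PSA only": (203.7, 108.8),
    "YOLO-MEDet (M6)": (227.9, 121.0),
}

def relative_change(value, reference):
    """Percentage change of value with respect to reference, to one decimal."""
    return round(100.0 * (value - reference) / reference, 1)

ref_flops, ref_params = COMPLEXITY["ICAFusion"]
overhead = {
    name: (relative_change(flops, ref_flops), relative_change(params, ref_params))
    for name, (flops, params) in COMPLEXITY.items()
    if name != "ICAFusion"
}
```

On these figures, the full model costs roughly 18% more FLOPs than ICAFusion for under 1% more parameters, and the C3-PSA-only variant is the only one with fewer parameters than the reference.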

In summary, these results demonstrate that through the synergistic effects of feature fusion and enhancement techniques, dual-stream YOLOv5 shows substantial performance improvements in complex multimodal scenarios and significantly enhances the accuracy and robustness of target detection.

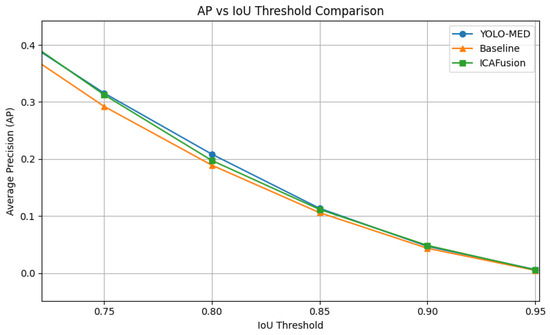

5.3. Per-IoU AP Analysis

To gain deeper insight into the localization capability of different fusion strategies under varying spatial precision requirements, we conducted a per-IoU AP evaluation on both the FLIR and M3FD datasets. Following the COCO-style evaluation protocol, we report the Average Precision (AP) scores at discrete IoU thresholds ranging from 0.50 to 0.95.

As shown in Figure 7, YOLO-MEDet consistently outperformed the baseline fusion method across intermediate IoU thresholds (0.75–0.85), highlighting its enhanced localization precision under moderately strict conditions. Although ICAFusion slightly exceeded YOLO-MEDet at the highest threshold levels (IoU ≥ 0.90), the overall trend indicates that YOLO-MEDet maintains stable and robust localization across most of the IoU range. This helps explain the observed 2.9% decrease in the overall mAP@0.5:0.95 relative to ICAFusion, which is largely attributable to performance at the strictest thresholds rather than a uniform decline.

Figure 7.

Comparison of per-IoU AP scores on the FLIR dataset for YOLO-MEDet, a baseline additive fusion model, and the state-of-the-art ICAFusion.

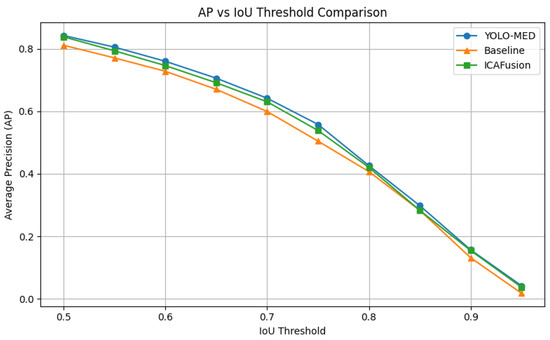

A similar pattern is observed on the M3FD dataset, as presented in Figure 8. YOLO-MEDet consistently surpassed the baseline across all IoU thresholds, with particularly strong advantages in the 0.60–0.85 range. While the performance outcomes of YOLO-MEDet and ICAFusion converged at higher thresholds, the proposed model demonstrates a more balanced and resilient performance under varying spatial overlap constraints.

Figure 8.

Comparison of per-IoU AP scores on the M3FD dataset.

These findings confirm that YOLO-MEDet achieves reliable localization across a spectrum of IoU conditions, with notable strengths in medium to moderately strict overlap scenarios. This contributes to its practical applicability, even in cases where the overall mAP@0.5:0.95 appears marginally reduced.

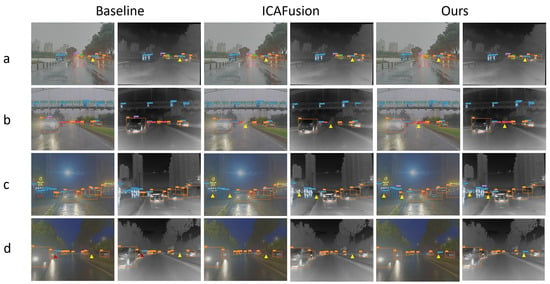

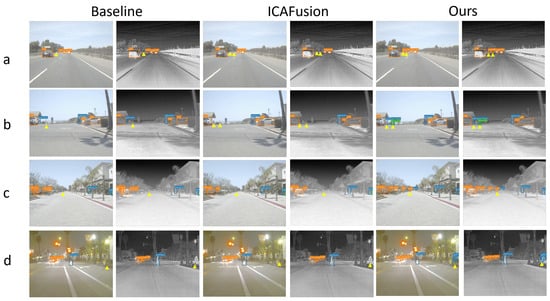

5.4. Qualitative Analysis

To comprehensively evaluate the performance of each model, we visualize the detection results of the baseline model, ICAFusion, and our proposed method on the FLIR and M3FD datasets and compare them with the ground truth annotations. Figure 9 illustrates the detection results under various lighting and environmental conditions, covering daytime and nighttime scenes with high target density, long target distances, and strong light interference.

Figure 9.

A visual comparison of the detection results of our model with those of SOTA and baseline methods on the FLIR dataset. Subfigures (a–d) represent different sample scenes. Yellow triangles indicate objects successfully detected by our model but missed by the other two methods, while red triangles denote incorrectly detected objects. Note that bounding box layouts may appear similar, but the overlaid triangle markers highlight key detection differences.

As one can observe, the baseline model and ICAFusion frequently failed to detect certain cars and pedestrians in daytime scenes, and their performance degraded significantly at night. In contrast, our method performed consistently under all lighting conditions, successfully detecting nearly all targets during both day and night. In target-intensive scenes (e.g., daytime scene a and nighttime scene c), our method accurately detected all pedestrians and vehicles within the field of view, whereas the baseline model and ICAFusion were particularly prone to missing small targets at night. Furthermore, when targets were at greater distances, the baseline model also struggled with misdetections under strong illumination in nighttime scene d, while our method continued to recognize all targets successfully.

Additionally, Figure 10 presents the detection results of the three methods in different scenarios, including regular scenes, small-target scenes, and crowded scenes. In regular scenes, both the baseline model and ICAFusion failed to detect certain targets compared to our model. In small-target scenes, our method consistently classified and localized all targets, regardless of daytime or nighttime, whereas the baseline model and ICAFusion missed more distant and smaller targets, such as pedestrians and vehicles. In crowded scenes, our model effectively captured all targets, while the baseline model and ICAFusion continued to suffer from missed detections.

Figure 10.

A visual comparison of the detection results of our model with those of SOTA and baseline methods on the M3FD dataset. Subfigures (a–d) represent different sample scenes. Yellow triangles indicate objects successfully detected by our model but missed by the other two methods, while red triangles denote incorrectly detected objects.

These experimental results further validate the advantages of our method in multispectral information fusion and cross-modal feature interaction, as well as its superior detection capability in complex scenes.

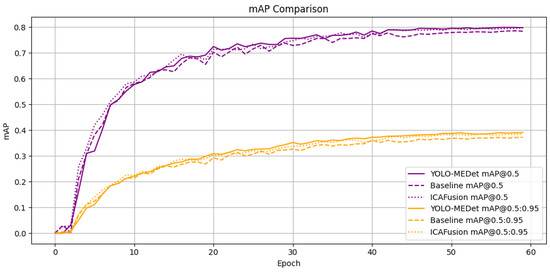

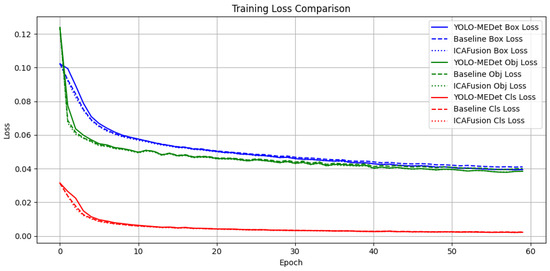

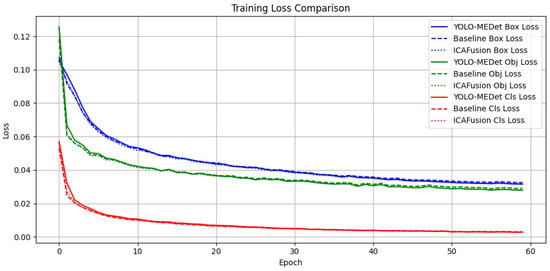

To complement the qualitative detection comparisons, we further visualized the training dynamics of each model to objectively validate performance differences. Figure 11 presents the evolution of the mAP@0.5 and mAP@0.5:0.95 scores on the FLIR dataset, while Figure 12 shows the training loss trajectories for box, objectness, and classification components.

Figure 11.

mAP progression curves of YOLO-MEDet, ICAFusion, and the baseline on the FLIR dataset.

Figure 12.

Training loss comparison on the FLIR dataset.

As shown in Figure 11, YOLO-MEDet achieved faster convergence and higher accuracy across all IoU thresholds compared to the baseline and ICAFusion. Meanwhile, Figure 12 illustrates that our model maintained consistently lower losses throughout training. These results confirm that the improvements introduced by the AEFF and C3-PSA not only enhance detection quality but also facilitate more stable and efficient optimization during training.

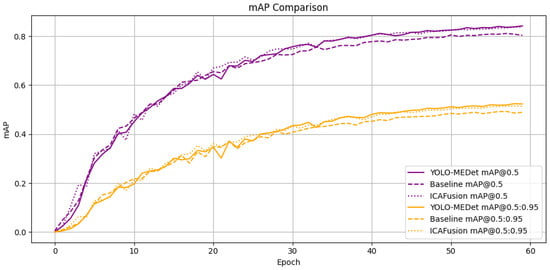

To verify the generality of this behavior, we conducted the same training visualization on the M3FD dataset. As shown in Figure 13 and Figure 14, similar trends are observed. YOLO-MEDet not only outperformed the baseline and ICAFusion models in terms of its mAP@0.5 and mAP@0.5:0.95 scores across epochs, but it also demonstrated superior convergence and lower training losses in all components. The consistent advantage on both datasets further validates the robustness and scalability of our proposed architecture under varying environmental and spectral conditions.

Figure 13.

mAP progression curves of YOLO-MEDet, ICAFusion, and the baseline on the M3FD dataset.

Figure 14.

Training loss comparison on the M3FD dataset.

These qualitative results demonstrate that the proposed method offers stronger feature discrimination, more complete object contours, and higher robustness against environmental interference. The improvements are primarily attributed to two architectural enhancements: the Attention-Enhanced Feature Fusion (AEFF) module, which enables effective cross-modal interaction between visible and thermal features, and the C3-PSA module, which enriches multiscale spatial awareness while preserving key structural details. Functionally, these modules improve detection precision under challenging conditions and enhance model reliability in both sparse and crowded scenes. Although they introduce moderate computational overhead, the model remains deployable on modern GPU-equipped platforms, and further optimization is possible for edge-oriented applications.

6. Conclusions

In this study, YOLO-MEDet was proposed as a bimodal object detection framework tailored for multispectral fusion of visible and infrared imagery. The model employs a dual-stream midway fusion backbone alongside two custom modules: the Attention-Enhanced Feature Fusion (AEFF) module for cross-modal adaptive integration and the C3-PSA module for efficient multiscale spatial attention. These components were jointly designed to address the challenges of detecting small, occluded, and distant targets in complex road environments.

Extensive experiments on the FLIR, KAIST, and M3FD datasets validated the effectiveness of YOLO-MEDet, with consistent performance improvements over state-of-the-art multispectral detectors. For instance, YOLO-MEDet achieved a +2.5% mAP@0.5 improvement on the M3FD dataset compared to the strongest baseline. The ablation results further confirmed the complementary benefits of the AEFF and C3-PSA modules.

While the model introduces additional computational complexity, it remains scalable for high-accuracy applications. Future work will focus on designing lightweight variants, integrating alignment-invariant fusion mechanisms, and exploring domain-adaptive training strategies to enhance deployment across diverse operational conditions.

Author Contributions

Conceptualization, Y.G.; Formal analysis, Y.Z.; Funding acquisition, Y.Z.; Investigation, X.Y.; Methodology, Y.G.; Resources, X.Y. and Y.G.; Software, Y.G.; Supervision, X.Y.; Validation, X.Y.; Visualization, Y.G.; Writing—original draft, Y.G.; Writing—review and editing, L.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the pilot project of the Liaoning Provincial Department of Science and Technology (2022JH24/10200029).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are contained within the article.

Acknowledgments

The authors would like to thank the editors and reviewers for their insightful suggestions. We are grateful to Hongbiao Li for their constructive suggestions on the statistical analysis.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yuan, K.; Zhuang, X.; Schaefer, G.; Feng, J.; Guan, L.; Fang, H. Deep-learning-based multispectral satellite image segmentation for water body detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7422–7434. [Google Scholar] [CrossRef]

- Parelius, E.J. A review of deep-learning methods for change detection in multispectral remote sensing images. Remote Sens. 2023, 15, 2092. [Google Scholar] [CrossRef]

- Rana, S.; Gerbino, S.; Crimaldi, M.; Cirillo, V.; Carillo, P.; Sarghini, F.; Maggio, A. Comprehensive Evaluation of Multispectral Image Registration Strategies in Heterogenous Agriculture Environment. J. Imaging 2024, 10, 61. [Google Scholar] [CrossRef]

- McAllister, E.; Payo, A.; Novellino, A.; Dolphin, T.; Medina-Lopez, E. Multispectral satellite imagery and machine learning for the extraction of shoreline indicators. Coast. Eng. 2022, 174, 104102. [Google Scholar] [CrossRef]

- Jahoda, P.; Drozdovskiy, I.; Payler, S.J.; Turchi, L.; Bessone, L.; Sauro, F. Machine learning for recognizing minerals from multispectral data. Analyst 2021, 146, 184–195. [Google Scholar] [CrossRef]

- Li, K.Y.; Sampaio de Lima, R.; Burnside, N.G.; Vahtmäe, E.; Kutser, T.; Sepp, K.; Cabral Pinheiro, V.H.; Yang, M.D.; Vain, A.; Sepp, K. Toward automated machine learning-based hyperspectral image analysis in crop yield and biomass estimation. Remote Sens. 2022, 14, 1114. [Google Scholar] [CrossRef]

- Liu, J.; Zhang, S.; Wang, S.; Metaxas, D.N. Multispectral deep neural networks for pedestrian detection. arXiv 2016, arXiv:1611.02644. [Google Scholar] [CrossRef]

- Sharma, M.; Dhanaraj, M.; Karnam, S.; Chachlakis, D.G.; Ptucha, R.; Markopoulos, P.P.; Saber, E. YOLOrs: Object detection in multimodal remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1497–1508. [Google Scholar] [CrossRef]

- Kou, J.; Zhan, T.; Zhou, D.; Xie, Y.; Da, Z.; Gong, M. Visual attention-based siamese CNN with SoftmaxFocal loss for laser-induced damage change detection of optical elements. Neurocomputing 2023, 517, 173–187. [Google Scholar] [CrossRef]

- Zhang, H.; Fromont, E.; Lefevre, S.; Avignon, B. Multispectral fusion for object detection with cyclic fuse-and-refine blocks. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 276–280. [Google Scholar] [CrossRef]

- Zheng, Y.; Izzat, I.H.; Ziaee, S. GFD-SSD: Gated fusion double SSD for multispectral pedestrian detection. arXiv 2019, arXiv:1903.06999. [Google Scholar] [CrossRef]

- Zhang, L.; Liu, Z.; Zhang, S.; Yang, X.; Qiao, H.; Huang, K.; Hussain, A. Cross-modality interactive attention network for multispectral pedestrian detection. Inf. Fusion 2019, 50, 20–29. [Google Scholar] [CrossRef]

- Zhang, H.; Fromont, E.; Lefèvre, S.; Avignon, B. Guided attentive feature fusion for multispectral pedestrian detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 72–80.

- Fu, L.; Gu, W.-b.; Ai, Y.-b.; Li, W.; Wang, D. Adaptive spatial pixel-level feature fusion network for multispectral pedestrian detection. Infrared Phys. Technol. 2021, 116, 103770.

- Sun, Y.; Cao, B.; Zhu, P.; Hu, Q. Drone-based RGB-infrared cross-modality vehicle detection via uncertainty-aware learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6700–6713.

- Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

- Jia, X.; Zhu, C.; Li, M.; Tang, W.; Zhou, W. LLVIP: A visible-infrared paired dataset for low-light vision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3496–3504.

- Song, S.; Miao, Z.; Yu, H.; Fang, J.; Zheng, K.; Ma, C.; Wang, S. Deep domain adaptation based multi-spectral salient object detection. IEEE Trans. Multimed. 2020, 24, 128–140.

- Qingyun, F.; Zhaokui, W. Cross-modality attentive feature fusion for object detection in multispectral remote sensing imagery. Pattern Recognit. 2022, 130, 108786.

- Xie, Y.; Zhang, L.; Yu, X.; Xie, W. YOLO-MS: Multispectral object detection via feature interaction and self-attention guided fusion. IEEE Trans. Cogn. Dev. Syst. 2023, 15, 2132–2143.

- Yang, F.; Liang, B.; Li, W.; Zhang, J. Multidimensional Fusion Network for Multispectral Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 547–560.

- You, S.; Xie, X.; Feng, Y.; Mei, C.; Ji, Y. Multi-scale aggregation transformers for multispectral object detection. IEEE Signal Process. Lett. 2023, 30, 1172–1176.

- Gao, X.; Liu, S. BCMFIFuse: A Bilateral Cross-Modal Feature Interaction-Based Network for Infrared and Visible Image Fusion. Remote Sens. 2024, 16, 3136.

- Gao, H.; Wang, Y.; Sun, J.; Jiang, Y.; Gai, Y.; Yu, J. Efficient multi-level cross-modal fusion and detection network for infrared and visible image. Alex. Eng. J. 2024, 108, 306–318.

- Shen, J.; Chen, Y.; Liu, Y.; Zuo, X.; Fan, H.; Yang, W. ICAFusion: Iterative cross-attention guided feature fusion for multispectral object detection. Pattern Recognit. 2024, 145, 109913.

- Akbari, H.; Yuan, L.; Qian, R.; Chuang, W.H.; Chang, S.F.; Cui, Y.; Gong, B. VATT: Transformers for multimodal self-supervised learning from raw video, audio and text. Adv. Neural Inf. Process. Syst. 2021, 34, 24206–24221.

- Chen, G.; Wang, Q.; Dong, B.; Ma, R.; Liu, N.; Fu, H.; Xia, Y. EM-Trans: Edge-Aware Multimodal Transformer for RGB-D Salient Object Detection. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 3175–3188.

- Qiu, J.; Jiang, C.; Wang, H. ETFormer: An Efficient Transformer Based on Multimodal Hybrid Fusion and Representation Learning for RGB-DT Salient Object Detection. IEEE Signal Process. Lett. 2024, 31, 2930–2934.

- Hu, S.; Gao, F.; Zhou, X.; Dong, J.; Du, Q. Hybrid Convolutional and Attention Network for Hyperspectral Image Denoising. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5504005.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Yang, Z.; Zhu, L.; Wu, Y.; Yang, Y. Gated channel transformation for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11794–11803.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial transformer networks. Adv. Neural Inf. Process. Syst. 2015, 28, 2017–2025.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Zhang, H.; Zu, K.; Lu, J.; Zou, Y.; Meng, D. EPSANet: An efficient pyramid squeeze attention block on convolutional neural network. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 1161–1177.

- Jaegle, A.; Gimeno, F.; Brock, A.; Vinyals, O.; Zisserman, A.; Carreira, J. Perceiver: General perception with iterative attention. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 4651–4664.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Zhou, H.; Sun, M.; Ren, X.; Wang, X. Visible-thermal image object detection via the combination of illumination conditions and temperature information. Remote Sens. 2021, 13, 3656.

- Sun, C.; Chen, Y.; Qiu, X.; Li, R.; You, L. MRD-YOLO: A multispectral object detection algorithm for complex road scenes. Sensors 2024, 24, 3222.

- Qingyun, F.; Dapeng, H.; Zhaokui, W. Cross-modality fusion transformer for multispectral object detection. arXiv 2021, arXiv:2111.00273.

- Cao, Y.; Bin, J.; Hamari, J.; Blasch, E.; Liu, Z. Multimodal object detection by channel switching and spatial attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 403–411.

- Yuyao, T.; Bo, J. The infrared-visible complementary recognition network based on context information. In Proceedings of the 2021 14th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Shanghai, China, 23–25 October 2021; pp. 1–6.

- Cheng, X.; Geng, K.; Wang, Z.; Wang, J.; Sun, Y.; Ding, P. SLBAF-Net: Super-Lightweight bimodal adaptive fusion network for UAV detection in low recognition environment. Multimed. Tools Appl. 2023, 82, 47773–47792.

- Liang, M.; Hu, J.; Bao, C.; Feng, H.; Deng, F.; Lam, T.L. Explicit attention-enhanced fusion for RGB-thermal perception tasks. IEEE Robot. Autom. Lett. 2023, 8, 4060–4067.

- Guo, J.; Gao, C.; Liu, F.; Meng, D.; Gao, X. DAMSDet: Dynamic adaptive multispectral detection transformer with competitive query selection and adaptive feature fusion. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 464–481.

- Deevi, S.A.; Lee, C.; Gan, L.; Nagesh, S.; Pandey, G.; Chung, S.J. RGB-X object detection via scene-specific fusion modules. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 7366–7375.

- Li, H.; Wu, X.J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Li, H.; Wu, X.J.; Kittler, J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images. Inf. Fusion 2021, 73, 72–86.

- Ma, J.; Zhang, H.; Shao, Z.; Liang, P.; Xu, H. GANMcC: A generative adversarial network with multiclassification constraints for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 5005014.

- Ma, J.; Xu, H.; Jiang, J.; Mei, X.; Zhang, X.P. DDcGAN: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion. IEEE Trans. Image Process. 2020, 29, 4980–4995.

- Liu, J.; Fan, X.; Jiang, J.; Liu, R.; Luo, Z. Learning a deep multi-scale feature ensemble and an edge-attention guidance for image fusion. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 105–119.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

- Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware dual adversarial learning and a multi-scenario multi-modality benchmark to fuse infrared and visible for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5802–5811.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).