Improvement of the Cross-Scale Multi-Feature Stereo Matching Algorithm

Abstract

1. Introduction

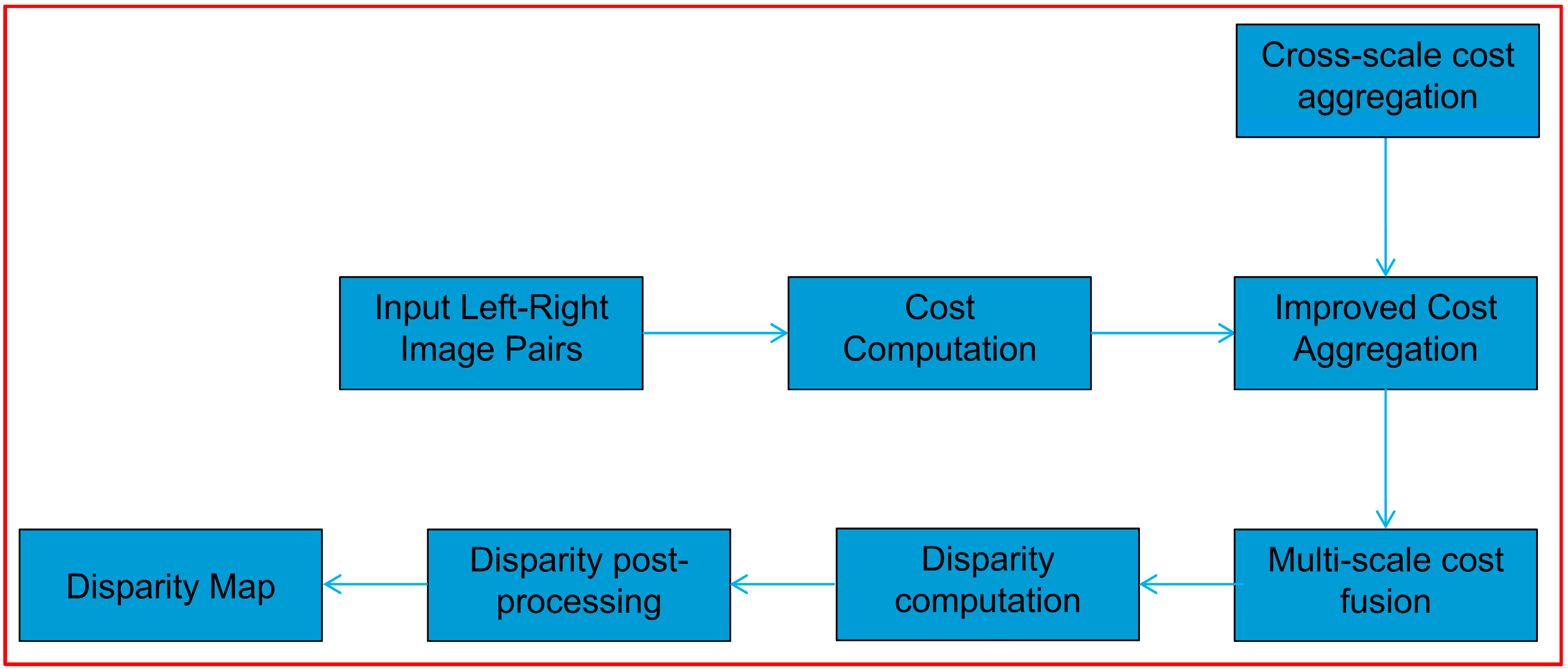

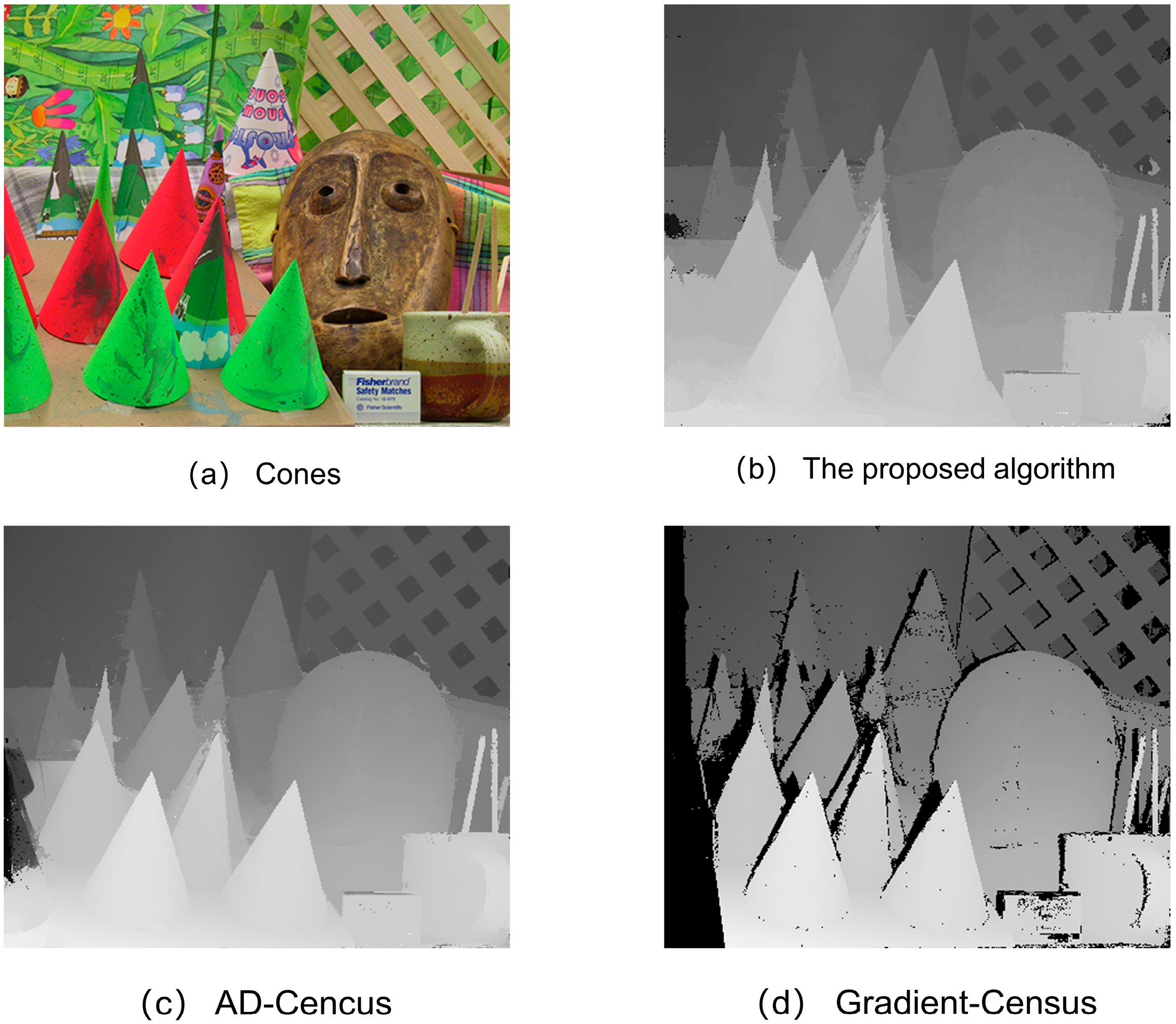

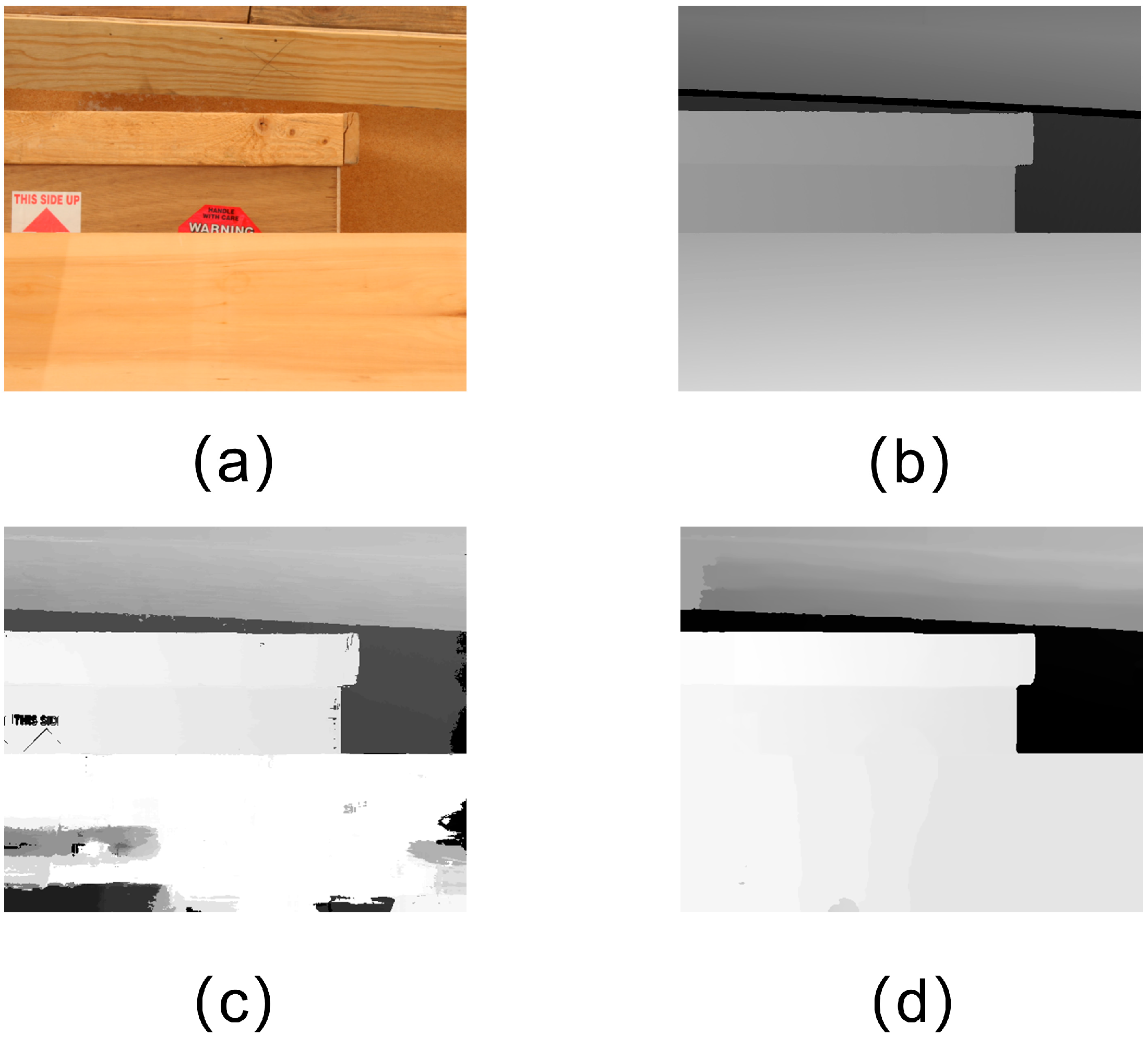

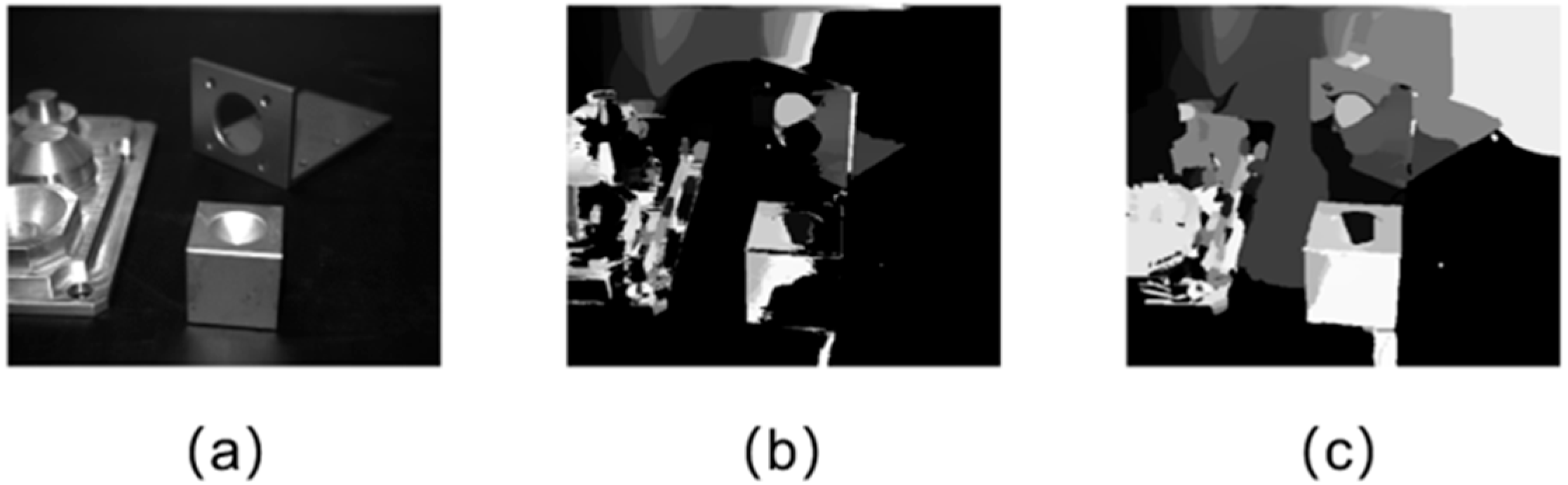

2. Algorithm Description

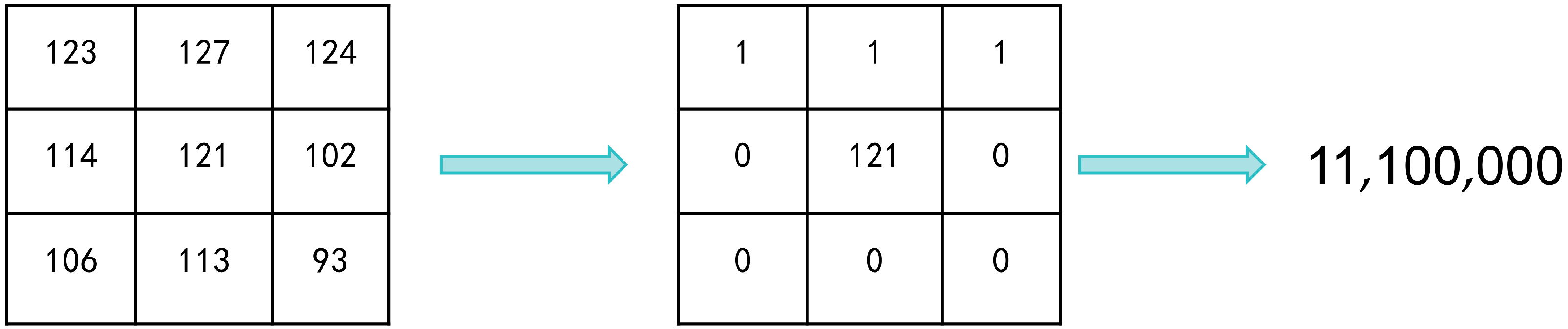

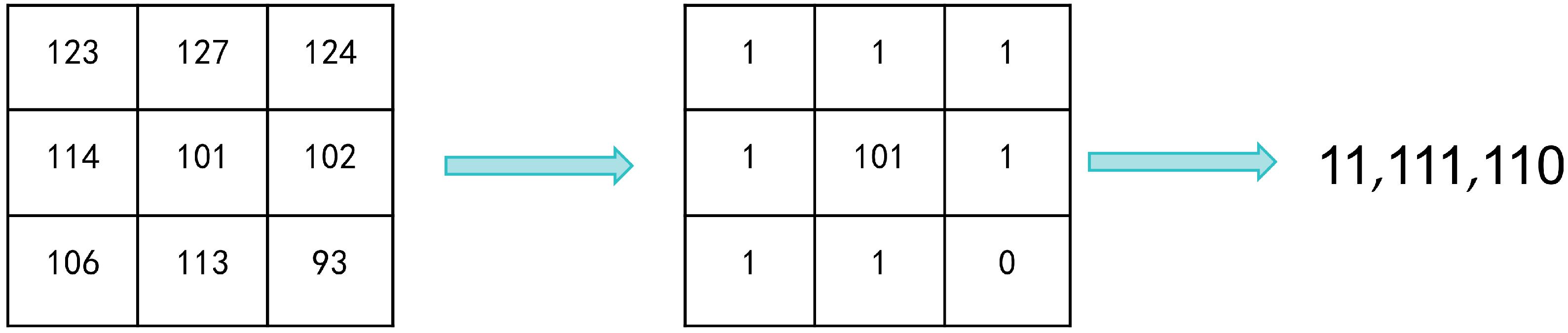

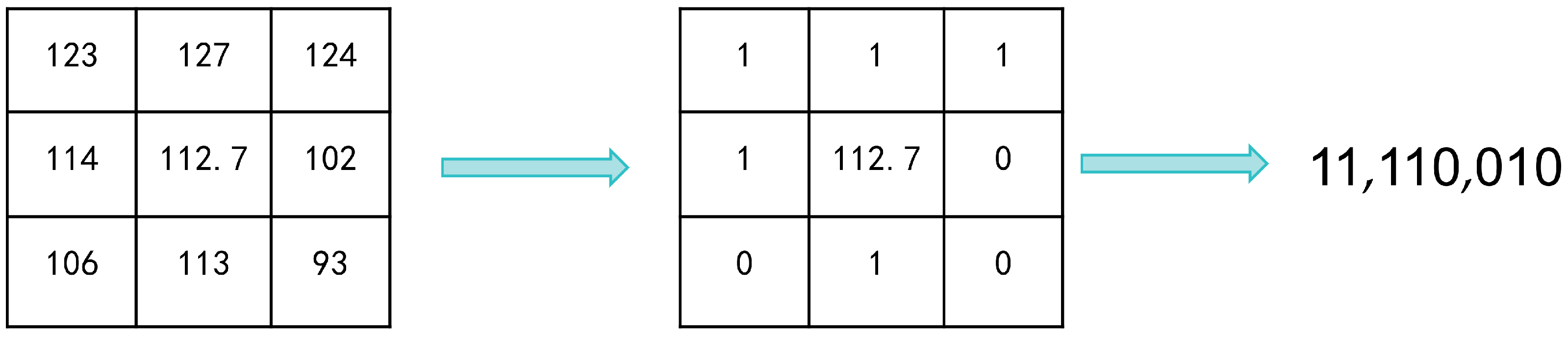

2.1. Cost Computation

2.2. Cost Aggregation

- Reduced mismatch rate compared to the unmodified approach

- Smooth disparity transitions in continuous regions

- Effective restoration of disparity values in weakly textured areas.

2.3. Disparity Computation and Post-Processing

- Left-right consistency check to eliminate mismatched pixels,

- Guided hole filling with valid disparity values for occluded regions,

- Median filtering to enhance smoothness consistency in weakly textured areas.

- Discontinuous mismatches caused by residual noise in initial disparity maps

- Smoothness degradation in low-texture zones

- Boundary artifacts around occlusion boundaries
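The three post-processing steps listed above (left-right consistency check, hole filling, median filtering) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `INVALID` marker, the tolerance `tol`, the window `radius`, and all function names are hypothetical, and the hole filling shown here is a plain scanline fill (smaller of the nearest valid left and right neighbours) standing in for the guided filling named in the text.

```python
INVALID = -1  # hypothetical marker for pixels rejected by the consistency check


def lr_check(disp_left, disp_right, tol=1):
    """Invalidate left-map pixels whose match in the right map disagrees by more than tol."""
    h, w = len(disp_left), len(disp_left[0])
    out = [row[:] for row in disp_left]
    for y in range(h):
        for x in range(w):
            d = disp_left[y][x]
            xr = x - d  # column of the matching pixel in the right image
            if xr < 0 or xr >= w or abs(d - disp_right[y][xr]) > tol:
                out[y][x] = INVALID
    return out


def fill_holes(disp):
    """Fill each invalid pixel with the smaller of its nearest valid row neighbours."""
    out = [row[:] for row in disp]
    for y, row in enumerate(disp):
        w = len(row)
        for x in range(w):
            if row[x] != INVALID:
                continue
            left = next((row[i] for i in range(x - 1, -1, -1) if row[i] != INVALID), None)
            right = next((row[i] for i in range(x + 1, w) if row[i] != INVALID), None)
            candidates = [v for v in (left, right) if v is not None]
            # Occluded pixels usually belong to the background, i.e. the smaller disparity.
            out[y][x] = min(candidates) if candidates else INVALID
    return out


def median_filter(disp, radius=1):
    """Replace each pixel by the median of its (2*radius+1)^2 window, clipped at borders."""
    h, w = len(disp), len(disp[0])
    out = [row[:] for row in disp]
    for y in range(h):
        for x in range(w):
            window = sorted(
                disp[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            )
            out[y][x] = window[len(window) // 2]
    return out


def post_process(disp_left, disp_right):
    """Chain the three steps in the order given in the list above."""
    return median_filter(fill_holes(lr_check(disp_left, disp_right)))
```

Picking the smaller neighbouring disparity when filling is a common heuristic for occlusions; a guided variant would instead weight candidate values by their similarity to the reference image.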

3. Experimental Results and Analysis

- Non-occluded region error rate

- All-region error rate
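Both metrics above are typically computed as a bad-pixel percentage: the share of evaluated pixels whose disparity differs from the ground truth by more than a threshold. The sketch below assumes that definition; the function name `error_rate`, the `mask` argument, and the default threshold of 1.0 are illustrative assumptions rather than values taken from the paper.

```python
def error_rate(disp, gt, mask=None, threshold=1.0):
    """Percentage of evaluated pixels with |disp - gt| > threshold.

    mask: optional 2D array of truthy/falsy values; only truthy pixels are
    evaluated (for example a non-occlusion mask). With mask=None every
    pixel is evaluated, which corresponds to the all-region error rate.
    """
    bad = 0
    total = 0
    for y, (disp_row, gt_row) in enumerate(zip(disp, gt)):
        for x, (d, g) in enumerate(zip(disp_row, gt_row)):
            if mask is not None and not mask[y][x]:
                continue  # pixel excluded from this evaluation region
            total += 1
            if abs(d - g) > threshold:
                bad += 1
    return 100.0 * bad / total if total else 0.0


# Toy example: one row, the last pixel is occluded.
disp = [[10, 12, 20, 7]]
gt = [[10, 10, 20, 10]]
non_occluded = [[1, 1, 1, 0]]

all_err = error_rate(disp, gt)                    # 2 of 4 pixels are bad
nonocc_err = error_rate(disp, gt, non_occluded)   # 1 of 3 pixels is bad
```

Because occluded pixels have no true correspondence, the all-region rate is normally higher than the non-occluded rate for the same disparity map.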

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | α | s | | | |
|---|---|---|---|---|---|
| Value | 9 | 1 | 0.7 | 4 | 0.9 |

| Algorithm | Teddy | Cones | Venus | Average |
|---|---|---|---|---|
| Census | 6.34 | 3.49 | 0.36 | 3.40 |
| SGM | 7.23 | 3.71 | 0.79 | 3.91 |
| Proposed | 5.94 | 2.71 | 0.31 | 2.99 |

| Algorithm | Teddy | Cones | Venus | Average |
|---|---|---|---|---|
| Census | 10.4 | 9.43 | 0.53 | 6.79 |
| SGM | 11.2 | 9.07 | 0.91 | 7.06 |
| Proposed | 11.4 | 8.36 | 0.48 | 6.75 |

| Algorithm | Teddy | Cones | Venus | Average |
|---|---|---|---|---|
| Census | 23.1 | 32.4 | 27.3 | 27.6 |
| SGM | 36.8 | 39.2 | 35.1 | 37.03 |
| Proposed | 5.94 | 2.71 | 0.31 | 2.99 |

| Algorithm | Teddy | Cones | Venus | Average |
|---|---|---|---|---|
| Census | 37.4 | 45.3 | 41.7 | 41.47 |
| SGM | 44.6 | 53.7 | 46.5 | 48.27 |
| Proposed | 28.9 | 36.2 | 31.6 | 32.23 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, N.; Shan, D.; Zhang, P. Improvement of the Cross-Scale Multi-Feature Stereo Matching Algorithm. Appl. Sci. 2025, 15, 5837. https://doi.org/10.3390/app15115837
